Detection and Classification of Knee Osteoarthritis

Abstract

1. Introduction

2. Materials and Methods

2.1. Dataset

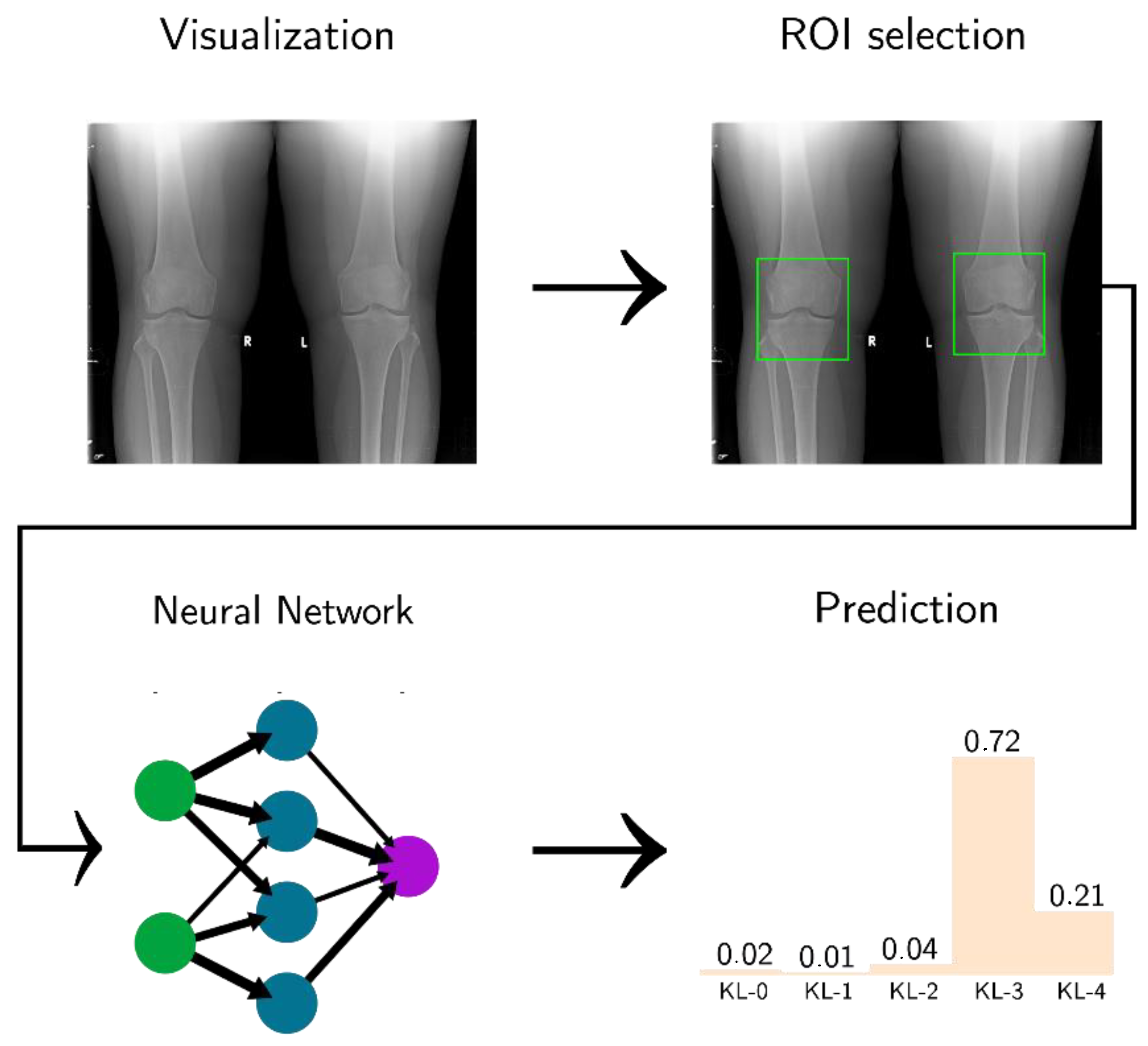

2.2. Preprocessing

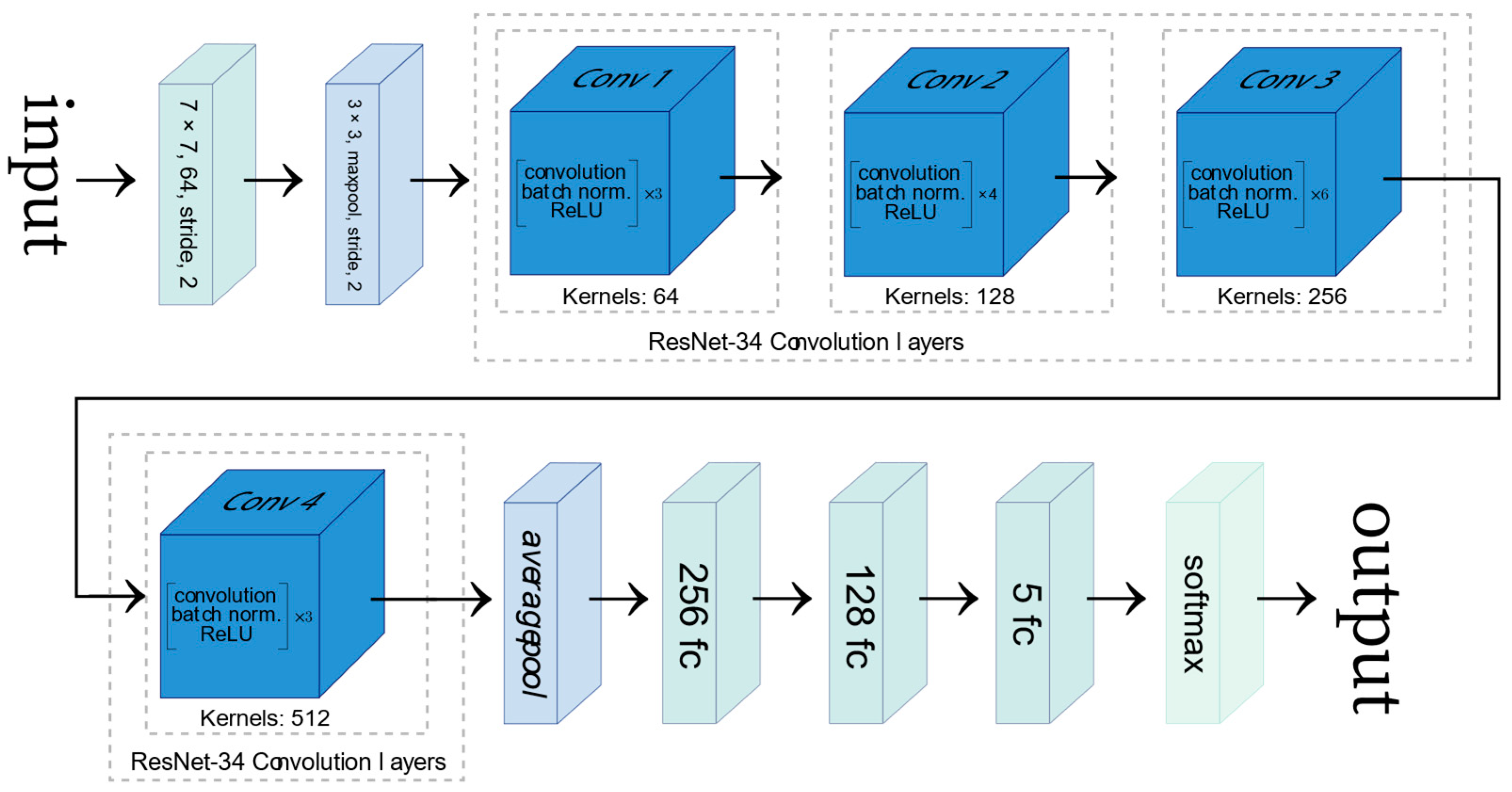

2.3. Network Architecture

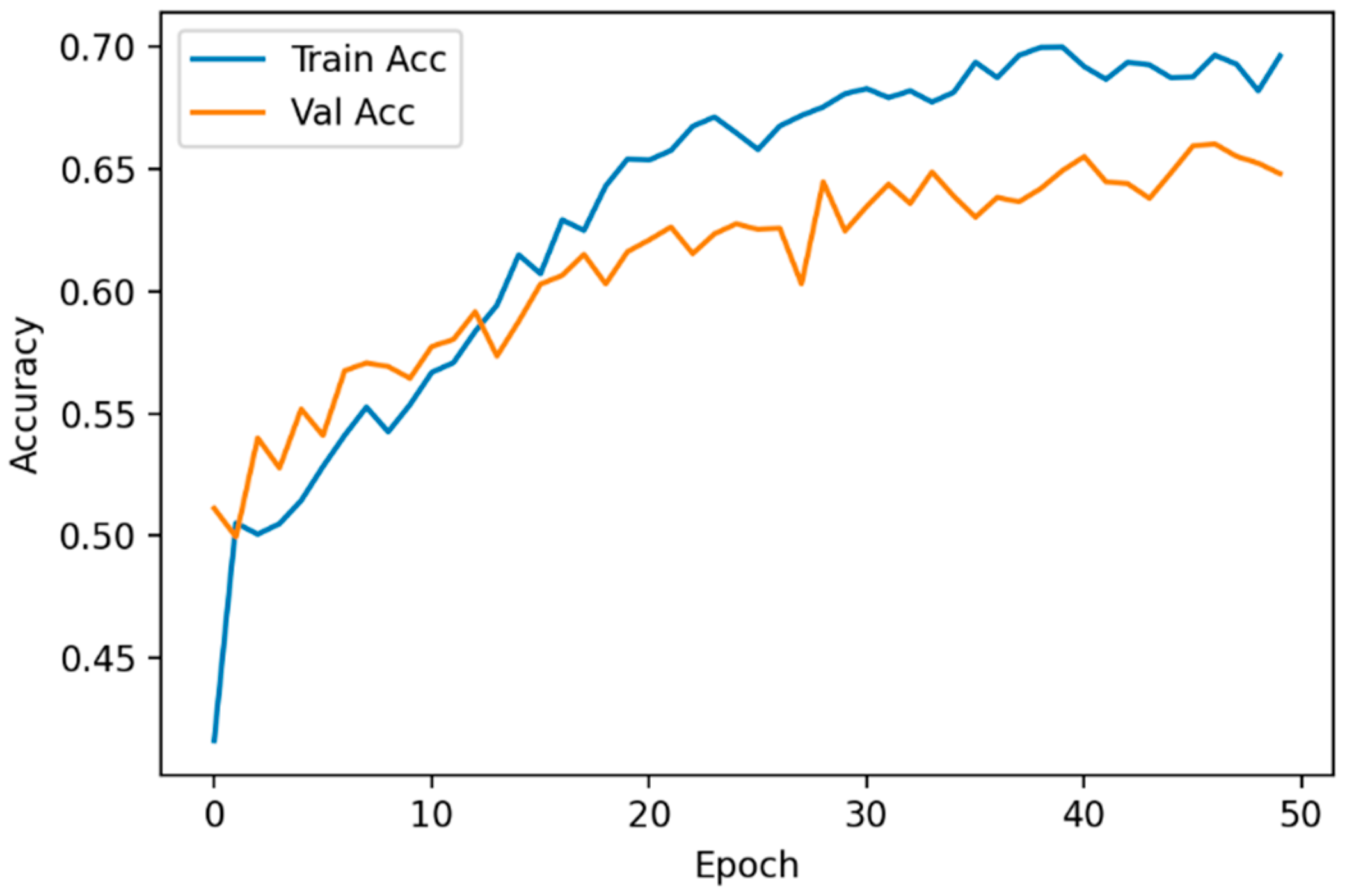

3. Results

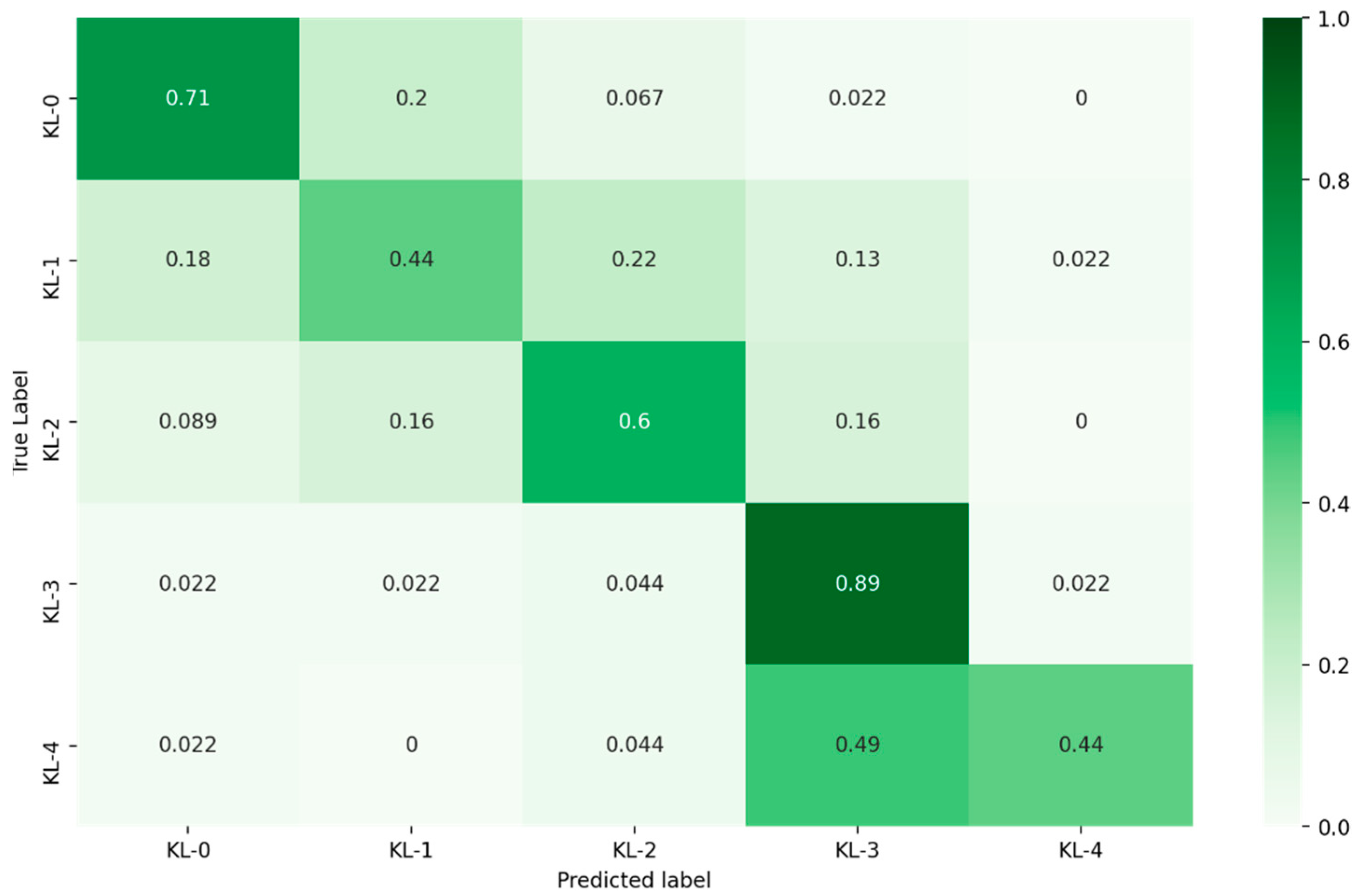

3.1. Classification of KL Degrees

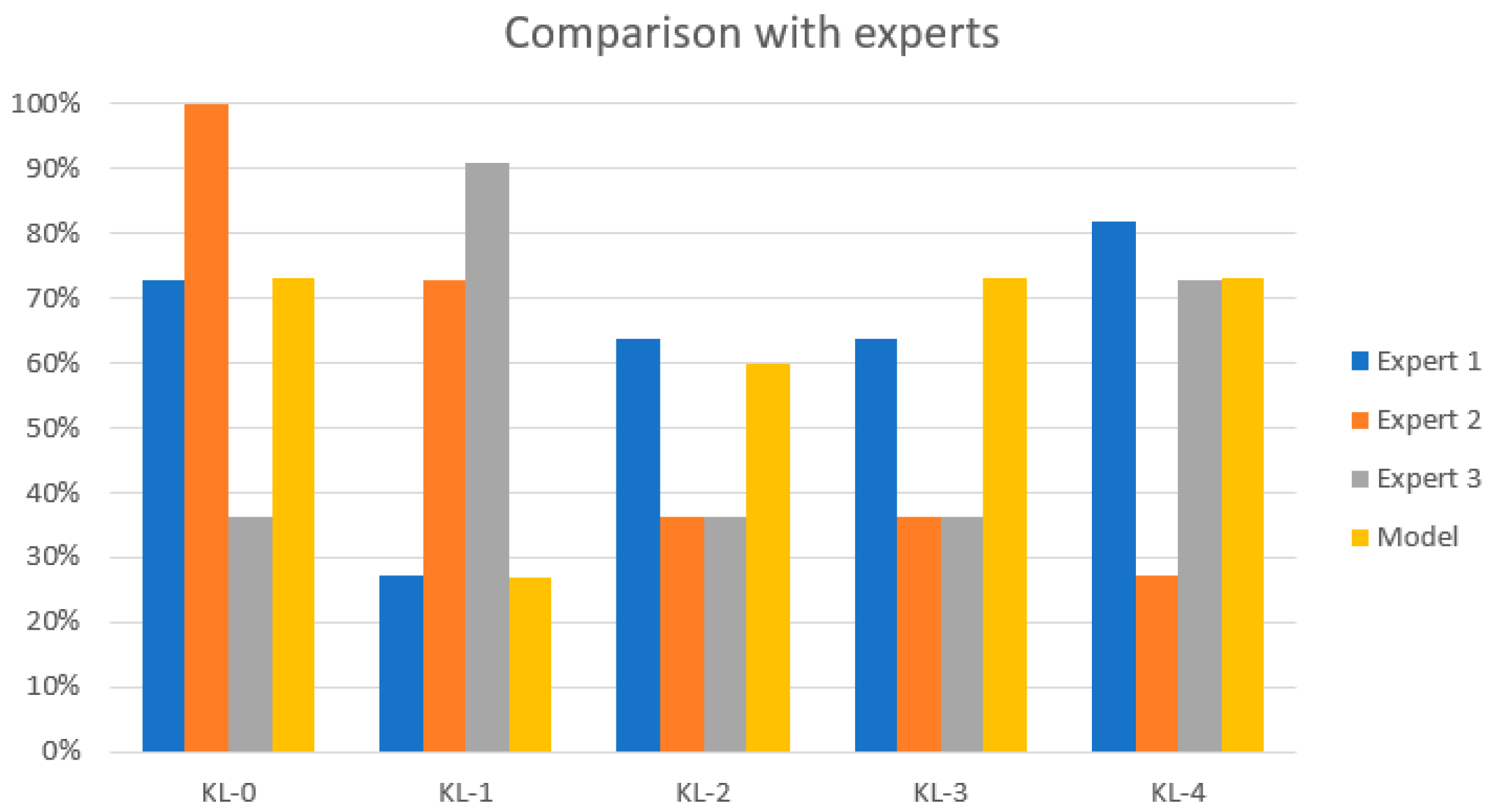

3.2. KL Model Comparison

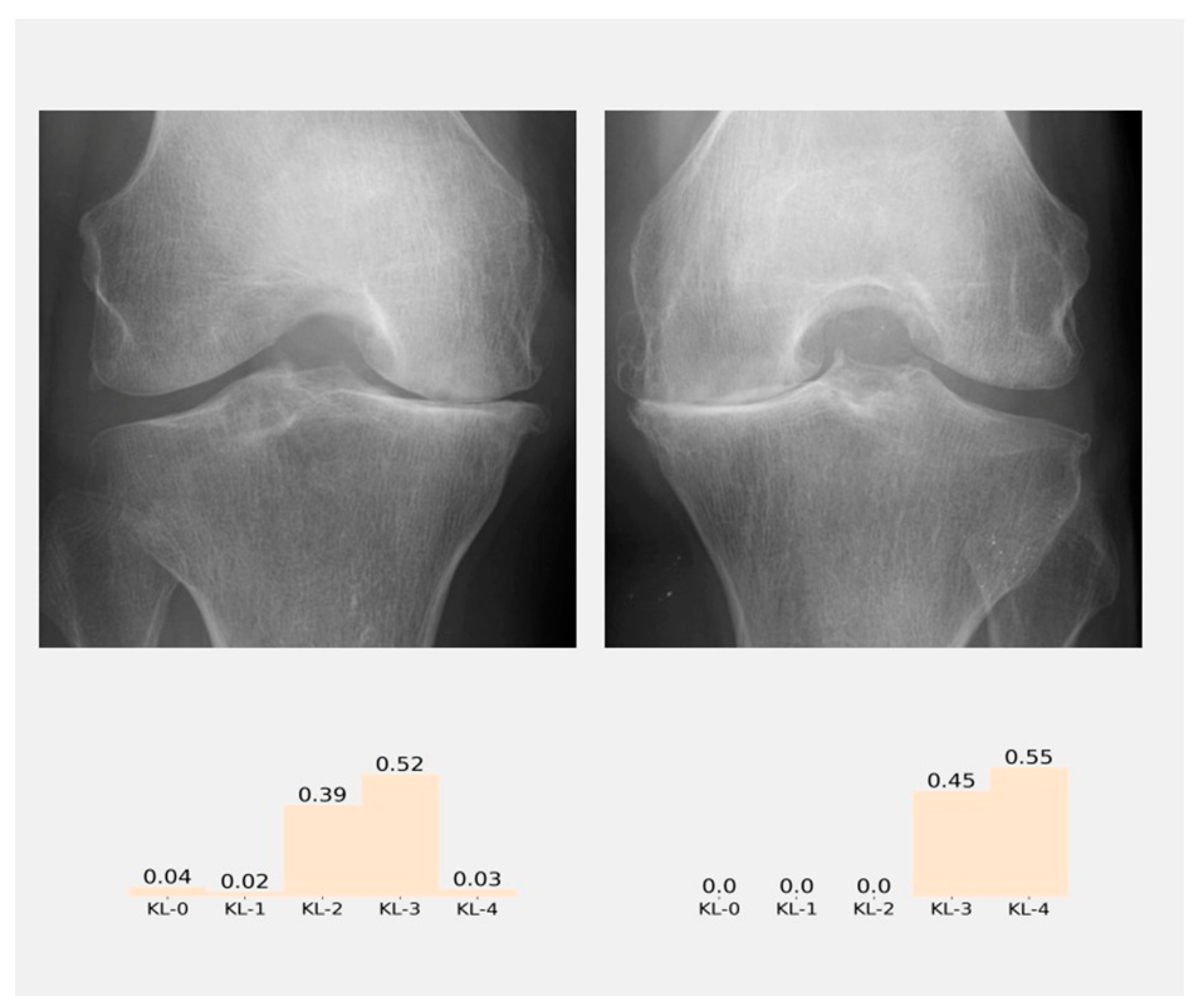

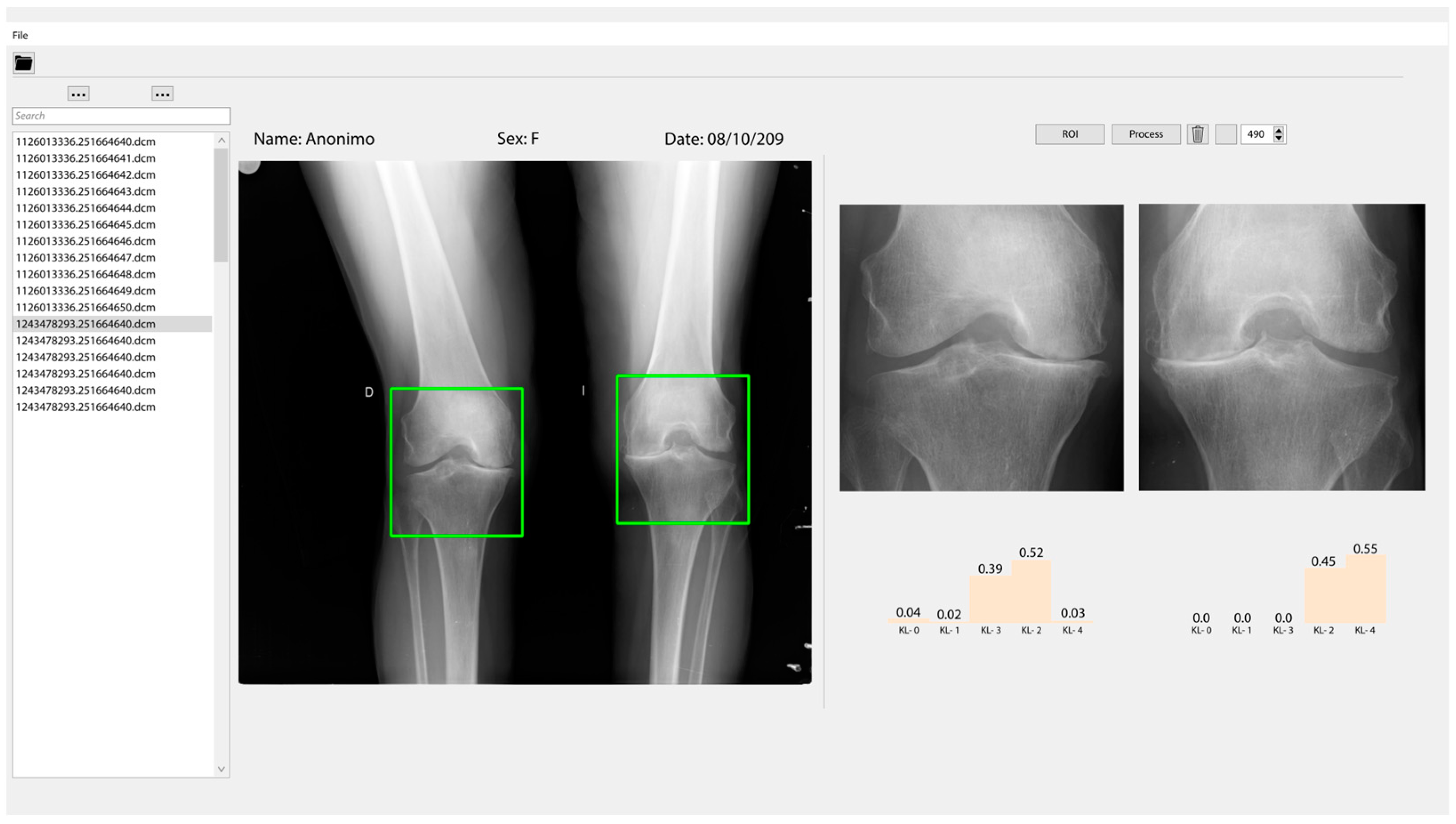

3.3. Classification and User Interface

4. Discussion and Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| AI | Artificial intelligence |
| OA | Osteoarthritis |
| KL | Kellgren and Lawrence scale |
| AUC | Area under the curve |
| CNN | Convolutional neural network |
| CAD | Computer-aided diagnosis/detection |
| DL | Deep learning |

References

1. Nelson, A.E. Osteoarthritis Year in Review 2017: Clinical. Osteoarthr. Cartil. 2018, 26, 319–325.
2. Tiulpin, A.; Thevenot, J.; Rahtu, E.; Lehenkari, P.; Saarakkala, S. Automatic Knee Osteoarthritis Diagnosis from Plain Radiographs: A Deep Learning-Based Approach. Sci. Rep. 2018, 8, 1727.
3. Chen, P.; Gao, L.; Shi, X.; Allen, K.; Yang, L. Fully Automatic Knee Osteoarthritis Severity Grading Using Deep Neural Networks with a Novel Ordinal Loss. Comput. Med. Imaging Graph. 2019, 75, 84–92.
4. Abedin, J.; Antony, J.; McGuinness, K.; Moran, K.; O’Connor, N.E.; Rebholz-Schuhmann, D.; Newell, J. Predicting Knee Osteoarthritis Severity: Comparative Modeling Based on Patient’s Data and Plain X-Ray Images. Sci. Rep. 2019, 9, 5761.
5. Kalo, K.; Niederer, D.; Schmitt, M.; Vogt, L. Acute effects of a single bout of exercise therapy on knee acoustic emissions in patients with osteoarthritis: A double-blinded, randomized controlled crossover trial. BMC Musculoskelet. Disord. 2022, 23, 657.
6. Kellgren, J.H.; Lawrence, J.S. Radiological Assessment of Osteo-Arthrosis. Ann. Rheum. Dis. 1957, 16, 494–502.
7. Shamir, L.; Ling, S.M.; Scott, W.W.; Bos, A.; Orlov, N.; Macura, T.J.; Eckley, D.M.; Ferrucci, L.; Goldberg, I.G. Knee X-Ray Image Analysis Method for Automated Detection of Osteoarthritis. IEEE Trans. Biomed. Eng. 2009, 56, 407–415.
8. Krakowski, P.; Karpiński, R.; Jojczuk, M.; Nogalska, A.; Jonak, J. Knee MRI underestimates the grade of cartilage lesions. Appl. Sci. 2021, 11, 1552.
9. Zhang, X.; Lin, D.; Jiang, J.; Guo, Z. Preliminary Study on Grading Diagnosis of Early Knee Osteoarthritis by Shear Wave Elastography. Contrast Media Mol. Imaging 2022, 2022, 4229181.
10. Verma, D.K.; Kumari, P.; Kanagaraj, S. Engineering Aspects of Incidence, Prevalence, and Management of Osteoarthritis: A Review. Ann. Biomed. Eng. 2022, 50, 237–252.
11. Nevalainen, M.T.; Veikkola, O.; Thevenot, J.; Tiulpin, A.; Hirvasniemi, J.; Niinimäki, J.; Saarakkala, S.S. Acoustic emissions and kinematic instability of the osteoarthritic knee joint: Comparison with radiographic findings. Sci. Rep. 2021, 11, 19558.
12. Luo, X.; Chen, P.; Yang, S.; Wu, M.; Wu, Y. Identification of abnormal knee joint vibroarthrographic signals based on fluctuation features. In Proceedings of the 2014 7th International Conference on Biomedical Engineering and Informatics, Dalian, China, 14–16 October 2014; pp. 318–322.
13. Abdullah, S.S.; Rajasekaran, M.P. Automatic detection and classification of knee osteoarthritis using deep learning approach. Radiol. Med. 2022, 127, 398–406.
14. Antony, J.; McGuinness, K.; Moran, K.; O’Connor, N.E. Automatic Detection of Knee Joints and Quantification of Knee Osteoarthritis Severity Using Convolutional Neural Networks; Lecture Notes in Computer Science; Springer: Cham, Switzerland, 2017; Volume 10358, pp. 376–390.
15. Thomson, J.; O’Neill, T.; Felson, D.; Cootes, T. Automated Shape and Texture Analysis for Detection of Osteoarthritis from Radiographs of the Knee; Lecture Notes in Computer Science; Springer: Cham, Switzerland, 2015; Volume 9350, pp. 127–134.
16. Simonyan, K.; Zisserman, A. Very Deep Convolutional Networks for Large-Scale Image Recognition. arXiv 2015, arXiv:1409.1556.
17. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.
18. Huang, G.; Liu, Z.; van der Maaten, L.; Weinberger, K.Q. Densely Connected Convolutional Networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 4700–4708.
19. Chopra, S.; Hadsell, R.; LeCun, Y. Learning a Similarity Metric Discriminatively, with Application to Face Verification. In Proceedings of the 2005 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR 2005), San Diego, CA, USA, 20–25 June 2005; Volume 1, pp. 539–546.
20. Karpiński, R. Knee Joint Osteoarthritis Diagnosis Based on Selected Acoustic Signal Discriminants Using Machine Learning. Appl. Comput. Sci. 2022, 18, 71–85.
21. Castillo, D.; Lakshminarayanan, V.; Rodríguez-Álvarez, M.J. MR Images, Brain Lesions, and Deep Learning. Appl. Sci. 2021, 11, 1675.
22. Multicenter Osteoarthritis Study (MOST) Public Data Sharing. Available online: https://most.ucsf.edu/ (accessed on 8 June 2022).
23. NIMH Data Archive - OAI. Available online: https://nda.nih.gov/oai/ (accessed on 7 June 2022).
24. Knee X-ray Images: Test Dataset. Available online: https://drive.google.com/drive/folders/1NljuU_nZB0R4UVVizk3kXwv10Tsr_ukr?usp=sharing (accessed on 12 June 2022).
25. Buda, M.; Maki, A.; Mazurowski, M.A. A Systematic Study of the Class Imbalance Problem in Convolutional Neural Networks. Neural Netw. 2018, 106, 249–259.
26. Chlap, P.; Min, H.; Vandenberg, N.; Dowling, J.; Holloway, L.; Haworth, A. A Review of Medical Image Data Augmentation Techniques for Deep Learning Applications. J. Med. Imaging Radiat. Oncol. 2021, 65, 545–563.
27. Russakovsky, O.; Deng, J.; Su, H.; Krause, J.; Satheesh, S.; Ma, S.; Huang, Z.; Karpathy, A.; Khosla, A.; Bernstein, M.; et al. ImageNet Large Scale Visual Recognition Challenge. Int. J. Comput. Vis. 2015, 115, 211–252.
28. Castillo, D.; Cueva, J.; Díaz, P.; Lakshminarayanan, V. Diagnostic Value of Knee Osteoarthritis Through Self-learning. In I+D for Smart Cities and Industry, RITAM 2021, Lecture Notes in Networks and Systems; Zambrano Vizuete, M., Botto-Tobar, M., Diaz Cadena, A., Zambrano Vizuete, A., Eds.; Springer: Cham, Switzerland, 2023; Volume 512.
29. Zhang, B.; Tan, J.; Cho, K.; Chang, G.; Deniz, C.M. Attention-Based CNN for KL Grade Classification: Data from the Osteoarthritis Initiative. In Proceedings of the International Symposium on Biomedical Imaging, Iowa City, IA, USA, 3–7 April 2020; pp. 731–735.
30. Braun, H.J.; Gold, G.E. Diagnosis of osteoarthritis: Imaging. Bone 2012, 51, 278–288.
31. Arrigunaga, F.E.C.; Aguirre-Salinas, F.B.; Villarino, A.M.; Lescano, J.G.B.; Escalante, F.A.M.; May, A.D.J.B. Correlación de la Escala de Kellgren-Lawrence con la Clasificación de Outerbridge en Pacientes con Gonalgia Crónica [Correlation of the Kellgren-Lawrence Scale with the Outerbridge Classification in Patients with Chronic Knee Pain]. Rev. Colomb. De Ortop. Y Traumatol. 2020, 34, 160–166.
32. Kumar, H.; Pal, C.P.; Sharma, Y.K.; Kumar, S.; Uppal, A. Epidemiology of knee osteoarthritis using Kellgren and Lawrence scale in Indian population. J. Clin. Orthop. Trauma 2020, 11, S125–S129.
33. Kohn, M.D.; Sassoon, A.A.; Fernando, N.D. Classifications in Brief: Kellgren-Lawrence Classification of Osteoarthritis. Clin. Orthop. Relat. Res. 2016, 474, 1886–1893.

| Group | Dataset | Images | KL-0 | KL-1 | KL-2 | KL-3 | KL-4 |
|---|---|---|---|---|---|---|---|
| Raw dataset | Chen et al., 2019 [3] | 9182 | 3253 | 1770 | 2578 | 1286 | 285 |
| Raw dataset | Private hospital | 376 | 58 | 65 | 95 | 113 | 45 |
| Training | Chen et al., 2019 [3] | 20,022 | 4422 | 4395 | 4262 | 4648 | 2295 |
| Validation | Chen et al., 2019 [3] | 1359 | 270 | 270 | 270 | 270 | 270 |
| Test | Private hospital | 225 | 45 | 45 | 45 | 45 | 45 |
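Comparing the raw and training rows shows how much the class distribution differs between them. As a quick check, the Python sketch below computes each grade's share of its row and the ratio between the largest and smallest class, using the per-grade counts from the dataset table. Row totals are recomputed from the KL columns rather than read from the Images column; the dictionary keys and helper names are illustrative only.

```python
# Per-grade image counts (KL-0 to KL-4) copied from the dataset table.
counts = {
    "Raw, Chen et al.": [3253, 1770, 2578, 1286, 285],
    "Raw, private hospital": [58, 65, 95, 113, 45],
    "Training": [4422, 4395, 4262, 4648, 2295],
    "Validation": [270, 270, 270, 270, 270],
    "Test": [45, 45, 45, 45, 45],
}


def class_shares(row):
    """Fraction of the row total (sum of the KL columns) held by each grade."""
    total = sum(row)
    return [n / total for n in row]


def imbalance_ratio(row):
    """Largest class size divided by the smallest; 1.0 means perfectly balanced."""
    return max(row) / min(row)


for name, row in counts.items():
    shares = ", ".join(f"KL-{g} {s:.1%}" for g, s in enumerate(class_shares(row)))
    print(f"{name:22s} max/min={imbalance_ratio(row):5.2f}  {shares}")
```

For the Chen et al. rows, the largest-to-smallest ratio falls from about 11.4 in the raw data to about 2.0 in the training set, while the validation and test sets are exactly balanced.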

| Kellgren–Lawrence Scale | TP | FP | FN | Precision | Recall | Execution Time |
|---|---|---|---|---|---|---|
| KL-0 | 32 | 14 | 13 | 70% | 71% | 6.11 s |
| KL-1 | 20 | 17 | 25 | 54% | 44% | |
| KL-2 | 27 | 17 | 18 | 61% | 60% | |
| KL-3 | 40 | 36 | 5 | 53% | 89% | |
| KL-4 | 20 | 2 | 25 | 91% | 44% | |
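The precision and recall columns follow directly from the TP, FP, and FN counts: precision = TP / (TP + FP) and recall = TP / (TP + FN). The Python sketch below recomputes them from the table and also derives the mean of the per-grade recalls, which is one common definition of average multiclass (balanced) accuracy; whether the authors used exactly this definition is an assumption.

```python
# TP, FP, FN per KL grade, copied from the table (45 test images per grade).
results = {
    "KL-0": (32, 14, 13),
    "KL-1": (20, 17, 25),
    "KL-2": (27, 17, 18),
    "KL-3": (40, 36, 5),
    "KL-4": (20, 2, 25),
}


def precision(tp, fp):
    """Share of images predicted as this grade that truly have it."""
    return tp / (tp + fp)


def recall(tp, fn):
    """Share of images of this grade that the model graded correctly."""
    return tp / (tp + fn)


for grade, (tp, fp, fn) in results.items():
    print(f"{grade}: precision={precision(tp, fp):.0%} recall={recall(tp, fn):.0%}")

# Every test image is either a TP or an FN of its true grade.
n_images = sum(tp + fn for tp, _, fn in results.values())  # 225
accuracy = sum(tp for tp, _, _ in results.values()) / n_images
# Balanced accuracy: unweighted mean of the per-grade recalls.
balanced = sum(recall(tp, fn) for tp, _, fn in results.values()) / len(results)
print(f"accuracy={accuracy:.2%} mean recall={balanced:.2%}")
```

Because the test set holds the same number of images for every grade, overall accuracy and mean recall coincide here; on an imbalanced test set they would diverge.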

| Kellgren–Lawrence Scale | Expert 1 | Expert 2 | Expert 3 | Our Model |
|---|---|---|---|---|
| KL-0 | 73% | 100% | 36% | 73% |
| KL-1 | 27% | 73% | 91% | 27% |
| KL-2 | 64% | 36% | 36% | 50% |
| KL-3 | 64% | 36% | 36% | 73% |
| KL-4 | 82% | 27% | 73% | 73% |
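To compare the raters at a glance, each column can be summarized by its unweighted mean across grades and by the spread between its best and worst grade. The Python sketch below does this; both summaries are illustrative choices rather than statistics reported by the authors, and `summarize` is a hypothetical helper.

```python
# Per-grade percentages (KL-0 to KL-4) copied from the expert comparison table.
agreement = {
    "Expert 1": [73, 27, 64, 64, 82],
    "Expert 2": [100, 73, 36, 36, 27],
    "Expert 3": [36, 91, 36, 36, 73],
    "Our Model": [73, 27, 50, 73, 73],
}


def summarize(scores):
    """Unweighted mean and max-min spread (percentage points) over the five grades."""
    return sum(scores) / len(scores), max(scores) - min(scores)


for rater, scores in agreement.items():
    mean, spread = summarize(scores)
    print(f"{rater:9s} mean={mean:.1f}% spread={spread} pts")
```

On these figures the model's mean (59.2%) lies between Expert 1 (62.0%) and Experts 2 and 3 (54.4% each), and its spread across grades (46 points) is the narrowest of the four columns.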

| Model | Learning Rate | Optimizer | Kappa | Average Multiclass Accuracy |
|---|---|---|---|---|
| This work | 1 × 10⁻⁴ | Adam | 0.79 | 61.71% |
| Tiulpin et al., 2018 [2] | 1 × 10⁻⁴ | Adam | 0.83 | 66.71% |
| Antony et al., 2017 [14] | 1 × 10⁻³ | SGD | 0.77 | 59.52% |
| Zhang et al., 2020 [29] | - | - | 0.88 | 74.81% |
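The comparison table reports a kappa value without stating the weighting scheme. For ordinal grades such as KL, quadratic-weighted Cohen's kappa is widely used because it penalizes a KL-0 versus KL-4 confusion far more than a KL-2 versus KL-3 one. The plain-Python sketch below assumes that variant; `quadratic_weighted_kappa` is a hypothetical helper, and scikit-learn's `cohen_kappa_score(y_true, y_pred, labels=[0, 1, 2, 3, 4], weights="quadratic")` should give the same value.

```python
def quadratic_weighted_kappa(y_true, y_pred, n_classes=5):
    """Cohen's kappa with quadratic disagreement weights for integer grades 0..n_classes-1."""
    n = len(y_true)
    # Observed confusion matrix: observed[i][j] counts true grade i predicted as j.
    observed = [[0] * n_classes for _ in range(n_classes)]
    for t, p in zip(y_true, y_pred):
        observed[t][p] += 1
    # Marginal totals of the true and predicted grades.
    row = [sum(observed[i]) for i in range(n_classes)]
    col = [sum(observed[i][j] for i in range(n_classes)) for j in range(n_classes)]
    weighted_observed = weighted_expected = 0.0
    for i in range(n_classes):
        for j in range(n_classes):
            # Disagreement cost grows with the squared distance between grades.
            w = (i - j) ** 2 / (n_classes - 1) ** 2
            weighted_observed += w * observed[i][j]
            # Expected count if the two raters graded independently.
            weighted_expected += w * row[i] * col[j] / n
    if weighted_expected == 0:
        raise ValueError("kappa is undefined when both raters use one identical grade")
    return 1.0 - weighted_observed / weighted_expected
```

Perfect agreement gives 1.0, chance-level agreement gives about 0, and a fully reversed grading (KL-0 read as KL-4 and so on) gives -1.0.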

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

How to Cite

Cueva, J.H.; Castillo, D.; Espinós-Morató, H.; Durán, D.; Díaz, P.; Lakshminarayanan, V. Detection and Classification of Knee Osteoarthritis. Diagnostics 2022, 12, 2362. https://doi.org/10.3390/diagnostics12102362
