Automated Radiology Alert System for Pneumothorax Detection on Chest Radiographs Improves Efficiency and Diagnostic Performance

Abstract

1. Introduction

2. Materials and Methods

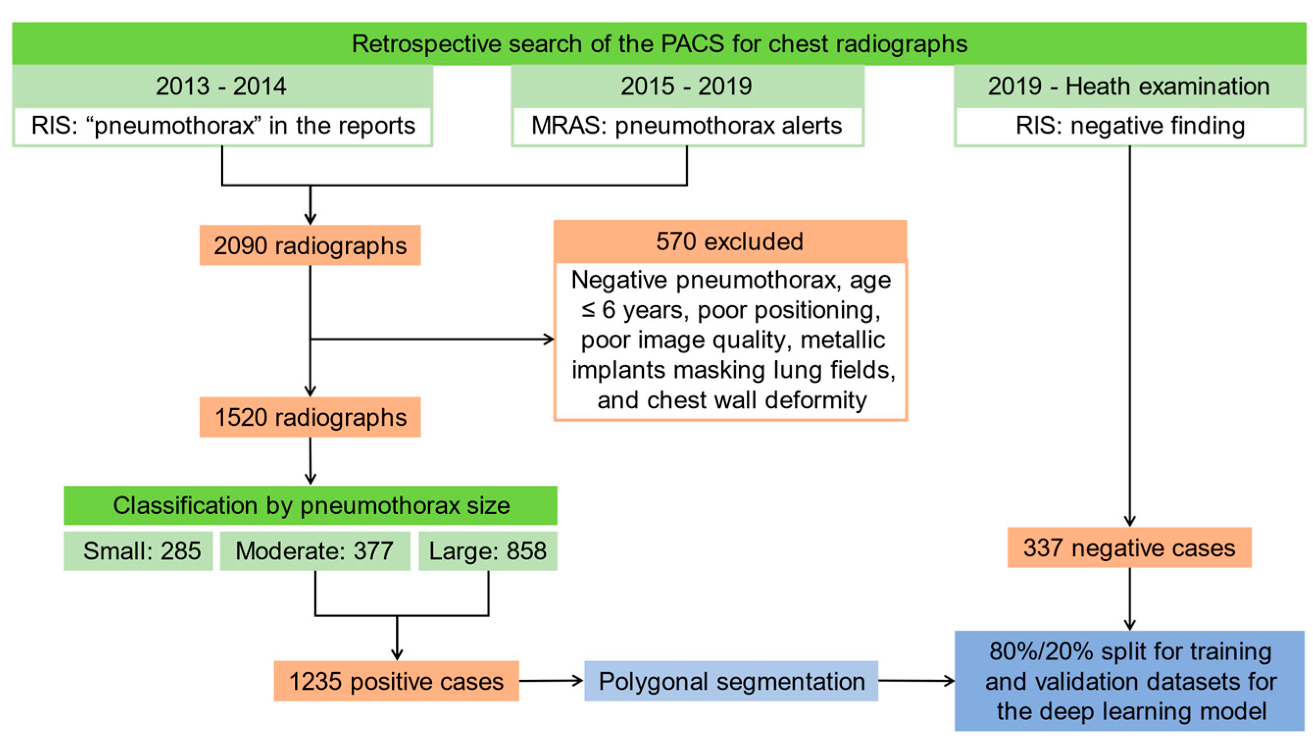

2.1. Patients and Image Acquisition

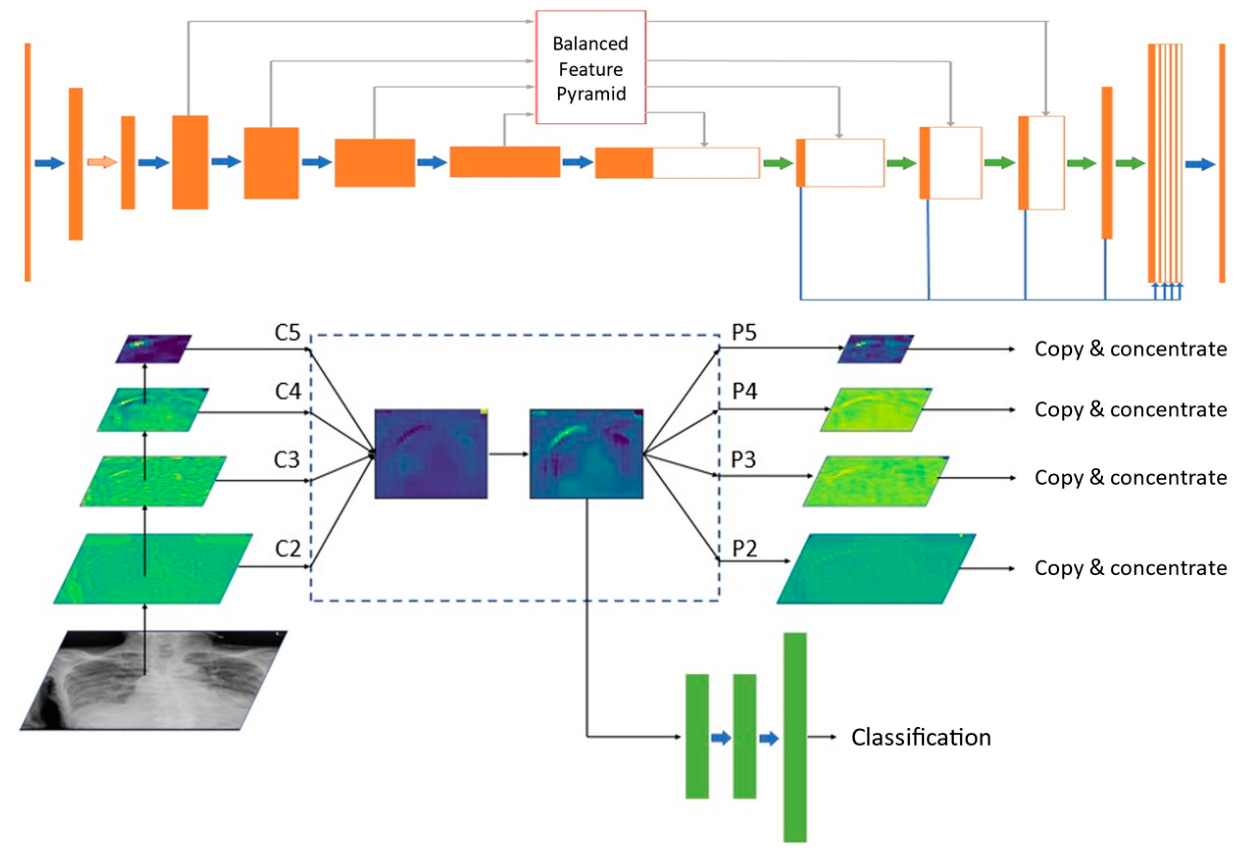

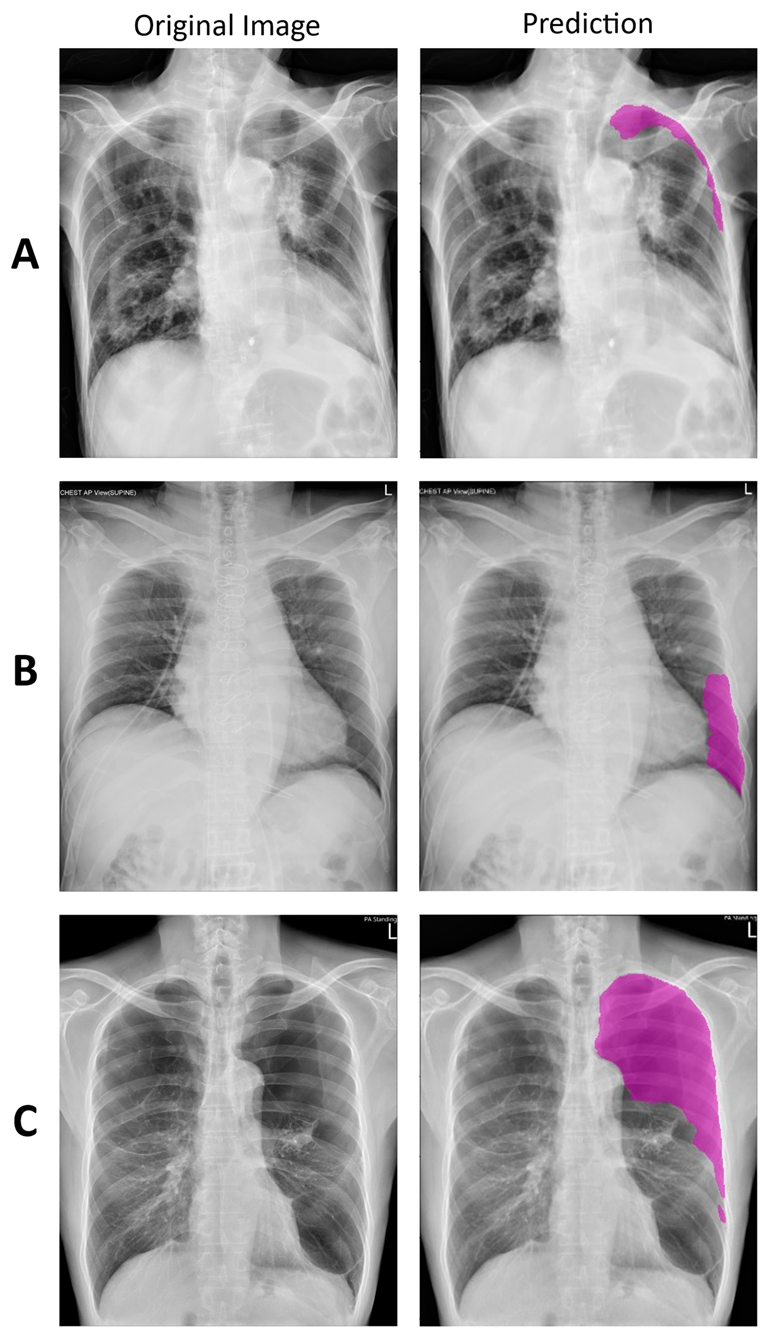

2.2. Deep Learning Model

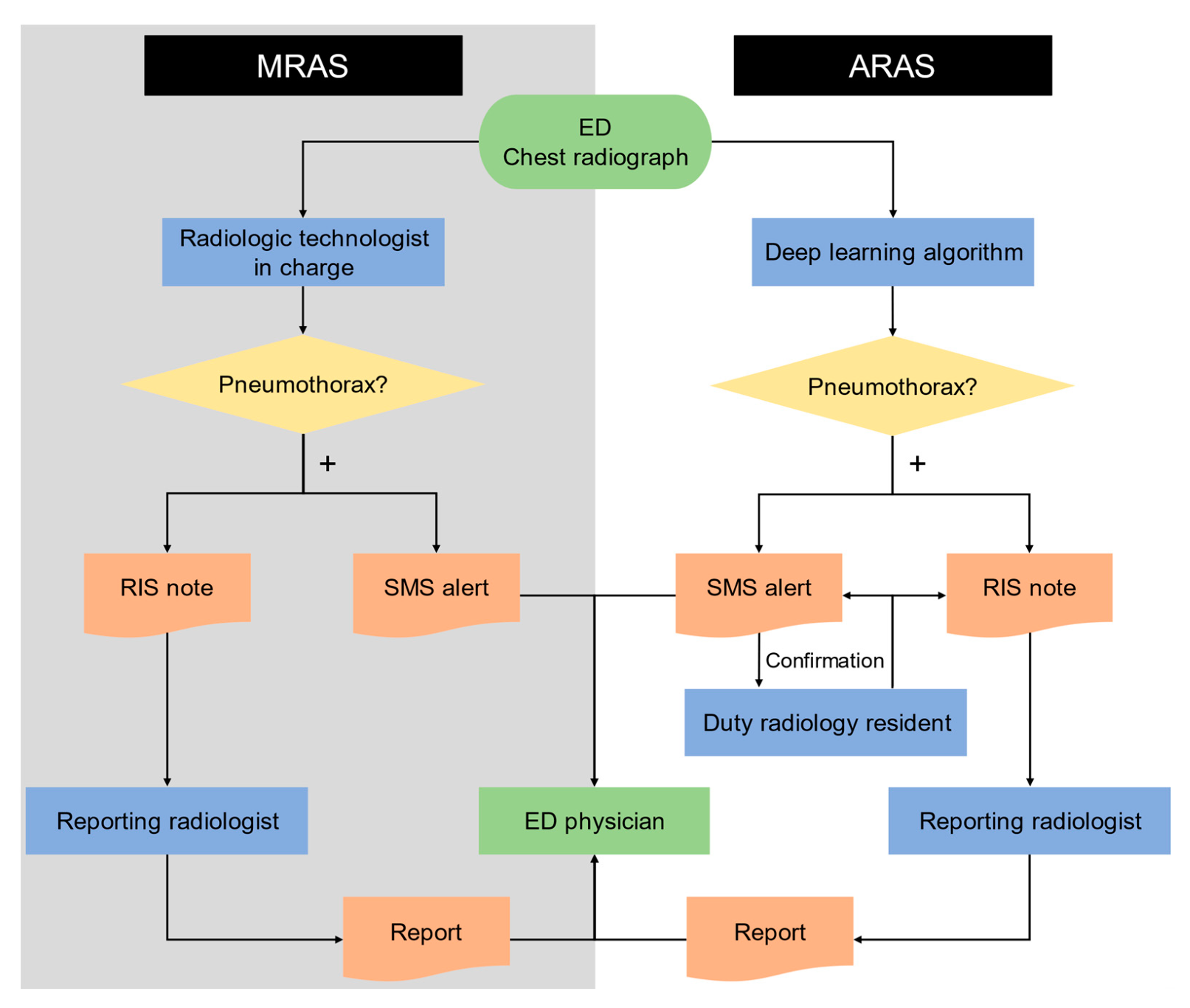

2.3. Manual and Automated Radiology Alert Systems

2.4. Efficiency of Deep Learning Model

2.5. Diagnostic Performance during Parallel Running of the Two Systems

2.6. Statistical Analysis

3. Results

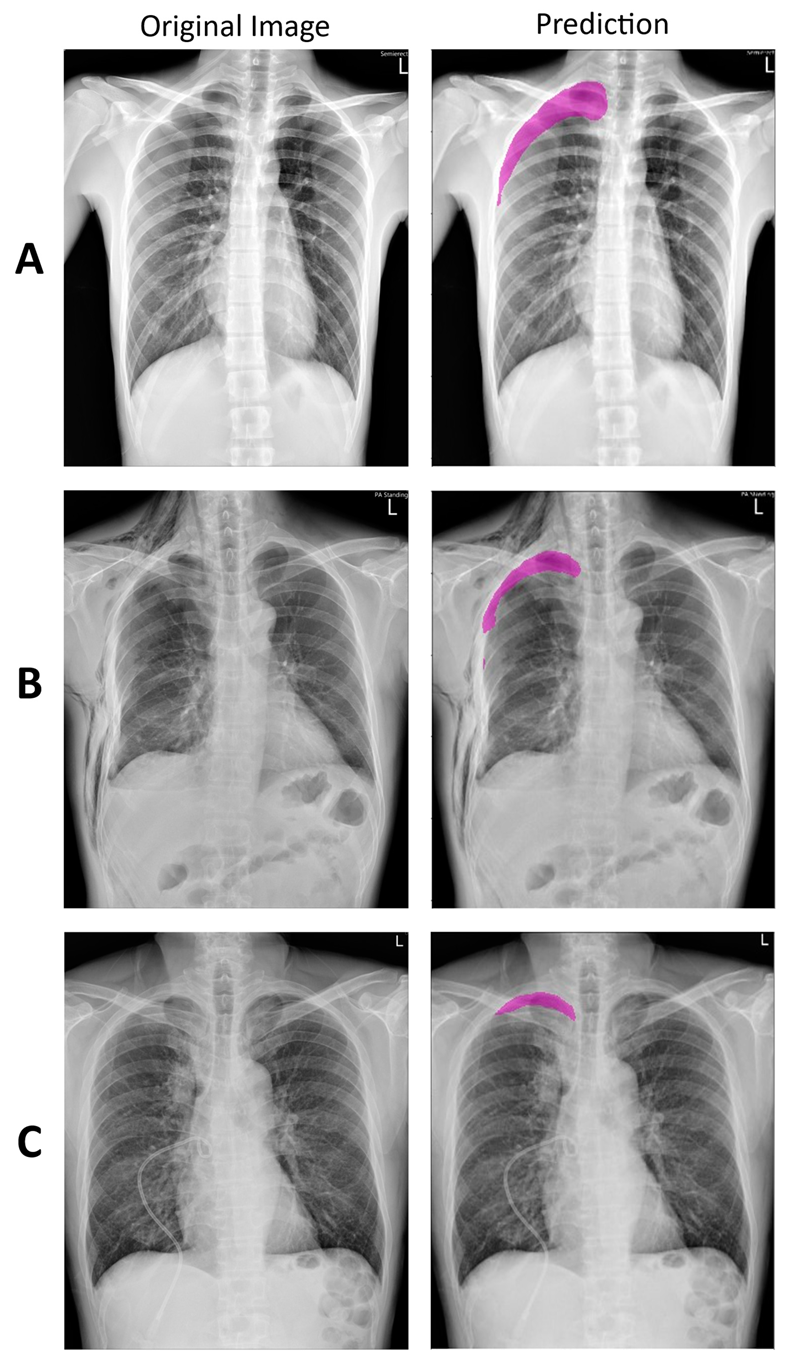

3.1. Deep Learning Model

3.2. Efficiency of Deep Learning Model

3.3. Diagnostic Performance during Parallel Running of the Two Systems

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A

References

- Raoof, S.; Feigin, D.; Sung, A.; Raoof, S.; Irugulpati, L.; Rosenow, E.C. Interpretation of Plain Chest Roentgenogram. Chest 2012, 141, 545–558.

- Yarmus, L.; Feller-Kopman, D. Pneumothorax in the Critically Ill Patient. Chest 2012, 141, 1098–1105.

- Seow, A.; Kazerooni, E.A.; Pernicano, P.G.; Neary, M. Comparison of upright inspiratory and expiratory chest radiographs for detecting pneumothoraces. Am. J. Roentgenol. 1996, 166, 313–316.

- Thomsen, L.; Natho, O.; Feigen, U.; Schulz, U.; Kivelitz, D. Value of Digital Radiography in Expiration in Detection of Pneumothorax. RoFo Fortschr. Geb. Rontgenstrahlen Bildgeb. Verfahr. 2013, 186, 267–273.

- Wang, X.; Peng, Y.; Lu, L.; Lu, Z.; Bagheri, M.; Summers, R.M. ChestX-ray8: Hospital-scale Chest X-ray Database and Benchmarks on Weakly-Supervised Classification and Localization of Common Thorax Diseases. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; Institute of Electrical and Electronics Engineers (IEEE): New York, NY, USA, 2017; pp. 2097–2106.

- Hwang, E.J.; Park, S.; Jin, K.-N.; Kim, J.I.; Choi, S.Y.; Lee, J.H.; Goo, J.M.; Aum, J.; Yim, J.-J.; Cohen, J.G.; et al. Development and Validation of a Deep Learning–Based Automated Detection Algorithm for Major Thoracic Diseases on Chest Radiographs. JAMA Netw. Open 2019, 2, e191095.

- Prevedello, L.M.; Erdal, B.S.; Ryu, J.L.; Little, K.J.; Demirer, M.; Qian, S.; White, R.D. Automated Critical Test Findings Identification and Online Notification System Using Artificial Intelligence in Imaging. Radiology 2017, 285, 923–931.

- Taylor, A.G.; Mielke, C.; Mongan, J. Automated detection of moderate and large pneumothorax on frontal chest X-rays using deep convolutional neural networks: A retrospective study. PLoS Med. 2018, 15, e1002697.

- Wada, K. Labelme: Image Polygonal Annotation with Python, GitHub. 2016. Available online: https://github.com/wkentaro/labelme (accessed on 25 February 2020).

- Ronneberger, O.; Fischer, P.; Brox, T. U-net: Convolutional Networks for Biomedical Image Segmentation. In Medical Image Computing and Computer-Assisted Intervention–MICCAI 2015; Springer: Cham, Switzerland, 2015; pp. 234–241.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Pang, J.; Chen, K.; Shi, J.; Feng, H.; Ouyang, W.; Lin, D. Libra R-CNN: Towards Balanced Learning for Object Detection. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; Institute of Electrical and Electronics Engineers (IEEE): New York, NY, USA, 2019; pp. 821–830.

- Park, S.; Lee, S.M.; Kim, N.; Choe, J.; Cho, Y.; Do, K.-H.; Seo, J.B. Application of deep learning–based computer-aided detection system: Detecting pneumothorax on chest radiograph after biopsy. Eur. Radiol. 2019, 29, 5341–5348.

- Hwang, E.J.; Hong, J.H.; Lee, K.H.; Kim, J.I.; Nam, J.G.; Kim, D.S.; Choi, H.; Yoo, S.J.; Goo, J.M.; Park, C.M. Deep learning algorithm for surveillance of pneumothorax after lung biopsy: A multicenter diagnostic cohort study. Eur. Radiol. 2020, 30, 3660–3671.

- Park, S.; Lee, S.M.; Lee, K.H.; Jung, K.-H.; Bae, W.; Choe, J.; Seo, J.B. Deep learning-based detection system for multiclass lesions on chest radiographs: Comparison with observer readings. Eur. Radiol. 2019, 30, 1359–1368.

- Majkowska, A.; Mittal, S.; Steiner, D.F.; Reicher, J.J.; McKinney, S.M.; Duggan, G.E.; Eswaran, K.; Chen, P.-H.C.; Liu, Y.; Kalidindi, S.R.; et al. Chest Radiograph Interpretation with Deep Learning Models: Assessment with Radiologist-adjudicated Reference Standards and Population-adjusted Evaluation. Radiology 2020, 294, 421–431.

- Bobbio, A.; Dechartres, A.; Bouam, S.; Damotte, D.; Rabbat, A.; Régnard, J.-F.; Roche, N.; Alifano, M. Epidemiology of spontaneous pneumothorax: Gender-related differences. Thorax 2015, 70, 653–658.

- Hiyama, N.; Sasabuchi, Y.; Jo, T.; Hirata, T.; Osuga, Y.; Nakajima, J.; Yasunaga, H. The three peaks in age distribution of females with pneumothorax: A nationwide database study in Japan. Eur. J. Cardio Thorac. Surg. 2018, 54, 572–578.

- Kim, D.; Jung, B.; Jang, B.-H.; Chung, S.-H.; Lee, Y.J.; Ha, I.-H. Epidemiology and medical service use for spontaneous pneumothorax: A 12-year study using nationwide cohort data in Korea. BMJ Open 2019, 9.

- Tolkachev, A.; Sirazitdinov, I.; Kholiavchenko, M.; Mustafaev, T.; Ibragimov, B. Deep Learning for Diagnosis and Segmentation of Pneumothorax: The Results on the Kaggle Competition and Validation against Radiologists. IEEE J. Biomed. Health Inform. 2021, 25, 1660–1672.

- Woo, S.; Park, J.; Lee, J.-Y.; Kweon, I.S. CBAM: Convolutional Block Attention Module. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; Springer: Cham, Switzerland, 2018; pp. 3–19.

| Ground Truth | Size | MRAS Positive | MRAS Negative | ARAS Positive | ARAS Negative | Total |
|---|---|---|---|---|---|---|
| Positive | All sizes | 22 | 64 | 72 | 14 | 86 |
| | Small | 2 | 11 | 8 | 5 | 13 |
| | Moderate | 3 | 17 | 14 | 6 | 20 |
| | Large | 17 | 36 | 50 | 3 | 53 |
| Negative | | 0 | 3653 | 33 | 3620 | 3653 |
| Total | | 22 | 3717 | 105 | 3634 | 3739 |
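The small, moderate, and large strata partition the ground-truth-positive radiographs, so their counts should add up to the positive subtotal (22, 64, 72, 14) and sensitivity can be read per size straight from the table. The Python sketch below does only that arithmetic; `STRATA`, `sensitivity`, and `per_size_sensitivity` are hypothetical names introduced for illustration, and the per-size values it prints are derived from the tabulated counts, not quoted from the study.

```python
# Ground-truth-positive radiographs by pneumothorax size, copied from the table:
# size -> (MRAS positive, MRAS negative, ARAS positive, ARAS negative)
# (hypothetical helper structure, not from the study)
STRATA = {
    "small": (2, 11, 8, 5),
    "moderate": (3, 17, 14, 6),
    "large": (17, 36, 50, 3),
}


def sensitivity(detected: int, missed: int) -> float:
    """Fraction of true pneumothoraces that triggered an alert."""
    return detected / (detected + missed)


def per_size_sensitivity(strata: dict) -> dict:
    """Map each size stratum to (MRAS sensitivity, ARAS sensitivity)."""
    return {
        size: (sensitivity(mp, mn), sensitivity(ap, an))
        for size, (mp, mn, ap, an) in strata.items()
    }


# Column-wise sums of the strata must reproduce the positive subtotal row.
totals = tuple(sum(col) for col in zip(*STRATA.values()))
assert totals == (22, 64, 72, 14), totals

for size, (m_sens, a_sens) in per_size_sensitivity(STRATA).items():
    print(f"{size:>8}: MRAS {m_sens:.3f}  ARAS {a_sens:.3f}")
```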

| Metric | MRAS | ARAS |
|---|---|---|
| Sensitivity (Recall) | 0.256 (0.168–0.361) | 0.837 (0.742–0.908) |
| Specificity | 1.000 (0.999–1.000) | 0.991 (0.987–0.994) |
| PPV (Precision) | 1.000 (1.000–1.000) | 0.686 (0.605–0.756) |
| NPV | 0.983 (0.981–0.985) | 0.996 (0.994–0.998) |
| Accuracy | 0.983 (0.978–0.987) | 0.987 (0.983–0.991) |
| AUC | 0.628 (0.612–0.643) | 0.914 (0.905–0.923) |
| F1 score | 0.407 (0.391–0.423) | 0.754 (0.740–0.768) |
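Every point estimate in the metrics table follows from the four 2×2 counts in the confusion table (true positives, false negatives, false positives, true negatives). The Python sketch below recomputes them; the `Confusion` class and `metrics` function are hypothetical names. It assumes the AUC was taken from a single binary operating point, where the ROC area reduces to (sensitivity + specificity)/2; that assumption reproduces the tabulated 0.628 and 0.914, but the study's actual AUC procedure is not described here. Confidence intervals are omitted because the interval method is not stated.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Confusion:
    """Counts from a 2x2 confusion matrix (reference standard vs. alert system)."""
    tp: int  # pneumothorax present, alert raised
    fn: int  # pneumothorax present, no alert
    fp: int  # no pneumothorax, alert raised
    tn: int  # no pneumothorax, no alert


def metrics(c: Confusion) -> dict:
    """Point estimates of the diagnostic metrics for one alert system."""
    sens = c.tp / (c.tp + c.fn)
    spec = c.tn / (c.tn + c.fp)
    return {
        "sensitivity": sens,
        "specificity": spec,
        "ppv": c.tp / (c.tp + c.fp),
        "npv": c.tn / (c.tn + c.fn),
        "accuracy": (c.tp + c.tn) / (c.tp + c.fn + c.fp + c.tn),
        # Assumption: one binary operating point, so the ROC curve has a single
        # vertex and its area is (sensitivity + specificity) / 2.
        "auc": (sens + spec) / 2,
        # Harmonic mean of precision and recall, written in count form.
        "f1": 2 * c.tp / (2 * c.tp + c.fp + c.fn),
    }


# Counts read from the confusion table (positive subtotal and negative rows).
mras = Confusion(tp=22, fn=64, fp=0, tn=3653)
aras = Confusion(tp=72, fn=14, fp=33, tn=3620)

for name, c in (("MRAS", mras), ("ARAS", aras)):
    print(name, {k: round(v, 3) for k, v in metrics(c).items()})
```

Rounded to three decimals, these values agree with every point estimate in the table, which is a quick consistency check between the two tables.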

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Kao, C.-Y.; Lin, C.-Y.; Chao, C.-C.; Huang, H.-S.; Lee, H.-Y.; Chang, C.-M.; Sung, K.; Chen, T.-R.; Chiang, P.-C.; Huang, L.-T.; et al. Automated Radiology Alert System for Pneumothorax Detection on Chest Radiographs Improves Efficiency and Diagnostic Performance. Diagnostics 2021, 11, 1182. https://doi.org/10.3390/diagnostics11071182

Kao, C.-Y., Lin, C.-Y., Chao, C.-C., Huang, H.-S., Lee, H.-Y., Chang, C.-M., Sung, K., Chen, T.-R., Chiang, P.-C., Huang, L.-T., Wang, B., Liu, Y.-S., Chiang, J.-H., Wang, C.-K., & Tsai, Y.-S. (2021). Automated Radiology Alert System for Pneumothorax Detection on Chest Radiographs Improves Efficiency and Diagnostic Performance. Diagnostics, 11(7), 1182. https://doi.org/10.3390/diagnostics11071182