Abstract

Efficient segmentation of Magnetic Resonance (MR) brain tumor images is of the utmost value for diagnosing the tumor region. In recent years, advances in neural networks have been used to refine the segmentation of brain tumor sub-regions. Brain tumor segmentation has nevertheless proven to be a complicated task even for neural networks because of small-scale tumor regions. These regions are difficult to identify because of their tiny size and the large difference in the area occupied by the different tumor classes. In previous state-of-the-art neural network models, the biggest problem was that location information and spatial details are lost in the deeper layers. To address these problems, we propose an encoder–decoder based model named BrainSeg-Net. A Feature Enhancer (FE) block is incorporated into the BrainSeg-Net architecture; it extracts middle-level features from the low-level features of the shallow layers and shares them with the dense layers. This feature aggregation helps to achieve better tumor identification. To address the class imbalance problem, we use a custom-designed loss function. BrainSeg-Net is evaluated on three benchmark datasets: BraTS 2017, BraTS 2018, and BraTS 2019. Segmentation of Enhancing Core (EC), Whole Tumor (WT), and Tumor Core (TC) is carried out. The proposed architecture improves on the existing baseline and state-of-the-art techniques. By using enhanced location and spatial features, BrainSeg-Net performs better than the existing brain MR image segmentation approaches it is compared with.

1. Introduction

In modern society, brain-related diseases are emerging as a major problem, especially malignant brain tumors, which greatly affect human lives [1]. Gliomas are the most common malignant brain tumors. They are caused by abnormal cell transformation and are broadly classified into High-Grade Gliomas (HGG) and Low-Grade Gliomas (LGG) [2]. HGG are malignant tumors that have already grown; they have progressed considerably, and surgery is essential. LGG are less advanced than HGG, and life expectancy can be extended through treatment [3]. Several methods can be used to distinguish these tumor lesions: Computed Tomography (CT), X-ray, Single-Photon Emission Computed Tomography (SPECT), Ultrasound, Magnetic Resonance Imaging (MRI), Positron Emission Tomography (PET), Magnetoencephalography (MEG), and Electroencephalogram (EEG) [4]. Among all medical imaging techniques, however, MRI is considered the most comprehensive method, as it can help to determine the exact size and volume of the malignant tumor [5]. The images generated by MRI are used to measure and analyze the location and size of the tumor, and the tumor can be subdivided according to its characteristics, which helps in choosing an optimal diagnostic process and treatment method. Because of the high quality of MRI, effective segmentation of brain tumors has become one of the most important research problems in the field of medical imaging [6].

In MRI, a brain tumor is segmented from four image sequences with different characteristics: T1-weighted (T1), contrast-enhanced T1-weighted (T1ce), T2-weighted (T2), and Fluid-Attenuated Inversion Recovery (FLAIR). The T1 image is used to differentiate healthy tissue, and T2 is used to delineate the edema area, which produces a bright signal. In T1ce, the tumor boundary and the active cell area of the tumor tissue can be distinguished by the bright signal of the accumulated contrast agent. FLAIR differentiates between edema and cerebrospinal fluid by suppressing the signal of water molecules [1]. Based on these images, tumors can be subdivided into tumor core, enhancing tumor, and whole tumor. Some tumors, such as meningiomas, are easy to segment, but gliomas and glioblastomas are diffuse and poorly contrasted, which makes them difficult to segment [7]. In addition, they can occur at any location and vary in size, which further complicates segmentation.

Brain tumor segmentation models are generally divided into 2D and 3D data-based models. Several studies [8,9,10,11] have demonstrated that 3D architectures perform better than 2D architectures. However, 3D architectures have limitations, as they use more parameters and are computationally complex [12]. Specifically, the dataset used with a 3D model is often reduced to half the size of the existing training data.

In this research, we put forward a model named BrainSeg-Net. BrainSeg-Net uses an FE block, which enhances the features of the shallow layers before they are added to the deep layers and thereby contributes to the classification and segmentation process. Moreover, the feature maps extracted by the FE block are upsampled and concatenated with the result of the higher-level encoder–decoder connection. This increases the effective receptive field of the network, which helps to locate small-scale tumors.

2. Related Work

In the past few years, various deep learning models for computer vision tasks have been proposed, such as VGGNet [13], ResNet [14], and DenseNet [15]. Deep neural networks have a strong ability to extract discriminant features automatically; therefore, they are widely used in the fields of medical imaging and bioinformatics [16,17,18,19,20]. Similarly, the use of deep learning for computer-aided diagnosis of brain tumors has attracted extensive attention. In recent years, the Medical Image Computing and Computer-Assisted Intervention (MICCAI) conference and the Brain Tumor Segmentation (BraTS) challenge have greatly contributed to the development of neural network-based architectures for brain tumor diagnosis.

Broadly, deep learning methods for image segmentation are of two types: Convolutional Neural Network (CNN) based methods and Fully Convolutional Network (FCN) based methods. CNN-based methods use small-patch classification for tumor segmentation. Havaei et al. segmented brain tumor regions from 2D MRI images with a CNN architecture and used convolutional kernels of various sizes to extract important contextual features [7]. Another CNN-based technique, proposed by Pereira et al., is an automated segmentation architecture based on VGG-Net. However, because CNN architectures of this kind are patch-based, they require large storage space and lack spatial continuity, resulting in poor efficiency. FCN techniques, on the other hand, perform the computation pixel by pixel based on the encoder–decoder concept, which both increases the efficiency of brain tumor segmentation and solves the spatial continuity problem [21].

Based on the FCN concept, Ronneberger et al. [22] proposed the U-Net architecture, which has been applied to various medical segmentation problems. U-Net is built on encoding and decoding paths, where the encoding path extracts contextual features while the decoding path keeps accurate track of location. The concatenation of encoder and decoder features in U-Net greatly improves medical image segmentation performance, and the architecture is widely used by researchers for brain tumor segmentation. Dong et al. proposed a 2D segmentation network based on U-Net and applied real-time data augmentation to increase brain tumor segmentation performance [23]. Kong et al. added a feature pyramid module to U-Net to increase accuracy by integrating location details and multi-scale semantics [24]. Extensive work has been carried out to improve U-Net for image segmentation, such as using dilated convolution, introducing up skip connections, using dense blocks, and embedding MultiRes blocks in the base U-Net architecture [25,26,27,28]. To improve U-Net further, Attention Gates (AGs) were integrated into the architecture [29]. Squeeze-and-Excitation (SE) blocks [30] were added in [31,32] for prostate zonal segmentation on MR images. Vasileios et al. proposed a skull stripping technique which classifies skull-free images to give better segmentation performance. McKinley et al. developed a network by applying the extended convolution structure [33]. Wang et al. showed that brain segmentation performance improves when convolutional neural networks are applied with Test Time Augmentation (TTA) [34].

Undoubtedly, U-Net has shown great success in the medical field, and it is currently the mainstream segmentation architecture for brain tumor MRI. However, the encoder side of U-Net performs downsampling, which reduces the image size and lowers performance because small-scale tumors can no longer be identified. To solve this problem, a mechanism is needed that enhances the local features and helps the decoder to locate the tumor efficiently.

3. Datasets

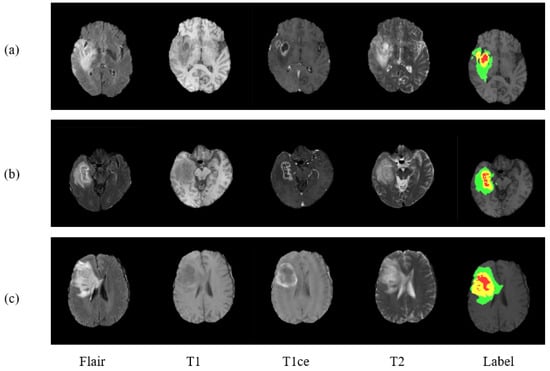

For the evaluation of the proposed model, three benchmark databases are considered: BraTS 2017, BraTS 2018, and BraTS 2019. These are publicly available benchmark databases used to train and evaluate the model. The BraTS 2017 dataset is a collection of data from 285 glioma patients, consisting of 210 HGG and 75 LGG cases. The validation dataset additionally includes images of 46 patients of unknown grade. Unlike the training data, the validation data are not labeled, and results can only be generated using the online website of the BraTS challenge. The dataset includes all four modalities for every patient, as can be seen in Figure 1, where the data of three different patients are shown.

Figure 1.

Three MRI cases (a–c) of brain tumor with four modalities and a label plot. From left to right: FLAIR, T1, T1ce, T2, and label. In the ground truth image, each color represents a different tumor class: red for necrosis and non-enhancing tumor, green for edema, and yellow for enhancing tumor.

There are four labels in the dataset:

- Necrosis and Non-enhancing Tumor

- Enhancing Tumor

- Healthy Tissue

- Edema
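The three regions evaluated in this paper (WT, TC, and EC) are unions of these labels. The sketch below follows the usual BraTS convention for that grouping; the integer label codes (0, 1, 2, 4) and the helper name `region_masks` are assumptions made for illustration, since this section does not state how the labels are encoded.

```python
# Assumed BraTS label codes (not stated in this section):
# 0 = healthy tissue/background, 1 = necrosis and non-enhancing tumor,
# 2 = edema, 4 = enhancing tumor.
REGIONS = {
    "WT": {1, 2, 4},  # whole tumor: every tumor label
    "TC": {1, 4},     # tumor core: every tumor label except edema
    "EC": {4},        # enhancing core: enhancing tumor only
}


def region_masks(labels):
    """Turn a flat list of per-pixel labels into one binary mask per region."""
    return {
        name: [1 if value in members else 0 for value in labels]
        for name, members in REGIONS.items()
    }


pixels = [0, 2, 1, 4, 0, 2]
masks = region_masks(pixels)
print(masks["WT"])  # [0, 1, 1, 1, 0, 1]
print(masks["TC"])  # [0, 0, 1, 1, 0, 0]
print(masks["EC"])  # [0, 0, 0, 1, 0, 0]
```

Because the regions are nested (EC inside TC inside WT), a Dice score computed per region rewards getting the small inner classes right separately from the large edema area.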

The training dataset of BraTS 2018 is similar to that of BraTS 2017, but the validation dataset is different: the BraTS 2018 validation dataset has more cases than that of BraTS 2017, namely 66. BraTS 2019 has a different training dataset with more cases than the previous databases. It comprises 335 glioma cases, of which 259 are HGG and the remaining 76 are LGG. Further, BraTS 2019 has an expanded validation dataset of 125 cases.

4. Methodology

This section discusses the preprocessing carried out on the input images along with the architecture details of BrainSeg-Net. It also discusses the FE block and the custom-designed loss function.

4.1. Image Preprocessing

Deep learning models are undoubtedly high-performing, but they still have a few weaknesses. One of them is that they are sensitive to noise, which makes image preprocessing an important step for every input image. We therefore preprocessed all the images with the N4ITK algorithm [35], a bias correction technique that makes the dataset images homogeneous. Next, the top 1% and bottom 1% of intensities are discarded, as done in [7]. Finally, all the images are normalized to a mean of 0 and a variance of 1.
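The last two preprocessing steps can be sketched as follows. This is a minimal illustration, not the authors' code: it assumes that "discarding" the extreme intensities means clipping them to the 1st and 99th percentiles, that the statistics are computed over all voxels of one image, and it leaves out the N4ITK bias correction entirely. The function names are hypothetical.

```python
import math


def percentile(sorted_values, q):
    """Linearly interpolated percentile (q in [0, 100]) of a sorted list."""
    position = (len(sorted_values) - 1) * q / 100.0
    lower = math.floor(position)
    upper = math.ceil(position)
    fraction = position - lower
    return sorted_values[lower] * (1 - fraction) + sorted_values[upper] * fraction


def clip_and_normalize(intensities, low_q=1.0, high_q=99.0):
    """Clip to the [low_q, high_q] percentile range, then z-score normalize."""
    ordered = sorted(intensities)
    low = percentile(ordered, low_q)
    high = percentile(ordered, high_q)
    clipped = [min(max(v, low), high) for v in intensities]
    mean = sum(clipped) / len(clipped)
    variance = sum((v - mean) ** 2 for v in clipped) / len(clipped)
    std = math.sqrt(variance) or 1.0  # avoid dividing by zero on flat images
    return [(v - mean) / std for v in clipped]


# Toy "image": intensities 1..100 plus one extreme outlier.
voxels = [float(v) for v in range(1, 101)] + [5000.0]
normalized = clip_and_normalize(voxels)
mean = sum(normalized) / len(normalized)
variance = sum((v - mean) ** 2 for v in normalized) / len(normalized)
print(abs(mean) < 1e-9, abs(variance - 1.0) < 1e-9)  # True True
```

Clipping before normalizing keeps a single bright outlier from dominating the mean and variance, so the bulk of the intensity range is spread over a comparable scale in every image.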

4.2. Proposed BrainSeg-Net

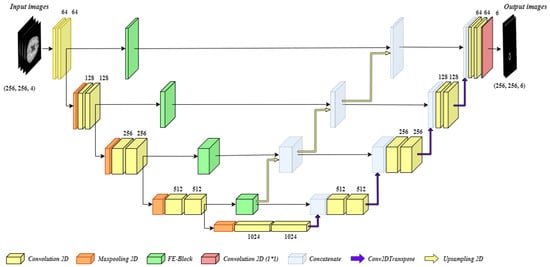

Brain tumor segmentation is a complex task, and its biggest challenge is to segment small-scale tumors. Stronger contextual features are extracted at the deeper stages of the encoder; however, at these stages spatial and location information is lost due to nonlinear transformations and repeated convolutions. By addressing this issue, an efficient model for brain image segmentation can be developed that can identify small-scale tumors as well. For this purpose, we developed BrainSeg-Net, which uses an FE block to propagate spatial and location information during upsampling (on the decoder side). Figure 2 shows the overall proposed architecture of BrainSeg-Net, and the details of the architecture are as follows.

Figure 2.

Architecture of the proposed BrainSeg-Net for MRI brain image segmentation.

As can be seen in Figure 2, the proposed BrainSeg-Net architecture is an encoder–decoder-based technique. The left side of the figure shows the encoder, which is a contracting path, while the right side shows the decoder, which is an expanding path. The preprocessed input image has four input channels. The encoder and the decoder each have four blocks, with a single transition block at the bottom of the architecture. Every encoder block consists of two convolution layers, and three of the encoder blocks also contain a single max-pooling layer. Each decoder block consists of a dropout layer and two convolution layers, and the last decoder block contains an additional convolution layer. The transition block contains a max-pooling layer along with two convolution layers. The output of every encoder block is given to the next encoder block and to the FE block. The output of the last encoder block is given to the transition block, whose output is given to the first decoder block. The sequence of decoder blocks starts from the bottom, and the network output is taken from the last decoder block. The output of every decoder block undergoes Conv2DTranspose. The bridge connection between every encoder block and its associated decoder block contains an FE block. The output of each FE block is concatenated with the outputs of all the deeper-stage FE blocks. Finally, the result is concatenated with the output of the corresponding Conv2DTranspose layer of the decoder block, dropout is applied, and the two convolution layers of the decoder block perform the convolution.

The information collected by deeper FE blocks is upgraded and shared with all available higher-level decoder blocks. Upsampling in BrainSeg-Net is done with bilinear interpolation. The convolution layers use padding, so that the output has the same size as the input; the baseline architecture does not have this characteristic. The dropout ratio in BrainSeg-Net is kept at 0.3. All convolution layers of BrainSeg-Net are followed by batch normalization and the Rectified Linear Unit (ReLU) activation function, except the last convolution layer, which uses the sigmoid activation function. The ReLU and sigmoid activation functions are given as

$$\mathrm{ReLU}(x) = \max(0, x)$$

$$\sigma(x) = \frac{1}{1 + e^{-x}}$$
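The effect of padded convolutions on the feature-map size can be checked with the standard output-size formula. The sketch below is illustrative only: the 240-pixel input, the 3×3 convolution kernels, and the 2×2 max-pooling with stride 2 are assumptions, as the text does not give these values; only the count of four pooling steps (three encoder blocks plus the transition block) comes from the description above.

```python
def conv_output_size(size, kernel, stride=1, padding=0):
    """Spatial output size of a convolution or pooling layer along one axis."""
    return (size + 2 * padding - kernel) // stride + 1


def same_padding(kernel):
    """Padding that preserves the size for stride 1 and an odd kernel."""
    return (kernel - 1) // 2


size = 240  # assumed input height/width; not given in the text

# A padded 3x3 convolution keeps the size, an unpadded one shrinks it.
padded = conv_output_size(size, kernel=3, padding=same_padding(3))
unpadded = conv_output_size(size, kernel=3)
print(padded, unpadded)  # 240 238

# Only pooling changes the size when every convolution is padded.
trace = [size]
for _ in range(4):  # three encoder poolings plus the transition-block pooling
    size = conv_output_size(size, kernel=2, stride=2)
    trace.append(size)
print(trace)  # [240, 120, 60, 30, 15]
```

With padded convolutions, encoder and decoder feature maps at the same level have matching sizes, so they can be concatenated without cropping.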

BrainSeg-Net uses the Adam optimizer along with the custom-designed loss function. The details of the FE block and the custom-designed loss function are discussed in the following subsections.

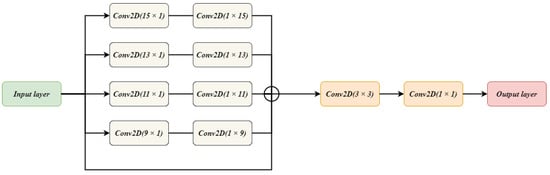

4.2.1. Feature Enhancer (FE)

The FE block helps to propagate important location information along with spatial details. Sharing these features with all the higher-level decoder blocks significantly improves segmentation performance. One of the most important contributions of the FE block is that it enlarges the effective receptive field of the feature maps. This larger effective receptive field helps in detecting larger regions by reading a more global feature hierarchy. At the same time, by sharing important location details and spatial information, the FE block helps the architecture to identify small regions. Moreover, the skip connection of the FE block allows the recovery of fine-grained details that were lost during downsampling. The architecture of the FE block is discussed as follows.

Figure 3 illustrates the architecture of the FE block. The input from the encoder block is given to five parallel connections. One connection acts as a skip connection, which keeps the spatial details from the encoder block unchanged. On each of the remaining four connections, two cascaded convolution layers with different filter sizes are applied instead of a single convolution layer. This combination is used so that important features are extracted without losing location information. Experiments were carried out to observe the effect of using two cascaded convolutions rather than a single convolution layer, and the cascaded system performed better than the single convolution layer. The outputs of all the connections are summed to obtain a single output. Two further convolution layers are then applied to this output, giving the final result of the FE block.

Figure 3.

The Architecture of FE Block used in BrainSeg-Net.

The FE block enhances the features given to it as input. It extracts features of features while keeping the number of parameters minimal. To keep the parameter count low, a special combination of convolution layers is applied. The block is not highly dense, which helps to preserve the location information of the features.
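To make the parameter argument concrete, the sketch below compares one square convolution with two cascaded one-dimensional convolutions. The filter sizes of the FE block are not given in the text, so the 1×k followed by k×1 factorization, the value k = 3, and the channel count of 64 are all hypothetical; the example only shows how such a cascade can lower the parameter count, not how the FE block is actually configured.

```python
def conv_params(kernel_h, kernel_w, in_channels, out_channels, bias=True):
    """Number of trainable parameters in one 2D convolution layer."""
    weights = kernel_h * kernel_w * in_channels * out_channels
    return weights + (out_channels if bias else 0)


channels = 64  # hypothetical channel count
k = 3          # hypothetical filter size

# One k x k convolution versus a 1 x k convolution followed by a k x 1 one.
single = conv_params(k, k, channels, channels)
cascaded = conv_params(1, k, channels, channels) + conv_params(k, 1, channels, channels)

print(single, cascaded)  # 36928 24704
```

Under these assumptions the cascade covers the same k×k neighborhood with roughly two thirds of the parameters, and the saving grows with k.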

4.2.2. Custom-Designed Loss Function

Class imbalance is considered one of the biggest challenges in brain tumor segmentation. The difference between the regions occupied by the different classes is best understood from Table 1, which gives the class distribution for the BraTS 2017 dataset. In brain tumor MRI, healthy tissue occupies 98.46% of the region on average, whereas edema, enhancing tumor, and non-enhancing tumor occupy 1.02%, 0.29%, and 0.23%, respectively. This large difference in region occupancy between classes has a strong effect on segmentation accuracy.

Table 1.

Distribution of area occupancy by different classes in brain tumor MRI.

To address the class imbalance issue, BrainSeg-Net adopts a custom-designed loss function in which the weighted cross-entropy (WCE) and the Dice Loss Coefficient (DLC) are summed into a single loss function. The numerical representation of these loss functions is

where Q is the total number of labels which in our case is 4 and is the label. The represents the forecasted class of the pixel, is the allotted weight and is ground truth class of the pixel.

The total loss function can be represented as

The loss function consists of two objective functions, WCE and DLC: DLC is responsible for predicting the segmented regions, while WCE performs the classification of tissue cells.
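A combined loss of this kind can be sketched in plain Python as follows. This is not the authors' formulation: it assumes the common textbook forms of weighted cross-entropy and soft Dice loss, inverse-frequency class weights derived from the percentages quoted from Table 1, a class order of healthy, edema, enhancing, non-enhancing, and a small smoothing constant `eps`. All function names are hypothetical.

```python
import math


def weighted_cross_entropy(probs, targets, weights, eps=1e-7):
    """Mean weighted cross-entropy over pixels.

    probs:   per-pixel lists of class probabilities
    targets: per-pixel ground-truth class indices
    weights: one weight per class
    """
    total = 0.0
    for pixel_probs, target in zip(probs, targets):
        total -= weights[target] * math.log(max(pixel_probs[target], eps))
    return total / len(targets)


def dice_loss(probs, targets, num_classes, eps=1e-7):
    """One minus the mean soft Dice coefficient over classes."""
    dice_sum = 0.0
    for c in range(num_classes):
        intersection = sum(p[c] for p, t in zip(probs, targets) if t == c)
        predicted = sum(p[c] for p in probs)
        actual = sum(1 for t in targets if t == c)
        dice_sum += (2.0 * intersection + eps) / (predicted + actual + eps)
    return 1.0 - dice_sum / num_classes


def total_loss(probs, targets, weights):
    """Sum of the two terms, following the description in the text."""
    return weighted_cross_entropy(probs, targets, weights) + dice_loss(
        probs, targets, num_classes=len(weights)
    )


# Class frequencies quoted in the text (healthy, edema, enhancing, non-enhancing).
frequencies = [0.9846, 0.0102, 0.0029, 0.0023]
# Inverse-frequency weights are an assumption, not the authors' stated choice.
weights = [1.0 / f for f in frequencies]

targets = [0, 1, 2, 3]
perfect = [[1.0, 0.0, 0.0, 0.0], [0.0, 1.0, 0.0, 0.0],
           [0.0, 0.0, 1.0, 0.0], [0.0, 0.0, 0.0, 1.0]]
uniform = [[0.25] * 4 for _ in range(4)]

print(total_loss(perfect, targets, weights) < total_loss(uniform, targets, weights))  # True
```

With weights of this kind, an uncertain prediction on a rare tumor class costs far more than the same uncertainty on healthy tissue, which is the behavior a class-imbalance-aware loss is meant to produce.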

5. Results and Discussion

To evaluate the proposed model extensively, we carried out both quantitative and qualitative analysis. For the quantitative analysis, the evaluation metric is the Dice score. Previous studies have used the Dice score as a figure of merit, so it allows a direct performance comparison between state-of-the-art techniques and the proposed technique. The Dice score measures the similarity between two sets M and N and can be formulated as

$$\mathrm{Dice}(M, N) = \frac{2\,|M \cap N|}{|M| + |N|}$$

where $|M|$ and $|N|$ are the cardinalities of sets M and N, respectively.
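As a small worked example of this metric, the sketch below computes the Dice score for two sets of pixel coordinates. The function name and the convention of returning 1.0 when both sets are empty are assumptions made for illustration.

```python
def dice_score(predicted, reference):
    """Dice score between two sets of pixel coordinates."""
    if not predicted and not reference:
        return 1.0  # convention assumed here: two empty masks agree perfectly
    overlap = len(predicted & reference)
    return 2.0 * overlap / (len(predicted) + len(reference))


M = {(0, 0), (0, 1), (1, 0), (1, 1)}  # predicted tumor pixels
N = {(0, 1), (1, 1), (2, 1)}          # ground-truth tumor pixels
print(round(dice_score(M, N), 3))  # 0.571
```

Here the two sets share 2 pixels and contain 4 and 3 pixels, so the score is 2·2/(4+3) = 4/7. A score of 1 means identical sets and 0 means no overlap.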

The proposed model is evaluated on three benchmark datasets: BraTS 2017, BraTS 2018, and BraTS 2019. The details of these datasets were discussed earlier in the paper. First, we evaluated the model on the HGG cases of BraTS 2017. The HGG cases were split 80%/20%, with the larger part used for training and the smaller part used for testing. Table 2 shows the results obtained by BrainSeg-Net and their comparison with existing state-of-the-art techniques.

Table 2.

Results comparison on the BraTS 2017 HGG data.

For the HGG cases of BraTS 2017, the proposed model shows a large increase in performance compared to the existing baseline and state-of-the-art techniques. BrainSeg-Net attained Dice scores of 0.903, 0.872, and 0.849 for the whole, core, and enhancing tumor, respectively. Segmentation improves for all the classes, and BrainSeg-Net outperforms its baseline U-Net by a wide margin.

In the second experiment, the whole BraTS 2017 training set is used to train the model and the BraTS 2017 validation dataset is used as the test set. The model is trained on 285 MR brain images, and 46 MR scans are used to evaluate the performance. Table 3 compares the performance of BrainSeg-Net with existing models.

Table 3.

Results comparison on the BraTS 2017 validation dataset.

The experiment on the BraTS 2017 validation dataset demonstrates the high performance of BrainSeg-Net. The proposed model improves performance by 2.2%, 2.4%, and 2.9% for the whole, core, and enhancing tumor classes, respectively. Compared with the baseline architecture, BrainSeg-Net shows an improvement of 2.8% for the whole tumor, 3% for the core tumor, and 4.5% for the enhancing tumor. The large improvement for enhancing tumor segmentation indicates that BrainSeg-Net successfully addresses the problem of small-scale tumor segmentation.

A further experiment is carried out on the BraTS 2018 validation dataset. The model is trained on the 285 MRI scans of the BraTS 2017 training dataset, as BraTS 2018 does not have a separate training dataset. For testing, the BraTS 2018 validation dataset of 66 MR brain images is used. Table 4 compares the results achieved by BrainSeg-Net with those achieved by existing techniques.

Table 4.

Results comparison on the BraTS 2018 validation dataset.

On the BraTS 2018 validation dataset, BrainSeg-Net achieves Dice scores of 0.773, 0.826, and 0.894 for enhancing tumor, core tumor, and whole tumor, respectively. The existing state-of-the-art results for this experiment are obtained by ResU-Net. The proposed model improves the performance for all the segmentation classes. The improvement for enhancing tumor is 0.5%, while for core tumor it is 2.3%. Both classes contain small-scale regions that need to be segmented. These results show that the information contributed by the FE block at every deep layer leads to more accurate segmentation.

To validate the generalization of the proposed model, we evaluated it on the BraTS 2019 dataset. All training data of BraTS 2019 are used to train the model, and the validation data are used for testing. We used 335 cases for training and 125 cases for testing. As this is a recent database, very few results have been reported by other works; therefore, we compared our model with the baseline models. Table 5 compares the results of BrainSeg-Net and the baseline models.

Table 5.

Results comparison on the BraTS 2019 validation dataset.

The proposed BrainSeg-Net achieves the highest performance on the BraTS 2019 validation dataset, with Dice scores of 0.869 for the whole tumor, 0.775 for the core tumor, and 0.708 for the enhancing tumor. BrainSeg-Net outperforms its baseline models because of the FE block, which makes it easier to identify small-scale tumors in brain tumor MRI.

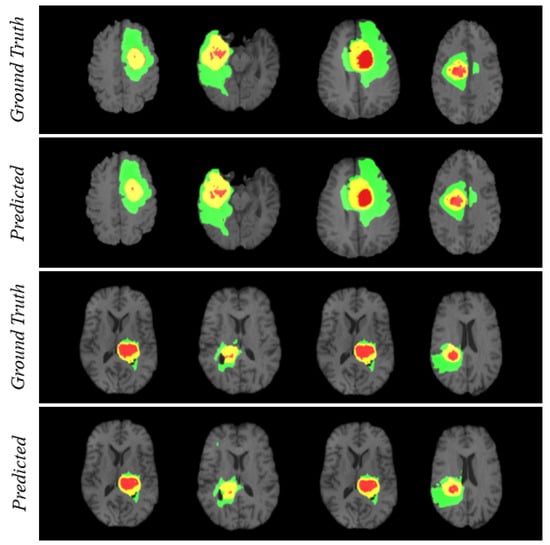

Figure 4 visualizes the qualitative results. It shows eight different cases in which the regions predicted by BrainSeg-Net are displayed along with the labeled ground truth. Comparing the two gives an idea of the reliability of the segmentation results achieved by BrainSeg-Net. The close resemblance between the predictions and the ground truth reflects the quality of the BrainSeg-Net architecture.

Figure 4.

Visualization of the segmentation performance of the BrainSeg-Net architecture. The three colors in each case represent different classes: red for necrosis and non-enhancing tumor, green for edema, and yellow for enhancing tumor.

6. Conclusions

The segmentation of MR brain tumor images is considered a complex task. Multiple neural network-based models have been proposed in the literature for semantic segmentation of brain tumor MRI, but room for improvement remains. The biggest challenge is to segment small-scale tumors because important location and spatial information is lost through the repeated convolution operations and transformations in the neural network. This paper therefore proposed BrainSeg-Net, which addresses this issue and improves the semantic segmentation of MR brain tumor images. BrainSeg-Net uses an FE block which, at every encoder stage, takes the feature map, performs the defined operations, and tries to preserve the important information. The FE block converts low-level features into middle-level features, which minimizes the information distortion during feature concatenation. Moreover, the FE block enlarges the effective receptive field of the architecture, which further contributes to its accuracy. The proposed model is evaluated on multiple benchmark databases. BrainSeg-Net shows a clear improvement in results when compared with existing state-of-the-art semantic segmentation techniques for MR brain tumor images, and it also outperforms its baseline U-Net architecture. In the future, we intend to improve this architecture further so that it can prove beneficial for human lives. Compared with the 3D U-Net, the 2D U-Net is limited by the loss of important information. We intend to extend our research to 3D-based architectures to improve segmentation performance.

Author Contributions

Conceptualization, M.U.R., S.C., J.K., and K.T.C.; methodology, M.U.R.; software, M.U.R.; validation, M.U.R., S.C., J.K., and K.T.C.; investigation, M.U.R., S.C., J.K., and K.T.C.; writing—original draft preparation: M.U.R. and S.C.; writing—review and editing, M.U.R., S.C., J.K., and K.T.C.; supervision, J.K. and K.T.C. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the “Human Resources Program in Energy Technology” of the Korea Institute of Energy Technology Evaluation and Planning (KETEP), granted financial resources from the Ministry of Trade, Industry and Energy, Republic of Korea (No. 20204010600470), by the Brain Research Program of the National Research Foundation (NRF) funded by the Korean government (MSIT) (No. NRF-2017M3C7A1044816), and by the National Research Foundation of Korea (NRF) grant funded by the Korean government (MSIT) (No. 2020R1A2C2005612).

Institutional Review Board Statement

Not applicable, as publicly available datasets are used.

Informed Consent Statement

Not applicable.

Data Availability Statement

Data sharing not applicable. No new data were created or analyzed in this study.

Conflicts of Interest

The authors declare no conflict of interest. The funders had no role in the design of the study; in the collection, analyses, or interpretation of data; in the writing of the manuscript; or in the decision to publish the results.

References

- Işın, A.; Direkoğlu, C.; Şah, M. Review of MRI-based brain tumor image segmentation using deep learning methods. Procedia Comput. Sci. 2016, 102, 317–324. [Google Scholar] [CrossRef]

- Haris, M.; Gupta, R.K.; Singh, A.; Husain, N.; Husain, M.; Pandey, C.M.; Srivastava, C.; Behari, S.; Rathore, R.K.S. Differentiation of infective from neoplastic brain lesions by dynamic contrast-enhanced MRI. Neuroradiology 2008, 50, 531. [Google Scholar] [CrossRef] [PubMed]

- Saut, O.; Lagaert, J.B.; Colin, T.; Fathallah-Shaykh, H.M. A multilayer grow-or-go model for GBM: Effects of invasive cells and anti-angiogenesis on growth. Bull. Math. Biol. 2014, 76, 2306–2333. [Google Scholar] [CrossRef] [PubMed]

- Liu, J.; Li, M.; Wang, J.; Wu, F.; Liu, T.; Pan, Y. A survey of MRI-based brain tumor segmentation methods. Tsinghua Sci. Technol. 2014, 19, 578–595. [Google Scholar]

- Gonzalez, R., III. Digital Image Processing, 3rd ed.; Gonzalez, R., Woods, R., Eds.; Peasrson: London, UK, 2008. [Google Scholar]

- Menze, B.H.; Jakab, A.; Bauer, S.; Kalpathy-Cramer, J.; Farahani, K.; Kirby, J.; Burren, Y.; Porz, N.; Slotboom, J.; Wiest, R.; et al. The multimodal brain tumor image segmentation benchmark (BRATS). IEEE Trans. Med. Imaging 2014, 34, 1993–2024. [Google Scholar] [CrossRef] [PubMed]

- Havaei, M.; Davy, A.; Warde-Farley, D.; Biard, A.; Courville, A.; Bengio, Y.; Pal, C.; Jodoin, P.M.; Larochelle, H. Brain tumor segmentation with deep neural networks. Med. Image Anal. 2017, 35, 18–31. [Google Scholar] [CrossRef] [PubMed]

- Çiçek, Ö.; Abdulkadir, A.; Lienkamp, S.S.; Brox, T.; Ronneberger, O. 3D U-Net: Learning dense volumetric segmentation from sparse annotation. In Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention, Athens, Greece, 17–21 October 2016; pp. 424–432. [Google Scholar]

- Isensee, F.; Kickingereder, P.; Wick, W.; Bendszus, M.; Maier-Hein, K.H. Brain tumor segmentation and radiomics survival prediction: Contribution to the brats 2017 challenge. In Proceedings of the International MICCAI Brainlesion Workshop, Quebec City, QC, Canada, 10–14 September 2017; pp. 287–297. [Google Scholar]

- Baid, U.; Talbar, S.; Rane, S.; Gupta, S.; Thakur, M.H.; Moiyadi, A.; Thakur, S.; Mahajan, A. Deep learning radiomics algorithm for gliomas (drag) model: A novel approach using 3d unet based deep convolutional neural network for predicting survival in gliomas. In Proceedings of the International MICCAI Brainlesion Workshop, Granada, Spain, 16–20 September 2018; pp. 369–379. [Google Scholar]

- Kamnitsas, K.; Ledig, C.; Newcombe, V.F.; Simpson, J.P.; Kane, A.D.; Menon, D.K.; Rueckert, D.; Glocker, B. Efficient multi-scale 3D CNN with fully connected CRF for accurate brain lesion segmentation. Med. Image Anal. 2017, 36, 61–78. [Google Scholar] [CrossRef] [PubMed]

- Noori, M.; Bahri, A.; Mohammadi, K. Attention-Guided Version of 2D UNet for Automatic Brain Tumor Segmentation. In Proceedings of the 2019 9th International Conference on Computer and Knowledge Engineering (ICCKE), Mashhad, Iran, 24–25 October 2019; pp. 269–275. [Google Scholar]

- Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. arXiv 2014, arXiv:1409.1556. [Google Scholar]

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778. [Google Scholar]

- Huang, G.; Liu, Z.; Van Der Maaten, L.; Weinberger, K.Q. Densely connected convolutional networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 4700–4708. [Google Scholar]

- Abbas, Z.; Tayara, H.; Chong, K.T. SpineNet-6mA: A Novel Deep Learning Tool for Predicting DNA N6-Methyladenine Sites in Genomes. IEEE Access 2020, 8, 201450–201457. [Google Scholar] [CrossRef]

- Rehman, M.U.; Chong, K.T. DNA6mA-MINT: DNA-6mA modification identification neural tool. Genes 2020, 11, 898. [Google Scholar] [CrossRef] [PubMed]

- Alam, W.; Ali, S.D.; Tayara, H.; Chong, K.T. A CNN-based RNA N6-methyladenosine site predictor for multiple species using heterogeneous features representation. IEEE Access 2020, 8, 138203–138209. [Google Scholar] [CrossRef]

- Rehman, M.U.; Khan, S.H.; Rizvi, S.D.; Abbas, Z.; Zafar, A. Classification of skin lesion by interference of segmentation and convolotion neural network. In Proceedings of the 2018 2nd International Conference on Engineering Innovation (ICEI), Bangkok, Thailand, 5–6 July 2018; pp. 81–85. [Google Scholar]

- Khan, S.H.; Abbas, Z.; Rizvi, S.D. Classification of Diabetic Retinopathy Images Based on Customised CNN Architecture. In Proceedings of the 2019 Amity International Conference on Artificial Intelligence (AICAI), Dubai, UAE, 4–6 2019; pp. 244–248.

- Long, J.; Shelhamer, E.; Darrell, T. Fully convolutional networks for semantic segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 3431–3440.

- Ronneberger, O.; Fischer, P.; Brox, T. U-net: Convolutional networks for biomedical image segmentation. In Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention, Munich, Germany, 5–9 October 2015; pp. 234–241.

- Dong, H.; Yang, G.; Liu, F.; Mo, Y.; Guo, Y. Automatic brain tumor detection and segmentation using U-Net based fully convolutional networks. In Proceedings of the Annual Conference on Medical Image Understanding and Analysis, Edinburgh, UK, 11–13 July 2017; pp. 506–517.

- Kong, X.; Sun, G.; Wu, Q.; Liu, J.; Lin, F. Hybrid pyramid u-net model for brain tumor segmentation. In Proceedings of the International Conference on Intelligent Information Processing, Nanning, China, 19–22 October 2018; pp. 346–355.

- Liu, D.; Zhang, H.; Zhao, M.; Yu, X.; Yao, S.; Zhou, W. Brain tumor segmention based on dilated convolution refine networks. In Proceedings of the 2018 IEEE 16th International Conference on Software Engineering Research, Management and Applications (SERA), Kunming, China, 13–15 June 2018; pp. 113–120.

- Li, H.; Li, A.; Wang, M. A novel end-to-end brain tumor segmentation method using improved fully convolutional networks. Comput. Biol. Med. 2019, 108, 150–160.

- Shaikh, M.; Anand, G.; Acharya, G.; Amrutkar, A.; Alex, V.; Krishnamurthi, G. Brain tumor segmentation using dense fully convolutional neural network. In Proceedings of the International MICCAI Brainlesion Workshop, Quebec City, QC, Canada, 14 September 2017; pp. 309–319.

- Ibtehaz, N.; Rahman, M.S. MultiResUNet: Rethinking the U-Net architecture for multimodal biomedical image segmentation. Neural Netw. 2020, 121, 74–87.

- Schlemper, J.; Oktay, O.; Schaap, M.; Heinrich, M.; Kainz, B.; Glocker, B.; Rueckert, D. Attention gated networks: Learning to leverage salient regions in medical images. Med. Image Anal. 2019, 53, 197–207.

- Hu, J.; Shen, L.; Sun, G. Squeeze-and-excitation networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 19–21 June 2018; pp. 7132–7141.

- Rundo, L.; Han, C.; Nagano, Y.; Zhang, J.; Hataya, R.; Militello, C.; Tangherloni, A.; Nobile, M.S.; Ferretti, C.; Besozzi, D.; et al. USE-Net: Incorporating Squeeze-and-Excitation blocks into U-Net for prostate zonal segmentation of multi-institutional MRI datasets. Neurocomputing 2019, 365, 31–43.

- Pezoulas, V.C.; Zervakis, M.; Pologiorgi, I.; Seferlis, S.; Tsalikis, G.M.; Zarifis, G.; Giakos, G.C. A tissue classification approach for brain tumor segmentation using MRI. In Proceedings of the 2017 IEEE International Conference on Imaging Systems and Techniques (IST), Beijing, China, 18–20 October 2017; pp. 1–6.

- McKinley, R.; Meier, R.; Wiest, R. Ensembles of densely-connected CNNs with label-uncertainty for brain tumor segmentation. In Proceedings of the International MICCAI Brainlesion Workshop, Granada, Spain, 16–20 September 2018; pp. 456–465.

- Wang, G.; Li, W.; Ourselin, S.; Vercauteren, T. Automatic brain tumor segmentation using convolutional neural networks with test-time augmentation. In Proceedings of the International MICCAI Brainlesion Workshop, Granada, Spain, 16–20 September 2018; pp. 61–72.

- Tustison, N.J.; Avants, B.B.; Cook, P.A.; Zheng, Y.; Egan, A.; Yushkevich, P.A.; Gee, J.C. N4ITK: Improved N3 bias correction. IEEE Trans. Med. Imaging 2010, 29, 1310–1320.

- Pereira, S.; Pinto, A.; Alves, V.; Silva, C.A. Brain tumor segmentation using convolutional neural networks in MRI images. IEEE Trans. Med. Imaging 2016, 35, 1240–1251.

- Chen, L.; Wu, Y.; DSouza, A.M.; Abidin, A.Z.; Wismüller, A.; Xu, C. MRI tumor segmentation with densely connected 3D CNN. In Proceedings of Medical Imaging 2018: Image Processing, International Society for Optics and Photonics, Houston, TX, USA, 11–13 February 2018; Volume 10574, p. 105741F.

- Kermi, A.; Mahmoudi, I.; Khadir, M.T. Deep convolutional neural networks using U-Net for automatic brain tumor segmentation in multimodal MRI volumes. In Proceedings of the International MICCAI Brainlesion Workshop, Granada, Spain, 16–20 September 2018; pp. 37–48.

- Zhao, X.; Wu, Y.; Song, G.; Li, Z.; Zhang, Y.; Fan, Y. A deep learning model integrating FCNNs and CRFs for brain tumor segmentation. Med. Image Anal. 2018, 43, 98–111.

- Albiol, A.; Albiol, A.; Albiol, F. Extending 2D deep learning architectures to 3D image segmentation problems. In Proceedings of the International MICCAI Brainlesion Workshop, Granada, Spain, 16–20 September 2018; pp. 73–82.

- Hu, K.; Gan, Q.; Zhang, Y.; Deng, S.; Xiao, F.; Huang, W.; Cao, C.; Gao, X. Brain tumor segmentation using multi-cascaded convolutional neural networks and conditional random field. IEEE Access 2019, 7, 92615–92629.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).