HIRD-Net: An Explainable CNN-Based Framework with Attention Mechanism for Diabetic Retinopathy Diagnosis Using CLAHE-D-DoG Enhanced Fundus Images

Abstract

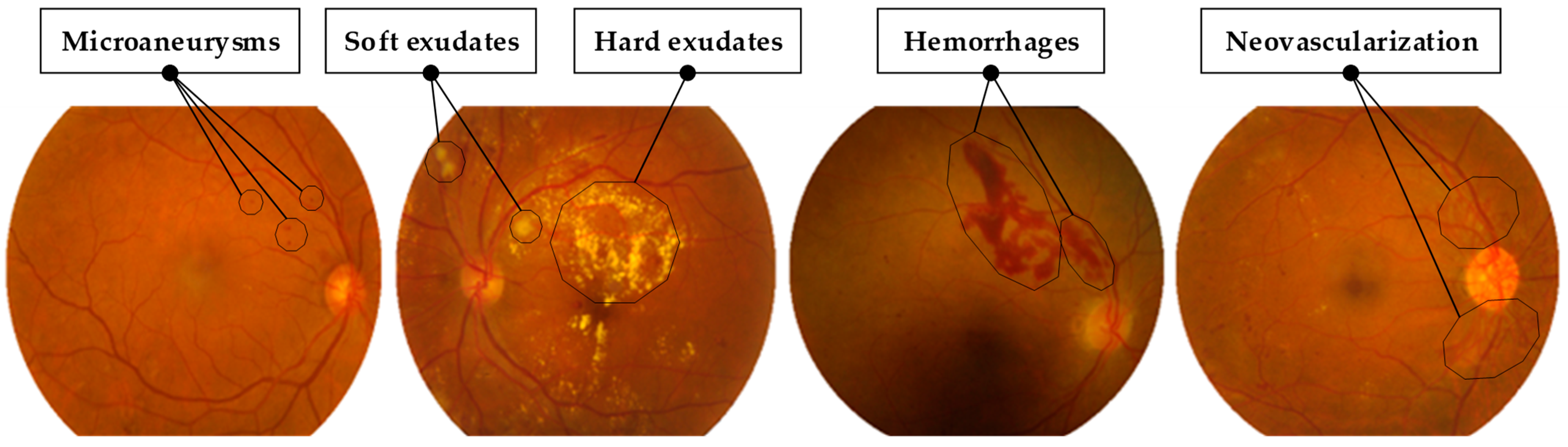

1. Introduction

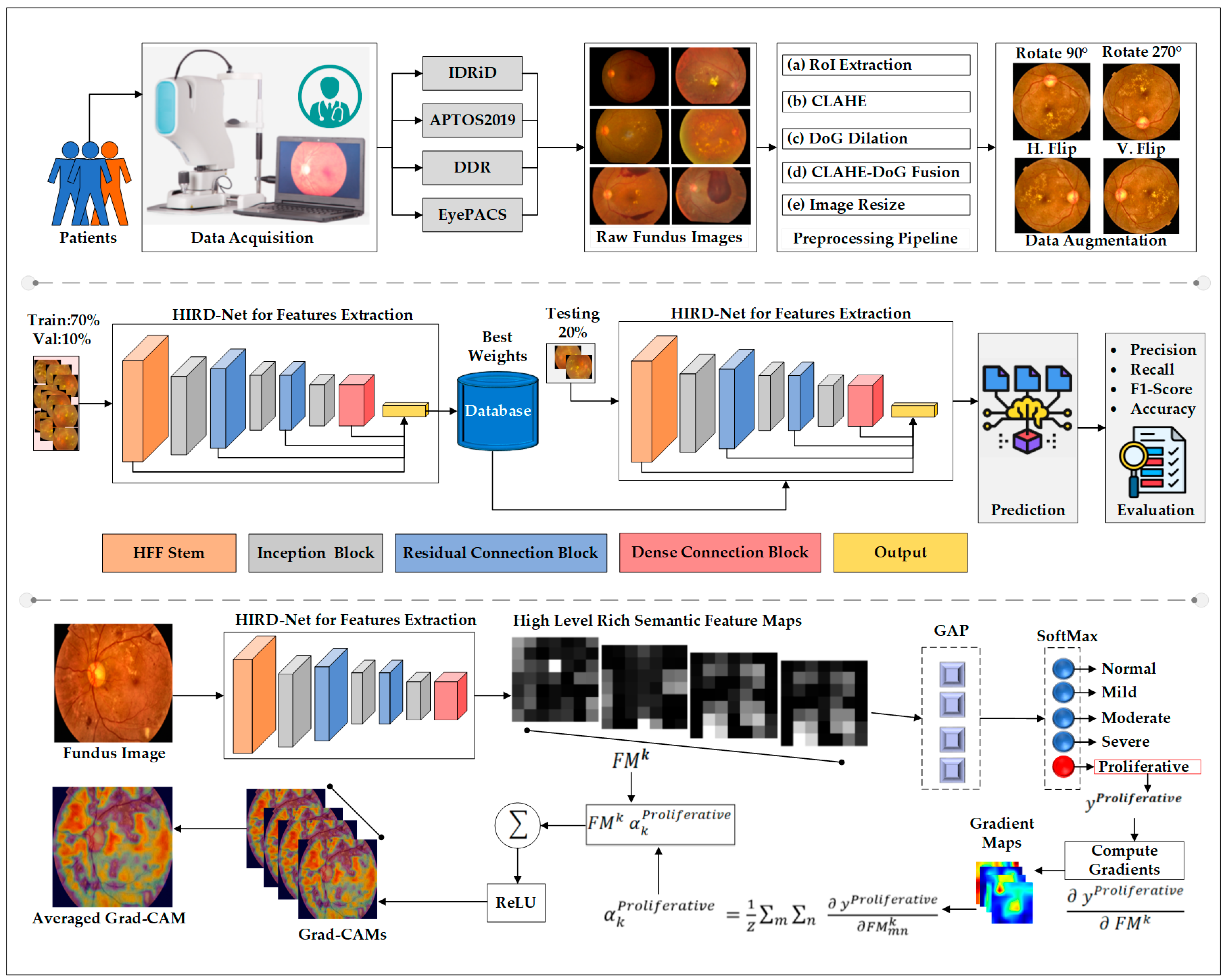

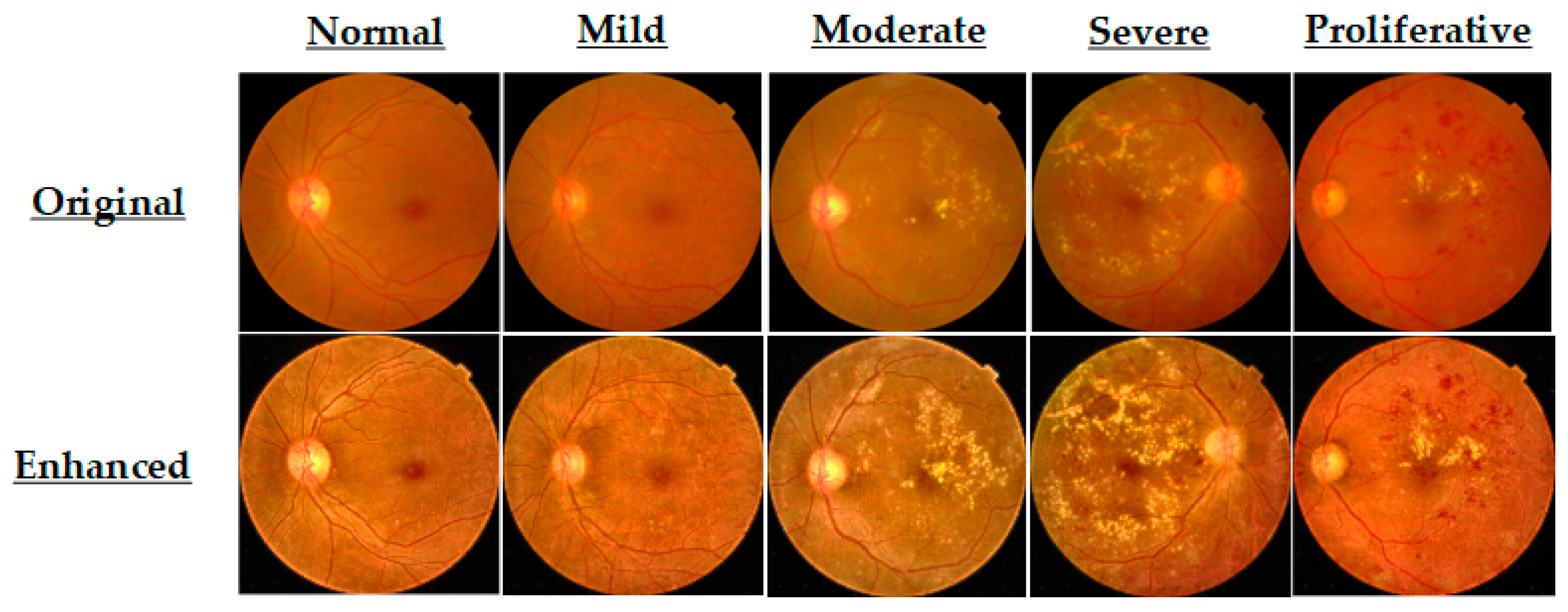

- Integration of contrast-limited adaptive histogram equalization (CLAHE) with D-DoG filtering for image preprocessing, addressing blurred morphological patterns and improving the visibility of fine-grained features in fundus images (FIs).

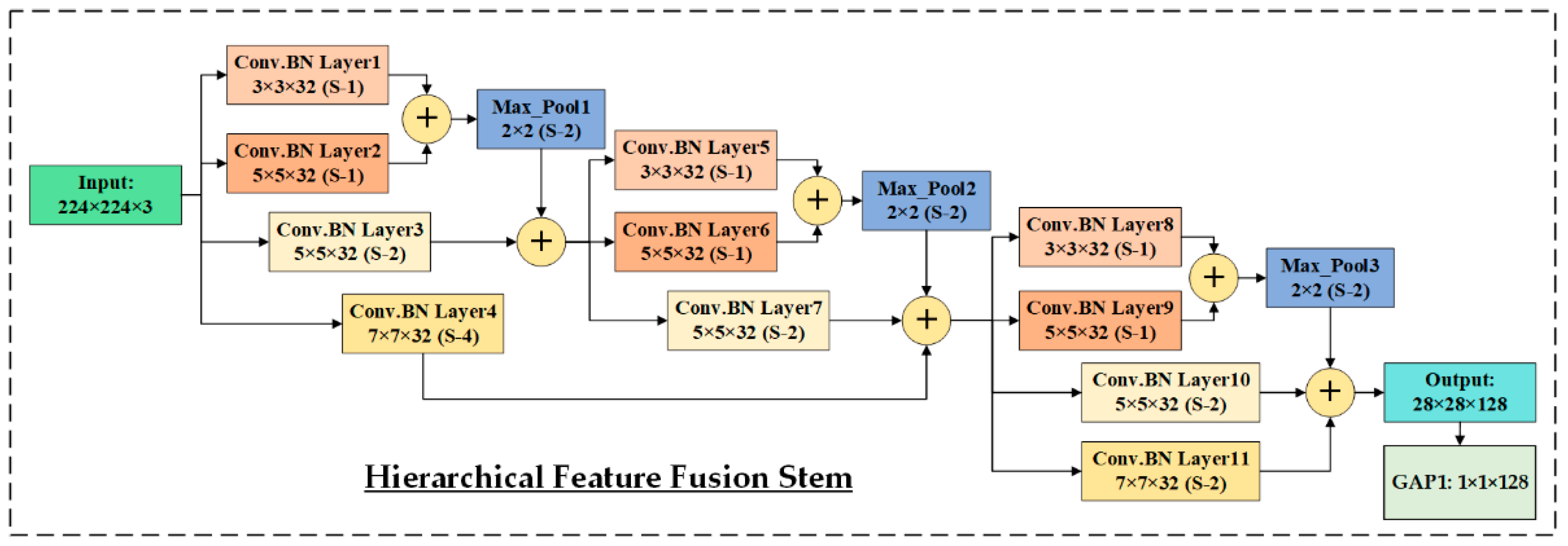

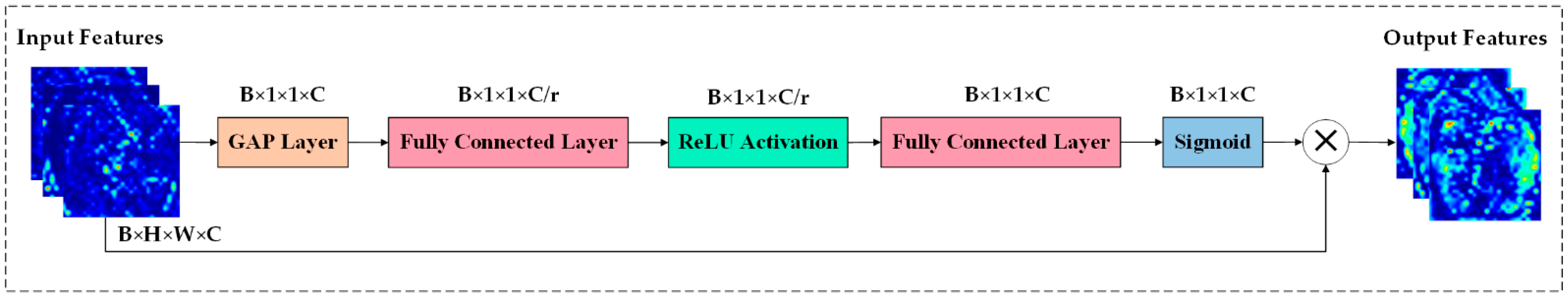

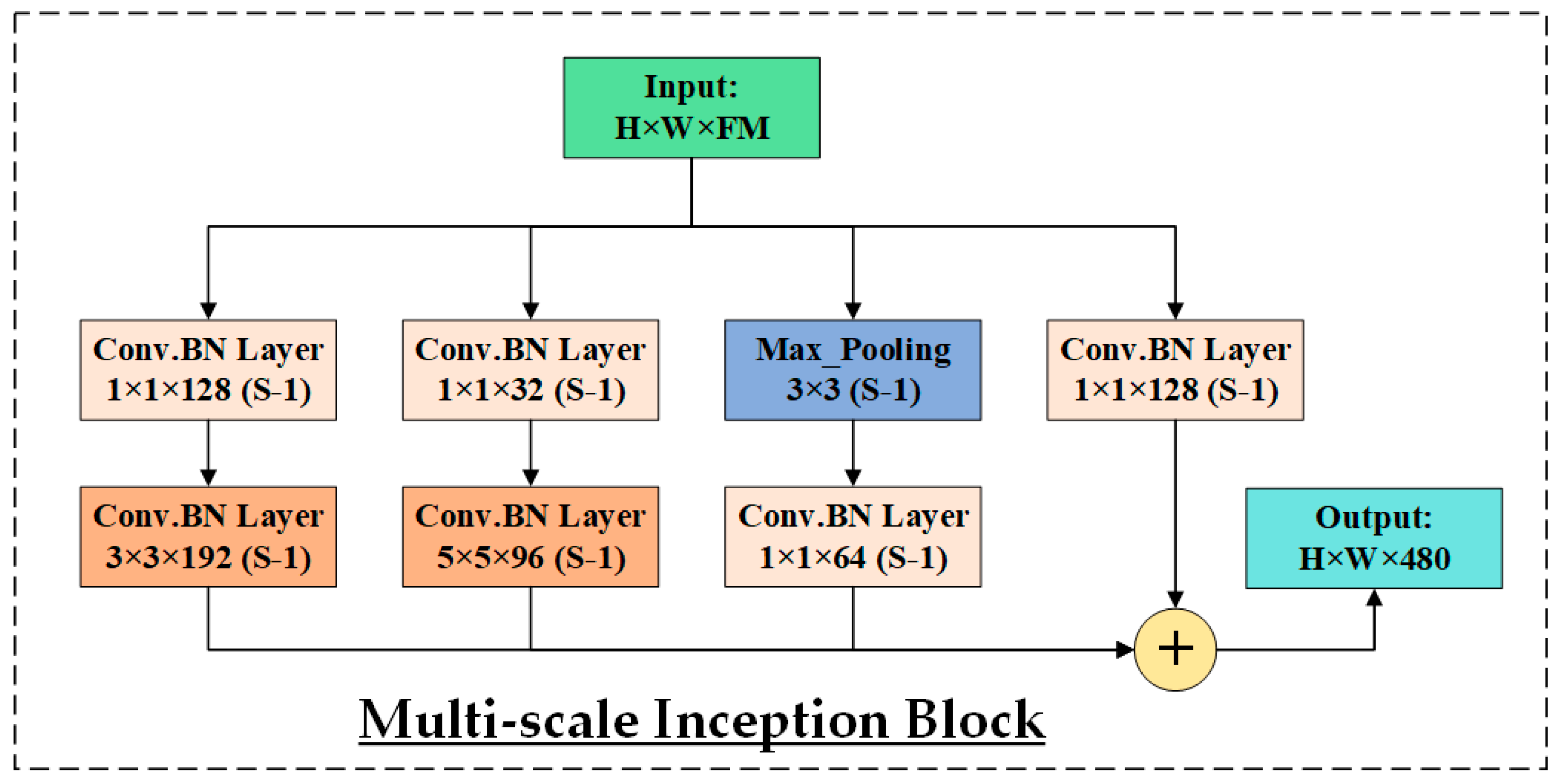

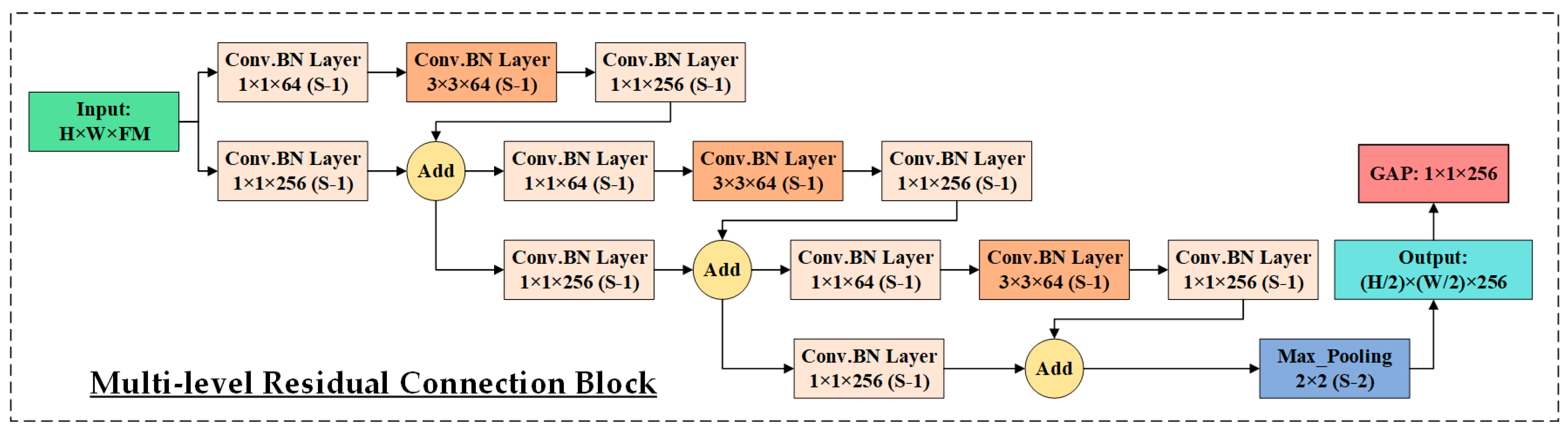

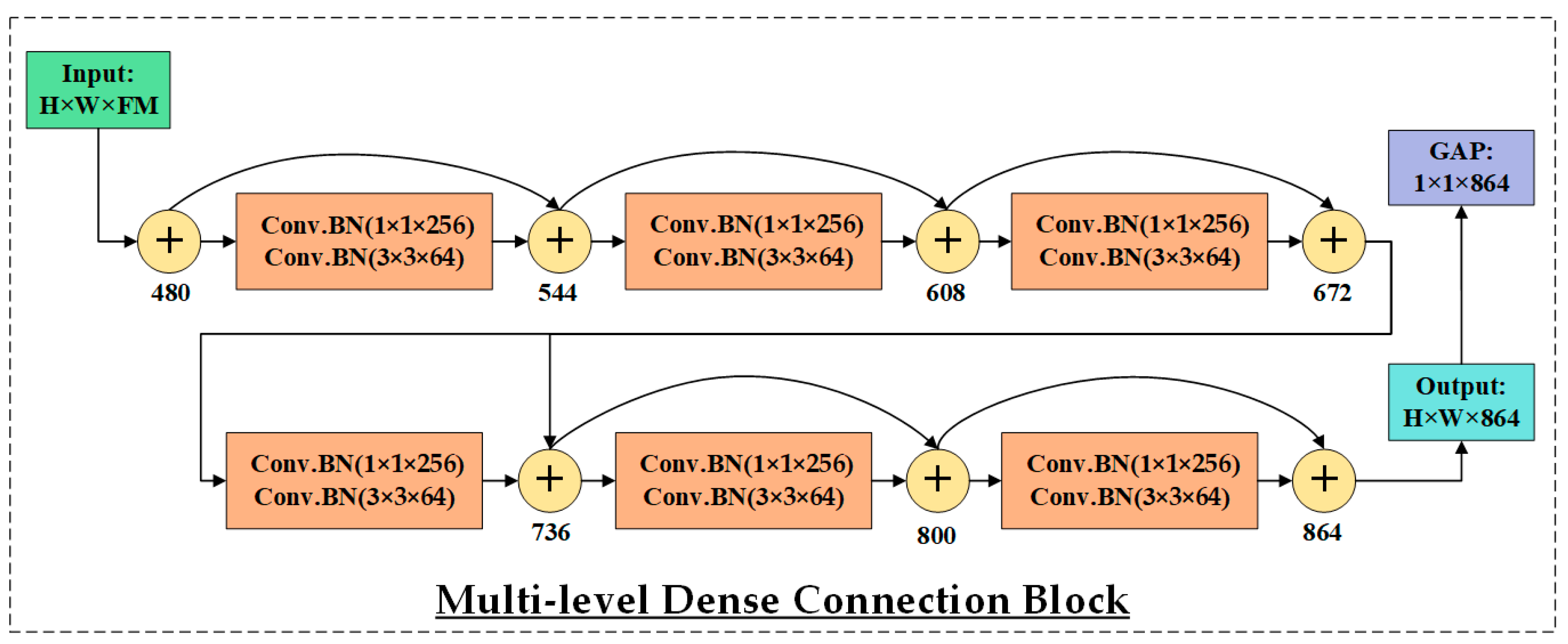

- Proposal of HIRD-Net, a novel CNN-based architecture designed specifically for DR diagnosis. The model combines a hierarchical feature extraction stem with multiscale and multilevel blocks to capture subtle and diverse pathological features. Four global average pooling (GAP) layers aggregate semantic features and mitigate overfitting. The Hard-Swish activation function is used to stabilize gradient propagation, and a Softmax output layer, combined with focal loss and extensive data augmentation, is used to address class imbalance.

- Incorporation of Grad-CAM to provide visual interpretability of HIRD-Net predictions, making the decision process transparent and highlighting the pathological regions that influence classification.

- Comprehensive empirical evaluation comparing the proposed framework with existing state-of-the-art methods, demonstrating superior performance in precision, recall, F1-score, and accuracy.

2. Current State of the Art

3. Materials and Methods

3.1. Experimental Datasets

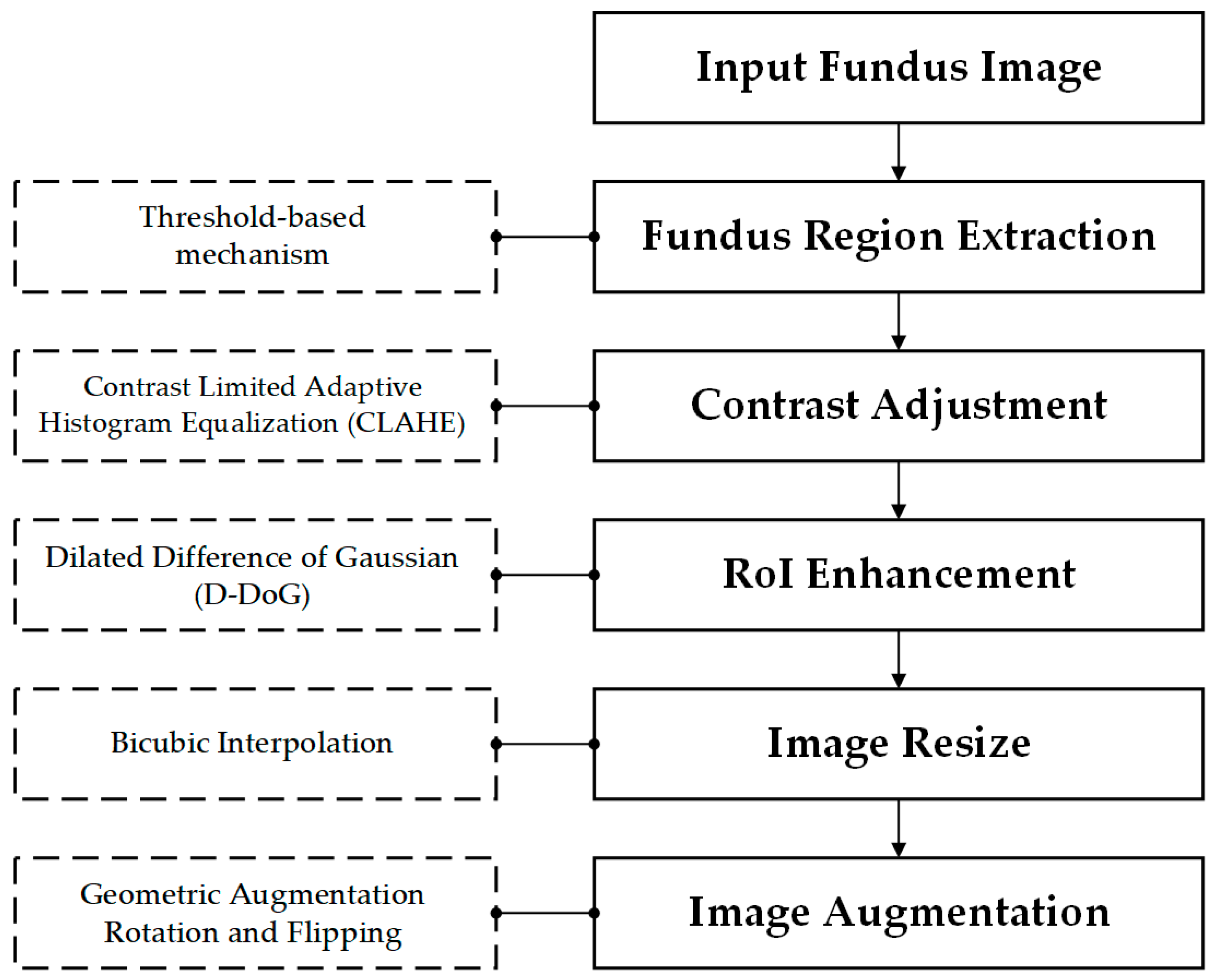

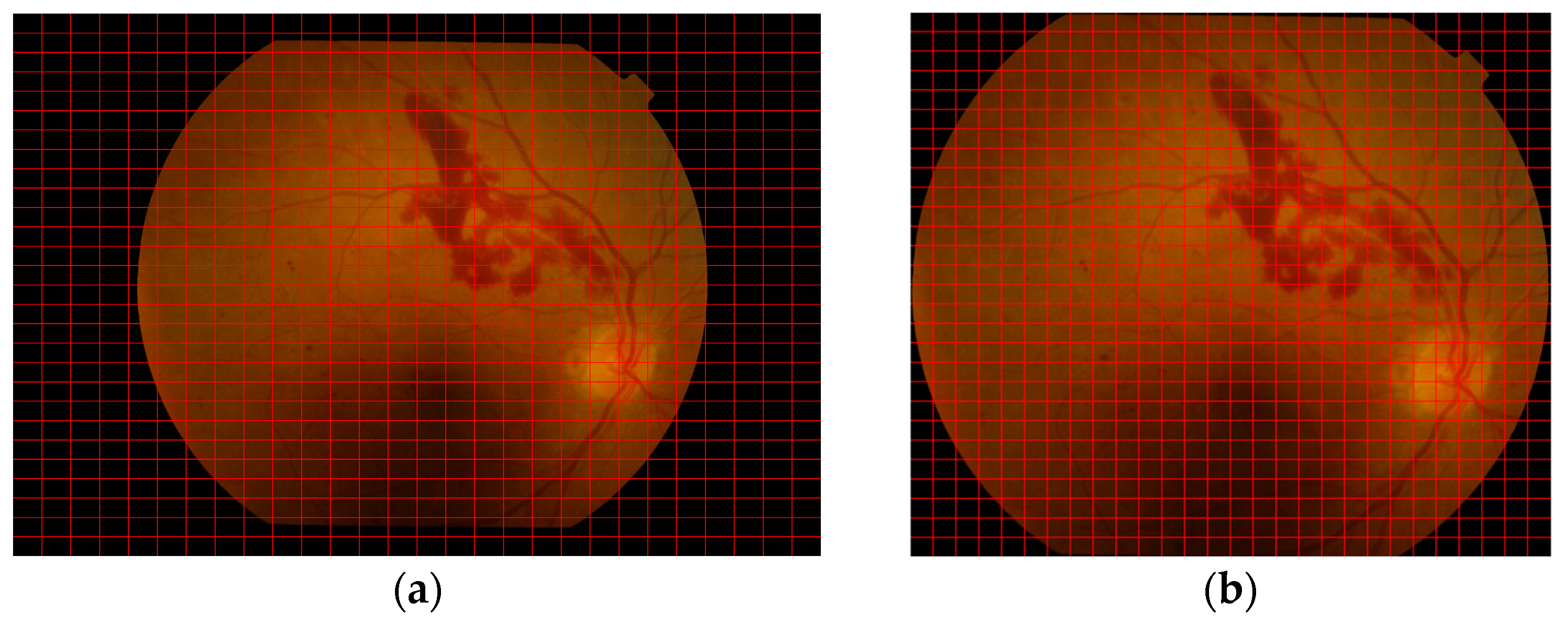

3.2. Data Preprocessing and Enhancement
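The CLAHE-D-DoG preprocessing summarized in the contributions can be illustrated with a minimal NumPy sketch. It assumes a single-channel uint8 fundus image and shows only the two standard building blocks: the contrast-limited histogram equalization that CLAHE applies within one tile (full CLAHE repeats it over a tile grid and bilinearly blends neighbouring mappings), and a plain difference-of-Gaussians (DoG) band-pass filter standing in for D-DoG. The exact D-DoG formulation and the values of `clip_limit`, `sigma_fine`, and `sigma_coarse` are not given in this section; the function names and defaults below are hypothetical.

```python
import numpy as np


def clip_limited_equalize(tile, clip_limit=2.0, n_bins=256):
    """Contrast-limited histogram equalization of one uint8 tile.

    clip_limit is a multiple of the mean bin count (OpenCV-style convention).
    Full CLAHE applies this per tile and bilinearly blends neighbouring mappings.
    """
    hist = np.bincount(tile.ravel(), minlength=n_bins).astype(np.float64)
    limit = clip_limit * tile.size / n_bins
    excess = np.clip(hist - limit, 0.0, None).sum()
    hist = np.minimum(hist, limit) + excess / n_bins  # spread clipped counts evenly
    cdf = np.cumsum(hist)
    cdf = (cdf - cdf[0]) / (cdf[-1] - cdf[0])         # normalize mapping to [0, 1]
    lut = np.round(cdf * (n_bins - 1)).astype(np.uint8)
    return lut[tile]


def gaussian_blur(img, sigma):
    """Separable Gaussian blur with reflect padding (kernel radius = 3 * sigma)."""
    radius = max(1, int(3 * sigma))
    x = np.arange(-radius, radius + 1)
    kernel = np.exp(-(x ** 2) / (2.0 * sigma ** 2))
    kernel /= kernel.sum()
    padded = np.pad(img.astype(np.float64), radius, mode="reflect")
    rows = np.apply_along_axis(np.convolve, 1, padded, kernel, mode="valid")
    return np.apply_along_axis(np.convolve, 0, rows, kernel, mode="valid")


def difference_of_gaussians(img, sigma_fine=1.0, sigma_coarse=2.0):
    """Band-pass response: fine-scale blur minus coarse-scale blur."""
    return gaussian_blur(img, sigma_fine) - gaussian_blur(img, sigma_coarse)


def enhance(img, clip_limit=2.0, sigma_fine=1.0, sigma_coarse=2.0):
    """Hypothetical pipeline: contrast-limited equalization, then DoG filtering."""
    equalized = clip_limited_equalize(img, clip_limit)
    return equalized, difference_of_gaussians(equalized, sigma_fine, sigma_coarse)
```

In practice, OpenCV's `cv2.createCLAHE(clipLimit=..., tileGridSize=...)` provides the complete tiled algorithm; the hand-written version only makes the clipping and redistribution step explicit.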

3.3. HIRD-Net for Feature Extraction and Classification
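The attention block and the GAP-based feature aggregation mentioned in the contributions can be sketched in NumPy. This sketch assumes that the attention block follows the squeeze-and-excitation (SE) design of Hu et al. [44], which the reference list cites, and that the outputs of the four GAP layers are concatenated into a single descriptor; neither detail is confirmed in this section. The weight shapes, the reduction ratio `r`, and all function names are illustrative assumptions, and real weights would be learned during training.

```python
import numpy as np


def global_average_pool(fmap):
    """(C, H, W) feature map -> (C,) channel descriptor."""
    return fmap.mean(axis=(1, 2))


def se_attention(fmap, w1, b1, w2, b2):
    """Squeeze-and-excitation style channel attention.

    w1: (C // r, C) and w2: (C, C // r) for a hypothetical reduction ratio r.
    """
    z = global_average_pool(fmap)               # squeeze: one value per channel
    h = np.maximum(w1 @ z + b1, 0.0)            # excitation: FC + ReLU
    s = 1.0 / (1.0 + np.exp(-(w2 @ h + b2)))    # FC + sigmoid -> weights in (0, 1)
    return fmap * s[:, None, None]              # rescale each channel


def multilevel_gap(fmaps):
    """Pool feature maps of different spatial sizes and concatenate the results."""
    return np.concatenate([global_average_pool(f) for f in fmaps])
```

With all weights and biases set to zero, every channel receives the sigmoid weight 0.5, so the block simply halves its input; trained weights instead amplify informative channels and suppress the rest.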

3.3.1. Activation Function
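Hard-Swish is a piecewise approximation of Swish, defined as $x \cdot \mathrm{ReLU6}(x+3)/6$ (the form introduced with MobileNetV3). A small NumPy sketch of the function and its derivative shows why it is cheap to compute: it needs only clipping and multiplication, its gradient is exactly 0 for $x < -3$ and exactly 1 for $x > 3$, and it is linear, $(2x+3)/6$, in between. The function names are hypothetical; deep learning frameworks provide the activation natively (for example `torch.nn.Hardswish`).

```python
import numpy as np


def relu6(x):
    """ReLU capped at 6."""
    return np.clip(x, 0.0, 6.0)


def hard_swish(x):
    """Hard-Swish: x * ReLU6(x + 3) / 6."""
    x = np.asarray(x, dtype=np.float64)
    return x * relu6(x + 3.0) / 6.0


def hard_swish_grad(x):
    """Piecewise derivative: 0 below -3, 1 above 3, (2x + 3) / 6 in between."""
    x = np.asarray(x, dtype=np.float64)
    return np.where(x <= -3.0, 0.0, np.where(x >= 3.0, 1.0, (2.0 * x + 3.0) / 6.0))
```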

3.3.2. Loss Function
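The focal loss down-weights well-classified samples so that training concentrates on hard, often minority-class, examples. The sketch below implements the standard multi-class form $\mathrm{FL} = -\alpha_{y}\,(1-p_{y})^{\gamma}\log p_{y}$ on top of a softmax, where $p_y$ is the predicted probability of the true class $y$. The focusing parameter `gamma`, the optional per-class weights `alpha`, and their defaults are assumptions; the values used to train HIRD-Net are not stated in this section.

```python
import numpy as np


def softmax(logits):
    z = logits - logits.max(axis=1, keepdims=True)  # subtract row max for stability
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)


def focal_loss(logits, labels, gamma=2.0, alpha=None, eps=1e-12):
    """Mean multi-class focal loss.

    logits: (N, K) raw scores; labels: (N,) integer class indices;
    alpha: optional (K,) per-class weights.
    """
    probs = softmax(np.asarray(logits, dtype=np.float64))
    labels = np.asarray(labels)
    p_true = probs[np.arange(len(labels)), labels]   # probability of the true class
    weight = (1.0 - p_true) ** gamma                  # modulating factor
    if alpha is not None:
        weight = weight * np.asarray(alpha, dtype=np.float64)[labels]
    return float(np.mean(-weight * np.log(p_true + eps)))
```

With `gamma=0` and no `alpha`, the expression reduces to ordinary cross-entropy; a larger `gamma` shrinks the loss of confidently correct samples much faster than that of misclassified ones.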

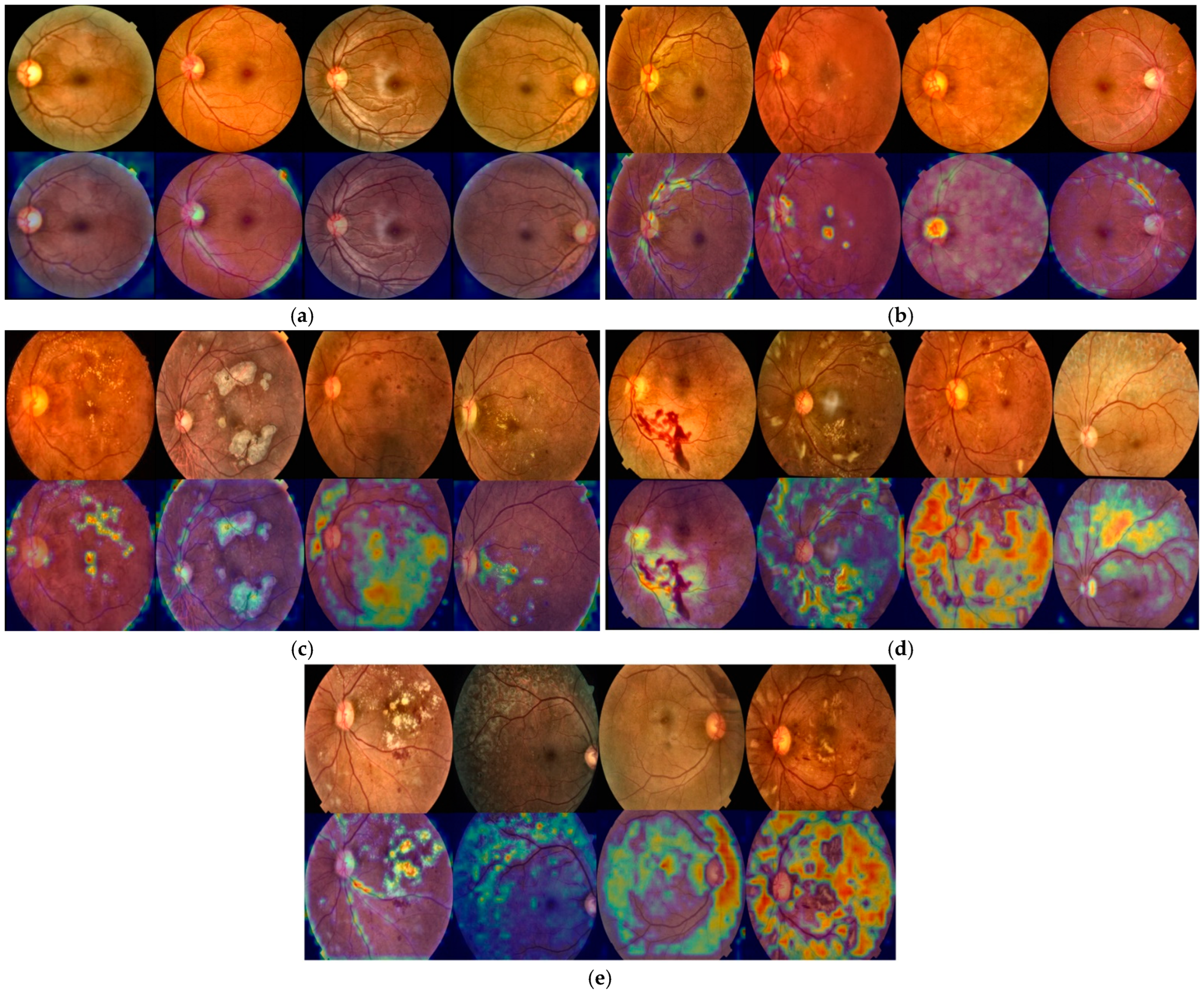

3.4. Interpretability Analysis Using Grad-CAM
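Grad-CAM [45] weights each channel $k$ of a chosen convolutional layer's activations $A^k$ by the spatially averaged gradient of the class score $y^c$, $\alpha_k = \frac{1}{Z}\sum_{i,j}\partial y^c/\partial A^k_{ij}$, and keeps the positive part of $\sum_k \alpha_k A^k$. The NumPy sketch below assumes the activations and gradients have already been extracted from the network (for example with framework hooks) and produces the coarse heat map; which HIRD-Net layer is visualized and how the map is upsampled and overlaid on the fundus image are not specified in this section, so the nearest-neighbour upsampling helper is a hypothetical simplification.

```python
import numpy as np


def grad_cam(activations, gradients):
    """Coarse Grad-CAM map from one layer.

    activations, gradients: (K, H, W) arrays for a single image, where
    gradients holds d(class score) / d(activations).
    """
    weights = gradients.mean(axis=(1, 2))             # alpha_k: averaged gradients
    cam = np.tensordot(weights, activations, axes=1)  # sum_k alpha_k * A^k -> (H, W)
    cam = np.maximum(cam, 0.0)                        # ReLU: keep positive evidence
    peak = cam.max()
    return cam / peak if peak > 0 else cam            # normalize to [0, 1]


def upsample_nearest(cam, factor):
    """Integer-factor nearest-neighbour upsampling for overlaying on the input."""
    return np.kron(cam, np.ones((factor, factor)))
```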

3.5. Model Training and Testing

3.6. Performance Evaluation Metrics

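Let $M$ denote the $K \times K$ confusion matrix, where $M_{ij}$ is the number of samples of actual class $i$ predicted as class $j$.

- True Positives (TP) for class $i$ are denoted by $TP_i = M_{ii}$.

- False Positives (FP) for class $i$ are calculated as the sum of instances incorrectly predicted as class $i$, i.e., $FP_i = \sum_{j \neq i} M_{ji}$.

- False Negatives (FN) for class $i$ correspond to samples that actually belong to class $i$ but are misclassified as other classes, i.e., $FN_i = \sum_{j \neq i} M_{ij}$.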
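Given a confusion matrix whose rows are actual classes and whose columns are predicted classes (an assumed orientation), the per-class counts follow from the diagonal, the column sums, and the row sums; precision, recall, and F1-score are then computed per class in the usual one-vs-rest way. A minimal NumPy sketch with a hypothetical 3-class matrix:

```python
import numpy as np


def per_class_counts(cm):
    """TP, FP, FN, TN per class from a (K, K) confusion matrix (rows = actual)."""
    cm = np.asarray(cm, dtype=np.int64)
    tp = np.diag(cm)
    fp = cm.sum(axis=0) - tp   # predicted as class i, actually another class
    fn = cm.sum(axis=1) - tp   # actually class i, predicted as another class
    tn = cm.sum() - tp - fp - fn
    return tp, fp, fn, tn


def per_class_metrics(cm):
    """Per-class precision, recall, F1, plus overall accuracy (fractions)."""
    tp, fp, fn, _ = per_class_counts(cm)
    precision = tp / (tp + fp)   # undefined if a class is never predicted
    recall = tp / (tp + fn)
    f1 = 2 * precision * recall / (precision + recall)
    accuracy = tp.sum() / np.asarray(cm).sum()
    return precision, recall, f1, accuracy


cm = [[5, 1, 0],
      [2, 3, 1],
      [0, 0, 4]]
tp, fp, fn, tn = per_class_counts(cm)
# tp = [5 3 4], fp = [2 1 1], fn = [1 3 0], tn = [8 9 11]
```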

4. Results

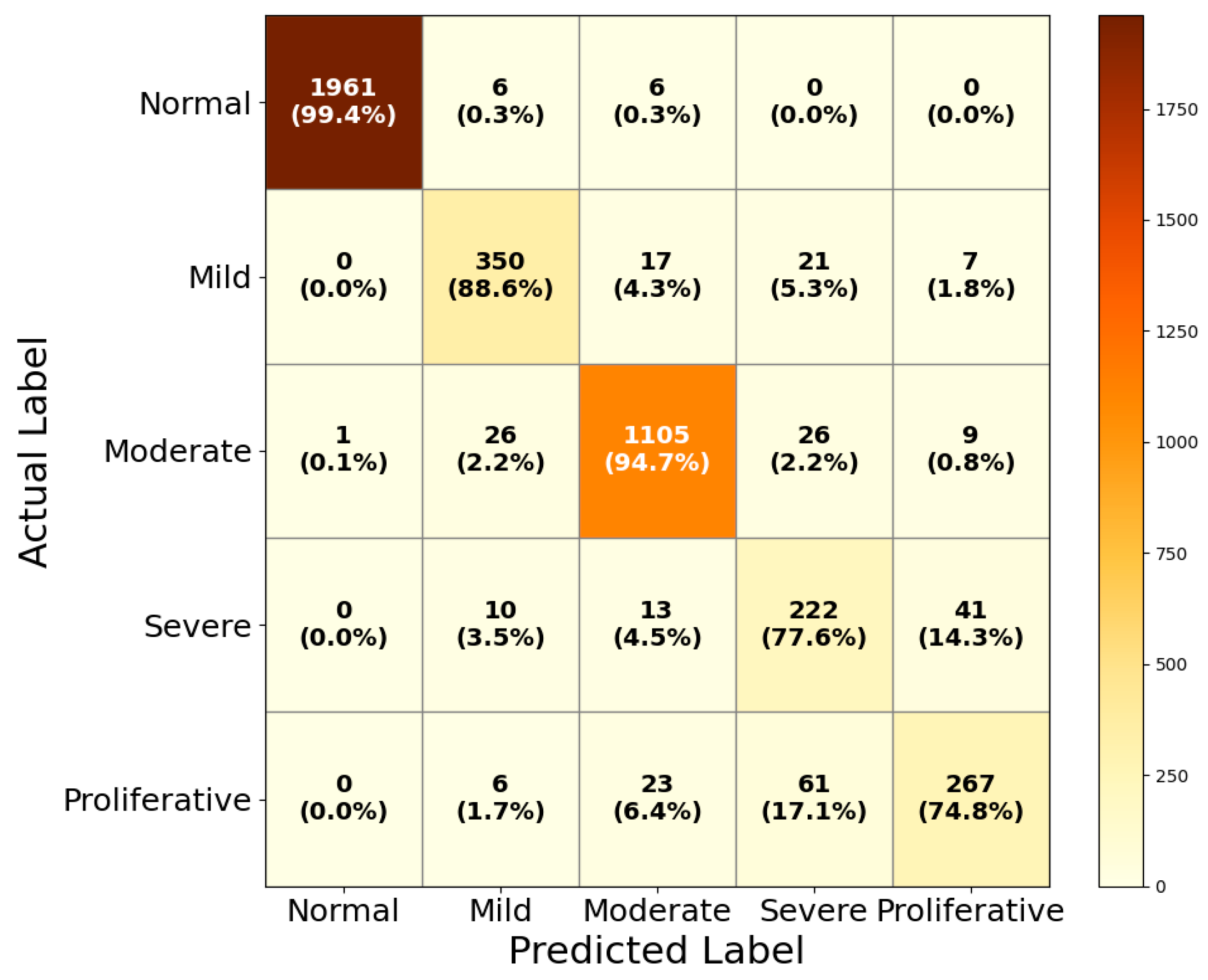

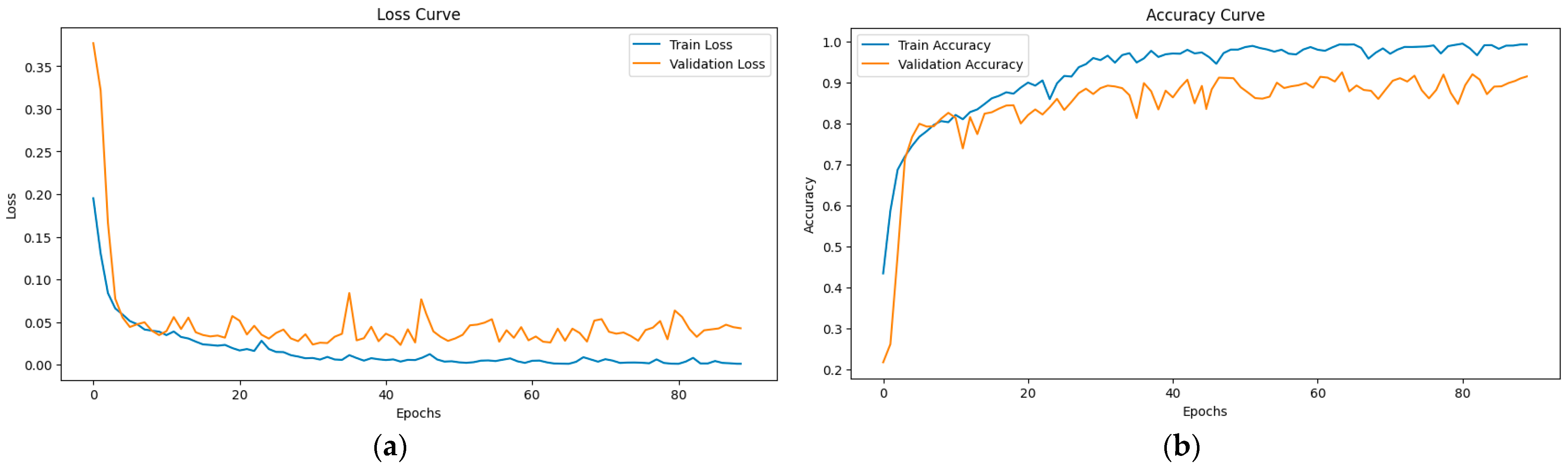

4.1. Ablation Study on IDRiD and APTOS2019

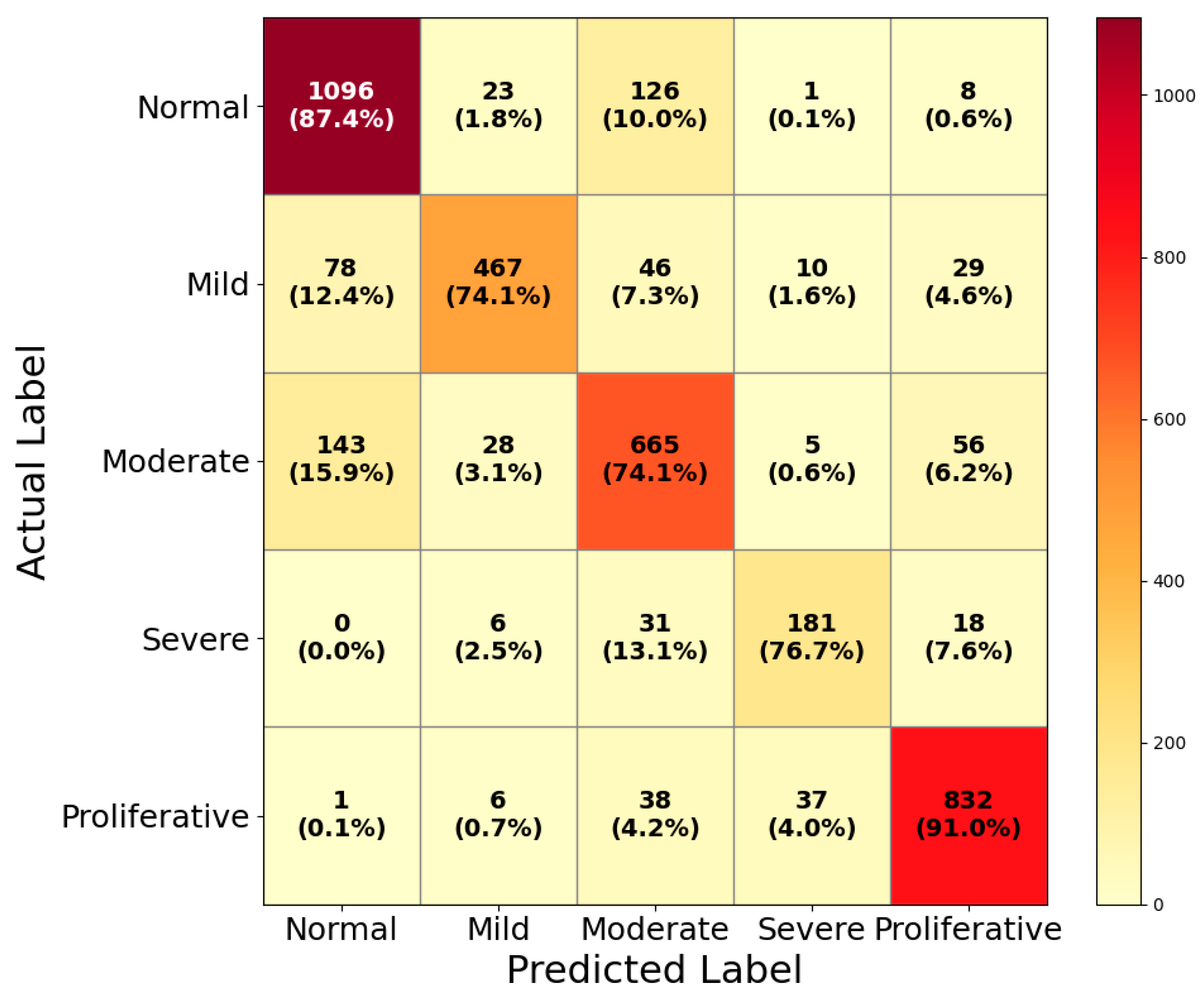

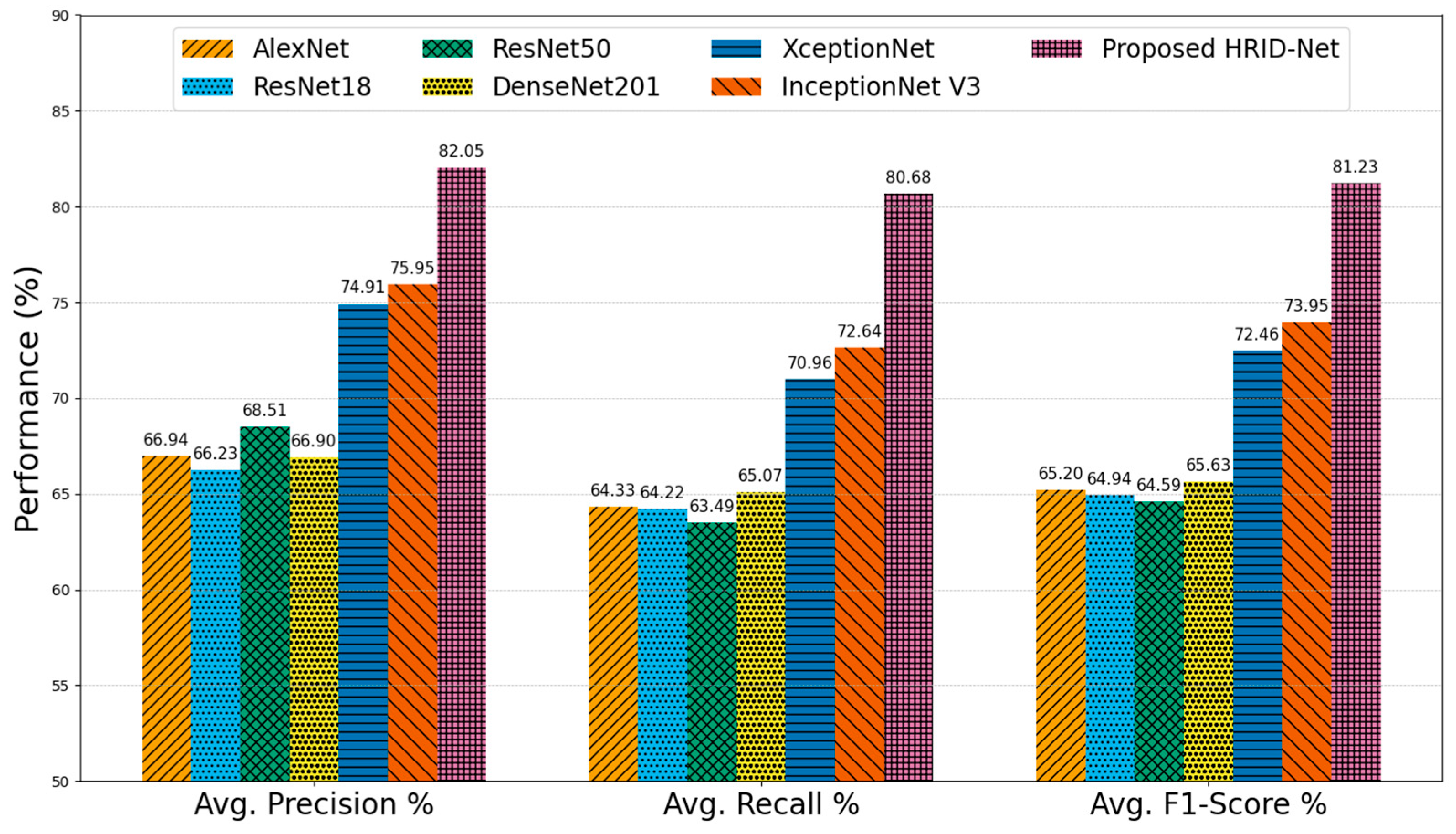

4.2. Performance Evaluation on DDR

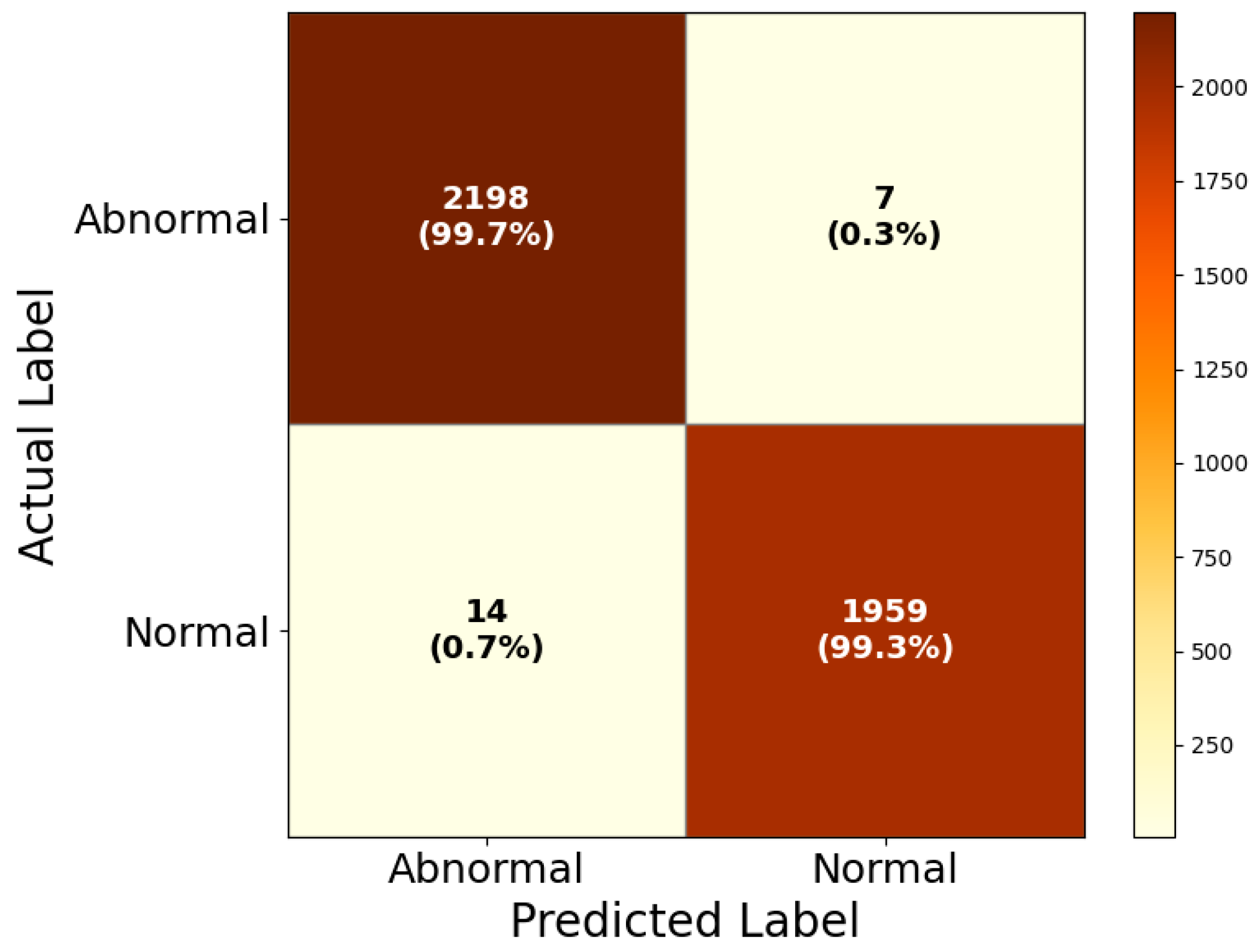

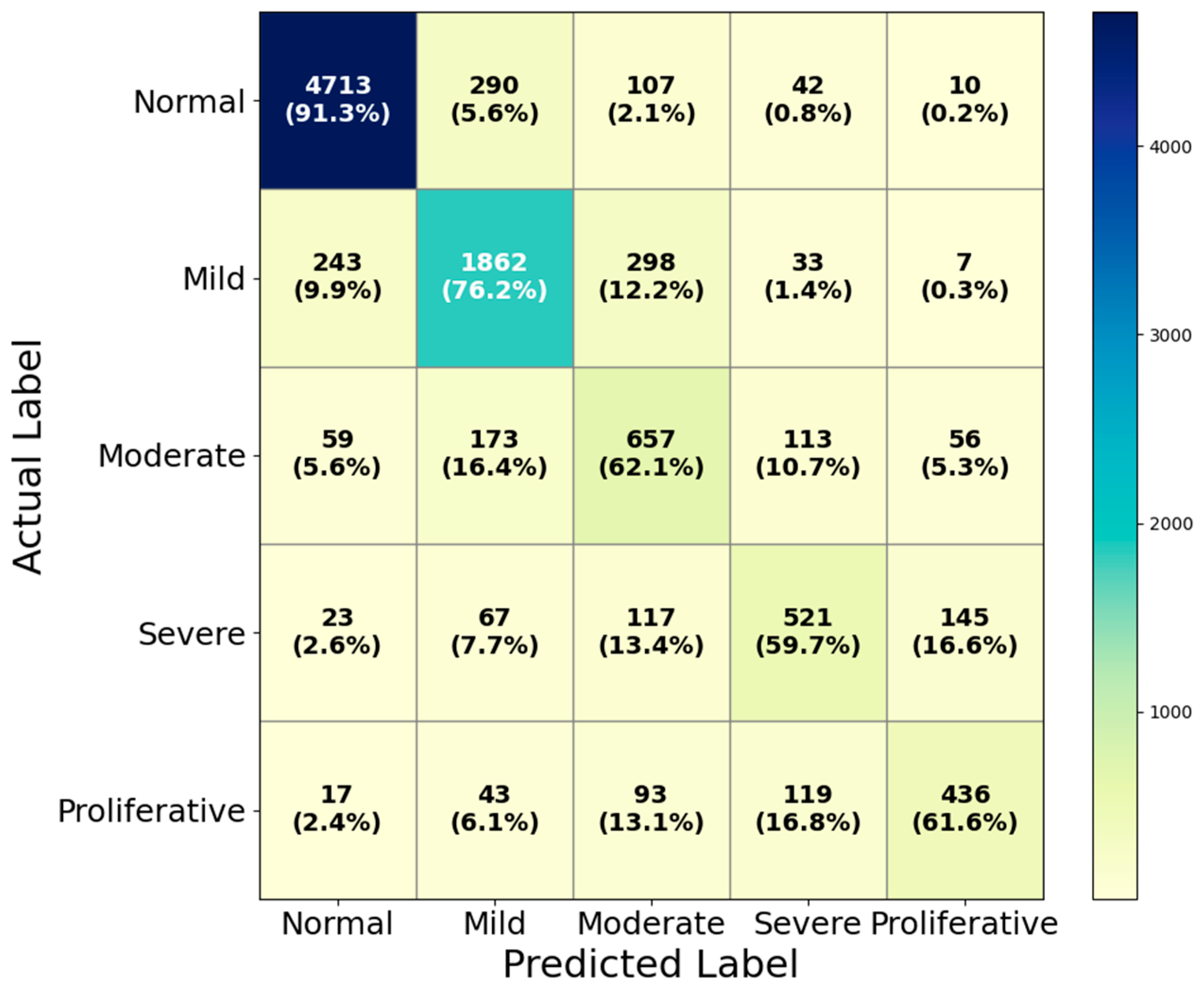

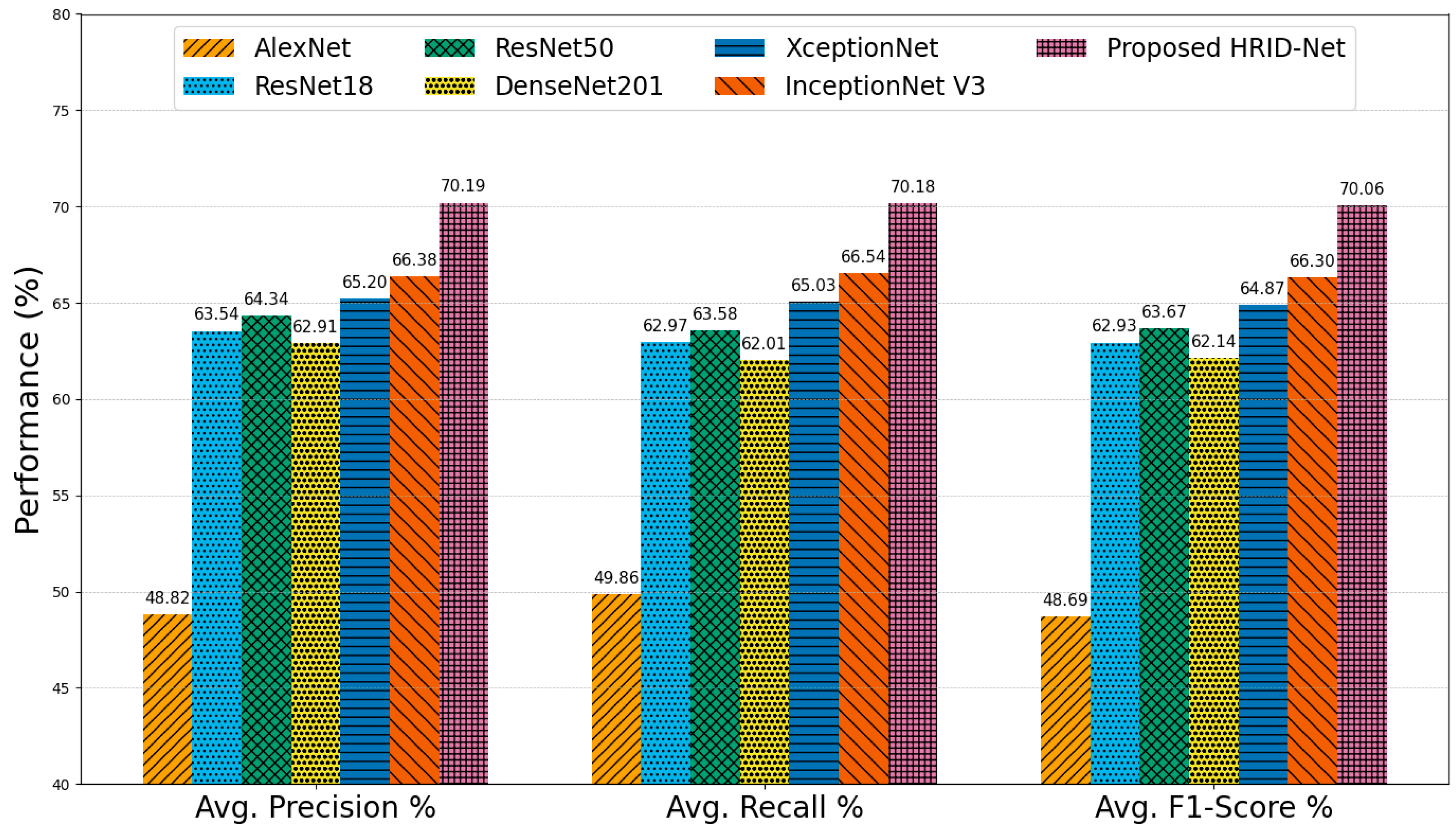

4.3. Performance Evaluation on EyePACS

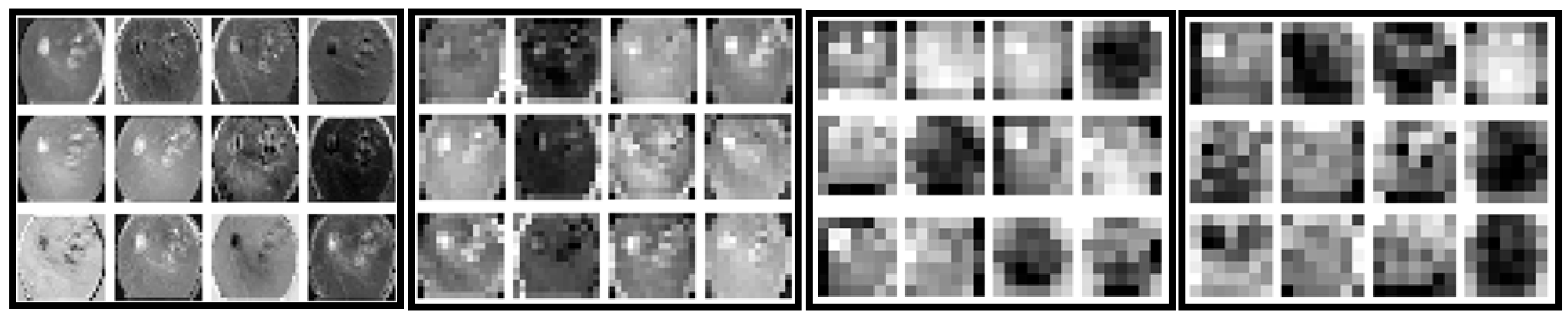

4.4. Grad-CAM-Based Visual Interpretability Analysis of HIRD-Net Predictions

5. Discussion

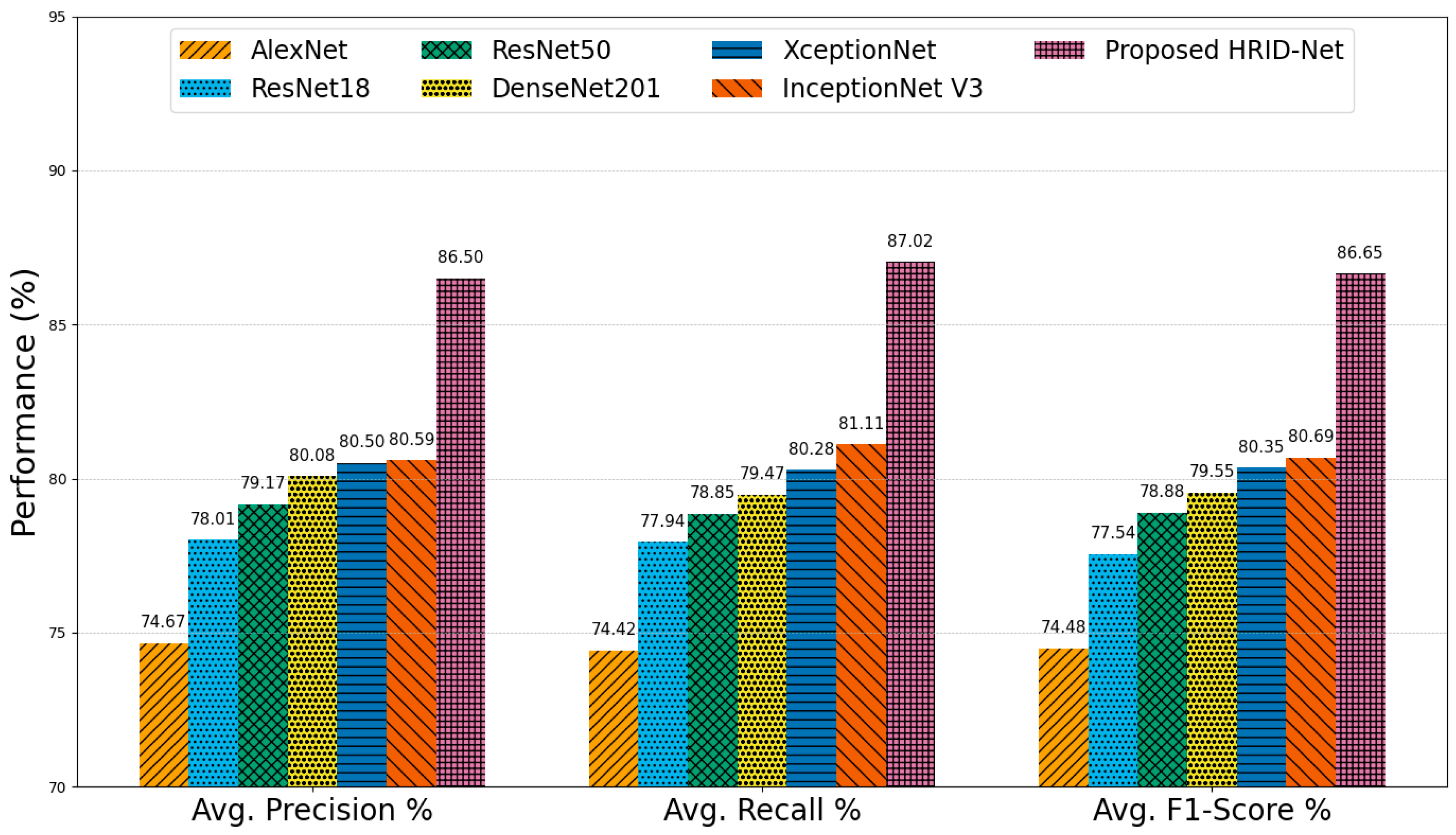

5.1. Comparative Analysis on IDRiD-APTOS2019 Dataset

5.2. Comparative Analysis on DDR Dataset

5.3. Comparative Analysis on EyePACS Dataset

5.4. Overall Comparative Evaluation

| Framework | CNN Architecture | IDRiD-APTOS2019 Accuracy (%) | DDR Accuracy (%) | EyePACS Accuracy (%) | Parameters (millions) |
|---|---|---|---|---|---|
| [31] | AlexNet | 84.97 | 71.13 | 58.77 | ~61 |
| [47] | ResNet18 | 88.75 | 71.92 | 73.39 | ~11.7 |
| [25,37,38] | ResNet50 | 88.87 | 72.73 | 74.39 | ~25.6 |
| [34] | DenseNet201 | 89.61 | 74.46 | 74.09 | ~20.2 |
| [48] | XceptionNet | 90.16 | 76.88 | 74.92 | ~22.9 |
| [14,31] | InceptionNet V3 | 90.43 | 78.28 | 76.02 | ~23.8 |
| Proposed | HIRD-Net | 93.46 | 82.45 | 79.94 | ~4.8 |
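The margins in the comparison table can be checked with a few lines of arithmetic: for each dataset, the gap between HIRD-Net and the strongest baseline, and the ratio of each baseline's approximate parameter count to HIRD-Net's ~4.8 M. The values are copied from the table; because the parameter counts are approximate, the ratios are rough.

```python
# Accuracy (%) per dataset, copied from the table: IDRiD-APTOS2019, DDR, EyePACS
accuracy = {
    "AlexNet": (84.97, 71.13, 58.77),
    "ResNet18": (88.75, 71.92, 73.39),
    "ResNet50": (88.87, 72.73, 74.39),
    "DenseNet201": (89.61, 74.46, 74.09),
    "XceptionNet": (90.16, 76.88, 74.92),
    "InceptionNet V3": (90.43, 78.28, 76.02),
}
params_m = {"AlexNet": 61, "ResNet18": 11.7, "ResNet50": 25.6,
            "DenseNet201": 20.2, "XceptionNet": 22.9, "InceptionNet V3": 23.8}
hird_acc = (93.46, 82.45, 79.94)
hird_params_m = 4.8
datasets = ("IDRiD-APTOS2019", "DDR", "EyePACS")


def margins_over_best():
    """Best baseline per dataset and HIRD-Net's lead over it (percentage points)."""
    out = {}
    for i, name in enumerate(datasets):
        best = max(accuracy, key=lambda m: accuracy[m][i])
        out[name] = (best, round(hird_acc[i] - accuracy[best][i], 2))
    return out


def param_ratios():
    """How many times more parameters each baseline has than HIRD-Net."""
    return {m: round(p / hird_params_m, 1) for m, p in params_m.items()}
```

On these numbers, HIRD-Net leads InceptionNet V3, the strongest baseline on all three datasets, by about 3.0 to 4.2 percentage points while using roughly one fifth of its parameters.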

6. Conclusions and Future Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- [1] Farooq, M.S.; Arooj, A.; Alroobaea, R.; Baqasah, A.M.; Jabarulla, M.Y.; Singh, D.; Sardar, R. Untangling Computer-Aided Diagnostic System for Screening Diabetic Retinopathy Based on Deep Learning Techniques. Sensors 2022, 22, 1803.

- [2] Ashraf, M.H.; Alghamdi, H. HFF-Net: A hybrid convolutional neural network for diabetic retinopathy screening and grading. Biomed. Technol. 2024, 8, 50–64.

- [3] Nahiduzzaman, M.; Islam, R.; Goni, O.F.; Anower, S.; Ahsan, M.; Haider, J.; Kowalski, M. Diabetic retinopathy identification using parallel convolutional neural network based feature extractor and ELM classifier. Expert Syst. Appl. 2023, 217, 119557.

- [4] Wang, S.; Wang, X.; Hu, Y.; Shen, Y.; Yang, Z.; Gan, M.; Lei, B. Diabetic Retinopathy Diagnosis Using Multichannel Generative Adversarial Network with Semisupervision. IEEE Trans. Autom. Sci. Eng. 2021, 18, 574–585.

- [5] Ikram, A.; Imran, A. ResViT FusionNet Model: An explainable AI-driven approach for automated grading of diabetic retinopathy in retinal images. Comput. Biol. Med. 2025, 186, 109656.

- [6] Ashraf, M.H.; Qureshi, M.E.; Khan, A.; Ahmed, M. DRD-Net: Diabetic Retinopathy Diagnosis Using A Hybrid Convolutional Neural Network. Int. J. Robot. Autom. Sci. 2025, 7, 96.

- [7] Lanzetta, P.; Sarao, V.; Scanlon, P.H.; Barratt, J.; Porta, M.; Bandello, F.; Loewenstein, A.; Vision Academy. Fundamental principles of an effective diabetic retinopathy screening program. Acta Diabetol. 2020, 57, 785–798.

- [8] Mateen, M.; Malik, T.S.; Hayat, S.; Hameed, M.; Sun, S.; Wen, J. Deep Learning Approach for Automatic Microaneurysms Detection. Sensors 2022, 22, 542.

- [9] Kanakaprabha, S.; Radha, D.; Santhanalakshmi, S. Diabetic Retinopathy Detection Using Deep Learning Models. Smart Innov. Syst. Technol. 2022, 302, 75–90.

- [10] da Rocha, D.A.; Ferreira, F.M.F.; Peixoto, Z.M.A. Diabetic retinopathy classification using VGG16 neural network. Res. Biomed. Eng. 2022, 38, 761–772.

- [11] Menaouer, B.; Dermane, Z.; Kebir, N.E.H.; Matta, N. Diabetic Retinopathy Classification Using Hybrid Deep Learning Approach. SN Comput. Sci. 2022, 3, 357.

- [12] Jaichandran, R.; Sivasubramanian, V.; Prakash, J.; Varshni, M. Detection of Diabetic Retinopathy Using Convolutional Neural Networks. ECS Trans. 2022, 107, 13321–13328.

- [13] Shanthi, T.; Sabeenian, R. Modified Alexnet architecture for classification of diabetic retinopathy images. Comput. Electr. Eng. 2019, 76, 56–64.

- [14] Gangwar, A.K.; Ravi, V. Diabetic Retinopathy Detection Using Transfer Learning and Deep Learning. Adv. Intell. Syst. Comput. 2021, 1176, 679–689.

- [15] Chakraborty, K.K.; Mukherjee, R.; Chakroborty, C.; Bora, K. Automated recognition of optical image based potato leaf blight diseases using deep learning. Physiol. Mol. Plant Pathol. 2022, 117, 101781.

- [16] Shankar, K.; Zhang, Y.; Liu, Y.; Wu, L.; Chen, C.-H. Hyperparameter Tuning Deep Learning for Diabetic Retinopathy Fundus Image Classification. IEEE Access 2020, 8, 118164–118173.

- [17] Vives-Boix, V.; Ruiz-Fernández, D. Diabetic retinopathy detection through convolutional neural networks with synaptic metaplasticity. Comput. Methods Programs Biomed. 2021, 206, 106094.

- [18] Asiri, N.; Hussain, M.; Al Adel, F.; Alzaidi, N. Deep learning based computer-aided diagnosis systems for diabetic retinopathy: A survey. Artif. Intell. Med. 2019, 99, 101701.

- [19] Yasser, I.; Khalifa, F.; Abdeltawab, H.; Ghazal, M.; Sandhu, H.S.; El-Baz, A. Automated Diagnosis of Optical Coherence Tomography Angiography (OCTA) Based on Machine Learning Techniques. Sensors 2022, 22, 2342.

- [20] Berbar, M.A. Features extraction using encoded local binary pattern for detection and grading diabetic retinopathy. Health Inf. Sci. Syst. 2022, 10, 14.

- [21] Görgel, P. Greedy segmentation based diabetic retinopathy identification using curvelet transform and scale invariant features. J. Eng. Res. 2021, 9, 134–150.

- [22] Islam, M.; Dinh, A.V.; Wahid, K.A. Automated Diabetic Retinopathy Detection Using Bag of Words Approach. J. Biomed. Sci. Eng. 2017, 10, 86–96.

- [23] Gour, N.; Khanna, P. Automated glaucoma detection using GIST and pyramid histogram of oriented gradients (PHOG) descriptors. Pattern Recognit. Lett. 2020, 137, 3–11.

- [24] Gharaibeh, N.; Al-Hazaimeh, O.M.; Abu-Ein, A.; Nahar, K.M. A Hybrid SVM NAÏVE-BAYES Classifier for Bright Lesions Recognition in Eye Fundus Images. Int. J. Electr. Eng. Inform. 2021, 13, 530–545.

- [25] Yaqoob, M.K.; Ali, S.F.; Bilal, M.; Hanif, M.S.; Al-Saggaf, U.M. ResNet Based Deep Features and Random Forest Classifier for Diabetic Retinopathy Detection. Sensors 2021, 21, 3883.

- [26] Das, D.; Biswas, S.K.; Bandyopadhyay, S. A critical review on diagnosis of diabetic retinopathy using machine learning and deep learning. Multimed. Tools Appl. 2022, 81, 25613–25655.

- [27] Simonyan, K.; Zisserman, A. Very Deep Convolutional Networks for Large-Scale Image Recognition. In Proceedings of the 3rd International Conference on Learning Representations, ICLR 2015-Conference Track Proceedings, San Diego, CA, USA, 7–9 May 2015; Available online: https://arxiv.org/abs/1409.1556v6 (accessed on 3 July 2025).

- [28] Szegedy, C.; Vanhoucke, V.; Ioffe, S.; Shlens, J.; Wojna, Z. Rethinking the Inception Architecture for Computer Vision. arXiv 2016, arXiv:1512.00567.

- [29] Huang, G.; Liu, Z.; van der Maaten, L.; Weinberger, K.Q. Densely Connected Convolutional Networks. 2017. Available online: https://github.com/liuzhuang13/DenseNet (accessed on 3 July 2025).

- [30] He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. 2016. Available online: http://image-net.org/challenges/LSVRC/2015 (accessed on 3 July 2025).

- [31] Tariq, H.; Rashid, M.; Javed, A.; Zafar, E.; Alotaibi, S.S.; Zia, M.Y.I. Performance Analysis of Deep-Neural-Network-Based Automatic Diagnosis of Diabetic Retinopathy. Sensors 2021, 22, 205.

- [32] Alghamdi, H.; Turki, T. PDD-Net: Plant Disease Diagnoses Using Multilevel and Multiscale Convolutional Neural Network Features. Agriculture 2023, 13, 1072.

- [33] Qureshi, M.E.; Ashraf, M.H.; Arshad, M.W.; Khan, A.; Ali, H.; Abdeen, Z.U. Hierarchical Feature Fusion with Inception V3 for Multiclass Plant Disease Classification. Informatica 2025, 49, 145–160.

- [34] Kobat, S.G.; Baygin, N.; Yusufoglu, E.; Baygin, M.; Barua, P.D.; Dogan, S.; Yaman, O.; Celiker, U.; Yildirim, H.; Tan, R.-S.; et al. Automated Diabetic Retinopathy Detection Using Horizontal and Vertical Patch Division-Based Pre-Trained DenseNET with Digital Fundus Images. Diagnostics 2022, 12, 1975.

- [35] Islam, M.R.; Abdulrazak, L.F.; Nahiduzzaman; Goni, O.F.; Anower, S.; Ahsan, M.; Haider, J.; Kowalski, M. Applying supervised contrastive learning for the detection of diabetic retinopathy and its severity levels from fundus images. Comput. Biol. Med. 2022, 146, 105602.

- [36] Khan, Z.; Khan, F.G.; Khan, A.; Rehman, Z.U.; Shah, S.; Qummar, S.; Ali, F.; Pack, S. Diabetic Retinopathy Detection Using VGG-NIN a Deep Learning Architecture. IEEE Access 2021, 9, 61408–61416.

- [37] Deng, L.; Liu, S.; Cheng, Y.; Zhao, G.; Xu, J. Algorithm for diabetic retinal image analysis based on deep learning. Multimed. Tools Appl. 2023, 82, 47559–47584.

- [38] Lin, C.-L.; Wu, K.-C. Development of revised ResNet-50 for diabetic retinopathy detection. BMC Bioinform. 2023, 24, 157.

- [39] Saranya, P.; Prabakaran, S. Automatic detection of non-proliferative diabetic retinopathy in retinal fundus images using convolution neural network. J. Ambient. Intell. Humaniz. Comput. 2021, 12, 5027–5036.

- [40] Porwal, P.; Pachade, S.; Kamble, R.; Kokare, M.; Deshmukh, G.; Sahasrabuddhe, V.; Meriaudeau, F. Indian Diabetic Retinopathy Image Dataset (IDRiD): A Database for Diabetic Retinopathy Screening Research. Data 2018, 3, 25.

- [41] Maggie, S.D.K. APTOS 2019 Blindness Detection. 2019. Kaggle. Available online: https://kaggle.com/competitions/aptos2019-blindness-detection (accessed on 3 July 2025).

- [42] Li, T.; Gao, Y.; Wang, K.; Guo, S.; Liu, H.; Kang, H. Diagnostic assessment of deep learning algorithms for diabetic retinopathy screening. Inf. Sci. 2019, 501, 511–522.

- [43] Emma Dugas, J.; Jared; Cukierski, W. Diabetic Retinopathy Detection. Available online: https://www.kaggle.com/competitions/diabetic-retinopathy-detection (accessed on 3 July 2025).

- [44] Hu, J.; Shen, L.; Sun, G. Squeeze-and-Excitation Networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–23 June 2018; pp. 7132–7141.

- [45] Selvaraju, R.R.; Cogswell, M.; Das, A.; Vedantam, R.; Parikh, D.; Batra, D. Grad-CAM: Visual Explanations from Deep Networks via Gradient-Based Localization. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 618–626.

- [46] Loshchilov, I.; Hutter, F. Decoupled Weight Decay Regularization. In Proceedings of the 7th International Conference on Learning Representations, ICLR 2019, New Orleans, LA, USA, 6–9 May 2019; Available online: https://arxiv.org/pdf/1711.05101 (accessed on 3 July 2025).

- [47] Sunkari, S.; Sangam, A.; Sreeram, P.V.; Suchetha, M.; Raman, R.; Rajalakshmi, R.; Suchetha, T. A refined ResNet18 architecture with Swish activation function for Diabetic Retinopathy classification. Biomed. Signal Process. Control. 2024, 88, 105630.

- [48] Kassani, S.H.; Kassani, P.H.; Khazaeinezhad, R.; Wesolowski, M.J.; Schneider, K.A.; Deters, R. Diabetic Retinopathy Classification Using a Modified Xception Architecture. In Proceedings of the 2019 IEEE 19th International Symposium on Signal Processing and Information Technology, ISSPIT 2019, Ajman, United Arab Emirates, 10–12 December 2019.

- [49] Almas, S.; Wahid, F.; Ali, S.; Alkhyyat, A.; Ullah, K.; Khan, J.; Lee, Y. Visual Impairment Prevention by Early Detection of Diabetic Retinopathy Based on Stacked Auto-Encoder. Sci. Rep. 2025, 15, 2554.

| Class | IDRiD + APTOS2019 (before augmentation) | DDR (before augmentation) | EyePACS (before augmentation) | IDRiD + APTOS2019 (after augmentation) | DDR (after augmentation) | EyePACS (after augmentation) |
|---|---|---|---|---|---|---|
| Normal | 1973 | 6266 | 25,810 | 9865 | 6266 | 25,810 |
| Mild DR | 395 | 630 | 2443 | 1975 | 3150 | 12,215 |
| Moderate DR | 1167 | 4477 | 5292 | 5835 | 4477 | 5292 |
| Severe DR | 286 | 236 | 873 | 1430 | 1180 | 4365 |
| Proliferative DR | 357 | 913 | 708 | 1785 | 4565 | 3540 |
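The per-class augmentation factors can be read from the class-distribution table by dividing each after-augmentation count by the corresponding before-augmentation count. The short sketch below does this and also reports the ratio between the largest and smallest class, a simple measure of imbalance; the counts are copied from the table and nothing else is assumed.

```python
classes = ("Normal", "Mild DR", "Moderate DR", "Severe DR", "Proliferative DR")
before = {
    "IDRiD + APTOS2019": (1973, 395, 1167, 286, 357),
    "DDR": (6266, 630, 4477, 236, 913),
    "EyePACS": (25810, 2443, 5292, 873, 708),
}
after = {
    "IDRiD + APTOS2019": (9865, 1975, 5835, 1430, 1785),
    "DDR": (6266, 3150, 4477, 1180, 4565),
    "EyePACS": (25810, 12215, 5292, 4365, 3540),
}


def augmentation_factors(dataset):
    """After/before count ratio for each class of one dataset."""
    return {c: a / b for c, a, b in zip(classes, after[dataset], before[dataset])}


def imbalance_ratio(counts):
    """Largest class size divided by smallest class size."""
    return max(counts) / min(counts)
```

For DDR and EyePACS, only the Mild, Severe, and Proliferative classes are expanded (five-fold), which lowers the largest-to-smallest class ratio from about 26.6 to 5.3 on DDR and from about 36.5 to 7.3 on EyePACS. On IDRiD + APTOS2019, every class is expanded five-fold, so that ratio (about 6.9) is unchanged.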

| Model | Enhancement | Augmentation | Stem | MLF | MSF | Attention Block | Screening Accuracy (%) | Grading Accuracy (%) |
|---|---|---|---|---|---|---|---|---|
| ResNet50 | ✘ | ✘ | SFF | ✔ | ✘ | ✘ | 93.13 | 61.79 |
| DenseNet | ✘ | ✘ | SFF | ✔ | ✘ | ✘ | 95.88 | 68.56 |
| InceptionNet | ✘ | ✘ | SFF | ✘ | ✔ | ✘ | 96.72 | 72.01 |
| HIRD-Net | ✘ | ✘ | SFF | ✔ | ✔ | ✘ | 97.12 | 76.45 |
| HIRD-Net | ✘ | ✘ | HFF | ✔ | ✔ | ✘ | 97.58 | 79.74 |
| ResNet50 | ✔ | ✔ | SFF | ✔ | ✘ | ✘ | 97.27 | 88.87 |
| DenseNet | ✔ | ✔ | SFF | ✔ | ✘ | ✘ | 98.03 | 89.61 |
| InceptionNet | ✔ | ✔ | SFF | ✘ | ✔ | ✘ | 97.93 | 90.42 |
| HIRD-Net | ✘ | ✔ | HFF | ✔ | ✔ | ✘ | 98.19 | 88.16 |
| HIRD-Net | ✔ | ✔ | HFF | ✔ | ✔ | ✘ | 99.45 | 92.25 |
| HIRD-Net | ✔ | ✔ | HFF | ✔ | ✔ | ✔ | 99.50 | 93.46 |
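The contribution of individual components to grading accuracy can be read from the ablation table as differences between HIRD-Net rows that change one setting at a time. The sketch below computes these differences; the short row keys are descriptive labels introduced here, and the accuracy values are copied from the table.

```python
# HIRD-Net grading accuracy (%) from the ablation table, one entry per row
grading = {
    "sff": 76.45,          # SFF stem, no enhancement, augmentation, or attention
    "hff": 79.74,          # HFF stem instead of SFF
    "hff_aug": 88.16,      # + augmentation
    "hff_aug_enh": 92.25,  # + enhancement
    "full": 93.46,         # + attention block
}


def gain(with_key, without_key):
    """Accuracy difference (percentage points) between two ablation rows."""
    return round(grading[with_key] - grading[without_key], 2)


steps = [("hff", "sff"), ("hff_aug", "hff"), ("hff_aug_enh", "hff_aug"), ("full", "hff_aug_enh")]
gains = {w: gain(w, wo) for w, wo in steps}
```

With these rows, augmentation gives the largest single gain (8.42 points), followed by enhancement (4.09), the HFF stem (3.29), and the attention block (1.21). Because each step is measured on top of the previous configuration, the individual gains depend on the order in which components are added.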

| CNN Architecture | Class | TP | FP | FN | TN | Precision (%) | Recall (%) | F1-score (%) |
|---|---|---|---|---|---|---|---|---|
| AlexNet | Normal | 1871 | 103 | 102 | 2307 | 94.8 | 94.8 | 94.81 |
| | Mild | 303 | 77 | 92 | 3875 | 79.7 | 76.7 | 78.19 |
| | Moderate | 1007 | 163 | 160 | 3171 | 86.1 | 86.3 | 86.18 |
| | Severe | 157 | 168 | 129 | 4021 | 48.3 | 54.9 | 51.39 |
| | Proliferative | 212 | 117 | 145 | 3966 | 64.4 | 59.4 | 61.81 |
| ResNet18 | Normal | 1965 | 81 | 8 | 2213 | 96.0 | 99.6 | 97.79 |
| | Mild | 326 | 80 | 69 | 3852 | 80.3 | 82.5 | 81.40 |
| | Moderate | 1045 | 39 | 122 | 3133 | 96.4 | 89.5 | 92.85 |
| | Severe | 199 | 170 | 87 | 3979 | 53.9 | 69.6 | 60.76 |
| | Proliferative | 173 | 100 | 184 | 4005 | 63.4 | 48.5 | 54.92 |
| ResNet50 | Normal | 1927 | 82 | 46 | 2251 | 95.9 | 97.7 | 96.79 |
| | Mild | 315 | 62 | 80 | 3863 | 83.6 | 79.7 | 81.61 |
| | Moderate | 1073 | 67 | 94 | 3105 | 94.1 | 91.9 | 93.02 |
| | Severe | 193 | 141 | 93 | 3985 | 57.8 | 67.5 | 62.26 |
| | Proliferative | 205 | 113 | 152 | 3973 | 64.5 | 57.4 | 60.74 |
| DenseNet201 | Normal | 1937 | 88 | 36 | 2241 | 95.7 | 98.2 | 96.90 |
| | Mild | 362 | 101 | 33 | 3816 | 78.2 | 91.6 | 84.38 |
| | Moderate | 1072 | 52 | 95 | 3106 | 95.4 | 91.9 | 93.58 |
| | Severe | 161 | 101 | 125 | 4017 | 61.5 | 56.3 | 58.76 |
| | Proliferative | 212 | 92 | 145 | 3966 | 69.7 | 59.4 | 64.15 |
| XceptionNet | Normal | 1928 | 42 | 45 | 2250 | 97.9 | 97.7 | 97.79 |
| | Mild | 356 | 47 | 39 | 3822 | 88.3 | 90.1 | 89.22 |
| | Moderate | 1094 | 82 | 73 | 3084 | 93.0 | 93.7 | 93.38 |
| | Severe | 156 | 102 | 130 | 4022 | 60.5 | 54.5 | 57.35 |
| | Proliferative | 233 | 138 | 124 | 3945 | 62.8 | 65.3 | 64.01 |
| InceptionNet V3 | Normal | 1925 | 41 | 48 | 2253 | 97.9 | 97.6 | 97.74 |
| | Mild | 343 | 105 | 52 | 3835 | 76.6 | 86.8 | 81.38 |
| | Moderate | 1107 | 50 | 60 | 3071 | 95.7 | 94.9 | 95.27 |
| | Severe | 193 | 110 | 93 | 3985 | 63.7 | 67.5 | 65.53 |
| | Proliferative | 210 | 94 | 147 | 3968 | 69.1 | 58.8 | 63.54 |
| Proposed HIRD-Net | Normal | 1961 | 1 | 12 | 2217 | 99.9 | 99.4 | 99.67 |
| | Mild | 350 | 48 | 45 | 3828 | 87.9 | 88.6 | 88.27 |
| | Moderate | 1105 | 59 | 62 | 3073 | 94.9 | 94.7 | 94.81 |
| | Severe | 222 | 108 | 64 | 3956 | 67.3 | 77.6 | 72.08 |
| | Proliferative | 267 | 57 | 90 | 3911 | 82.4 | 74.8 | 78.41 |
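The per-class precision, recall, and F1-score reported for HIRD-Net in the per-class table follow from its TP, FP, and FN counts. Summing TP over classes and dividing by the number of test images (TP + FN summed over classes, 4178 here) reproduces the reported 93.46% accuracy to within rounding. A short check with the counts copied from the table:

```python
# (TP, FP, FN) per class for the proposed HIRD-Net, copied from the table
hird_counts = {
    "Normal": (1961, 1, 12),
    "Mild": (350, 48, 45),
    "Moderate": (1105, 59, 62),
    "Severe": (222, 108, 64),
    "Proliferative": (267, 57, 90),
}


def metrics(tp, fp, fn):
    """Precision, recall, and F1-score (all in %) for one class."""
    precision = 100.0 * tp / (tp + fp)
    recall = 100.0 * tp / (tp + fn)
    f1 = 100.0 * 2 * tp / (2 * tp + fp + fn)  # equivalent to 2PR / (P + R)
    return precision, recall, f1


def overall_accuracy(counts):
    """Correct predictions over all test images, in %."""
    correct = sum(tp for tp, _, _ in counts.values())
    total = sum(tp + fn for tp, _, fn in counts.values())  # each image counted once
    return 100.0 * correct / total
```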

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Ashraf, M.H.; Mehmood, M.N.; Ahmed, M.; Hussain, D.; Khan, J.; Jung, Y.; Zakariah, M.; AlSekait, D.M. HIRD-Net: An Explainable CNN-Based Framework with Attention Mechanism for Diabetic Retinopathy Diagnosis Using CLAHE-D-DoG Enhanced Fundus Images. Life 2025, 15, 1411. https://doi.org/10.3390/life15091411
