Artificial Intelligence and Healthcare: A Journey through History, Present Innovations, and Future Possibilities

Abstract

1. Introduction

2. Section 1: Groundwork and Historical Evaluation

2.1. Artificial Intelligence: A Historical Perspective

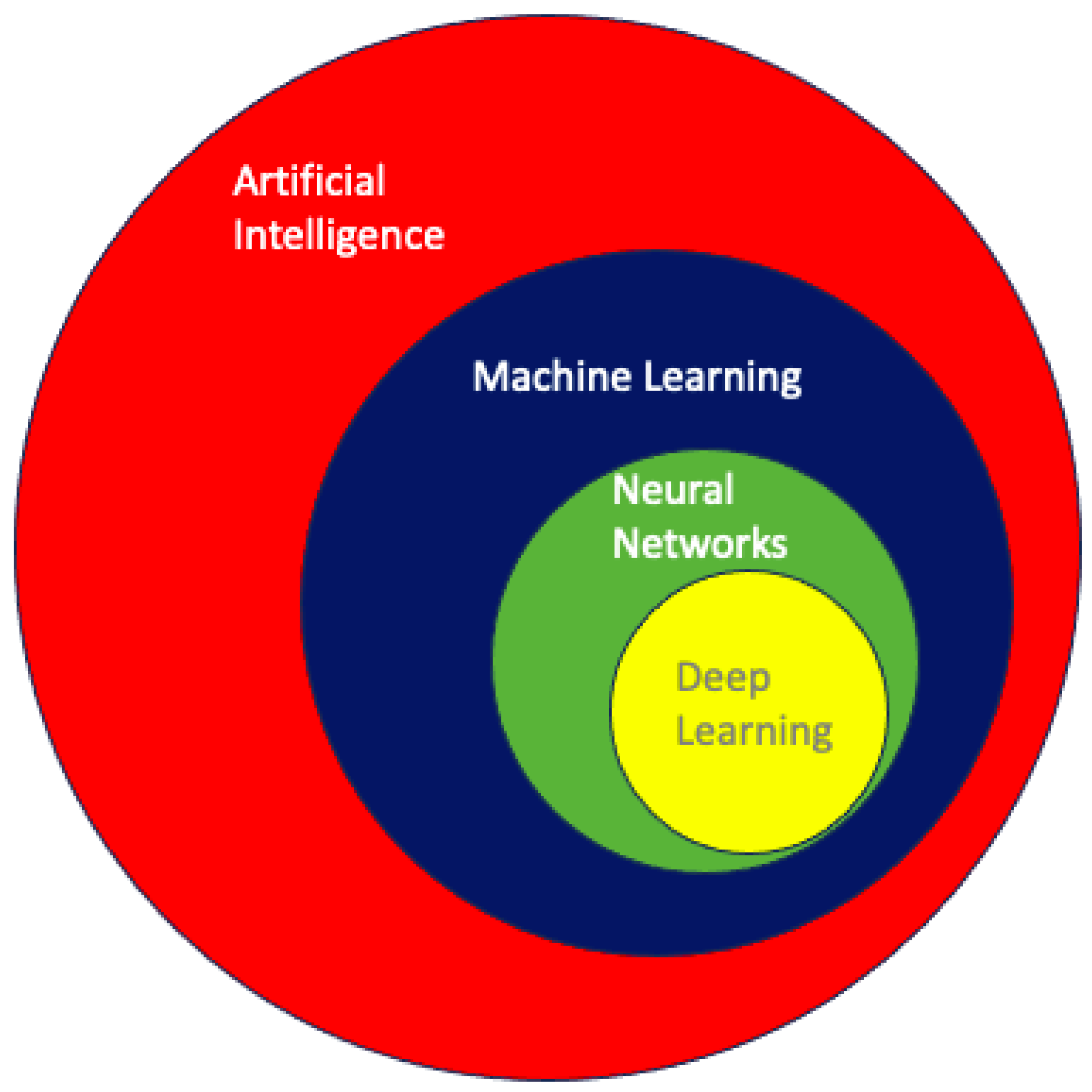

2.2. Machine Learning and Neural Networks in Healthcare

3. Section 2: Current Innovations and Applications

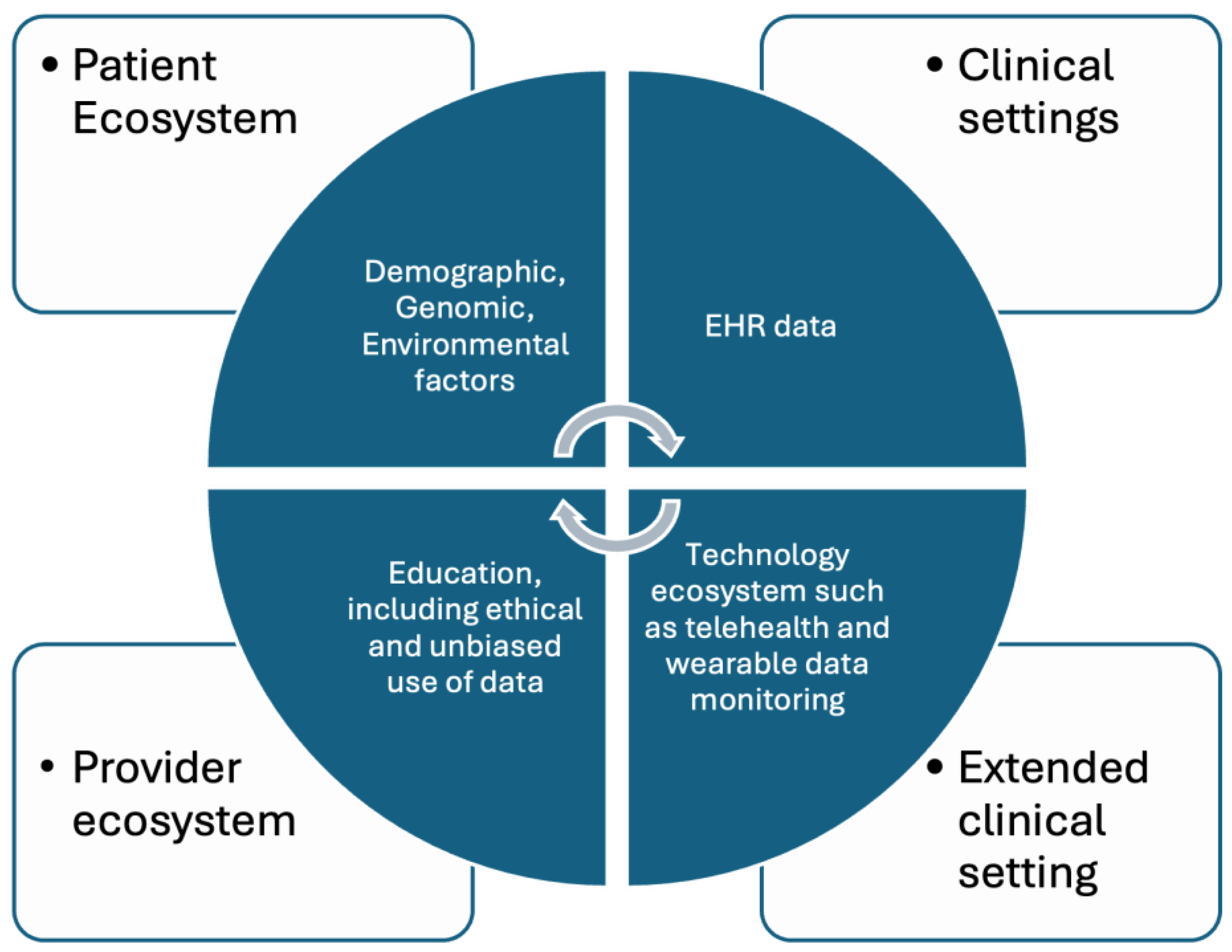

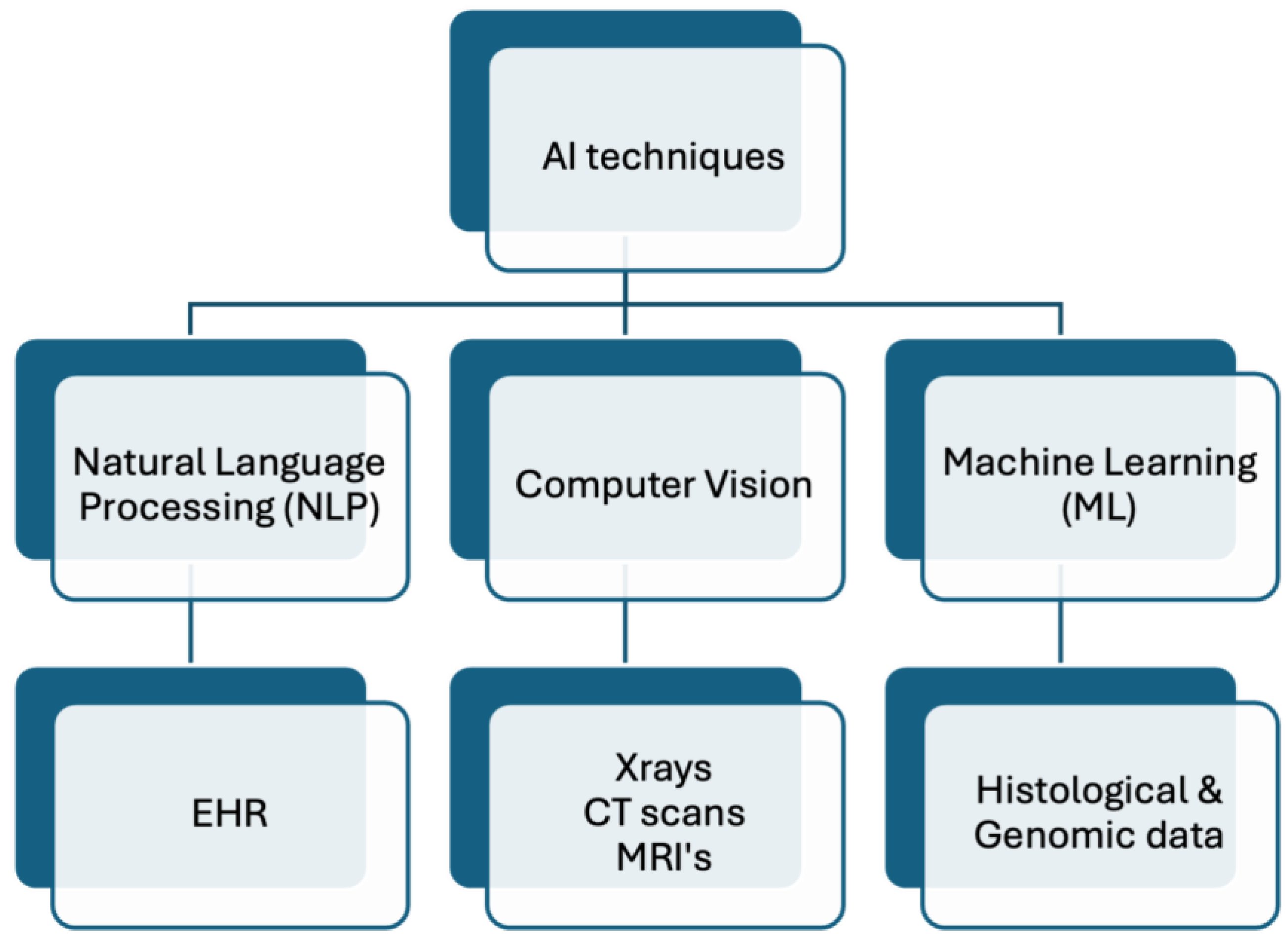

3.1. Advancing Personalized Medicine: Leveraging AI across Multifaceted Health Data Domains

3.2. Real-Time Monitoring of Immunization: Population-Centric Digital Innovations in Public Health and Research

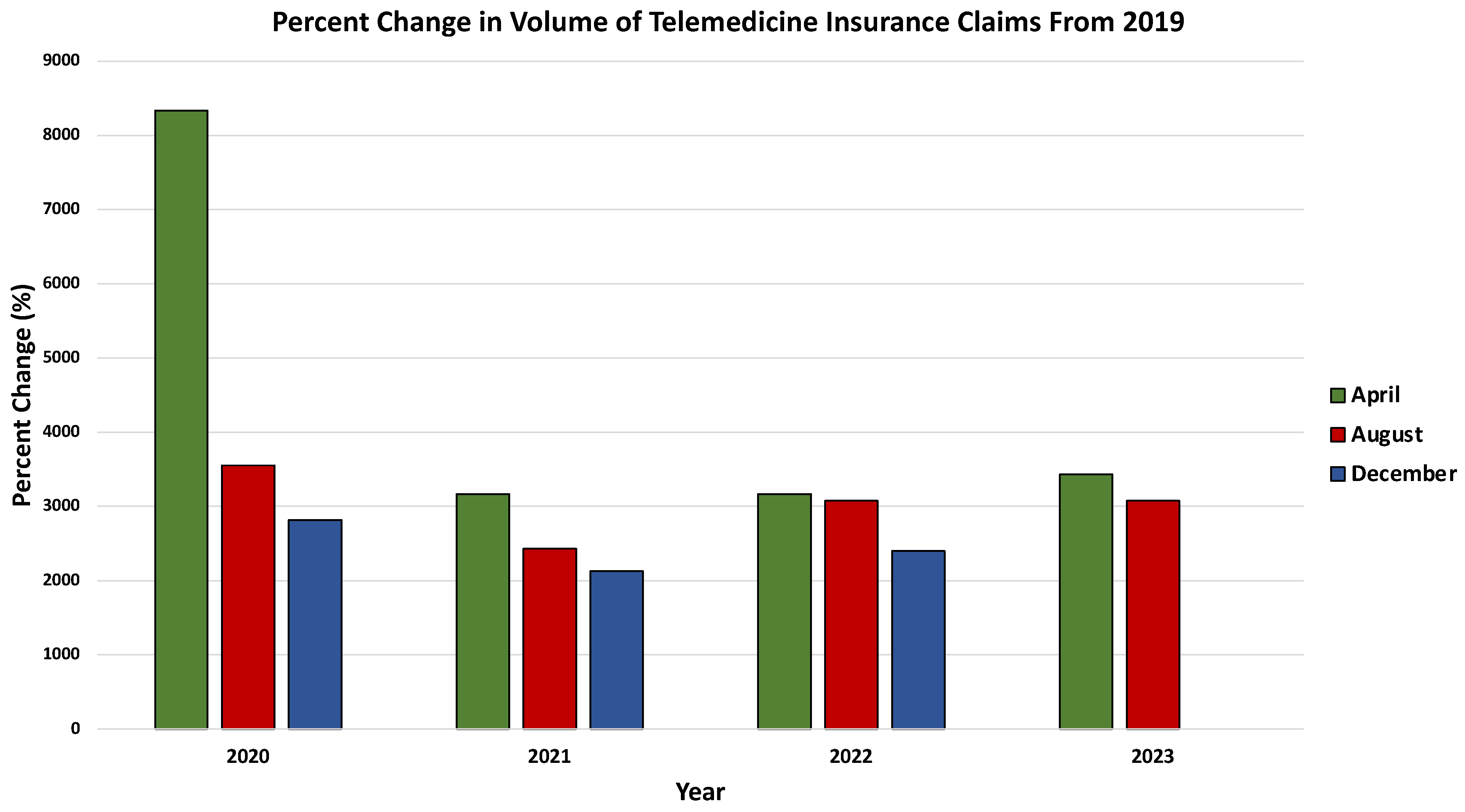

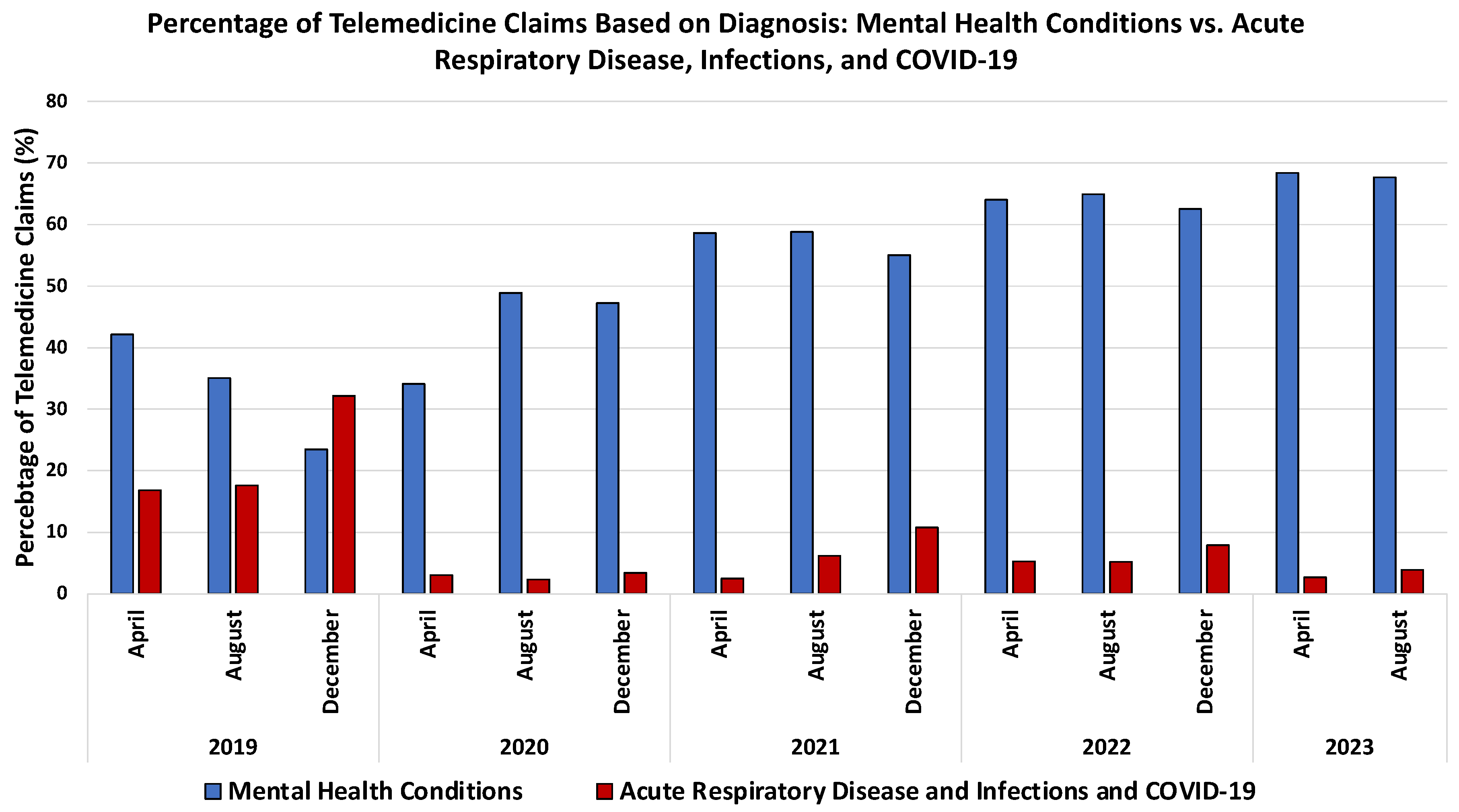

3.3. Revolutionizing Healthcare: The Synergy of Telemedicine and Artificial Intelligence

4. Section 3: AI in Healthcare Engagement and Education

4.1. Exploring the Impact of Chatbots on Patient Engagement, Mental Health Support, and Medical Communication

4.2. AI Integration in Medical Education: Transformative Trends and Challenges

5. Section 4: Ethical Considerations, Limitations, and Future Directions

Ethical and Societal Considerations in Integrating AI into Medical Practice

6. Conclusions

Author Contributions

Funding

Conflicts of Interest

Abbreviations

| AI | Artificial intelligence |

| ML | Machine Learning |

| ANNs | Artificial Neural Networks |

| CNNs | Convolutional Neural Networks |

| CAD | Computer-Aided Detection |

| MRI | Magnetic Resonance Imaging |

| EHRs | Electronic Health Records |

| GANs | Generative Adversarial Networks |

| NLP | Natural Language Processing |

| CT | Computed Tomography |

| DNA | Deoxyribonucleic Acid |

| GIS | Geographical Information Systems |

| WHO | World Health Organization |

| HIPAA | Health Insurance Portability and Accountability Act |

| FDA | Food and Drug Administration |

| PPE | Personal Protective Equipment |

| EMRs | Electronic Medical Records |

References

- Hamet, P.; Tremblay, J. Artificial intelligence in medicine. Metabolism 2017, 69, S36–S40. [Google Scholar] [CrossRef] [PubMed]

- Shieber, S.M. The Turing Test as Interactive Proof. Noûs 2007, 41, 686–713. [Google Scholar] [CrossRef]

- Cordeschi, R. Ai Turns Fifty: Revisiting Its Origins. Appl. Artif. Intell. 2007, 21, 259–279. [Google Scholar] [CrossRef]

- AI’s Half Century: On the Thresholds of the Dartmouth Conference. Available online: https://iris.uniroma1.it/handle/11573/97960 (accessed on 6 November 2023).

- Buchanan, B.G. A (Very) Brief History of Artificial Intelligence. AI Mag. 2005, 26, 53. [Google Scholar] [CrossRef]

- Moran, M.E. Evolution of robotic arms. J. Robot. Surg. 2007, 1, 103–111. [Google Scholar] [CrossRef]

- Weizenbaum, J. ELIZA—A computer program for the study of natural language communication between man and machine. Commun. ACM 1983, 26, 23–28. [Google Scholar] [CrossRef]

- Miller, R.A.; Pople, H.E.; Myers, J.D. Internist-I, an Experimental Computer-Based Diagnostic Consultant for General Internal Medicine. N. Engl. J. Med. 1982, 307, 468–476. [Google Scholar] [CrossRef]

- Kulikowski, C.A. Beginnings of Artificial Intelligence in Medicine (AIM): Computational Artifice Assisting Scientific Inquiry and Clinical Art—With Reflections on Present AIM Challenges. Yearb. Med. Inform. 2019, 28, 249–256. [Google Scholar] [CrossRef] [PubMed]

- Shortliffe, E.H. Mycin: A Knowledge-Based Computer Program Applied to Infectious Diseases. In Proceedings of the Annual Symposium on Computer Application in Medical Care, Washington, DC, USA, 3–5 October 1977; pp. 66–69. [Google Scholar]

- The Laboratory of Computer Science | DXplain. Available online: http://www.mghlcs.org/projects/dxplain (accessed on 6 November 2023).

- Ferrucci, D.; Levas, A.; Bagchi, S.; Gondek, D.; Mueller, E.T. Watson: Beyond Jeopardy! Artif. Intell. 2013, 199–200, 93–105. [Google Scholar] [CrossRef]

- Mintz, Y.; Brodie, R. Introduction to artificial intelligence in medicine. Minim. Invasive Ther. Allied Technol. 2019, 28, 73–81. [Google Scholar] [CrossRef]

- Bakkar, N.; Kovalik, T.; Lorenzini, I.; Spangler, S.; Lacoste, A.; Sponaugle, K.; Ferrante, P.; Argentinis, E.; Sattler, R.; Bowser, R. Artificial intelligence in neurodegenerative disease research: Use of IBM Watson to identify additional RNA-binding proteins altered in amyotrophic lateral sclerosis. Acta Neuropathol. 2018, 135, 227–247. [Google Scholar] [CrossRef]

- Machine Learning—An Overview | ScienceDirect Topics. Available online: https://www.sciencedirect.com/topics/computer-science/machine-learning (accessed on 7 November 2023).

- Chan, S.; Reddy, V.; Myers, B.; Thibodeaux, Q.; Brownstone, N.; Liao, W. Machine Learning in Dermatology: Current Applications, Opportunities, and Limitations. Dermatol. Ther. 2020, 10, 365–386. [Google Scholar] [CrossRef]

- Altman, N.S. An Introduction to Kernel and Nearest-Neighbor Nonparametric Regression. Am. Stat. 1992, 46, 175–185. [Google Scholar] [CrossRef]

- Hand, D.J. The Relationship between Artificial Intelligence (AI), Machine Learning (ML) and Deep Learning (DL). Available online: http://danieljhand.com/the-relationship-between-artificial-intelligence-ai-machine-learning-ml-and-deep-learning-dl.html (accessed on 13 April 2024).

- Zou, J.; Han, Y.; So, S.-S. Overview of artificial neural networks. Methods Mol. Biol. Clifton NJ 2008, 458, 15–23. [Google Scholar] [CrossRef] [PubMed]

- Indolia, S.; Goswami, A.K.; Mishra, S.P.; Asopa, P. Conceptual Understanding of Convolutional Neural Network—A Deep Learning Approach. Procedia Comput. Sci. 2018, 132, 679–688. [Google Scholar] [CrossRef]

- Fukushima, K. Neocognitron: A self-organizing neural network model for a mechanism of pattern recognition unaffected by shift in position. Biol. Cybern. 1980, 36, 193–202. [Google Scholar] [CrossRef] [PubMed]

- Lo, S.-C.B.; Lou, S.-L.A.; Lin, J.-S.; Freedman, M.T.; Chien, M.V.; Mun, S.K. Artificial convolution neural network techniques and applications for lung nodule detection. IEEE Trans. Med. Imaging 1995, 14, 711–718. [Google Scholar] [CrossRef] [PubMed]

- Hosny, A.; Parmar, C.; Quackenbush, J.; Schwartz, L.H.; Aerts, H.J.W.L. Artificial intelligence in radiology. Nat. Rev. Cancer 2018, 18, 500–510. [Google Scholar] [CrossRef]

- Castellino, R.A. Computer aided detection (CAD): An overview. Cancer Imaging 2005, 5, 17–19. [Google Scholar] [CrossRef]

- Nichols, J.A.; Chan, H.W.H.; Baker, M.A.B. Machine learning: Applications of artificial intelligence to imaging and diagnosis. Biophys. Rev. 2019, 11, 111. [Google Scholar] [CrossRef]

- Ghafoorian, M.; Karssemeijer, N.; Heskes, T.; van Uden, I.W.M.; Sanchez, C.I.; Litjens, G.; de Leeuw, F.-E.; van Ginneken, B.; Marchiori, E.; Platel, B. Location Sensitive Deep Convolutional Neural Networks for Segmentation of White Matter Hyperintensities. Sci. Rep. 2017, 7, 5110. [Google Scholar] [CrossRef] [PubMed]

- Ciążyńska, M.; Kamińska-Winciorek, G.; Lange, D.; Lewandowski, B.; Reich, A.; Sławińska, M.; Pabianek, M.; Szczepaniak, K.; Hankiewicz, A.; Ułańska, M.; et al. The incidence and clinical analysis of non-melanoma skin cancer. Sci. Rep. 2021, 11, 4337. [Google Scholar] [CrossRef] [PubMed]

- Chen, S.C.; Bravata, D.M.; Weil, E.; Olkin, I. A comparison of dermatologists’ and primary care physicians’ accuracy in diagnosing melanoma: A systematic review. Arch. Dermatol. 2001, 137, 1627–1634. [Google Scholar] [CrossRef]

- Han, S.S.; Park, G.H.; Lim, W.; Kim, M.S.; Na, J.I.; Park, I.; Chang, S.E. Deep neural networks show an equivalent and often superior performance to dermatologists in onychomycosis diagnosis: Automatic construction of onychomycosis datasets by region-based convolutional deep neural network. PLoS ONE 2018, 13, e0191493. [Google Scholar] [CrossRef] [PubMed]

- Habehh, H.; Gohel, S. Machine Learning in Healthcare. Curr. Genom. 2021, 22, 291–300. [Google Scholar] [CrossRef] [PubMed]

- Kosorok, M.R.; Laber, E.B. Precision Medicine. Annu. Rev. Stat. Its Appl. 2019, 6, 263–286. [Google Scholar] [CrossRef] [PubMed]

- Gordon, E.; Koslow, S.H. Integrative Neuroscience and Personalized Medicine; Oxford University Press: New York, NY, USA, 2011; ISBN 978-0-19-539380-4. [Google Scholar]

- Cirillo, D.; Valencia, A. Big data analytics for personalized medicine. Curr. Opin. Biotechnol. 2019, 58, 161–167. [Google Scholar] [CrossRef] [PubMed]

- Berger, B.; Peng, J.; Singh, M. Computational solutions for omics data. Nat. Rev. Genet. 2013, 14, 333–346. [Google Scholar] [CrossRef] [PubMed]

- Isgut, M.; Gloster, L.; Choi, K.; Venugopalan, J.; Wang, M.D. Systematic Review of Advanced AI Methods for Improving Healthcare Data Quality in Post COVID-19 Era. IEEE Rev. Biomed. Eng. 2023, 16, 53–69. [Google Scholar] [CrossRef]

- Zhu, J.-Y.; Park, T.; Isola, P.; Efros, A.A. Unpaired Image-to-Image Translation Using Cycle-Consistent Adversarial Networks. In Proceedings of the 2017 IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 2242–2251. [Google Scholar]

- Dlamini, Z.; Francies, F.Z.; Hull, R.; Marima, R. Artificial intelligence (AI) and big data in cancer and precision oncology. Comput. Struct. Biotechnol. J. 2020, 18, 2300–2311. [Google Scholar] [CrossRef]

- Wang, H.; Fu, T.; Du, Y.; Gao, W.; Huang, K.; Liu, Z.; Chandak, P.; Liu, S.; Van Katwyk, P.; Deac, A.; et al. Scientific discovery in the age of artificial intelligence. Nature 2023, 620, 47–60. [Google Scholar] [CrossRef] [PubMed]

- Esteva, A.; Robicquet, A.; Ramsundar, B.; Kuleshov, V.; DePristo, M.; Chou, K.; Cui, C.; Corrado, G.; Thrun, S.; Dean, J. A guide to deep learning in healthcare. Nat. Med. 2019, 25, 24–29. [Google Scholar] [CrossRef]

- Hossain, E.; Rana, R.; Higgins, N.; Soar, J.; Barua, P.D.; Pisani, A.R.; Turner, K. Natural Language Processing in Electronic Health Records in relation to healthcare decision-making: A systematic review. Comput. Biol. Med. 2023, 155, 106649. [Google Scholar] [CrossRef] [PubMed]

- Machine Learning Classifies Cancer. Available online: https://www.nature.com/articles/d41586-018-02881-7 (accessed on 11 November 2023).

- Abul-Husn, N.S.; Kenny, E.E. Personalized Medicine and the Power of Electronic Health Records. Cell 2019, 177, 58–69. [Google Scholar] [CrossRef] [PubMed]

- Chen, H.; Zhang, Y.; Kalra, M.K.; Lin, F.; Chen, Y.; Liao, P.; Zhou, J.; Wang, G. Low-Dose CT With a Residual Encoder-Decoder Convolutional Neural Network. IEEE Trans. Med. Imaging 2017, 36, 2524–2535. [Google Scholar] [CrossRef] [PubMed]

- Esteva, A.; Kuprel, B.; Novoa, R.A.; Ko, J.; Swetter, S.M.; Blau, H.M.; Thrun, S. Dermatologist–level classification of skin cancer with deep neural networks. Nature 2017, 542, 115–118. [Google Scholar] [CrossRef]

- Piette, J.D.; Newman, S.; Krein, S.L.; Marinec, N.; Chen, J.; Williams, D.A.; Edmond, S.N.; Driscoll, M.; LaChappelle, K.M.; Kerns, R.D.; et al. Patient-Centered Pain Care Using Artificial Intelligence and Mobile Health Tools: A Randomized Comparative Effectiveness Trial. JAMA Intern. Med. 2022, 182, 975–983. [Google Scholar] [CrossRef] [PubMed]

- Automated, Machine Learning—Based Alerts Increase Epilepsy Surgery Referrals: A Randomized Controlled Trial—Wissel—2023—Epilepsia—Wiley Online Library. Available online: https://onlinelibrary.wiley.com/doi/10.1111/epi.17629 (accessed on 12 November 2023).

- Jiang, F.; Fu, X.; Kuang, K.; Fan, D. Artificial Intelligence Algorithm-Based Differential Diagnosis of Crohn’s Disease and Ulcerative Colitis by CT Image. Comput. Math. Methods Med. 2022, 2022, 3871994. [Google Scholar] [CrossRef] [PubMed]

- Sundar, R.; Barr Kumarakulasinghe, N.; Huak Chan, Y.; Yoshida, K.; Yoshikawa, T.; Miyagi, Y.; Rino, Y.; Masuda, M.; Guan, J.; Sakamoto, J.; et al. Machine-learning model derived gene signature predictive of paclitaxel survival benefit in gastric cancer: Results from the randomised phase III SAMIT trial. Gut 2022, 71, 676–685. [Google Scholar] [CrossRef]

- Xiao, Q.; Zhang, F.; Xu, L.; Yue, L.; Kon, O.L.; Zhu, Y.; Guo, T. High-throughput proteomics and AI for cancer biomarker discovery. Adv. Drug Deliv. Rev. 2021, 176, 113844. [Google Scholar] [CrossRef]

- Cowie, M.R.; Blomster, J.I.; Curtis, L.H.; Duclaux, S.; Ford, I.; Fritz, F.; Goldman, S.; Janmohamed, S.; Kreuzer, J.; Leenay, M.; et al. Electronic health records to facilitate clinical research. Clin. Res. Cardiol. 2017, 106, 1–9. [Google Scholar] [CrossRef] [PubMed]

- Fu, S.; Lopes, G.S.; Pagali, S.R.; Thorsteinsdottir, B.; LeBrasseur, N.K.; Wen, A.; Liu, H.; Rocca, W.A.; Olson, J.E.; St. Sauver, J.; et al. Ascertainment of Delirium Status Using Natural Language Processing From Electronic Health Records. J. Gerontol. Ser. A 2022, 77, 524–530. [Google Scholar] [CrossRef]

- Monteith, S.; Glenn, T.; Geddes, J.; Whybrow, P.C.; Bauer, M. Commercial Use of Emotion Artificial Intelligence (AI): Implications for Psychiatry. Curr. Psychiatry Rep. 2022, 24, 203–211. [Google Scholar] [CrossRef] [PubMed]

- Gual-Montolio, P.; Jaén, I.; Martínez-Borba, V.; Castilla, D.; Suso-Ribera, C. Using Artificial Intelligence to Enhance Ongoing Psychological Interventions for Emotional Problems in Real- or Close to Real-Time: A Systematic Review. Int. J. Environ. Res. Public Health 2022, 19, 7737. [Google Scholar] [CrossRef]

- Carroll, N.W.; Jones, A.; Burkard, T.; Lulias, C.; Severson, K.; Posa, T. Improving risk stratification using AI and social determinants of health. Am. J. Manag. Care 2022, 28, 582–587. [Google Scholar] [CrossRef]

- Srivani, M.; Murugappan, A.; Mala, T. Cognitive computing technological trends and future research directions in healthcare—A systematic literature review. Artif. Intell. Med. 2023, 138, 102513. [Google Scholar] [CrossRef]

- Rachlin, A. Routine Vaccination Coverage—Worldwide, 2021. MMWR Morb. Mortal. Wkly. Rep. 2022, 71, 1396–1400. [Google Scholar] [CrossRef]

- Chaney, S.C.; Mechael, P. So Many Choices, How Do I Choose? Considerations for Selecting Digital Health Interventions to Support Immunization Confidence and Demand. J. Med. Internet Res. 2023, 25, e47713. [Google Scholar] [CrossRef] [PubMed]

- Chandir, S.; Siddiqi, D.A.; Setayesh, H.; Khan, A.J. Impact of COVID-19 lockdown on routine immunisation in Karachi, Pakistan. Lancet Glob. Health 2020, 8, e1118–e1120. [Google Scholar] [CrossRef]

- Hirani, R.; Noruzi, K.; Iqbal, A.; Hussaini, A.S.; Khan, R.A.; Harutyunyan, A.; Etienne, M.; Tiwari, R.K. A Review of the Past, Present, and Future of the Monkeypox Virus: Challenges, Opportunities, and Lessons from COVID-19 for Global Health Security. Microorganisms 2023, 11, 2713. [Google Scholar] [CrossRef]

- Hirani, R.; Rashid, D.; Lewis, J.; Hosein-Woodley, R.; Issani, A. Monkeypox outbreak in the age of COVID-19: A new global health emergency. Mil. Med. Res. 2022, 9, 55. [Google Scholar] [CrossRef] [PubMed]

- Chopra, M.; Bhutta, Z.; Chang Blanc, D.; Checchi, F.; Gupta, A.; Lemango, E.T.; Levine, O.S.; Lyimo, D.; Nandy, R.; O’Brien, K.L.; et al. Addressing the persistent inequities in immunization coverage. Bull. World Health Organ. 2020, 98, 146–148. [Google Scholar] [CrossRef] [PubMed]

- Atkinson, K.M.; Mithani, S.S.; Bell, C.; Rubens-Augustson, T.; Wilson, K. The digital immunization system of the future: Imagining a patient-centric, interoperable immunization information system. Ther. Adv. Vaccines Immunother. 2020, 8, 2515135520967203. [Google Scholar] [CrossRef] [PubMed]

- Buchan, S.A.; Rosella, L.C.; Finkelstein, M.; Juurlink, D.; Isenor, J.; Marra, F.; Patel, A.; Russell, M.L.; Quach, S.; Waite, N.; et al. Impact of pharmacist administration of influenza vaccines on uptake in Canada. CMAJ Can. Med. Assoc. J. J. Assoc. Medicale Can. 2017, 189, E146–E152. [Google Scholar] [CrossRef] [PubMed]

- Hogue, M.D.; Grabenstein, J.D.; Foster, S.L.; Rothholz, M.C. Pharmacist involvement with immunizations: A decade of professional advancement. J. Am. Pharm. Assoc. 2006, 46, 168–179; quiz 179–182. [Google Scholar] [CrossRef] [PubMed]

- Bello, I.M.; Sylvester, M.; Ferede, M.; Akpan, G.U.; Ayesheshem, A.T.; Mwanza, M.N.; Okiror, S.; Anyuon, A.; Oluseun, O.O. Real-time monitoring of a circulating vaccine-derived poliovirus outbreak immunization campaign using digital health technologies in South Sudan. Pan Afr. Med. J. 2021, 40, 200. [Google Scholar] [CrossRef] [PubMed]

- White, B.M.; Shaban-Nejad, A. Utilization of Digital Health Dashboards in Improving COVID-19 Vaccination Uptake, Accounting for Health Inequities. Stud. Health Technol. Inform. 2022, 295, 499–502. [Google Scholar] [CrossRef] [PubMed]

- Brakefield, W.S.; Ammar, N.; Olusanya, O.A.; Shaban-Nejad, A. An Urban Population Health Observatory System to Support COVID-19 Pandemic Preparedness, Response, and Management: Design and Development Study. JMIR Public Health Surveill. 2021, 7, e28269. [Google Scholar] [CrossRef] [PubMed]

- Brakefield, W.S.; Ammar, N.; Olusanya, O.; Ozdenerol, E.; Thomas, F.; Stewart, A.J.; Johnson, K.C.; Davis, R.L.; Schwartz, D.L.; Shaban-Nejad, A. Implementing an Urban Public Health Observatory for (Near) Real-Time Surveillance for the COVID-19 Pandemic. Stud. Health Technol. Inform. 2020, 275, 22–26. [Google Scholar] [CrossRef]

- Ivanković, D.; Barbazza, E.; Bos, V.; Brito Fernandes, Ó.; Jamieson Gilmore, K.; Jansen, T.; Kara, P.; Larrain, N.; Lu, S.; Meza-Torres, B.; et al. Features Constituting Actionable COVID-19 Dashboards: Descriptive Assessment and Expert Appraisal of 158 Public Web-Based COVID-19 Dashboards. J. Med. Internet Res. 2021, 23, e25682. [Google Scholar] [CrossRef]

- Ryu, S. Telemedicine: Opportunities and Developments in Member States: Report on the Second Global Survey on eHealth 2009 (Global Observatory for eHealth Series, Volume 2). Healthc. Inform. Res. 2012, 18, 153–155. [Google Scholar] [CrossRef]

- FSMB | Search Results. Available online: https://www.fsmb.org/search-results/?q=Guidelines+for+the+Structure+and+Function+of+a++State+Medical+and+Osteopathic+Board+ (accessed on 11 November 2023).

- Dasgupta, A.; Deb, S. Telemedicine: A New Horizon in Public Health in India. Indian J. Community Med. Off. Publ. Indian Assoc. Prev. Soc. Med. 2008, 33, 3–8. [Google Scholar] [CrossRef] [PubMed]

- Institute of Medicine (US) Committee on Evaluating Clinical Applications of Telemedicine. Telemedicine: A Guide to Assessing Telecommunications in Health Care; Field, M.J., Ed.; The National Academies Collection: Reports funded by National Institutes of Health; National Academies Press (US): Washington, DC, USA, 1996. [Google Scholar]

- Bashshur, R.L.; Howell, J.D.; Krupinski, E.A.; Harms, K.M.; Bashshur, N.; Doarn, C.R. The Empirical Foundations of Telemedicine Interventions in Primary Care. Telemed. J. E-Health Off. J. Am. Telemed. Assoc. 2016, 22, 342–375. [Google Scholar] [CrossRef] [PubMed]

- Pires, I.M.; Marques, G.; Garcia, N.M.; Flórez-Revuelta, F.; Ponciano, V.; Oniani, S. A Research on the Classification and Applicability of the Mobile Health Applications. J. Pers. Med. 2020, 10, 11. [Google Scholar] [CrossRef]

- Alvarez, P.; Sianis, A.; Brown, J.; Ali, A.; Briasoulis, A. Chronic disease management in heart failure: Focus on telemedicine and remote monitoring. Rev. Cardiovasc. Med. 2021, 22, 403–413. [Google Scholar] [CrossRef]

- Scott Kruse, C.; Karem, P.; Shifflett, K.; Vegi, L.; Ravi, K.; Brooks, M. Evaluating barriers to adopting telemedicine worldwide: A systematic review. J. Telemed. Telecare 2018, 24, 4–12. [Google Scholar] [CrossRef]

- Hincapié, M.A.; Gallego, J.C.; Gempeler, A.; Piñeros, J.A.; Nasner, D.; Escobar, M.F. Implementation and Usefulness of Telemedicine During the COVID-19 Pandemic: A Scoping Review. J. Prim. Care Community Health 2020, 11, 2150132720980612. [Google Scholar] [CrossRef]

- Demleitner, A.F.; Wolff, A.W.; Erber, J.; Gebhardt, F.; Westenberg, E.; Winkler, A.S.; Kolbe-Busch, S.; Chaberny, I.F.; Lingor, P. Best practice approaches to outpatient management of people living with Parkinson’s disease during the COVID-19 pandemic. J. Neural Transm. Vienna Austria 1996 2022, 129, 1377–1385. [Google Scholar] [CrossRef]

- McDonald-Lopez, K.; Murphy, A.K.; Gould-Werth, A.; Griffin, J.; Bader, M.D.M.; Kovski, N. A Driver in Health Outcomes: Developing Discrete Categories of Transportation Insecurity. Am. J. Epidemiol. 2023, 192, 1854–1863. [Google Scholar] [CrossRef] [PubMed]

- Whited, J.D.; Warshaw, E.M.; Kapur, K.; Edison, K.E.; Thottapurathu, L.; Raju, S.; Cook, B.; Engasser, H.; Pullen, S.; Moritz, T.E.; et al. Clinical course outcomes for store and forward teledermatology versus conventional consultation: A randomized trial. J. Telemed. Telecare 2013, 19, 197–204. [Google Scholar] [CrossRef]

- Mann, D.M.; Chen, J.; Chunara, R.; Testa, P.A.; Nov, O. COVID-19 transforms health care through telemedicine: Evidence from the field. J. Am. Med. Inform. Assoc. 2020, 27, 1132–1135. [Google Scholar] [CrossRef]

- New Amwell Research Finds Telehealth Use Will Accelerate Post-Pandemic. Available online: https://business.amwell.com/about-us/news/press-releases/2020/new-amwell-research-finds-telehealth-use-will-accelerate-post-pandemic (accessed on 11 November 2023).

- Shaver, J. The State of Telehealth Before and After the COVID-19 Pandemic. Prim. Care 2022, 49, 517–530. [Google Scholar] [CrossRef] [PubMed]

- Weiner, J.P.; Bandeian, S.; Hatef, E.; Lans, D.; Liu, A.; Lemke, K.W. In-Person and Telehealth Ambulatory Contacts and Costs in a Large US Insured Cohort Before and During the COVID-19 Pandemic. JAMA Netw. Open 2021, 4, e212618. [Google Scholar] [CrossRef] [PubMed]

- Larson, A.E.; Zahnd, W.E.; Davis, M.M.; Stange, K.C.; Yoon, J.; Heintzman, J.D.; Harvey, S.M. Before and During Pandemic Telemedicine Use: An Analysis of Rural and Urban Safety-Net Clinics. Am. J. Prev. Med. 2022, 63, 1031–1036. [Google Scholar] [CrossRef] [PubMed]

- Office for Civil Rights (OCR). Notification of Enforcement Discretion for Telehealth Remote Communications During the COVID-19 Nationwide Public Health Emergency. Available online: https://www.hhs.gov/hipaa/for-professionals/special-topics/emergency-preparedness/notification-enforcement-discretion-telehealth/index.html (accessed on 11 November 2023).

- Moazzami, B.; Razavi-Khorasani, N.; Dooghaie Moghadam, A.; Farokhi, E.; Rezaei, N. COVID-19 and telemedicine: Immediate action required for maintaining healthcare providers well-being. J. Clin. Virol. Off. Publ. Pan Am. Soc. Clin. Virol. 2020, 126, 104345. [Google Scholar] [CrossRef] [PubMed]

- Schwamm, L.H.; Erskine, A.; Licurse, A. A digital embrace to blunt the curve of COVID19 pandemic. NPJ Digit. Med. 2020, 3, 64. [Google Scholar] [CrossRef] [PubMed]

- Jones, M.S.; Goley, A.L.; Alexander, B.E.; Keller, S.B.; Caldwell, M.M.; Buse, J.B. Inpatient Transition to Virtual Care During COVID-19 Pandemic. Diabetes Technol. Ther. 2020, 22, 444–448. [Google Scholar] [CrossRef]

- Reduce Provider Burnout With Telehealth. Available online: https://letstalkinteractive.com/blog/reduce-provider-burnout-with-telehealth-2 (accessed on 2 December 2023).

- Malouff, T.D.; TerKonda, S.P.; Knight, D.; Abu Dabrh, A.M.; Perlman, A.I.; Munipalli, B.; Dudenkov, D.V.; Heckman, M.G.; White, L.J.; Wert, K.M.; et al. Physician Satisfaction With Telemedicine During the COVID-19 Pandemic: The Mayo Clinic Florida Experience. Mayo Clin. Proc. Innov. Qual. Outcomes 2021, 5, 771–782. [Google Scholar] [CrossRef] [PubMed]

- Chang, B.P.; Heravian, A.; Kessler, D.; Olsen, E. 358 Emergency Physician Tele-medicine Hours Associated With Decreased Reported Burnout Symptoms. Ann. Emerg. Med. 2020, 76, S138–S139. [Google Scholar] [CrossRef]

- How Do Telemedicine Lawsuits Work?—Dyer, Garofalo, Mann & Schultz 2022. Available online: https://ohiotiger.com/how-do-telemedicine-lawsuits-work/ (accessed on 10 October 2023).

- Gorincour, G.; Monneuse, O.; Ben Cheikh, A.; Avondo, J.; Chaillot, P.-F.; Journe, C.; Youssof, E.; Lecomte, J.-C.; Thomson, V. Management of abdominal emergencies in adults using telemedicine and artificial intelligence. J. Visc. Surg. 2021, 158, S26–S31. [Google Scholar] [CrossRef]

- Topol, E.J. High-performance medicine: The convergence of human and artificial intelligence. Nat. Med. 2019, 25, 44–56. [Google Scholar] [CrossRef] [PubMed]

- Huang, J.A.; Hartanti, I.R.; Colin, M.N.; Pitaloka, D.A. Telemedicine and artificial intelligence to support self-isolation of COVID-19 patients: Recent updates and challenges. Digit. Health 2022, 8, 20552076221100634. [Google Scholar] [CrossRef]

- Tukur, M.; Saad, G.; AlShagathrh, F.M.; Househ, M.; Agus, M. Telehealth interventions during COVID-19 pandemic: A scoping review of applications, challenges, privacy and security issues. BMJ Health Care Inform. 2023, 30, e100676. [Google Scholar] [CrossRef] [PubMed]

- Chamola, V.; Hassija, V.; Gupta, V.; Guizani, M. A Comprehensive Review of the COVID-19 Pandemic and the Role of IoT, Drones, AI, Blockchain, and 5G in Managing its Impact. IEEE Access 2020, 8, 90225–90265. [Google Scholar] [CrossRef]

- Shuja, J.; Alanazi, E.; Alasmary, W.; Alashaikh, A. COVID-19 open source data sets: A comprehensive survey. Appl. Intell. Dordr. Neth. 2021, 51, 1296–1325. [Google Scholar] [CrossRef] [PubMed]

- Liu, X.; Faes, L.; Kale, A.U.; Wagner, S.K.; Fu, D.J.; Bruynseels, A.; Mahendiran, T.; Moraes, G.; Shamdas, M.; Kern, C.; et al. A comparison of deep learning performance against health-care professionals in detecting diseases from medical imaging: A systematic review and meta-analysis. Lancet Digit. Health 2019, 1, e271–e297. [Google Scholar] [CrossRef] [PubMed]

- Liu, Y.; Jain, A.; Eng, C.; Way, D.H.; Lee, K.; Bui, P.; Kanada, K.; de Oliveira Marinho, G.; Gallegos, J.; Gabriele, S.; et al. A deep learning system for differential diagnosis of skin diseases. Nat. Med. 2020, 26, 900–908. [Google Scholar] [CrossRef] [PubMed]

- Haenssle, H.A.; Fink, C.; Toberer, F.; Winkler, J.; Stolz, W.; Deinlein, T.; Hofmann-Wellenhof, R.; Lallas, A.; Emmert, S.; Buhl, T.; et al. Man against machine reloaded: Performance of a market-approved convolutional neural network in classifying a broad spectrum of skin lesions in comparison with 96 dermatologists working under less artificial conditions. Ann. Oncol. Off. J. Eur. Soc. Med. Oncol. 2020, 31, 137–143. [Google Scholar] [CrossRef] [PubMed]

- Field, N.C.; Entezami, P.; Boulos, A.S.; Dalfino, J.; Paul, A.R. Artificial intelligence improves transfer times and ischemic stroke workflow metrics. Interv. Neuroradiol. J. Peritherapeutic Neuroradiol. Surg. Proced. Relat. Neurosci. 2023, 15910199231209080. [Google Scholar] [CrossRef]

- Entezami, P.; Boulos, A.; Paul, A.; Nourollahzadeh, E.; Dalfino, J. Contrast enhancement of chronic subdural hematomas after embolization of the middle meningeal artery. Interv. Neuroradiol. J. Peritherapeutic Neuroradiol. Surg. Proced. Relat. Neurosci. 2019, 25, 596–600. [Google Scholar] [CrossRef]

- Alghamdi, M.M.M.; Dahab, M.Y.H. Diagnosis of COVID-19 from X-ray images using deep learning techniques. Cogent Eng. 2022, 9, 2124635. [Google Scholar] [CrossRef]

- Labovitz, D.L.; Shafner, L.; Reyes Gil, M.; Virmani, D.; Hanina, A. Using Artificial Intelligence to Reduce the Risk of Nonadherence in Patients on Anticoagulation Therapy. Stroke 2017, 48, 1416–1419. [Google Scholar] [CrossRef] [PubMed]

- Hu, K.; Hu, K. ChatGPT sets record for fastest-growing user base—Analyst note. Reuters 2023. [Google Scholar]

- Hirani, R.; Farabi, B.; Marmon, S. Experimenting with ChatGPT: Concerns for academic medicine. J. Am. Acad. Dermatol. 2023, 89, e127–e129. [Google Scholar] [CrossRef] [PubMed]

- Setiawan, R.; Iskandar, R.; Madjid, N.; Kusumawardani, R. Artificial Intelligence-Based Chatbot to Support Public Health Services in Indonesia. Int. J. Interact. Mob. Technol. IJIM 2023, 17, 36–47. [Google Scholar] [CrossRef]

- Vasileiou, M.V.; Maglogiannis, I.G. The Health ChatBots in Telemedicine: Intelligent Dialog System for Remote Support. J. Healthc. Eng. 2022, 2022, e4876512. [Google Scholar] [CrossRef]

- Caruccio, L.; Cirillo, S.; Polese, G.; Solimando, G.; Sundaramurthy, S.; Tortora, G. Can ChatGPT provide intelligent diagnoses? A comparative study between predictive models and ChatGPT to define a new medical diagnostic bot. Expert Syst. Appl. 2024, 235, 121186. [Google Scholar] [CrossRef]

- Faris, H.; Habib, M.; Faris, M.; Elayan, H.; Alomari, A. An intelligent multimodal medical diagnosis system based on patients’ medical questions and structured symptoms for telemedicine. Inform. Med. Unlocked 2021, 23, 100513. [Google Scholar] [CrossRef]

- Zhong, Q.-Y.; Karlson, E.W.; Gelaye, B.; Finan, S.; Avillach, P.; Smoller, J.W.; Cai, T.; Williams, M.A. Screening pregnant women for suicidal behavior in electronic medical records: Diagnostic codes vs. clinical notes processed by natural language processing. BMC Med. Inform. Decis. Mak. 2018, 18, 30. [Google Scholar] [CrossRef]

- Xue, D.; Frisch, A.; He, D. Differential Diagnosis of Heart Disease in Emergency Departments Using Decision Tree and Medical Knowledge. In Proceedings of the Heterogeneous Data Management, Polystores, and Analytics for Healthcare, Los Angeles, CA, USA, 30 August 2019; Gadepally, V., Mattson, T., Stonebraker, M., Wang, F., Luo, G., Laing, Y., Dubovitskaya, A., Eds.; Springer International Publishing: Cham, Switzerland, 2019; pp. 225–236. [Google Scholar]

- Küpper, C.; Stroth, S.; Wolff, N.; Hauck, F.; Kliewer, N.; Schad-Hansjosten, T.; Kamp-Becker, I.; Poustka, L.; Roessner, V.; Schultebraucks, K.; et al. Identifying predictive features of autism spectrum disorders in a clinical sample of adolescents and adults using machine learning. Sci. Rep. 2020, 10, 4805. [Google Scholar] [CrossRef]

- Aydin, E.; Türkmen, İ.U.; Namli, G.; Öztürk, Ç.; Esen, A.B.; Eray, Y.N.; Eroğlu, E.; Akova, F. A novel and simple machine learning algorithm for preoperative diagnosis of acute appendicitis in children. Pediatr. Surg. Int. 2020, 36, 735–742. [Google Scholar] [CrossRef]

- Poletti, S.; Vai, B.; Mazza, M.G.; Zanardi, R.; Lorenzi, C.; Calesella, F.; Cazzetta, S.; Branchi, I.; Colombo, C.; Furlan, R.; et al. A peripheral inflammatory signature discriminates bipolar from unipolar depression: A machine learning approach. Prog. Neuropsychopharmacol. Biol. Psychiatry 2021, 105, 110136. [Google Scholar] [CrossRef] [PubMed]

- Oktay, A.B.; Kocer, A. Differential diagnosis of Parkinson and essential tremor with convolutional LSTM networks. Biomed. Signal Process. Control 2020, 56, 101683. [Google Scholar] [CrossRef]

- Nuthakki, S.; Neela, S.; Gichoya, J.W.; Purkayastha, S. Natural language processing of MIMIC-III clinical notes for identifying diagnosis and procedures with neural networks 2019. arXiv 2019, arXiv:1912.12397. [Google Scholar] [CrossRef]

- Atutxa, A.; de Ilarraza, A.D.; Gojenola, K.; Oronoz, M.; Perez-de-Viñaspre, O. Interpretable deep learning to map diagnostic texts to ICD-10 codes. Int. J. Med. Inf. 2019, 129, 49–59. [Google Scholar] [CrossRef] [PubMed]

- Guo, D.; Li, M.; Yu, Y.; Li, Y.; Duan, G.; Wu, F.-X.; Wang, J. Disease Inference with Symptom Extraction and Bidirectional Recurrent Neural Network. In Proceedings of the 2018 IEEE International Conference on Bioinformatics and Biomedicine (BIBM), Madrid, Spain, 3–6 December 2018; pp. 864–868. [Google Scholar]

- Kalra, S.; Li, L.; Tizhoosh, H.R. Automatic Classification of Pathology Reports using TF-IDF Features 2019. arXiv 2019, arXiv:1903.07406. [Google Scholar] [CrossRef]

- McKillop, M.; South, B.R.; Preininger, A.; Mason, M.; Jackson, G.P. Leveraging conversational technology to answer common COVID-19 questions. J. Am. Med. Inform. Assoc. 2021, 28, 850–855. [Google Scholar] [CrossRef] [PubMed]

- Wilson, L.; Marasoiu, M. The Development and Use of Chatbots in Public Health: Scoping Review. JMIR Hum. Factors 2022, 9, e35882. [Google Scholar] [CrossRef] [PubMed]

- Aggarwal, A.; Tam, C.C.; Wu, D.; Li, X.; Qiao, S. Artificial Intelligence–Based Chatbots for Promoting Health Behavioral Changes: Systematic Review. J. Med. Internet Res. 2023, 25, e40789. [Google Scholar] [CrossRef]

- Nakhleh, A.; Spitzer, S.; Shehadeh, N. ChatGPT’s Response to the Diabetes Knowledge Questionnaire: Implications for Diabetes Education. Diabetes Technol. Ther. 2023, 25, 571–573. [Google Scholar] [CrossRef]

- Magnani, J.W.; Mujahid, M.S.; Aronow, H.D.; Cené, C.W.; Dickson, V.V.; Havranek, E.; Morgenstern, L.B.; Paasche-Orlow, M.K.; Pollak, A.; Willey, J.Z.; et al. Health Literacy and Cardiovascular Disease: Fundamental Relevance to Primary and Secondary Prevention: A Scientific Statement From the American Heart Association. Circulation 2018, 138, e48–e74. [Google Scholar] [CrossRef] [PubMed]

- Fitzpatrick, K.K.; Darcy, A.; Vierhile, M. Delivering Cognitive Behavior Therapy to Young Adults With Symptoms of Depression and Anxiety Using a Fully Automated Conversational Agent (Woebot): A Randomized Controlled Trial. JMIR Ment. Health 2017, 4, e7785. [Google Scholar] [CrossRef]

- Maeda, E.; Miyata, A.; Boivin, J.; Nomura, K.; Kumazawa, Y.; Shirasawa, H.; Saito, H.; Terada, Y. Promoting fertility awareness and preconception health using a chatbot: A randomized controlled trial. Reprod. Biomed. Online 2020, 41, 1133–1143. [Google Scholar] [CrossRef] [PubMed]

- Prochaska, J.J.; Vogel, E.A.; Chieng, A.; Kendra, M.; Baiocchi, M.; Pajarito, S.; Robinson, A. A Therapeutic Relational Agent for Reducing Problematic Substance Use (Woebot): Development and Usability Study. J. Med. Internet Res. 2021, 23, e24850. [Google Scholar] [CrossRef] [PubMed]

- Suharwardy, S.; Ramachandran, M.; Leonard, S.A.; Gunaseelan, A.; Lyell, D.J.; Darcy, A.; Robinson, A.; Judy, A. Feasibility and impact of a mental health chatbot on postpartum mental health: A randomized controlled trial. AJOG Glob. Rep. 2023, 3, 100165. [Google Scholar] [CrossRef] [PubMed]

- Beaudry, J.; Consigli, A.; Clark, C.; Robinson, K.J. Getting Ready for Adult Healthcare: Designing a Chatbot to Coach Adolescents with Special Health Needs Through the Transitions of Care. J. Pediatr. Nurs. Nurs. Care Child. Fam. 2019, 49, 85–91. [Google Scholar] [CrossRef] [PubMed]

- Giroux, I.; Goulet, A.; Mercier, J.; Jacques, C.; Bouchard, S. Online and Mobile Interventions for Problem Gambling, Alcohol, and Drugs: A Systematic Review. Front. Psychol. 2017, 8, 954. [Google Scholar] [CrossRef] [PubMed]

- Alarifi, M.; Patrick, T.; Jabour, A.; Wu, M.; Luo, J. Understanding patient needs and gaps in radiology reports through online discussion forum analysis. Insights Imaging 2021, 12, 50. [Google Scholar] [CrossRef] [PubMed]

- Jeblick, K.; Schachtner, B.; Dexl, J.; Mittermeier, A.; Stüber, A.T.; Topalis, J.; Weber, T.; Wesp, P.; Sabel, B.O.; Ricke, J.; et al. ChatGPT makes medicine easy to swallow: An exploratory case study on simplified radiology reports. Eur. Radiol. 2023. [Google Scholar] [CrossRef]

- Comparative Performance of ChatGPT and Bard in a Text-Based Radiology Knowledge Assessment—Nikhil S. Patil, Ryan S. Huang, Christian B. van der Pol, Natasha Larocque. 2023. Available online: https://journals.sagepub.com/doi/10.1177/08465371231193716 (accessed on 13 November 2023).

- Fijačko, N.; Prosen, G.; Abella, B.S.; Metličar, Š.; Štiglic, G. Can novel multimodal chatbots such as Bing Chat Enterprise, ChatGPT-4 Pro, and Google Bard correctly interpret electrocardiogram images? Resuscitation 2023, 193, 110009. [Google Scholar] [CrossRef]

- Patil, N.S.; Huang, R.S.; van der Pol, C.B.; Larocque, N. Comparative Performance of ChatGPT and Bard in a Text-Based Radiology Knowledge Assessment. Can. Assoc. Radiol. J. 2023, 08465371231193716. [Google Scholar] [CrossRef] [PubMed]

- Mese, I. The Impact of Artificial Intelligence on Radiology Education in the Wake of Coronavirus Disease 2019. Korean J. Radiol. 2023, 24, 478–479. [Google Scholar] [CrossRef] [PubMed]

- Gan, R.K.; Ogbodo, J.C.; Wee, Y.Z.; Gan, A.Z.; González, P.A. Performance of Google bard and ChatGPT in mass casualty incidents triage. Am. J. Emerg. Med. 2023, 75, 72–78. [Google Scholar] [CrossRef] [PubMed]

- Cheong, R.C.T.; Unadkat, S.; Mcneillis, V.; Williamson, A.; Joseph, J.; Randhawa, P.; Andrews, P.; Paleri, V. Artificial intelligence chatbots as sources of patient education material for obstructive sleep apnoea: ChatGPT versus Google Bard. Eur. Arch. Otorhinolaryngol. 2023, 281, 985–993. [Google Scholar] [CrossRef]

- Roll, I.; Wylie, R. Evolution and Revolution in Artificial Intelligence in Education. Int. J. Artif. Intell. Educ. 2016, 26, 582–599. [Google Scholar] [CrossRef]

- Paranjape, K.; Schinkel, M.; Panday, R.N.; Car, J.; Nanayakkara, P. Introducing Artificial Intelligence Training in Medical Education. JMIR Med. Educ. 2019, 5, e16048. [Google Scholar] [CrossRef] [PubMed]

- Tushar Garg Artificial Intelligence in Medical Education. Am. J. Med. 2020, 133, e68. [CrossRef] [PubMed]

- Masters, K. Artificial intelligence in medical education. Med. Teach. 2019, 41, 976–980. [Google Scholar] [CrossRef]

- Rampton, V.; Mittelman, M.; Goldhahn, J. Implications of artificial intelligence for medical education. Lancet Digit. Health 2020, 2, e111–e112. [Google Scholar] [CrossRef]

- Hattie, J.; Timperley, H. The Power of Feedback. Rev. Educ. Res. 2007, 77, 81–112. [Google Scholar] [CrossRef]

- Majumder, A.A.; D’Souza, U.; Rahman, S. Trends in medical education: Challenges and directions for need-based reforms of medical training in South-East Asia. Indian J. Med. Sci. 2004, 58, 369–380. [Google Scholar] [PubMed]

- Majumder, M.A.A.; Sa, B.; Alateeq, F.A.; Rahman, S. Teaching and Assessing Critical Thinking and Clinical Reasoning Skills in Medical Education. In Handbook of Research on Critical Thinking and Teacher Education Pedagogy; IGI Global: Hershey, PA, USA, 2019; pp. 213–233. ISBN 978-1-5225-7829-1. [Google Scholar]

- Kasalaei, A.; Amini, M.; Nabeiei, P.; Bazrafkan, L.; Mousavinezhad, H. Barriers of Critical Thinking in Medical Students’ Curriculum from the Viewpoint of Medical Education Experts: A Qualitative Study. J. Adv. Med. Educ. Prof. 2020, 8, 72–82. [Google Scholar] [CrossRef]

- Kabanza, F.; Bisson, G.; Charneau, A.; Jang, T.-S. Implementing tutoring strategies into a patient simulator for clinical reasoning learning. Artif. Intell. Med. 2006, 38, 79–96. [Google Scholar] [CrossRef] [PubMed]

- Frize, M.; Frasson, C. Decision-support and intelligent tutoring systems in medical education. Clin. Investig. Med. Med. Clin. Exp. 2000, 23, 266–269. [Google Scholar]

- Prober, C.G.; Heath, C. Lecture halls without lectures--a proposal for medical education. N. Engl. J. Med. 2012, 366, 1657–1659. [Google Scholar] [CrossRef] [PubMed]

- Chen, C.-K. Curriculum Assessment Using Artificial Neural Network and Support Vector Machine Modeling Approaches: A Case Study; IR Applications; Association for Institutional Research: Tallahassee, FL, USA, 2010; Volume 29. [Google Scholar]

- Wartman, S.A.; Combs, C.D. Medical Education Must Move From the Information Age to the Age of Artificial Intelligence. Acad. Med. 2018, 93, 1107. [Google Scholar] [CrossRef] [PubMed]

- Grunhut, J.; Marques, O.; Wyatt, A.T.M. Needs, Challenges, and Applications of Artificial Intelligence in Medical Education Curriculum. JMIR Med. Educ. 2022, 8, e35587. [Google Scholar] [CrossRef] [PubMed]

- Mehta, S.; Vieira, D.; Quintero, S.; Bou Daher, D.; Duka, F.; Franca, H.; Bonilla, J.; Molnar, A.; Molnar, C.; Zerpa, D.; et al. Redefining medical education by boosting curriculum with artificial intelligence knowledge. J. Cardiol. Curr. Res. 2020, 13, 124–129. [Google Scholar] [CrossRef]

- Çalışkan, S.A.; Demir, K.; Karaca, O. Artificial intelligence in medical education curriculum: An e-Delphi study for competencies. PLoS ONE 2022, 17, e0271872. [Google Scholar] [CrossRef] [PubMed]

- Ngo, B.; Nguyen, D.; vanSonnenberg, E. The Cases for and against Artificial Intelligence in the Medical School Curriculum. Radiol. Artif. Intell. 2022, 4, e220074. [Google Scholar] [CrossRef]

- Chan, K.S.; Zary, N. Applications and Challenges of Implementing Artificial Intelligence in Medical Education: Integrative Review. JMIR Med. Educ. 2019, 5, e13930. [Google Scholar] [CrossRef] [PubMed]

- Murdoch, B. Privacy and artificial intelligence: Challenges for protecting health information in a new era. BMC Med. Ethics 2021, 22, 122. [Google Scholar] [CrossRef] [PubMed]

- Healthcare Data Breach Statistics. HIPAA J. Available online: https://www.hipaajournal.com/healthcare-data-breach-statistics/ (accessed on 13 November 2023).

- Gerke, S.; Minssen, T.; Cohen, G. Ethical and legal challenges of artificial intelligence-driven healthcare. Artif. Intell. Healthc. 2020, 295–336. [Google Scholar] [CrossRef]

- Rashid, D.; Hirani, R.; Khessib, S.; Ali, N.; Etienne, M. Unveiling biases of artificial intelligence in healthcare: Navigating the promise and pitfalls. Injury 2024, 55, 111358. [Google Scholar] [CrossRef] [PubMed]

- Obermeyer, Z.; Powers, B.; Vogeli, C.; Mullainathan, S. Dissecting racial bias in an algorithm used to manage the health of populations. Science 2019, 366, 447–453. [Google Scholar] [CrossRef] [PubMed]

- Char, D.S.; Shah, N.H.; Magnus, D. Implementing Machine Learning in Health Care—Addressing Ethical Challenges. N. Engl. J. Med. 2018, 378, 981–983. [Google Scholar] [CrossRef] [PubMed]

- OpenAI; Achiam, J.; Adler, S.; Agarwal, S.; Ahmad, L.; Akkaya, I.; Aleman, F.L.; Almeida, D.; Altenschmidt, J.; Altman, S.; et al. GPT-4 Technical Report 2024. arXiv 2024, arXiv:2303.08774. [Google Scholar]

- Rojas, J.C.; Fahrenbach, J.; Makhni, S.; Cook, S.C.; Williams, J.S.; Umscheid, C.A.; Chin, M.H. Framework for Integrating Equity Into Machine Learning Models: A Case Study. Chest 2022, 161, 1621–1627. [Google Scholar] [CrossRef] [PubMed]

- McCoy, A.; Das, R. Reducing patient mortality, length of stay and readmissions through machine learning-based sepsis prediction in the emergency department, intensive care unit and hospital floor units. BMJ Open Qual. 2017, 6, e000158. [Google Scholar] [CrossRef]

- Usage of ChatGPT by Demographic 2023. Available online: https://www.statista.com/statistics/1384324/chat-gpt-demographic-usage/ (accessed on 13 November 2023).

- Choudhury, A.; Asan, O. Role of Artificial Intelligence in Patient Safety Outcomes: Systematic Literature Review. JMIR Med. Inform. 2020, 8, e18599. [Google Scholar] [CrossRef]

- Festor, P.; Nagendran, M.; Gordon, A.C.; Faisal, A.A.; Komorowski, M. Evaluating the Human Safety Net: Observational study of Physician Responses to Unsafe AI Recommendations in high-fidelity Simulation. medRxiv 2023, 2023, 10.03.23296437. [Google Scholar] [CrossRef]

- Keane, P.A.; Topol, E.J. With an eye to AI and autonomous diagnosis. Npj Digit. Med. 2018, 1, 40. [Google Scholar] [CrossRef] [PubMed]

- Quinn, T.P.; Senadeera, M.; Jacobs, S.; Coghlan, S.; Le, V. Trust and medical AI: The challenges we face and the expertise needed to overcome them. J. Am. Med. Inform. Assoc. 2021, 28, 890–894. [Google Scholar] [CrossRef] [PubMed]

| Study/Case | AI Technique Used | Application Area | Key Findings | Implications for Personalized Medicine |

|---|---|---|---|---|

| Dermatologist-level classification of skin cancer [44] | Convolutional Neural Networks (CNNs) | Dermatology | Classification of skin lesions with a level of competence comparable to dermatologists | Enhances early diagnosis and treatment personalization along with increasing access in areas with low number of dermatologists |

| Patient-centered pain care using AI and mobile health tools [45] | Reinforcement Learning | Chronic pain | AI-driven cognitive behavior therapy (CBT) non-inferior to traditional CBT | Improves individualized treatment approaches and access to care |

| Improved referral process for specialized medical procedures like epilepsy surgery [46] | Natural Language Processing | Epilepsy and Neurological surgery | Detection of high-risk individuals who would benefit from epilepsy surgery | Ensuring timely and appropriate referrals for at-risk patients |

| Delineating ulcerative colitis from Crohn’s Disease [47] | Guided Image Filtering (GIF) | Gastrointestinal diseases | Improved diagnostic accuracy in complex presentation of inflammatory bowel disease | Enhanced diagnostic accuracy leading to targeted treatment strategies |

| Chemotherapy selection for gastric cancer [48] | Random Forest Machine Learning Model | Oncology | Able to predict which subset of patients would benefit from paclitaxel in gastric cancer | Development of predictive biomarkers to guide personalized drug treatment regiment |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Hirani, R.; Noruzi, K.; Khuram, H.; Hussaini, A.S.; Aifuwa, E.I.; Ely, K.E.; Lewis, J.M.; Gabr, A.E.; Smiley, A.; Tiwari, R.K.; et al. Artificial Intelligence and Healthcare: A Journey through History, Present Innovations, and Future Possibilities. Life 2024, 14, 557. https://doi.org/10.3390/life14050557

Hirani R, Noruzi K, Khuram H, Hussaini AS, Aifuwa EI, Ely KE, Lewis JM, Gabr AE, Smiley A, Tiwari RK, et al. Artificial Intelligence and Healthcare: A Journey through History, Present Innovations, and Future Possibilities. Life. 2024; 14(5):557. https://doi.org/10.3390/life14050557

Chicago/Turabian StyleHirani, Rahim, Kaleb Noruzi, Hassan Khuram, Anum S. Hussaini, Esewi Iyobosa Aifuwa, Kencie E. Ely, Joshua M. Lewis, Ahmed E. Gabr, Abbas Smiley, Raj K. Tiwari, and et al. 2024. "Artificial Intelligence and Healthcare: A Journey through History, Present Innovations, and Future Possibilities" Life 14, no. 5: 557. https://doi.org/10.3390/life14050557

APA StyleHirani, R., Noruzi, K., Khuram, H., Hussaini, A. S., Aifuwa, E. I., Ely, K. E., Lewis, J. M., Gabr, A. E., Smiley, A., Tiwari, R. K., & Etienne, M. (2024). Artificial Intelligence and Healthcare: A Journey through History, Present Innovations, and Future Possibilities. Life, 14(5), 557. https://doi.org/10.3390/life14050557