Machine Learning Identification of Obstructive Sleep Apnea Severity through the Patient Clinical Features: A Retrospective Study

Abstract

1. Introduction

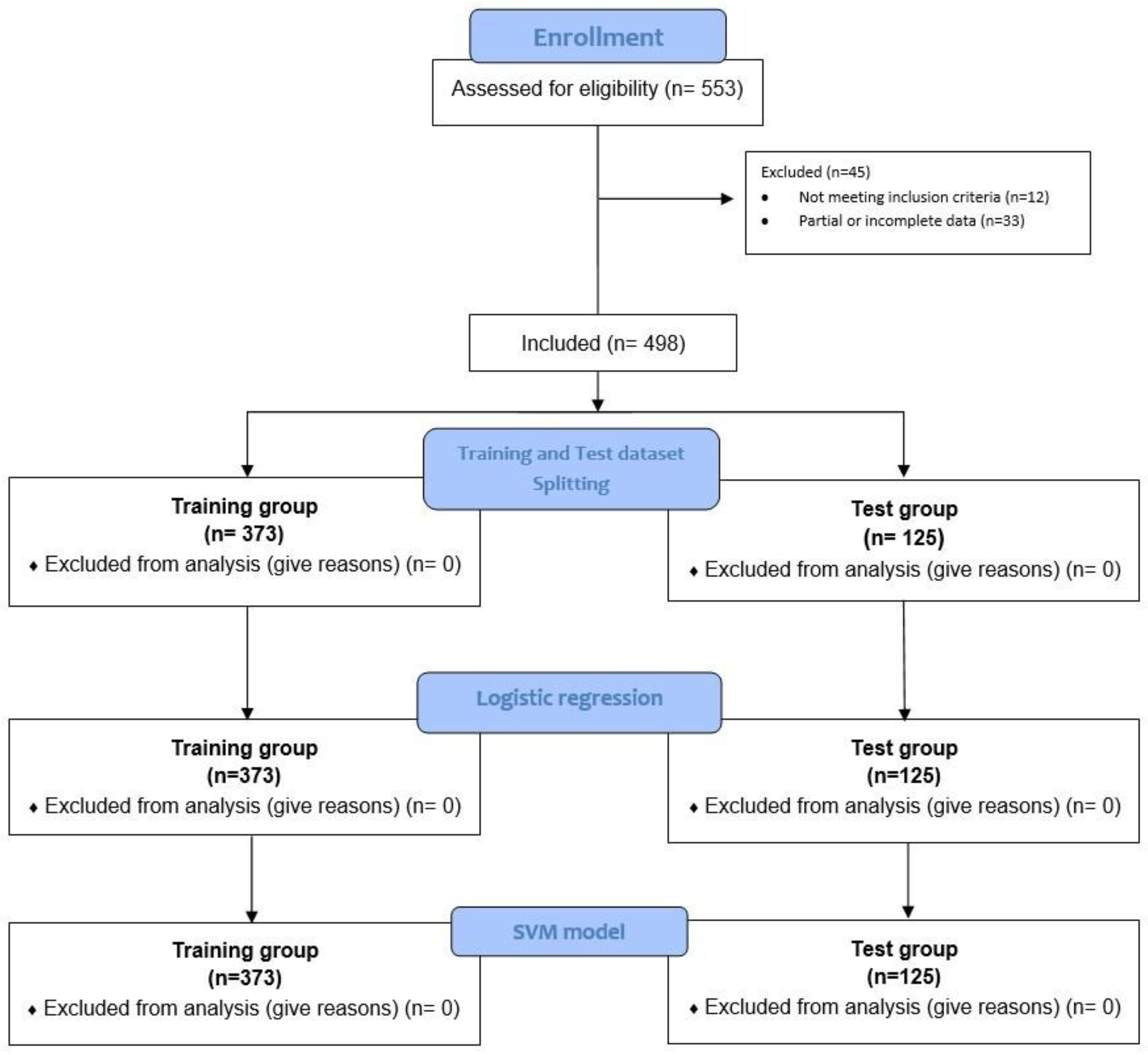

2. Materials and Methods

2.1. Study Design and Data Collection

2.2. Statistical Analysis

2.3. Stratification Process

2.4. Logistic Regression Model

2.5. SVM Model

- εi denotes the slack variables, one for each data point i, which allow certain constraints to be violated.

- C is a tuning parameter that controls the trade-off between penalizing the slack variables εi and maximizing the margin. High values of C penalize the slack variables heavily, leading to a hard margin, whereas low values of C lead to a soft margin: a wider corridor that admits some training points inside it at the expense of misclassifying some of them. In particular, C determines how much constraint violation the learning model tolerates.
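The role of εi and C can be illustrated with a minimal sketch. It assumes the standard linear soft-margin formulation, in which the objective is ½‖w‖² + C·Σεi and each slack is εi = max(0, 1 − yi(w·xi + b)); the study's actual kernel and solver are not stated here, and the function name, the toy data, and the weights below are hypothetical.

```python
def soft_margin_objective(w, b, X, y, C):
    """Return (objective, slacks) for a linear soft-margin SVM.

    Assumes the standard formulation 0.5 * ||w||^2 + C * sum(slack_i),
    with slack_i = max(0, 1 - y_i * (w . x_i + b)).
    """
    slacks = []
    for x_i, y_i in zip(X, y):
        score = sum(w_j * x_j for w_j, x_j in zip(w, x_i)) + b
        # Slack is zero when the point lies on the correct side of the margin.
        slacks.append(max(0.0, 1.0 - y_i * score))
    margin_term = 0.5 * sum(w_j * w_j for w_j in w)
    return margin_term + C * sum(slacks), slacks


# Hypothetical toy data: the third point sits on the wrong side of the boundary.
X = [(2.0, 0.0), (-2.0, 0.0), (0.5, 0.0)]
y = [1, -1, -1]
w, b = (1.0, 0.0), 0.0

for C in (0.1, 10.0):
    objective, slacks = soft_margin_objective(w, b, X, y, C)
    print(f"C={C}: slacks={slacks}, objective={objective:.2f}")
```

With a small C the violating point adds little to the objective, so a wide margin that misclassifies it remains cheap; with a large C the same violation dominates, pushing the optimizer toward a harder margin.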

2.6. Models Test Analysis

2.7. Reporting Completeness of Machine Learning Study

3. Results

3.1. Patients Features

3.2. Logistic Regression Analysis, Full and Reduced Models

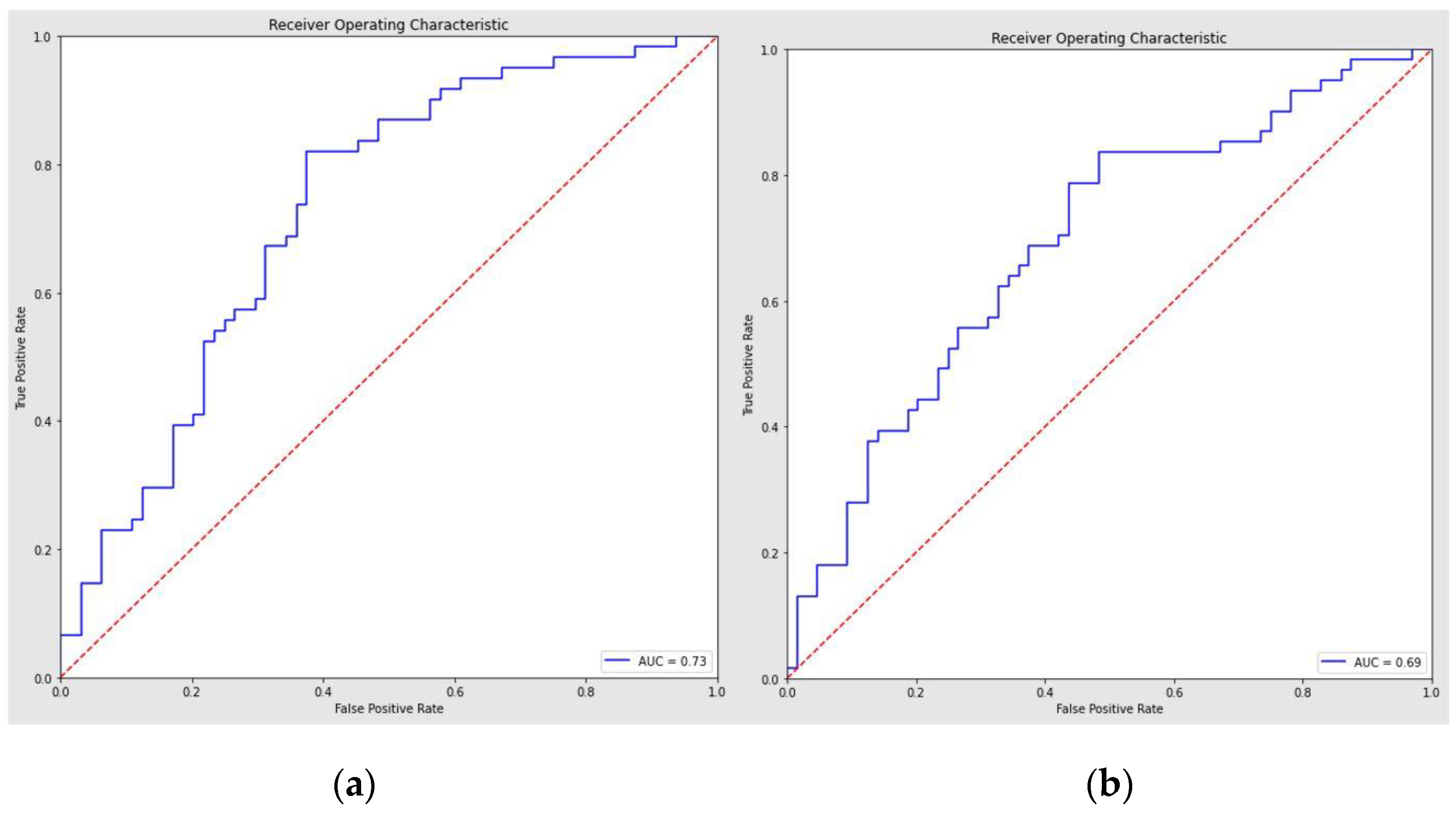

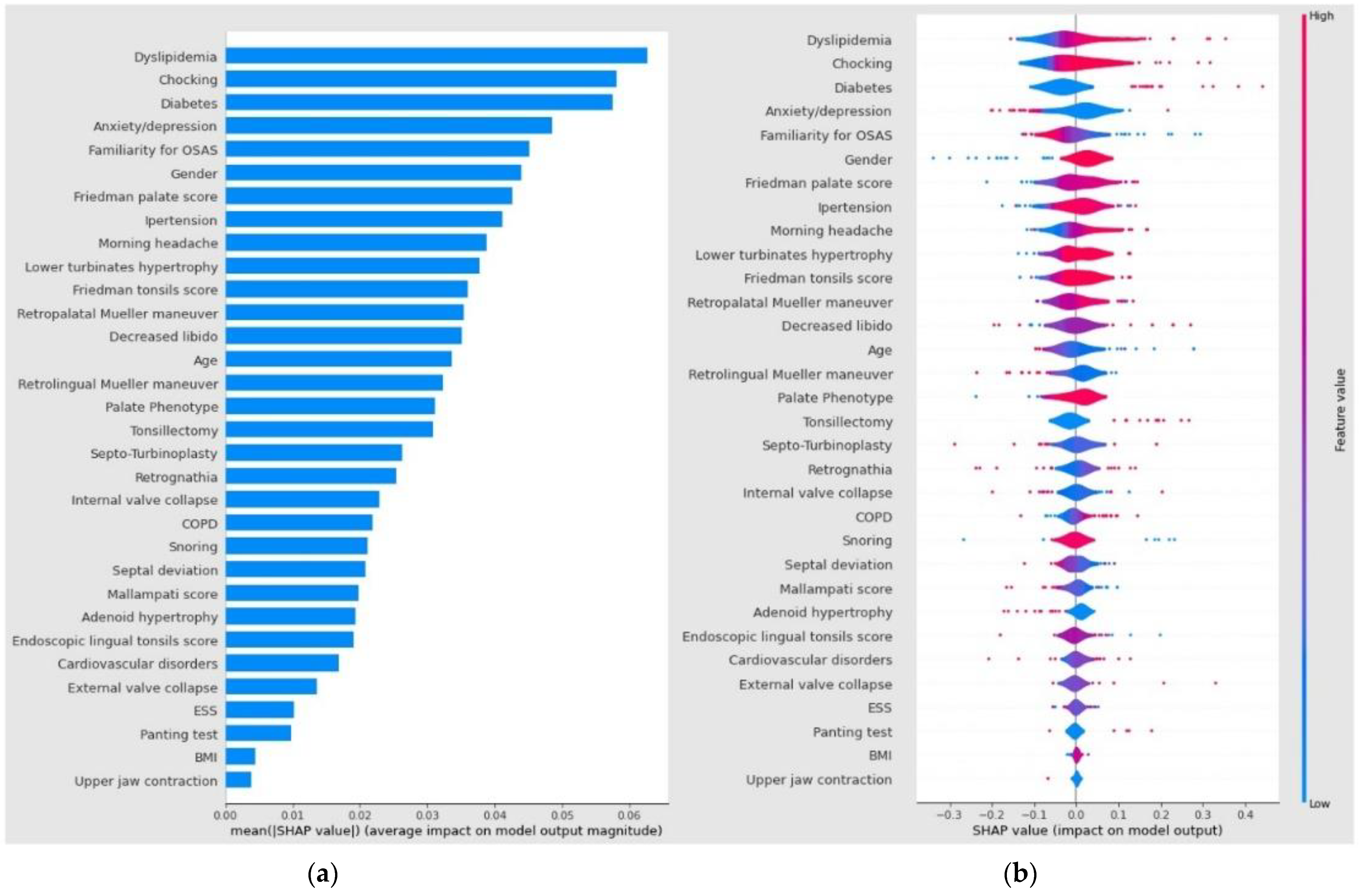

3.3. SVM Model Performance and ROC Curve Analysis

4. Discussion

4.1. Diagnostic and Therapeutic Role

4.2. Diagnostic Application of AI

4.3. OSA Risk Factors and Comorbidities

4.4. Study’s Limitations

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Characteristics | Total (n = 498) | Mild–Moderate OSA (n = 220) | Severe OSA (n = 278) | p-Value |
|---|---|---|---|---|
| Age | 50.96 ± 12.15 | 51.57 ± 12.03 | 50.47 ± 12.20 | 0.315 |
| Gender | | | | |
| male | 427/498 (87.76%) | 179/498 (35.94%) | 248/498 (49.79%) | 0.189 |
| female | 61/498 (12.24%) | 31/498 (6.22%) | 30/498 (6.02%) | |
| AHI | 37.21 ± 23.24 | 17.84 ± 7.50 | 53.96 ± 17.56 | <0.001 |
| ODI | 35.37 ± 24.79 | 17.76 ± 17.82 | 49.38 ± 20.13 | <0.001 |
| Mean SpO2 | 92.33 ± 3.07 | 93.35 ± 2.25 | 91.53 ± 3.38 | <0.001 |
| Lower SpO2 | 75.92 ± 12.13 | 80.05 ± 11.87 | 72.65 ± 11.31 | <0.001 |
| BMI | 27.32 ± 4.02 | 26.38 ± 2.74 | 28.06 ± 4.66 | <0.001 |
| ESS | 7.97 ± 4.92 | 7.26 ± 4.43 | 8.54 ± 5.20 | 0.003 |

| Model | AHI | Precision | Recall | F1-Score | Sensitivity | Specificity | Accuracy | p-Value |
|---|---|---|---|---|---|---|---|---|
| Full Logistic | mild–moderate | 0.71 | 0.62 | 0.67 | | | | |
| | severe | 0.65 | 0.74 | 0.69 | 0.74 | 0.63 | 0.68 | p < 0.001 a |
| Reduced Logistic | mild–moderate | 0.73 | 0.56 | 0.64 | | | | |
| | severe | 0.63 | 0.79 | 0.70 | 0.79 | 0.56 | 0.67 | p < 0.001 b |
| SVM | mild–moderate | 0.93 | 0.80 | 0.86 | | | | |
| | severe | 0.81 | 0.93 | 0.87 | 0.93 | 0.80 | 0.86 | p = 0.541 c |
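The columns of the performance table are related: taking severe OSA as the positive class, sensitivity is the recall of the severe class, and specificity closely tracks the recall of the mild–moderate class. The sketch below shows how such metrics follow from a binary confusion matrix. The function name and the counts are hypothetical and chosen only for illustration; they are not the study's confusion matrix.

```python
def binary_metrics(tp, fn, fp, tn):
    """Compute standard binary classification metrics from confusion-matrix counts.

    tp/fn: positive (here, severe) cases predicted correctly / missed.
    fp/tn: negative (here, mild-moderate) cases misclassified / predicted correctly.
    """
    sensitivity = tp / (tp + fn)   # recall of the positive class
    specificity = tn / (tn + fp)   # recall of the negative class
    precision = tp / (tp + fp)     # positive predictive value
    f1 = 2 * precision * sensitivity / (precision + sensitivity)
    accuracy = (tp + tn) / (tp + fn + fp + tn)
    return {
        "sensitivity": sensitivity,
        "specificity": specificity,
        "precision": precision,
        "f1": f1,
        "accuracy": accuracy,
    }


# Hypothetical counts, for illustration only.
metrics = binary_metrics(tp=40, fn=10, fp=5, tn=45)
for name, value in metrics.items():
    print(f"{name}: {value:.2f}")
```

Reporting per-class precision and recall alongside sensitivity and specificity, as the table does, makes it easier to see whether a model favors one severity class over the other.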

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Maniaci, A.; Riela, P.M.; Iannella, G.; Lechien, J.R.; La Mantia, I.; De Vincentiis, M.; Cammaroto, G.; Calvo-Henriquez, C.; Di Luca, M.; Chiesa Estomba, C.; et al. Machine Learning Identification of Obstructive Sleep Apnea Severity through the Patient Clinical Features: A Retrospective Study. Life 2023, 13, 702. https://doi.org/10.3390/life13030702
