Upper limb movement is central to daily activities; however, about 15 million people worldwide suffer a stroke each year, and 5 million of the survivors experience permanent motor disability and require therapeutic services [1]. Early intervention in rehabilitation therapy has a beneficial effect on patient recovery [2]. A robotic exoskeleton can assist hemiplegic patients in pretraining their rehabilitation exercises, guide the limb along the correct trajectory, and directly provide auxiliary force. Improving the efficiency of the whole rehabilitation process, however, still involves many technical difficulties in exoskeleton robotics, and making exoskeletons work as flexibly as a real limb is now a key issue [3,4,5]. Surface electromyography (sEMG) is a reliable signal source that has been widely applied to rehabilitation assessment [6,7], human intention prediction [8,9,10], human motion classification [11,12,13], prosthesis control [14], and rehabilitation robot control [15,16,17,18,19]. Miao et al. [20] presented a platform robotic system with a subject-specific workspace for bilateral rehabilitation training. Y. Liu et al. [21] presented a novel bilateral rehabilitation system that implements sEMG-based stiffness control to achieve real-time stiffness adjustment based on the user’s dynamic motion. Z. Yang et al. [22] proposed a mirror bilateral neuro-rehabilitation training system with sEMG-based assessment of active patient participation for bilateral isometric force output coordination of the upper limb elbow joint. With the mirror visual feedback of the human–robot interface, hemiplegic patients could perform bilateral isometric lifting tasks with modulated robotic assistance and an intuitive cognition of the motor control of both limbs.

sEMG signals have been shown to perform well for human intention prediction because they immediately reflect muscle activity and movement intention [23]. In general, sEMG-based human intention prediction consists of data preprocessing, data segmentation, feature extraction and classifier design [24]. Action-recognition accuracy, real-time performance and model robustness are all very important factors during rehabilitation training. Regarding action-recognition accuracy, both multi-channel sEMG acquisition and feature extraction methods are available to improve it. Multi-channel sEMG can capture signals with high spatial and temporal resolution and is the most commonly used data acquisition technology in muscle force estimation and limb motion analysis [25]. Although multi-channel sEMG collects abundant information that can improve recognition accuracy, it also suffers from information redundancy and inter-channel crosstalk. Meanwhile, good feature extraction methods can improve performance in practical applications such as muscle activation analysis, muscle strength estimation and motion pattern analysis [26]. However, extracting the most appropriate sEMG features requires deep processing and a large amount of computation. F.Y. Xiao et al. [27] proposed two sEMG encoding and feature extraction methods, GADF-HOG and GASF-HOG, for human gesture recognition. The methods were inspired by image neurons and combine computer graphics processing technology to extract sEMG image features. The results verified the feasibility of drawing on brain science and computer science to extract sEMG features and apply them to gesture recognition. Regarding model robustness, the non-stationary nature of sEMG is the most critical factor affecting the accuracy and reliability of the recognition algorithm, because it increases the uncertainty of motion recognition [28]. Therefore, a model trained for a specific user cannot be applied to another user, because subjects differ in muscle parameters such as muscle thickness and innervation zone location. To reduce the impact of the non-stationary characteristics of sEMG, feature engineering has been applied to improve the robustness of the algorithm [29]. Spanias et al. [30] applied a log-likelihood metric to detect sEMG disturbances and decide whether to use the signal; when the sEMG contained disturbances, the classifier detected them and disregarded the sEMG data. S.H. Qi et al. [31] proposed a deep learning approach for recognizing general composite motions that processes sEMG signals as images, using a well-designed convolutional neural network (CNN) to learn effective filters automatically for feature extraction. The reliability of a recognition algorithm can be ensured by discarding unqualified sEMG signals. However, discarding existing signals is undesirable for the accuracy of the algorithm: although the sEMG distribution differs between subjects, the signal still contains motion information, especially for conventional low-density electrodes.
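As a rough illustration of the segmentation and feature-extraction stages described above, the following sketch splits a single-channel signal into overlapping windows, computes two common time-domain features (mean absolute value and root mean square), and encodes one window as Gramian angular summation and difference fields (GASF and GADF), the image encodings on which the GADF-HOG and GASF-HOG methods are based. It is a minimal sketch in plain Python, not the implementation used in [27]: the function names, the window length, the step size and the toy signal are all assumptions made for the example, and the HOG step is omitted.

```python
import math

def segment(signal, window, step):
    """Split a 1-D signal into overlapping windows of equal length."""
    return [signal[i:i + window]
            for i in range(0, len(signal) - window + 1, step)]

def mav(window):
    """Mean absolute value of one window."""
    return sum(abs(x) for x in window) / len(window)

def rms(window):
    """Root mean square of one window."""
    return math.sqrt(sum(x * x for x in window) / len(window))

def gramian_angular_fields(window):
    """Return (GASF, GADF) for one window.

    The samples are rescaled to [-1, 1], mapped to angles phi = arccos(x),
    and then GASF[i][j] = cos(phi_i + phi_j), GADF[i][j] = sin(phi_i - phi_j).
    """
    lo, hi = min(window), max(window)
    span = (hi - lo) or 1.0  # a constant window would otherwise divide by zero
    scaled = [2.0 * (v - lo) / span - 1.0 for v in window]
    # Clamp to guard against rounding slightly outside the acos domain.
    phi = [math.acos(max(-1.0, min(1.0, v))) for v in scaled]
    gasf = [[math.cos(a + b) for b in phi] for a in phi]
    gadf = [[math.sin(a - b) for b in phi] for a in phi]
    return gasf, gadf

# Toy signal (hypothetical values, not recorded sEMG).
signal = [0.0, 0.5, -0.5, 1.0, -1.0, 0.25, 0.0, 0.75]
windows = segment(signal, window=4, step=2)
features = [(mav(w), rms(w)) for w in windows]
gasf, gadf = gramian_angular_fields(windows[0])
```

Each window yields one feature pair and one pair of square matrices whose side equals the window length; in an image-based pipeline those matrices would be passed on to an image descriptor or a CNN.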

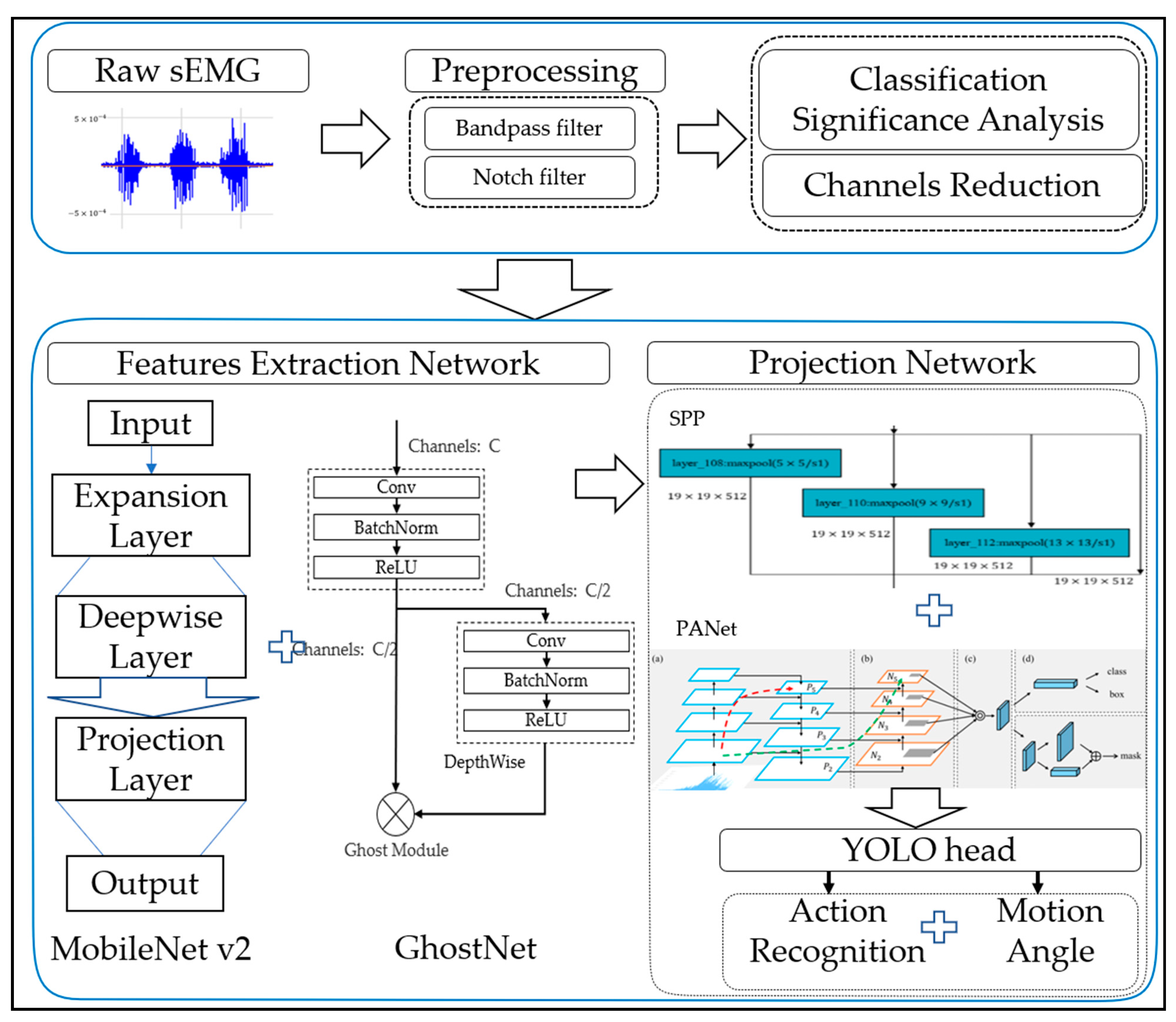

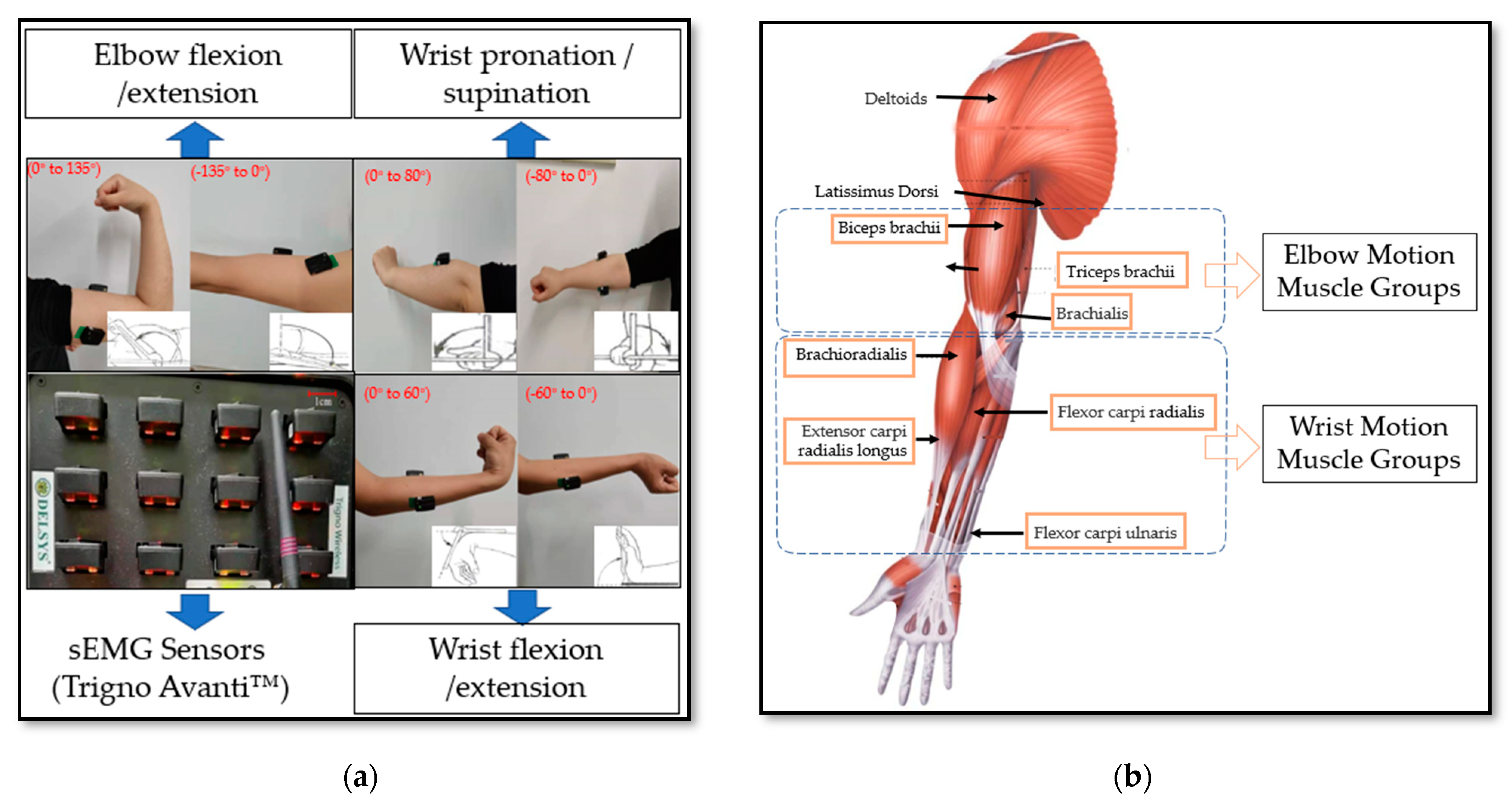

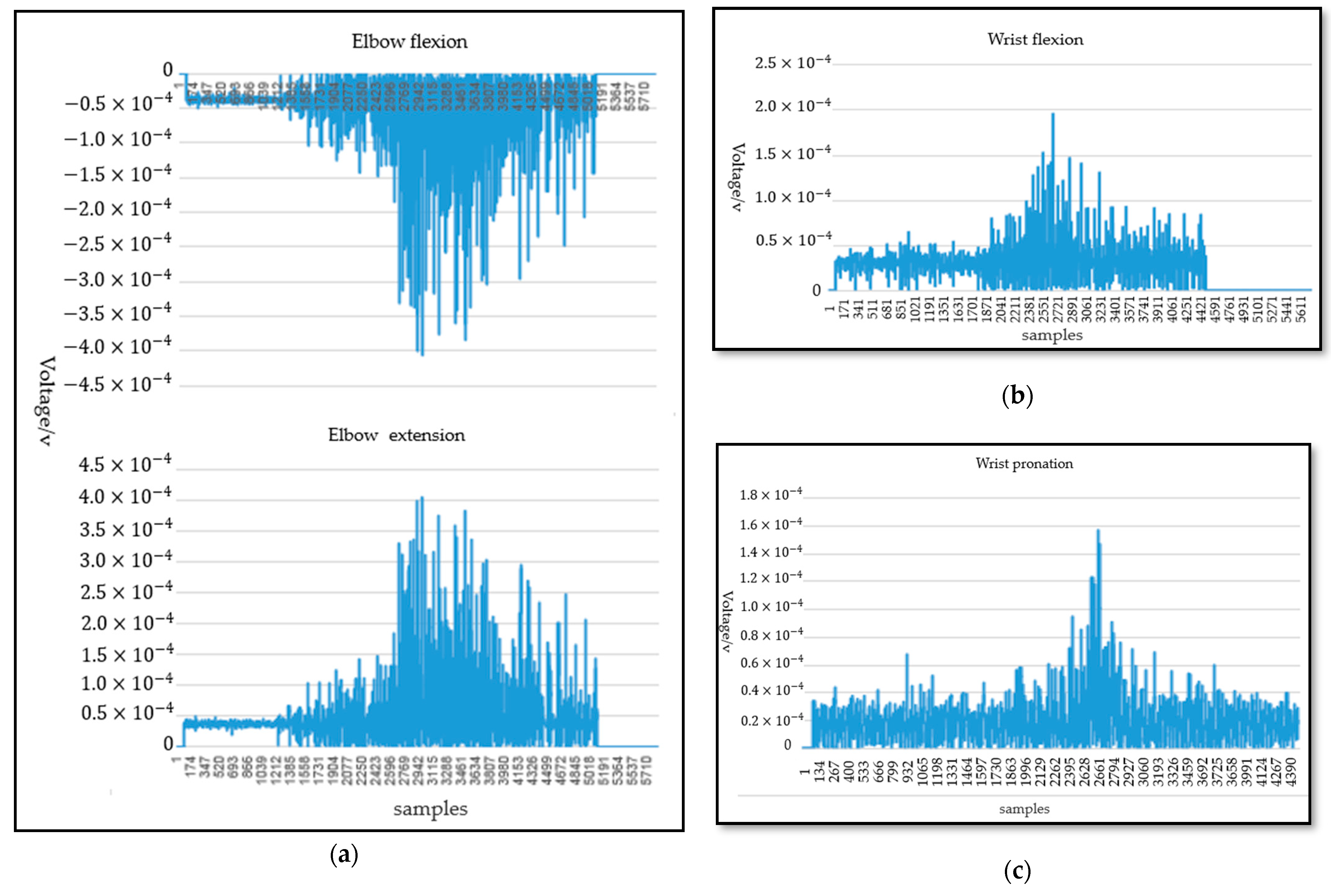

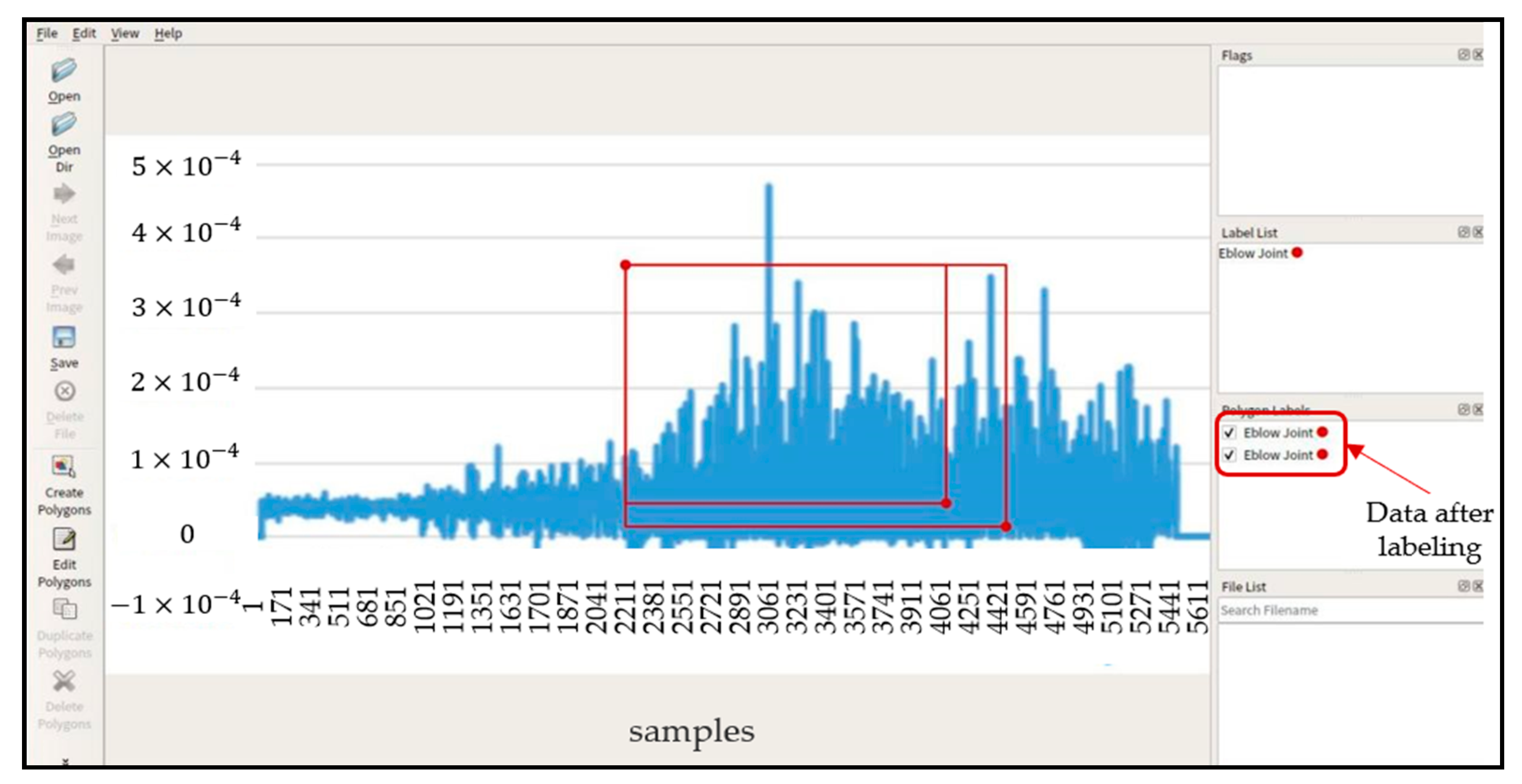

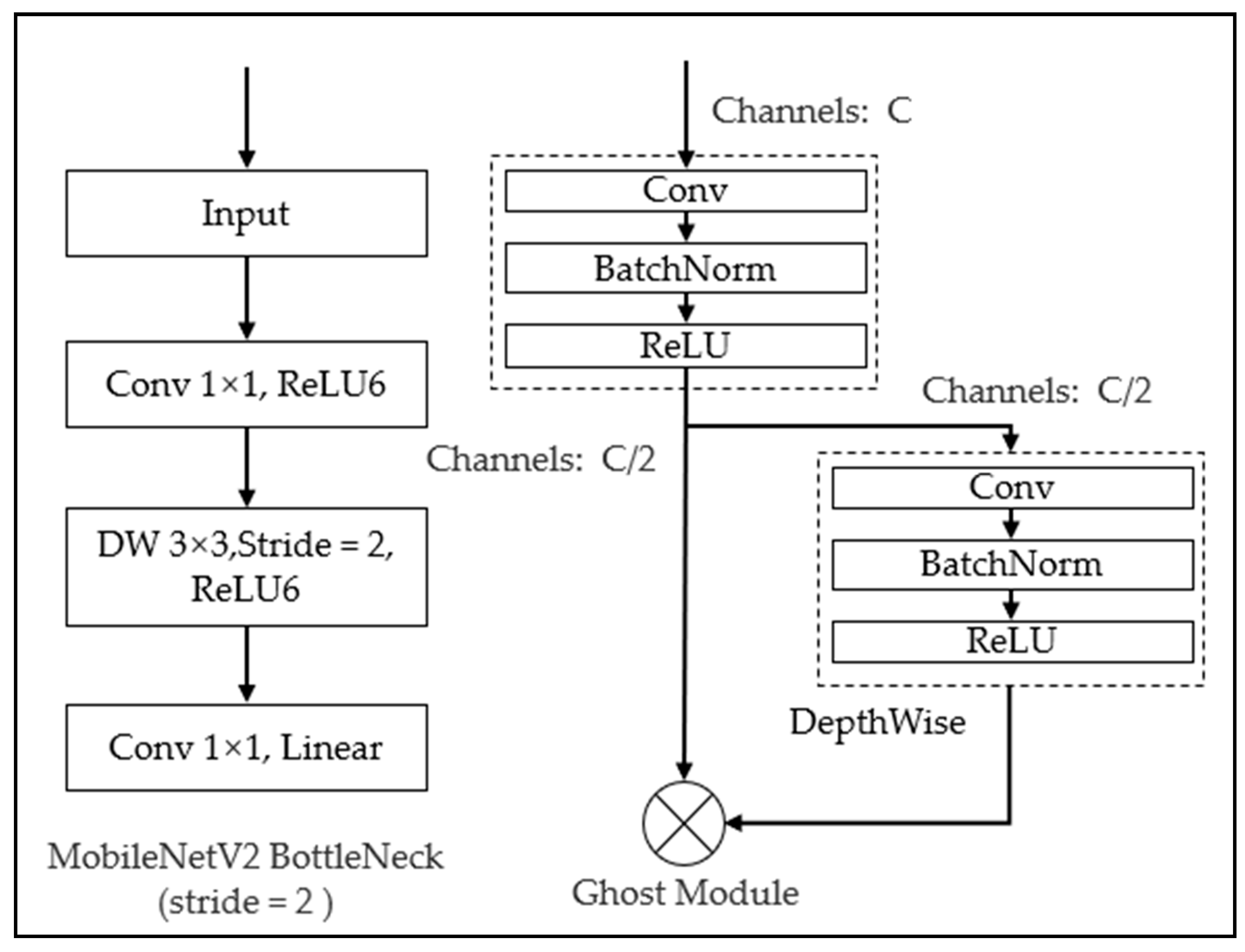

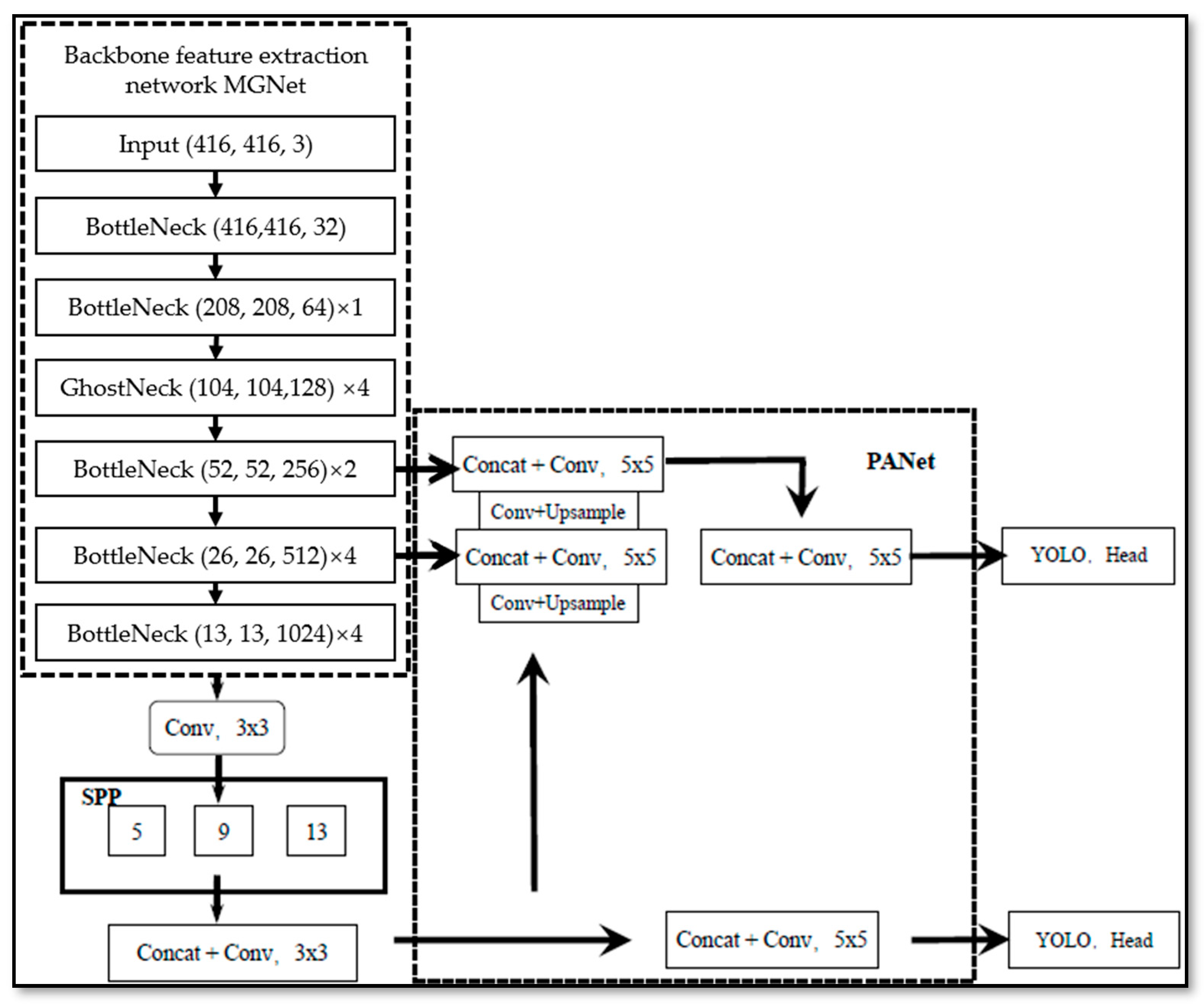

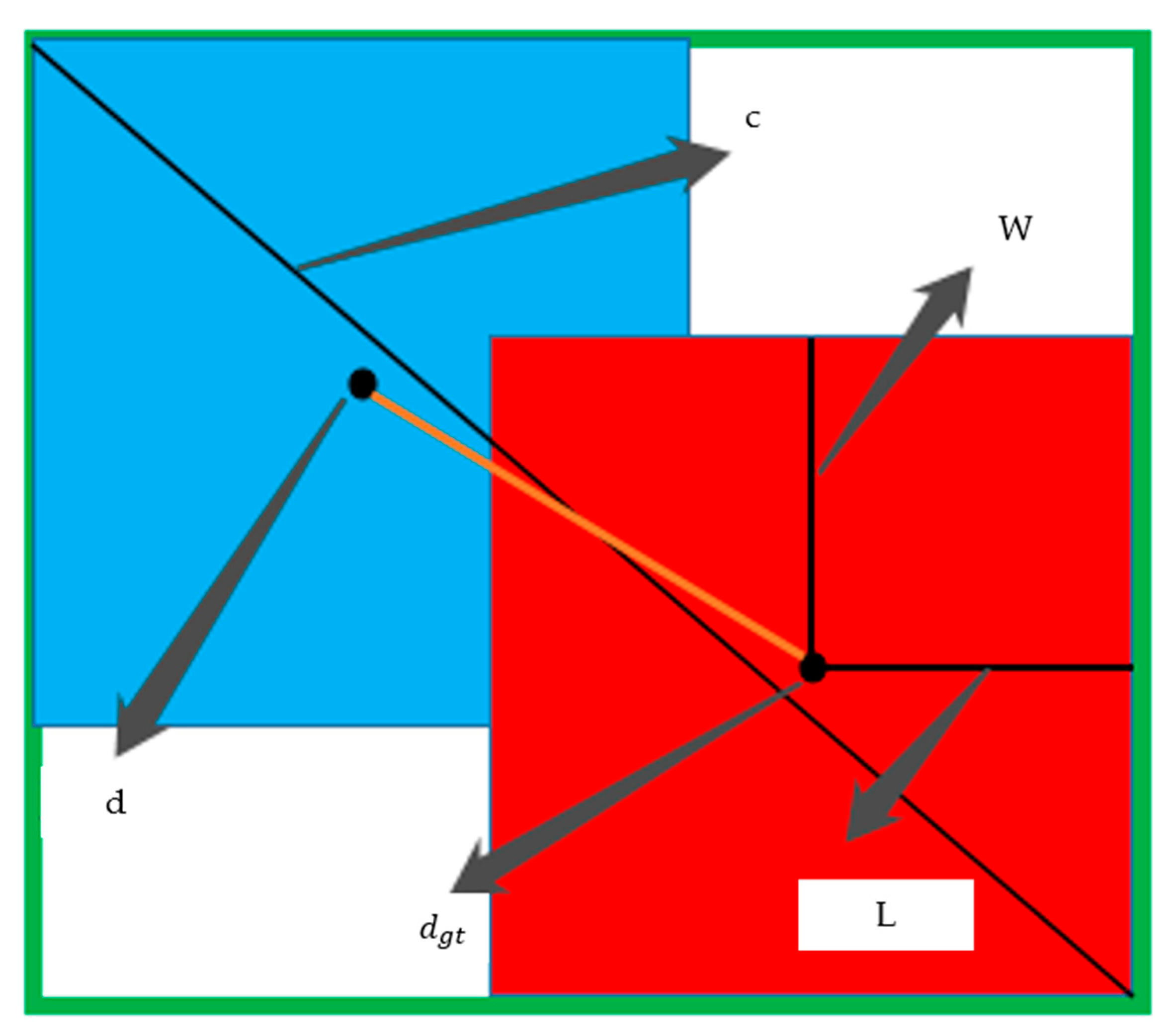

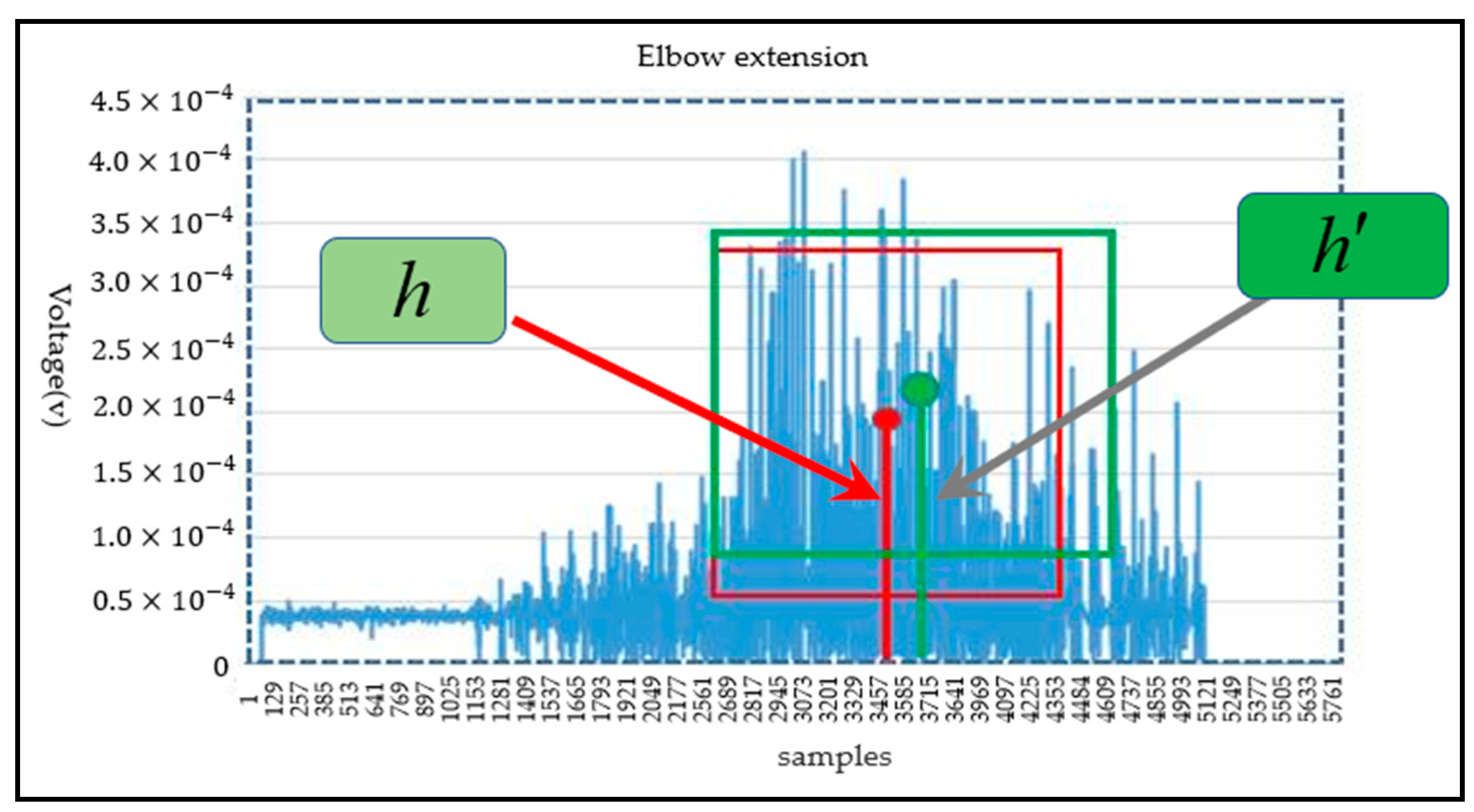

Considering action-recognition accuracy and model robustness, the purpose of this paper is to build a real-time target detection model for upper limb motion classification and motion angle prediction with high accuracy and reliability, especially for conventional low-density electrodes. First, the multi-channel sEMG data were reduced through a classification network model, and the signal channels with high classification accuracy were retained. Next, the retained channels were weighted and summed according to the importance of their effective information to realize channel fusion, and the fused sEMG features were then extracted; a lightweight MobileNet-V2 combined with the Ghost module was used as the backbone network to extract features from sEMG images. Finally, an improved Yolo target detection network was used to perform motion pattern recognition and motion amplitude detection of the wrist and elbow based on the sEMG signal, effectively reducing the impact of the non-stationary sEMG signal and improving the reliability and accuracy of the prediction.
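The channel reduction and fusion steps can be pictured with a small sketch. It assumes, purely for illustration, that each channel has a stand-alone classification accuracy, that channels below a threshold are dropped, and that the fusion weights are the retained accuracies normalized to sum to one; how the weights are actually derived from "the importance of effective information" is not specified here, so the weighting rule, the threshold and all names below are hypothetical.

```python
def select_channels(accuracy, threshold):
    """Return the indices of channels whose accuracy meets the threshold."""
    return [ch for ch, acc in enumerate(accuracy) if acc >= threshold]

def fusion_weights(accuracy, kept):
    """Assumed rule: weights proportional to accuracy, normalized to sum to 1."""
    if not kept:
        raise ValueError("no channel passed the accuracy threshold")
    total = sum(accuracy[ch] for ch in kept)
    return {ch: accuracy[ch] / total for ch in kept}

def fuse(channels, weights):
    """Weighted sum of the retained channels, sample by sample."""
    n_samples = len(channels[0])
    return [sum(w * channels[ch][t] for ch, w in weights.items())
            for t in range(n_samples)]

# Hypothetical per-channel accuracies and a toy 3-channel recording.
accuracy = [0.9, 0.4, 0.6]
channels = [[1.0, 2.0], [5.0, 5.0], [3.0, 4.0]]

kept = select_channels(accuracy, threshold=0.5)   # channels 0 and 2
weights = fusion_weights(accuracy, kept)          # 0.6 and 0.4
fused = fuse(channels, weights)                   # one fused signal
```

The fused signal has the same length as each input channel, so it can be encoded as an image and fed to the backbone network in the same way as a single-channel recording.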