A Self-triggered Position Based Visual Servoing Model Predictive Control Scheme for Underwater Robotic Vehicles

Abstract

1. Introduction

1.1. The Self-Triggered Control Framework

1.2. Contributions

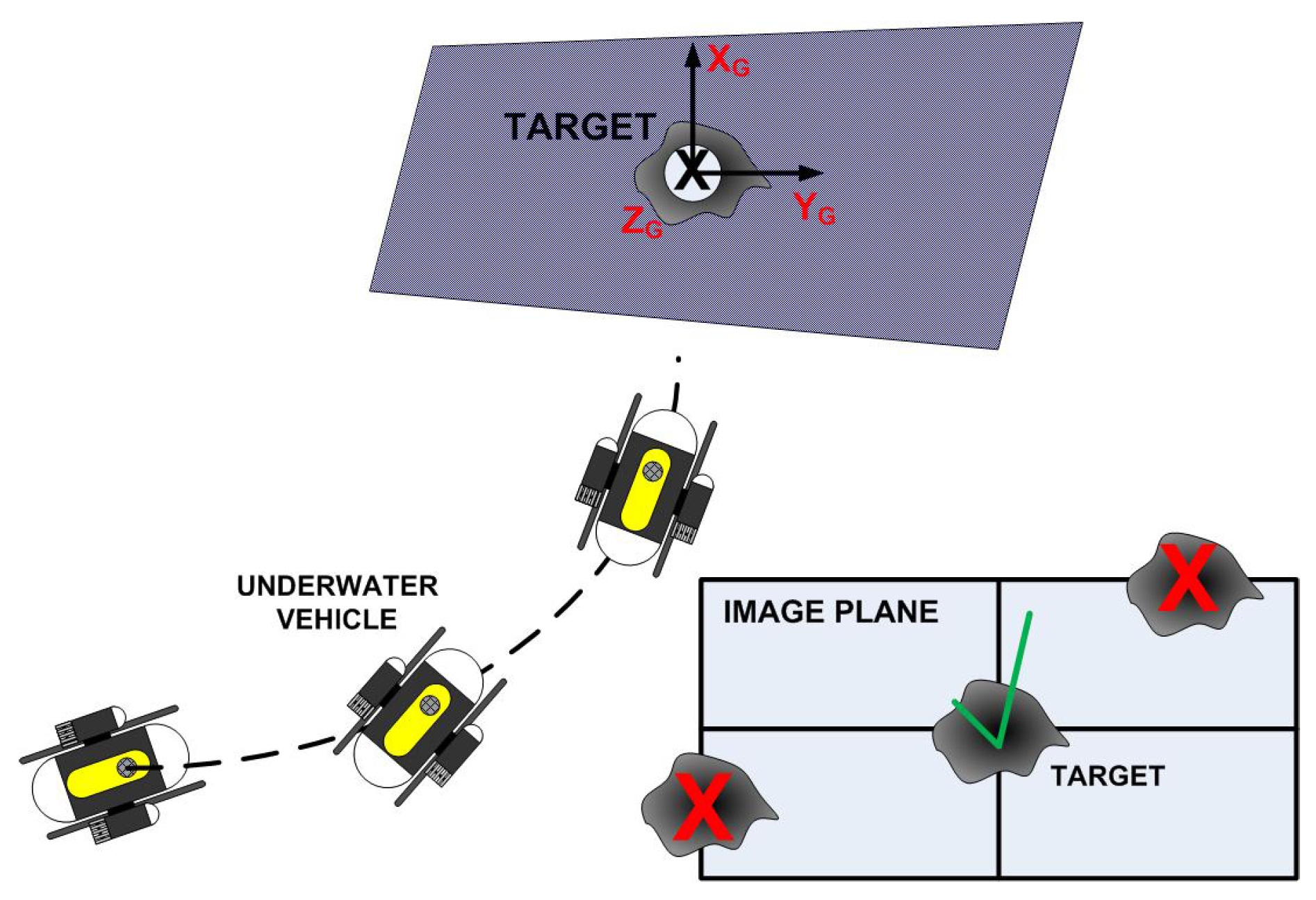

2. Problem Formulation

2.1. Mathematical Modeling

2.2. Control Design and Objective

2.3. Problem Statement

3. Stability Analysis of Self-Triggering NMPC Framework

3.1. Feasibility Analysis

3.2. Convergence Analysis

3.3. The Self-Triggered Mechanism

Convergence of System under the Proposed Self-Triggered Framework

| Algorithm 1. Real-time algorithm of the proposed self-triggered PBVS-NMPC framework |
| --- |
| ▷ At triggering time |
| ▷ Trigger the VTA, get |
| ▷ Run OCP of (10a)–(10d) |
| ▷ The next triggering time |
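The four steps annotated in Algorithm 1 can be read as a loop that measures, optimizes, and then runs open loop until the next triggering time. The following is a minimal sketch of that loop structure only, not the authors' implementation. Every name in it (`self_triggered_loop`, `measure_state`, `solve_ocp`, `next_trigger_steps`, `apply_control`) is a hypothetical placeholder. The measurement step stands in for triggering the VTA, `solve_ocp` stands in for the OCP of (10a)–(10d), and the triggering rule is passed in as a function because its actual expression is not reproduced here. A discrete-time setting with one control sample per step is also an assumption.

```python
from typing import Callable, List, Sequence

State = Sequence[float]
Control = Sequence[float]


def self_triggered_loop(
    measure_state: Callable[[], State],
    solve_ocp: Callable[[State], List[Control]],
    next_trigger_steps: Callable[[State, List[Control]], int],
    apply_control: Callable[[Control], None],
    total_steps: int,
) -> List[int]:
    """Run a generic self-triggered predictive control loop.

    All callables are hypothetical stand-ins:
      measure_state      -- triggers the measurement (the VTA step) and returns a state estimate
      solve_ocp          -- solves the optimal control problem, returns a control sequence
      next_trigger_steps -- triggering rule: how many steps to run open loop
      apply_control      -- sends one control sample to the actuators
    Returns the list of step indices at which a measurement was triggered.
    """
    trigger_times: List[int] = []
    k = 0
    while k < total_steps:
        # At triggering time: record it and take a new measurement.
        trigger_times.append(k)
        x = measure_state()

        # Run the OCP from the measured state.
        u_seq = solve_ocp(x)
        if not u_seq:
            raise ValueError("solve_ocp returned an empty control sequence")

        # Compute the next triggering time, clamped to [1, len(u_seq)] so the
        # loop always advances and never runs past the predicted horizon.
        d = max(1, min(next_trigger_steps(x, u_seq), len(u_seq)))

        # Apply the predicted controls open loop until the next trigger.
        for u in u_seq[:d]:
            if k >= total_steps:
                break
            apply_control(u)
            k += 1
    return trigger_times
```

Under these assumptions, the measurement and the optimization are each invoked once per triggering instant rather than once per control step, which is the source of the savings that a self-triggered scheme aims for.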

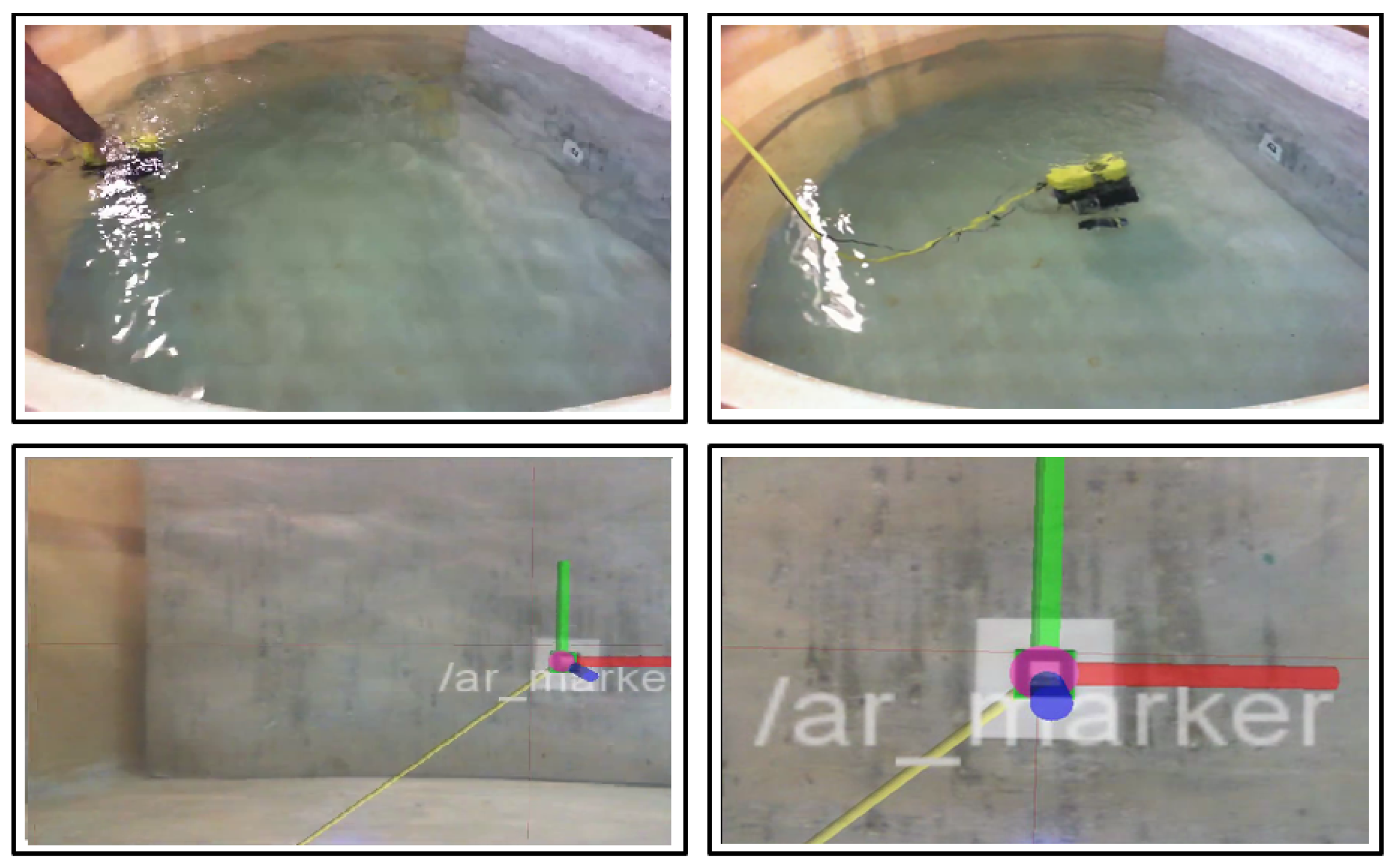

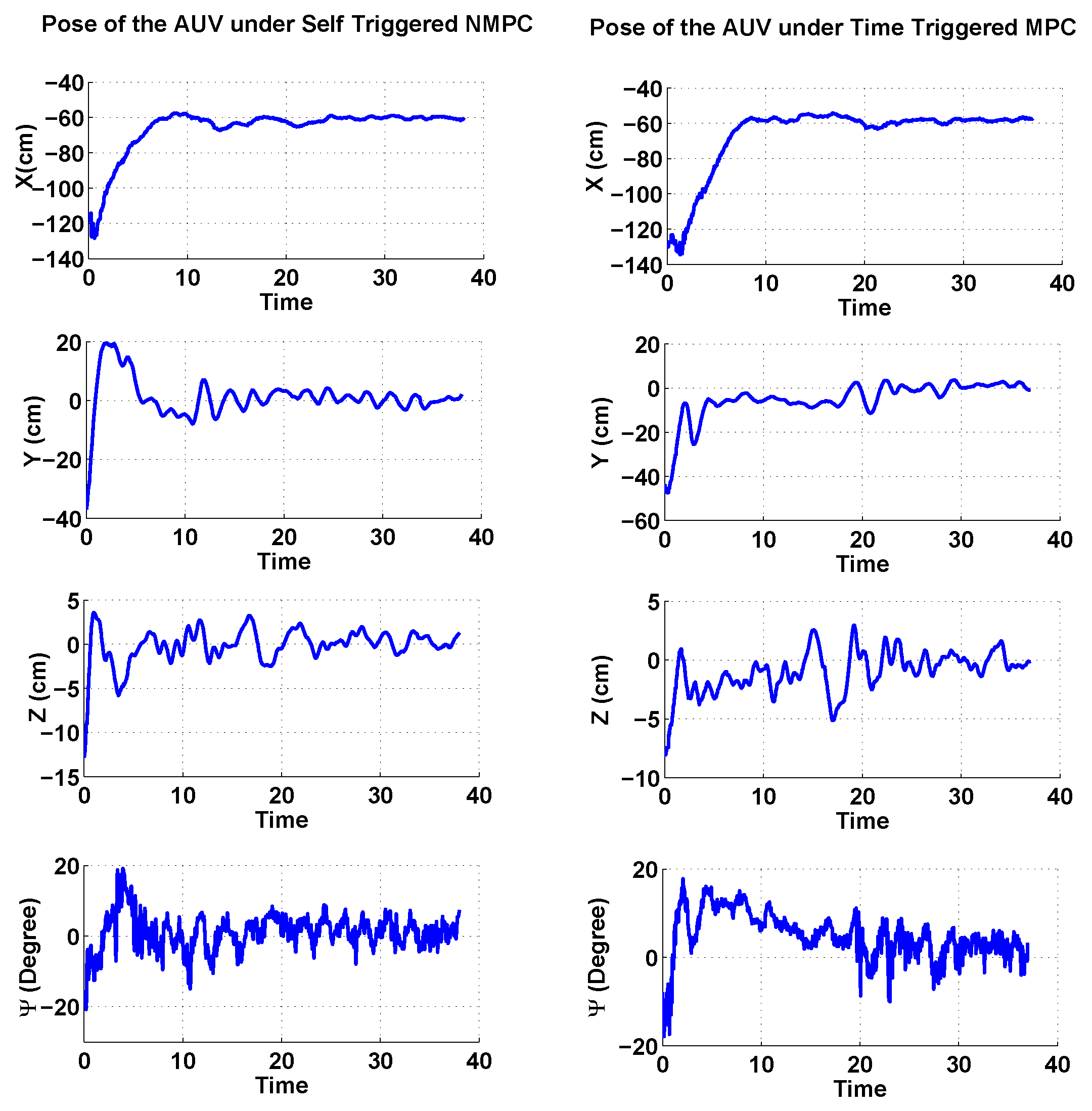

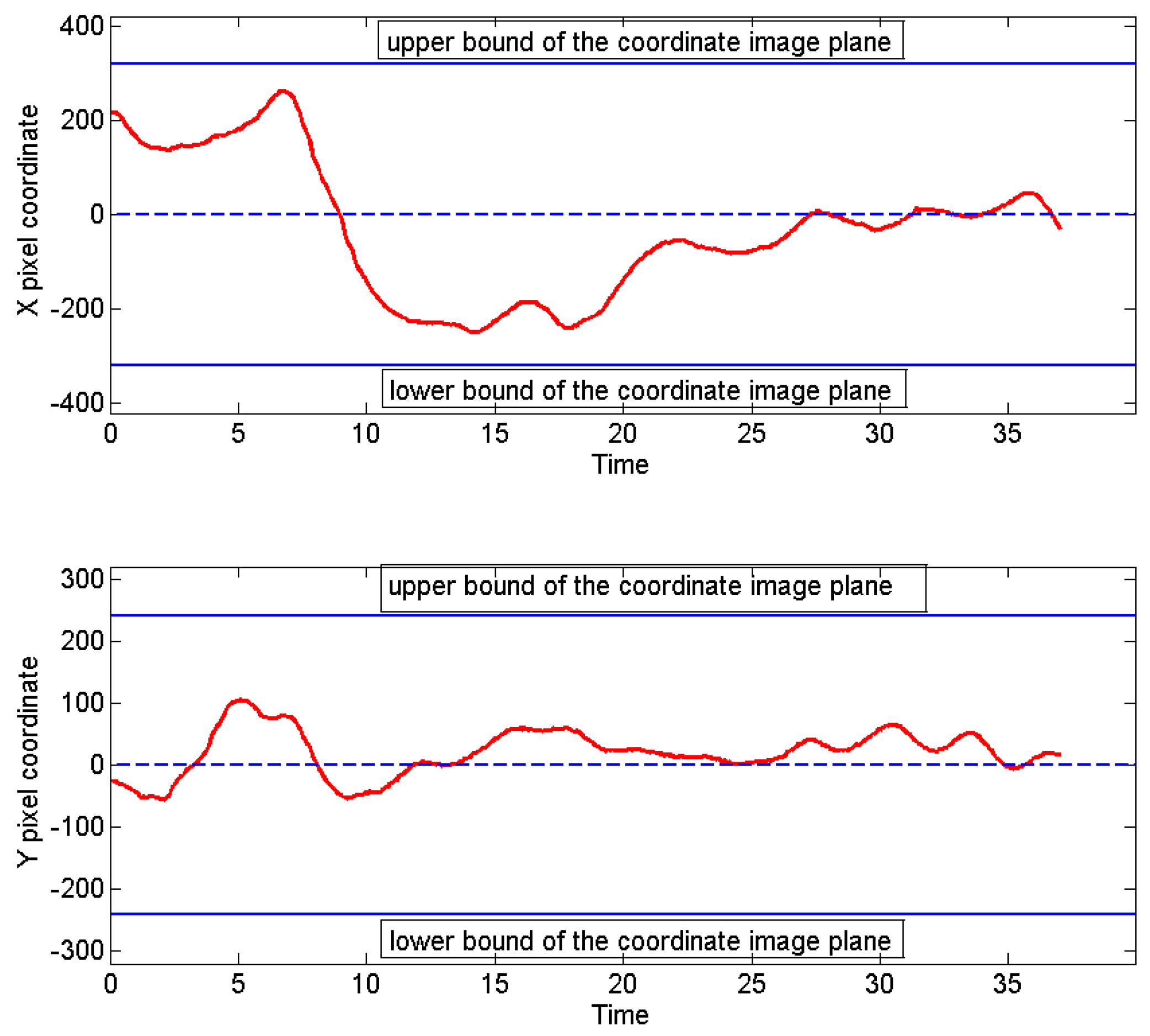

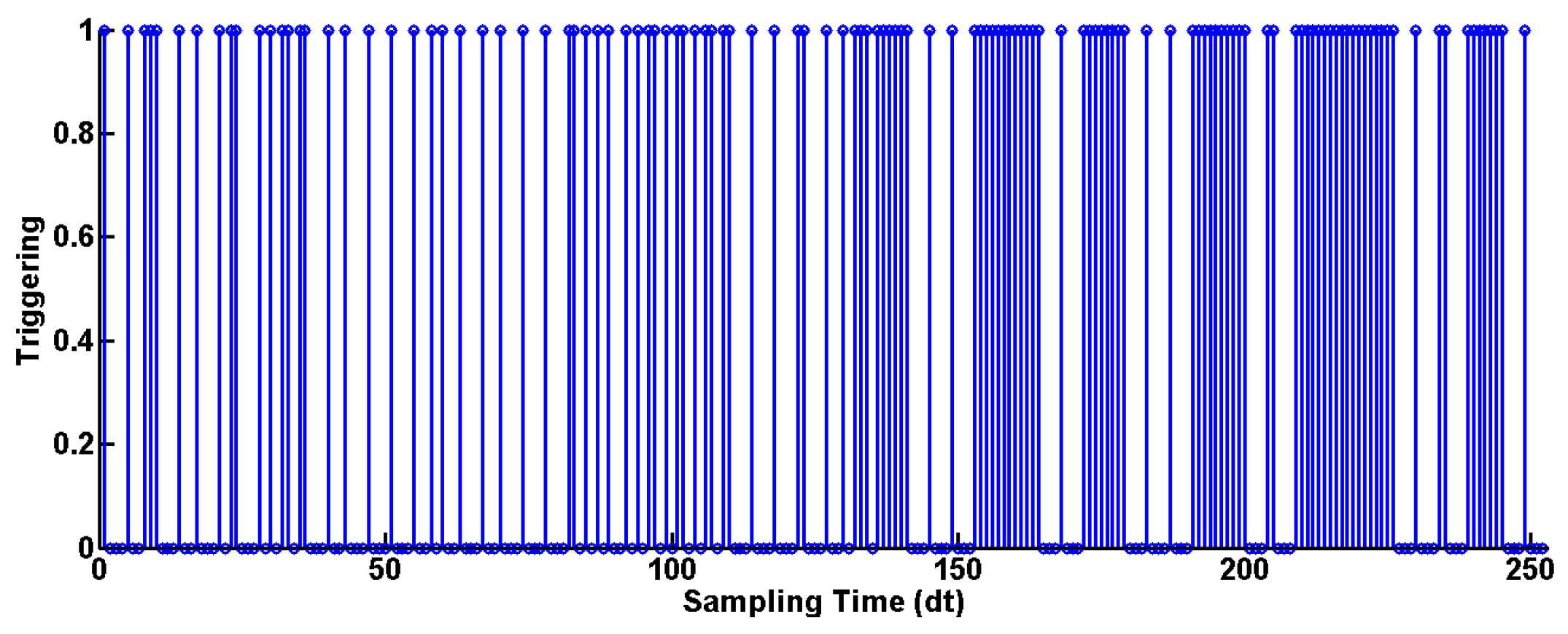

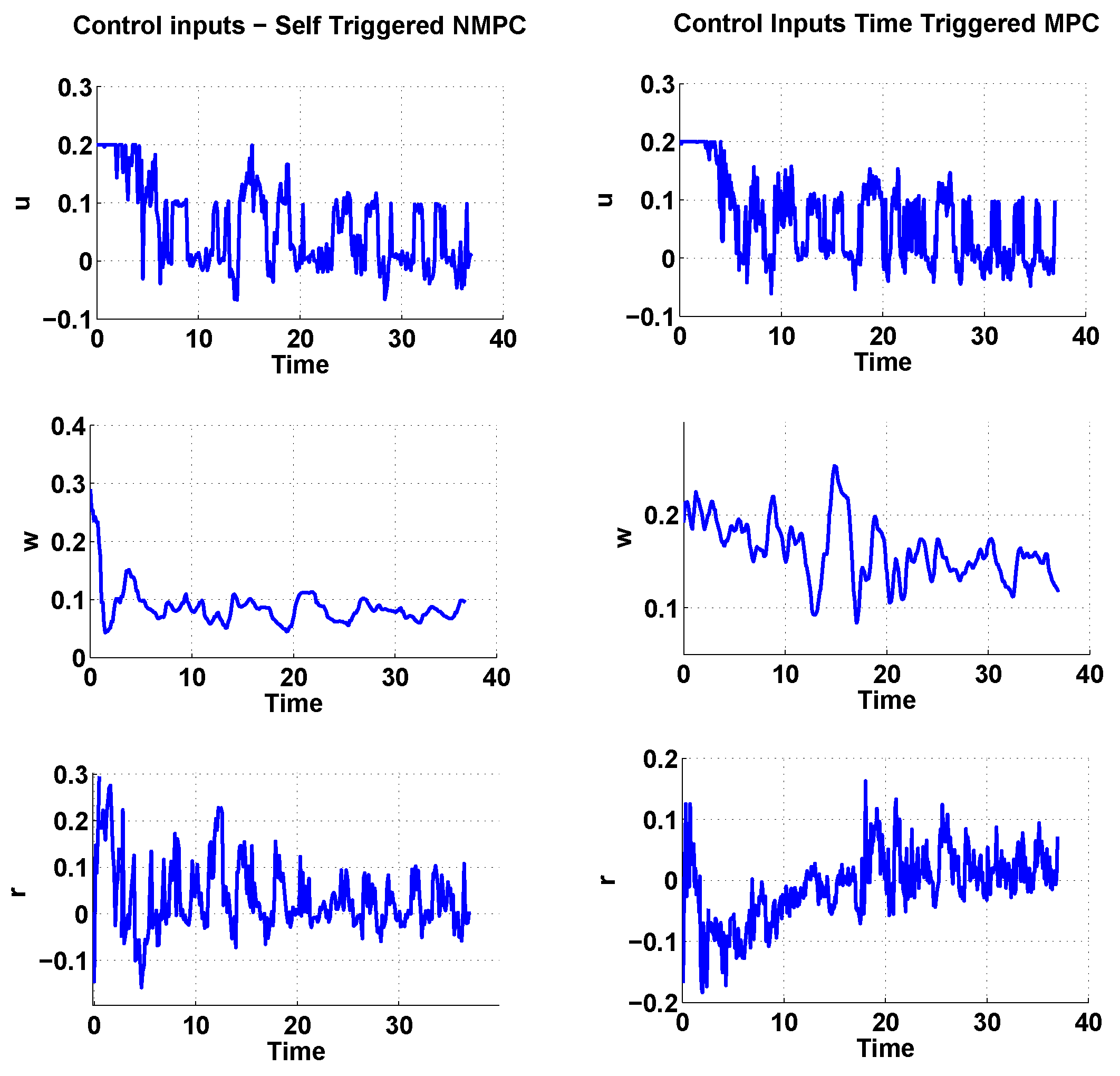

4. Experiments

4.1. System Components

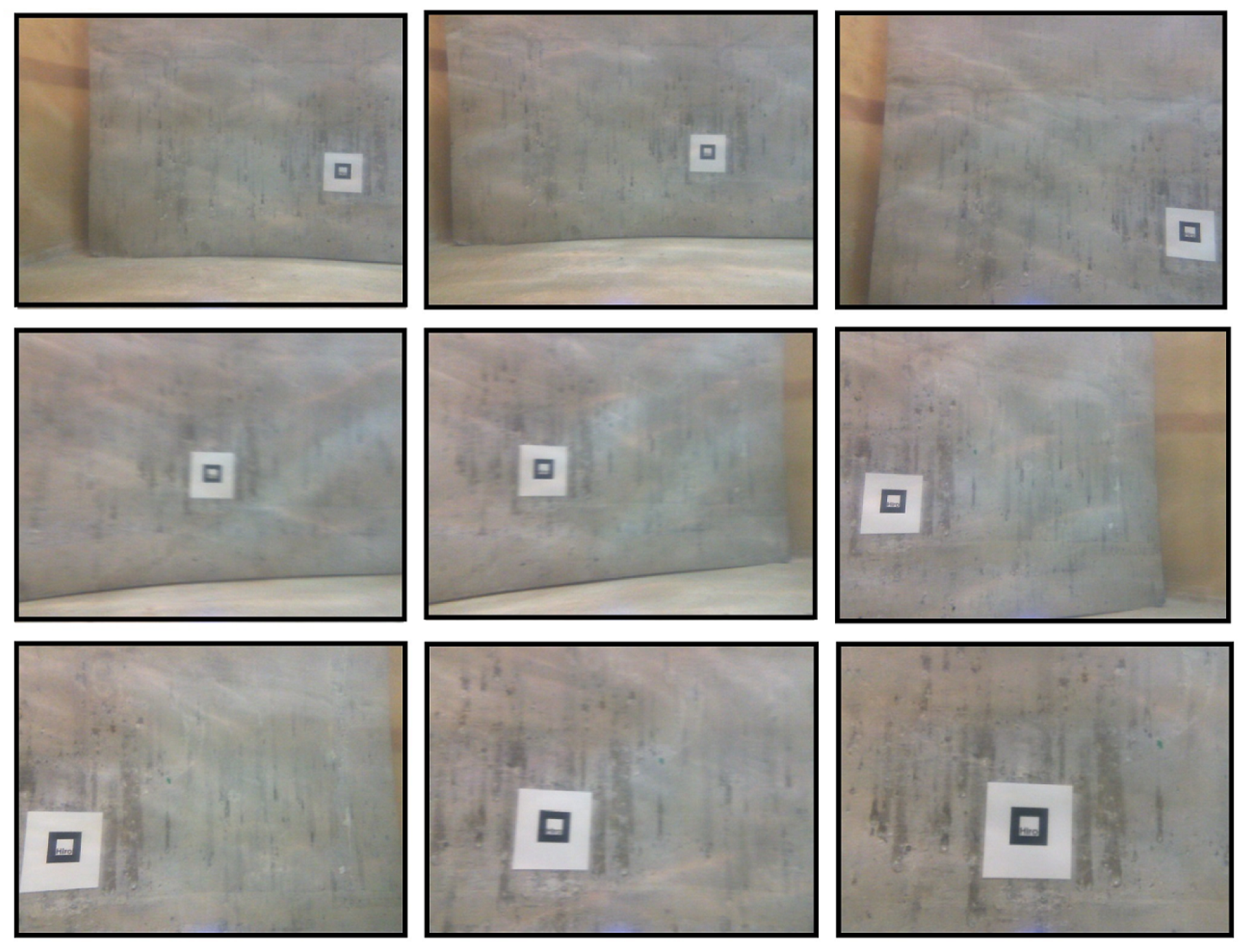

4.2. Experimental Results

4.3. Video

5. Conclusions

Author Contributions

Funding

Conflicts of Interest

Appendix A.

Appendix A.1. The Proof of Lemma 1:

Appendix A.2. The Proof of Lemma 2:

Appendix A.3. The Proof of Lemma 3:

Appendix A.4. The Proof of Lemma 4:

Appendix B. Lyapunov Function

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Heshmati-alamdari, S.; Eqtami, A.; Karras, G.C.; Dimarogonas, D.V.; Kyriakopoulos, K.J. A Self-triggered Position Based Visual Servoing Model Predictive Control Scheme for Underwater Robotic Vehicles. Machines 2020, 8, 33. https://doi.org/10.3390/machines8020033