Awakening the Synthesizer Knob: Gestural Perspectives

Abstract

1. Introduction

1.1. Definition; Mainstream Digital Musical Instruments

1.2. The Knob

1.3. History

1.4. Characteristics

| External | Internal |
|---|---|
| Shape (!) | Resistance (!) |
| Size (!) | Range of motion ( ) |
| Material (!) | Detents (!) |
| Color ( ) | Inertia (?) |
| Texture (!) | Axial skew (-) |
| Knurling ( ) | Axial play (-) |
| Bottom chamfer ( ) | Dynamic feedback (?) |
| Position indicator (!) | |

1.5. Innovation

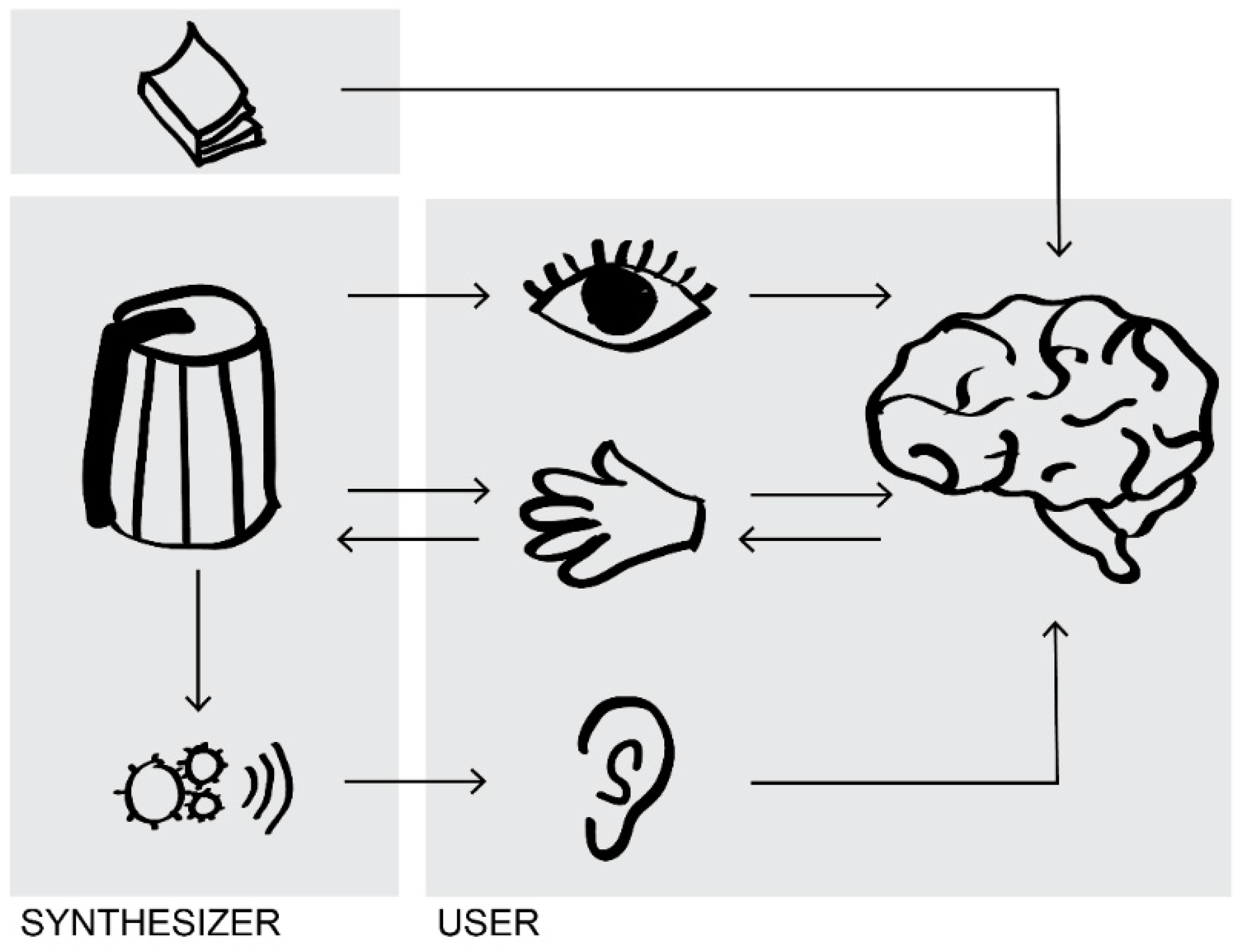

1.6. Expression and Interaction

1.6.1. Musical Expression

1.6.2. Embodiment

- Humans do not think in abstract models, so a user should not be expected to fully understand the inner workings of the instrument, such as the complex interplay of elements in FM synthesis. Experience is stored in the form of concepts, which means that the same action should always yield the same reaction. This makes menus and multi-functional knobs poor choices [10].

- The brain is made first and foremost for movement, so the movement of the interaction should itself be designed. Perception and motor control are not separate: movement seen on the mDMI will influence the actions of the musician. The designer should therefore shape not only the knob, but also the (possible) motion the musician makes while turning it [10].

- The brain is multimodal, which means that even seemingly irrelevant aspects of the interaction, like the axial skew of a knob, play a role in the overall experience. Interaction is always emotional: will you be making affective music on a technical-looking instrument? While it is difficult to make a definite statement on this, it does seem that the delicate violin is more suitable for emotional music than a rugged drum computer [10].

- Tactile feedback containing features of the controlled sound enables a stronger feedback loop, improving muscular control and expression [11].

- While cognitive affordances are important when learning an instrument, and physical affordances are important to ensure a good interaction, the performer’s focus should be within the affective domain while performing. This means that, to be expressive, an mDMI should not require high cognitive effort to operate [12].

- Many of the traditionally used guidelines for affordances might not be applicable to musical instruments. For instance, the function of holding a violin bow in different positions might not be intuitive or even cognitively understandable, but through sensorimotor and affective learning the musician will come to understand what happens when using different bow positions, even without being consciously aware of the effect. Ease of use should not be the main goal in designing musical instruments; the ability to express should be [13].

1.6.3. Cognitive Load

1.6.4. Gestures

1.6.5. Enabling Expression

| Input | Gesture | Musical | Synthesis | Output |
|---|---|---|---|---|
| Position | Direction | Tone | Pitch | Sound |
| | Speed | Timbre | Filter | |
| | Time | Articulation | Distortion | |
| | Flow | Loudness | Volume | |
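The layers in the table above can be read as a chain of mappings from knob position to sound. The following is a minimal sketch of such a chain, not the authors' implementation. It assumes a row-wise pairing of the table entries (direction to tone to pitch, and so on), which the table itself does not confirm. The function names, the feature formulas (especially the smoothness measure used for flow), and all numeric ranges are hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass
from typing import Dict, Sequence


@dataclass
class Gesture:
    direction: float  # +1 for a net increase in angle, -1 for a decrease, 0 for none
    speed: float      # mean absolute angular speed, degrees per second
    time: float       # duration of the gesture, seconds
    flow: float       # smoothness in (0, 1]; 1 means perfectly even motion


def clamp01(x: float) -> float:
    """Limit a value to the range [0, 1]."""
    return max(0.0, min(1.0, x))


def extract_gesture(positions: Sequence[float], dt: float) -> Gesture:
    """Input -> Gesture: derive features from knob angles sampled every dt seconds."""
    if len(positions) < 2:
        raise ValueError("need at least two position samples")
    velocities = [(b - a) / dt for a, b in zip(positions, positions[1:])]
    net = positions[-1] - positions[0]
    direction = float((net > 0) - (net < 0))
    speed = sum(abs(v) for v in velocities) / len(velocities)
    duration = dt * (len(positions) - 1)
    # Assumed flow measure: velocity changes relative to overall speed reduce flow.
    jitter = sum(abs(b - a) for a, b in zip(velocities, velocities[1:]))
    flow = 1.0 / (1.0 + jitter / (speed * len(velocities))) if speed > 0 else 1.0
    return Gesture(direction, speed, duration, flow)


def gesture_to_musical(g: Gesture, max_speed: float = 720.0,
                       max_time: float = 2.0) -> Dict[str, float]:
    """Gesture -> Musical: normalise each feature to a 0..1 musical quality."""
    return {
        "tone": 0.5 + 0.5 * g.direction,
        "timbre": clamp01(g.speed / max_speed),
        "articulation": clamp01(1.0 - g.time / max_time),  # short gesture, sharp attack
        "loudness": clamp01(g.flow),
    }


def musical_to_synthesis(m: Dict[str, float]) -> Dict[str, float]:
    """Musical -> Synthesis: translate musical qualities into synth parameters."""
    return {
        "pitch_hz": 220.0 * 2.0 ** m["tone"],            # one octave, 220 to 440 Hz
        "filter_cutoff_hz": 200.0 + 7800.0 * m["timbre"],
        "distortion": m["articulation"],
        "volume": m["loudness"],
    }


def knob_to_synthesis(positions: Sequence[float], dt: float) -> Dict[str, float]:
    """Run the full chain: Input -> Gesture -> Musical -> Synthesis."""
    return musical_to_synthesis(gesture_to_musical(extract_gesture(positions, dt)))
```

Keeping the musical layer separate from the synthesis layer means the gesture analysis never refers to a technical parameter directly; only the last function would change if a different synthesis engine were used.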

1.7. Three Directives for a New Generation of Knobs

1.7.1. Twisting the Knob Generates a Musical Outcome, Rather Than Changing a Technical Parameter

1.7.2. The Knob Is Sensitive to Your Own Way of Using It

1.7.3. The Looks and Feel of the Knob Are Connected to its Musical Output

2. Experimental Section

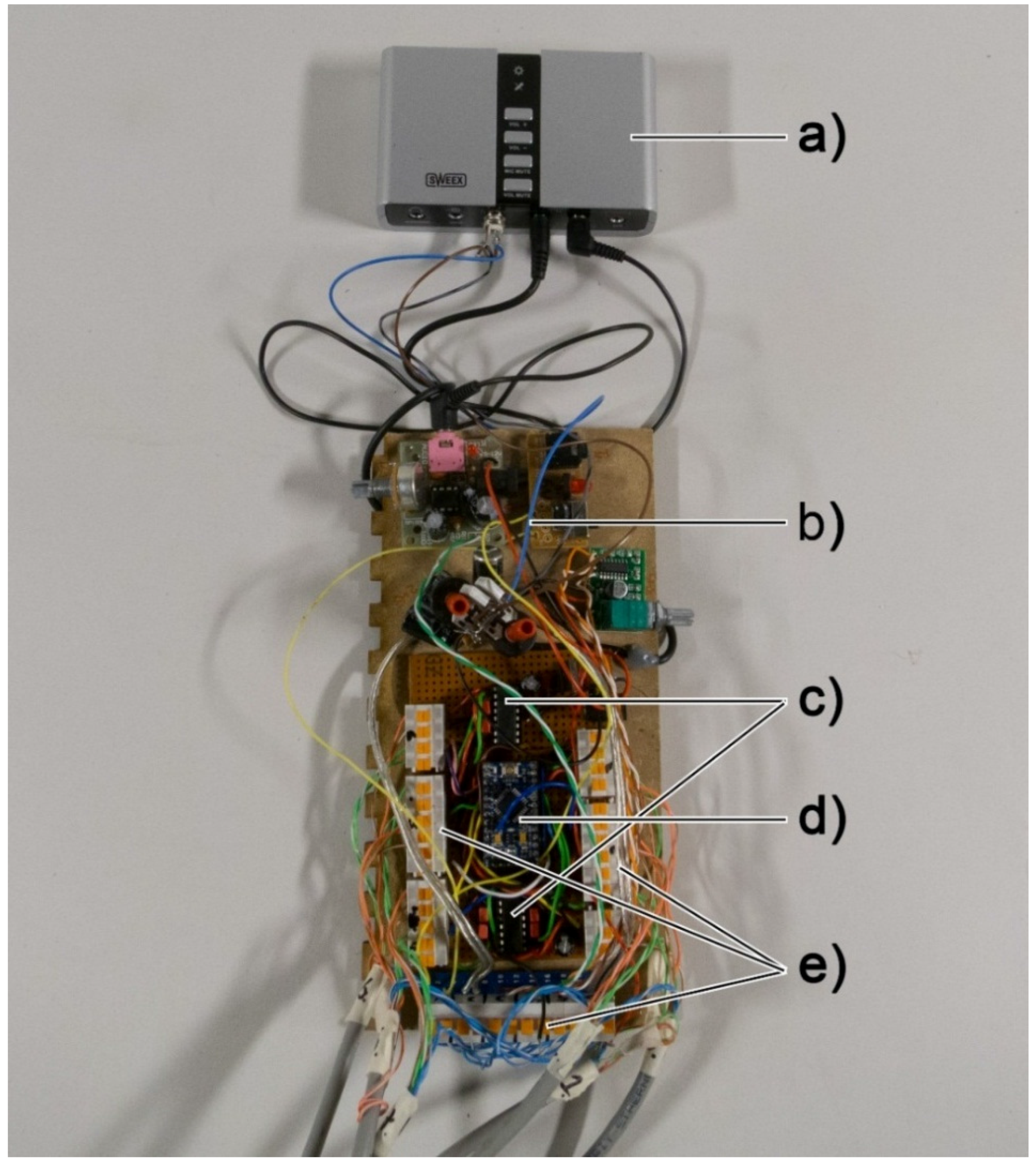

2.1. Materials

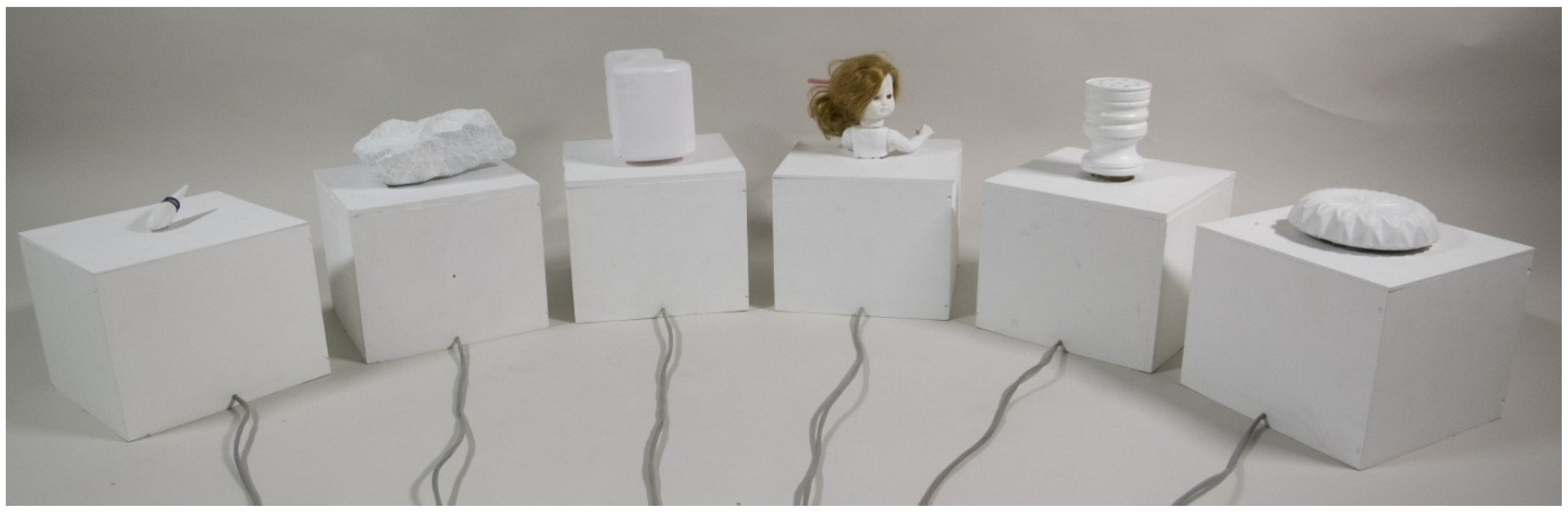

2.1.1. The Six Prototypes

2.1.2. Knob 1: Passive Haptics

2.1.3. Knob 2: Shape > Behavior > Sound

2.1.4. Knob 3: Knob in Control

2.1.5. Knob 4: Emotional Response

2.1.6. Knob 5: Energy In = Energy Out

2.1.7. Knob 6: The Holy

2.2. Prototype Design

2.3. Methods

2.3.1. Discourse Analysis

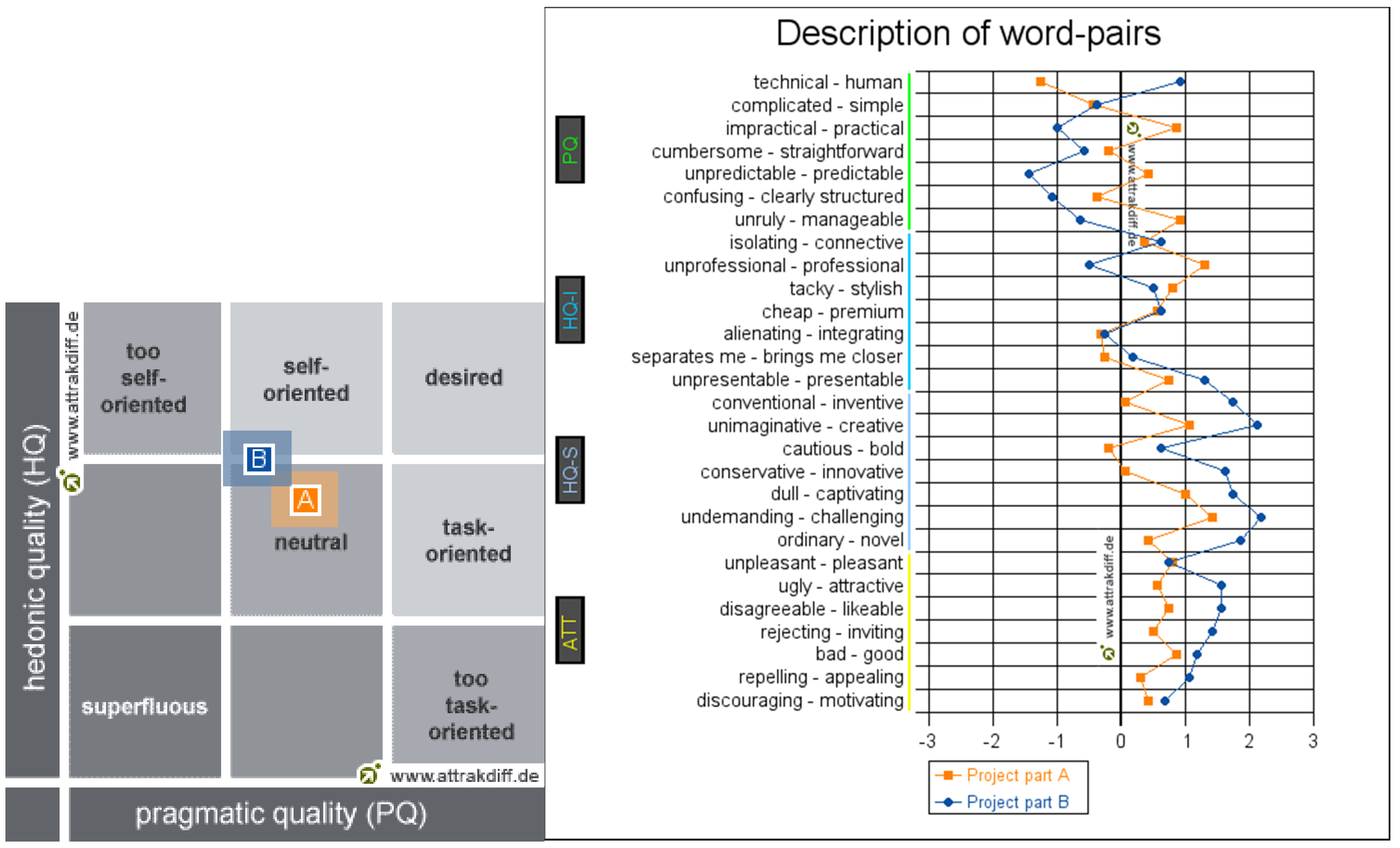

2.3.2. AttrakDiff

2.3.3. Setup

3. Results

3.1. AttrakDiff

3.2. Discourse Analysis

3.2.1. Participant 1

3.2.2. Participant 2

4. Discussion

Future

5. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest

References

1. Rutenberg, D. The early history of the potentiometer system of electrical measurement. Ann. Sci. 1939, 4, 212–243.

2. Manning, P. Electronic and Computer Music; Oxford University Press: New York, NY, USA, 2013.

3. Bernstein, D.W. The San Francisco Tape Music Center: 1960s Counterculture and the Avant-Garde; University of California Press: Oakland, CA, USA, 2008; p. 5.

4. Yamaha DX7 Famous Examples. Available online: http://bobbyblues.recup.ch/yamaha_dx_7/dx7_examples.html (accessed on 1 August 2015).

5. Lederman, S.J.; Klatzky, R.L. Hand movements: A window into haptic object recognition. Cognit. Psychol. 1987, 3, 342–368.

6. Bovermann, T.; Hinrichsen, A.; Lopes, D.H.M.; de Campo, A.; Egermann, H.; Foerstel, A.; Hardjowirogo, S.-I.; Pysiewicz, A.; Weinzierl, S. 3DMIN—Challenges and Interventions in Design, Development and Dissemination of New Musical Instruments. Int. Comput. Music Assoc. 2014, 2014, 1637–1641.

7. Todd, N.P.M. The dynamics of dynamics: A model of musical expression. J. Acoust. Soc. Am. 1992, 91, 3540–3550.

8. Jordà, S. Digital Instruments and Players: Part II: Diversity, Freedom and Control. In Proceedings of the International Computer Music Conference, Miami, FL, USA, 1–6 November 2004.

9. Fels, S. Intimacy and Embodiment: Implications for Art and Technology. Available online: http://citeseerx.ist.psu.edu/viewdoc/download?doi=10.1.1.19.8057&rep=rep1&type=pdf (accessed on 26 October 2015).

10. Streeck, J.; Goodwin, C.; LeBaron, C. Embodied Interaction in the Material World: An Introduction; Cambridge University Press: Cambridge, UK, 2011.

11. Bongers, B. Tactile display in electronic musical instruments. In Proceedings of the IEEE Colloquium on Developments in Tactile Displays, London, UK, 21 January 1997; pp. 41–70.

12. Bloom, B.S.; Engelhart, M.D.; Furst, E.J.; Hill, W.H.; Krathwohl, D.R. Taxonomy of Educational Objectives: The Classification of Educational Goals; Longman Group United Kingdom: London, UK, 1956.

13. Hartson, R.H. Cognitive, physical, sensory, and functional affordances in interaction design. Behav. Inf. Technol. 2003, 22, 315–338.

14. Paas, F.; Renkl, A.; Sweller, J. Cognitive Load Theory and Instructional Design: Recent Developments. Educ. Psychol. 2003, 28, 1–4.

15. Hoven, E.V.D.; Mazalek, A. Grasping gestures: Gesturing with physical artifacts. Artif. Intell. Eng. Des. Anal. Manuf. 2011, 25, 255–271.

16. MacLean, K.E. Haptic interaction design for everyday interfaces. Rev. Hum. Factors Ergon. 2008, 1, 149–194.

17. Fels, S. Designing for Intimacy: Creating New Interfaces for Musical Expression. Proc. IEEE 2004, 92, 672–685.

18. Hunt, A.; Wanderley, M.M. Mapping performer parameters to synthesis engines. Organ. Sound 2012, 2, 97–108.

19. Von Laban, R. Principles of Dance and Movement Notation; Macdonald & Evans: London, UK, 1956.

20. Juslin, P.N.; Friberg, A.; Bresin, R. Toward a computational model of expression in music performance: The GERM model. Music. Sci. 2002, 5, 63–122.

21. Sato, M.; Poupyrev, I.; Harrison, C. Touché: Enhancing Touch Interaction on Humans, Screens, Liquids, and Everyday Objects. In Proceedings of the CHI, Austin, TX, USA, 5–10 May 2012.

22. Armstrong, N. An Enactive Approach to Digital Musical Instrument Design. Ph.D. Thesis, Princeton University, Princeton, NJ, USA, 2006.

23. Zimmerman, J.; Forlizzi, J.; Evenson, S. Research through design as a method for interaction design research in HCI. In Proceedings of CHI, San Jose, CA, USA, 30 April–3 May 2007; pp. 493–502.

24. Van den Hoven, E.; Frens, J.; Aliakseyeu, D.; Martens, J.B.; Overbeeke, K.; Peters, P. Design research & tangible interaction. In Proceedings of the 1st International Conference on Tangible and Embedded Interaction, Baton Rouge, LA, USA, 15–17 February 2007; pp. 109–115.

25. Stowell, D.; Plumbley, M.D.; Bryan-Kinns, N. Discourse analysis evaluation method for expressive musical interfaces. In Proceedings of the International Conference on New Interfaces for Musical Expression (NIME), Genova, Italy, 5–7 June 2008.

26. Hassenzahl, M.; Burmester, M.; Koller, F. AttrakDiff: Ein Fragebogen zur Messung wahrgenommener hedonischer und pragmatischer Qualität. Mensch Comput. 2003, 57, 187–196.

27. Cannon, J.; Favilla, S. The Investment of Play: Expression and Affordances in Digital Musical Instrument Design. In Proceedings of the ICMC, Ljubljana, Slovenia, 9–14 September 2012.

© 2015 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution license (http://creativecommons.org/licenses/by/4.0/).


Jense, A.; Eggen, B. Awakening the Synthesizer Knob: Gestural Perspectives. Machines 2015, 3, 317-338. https://doi.org/10.3390/machines3040317
