Abstract

Trajectory prediction is a critical component of autonomous driving decision-making systems, directly impacting driving safety and traffic efficiency. Despite advancements, existing reviews exhibit limitations in timeliness, classification frameworks, and challenge analysis. This paper systematically reviews multi-agent trajectory prediction technologies, focusing on generating future position sequences from historical trajectories, high-precision maps, and scene context. We propose a multi-dimensional classification framework integrating input representation, output forms, method paradigms, and interaction modeling. The review comprehensively compares conventional methods and deep learning architectures, including diffusion models and large language models. We further analyze five core challenges: complex interactions, rule and map dependence, long-term prediction errors, extreme-scene generalization, and real-time constraints. Finally, interdisciplinary solutions are prospectively explored.

1. Introduction

The rapid development of autonomous driving technology has raised higher requirements for the accuracy and robustness of trajectory prediction. As the core component of the autonomous driving decision-making system, trajectory prediction directly determines driving safety and traffic efficiency [1]. In complex dynamic traffic environments, vehicles need to predict the future movement trajectories of surrounding traffic participants (such as vehicles and pedestrians) in real time to avoid collision risks and plan the optimal path [2,3,4]. However, the randomness of traffic participant behavior, the complexity of multi-agent interaction, and the uncertainty of environmental perception pose significant challenges to high-precision trajectory prediction.

Although existing reviews cover traditional methods and deep learning models, three deficiencies remain: (1) limited timeliness: they lack a systematic analysis of frontier technologies such as diffusion models and large language models (LLMs); (2) a narrow classification framework: the classification dimensions of interaction modeling, output modalities, and uncertainty handling are not unified; (3) insufficient analysis of challenges: key challenges such as cumulative long-term prediction errors and generalization in rare scenarios are not explored in depth. This paper focuses on the multi-agent trajectory prediction problem and systematically reviews the key technologies for generating future position sequences from historical trajectories, high-precision maps, and scene context. It addresses the following issues:

- Modeling of input elements: how dynamic information, static information, and scene context can be collaboratively represented;

- Evolution of output form: from unimodal deterministic trajectories to multimodal probabilistic trajectories;

- Innovation of method paradigms: the efficacy boundaries of traditional methods and deep learning methods;

- Completeness of evaluation system: the adaptability of dataset characteristics and multi-dimensional evaluation indicators.

Therefore, we propose a multi-dimensional classification framework, integrating traditional methods and deep learning models; deeply analyze the mechanisms of five major challenges (complex interaction, rule dependence, long-term prediction error, extreme scene generalization, real-time constraints); and prospectively explore interdisciplinary integration directions such as embodied intelligence and vehicle-road collaboration. The main contributions of this paper are as follows:

- Proposing a multi-dimensional classification framework, organizing the evolution of trajectory prediction technology from four dimensions: input representation, output form, method paradigm, and interaction modeling;

- Systematically comparing the advantages and limitations of traditional methods and deep learning models, covering the latest progress of diffusion models, Transformer architectures, and generative methods;

- Deeply analyzing the current five major challenges (complex interaction, rule dependence, long-term prediction error, extreme scene generalization, real-time constraints), providing directional guidance for future research.

This paper is organized as follows. Section 2 establishes the trajectory prediction problem formulation and classification framework. Section 3 reviews conventional methods: physics models, maneuver-based approaches, and probabilistic graphical models. Section 4 analyzes deep learning methods categorized by architecture: RNNs, CNNs, GNNs, Transformers, and generative models, detailing feature encoding/fusion. Section 5 evaluates datasets and metrics. Section 6 discusses multi-task applications and core challenges with solutions. Section 7 concludes and proposes future directions including end-to-end frameworks and causal reasoning systems.

2. Key Problems and Method Classification

2.1. Core Input Elements

The precision of trajectory prediction relies significantly on the quality and nature of input data, which encompass dynamic information, static information, and scene context. Collectively, these elements offer a thorough depiction of the traffic setting for the trajectory prediction algorithm.

2.1.1. Dynamic Information

Dynamic information primarily comprises historical trajectory data of the host vehicle and surrounding traffic participants, encompassing their position, velocity, acceleration, and heading angle. This data characterizes the immediate movement status of traffic participants and serves as the foundation for trajectory prediction, particularly crucial in short-term trajectory prediction, as it directly indicates the instantaneous movement tendencies of traffic participants [5,6,7]. The collection of dynamic information typically relies on the vehicle’s sensors and V2X communication technology [8].

2.1.2. Static Information

Static information in the traffic environment includes lane lines, curbs, traffic signs, traffic lights, drivable areas, and intersection structures. This data serves as a constant representation of the traffic surroundings and forms the foundational backdrop for trajectory prediction. Typically acquired from high-precision maps and on-board sensors, static information aids vehicles in comprehending traffic regulations and road layout. It holds significant relevance in long-range trajectory forecasting and route mapping by furnishing a comprehensive overview of the traffic landscape [9].

2.1.3. Scene Context

Scene context encompasses factors such as traffic regulations, lighting conditions, weather, and the type of scene, enabling vehicles to comprehend the attributes and possible hazards of the present situation. This data is typically acquired through sensor data and environment-aware algorithms [10,11,12,13]. Across different scene categories, scene context information helps vehicles adapt their prediction strategies and improve prediction accuracy.

2.2. Output Representation

2.2.1. Trajectory Representation

Trajectory representation in autonomous driving trajectory prediction involves converting the movement path of a vehicle or traffic participant into a format that can be analyzed. The selection of a trajectory representation method significantly impacts both the construction of prediction models and the comprehensibility of prediction outcomes [14]. Typical trajectory representation approaches comprise discrete point sequences, parametric curves, and grid occupancy.

- Discrete Point Sequence

A discrete point sequence depicts a trajectory through a series of discrete points in time, providing a direct representation of the trajectory’s temporal evolution. In the context of autonomous driving, a discrete point sequence serves as an intuitive method to capture the vehicle’s position at each time point, with each point encapsulating positional coordinates along with potential speed, acceleration, and related data [15]. Each individual point corresponds to the positional data at a specific time step, denoted as t. This can be mathematically formulated as:

$$X = \{(x_t, y_t) \mid t = 1, 2, \ldots, T\}$$

where $(x_t, y_t)$ denotes the pedestrian position at time step $t$ and $T$ is the total number of time steps of the trajectory.

- Parametric Curve

A parametric curve is defined by one or more parameters that determine the coordinates of points along the curve [16]. Examples of parametric curves commonly used include polynomials and spline curves.

The polynomial curve follows a fundamental structure:

$$x(t) = a_0 + a_1 t + a_2 t^2 + \cdots + a_n t^n = \sum_{i=0}^{n} a_i t^i$$

where $t$ represents the time variable and $a_i$ denotes the $i$-th polynomial coefficient.

For instance, Buhet et al. [17] introduce a probabilistic prediction approach utilizing polynomial trajectories. This method represents the vehicle trajectory as a polynomial function and forecasts the future trajectory distribution through a probabilistic model, effectively addressing trajectory uncertainty and diversity.
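As a minimal sketch of the polynomial representation, the coefficients of $x(t) = \sum_i a_i t^i$ can be estimated from observed positions by least squares and then evaluated at a future time. The function names, the polynomial degree, and the synthetic observations below are illustrative assumptions; this is not the probabilistic method of [17].

```python
import numpy as np

def fit_polynomial_trajectory(times, positions, degree=3):
    """Least-squares fit of one coordinate to x(t) = sum_i a_i * t**i.

    Returns the coefficients ordered a_0, a_1, ..., a_n.
    """
    # np.polyfit returns the highest power first, so reverse the order.
    return np.polyfit(times, positions, degree)[::-1]

def evaluate_polynomial(coeffs, t):
    """Evaluate x(t) for coefficients ordered a_0 ... a_n."""
    return sum(a * t**i for i, a in enumerate(coeffs))

# Hypothetical observations: start at 1 m, 5 m/s, 1 m/s^2 acceleration.
t_obs = np.arange(0.0, 2.0, 0.2)
x_obs = 1.0 + 5.0 * t_obs + 0.5 * t_obs**2

coeffs_x = fit_polynomial_trajectory(t_obs, x_obs, degree=2)
x_future = evaluate_polynomial(coeffs_x, 3.0)  # extrapolate to t = 3 s
```

A planar trajectory would use one such polynomial per coordinate. Extrapolation quality degrades quickly with higher degrees, which is one reason low-order polynomials are usually preferred.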

A spline curve, commonly employed for interpolation and fitting, is a smooth curve determined by a series of control points. The B-spline curve is currently the most widely used type. For instance, Cao et al. [18] illustrate the creation of a trajectory from predetermined waypoints, defining the shape of the path through the knot vectors and control polygons of the B-spline. Furthermore, a real-time path planning strategy based on the B-spline curve is introduced in [19], enabling fast generation of obstacle-avoidance paths in dynamic environments.

- Grid Occupancy

Grid occupancy partitions space into grid cells and predicts the likelihood of each cell being occupied in the future. Schreiber et al. [20] proposed an encoder–decoder framework that uses convolutional long short-term memory networks to predict future trajectory patterns from grid occupancy maps. Additionally, Zeng et al. [21] proposed an end-to-end interpretable neural motion planner that employs an occupancy grid to delineate various potential trajectories.
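To make the grid occupancy representation concrete, the sketch below rasterizes a set of sampled future positions into a grid and reports, for each cell, the fraction of samples that fall inside it. The function name, grid extents, and sample values are illustrative assumptions; the learned models in [20,21] predict such grids with neural networks rather than by counting samples.

```python
import numpy as np

def occupancy_grid(samples_xy, x_range, y_range, cell_size):
    """Fraction of sampled positions that fall into each grid cell.

    samples_xy: iterable of (x, y) positions for one future time step.
    Returns an array of shape (ny, nx); rows index y, columns index x.
    """
    nx = int(round((x_range[1] - x_range[0]) / cell_size))
    ny = int(round((y_range[1] - y_range[0]) / cell_size))
    grid = np.zeros((ny, nx))
    for x, y in samples_xy:
        ix = int((x - x_range[0]) // cell_size)
        iy = int((y - y_range[0]) // cell_size)
        if 0 <= ix < nx and 0 <= iy < ny:  # ignore samples outside the grid
            grid[iy, ix] += 1
    return grid / len(samples_xy)

# Hypothetical sampled positions (m) of one agent at a future time step.
samples = [(0.5, 0.5), (0.6, 0.4), (1.5, 0.5), (2.5, 1.5)]
grid = occupancy_grid(samples, x_range=(0.0, 3.0), y_range=(0.0, 2.0), cell_size=1.0)
```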

2.2.2. Unimodal Prediction and Multimodal Prediction

The unimodal prediction method predicts the single most likely trajectory for each target. A physics-based unimodal method uses kinematic and dynamic properties to determine one probable future trajectory from the vehicle’s position, velocity, and yaw rate [22]. In contrast, a machine learning-based unimodal method extracts key features from vehicle lane-change trajectory data using the nonlinear learning and pattern recognition capabilities of a support vector machine (SVM). This method models the vehicle’s actual lane-change process and calculates the probability distribution of the behavior parameters that characterize the lane-change motion [23].

The multimodal prediction approach can produce multiple plausible trajectories while accounting for the uncertainty in the target’s intention [24]. By incorporating probabilistic models or deep learning techniques, this approach can assign a probability to each trajectory, reflecting its likelihood. One such method integrates historical target trajectories, environmental data, and potential driving intentions into a probability distribution model built from recurrent neural networks with a mixture density network output layer, enabling the generation of diverse trajectories and their associated probabilities [25]. Another multimodal technique uses adversarial training of a generator and a discriminator to produce multiple feasible trajectories, capturing the uncertainty in vehicle behavior [26]. Table 1 compares unimodal and multimodal trajectory prediction methods, summarizing their applicable scenarios, main advantages, disadvantages, and representative methods.

Table 1.

Comparison of unimodal prediction and multimodal prediction.

2.2.3. Uncertainty Quantification

Accurately quantifying uncertainty is crucial for ensuring the reliability and robustness of trajectory predictions, especially given the intricate nature of traffic environments and sensor data noise. Decision support systems rely heavily on precise uncertainty quantification methods such as probability distributions, confidence intervals, and generative model sampling to enhance the accuracy of prediction outcomes [27,28].

Common probability distributions include the Gaussian distribution and the Gaussian mixture distribution. Yoon et al. [29] employed Gaussian process regression to derive the probability distribution of behavioral parameters that describe lane-change motion characteristics, thereby offering a probability estimate for trajectory prediction. Mao et al. [30] introduced a stochastic trajectory prediction approach based on the jump diffusion model and characterized the uncertainty of trajectory prediction through a Gaussian mixture distribution. This technique addresses both multimodality and uncertainty.

The confidence interval is a statistical tool used to quantitatively and intuitively measure the uncertainty of trajectory predictions. It not only reflects the reliability of the prediction results but can also be applied to tasks such as path planning and collision detection. The confidence interval based on the Gaussian distribution provides the uncertainty range of the predicted values, which is helpful for evaluating the prediction stability of the model, while the confidence interval based on non-parametric methods can determine its coverage range through Monte Carlo simulation based on a large number of pseudo-experiments, thereby improving the accuracy of the estimation [31,32].
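The two kinds of interval described above can be compared on a single predicted coordinate. The sketch below is illustrative only: the samples are synthetic draws standing in for Monte Carlo rollouts of a predictor, and the 95% level and all variable names are assumptions.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical Monte Carlo samples of a predicted lateral position (m)
# at one future time step; a real system would draw these from its model.
samples = rng.normal(loc=10.0, scale=2.0, size=20000)

# Gaussian-based 95% interval: mean +/- 1.96 standard deviations.
mean, std = samples.mean(), samples.std(ddof=1)
gauss_interval = (mean - 1.96 * std, mean + 1.96 * std)

# Non-parametric 95% interval: empirical 2.5th and 97.5th percentiles.
empirical_interval = tuple(np.percentile(samples, [2.5, 97.5]))

# Empirical coverage of the Gaussian interval on the same samples.
coverage = np.mean((samples >= gauss_interval[0]) & (samples <= gauss_interval[1]))
```

When the predictive distribution is close to Gaussian the two intervals nearly coincide; for skewed or multimodal predictions the percentile-based interval is usually the safer choice.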

Uncertainty quantification combined with generative model sampling allows for a more comprehensive treatment of uncertainty in trajectory prediction. Li et al. [33] employed uncertainty quantification methods to estimate the uncertainty range of trajectory prediction, and then sampled the model within this range to generate trajectory samples that conform to the uncertainty.

2.3. Classification of Trajectory Prediction Methods

2.3.1. Based on Method Paradigm

Predictions based on the method paradigm can be mainly classified into traditional methods and those based on deep learning.

Traditional trajectory prediction methods typically rely on physical models, kinematic models, or rule-based models [34,35]. The advantages of these methods are interpretability and computational efficiency, but they may not be flexible enough to deal with complex traffic scenarios and nonlinear behaviors.

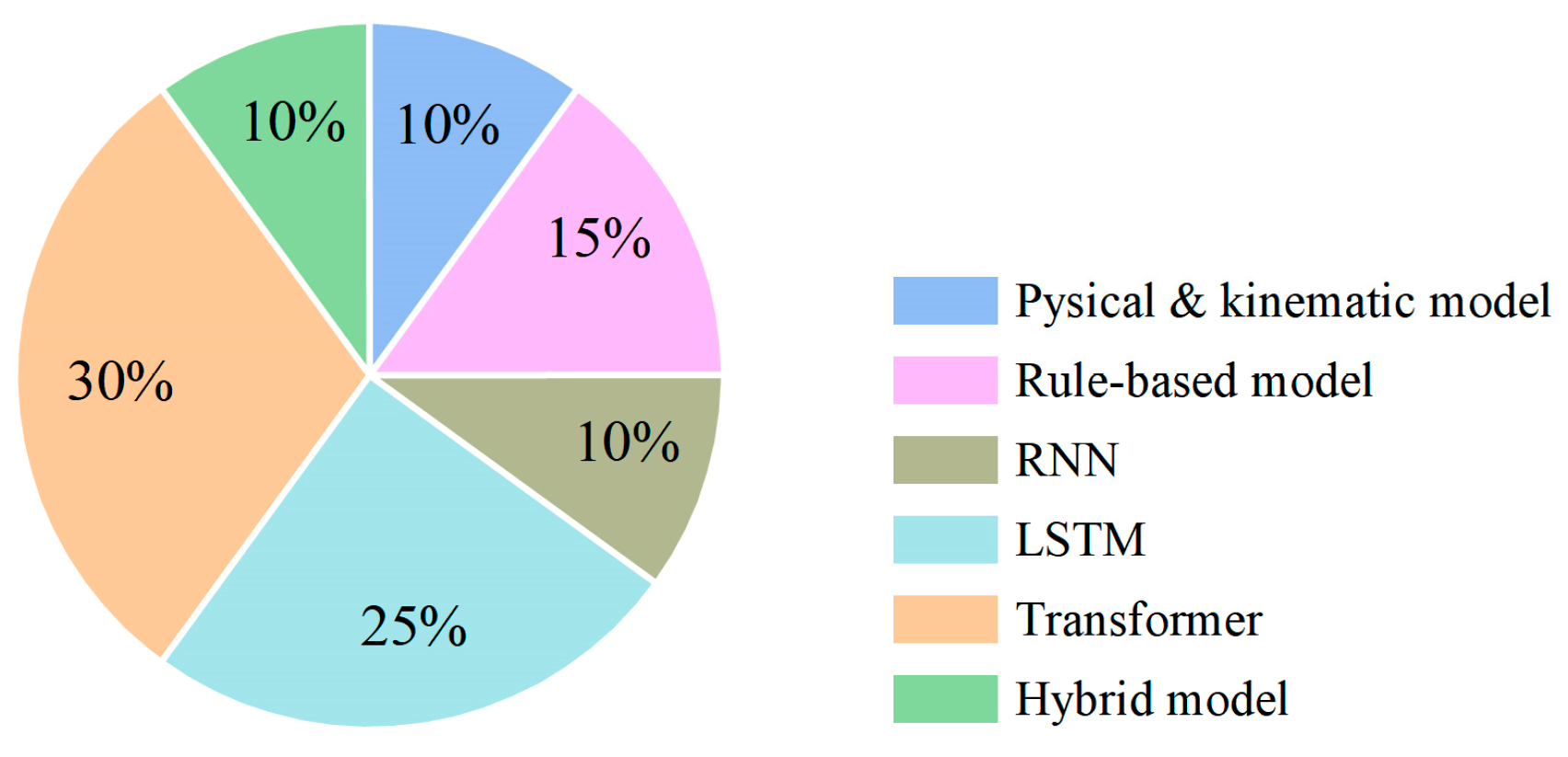

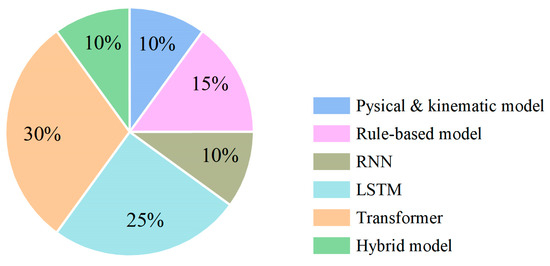

Trajectory prediction methods based on deep learning automatically extract features and make predictions by learning patterns and relationships in data. Deep learning models, such as recurrent neural networks (RNNs), long short-term memory networks (LSTMs), and Transformer architectures, have been widely used for trajectory prediction tasks. These methods perform well in complex traffic scenarios and with nonlinear behavior, but often require large amounts of data and computation [36]. Figure 1 shows the percentage of articles using traditional and deep learning methods for trajectory prediction. The proportions are derived from our analysis of over 100 relevant studies from 2014 to 2024.

Figure 1.

The proportion of prediction methods based on method paradigm.

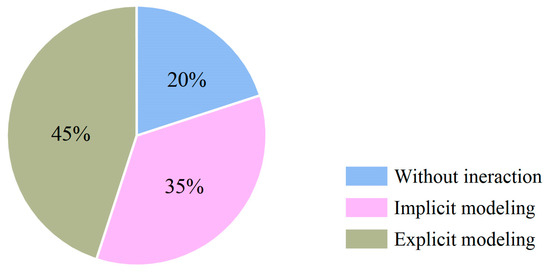

2.3.2. Based on Interaction Modeling

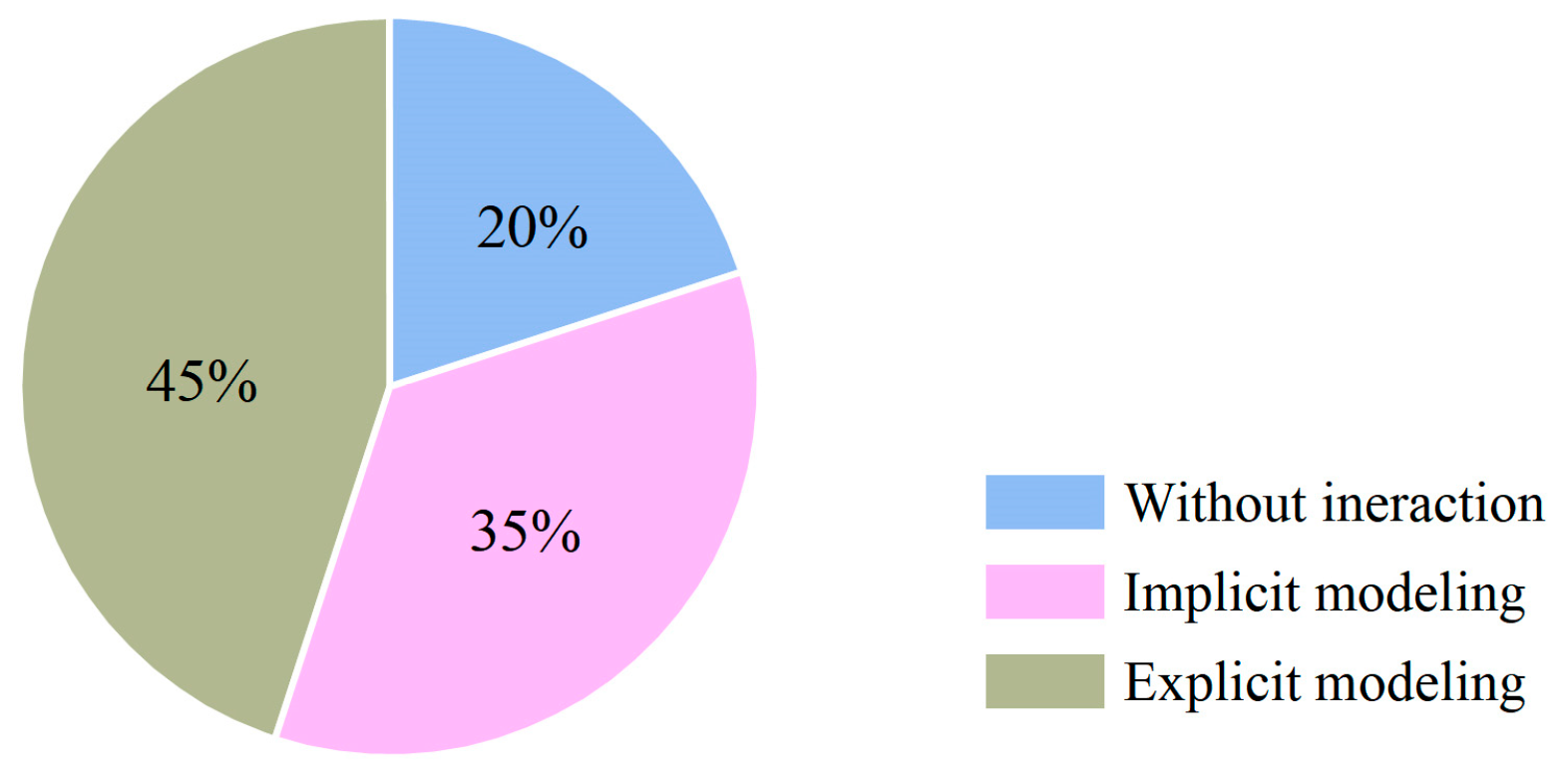

Interaction among traffic participants is one of the key factors affecting prediction accuracy in autonomous driving trajectory prediction. Interaction modeling methods can be divided into three categories according to whether and how they represent and process these interactions: no interaction modeling, implicit interaction modeling, and explicit interaction modeling. Figure 2 shows the proportion of articles that use each of these three categories for trajectory prediction.

Figure 2.

The proportion of prediction methods based on interaction modeling.

Methods that do not consider interaction treat each traffic participant as an independent individual, ignoring its interaction with other traffic participants, and are usually applied in low-density traffic scenarios. In high-density traffic scenarios, where interactions between vehicles are complex and frequent, such methods may suffer from reduced prediction accuracy [29].

Implicit interaction modeling methods consider the interaction between traffic participants by means of shared feature extraction or joint training, and are often applied to medium density traffic scenes. Implicit interaction modeling methods can improve prediction accuracy through learned implicit relationships while maintaining high computational efficiency. Xin et al. [37] used LSTM to encode the historical trajectory data of vehicles and predict their future trajectories. They proved that this method is capable of implicitly capturing the interaction relationships among vehicles and is suitable for medium-density traffic scenarios. However, in complex traffic scenarios, implicit modeling may fail to capture complex interaction relationships, resulting in insufficient prediction accuracy.

Explicit interaction modeling methods improve prediction accuracy by explicitly representing the interactions between traffic participants, and are applicable to scenarios that require high-precision predictions. Zhao et al. [38] proposed a trajectory prediction method based on a Graph Neural Network (GNN), which explicitly models the interactions between vehicles. This method performs well in complex traffic scenarios and is suitable for high-density traffic situations. However, explicit modeling usually requires more computing resources and may not be suitable for scenarios with strict real-time requirements.

3. Conventional Trajectory Prediction Methods

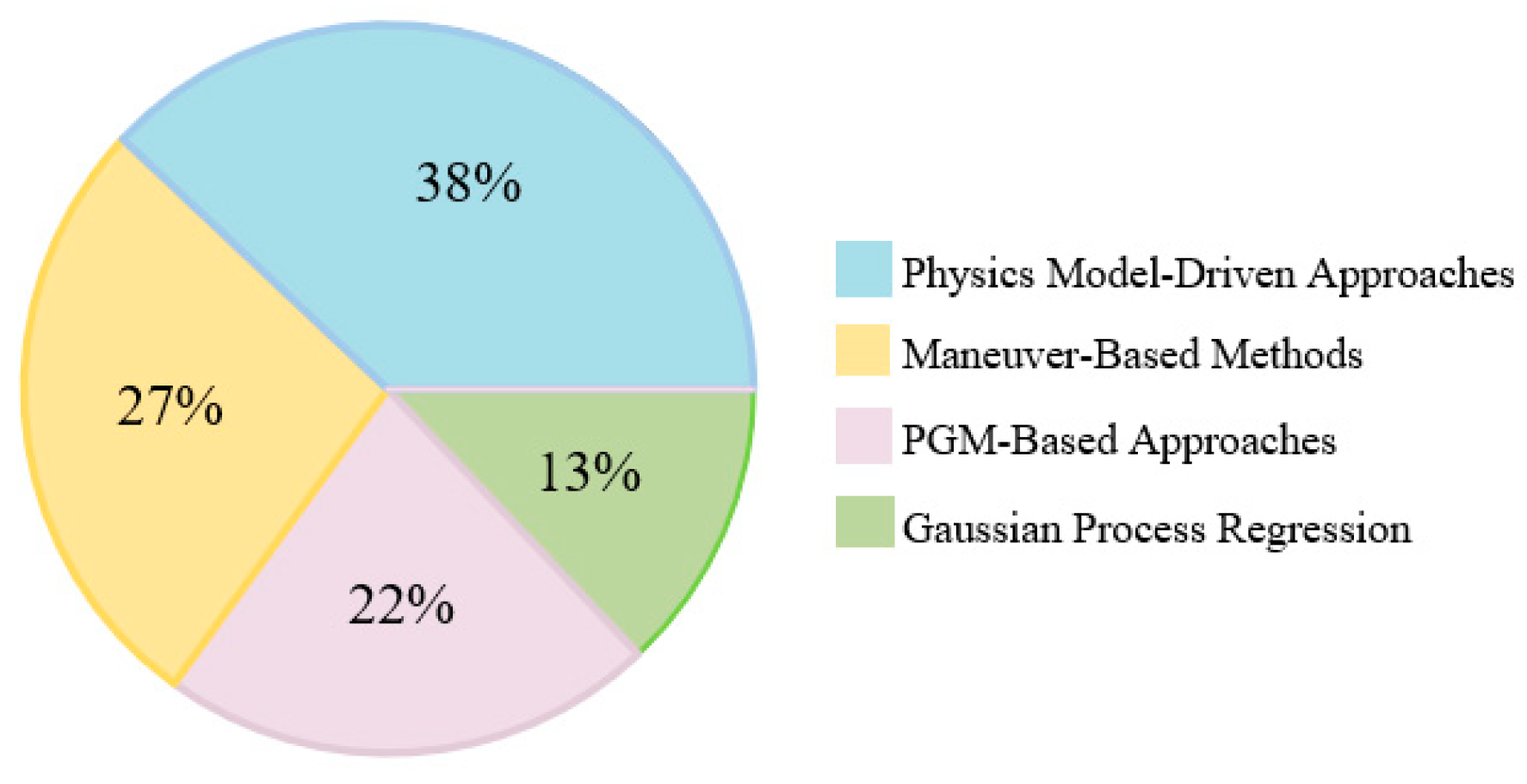

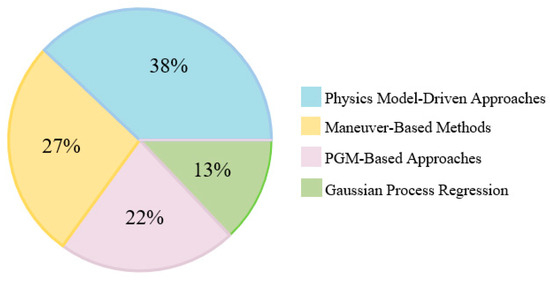

Conventional trajectory prediction methods each have their own focuses. Figure 3 illustrates several conventional methods and their application in addressing the trajectory prediction task for Autonomous Vehicles (AVs). Analysis of the papers indicates that in this review, 38% of the papers focus on Physics Model-Driven Approaches, 27% of the papers concern Maneuver-Based Methods, 22% of the papers concentrate on PGM-Based Approaches, and the remaining 13% are centered on Gaussian Process regression.

Figure 3.

Participation of research articles in trajectory prediction task using conventional approaches.

Conventional methods for trajectory prediction form the foundation of the autonomous driving trajectory prediction field. Centered around mathematical models and statistical patterns, these methods estimate the future motion of traffic participants through explicit physical principles or probabilistic reasoning. Although they have limitations in complex interactive scenarios, these methods remain relevant in specific scenarios due to their interpretability and efficiency. A summary of these models, including representative models, strengths and weaknesses, and applicable scenarios, is presented in Table 2.

Table 2.

Summary of research on cumulative errors and behavioral uncertainty issues.

3.1. Physics Model-Driven Methods

Physics model-driven methods predict trajectories by constructing mathematical equations of vehicle motion based on the principles of classical mechanics and kinematics [66,67]. The core idea is to treat vehicle motion as a process that can be described by physical laws. These methods do not rely on large amounts of data; instead, they capture the dynamic characteristics of vehicles through preset motion models and achieve high accuracy over short prediction horizons.

3.1.1. Constant Velocity/Acceleration Models

The Constant Velocity (CV) model assumes a vehicle maintains its current speed throughout the prediction horizon, calculating future positions solely based on its present location and velocity. The Constant Acceleration (CA) model further incorporates acceleration effects, making it suitable for scenarios involving vehicle acceleration or deceleration. In practical applications, these models are often combined with Kalman filtering to address uncertainties from sensor noise. For instance, Zhang et al. [39] proposed a method based on Vehicle-to-Vehicle (V2V) communication and Kalman filtering, enabling ego vehicles to predict trajectories of remote vehicles and achieve obstacle avoidance. Lefkopoulos et al. [40] introduced an Interacting Multiple Model Kalman Filter (IMM-KF), which enhances the accuracy of physics-based trajectory predictions by integrating interaction-related parameters.
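The combination of a CV model with Kalman filtering can be sketched for a single coordinate as follows. This is a minimal illustration under stated assumptions, not the method of [39] or [40]: the state is position and velocity, only position is measured, and the noise variances, initial covariance, and function name are hypothetical choices.

```python
import numpy as np

def cv_kalman_predict(measurements, dt, horizon_steps, meas_var=0.25, accel_var=1.0):
    """Filter 1D position measurements with a constant-velocity Kalman
    filter, then extrapolate horizon_steps into the future.

    Returns a list of (predicted position, position variance) tuples.
    """
    F = np.array([[1.0, dt], [0.0, 1.0]])            # CV state transition
    H = np.array([[1.0, 0.0]])                       # only position is observed
    Q = accel_var * np.array([[dt**4 / 4, dt**3 / 2],
                              [dt**3 / 2, dt**2]])   # white-acceleration noise
    R = np.array([[meas_var]])

    x = np.array([measurements[0], 0.0])             # initial velocity unknown
    P = np.diag([meas_var, 100.0])

    for z in measurements[1:]:
        x, P = F @ x, F @ P @ F.T + Q                # time update
        innovation = z - H @ x
        S = H @ P @ H.T + R
        K = P @ H.T @ np.linalg.inv(S)               # Kalman gain
        x = x + K @ innovation                       # measurement update
        P = (np.eye(2) - K @ H) @ P

    predictions = []
    for _ in range(horizon_steps):                   # pure CV extrapolation
        x, P = F @ x, F @ P @ F.T + Q
        predictions.append((float(x[0]), float(P[0, 0])))
    return predictions

# Hypothetical track: a vehicle moving at 2 m/s, sampled every 0.1 s.
track = [2.0 * 0.1 * k for k in range(30)]
preds = cv_kalman_predict(track, dt=0.1, horizon_steps=10)
```

The position variance grows with every extrapolation step, which reflects the accumulation of model error over the prediction horizon. A CA model would extend the state with an acceleration component and use the corresponding transition matrix.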

3.1.2. Bicycle Model

The bicycle model simplifies a vehicle into a “bicycle” structure, characterizing motion through the front-wheel steering angle and longitudinal velocity. It comprises two variants:

- Kinematic model: ignores dynamic factors such as tire forces and considers only geometric constraints; suitable for low-speed scenarios.

- Dynamic model: incorporates parameters such as tire forces and vehicle mass, better capturing motion characteristics during high-speed or limit-handling conditions.

In trajectory prediction, bicycle models are often integrated with filtering techniques to address motion disturbances. As noted in [46], some studies combine this model with a Monte Carlo approach, randomly sampling input variables to simulate state distributions and generate potential future trajectories.
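A minimal sketch of the kinematic variant combined with Monte Carlo sampling is given below. It assumes a rear-axle reference point, forward Euler integration, a steering angle that is sampled once per rollout from a Gaussian, and constant speed; all names and parameter values are illustrative and are not taken from [46].

```python
import math
import random

def kinematic_bicycle_step(state, speed, steer, wheelbase, dt):
    """One Euler step of the kinematic bicycle model (rear-axle reference).

    state is (x, y, yaw); steer is the front-wheel steering angle in rad.
    """
    x, y, yaw = state
    x += speed * math.cos(yaw) * dt
    y += speed * math.sin(yaw) * dt
    yaw += speed / wheelbase * math.tan(steer) * dt
    return (x, y, yaw)

def monte_carlo_rollouts(state, speed, steer_mean, steer_std,
                         wheelbase, dt, steps, n_samples, seed=0):
    """Sample steering inputs and roll each one out to an end state."""
    rng = random.Random(seed)
    end_states = []
    for _ in range(n_samples):
        steer = rng.gauss(steer_mean, steer_std)  # sampled input variable
        s = state
        for _ in range(steps):
            s = kinematic_bicycle_step(s, speed, steer, wheelbase, dt)
        end_states.append(s)
    return end_states

# Hypothetical scenario: 10 m/s, 1 s horizon, uncertain steering input.
end_states = monte_carlo_rollouts(state=(0.0, 0.0, 0.0), speed=10.0,
                                  steer_mean=0.0, steer_std=0.05,
                                  wheelbase=2.7, dt=0.1, steps=10,
                                  n_samples=200)
```

The spread of the sampled end states gives an empirical distribution over future positions. A dynamic model would replace the step function with one that integrates tire forces and yaw dynamics.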

3.1.3. Advantages and Limitations

Physics-based model-driven approaches offer distinct advantages: their principles are simple, based on explicit kinematic or dynamic laws, requiring no complex training process. With high computational efficiency, they fulfill real-time requirements. Their strong physical interpretability ensures predictions align with human intuition about vehicle motion, facilitating verification and calibration. For instance, physics models demonstrate stable performance in short-term predictions (typically ≤ 1 s), providing reliable trajectory references for safety assessment [41].

However, physics-based model-driven approaches exhibit significant limitations. Their core assumption is that vehicle movement follows fixed physical laws (as emphasized in [42]), which prevents them from capturing interactions between vehicles and changes in drivers’ intentions. Furthermore, as the prediction horizon extends, model errors gradually accumulate, resulting in a significant decline in the accuracy of long-term predictions.

3.2. Maneuver-Based Methods

Maneuver-based approaches abstract vehicle behavior into discrete “maneuver actions” such as lane changes, car-following, turns, etc., generating future trajectories by recognizing current maneuver types.

3.2.1. Maneuver Recognition and Classification

Maneuver recognition serves as the prerequisite for maneuver-based methods, determining a vehicle’s current behavior by analyzing historical trajectory features. Common maneuver types include longitudinal maneuvers and lateral maneuvers, with combined maneuvers such as intersection turns and U-turns observed in complex scenarios. Houenou et al. [49] identified lane-change maneuvers and integrated them with the Constant Yaw Rate and Acceleration (CYRA) model for trajectory prediction. Tran and Firl [50] leveraged Monte Carlo Simulation (MCS) to forecast multimodal trajectories and employed Gaussian Process Regression to learn behavioral patterns at intersections.

3.2.2. Maneuver Library-Based Trajectory Generation

Trajectory generation based on maneuver libraries involves predefining typical trajectory models for various maneuvers to form a “maneuver library.” Upon identifying a vehicle’s maneuver type, the corresponding model is retrieved from this library to generate future trajectories by combining it with the current kinematic state. Wissing et al. [51] proposed an interaction-aware trajectory prediction method that simulates interactive behaviors using MCS, integrating the Intelligent Driver Model (IDM) and lane-change models to generate distributions of potential future positions for target vehicles. Similarly, Okamoto et al. [52] utilized identified maneuvers in their maneuver-based framework to generate future trajectories through Monte Carlo methods.
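As an illustration of one building block mentioned above, the sketch below implements the standard acceleration equation of the Intelligent Driver Model and rolls a follower out behind a leader travelling at constant speed. The parameter values, function names, and integration scheme are assumptions chosen for the example; they do not reproduce the setup of [51], which additionally samples behaviors and lane changes.

```python
import math

def idm_acceleration(v, v_lead, gap, v0=30.0, T=1.5, a_max=1.0, b=2.0,
                     s0=2.0, delta=4.0):
    """Intelligent Driver Model acceleration of a following vehicle.

    v, v_lead: follower and leader speed (m/s); gap: bumper distance (m).
    v0: desired speed, T: time headway, a_max: maximum acceleration,
    b: comfortable deceleration, s0: minimum gap (illustrative values).
    """
    dv = v - v_lead                                   # approach rate
    s_star = s0 + max(0.0, v * T + v * dv / (2.0 * math.sqrt(a_max * b)))
    return a_max * (1.0 - (v / v0) ** delta - (s_star / gap) ** 2)

def simulate_follower(v, gap, v_lead, dt=0.1, steps=1200):
    """Euler rollout of a follower behind a constant-speed leader."""
    min_gap = gap
    for _ in range(steps):
        a = idm_acceleration(v, v_lead, gap)
        gap += (v_lead - v) * dt
        v = max(0.0, v + a * dt)                      # no reversing
        min_gap = min(min_gap, gap)
    return v, gap, min_gap

# Hypothetical scenario: follower at 25 m/s closes in on a 20 m/s leader.
v_final, gap_final, min_gap = simulate_follower(v=25.0, gap=30.0, v_lead=20.0)
```

In a Monte Carlo setting, parameters such as the desired speed or time headway would be sampled per rollout to obtain a distribution over future positions.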

3.2.3. Advantages and Limitations

The primary strength of maneuver-based methods lies in their explicit behavioral intent representation, where prediction results directly correlate with a vehicle’s driving objectives. This enables Autonomous Driving Systems (ADS) to intuitively comprehend surrounding vehicles’ behavioral purposes, providing actionable input for decision-making and planning.

While effective in intent interpretation, maneuver-based methods face three core limitations, as discussed in [53]: (1) The maneuver library cannot comprehensively cover all possible actions, especially in edge scenarios such as emergency avoidance, which can lead to prediction failures. (2) Inherent classification ambiguity exists, as real-world driving often involves transitional states that overlap multiple maneuver categories. (3) Their interaction modeling capability is relatively weak, and they neglect dynamic interaction relationships between vehicles.

3.3. PGM-Based Methods

Probabilistic Graphical Model (PGM)-based methods leverage graphical structures to represent conditional dependencies among variables, employing probabilistic inference to handle trajectory prediction uncertainties, thereby proving effective in complex operational environments with multi-factorial interactions.

3.3.1. Hidden Markov Model

The Hidden Markov Model (HMM) conceptualizes trajectory generation as a stochastic process governed by latent states (satisfying the Markov property) and observable states, characterizing motion patterns through initial state probabilities, transition probabilities, and emission probabilities [54]. For instance, Qiao et al. [56] employ an HMM with adaptive parameter selection to simulate real-time scenarios, enhancing dynamic adaptability in trajectory prediction. Similarly, Deng et al. [57] integrate an HMM with fuzzy logic to forecast driver maneuvers, ensuring prediction reliability through multiple initial-value iterations.
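The three sets of probabilities named above are enough to run the forward (filtering) recursion, which yields the posterior over the latent maneuver given the observations so far. The sketch below is a toy example: the two states, the binary observation, and every probability value are assumptions made for illustration and are not taken from [56] or [57].

```python
def hmm_filter(initial, transition, emission, observations):
    """Normalized forward algorithm for a discrete HMM.

    initial[s]: P(state_0 = s)
    transition[p][s]: P(state_t = s | state_{t-1} = p)
    emission[s][o]: P(observation = o | state = s)
    Returns P(state_T = s | all observations) as a list.
    """
    n = len(initial)
    belief = [initial[s] * emission[s][observations[0]] for s in range(n)]
    total = sum(belief)
    belief = [b / total for b in belief]
    for obs in observations[1:]:
        # Propagate the belief through the transition model.
        predicted = [sum(belief[p] * transition[p][s] for p in range(n))
                     for s in range(n)]
        # Weight by the likelihood of the new observation and renormalize.
        belief = [predicted[s] * emission[s][obs] for s in range(n)]
        total = sum(belief)
        belief = [b / total for b in belief]
    return belief

# Hypothetical model. States: 0 = lane keeping, 1 = lane change.
# Observations: 0 = small lateral speed, 1 = large lateral speed.
initial = [0.9, 0.1]
transition = [[0.9, 0.1], [0.2, 0.8]]
emission = [[0.8, 0.2], [0.3, 0.7]]

belief = hmm_filter(initial, transition, emission, observations=[1, 1, 1])
```

After three consecutive observations of large lateral speed, most of the probability mass moves to the lane-change state even though the prior strongly favored lane keeping.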

3.3.2. Dynamic Bayesian Network

The Dynamic Bayesian Network (DBN) generalizes the HMM by factorizing the state into multiple interdependent variables whose dependencies evolve over time, which makes it well suited to multi-vehicle interaction scenarios. This framework represents variables such as vehicle states, road structures, and interaction relationships as nodes connected by directed edges that encode dependencies, and then predicts trajectories through probabilistic inference. Gindele et al. [59] use a DBN to model multi-vehicle maneuvers from the states of all vehicles, their interactions, and road structures. He et al. [60] employ a DBN to recognize car-following and lane-changing behaviors while predicting trajectories.

3.3.3. Advantages and Limitations

The core strength of probabilistic graphical model-based approaches lies in their inherent ability to naturally accommodate uncertainties in trajectory prediction, representing potential future trajectories through probability distributions that support risk assessment for autonomous driving systems. For instance, both HMM and DBN output trajectory probability distributions—rather than single deterministic results—thereby enhancing robustness against sensor noise and environmental dynamics [58,68]. Furthermore, these models integrate multi-source information and outperform physics-based models in complex operational scenarios.

Probabilistic graphical model-based approaches exhibit notable constraints: (1) Their structure is complex, and the dependencies between variables must be specified manually, which demands profound domain expertise. (2) Exact inference requires traversing the entire state space, which often forces the use of approximate algorithms and thereby reduces prediction accuracy. (3) Scalability issues arise in high-dimensional continuous state spaces: as the number of variables increases, computational efficiency and stability decline sharply.

3.4. Gaussian Process Regression

Gaussian Process Regression (GPR), a non-parametric Bayesian approach, treats trajectories as samples drawn from a Gaussian process, leveraging kernel functions to characterize similarities between data points for future trajectory prediction. Its core mechanism estimates the mean and covariance functions of the Gaussian process from historical trajectories, ultimately outputting future paths in the form of probabilistic distributions.

3.4.1. Principles and Applications in Trajectory Prediction

Gaussian Process Regression fundamentally operates by assuming all trajectory samples follow a multivariate Gaussian distribution, predicting unobserved future trajectories through estimation of distribution parameters from observed data. In trajectory prediction applications, it processes historical vehicle position sequences as input and outputs probabilistic distributions of future locations. For instance, Laugier et al. [64] first assessed possible vehicle behaviors via HMM before deploying GPR to predict corresponding trajectories. Guo et al. [65] integrated GPR with Dirichlet processes (DP) to construct motion models that extract latent movement patterns.
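The mechanism can be sketched for a single coordinate with a zero-mean Gaussian process and a squared-exponential (RBF) kernel. The kernel choice, hyperparameter values, function names, and synthetic data below are assumptions for illustration; the cited works [64,65] use their own models and features.

```python
import numpy as np

def rbf_kernel(a, b, length_scale=1.0, signal_var=1.0):
    """Squared-exponential kernel between two 1D arrays of time stamps."""
    diff = a[:, None] - b[None, :]
    return signal_var * np.exp(-0.5 * (diff / length_scale) ** 2)

def gpr_predict(t_train, y_train, t_test, noise_var=1e-4, **kernel_args):
    """Posterior mean and variance of a zero-mean GP at t_test."""
    K = rbf_kernel(t_train, t_train, **kernel_args) + noise_var * np.eye(len(t_train))
    K_s = rbf_kernel(t_train, t_test, **kernel_args)
    K_ss = rbf_kernel(t_test, t_test, **kernel_args)
    L = np.linalg.cholesky(K)                         # K = L L^T
    alpha = np.linalg.solve(L.T, np.linalg.solve(L, y_train))
    mean = K_s.T @ alpha
    v = np.linalg.solve(L, K_s)
    variance = np.diag(K_ss - v.T @ v)
    return mean, variance

# Hypothetical lateral offsets (m) observed over the last 2 s.
t_train = np.linspace(0.0, 2.0, 11)
y_train = np.sin(t_train)

# Query one observed time and two future times.
t_test = np.array([1.0, 2.5, 4.0])
mean, variance = gpr_predict(t_train, y_train, t_test)
```

The predictive variance is small inside the observed window and grows with the distance from the last observation. The Cholesky factorization of the training covariance scales cubically with the number of training points.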

3.4.2. Advantages and Limitations

Gaussian Process Regression offers the distinctive advantage of naturally outputting uncertainty estimates for trajectories, directly yielding probabilistic distributions of future positions without additional processing. Furthermore, as a non-parametric model, GPR requires no predefined functional forms for trajectories, flexibly fitting complex nonlinear motion patterns while outperforming parametric counterparts in small-sample scenarios.

The main limitation of Gaussian process regression lies in its high computational complexity, which results in a significant decrease in efficiency when dealing with large datasets and makes it difficult to meet real-time requirements. Moreover, Gaussian process regression performs poorly in handling multi-vehicle interaction scenarios because predicting the joint trajectory will significantly increase the number of variables, thereby causing excessively high computational and storage costs for the covariance matrix.

4. Deep Learning-Based Trajectory Prediction Methods

Compared to conventional approaches, deep learning models move away from a heavy reliance on explicit rules and prior models [69,70,71]. They leverage their deep network structures and powerful nonlinear fitting capabilities to automatically learn spatio-temporal dependencies and interaction patterns directly from vast amounts of real-world data [72]. This fundamental shift has led to breakthroughs in prediction accuracy and robustness, significantly enhancing the models’ ability to handle extreme scenarios.

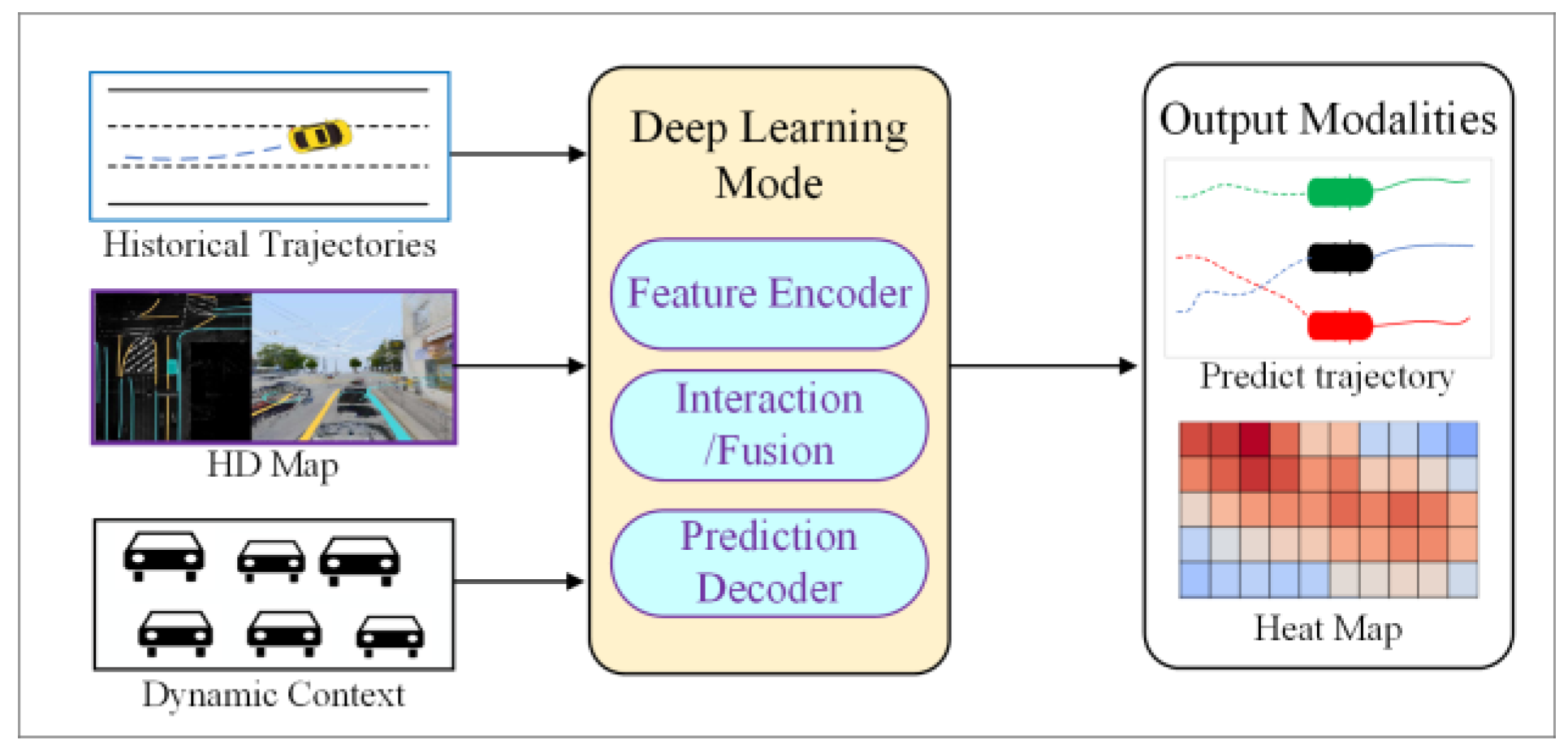

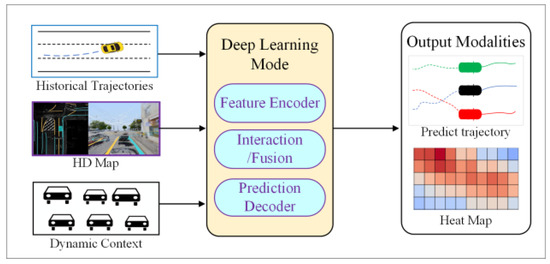

This section presents a systematic review of deep learning-based trajectory prediction methods, following the general framework illustrated in Figure 4. The review begins with the foundational technique of feature encoding. It then follows the evolution of network architectures, examining how RNNs process temporal data, Convolutional Neural Networks (CNNs) extract spatial features, GNNs model structured relationships, and Transformers capture global dependencies. Finally, to address the inherent uncertainty of forecasting, the section focuses on generative models, including GANs, CVAEs, and diffusion models, and analyzes their role in multimodal prediction.

Figure 4.

Framework of deep learning-based trajectory prediction methods. The input is multi-source information, and the output is a set of multimodal trajectories, accompanied by an attention distribution map.

4.1. Feature Encoding

The primary task in deep learning trajectory prediction is to convert raw, heterogeneous inputs into dense features that a neural network can process. These inputs primarily include the historical trajectory of the target agent itself, the surrounding static high-definition (HD) map, and the dynamics of other traffic participants in the environment. The quality of this encoding is foundational and sets the performance limit for the entire model.

4.1.1. Historical Trajectory Encoding

The historical trajectory, typically represented as a sequence of the agent’s states over past timesteps, serves as the foundation for understanding its motion patterns and intent. Each state usually contains the agent’s position and may also include information such as velocity, acceleration, and heading angle θ. The first step for a deep learning model is to use an Encoder to compress this variable-length, temporally ordered sequence into a fixed-dimensional feature vector h. This vector h should encapsulate all information from the trajectory valuable for future prediction, such as current motion trends and critical past behaviors. The following are several mainstream trajectory encoding techniques.

One-Dimensional Convolutional Neural Networks (1D CNN): This method treats a trajectory as a one-dimensional signal, processing it by sliding a convolutional kernel along the time steps to identify local motion patterns [73,74,75]. Gilles et al. [76] use a 1D CNN layer as a parallel local pattern detector to extract short-term motion features, such as acceleration or turns, providing dynamic information for subsequent modeling. A more advanced version is the Temporal Convolutional Network (TCN), which captures longer-range temporal dependencies with high parallel efficiency. Azadani and Boukerche [77] use a TCN encoder on historical trajectories to extract spatio-temporal features and create a compact latent representation. Wang et al. [78] leverage a TCN to replace traditional RNNs for temporal modeling. By stacking layers with causal convolutions and dilated convolutions, their model efficiently captures long-term temporal dependencies.
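To make the causal and dilated convolutions mentioned above concrete, the following is a minimal sketch of a single-channel causal dilated 1D convolution and of the receptive field obtained by stacking such layers. The function names, the difference kernel, and the toy speed signal are illustrative assumptions and do not reproduce any cited architecture.

```python
import numpy as np

def causal_dilated_conv1d(x, kernel, dilation=1):
    """y[t] = sum_k kernel[k] * x[t - k * dilation], with zeros before t = 0.

    Only current and past samples are used, so the output at time t never
    depends on future inputs (causality).
    """
    y = np.zeros(len(x))
    for t in range(len(x)):
        for k, w in enumerate(kernel):
            idx = t - k * dilation
            if idx >= 0:
                y[t] += w * x[idx]
    return y

def receptive_field(kernel_size, dilations):
    """Number of past time steps seen by a stack with one conv per layer."""
    return 1 + sum((kernel_size - 1) * d for d in dilations)

# Hypothetical speed profile; the kernel computes x[t] - x[t-1], i.e. a
# local pattern detector that responds to acceleration.
speed = np.array([1.0, 1.0, 1.0, 2.0, 3.0, 3.0, 3.0, 3.0])
diff_kernel = [1.0, -1.0]
accel_like = causal_dilated_conv1d(speed, diff_kernel, dilation=1)

# Three layers with kernel size 2 and dilations 1, 2, 4 cover 8 time steps.
span = receptive_field(2, [1, 2, 4])
```

Doubling the dilation at each layer makes the receptive field grow exponentially with depth, which is why a TCN can reach long histories with few layers while still processing all time steps in parallel.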

The most natural and classic approach for processing sequential data is the RNN and its advanced variants—LSTM and Gated Recurrent Unit (GRU)—which are essential tools for handling temporal dynamics [79,80]. Within the basic Sequence-to-Sequence (Seq2Seq) framework, Zyner et al. [81] utilized an RNN to encode the vehicle’s historical data, compressing the historical trajectory into a context vector rich in dynamic information. As research progressed, the RNN encoder became the backbone of more complex generative models, such as Generative Adversarial Networks (GAN) [82] and Conditional Variational Autoencoders (CVAE) [83]. Its task is to map the historical trajectory into a latent space to achieve multi-modal prediction. Even in cutting-edge GNN methods, the RNN remains a critical component. It is commonly utilized to pre-encode the temporal dynamics of each node (agent), furnishing the graph convolution operations with node features that are rich in historical context.

4.1.2. Map Information Encoding

Unlike models relying solely on trajectory history, advanced autonomous systems must deeply understand their static surroundings. High-Definition (HD) maps offer powerful prior knowledge of road topology, traffic rules, and geometric constraints, which is critical for generating safe, compliant, and plausible trajectories. To use this information, deep learning models employ specialized encoders to transform static map features into numerical representations. This geographic information is generally categorized and encoded as either rasterized or vectorized maps.

CNN-based Rasterized Map Encoding. The most intuitive approach involves rasterizing vectorized map data into a multi-channel Bird’s-Eye View (BEV) image centered on the ego-vehicle. As in [84], each channel of this image represents a specific semantic element, such as lane lines or drivable areas. The BEV image is then processed by a standard 2D CNN to extract rich spatial context features.
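A minimal sketch of the rasterization step is given below, assuming each semantic element is supplied as a set of 2D points in metres. The function name `rasterize_bev`, the 20 m extent, and the 0.5 m resolution are illustrative assumptions rather than settings from any cited work.

```python
import numpy as np

def rasterize_bev(points_by_channel, ego_xy, extent=20.0, resolution=0.5):
    """Rasterize point sets into a (C, H, W) ego-centred BEV image.

    points_by_channel: one (N_i, 2) array per semantic channel, in metres.
    A cell is set to 1 if at least one point of that channel falls into it.
    """
    size = int(round(2 * extent / resolution))
    image = np.zeros((len(points_by_channel), size, size), dtype=np.float32)
    ego = np.asarray(ego_xy, dtype=float)
    for c, pts in enumerate(points_by_channel):
        rel = np.asarray(pts, dtype=float).reshape(-1, 2) - ego
        cols = np.floor((rel[:, 0] + extent) / resolution).astype(int)
        rows = np.floor((extent - rel[:, 1]) / resolution).astype(int)
        inside = (cols >= 0) & (cols < size) & (rows >= 0) & (rows < size)
        image[c, rows[inside], cols[inside]] = 1.0
    return image

# Channel 0: a hypothetical straight lane centerline; channel 1: agents.
lane = np.stack([np.zeros(41), np.linspace(-10.0, 10.0, 41)], axis=1)
agents = np.array([[3.0, 4.0], [100.0, 0.0]])  # second agent is out of range
bev = rasterize_bev([lane, agents], ego_xy=(0.0, 0.0))
```

Any two points that fall into the same 0.5 m cell become indistinguishable in the image, which is the quantization effect that vectorized encodings try to avoid.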

PointNet-based Vectorized Map Encoding. To overcome the information loss associated with rasterization, researchers operate directly on vectorized map data by treating elements like lane centerlines as an unordered point cloud. Bojarski et al. [85] utilize a shared MLP and a symmetric function to process the unordered set of points from forward-looking road imagery to aid decision-making; the symmetric function ensures invariance to the order of the points. Despite their strength in capturing geometry, these models were designed for unordered sets and thus cannot capture the connectivity between points.
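The combination of a shared MLP and a symmetric function can be sketched in a few lines. The example below assumes a two-layer MLP with random, untrained weights and max-pooling as the symmetric function; the layer sizes and the toy centerline are illustrative assumptions.

```python
import numpy as np

rng = np.random.default_rng(0)
# Random, untrained weights: the sketch only demonstrates the structure.
W1, b1 = rng.normal(size=(2, 16)), np.zeros(16)
W2, b2 = rng.normal(size=(16, 32)), np.zeros(32)

def pointnet_encode(points):
    """Encode an (N, 2) point set into a single 32-dimensional feature."""
    h = np.maximum(points @ W1 + b1, 0.0)  # shared MLP: same weights per point
    h = np.maximum(h @ W2 + b2, 0.0)
    return h.max(axis=0)                   # symmetric function: max-pooling

centerline = np.array([[0.0, 0.0], [1.0, 0.1], [2.0, 0.4], [3.0, 0.9]])
shuffled = centerline[[2, 0, 3, 1]]        # same points, different order

feat_a = pointnet_encode(centerline)
feat_b = pointnet_encode(shuffled)
```

Both calls yield the same feature vector, which shows the permutation invariance and also the limitation noted above: the encoder cannot tell which point follows which along the lane.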

Graph Neural Networks offer a state-of-the-art framework for map encoding that models both geometric and topological structures. This method explicitly constructs a graph from the scene, where nodes represent meaningful map units and edges encode their topological relationships. The GNN then iteratively propagates information between nodes via a message-passing mechanism, allowing each node’s feature representation to incorporate data from its surroundings and the broader network structure for a deep understanding of the environment. As exemplified by [86], this approach has proven to be highly effective at capturing the relationships between agents and the map, as well as the indirect relationships between agents established through the map.

4.1.3. Context Information Fusion

For effective trajectory prediction, it is crucial to analyze both an agent’s historical trajectory (dynamic information) and the scene map (static information). Efficiently fusing these modalities is a critical step for high-performance models [87,88]. We will introduce the mainstream fusion strategies, categorized as basic static methods and the more advanced attention-based dynamic methods that represent the current standard.

Concatenation and MLP. Basic static fusion methods were applied in early models due to their simplicity and computational efficiency. In research by Chandra et al. [89], features from trajectories and maps were extracted by separate encoders, concatenated into a single vector, and then processed by an MLP to learn nonlinear associations. The main limitation of this static approach is its inability to dynamically prioritize information based on context. Furthermore, flattening structured data into a single vector can lead to the loss of important geometric and topological relationships.

Dynamic Fusion Methods. To overcome these limitations, state-of-the-art models now widely employ dynamic fusion mechanisms based on Cross-Attention [8,90,91]. This is a specific form of attention mechanism where the ‘Query’ vectors originate from one modality (e.g., the dynamic agent’s state), while the ‘Key’ and ‘Value’ vectors are derived from another modality (e.g., the static map features or other agents’ states). This allows the model to dynamically and selectively retrieve relevant information from the environment context for each agent, much like how a person focuses on specific landmarks or vehicles when making a driving decision. Zhou et al. [92] have demonstrated that this approach enables selective and explicit interaction between agents and the map, as well as between agents themselves.
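The Query/Key/Value roles described above can be written out as a minimal single-head cross-attention sketch using scaled dot-product attention. The projection matrices are random and untrained, and the feature dimension and the numbers of agents and map elements are illustrative assumptions.

```python
import numpy as np

def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)  # subtract max for stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def cross_attention(agent_feats, context_feats, Wq, Wk, Wv):
    """Queries come from agents; keys and values come from the context."""
    Q = agent_feats @ Wq
    K = context_feats @ Wk
    V = context_feats @ Wv
    scores = Q @ K.T / np.sqrt(Q.shape[-1])  # (num_agents, num_context)
    weights = softmax(scores, axis=-1)       # each agent's attention over context
    return weights @ V, weights

rng = np.random.default_rng(0)
d_model = 8
agents = rng.normal(size=(3, d_model))       # hypothetical agent state features
map_elems = rng.normal(size=(5, d_model))    # hypothetical map element features
Wq, Wk, Wv = (rng.normal(size=(d_model, d_model)) for _ in range(3))

fused, attn = cross_attention(agents, map_elems, Wq, Wk, Wv)
```

Each row of `attn` is a probability distribution over the five map elements, so every agent retrieves its own context-dependent mixture instead of the fixed combination produced by concatenation.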

4.2. RNN-Based Methods

As the earliest deep learning architectures successfully applied to sequence modeling, RNNs and their variants, especially LSTM and GRU, naturally became the primary tools for solving the trajectory prediction problem. The chain-like structure and internal gating mechanisms of RNNs allow them to learn and remember both long-term and short-term dependencies in time-series data [93,94]. This aligns well with the inherent temporal evolution of trajectory data.

To systematically organize these research findings and clearly present the technological evolution, a comprehensive comparison is presented in Table 3. We can observe that RNN-based prediction methods have evolved from basic models that handle a single agent and output a deterministic trajectory to advanced frameworks capable of handling multi-modal uncertainty and ultimately modeling complex social interactions.

Table 3.

Summary of RNN-based trajectory prediction methods.

4.2.1. The Basic Seq2Seq Framework

The Seq2Seq model is the foundational application paradigm for RNNs in trajectory prediction. This framework utilizes an Encoder and a Decoder, typically built from LSTMs or GRUs. The Encoder’s primary function is to compress an entire historical state sequence into a single, information-dense context vector. This fixed-dimensional vector serves as a holistic summary of the agent’s past motion, acting as the informational bridge connecting the observed past to the predicted future. The Seq2Seq framework’s effectiveness was initially demonstrated by research from Zyner et al. [95] on single-agent vehicle prediction. The inherent drawback of this basic framework was its determinism: it could not generate multiple potential future trajectories. Later efforts aimed to overcome this by extending the framework to support multi-modality; one example is the work by Sriram et al. [100], which also proposed forecasting for all agents in the scene simultaneously instead of one by one.

4.2.2. Social Pooling

While the basic and probabilistic Seq2Seq frameworks can handle the temporal dynamics and uncertainty of a single agent, their common and more critical limitation is their lack of interaction awareness. They treat each traffic participant as an independent entity, completely ignoring the fact that in dense traffic environments, the mutual influence between individuals is a key factor in determining future behavior.

To address this challenge, Alahi et al. [96] pioneered Social-LSTM, which employed the “Social Pooling” mechanism. This mechanism operates by first defining a spatial grid around each agent. The hidden states of all agents whose current positions fall into the same grid cell are aggregated (e.g., pooled together using a max or average operation). This creates a social tensor that encodes the collective state of an agent’s local neighborhood. This tensor is then concatenated with the agent’s own hidden state and used for the next prediction step. This design integrated social interactions into an RNN framework for the first time, enabling each agent to “perceive” its surroundings when predicting its future path and resulting in more realistic trajectories with fewer collisions. The core concept introduced by Social-LSTM laid the groundwork for the field of interaction-aware prediction, with subsequent works by Xue et al. [97], Xu et al. [98], and others replacing simple pooling with more sophisticated attention mechanisms or GNNs.
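The pooling steps described above can be sketched as follows. This is a simplified illustration that uses average pooling, one of the options mentioned in the text; the grid size, cell width, and toy hidden states are assumptions, and the code is not the original Social-LSTM implementation.

```python
import numpy as np

def social_pooling(positions, hidden, ego_idx, grid_size=4, cell=2.0):
    """Average neighbours' hidden states into a grid centred on one agent.

    positions: (N, 2) current positions; hidden: (N, H) hidden states.
    Returns a (grid_size, grid_size, H) social tensor.
    """
    tensor = np.zeros((grid_size, grid_size, hidden.shape[1]))
    counts = np.zeros((grid_size, grid_size))
    half = grid_size * cell / 2.0
    for j, (pos, h) in enumerate(zip(positions, hidden)):
        if j == ego_idx:
            continue
        dx, dy = pos - positions[ego_idx]
        col = int(np.floor((dx + half) / cell))
        row = int(np.floor((dy + half) / cell))
        if 0 <= row < grid_size and 0 <= col < grid_size:
            tensor[row, col] += h
            counts[row, col] += 1
    occupied = counts > 0
    tensor[occupied] /= counts[occupied][:, None]  # sum -> mean per cell
    return tensor

# Hypothetical scene: agent 0 is the ego agent, agent 4 is too far away.
positions = np.array([[0.0, 0.0], [1.0, 1.0], [1.5, 0.5], [-3.0, 0.0], [10.0, 10.0]])
hidden = np.arange(5.0)[:, None] * np.ones((1, 3))  # agent i has state [i, i, i]

social = social_pooling(positions, hidden, ego_idx=0)
# Concatenate the flattened social tensor with the ego agent's own state.
rnn_input = np.concatenate([hidden[0], social.ravel()])
```

Agents 1 and 2 fall into the same cell and are averaged, agent 3 occupies its own cell, and agent 4 lies outside the grid and is ignored.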

4.2.3. Advantages and Limitations

RNNs and their variants offer powerful temporal modeling capabilities, making them a flexible and scalable backbone for trajectory prediction. Their encoder–decoder architecture allows for the seamless integration of components for multi-modality and social interactions. Despite these strengths, RNNs have inherent limitations. Even with LSTM and GRU mitigating the classic vanishing/exploding gradient problem, an information bottleneck can still arise with very long historical sequences. Furthermore, they are often inadequate at modeling spatial relationships.

4.3. CNN-Based Methods

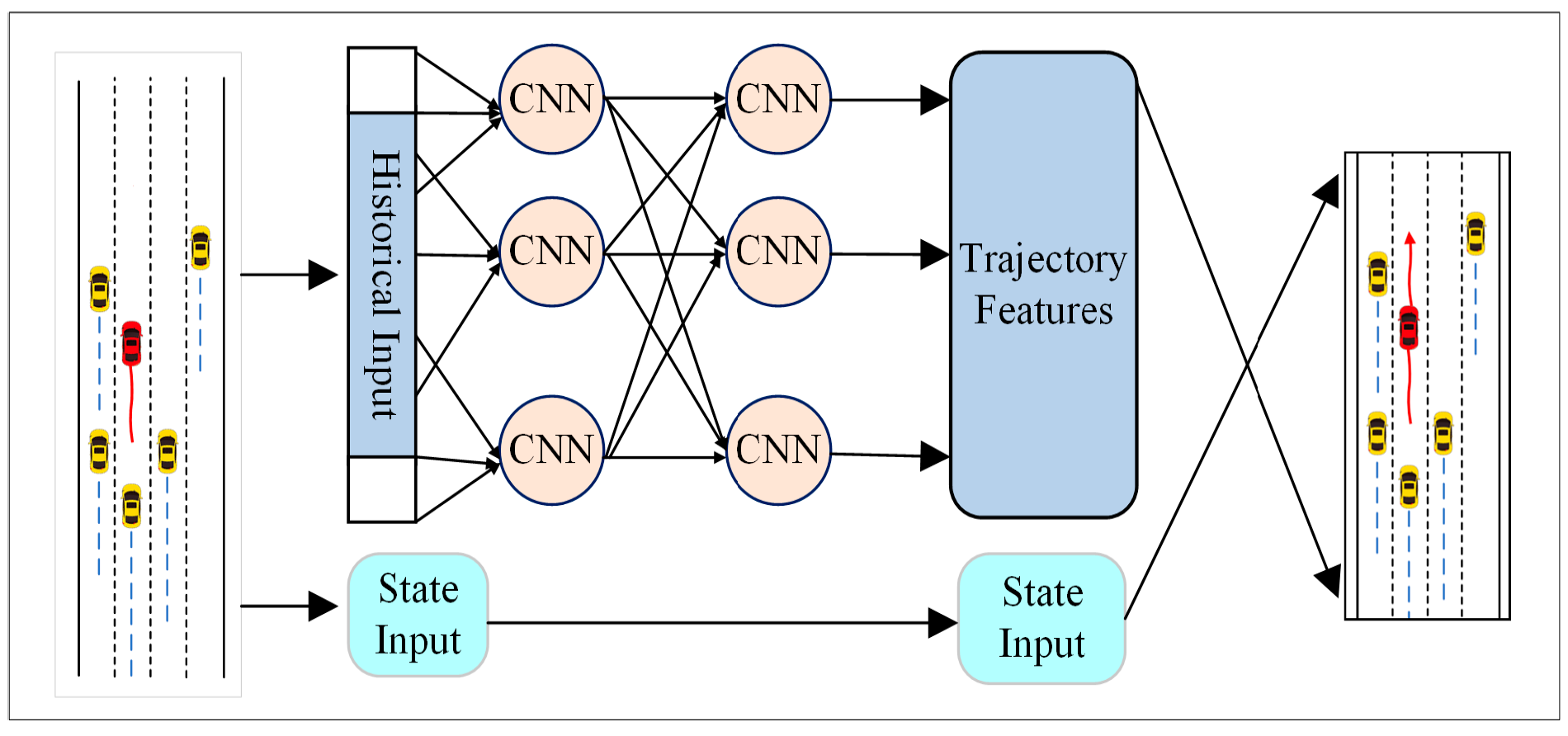

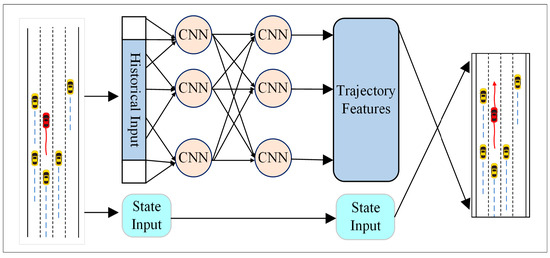

While RNNs excel at modeling temporal dependencies, they often struggle to capture the rich spatial context of the traffic scene. CNNs offer a powerful alternative for understanding this spatial context. Leveraging their proven ability to extract hierarchical spatial features, CNN-based methods rasterize the complex traffic scene into one or more image-like grids. Standard convolutional operations are then applied to automatically learn and extract the spatio-temporal patterns essential for prediction [101,102,103,104]. A typical framework for this approach is depicted in Figure 5, with key works summarized in Table 4.

Figure 5.

CNN model trajectory prediction framework.

Table 4.

Summary of reviewed DL-based Models relying on CNNs.

4.3.1. Prediction Based on Rasterized Scenes

A prevalent paradigm in trajectory prediction utilizes CNNs with a BEV representation. This approach encodes static and dynamic information into a multi-channel BEV image centered on the ego-vehicle, which is then processed by a CNN backbone for feature extraction. Cui et al. [105] pioneered this end-to-end multi-modal method by systematically rasterizing complex traffic scenes into a BEV representation for direct input into a deep CNN. The effectiveness of this paradigm was validated by CoverNet, which demonstrated that scene rasterization allows a CNN to implicitly model agent interactions, leading to more plausible and compliant trajectory predictions.

4.3.2. Variant Architectures

While CNNs excel at spatial modeling, they lack inherent capabilities for explicitly modeling time series data. To overcome this limitation, hybrid architectures combining CNNs with RNNs have been proposed for more effective spatio-temporal fusion [108,109,110,111]. In this framework, the CNN serves as a spatial feature extractor, processing a BEV scene image at each timestep to produce a feature vector. This sequence of vectors is then fed into an RNN (such as LSTM or GRU), which models the temporal dynamics. TraPHic [89] exemplifies this CNN-LSTM approach, explicitly modeling spatio-temporal interactions among agents at intersections to achieve state-of-the-art results. Conversely, Deo et al. [99] proposed an alternative flow: first, an LSTM encodes each agent’s temporal dynamics, then Social Pooling aggregates these hidden states into a spatial tensor, which a CNN subsequently processes to learn high-level spatial correlations.

With advancing research, CNN applications have moved beyond 2D image processing, since 2D CNNs excel at static spatial features but cannot directly model the temporal dimension. The CNN-TP framework by Nikhil et al. [106] utilizes stacked convolutional layers to process all timesteps in parallel, efficiently modeling spatio-temporal trajectory dependencies with a lightweight and real-time structure. The work of Chaabane et al. [107] represents a leap toward modeling higher-dimensional spatio-temporal sequences. Its key innovation lies in redesigning the model’s fundamental units to simultaneously capture spatial and temporal correlations within a unified module, rather than simply stacking CNNs and RNNs.

4.3.3. Advantages and Limitations

The widespread adoption of CNNs in trajectory prediction is due to their significant advantages, particularly their highly parallelizable nature. Unlike the sequential processing of RNNs, CNNs’ convolutional operations can fully leverage modern GPU capabilities, leading to faster inference, a critical requirement for real-time autonomous driving applications. However, a fundamental limitation of CNN methods that rely on BEV images is the information loss from rasterization. The process of converting precise, continuous vectorized data—such as map details and agent coordinates—into a discrete, fixed-resolution grid inevitably discards geometric details and reduces accuracy.

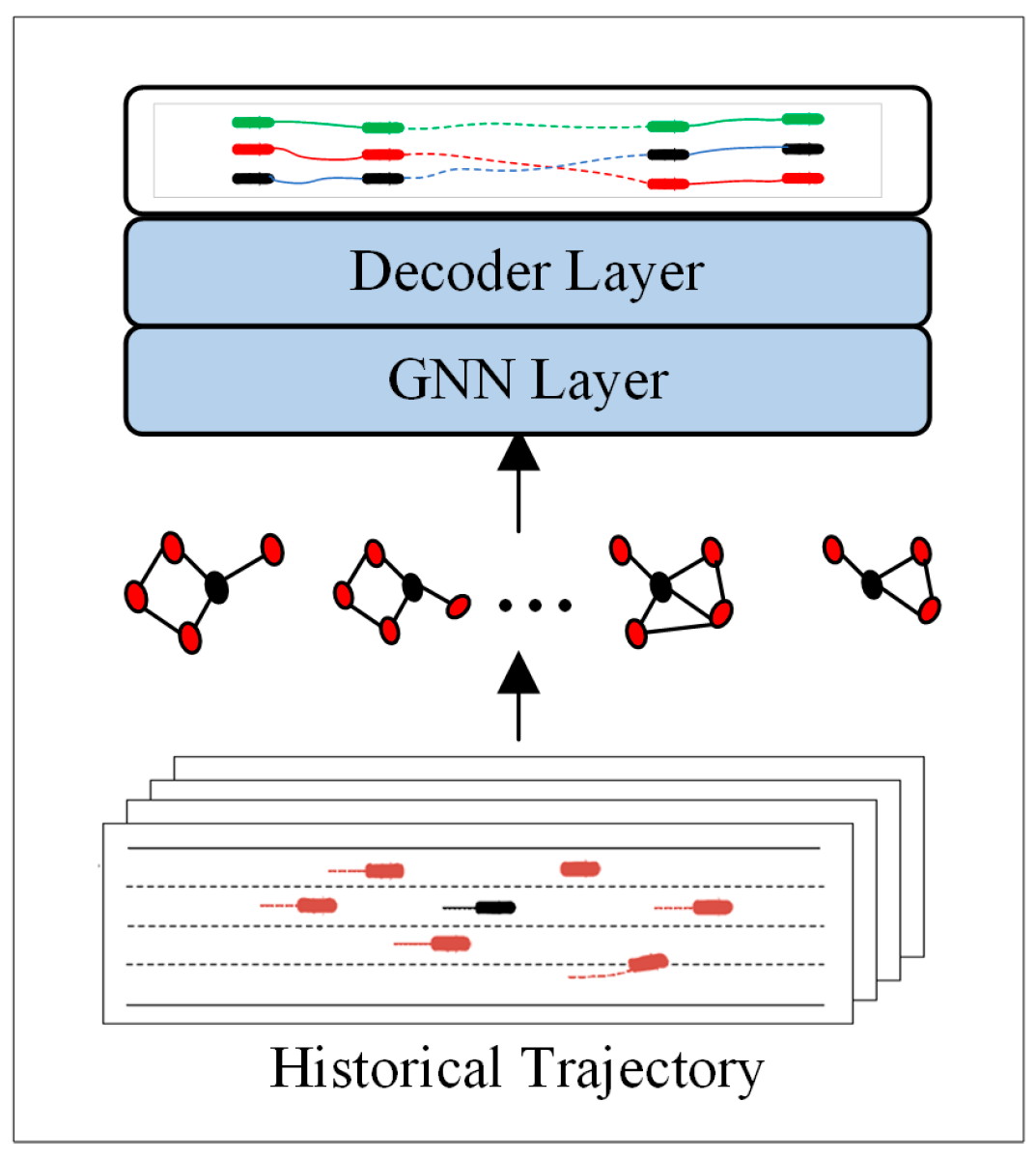

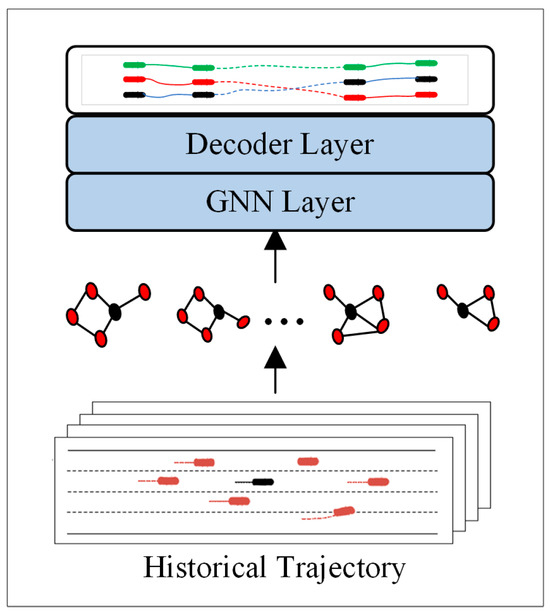

4.4. GNN-Based Methods

Despite their effectiveness, CNN-based methods that rely on rasterization suffer from information loss and difficulties in modeling the explicit topological structure of roads and interactions. GNNs offer a powerful alternative by natively operating on vectorized data, which allows them to explicitly model the relationships between agents and map elements as a graph. Therefore, GNNs are particularly effective for modeling the complex interactions between agents and the environment in traffic scenes, as illustrated in Figure 6 and summarized in Table 5.

Figure 6.

GNN model trajectory prediction framework. The scene is first represented as a graph where nodes denote agents and map elements, and edges represent their relationships.

Table 5.

Summary of GNN-based trajectory prediction methods.

4.4.1. Graph Representation

To apply a GNN, the traffic scene must be abstracted into a relational graph. This involves defining dynamic agents and static elements as nodes, and their complex relationships as edges. Typically, nodes represent agents like vehicles and map features like lane centerlines. This creates an “irregular graph” where edges signify distinct relationships: social interactions between agents, environmental constraints linking agents to the map, and the road network’s topology connecting map nodes. This process transforms the unstructured scene into a structured, relational network with embedded prior knowledge, enabling efficient GNN processing.

4.4.2. Mainstream Architectures

After the scene graph is constructed, the mainstream GNN architectures learn the relationships between nodes through their core message-passing mechanism. Graph Convolutional Networks (GCN) and Graph Attention Networks (GAT) are two key architectures; the main difference between them lies in how they aggregate information from neighboring nodes.

GCN is a foundational graph learning architecture that updates node representations by aggregating features from their neighbors. In trajectory prediction, the pioneering work GRIP++ [112] applied GCNs to explicitly model spatio-temporal interactions. Traffic participants are treated as nodes with edges defined by spatio-temporal proximity, allowing GCN layers to propagate interaction information. Furthermore, SCALE-Net [113] employed an Edge-enhanced GCN (EGCN) to demonstrate the architecture’s scalability and effectiveness with a varying number of agents.
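As a concrete illustration of neighbor aggregation, the sketch below builds a proximity graph over agents and applies one graph convolution with the widely used symmetric normalization. The distance threshold, feature sizes, and random weights are illustrative assumptions; the graph operations in GRIP++ and SCALE-Net may differ in detail.

```python
import numpy as np

def proximity_adjacency(positions, radius):
    """Connect two agents if they are closer than `radius` metres."""
    d = np.linalg.norm(positions[:, None] - positions[None, :], axis=-1)
    A = (d < radius).astype(float)
    np.fill_diagonal(A, 0.0)
    return A

def gcn_layer(A, X, W):
    """One layer: ReLU(D^-1/2 (A + I) D^-1/2 X W)."""
    A_hat = A + np.eye(A.shape[0])            # self-loops keep own features
    deg = A_hat.sum(axis=1)
    D_inv_sqrt = np.diag(1.0 / np.sqrt(deg))
    A_norm = D_inv_sqrt @ A_hat @ D_inv_sqrt  # symmetric normalization
    return np.maximum(A_norm @ X @ W, 0.0)

rng = np.random.default_rng(0)
# Hypothetical scene: three nearby vehicles and one far-away vehicle.
positions = np.array([[0.0, 0.0], [3.0, 0.0], [6.0, 0.0], [50.0, 0.0]])
X = rng.normal(size=(4, 6))                   # per-agent input features
W = rng.normal(size=(6, 8))                   # random, untrained weights

A = proximity_adjacency(positions, radius=5.0)
out = gcn_layer(A, X, W)
```

Every neighbor contributes with a weight fixed by the graph structure alone, independent of the neighbors' features, which is the property that attention-based aggregation relaxes.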

GAT is a significant advancement over the GCN. It incorporates an attention mechanism, enabling the model to dynamically assign different importance weights to neighboring nodes based on the current context, rather than treating them all equally. LaneGCN [114] is a prime example of applying GAT, where it specifically constructs a lane graph from vectorized HD maps and uses GAT to learn the interaction strength between an agent and different lane lines, thereby achieving highly accurate, context-aware trajectory prediction. Similarly, in VectorNet [115], GAT is also used to aggregate information from different subgraphs to form a comprehensive understanding of the entire scene. RAGAT [116] utilizes two stacked GATs to model distinct social forces separately, and combines them with vehicle state and free-space information for prediction.
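The attention-weighted aggregation can be sketched as a generic single-head graph attention layer in the style of the original GAT formulation. The weights are random and untrained, the toy graph is hypothetical, and the code does not reproduce the specific layers of LaneGCN, VectorNet, or RAGAT.

```python
import numpy as np

def leaky_relu(x, slope=0.2):
    return np.where(x > 0, x, slope * x)

def gat_layer(A, X, W, a):
    """Single-head graph attention.

    Score e_ij = LeakyReLU(a^T [W x_i || W x_j]); the softmax is restricted
    to node i itself and its neighbours in A.
    """
    H = X @ W                                    # (N, F) projected features
    F = H.shape[1]
    src = H @ a[:F]                              # term depending on node i
    dst = H @ a[F:]                              # term depending on node j
    e = leaky_relu(src[:, None] + dst[None, :])  # (N, N) raw scores
    mask = (A + np.eye(A.shape[0])) > 0
    e = np.where(mask, e, -np.inf)               # non-neighbours get zero weight
    e = e - e.max(axis=1, keepdims=True)
    w = np.exp(e)
    alpha = w / w.sum(axis=1, keepdims=True)
    return alpha @ H, alpha

rng = np.random.default_rng(0)
# Hypothetical graph: node 0 is linked to nodes 1 and 2; node 3 is isolated.
A = np.array([[0, 1, 1, 0],
              [1, 0, 0, 0],
              [1, 0, 0, 0],
              [0, 0, 0, 0]], dtype=float)
X = rng.normal(size=(4, 6))
W = rng.normal(size=(6, 8))
a = rng.normal(size=16)

out, alpha = gat_layer(A, X, W, a)
```

Unlike the fixed normalization of a GCN, the weights in `alpha` depend on the node features, so the same neighbor can matter more or less depending on the current context.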

4.4.3. Variant and Hybrid Architectures

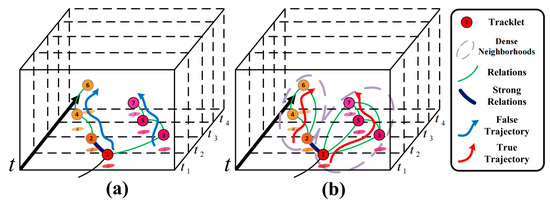

While basic GCN and GAT architectures provide powerful tools for capturing interactions in a single spatial snapshot, the spatio-temporal and multi-modal nature of trajectory prediction necessitates more advanced models. Purely spatial modeling cannot capture dynamic evolution, and deterministic outputs fail to account for behavioral diversity. Consequently, the research frontier has shifted to complex GNN variants and hybrid architectures. Trajectron++ [117] is a prime example, constructing a scene as a heterogeneous spatio-temporal graph. It integrates GNNs and LSTMs for spatio-temporal encoding, which then conditions a CVAE to achieve precise multi-modal prediction. This “GNN encoder + RNN temporal modeling” paradigm became a state-of-the-art approach. S2TNet [118] follows a similar structure but substitutes the RNN with a Transformer, making it theoretically more effective than RNNs at capturing long-term and non-continuous dynamic patterns.

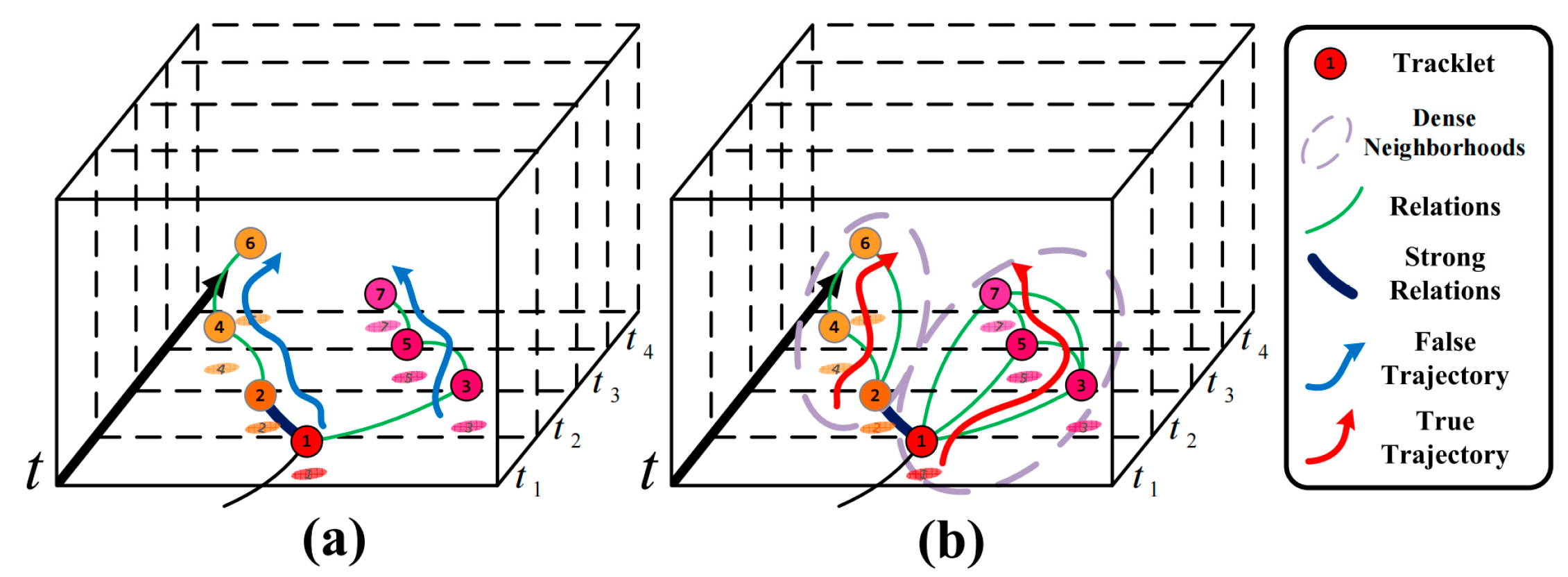

To model group behaviors beyond pairwise relationships, research has explored Hypergraph Networks. As shown in Figure 7, a standard graph edge connects only two nodes, representing a pairwise interaction. Real-world scenarios, however, often involve higher-order interactions. A hypergraph generalizes the graph concept by allowing a ‘hyperedge’ to connect any number of nodes. This provides a flexible and natural way to represent higher-order group interactions, such as the collective behavior of multiple vehicles negotiating a busy intersection or a crowd of pedestrians. For instance, Wen et al. [119] use clustering to identify agent groups and construct a hyperedge for each. A hypergraph network then learns the complex dynamics both within and between these groups, offering a promising framework for understanding complex collective behaviors.

Figure 7.

(a) Standard graph. (b) Undirected hierarchical relation hypergraph [119].
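The difference between a pairwise edge and a hyperedge can be made concrete with an incidence matrix, in which each column marks all members of one group. The sketch below performs one round of node-to-hyperedge and hyperedge-to-node averaging; the two groups are given by hand here (the cited work obtains them by clustering), and the scheme is a simplified assumption rather than the network of Wen et al.

```python
import numpy as np

def hypergraph_message_passing(H, X):
    """One round of averaging over an incidence matrix H of shape (N, E).

    H[i, e] = 1 if node i belongs to hyperedge e. Every hyperedge is
    assumed to contain at least one node.
    """
    edge_deg = H.sum(axis=0)                  # nodes per hyperedge
    node_deg = H.sum(axis=1)                  # hyperedges per node
    edge_feats = (H.T @ X) / edge_deg[:, None]                        # node -> group
    node_feats = (H @ edge_feats) / np.maximum(node_deg, 1)[:, None]  # group -> node
    return node_feats, edge_feats

# Hypothetical scene with five agents in two groups: {0, 1, 2} and {3, 4}.
H = np.array([[1, 0],
              [1, 0],
              [1, 0],
              [0, 1],
              [0, 1]], dtype=float)
X = np.array([[1.0], [2.0], [3.0], [10.0], [20.0]])  # toy scalar features

node_feats, edge_feats = hypergraph_message_passing(H, X)
```

A single hyperedge aggregates all three members of the first group at once, whereas a standard graph would need three pairwise edges to connect them.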

4.4.4. Advantages and Limitations

The core strengths of GNNs are their explicit relational modeling and native support for non-Euclidean data. This allows them to effectively integrate agent interactions and road topology into a deep learning framework, enhancing prediction plausibility and accuracy. However, in dense scenes, the resulting large graphs lead to high computational complexity from message passing, which can compromise the real-time performance required for on-board systems. Furthermore, GNNs are primarily powerful “spatial snapshot” processors and are not complete spatio-temporal solutions on their own. They typically rely on external modules like RNNs or Transformers for temporal modeling, making their handling of time indirect and separate.

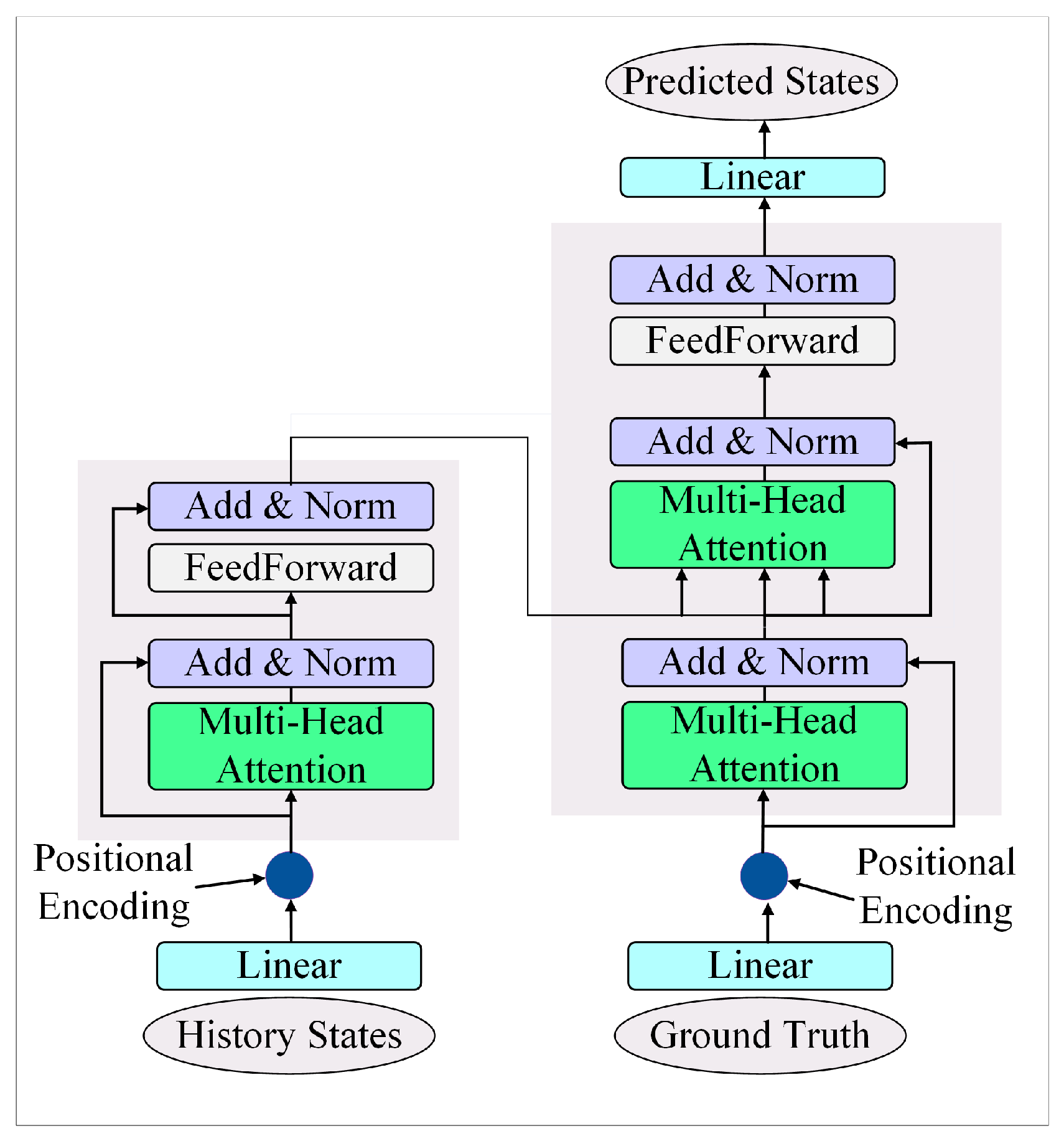

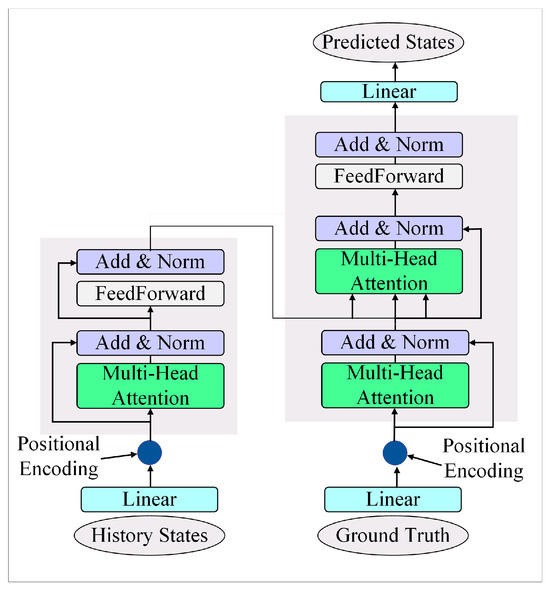

4.5. Transformer-Based Methods

GNNs are powerful for relational reasoning but often require additional modules (e.g., RNNs) for temporal modeling and can be computationally intensive for large graphs. The Transformer architecture, with its self-attention mechanism, provides a unified and highly parallelizable framework for capturing both long-range temporal dependencies and complex spatial interactions globally. The Transformer architecture has thus introduced a third paradigm to trajectory prediction, enabling a shift towards global modeling [120,121,122]. As illustrated in Figure 8, this allows the model to directly and simultaneously compute the association strength between any element in a sequence and all other elements, overcoming the limitations of previous architectures.

Figure 8.

Illustration of Transformer for trajectory prediction. The attention mechanism allows the model to globally contextualize all elements in the scene.

4.5.1. Self-Attention and Global Modeling

The core of Transformer-based trajectory prediction is the Self-Attention mechanism. This mechanism treats all historical trajectory and scene context information as a single set of tokens, enabling the direct and parallel computation of associations between all elements [123,124,125]. This unified process captures both long-range temporal dependencies and complex spatial interactions. To counteract the inherent permutation-invariance of self-attention, Positional Encoding is introduced to provide temporal context. This is further enhanced by Multi-Head Attention, which allows the model to learn diverse dependency relationships in parallel across different representational subspaces.
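Why positional encoding is needed can be checked numerically. The sketch below implements single-head self-attention and the standard sinusoidal encoding, then compares the outputs for an ordered and a shuffled token sequence; the dimensions and random weights are illustrative assumptions.

```python
import numpy as np

def softmax(x):
    x = x - x.max(axis=-1, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=-1, keepdims=True)

def self_attention(X, Wq, Wk, Wv):
    """Every token attends to every token: the score matrix is (n, n)."""
    Q, K, V = X @ Wq, X @ Wk, X @ Wv
    return softmax(Q @ K.T / np.sqrt(K.shape[-1])) @ V

def sinusoidal_encoding(length, d_model):
    """Sinusoidal positional encoding; d_model is assumed to be even."""
    pos = np.arange(length)[:, None]
    i = np.arange(0, d_model, 2)[None, :]
    angles = pos / (10000 ** (i / d_model))
    pe = np.zeros((length, d_model))
    pe[:, 0::2] = np.sin(angles)
    pe[:, 1::2] = np.cos(angles)
    return pe

rng = np.random.default_rng(0)
n, d = 4, 8
X = rng.normal(size=(n, d))  # hypothetical token features, one per time step
Wq, Wk, Wv = (rng.normal(size=(d, d)) for _ in range(3))
perm = [2, 0, 3, 1]

# Without positions, shuffling the tokens merely shuffles the outputs.
out = self_attention(X, Wq, Wk, Wv)
out_perm = self_attention(X[perm], Wq, Wk, Wv)

# With positions added, the same shuffle changes the result itself.
pe = sinusoidal_encoding(n, d)
out_pe = self_attention(X + pe, Wq, Wk, Wv)
out_pe_perm = self_attention(X[perm] + pe, Wq, Wk, Wv)
```

In the first case `out_perm` equals `out[perm]`, so the temporal order carries no information; in the second case the two differ, which means the order now influences the prediction.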

4.5.2. Representative Architectures

The power of the self-attention mechanism has catalyzed a series of advanced Transformer-based prediction models. Pioneering work by Giuliari et al. [126] employed a standard encoder–decoder Transformer to process trajectory sequences, proving that self-attention alone, without recurrent structures, could effectively capture temporal dependencies and generate plausible predictions. SceneTransformer [127] takes a holistic view, treating all dynamic and static scene elements as an unordered set of “tokens” and using a single powerful Transformer to learn all interactions end-to-end. AgentFormer [128] offers a more granular approach to social dynamics. Its core innovation is an attention mechanism that distinguishes and simultaneously models individual temporal dynamics (intra-agent attention) and multi-agent social interactions (inter-agent attention), resulting in high-quality, socially compliant predictions.

While the aforementioned models have achieved significant success in accuracy and expressiveness, they also highlight the standard Transformer’s inherent high computational complexity in large-scale scenes. To address the quadratic complexity bottleneck of the self-attention mechanism, HiVT [129] proposed a hierarchical solution. It first uses a lightweight local module to efficiently process each agent’s interaction with its immediate environment, such as its current lane. Subsequently, it employs a global attention module to capture more critical, long-range dependencies between agents. Achaji et al. [130] designed a Factorized Spatio-Temporal Attention module. This module uses separate, lightweight attention layers to efficiently process spatial interactions between agents and their own temporal dynamics independently, before fusing this information.

4.5.3. Advantages and Limitations

The Transformer architecture, while a leading methodology in trajectory prediction, presents a trade-off between its strong performance and inherent challenges. Its primary advantage is superior global dependency modeling; the self-attention mechanism overcomes the limitations of RNNs and CNNs by capturing long-range spatiotemporal dependencies in a single computational step. This capability is critical for understanding global scene layouts and long-term driving intentions. Additionally, its highly parallelizable nature aligns well with modern hardware, providing superior training efficiency and scalability over RNNs. However, these strengths are accompanied by significant challenges. As a model with weak inductive bias, the Transformer requires massive and diverse training data, and its ability to generalize to out-of-distribution, long-tail scenarios is a concern.

4.6. Generative Model-Based Methods

The aforementioned discriminative models predict a deterministic output and therefore often fail to capture the inherent multi-modality and uncertainty of future trajectories. To address this limitation, researchers have turned to generative models, whose objective is not to predict a single correct answer but to learn the underlying probability distribution of future motions, enabling the prediction of multiple plausible outcomes. This provides the autonomous driving system with a complete, probabilistic, and multi-faceted view of the future. The three most prominent generative models in trajectory prediction are GAN, CVAE, and Diffusion Models, with representative works summarized in Table 6.

Table 6.

Summary of reviewed DL-based models relying on Generative Model Methods.

4.6.1. Generative Adversarial Networks

GAN learns data distributions through a sophisticated “two-player zero-sum game” framework. In trajectory prediction, the Generator creates synthetic future trajectories from historical and scene context, while the Discriminator learns to distinguish these fakes from real data. This adversarial process compels the Generator to master the complex dynamics and constraints of real trajectories, enabling it to produce highly realistic and diverse multi-modal outputs.

The pioneering work, Social-GAN [131], first applied this framework to interaction-aware pedestrian prediction. It combined LSTM-based encoders with a Social Pooling mechanism, demonstrating the potential of GANs to generate socially compliant trajectories. Subsequent research has built upon this foundation; for instance, SoPhie [132] introduced an attention mechanism for more refined constraint modeling, while MATF-GAN [133] tackled more complex vehicle interactions through multi-agent tensor fusion, further enhancing the model’s expressive power.

4.6.2. Conditional Variational Autoencoder

CVAE provides a stable, probabilistic alternative to adversarial training for generating diverse future trajectories. Instead of the competitive framework of GAN, a CVAE learns a low-dimensional, structured latent space designed to capture unobservable factors like intent or goals that drive behavioral diversity. Its classic encoder–decoder architecture functions by first having the encoder map historical trajectories to a probability distribution, typically a Gaussian, in the latent space. The decoder then samples from this latent space. This sampled latent vector, combined with the encoded historical information, is used to generate a specific future trajectory. By repeatedly sampling from this latent space, a CVAE can produce a variety of distinct, conditioned future paths.
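The sampling procedure described above can be sketched with linear maps standing in for the encoder and decoder networks. All weights are random and untrained, and the dimensions, names, and prediction horizon are illustrative assumptions; the sketch shows only the inference-time data flow, not training.

```python
import numpy as np

rng = np.random.default_rng(0)
D_HIST, D_LATENT, HORIZON = 16, 4, 6

# Random, untrained linear maps standing in for neural networks.
W_mu = rng.normal(scale=0.1, size=(D_HIST, D_LATENT))
W_logvar = rng.normal(scale=0.1, size=(D_HIST, D_LATENT))
W_dec = rng.normal(scale=0.1, size=(D_HIST + D_LATENT, HORIZON * 2))

def sample_futures(history_feat, num_samples):
    """Draw several future trajectories for one encoded history."""
    # Encoder: map the history to a Gaussian in the latent space.
    mu = history_feat @ W_mu
    std = np.exp(0.5 * (history_feat @ W_logvar))
    # Sample latent vectors: z = mu + std * eps with eps ~ N(0, I).
    eps = rng.standard_normal((num_samples, D_LATENT))
    z = mu + std * eps
    # Decoder: combine each latent sample with the encoded history.
    cond = np.repeat(history_feat[None, :], num_samples, axis=0)
    out = np.concatenate([cond, z], axis=1) @ W_dec
    return out.reshape(num_samples, HORIZON, 2)  # (K, T, xy)

history_feat = rng.normal(size=D_HIST)  # hypothetical encoded history
futures = sample_futures(history_feat, num_samples=5)
```

Because the history is held fixed while only the latent vector changes, the differences between the five outputs stem entirely from the latent sample, the variable intended to capture unobserved factors such as intent.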

DESIRE [134] is a classic application of the CVAE framework. It uses CVAE to generate multiple initial trajectory hypotheses and introduces a sophisticated scoring module to select the final prediction. The highly influential Trajectron++ [117] seamlessly combines CVAE with an advanced GNN encoder. It leverages the GNN to capture complex spatio-temporal interactions and then conditions the CVAE on this rich contextual information to model the uncertainty of future trajectories in the latent space.

4.6.3. Diffusion Models

Diffusion Models represent the latest and most powerful wave in generative modeling [137], their success in fields like image generation having inspired their application to trajectory prediction. The model’s principle involves two stages: a fixed Forward Process and a learnable Reverse Process. In the forward process, Gaussian noise is iteratively added to real trajectory data until it becomes pure noise. The reverse process involves training a denoising network, typically a Transformer, to reverse these steps. For inference, the model begins with pure noise and repeatedly applies the learned denoising network to generate a new, high-quality future trajectory.
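The forward process has a closed form that makes the two stages easy to illustrate. The sketch below assumes the standard DDPM parameterization with a linear noise schedule; the schedule values, the toy trajectory, and the use of the true noise in place of a trained denoising network are all assumptions made for illustration.

```python
import numpy as np

T = 1000
betas = np.linspace(1e-4, 0.02, T)   # linear noise schedule (assumed)
alpha_bar = np.cumprod(1.0 - betas)  # cumulative fraction of signal kept

def q_sample(x0, t, eps):
    """Forward process in closed form: jump from x_0 directly to x_t."""
    return np.sqrt(alpha_bar[t]) * x0 + np.sqrt(1.0 - alpha_bar[t]) * eps

def predict_x0(x_t, t, eps_hat):
    """Invert the forward step given an estimate of the added noise."""
    return (x_t - np.sqrt(1.0 - alpha_bar[t]) * eps_hat) / np.sqrt(alpha_bar[t])

rng = np.random.default_rng(0)
# Hypothetical future trajectory: six (x, y) waypoints.
steps = np.arange(6.0)
x0 = np.stack([steps, 0.1 * steps ** 2], axis=1)
eps = rng.standard_normal(x0.shape)

x_mid = q_sample(x0, 500, eps)       # partially noised trajectory
x_last = q_sample(x0, T - 1, eps)    # almost pure Gaussian noise

# A trained denoiser would predict eps from (x_t, t, scene context);
# the true eps is used here only to show that the step is invertible.
x0_rec = predict_x0(x_mid, 500, eps)
```

At the final step almost none of the original trajectory remains, which is why inference can start from pure noise; the quality of the generated trajectory then depends entirely on how well the learned network estimates the noise at each step.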

Due to their iterative generation process and powerful modeling capabilities, diffusion models generally outperform GAN and CVAE in terms of the quality and diversity of the generated trajectories. MotionDiffuser [135] utilizes a Transformer-based denoiser to capture complex spatio-temporal dependencies and introduces various guidance mechanisms to ensure the physical plausibility and goal-orientation of the generated trajectories. Yuan et al. [136] directly embed kinematic or dynamic models into the iterative denoising process of the diffusion model. This approach fundamentally eliminates physical artifacts and ensures the dynamic feasibility of every generated trajectory.

4.6.4. Advantages and Limitations

The primary advantage of generative models is their inherent ability to model the multi-modal uncertainty of trajectory prediction, producing a probabilistic view of the future that is crucial for safe downstream planning. However, this expressive power comes with specific challenges for each paradigm: GANs can generate sharp, realistic trajectories, but their adversarial training is notoriously unstable, prone to mode collapse and divergence, and requires careful tuning. CVAEs offer stable training and a robust probabilistic framework, but their outputs can be overly smooth. Diffusion Models typically produce the highest quality and most diverse samples, but their iterative denoising process results in very slow inference speeds. Furthermore, objectively assessing the quality of a predicted distribution, rather than a single trajectory’s error, remains an open research problem.

5. Evaluation

5.1. Datasets

To assess the accuracy of an autonomous driving trajectory prediction model, the predicted trajectory is typically compared with the ground-truth trajectory. The trajectory data come from multiple public datasets collected with sensors such as LiDAR, cameras, and radar. Earlier datasets, such as NGSIM I-80 and highD, capture vehicle motion on highways using surveillance cameras and drones, and focus primarily on a single type of traffic participant whose possible actions include turning left, turning right, and keeping straight. In recent years, modern benchmark datasets have made significant progress by overcoming these limitations in the variety of environments and traffic participant categories.

As AI model complexity increases, more image data is needed to achieve efficient generalization [138,139,140]. Both vehicle-centric datasets and pedestrian/hybrid-centric datasets contain not only camera and LiDAR data but also high-definition (HD) maps that capture the topology of the road. Compared with earlier datasets, they cover more categories, record mileage data for the ego vehicle, span multiple cities and a range of weather and lighting conditions (including rain and night), and provide labels for other traffic elements such as traffic lights and road rules.

Vehicle-centric datasets focus on vehicle trajectory prediction. These datasets usually contain rich vehicle motion information and are suitable for studying interactions among vehicles and between vehicles and the road environment [141,142]. Pedestrian/hybrid-centric datasets cover not only vehicle trajectories but also pedestrian trajectories and interactions with other traffic participants [96]. These datasets are of great significance for studying trajectory prediction in mixed traffic environments. Table 7 provides detailed information about the datasets commonly used in existing research.

Table 7.

Commonly used datasets.

5.2. Evaluation Index

Evaluation metrics for autonomous driving trajectory prediction are the key to measuring model performance. They assess the accuracy, reliability, efficiency, and practicality of a model from different angles. According to the characteristics of the trajectory prediction task, the metrics are divided into unimodal, multimodal, probability/uncertainty, cross-correlation, and computational efficiency metrics.

- Unimodal

ADE is the average Euclidean distance between the predicted trajectory and the true trajectory at each time step and measures the average error of the model over the entire prediction time range. This can be mathematically formulated as:
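In commonly used notation (assumed here: $T$ prediction steps, $\hat{\mathbf{p}}_t$ the predicted position and $\mathbf{p}_t$ the true position at step $t$, and $\lVert \cdot \rVert_2$ the Euclidean norm), the definition above is usually written as:

```latex
\mathrm{ADE} = \frac{1}{T} \sum_{t=1}^{T} \left\lVert \hat{\mathbf{p}}_t - \mathbf{p}_t \right\rVert_2
```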

FDE is the Euclidean distance between the final position of the predicted trajectory and the final position of the true trajectory, focusing on the accuracy of the model at the predicted endpoint. This can be mathematically formulated as:
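With the assumed notation of $\hat{\mathbf{p}}_T$ for the predicted position and $\mathbf{p}_T$ for the true position at the final prediction step $T$, a standard way to write this is:

```latex
\mathrm{FDE} = \left\lVert \hat{\mathbf{p}}_T - \mathbf{p}_T \right\rVert_2
```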

- Multimodal

minADE is the ADE of the trajectory that lies closest to the true trajectory among the k predicted trajectories generated by the model; it measures the average error of the model's best trajectory in multimodal prediction. This can be mathematically formulated as:
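Writing $\hat{\mathbf{p}}^{(i)}_t$ for the position of the $i$-th predicted trajectory at step $t$ and $\mathbf{p}_t$ for the true position (notation assumed here), a standard form is:

```latex
\mathrm{minADE}_k = \min_{i \in \{1, \dots, k\}} \frac{1}{T} \sum_{t=1}^{T} \left\lVert \hat{\mathbf{p}}^{(i)}_t - \mathbf{p}_t \right\rVert_2
```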

minFDE is the FDE of the trajectory whose endpoint lies closest to the true endpoint among the k predicted trajectories generated by the model; it focuses on the endpoint error of the model's best trajectory in multimodal prediction. This can be mathematically formulated as:
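Writing $\hat{\mathbf{p}}^{(i)}_T$ for the endpoint of the $i$-th predicted trajectory and $\mathbf{p}_T$ for the true endpoint (notation assumed here), a standard form is:

```latex
\mathrm{minFDE}_k = \min_{i \in \{1, \dots, k\}} \left\lVert \hat{\mathbf{p}}^{(i)}_T - \mathbf{p}_T \right\rVert_2
```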

Miss Rate measures the proportion of k predicted trajectories generated by the model that differ from the true trajectory by more than a certain threshold. This can be mathematically formulated as:
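Benchmarks differ in how they count a miss. The sketch below follows one widely used convention, in which a scenario counts as a miss when even the best of the k endpoints is farther than the threshold from the true endpoint, and the miss rate is the fraction of such scenarios. That convention, the 2 m default, and all function names are assumptions for illustration; the per-trajectory proportion described above would be computed analogously. The same helpers also give minADE and minFDE, and reduce to ADE and FDE when k = 1.

```python
import numpy as np

def displacement_errors(preds, gt):
    """Per-mode, per-step Euclidean errors; preds has shape (k, T, 2), gt has shape (T, 2)."""
    return np.linalg.norm(preds - gt[None], axis=-1)

def min_ade(preds, gt):
    """Average displacement error of the best of the k modes."""
    return displacement_errors(preds, gt).mean(axis=1).min()

def min_fde(preds, gt):
    """Final-step displacement error of the best of the k modes."""
    return displacement_errors(preds, gt)[:, -1].min()

def miss_rate(scenarios, threshold=2.0):
    """Fraction of (preds, gt) scenarios whose best endpoint error exceeds the threshold."""
    misses = [min_fde(preds, gt) > threshold for preds, gt in scenarios]
    return float(np.mean(misses))
```

Because the minimum is taken over modes, these metrics reward a model for having at least one good hypothesis and do not penalize the remaining modes, which is why they are usually reported together with probability-aware metrics.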

Overlap Rate measures the degree of overlap between multiple prediction trajectories generated by the model and is used to assess the diversity of the model in multimodal prediction. This can be mathematically formulated as:

- Probability/uncertainty metrics

NLL measures how well the model-predicted trajectory distribution matches the true trajectory, with a lower NLL indicating that the model-predicted distribution is closer to the true distribution. This can be mathematically formulated as:
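For a model that outputs a density $p_\theta(\mathbf{Y} \mid \mathbf{X})$ over future trajectories $\mathbf{Y}$ given the observed context $\mathbf{X}$, the average NLL over $N$ test samples is commonly written as follows (the symbols are assumptions introduced here):

```latex
\mathrm{NLL} = -\frac{1}{N} \sum_{n=1}^{N} \log p_\theta\!\left(\mathbf{Y}_n \mid \mathbf{X}_n\right)
```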

The Brier Score measures the difference between the probability of the model prediction and the true result, with a lower Brier Score indicating that the probability of the model prediction is more accurate. This can be mathematically formulated as:
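In its generic multi-class form, with $\pi_{n,i}$ the probability assigned to mode $i$ in sample $n$ and $o_{n,i} \in \{0, 1\}$ indicating whether mode $i$ is the realized one (for example, the mode closest to the true trajectory), the score can be written as below. The notation and the choice of realized mode are assumptions, and individual benchmarks use their own variants.

```latex
\mathrm{BS} = \frac{1}{N} \sum_{n=1}^{N} \sum_{i=1}^{k} \left( \pi_{n,i} - o_{n,i} \right)^2
```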

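ECE (expected calibration error) measures how well the model's predicted probabilities are calibrated, that is, how closely the predicted confidence matches the empirically observed frequency of correct predictions; a lower ECE indicates more reliable probability estimates.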
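A common binned estimator can be sketched as follows. It assumes each prediction comes with a confidence in [0, 1] and a binary correctness label (for trajectories, for example, whether the endpoint of the predicted mode falls within a distance threshold); the equal-width binning and the function name are illustrative choices, not taken from the text.

```python
import numpy as np

def expected_calibration_error(confidences, correct, num_bins=10):
    """Weighted average gap between mean confidence and accuracy over equal-width bins."""
    confidences = np.asarray(confidences, dtype=float)
    correct = np.asarray(correct, dtype=float)
    edges = np.linspace(0.0, 1.0, num_bins + 1)
    # Interior edges only, so bin indices run from 0 to num_bins - 1.
    bin_ids = np.clip(np.digitize(confidences, edges[1:-1]), 0, num_bins - 1)
    ece = 0.0
    for b in range(num_bins):
        mask = bin_ids == b
        if mask.any():
            gap = abs(correct[mask].mean() - confidences[mask].mean())
            ece += mask.mean() * gap  # weight by the fraction of samples in the bin
    return ece
```

A model that reports 0.8 confidence and is right 80% of the time scores zero, whereas one that reports 0.9 and is right 25% of the time scores 0.65.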

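AURC (area under the risk-coverage curve) measures how the risk of a model varies with coverage as the confidence threshold changes. Under the standard definition, a lower AURC indicates that the model's errors are concentrated in its low-confidence predictions, so the predictions retained at high confidence cover the true trajectory better.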
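One discrete estimator sorts predictions from most to least confident, computes the mean risk of the retained set at every coverage level, and averages these selective risks. The sketch assumes a per-sample risk value (for instance, a 0/1 miss indicator or a displacement error) and uses a hypothetical function name; under this definition a smaller value is better.

```python
import numpy as np

def aurc(confidences, risks):
    """Mean selective risk over all coverage levels (area under the risk-coverage curve)."""
    confidences = np.asarray(confidences, dtype=float)
    risks = np.asarray(risks, dtype=float)
    order = np.argsort(-confidences, kind="stable")  # most confident first
    cumulative_risk = np.cumsum(risks[order])
    counts = np.arange(1, len(risks) + 1)
    selective_risk = cumulative_risk / counts        # risk at coverage counts / N
    return float(selective_risk.mean())
```

When confidence ranks the low-risk samples first, the selective risk stays small until coverage is high, which shrinks the area; a confidence score that ranks the same samples in the opposite order yields a much larger area.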

6. Challenges and Outlook

6.1. Challenges

- Complex Interactions Among Traffic Participants

Autonomous vehicles and other vehicles on the road engage in behaviors such as following, overtaking, lane changing, and merging, which give rise to vehicle-vehicle interactions. In multi-vehicle scenes, dynamic information such as the relative position, velocity, and acceleration between vehicles changes constantly, which makes the interaction relationships complex. For example, at intersections and highway ramp junctions, a vehicle must attend to multiple directions at the same time, so predicting the intentions and trajectories of other vehicles is difficult. The dynamic nature of the traffic environment also fills these interactions with uncertainty: sudden lane changes, acceleration, or deceleration are difficult to predict accurately in advance. Pedestrians may cross the road without a clear signal or change direction at an intersection. These behaviors form vehicle-pedestrian interactions and further increase the difficulty of trajectory prediction.

- Strong Reliance on Traffic Rules and High-Precision Maps

Traffic rules set clear boundaries and priorities for the trajectory planning of autonomous vehicles. However, traffic rules are not static, and differences in rules across regions and scenarios increase the complexity of trajectory prediction. Autonomous driving systems need to understand the semantics of traffic rules, which requires combining computer vision with natural language processing so that rule information is accurately integrated into the trajectory prediction model.

High-precision maps provide detailed lane information, traffic location, road slope, curvature and other data, which are crucial for accurate trajectory prediction. At the same time, high-precision maps need to be updated in real time to reflect dynamic changes such as road construction and accidents, so the fusion of high-precision maps and vehicle perception systems is the key to trajectory prediction.

- Cumulative Error and Behavioral Uncertainty in Long-Term Prediction

Autonomous driving requires predicting the future trajectories of surrounding vehicles and pedestrians. Many prediction models are based on simplified traffic flow assumptions, and in long-term prediction the deviations between these assumptions and actual conditions gradually accumulate. The intentions and interaction behaviors of traffic participants are multimodal, so traditional deterministic prediction struggles to cover all possible outcomes and to capture future states accurately, which leads to error accumulation. At the same time, the uncertainty of driving behavior is further amplified over long horizons, causing the prediction results to drift. In complex traffic scenes, vehicle interactions are more intricate, which further increases behavioral uncertainty.

- Generalization Ability for Corner Cases

Although many autonomous driving trajectory prediction models have made some progress in common scenarios, their generalization ability still faces many challenges in rare scenarios. Rare scenarios are those that occur less frequently in real driving but may have a significant impact on driving safety, such as road ponding in extreme weather conditions, abnormal stops caused by sudden vehicle failures, and the appearance of non-standard traffic signs or gestures.

Existing models tend to learn the common patterns in the training data and lack sufficient capacity to capture rare scenarios. To improve generalization in rare scenarios, researchers have collected large amounts of rich and diverse driving data so that models can learn a wide range of scene features and patterns, thereby improving their adaptability. However, collecting data that covers all possible rare scenarios is unrealistic because actual driving scenarios are extremely complex and uncertain. Techniques such as GANs have also been used to generate rare-scene data, which helps the model learn the characteristics and distribution of rare scenes and improves its predictions in them, but the quality and authenticity of the generated data remain an open problem.

- Real-Time Requirements and Computational Efficiency

Real-time performance and computational efficiency are key to ensuring vehicle safety and efficiency in autonomous driving trajectory prediction. Real-time performance means that the model can predict the future trajectories of surrounding traffic participants within a very short time, and computational efficiency refers to its ability to complete the computation quickly under limited hardware resources. These two requirements are in tension: trajectory prediction must finish within a very short time to ensure vehicle safety and driving efficiency, yet the computational complexity of high-precision models conflicts with the limited hardware resources available on the vehicle.

6.2. Future Research Directions

The future research directions are proposed to directly address the core challenges outlined in Section 6.1. The mapping between these challenges and directions is summarized in Table 8, which provides a structured overview of the proposed solutions. Each direction is then elaborated in detail below, with discussions on specific technical pathways and current limitations.

Table 8.

Mapping between Challenges and Future Research Directions.

- Interactive Game Theory and Embodied Intelligence

Current interaction modeling mostly relies on data-driven implicit learning (such as GNNs) and lacks explicit descriptions of game-theoretic decisions. In the future, it is necessary to combine multi-agent reinforcement learning (MARL) with cognitive theory to construct interpretable interaction models. For example, a hierarchical game-theoretic framework can be designed: the upper level utilizes inverse reinforcement learning (IRL) or Bayesian inference to reason about the intentions and rewards of other vehicles; the lower level then employs MARL or model predictive control (MPC) to optimize collaborative and competitive strategies in real time [143]. This creates a closed loop of “perception-intention reasoning-decision-prediction”.

Additionally, embodied intelligence (Embodied AI) can be introduced, where the predictive model is not just a passive observer but is integrated with a vehicle dynamics model and perception simulator. This allows the model to understand the physical constraints and consequences of actions through interaction and simulation, leading to more physically plausible and causal predictions.

The primary application of this direction is in the development of more transparent and trustworthy autonomous vehicles (AVs), particularly in unstructured and complex scenarios. This can significantly reduce ambiguous situations and improve traffic flow efficiency and safety in urban environments.
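The upper-level intention reasoning described in this direction can be sketched as a Bayesian update over a discrete set of intentions. The sketch assumes a softmax-rational (Boltzmann) observation model in which an agent is more likely to take actions with higher reward under its true intention; the reward table, the `rationality` parameter, and the function name are hypothetical and are not taken from [143].

```python
import numpy as np

def update_intent_belief(prior, action_values, observed_action, rationality=1.0):
    """Bayes update of P(intent) after observing one action.

    action_values[i, a] is an assumed reward of action a under intent i.
    """
    logits = rationality * np.asarray(action_values, dtype=float)
    logits -= logits.max(axis=1, keepdims=True)            # numerical stability
    likelihood = np.exp(logits)
    likelihood /= likelihood.sum(axis=1, keepdims=True)    # P(action | intent)
    posterior = np.asarray(prior, dtype=float) * likelihood[:, observed_action]
    return posterior / posterior.sum()
```

For example, with intents (yield, go), actions (brake, keep, accelerate), and rewards `[[2, 1, 0], [0, 1, 2]]`, observing a braking action moves a uniform prior strongly toward "yield", while observing "keep" leaves it unchanged. The resulting belief is what a lower-level planner such as MPC would condition on.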

- Reduction and Dynamic Fusion of High-Precision Maps

To reduce reliance on static high-precision maps, real-time map construction technology needs to be developed. By integrating vehicle sensors and Vehicle-to-Everything (V2X) communication, the road topology can be dynamically updated. Vehicles and roadside units (RSUs) can share their locally perceived bird's-eye-view (BEV) map snippets or detected objects with each other [144]. By fusing these distributed perceptions, a vehicle can obtain a collective field of view that extends far beyond its own sensor range. Crowdsourced mapping can aggregate map changes detected by fleets of vehicles into a cloud-based dynamic map service, enabling continuous, automated map updates. Furthermore, a map-free prediction paradigm should be developed further: a vision-language model (VLM) can parse the semantics of traffic rules and generate rule embedding vectors that replace the traditional map input.

Significantly reducing reliance on expensive and hard-to-maintain HD maps has substantial practical value. AVs could operate in suburban, rural, or newly constructed areas where HD maps are unavailable. Real-time map building and V2X fusion allow AVs to adapt immediately without waiting for map updates, thus enhancing the robustness and geographical scalability of autonomous driving services.
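The fusion of distributed perceptions mentioned in this direction can be illustrated with a classical log-odds update on occupancy grids. The sketch assumes that the BEV grids from the ego vehicle and an RSU are already registered in a common frame, that the observers are independent, and that unobserved cells carry the prior probability; the function names are hypothetical, and learned feature-level fusion as used in recent work would look different.

```python
import numpy as np

def to_log_odds(p):
    """Convert probabilities to log-odds, clipping to avoid infinities."""
    p = np.clip(p, 1e-6, 1.0 - 1e-6)
    return np.log(p / (1.0 - p))

def fuse_occupancy(grids, prior=0.5):
    """Fuse aligned occupancy-probability grids from several observers."""
    prior_lo = to_log_odds(np.asarray(prior))
    # Each observer adds its evidence relative to the prior.
    total = prior_lo + sum(to_log_odds(np.asarray(g, dtype=float)) - prior_lo for g in grids)
    return 1.0 / (1.0 + np.exp(-total))
```

Cells seen by only one observer keep that observer's estimate, which is how the fused grid extends beyond the ego sensor range, while cells seen by both become more certain.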

- Closed-Loop Error Correction for Long-Term Prediction

To address the issue of error accumulation, a neural-symbolic hybrid system can be constructed in conjunction with world models to simulate the dynamic evolution of traffic scenarios. For example, the NEST model [145] combines small-world networks with hypergraphs and introduces neuromodulators, aiming to capture the intricate relationships among traffic participants efficiently and accurately; it is particularly suitable for high-density urban environments. Moreover, a closed-loop prediction framework can be established, in which the prediction results are fed back to the planning module and trajectory deviations are corrected through online re-planning.
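The effect of closing the loop can be illustrated with a toy comparison between a single long-horizon prediction and a receding-horizon scheme that re-anchors on fresh observations. The circular-arc ground truth, the constant-velocity predictor, and all function names are assumptions chosen to keep the sketch small; they stand in for a real agent and a learned predictor.

```python
import numpy as np

def true_position(step, dt=0.1, speed=10.0, yaw_rate=0.3):
    """Ground truth: constant-speed motion on a circular arc (a stand-in for a real agent)."""
    heading = yaw_rate * step * dt
    radius = speed / yaw_rate
    return np.array([radius * np.sin(heading), radius * (1.0 - np.cos(heading))])

def constant_velocity_predict(prev_pos, curr_pos, steps_ahead):
    """Deliberately simple predictor: extrapolate the last observed displacement."""
    return curr_pos + steps_ahead * (curr_pos - prev_pos)

def open_loop_error(horizon):
    """Predict `horizon` steps ahead once, from the first two observations."""
    pred = constant_velocity_predict(true_position(0), true_position(1), horizon)
    return np.linalg.norm(pred - true_position(1 + horizon))

def closed_loop_error(horizon, replan_every=5):
    """Re-anchor on fresh observations every `replan_every` steps; return the worst error seen."""
    worst = 0.0
    for start in range(1, 1 + horizon, replan_every):
        for ahead in range(1, replan_every + 1):
            pred = constant_velocity_predict(
                true_position(start - 1), true_position(start), ahead
            )
            worst = max(worst, np.linalg.norm(pred - true_position(start + ahead)))
    return worst
```

Over a 3 s horizon (30 steps of 0.1 s), the open-loop error of this mismatched predictor grows to several meters, whereas re-anchoring every 0.5 s keeps the worst-case error below one meter. The predictor itself is unchanged; only the feedback of new observations limits how far its errors can accumulate.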