Intelligent Fault Diagnosis for Cross-Domain Few-Shot Learning of Rotating Equipment Based on Mixup Data Augmentation

Abstract

1. Introduction

2. Theory of Mixup Data Augmentation

3. Cross-Domain Few-Shot Intelligent Fault Diagnosis Model Based on Mixup Data Augmentation

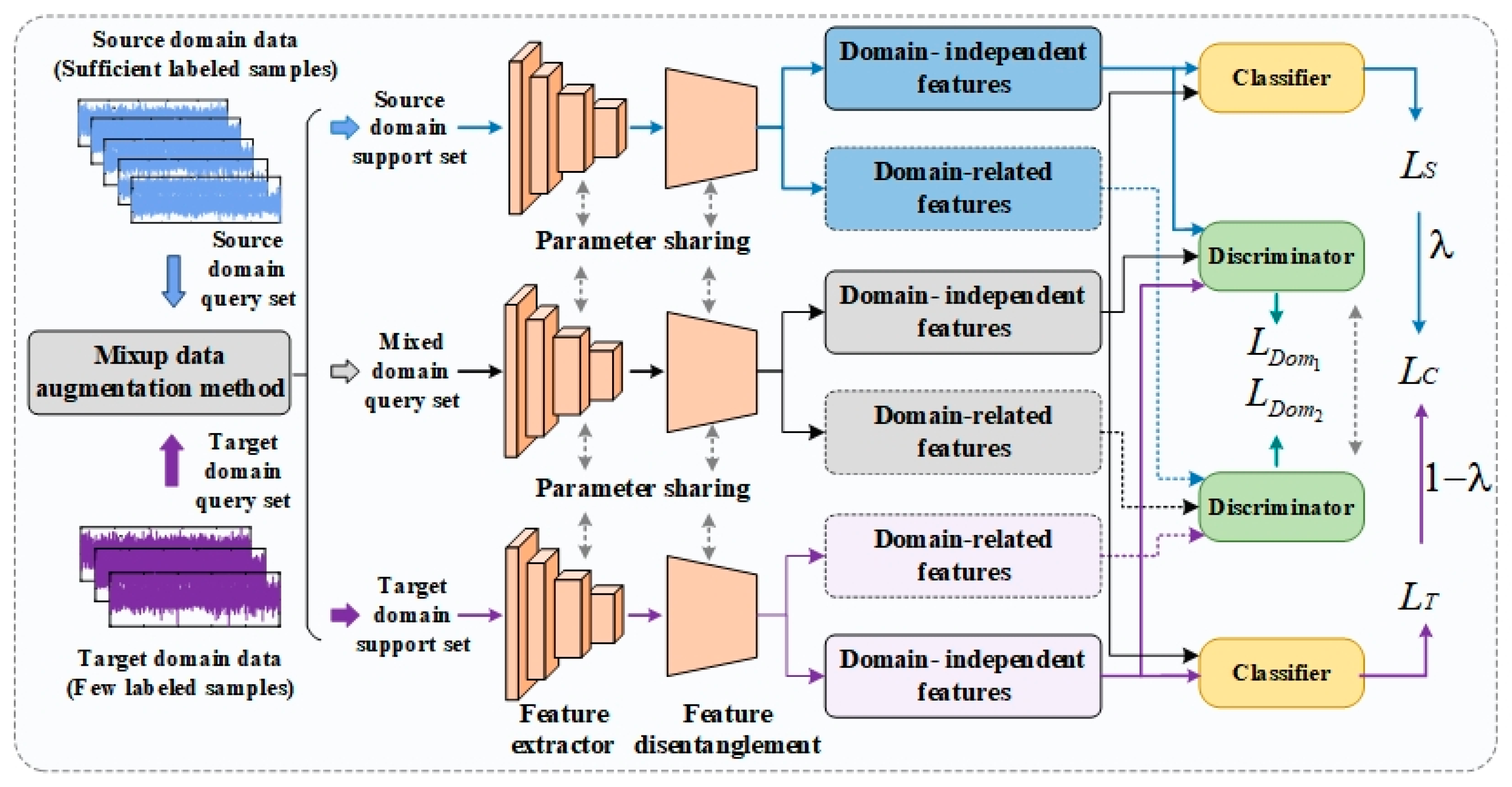

3.1. Overall Framework of the Proposed Method

3.2. Mixup Data Augmentation Method

3.3. Feature Decoupling Module Based on Self-Attention

3.4. Parameter Updating

3.4.1. Sample Classification Loss

3.4.2. Domain Classification Loss

3.4.3. Integrated Loss Function

3.5. Algorithm Implementation Process

| Algorithm 1: Cross-domain few-shot intelligent fault diagnosis algorithm based on Mixup data augmentation | |
|---|---|
| Input: | Source domain dataset ; Target domain dataset |
| Output: | Trained feature extractor , Feature decoupling module , Domain discriminator , Classifier |
| 1: | //Network model parameter initialization |
| 2: | While not done do |
| 3: | //Sample the support set and query set from the source domain dataset |
| 4: | //Sample the support set and query set from the target domain dataset |
| 5: | //Obtain the mixed-domain query set |
| 6: | //Extract domain-independent features according to Equation (7) |
| 7: | //Extract domain-related features according to Equation (7) |
| 9: | Calculate the source domain classification loss using , according to Equation (8) |
| 10: | Calculate the target domain classification loss using , according to Equation (9) |
| 11: | //Calculate the sample classification loss |
| 12: | Calculate the loss using , , according to Equation (11) |
| 13: | Calculate the loss using , , according to Equation (12) |
| 14: | |
| 15: | |
| 16: | end while |
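Steps 3 to 5 of Algorithm 1 (episodic sampling of support and query sets in each domain, then forming a mixed-domain query set) can be illustrated with the minimal sketch below. The paper's own mixing equations are not recoverable from this text, so the sketch assumes standard Mixup (Zhang et al.): a coefficient λ ~ Beta(α, α), a convex combination of a source query sample with a randomly paired target query sample, and the same combination of their one-hot labels. The function names, α = 0.2, the 1024-point segment length, the four shared fault classes and the random pairing are all hypothetical choices, and the signals are synthetic noise rather than bearing data.

```python
import numpy as np


def sample_episode(x, y, k_shot, n_query, rng):
    """Draw k_shot support and n_query query samples for every class in y.

    Assumes both domains share the same integer fault labels 0..C-1.
    """
    sx, sy, qx, qy = [], [], [], []
    for c in np.unique(y):
        idx = rng.permutation(np.flatnonzero(y == c))
        if idx.size < k_shot + n_query:
            raise ValueError(f"class {c} has only {idx.size} samples")
        sx.append(x[idx[:k_shot]])
        qx.append(x[idx[k_shot:k_shot + n_query]])
        sy.extend([c] * k_shot)
        qy.extend([c] * n_query)
    return np.concatenate(sx), np.array(sy), np.concatenate(qx), np.array(qy)


def mixed_domain_query(qx_s, qy_s, qx_t, qy_t, n_classes, alpha, rng):
    """Standard Mixup between source and randomly paired target query samples."""
    lam = rng.beta(alpha, alpha)                 # mixing coefficient in [0, 1]
    perm = rng.permutation(len(qx_t))            # hypothetical random pairing
    onehot = np.eye(n_classes)
    x_mix = lam * qx_s + (1.0 - lam) * qx_t[perm]
    y_mix = lam * onehot[qy_s] + (1.0 - lam) * onehot[qy_t[perm]]
    return x_mix, y_mix, lam


def soft_cross_entropy(logits, y_soft):
    """Cross-entropy against soft (mixed) labels, averaged over the batch."""
    z = logits - logits.max(axis=1, keepdims=True)       # numerical stability
    log_p = z - np.log(np.exp(z).sum(axis=1, keepdims=True))
    return float(-(y_soft * log_p).sum(axis=1).mean())


rng = np.random.default_rng(0)
# Synthetic stand-ins for vibration segments: 4 classes of 1024-point signals.
xs, ys = rng.normal(size=(400, 1024)), np.repeat(np.arange(4), 100)  # 100/class
xt, yt = rng.normal(size=(40, 1024)), np.repeat(np.arange(4), 10)    # 10/class
_, _, qxs, qys = sample_episode(xs, ys, k_shot=5, n_query=5, rng=rng)
_, _, qxt, qyt = sample_episode(xt, yt, k_shot=5, n_query=5, rng=rng)
x_mix, y_mix, lam = mixed_domain_query(qxs, qys, qxt, qyt, 4, alpha=0.2, rng=rng)
loss = soft_cross_entropy(np.zeros((len(x_mix), 4)), y_mix)  # uniform logits
print(x_mix.shape, y_mix.shape, round(loss, 4))  # (20, 1024) (20, 4) 1.3863
```

Because each mixed label still sums to one, uniform logits give a loss of ln 4 regardless of λ, which is a convenient sanity check. In a real pipeline the logits would come from the classifier applied to features of `x_mix`.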

4. Experimental Verification

4.1. Experimental Setup

4.2. Comparison Methods

- (1) Comparison Method 1 (Single ViT): This method uses the same feature extractor and classifier as the proposed method but updates the network parameters using only labeled source-domain samples.

- (2) Comparison Method 2 (Prototypical Network): This method performs fault diagnosis with a prototypical network, using the same base network models for the feature extractor and classifier as the proposed method. Its network parameters are updated using labeled target-domain samples.

- (3) Comparison Method 3 (Without Mixup): This method removes both the Mixup data augmentation and the subsequent feature processing operations of the proposed method. It uses the same feature extractor and classifier as the proposed method and updates the network parameters using a large number of labeled source-domain samples together with a small number of labeled target-domain samples.
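The prototypical-network baseline (Comparison Method 2) follows the usual scheme: each class prototype is the mean embedding of that class's support samples, and a query is assigned to the class whose prototype is nearest. The minimal sketch below assumes squared Euclidean distance with a softmax over negative distances, which is the common formulation; the function names are hypothetical and the 2-D vectors stand in for feature-extractor outputs.

```python
import numpy as np


def class_prototypes(support_emb, support_y, n_classes):
    """Prototype of class c = mean embedding of its support samples."""
    return np.stack([support_emb[support_y == c].mean(axis=0) for c in range(n_classes)])


def classify_by_prototype(query_emb, protos):
    """Softmax over negative squared Euclidean distances to each prototype."""
    d2 = ((query_emb[:, None, :] - protos[None, :, :]) ** 2).sum(axis=-1)
    logits = -d2 - (-d2).max(axis=1, keepdims=True)  # shift for stability
    probs = np.exp(logits)
    probs /= probs.sum(axis=1, keepdims=True)
    return probs.argmax(axis=1), probs


# Hand-made 2-D embeddings standing in for feature-extractor outputs.
support_emb = np.array([[0.0, 0.0], [0.0, 2.0], [4.0, 0.0], [4.0, 2.0]])
support_y = np.array([0, 0, 1, 1])
protos = class_prototypes(support_emb, support_y, n_classes=2)   # [[0, 1], [4, 1]]
pred, probs = classify_by_prototype(np.array([[1.0, 1.0], [3.5, 0.0]]), protos)
print(pred)  # [0 1]
```

Here the first query lies at squared distance 1 from prototype 0 and 9 from prototype 1, so it is assigned to class 0; the second is closer to prototype 1 (1.25 versus 13.25).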

4.3. Test Case 1

4.3.1. Dataset Description of Test Case 1

1. Laboratory Bearing Dataset

2. Jiangnan University (JNU) Bearing Dataset

4.3.2. Experimental Results and Analysis of Test Case 1

4.4. Test Case 2

4.4.1. Dataset Description of Test Case 2

1. Case Western Reserve University (CWRU) Bearing Dataset

2. Huazhong University of Science and Technology (HUST) Bearing Dataset

4.4.2. Experimental Results and Analysis of Test Case 2

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Domain | Source Domain | | Target Domain | |
|---|---|---|---|---|
| Dataset | Laboratory bearing dataset | | JNU bearing dataset | |
| Working condition | Speed | Number of samples | Speed | Number of samples |
| Task 1 | 1500 r/min | 100 | 1000 r/min | 5/10 |
| Task 2 | 900 r/min | 100 | 1000 r/min | 5/10 |
| Task 3 | 1500 r/min | 100 | 600 r/min | 5/10 |
| Task 4 | 900 r/min | 100 | 600 r/min | 5/10 |

| Method | Metric | Task 1 | Task 2 | Task 3 | Task 4 |
|---|---|---|---|---|---|
| Comparison method 1 | Average accuracy (%) | 76.63 | 75.05 | 76.32 | 74.84 |
| | Standard deviation | 2.9417 | 2.7870 | 2.4792 | 3.0078 |
| Comparison method 2 | Average accuracy (%) | 85.24 | 83.40 | 82.06 | 80.53 |
| | Standard deviation | 1.6483 | 1.8227 | 1.7621 | 1.8890 |
| Comparison method 3 | Average accuracy (%) | 85.95 | 85.79 | 87.58 | 87.26 |
| | Standard deviation | 1.0729 | 1.3679 | 1.0492 | 1.8878 |
| Proposed method | Average accuracy (%) | 87.32 | 86.21 | 89.69 | 91.21 |
| | Standard deviation | 0.5824 | 0.4878 | 0.5625 | 1.0325 |
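As a worked check on the Test Case 1 table whose proposed-method accuracies are 87.32%, 86.21%, 89.69% and 91.21%, the short sketch below computes, per task, how far the proposed method's average accuracy lies above the best comparison method. The accuracy values are copied from that table; the helper name `margin_over_best_baseline` is hypothetical, and the margins are plain differences in percentage points with no significance test implied.

```python
# Average accuracies (%) copied from the Test Case 1 results table above.
acc = {
    "Comparison method 1": [76.63, 75.05, 76.32, 74.84],
    "Comparison method 2": [85.24, 83.40, 82.06, 80.53],
    "Comparison method 3": [85.95, 85.79, 87.58, 87.26],
    "Proposed method": [87.32, 86.21, 89.69, 91.21],
}


def margin_over_best_baseline(acc, proposed="Proposed method"):
    """Per-task gap (percentage points) between the proposed method and the best baseline."""
    baselines = [v for k, v in acc.items() if k != proposed]
    best = [max(task_scores) for task_scores in zip(*baselines)]
    return [round(p - b, 2) for p, b in zip(acc[proposed], best)]


margins = margin_over_best_baseline(acc)
print(margins)  # [1.37, 0.42, 2.11, 3.95]
```

In every task the strongest baseline is Comparison Method 3, so these margins isolate the contribution of the Mixup augmentation and the subsequent feature processing under this test setting.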

| Method | Metric | Task 1 | Task 2 | Task 3 | Task 4 |
|---|---|---|---|---|---|
| Comparison method 1 | Average accuracy (%) | 83.06 | 81.99 | 82.31 | 82.16 |
| | Standard deviation | 2.4725 | 1.8354 | 2.1887 | 2.0171 |
| Comparison method 2 | Average accuracy (%) | 88.39 | 90.34 | 90.18 | 89.17 |
| | Standard deviation | 1.2595 | 1.3865 | 1.3298 | 1.5010 |
| Comparison method 3 | Average accuracy (%) | 92.61 | 94.39 | 92.28 | 92.78 |
| | Standard deviation | 1.4948 | 1.2964 | 1.4402 | 1.2025 |
| Proposed method | Average accuracy (%) | 98.33 | 98.62 | 95.44 | 96.28 |
| | Standard deviation | 0.3917 | 0.3931 | 0.4153 | 0.5707 |

| Domain | Source Domain | | Target Domain | |
|---|---|---|---|---|
| Dataset | CWRU bearing dataset | | HUST bearing dataset | |
| Working condition | Load | Number of samples | Speed | Number of samples |
| Task 1 | 1 HP | 100 | 40 Hz | 5/10 |
| Task 2 | 3 HP | 100 | 40 Hz | 5/10 |
| Task 3 | 1 HP | 100 | 60 Hz | 5/10 |
| Task 4 | 3 HP | 100 | 60 Hz | 5/10 |

| Method | Metric | Task 1 | Task 2 | Task 3 | Task 4 |
|---|---|---|---|---|---|
| Comparison method 1 | Average accuracy (%) | 81.79 | 81.26 | 80.31 | 82.04 |
| | Standard deviation | 2.4932 | 1.8034 | 2.4183 | 1.5228 |
| Comparison method 2 | Average accuracy (%) | 87.46 | 87.79 | 88.16 | 88.42 |
| | Standard deviation | 1.5014 | 1.7103 | 1.6727 | 1.5701 |
| Comparison method 3 | Average accuracy (%) | 91.11 | 91.84 | 93.16 | 91.05 |
| | Standard deviation | 1.0743 | 1.3005 | 1.0339 | 1.3831 |
| Proposed method | Average accuracy (%) | 95.05 | 93.26 | 95.42 | 92.21 |
| | Standard deviation | 0.6080 | 0.3964 | 0.4863 | 0.5426 |

| Method | Metric | Task 1 | Task 2 | Task 3 | Task 4 |
|---|---|---|---|---|---|
| Comparison method 1 | Average accuracy (%) | 84.50 | 84.74 | 83.91 | 85.01 |
| | Standard deviation | 1.6235 | 1.5964 | 1.5833 | 2.1690 |
| Comparison method 2 | Average accuracy (%) | 89.94 | 90.50 | 92.17 | 93.28 |
| | Standard deviation | 1.1440 | 1.3615 | 1.4636 | 1.5947 |
| Comparison method 3 | Average accuracy (%) | 96.11 | 95.61 | 96.89 | 96.88 |
| | Standard deviation | 1.0688 | 1.0874 | 0.9196 | 1.0940 |
| Proposed method | Average accuracy (%) | 98.83 | 98.95 | 99.55 | 99.28 |
| | Standard deviation | 0.3265 | 0.2095 | 0.2828 | 0.2812 |

| Method | N | IF | RF | OF |
|---|---|---|---|---|
| Comparison method 1 | 0.7357 | 0.8853 | 1.0000 | 0.8850 |
| Comparison method 2 | 0.8293 | 0.9243 | 0.9564 | 0.8972 |
| Comparison method 3 | 0.9573 | 0.9819 | 1.0000 | 0.9782 |
| Proposed method | 0.9890 | 0.9971 | 1.0000 | 0.9890 |

| Method | N | IF | RF | OF |
|---|---|---|---|---|
| Comparison method 1 | 0.9445 | 0.7556 | 0.9074 | 0.8519 |
| Comparison method 2 | 0.9111 | 0.8112 | 0.9667 | 0.9278 |
| Comparison method 3 | 0.9815 | 0.9389 | 1.0000 | 0.9889 |
| Proposed method | 0.9972 | 0.9778 | 1.0000 | 1.0000 |

| Method | N | IF | RF | OF |
|---|---|---|---|---|
| Comparison method 1 | 0.8208 | 0.7476 | 0.9203 | 0.8368 |
| Comparison method 2 | 0.8079 | 0.8534 | 0.9668 | 0.9122 |
| Comparison method 3 | 0.9569 | 0.9548 | 1.0000 | 0.9780 |
| Proposed method | 0.9917 | 0.9888 | 1.0000 | 0.9890 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Yu, K.; Li, Y.; Zhan, Q.; Zhang, Y.; Xing, B. Intelligent Fault Diagnosis for Cross-Domain Few-Shot Learning of Rotating Equipment Based on Mixup Data Augmentation. Machines 2025, 13, 807. https://doi.org/10.3390/machines13090807

Yu K, Li Y, Zhan Q, Zhang Y, Xing B. Intelligent Fault Diagnosis for Cross-Domain Few-Shot Learning of Rotating Equipment Based on Mixup Data Augmentation. Machines. 2025; 13(9):807. https://doi.org/10.3390/machines13090807

Chicago/Turabian Style: Yu, Kun, Yan Li, Qiran Zhan, Yongchao Zhang, and Bin Xing. 2025. "Intelligent Fault Diagnosis for Cross-Domain Few-Shot Learning of Rotating Equipment Based on Mixup Data Augmentation" Machines 13, no. 9: 807. https://doi.org/10.3390/machines13090807

APA Style: Yu, K., Li, Y., Zhan, Q., Zhang, Y., & Xing, B. (2025). Intelligent Fault Diagnosis for Cross-Domain Few-Shot Learning of Rotating Equipment Based on Mixup Data Augmentation. Machines, 13(9), 807. https://doi.org/10.3390/machines13090807