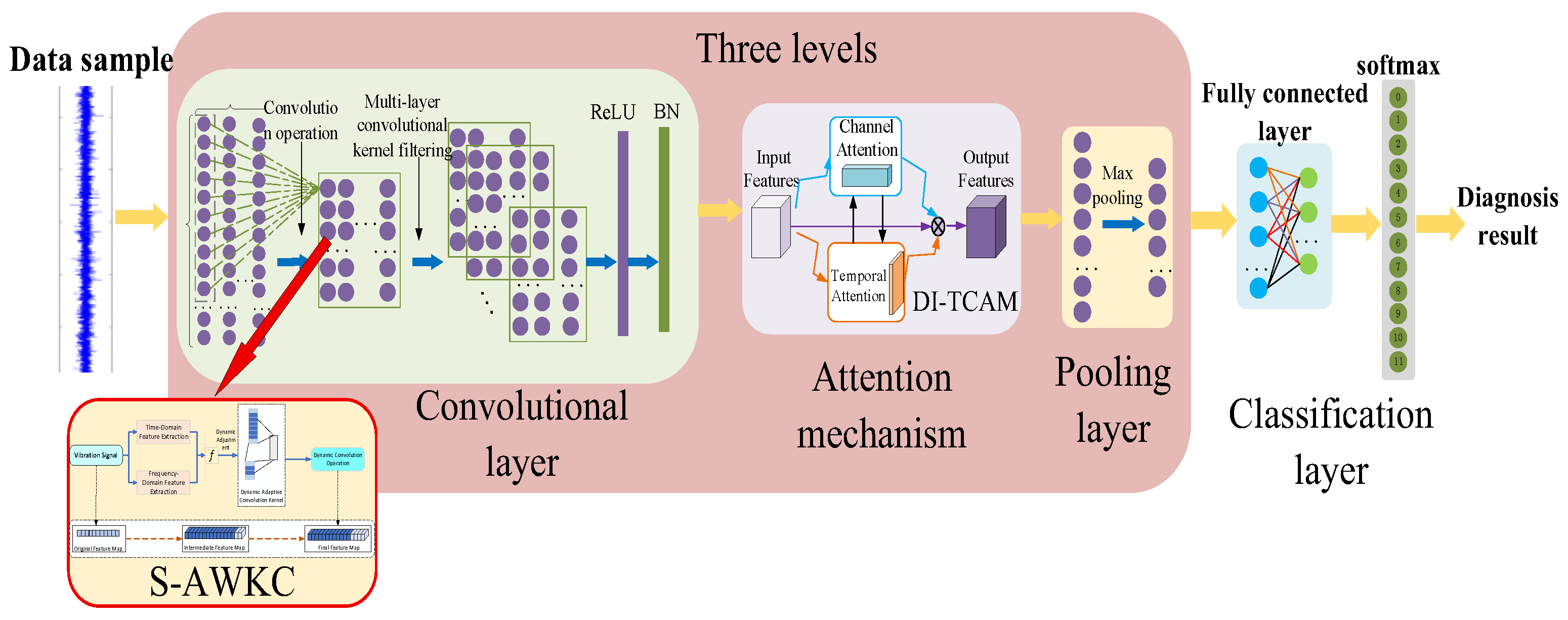

2.2. S-AWKC Module

In the proposed SAWCA-Net model, the convolutional layer serves as the front-end feature extractor and is responsible for extracting key time-series structural features from complex, non-stationary one-dimensional vibration signals. Traditional CNNs usually adopt fixed-size convolutional kernels. Although they are good at recognizing local patterns, their receptive field is limited, which makes it difficult to capture periodic weak fault features in the signal. Under strong noise interference in particular, they are prone to insufficient feature coverage or to overfitting local pseudo-features, which limits the diagnostic accuracy and generalization performance of the model.

To solve these problems, this paper designs a spectrum-guided adaptive wide kernel convolution (S-AWKC) module that integrates spectral statistics. The module uses statistical characteristics of the input signal in both the time and frequency domains (such as energy, kurtosis, spectral entropy, and main-frequency amplitude) to dynamically adjust the receptive field width of the convolution kernel, and it uses the spectral structure to guide shifts of the sampling positions. The convolution kernel can therefore adapt its sensing range and localization at different time positions. This strategy improves the model's ability to represent multi-scale periodic structures and makes feature extraction more stable under strong background noise. The overall structure of the S-AWKC module is shown in Figure 2; it consists of the following three parts:

(1) Spectral feature extraction and statistical modeling in the spectral domain

Let the original input signal be $x(t)$, $t = 1, 2, \ldots, T$. It is transformed into the frequency domain with the fast Fourier transform (FFT) to obtain the spectral representation $X(f)$. Several representative statistics are then extracted from $X(f)$ and stacked into a spectral feature vector whose components are the amplitude of the main frequency, the spectral entropy, the spectral centroid, and the energy in the frequency domain. The definitions of these spectral characteristics follow common practice in classic digital signal processing textbooks [19, 20] and in the vibration fault diagnosis literature.
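The four spectral statistics can be sketched as follows, assuming common textbook forms: the main-frequency amplitude as the largest non-DC magnitude of the one-sided spectrum, the spectral entropy as the Shannon entropy of the normalized power spectrum, the spectral centroid as the magnitude-weighted mean frequency, and the energy as the sum of squared one-sided magnitudes. The function names `magnitude_spectrum` and `spectral_features`, the sampling-rate argument `fs`, and these normalizations are illustrative assumptions rather than the paper's code; a naive DFT stands in for the FFT so that the example needs only the Python standard library.

```python
import cmath
import math


def magnitude_spectrum(x):
    """One-sided DFT magnitudes |X(k)| for k = 0..T//2 (naive O(T^2) DFT for clarity)."""
    T = len(x)
    return [
        abs(sum(x[t] * cmath.exp(-2j * math.pi * k * t / T) for t in range(T)))
        for k in range(T // 2 + 1)
    ]


def spectral_features(x, fs=1.0):
    """Return (main-frequency amplitude, spectral entropy, spectral centroid, energy).

    Assumes a real signal of length >= 2 that is not all zeros
    (the normalizations divide by spectral sums).
    """
    T = len(x)
    mag = magnitude_spectrum(x)
    freqs = [k * fs / T for k in range(len(mag))]  # bin k corresponds to k*fs/T Hz
    power = [m * m for m in mag]
    energy = sum(power)  # frequency-domain energy (one-sided, unnormalized)
    # Main-frequency amplitude: largest magnitude, skipping the DC bin
    a_main = max(mag[1:])
    # Spectral entropy: Shannon entropy (in nats) of the normalized power spectrum
    p = [pw / energy for pw in power]
    entropy = -sum(pi * math.log(pi) for pi in p if pi > 0)
    # Spectral centroid: magnitude-weighted mean frequency
    centroid = sum(f * m for f, m in zip(freqs, mag)) / sum(mag)
    return a_main, entropy, centroid, energy
```

For a pure tone the entropy is close to zero and the centroid sits at the tone frequency, while broadband noise spreads the power and raises the entropy; this contrast is what makes the statistics informative about impulsive or periodic fault content.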

(2) Dynamic adjustment strategy of the receptive field

To control the scale of the convolution kernel adaptively, this paper designs a receptive field adjustment function based on joint time-domain and frequency-domain statistics. Time-domain features (such as energy and kurtosis) are combined with the frequency-domain statistical features above into a joint statistical characteristic, on which the receptive field adjustment function is defined. The output of this function dynamically determines the effective width $k_t$ of the convolution kernel at each time position $t$.

The weights of the adjustment function are learnable parameters. They are initialized with Xavier initialization to keep gradient propagation stable in the early stage of training. This strategy not only enlarges the receptive field of the convolution but also helps the model perceive high-frequency disturbances and low-frequency trends jointly, improving the modeling of multi-scale structures. The adaptive receptive field adjustment proposed in this paper draws on the design concepts of deformable convolution and variable dilation convolution [21]. These weight parameters are optimized jointly with the other parameters of SAWCA-Net through supervised learning: the cross-entropy loss between the predicted and true labels is minimized with the backpropagation algorithm.
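One plausible form of the receptive field adjustment is sketched below under explicit assumptions: the joint statistics are combined linearly with learnable weights, squashed by a sigmoid, and mapped to an odd integer width between `k_min` and `k_max`, while local energy and kurtosis are computed over a window around each position. The window size, the width bounds, the sigmoid mapping, and the names `local_energy_and_kurtosis` and `kernel_width` are hypothetical choices, not the paper's exact equations.

```python
import math


def local_energy_and_kurtosis(x, t, half_window=8):
    """Mean-square energy and kurtosis of x over a window centred on position t."""
    w = x[max(0, t - half_window): t + half_window + 1]
    n = len(w)
    mean = sum(w) / n
    energy = sum(v * v for v in w) / n
    var = sum((v - mean) ** 2 for v in w) / n
    # Kurtosis = fourth central moment / variance^2 (about 3 for Gaussian noise)
    kurt = sum((v - mean) ** 4 for v in w) / n / var ** 2 if var > 0 else 0.0
    return energy, kurt


def kernel_width(stats, weights, bias=0.0, k_min=3, k_max=31):
    """Map joint time/frequency statistics to an odd kernel width in [k_min, k_max].

    Hypothetical form: k_t = k_min + round(sigmoid(w . s + b) * (k_max - k_min)).
    """
    z = sum(w * s for w, s in zip(weights, stats)) + bias
    z = max(-60.0, min(60.0, z))  # clamp to avoid overflow in exp
    gate = 1.0 / (1.0 + math.exp(-z))
    k = k_min + round(gate * (k_max - k_min))
    # Keep the width odd so the kernel stays centred on position t
    if k % 2 == 0:
        k = k + 1 if k < k_max else k - 1
    return k
```

In use, the local energy and kurtosis at position `t` would be concatenated with the spectral statistics into `stats` before calling `kernel_width`. A hard integer width like this is not differentiable with respect to the weights, so a trainable layer would need a soft relaxation (for example, interpolating between kernels or dilation rates), which this sketch leaves out.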

(3) Spectral-driven dynamic sampling offset mechanism

To further enhance the ability of the convolution to resolve non-stationary local features, the spectrum-adaptive wide convolution module introduces a spectrum-based dynamic offset mechanism. A lightweight neural network predicts the offset of each convolution position from the spectral characteristics, allowing the sampling positions of the convolution kernel to be adjusted flexibly. In this network, convolution weights and bias terms map the spectral characteristics to the offsets through an activation function. Because the spectral structure of the input signal is taken into account when the offsets are generated, the model can better localize fault features at different positions. The learnable offsets in Equation (4) are initialized to zero so that training starts from the standard sampling positions. These offsets are defined as differentiable parameters and are optimized jointly with all other network parameters during end-to-end supervised training via backpropagation, using the same cross-entropy loss as the main classification task, so that they adapt dynamically to the input features and improve the model's discriminative capability.
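A minimal one-dimensional sketch of spectrum-driven offset sampling follows. It assumes one offset per output position shared by all kernel taps, a tanh activation in the offset head, and linear interpolation at fractional positions (as in deformable convolution); `predict_offsets`, `deformable_conv1d`, `sample_linear`, and the per-position spectral input `spec_seq` are hypothetical names. With zero offsets the layer reduces to an ordinary zero-padded convolution, which mirrors the zero initialization described above.

```python
import math


def sample_linear(x, pos):
    """Read x at a fractional position with linear interpolation (zero outside the signal)."""
    i0 = math.floor(pos)
    frac = pos - i0

    def at(i):
        return x[i] if 0 <= i < len(x) else 0.0

    return (1.0 - frac) * at(i0) + frac * at(i0 + 1)


def predict_offsets(spec_seq, w, b, scale=1.0):
    """Hypothetical offset head: 1-D conv (same padding) + tanh, scaled to +/- scale."""
    half = len(w) // 2
    out = []
    for t in range(len(spec_seq)):
        acc = b
        for j, wj in enumerate(w):
            i = t + j - half
            if 0 <= i < len(spec_seq):
                acc += wj * spec_seq[i]
        out.append(scale * math.tanh(acc))
    return out


def deformable_conv1d(x, kernel, offsets):
    """Tap j at output t samples x at t + (j - half) + offsets[t] (cross-correlation form)."""
    half = len(kernel) // 2
    return [
        sum(kernel[j] * sample_linear(x, t + j - half + offsets[t]) for j in range(len(kernel)))
        for t in range(len(x))
    ]
```

Because the interpolation weights `1 - frac` and `frac` depend smoothly on the offset, gradients can flow back into the offset head during training, which is what allows the offsets to be learned end to end.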

In summary, the spectrum-adaptive wide convolution module integrates multiple time-domain and frequency-domain statistics to jointly adjust the receptive field range of the convolution kernel and adapt the offsets of its sampling positions, strengthening the model's ability to represent periodic weak faults and complex spectral disturbances in non-stationary signals. Compared with a traditional fixed convolutional structure, the module is more flexible and more sensitive to signal characteristics, and it supplies stable, discriminative features to the subsequent attention mechanism, improving the fault recognition performance of the proposed network under complex working conditions.

2.3. Dynamic Interactive Time-Channel Attention Module

To further improve the network's ability to recognize key fault characteristics of rolling bearings in complex backgrounds, this paper designs a dynamic interactive time–channel attention module (DI-TCAM). The module establishes a dual-branch interaction mechanism between the time dimension and the channel dimension to jointly optimize and dynamically adjust the attention weights. This strengthens the model's ability to represent weak periodic structures and multi-channel collaborative features in non-stationary vibration signals and makes feature expression more stable under strong noise interference.

In dynamic time–channel attention, temporal attention and channel attention guide each other, forming a dynamic fusion mechanism during feature weighting. Channel attention describes how the contributions of different channels differ across time periods, while temporal attention enhances the periodic activation response at critical moments. Through bidirectional feedback and interactive modeling, the module highlights the time segments and channel features most relevant to the fault category, improving feature utilization and diagnostic accuracy.

(1) Overall architecture

As shown in Figure 3, the DI-TCAM module consists of a channel attention path and a time attention path. Unlike the traditional parallel design, however, this paper introduces an interactive feedback path that dynamically fuses information and iteratively calibrates the channel and time dimensions, forming a more discriminative closed-loop feature recalibration mechanism.


The input is a feature tensor $X \in \mathbb{R}^{B \times C \times T}$, where $B$ is the batch size, $C$ is the number of channels, and $T$ is the number of time steps. After the convolutional layer extracts the initial features, they are fed into the dynamic time–channel attention module to begin the interactive attention computation.

In the channel attention path, global average pooling (GAP) is first applied along the time dimension to obtain a global descriptor for each channel. This step compresses the time-series information so that the model focuses on the average activation intensity of each channel over the whole time interval. The channel attention weights are then computed from these descriptors by a set of trainable weight matrices and bias terms followed by a nonlinear activation function. The activation is the sigmoid function, which maps each weight into the interval (0, 1); the resulting weight expresses how much each channel contributes to overall feature learning. This way of generating channel attention follows the implementations of the squeeze-and-excitation network (SENet) [22] and the convolutional block attention module (CBAM) [23].

Next, the original feature tensor is reweighted along the channel dimension with the channel attention weights, giving the channel-weighted intermediate feature representation. The weighting is a multiplication broadcast channel by channel, so every time step of a channel is scaled by that channel's weight; this channel recalibration is standard practice in channel attention mechanisms [22].
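The channel path (squeeze by global average pooling, excitation by a linear map and a sigmoid, then channel-wise rescaling) can be sketched for a single sample as follows. The single C×C linear layer and the name `channel_attention` are assumptions: SENet itself uses a two-layer bottleneck, and the exact layer sizes are not given in this section. The batch dimension is omitted for clarity.

```python
import math


def sigmoid(v):
    return 1.0 / (1.0 + math.exp(-v))


def channel_attention(X, W, b):
    """X: C x T nested list (one sample); W: C x C weights; b: length-C bias.

    Returns (channel weights, channel-weighted features).
    """
    C = len(X)
    # Squeeze: global average pooling over time gives one descriptor per channel
    z = [sum(row) / len(row) for row in X]
    # Excitation: linear map + sigmoid yields one weight in (0, 1) per channel
    a = [sigmoid(sum(W[i][j] * z[j] for j in range(C)) + b[i]) for i in range(C)]
    # Recalibration: scale every time step of channel c by a[c] (broadcast over time)
    X_c = [[a[c] * v for v in X[c]] for c in range(C)]
    return a, X_c
```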

Next comes the temporal attention path. Cross-channel average pooling is first applied to the channel-weighted intermediate feature to obtain a descriptor for each time step. This step summarizes the multi-channel features at each time step and captures the overall dynamics of the periodic fluctuations in the vibration signal. A convolutional mapping with learnable weights and a bias term, followed by the activation function, then outputs the time attention weights. These weights express how much each time step contributes to fault identification, guiding the model toward time intervals with pronounced periodic fluctuations or prominent abnormal activations. This temporal attention generation follows the implementation in CBAM [23].
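A matching sketch of the temporal path, assuming a single zero-padded 1-D convolution over the cross-channel mean followed by a sigmoid; the name `temporal_attention`, the kernel `w`, and the bias `b` are hypothetical. CBAM additionally concatenates max pooling along the channel axis, which the text above does not mention, so it is left out.

```python
import math


def temporal_attention(Xc, w, b):
    """Xc: C x T channel-weighted features; w: odd-length 1-D conv kernel; b: bias.

    Returns one weight in (0, 1) per time step.
    """
    C, T = len(Xc), len(Xc[0])
    # Cross-channel average pooling: one descriptor per time step
    u = [sum(Xc[c][t] for c in range(C)) / C for t in range(T)]
    half = len(w) // 2
    a = []
    for t in range(T):
        acc = b
        for j, wj in enumerate(w):
            i = t + j - half
            if 0 <= i < T:  # zero padding keeps the output length equal to T
                acc += wj * u[i]
        a.append(1.0 / (1.0 + math.exp(-acc)))
    return a
```

The convolution lets each time weight depend on a short neighborhood of the pooled signal rather than a single sample, which suits periodic impulses that span several time steps.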

(2) Dynamic interactive feedback mechanism

Whereas traditional designs model time and channel attention independently, the dynamic time–channel attention module adds a cross-branch dynamic interaction feedback mechanism that shares information between the two attention branches and refines them recursively.

Specifically, the time attention weights are first used to guide, in reverse, the re-correction and update of the channel attention, yielding dynamically enhanced channel attention weights. The channel attention weights are in turn fed back to the time attention path to further update and strengthen the time attention weights.

Through this bidirectional feedback, time and channel features are learned collaboratively during training. This strengthens the modeling of local dependencies between channels and improves the model's ability to capture global temporal features of key periodic segments. Especially for complex, variable early weak failure modes and strong noise interference, the mechanism helps avoid the overfitting to local pseudo-features that single-path attention models are prone to, improving the overall stability and robustness of feature recognition.
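A hypothetical instance of the bidirectional feedback, not the paper's exact update: the time weights replace the uniform average when pooling the channel descriptors, the refreshed channel weights replace the uniform average when pooling the time descriptors, and each new weight is averaged with the previous one as a simple form of re-correction. The name `feedback_round`, its parameters, and the averaging rule are all assumptions.

```python
import math


def _sigmoid(v):
    return 1.0 / (1.0 + math.exp(-v))


def feedback_round(X, a_c, a_t, W, b_c, w_t, b_t):
    """One hypothetical round of time <-> channel feedback for a single C x T sample.

    X: C x T features; a_c: C channel weights; a_t: T time weights (all in (0, 1)).
    W, b_c: channel-branch linear layer; w_t, b_t: time-branch 1-D conv kernel and bias.
    """
    C, T = len(X), len(X[0])
    # Time -> channel: time-weighted pooling replaces the plain temporal mean
    s_t = sum(a_t)
    z = [sum(a_t[t] * X[c][t] for t in range(T)) / s_t for c in range(C)]
    g_c = [_sigmoid(sum(W[i][j] * z[j] for j in range(C)) + b_c[i]) for i in range(C)]
    a_c_new = [0.5 * (old + new) for old, new in zip(a_c, g_c)]  # re-correct channel weights
    # Channel -> time: pooling weighted by the refreshed channel weights
    s_c = sum(a_c_new)
    u = [sum(a_c_new[c] * X[c][t] for c in range(C)) / s_c for t in range(T)]
    half = len(w_t) // 2
    g_t = []
    for t in range(T):
        acc = b_t + sum(wj * u[t + j - half]
                        for j, wj in enumerate(w_t) if 0 <= t + j - half < T)
        g_t.append(_sigmoid(acc))
    a_t_new = [0.5 * (old + new) for old, new in zip(a_t, g_t)]  # re-correct time weights
    return a_c_new, a_t_new
```

Averaging keeps both weight vectors inside (0, 1), so the round can be repeated for a few iterations without the weights saturating; whether the paper iterates more than once is not stated in this section.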

(3) Feature fusion and output

After the final dynamic interaction weights of the channel and time branches are obtained, the dynamic time–channel attention module applies dual joint weighting to the input feature tensor to produce the final output feature tensor. Both weights are applied by broadcast multiplication, so feature importance is adjusted in the channel dimension and in the time dimension, respectively. This dual weighting preserves the weak collaborative information between channels while amplifying the key time intervals in the fault signal, allowing the model to focus adaptively on high-contribution feature regions during training and thereby improving fault discrimination and noise robustness. This joint channel–time weighting is similar to the channel–spatial attention fusion in CBAM [23].
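The dual weighting amounts to scaling each element of the feature map by the product of its channel weight and its time weight, that is, a broadcast outer product of the two weight vectors. A minimal sketch for one C×T sample (the name `joint_weighting` is illustrative):

```python
def joint_weighting(X, a_c, a_t):
    """Y[c][t] = X[c][t] * a_c[c] * a_t[t]: channel and time weights broadcast over a C x T map."""
    return [[X[c][t] * a_c[c] * a_t[t] for t in range(len(a_t))]
            for c in range(len(a_c))]
```

With NumPy arrays the same operation is `X * a_c[:, None] * a_t[None, :]`; an element survives with large magnitude only if both its channel and its time step are judged important.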

In conclusion, the dynamic time–channel attention module integrates importance information from both the temporal and the channel dimensions. Its bidirectional interactive feedback mechanism enables collaborative perception and adaptive weight allocation across these dimensions. The module improves the model's ability to capture weak periodic fault features as well as the robustness and discriminability of features in complex, highly noisy environments, supporting early identification of weak damage and stable performance of the rolling bearing fault diagnosis model under complex working conditions.

2.4. Rolling Bearing Fault Diagnosis Process Based on SAWCA-Net

The SAWCA-Net diagnostic process proposed in this paper is shown in Figure 4.

(1) Data preprocessing: The data acquisition system simultaneously records the vibration signals of rolling bearings in both healthy and faulty states during testing. The raw data are first cleaned by eliminating incorrect values, missing values, and outliers, and amplitude standardization is applied to remove dimensional differences between working conditions. The processed data are then split in a fixed proportion into a training set and a test set for model training and performance verification.

(2) Low-level feature extraction (spectrum-adaptive wide convolution module): The preprocessed training samples are first fed into this module to extract key low-level temporal features. The module uses time-domain and frequency-domain statistics of the input signal (such as energy, spectral entropy, kurtosis, and main-frequency amplitude) to dynamically adjust the receptive field of the convolution kernel, and it introduces a deformable convolution mechanism that shifts the sampling positions according to learned offsets, improving the capture of local features in non-stationary signals. The convolution is followed by ReLU activation and batch normalization (BN), and the resulting initial feature map is passed to the next stage.

(3) Feature enhancement (dynamic time–channel attention module): The initial feature map is sent to this module, which adaptively weights the importance of multi-dimensional features through a bidirectional feedback mechanism between time and channel attention. Channel attention identifies the feature contributions of different channels, while temporal attention strengthens the periodic information in the signal. The two interact and guide each other, improving the model's ability to represent weak periodic disturbances and multi-channel collaborative features. The weighted feature map is then max pooled for dimensionality reduction and redundancy compression.

(4) Local high-order feature extraction: After dimensionality reduction, the feature map is successively input into two sets of stacked lightweight convolutional modules, and small receptive field convolutional kernels are used to further extract local high-order detail features. Each group of convolution is accompanied by ReLU activation and BN normalization operations to enhance the nonlinear fitting ability and training convergence speed of the model.

(5) Local channel attention embedding: After each set of convolution and pooling operations, a lightweight channel attention mechanism is introduced to further enhance the response of important channels, improve the cross-level feature fusion effect, effectively suppress the accumulation of redundant information, and retain key features.

(6) Fault classification output: The final set of convolutional outputs, after being activated by ReLU, is flattened into one-dimensional feature vectors and sent to the fully connected layer and the softmax classifier to complete the identification of the fault category of the rolling bearing.
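Several steps of this process can be sketched with a few small helpers, assuming removal of non-finite readings as the cleaning rule, z-score amplitude standardization, a seeded random split, non-overlapping max pooling, and a single fully connected layer before the softmax. The function names, the 0.8 split ratio, and the pooling size are illustrative assumptions; outlier removal is omitted because its criterion is not specified, and the convolution and attention stages are left out.

```python
import math
import random


def clean_and_standardize(signal):
    """Step (1): drop NaN/inf readings, then z-score the amplitude (assumes non-constant input)."""
    clean = [v for v in signal if math.isfinite(v)]
    mean = sum(clean) / len(clean)
    std = math.sqrt(sum((v - mean) ** 2 for v in clean) / len(clean))
    return [(v - mean) / std for v in clean]


def train_test_split(samples, labels, train_ratio=0.8, seed=0):
    """Step (1): seeded random split into (sample, label) pairs for training and testing."""
    idx = list(range(len(samples)))
    random.Random(seed).shuffle(idx)
    n_train = round(train_ratio * len(idx))
    pairs = [(samples[i], labels[i]) for i in idx]
    return pairs[:n_train], pairs[n_train:]


def max_pool1d(row, k=2):
    """Step (3): non-overlapping max pooling along time (a trailing remainder is dropped)."""
    return [max(row[i:i + k]) for i in range(0, len(row) - k + 1, k)]


def softmax(z):
    m = max(z)  # subtract the max for numerical stability
    e = [math.exp(v - m) for v in z]
    s = sum(e)
    return [v / s for v in e]


def classify(fmap, W, b):
    """Step (6): ReLU -> flatten -> fully connected layer -> softmax for one C x T map."""
    h = [max(0.0, v) for row in fmap for v in row]  # ReLU and flatten in one pass
    logits = [sum(wi * hi for wi, hi in zip(w_row, h)) + bj for w_row, bj in zip(W, b)]
    return softmax(logits)
```

Dropping non-finite readings shortens the series, so for long continuous recordings interpolating the gaps is a common alternative; the choice matters mainly when samples are later cut into fixed-length windows.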