1. Introduction

Bearings are core components of rotating machinery and play an irreplaceable role in critical industrial equipment such as wind turbines, high-speed trains, and aircraft engines [1,2,3]. Their operating condition directly affects the reliability, energy efficiency, and safety of the entire mechanical system [4]. However, because they are exposed for long periods to alternating loads, variable-speed operation, and harsh working conditions, bearings are highly susceptible to failure modes such as fatigue spalling, cracks, and lubrication failure. Undetected faults may lead to cascading equipment damage, unplanned downtime, and significant economic losses. Therefore, developing accurate and efficient bearing fault diagnosis methods is crucial for predictive maintenance and industrial safety [5,6,7].

Traditional fault diagnosis techniques, such as the Fast Fourier Transform (FFT) [8], wavelet analysis [9], and envelope demodulation [10], rely primarily on signal processing to extract fault features from vibration or acoustic data. While these approaches perform well in certain scenarios, they have notable limitations when dealing with non-stationary signals, weak fault signatures, and complex operating conditions. More importantly, their effectiveness depends heavily on expert experience and manual feature engineering, which makes them difficult to adapt to diverse industrial environments.

With the rapid advancement of artificial intelligence, data-driven fault diagnosis methods have demonstrated significant advantages. Deep learning approaches, represented by Convolutional Neural Networks (CNNs) and Recurrent Neural Networks (RNNs), can automatically extract discriminative features directly from raw sensor data [11,12,13]. Li et al. [14] proposed an interpretable attention-based deep learning model for rolling bearing fault diagnosis. The attention mechanism lets the network focus on the fault-related segments of vibration signals, and visualizing the attention weights provides intuitive explanations of its decisions; this mitigates the “black-box” nature of conventional deep learning models and connects them with engineering diagnostic knowledge. Yang et al. [15] introduced a federated semi-supervised transfer fault diagnosis method based on distribution barycenter mediation to overcome the limitations of conventional transfer learning in decentralized data scenarios. However, most existing methods rely on supervised learning, which requires large amounts of labeled fault data for training. This is a major challenge in real-world industrial settings, where fault samples are scarce and annotation costs are prohibitively high.

To overcome this limitation, unsupervised learning techniques have attracted increasing attention because they can learn meaningful representations from unlabeled data. While methods such as Auto Encoders [16], contrastive learning [17], and clustering algorithms [18] have shown promise in detecting unknown fault patterns, they face three critical challenges: (1) limited capacity to model long-range feature dependencies, (2) sensitivity to noise and missing data in industrial environments, and (3) computational inefficiency when processing long vibration sequences.

The Swin Transformer [19,20,21], with its hierarchical shifted window attention mechanism, offers potential solutions through its combination of local feature extraction and global information interaction, which makes it particularly suited to vibration signal analysis. The shifted window mechanism efficiently captures both high-frequency impulse features and long-range periodic patterns, addressing the key challenge of detecting fault signatures that span multiple timescales. The hierarchical architecture also aligns naturally with vibration physics: early-stage faults manifest as localized waveform distortions, while severe faults induce system-wide vibrations. This enables more physically meaningful feature learning than CNNs with fixed receptive fields or RNNs constrained by sequential processing. However, the application of the Swin Transformer to unsupervised bearing fault diagnosis remains underexplored, particularly regarding two key aspects: (a) effective adaptation to 1D vibration signals, and (b) integration with reconstruction-based self-supervised paradigms.

This study addresses these gaps through three contributions. First, to the best of our knowledge, we develop the first masked self-supervised framework specifically designed for Swin Transformer-based bearing fault diagnosis, which enables robust feature learning through a vibration signal reconstruction task [22,23]. Second, we develop a vibration signal masking strategy that preserves critical fault signatures while enabling efficient self-supervised learning. Third, we establish a framework for adapting the Swin Transformer’s architecture to 1D vibration analysis and demonstrate its superiority over conventional methods in capturing both local and global fault patterns. Importantly, the methodology remains computationally efficient and requires no labeled training data, a crucial advantage for real-world industrial applications where labeled fault samples are scarce.

2. Methodology

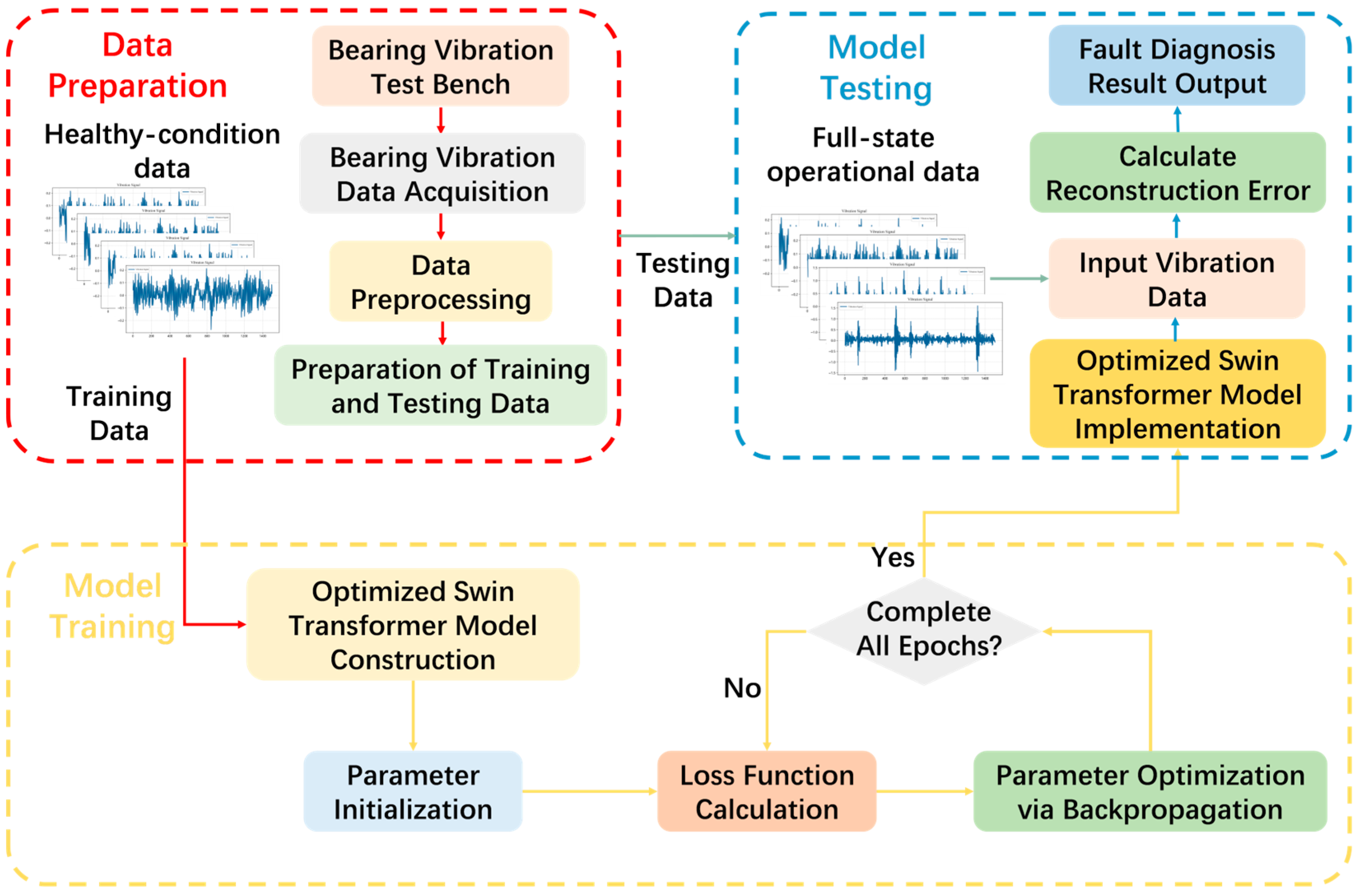

The research framework of this study is illustrated in Figure 1. The proposed method trains a deep neural network on preprocessed vibration signals from healthy bearings only, so no labeled data are required. Through data reconstruction, the model learns the intrinsic structure of healthy data. All label-dependent operations are excluded from data processing, which keeps the learning process fully unsupervised.

In the model training phase, the Huber loss function is used to compute reconstruction errors, and the 3σ criterion establishes the detection threshold. The threshold μ + 3σ is calculated from the reconstruction losses of the healthy training data, where μ and σ are their mean and standard deviation, respectively. This approach assumes that (1) the reconstruction errors of healthy samples approximately follow a normal distribution, and (2) the training data contain no latent faults. During the testing phase, vibration data of unknown health status are fed into the trained neural network. If a sample’s reconstruction error exceeds the threshold, the sample is classified as faulty, which enables unsupervised fault diagnosis.

The 3σ criterion is effective under stationary conditions, but two limitations should be noted: (1) sensitivity to non-Gaussian noise (e.g., impulsive disturbances), and (2) the need for recalibration under variable-speed operation. Because the training set contains only healthy samples, the threshold μ + 3σ covers the range of loss fluctuations under typical operating conditions; if the healthy losses are indeed normally distributed, only about 0.13% of healthy samples are expected to exceed it. In practical applications, test data whose reconstruction loss exceeds this threshold are identified as anomalous or faulty.
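The threshold rule can be sketched in a few lines of NumPy. This is a minimal illustration that assumes per-sample reconstruction losses are already available as arrays; the function names `fit_3sigma_threshold` and `classify` are hypothetical, and the use of the population standard deviation (`ddof=0`) is an assumption, since the text does not specify it.

```python
import numpy as np


def fit_3sigma_threshold(healthy_losses: np.ndarray) -> float:
    """Return mu + 3*sigma of reconstruction losses from healthy training data."""
    mu = float(np.mean(healthy_losses))
    sigma = float(np.std(healthy_losses))  # population std (ddof=0); an assumption
    return mu + 3.0 * sigma


def classify(test_losses: np.ndarray, threshold: float) -> np.ndarray:
    """Label a sample faulty (1) if its loss exceeds the threshold, else healthy (0)."""
    return (np.asarray(test_losses) > threshold).astype(int)


# Usage with toy loss values
thr = fit_3sigma_threshold(np.array([0.10, 0.12, 0.11, 0.09, 0.13]))  # about 0.1524
labels = classify(np.array([0.105, 0.40, 0.16]), thr)  # -> array([0, 1, 1])
```

The strict `>` comparison mirrors the "exceeds the threshold" rule; only healthy losses enter the fit, which is what makes the procedure label-free.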

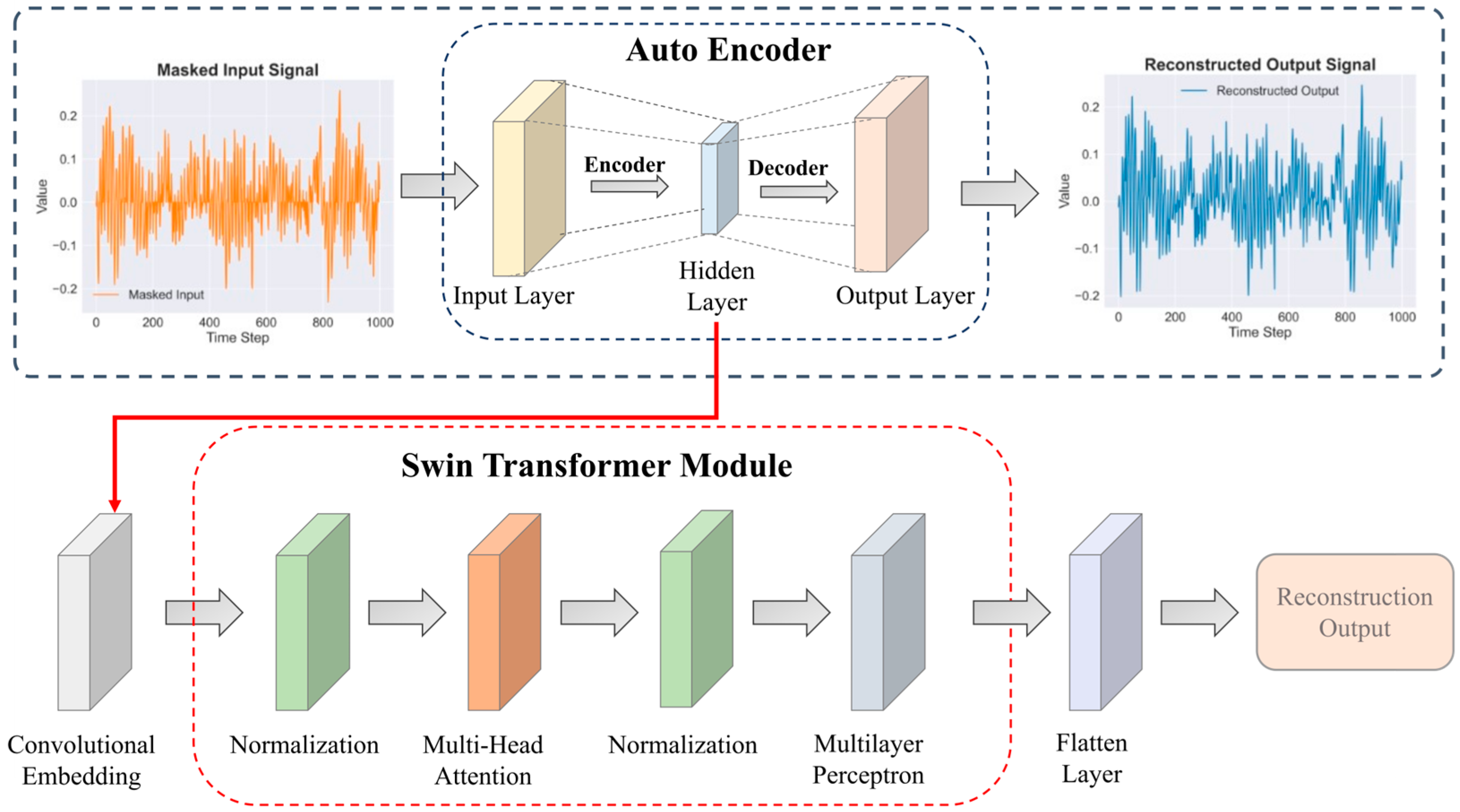

This study presents an intelligent bearing fault diagnosis model leveraging masked self-supervised learning. The model’s architecture, illustrated in Figure 2, integrates a mask-reconstruction self-supervised learning task with a Swin Transformer neural network to enhance diagnostic accuracy and robustness.

The self-supervised learning component employs an Auto Encoder architecture consisting of an input layer, a hidden layer, and an output layer. The model applies random masking to standardized vibration signals to mimic sensor failures or data loss in real-world operating conditions. By minimizing reconstruction error, the Auto Encoder learns to recover masked data using visible information. The features extracted from the hidden layer are fed into the Swin Transformer for subsequent feature reconstruction tasks.

To clarify the architecture: the model operates as a sequential pipeline where the Auto Encoder serves solely as a self-supervised pre-training module. Once pre-trained, its encoder is fixed and functions as a feature extractor, producing robust embeddings from the input signals. These embeddings are then passed to the Swin Transformer module, which performs the subsequent hierarchical feature reconstruction and analysis, leveraging its attention mechanism to model complex dependencies in the data. This two-stage design effectively separates representation learning from advanced feature processing.

The Swin Transformer is a Transformer-based model whose core components include a convolutional (patch) embedding layer, window-based and shifted-window multi-head self-attention, and multilayer perceptron (feedforward) blocks. By combining local feature extraction within windows with global information interaction across windows, the model can effectively capture long-range dependencies in vibration signals. Compared with traditional methods, the Swin Transformer demonstrates stronger generalization and higher recognition accuracy in fault diagnosis under complex operating conditions.
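The text does not specify how attention windows are laid out on a 1D token sequence. The sketch below shows one plausible 1D analogue of Swin's window partitioning and cyclic shift, assuming the signal has already been embedded into an `(L, C)` token matrix with `L` divisible by the window size; all function names are hypothetical, and the attention computation itself is omitted.

```python
import numpy as np


def window_partition_1d(tokens, window):
    """Split an (L, C) token sequence into (L // window, window, C) non-overlapping windows."""
    L, C = tokens.shape
    if L % window != 0:
        raise ValueError("sequence length must be divisible by the window size")
    return tokens.reshape(L // window, window, C)


def window_reverse_1d(windows):
    """Inverse of window_partition_1d: (num_windows, window, C) -> (L, C)."""
    n, w, C = windows.shape
    return windows.reshape(n * w, C)


def shift_tokens(tokens, shift):
    """Cyclic shift along the time axis (negative moves tokens toward the start)."""
    return np.roll(tokens, shift, axis=0)


def shifted_window_mask_1d(L, window):
    """Boolean (num_windows, window, window) mask for the shifted layout.

    True blocks attention between tokens that share a window only because of
    the cyclic shift (end-of-sequence tokens vs. tokens wrapped from the start).
    """
    shift = window // 2
    region = np.zeros(L, dtype=int)
    region[L - window:L - shift] = 1  # original tail tokens that stay at the end
    region[L - shift:] = 2            # tokens wrapped around from the start
    win = region.reshape(L // window, window)
    return win[:, :, None] != win[:, None, :]


# Usage: 8 tokens with 2 channels, window size 4, shift 2
tokens = np.arange(16).reshape(8, 2)
regular = window_partition_1d(tokens, 4)                    # token indices [0..3], [4..7]
shifted = window_partition_1d(shift_tokens(tokens, -2), 4)  # token indices [2..5], [6, 7, 0, 1]
mask = shifted_window_mask_1d(8, 4)
restored = shift_tokens(window_reverse_1d(shifted), 2)      # undo the shift
```

Alternating regular and shifted layouts lets information cross window boundaries while each attention call stays local. In the shifted layout, the last window mixes tokens from the end and the start of the sequence, which are not neighbors in time; the mask, built the same way the 2D Swin implementation builds its attention mask, keeps those two groups from attending to each other.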

Self-supervised learning utilizes randomly masked segments of vibration signals in the time domain, framing the reconstruction of these masked segments as a pretext task. This strategy drives the model to autonomously discover meaningful patterns, capturing the intricate relationships between time-frequency features, periodic behaviors, and fault signatures. By training under such artificially induced data imperfections, the model develops inherent robustness against real-world challenges like noise contamination, missing data, and irregular sampling rates. Consequently, it achieves reliable fault detection even in non-ideal industrial conditions. The result is a more adaptable and resilient diagnostic system capable of handling the complexities of actual operational environments.

As shown in Figure 3, the workflow progresses from raw waveform input through masking to final model reconstruction. During preprocessing, the model randomly obscures selected time-domain segments of the vibration signals, producing training samples with deliberate local information gaps. This masking strategy forces the model to learn to reconstruct complete fault characteristics from partial observations. The approach has two key benefits: (1) it strengthens the model’s capacity to extract discriminative fault representations, and (2) it improves diagnostic reliability under real-world industrial challenges such as signal noise and data incompleteness.
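A minimal NumPy sketch of this kind of segment masking is shown below. The masking ratio, the segment length, the use of non-overlapping segments, and filling masked points with zero are all illustrative assumptions; the text does not state these settings. If the standardization is z-score normalization, zero-filling replaces masked points with the signal mean.

```python
import numpy as np


def mask_segments(x, mask_ratio=0.15, seg_len=50, rng=None):
    """Zero out randomly chosen, non-overlapping segments of a 1D signal.

    Returns (x_masked, mask), where mask is True at masked timesteps.
    Any tail shorter than seg_len is never masked.
    """
    rng = np.random.default_rng() if rng is None else rng
    x = np.asarray(x, dtype=float)
    n_segs = len(x) // seg_len                # candidate segments
    n_mask = int(round(mask_ratio * n_segs))  # segments to hide
    chosen = rng.choice(n_segs, size=n_mask, replace=False)
    mask = np.zeros(len(x), dtype=bool)
    for s in chosen:
        mask[s * seg_len:(s + 1) * seg_len] = True
    x_masked = np.where(mask, 0.0, x)         # zero equals the mean of a z-scored signal
    return x_masked, mask


# Usage: hide 15% of a 2000-point sample in 50-point segments
signal = np.random.default_rng(0).standard_normal(2000)
x_masked, mask = mask_segments(signal, rng=np.random.default_rng(1))
```

Masking contiguous segments rather than isolated points forces the model to infer whole stretches of waveform from context, which is closer to the sensor dropouts the masking is meant to mimic.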

During the pre-training phase, an Auto Encoder with a masking strategy is used to learn discriminative features from vibration signals. The encoder network projects randomly masked input signals into a low-dimensional latent space, and the decoder network reconstructs the complete signals from these latent representations. This self-supervised approach forces the model to develop robust feature extraction capabilities while learning meaningful patterns from partially observed data. Given the original vibration signal $X = \{x_1, x_2, \dots, x_T\}$, where $T$ is the input sequence length and $x_t$ is the vibration amplitude at timestep $t$, random masking is applied to the amplitude $x_t$ at each timestep, yielding the randomly masked 1D vibration signal sequence $X_{\text{masked}}$. The core task of the encoder is to map the masked vibration signal to a low-dimensional latent space:

$$Z = f_{\text{enc}}(X_{\text{masked}}; \theta)$$

where $f_{\text{enc}}$ denotes the encoder network with parameters $\theta$, and $Z$ represents the extracted latent features. The decoder then maps the latent features $Z$ back to the original data space, i.e., it reconstructs the input data as $\hat{X}$:

$$\hat{X} = f_{\text{dec}}(Z; \phi)$$

where $f_{\text{dec}}$ is the mapping function of the decoder and $\phi$ represents the decoder’s parameters. The training objective of the Auto Encoder is to minimize the discrepancy between the original vibration signal $X$ and the reconstructed signal $\hat{X}$. This paper uses the Huber loss to measure the reconstruction error:

$$\mathcal{L}_{\text{Huber}} = \frac{1}{T}\sum_{t=1}^{T} \ell_\delta(e_t), \qquad \ell_\delta(e_t) = \begin{cases} \frac{1}{2} e_t^{2}, & |e_t| \le \delta \\ \delta\left(|e_t| - \frac{1}{2}\delta\right), & |e_t| > \delta \end{cases}$$

where $e_t = x_t - \hat{x}_t$ is the reconstruction error at the $t$-th sampling point and $\delta$ is the threshold at which the loss switches from quadratic to linear. This loss combines the advantages of the L2 and L1 losses: it keeps the favorable convergence of the L2 loss when the error is small and switches to L1 behavior for larger errors. This makes the model more robust to large deviations in the vibration signals and stabilizes training.
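The Huber loss translates directly into NumPy. This sketch assumes the loss is averaged over the T sampling points and uses `delta = 1.0` as a default, since the value of δ is not stated; for reference, `torch.nn.HuberLoss(delta=...)` with its default mean reduction computes the same quantity.

```python
import numpy as np


def huber_loss(x, x_hat, delta=1.0):
    """Mean Huber loss between an original signal x and its reconstruction x_hat."""
    e = np.asarray(x, dtype=float) - np.asarray(x_hat, dtype=float)  # per-point error e_t
    abs_e = np.abs(e)
    quadratic = 0.5 * e ** 2                # L2-like branch for |e_t| <= delta
    linear = delta * (abs_e - 0.5 * delta)  # L1-like branch for |e_t| > delta
    return float(np.mean(np.where(abs_e <= delta, quadratic, linear)))


# Usage: one small error (quadratic branch) and one large error (linear branch)
loss = huber_loss([0.0, 0.0], [0.5, -3.0], delta=1.0)  # (0.125 + 2.5) / 2 = 1.3125
```

The two branches meet at |e_t| = δ with equal value and slope, so the loss stays smooth while large errors grow only linearly.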

Following pre-training, the encoder’s primary function transitions from signal reconstruction to discriminative feature extraction. Having learned robust representations of bearing vibration characteristics, the encoder now efficiently projects high-dimensional raw vibration data into a meaningful low-dimensional latent space. In this operational phase, the encoder exclusively focuses on deriving the most salient features from input signals rather than performing reconstruction. These compressed yet informative latent representations are subsequently processed by the Swin Transformer for hierarchical feature reconstruction. Crucially, these embeddings encode distinctive patterns corresponding to different bearing health conditions, substantially enhancing the model’s diagnostic capability and state discrimination performance.
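The encoder-decoder mapping $Z = f_{\text{enc}}(X_{\text{masked}}; \theta)$, $\hat{X} = f_{\text{dec}}(Z; \phi)$ and the later reuse of the encoder as a fixed feature extractor can be illustrated with a single-hidden-layer network. This is a forward-pass-only sketch: the class name `MaskedAutoEncoder`, the layer sizes, the LeakyReLU hidden activation, the linear output layer, and the random initialization are assumptions, and training (e.g., with the Huber loss) is omitted.

```python
import numpy as np


def leaky_relu(v, alpha=0.01):
    """LeakyReLU: identity for positive inputs, slope alpha for negative ones."""
    return np.where(v > 0, v, alpha * v)


class MaskedAutoEncoder:
    """Single-hidden-layer autoencoder (hypothetical sizes and initialization)."""

    def __init__(self, seq_len, latent_dim, seed=0):
        rng = np.random.default_rng(seed)
        # theta: encoder parameters; phi: decoder parameters
        self.theta = {"W": rng.normal(0.0, 1.0 / np.sqrt(seq_len), (seq_len, latent_dim)),
                      "b": np.zeros(latent_dim)}
        self.phi = {"W": rng.normal(0.0, 1.0 / np.sqrt(latent_dim), (latent_dim, seq_len)),
                    "b": np.zeros(seq_len)}

    def encode(self, x):
        """Z = f_enc(x; theta): map (batch, T) signals to (batch, latent_dim) features."""
        return leaky_relu(x @ self.theta["W"] + self.theta["b"])

    def decode(self, z):
        """X_hat = f_dec(Z; phi): linear output so reconstructions can take any real value."""
        return z @ self.phi["W"] + self.phi["b"]

    def reconstruct(self, x_masked):
        """Pre-training path: masked input -> latent features -> full-length reconstruction."""
        return self.decode(self.encode(x_masked))


# After pre-training, only encode() is used and theta is no longer updated;
# the resulting features are what the downstream Swin Transformer stage receives.
ae = MaskedAutoEncoder(seq_len=2000, latent_dim=128)
signals = np.random.default_rng(1).standard_normal((4, 2000))
features = ae.encode(signals)  # shape (4, 128)
```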

3. Experiment and Result

3.1. Datasets Description and Data Processing

For experimental validation, we selected two benchmark bearing fault datasets that are widely used in the fault diagnosis literature: the Paderborn University dataset [24], which features controlled fault progression under various operating conditions, and the Case Western Reserve University (CWRU) dataset [25], which contains well-characterized fault signatures across multiple bearing configurations.

Paderborn Bearing Dataset: The Paderborn University Bearing Dataset includes eight health states: healthy state, Level 1 inner race fault, Level 2 inner race fault, Level 3 inner race fault, Level 1 outer race fault, Level 2 outer race fault, Level 2 combined fault, and Level 3 combined fault. The CWRU Bearing Dataset contains twelve health-state categories: healthy state, Level 1 to Level 4 inner race faults, Level 1 to Level 3 outer race faults, and Level 1 to Level 4 rolling element faults.

For the Paderborn dataset, samples are extracted with an overlapping sliding window: each sample contains 2000 consecutive data points, and each subsequent sample is obtained by shifting the window forward by a fixed number of data points, so that multiple samples can be extracted continuously. The data are constructed under the standard operating condition of 1500 rpm, 0.7 N·m load torque, and 1000 N radial force. The training set consists of 80% of the healthy-state data under this condition, while the test set contains the remaining 20% of the healthy data and all fault-state data. Training exclusively on healthy data in this way enables fault-state recognition under unsupervised conditions. The operating conditions and data partitioning scheme are detailed in Table 1.
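The overlapping segmentation and the healthy-only split can be sketched as follows. The stride value, the chronological (non-shuffled) 80/20 split, and the helper names are assumptions for illustration; the text specifies only the window lengths (2000 points for Paderborn, 1000 for CWRU) and the 80/20 proportion.

```python
import numpy as np


def sliding_windows(signal, win_len, stride):
    """Cut a 1D record into overlapping samples of win_len points, advancing by stride points."""
    signal = np.asarray(signal)
    if len(signal) < win_len:
        raise ValueError("record is shorter than one window")
    n = (len(signal) - win_len) // stride + 1
    return np.stack([signal[i * stride:i * stride + win_len] for i in range(n)])


def healthy_only_split(healthy, faulty_groups, train_frac=0.8):
    """Train on the first train_frac of healthy samples; test on the rest plus all fault samples.

    Returns (train_x, test_x, test_y) with test_y = 0 for healthy and 1 for faulty.
    """
    n_train = int(train_frac * len(healthy))
    train_x = healthy[:n_train]
    held_out = healthy[n_train:]
    test_x = np.concatenate([held_out, *faulty_groups])
    test_y = np.concatenate([np.zeros(len(held_out), dtype=int)]
                            + [np.ones(len(f), dtype=int) for f in faulty_groups])
    return train_x, test_x, test_y


# Usage with synthetic data and a hypothetical stride of 500 points
rng = np.random.default_rng(0)
healthy = sliding_windows(rng.standard_normal(100_000), win_len=2000, stride=500)
faulty = [sliding_windows(rng.standard_normal(20_000), win_len=2000, stride=500)]
train_x, test_x, test_y = healthy_only_split(healthy, faulty)
```

Because neighboring windows overlap, a split made after windowing can place overlapping points in both the training and test sets near the boundary; splitting the raw healthy record first and then windowing each part avoids this.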

CWRU Bearing Dataset: The CWRU data are segmented with the same technique, with each sample consisting of 1000 consecutive data points and each subsequent sample obtained by shifting the window forward by a fixed number of data points, so that multiple samples can be extracted continuously. During data construction, 80% of the healthy-state data is allocated to the training set, while the remaining 20% of healthy data and all fault-state data across four operating conditions constitute the test sets. The specific operating conditions and data partitioning scheme are presented in Table 2.

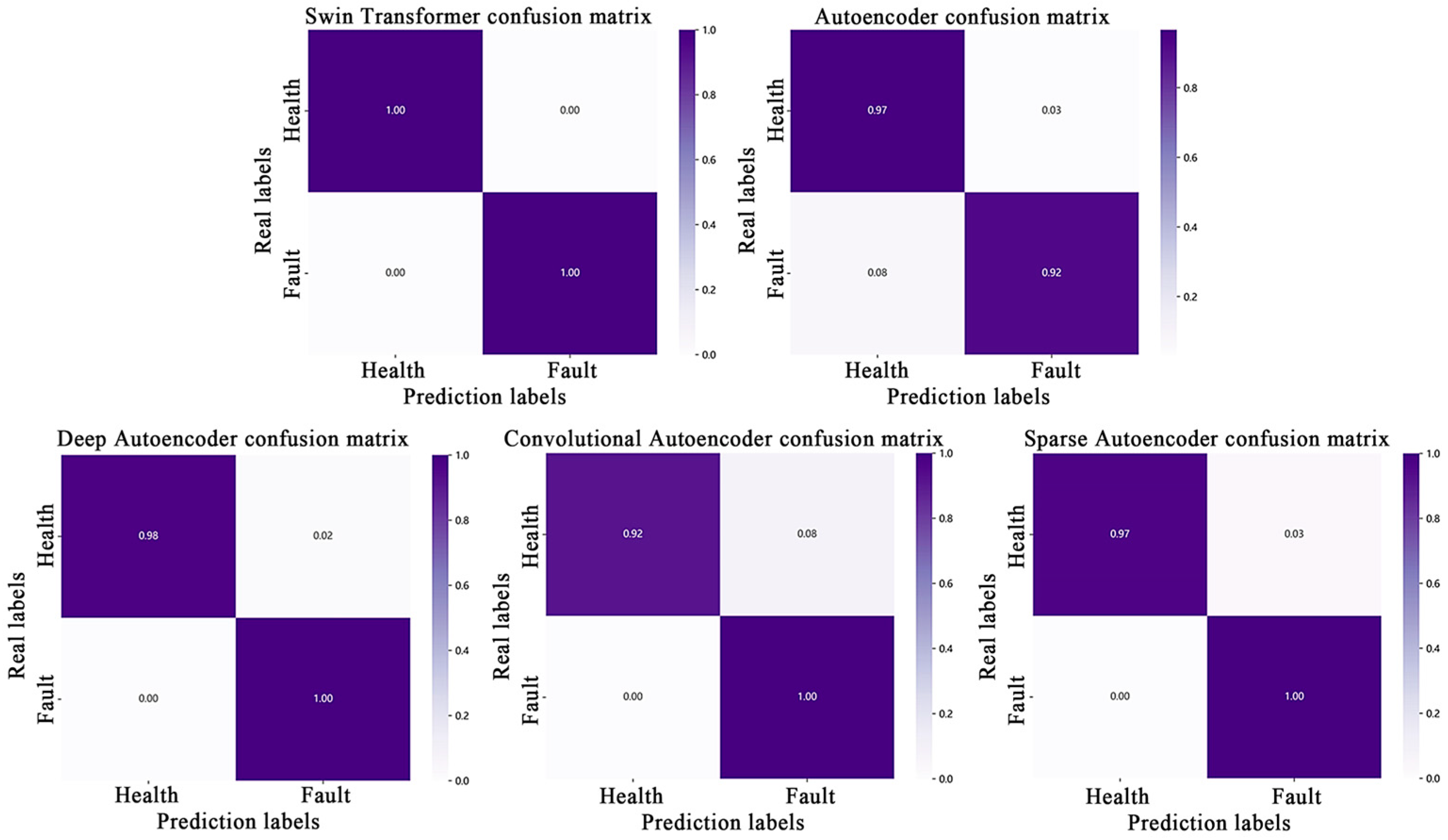

3.2. Comparative Methods

This study evaluates the proposed Swin Transformer-based unsupervised neural network model against several established unsupervised learning methods, including Auto Encoder (AE), Deep Auto Encoder (DAE), Convolutional Auto Encoder (CAE), and Sparse Auto Encoder (SAE), to demonstrate its superior performance in fault identification tasks.

- (1) Auto Encoder: We implemented a standard AE with a single hidden layer of 500 units and LeakyReLU activation (α = 0.01), trained with the Adam optimizer (lr = 1 × 10−4, batch size = 64). LeakyReLU keeps a small nonzero gradient for negative inputs, so hidden neurons do not become permanently inactive during training, which improves network stability and training efficiency.

- (2) Deep Auto Encoder: Our DAE configuration stacked three fully connected layers (500-1000-500 units) with layer-wise pretraining and dropout (p = 0.2). Through multilayer nonlinear mapping, the DAE hierarchically extracts abstract features from data, significantly improving reconstruction performance and generalization ability.

- (3) Convolutional Auto Encoder: The CAE architecture employed three convolutional blocks (kernel sizes 5-3-5, channels 32-64-32) with max-pooling (stride = 2). Because convolutional kernels share weights along the time axis, the CAE has fewer parameters and lower computational complexity than the standard and deep Auto Encoders while maintaining robust modeling performance, which makes it well suited to vibration signals with local temporal structure.

- (4) Sparse Auto Encoder: The Sparse Auto Encoder adds sparsity constraints to the Auto Encoder architecture by restricting the activation ratio of hidden neurons, which suppresses interference from redundant information. Our SAE incorporated a KL-divergence sparsity constraint (target activation = 0.05) and L1 regularization (λ = 1 × 10−4). This sparse structure enhances the model’s representational capacity and robustness while reducing the risk of overfitting, enabling more efficient feature learning.
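The sparsity terms of the SAE baseline can be written as below. This sketch assumes sigmoid hidden activations (so average activations lie in (0, 1)) and applies the L1 term to the hidden activations; the text does not say whether L1 acts on activations or on weights, nor how the KL term is weighted against the reconstruction loss. The function names are hypothetical.

```python
import numpy as np


def kl_sparsity_penalty(hidden, rho=0.05, eps=1e-8):
    """Sum over hidden units j of KL(rho || rho_hat_j).

    hidden: (batch, units) activations in (0, 1); rho_hat_j is the batch-mean
    activation of unit j, and rho is the target activation (0.05 in the text).
    """
    rho_hat = np.clip(np.mean(hidden, axis=0), eps, 1.0 - eps)
    kl = rho * np.log(rho / rho_hat) + (1.0 - rho) * np.log((1.0 - rho) / (1.0 - rho_hat))
    return float(np.sum(kl))


def l1_activation_penalty(hidden, lam=1e-4):
    """L1 penalty on hidden activations, weighted by lam (1e-4 in the text)."""
    return float(lam * np.sum(np.abs(hidden)))


# A total SAE objective would then look like (beta is a hypothetical weight):
#   loss = reconstruction_loss + beta * kl_sparsity_penalty(h) + l1_activation_penalty(h)
h = np.full((10, 3), 0.5)             # every unit active half the time
penalty = kl_sparsity_penalty(h)      # positive, since 0.5 is far from the 0.05 target
```

The KL term is zero only when each unit's average activation equals the target ρ, so it pushes most hidden units toward being inactive on most inputs.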

3.3. Experimental Results

This section presents the experimental validation of the proposed method on the Paderborn bearing dataset and the Case Western Reserve University bearing dataset. To mitigate the effect of model randomness, all reported results are averages over five independent trials. The experiments were conducted on a workstation equipped with an Intel Xeon Gold 5117 CPU @ 2.00 GHz, 64 GB of RAM, and an NVIDIA GeForce RTX 3080 GPU. The model uses the Adam optimizer with an initial learning rate of 1 × 10−4 and a batch size of 64, with a dropout rate of 0.2 during training to prevent overfitting.

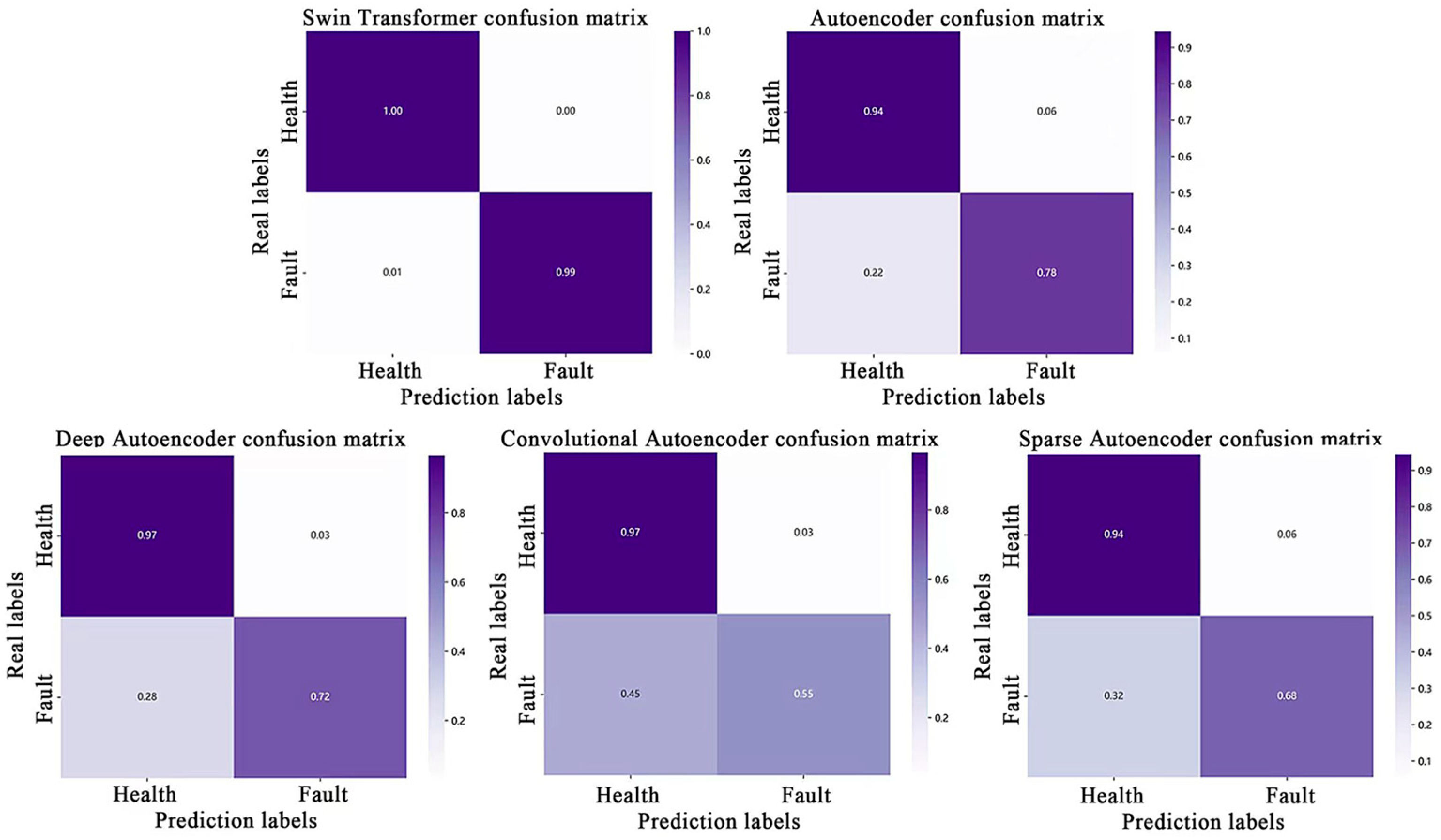

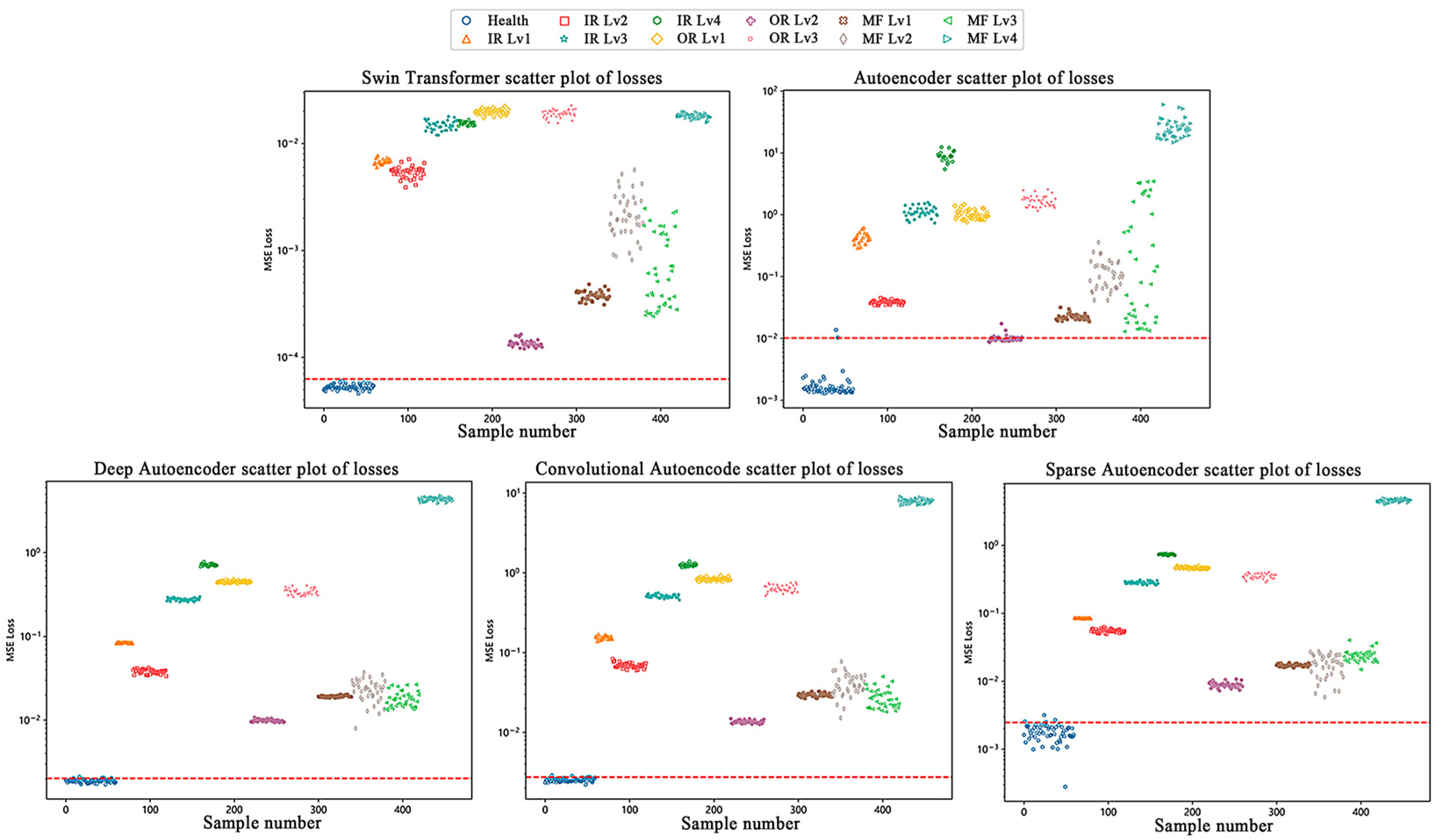

Figure 4 and Figure 5 present the experimental results and corresponding loss distribution visualizations for the Paderborn bearing dataset, with detailed experimental data provided in Table 3. The red line represents the threshold plotted based on 3σ. The results demonstrate that the proposed masked self-supervised learning model along with the Swin Transformer achieves superior performance across all metrics, attaining an accuracy of 99.53%, precision of 100.00%, and recall of 99.46%.
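For reference, the three reported metrics can be computed from binary predictions as follows. The sketch assumes that faulty samples are the positive class (label 1), which the text does not state explicitly; under that convention, a precision of 100% means no healthy sample was flagged as faulty, and a recall below 100% means a few fault samples fell under the threshold. The function name is hypothetical.

```python
import numpy as np


def detection_metrics(y_true, y_pred):
    """Accuracy, precision, and recall with 'faulty' (label 1) as the positive class."""
    y_true = np.asarray(y_true).astype(bool)
    y_pred = np.asarray(y_pred).astype(bool)
    tp = np.sum(y_true & y_pred)    # faults correctly flagged
    fp = np.sum(~y_true & y_pred)   # healthy samples flagged as faulty
    fn = np.sum(y_true & ~y_pred)   # missed faults
    tn = np.sum(~y_true & ~y_pred)  # healthy samples correctly passed
    accuracy = (tp + tn) / len(y_true)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return float(accuracy), float(precision), float(recall)


# Usage: one missed fault, no false alarms
acc, prec, rec = detection_metrics([0, 0, 1, 1, 1], [0, 0, 1, 1, 0])  # (0.8, 1.0, 0.666...)
```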

The loss distribution plots show that the loss values of healthy samples are consistently concentrated below the diagnostic threshold, whereas those of fault samples lie almost entirely above it, demonstrating the model’s robust fault discrimination capability.

In comparative experiments, the four traditional Auto Encoder networks exhibited suboptimal performance, with none surpassing an accuracy of 82% or a recall of 80%. Analysis revealed that misclassified samples were predominantly associated with inner race faults and Level 1 outer race faults, highlighting significant limitations in the ability of traditional methods to effectively capture these fault features.

Figure 6 and Figure 7 present the experimental results and loss distribution visualizations for the Case Western Reserve University bearing dataset under the no-load (0 hp) condition at a rotational speed of 1797 rpm, with detailed data presented in Table 4. The results demonstrate that the proposed method achieves perfect diagnostic performance under this operating condition, with 100% accuracy, precision, and recall. The red line represents the threshold plotted based on 3σ. The loss distribution plot clearly shows that the loss values of all healthy samples fall strictly below the diagnostic threshold, while those of all fault samples exceed it, showing excellent fault discrimination.

The experimental results confirm the adaptability and robustness of the proposed method. Conventional approaches exhibited significant performance degradation and notable missed detections, which would compromise system stability and safety in practice; in contrast, our method achieved 100% fault identification accuracy without any missed detections under identical operating conditions. Together with the Paderborn results, this performance underscores the method’s advantages and practical engineering value in complex industrial environments.