Abstract

Kernel transfer learning (KTL), a form of statistical transfer learning (STL), has provided effective solutions for cross-domain condition monitoring and fault diagnosis of bearings because it can capture relationships between the source and target domains and reduce the gap between them. However, most conventional kernel transfer methods only assign a weighting parameter in the range from 0 to 1 to the functions that measure cross-domain differences and ignore intra-domain differences, so they do not fully characterize the distributional differences. To overcome these limitations, a novel transfer kernel enabled kernel extreme learning machine (TK-KELM) model is proposed. For model pre-training, a parallel structure is designed to represent the state and change of vibration signals more comprehensively. Intra-domain correlation is then introduced into the kernel function, which releases the weight parameters that describe the inter-domain correlation from the 0–1 range limit. Consequently, intra-domain and inter-domain correlations jointly improve the fidelity of the transfer kernel. Furthermore, a similarity-guided feature-directed transfer kernel optimization strategy (SFTKOS) is proposed to refine model parameters by calculating domain similarity across different feature scales. The kernels extracted at different scales are then fused to form the kernel function of TK-KELM. In addition, an integrated framework combining functional principal component analysis with maximum mean discrepancy (FPCA-MMD) is designed to extract multi-scale domain-invariant degradation indicators that boost the performance of TK-KELM. Finally, experiments verify the effectiveness and superiority of the proposed TK-KELM model in improving the accuracy of condition monitoring and fault diagnosis.

1. Introduction

Bearings, as critical components within real-world mechanical systems, play a vital role in the reliability and functionality of machinery [1]. Consequently, bearing condition monitoring (BCM) and bearing fault diagnosis (BFD) are essential for preventing unexpected breakdowns and ensuring operational efficiency. Recently, data-driven methods for BCM and BFD have attracted significant attention due to their capability to effectively identify the health state of equipment. They mainly consist of algorithms based on machine learning and methods based on signal processing. Among these methods, machine learning (ML) [2] algorithms have emerged as a powerful tool, establishing mapping models between measured sensor data and the operational states of machinery, ultimately enabling accurate health state diagnosis [3]. Machine learning encompasses a variety of algorithms such as K-nearest neighbors [4], Bayesian learning [5], deep learning [6] and meta-learning [7] that can be employed to analyze sensor data and predict fault conditions. Different from the machine learning algorithms, signal processing-based methods depend on domain-specific prior knowledge to guide feature extraction. For instance, Antoni [8] provided quantifiable measures to characterize signal deviations from healthy states, enabling the early detection of incipient faults through systematic feature quantification. The blind deconvolution techniques proposed by Chen et al. [9] effectively suppress background noise and enhance fault-related impulses. Additionally, Chen et al. [10] also developed a signal decomposition method combined with cyclic spectral correlation, making it particularly effective for diagnosing faults under varying operating conditions. These signal processing methods complement data-driven machine learning approaches, forming a comprehensive toolkit for addressing the challenges in real-world condition monitoring and fault diagnosis scenarios. 
However, most data-driven algorithms rely on sufficient data, and insufficient samples hinder the effective training of ML models and severely limit their effectiveness [11]. In addition, machines often operate under varying conditions in real-world scenarios, which causes distribution differences between training and testing data.

These challenges can be addressed by cross-domain learning approaches, such as statistical transfer learning (STL), joint learning and adversarial task augmentation [12]. Among them, STL provides a promising solution owing to its simplicity and efficiency [13]. STL is a branch of transfer learning (TL), which acquires knowledge from other relevant fields to overcome the drawbacks of traditional ML-based models. What distinguishes STL is that it leverages source-domain-specific statistical knowledge to boost the performance of target tasks within a statistical modelling framework. By combining parameter learning from statistical models with knowledge transfer from transfer learning, STL can extract more global transferable knowledge [14], thereby enabling better knowledge transfer and generalization for BCM and BFD under changing operating conditions.

Recently, STL has attracted the attention of many scholars. For example, Hu et al. [15] developed a transfer learning strategy based on balanced adaptation regularization for unsupervised BFD across domains. Ganin et al. [16] introduced a novel domain adaptation framework that utilizes adversarial training to minimize the inter-domain distribution gap. Ganin et al. [17] introduced a novel adversarial domain classifier to optimize both classification tasks and domain adaptation. However, the nonlinearity of vibration data increases the complexity of the model-training process to some extent.

In light of this, kernel transfer learning (KTL), as a kind of STL approach, has emerged as a particularly effective method. KTL uses kernel functions to project data into higher-dimensional feature spaces, allowing the underlying patterns of the data to be captured more accurately [18]. Additionally, by leveraging the kernel trick and sparse representations, KTL can also address the computational challenges arising from nonlinear data. In this way, KTL extends the application scope of STL techniques to more complex scenarios. Many KTL strategies have emerged in recent years, covering two major aspects: (1) Optimization and selection of the kernel function. For example, Cao et al. [19] applied a single parameter to represent the relationship between the two domains in a standard two-domain transfer learning scenario. Wagle et al. [20] further extended this approach by differentiating between the covariance of the two domains. Wei et al. [21] generalized a transfer kernel to solve a multi-source-domain task by setting up a similarity coefficient for each source-target domain pair. (2) Optimization of domain alignment. For example, Wei et al. [22] developed a multi-kernel learning approach that integrates multiple kernel functions to facilitate parallel processing. Zheng et al. [23] proposed an adaptive two-stage Gaussian process regression model using a matching kernel. Lu et al. [24] utilized sample correlation and learnable kernels to realize domain adaptive learning. Wu et al. [25] applied an adaptive deep transfer learning method for domain adaptive learning. Additionally, recent advancements in zero-faulty or limited-fault data scenarios deserve further attention. 
For example, emerging techniques like the shrinkage mamba relation network (SMRN) [26] leverage out-of-distribution data augmentation to enable fault detection and localization in rotating machinery under zero-faulty data regimes. Such methods address the challenge of absent fault labels by generating synthetic anomalies, bridging gaps in data scarcity. However, most existing studies only consider and quantify inter-domain differences by adopting a single parameter ranging from 0 to 1 for kernel optimization. This limits the model’s ability to capture the global distributional differences to some extent.

To tackle the aforementioned issues, this article proposes a novel transfer kernel enabled kernel extreme learning machine (TK-KELM) model for BCM and BFD. Firstly, a parallel structure for the pre-training of the model is designed to represent the state and change of vibration signals more fully. Then, the intra-domain differences are incorporated into the optimization process, thereby releasing the weight parameters that describe the inter-domain relationships from the original range of 0 to 1. Subsequently, the transfer kernels are optimized through a similarity-guided feature-directed transfer kernel optimization strategy (SFTKOS), which fine-tunes model parameters based on domain similarities across different feature scales. Additionally, an integrated framework that combines functional principal component analysis with maximum mean discrepancy (FPCA-MMD) is introduced to derive multi-scale domain-invariant degradation indicators that enhance the model’s overall robustness. Finally, the gradient iterative partitioning (GIP) algorithm and support vector machine (SVM) are applied for BCM and BFD, respectively, demonstrating the effectiveness of this methodology. The key contributions of this study are outlined as follows:

- A new mechanism for KTL is proposed to capture both intra-domain and inter-domain differences, which removes the restriction of the weighting parameter to the range from 0 to 1 in the conventional KTL framework and shows strong adaptability for cross-domain transfer learning.

- A similarity-guided feature-directed transfer kernel optimization strategy is designed under the framework of parallel structure and MMD to optimize kernel parameters, which is beneficial for mining domain invariant features completely and improving the cross-domain learning performance.

- A novel transfer kernel enabled kernel extreme learning machine (TK-KELM) model is proposed, which is beneficial for boosting the adaptability of the model for BCM and BFD.

The remainder of this article is organized as follows. Section 2 reviews the related knowledge essential for understanding this work. Section 3 introduces the proposed method, detailing its components and theoretical underpinnings. Section 4 presents case studies to illustrate the practical application and efficacy of the proposed approach. Finally, Section 5 concludes the article.

2. Related Knowledge

2.1. Statistical Transfer Learning

Statistical transfer learning (STL) approaches transfer learning from a statistical viewpoint. STL can be described as follows [23]: given a source domain with its task and a target domain with its task, STL aims to learn domain-invariant statistical knowledge between the domains and boost the performance of the target task. Specifically, the target domain comprises a sample set of monitored data from the target object, which follows its own data distribution. The source domain contains a sample set of relevant bearing data with a corresponding distribution. In this study, the sample quantity of the target domain is much smaller than that of the source domain, which means the target domain has limited data, while adequate data from another working condition (namely the source domain) are available. From a statistical standpoint, data from different conditions are analyzed based on their distinct marginal distributions [27]. Owing to the complexity introduced by varying measurement environments and operational conditions, the data gathered under another working condition naturally differ in their marginal probability distributions. To build a robust cross-domain BCM and BFD model, STL begins by building specific statistical models for each task or domain, such as linear regression frameworks and Gaussian process methodologies. Subsequently, various statistical methods, including Bayesian inference and boosting techniques, are employed to transfer domain-invariant statistical knowledge between these models, enhancing the learning process across domains.

However, as data complexity increases, traditional STL methods encounter difficulties in handling highly nonlinear data distributions. KTL provides a powerful tool for this problem. It maps both domains into a reproducing kernel Hilbert space (RKHS) to reduce the distributional gap between them. The core idea of KTL is to define an implicit nonlinear mapping $\phi(\cdot)$ through the kernel function $k(x, x') = \langle \phi(x), \phi(x') \rangle_{\mathcal{H}}$, which transfers the input data from the original space to the Hilbert space. Then, maximum mean discrepancy (MMD) [27] is adopted to measure the distributional difference, which can be expressed as follows:

$$\mathrm{MMD}^2(X_s, X_t) = \left\| \frac{1}{n_s}\sum_{i=1}^{n_s}\phi(x_i^s) - \frac{1}{n_t}\sum_{j=1}^{n_t}\phi(x_j^t) \right\|_{\mathcal{H}}^2$$

where $\phi(\cdot)$ is the mapping implicitly defined by the kernel function, $\mathcal{H}$ represents the RKHS, and $x_i^s$ and $x_j^t$ are the data of the source and target domains, with $n_s$ and $n_t$ samples, respectively. By minimizing MMD, the distributional alignment between domains can be optimized in the RKHS. Finally, the mapped source data can be regarded as an auxiliary dataset to boost the performance of the target task.
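As an illustration, the MMD between two sample sets can be estimated with the kernel trick, without forming the feature mapping explicitly. The following minimal Python sketch uses the biased empirical estimate with an RBF kernel; the bandwidth `sigma`, the function names and the synthetic data are assumptions made here for illustration and are not taken from the experiments in this paper.

```python
import numpy as np

def rbf_kernel(a, b, sigma=1.0):
    """Gaussian (RBF) kernel matrix between the rows of a and b."""
    sq_dists = (
        np.sum(a**2, axis=1)[:, None]
        + np.sum(b**2, axis=1)[None, :]
        - 2.0 * a @ b.T
    )
    return np.exp(-np.maximum(sq_dists, 0.0) / (2.0 * sigma**2))

def mmd_squared(xs, xt, sigma=1.0):
    """Biased empirical estimate of the squared MMD between xs and xt."""
    k_ss = rbf_kernel(xs, xs, sigma)   # source-source similarities
    k_tt = rbf_kernel(xt, xt, sigma)   # target-target similarities
    k_st = rbf_kernel(xs, xt, sigma)   # cross-domain similarities
    return k_ss.mean() + k_tt.mean() - 2.0 * k_st.mean()

# Synthetic example (hypothetical data): one target close to the source,
# one target shifted away from it.
rng = np.random.default_rng(0)
source = rng.normal(0.0, 1.0, size=(200, 3))
target_near = rng.normal(0.0, 1.0, size=(200, 3))
target_far = rng.normal(2.0, 1.0, size=(200, 3))
mmd_near = mmd_squared(source, target_near)
mmd_far = mmd_squared(source, target_far)
```

In this sketch, the shifted target yields a larger MMD than the target drawn from the same distribution as the source, which is the behaviour that makes MMD usable as an alignment objective.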

2.2. KELM Model

The basic structure of KELM is made up of a single-layer feedforward architecture, which includes an input layer, a hidden layer and an output layer.

(1) The input layer: The input samples are recorded as , where is the quantity of samples, corresponding to the neurons in the input layer.

(2) The hidden layer: Given a KELM model with hidden units, the output matrix of the hidden layer can be denoted as follows:

where refers to the feature mapping function. The columns of represent the output of the m-th hidden layer neuron for all samples. The parameters of the hidden layer are initialized randomly. The input is mapped to the hidden layer space through the feature mapping process, followed by the activation function below:

where represents the connection weight vector from the hidden layer to the output layer.

(3) The output layer: Then, the output can be formulated as follows:

where represents the vector which connects the hidden layer nodes to the output neurons. Supposing that the vector of the timing signal is represented as , according to the literature [28], can be expressed as follows:

where stands for the unit matrix of appropriate dimensions, represents a regularization parameter and is the stack matrix output by all hidden layers. Therefore, the output becomes the following:

Selecting the core function and its expression can also be expressed as follows:

With this modification, the output becomes the following:
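For readers who prefer code, the closed-form training and prediction of a kernel ELM can be sketched as below. This is a minimal sketch of the commonly used KELM formulation, in which the output weights are obtained by solving a regularized linear system in the kernel matrix; the class name, the RBF kernel, the values of `c` and `sigma`, and the toy regression data are assumptions for illustration only.

```python
import numpy as np

def rbf_kernel(a, b, sigma=1.0):
    """Gaussian (RBF) kernel matrix between the rows of a and b."""
    sq_dists = (
        np.sum(a**2, axis=1)[:, None]
        + np.sum(b**2, axis=1)[None, :]
        - 2.0 * a @ b.T
    )
    return np.exp(-np.maximum(sq_dists, 0.0) / (2.0 * sigma**2))

class KELM:
    """Kernel extreme learning machine with a closed-form solution."""

    def __init__(self, c=10.0, sigma=1.0):
        self.c = c            # regularization parameter
        self.sigma = sigma    # RBF bandwidth

    def fit(self, x, t):
        self.x_train = x
        omega = rbf_kernel(x, x, self.sigma)            # kernel matrix
        n = x.shape[0]
        # Solve (I / C + Omega) alpha = T instead of inverting explicitly.
        self.alpha = np.linalg.solve(np.eye(n) / self.c + omega, t)
        return self

    def predict(self, x):
        # The prediction depends on the data only through kernel evaluations.
        return rbf_kernel(x, self.x_train, self.sigma) @ self.alpha

# Toy regression example (hypothetical data).
x_train = np.linspace(-3.0, 3.0, 60)[:, None]
t_train = np.sin(x_train).ravel()
model = KELM(c=100.0, sigma=1.0).fit(x_train, t_train)
x_test = np.array([[0.5], [-1.2]])
pred = model.predict(x_test)
```

Because the hidden-layer mapping never appears explicitly, any kernel that satisfies the Mercer condition can be substituted for `rbf_kernel` in this sketch.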

3. Proposed Method

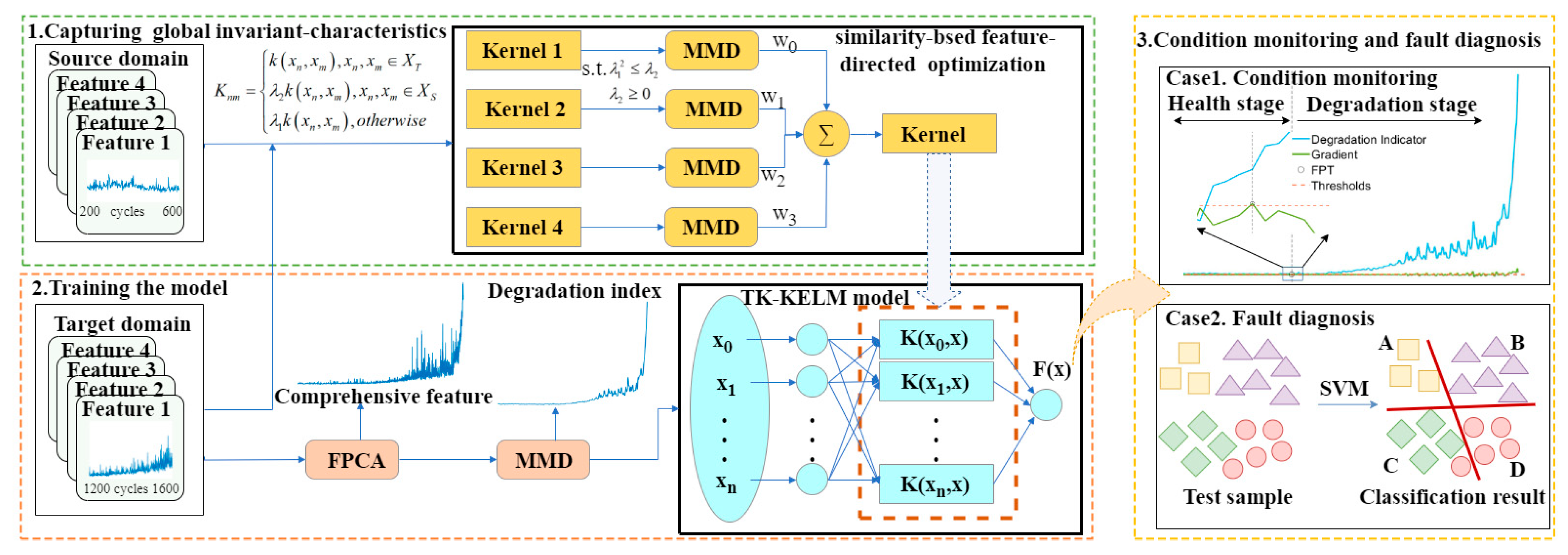

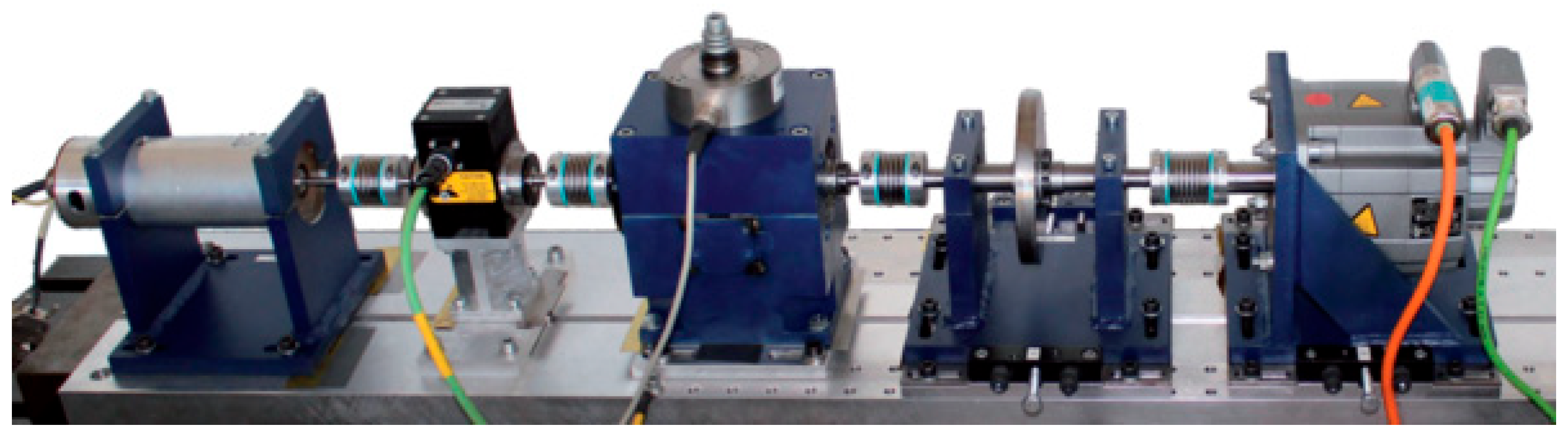

Figure 1 shows the overall block diagram of the proposed method, which mainly includes three parts: (1) capturing global invariant characteristics, (2) training the model and (3) condition monitoring and fault diagnosis. Firstly, diverse sets of time-domain characteristics are extracted from the source domain, the domain correlation is captured by the kernel function, and the parameters are then optimized through SFTKOS. Secondly, the FPCA-MMD method is used to construct the bearing degradation index for model training and prediction. Finally, given the optimized transfer kernels and the domain-invariant indexes, BCM and BFD are carried out while mitigating the distribution differences caused by variable working conditions.

Figure 1.

The overall block diagram of the proposed method.

3.1. Capturing Global Invariant-Characteristics

In STL, one of the challenges is quantifying the relationship of inter-domain data sets, particularly when the has limited annotated data. This paper combines feature transfer and Bayesian methods to address this issue. First, the parameters , , are defined as the covariance matrices of with , with and with , respectively. The parameter is then designed to represent the similarity between and . For the feature transfer method, the characteristics matrix of the target domain will be , where is in range . However, in the Bayesian approach, the relation between and is viewed probabilistically. In this case, is bounded within , representing the chance associated with this relationship. Consequently, should become a free parameter rather than a fixed probability distribution.

According to the literature [24], it is assumed that the prior knowledge probability for different similarities is uniform, which allows to remain free while still providing a probabilistic interpretation. An additional parameter, , is introduced to refine the posterior understanding of the source and , thus further relaxing constraints on the parameter .

As a result, the proposed transfer kernel can be expressed as follows:

where , and represents the well-known radial basis function (RBF) kernel. This transfer kernel leverages shared parameters for both domains and incorporates prior information from the source set. The parameter controls the inter-domain similarity, with a range of , allowing for a broader range of similarities. The parameter represents the different kernel functions, offering the two domains posterior distributions. Moreover, the newly proposed transfer kernel must also meet the requirements of positive semi-definite (PSD). Suppose that is a PSD matrix, where for and . The matrix also satisfies PSD.

Proof.

Based on the properties of PSD matrices, the following properties of PSD matrices hold: (a) If there is a nonzero matrix satisfying , then for the PSD matrix , satisfies PSD. (b) Assuming the matrices and are both PSD, then will be PSD. (c) Supposing that the matrix is PSD, then for any positive number , should be PSD as well.

The proof proceeds as follows:

where

where consists of kernel functions, thus is PSD, and the matrix is nonzero. Using the property (a), then the matrix is PSD. If , and according to the property (c), the matrix meets PSD. Therefore, based on the property (b), the matrix is PSD as well. Additionally, when , then ; thus, will still be PSD. □
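The three closure properties used in the proof can also be checked numerically. The sketch below is only an illustration under a stated assumption: it uses a hypothetical block parameterization in which the cross-domain blocks of a PSD base kernel are scaled by `lam` and an extra non-negative multiple `b` of the target-target block is added. This parameterization, the names `lam` and `b`, and the synthetic data are assumptions and are not necessarily the transfer kernel defined above.

```python
import numpy as np

def rbf_kernel(a, b, sigma=1.0):
    """Gaussian (RBF) kernel matrix between the rows of a and b."""
    sq_dists = (
        np.sum(a**2, axis=1)[:, None]
        + np.sum(b**2, axis=1)[None, :]
        - 2.0 * a @ b.T
    )
    return np.exp(-np.maximum(sq_dists, 0.0) / (2.0 * sigma**2))

def block_transfer_kernel(xs, xt, lam, b, sigma=1.0):
    """Hypothetical block kernel: A K A^T + b * blockdiag(0, K_tt)."""
    x_all = np.vstack([xs, xt])
    k = rbf_kernel(x_all, x_all, sigma)              # PSD base kernel
    ns = xs.shape[0]
    scale = np.concatenate([np.ones(ns), np.full(xt.shape[0], lam)])
    scaled = k * np.outer(scale, scale)              # A K A^T, property (a)
    extra = np.zeros_like(k)
    extra[ns:, ns:] = b * k[ns:, ns:]                # b * K_tt, property (c)
    return scaled + extra                            # sum of PSD, property (b)

def min_eigenvalue(m):
    """Smallest eigenvalue of the symmetrized matrix."""
    return float(np.linalg.eigvalsh((m + m.T) / 2.0).min())

rng = np.random.default_rng(0)
xs = rng.normal(size=(30, 4))    # synthetic source samples
xt = rng.normal(size=(10, 4))    # synthetic target samples
# lam = 2.5 lies outside the conventional 0-1 range on purpose.
eigs = {
    (lam, b): min_eigenvalue(block_transfer_kernel(xs, xt, lam, b))
    for lam in (0.3, 2.5)
    for b in (0.0, 0.7)
}
```

Under this assumed parameterization, the smallest eigenvalue stays non-negative up to floating-point error even when the scaling factor exceeds 1, in line with the argument of the proof.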

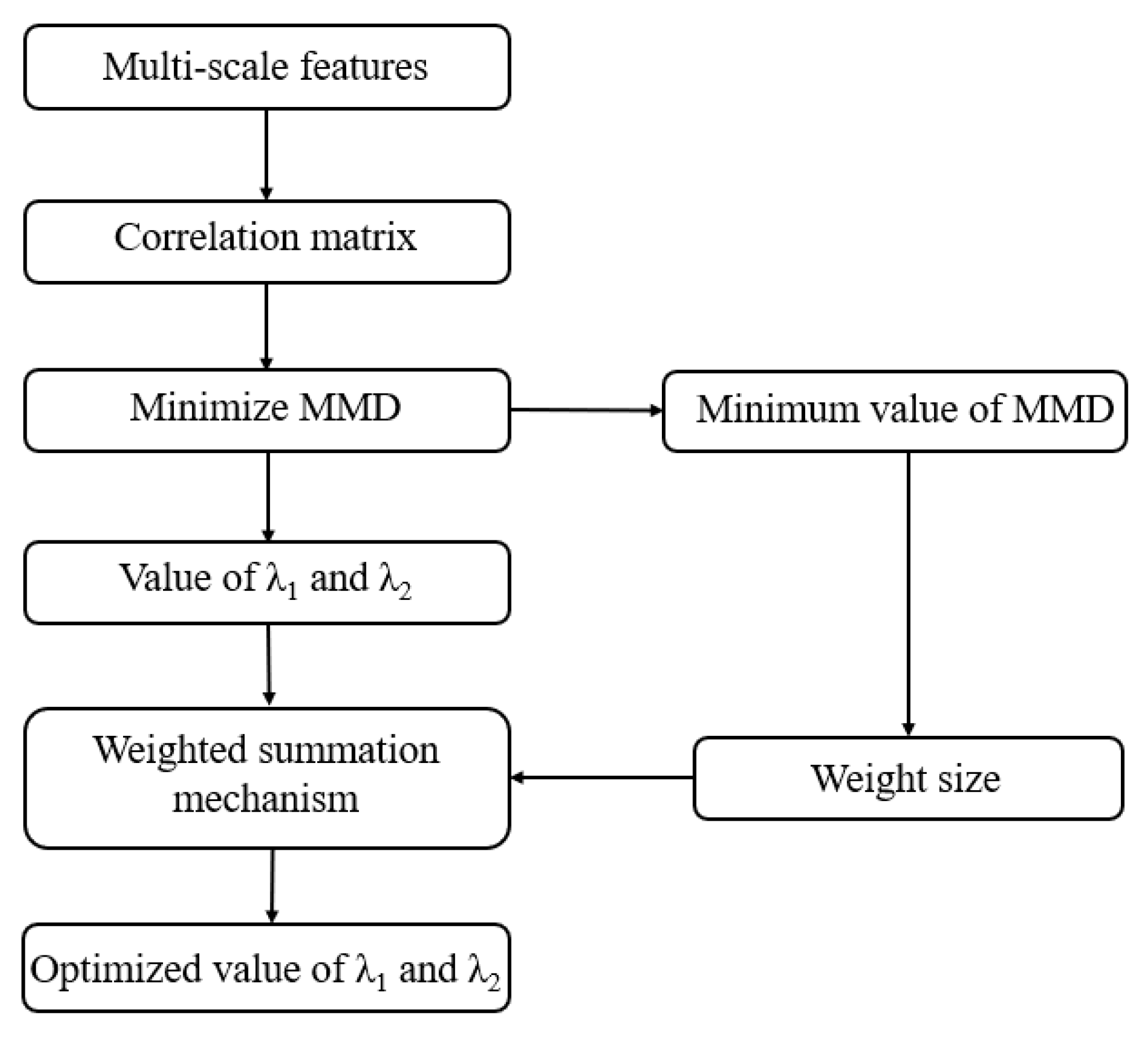

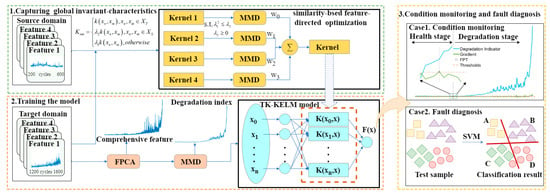

Although the transfer kernel has been proven to be PSD, it is often not effective when applied directly to the KELM. It is therefore necessary to optimize the transfer kernel to improve inter-domain feature matching. To this end, SFTKOS, shown in Figure 2, is proposed. First, a parallel structure is designed to support multi-scale feature extraction and characterize the state of vibration signals more fully. Then, the correlation between the inter-domain data sets is calculated according to the transfer kernel. Next, MMD minimization is employed to optimize the transfer kernel and obtain the values of its parameters. Finally, the optimized kernel function is computed by a weighted fusion mechanism according to the minimized MMD values.

Figure 2.

The flow chart of SFTKOS.
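The final fusion step of SFTKOS can be sketched as follows. The exact weighting rule is not spelled out in the text, so this sketch assumes that each scale is weighted inversely to its minimized MMD value and that the weights are normalized to sum to one; the function name, the `eps` safeguard and the toy kernel matrices are hypothetical.

```python
import numpy as np

def fuse_kernels(kernels, mmd_values, eps=1e-12):
    """Fuse per-scale kernel matrices with inverse-MMD weights (assumed rule)."""
    mmd = np.asarray(mmd_values, dtype=float)
    weights = 1.0 / (mmd + eps)          # smaller MMD -> larger weight
    weights /= weights.sum()             # normalize to a convex combination
    fused = sum(w * k for w, k in zip(weights, kernels))
    return fused, weights

# Two toy kernel matrices standing in for two feature scales.
k_scale_1 = np.array([[1.0, 0.8], [0.8, 1.0]])
k_scale_2 = np.array([[1.0, 0.2], [0.2, 1.0]])
fused, weights = fuse_kernels([k_scale_1, k_scale_2], mmd_values=[0.1, 0.3])
```

A convex combination of PSD matrices is itself PSD, so a fused kernel built this way remains a valid kernel for KELM.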

3.2. Training the Model

3.2.1. The Domain-Invariant Feature Extraction

In this study, a combination of functional principal component analysis and maximum mean discrepancy (FPCA-MMD) is designed to extract domain-independent tracking indicators from bearing time-domain data. According to the literature [29], when processing high-dimensional time-domain signals, FPCA can treat the data as a continuous function, so as to effectively capture potential degradation characteristics and reveal the trend in the degradation process. However, FPCA itself has limitations: it cannot directly measure statistical differences between different time periods, and it does not clearly quantify the dynamic changes in different degradation stages.

Therefore, FPCA-MMD combined with the MMD method can make up for this shortcoming of FPCA. MMD can effectively measure the difference in distribution between different time periods or degradation stages, thereby helping to reveal the dynamic changes in the degradation process. Specifically, FPCA first analyzes multi-dimensional data and extracts the features that can represent the main changes in the degradation process. These characteristics help us understand the main trends of degradation. Meanwhile, MMD is employed to measure the differences in the characteristic distribution at different degradation stages. By calculating these differences, we can quantify the changes between different stages, thereby more clearly identifying the key nodes in the degradation process. Therefore, to capture the relevant characteristics from bearing data in the time domain, FPCA-based dimensionality reduction is applied, resulting in a feature vector that characterizes the bearing’s degradation. A sliding window technique [30] with step size is then employed to partition the feature set, producing subsets , in which . To analyze the changes in the degradation process, the variation difference between each subset and the initial one is computed. The difference value is quantified by the MMD method as follows:

where denotes the data segment corresponding to the sliding window, and represents the index of the segment. The MMD function described in Section 2 is utilized to generate a series of difference values . These values are fed into the TK-KELM model as input to track bearing degradation.
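The sliding-window part of this procedure can be sketched in Python as follows. The sketch assumes the features have already been reduced (the FPCA step is omitted here) and computes the squared MMD between the first window and every subsequent window; the window length, step size, RBF bandwidth and synthetic data are assumptions for illustration.

```python
import numpy as np

def rbf_kernel(a, b, sigma=1.0):
    """Gaussian (RBF) kernel matrix between the rows of a and b."""
    sq_dists = (
        np.sum(a**2, axis=1)[:, None]
        + np.sum(b**2, axis=1)[None, :]
        - 2.0 * a @ b.T
    )
    return np.exp(-np.maximum(sq_dists, 0.0) / (2.0 * sigma**2))

def mmd_squared(xs, xt, sigma=1.0):
    """Biased empirical estimate of the squared MMD."""
    return (
        rbf_kernel(xs, xs, sigma).mean()
        + rbf_kernel(xt, xt, sigma).mean()
        - 2.0 * rbf_kernel(xs, xt, sigma).mean()
    )

def degradation_indicator(features, window, step, sigma=1.0):
    """MMD between the initial window and each sliding window."""
    starts = range(0, features.shape[0] - window + 1, step)
    segments = [features[s:s + window] for s in starts]
    reference = segments[0]                    # initial (assumed healthy) segment
    return np.array([mmd_squared(reference, seg, sigma) for seg in segments])

# Synthetic features: a stable phase followed by a gradual drift.
rng = np.random.default_rng(1)
healthy = rng.normal(0.0, 0.1, size=(300, 2))
drift = np.linspace(0.0, 2.0, 300)[:, None]
degrading = rng.normal(0.0, 0.1, size=(300, 2)) + drift
features = np.vstack([healthy, degrading])
indicator = degradation_indicator(features, window=50, step=25)
```

In this sketch the indicator stays near zero during the stable phase and grows as the feature distribution drifts away from the initial window.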

3.2.2. KELM Model Training

Because the transfer kernel proposed above is PSD, it satisfies the Mercer condition required of a kernel function and can therefore be used in KELM. The KELM based on the transfer kernel is named TK-KELM in this work, and its structure remains consistent with that of the KELM model. The new transfer kernel can be expressed as follows:

Thereby, the new output in this case is given by the following:

Then, the model output weight is solved as follows:

Finally, the predicted output can be expressed in terms of the kernel matrix alone. The degradation indicator is then taken as the input of the model, and the predicted label data are used to supplement the insufficient target domain samples.

3.3. Condition Monitoring and Fault Diagnosis

Accurate monitoring of the degradation stage and early identification of faults are critical for BCM and BFD. The process of condition monitoring consists of systematically appraising the operational state of equipment to spot early indicators of degradation. Early detection allows for timely intervention, potentially preventing catastrophic failures. Fault diagnosis, on the other hand, involves identifying the specific nature and cause of a failure once it has occurred, providing insights for corrective actions and optimizing maintenance strategies.

To identify the condition change point, a gradient iterative partitioning (GIP) algorithm is applied in this article. The gradient is used to evaluate the variations in the degradation signal. A sharp variation in the gradient indicates a significant change in the bearing condition, which can be detected as a change point. The gradient at each instance is calculated as follows:

where is the rate of change at that moment, that is, the gradient value. Additionally, a gradient threshold is set according to the 3σ principle. Based on the above settings, the objective of this paper is to identify the condition change points in the gradient. A change point is considered valid only when and the next consecutive gradient values satisfy . If these conditions are not met, the point is treated as a false change point.
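The change-point rule described above can be sketched as follows. This is a minimal sketch under several assumptions: the gradient is taken as the first-order difference of the indicator, the 3σ threshold is computed from an initial baseline period assumed to be healthy, and the number of confirming gradient values `n_confirm` is a hypothetical setting.

```python
import numpy as np

def detect_change_point(indicator, baseline_len, n_confirm=3):
    """Return the first gradient index that exceeds the 3-sigma threshold
    and is followed by n_confirm further exceedances, or None."""
    grad = np.diff(indicator)                  # first-order difference as gradient
    baseline = grad[:baseline_len]             # assumed healthy reference period
    threshold = baseline.mean() + 3.0 * baseline.std()
    above = grad > threshold
    for i in range(baseline_len, len(grad) - n_confirm):
        if above[i:i + n_confirm + 1].all():   # candidate plus confirmations
            return i
    return None

# Synthetic indicator: small ripple, one isolated spike, then a sustained rise.
indicator = 0.01 * np.sin(np.arange(200, dtype=float))
indicator[120] += 1.0                          # isolated spike (false change point)
indicator[150:] = 0.5 * np.arange(1, 51)       # sustained degradation trend
change_point = detect_change_point(indicator, baseline_len=100)
```

In this example the isolated spike produces a single large gradient at index 119 that is not confirmed by the following values, so it is rejected, and the sustained rise beginning at gradient index 149 is returned instead.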

Once the change points have been identified, the next step is to analyze them and determine which fault type each one belongs to. Fault diagnosis is implemented systematically as follows: First, the multi-scale features of vibration signals from both source and target domains are extracted using the transfer kernel optimized by SFTKOS. These features are then processed through the FPCA-MMD integration framework to derive domain-invariant degradation indicators, which serve as the input features for the classification model. Finally, the SVM is employed as the classifier, where the RBF is selected as the kernel function to handle the nonlinearity of the input features. The regularization parameter C of the SVM is determined via cross-validation within the range of 1 to 100 to balance the model’s generalization ability and classification accuracy.

The computational complexity of the proposed TK-KELM model is dominated by the kernel matrix construction and model training steps. For samples and feature dimensions, computational complexity for online monitoring is , where refers to the kernel matrix construction and model training steps; is the complexity of the covariance of d-dimensional features; and represents the computational complexity of dimensionality reduction operations.

4. Case Study

In this section, the paper designs three empirical investigations to assess the effectiveness of the model in condition monitoring (Case 1), fault diagnosis (Case 2) and fault diagnosis in zero-faulty environments (Case 3), respectively.

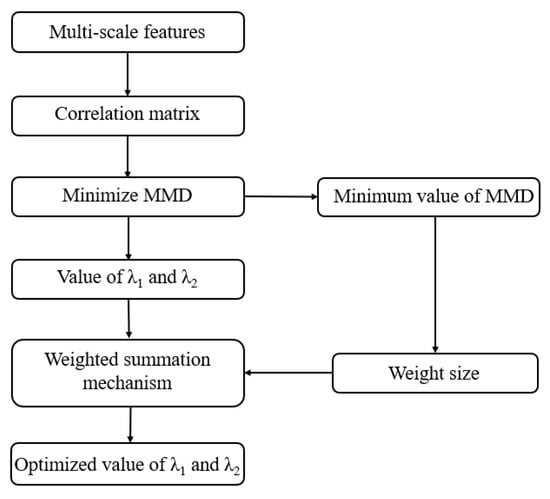

4.1. Case 1: Bearing Condition Monitoring

4.1.1. Data Description

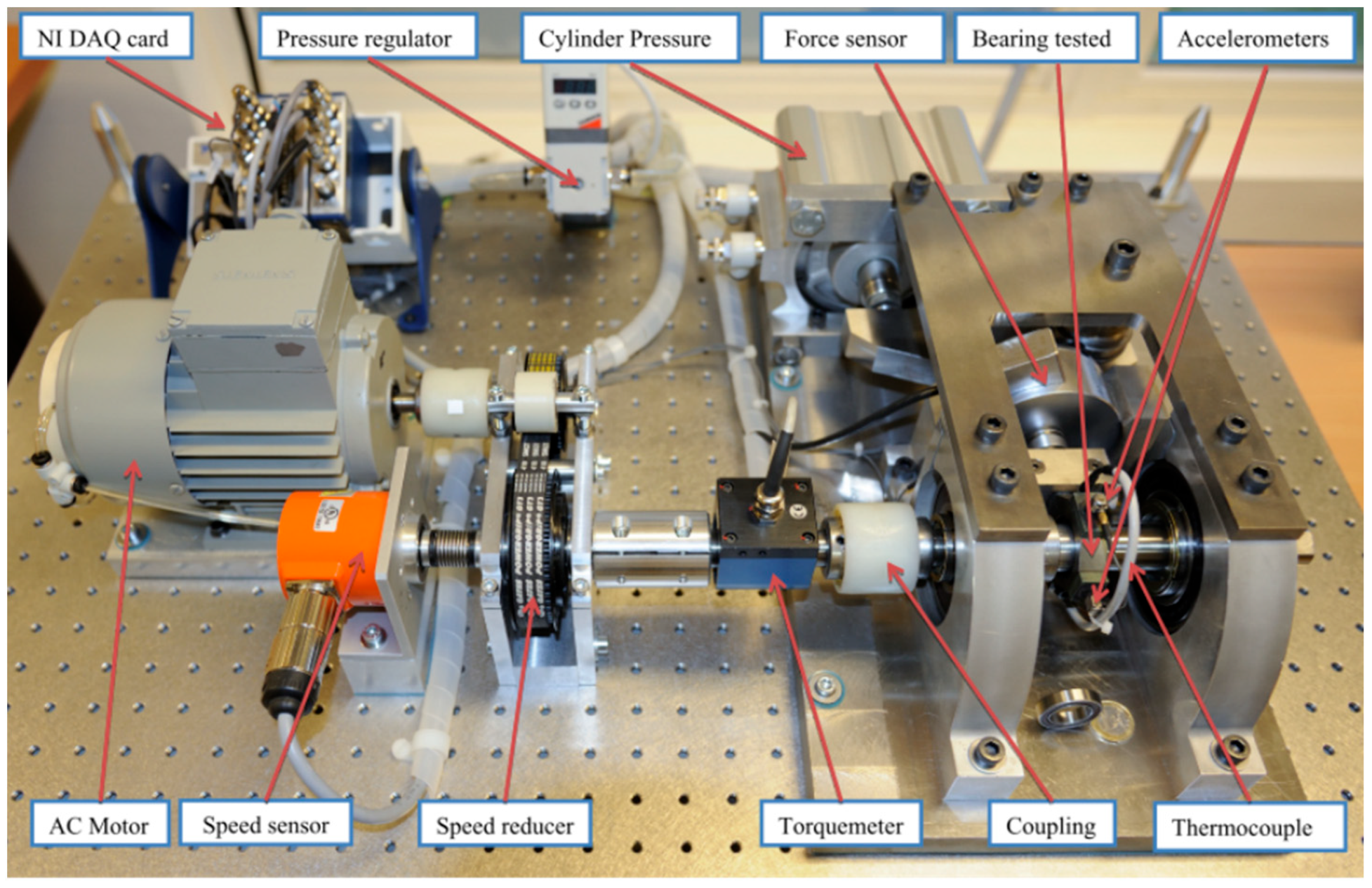

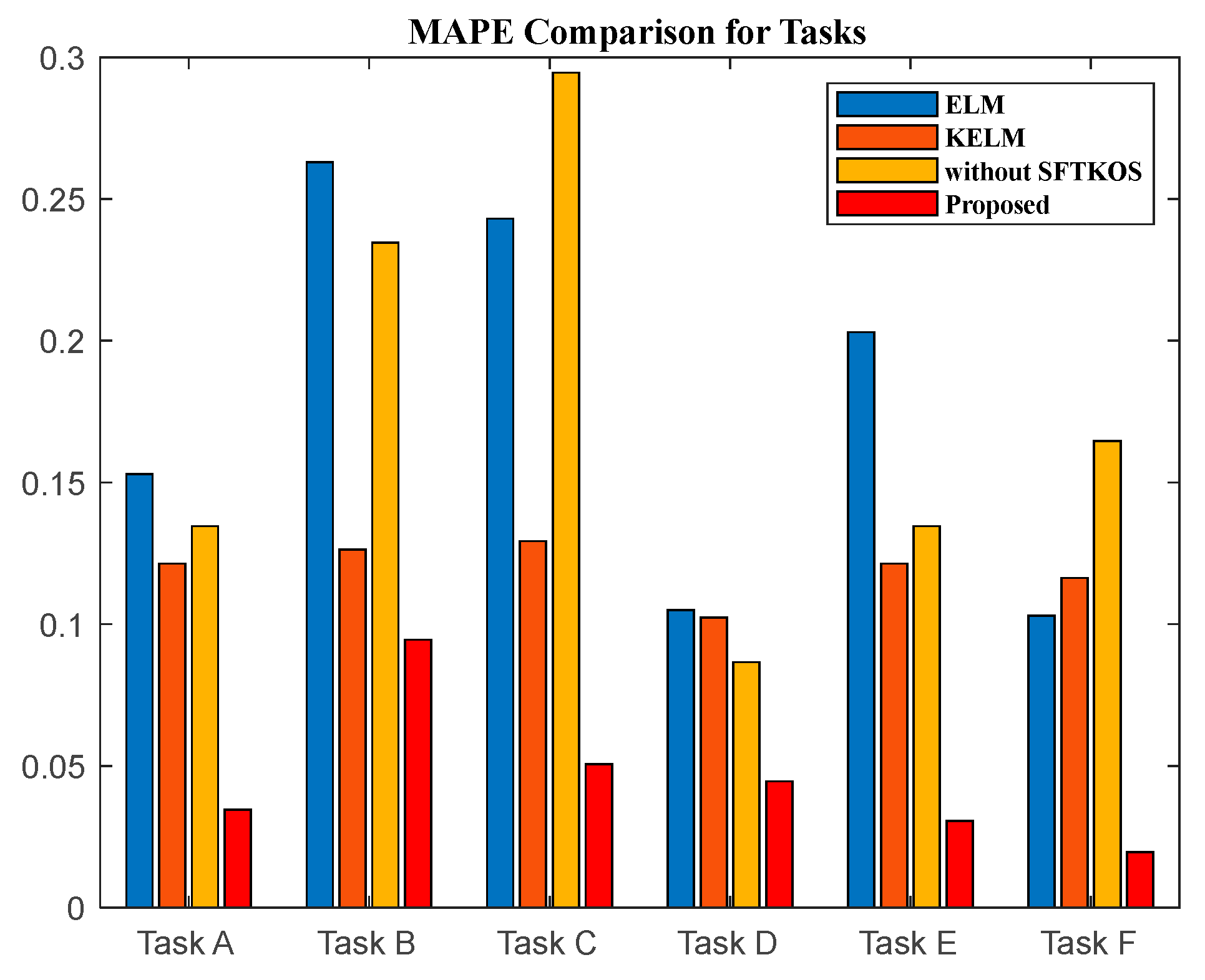

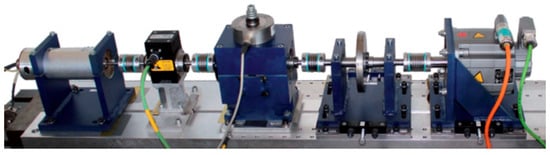

Figure 3 illustrates the experimental system in this paper, PRONOSTIA [31]. It was developed to evaluate methods for detecting bearing faults. The main contribution of this system is supplying authentic experimental data that track the wear and tear of rolling-element bearings throughout their full lifecycle, until complete failure. For example, this platform enables the degradation of bearings to be simulated in just a few hours, allowing for a large number of experiments to be conducted within a week. As a result, the dataset has been widely utilized by researchers as a benchmark for assessing condition monitoring approaches.

Figure 3.

Test platform of PRONOSTIA.

4.1.2. Task Setting

The working condition information of the PRONOSTIA data set is shown in Table 1. This dataset contains three working conditions with different loads and rotational speeds, along with the corresponding bearing data sets. Some data were selected in this case for a controlled trial, as shown in Table 2.

Table 1.

The working condition information of the dataset.

Table 2.

The data set of the experiment.

4.1.3. Experimental Results

To quantify the differences in feature distribution, two statistical tests were used to verify the hypothesis of cross-domain shared degradation patterns: the Kolmogorov–Smirnov (KS) test and MMD. The quantification results are shown in Table 3. All tasks produce KS test p-values , indicating the possibility that they have the same distribution. The MMD values of all tasks were , indicating that the distribution differences before transfer processing were relatively large. These results statistically prove that the assumption of the cross-domain shared degradation model is correct.

Table 3.

The statistical quantification of feature distributions before transfer.

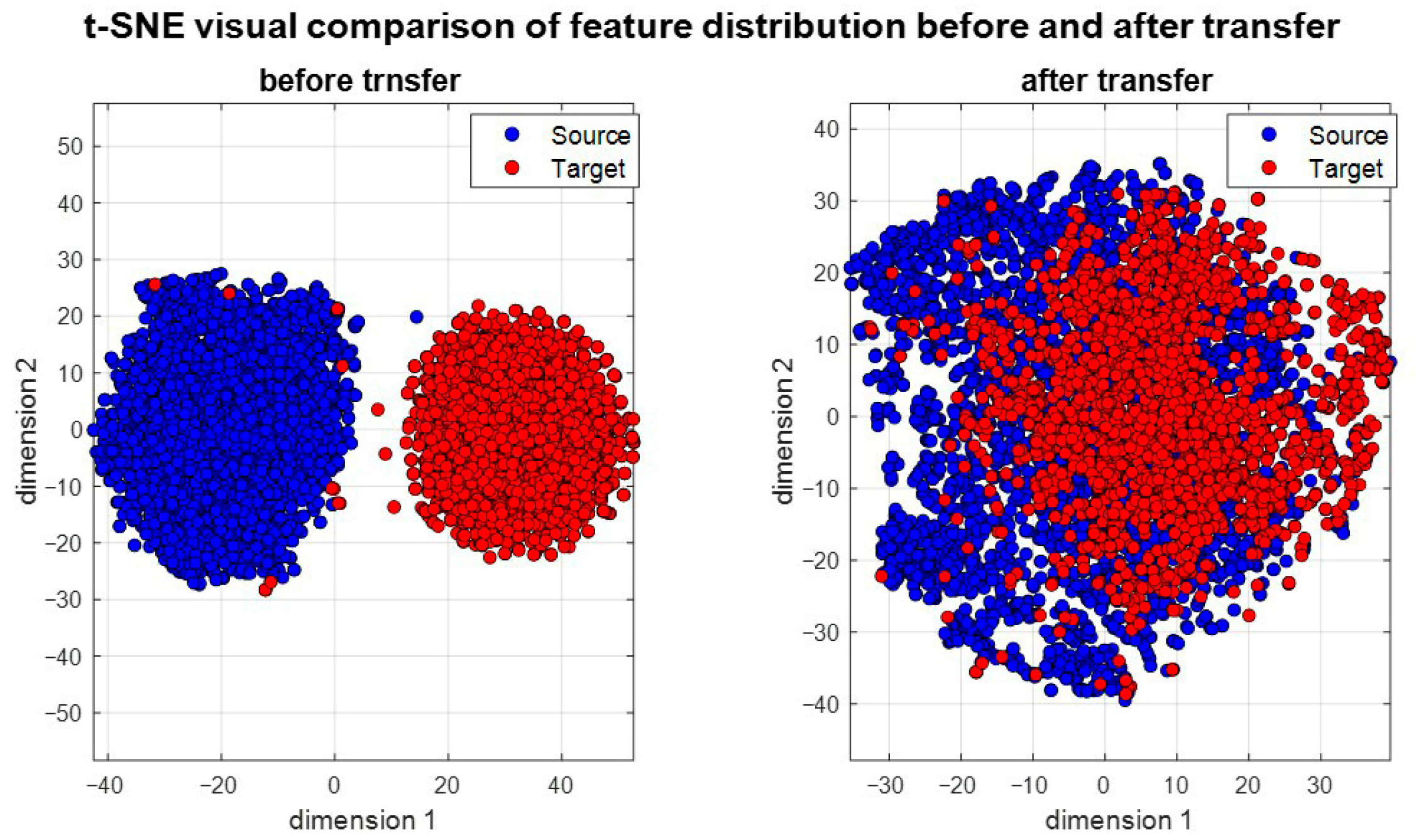

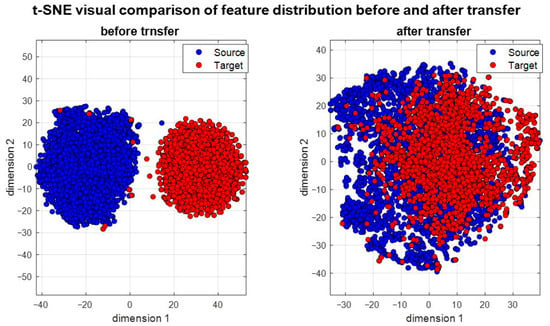

To further validate the differences in feature distribution, the paper takes task A as an example: Figure 4 shows the t-SNE visualization of the feature distribution before and after transfer to illustrate the domain alignment effect more intuitively. Before transfer, the features of the source domain and the target domain are clearly separated. After the TK-KELM transfer, the features were well mixed, indicating that the model has a strong domain alignment ability.

Figure 4.

The visualizations of feature distributions before and after transfer.

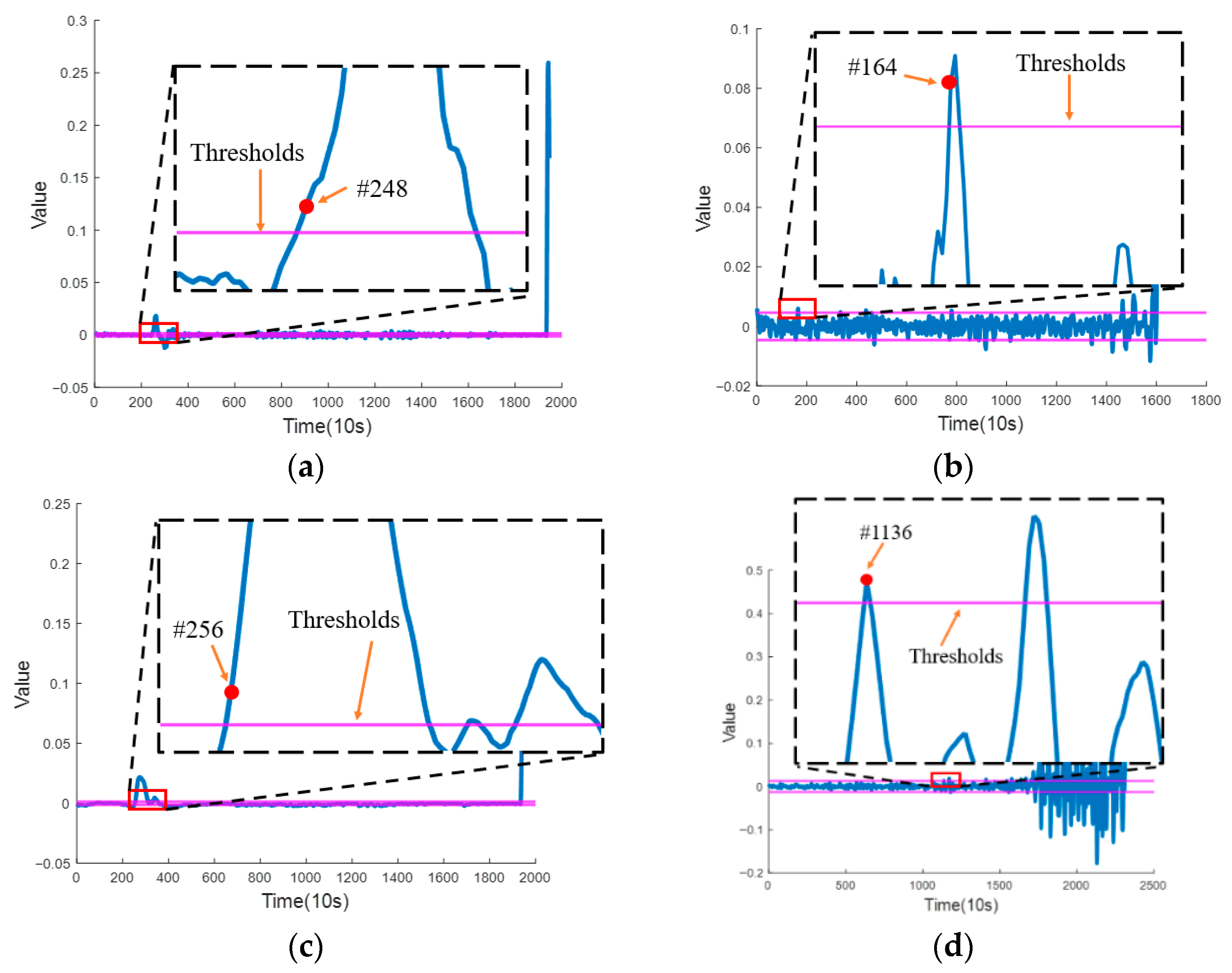

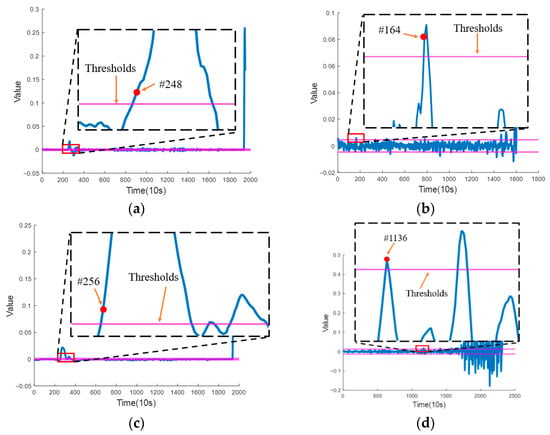

Figure 5 shows the condition monitoring performance of the six experiments. Tasks A, B and tasks D, E, which share the same training dataset, all achieve good cross-domain transfer performance when facing different test datasets. For tasks A, C and tasks D, E with different training datasets, the obtained state change points are almost the same when facing the same set of test datasets, which indicates that the model has good generalization performance. Meanwhile, the tracking index obtained by using the data under working condition one as the source domain is more stable. A preliminary explanation is that the source domain data are more abundant, enabling the model to better capture the correlation and achieve better transfer performance. Task B and Task E trained the model using training datasets from different working conditions and monitored test datasets under the third working condition, and both achieved good results.

Figure 5.

The condition monitoring results of task A–F. (a–f) are the results of tasks A–F, respectively.

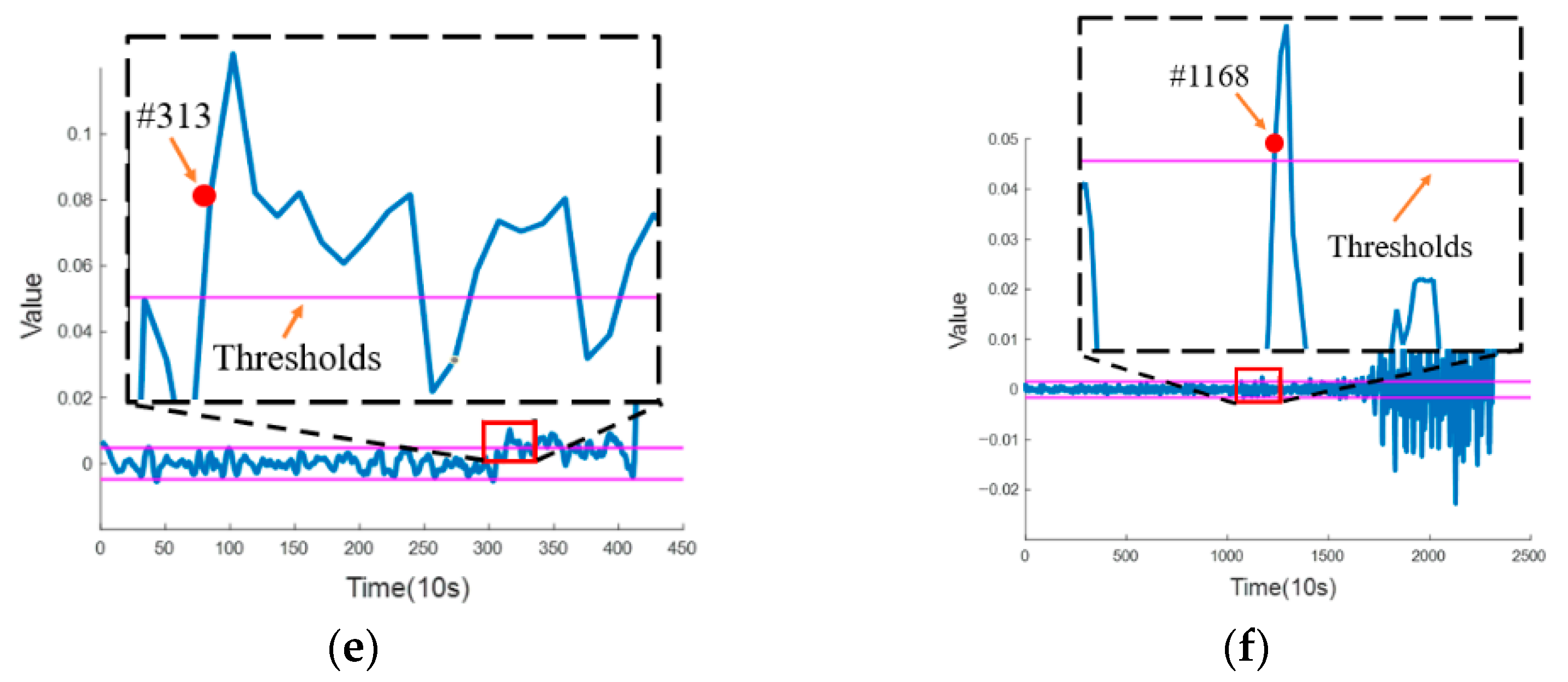

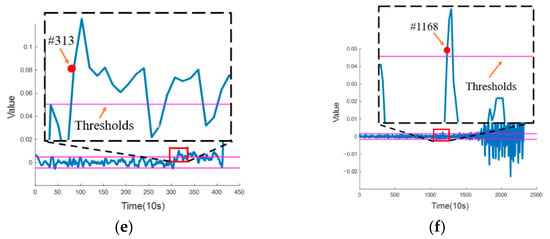

To demonstrate the performance of the proposed model in condition monitoring more clearly, the mean absolute percentage error (MAPE) metric is used. Figure 6 shows the MAPE comparison of four models: ELM, KELM, TK-KELM without SFTKOS and the proposed model.

It is clear from Figure 6 that the error of the proposed model is the lowest. The MAPE of the common KELM model is basically stable between 10% and 15%, and that of the ELM model generally reaches 15% to 25%. However, the MAPE of TK-KELM without SFTKOS is much higher than that of the KELM model, fluctuating widely between 10% and 30%, and is even worse than that of the ELM model in some cases. The MAPE of the proposed model remains between 0 and 10%, showing better transfer performance than the other three models. The proposed SFTKOS is therefore crucial for applying the transfer kernel to the KELM model, as it greatly reduces model errors and improves model generalization.

Figure 6.

The MAPE comparison of four models.
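For reference, the MAPE metric used in this comparison follows the standard definition, which can be computed as in the short Python sketch below. The function name and the example values are illustrative and are not results from the experiments.

```python
import numpy as np

def mape(y_true, y_pred):
    """Mean absolute percentage error in percent (y_true must be nonzero)."""
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    return float(np.mean(np.abs((y_true - y_pred) / y_true)) * 100.0)

# Illustrative values only: relative errors of 10%, 10% and 0%.
example = mape([100.0, 200.0, 400.0], [110.0, 180.0, 400.0])
```

Because each error is divided by the true value, MAPE is undefined when a true value is zero, which should be kept in mind when it is applied to indicators that start near zero.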

4.1.4. Comparing Other Tests

The adaptability of the TK-KELM model is verified through a comparative study against other condition monitoring algorithms. The comparison methods are the gated recurrent unit-based health index (GRU-HI) [32], the two-level alarm mechanism (TLAM) [33], the hidden Markov model (HMM) [34], an unsupervised FPT detection model (USFPTD) [35] and the multilayer cross-domain transformer network (MCTN) [36]. Table 4 compares the condition change point locations identified in tasks A–F by the proposed method and the competing degradation models or detection algorithms. The condition change points determined by the proposed model are relatively early. In particular, compared with the health index GRU-HI, the degradation indicator extracted by the FPCA-MMD method in this paper helps detect changes in bearing status earlier. Compared with MCTN and HMM, the TK-KELM model has obvious advantages for BCM. Of the other two detection algorithms, TLAM performs poorly, whereas USFPTD performs well. For task C, the change point detected by USFPTD is consistent with that detected by the proposed approach, and similar outcomes are obtained for other tasks. In summary, the TK-KELM model demonstrates strong performance in cross-domain BCM tasks, and the bearing degradation index extracted by the FPCA-MMD method is of significant help to BCM tasks.

Table 4.

The condition change point detection comparison.

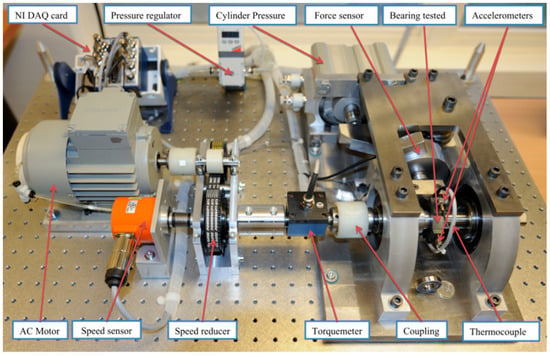

4.2. Case 2: Bearing Fault Diagnosis

4.2.1. Data Description

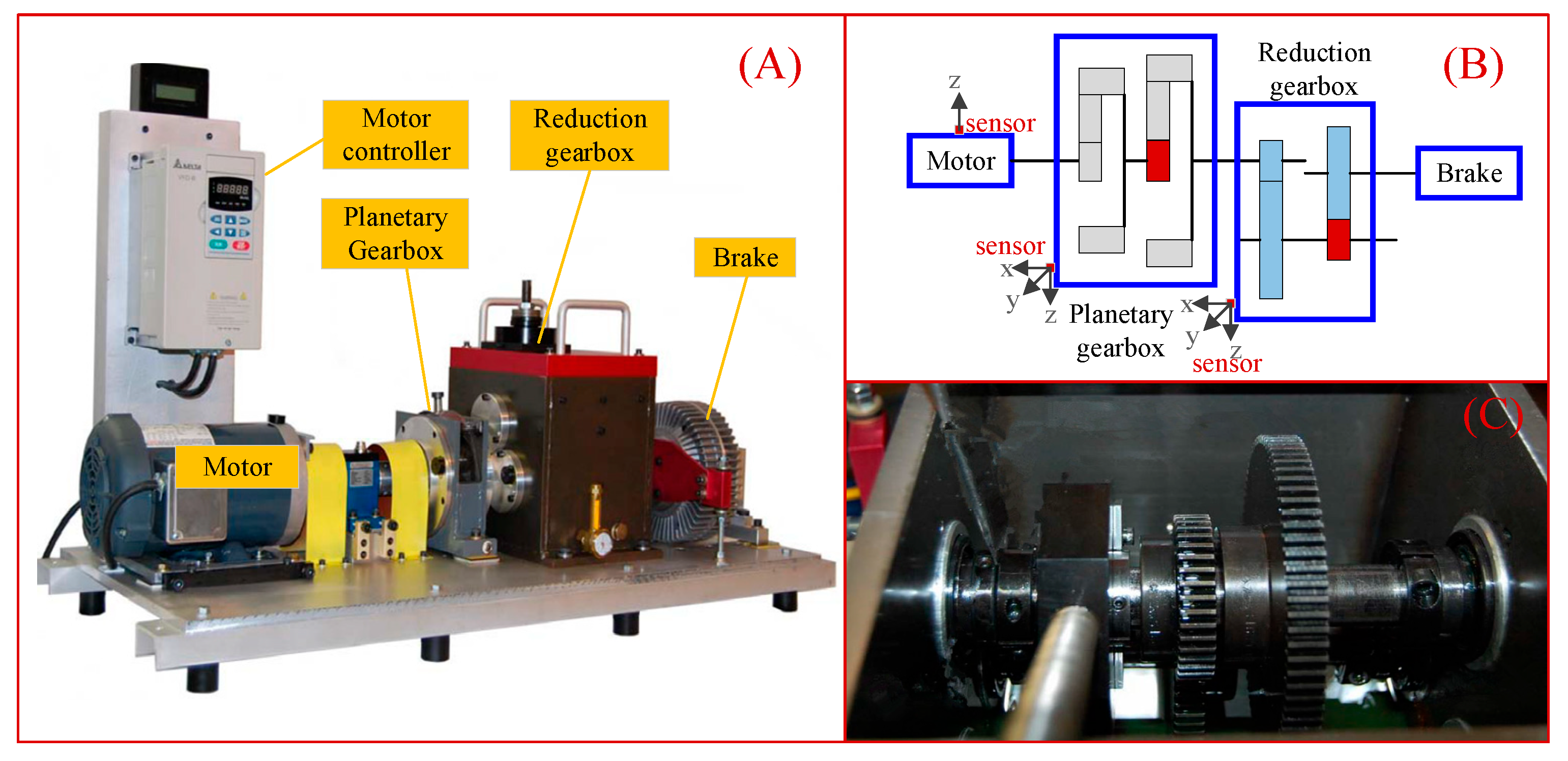

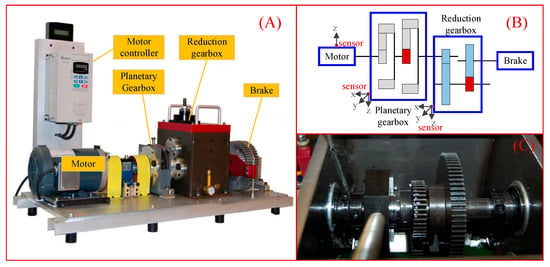

The SEU mechanical dataset [37] contains healthy and faulty data for rolling bearings, collected on the Drivetrain Dynamics Simulator (DDS) shown in Figure 7. The bearing data are sampled at 5120 Hz. As shown in Table 5, the records span a range of operating conditions (different speeds and loads) and include both healthy and damaged states. The states are classified into five categories: Normal (healthy bearing), Ball (fault in the rolling element), Inner (fault in the inner raceway), Outer (fault in the outer raceway), and Com (simultaneous faults in the inner and outer raceways).

Figure 7.

The test platform of DDS. (A) shows the overall structure of the test platform; (B) illustrates the schematic diagram of the system components; (C) presents the partial enlarged view of the gear transmission structure.

Table 5.

Description of the data set used in the experiment.

4.2.2. Task Setting

As shown in Table 6, the transfer tasks were defined over three different rotational speeds and three different loads, where the rotational speeds are denoted D1, D2 and D3 and the system load configurations are denoted L1, L2 and L3. In each task, the target domain contains 1000 samples covering all types of faults (200 samples for each type). The parameter settings are as follows.

Table 6.

The data set of the experiment.

The regularization parameter C in the TK-KELM model is set to 10. The bandwidth σ of the RBF kernel is set to 1.5. The length of the sliding window is 2048 points and the step size is 1024 points. Additionally, each sample is constructed by fusing four time-domain features.
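The windowing and feature construction described above can be sketched as follows. This is a minimal illustration under stated assumptions: the four features are taken to be skewness, kurtosis, RMS and peak (the features named in the SHAP analysis), "fusing" is assumed to mean concatenation into one vector, and the sine wave is a synthetic stand-in for real vibration data. The helper names are hypothetical.

```python
import math


def sliding_windows(signal, length=2048, step=1024):
    """Yield overlapping windows of `length` points, advancing by `step`."""
    for start in range(0, len(signal) - length + 1, step):
        yield signal[start:start + length]


def time_domain_features(window):
    """Return [skewness, kurtosis, RMS, peak] for one window."""
    n = len(window)
    mean = sum(window) / n
    centered = [x - mean for x in window]
    std = math.sqrt(sum(c * c for c in centered) / n)  # population std
    # Standardized third and fourth moments (kurtosis is non-excess,
    # so a Gaussian signal gives about 3); guard against constant windows.
    skewness = sum(c ** 3 for c in centered) / (n * std ** 3) if std > 0 else 0.0
    kurtosis = sum(c ** 4 for c in centered) / (n * std ** 4) if std > 0 else 0.0
    rms = math.sqrt(sum(x * x for x in window) / n)
    peak = max(abs(x) for x in window)
    return [skewness, kurtosis, rms, peak]


# Synthetic stand-in: one second of a 50 Hz sine sampled at 5120 Hz.
fs = 5120
signal = [math.sin(2 * math.pi * 50 * t / fs) for t in range(fs)]
samples = [time_domain_features(w) for w in sliding_windows(signal)]
print(len(samples), "samples of", len(samples[0]), "features")
```

With a 2048-point window and a 1024-point step, consecutive windows overlap by 50%, so each second of data at 5120 Hz yields four samples.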

4.2.3. Experimental Results

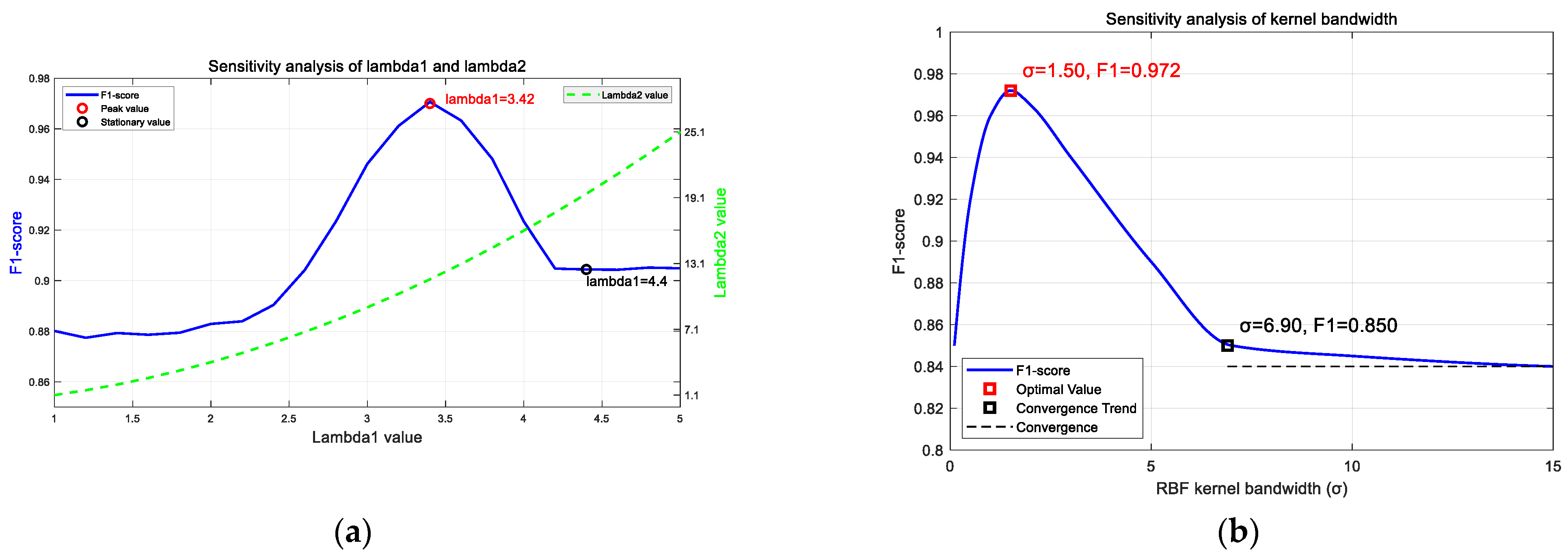

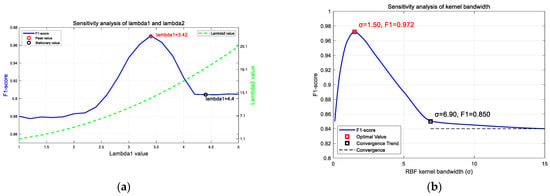

To enhance the transparency and repeatability of the experiments, the sensitivity of the hyperparameters is studied. Taking task A as an example, Figure 8a shows the F1-score of the TK-KELM model as the intra-domain and inter-domain weight parameters change. The F1-score increases at first as the weight parameter grows, reaches its maximum of 0.977 at a value of 3.42, and then dips to 0.904. Similarly, in Figure 8b, the F1-score first increases with the kernel bandwidth σ to 0.972 and then decreases to 0.84, where it stabilizes.

Figure 8.

The sensitivity analysis. (a) depicts the sensitivity of the F1-score to the intra-domain and inter-domain weight parameters; (b) shows the sensitivity of the F1-score to the kernel bandwidth.

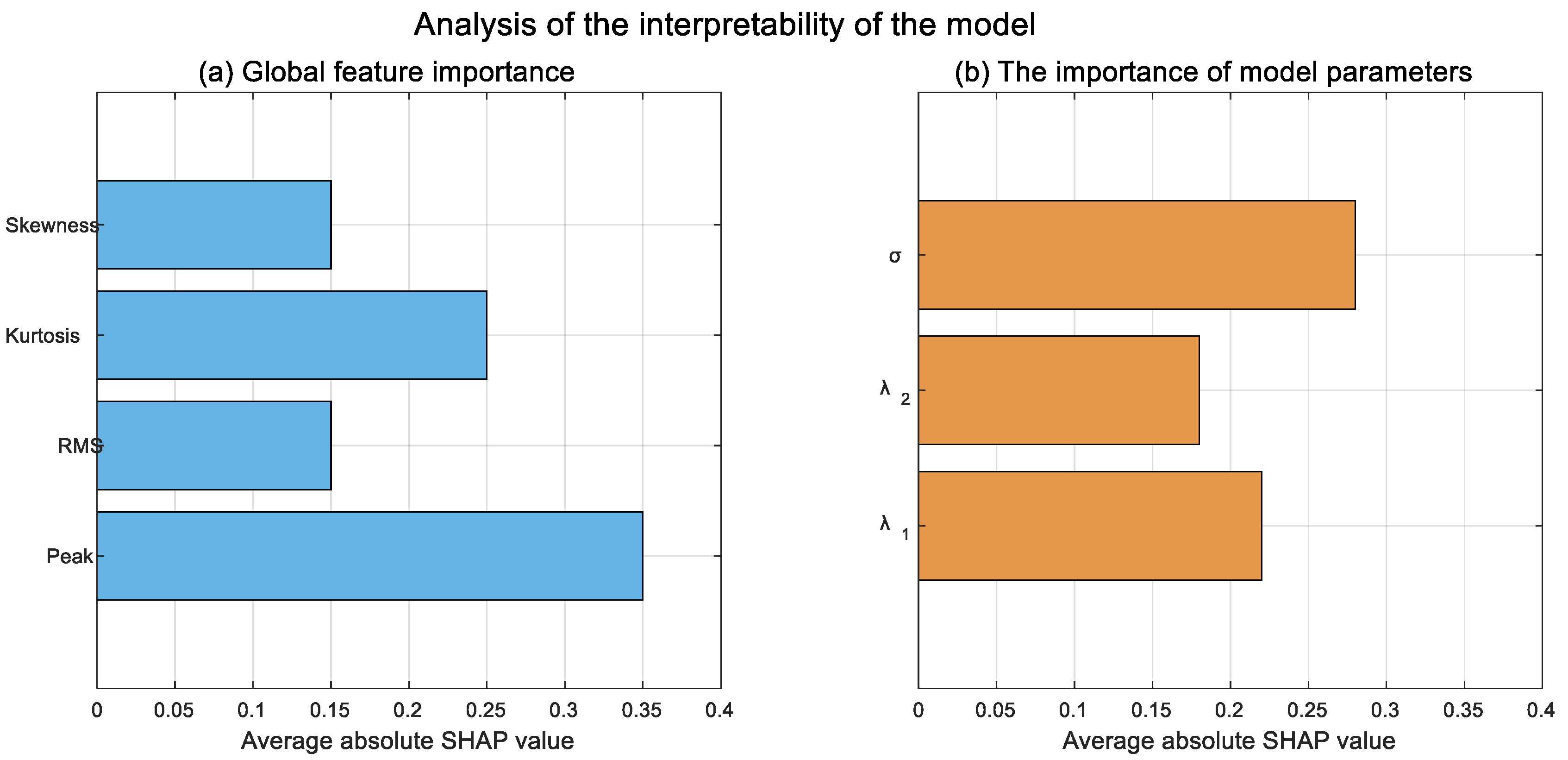

Figure 9a shows the mean absolute SHAP values of skewness, kurtosis, root mean square (RMS) and peak. The higher the SHAP value, the greater the contribution of the feature to the model’s decision-making. Faults cause periodic impacts in the vibration signal, so when the model detects an abnormally elevated peak value in the target domain samples (combined with the transfer kernel parameter to determine the inter-domain differences), it prioritizes the determination of a fault. The SHAP value of kurtosis ranks second, corresponding to the compound fault scenario of bearing failure. Normal vibration signals follow an approximately Gaussian distribution, whereas compound faults lead to an asymmetric distribution with “spikes and long tails”; when the model detects kurtosis anomalies, it strengthens the discrimination of compound faults. RMS reflects the energy level of the vibration signal and can serve as a preliminary indicator of whether a fault exists. Skewness quantifies the asymmetry of the signal distribution and may show abnormalities before peak and kurtosis in the early stage of minor faults.

Figure 9.

The SHAP-based interpretability analysis.

Figure 9b reveals how the hyperparameters guide decisions. The kernel bandwidth σ is critical because it determines the width of the RBF kernel function: the smaller σ is, the more the kernel function focuses on local feature differences; the larger σ is, the more attention is paid to the global feature distribution. The other two hyperparameters are the intra-domain and inter-domain weights. When the inter-domain differences are large, the inter-domain weight can be greater than 1 to enhance the inter-domain alignment strength. When the inter-domain differences are small, it can be less than 1 to retain more intra-domain features.
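The effect of the bandwidth can be illustrated with a short sketch. It assumes the standard Gaussian RBF form exp(-||x - y||^2 / (2σ^2)); the exact normalization used in the paper's transfer kernel, and its intra-domain and inter-domain weights, are not reproduced here. The feature vectors are hypothetical.

```python
import math


def rbf_kernel(x, y, sigma):
    """Standard Gaussian RBF kernel: exp(-||x - y||^2 / (2 * sigma^2))."""
    sq_dist = sum((a - b) ** 2 for a, b in zip(x, y))
    return math.exp(-sq_dist / (2.0 * sigma ** 2))


near = ([0.0, 0.0], [0.5, 0.0])  # a close pair of feature vectors
far = ([0.0, 0.0], [3.0, 0.0])   # a distant pair of feature vectors

for sigma in (0.5, 1.5, 5.0):
    k_near = rbf_kernel(*near, sigma)
    k_far = rbf_kernel(*far, sigma)
    print(f"sigma={sigma}: near={k_near:.3f}, far={k_far:.3f}")
```

With a small σ, only the close pair has a similarity noticeably above zero (local focus); with a large σ, even the distant pair is treated as similar (global focus).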

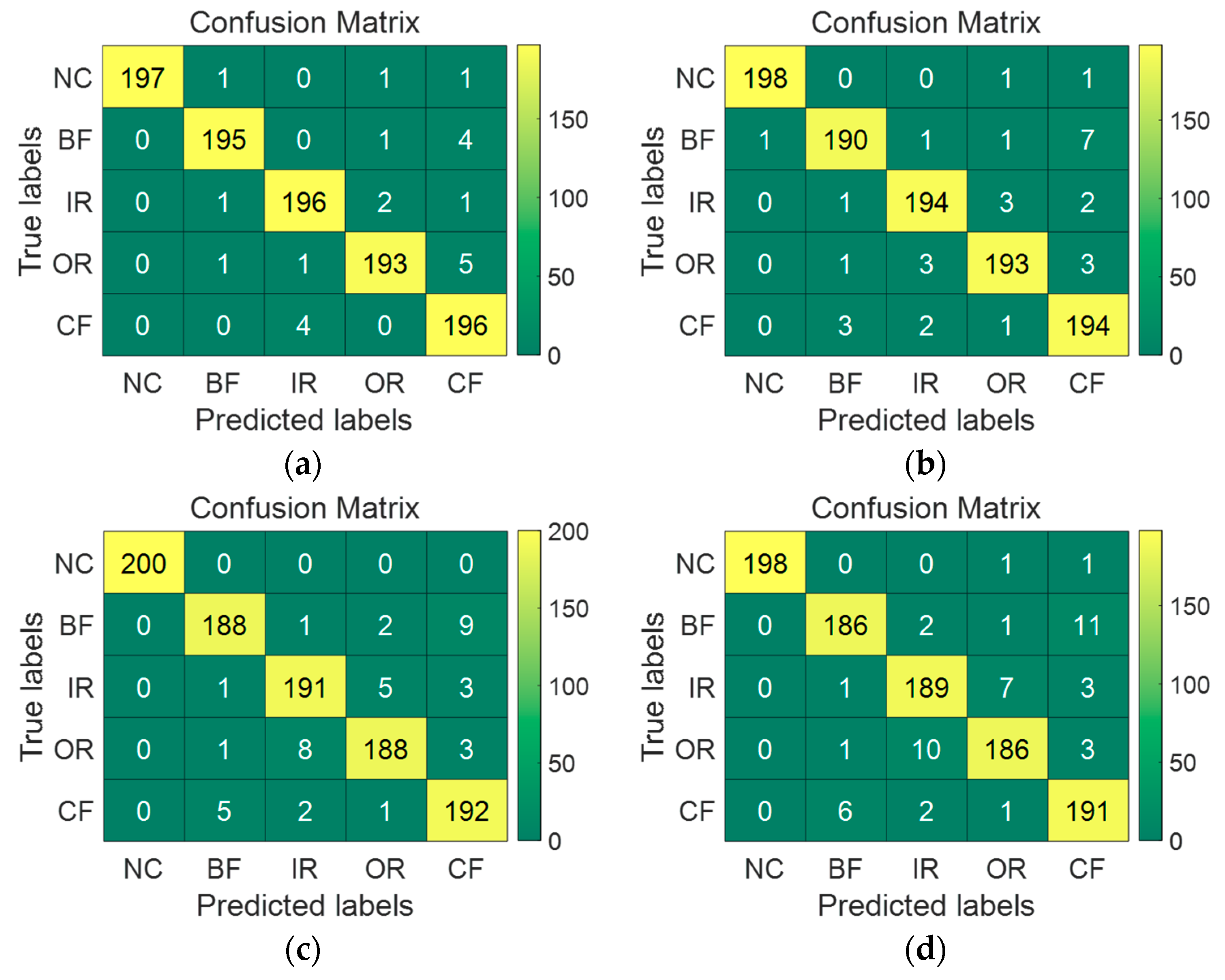

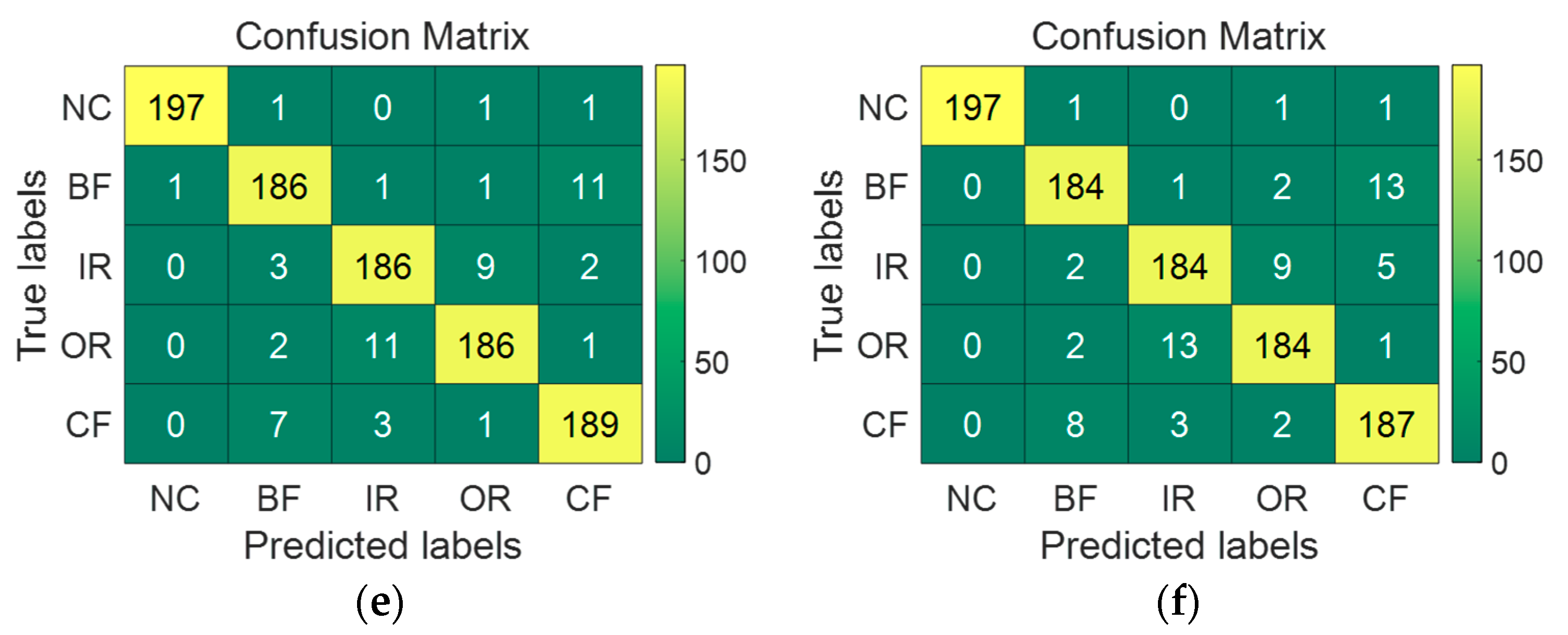

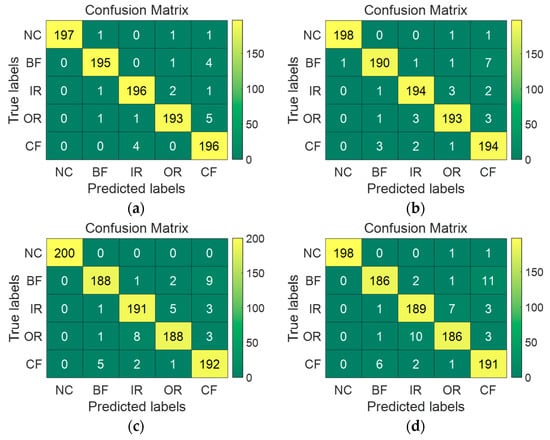

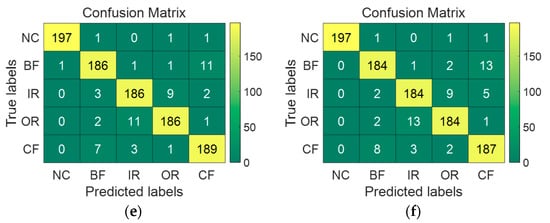

The diagnostic effectiveness of the proposed TK-KELM model on different cross-domain BFD tasks is represented through confusion matrices, as shown in Figure 10. In the confusion matrices, the actual fault categories are represented by rows, while the predicted fault categories are represented by columns. Overall, the model performed well in the six cross-domain transfer learning tasks, with task A and task B having the highest accuracy, followed by task C and task D, and finally task E and task F. It can be found that the performance of the proposed model is better in the case of different speeds than in the case of different loads.

Figure 10.

The confusion matrices of task A–F. (a–f) are the results of tasks A–F, respectively.

To illustrate the classification results, three indicators were used: precision, recall, and the F1-score, which combines the two into an overall evaluation index.

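The corresponding formulas are as follows:

$$\mathrm{Precision}_i = \frac{TP_i}{TP_i + FP_i}, \qquad \mathrm{Recall}_i = \frac{TP_i}{TP_i + FN_i}, \qquad \mathrm{F1}_i = \frac{2 \cdot \mathrm{Precision}_i \cdot \mathrm{Recall}_i}{\mathrm{Precision}_i + \mathrm{Recall}_i}$$

where $TP_i$ represents the number of samples for which both the real and predicted labels are $i$, $FP_i$ represents the number of samples whose predicted label is $i$ but whose actual label differs, and $FN_i$ refers to the number of samples whose real label is $i$ while the predicted label is different from $i$.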
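As a minimal sketch, the three indicators can be computed per class from a confusion matrix laid out as in Figure 10 (rows are actual classes, columns are predicted classes). The matrix values below are hypothetical, and the macro average over classes is an assumption about how a single F1-score per task is obtained.

```python
def per_class_metrics(confusion):
    """Return a (precision, recall, F1) tuple for each class.

    confusion[i][j] counts samples whose actual class is i (row)
    and whose predicted class is j (column).
    """
    n = len(confusion)
    results = []
    for i in range(n):
        tp = confusion[i][i]
        fp = sum(confusion[r][i] for r in range(n)) - tp  # predicted i, actually other
        fn = sum(confusion[i]) - tp                       # actually i, predicted other
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        f1 = (2 * precision * recall / (precision + recall)
              if precision + recall else 0.0)
        results.append((precision, recall, f1))
    return results


# Hypothetical 3-class confusion matrix, for illustration only.
cm = [
    [8, 2, 0],
    [1, 9, 0],
    [0, 0, 10],
]
metrics = per_class_metrics(cm)
macro_f1 = sum(f1 for _, _, f1 in metrics) / len(metrics)
for label, (p, r, f1) in enumerate(metrics):
    print(f"class {label}: precision={p:.3f} recall={r:.3f} F1={f1:.3f}")
print(f"macro F1 = {macro_f1:.3f}")
```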

To assess the reliability and robustness of the proposed TK-KELM model, 10-fold cross-validation was conducted for all tasks (A–F). As presented in Table 7, the TK-KELM model is stable, with low standard deviations for all tasks.

Table 7.

The 10-fold cross-validation results.
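The 10-fold procedure can be sketched as below. This is an illustrative outline only: `dummy_evaluate` is a hypothetical placeholder for training and scoring the model on one fold, and the shuffling seed and fold assignment are assumptions rather than the authors' protocol.

```python
import random
import statistics


def k_fold_indices(n_samples, k=10, seed=0):
    """Split shuffled sample indices into k nearly equal folds."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    return [indices[i::k] for i in range(k)]


def cross_validate(n_samples, evaluate, k=10):
    """Call evaluate(train_idx, test_idx) per fold; return (mean, std)."""
    folds = k_fold_indices(n_samples, k)
    scores = []
    for i, test_idx in enumerate(folds):
        # Every fold except the i-th one is used for training.
        train_idx = [j for m, fold in enumerate(folds) if m != i for j in fold]
        scores.append(evaluate(train_idx, test_idx))
    return statistics.mean(scores), statistics.stdev(scores)


def dummy_evaluate(train_idx, test_idx):
    """Placeholder scorer; a real one would fit and score the model."""
    return len(train_idx) / (len(train_idx) + len(test_idx))


mean_score, std_score = cross_validate(1000, dummy_evaluate)
print(f"score = {mean_score:.3f} +/- {std_score:.3f}")
```

The mean and standard deviation across folds are the quantities that a table such as Table 7 summarizes for each task.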

4.2.4. Comparing Other Tests

To demonstrate the superiority of the TK-KELM model, it is compared with other transfer learning approaches: transfer component analysis (TCA) [38], joint distribution adaptation (JDA) [39], domain adaptive neural network (DANN) [40], multi-representation adaptation network (MRAN) [41], deep convolutional transfer learning network (DCTLN) [42], contrastive learning (CL) [43], deep correlation alignment (Deep CORAL) [44], maximum classifier discrepancy (MCD) [45], shrinkage mamba relation network (SMRN) and relation network (RN) [26].

As shown in Table 8, the proposed method achieved an average F1-score of 0.956, substantially higher than that of TCA (0.644), JDA (0.742), MRAN (0.756), DANN (0.774), DCTLN (0.799) and CL (0.750), as well as the variants without SFTKOS (0.721) and without FPCA-MMD (0.745). Looking at these results more closely, both TCA and JDA use a Gaussian kernel function, but they focus mainly on reducing differences between the marginal distributions of the domains. In contrast, the similarity-guided feature-directed transfer kernel optimization strategy proposed in this paper adjusts the kernel function according to the similarity characteristics of the inter-domain data sets, so that similar samples from different domains are aligned more closely in the high-dimensional space, which improves transfer performance. MRAN handles the alignment of different modes or features more comprehensively through multiple representation learning and therefore performs well in multi-modal cross-domain tasks, but its treatment of the nonlinear relationships between features is still coarse. DANN’s adversarial training involves competition between the feature extractor and the domain discriminator, producing feature representations that are both class-discriminative and domain-invariant; although this enhances the transferability of the features, it also reduces their discriminability. DCTLN shows decent performance, but it may not fully exploit the underlying similarities between domains that are needed to improve transfer performance in more challenging scenarios. CL is powerful in representation learning, especially in unsupervised settings, but it does not explicitly account for domain alignment between source and target. Deep CORAL aligns only second-order statistics and has difficulty capturing the distribution differences of complex faults. MCD relies on classifier-discrepancy-driven adaptation and is not sufficiently robust to the multi-task domain shifts of bearing faults. Without SFTKOS or FPCA-MMD, the F1-score of the model decreased significantly.

Therefore, the TK-KELM model stands out because it uses the transfer kernel to process nonlinear data, employs an effective transfer kernel optimization method to further refine the transfer kernel, and uses the FPCA-MMD method to extract multi-scale, domain-invariant features and build the degradation tracking indicator.

Table 8.

Comparison of diagnostic F1-score.

To further validate the practicality of the proposed TK-KELM in zero-fault bearing fault diagnosis, especially for industrial real-time deployment, Table 9 reports computational efficiency metrics: training time, single-sample prediction time, memory usage and CPU/GPU dependency. All experiments were conducted on the same hardware platform (Intel Core i7-10700K CPU @ 3.80 GHz, NVIDIA RTX 3090), and runtimes are averaged over 10 independent trials to ensure reliability. TK-KELM is computationally efficient: with a training time of 9.6 ± 0.4 s, it is slightly slower than the lightweight TCA but far faster than the deep learning methods (DANN: 89.5 ± 2.8 s; DCTLN: 156.2 ± 4.2 s) and MRAN (35.7 ± 1.1 s). Its single-sample prediction time (0.14 ± 0.02 ms) meets real-time needs. TK-KELM consumes 482 ± 22 MB of memory at 20 ± 3% CPU load, far lower than the GPU-based methods. Balancing accuracy and efficiency, it is well suited to industrial real-time deployment.

Table 9.

Comparison of computational efficiency.
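Runtime figures of the form "mean ± deviation over 10 trials" can be gathered with a simple timing harness such as the sketch below. It assumes the reported spread is a sample standard deviation; `placeholder_workload` is a hypothetical stand-in for the training or prediction call being measured.

```python
import statistics
import time


def time_trials(fn, n_trials=10):
    """Run fn n_trials times; return (mean, std) of wall-clock seconds."""
    durations = []
    for _ in range(n_trials):
        start = time.perf_counter()
        fn()
        durations.append(time.perf_counter() - start)
    return statistics.mean(durations), statistics.stdev(durations)


def placeholder_workload():
    """Stand-in for model training or prediction (hypothetical)."""
    return sum(i * i for i in range(50_000))


mean_s, std_s = time_trials(placeholder_workload)
print(f"runtime = {mean_s * 1e3:.2f} +/- {std_s * 1e3:.2f} ms over 10 trials")
```

`time.perf_counter` is used because it is a monotonic, high-resolution clock intended for measuring short durations.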

4.3. Case 3: Bearing Fault Diagnosis in the Zero-Faulty Environment

To address the zero-fault scenario, where the target domain lacks fault data, this paper integrates the deep convolutional generative adversarial networks (DCGAN) [46] and out-of-distribution data augmentation (OOD-DA) [26] with the proposed TK-KELM model, respectively. The core idea is to generate pseudo-fault samples consistent with the data distribution of the target domain by using the fault knowledge of the source domain, so as to conduct effective fault diagnosis when the fault data of the target domain is unavailable.

4.3.1. Data Description

For the zero-fault environment verification, vibration data from the Paderborn University bearing dataset [47] were used; the test platform is shown in Figure 11. The dataset encompasses signal data from three types of bearings: healthy bearings, bearings with artificial damage and bearings with realistic damage. All these bearings operated under three distinct load conditions, shown in Table 10, and at a rotational speed of 1500 rpm. For each bearing under a specific load setting, a vibration signal lasting roughly 4 s was measured. With a sampling rate of 64 kHz, each measurement resulted in a total of about 256,000 data points.

Figure 11.

The platform of Paderborn bearing data.

Table 10.

The settings of Paderborn platform.

4.3.2. Task Setting

Table 11 presents three transfer tasks, namely task A, task B and task C, which simulate the transfer of source domain knowledge to the target domain with different fault diameters. As shown in Table 12, there are 1000 samples for each type of source domain. To achieve a zero-fault environment, the training samples for the target domain consist of only 250 normal samples and 50 pseudo-fault samples generated by DCGAN and OOD-DA. The test samples for the target domain have 200 samples for each type of fault.

Table 11.

The transfer tasks in zero-faulty environment.

Table 12.

The data set of the transfer tasks in zero-faulty environment.

4.3.3. Comparing Other Tests

Table 13 shows that the proposed TK-KELM model achieves strong performance in zero-fault environments when using OOD-DA. This result supports the model’s integrated design: by combining pseudo-fault samples generated by OOD-DA with the transfer kernel mechanism, it overcomes the challenge of scarce fault data in target domains. Traditional methods, such as TCA (0.538) and JDA (0.582), struggle here, as their heavy dependence on explicit fault features causes poor generalization when target domain training lacks real faults. Similarly, without FPCA-MMD and SFTKOS, it is difficult to train the model effectively. TK-KELM and other deep learning models use the pseudo faults generated by DCGAN as a bridge to compensate for the scarcity of fault data in the target domain. Although these pseudo faults are not real, they help the model learn the boundary between normal and abnormal states. In contrast, methods like CL, which lack effective mechanisms for scarce-fault scenarios, cannot extract discriminative features from the limited normal data, resulting in a lower F1-score. Despite leveraging Mamba for sequence modeling, SMRN and RN are constrained by their reliance on source data alone, lacking pseudo-fault augmentation to generalize to unseen target-domain fault patterns.

Table 13.

Comparison of diagnostic F1-score in zero-faulty environment.

5. Conclusions

This paper proposes a novel transfer kernel enabled kernel extreme learning machine (TK-KELM) model for bearing fault diagnosis and condition monitoring. It addresses two problems in cross-domain scenarios: intra-domain relationships are ignored, and the weighting parameters describing inter-domain relationships are restricted in range. Experimental results show that the TK-KELM method is more accurate than conventional methods and more effective in BCM and BFD. Although the proposed TK-KELM model performs well, it is important to recognize its current limitations. First, in Case 3, the reliance on DCGAN-generated pseudo faults may restrict generalization to unseen real fault types, as synthetic samples cannot fully replicate the complexity of actual fault characteristics. Second, the model’s adaptability to varying operating conditions is limited, with performance fluctuating across different settings as observed in Case 2. To address these limitations, future efforts will focus on developing adaptive normal pattern learning mechanisms and on designing adaptive transfer mechanisms that adjust dynamically to varying operating conditions, in order to reduce dependence on pseudo-fault samples and mitigate performance fluctuations across different settings.

Author Contributions

Methodology, H.Y.; Software, W.S.; Formal analysis, J.M.; Data curation, H.W.; Writing—original draft, H.Y. and C.C.; Writing—review & editing, H.Y.; Supervision, C.C.; Funding acquisition, C.C. All authors have read and agreed to the published version of the manuscript.

Funding

This work is supported by the National Natural Science Foundation of China (52305109).

Data Availability Statement

Data are contained within the article.

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
| --- | --- |
| BCM | Bearing condition monitoring |
| BFD | Bearing fault diagnosis |
| ML | Machine learning |
| STL | Statistical transfer learning |
| KTL | Kernel transfer learning |
| TK-KELM | Transfer kernel enabled kernel extreme learning machine |
| SFTKOS | Similarity-guided feature-directed transfer kernel optimization strategy |
| FPCA-MMD | Functional principal component analysis with maximum mean difference |
| MAPE | Mean absolute percentage error |
| GRU-HI | Gated recurrent unit-based health index |
| TLAM | Two-level alarm mechanism |
| HMM | Hidden Markov model |
| USFPTD | Unsupervised FPT detection model |
| MCTN | Multilayer cross-domain transformer network |
| TCA | Transfer component analysis |
| JDA | Joint distribution adaptation |
| DANN | Domain adaptive neural network |
| MRAN | Multi-representation adaptation network |
| DCTLN | Deep convolutional transfer learning network |
| CL | Contrastive learning |
| DCGAN | Deep convolutional generative adversarial networks |
| Deep CORAL | Deep correlation alignment |
| OOD-DA | Out-of-distribution data augmentation |
| SMRN | Shrinkage mamba relation network |
| RN | Relation network |

References

1. Choi, K.; Yi, J.; Park, C.; Yoon, S. Deep Learning for Anomaly Detection in Time-Series Data: Review, Analysis, and Guidelines. IEEE Access 2021, 9, 120043–120065.

2. Cao, H.; Niu, L.; Xi, S.; Chen, X. Mechanical model development of rolling bearing-rotor systems: A review. Mech. Syst. Signal Process. 2018, 102, 37–58.

3. Truong, C.; Oudre, L.; Vayatis, N. Selective review of offline change point detection methods. Signal Process. 2020, 167, 107299.

4. Zhang, Q.; Chen, Q.; Xu, L.; Xu, X.; Liang, Z. Wheat Lodging Direction Detection for Combine Harvesters Based on Improved K-Means, Bag of Visual Words. Agronomy 2023, 13, 2227.

5. Patacchiola, M.; Turner, J.; Crowley, E.J.; Storkey, A.J. Bayesian Meta-Learning for the Few-Shot Setting via Deep Kernels. Adv. Neural Inf. Process. Syst. 2020, 33, 16108–16118.

6. Huang, S.; Cai, N.; Narrandes, S.; Wang, Y.; Xu, W. Applications of Support Vector Machine (SVM) Learning in Cancer Genomics. Cancer Genom. Proteom. 2018, 15, 41–51.

7. Xu, J.; Fang, M.; Zhao, W.; Fan, Y.; Ding, X. Deep Transfer Learning Remaining Useful Life Prediction of Different Bearings. In Proceedings of the International Joint Conference on Neural Networks, Shenzhen, China, 18–22 July 2021; pp. 1–8.

8. Antoni, J.; Borghesani, P. A statistical methodology for the design of condition indicators. Mech. Syst. Signal Process. 2019, 114, 290–327.

9. Chen, B.; Cheng, Y.; Cao, H.; Song, S.; Mei, G.; Gu, F.; Zhang, W.; Ball, A.D. Generalized Statistical Indicators-Guided Signal Blind Deconvolution for Fault Diagnosis of Railway Vehicle Axle-Box Bearings. IEEE Trans. Veh. Technol. 2025, 74, 2458–2469.

10. Chen, B.; Cheng, Y.; Allen, P.; Wang, S.; Gu, F.; Zhang, W.; Ball, A.D. A product envelope spectrum generated from spectral correlation/coherence for railway axle-box bearing fault diagnosis. Mech. Syst. Signal Process. 2025, 225, 112262.

11. Zhou, X.; Sun, J.; Tian, Y.; Lu, B.; Hang, Y.; Chen, Q. Hyperspectral technique combined with deep learning algorithm for detection of compound heavy metals in lettuce. Food Chem. 2020, 321, 126503.

12. Tsung, F.; Zhang, K.; Cheng, L.; Song, Z. Statistical transfer learning: A review and some extensions to statistical process control. Qual. Eng. 2017, 30, 115–128.

13. Yan, T.; Wang, D.; Hou, B.; Peng, Z. Generic Framework for Integration of First Prediction Time Detection with Machine Degradation Modelling from Frequency Domain. IEEE Trans. Reliab. 2022, 71, 1464–1476.

14. Chen, C.; Zhu, W.; Steibel, J.; Siegford, J.; Han, J.; Norton, T. Classification of drinking, drinker-playing in pigs by a video-based deep learning method. Biosyst. Eng. 2020, 196, 1–14.

15. Hu, Q.; Si, X.; Qin, A.; Lv, Y.; Liu, M. Balanced Adaptation Regularization Based Transfer Learning for Unsupervised Cross-Domain Fault Diagnosis. IEEE Sens. J. 2022, 22, 12139–12151.

16. Ganin, Y.; Ustinova, E.; Ajakan, H.; Germain, P.; Larochelle, H.; Laviolette, F.; Marchand, M.; Lempitsky, V. Domain-adversarial training of neural networks. J. Mach. Learn. Res. 2016, 17, 1–35.

17. Ganin, Y.; Kulkarni, T.; Babuschkin, I.; Ali Eslami, S.M.; Vinyals, O. Synthesizing Programs for Images using Reinforced Adversarial Learning. In Proceedings of the Machine Learning Research, Stockholm, Sweden, 10–15 July 2018; pp. 1666–1675.

18. Radhakrishnan, A.; Ruiz Luyten, M.; Prasad, N.; Uhler, C. Transfer Learning with Kernel Methods. Nat. Commun. 2023, 14, 5570.

19. Cao, B.; Pan, S.J.; Zhang, Y.; Yeung, D.; Yang, Q. Adaptive transfer learning. In Proceedings of the AAAI Conference on Artificial Intelligence, Atlanta, GA, USA, 11–15 July 2010; pp. 407–412.

20. Wagle, N.; Frew, E.W. Forward Adaptive Transfer of Gaussian Process Regression. J. Aerosp. Inform. Syst. 2017, 14, 214–231.

21. Wei, P.; Vo, T.V.; Qu, X.; Ong, Y.S.; Ma, Z. Transfer Kernel Learning for Multi-Source Transfer Gaussian Process Regression. IEEE Trans. Pattern Anal. Mach. Intell. 2023, 45, 3862–3876.

22. Wei, P.; Sagarna, R.; Ke, Y.; Ong, Y.-S. Practical Multisource Transfer Regression with Source–Target Similarity Captures. IEEE Trans. Neural Netw. Learn. Syst. 2021, 32, 3498–3509.

23. Zheng, X.; Fan, W.; Chen, C.; Peng, Z. Adaptive Two-Stage Model for Bearing Remaining Useful Life Prediction Using Gaussian Process Regression with Matched Kernels. IEEE Trans. Reliab. 2024, 73, 1958–1966.

24. Lu, X.; Ma, Z.; Lin, Y. Domain Adaptive Learning Based on Sample-Dependent and Learnable Kernels. arXiv 2021, arXiv:2102.09340.

25. Wu, Z.; Jiang, H.; Zhao, K.; Li, X. An adaptive deep transfer learning method for bearing fault diagnosis. Measurement 2020, 151, 107227.

26. Chen, Z.; Huang, H.; Deng, Z.; Wu, J. Shrinkage mamba relation network with out-of-distribution data augmentation for rotating machinery fault detection and localization under zero-faulty data. Mech. Syst. Signal Process. 2025, 224, 112145.

27. Huang, G.; Zhu, Q.; Siew, C. Extreme learning machine: Theory and applications. Neurocomputing 2006, 70, 489–501.

28. Gretton, A.; Borgwardt, K.M.; Rasch, M.J.; Schölkopf, B.; Smola, A. A kernel two-sample test. J. Mach. Learn. Res. 2012, 13, 723–773.

29. Wei, Y.; Xiao, G.; Deng, H.; Chen, H.; Tong, M.; Zhao, G.; Liu, Q. Hyperspectral image classification using FPCA-based kernel extreme learning machine. Optik 2015, 126, 3942–3948.

30. Li, Z.; Cheng, D.; Zhang, H.; Zhou, K.; Wang, Y. Multi-feature spaces cross adaption transfer learning-based bearings piece-wise remaining useful life prediction under unseen degradation data. Adv. Eng. Inform. 2024, 60, 102413.

31. Nectoux, P.; Gouriveau, R.; Medjaher, K.; Ramasso, E.; Morello, B.; Zerhouni, N.; Varnier, C. PRONOSTIA: An Experimental Platform for Bearings Accelerated Life Test. In Proceedings of the IEEE International Conference on Prognostics and Health Management, Denver, CO, USA, 18–21 June 2012.

32. Xu, C.; Yang, K.; Chen, X.; Luo, X.; Yu, H. Transfer Learning for Regression through Adaptive Gaussian Process. In Proceedings of the International Conference on Tools for Artificial Intelligence, Macao, China, 31 October–2 November 2022; pp. 42–47.

33. Cheng, H.; Kong, X.; Wang, Q.; Ma, H.; Yang, S. The two-stage RUL prediction across operation conditions using deep transfer learning and insufficient degradation data. Reliab. Eng. Syst. Saf. 2022, 225, 108581.

34. Zhu, J.; Chen, N.; Shen, C. A new data-driven transferable remaining useful life prediction approach for bearing under different working conditions. Mech. Syst. Signal Process. 2020, 139, 106602.

35. Chen, K.; Liu, J.; Guo, W.; Wang, X. A two-stage approach based on Bayesian deep learning for predicting remaining useful life of rolling element bearings. Comput. Electr. Eng. 2023, 109, 108745.

36. Zhang, Y.; Feng, K.; Yu, K.; Ji, J.C.; Ren, Z.; Liu, Z. Dynamic Model-Assisted Bearing Remaining Useful Life Prediction Using the Cross-Domain Transformer Network. IEEE/ASME Trans. Mechatron. 2023, 28, 1070–1080.

37. Shao, S.; McAleer, S.; Yan, R.; Baldi, P. Highly Accurate Machine Fault Diagnosis Using Deep Transfer Learning. IEEE Trans. Ind. Inf. 2019, 15, 2446–2455.

38. Pan, S.J.; Tsang, I.W.; Kwok, J.T.; Yang, Q. Domain Adaptation via Transfer Component Analysis. IEEE Trans. Neural Netw. 2011, 22, 199–210.

39. Han, T.; Liu, C.; Yang, W.; Jiang, D. Deep transfer network with joint distribution adaptation: A new intelligent fault diagnosis framework for industry application. ISA Trans. 2020, 97, 269–281.

40. Ghifary, M.; Kleijn, W.B.; Zhang, M. Domain Adaptive Neural Networks for Object Recognition. arXiv 2014, arXiv:1409.6041.

41. Zhu, Y.; Zhuang, F.; Wang, J.; Chen, J.; Shi, Z.; Wu, W.; He, Q. Multi-representation adaptation network for cross-domain image classification. Neural Netw. 2019, 119, 214–221.

42. Guo, L.; Lei, Y.; Xing, S.; Yan, T.; Li, N. Deep Convolutional Transfer Learning Network: A New Method for Intelligent Fault Diagnosis of Machines With Unlabeled Data. IEEE Trans. Ind. Electron. 2019, 66, 7316–7325.

43. He, K.; Fan, H.; Wu, Y.; Xie, S.; Girshick, R. Momentum Contrast for Unsupervised Visual Representation Learning. arXiv 2020, arXiv:1911.05722v3.

44. Li, X.; Zhang, Z.; Gao, L.; Wen, L. A New Semi-Supervised Fault Diagnosis Method via Deep CORAL and Transfer Component Analysis. IEEE Trans. Emerg. Top. Comput. Intell. 2022, 6, 690–699.

45. Cho, J.W.; Kim, D.-J.; Jung, Y.; Kweon, I.S. MCDAL: Maximum Classifier Discrepancy for Active Learning. IEEE Trans. Neural Netw. Learn. Syst. 2023, 34, 8753–8763.

46. Radford, A.; Metz, L.; Chintala, S. Unsupervised Representation Learning with Deep Convolutional Generative Adversarial Networks. arXiv 2016, arXiv:1511.06434.

47. Lessmeier, C.; Kimotho, J.K.; Zimmer, D.; Sextro, W. Condition Monitoring of Bearing Damage in Electromechanical Drive Systems by Using Motor Current Signals of Electric Motors: A Benchmark Data Set for Data-Driven Classification. In Proceedings of the European Conference of the Prognostics and Health Management Society (PHM Society), Bilbao, Spain, 5–8 July 2016.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).