How to Win Bosch Future Mobility Challenge: Design and Implementation of the VROOM Autonomous Scaled Vehicle

Abstract

1. Introduction

2. Literature Review

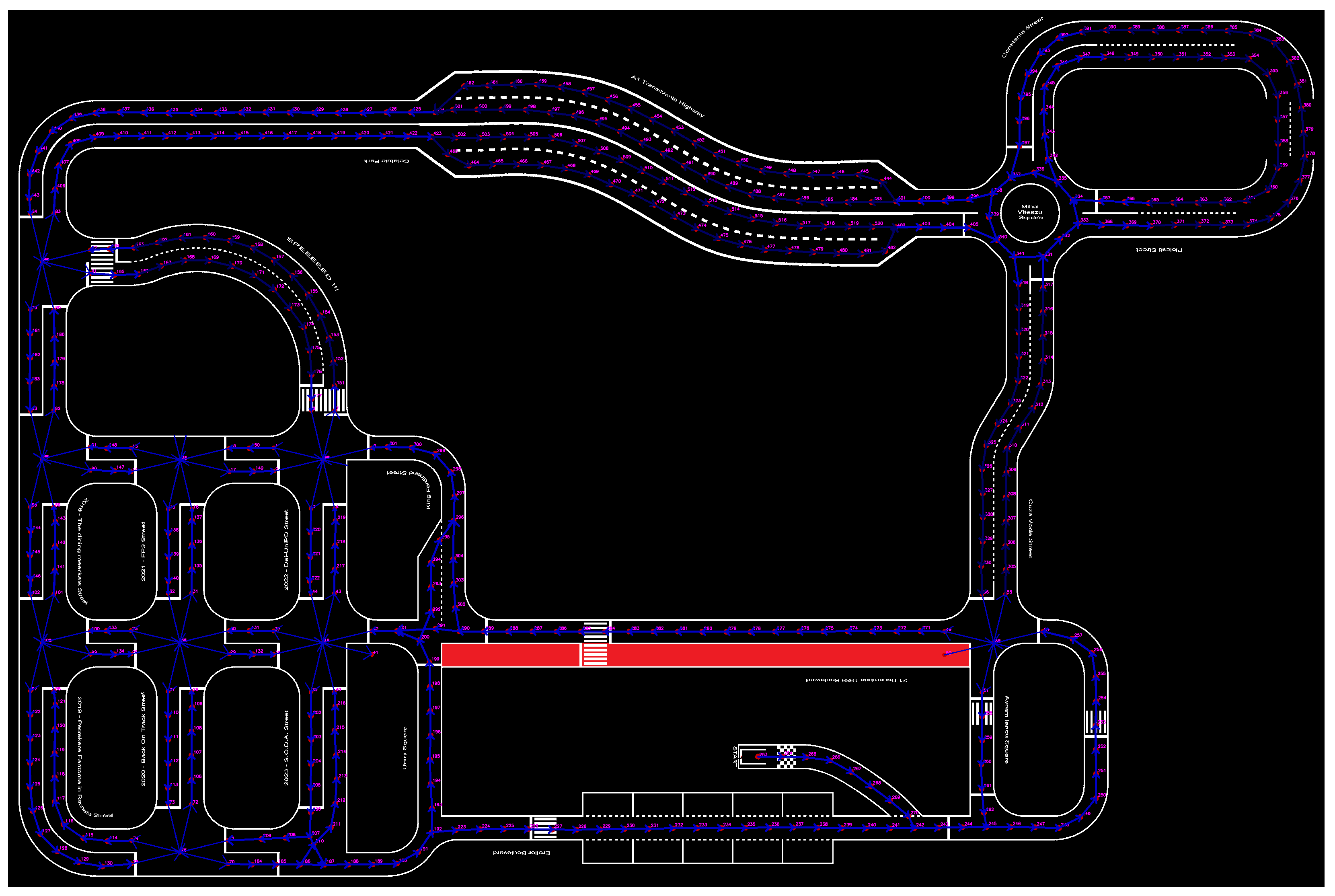

3. The Bosch Future Mobility Challenge Competition

- Project Development: Teams submit monthly status updates showcasing their progress, approach, and future plans. These updates include a written report, a video, and a project timeline.

- Speed Challenge: This is an additional run where points are awarded only if the vehicle successfully completes a predetermined path within a set time limit.

- Car Concept: This is an evaluation focusing on several criteria, including the scalability of the vehicle, reconfigurability, and robustness. Another factor is the hardware-to-software ratio, examining how well the vehicle performs given its hardware. Teams present these aspects before their runs, highlighting the strengths of their designs.

- Run Evaluation: Points are given based on the vehicle’s overall performance, considering the experience from both a hypothetical passenger’s perspective and that of a pedestrian observing the vehicle.

3.1. Competition Requirements

3.2. Team Requirements

4. Implementation

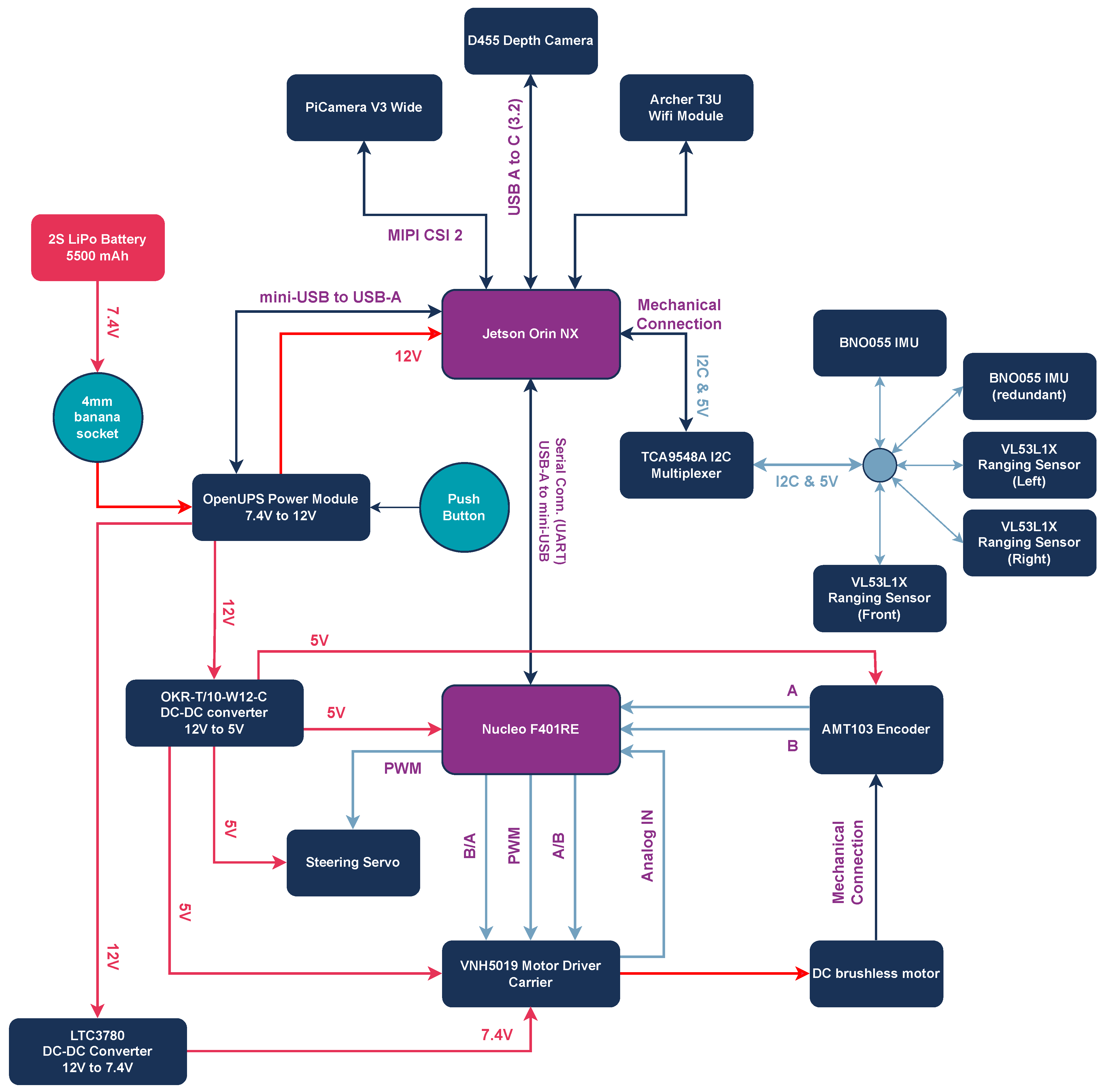

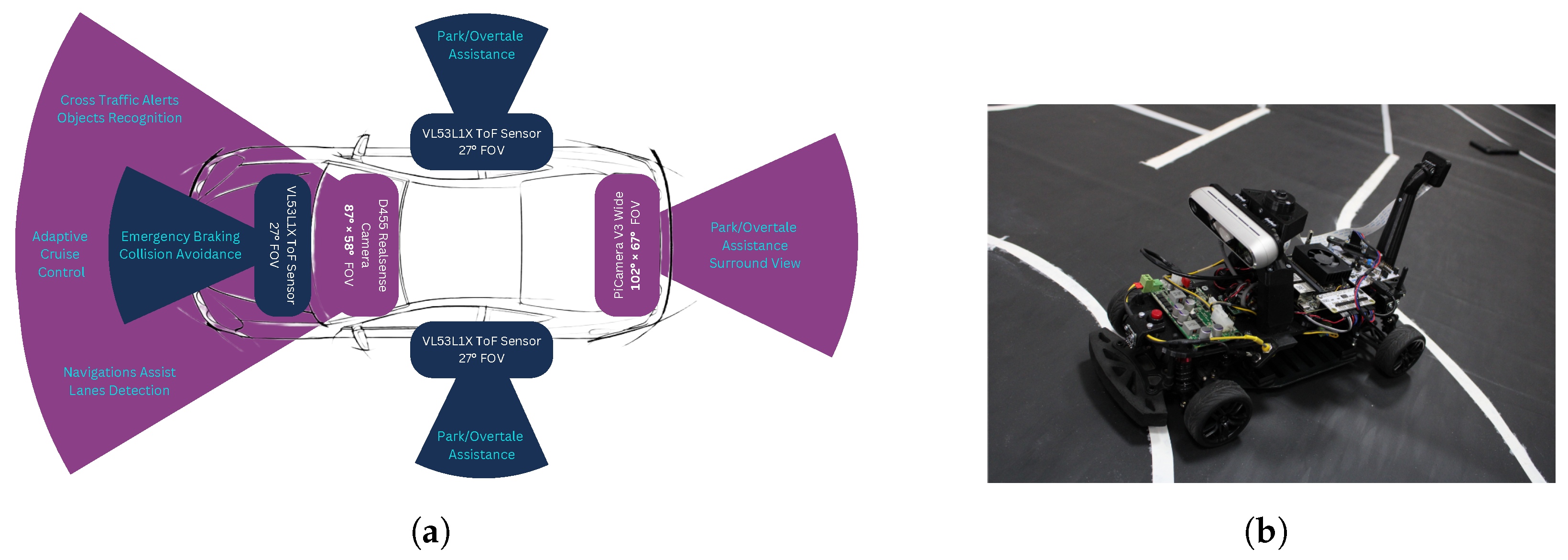

4.1. Hardware Design and Implementation

- All ranging sensors and IMUs communicate with the Jetson over the I2C protocol through the MUX. The physical connection is achieved with jumper wires, while the MUX fits directly on the 40-pin header of the Jetson carrier board.

- The depth camera communicates via the USB 3.2 protocol with the Jetson platform. The physical connection is achieved via a USB-A to USB-C cable.

- The Nucleo and the OpenUPS board communicate via the USB 2.0 protocol with the Jetson platform (serial). The physical connection is achieved via USB-A to miniUSB-A cables.

- The PiCamera communicates via the MIPI CSI protocol with the Jetson platform. The physical connection is achieved via a 22-pin Ribbon Flexible Flat Cable (FFC).

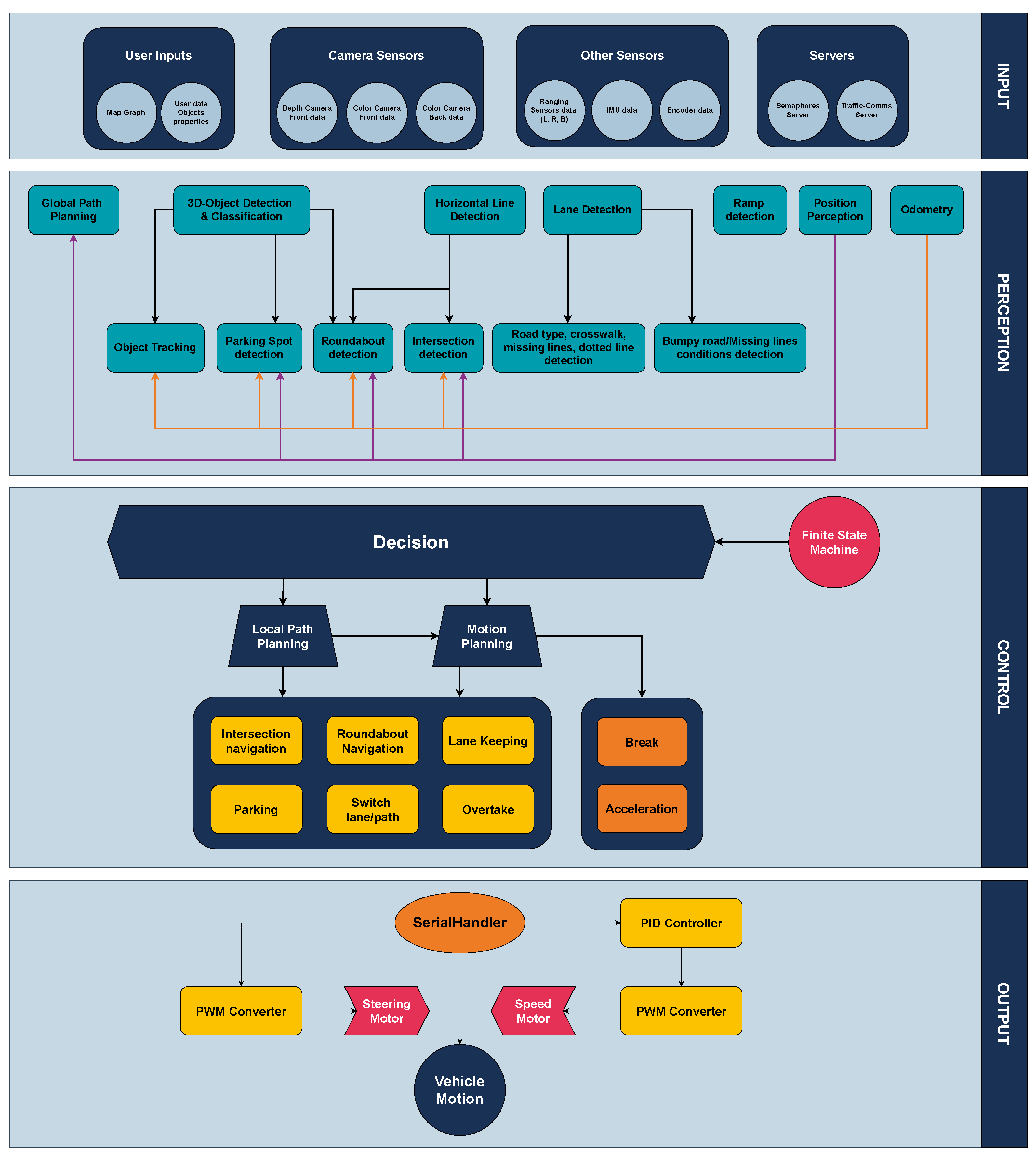

4.2. Software Architecture and Reconfigurability

4.2.1. Software Layers

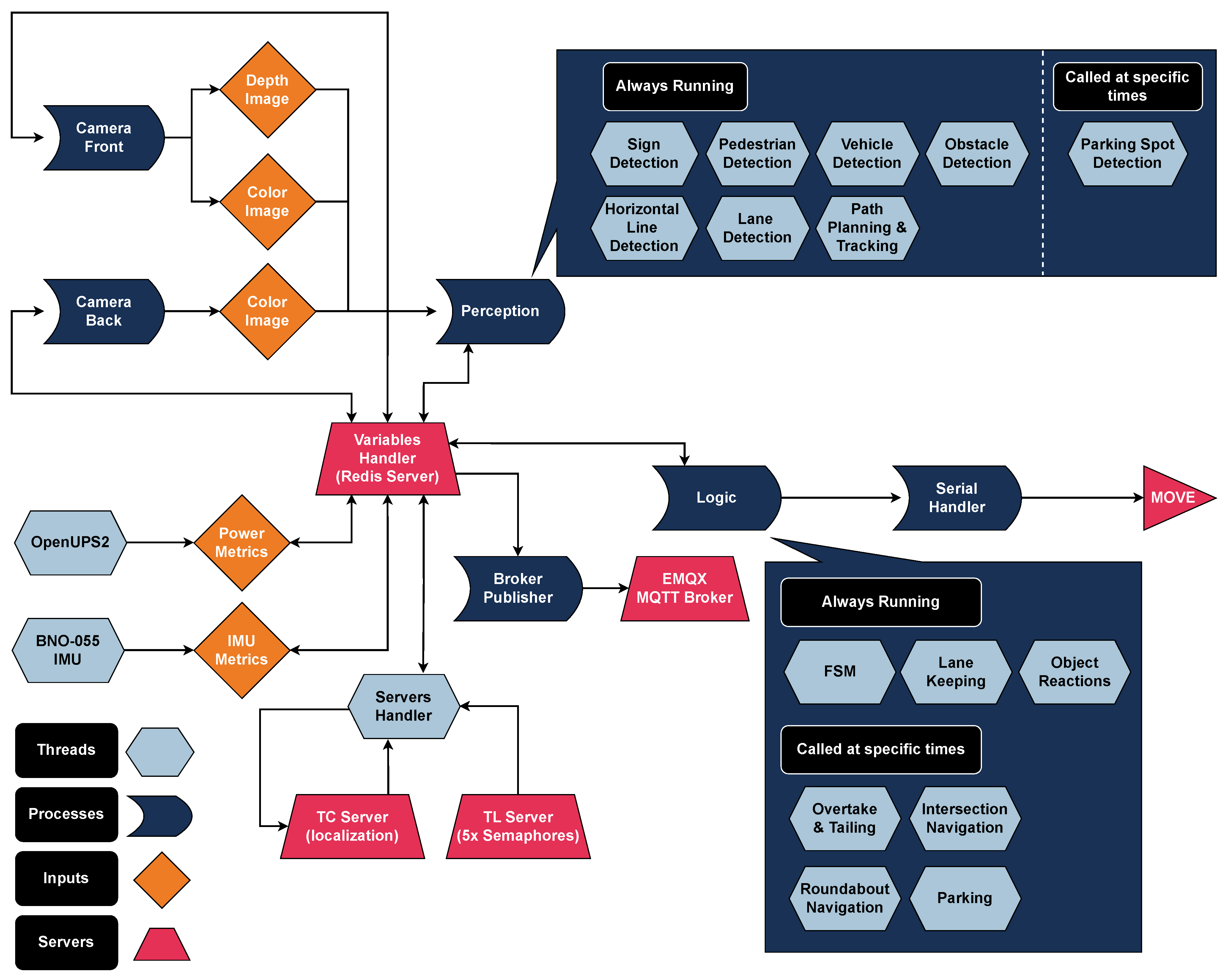

4.2.2. Asynchronous Functionality

4.2.3. Data Publishing

4.2.4. Inter-Process Communication

4.2.5. Reconfigurability Aspect

4.3. Logic Implementation

- Priority 1—Pedestrians: Ensuring the safety of pedestrians is paramount. If a pedestrian is detected within or near the vehicle’s path, the FSM immediately switches to handle this scenario.

- Priority 2—Vehicles: If another vehicle is detected, the FSM prioritizes managing the situation by determining whether an overtaking maneuver, vehicle tailing, or stopping is required.

- Priority 3—Traffic Signs/Lights: Obeying traffic signs and lights is crucial for safe navigation, and the FSM shifts states accordingly to manage these.

- Priority 4—Path Flags: Finally, the FSM handles route-related flags, ensuring proper navigation through complex road elements like intersections.
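The priority ordering above can be sketched as a small event selector. This is only an illustration under stated assumptions: the `Priority` enum, the `Event` class, and `select_event` are hypothetical names that do not come from the VROOM code base, and a real FSM would also need state-transition logic that is not modeled here.

```python
from dataclasses import dataclass
from enum import IntEnum
from typing import Optional


class Priority(IntEnum):
    """Lower value means higher priority, mirroring the list above."""
    PEDESTRIAN = 1
    VEHICLE = 2
    TRAFFIC_SIGN_OR_LIGHT = 3
    PATH_FLAG = 4


@dataclass(frozen=True)
class Event:
    priority: Priority
    label: str  # hypothetical free-text description of the detection


def select_event(events: list[Event]) -> Optional[Event]:
    """Return the pending event the FSM should handle first, or None."""
    return min(events, key=lambda e: e.priority, default=None)


# Three detections arrive in the same control cycle (made-up example data).
pending = [
    Event(Priority.PATH_FLAG, "intersection ahead"),
    Event(Priority.TRAFFIC_SIGN_OR_LIGHT, "stop sign"),
    Event(Priority.PEDESTRIAN, "pedestrian near path"),
]
chosen = select_event(pending)
print(chosen.label)
```

With these inputs the pedestrian event is selected, even though it was reported last, because it carries the highest priority.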

4.4. Software Modules

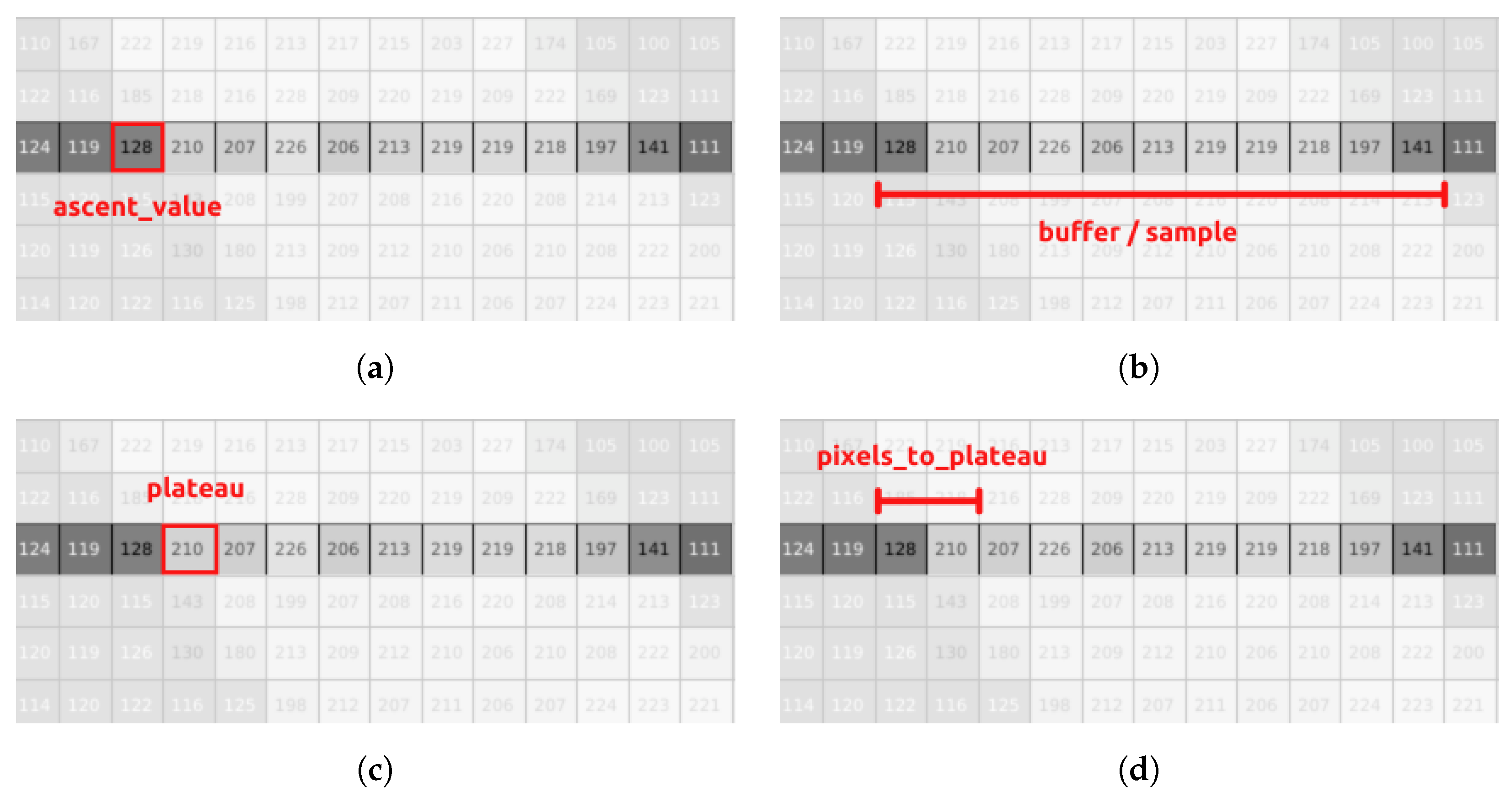

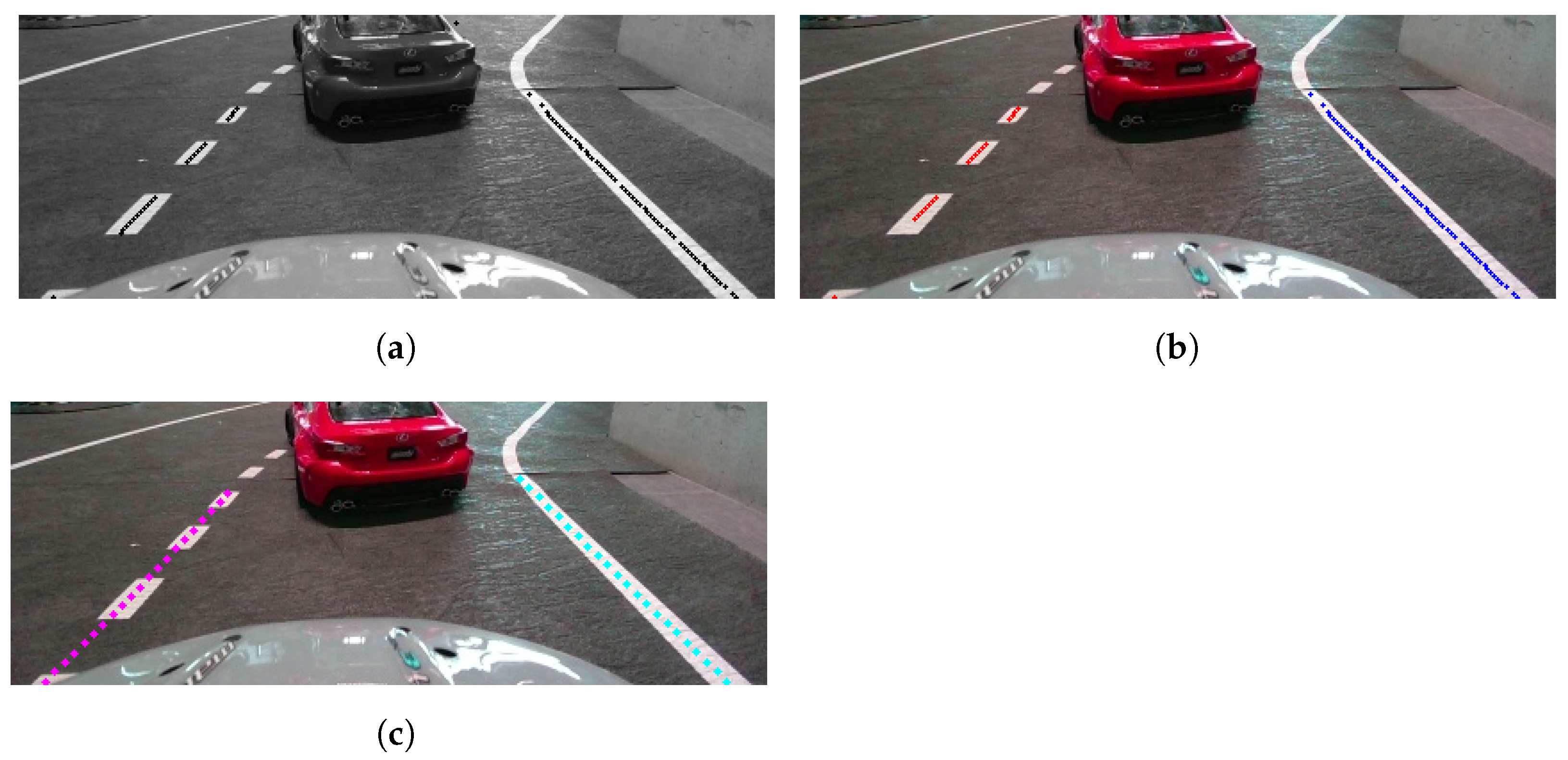

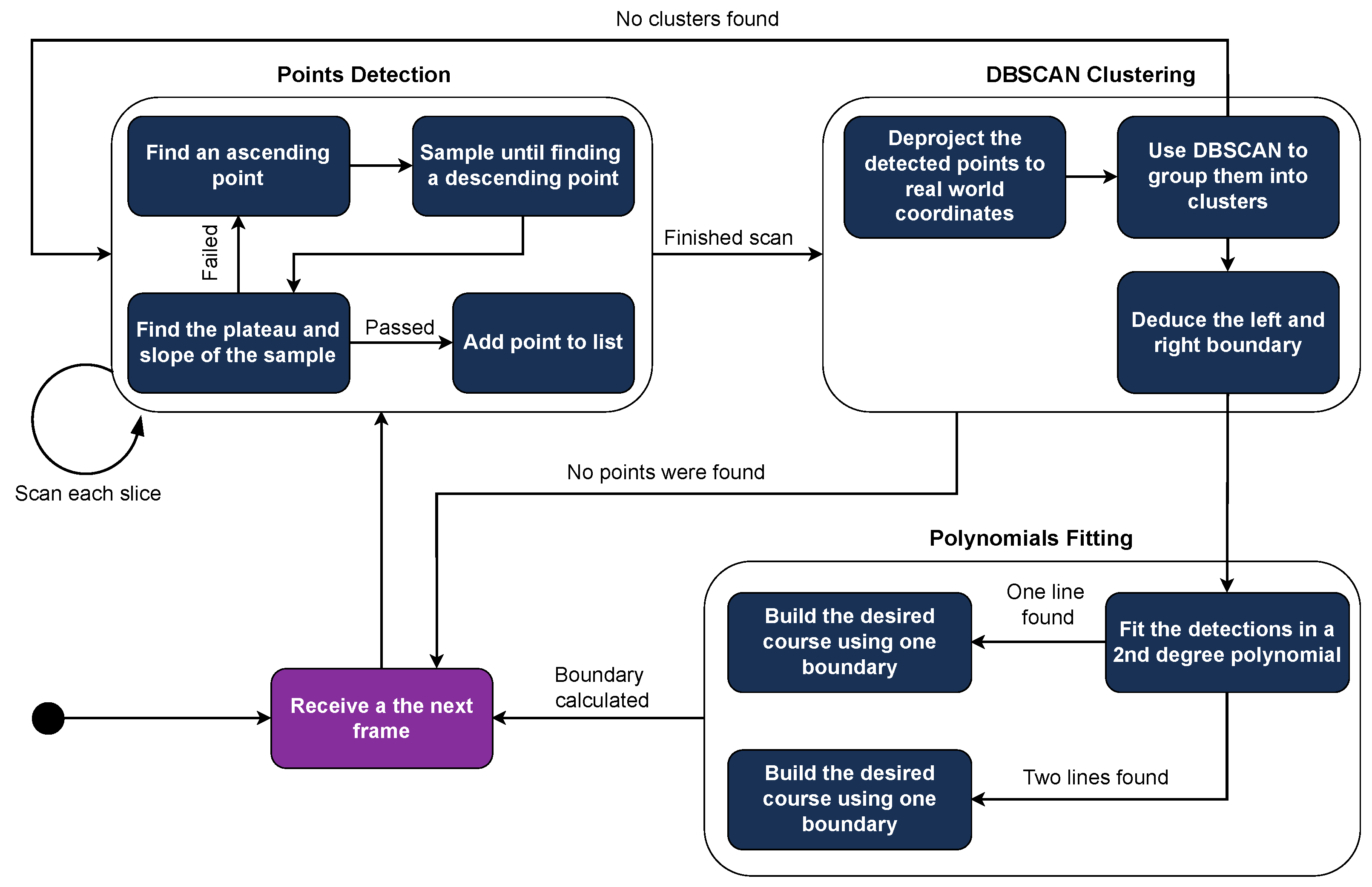

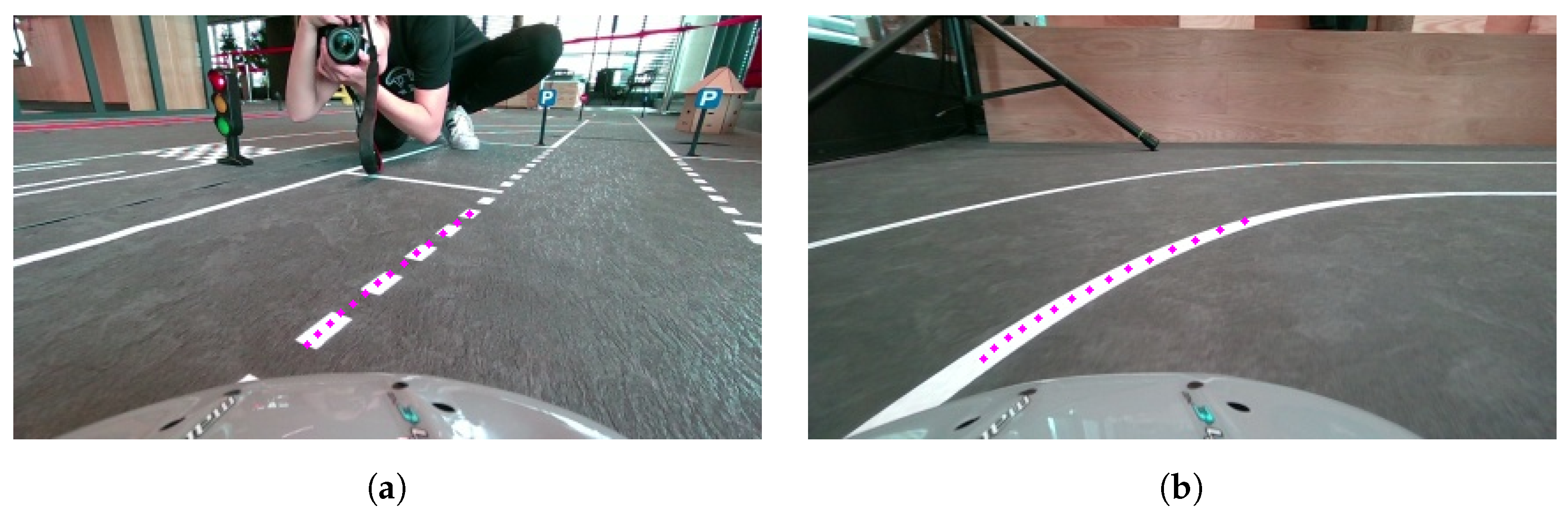

4.4.1. Lane Detection

4.4.2. Lane Keeping

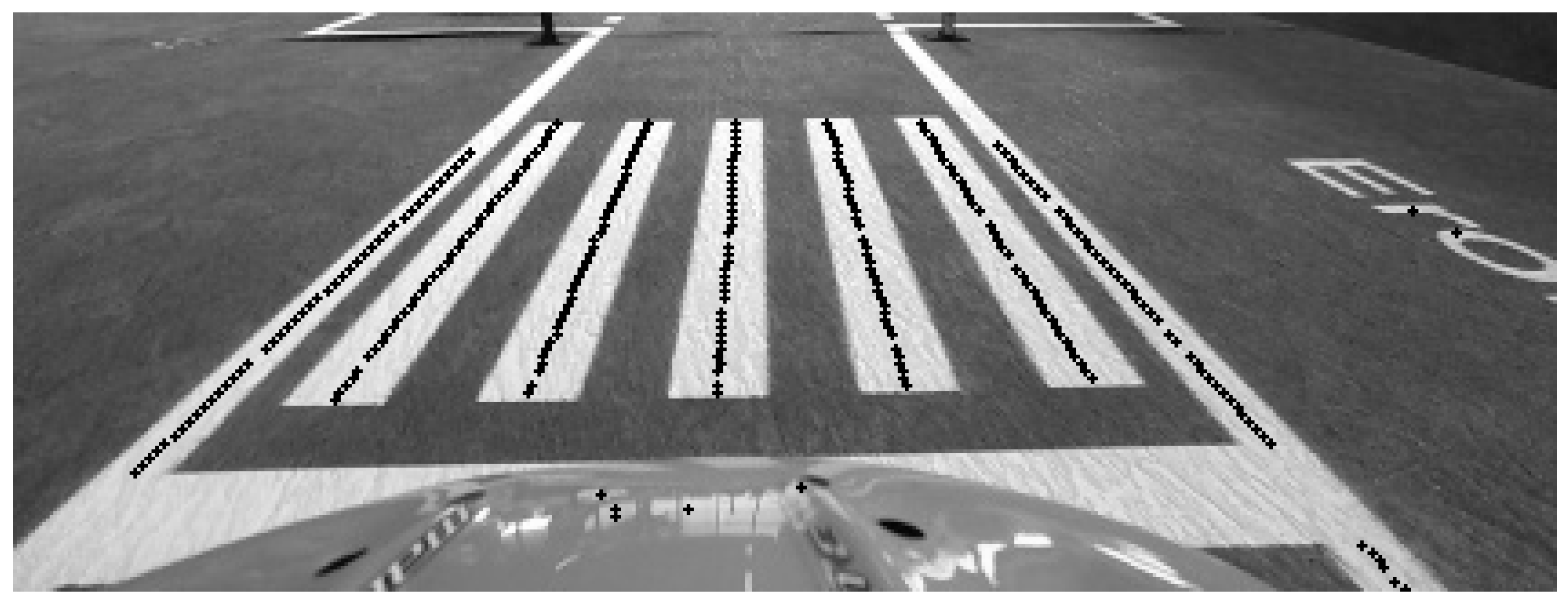

4.4.3. Crosswalk Detection

- Finish the points detection phase in the Lane Detection process.

- For each slice produced, check the number of detected points in it.

- Calculate the average number of points per slice, and if it is above a certain threshold, a crosswalk is detected.
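The three steps above can be sketched as follows. The function name `is_crosswalk`, the example point counts, and the threshold value are assumptions chosen for illustration; the source does not state the threshold the team uses.

```python
def is_crosswalk(points_per_slice: list[int], threshold: float) -> bool:
    """Flag a crosswalk when the mean number of detected points per
    image slice exceeds the threshold."""
    if not points_per_slice:
        return False  # no slices means nothing to decide on
    average = sum(points_per_slice) / len(points_per_slice)
    return average > threshold


# Hypothetical counts: a plain lane yields about two points per slice
# (left and right marking), a crosswalk yields many more.
THRESHOLD = 4.0  # assumed value, not taken from the paper
plain_lane = [2, 2, 2, 2]
crosswalk = [6, 7, 5, 6]

print(is_crosswalk(plain_lane, THRESHOLD))
print(is_crosswalk(crosswalk, THRESHOLD))
```

Averaging over all slices, rather than testing each slice separately, makes the decision less sensitive to a single noisy slice.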

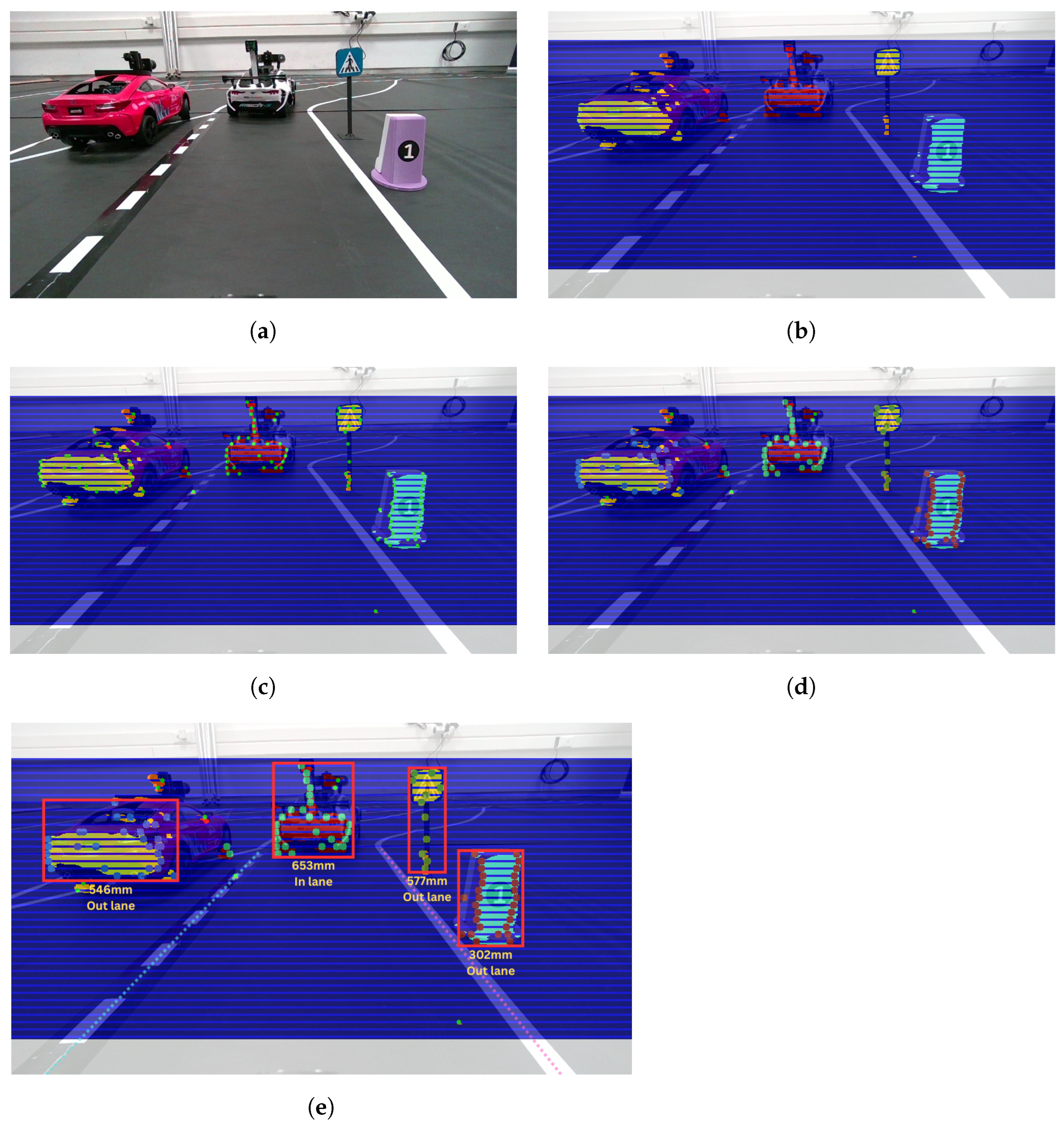

4.4.4. 3D-Objects Reaction

- YOLOv9-c introduced by Wang and Liao [29] for Traffic Sign and Traffic Light classification. This model is trained on a custom-made dataset consisting of approximately 10,000 images per class, with annotations performed in-house.

- Semantic Segmentation with a ResNet-18 backbone, trained on the Cityscapes dataset, for the classification of vehicles, trucks, and buses.

- Body Pose Estimation with a ResNet-18 backbone proposed by Bao et al. [30] for Pedestrian classification.

- Traffic Sign: The vehicle adheres to traffic rules and responds accordingly (e.g., stopping at a STOP sign, reducing speed at a crosswalk).

- Traffic Light: The vehicle receives the state of the detected traffic light from the traffic lights server and acts accordingly.

- Pedestrian: If a pedestrian is within the lane, the algorithm treats it as an obstacle, causing the vehicle to decelerate or stop according to the aforementioned thresholds. If a pedestrian is outside the lane but approaching a crosswalk ahead, the vehicle stops to allow the pedestrian to cross.

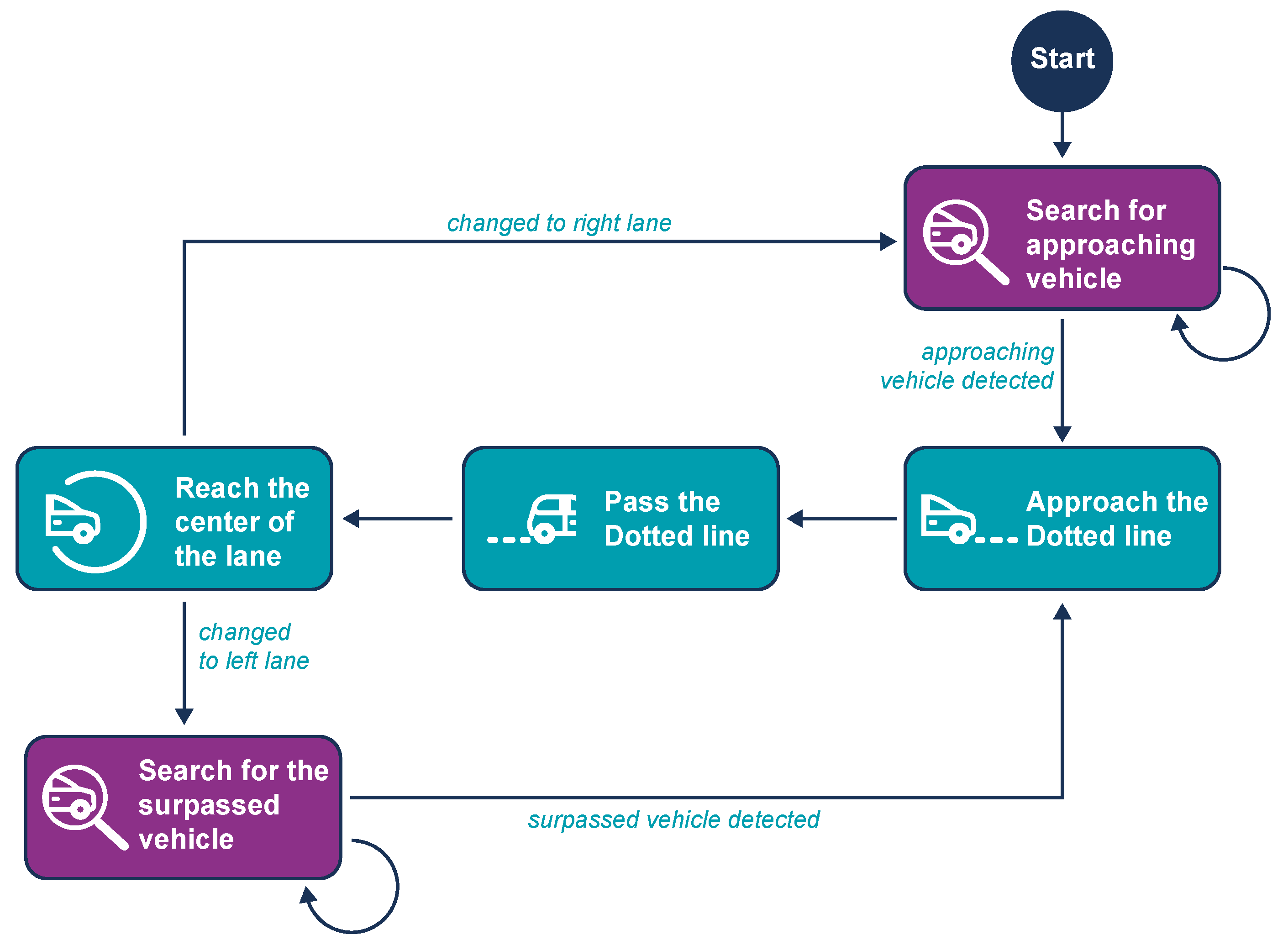

- Vehicle: If the lane on the left is dotted and the vehicle’s speed exceeds that of the vehicle ahead, an overtaking maneuver is initiated. Otherwise, the vehicle follows without further action. Vehicles in other lanes provide information for other parts of the code (e.g., parking navigation) but do not trigger an immediate response.
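The pedestrian and vehicle rules above can be sketched as two decision functions. All names (`Action`, `react_to_pedestrian`, `react_to_vehicle`) and the boolean inputs are hypothetical simplifications; the distance thresholds that decide between decelerating and stopping are not modeled.

```python
from enum import Enum


class Action(Enum):
    CONTINUE = "continue"
    SLOW_OR_STOP = "slow_or_stop"  # obstacle handling; thresholds not modeled
    STOP = "stop"
    FOLLOW = "follow"
    OVERTAKE = "overtake"


def react_to_pedestrian(in_lane: bool, approaching_crosswalk_ahead: bool) -> Action:
    """A pedestrian in the lane is an obstacle; one heading for a
    crosswalk ahead makes the vehicle stop and yield."""
    if in_lane:
        return Action.SLOW_OR_STOP
    if approaching_crosswalk_ahead:
        return Action.STOP
    return Action.CONTINUE


def react_to_vehicle(same_lane: bool, left_lane_dotted: bool,
                     own_speed: float, lead_speed: float) -> Action:
    """Overtake only when the left line is dotted and we are faster
    than the vehicle ahead; otherwise follow it."""
    if not same_lane:
        return Action.CONTINUE  # other lanes trigger no immediate response
    if left_lane_dotted and own_speed > lead_speed:
        return Action.OVERTAKE
    return Action.FOLLOW


print(react_to_pedestrian(in_lane=False, approaching_crosswalk_ahead=True))
print(react_to_vehicle(True, True, own_speed=0.4, lead_speed=0.2))
```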

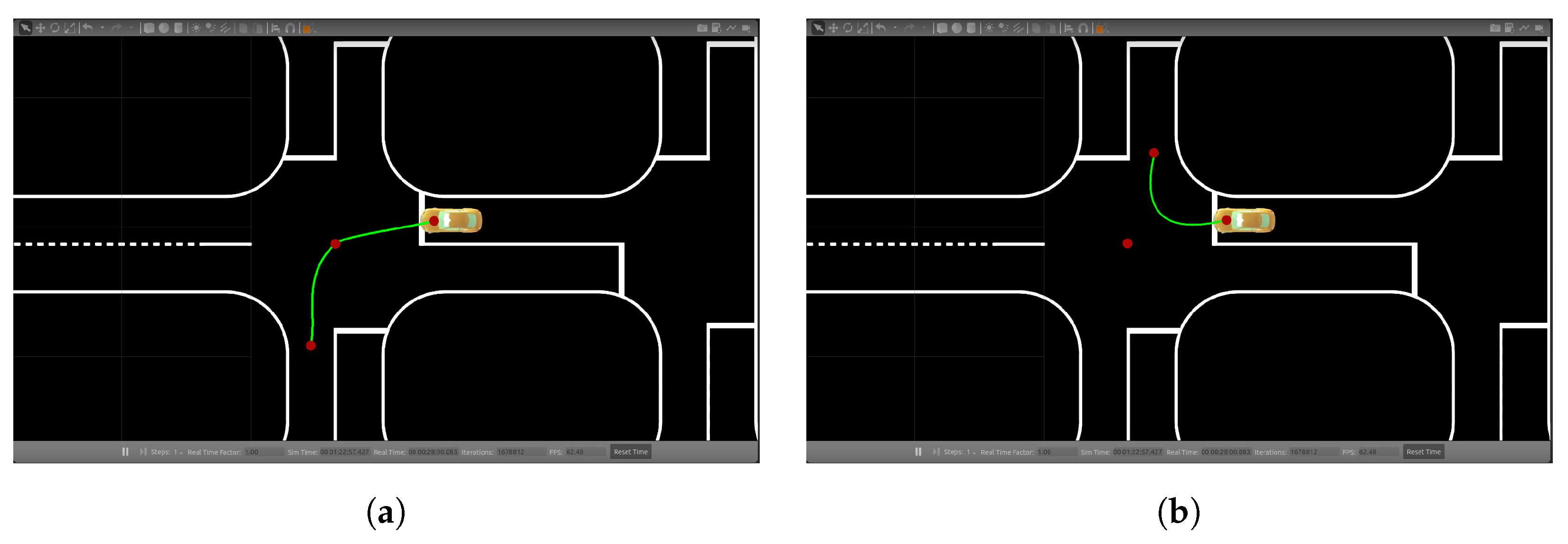

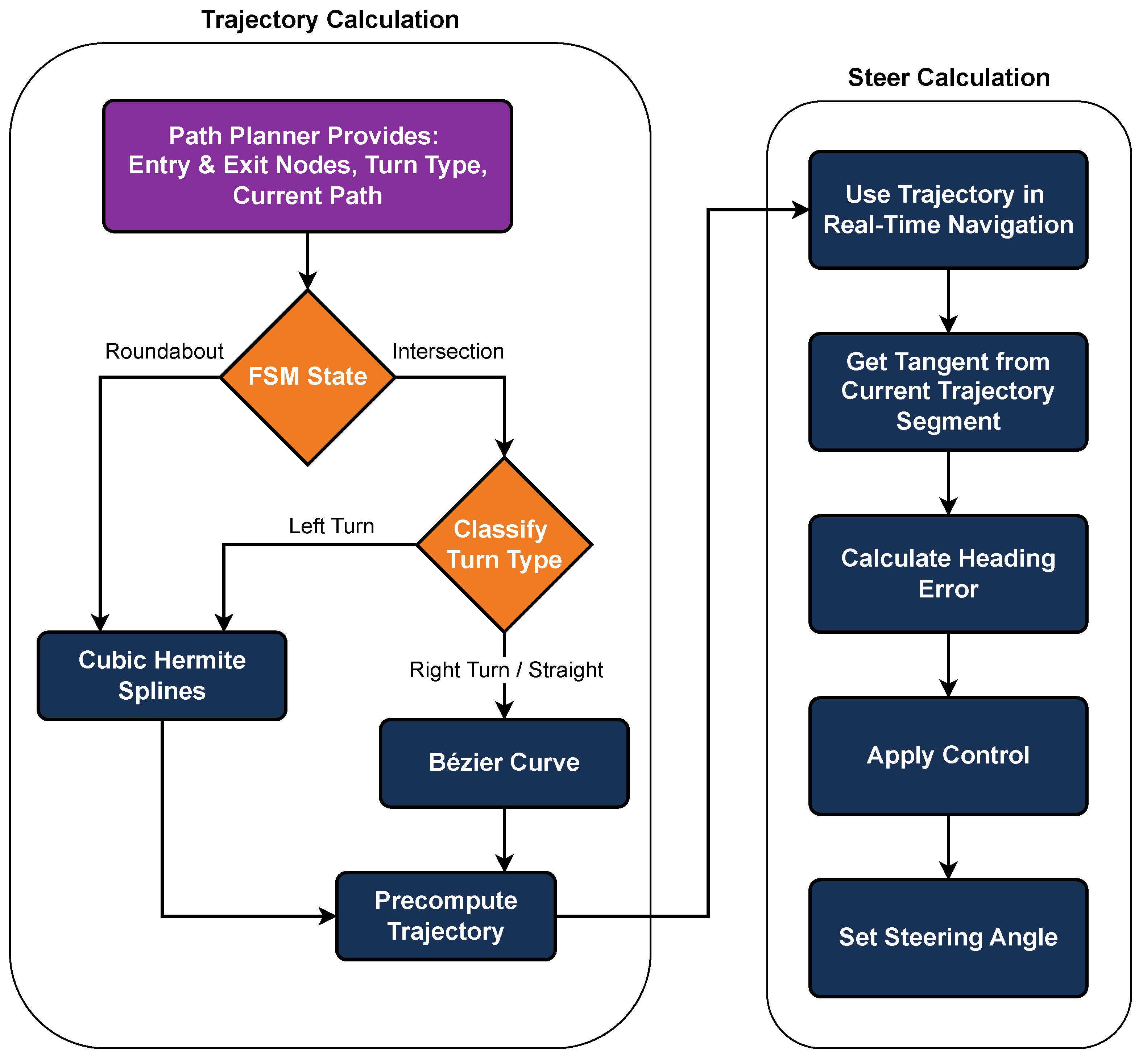

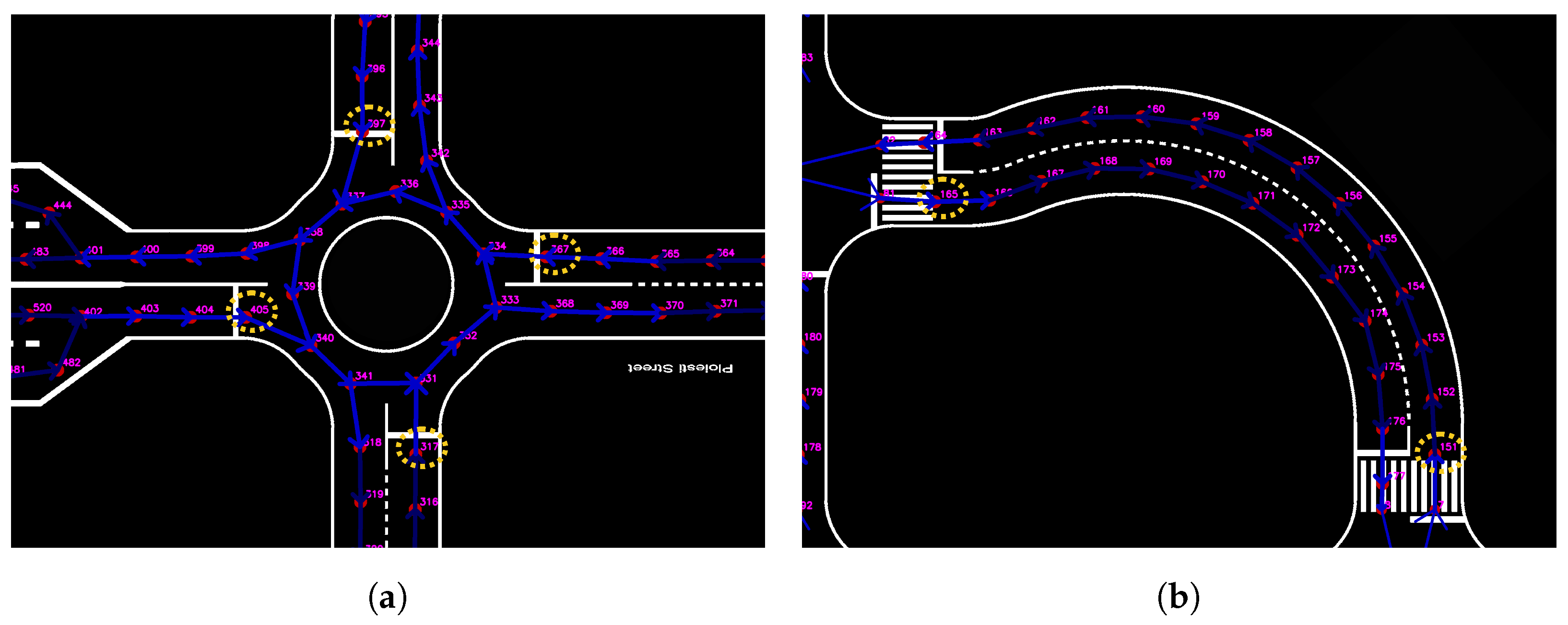

4.4.5. Intersection and Roundabout Navigation

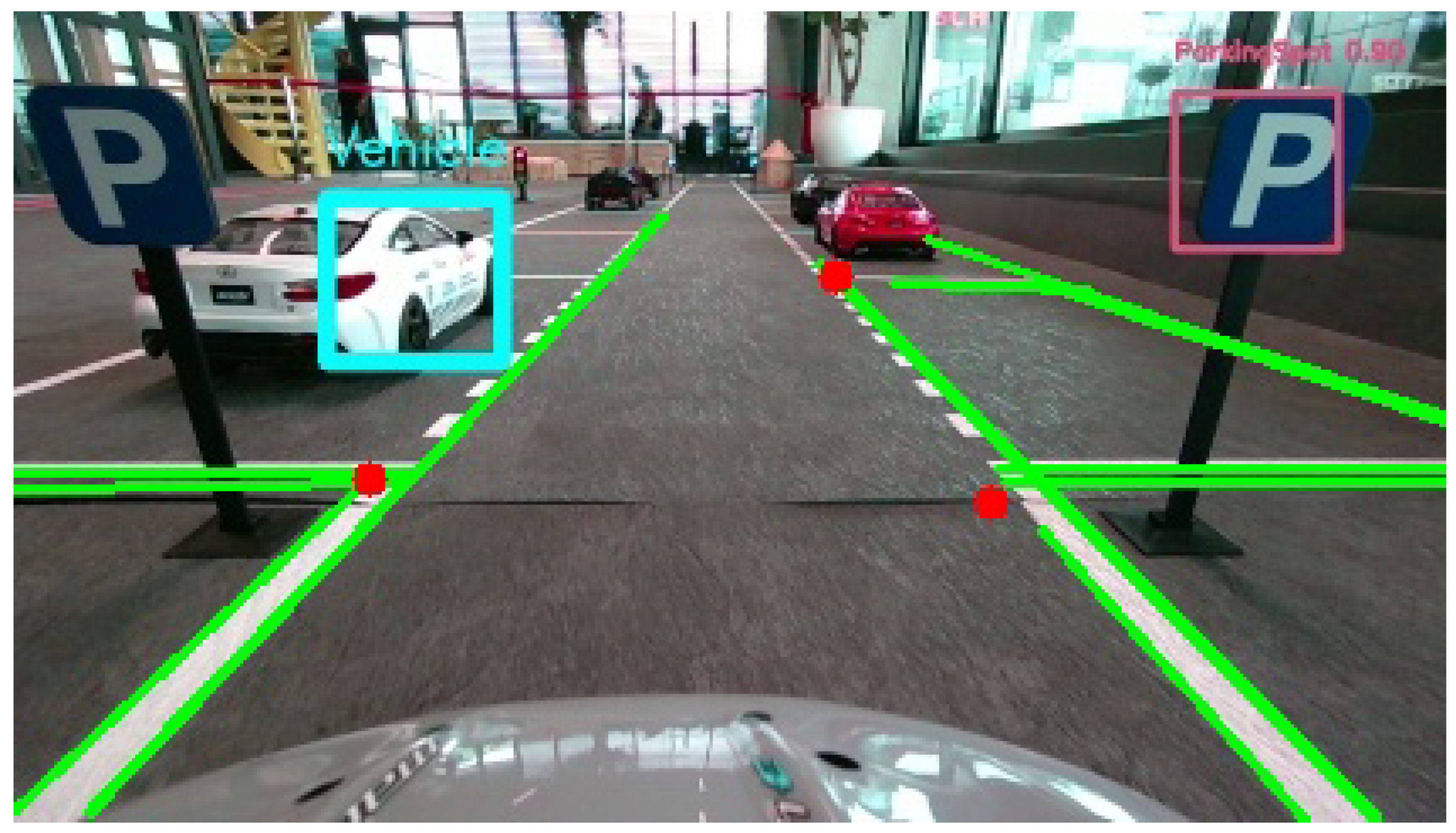

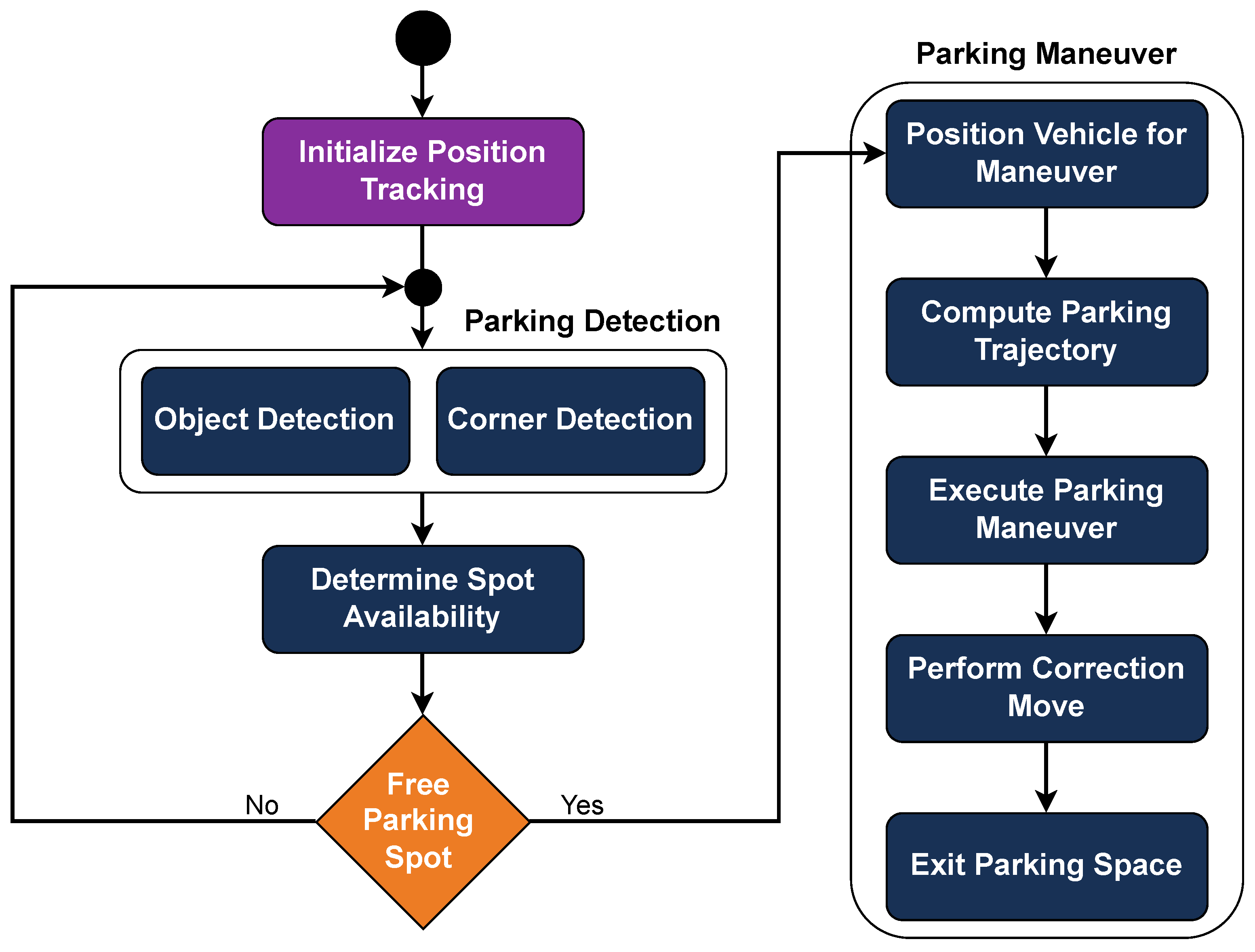

4.4.6. Parking Navigation

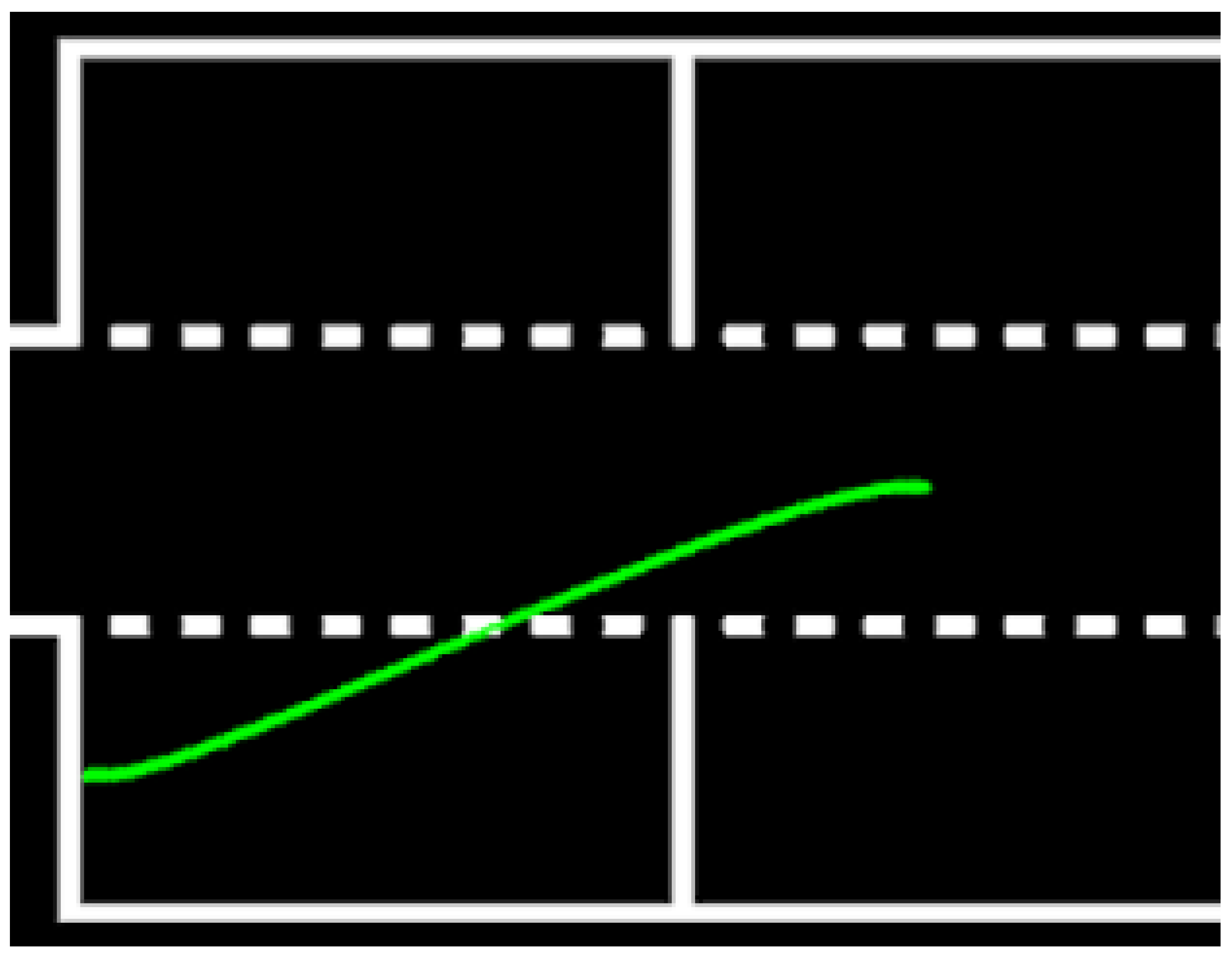

4.4.7. Overtake Procedure

4.4.8. Global Path Planning

- First, the algorithm uses the A* algorithm [32] to calculate the shortest path from the vehicle’s starting position to the closest must-node of a first set, where closeness is measured by Euclidean distance.

- Once this sub-path is calculated, the reached must-node is treated as the new source node, and the algorithm calculates the shortest path to the nearest must-node from another set.

- This process repeats until all the required must-nodes, at least one from each set, have been included in the path.

- After reaching the last required must-node, the algorithm calculates the shortest path back to the starting position.
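The greedy procedure above can be sketched on a toy graph. This is an illustration under assumptions, not the team's implementation: the node names, coordinates, and helper names (`a_star`, `plan_route`) are hypothetical, the next target is taken to be the Euclidean-closest must-node among all sets not yet visited, and the final leg is assumed to return to the start node.

```python
import heapq
import math

Coord = tuple[float, float]


def a_star(graph: dict[str, list[str]], pos: dict[str, Coord],
           start: str, goal: str) -> list[str]:
    """A* with edge cost and heuristic both equal to Euclidean distance."""
    heap = [(math.dist(pos[start], pos[goal]), 0.0, start, [start])]
    best = {start: 0.0}
    while heap:
        _, g, node, path = heapq.heappop(heap)
        if node == goal:
            return path
        if g > best.get(node, math.inf):
            continue  # stale heap entry
        for nxt in graph.get(node, []):
            g2 = g + math.dist(pos[node], pos[nxt])
            if g2 < best.get(nxt, math.inf):
                best[nxt] = g2
                f2 = g2 + math.dist(pos[nxt], pos[goal])
                heapq.heappush(heap, (f2, g2, nxt, path + [nxt]))
    raise ValueError(f"no path from {start} to {goal}")


def plan_route(graph, pos, start: str, must_sets: list[set[str]]) -> list[str]:
    """Visit one must-node from every set, greedily, then return to start."""
    route, current = [start], start
    remaining = [set(s) for s in must_sets]
    while remaining:
        target, target_set = min(
            ((n, s) for s in remaining for n in s),
            key=lambda ns: math.dist(pos[current], pos[ns[0]]),
        )
        route += a_star(graph, pos, current, target)[1:]
        current = target
        remaining.remove(target_set)
    route += a_star(graph, pos, current, start)[1:]
    return route


# Toy map: four nodes joined in a ring by two-way edges (made-up data).
pos = {"A": (0, 0), "B": (2, 0), "C": (2, 2), "D": (0, 1)}
graph: dict[str, list[str]] = {n: [] for n in pos}
for u, v in [("A", "B"), ("B", "C"), ("C", "D"), ("D", "A")]:
    graph[u].append(v)
    graph[v].append(u)

route = plan_route(graph, pos, "A", [{"C"}, {"B", "D"}])
print(route)
```

Because each target is chosen locally, the resulting route is not guaranteed to be globally shortest; the approach trades optimality for simplicity and predictable run time.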

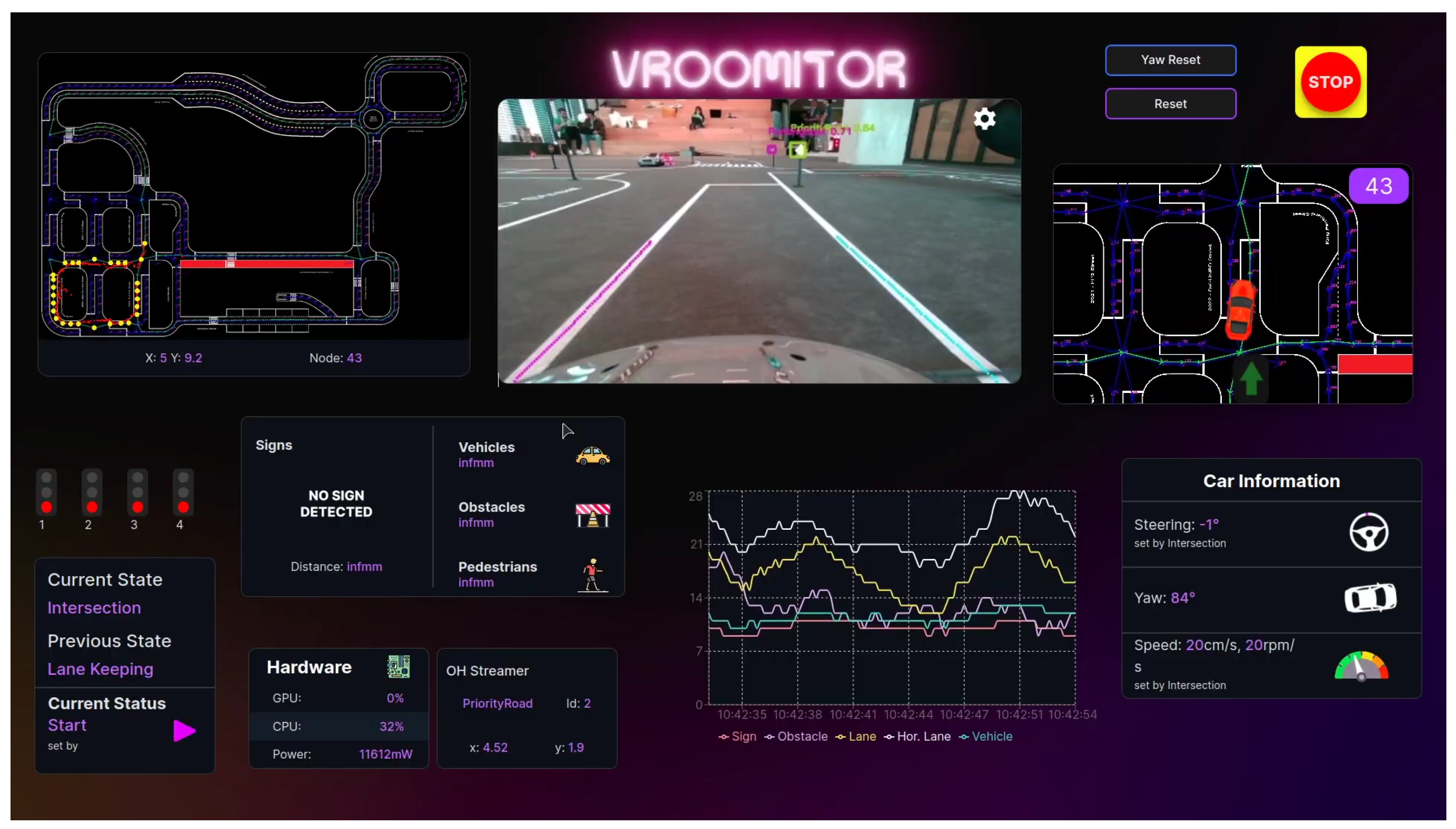

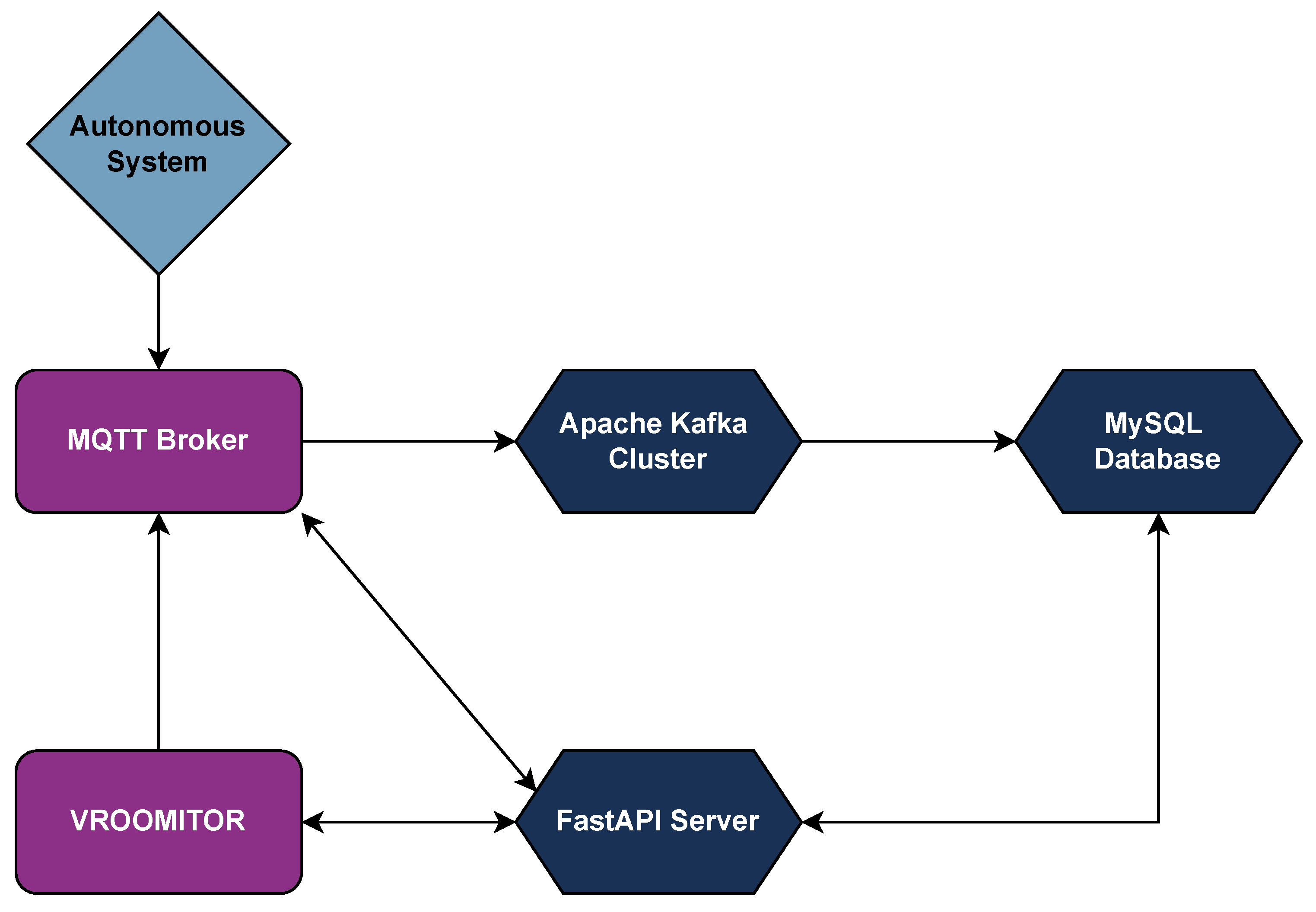

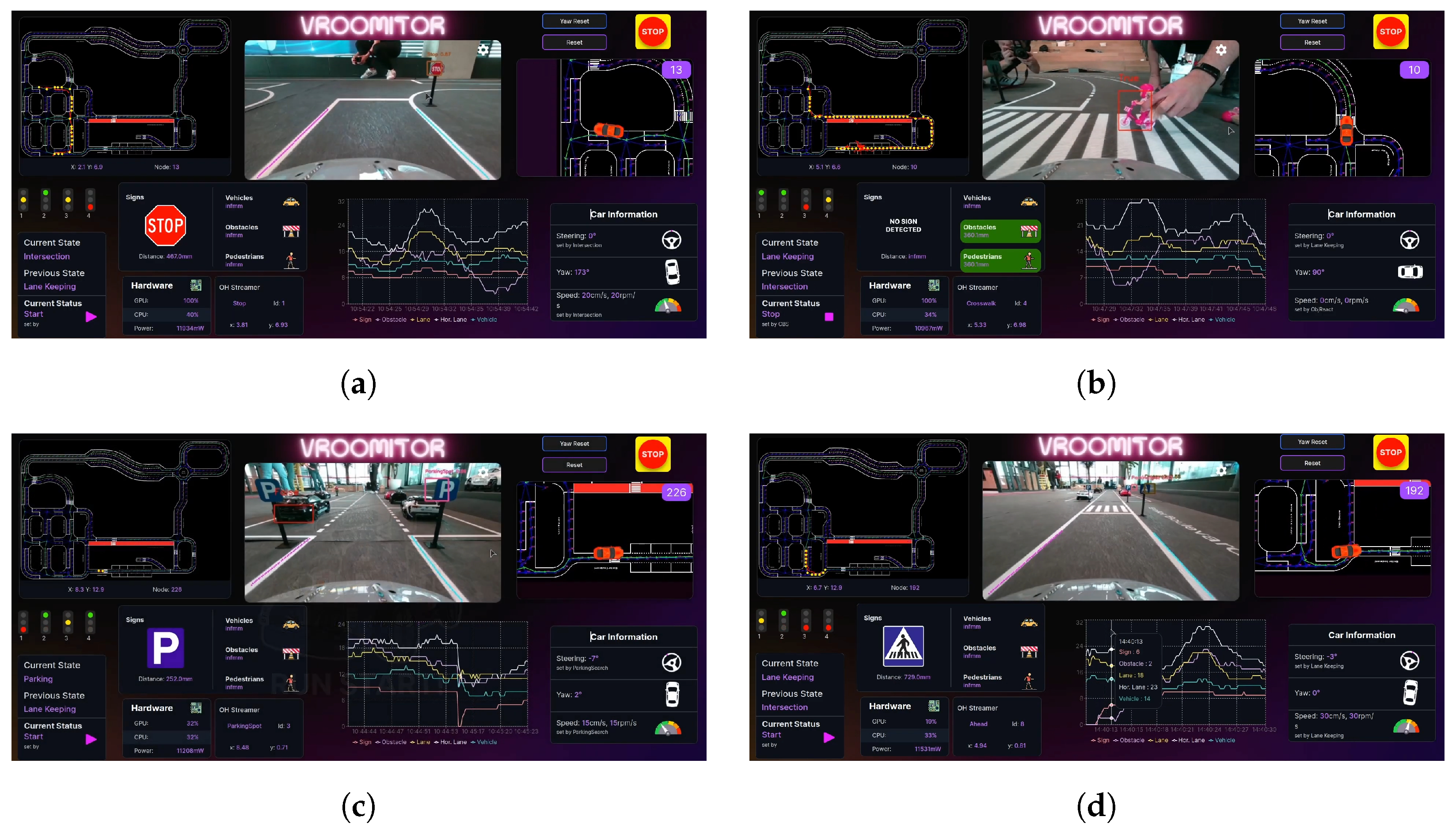

4.5. Real-Time Monitoring System

4.6. Run–Replay Function

- Data Transmission: During each run, the vehicle transmits all relevant data through an MQTT broker to VROOMITOR.

- Data Storage: The replay server subscribes to the corresponding MQTT broker topics and re-transmits the data via an Apache Kafka cluster, which acts as an intermediary. The data is then processed and ingested by an Apache Flink cluster. Both clusters are hosted on the team’s main Ubuntu server in the lab. The processed telemetry data is organized into sessions, each representing a complete vehicle run, and stored in a MySQL database.

- Replay Selection: When the vehicle switches to replay mode, the user selects the desired session through VROOMITOR. The FastAPI server then responds to the vehicle with the stored data from that session, ensuring accurate timestamp replication.

- Data Utilization: Instead of using live sensor input, the vehicle processes the replayed data with preserved timestamps as though it were in real time. The entire software architecture operates normally, with all modules functioning as if the data originated from the vehicle’s sensors during a live run.
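The idea of replaying stored data with preserved timing can be sketched as a generator that waits for the recorded gap between consecutive records before emitting the next one. The record layout (a timestamp in seconds plus a payload dictionary), the function name `replay`, and the `speed` parameter are assumptions for illustration; the MQTT, Kafka, Flink, MySQL, and FastAPI parts of the pipeline are not modeled.

```python
import time
from typing import Callable, Iterable, Iterator

Record = tuple[float, dict]  # (timestamp in seconds, payload); assumed layout


def replay(records: Iterable[Record],
           sleep: Callable[[float], object] = time.sleep,
           speed: float = 1.0) -> Iterator[Record]:
    """Yield stored records, pausing so that the gaps between them
    match the gaps recorded during the original run."""
    previous_ts = None
    for ts, payload in records:
        if previous_ts is not None:
            sleep(max(0.0, (ts - previous_ts) / speed))
        previous_ts = ts
        yield ts, payload


# A made-up three-record session; a fake sleep records the waits
# instead of blocking, which keeps the example fast.
session = [
    (10.0, {"speed": 0.20}),
    (10.5, {"speed": 0.25}),
    (12.0, {"speed": 0.00}),
]
waits: list[float] = []
replayed = list(replay(session, sleep=waits.append))
print(waits)
```

Passing the sleep function as a parameter keeps the timing logic testable; in a live replay the default `time.sleep` would be used so that downstream modules receive data at the original rate.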

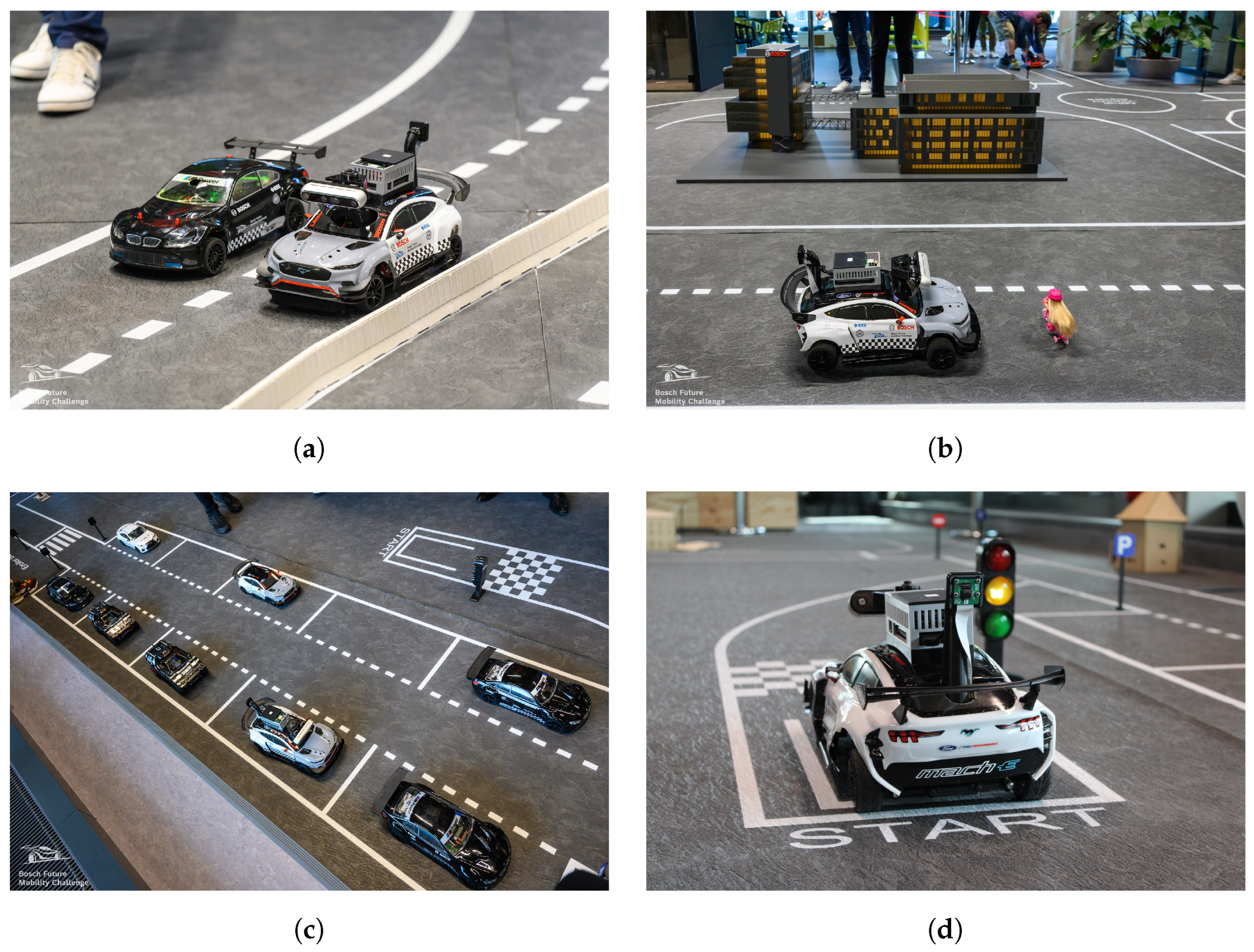

5. Outcomes and Showcase

6. Conclusions

6.1. Solution Migration to Real Systems

- Updating the sensors to higher-range or more robust alternatives suitable for larger-scale vehicles.

- Upgrading the main processor to handle the increased computational demands.

- Retraining the neural networks with data relevant to larger environments and sensor configurations.

- Adapting the software stack, including controller parameters and perception thresholds, to the scale and dynamics of the new platform.

- Ensuring functional safety. As the system transitions from a testbed to a vehicle that may carry human passengers or interact with real traffic, safety assurance becomes critical. This includes incorporating fail-safes, redundancy in critical systems, and compliance with standards.

- Integrating cybersecurity mechanisms. Full-scale autonomous vehicles must guard against threats such as data breaches, remote attacks, and system spoofing. This involves implementing secure communication protocols, encryption layers, intrusion detection systems, and regular software validation.

- Complying with local regulatory frameworks. Deployment of real autonomous vehicles must adhere to national and international traffic laws, certification standards, and ethical guidelines. This may require adapting behaviors (e.g., speed limits and right-of-way rules) and ensuring legal traceability and accountability for system decisions.

6.2. Limitations

6.3. Future Work

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| 3D | 3-Dimensional |
| ADAS | Advanced Driver Assistance System |
| AI | Artificial Intelligence |
| AV | Autonomous Vehicle |
| BFMC | Bosch Future Mobility Challenge |
| DRL | Deep Reinforcement Learning |
| ESC | Electronic Speed Controller |
| FFF | Fused Filament Fabrication |
| FFC | Flexible Flat Cable |
| FPS | Frames per Second |
| FSD | Full Self-Driving |
| FSM | Finite State Machine |
| GPS | Global Positioning System |
| GUI | Graphical User Interface |
| IMU | Inertial Measurement Unit |
| LiPo | Lithium-Polymer |
| PID | Proportional–Integral–Derivative |
| PI | Proportional–Integral |
| PWM | Pulse Width Modulation |
| RC | Remote-Controlled |
| ROS | Robot Operating System |
| SAE | Society of Automotive Engineers |
| SLAM | Simultaneous Localization and Mapping |
| TCP | Transmission Control Protocol |
| ToF | Time-of-Flight |
| UDP | User Datagram Protocol |
| YOLO | You Only Look Once |

References

- Singh, S. Critical Reasons for Crashes Investigated in the National Motor Vehicle Crash Causation Survey. In Proceedings of the National Motor Vehicle Crash Causation Survey (NMVCCS) U.S. Department of Transportation, 1 February 2015. Available online: https://crashstats.nhtsa.dot.gov/Api/Public/ViewPublication/812115 (accessed on 10 June 2025).

- Kalra, N.; Paddock, S.M. Driving to safety: How many miles of driving would it take to demonstrate autonomous vehicle reliability? Transp. Res. Part A Policy Pract. 2016, 94, 182–193.

- McKinsey & Company. Ten Ways Autonomous Driving Could Redefine the Automotive World. 2015. Available online: https://www.mckinsey.com/industries/automotive-and-assembly/our-insights/ten-ways-autonomous-driving-could-redefine-the-automotive-world (accessed on 9 October 2024).

- Williams, M. PROMETHEUS-The European research programme for optimising the road transport system in Europe. In Proceedings of the IEE Colloquium on Driver Information, London, UK, 1 December 1988; pp. 1–9.

- DARPA. DARPA News & Events. 2014. Available online: https://www.darpa.mil/news-events/2014-03-13 (accessed on 2 September 2024).

- Fulton, J.; Pransky, J. DARPA Grand Challenge—A pioneering event for autonomous robotic ground vehicles. Ind. Robot 2004, 31, 414–422.

- Buehler, M.; Iagnemma, K.; Singh, S. The DARPA Urban Challenge: Autonomous Vehicles in City Traffic; Springer Tracts in Advanced Robotics; Springer: Berlin/Heidelberg, Germany, 2009; Volume 56.

- SAE. Taxonomy and Definitions for Terms Related to Driving Automation Systems for On-Road Motor Vehicles; Technology Report J3016; SAE International: Warrendale, PA, USA, 2021.

- Yurtsever, E.; Lambert, J.; Carballo, A.; Takeda, K. A Survey of Autonomous Driving: Common Practices and Emerging Technologies. IEEE Access 2020, 8, 58443–58469.

- Wayve. Wayve AV2.0 Technology. 2024. Available online: https://wayve.ai/technology/#AV2.0 (accessed on 11 October 2024).

- Bojarski, M.; Testa, D.D.; Dworakowski, D.; Firner, B.; Flepp, B.; Goyal, P.; Jackel, L.D.; Monfort, M.; Muller, U.; Zhang, J.; et al. End to End Learning for Self-Driving Cars. arXiv 2016, arXiv:1604.07316.

- Xu, H.; Gao, Y.; Yu, F.; Darrell, T. End-to-end Learning of Driving Models from Large-scale Video Datasets. arXiv 2017, arXiv:1612.01079.

- Bojarski, M.; Yeres, P.; Choromanska, A.; Choromanski, K.; Firner, B.; Jackel, L.; Muller, U. Explaining How a Deep Neural Network Trained with End-to-End Learning Steers a Car. arXiv 2017, arXiv:1704.07911.

- Koutník, J.; Cuccu, G.; Schmidhuber, J.; Gomez, F.J. Evolving large-scale neural networks for vision-based TORCS. In Proceedings of the International Conference on Foundations of Digital Games, Crete, Greece, 14–17 May 2013.

- Sallab, A.E.; Abdou, M.; Perot, E.; Yogamani, S. Deep Reinforcement Learning framework for Autonomous Driving. Electron. Imaging 2017, 29, 70–76.

- Kendall, A.; Hawke, J.; Janz, D.; Mazur, P.; Reda, D.; Allen, J.M.; Lam, V.D.; Bewley, A.; Shah, A. Learning to Drive in a Day. arXiv 2018, arXiv:1807.00412.

- Lu, Y.; Fu, J.; Tucker, G.; Pan, X.; Bronstein, E.; Roelofs, R.; Sapp, B.; White, B.; Faust, A.; Whiteson, S.; et al. Imitation Is Not Enough: Robustifying Imitation with Reinforcement Learning for Challenging Driving Scenarios. arXiv 2023, arXiv:2212.11419.

- Li, D.; Auerbach, P.; Okhrin, O. Towards Autonomous Driving with Small-Scale Cars: A Survey of Recent Development. arXiv 2024, arXiv:2404.06229.

- Balaji, B.; Mallya, S.; Genc, S.; Gupta, S.; Dirac, L.; Khare, V.; Roy, G.; Sun, T.; Tao, Y.; Townsend, B.; et al. DeepRacer: Autonomous Racing Platform for Experimentation with Sim2Real Reinforcement Learning. In Proceedings of the 2020 IEEE International Conference on Robotics and Automation (ICRA), Paris, France, 31 May–31 August 2020; pp. 2746–2754.

- Paull, L.; Tani, J.; Ahn, H.; Alonso-Mora, J.; Carlone, L.; Cap, M.; Chen, Y.F.; Choi, C.; Dusek, J.; Fang, Y.; et al. Duckietown: An open, inexpensive and flexible platform for autonomy education and research. In Proceedings of the 2017 IEEE International Conference on Robotics and Automation (ICRA), Singapore, 29 May–3 June 2017; pp. 1497–1504.

- Berkeley Autonomous Race Car (BARC). BARC: Berkeley Autonomous Race Car. Available online: https://goldeneye.studentorg.berkeley.edu/barc.html (accessed on 28 August 2024).

- O’Kelly, M.; Sukhil, V.; Abbas, H.; Harkins, J.; Kao, C.; Pant, Y.V.; Mangharam, R.; Agarwal, D.; Behl, M.; Burgio, P.; et al. F1/10: An Open-Source Autonomous Cyber-Physical Platform. arXiv 2019, arXiv:1901.08567.

- Goldfain, B.; Drews, P.; You, C.; Barulic, M.; Velev, O.; Tsiotras, P.; Rehg, J.M. AutoRally: An Open Platform for Aggressive Autonomous Driving. IEEE Control Syst. Mag. 2019, 39, 26–55.

- F1TENTH Autonomous Racing. F1TENTH Racing. Available online: https://f1tenth.org/race.html (accessed on 28 August 2024).

- Bosch Engineering Center Cluj. Bosch Future Mobility Challenge. Available online: https://boschfuturemobility.com/ (accessed on 10 September 2024).

- Stanford-Clark, A.; Nipper, A. MQTT Version 3.1.1. OASIS Standard. 2014. Available online: http://docs.oasis-open.org/mqtt/mqtt/v3.1.1/os/mqtt-v3.1.1-os.html (accessed on 1 February 2025).

- Dassault Systèmes. SolidWorks, Version 2023. 2023. Available online: https://www.solidworks.com/ (accessed on 20 February 2025).

- Boyer, R.S.; Moore, J.S. A Fast String Searching Algorithm. Commun. ACM 1977, 20, 762–772.

- Wang, C.Y.; Liao, H.Y.M. YOLOv9: Learning What You Want to Learn Using Programmable Gradient Information. arXiv 2024, arXiv:2402.13616.

- Bao, W.; Ma, Z.; Liang, D.; Yang, X.; Niu, T. Pose ResNet: 3D Human Pose Estimation Based on Self-Supervision. Sensors 2023, 23, 3057.

- Takahashi, A.; Hongo, T.; Ninomiya, Y.; Sugimoto, G. Local Path Planning and Motion Control for AGV in Positioning. In Proceedings of the IEEE/RSJ International Workshop on Intelligent Robots and Systems (IROS ’89): The Autonomous Mobile Robots and Its Applications, Tsukuba, Japan, 4–6 September 1989; pp. 392–397.

- Foead, D.; Ghifari, A.; Kusuma, M.B.; Hanafiah, N.; Gunawan, E. A Systematic Literature Review of A* Pathfinding. Procedia Comput. Sci. 2021, 179, 507–514.

- EMQ Technologies Co., Ltd. EMQX: Cloud-Native Distributed MQTT Message Broker. 2024. Available online: https://www.emqx.io (accessed on 24 May 2025).

- The Guardian. Tesla Autopilot Was Involved in 13 Fatal Crashes, US Agency Finds. 2024. Available online: https://www.theguardian.com/technology/2024/apr/26/tesla-autopilot-fatal-crash (accessed on 24 May 2025).

- Lambert, F. Tesla Full Self-Driving Veers Off Road, FLIPS car in Scary Crash Driver Couldn’t Prevent. 2025. Available online: https://electrek.co/2025/05/23/tesla-full-self-driving-veers-off-road-flips-car-scary-crash-driver-couldnt-prevent/ (accessed on 24 May 2025).

| Task | Points | Mandatory | Description |
|---|---|---|---|
| Parking | 8 | Yes | Identifying suitable parking spots and executing parking maneuvers. |
| Ramp | 5 | Yes | Driving on an inclined surface. |
| Roundabout | 7 | Yes | Navigating the roundabout counter-clockwise. |
| Traffic lights | 6 | Yes | Recognizing and reacting to traffic lights. |
| Missing lanes | 6 | No | Navigating safely in areas with only partial road markings. |
| Bus lane | 5 | No | Not entering the adjacent bus lane on the left. |
| Highway | 5 | No | Maintaining position in the right lane, except when executing an overtaking maneuver. |
| Speed curve | 7 | No | Increasing speed on a curved road while slowing down or stopping at crosswalks as required. |
| Random start point | 15 | No | Starting at a random point inside the competition map. |

| Penalty | Points | Description |
|---|---|---|
| Collision | −2 | Colliding with another vehicle, pedestrian, or structure. |
| Non-compliance with traffic rules | −1.5 | Ignoring traffic lights or signs. |
| Maneuver fail | −1 | Incorrect or incomplete parking, a collision when returning to the lane after overtaking, or initiating parking where it is not allowed. |
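As a worked example of the arithmetic behind the two tables above, the sketch below adds the points of completed tasks and the penalties incurred. The assumption that a run's score is a plain sum, as well as the names `TASK_POINTS`, `PENALTY_POINTS`, and `run_score`, are illustrative; the official BFMC scoring procedure may differ.

```python
# Point values copied from the task and penalty tables above.
TASK_POINTS = {
    "Parking": 8, "Ramp": 5, "Roundabout": 7, "Traffic lights": 6,
    "Missing lanes": 6, "Bus lane": 5, "Highway": 5, "Speed curve": 7,
    "Random start point": 15,
}
PENALTY_POINTS = {
    "Collision": -2.0,
    "Non-compliance with traffic rules": -1.5,
    "Maneuver fail": -1.0,
}


def run_score(completed: list[str], penalties: dict[str, int]) -> float:
    """Sum task points and penalties (assumed additive scoring)."""
    earned = sum(TASK_POINTS[task] for task in completed)
    lost = sum(PENALTY_POINTS[name] * count for name, count in penalties.items())
    return earned + lost


# Hypothetical run: all four mandatory tasks plus the highway task,
# with one collision and two failed maneuvers.
score = run_score(
    ["Parking", "Ramp", "Roundabout", "Traffic lights", "Highway"],
    {"Collision": 1, "Maneuver fail": 2},
)
print(score)
```

In this hypothetical run the tasks contribute 31 points and the penalties remove 4, giving 27.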

| Module | Calls | Failures | Failure Rate (%) | Impact |
|---|---|---|---|---|
| Intersection | 20 | 1 | 5.0 | High |
| Roundabout | 6 | 0 | 0.0 | High |
| Overtake (static vehicle) | 4 | 1 | 25.0 | High |
| Overtake (dynamic vehicle) | 4 | 0 | 0.0 | High |
| Pedestrian Reaction | 10 | 0 | 0.0 | High |
| Traffic Lights Reaction | 9 | 0 | 0.0 | High |
| Sign Reaction | 20 | 0 | 0.0 | Medium |
| Path Planning | 30 | 0 | 0.0 | High |
| Lane Detection-Keeping | 100 | 2 | 2.0 | High |
| Crosswalk Detection | 5 | 0 | 0.0 | Medium |
| Parking | 3 | 0 | 0.0 | High |
| Speed Adaptation | 40 | 1 | 10.0 | Small |
| 3D-Object Detection | 70 | 1 | 1.4 | Medium |
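The failure-rate column can be read as failures divided by calls, expressed as a percentage. The helper below is a minimal sketch of that calculation; the function name `failure_rate` and the rounding to one decimal place are assumptions.

```python
def failure_rate(calls: int, failures: int) -> float:
    """Failure rate in percent, rounded to one decimal place."""
    if calls <= 0:
        raise ValueError("calls must be positive")
    return round(100.0 * failures / calls, 1)


# Call and failure counts taken from rows of the table above.
print(failure_rate(20, 1))   # Intersection
print(failure_rate(4, 1))    # Overtake (static vehicle)
print(failure_rate(100, 2))  # Lane Detection-Keeping
print(failure_rate(70, 1))   # 3D-Object Detection
```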

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Papafotiou, T.; Tsardoulias, E.; Nikolaou, A.; Papagiannitsi, A.; Christodoulou, D.; Gkountras, I.; Symeonidis, A.L. How to Win Bosch Future Mobility Challenge: Design and Implementation of the VROOM Autonomous Scaled Vehicle. Machines 2025, 13, 514. https://doi.org/10.3390/machines13060514
