1. Introduction

Industry 5.0 signifies a paradigm shift in manufacturing, characterized by a renewed emphasis on human-centricity, sustainability, and resilience, in contrast to the efficiency- and automation-driven objectives of Industry 4.0 [1]. Smart manufacturing environments are now designed not only to optimize performance and productivity but also to ensure adaptability to unforeseen challenges, such as pandemics, wars, and natural disasters [2]. Humanoid robots (HRs) are increasingly recognized as a viable and strategic asset in this new industrial landscape. With their anthropomorphic design and advanced cognitive and motor skills, humanoid robots fit seamlessly into environments where they can work alongside people: they can navigate spaces, use tools, and interact intuitively and safely with humans. This makes them valuable in achieving the goals of Industry 5.0 [3,4,5,6].

In the context of sustainability, resiliency, and human-centricity, which are the main aspects of Industry 5.0 [7], the concept of “Human and Humanoid-in-the-Loop (HHitL)” is introduced. It refers to integrating HRs not merely as tools but as autonomous, decision-making entities within collaborative manufacturing loops. HRs can replace or assist human operators in hazardous conditions, ensuring continuity without compromising safety. In addition, during supply chain disruptions, they can adaptively take over manual operations with minimal retraining. In ergonomically risky or repetitive tasks, HRs can enhance human well-being while improving productivity.

Existing frameworks such as human–cyber–physical systems (HCPSs) [8,9] and robotic cell coordination architectures [10,11,12] have significantly advanced human integration in manufacturing. HCPS emphasizes the cognitive role of humans within cyber–physical loops, typically interacting through indirect interfaces, while physical automation remains rigid. Similarly, robotic cell coordination frameworks focus on robot–robot orchestration, often treating human involvement as a constraint or as supervisory input rather than as active, adaptive participation. In contrast, the proposed Human and Humanoid-in-the-Loop (HHitL) ecosystem introduces a triadic collaboration model between humans, humanoid robots, and digital twins, wherein both human and robotic agents act as co-adaptive decision-makers and embodied collaborators. The novelty of HHitL lies in its integration of perceptual intelligence, real-time digital twin feedback, and foundation models to support intuitive, multimodal interaction in unstructured, dynamic environments. Additionally, the 6P architecture (participation, purpose, preservation, physical assets, persistence, projection) provides an ethical and operational scaffold that goes beyond existing models, ensuring human-centricity, resilience, and sustainable co-working in the Industry 5.0 paradigm.

Despite the considerable potential of HRs in these roles, a significant research gap remains, especially under the umbrella of Industry 5.0. Accordingly, there is a need to redefine the industrial ecosystem through the lens of HRs. The primary goal of this paper is to conceptualize the HHitL ecosystem, outline its architecture, and explore the challenges and outlooks of its implementation in smart manufacturing aligned with Industry 5.0 principles.

2. The Proposed Ecosystem

With the emergence of Industry 5.0, the three principles of human-centricity, resiliency, and sustainability have gained much importance. Simultaneously, manufacturing has expanded beyond traditional cyber–physical systems to include humanoid robots, i.e., physical AI [13] agents capable of interacting in shared human environments with cognitive, physical, and social capabilities.

The term “physical AI agents” refers to intelligent systems that not only possess reasoning and perceptual capabilities but are also embodied in physical form, enabling them to interact with and affect the real world. Unlike traditional AI, which typically exists in disembodied software environments (e.g., virtual assistants, recommendation engines), humanoid robots operate at the intersection of cognition and physicality. In the context of the HHitL ecosystem, humanoid robots represent a new class of embodied artificial intelligence—able to perceive, move, manipulate, and communicate within shared workspaces. They leverage advanced AI (e.g., vision-language models, reinforcement learning, and foundation models) alongside mechanical dexterity and human-like morphology to co-adapt with humans, learn tasks contextually, and make autonomous or semi-autonomous decisions. This synthesis of perception, cognition, and actuation in the real world makes humanoid robots uniquely positioned as “physical AI” agents within Industry 5.0-enabled manufacturing systems.

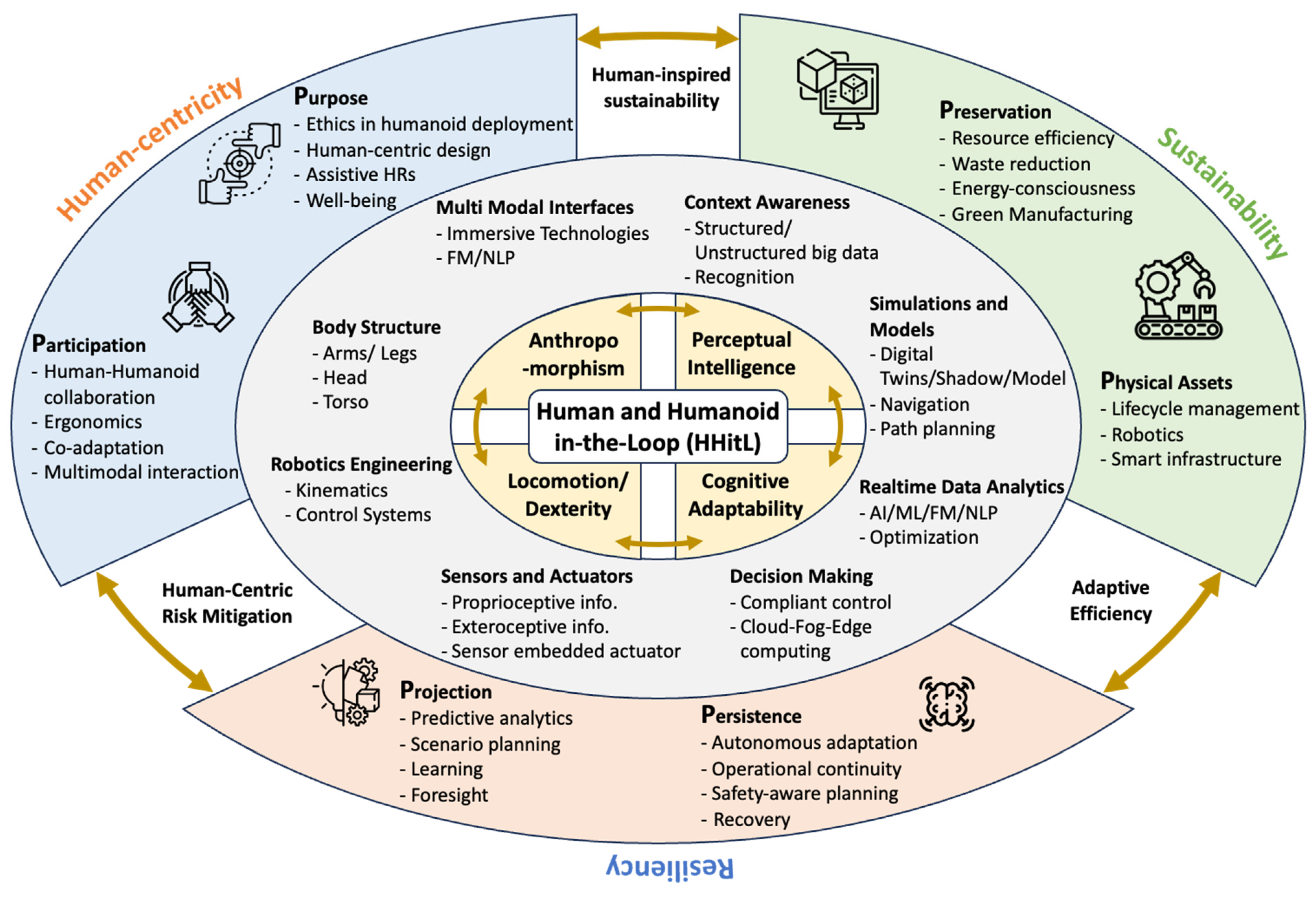

These developments necessitate rethinking the architectural foundation to better reflect the demands of modern industry. To address this need, we propose the HHitL ecosystem from an Industry 5.0 perspective, as shown in Figure 1. The figure comprises four layers. Layer 1 is the HHitL architecture, which is discussed in Section 2.1 and illustrated in Figure 2. Layer 2 (in yellow) illustrates the core features of the ecosystem, while Layer 3 (in gray) highlights its enablers; both are elaborated upon in Section 2.2. The final layer (in green) presents the six pillars (6P) of the proposed ecosystem, which are elaborated and mapped to the three principles of Industry 5.0 in Section 2.3.

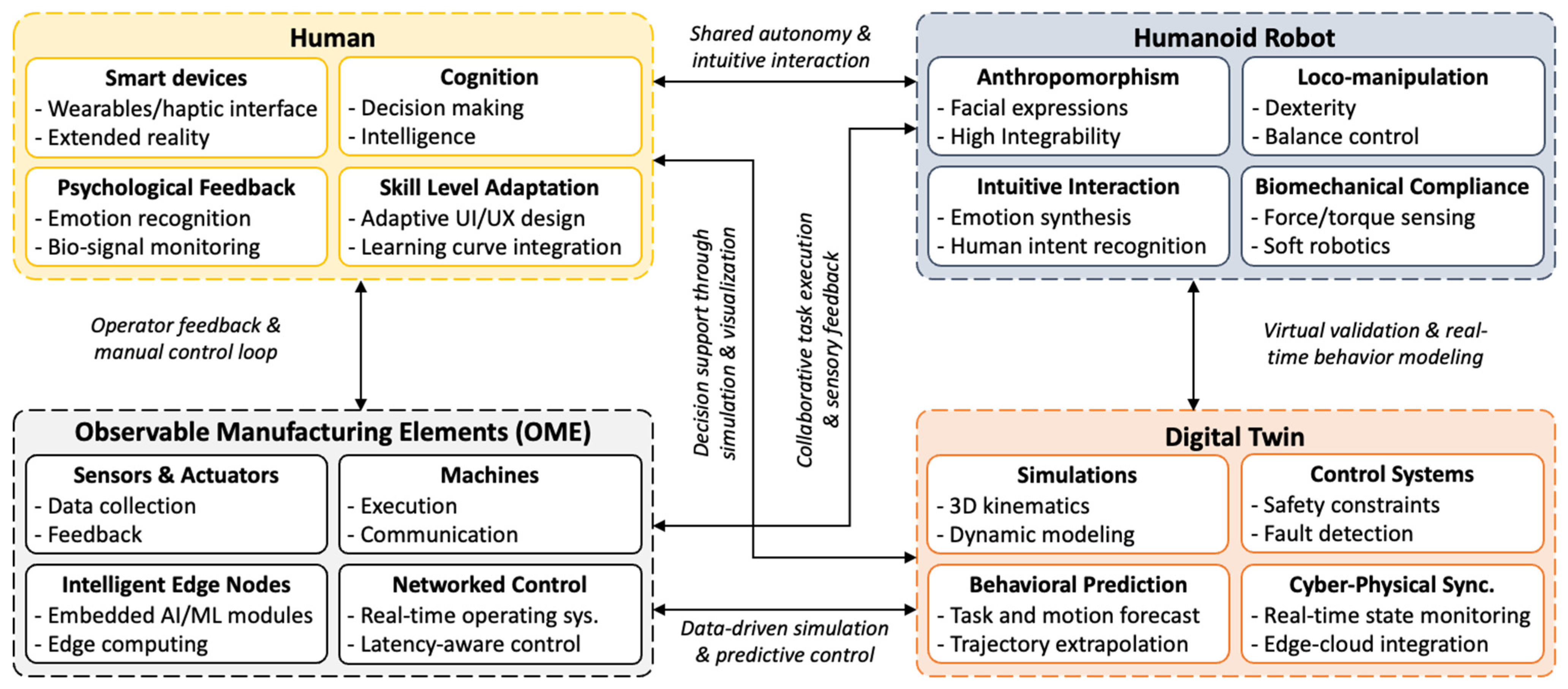

2.1. Human and Humanoid-in-the-Loop

The human-in-the-loop (HitL) concept integrates human expertise with automated systems. It leverages human decision-making capabilities to enhance system performance, particularly where human cognition is required. It also enables workers to oversee and refine automated processes [8]. However, seamless human–machine interaction remains a challenge due to the rigid, non-intuitive interfaces of traditional robotic systems, which leads to inefficiencies in manufacturing processes and increased cognitive load on human operators. The HHitL architecture (Figure 2) addresses these gaps by incorporating HRs, which mimic human movements, gestures, and communication styles, while retaining the core structure of the already established HitL in smart manufacturing [14]. It also accounts for the presence and roles of HRs, the interactive and cognitive complexity of modern manufacturing, and the strategic objectives defined by Industry 5.0. The architecture comprises four interrelated modules: human, humanoid robot, observable manufacturing elements (OME), and digital twin, each containing four components. The following discussion covers these modules and their components in detail.

The OME module enables sensing, actuation, and control within the manufacturing environment, serving as the foundational physical layer that supports real-time data exchange and task execution. It includes sensors and actuators, networked machines, intelligent edge nodes, and real-time communication infrastructure. A critical feature of the OME module is latency-aware control [15,16], which is essential for responsive and safe human–humanoid collaboration. In typical HHitL settings, end-to-end latency (sensing, processing, and actuation) should ideally remain below 100 ms, with sub-50 ms latency preferred for physical co-manipulation, gesture recognition, or safety-critical tasks. To meet these requirements, the system leverages edge computing for local data processing, time-sensitive communication (e.g., Ethernet TSN at the network level and ROS 2 with DDS at the middleware level), and latency buffering and prioritization mechanisms, ensuring high responsiveness without reliance on high-latency cloud infrastructure. These strategies allow both human and robotic agents to react and adapt fluidly within dynamic industrial workflows, maintaining synchronization with the digital twin and supporting safe, ergonomic, and efficient collaboration [17,18].
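The latency requirements above can be made concrete with a small budget check. The sketch below is illustrative only: the 100 ms and 50 ms thresholds come from the text, while the `LoopTiming` structure, the per-stage timings, and the least-slack-first prioritization rule are assumptions made for this example, not part of the HHitL specification.

```python
from dataclasses import dataclass

# Budgets taken from the text: end-to-end latency should stay below 100 ms,
# and below 50 ms for co-manipulation, gesture recognition, or safety-critical loops.
GENERAL_BUDGET_MS = 100.0
CRITICAL_BUDGET_MS = 50.0


@dataclass
class LoopTiming:
    """Measured (or estimated) stage latencies of one sensing-processing-actuation loop."""
    sensing_ms: float
    processing_ms: float
    actuation_ms: float
    safety_critical: bool = False

    @property
    def end_to_end_ms(self) -> float:
        return self.sensing_ms + self.processing_ms + self.actuation_ms

    @property
    def budget_ms(self) -> float:
        return CRITICAL_BUDGET_MS if self.safety_critical else GENERAL_BUDGET_MS

    @property
    def slack_ms(self) -> float:
        # Negative slack means the loop already violates its budget.
        return self.budget_ms - self.end_to_end_ms

    def within_budget(self) -> bool:
        return self.end_to_end_ms < self.budget_ms


def schedule(loops: dict) -> list:
    """Hypothetical prioritization rule: serve the loop with the least slack first."""
    return sorted(loops.items(), key=lambda item: item[1].slack_ms)


if __name__ == "__main__":
    loops = {
        "gesture": LoopTiming(10, 25, 8, safety_critical=True),
        "inventory_update": LoopTiming(20, 40, 5),
        "cloud_vision": LoopTiming(10, 120, 8, safety_critical=True),
    }
    for name, timing in schedule(loops):
        print(name, timing.end_to_end_ms, "ms, within budget:", timing.within_budget())
```

In this toy example, the safety-critical loop whose processing is offloaded to a remote server misses its 50 ms budget by a wide margin, which is the situation the text addresses by processing data at the edge.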

The human module positions the human operator as a cognitive, physical, and emotional agent within the manufacturing loop [19]. It includes components such as smart devices, cognition, psychological feedback, and skill level adaptation. A key feature of this adaptation is learning curve integration, which operates bidirectionally. First, the system continuously monitors user behavior, such as task completion time, interaction frequency, hesitation, or error patterns, to estimate the operator’s evolving skill level [14]. Based on this estimate, it dynamically adjusts system behavior, including interface complexity, robot autonomy level, and task allocation strategy, thereby supporting novice, intermediate, and expert users. Second, the system actively facilitates the human’s learning process by providing contextual prompts, real-time feedback (e.g., via AR overlays or speech cues), and transparent robot intention modeling. This dual approach fosters co-adaptation, in which both the human and the humanoid robot progressively refine their collaborative strategies, improving fluency, trust, and task efficiency over time.
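A minimal sketch of learning curve integration is shown below: it estimates skill from observed behavior and maps the estimate to system settings, including whether AR prompts are shown. The metrics mirror those named in the text, but the scoring weights, the five-task window, the tier thresholds, and the returned settings are hypothetical choices for illustration.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class TaskRecord:
    completion_s: float  # task completion time in seconds
    errors: int          # errors observed during the task
    hesitations: int     # pauses above some threshold (hypothetical metric)


def skill_score(history, reference_time_s, window=5):
    """Estimate skill in [0, 1] from the most recent tasks; weights are illustrative."""
    recent = history[-window:]  # a sliding window lets the estimate follow the learning curve
    speed = min(1.0, reference_time_s / mean(r.completion_s for r in recent))
    penalty = min(1.0, 0.2 * mean(r.errors for r in recent)
                  + 0.1 * mean(r.hesitations for r in recent))
    return max(0.0, speed * (1.0 - penalty))


def adapt(score):
    """Map the skill estimate to interface complexity, robot autonomy, and prompting."""
    if score < 0.4:
        return {"tier": "novice", "robot_autonomy": "high",
                "interface": "guided", "ar_prompts": True}
    if score < 0.75:
        return {"tier": "intermediate", "robot_autonomy": "shared",
                "interface": "standard", "ar_prompts": True}
    return {"tier": "expert", "robot_autonomy": "on_request",
            "interface": "compact", "ar_prompts": False}


if __name__ == "__main__":
    history = [TaskRecord(120, 3, 4), TaskRecord(90, 1, 2), TaskRecord(70, 0, 1)]
    print(adapt(skill_score(history, reference_time_s=60)))
```

Because only the most recent tasks are scored, an operator who speeds up and makes fewer errors moves to a higher tier over time, which is the feedback loop the paragraph describes.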

The digital twin (DT) module serves as a dynamic, real-time virtual representation of the physical manufacturing environment, including human operators, humanoid robots, machines, and sensors [20]. Acting as a cyber–physical interface, the DT integrates data streams from these entities through industrial protocols such as OPC UA, MQTT, or ISO 23247-compliant interfaces, and continuously synchronizes with the physical world using data collected from edge devices, sensors, and controllers. The DT enables key functions such as behavioral simulation, process optimization, predictive maintenance, and anomaly detection. Grounded in the ISO 23247 standard for digital twin architecture in manufacturing, this module operates as the cognitive and decision-making core of the HHitL ecosystem. It allows human and humanoid agents to explore alternative scenarios virtually, plan collaboratively, and anticipate system-level outcomes before they manifest physically. As such, the DT not only enhances operational transparency but also ensures that decisions are context-aware, data-driven, and adaptable to emergent disruptions.
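To illustrate continuous synchronization, the sketch below keeps a timestamped in-memory mirror of entity states and flags entities whose data has gone stale. It is a hypothetical structure, not an ISO 23247, OPC UA, or MQTT API; in practice the `ingest` calls would be driven by whichever industrial protocol the deployment uses, and the 0.5 s staleness threshold is an assumed value.

```python
from dataclasses import dataclass, field
from typing import Any, Dict, List


@dataclass
class EntityState:
    timestamp: float         # source timestamp in seconds (clocks assumed synchronized)
    values: Dict[str, Any]   # latest known attributes of the entity


@dataclass
class DigitalTwinMirror:
    """Minimal mirror of physical entities (hypothetical, for illustration only)."""
    max_age_s: float = 0.5
    entities: Dict[str, EntityState] = field(default_factory=dict)

    def ingest(self, entity_id: str, timestamp: float, **values) -> bool:
        current = self.entities.get(entity_id)
        # Drop out-of-order messages so a late packet cannot overwrite a newer state.
        if current is not None and timestamp <= current.timestamp:
            return False
        merged = dict(current.values) if current else {}
        merged.update(values)  # partial updates keep previously known attributes
        self.entities[entity_id] = EntityState(timestamp, merged)
        return True

    def stale(self, now: float) -> List[str]:
        """Entities whose last update is older than max_age_s, i.e., possibly out of sync."""
        return sorted(e for e, s in self.entities.items() if now - s.timestamp > self.max_age_s)


if __name__ == "__main__":
    dt = DigitalTwinMirror()
    dt.ingest("hr_01", 10.00, x=1.2, y=0.4, battery=0.81)  # humanoid robot telemetry
    dt.ingest("hr_01", 9.95, x=1.0)                         # late packet, ignored
    dt.ingest("cnc_03", 9.20, spindle_rpm=1200)             # machine data
    print(dt.entities["hr_01"].values, dt.stale(now=10.1))
```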

2.2. Core Features and Enablers

The proposed ecosystem has four key features: anthropomorphism, perceptual intelligence, cognitive adaptability, and locomotion/dexterity. Each core feature is realized through specific enablers that allow the ecosystem to bridge the gap between human intent and robotic execution. The core features of the proposed ecosystem and their corresponding enablers are presented in Table 1.

Anthropomorphism enables HRs to emulate human-like physicality and communication. It facilitates natural human–robot collaboration through a human-like body structure and multimodal interfaces. The body structure, with components such as arms, legs, head, and torso, mirrors human anatomy to perform tasks requiring dexterity, such as precise assembly, enhancing compatibility with human workflows. Multimodal interfaces, which process inputs such as text, speech, and images via foundation models (FMs) [21] and visualize them naturally through immersive technologies, enable intuitive communication by interpreting verbal commands and gestures.

FMs serve as general-purpose reasoning and perception engines within the HHitL ecosystem. These large-scale, pretrained AI models, such as large language models (LLMs) [22] and vision-language models (VLMs) [23], can process and integrate diverse inputs, including text, speech, images, sensor signals, and contextual cues. Their role in the ecosystem is to support multimodal interfaces, semantic reasoning, and real-time decision-making by humanoid robots. By enabling robots to understand language, interpret gestures, and anticipate human intent, FMs empower key capabilities such as perceptual intelligence and cognitive adaptability. These models bridge the semantic gap between human instruction and robotic execution, facilitating more intuitive and context-aware collaboration on the shop floor.

Perceptual intelligence equips HRs with the ability to understand and navigate complex manufacturing environments, enabling them to perform intelligent collision avoidance [24,25]. To achieve this, multiple enablers need to be realized. For instance, context awareness, together with sensors and actuators, is required to enable HRs to navigate and understand complex manufacturing environments. Context awareness processes structured and unstructured big data for real-time recognition of objects, processes, and environmental changes. Sensors and actuators provide proprioceptive and exteroceptive feedback through sensor-embedded actuators, enabling precise environmental interaction and task execution. Together, these enablers improve responsiveness, precision, and adaptability within the HHitL ecosystem. In addition, real-time data analytics and simulations provide a clear understanding of the manufacturing setting to users or operators, who may be humans, HRs, or their digital counterparts.
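Collision avoidance in shared workspaces is often framed as speed and separation monitoring. The sketch below uses a simplified protective-distance rule in that spirit (human approach during the robot's reaction and stopping time, robot travel during its reaction time, the robot's stopping distance, and a margin), assuming constant speeds. The helper names, all numeric defaults, and the 1.5× warning band are illustrative assumptions, not values from a standard or from this paper.

```python
import math


def protective_distance(v_human, v_robot, t_react, t_stop, stop_dist, margin):
    """Simplified protective separation distance in meters (speeds in m/s, times in s)."""
    human_term = v_human * (t_react + t_stop)  # human keeps approaching until the robot has stopped
    robot_term = v_robot * t_react             # robot keeps moving until its controller reacts
    return human_term + robot_term + stop_dist + margin


def separation_action(human_xy, robot_xy, v_human, v_robot,
                      t_react=0.1, t_stop=0.3, stop_dist=0.15, margin=0.1):
    """Return 'stop', 'slow', or 'proceed' from the current human-robot distance."""
    d = math.dist(human_xy, robot_xy)
    s_p = protective_distance(v_human, v_robot, t_react, t_stop, stop_dist, margin)
    if d <= s_p:
        return "stop"
    if d <= 1.5 * s_p:  # hypothetical warning band: reduce speed before a stop is needed
        return "slow"
    return "proceed"


if __name__ == "__main__":
    for dx in (0.8, 1.2, 3.0):
        print(dx, separation_action((0.0, 0.0), (dx, 0.0), v_human=1.6, v_robot=1.0))
```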

Table 1.

Core features and their corresponding enablers (× marks the enablers of each core feature).

| Core Feature | Body Structure | Multimodal Interfaces | Context Awareness | Simulations and Models | Real-Time Data Analytics | Decision-Making | Sensors and Actuators | Robotics Engineering | Definition/Description | Ref. |
|---|---|---|---|---|---|---|---|---|---|---|
| Anthropomorphism | × | × | | | | | | | It refers to both the psychological projection of human traits onto robots and the technical design of human-like features in machines. This concept improves acceptance of robots as team partners in industrial settings. | [26] |
| Perceptual Intelligence | | | × | × | × | | × | | It refers to the ability to interact with the environment through various sensory modalities. It involves recognizing objects, navigating complex environments, and interpreting human gestures and emotions. | [27] |
| Cognitive Adaptability | | | × | × | × | × | | | It refers to the robot’s ability to adjust its behavior based on human needs and environmental factors. This concept involves developing cognitive architectures that enable robots to learn, adapt, and interact effectively with humans in real-world settings. | [28] |
| Dexterity/Locomotion | × | | | × | | × | | × | Dexterity refers to the ability to perform skilled manipulations, particularly with hands. It involves complex interactions between kinematics, contact forces, and motion planning. Locomotion focuses on self-propelled movement in diverse environments. | [29] |
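Table 1 can also be treated as a small data structure, which makes it easy to ask, for example, which core features are affected if one enabler degrades. The mapping below is transcribed from the table; the two query helpers are illustrative additions, not part of the proposed framework.

```python
# Core feature -> enablers, transcribed from Table 1.
FEATURE_ENABLERS = {
    "Anthropomorphism": {"Body Structure", "Multimodal Interfaces"},
    "Perceptual Intelligence": {"Context Awareness", "Simulations and Models",
                                "Real-Time Data Analytics", "Sensors and Actuators"},
    "Cognitive Adaptability": {"Context Awareness", "Simulations and Models",
                               "Real-Time Data Analytics", "Decision-Making"},
    "Dexterity/Locomotion": {"Body Structure", "Simulations and Models",
                             "Decision-Making", "Robotics Engineering"},
}


def features_affected_by(enabler: str) -> list:
    """Core features that rely on the given enabler (e.g., to assess the impact of its failure)."""
    return sorted(f for f, enablers in FEATURE_ENABLERS.items() if enabler in enablers)


def enabler_usage() -> dict:
    """Number of core features each enabler supports, most widely used first."""
    counts = {}
    for enablers in FEATURE_ENABLERS.values():
        for e in enablers:
            counts[e] = counts.get(e, 0) + 1
    return dict(sorted(counts.items(), key=lambda kv: (-kv[1], kv[0])))


if __name__ == "__main__":
    print(features_affected_by("Simulations and Models"))
    print(enabler_usage())
```

The query confirms what the text notes: simulations and models support three of the four core features.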

Cognitive adaptability empowers HRs to process and act on real-time information, enhancing decision-making in dynamic manufacturing contexts. It relies on real-time data analytics and decision-making to enable intelligent and autonomous operations in the HHitL ecosystem. Real-time data analytics leverages AI, ML, FMs, and NLP to optimize processes. Decision-making integrates compliant control and cloud–fog–edge computing to facilitate autonomous, context-aware decisions. In addition, simulations and models of the manufacturing setting support the cognitive adaptability of the system by providing a digital replica of its physical elements.
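The cloud–fog–edge placement mentioned above can be sketched as a simple rule: run a decision on the tier closest to the robot that still meets its deadline. The tier latencies, capacities, and linear cost model below are hypothetical numbers chosen only to make the trade-off visible.

```python
from typing import NamedTuple, Optional


class Tier(NamedTuple):
    name: str
    rtt_ms: float    # network round-trip time to the tier (hypothetical)
    capacity: float  # relative compute capacity (hypothetical)


# Ordered from closest to the robot to farthest away.
TIERS = [Tier("edge", 5.0, 1.0), Tier("fog", 20.0, 4.0), Tier("cloud", 120.0, 50.0)]


def estimated_latency_ms(tier: Tier, compute_units: float, ms_per_unit: float = 10.0) -> float:
    """Network round trip plus compute time, assuming compute time scales inversely with capacity."""
    return tier.rtt_ms + compute_units * ms_per_unit / tier.capacity


def place_decision(deadline_ms: float, compute_units: float) -> Optional[str]:
    """Return the closest tier that meets the deadline, or None if no tier does."""
    for tier in TIERS:
        if estimated_latency_ms(tier, compute_units) <= deadline_ms:
            return tier.name
    return None


if __name__ == "__main__":
    print(place_decision(deadline_ms=50, compute_units=2))     # light, urgent decision -> edge
    print(place_decision(deadline_ms=500, compute_units=200))  # heavy, less urgent job -> cloud
```

Preferring the nearest feasible tier keeps urgent decisions local and uses the cloud only when the workload is heavy and the deadline is loose.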

Locomotion and dexterity are critical for humanoid robots to execute physical tasks with precision and agility in manufacturing environments. They are driven by robotics engineering and by simulations and models. Robotics engineering designs robust motion systems using kinematics and control systems. Simulations and models use digital twins and physics-informed models to support navigation and path planning by optimizing movement and anticipating obstacles. In addition, the body structure is a fundamental enabler of dexterity and locomotion, providing the mechanical framework and kinematic flexibility necessary for complex movement, balance, and task-oriented manipulation in humanoid robots.
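As a stand-in for digital-twin-supported path planning, the sketch below runs A* search on a 2-D occupancy grid that could, hypothetically, be exported from the digital twin's model of the shop floor. This is plain A* with a Manhattan-distance heuristic, not a physics-informed planner; it only illustrates how obstacles known to the twin shape the planned route.

```python
import heapq


def astar(grid, start, goal):
    """A* on a 4-connected occupancy grid (0 = free, 1 = obstacle); returns a list of cells or None."""
    rows, cols = len(grid), len(grid[0])

    def h(cell):  # Manhattan distance: admissible for unit-cost 4-connected moves
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    open_heap = [(h(start), 0, start)]
    came_from = {start: None}
    g_cost = {start: 0}
    while open_heap:
        _, g, node = heapq.heappop(open_heap)
        if node == goal:
            path = []
            while node is not None:  # walk back through predecessors
                path.append(node)
                node = came_from[node]
            return path[::-1]
        if g > g_cost[node]:
            continue  # stale heap entry: a cheaper route to this node was already found
        r, c = node
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == 0:
                ng = g + 1
                if ng < g_cost.get((nr, nc), float("inf")):
                    g_cost[(nr, nc)] = ng
                    came_from[(nr, nc)] = node
                    heapq.heappush(open_heap, (ng + h((nr, nc)), ng, (nr, nc)))
    return None  # goal unreachable


if __name__ == "__main__":
    shop_floor = [
        [0, 0, 0, 0],
        [1, 1, 1, 0],  # e.g., a conveyor the robot must walk around
        [0, 0, 0, 0],
    ]
    print(astar(shop_floor, (0, 0), (2, 0)))
```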

It is important to note that “simulations and models” function as embedded components within the broader digital twin module. While the digital twin encompasses real-time synchronization, behavioral prediction, and cyber–physical coordination, simulations and models represent its operational core. They provide the analytical tools necessary for perceptual understanding, predictive planning, motion optimization, and decision support. Thus, while listed as individual enablers for several core features, “simulations and models” are considered integral subcomponents of the digital twin rather than a standalone module.

2.3. Industry 5.0 and the Six Pillars (6P)

The HHitL ecosystem represents a paradigm shift in smart manufacturing and intelligent automation. It integrates the distinctive cognitive, perceptual, and physical abilities of both humans and humanoid robots within a single framework. To achieve this, six foundational pillars, namely participation, purpose, preservation, physical assets, projection, and persistence, are proposed. These pillars align with the core principles of Industry 5.0: human-centricity, sustainability, and resiliency. Together, they generate a collaborative and intelligent system capable of navigating the complexities of modern industrial and societal demands.

Table 2 demonstrates the relationship between the six pillars and Industry 5.0 principles.

The human-centricity dimension of the HHitL ecosystem is grounded in the pillars of participation and purpose. Participation emphasizes the active and ergonomic involvement of humans in collaboration with HRs. It supports human and HR adaptation and seamless multimodal interaction. It also enables workers to interact naturally and safely with intelligent robotic agents. Complementing this, purpose defines the ethical and societal rationale behind integrating humanoid robots into industrial environments. Beyond task utility, this pillar emphasizes the need for ethical design principles that prioritize human dignity, autonomy, and safety. It promotes collaborative robotics where HRs augment rather than replace human effort, especially in physically demanding, repetitive, or hazardous tasks. In addition, purpose addresses broader societal and legal dimensions, including responsibility attribution in shared workspaces, compliance with privacy regulations during data collection and surveillance, and fairness in access to robotic technologies across regions or socio-economic groups. Psychological factors, such as worker trust, perceived threat to employment, and the potential for anthropomorphism-induced emotional strain, are also considered essential. This pillar thus ensures that technology aligns not only with human values but also with institutional, legal, and emotional well-being.

In addition, sustainability within the HHitL architecture is achieved through the pillars of preservation and physical assets. Preservation focuses on minimizing the environmental impact of robotic and manufacturing operations through resource efficiency, waste reduction, and support for green manufacturing practices. It reinforces the need for systems that are not only intelligent but also eco-conscious by design. Physical assets complement this by emphasizing the optimal use and lifecycle management of hardware and infrastructure. By integrating energy-aware robotics and smart infrastructure, HHitL becomes a vehicle for sustainable automation that reduces environmental footprints while maintaining high-performance standards in industry.

Finally, to navigate uncertain and dynamic environments and achieve resiliency, the HHitL ecosystem incorporates the pillars of projection and persistence. Projection involves the capacity for forward-thinking through predictive analytics, learning, and scenario planning, enabling proactive responses to emerging challenges. It equips the system with the strategic foresight necessary for long-term viability. Persistence, on the other hand, ensures that the system can adapt autonomously to disruptions, maintain operational continuity, and recover safely from failures.

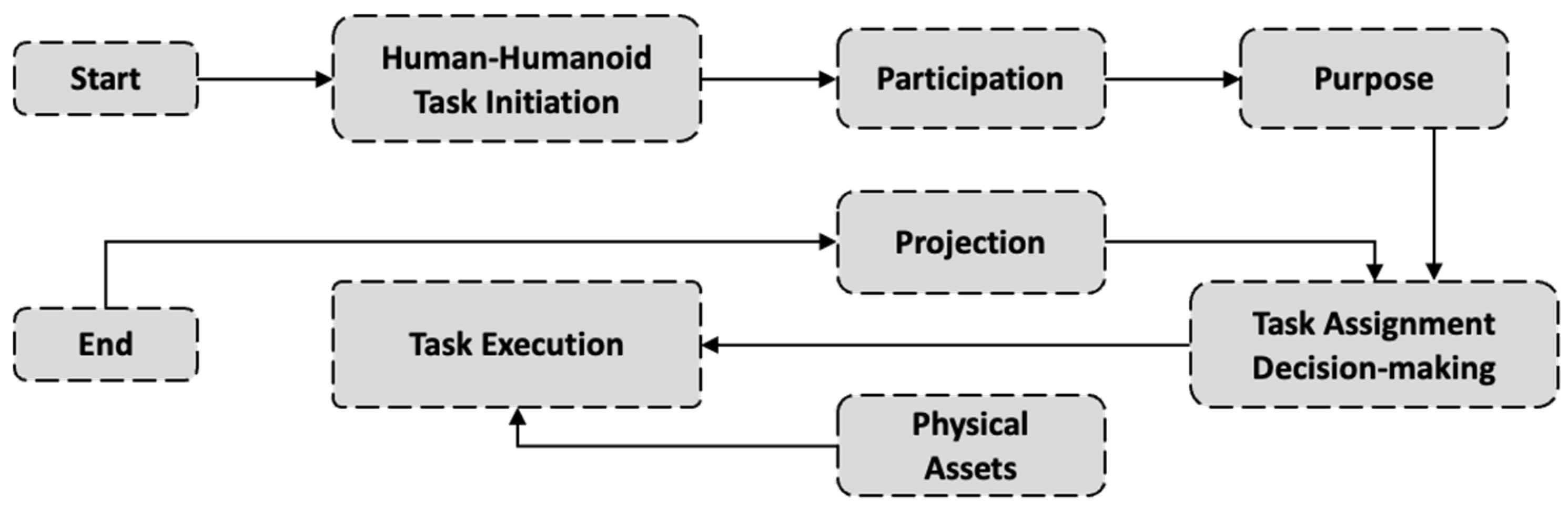

2.4. Interconnection and Operational Mapping of the 6P Pillars

The six foundational pillars of the HHitL ecosystem—participation, purpose, preservation, physical assets, persistence, and projection—derive their strength from how they interact during collaborative tasks. Together, they define the behavioral logic of human–humanoid systems in dynamic manufacturing environments. For example, on a flexible electronics assembly line, participation begins when a human initiates a task via speech or gesture, interpreted by the robot through multimodal sensing. Purpose ensures the robot’s response aligns with ergonomic safety and ethical design, reinforcing human-centric collaboration.

Once validated, preservation is activated via the digital twin to assess energy use and material efficiency, while physical assets like battery levels, joint health, and tool status are monitored. If conditions are favorable, task execution proceeds. Projection simulates future states to anticipate disruptions, and persistence enables the system to adapt autonomously, reassigning tasks or activating a backup robot when needed. These pillars operate as an integrated feedback loop, ensuring collaboration is efficient, ethical, and resilient.
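The pillar sequence described above can be written as a gated decision function. The sketch below is one possible reading of that flow, assuming a hypothetical `StationState` snapshot read from the digital twin; the field names, the outcome labels, and the thresholds (20% battery, 0.5 joint-health index) are illustrative assumptions.

```python
from dataclasses import dataclass, replace


@dataclass
class StationState:
    """Hypothetical snapshot of one task station, e.g., read from the digital twin."""
    intent_confirmed: bool       # participation: human intent parsed and confirmed
    ergonomic_ok: bool           # purpose: action respects ergonomic and ethical rules
    energy_kwh_est: float        # preservation: predicted energy use of the task
    energy_budget_kwh: float
    battery: float               # physical assets: state of charge in [0, 1]
    joint_health: float          # physical assets: health index in [0, 1]
    predicted_disruption: bool   # projection: simulation foresees a disruption
    backup_available: bool       # persistence: another robot can take over


def run_task(s: StationState):
    """Return (decision, trace of the pillars evaluated, in order)."""
    trace = ["participation"]
    if not s.intent_confirmed:
        return "ask_clarification", trace
    trace.append("purpose")
    if not s.ergonomic_ok:
        return "reject_unsafe", trace
    trace.append("preservation")
    if s.energy_kwh_est > s.energy_budget_kwh:
        return "reschedule_for_efficiency", trace
    trace.append("physical_assets")
    if s.battery >= 0.2 and s.joint_health >= 0.5:
        trace.append("projection")
        if not s.predicted_disruption:
            return "execute", trace
    # Asset problem or foreseen disruption: persistence adapts autonomously.
    trace.append("persistence")
    return ("reassign_to_backup" if s.backup_available else "pause_and_alert"), trace


if __name__ == "__main__":
    ok = StationState(True, True, 0.4, 0.5, 0.8, 0.9, False, True)
    print(run_task(ok))
    print(run_task(replace(ok, battery=0.1)))
```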

Figure 3 illustrates the operational logic and pillar-based influence on human-humanoid collaboration in a manufacturing setup.

3. Implementation of the HHitL Ecosystem

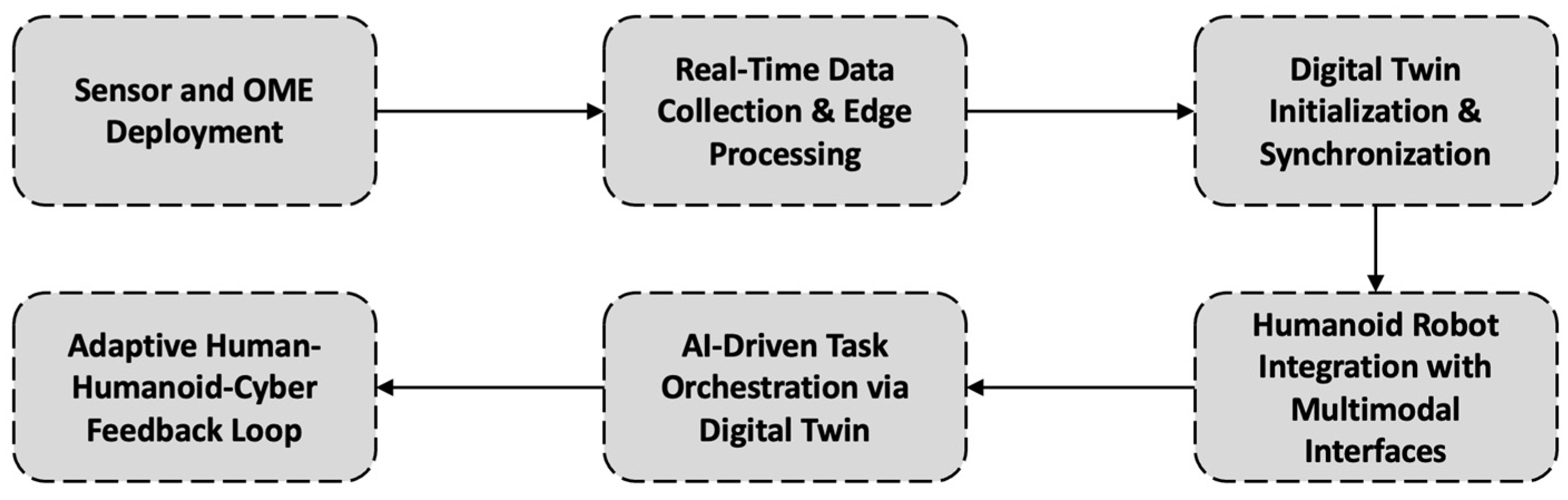

3.1. Pathway

The implementation of the Human and Humanoid-in-the-Loop (HHitL) ecosystem can be approached through a staged and modular pathway, beginning with the integration of OMEs and culminating in closed-loop cognitive collaboration between humans and humanoid robots. In the initial phase, real-time data acquisition is achieved by deploying multimodal sensors across workstations, machines, and wearables. These data streams are processed at the edge for latency-sensitive operations and transmitted to a centralized or distributed digital twin platform. The digital twin acts as a real-time mirror of the factory floor, incorporating machine behavior, human performance metrics, and robot telemetry to support planning, diagnostics, and scenario simulation.

As the system evolves, humanoid robots equipped with foundation model-driven perception and reasoning capabilities are introduced to carry out dexterous and collaborative tasks. These robots interface with humans via intuitive multimodal channels (e.g., voice, gesture, AR), and continuously update their state via the digital twin. Task orchestration becomes dynamic, where the digital twin, supported by AI and simulation feedback, allocates tasks based on workload, safety, and system resilience metrics. The implementation concludes with a real-time feedback loop where humans, humanoid robots, and the cyber layer interact adaptively, supporting predictive maintenance, ethical decision-making, and zero-defect manufacturing. This flow-based deployment strategy ensures technical scalability and aligns the HHitL ecosystem with current smart factory standards and human-centric automation goals.
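Dynamic task orchestration by the digital twin can be approximated by a greedy, score-based allocator. In the sketch below, the `Agent` and `Task` types, the scoring terms (spare capacity, safety, and task fit), their weights, and the workload increment per assignment are all assumptions for illustration; a production allocator would also weigh deadlines, skills, and resilience metrics.

```python
from dataclasses import dataclass


@dataclass
class Agent:
    name: str
    kind: str              # "human" or "humanoid"
    workload: float        # current utilization in [0, 1]
    fatigue: float = 0.0   # e.g., from a wearable; only used for humans
    available: bool = True


@dataclass
class Task:
    name: str
    hazard: float          # 0 = benign, 1 = hazardous or ergonomically risky
    needs_judgement: bool  # True if human cognition is especially valuable


def score(agent, task, w_load=0.5, w_safety=0.3, w_fit=0.2):
    """Higher is better; the terms and weights are illustrative assumptions."""
    load_term = 1.0 - agent.workload
    if agent.kind == "human":
        safety_term = 1.0 - max(task.hazard, agent.fatigue)  # avoid hazards and fatigued operators
        fit_term = 1.0 if task.needs_judgement else 0.5
    else:
        safety_term = 1.0
        fit_term = 0.5 if task.needs_judgement else 1.0
    return w_load * load_term + w_safety * safety_term + w_fit * fit_term


def allocate(tasks, agents, load_step=0.25):
    """Greedily assign each task to the best available agent (mutates agent workloads)."""
    plan = {}
    for task in tasks:
        candidates = [a for a in agents if a.available]
        if not candidates:
            plan[task.name] = None
            continue
        best = max(candidates, key=lambda a: score(a, task))
        plan[task.name] = best.name
        best.workload = min(1.0, best.workload + load_step)  # account for newly assigned work
    return plan


if __name__ == "__main__":
    team = [Agent("op_1", "human", 0.2), Agent("hr_1", "humanoid", 0.2)]
    print(allocate([Task("heavy_lift", 0.9, False), Task("visual_check", 0.0, True)], team))
```

With these assumed weights, the hazardous lift goes to the humanoid and the judgement-heavy check stays with the human; a high fatigue reading shifts the check to the robot instead.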

Figure 4 shows the flowchart of the HHitL ecosystem implementation pathway.

3.2. Case Scenario 1: Human–Humanoid Collaboration in Smart Assembly

In this scenario, a smart electronics assembly line utilizes both human workers and humanoid robots to collaboratively perform precision tasks, such as micro-component placement, ergonomic lifting, and cable routing. The human initiates the workflow by specifying task intent through a voice command or tablet interface (participation). The humanoid robot, equipped with a foundation model, interprets the instruction using speech and vision processing (anthropomorphism, perceptual intelligence), then requests clarification or confirms the action. The digital twin continuously updates the virtual representation of the task station, monitoring the status of both agents as well as tools and components. It simulates alternative task sequences and detects bottlenecks in real time (projection). Based on this feedback, the humanoid robot dynamically adjusts its grip strength or movement path and transfers repetitive sub-tasks from the human to itself (cognitive adaptability, dexterity/locomotion).

This collaboration reduces human fatigue and enhances precision. If the human becomes unavailable or fatigued (detected via a wearable), the system autonomously reallocates critical tasks to the robot and flags a supervisor (persistence). Energy usage, tool wear, and physical asset health are monitored throughout (preservation, physical assets), reinforcing the ecosystem’s adaptability and sustainability.

3.3. Case Scenario 2: Adaptive Inspection and Rework in Quality Control

In a flexible manufacturing quality control cell, a humanoid robot works alongside a human quality supervisor to inspect assembled products using vision systems and multimodal sensors. The robot autonomously performs routine inspections based on trained defect models, while escalating ambiguous or uncertain findings to the human (participation, purpose). If the human annotates a defect via gesture or touchscreen, the humanoid robot learns from the feedback through reinforcement learning and foundation model adaptation (cognitive adaptability). The digital twin simulates defect propagation and alerts upstream workstations, improving systemic awareness (projection). In cases of disagreement or data inconsistency, the system integrates contextual information from past rework cycles to support resolution (persistence).
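The escalation logic in this scenario can be sketched as confidence-based triage: confident predictions are handled automatically, and ambiguous ones go to the supervisor, whose annotations are collected for later model adaptation. The probability thresholds and function names are hypothetical, and the learning step itself (reinforcement learning or foundation model adaptation in the text) is not shown.

```python
def triage(defect_probs, accept_below=0.2, reject_above=0.8):
    """Route each inspected item by the model's estimated defect probability.

    defect_probs maps item id -> probability of a defect (from a hypothetical defect model).
    """
    decisions = {}
    for item, p in defect_probs.items():
        if p < accept_below:
            decisions[item] = "pass"                # confident: no defect
        elif p > reject_above:
            decisions[item] = "rework"              # confident: defect
        else:
            decisions[item] = "escalate_to_human"   # ambiguous: ask the supervisor
    return decisions


def collect_labels(decisions, human_labels):
    """Pair escalated items with the supervisor's annotations to form training examples.

    Escalated items without an annotation yet are returned as pending.
    """
    examples, pending = [], []
    for item, decision in decisions.items():
        if decision != "escalate_to_human":
            continue
        if item in human_labels:
            examples.append((item, human_labels[item]))
        else:
            pending.append(item)
    return examples, pending


if __name__ == "__main__":
    d = triage({"pcb_001": 0.05, "pcb_002": 0.5, "pcb_003": 0.95})
    print(d)
    print(collect_labels(d, {"pcb_002": "solder_bridge"}))
```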

The robot optimizes its scanning path and lighting settings based on resource constraints and power levels (preservation), while predictive maintenance logs are generated for the camera arm and gripper module (physical assets). This system ensures high-quality outcomes while protecting worker well-being and minimizing false positives.

4. Challenges and Outlooks

4.1. Challenges

One of the primary challenges in deploying humanoid robots in manufacturing lies in real-time perception and context awareness. Despite significant advancements in computer vision, multimodal sensing, and ML, humanoid robots still struggle to reliably interpret dynamic, unstructured environments such as shop floors and real industrial settings where unexpected changes are inevitable. The integration of perception systems that can understand intent, emotion, and environmental complexity remains a critical barrier.

Additionally, cyber–physical synchronization, which ensures that the virtual representation remains continuously aligned with the dynamic state of the physical environment, is another significant issue. While real-time state monitoring provides the foundation, maintaining robust synchronization with mobile HRs introduces additional challenges such as latency, spatial drift, inconsistent sensor feedback, and environmental unpredictability. To address these, the HHitL ecosystem leverages high-frequency multi-sensor fusion [30] (e.g., vision, IMU, LiDAR, tactile sensors), edge computing for low-latency preprocessing, and timestamped data streaming to align updates across devices. For spatial alignment, techniques such as simultaneous localization and mapping (SLAM) and kinematic modeling are integrated into the DT to continuously update the position, posture, and motion intent of the HR. Furthermore, predictive filtering methods, such as Kalman filters or neural estimators, are applied to mitigate the effects of transmission delays and ensure smooth real-time control. These mechanisms allow the DT to serve not just as a passive mirror but as an intelligent, time-sensitive control layer that synchronizes human and robot actions in complex, collaborative manufacturing environments.
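The predictive filtering step can be illustrated with a constant-velocity Kalman filter for one coordinate of a humanoid robot's position, plus an extrapolation over a known transmission delay. This is a textbook linear Kalman filter written in plain Python; the class name, noise parameters, the 0.1 s update interval, and delay compensation by extrapolation are assumptions for illustration, and a real DT would fuse full 3-D pose from SLAM and kinematic models.

```python
class ConstantVelocityKF:
    """1-D constant-velocity Kalman filter. State: position p (m) and velocity v (m/s)."""

    def __init__(self, p0=0.0, v0=0.0, q=0.5, r=0.01):
        self.p, self.v = p0, v0
        self.P = [[1.0, 0.0], [0.0, 1.0]]  # state covariance
        self.q = q  # process noise (white acceleration) variance, illustrative
        self.r = r  # position measurement noise variance, illustrative

    def predict(self, dt):
        P, q = self.P, self.q
        self.p += self.v * dt
        # P = F P F^T + Q with F = [[1, dt], [0, 1]] and discrete white-noise-acceleration Q.
        p00 = P[0][0] + dt * (P[1][0] + P[0][1]) + dt * dt * P[1][1]
        p01 = P[0][1] + dt * P[1][1]
        p10 = P[1][0] + dt * P[1][1]
        p11 = P[1][1]
        self.P = [[p00 + q * dt**4 / 4, p01 + q * dt**3 / 2],
                  [p10 + q * dt**3 / 2, p11 + q * dt**2]]

    def update(self, z):
        P = self.P
        s = P[0][0] + self.r                 # innovation variance for H = [1, 0]
        k0, k1 = P[0][0] / s, P[1][0] / s    # Kalman gain
        y = z - self.p                       # innovation
        self.p += k0 * y
        self.v += k1 * y
        # P = (I - K H) P
        self.P = [[(1 - k0) * P[0][0], (1 - k0) * P[0][1]],
                  [P[1][0] - k1 * P[0][0], P[1][1] - k1 * P[0][1]]]

    def predict_ahead(self, delay):
        """Position extrapolated over a known transmission delay, without changing the state."""
        return self.p + self.v * delay


if __name__ == "__main__":
    kf = ConstantVelocityKF()
    for k in range(1, 51):         # robot walking at 0.5 m/s, sampled every 0.1 s
        kf.predict(0.1)
        kf.update(0.5 * k * 0.1)
    print(round(kf.p, 3), round(kf.v, 3), round(kf.predict_ahead(0.2), 3))
```

Extrapolating with the estimated velocity lets the DT display where the robot most likely is now, rather than where it was when the delayed measurement was taken.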

Another major concern is physical robustness and dexterity [6]. Most HRs still lack the mechanical endurance and precision required for continuous industrial tasks, particularly those involving exposure to contaminants or hazardous conditions. Achieving a balance between anthropomorphic factors and industrial durability is still an open engineering challenge. Additionally, smart manufacturing environments often comprise heterogeneous systems with diverse communication protocols and data standards. Seamless integration requires modular, adaptive middleware layers and standardized interfaces, which are currently underdeveloped in the context of humanoid robotics.

From a human-centered perspective, socio-technical alignment remains an important issue. Operators may resist working alongside HRs due to concerns about trust, safety, or job displacement. Ensuring transparent and intuitive interaction, and developing ergonomic collaboration models, are essential for acceptance and long-term integration [5].

In addition to technical challenges, the societal and legal implications of integrating humanoid robots remain underdeveloped. Industrial settings currently lack clear frameworks for assigning liability in cases of robot-induced errors or accidents, particularly in semi-autonomous collaborative operations. Data privacy is another concern, as continuous sensor-based monitoring raises questions about informed consent and data governance for human operators. From a psychological standpoint, working with humanoid robots may lead to increased stress, reduced sense of agency, or social displacement, especially if robots resemble human co-workers too closely. These factors underscore the need for human-centric design principles to be supplemented by regulatory, institutional, and cognitive safeguards to foster long-term trust and acceptance.

Finally, cost and scalability continue to limit deployment. HRs remain significantly more expensive than traditional industrial robots due to their complex kinematics, sensing systems, and control architectures. This limits adoption to pilot projects or high-value manufacturing contexts, leaving mass-market applications out of reach.

4.2. Outlooks

Despite these challenges, the outlook for humanoid robots in smart manufacturing is promising. Advances in foundation models, AI, and multimodal interfaces are rapidly improving robots’ ability to perceive, understand, and act in complex environments. These technologies are expected to enable HRs to perform more diverse and cognitively demanding tasks with minimal human supervision.

In parallel, the development of modular hardware architectures and soft robotics is expanding the capabilities of humanoid platforms in terms of dexterity, safety, and energy efficiency. These improvements will enable robots to handle delicate assembly tasks, adapt to diverse tools, and interact safely with humans in shared spaces. The growing maturity of digital twin technologies will further support predictive maintenance, behavior simulation, and collaborative decision-making between humans and HRs. Digital twins will serve as real-time cognitive companions for HRs, allowing them to test behaviors virtually before executing them physically, which improves both safety and adaptability. In addition, standardization efforts are expected to accelerate, enabling better interoperability between humanoid robots, industrial systems, and cloud-based manufacturing platforms. Open-source frameworks and cross-platform middleware will facilitate smoother integration and foster a more competitive ecosystem.