1. Introduction

According to the “Global Status Report on Road Safety 2023” by the World Health Organization (WHO), road traffic accidents cause approximately 1.19 million deaths worldwide each year, 92% of which occur in low- and middle-income countries; pedestrians, cyclists, and motorcyclists account for more than 50% of these fatalities [1]. In China, data released by the National Bureau of Statistics indicated that motor vehicle accidents accounted for 82.81% of all traffic-related casualties, causing substantial injuries and significant economic losses [2]. In response to these critical challenges, ICV technologies have developed rapidly. Among the various active safety innovations, the AEB system is regarded as a core technology whose performance directly affects road traffic safety. For example, a study by the Insurance Institute for Highway Safety (IIHS) in the United States (U.S.) observed a 27% reduction in accident incidence for vehicles equipped with an AEB system [3]. AEB systems have also been reported to reduce the risk of fatal pedestrian collisions by 84–87% and the risk of severe injury (MAIS 3+) by 83–87% [4]. In addition, the AEB system has been shown to prevent approximately 83% of rear-end collisions [5].

The AEB system is an active safety technology designed to avoid collisions or mitigate their severity through active warnings and braking interventions. It consists primarily of three components: environmental perception, control decision-making, and actuation. To ensure stable and reliable AEB performance, major automotive markets and international organizations have promoted the deployment of the technology, and the verification of its effectiveness, through a multi-dimensional policy framework encompassing regulatory mandates, standard certifications, and testing evaluations. In 2016, 20 major automotive manufacturers committed to fitting an AEB system as standard equipment on more than 95% of their new vehicles, and AEB systems were expected to be installed in 90% of newly sold vehicles in the U.S. by 2025. As part of regulatory initiatives, UN R152 was adopted as a market-entry requirement in the European Union (EU), mandating that the AEB systems of M1- and N1-category vehicles automatically detect collision risks within a speed range of 10–60 km/h [6]. The system was required to issue both visual and auditory warnings and to activate emergency braking (with a deceleration of no less than 4 m/s²) to avoid or mitigate collisions. The regulation was first enforced for vehicle-to-vehicle functions in July 2022 and was extended to pedestrian detection in July 2024 [7]. In addition, in May 2024, the Ministry of Transport of China mandated that passenger buses, cargo vehicles, towing vehicles, and hazardous goods transport vehicles be equipped with an AEB system complying with the “Performance Requirements and Test Methods for AEB Systems in Operating Vehicles” (JT/T 1242-2019) [8], which emphasizes the evaluation of braking response time and deceleration performance under high-speed conditions. Through this three-part framework of “regulatory enforcement, standard certification, and testing evaluation”, the technical implementation and performance evaluation of AEB systems have been promoted in various countries.

However, the reliability of the AEB system varied across driving environments and conditions, such as pedestrians crossing at urban intersections or strong light interference on highways. In particular, AEB performance was observed to deteriorate in harsh weather, at higher speeds, and when visibility was obstructed, which challenges the scientific validity of current evaluation methodologies. To improve system reliability and driving safety, researchers sought to enrich AEB test scenarios and evaluation parameters. Kidd [9] examined 6.73 million police-reported rear-end collisions and 4285 fatal accidents and quantified the key factors affecting AEB performance, providing empirical support for organizations such as the IIHS and the European New Car Assessment Programme (E-NCAP) to revise their testing protocols. Zhou et al. [10], using data from the National Automotive Accident In-Depth Investigation System, investigated the safety of the intended functionality (SOTIF) of the perception system in hazardous interactions between automobiles and two-wheeled vehicles (e.g., motorcycles and bicycles). Similarly, Lian et al. [11] extracted typical traffic accident characteristics from international traffic accident databases and analyzed AEB triggering scenarios, providing data for the construction of a systematic test scenario database. Rao et al. [12] focused on the quantitative evaluation of the AEB system in non-standard driving situations. Through scenario generation driven by naturalistic driving data, simulation model development, and boundary collision assessment, they found that the substantial degradation of AEB performance in curve scenarios was caused mainly by sensor perception deficiencies and the limitations of traditional algorithms. In addition, Kawaguchi et al. [13] analyzed large-scale fleet operational data and demonstrated that the AEB system effectively reduced collision rates among non-fatigued drivers; they further recommended integrating active intervention technologies, such as fatigue monitoring systems, to improve the safety performance of commercial vehicles. Karpenko et al. [14,15] investigated the influence of tire performance on braking effectiveness using a tire stress–deformation model and found that a 0.5 bar reduction in inflation pressure could increase tire deformation by 15%, extending the braking distance by approximately 8%. This indicates that AEB performance evaluation should not rely solely on data from standard working conditions but should also account for the actual effect of tire status (such as tire pressure and load) on braking response. Ji [16] and Tian [17] investigated performance evaluation indicators for the AEB system, laying a theoretical foundation for reliable system assessment. Cicchino et al. [18], employing Poisson regression modeling and a quasi-induced exposure methodology, assessed the effectiveness of pedestrian-detection AEB systems on real-world police-reported pedestrian crashes. Their findings indicated that vehicles equipped with pedestrian-detection AEB achieved a 25–27% reduction in the risk of pedestrian collisions and a 29–30% reduction in the risk of pedestrian injuries. Moreover, Li [19], Zhou [20], Wang [21], and Leng [22] explored optimization strategies for AEB control algorithms. In particular, Wang [21] proposed an “Estimation–Prediction–Control” hierarchical architecture that integrated road surface adhesion coefficient estimation, pedestrian trajectory prediction, and braking system control. This framework highlighted the critical role of road surface adhesion in AEB functionality and introduced a new paradigm for active safety control under complex operating conditions.

The aforementioned research on AEB performance mainly focused on enriching test scenarios, expanding evaluation metrics, identifying key influencing factors, and optimizing control strategies; few studies conducted a systematic evaluation of the overall performance of the AEB system. Yang et al. [23] introduced a data-driven method for generating pedestrian-oriented key test scenarios, in which the speed difference (Δv), relative lateral distance (Dy), and relative longitudinal distance (Dx) were defined as the core variables of a scenario risk assessment model. This approach provided a reusable technical pathway for building large-scale scenario libraries and facilitated the industry’s transition from “vehicle-oriented” to “vulnerable road user (VRU)-oriented” testing paradigms. Kovaceva et al. [24] incorporated Bayesian inference into the safety benefit assessment of the AEB system, systematically integrating counterfactual simulations with real-world test data to overcome the reliance on a single data source in traditional methods. Zhao et al. [25] developed a multi-modal sequence model to predict the motion intentions (e.g., lane changes, acceleration, and deceleration) of surrounding vehicles and incorporated lateral trajectory planning into the emergency braking process, thus overcoming the restriction of traditional AEB systems to longitudinal braking. Schachner et al. [26] established a scenario catalogue generation and safety assessment method based on an open-source toolchain, systematically analyzed the effects of AEB systems on collision outcomes in vehicle–pedestrian conflict scenarios, and introduced quantitative analyses of collision configuration, including the impact speed attenuation rate and collision point offset, to support the development of injury prediction models for AEB systems. Jang et al. [27] used ADAS testing equipment and differential global positioning systems to evaluate AEB performance according to established evaluation protocols. Xu et al. [28] proposed an integrated Software-in-the-Loop (SIL) (MATLAB/Simulink) and Hardware-in-the-Loop (HIL) (IPG simulation platform, dSPACE controller, and pneumatic braking bench) verification system for two-axle passenger vehicles to improve the validation efficiency of AEB control algorithms; the SIL and HIL results were highly consistent in terms of vehicle speed and relative distance. Duan et al. [29] proposed a safety testing method that combined digital twin technology with fault tree analysis, enriching autonomous driving test scenarios and improving the credibility of evaluation results. Moreover, Wang [30], Gao [31], Fang [32], and Wang [33] applied VIL approaches to AEB performance validation. Among them, Wang [33] constructed a digital twin-based VIL testing system for intelligent vehicles and used it to develop and validate AEB systems. Real-world road experiments confirmed the effectiveness of the method, showing that it preserved the realism of traditional road testing while effectively mitigating the safety risks of direct on-road experiments.

Even so, existing performance evaluation frameworks for AEB systems had two major limitations. First, mainstream evaluation methods, such as E-NCAP and China-NCAP (C-NCAP), adopted static weight allocation, which made it difficult to capture the dynamic response of the system under coupled multi-condition scenarios. Second, traditional evaluation frameworks generally separated theoretical modeling from experimental validation, so assessment outcomes could diverge from actual road performance. With the increasing deployment of L2+ and L3 autonomous driving technologies, AEB systems must handle increasingly complex scenarios, including pedestrian crossings and multi-vehicle interactions. These two shortcomings therefore significantly constrain the accuracy and engineering applicability of current AEB performance evaluations.

To overcome these challenges, this study proposes a comprehensive VIL performance evaluation system for AEB systems based on the DWAHP. A two-level dynamic weighting architecture was established. At the criteria level, a bivariate dynamic weight function of vehicle speed and road surface adhesion coefficient was introduced to model the dynamic coupling of road environment parameters. At the scheme level, a five-dimensional indicator system (braking distance, braking deceleration, collision speed, AEB braking intervention time, and acceleration variation rate) was constructed, and a multi-condition adaptive evaluation model was developed through a nonlinear weight allocation algorithm. Compared with the traditional AHP, the DWAHP approach overcomes the limitations of fixed-weight mechanisms by establishing a dynamic mapping between the weight functions and the operating condition parameters. Additionally, based on the primary influencing factors of AEB performance (vehicle speed and road surface adhesion coefficient) and the DWAHP method, a dedicated AEB performance testing platform was developed for VIL testing, forming a complete technical chain from parameter acquisition and weight allocation to comprehensive evaluation. Compared with a real-world road test, the VIL-based evaluation showed a high degree of consistency in safety, reliability, and ride comfort. This work establishes a new methodological framework for the comprehensive evaluation of AEB systems and contributes to the performance evaluation of ADASs more broadly. The developed testing platform and evaluation model are expected to help improve intelligent driving safety evaluation systems.
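To make the two-level weighting idea concrete, the following Python sketch computes criteria-level weights with the standard AHP principal-eigenvector method and Saaty’s consistency ratio, then rescales them with a bivariate function of vehicle speed and adhesion coefficient. This section does not state the DWAHP weight function itself, so the exponential form of the illustrative `dynamic_weights` function, the reference point (60 km/h, μ = 0.85), the sensitivity coefficients, and the three-criterion pairwise matrix are all hypothetical placeholders rather than the model used in this study.

```python
import numpy as np

# Saaty's random consistency index (RI) for matrix orders 1-9
RANDOM_INDEX = {1: 0.0, 2: 0.0, 3: 0.58, 4: 0.90, 5: 1.12,
                6: 1.24, 7: 1.32, 8: 1.41, 9: 1.45}


def ahp_weights(pairwise):
    """Return (weights, consistency ratio) from a reciprocal pairwise matrix."""
    a = np.asarray(pairwise, dtype=float)
    n = a.shape[0]
    eigvals, eigvecs = np.linalg.eig(a)
    k = int(np.argmax(eigvals.real))           # index of lambda_max
    w = np.abs(eigvecs[:, k].real)
    w = w / w.sum()                            # normalize weights to sum to 1
    lam_max = eigvals[k].real
    ci = (lam_max - n) / (n - 1) if n > 1 else 0.0
    ri = RANDOM_INDEX[n]
    cr = ci / ri if ri > 0 else 0.0
    return w, cr


def dynamic_weights(base_w, speed_kmh, mu, sens_speed, sens_mu,
                    v_ref=60.0, mu_ref=0.85):
    """HYPOTHETICAL bivariate reweighting w_i(v, mu), renormalized to sum to 1."""
    base_w = np.asarray(base_w, dtype=float)
    x_v = (speed_kmh - v_ref) / v_ref          # relative speed above the reference
    x_mu = (mu_ref - mu) / mu_ref              # relative loss of adhesion
    factors = np.exp(np.asarray(sens_speed) * x_v + np.asarray(sens_mu) * x_mu)
    scaled = base_w * factors
    return scaled / scaled.sum()


# Criteria: safety, reliability, ride comfort (illustrative judgments only)
pairwise = [[1, 2, 4],
            [1 / 2, 1, 2],
            [1 / 4, 1 / 2, 1]]
base_w, cr = ahp_weights(pairwise)
w_dry_60 = dynamic_weights(base_w, 60, 0.85, [1.0, 0.5, -1.0], [1.0, 0.5, -0.5])
w_wet_100 = dynamic_weights(base_w, 100, 0.5, [1.0, 0.5, -1.0], [1.0, 0.5, -0.5])
```

The consistent example matrix yields base weights of 4/7, 2/7, and 1/7 with a consistency ratio of zero (a ratio below 0.1 is the usual AHP acceptance threshold). At the reference point the base weights are returned unchanged, while at 100 km/h on a μ = 0.5 surface the hypothetical sensitivities shift weight toward safety and away from ride comfort.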

3. Experimental Setup and VIL Test

The AEB system is an indispensable component of collision warning and avoidance technologies as well as of autonomous driving systems. At present, AEB performance is evaluated primarily through simulation testing and real-world road testing. Simulation testing, a major focus of recent research, allows evaluation under various extreme and idealized conditions. However, its results are highly subjective, because they depend heavily on how the control models are constructed and how critical parameters are set; simulation results are therefore not regarded as a primary reference. In contrast, real-world road testing yields highly accurate results consistent with actual driving environments and can comprehensively account for factors such as environmental conditions, road surface characteristics, and driver behavior. Nevertheless, it entails significant safety risks, offers low repeatability for specific scenarios, and is relatively inefficient.

In view of the above analysis, a performance testing platform for AEB systems was designed and developed based on the DWAHP comprehensive performance evaluation model and the primary influencing factors of AEB performance (vehicle speed and road surface adhesion coefficient). VIL experiments were then carried out on this platform, providing a new and effective approach for evaluating AEB performance.

3.1. Development of Experimental Equipment

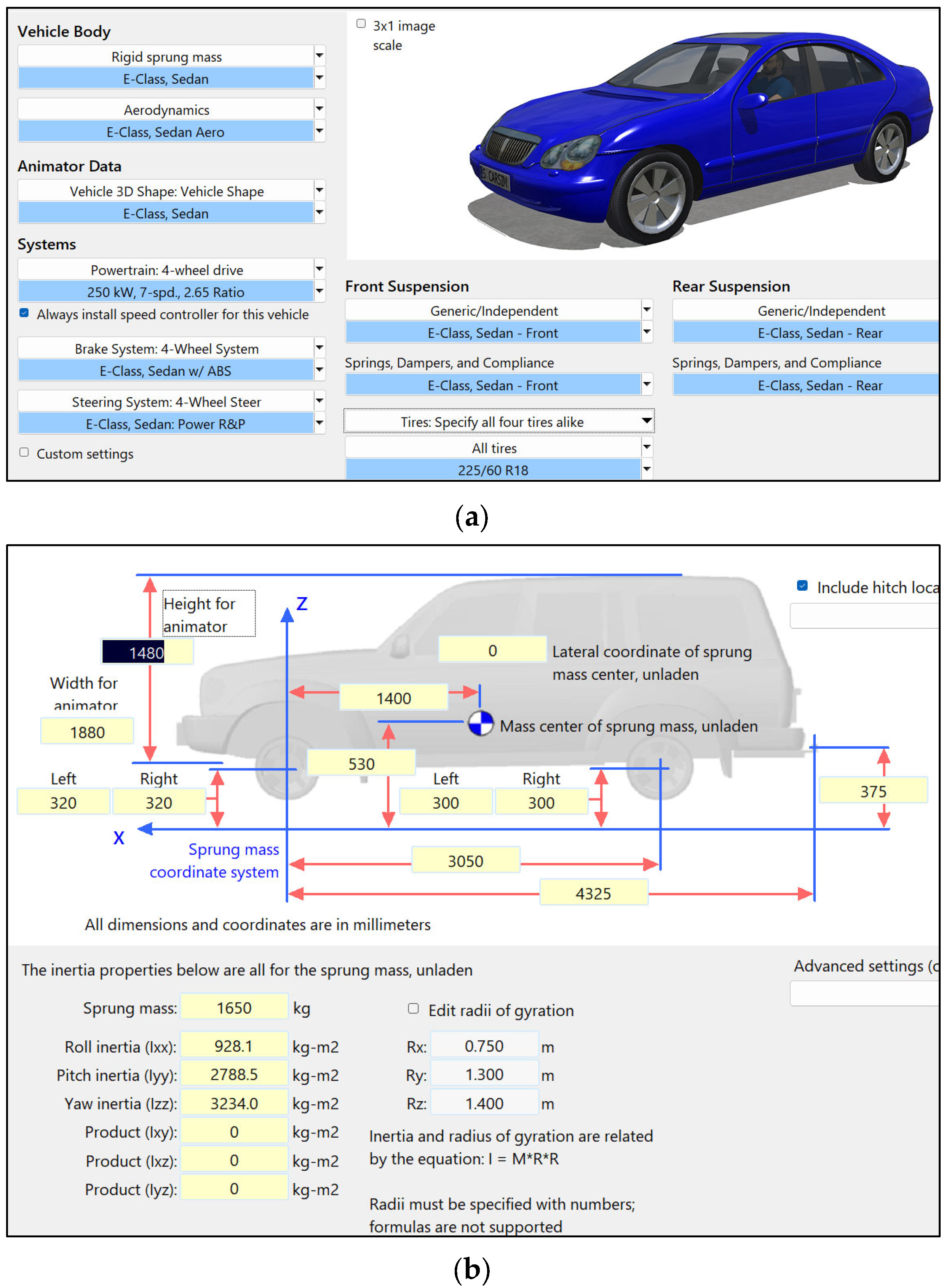

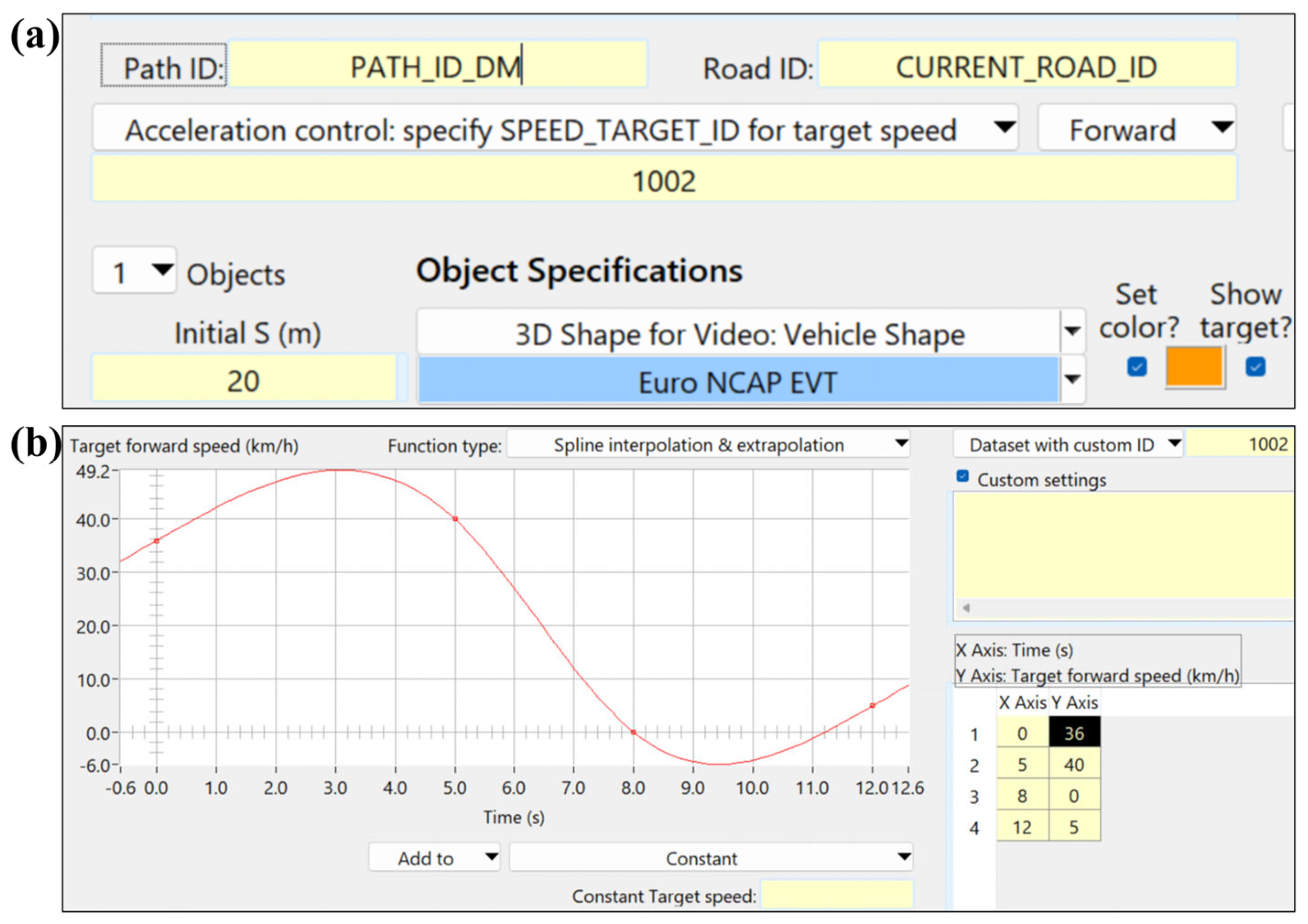

The experimental equipment was designed and developed according to the detection requirements and the primary influencing factors of AEB performance (vehicle speed and road surface adhesion coefficient). The platform was equipped with several key functions: an adjustable wheelbase to accommodate different vehicle models, an adjustable center distance between the primary and secondary rollers to simulate various road adhesion conditions, and a dual-inertia coupling system combining a mechanical flywheel and an alternating current (AC) dynamometer, enabling safe operation and accurate data acquisition at low, medium, and high vehicle speeds. The platform consisted mainly of two subsystems: the mechanical body and the measurement and control system. Using the DWAHP comprehensive performance evaluation model together with the VIL testing method, the performance of the AEB system was then assessed systematically.

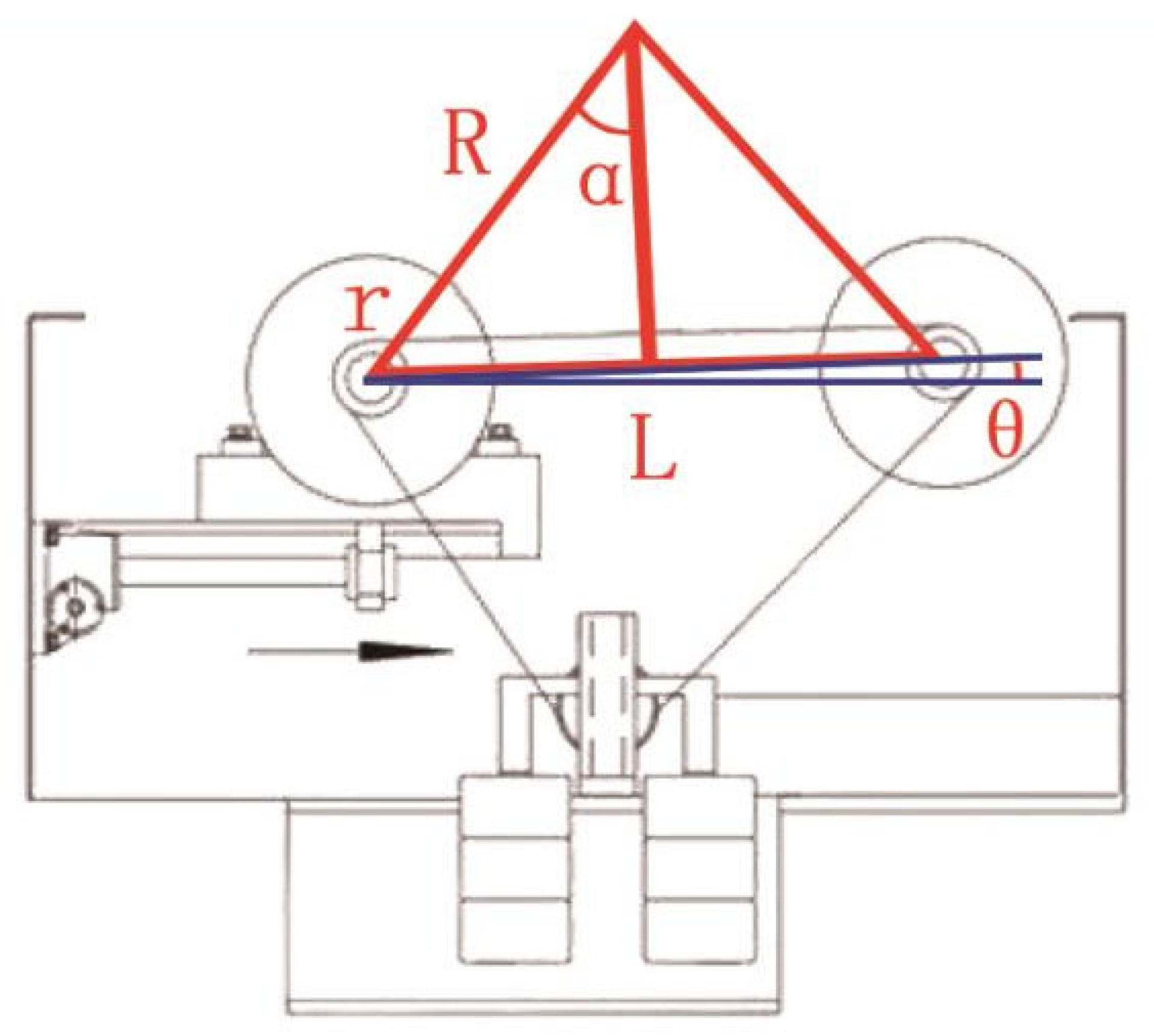

The mechanical structure of the testing platform consisted primarily of a roller assembly, a chain transmission and tensioning system, a flywheel assembly, an AC dynamometer, a lifting device, an integrated linkage assembly, and protective components. The overall mechanical layout is illustrated in Figure 9, and the specifications of the major testing components are summarized in Table 11. The platform adopted a four-axle, eight-roller configuration with an adjustable wheelbase of 1.8–5.0 m. All axles operated synchronously with a synchronization error of less than 2 km/h, and the maximum detectable vehicle speed was 120 km/h. The translational inertia of the vehicle body could be simulated dynamically, with the applied torque deviating by no more than 1 N·m from the theoretical value and a time delay of less than 10 ms, which significantly improved measurement accuracy. The center distance between the primary and secondary rollers was adjustable from 380 mm to 680 mm, allowing various road surface conditions, such as dry asphalt, wet, and icy surfaces, to be simulated by changing the equivalent adhesion coefficient (as depicted in Figure 10, with the principles described by Equations (24) and (25)) [34]. The developed platform met the performance requirements for chassis dynamometers specified in Section 6.3.2.3 of GB/T 44500-2024, “Inspection Regulations for the Operational Safety Performance of New Energy Vehicles” [35].

where

r is the radius of the primary and secondary rollers (mm);

L is the center distance between the primary and secondary rollers (mm);

θ is the angular difference in height between the rotational axes of the primary and secondary rollers (°);

R is the radius of the vehicle wheel (mm); and

α is the installation angle of the vehicle wheel (°).
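Equations (24) and (25) are not reproduced in this section. As a geometric sanity check on how the center distance L changes the way the wheel seats on the rollers, the sketch below assumes equal roller radii and equal axis heights (θ = 0) and takes α as the angle between the vertical through the wheel center and the line to either roller center, so that sin α = L / (2(R + r)). The per-roller normal load uses simple statics with no braking force applied. Both are illustrative simplifications, not the paper’s adhesion model; the function names and the wheel and roller dimensions in the example are hypothetical.

```python
import math


def installation_angle_deg(R_mm, r_mm, L_mm):
    """Seating angle alpha (deg) of a wheel on two equal rollers at equal height.

    The wheel and roller centres are R + r apart, and each roller centre is
    offset horizontally by L / 2 from the wheel centre: sin(alpha) = L / (2(R + r)).
    """
    s = L_mm / (2.0 * (R_mm + r_mm))
    if not 0.0 < s < 1.0:
        raise ValueError("wheel cannot rest on both rollers with these dimensions")
    return math.degrees(math.asin(s))


def normal_load_per_roller(wheel_load_n, alpha_deg):
    """Static normal force on each roller with no braking force: 2 N cos(alpha) = W."""
    return wheel_load_n / (2.0 * math.cos(math.radians(alpha_deg)))


# Hypothetical wheel radius 330 mm and roller radius 120 mm across the 380-680 mm range
angles = [installation_angle_deg(330, 120, L) for L in (380, 530, 680)]
```

Widening L from 380 mm to 680 mm increases α and therefore the static normal load on each roller for the same wheel load, which is consistent with the idea that adjusting L alters the wheel–roller contact conditions and hence the equivalent adhesion; the exact relationship used on the platform is the one given by Equations (24) and (25).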

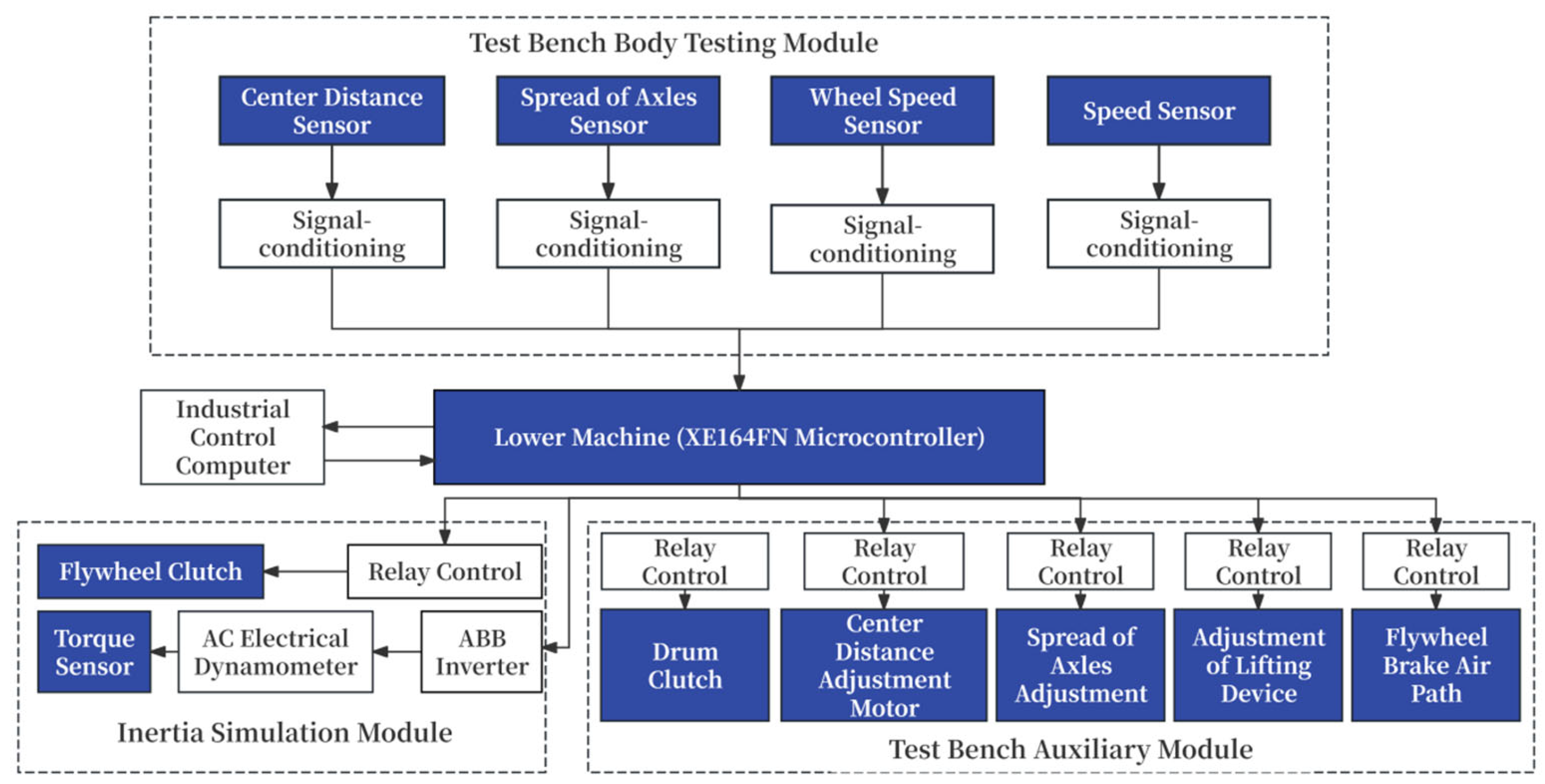

The measurement and control system was the core of the AEB performance testing platform and comprised two main parts: the upper computer system and the lower computer system. The upper computer system was primarily responsible for vehicle information registration, vehicle detection, issuing control commands to the test bench, and processing and storing experimental results. The lower computer system consisted mainly of a microcontroller unit, sensors, relay control units, and a detection indication circuit. The main microcontroller was the XE164FN, a 16-bit microcontroller from Infineon Technologies’ XE166 series. The overall structure of the measurement and control system is illustrated in Figure 11, and the testing procedure is depicted in Figure 12.

3.2. Test Preparation

Figure 13 illustrates the real-time display of critical parameters and system statuses on the upper computer interface, which facilitated continuous monitoring and ensured the reliability of the experimental operations.

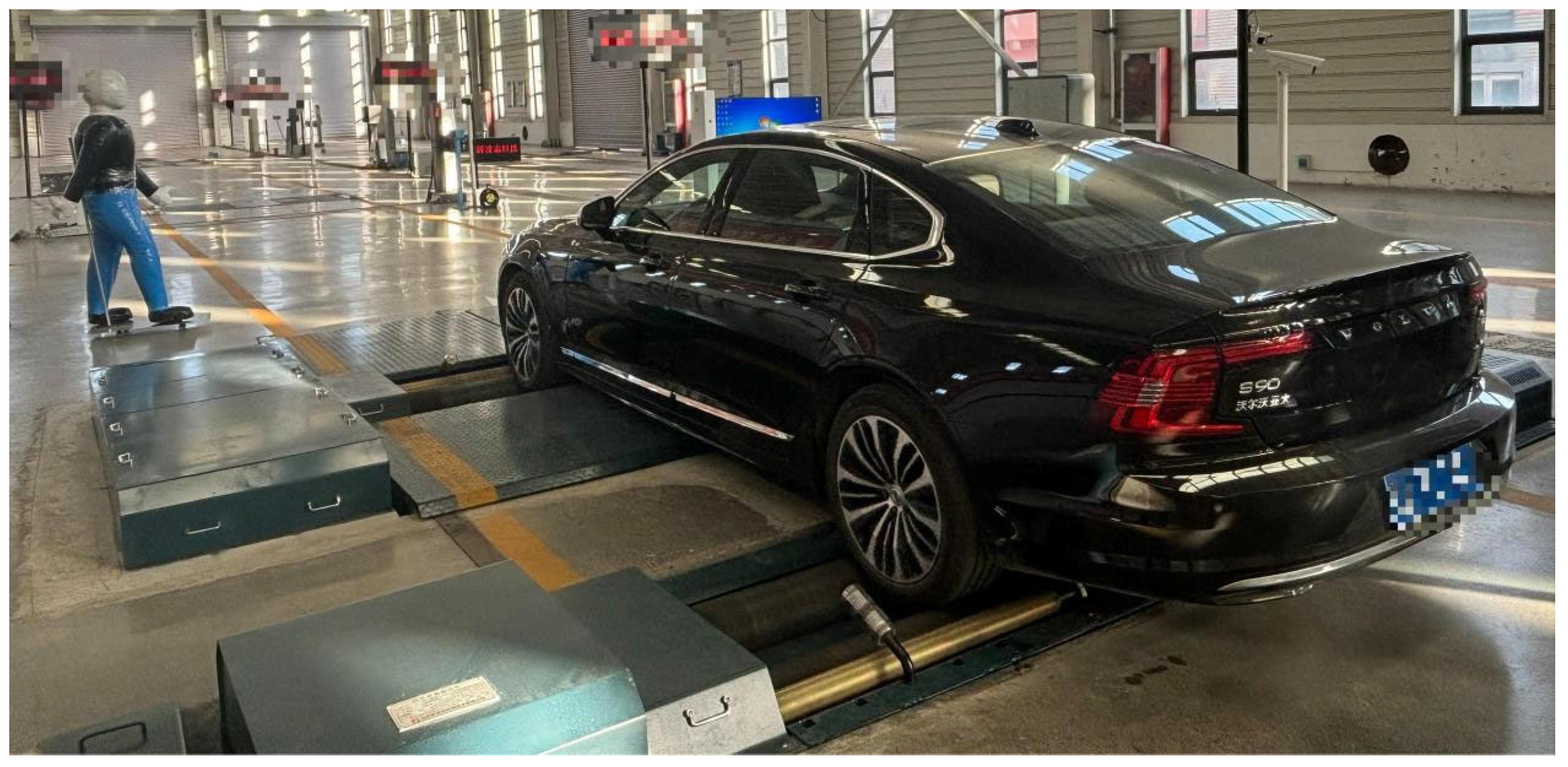

The Volvo S90L was selected as the test vehicle. Volvo, a brand known for its leading position in automotive safety, used the City Safety system as the AEB control strategy on this model. The key specifications of the vehicle are listed in Table 12.

A movable adult dummy was employed as the target object. The dummy’s external surface was designed to closely replicate the morphological features of an actual pedestrian, with all constituent parts detectable by the sensors of the vehicle. It was capable of realistically emulating pedestrian motion, thereby satisfying the demands of diverse testing scenarios and ensuring the validity of the experimental results.

2. Development of testing scenarios and operational conditions.

The testing scenario was established in sufficient daylight, with a VRU crossing the roadway at an intersection. The corresponding operational conditions are detailed in Table 13, and the layout of the testing scenario is depicted in Figure 14.

3. Equivalence of translational mass and mechanical inertia.

During vehicle testing on the test platform, it was necessary to simulate the inertial resistance experienced by the vehicle during actual road driving by means of the rotational inertia of the bench. This process was based on the principle of kinetic energy equivalence, requiring that the translational mass be matched to the mechanical inertia. To guarantee the accuracy and reliability of the experimental results, the curb mass m (comprising the vehicle mass and the driver’s mass) of the vehicle was converted into the equivalent rotational inertia of the rollers and flywheels prior to testing.

Upon weighing, the curb mass m was found to be 1864 kg. The test platform provided a fixed mechanical inertia equivalent to 1021 kg. Three independently controllable mechanical flywheels, each contributing 220 kg of equivalent mass, were available, together with an AC dynamometer whose continuously adjustable torque control could simulate translational inertia from −220 kg to 220 kg. Accordingly, all three flywheels were engaged during preparation (660 kg of equivalent mass in total), and the remaining 183 kg (1864 − 1021 − 660) was compensated through electrical inertia adjustment, achieving an accurate match between the translational mass of the vehicle and the mechanical inertia of the test platform.
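The inertia-matching step reduces to simple arithmetic. The Python sketch below (function names are illustrative) selects how many 220 kg flywheels to engage so that the remainder falls within the dynamometer’s ±220 kg electrical range, and converts the electrically simulated mass into a roller torque via kinetic-energy equivalence (J = m r², hence T = m a r). The roller radius and deceleration in the example are hypothetical, and the chain transmission ratio between the dynamometer and the rollers is ignored.

```python
def match_inertia(test_mass_kg, base_kg=1021.0, flywheel_kg=220.0,
                  n_flywheels=3, electric_range_kg=220.0):
    """Return (flywheels engaged, mass to simulate electrically in kg)."""
    best = None
    for n in range(n_flywheels + 1):
        remainder = test_mass_kg - base_kg - n * flywheel_kg
        # keep the option with the smallest electrical share the dynamometer can cover
        if abs(remainder) <= electric_range_kg and (best is None or abs(remainder) < abs(best[1])):
            best = (n, remainder)
    if best is None:
        raise ValueError("test mass outside the platform's simulation range")
    return best


def equivalent_inertia(mass_kg, roller_radius_m):
    """Rotational inertia at the roller with the same kinetic energy: J = m r^2."""
    return mass_kg * roller_radius_m ** 2


def electric_inertia_torque(mass_kg, decel_ms2, roller_radius_m):
    """Roller torque emulating mass_kg at linear deceleration decel_ms2: T = J (a / r) = m a r."""
    return mass_kg * decel_ms2 * roller_radius_m


n_fw, m_elec = match_inertia(1864.0)
torque = electric_inertia_torque(m_elec, 8.0, 0.2)   # hypothetical a = 8 m/s^2, r = 0.2 m
```

With the stated values (1864 kg test mass, 1021 kg fixed inertia), all three flywheels are engaged and 183 kg is left for electrical simulation, which lies inside the ±220 kg range.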

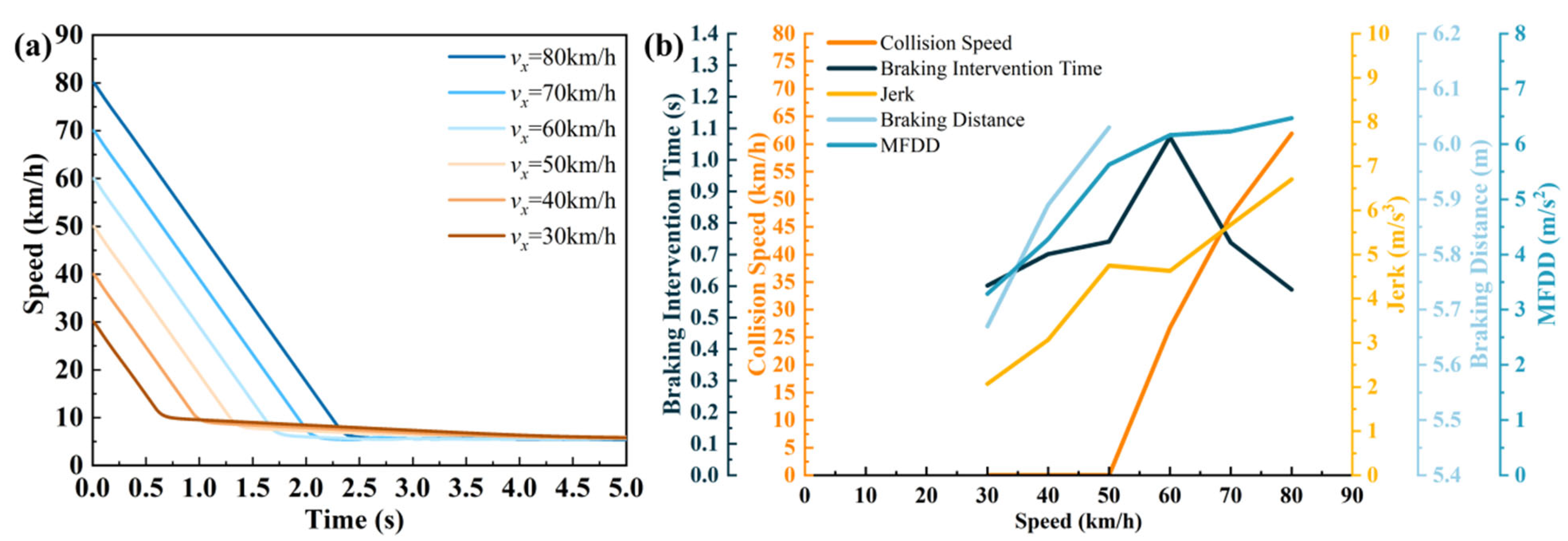

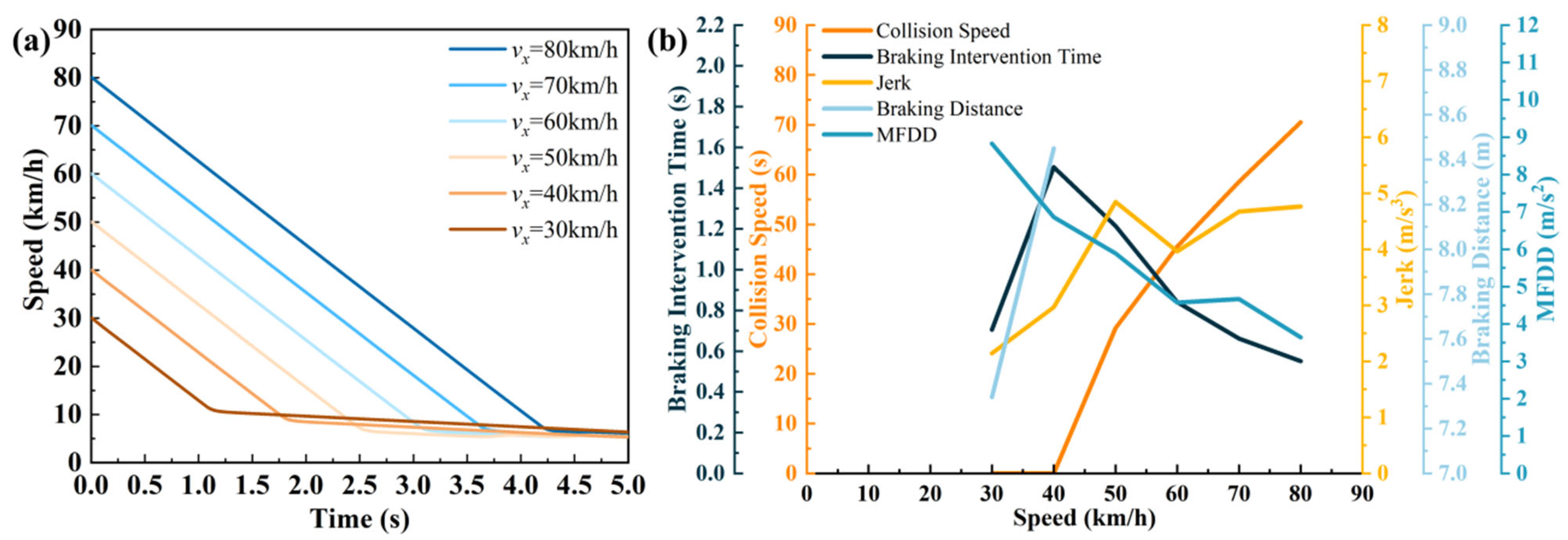

3.3. Testing

The test platform recorded real-time data, including vehicle speed, wheel speed, braking deceleration, braking time, and braking distance. These data served as the scoring basis for the five indicators in the scheme layer of the DWAHP model: braking distance, braking deceleration, collision speed, AEB braking intervention time, and acceleration variation rate. To characterize both the average level and the stability of deceleration during braking, braking deceleration was expressed as the MFDD; a larger MFDD indicates stronger braking performance. The average acceleration variation rate (Jerk) describes how sharply acceleration changes over time and serves as a core indicator of motion smoothness and ride comfort: a lower Jerk corresponds to smoother acceleration transitions and less perceived jolting or surging by occupants, so it was output as a key metric. During each test, continuous in-vehicle video recording was used to monitor warning activation, braking initiation, and real-time speed changes, ensuring the accuracy of the measured collision speed and AEB braking intervention time.
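The two braking- and comfort-related indicators can be computed from recorded traces as sketched below. The MFDD follows the UN ECE R13-H definition, MFDD = (v_b² − v_e²) / (25.92 (s_e − s_b)), with v_b = 0.8 v₀ and v_e = 0.1 v₀ in km/h and distances in meters; whether the platform uses exactly these speed thresholds is an assumption. The average acceleration variation rate is taken here as the sample mean of |da/dt| (equal to the time average for uniform sampling), which is also an assumed definition; the function names are illustrative.

```python
import numpy as np


def mfdd(speed_kmh, dist_m):
    """Mean fully developed deceleration (m/s^2), UN ECE R13-H style."""
    v = np.asarray(speed_kmh, dtype=float)
    s = np.asarray(dist_m, dtype=float)
    v0 = v[0]
    vb, ve = 0.8 * v0, 0.1 * v0
    # Speed falls during braking; reverse so np.interp sees increasing x values
    sb = np.interp(vb, v[::-1], s[::-1])
    se = np.interp(ve, v[::-1], s[::-1])
    return (vb ** 2 - ve ** 2) / (25.92 * (se - sb))


def mean_abs_jerk(t_s, speed_ms):
    """Mean |da/dt| (m/s^3) from a speed trace, via second-order finite differences."""
    t = np.asarray(t_s, dtype=float)
    accel = np.gradient(np.asarray(speed_ms, dtype=float), t, edge_order=2)
    jerk = np.gradient(accel, t, edge_order=2)
    return float(np.mean(np.abs(jerk)))
```

For a synthetic trace with a constant 8 m/s² deceleration, the sketch returns an MFDD of about 8 m/s² and a mean jerk of essentially zero, a quick way to validate the processing chain before applying it to measured data.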

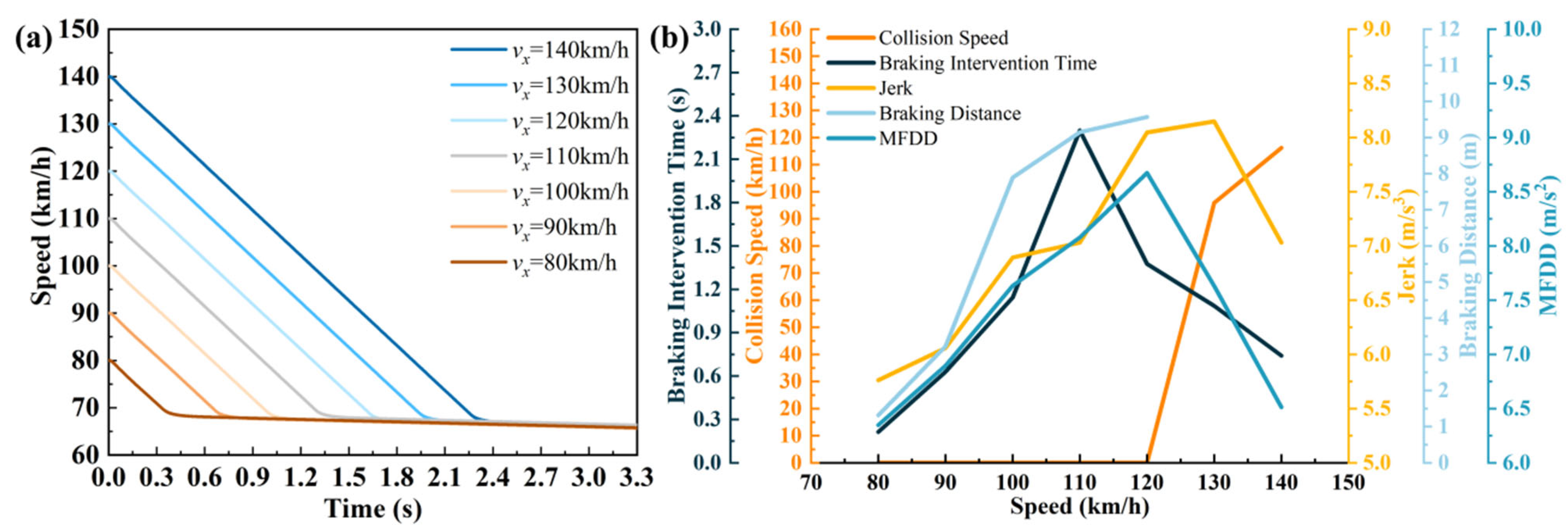

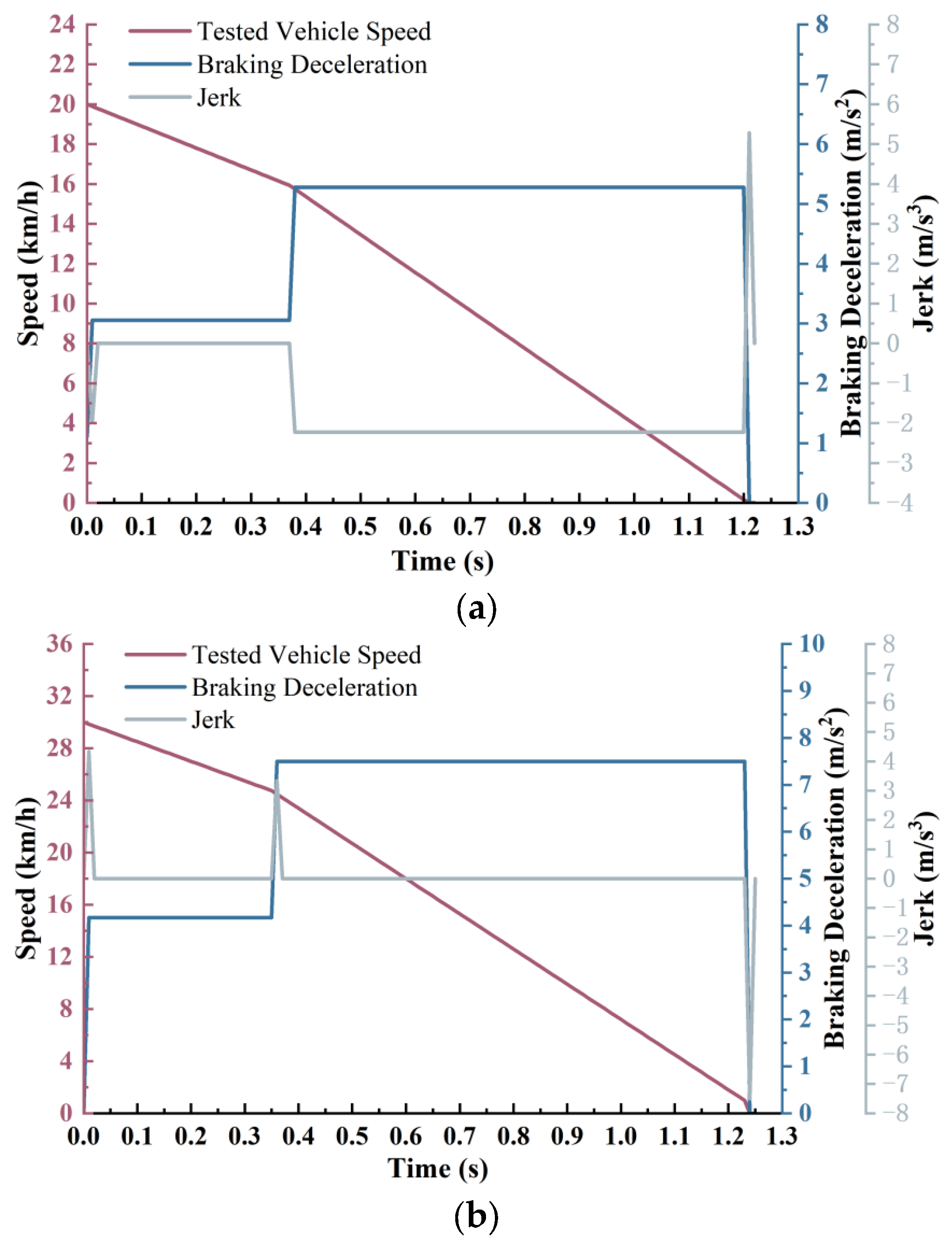

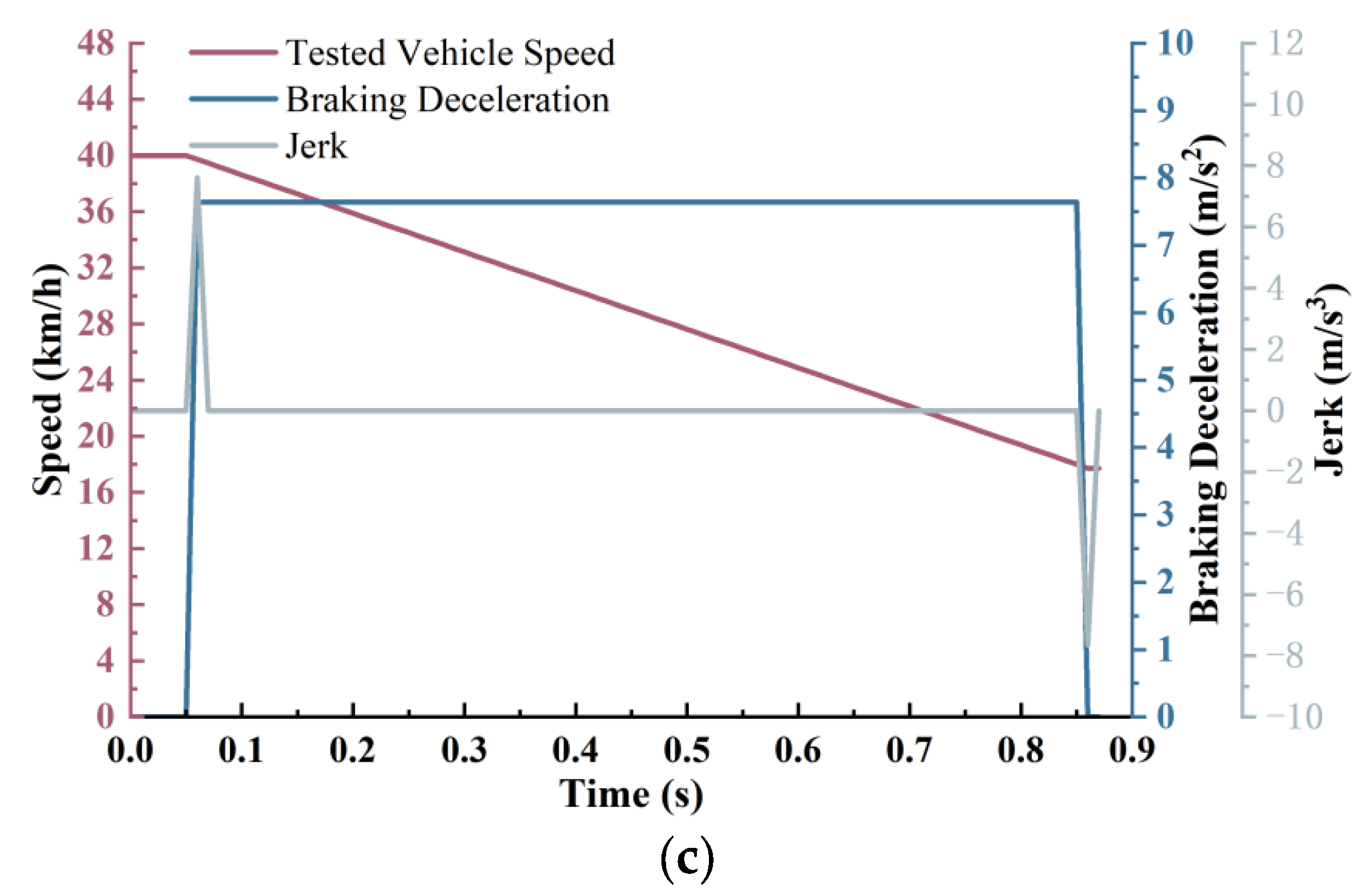

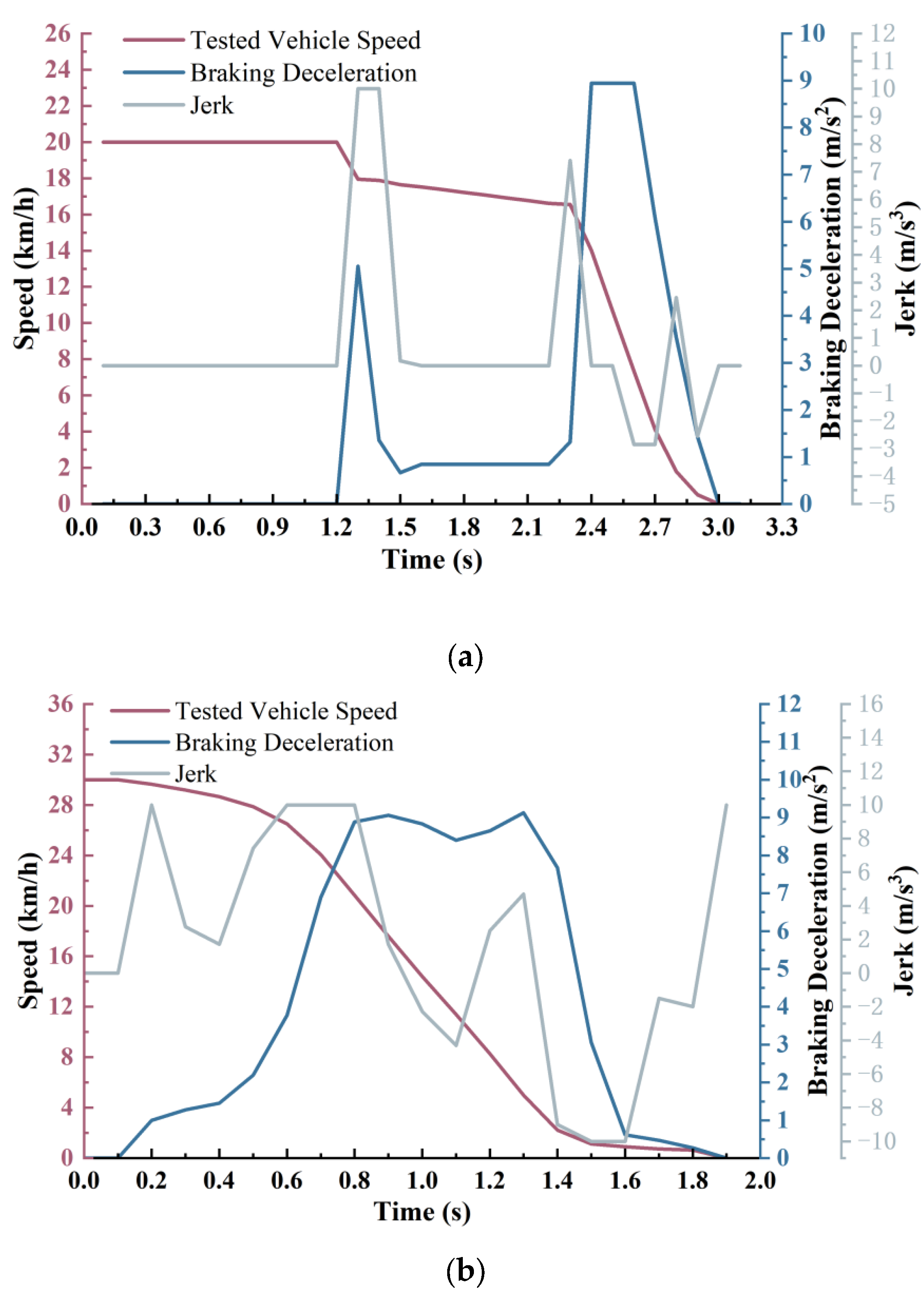

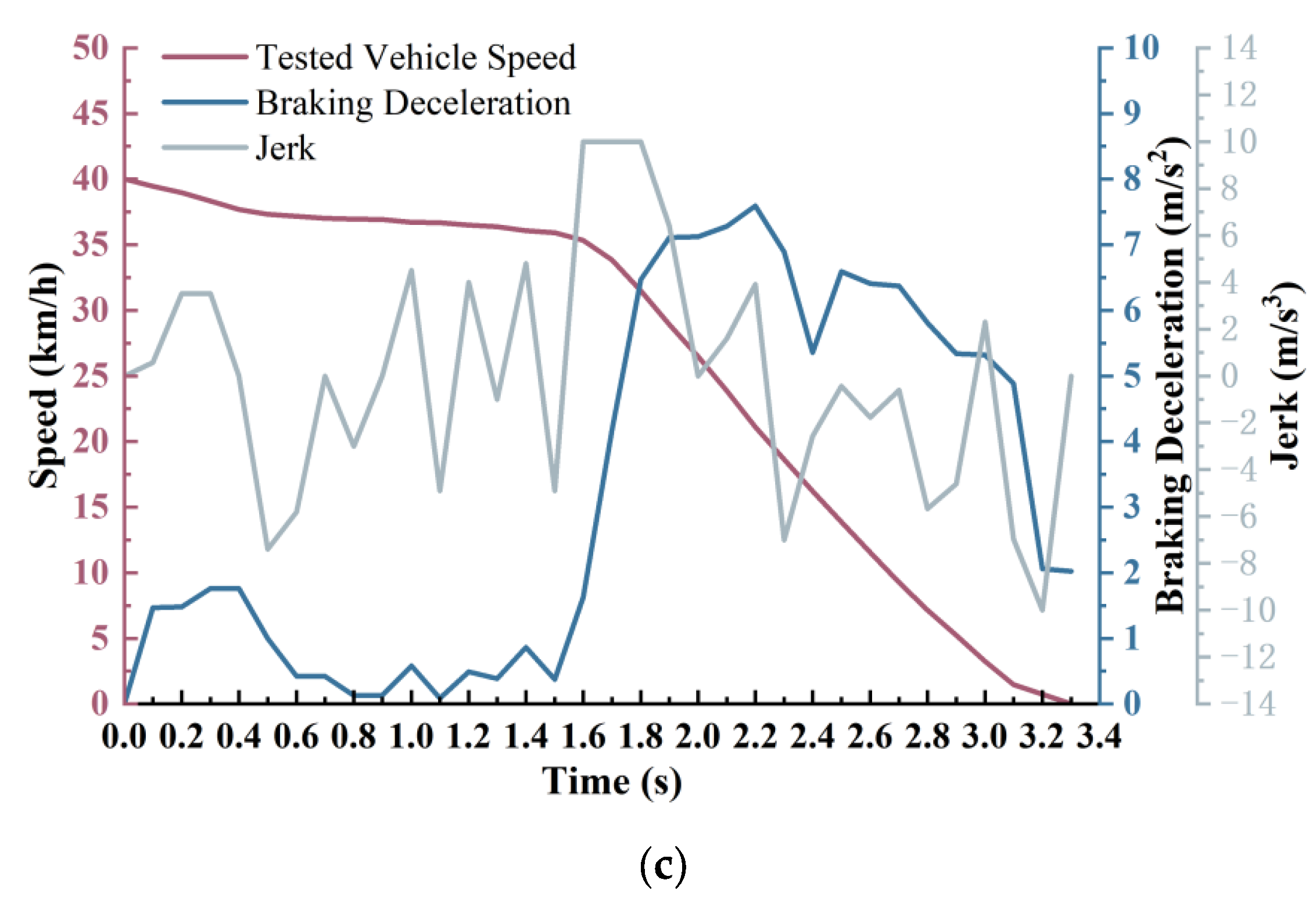

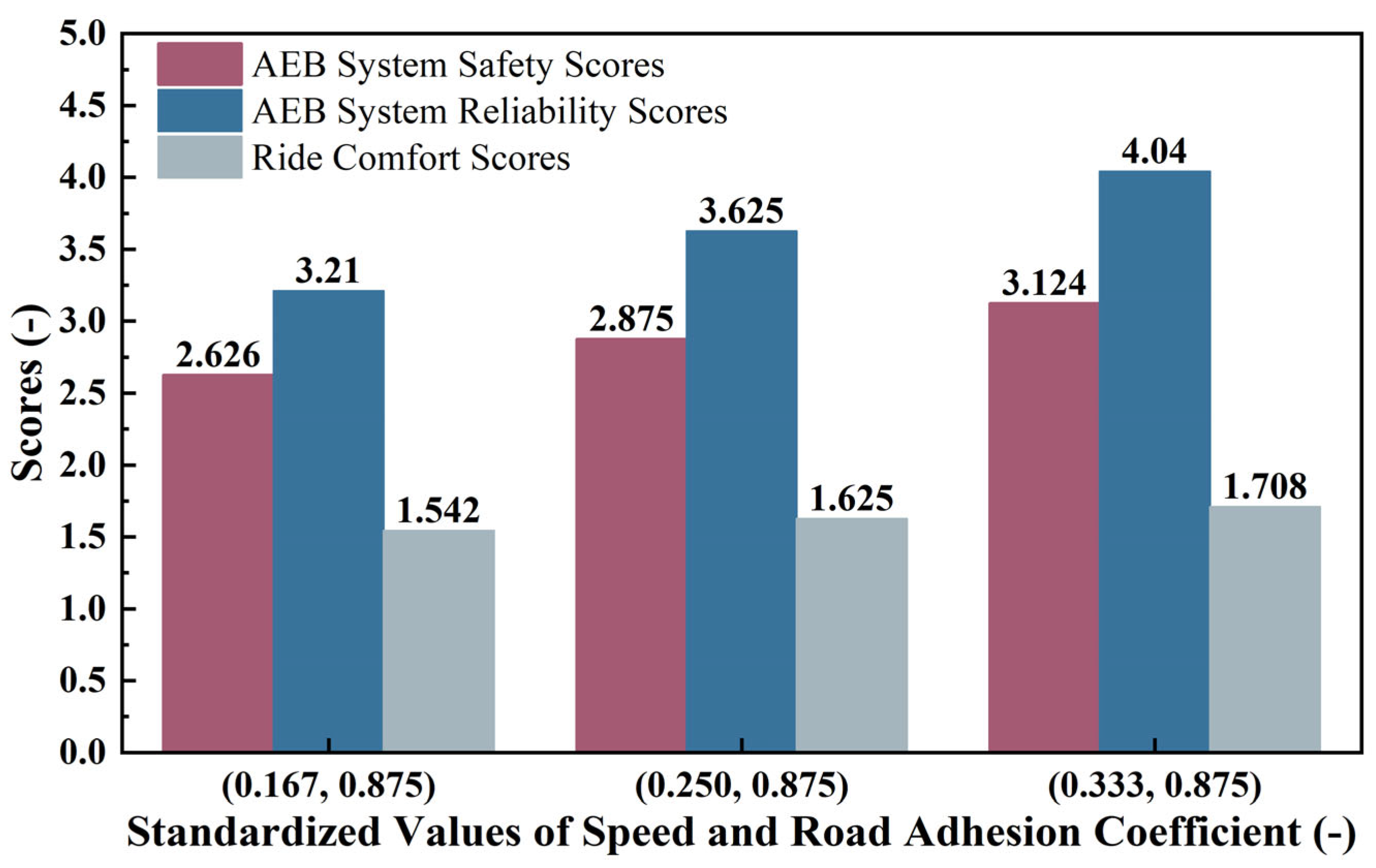

To ensure data reliability, each operational condition within every testing scenario was tested three times. The collected data were subsequently extracted for analysis, and the testing outcomes were assessed accordingly. The results under various conditions are summarized in Table 14 and depicted in Figure 15.

As shown in Table 14 and Figure 15, both the MFDD and the Jerk increased with vehicle speed, degrading braking smoothness and ride comfort. At vehicle speeds of 20 km/h and 30 km/h, the AEB system intervened relatively early and braked with high intensity, avoiding the collision. However, in the emergency scenario of a sudden pedestrian crossing at 40 km/h, the response time after the potential collision risk was detected fell to 0.81 s. This was insufficient to complete the collision avoidance maneuver, and the vehicle struck the target at 20.7 km/h.
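Why a higher initial speed can turn an avoided collision into an impact follows from constant-deceleration kinematics: stopping distance grows with v₀², so for the same distance to the conflict point and the same deceleration, the residual speed rises quickly with v₀. The sketch below models a pure delay followed by constant full deceleration; the function name and the delay, distance, and deceleration values are hypothetical and are not fitted to Table 14.

```python
import math


def impact_speed_kmh(v0_kmh, distance_m, decel_ms2, delay_s=0.0):
    """Speed (km/h) at the conflict point after a delay and then constant deceleration."""
    v0 = v0_kmh / 3.6
    braking_dist = distance_m - v0 * delay_s   # distance left once full braking begins
    if braking_dist <= 0.0:
        return v0_kmh                           # conflict point reached before braking starts
    v_sq = v0 ** 2 - 2.0 * decel_ms2 * braking_dist
    return 3.6 * math.sqrt(v_sq) if v_sq > 0.0 else 0.0


# Same hypothetical geometry and braking at two initial speeds
slow = impact_speed_kmh(20.0, 8.0, 8.0, delay_s=0.2)
fast = impact_speed_kmh(40.0, 8.0, 8.0, delay_s=0.2)
```

Doubling v₀ from 20 km/h to 40 km/h in this toy case turns a full stop into an impact, mirroring the qualitative trend reported above.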

5. Conclusions

In this study, a comprehensive performance evaluation method for AEB systems in ICVs based on a DWAHP was proposed. The limitations of conventional static weighting methods were overcome by establishing a dynamic mapping relationship among scenario characteristics, indicator weights, and performance scores. A reusable and engineering-oriented testing framework was thereby developed to support the efficient validation of intelligent driving systems. The principal conclusions are summarized as follows:

The DWAHP-based comprehensive performance evaluation model for the AEB system was constructed. To meet the demand for efficient and reliable evaluation, a co-simulation platform integrating MATLAB/Simulink R2020a and CarSim 19.1 was established, providing a closed-loop testing environment comprising vehicle dynamics models, sensor models, and control algorithms. Typical traffic scenarios were simulated: urban roads (vehicle speeds of 30–60 km/h with surface adhesion coefficients of 0.5 and 0.85), suburban roads (vehicle speeds of 30–80 km/h with surface adhesion coefficients of 0.5 and 0.85), and highways (vehicle speeds of 80–120 km/h with a surface adhesion coefficient of 0.85). Five key performance parameters, namely braking distance, braking deceleration, collision speed, AEB braking intervention time, and Jerk, were systematically collected under various speed and surface conditions. A multi-dimensional performance evaluation matrix was then constructed with the DWAHP model, providing a solid data foundation for the quantitative analysis of the comprehensive performance of the AEB system;

A specialized AEB system testing platform was developed. Based on the major influencing factors (vehicle speed and surface adhesion coefficient) and the DWAHP evaluation model, a dedicated AEB testing platform integrating a mechanical system and a measurement and control system was developed. The platform featured an adjustable wheelbase to accommodate different vehicle types, an adjustable center distance between the primary and secondary rollers to simulate varying road adhesion levels, and a dual-inertia coupling mechanism combining mechanical flywheels and an AC dynamometer. These features ensured accurate and safe data acquisition across low-, medium-, and high-speed conditions. The platform was verified to comply with the chassis dynamometer performance requirements in Section 6.3.2.3 of GB/T 44500-2024, “Inspection Regulations for the Operational Safety Performance of New Energy Vehicles”;

The VIL-based AEB system performance evaluation method was developed and verified. A comprehensive performance evaluation method for the AEB system, combining a VIL test with the DWAHP model, was established and validated against a real-world road test. The comprehensive performance scores obtained from the VIL test and the road test were 13.8341 and 13.9048, respectively. The deviations in safety (VIL score: 1.7275; road test score: 1.9108), reliability (VIL score: 1.6357; road test score: 1.8835), and ride comfort (VIL score: 1.8912; road test score: 1.8412) were 0.1833 (9.6%), 0.2478 (13.2%), and 0.05 (2.7%), respectively. The overall deviation in the comprehensive performance score was 0.0707, a deviation rate of 0.51%, indicating good consistency between the VIL test and the real-world road test. These findings support the effectiveness and feasibility of the proposed DWAHP-based VIL testing framework for assessing the comprehensive performance of the AEB system.
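The deviation figures above can be recomputed directly from the reported scores. The sketch below takes the road-test score as the reference for each relative deviation (the convention that reproduces the stated 0.51% overall deviation); the dictionary only restates the scores given in this section, and the function name is illustrative.

```python
# VIL and real-world road test scores as reported in this section
SCORES = {
    "safety": (1.7275, 1.9108),
    "reliability": (1.6357, 1.8835),
    "ride comfort": (1.8912, 1.8412),
    "comprehensive": (13.8341, 13.9048),
}


def deviation(vil, road):
    """Absolute deviation and relative deviation (%) with the road test as reference."""
    diff = abs(vil - road)
    return diff, 100.0 * diff / road


for name, (vil, road) in SCORES.items():
    diff, pct = deviation(vil, road)
    print(f"{name:>13}: {diff:.4f} ({pct:.2f}%)")
```

Rounded to one decimal place, the safety, reliability, and ride comfort deviations come out at 9.6%, 13.2%, and 2.7%, and the comprehensive score deviation at 0.51%.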

In conclusion, the DWAHP-based VIL evaluation methodology was shown to address the limitations of conventional AEB performance evaluation, in particular static weighting, limited adaptability to varying operating conditions, and the lack of integration between traditional evaluation frameworks and experimental validation. The proposed methodology and associated outcomes are applicable in several practical settings. They can be implemented in end-of-line vehicle inspection at factories, where the repeatability, safety, reliability, and scenario simulation capabilities of test benches can be fully exploited to improve inspection efficiency and reduce costs. They are also suitable for vehicle testing stations and research institutions, providing a reliable evaluation tool for both industrial quality control and academic research.

Nevertheless, the study has some limitations. The dynamic weighting model was calibrated using offline simulation data, and its capacity for real-time correction from online road test feedback remains limited. Future research could therefore investigate reinforcement learning-based adaptive weighting mechanisms to improve real-time responsiveness and model robustness. Further work should also improve the generalization of virtual scenarios, the precision of hardware testing, and the adaptability of the evaluation system, to advance the engineering deployment and practical application of intelligent driving system evaluation technologies.