1. Introduction

Digital accessibility is essential to ensuring that as many people as possible can use technology and that people with disabilities can use digital applications, web platforms, and devices effectively. Combined with assistive technology (AT), which spans a wide variety of applications and devices, accessible design can greatly improve the functional abilities and daily lives of people with disabilities. For people who are blind or visually impaired, AT includes Braille displays, screen readers, voice recognition software, and more. The development of AT has been crucial to providing blind and visually impaired users with the tools they need to interact effectively with digital content and to navigate their physical environment more independently.

Recent advances in machine learning and artificial intelligence (AI) have expanded the capabilities of many long-established assistive technologies, enabling features such as real-time object detection and contextual understanding through accessible interfaces. These developments are vital to promoting independence and improving the quality of life of blind users [1,2].

Object detection has advanced significantly with the emergence of large datasets and deep learning models. One prominent object detection model is EfficientDet-Lite2, whose lightweight architecture is designed for efficient performance on mobile devices. The COCO (Common Objects in Context) dataset is widely used for training and evaluating object detection models. Because it includes a wide and diverse set of everyday objects, it is well suited to developing applications that require real-time object detection in a variety of environments.

For blind users specifically, smartphone applications with object detection capabilities can be a powerful tool for interacting with their environment. Real-time object detection gives users immediate feedback about their surroundings, which can make their navigation and interaction with the physical environment more efficient and safe. This technology can be particularly beneficial in unfamiliar settings, where it provides important information about obstacles and other objects in the user's path [3].

The sense of touch is an important channel of information for everyone, and especially for blind users. In assistive technology, haptic feedback can provide valuable signals to blind users, enhancing their ability to perceive and interact with digital content and physical environments. When integrated with object detection, haptic feedback can provide immediate and intuitive cues about detected objects, improving the user's spatial awareness and confidence in navigating their surroundings.

The primary goal of this study is to develop an application that uses object detection to help blind users locate objects in their environment. The application combines visual information from the camera with audio feedback and haptic interaction, creating a multimodal feedback system that supports the diverse needs of blind users. This approach aligns with the principles of universal design, which support the creation of products and environments that can be used by all people, to the greatest extent possible, without the need for adaptation or specialized design.

In addition, this research evaluates the usability, accessibility, and user experience of the application through a structured questionnaire administered to both blind users and sighted people simulating blindness. A key novelty of the application is its use of haptic cues when users interact with detected objects. This combination of technologies aims to enhance users' ability to explore and understand their environment, offering a practical solution to some of the challenges faced by visually impaired people.

This study contributes to the field of assistive technology by demonstrating how advanced artificial intelligence models and innovative feedback mechanisms can be incorporated into accessible mobile applications. The findings of this study provide valuable insights into user preferences and the effectiveness of different types of feedback in improving usability and accessibility. By focusing on the needs and experiences of both blind and sighted users simulating blindness, this research highlights the importance of inclusive design in technology development.

The remainder of this paper is organized as follows. Section 2 reviews related work on object detection technology and assistive applications for visually impaired users. Section 3 describes the methodology used to develop and evaluate the application, including its technical implementation and user interface design. Section 4 presents the results of the usability evaluation, highlighting the key findings and user feedback. Section 5 discusses the novelty and implications of these findings, the usefulness of the haptic feedback feature, and directions for future research. Finally, Section 6 concludes the paper, summarizing the contributions and potential impact of this study.

2. Related Work

The development of assistive technologies, and particularly those aimed at improving the independence and quality of life of visually impaired individuals, has gained significant importance in recent years. As technology continues to advance, the integration of artificial intelligence (AI) and machine learning into these technologies has opened new doors for innovative applications. One of the most important areas of focus has been the use of object detection technologies in order to assist individuals with visual impairments in recognizing their environment and moving within it.

Object detection, which involves recognizing and classifying objects within an image or video stream, has evolved through deep learning models such as EfficientDet-Lite2, which is known for its efficiency on mobile devices [4]. Such models are trained on large datasets such as COCO (Common Objects in Context) [5], which contains a wide variety of labeled objects commonly encountered in everyday life. By incorporating these object detection systems into mobile applications, developers have been able to create accessible tools that offer real-time object identification, allowing visually impaired users to interact with their surroundings more effectively and confidently.

At the same time, there has been increasing emphasis on designing accessible interfaces and integrating multimodal feedback, such as haptic and auditory feedback, into assistive technologies. Haptic feedback, in particular, has proven to be an important feature for visually impaired users, providing immediate tactile confirmation when they interact with digital or physical elements [6]. This approach has become increasingly popular in applications for visually impaired individuals because it allows users to confirm their interactions without relying on visual feedback. Similarly, audio notifications offer an additional layer of accessibility, giving users verbal cues about their environment or actions. By combining haptic and auditory feedback, developers can create a more comprehensive and inclusive user experience in which visually impaired users receive complementary cues about their surroundings.

Several studies have demonstrated the value of combining AI-based object detection with accessible interface design. For instance, wearable devices and mobile applications that integrate object detection with real-time feedback have shown great potential for helping users navigate both familiar and unfamiliar environments. Research has also highlighted the importance of adapting these technologies to the specific needs of visually impaired individuals [6]. This includes designing applications that are not only accurate and efficient in their detection but also intuitive and easy to use, with clear and consistent feedback. Mobile platforms have proven particularly beneficial for delivering these solutions, as smartphones are widely available and have enough computing power to run sophisticated machine learning models.

Kuriakose et al. [7] present a review of multimodal navigation systems designed to assist visually impaired users. The paper examines approaches that combine sensory modalities, such as auditory, haptic, and visual cues, to enhance navigation in indoor and outdoor environments. The authors analyze the strengths and limitations of these systems and propose specific features that can improve the accessibility of multimodal navigation systems, while emphasizing the role of AI in overcoming the challenges these systems face.

Kuriakose et al. [8] also developed DeepNAVI, a smartphone-based assistive navigation system for blind users that uses a bone conduction headset and incorporates obstacle, distance, scene, position, and motion detection to enhance spatial awareness without compromising situational awareness. The system is portable and convenient while providing navigation aids to visually impaired users.

Rakkolainen et al. [9] reviewed technologies enabling multimodal interaction in extended reality (XR), including virtual and augmented reality, with an emphasis on accessibility. The review highlights how multimodal interfaces, including haptic and auditory feedback, can make XR environments more accessible to visually impaired individuals, and emphasizes the potential of XR technologies as tools for enhanced perception for users with vision impairments.

Jiménez et al. [10] developed an assistive locomotion device that provides haptic feedback to guide visually impaired individuals. The device uses sensors to detect obstacles and offers real-time haptic feedback to help users navigate safely. The study highlights how precise haptic guidance can improve awareness of the physical environment and the autonomy of visually impaired individuals.

See et al. [11] developed a wearable haptic feedback system designed to help visually impaired and blind individuals detect obstacles in multiple regions around them. The system uses sensors to monitor the environment and provides targeted haptic feedback to alert users to obstacles in different directions, improving their spatial awareness and mobility.

Palani et al. [12] conducted a behavioral evaluation comparing map learning with touchscreen-based visual and haptic displays in both blind and sighted users. The study assessed how effectively visually impaired individuals can learn and navigate maps using haptic feedback compared to traditional visual methods. The findings suggest that haptic displays are highly effective for conveying spatial information, offering an accessible alternative for map learning for users with visual impairments.

Raynal et al. [13] introduced FlexiBoard, a portable multimodal device that provides tactile and tangible feedback to assist visually impaired users in interpreting graphics and spatial information. The system supports touch-based interaction, allowing users to explore physical representations of graphics while receiving auditory feedback for additional context. The study demonstrates the effectiveness of tactile graphics in enhancing learning and accessibility for people with vision impairments.

Tzimos et al. [14] examined the integration of haptic feedback into virtual environments to enhance user interaction, particularly for visually impaired individuals exploring the tactile internet.

Accessibility features in mobile apps are critical to ensuring that visually impaired users can effectively interact with digital content. These features often include screen readers, haptic feedback, and voice recognition technologies. Recent developments have led to the integration of artificial intelligence and machine learning to further improve accessibility.

Billi et al. [15] proposed a methodology for evaluating the accessibility and usability of mobile applications that combines user-centered design principles with accessibility guidelines. Their study shows how a systematic approach to evaluation can improve both the usability and accessibility of mobile apps, ensuring that they meet the needs of users with disabilities.

Ballantyne et al. [16] examined existing accessibility guidelines for mobile apps, analyzing how well these guidelines are applied in mobile app design. The study assesses compliance with accessibility standards across various applications, highlighting common barriers faced by users with disabilities, including visual impairments. Their findings point to the need for better enforcement and clearer guidelines to make mobile apps more inclusive.

Park et al. [17] developed an automated tool for evaluating the accessibility of Android applications. The tool provides real-time feedback on accessibility compliance, identifies common issues, and offers recommendations for improvement. This research points out the importance of automating accessibility assessment to make it easier for developers to create more inclusive apps.

Acosta-Vargas et al. [18] focused on the accessibility assessment of Android mobile applications using human factors and system interaction methodologies. The study identified key accessibility issues faced by users with disabilities and presented recommendations for improving mobile app accessibility through better adherence to design principles and guidelines.

Mateus et al. [19] conducted a user-centered evaluation of the accessibility of mobile applications for visually impaired users. The study compared the experiences of visually impaired users with the results of automated accessibility assessment tools, highlighting discrepancies between automated assessments and actual user experiences and underscoring the importance of involving users in the assessment process.

In their scoping review, Acosta-Vargas et al. [20] explored the accessibility of native mobile applications for people with disabilities. The review highlighted significant gaps in accessibility compliance and the need for better tools and methodologies to ensure that mobile apps are accessible to all users, particularly those with visual and physical impairments.

Deep learning models have also become more robust in noisy or dynamic conditions. For instance, Zheng et al. [21] proposed an asymmetric trilinear attention net with noisy activation functions to improve automatic modulation classification under signal distortions, demonstrating how attention mechanisms can stabilize performance in challenging conditions. Similarly, Liu et al. [22] revised the multi-scale feature hierarchy in detection transformers (DETRs) to address scale-variant object detection.

3. Materials and Methods

The application developed in this study uses artificial intelligence (AI) for object detection and incorporates haptic feedback to enhance user interaction. Its primary goal is to provide real-time object detection and feedback, enabling users to navigate their environment more effectively.

The application was developed for Android devices using Android Studio, the official Integrated Development Environment (IDE) for Android app development. The primary programming language used was Kotlin, which is fully supported by Android Studio and offers modern features that enhance productivity and code safety.

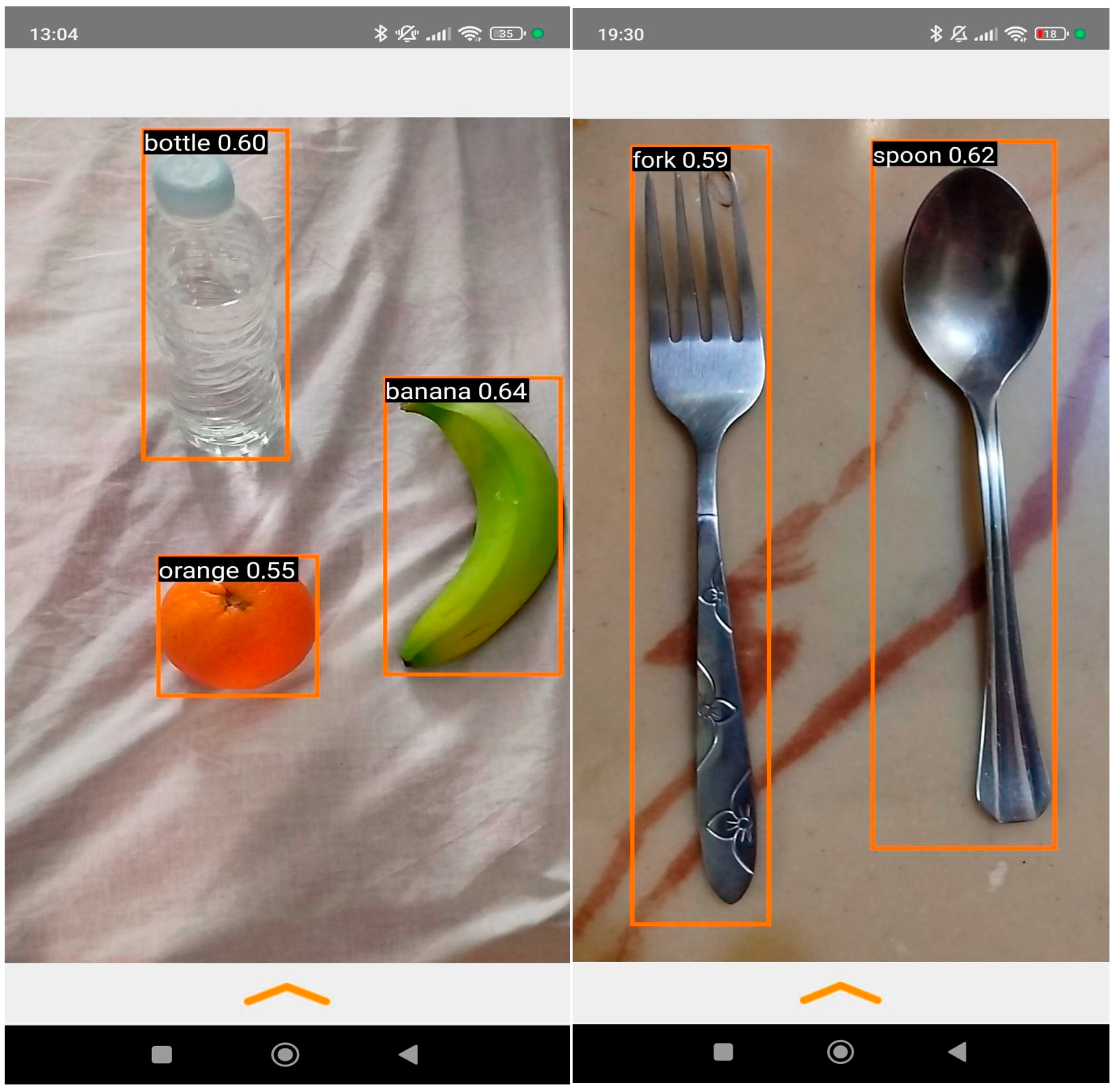

The user interface (UI) was designed to be simple and intuitive, with a focus on accessibility for users with vision problems. The main screen displays a live camera feed with bounding boxes overlaid on detected objects. Users interact with the application by touching the screen, which triggers haptic and voice feedback. The application has no buttons; upon opening, it immediately performs its basic function of recognizing objects through the camera, as shown in Figure 1. The vibration type, the AI object recognition library, and the number of processors can also be adjusted, but these settings are intended for use by the research team.

The application utilizes the TensorFlow Lite framework for implementing the AI-based object detection model. TensorFlow Lite is a lightweight version of TensorFlow designed specifically for mobile and embedded devices, providing efficient inference with minimal latency. The model was integrated into the application using the TensorFlow Lite Object Detection API, which provides pre-built functions for loading the model, processing images, and interpreting the detection results. The API also supports customization of detection parameters such as confidence thresholds and the maximum number of objects to detect.
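In the TensorFlow Lite Task Library, these two parameters are set on the object detector's options builder (setScoreThreshold and setMaxResults). Their combined effect on a list of raw detections can be approximated in plain Kotlin as below. The Detection class and filterDetections function are hypothetical stand-ins rather than the library's own types, and the library may apply the two limits in a different internal order.

```kotlin
// Hypothetical stand-in for a detection result; the TensorFlow Lite Task
// Library returns its own Detection type with bounding boxes and categories.
data class Detection(val label: String, val score: Float)

/**
 * Approximates the effect of the two detector options: drop detections whose
 * confidence is below [scoreThreshold], then keep at most [maxResults],
 * most confident first.
 */
fun filterDetections(
    raw: List<Detection>,
    scoreThreshold: Float,
    maxResults: Int
): List<Detection> {
    require(maxResults >= 0) { "maxResults must be non-negative" }
    return raw
        .filter { it.score >= scoreThreshold }   // confidence threshold
        .sortedByDescending { it.score }         // most confident first
        .take(maxResults)                        // cap on simultaneous objects
}
```

Raising the threshold trades recall for fewer false positives, while the cap bounds how many objects can be announced at once.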

The core functionality of the application relies on the EfficientDet-Lite2 model, a lightweight convolutional neural network (CNN) optimized for mobile devices. EfficientDet-Lite2 is pre-trained on the COCO (Common Objects in Context) dataset, which includes a wide range of everyday objects.

To select the object detection model for our application, we compared the EfficientDet-Lite models with SSD MobileNetV2 variants, looking for a model suited to mobile assistive technology. EfficientDet-Lite and SSD MobileNet are the two model families commonly used for object detection on edge computing devices [23]. As shown in Table 1, on the COCO 2017 validation set and on a Pixel 4 smartphone using four CPU threads, EfficientDet-Lite2 achieves 33.97% mAP at 69 ms latency, combining accuracy and speed. SSD MobileNetV2 320 × 320 is faster (24 ms), but its low accuracy (20.2% mAP) risks missing critical objects, while SSD FPNLite 640 × 640 achieves lower accuracy (28.2% mAP) at a much higher latency (191 ms) [24,25].
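The trade-off in Table 1 can be expressed as a simple selection rule: among the candidates that meet a latency budget, pick the one with the highest mAP. The sketch below encodes the three figures quoted above. The rule itself, the type and function names, and the latency budgets used in the example are illustrative assumptions, not a procedure stated by the authors.

```kotlin
// Figures quoted in the text: COCO 2017 val, Pixel 4, 4 CPU threads.
data class ModelCandidate(val name: String, val mapPercent: Double, val latencyMs: Int)

val candidates = listOf(
    ModelCandidate("EfficientDet-Lite2", 33.97, 69),
    ModelCandidate("SSD MobileNetV2 320x320", 20.2, 24),
    ModelCandidate("SSD FPNLite 640x640", 28.2, 191)
)

/** Most accurate candidate whose latency fits within [latencyBudgetMs], or null if none fits. */
fun selectModel(models: List<ModelCandidate>, latencyBudgetMs: Int): ModelCandidate? =
    models
        .filter { it.latencyMs <= latencyBudgetMs }
        .maxByOrNull { it.mapPercent }
```

With a hypothetical 100 ms budget, selectModel(candidates, 100) returns EfficientDet-Lite2; a tighter 50 ms budget would admit only SSD MobileNetV2 320 × 320, and even an unlimited budget would not favor SSD FPNLite, since it is both slower and less accurate than EfficientDet-Lite2.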

The application was developed by extending the open-source TensorFlow Lite Object Detection Android Demo [26]. This official demo provides a well-tested basis for real-time object detection and is optimized for mobile devices, ensuring low latency and efficient resource usage. Its modular design allowed us to integrate new features such as haptic feedback and multilingual voice support. We simplified the interface to prioritize voice and haptic feedback over visual elements.

We conducted performance testing using Android Profiler on a Xiaomi Redmi Note 11 (Xiaomi Corporation, Beijing, China; Snapdragon 680, 4 GB RAM) under default application settings. The runtime metrics of the application are shown in Table 2.

While the base project provided the basic object detection functionality, the following modifications were implemented to make the app suitable for visually impaired users, enhancing accessibility through multimodal feedback mechanisms.

The application triggers vibrations when the user interacts with detected objects on the screen. The vibration patterns were designed to provide intuitive feedback.

The haptic feedback system was integrated with the object detection results, ensuring that users receive tactile feedback when they touch the screen near a detected object. This feature aims to enhance the user experience by providing an additional sensory cue that complements the visual and auditory feedback.

We implemented the vibration patterns with Android's Vibrator class (for API levels below 26) to provide tactile feedback when users interact with detected objects.

The vibration pattern is a square pulse with an "on" duration of 10 ms, triggered repeatedly while the user is touching a detected object. Vibration starts immediately (0 ms delay) when the user touches the object and repeats in 10 ms pulses. The gap between pulses depends on the smartphone's CPU and is approximately 10 ms.
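The timing of this pulse train can be illustrated with a small pure-Kotlin model. It assumes a fixed 10 ms gap, whereas in the app the gap varies with the CPU, and the function names are hypothetical. On a device, each pulse could plausibly be issued with VibrationEffect.createOneShot(10, VibrationEffect.DEFAULT_AMPLITUDE) on API level 26 and higher, or with the deprecated Vibrator.vibrate(10) on older versions.

```kotlin
/**
 * Start times (ms) of the vibration pulses emitted during a touch lasting
 * [touchDurationMs]. Assumes a fixed [gapMs]; in the app the gap depends
 * on the CPU and is only approximately 10 ms.
 */
fun pulseStartTimes(touchDurationMs: Long, onMs: Long = 10, gapMs: Long = 10): List<Long> {
    require(onMs > 0 && gapMs >= 0) { "pulse length must be positive, gap non-negative" }
    val period = onMs + gapMs
    return generateSequence(0L) { it + period }  // first pulse at 0 ms (no delay)
        .takeWhile { it < touchDurationMs }      // stop when the finger lifts
        .toList()
}

/** Fraction of the touch during which the motor is on (duty cycle). */
fun dutyCycle(onMs: Long = 10, gapMs: Long = 10): Double =
    onMs.toDouble() / (onMs + gapMs)
```

Under these assumptions, a 100 ms touch yields five pulses starting at 0, 20, 40, 60, and 80 ms, with a 50% duty cycle.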

This vibration pattern was chosen to provide pleasant, rapid, and intuitive feedback to the user [27]. On Android devices with API level 26 or higher, we use the VibrationEffect class (together with VibratorManager, which is available from API level 31), while for API levels below 26 we use the Vibrator API.
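The version check can be sketched in plain Kotlin so that it runs off-device. The enum and function names are hypothetical; on a device, the argument would be Build.VERSION.SDK_INT. The boundaries follow Android's documented API availability: VibrationEffect was added in API level 26 and VibratorManager in API level 31.

```kotlin
/** Which Android vibration API a device at a given SDK level can use. */
enum class VibrationPath {
    LEGACY_VIBRATOR,   // API < 26: Vibrator.vibrate(long), deprecated since 26
    VIBRATION_EFFECT,  // API 26-30: Vibrator + VibrationEffect
    VIBRATOR_MANAGER   // API >= 31: VibratorManager.defaultVibrator + VibrationEffect
}

/** Hypothetical helper mapping an SDK level to the vibration API path. */
fun vibrationPathFor(sdkInt: Int): VibrationPath = when {
    sdkInt >= 31 -> VibrationPath.VIBRATOR_MANAGER
    sdkInt >= 26 -> VibrationPath.VIBRATION_EFFECT
    else -> VibrationPath.LEGACY_VIBRATOR
}
```

Keeping the decision in one function makes the per-version branches easy to test without a device.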

In the current research version, the application uses fixed vibration parameters, which were kept unchanged during the experimental evaluation. Users can change the vibration intensity through the Android system settings according to their preferences.

To further assist visually impaired users, the application includes text-to-speech (TTS) functionality. The TTS engine reads out the names of detected objects in a selected language, providing an additional layer of information. Our implementation uses Android's native TextToSpeech API (version 9.0+), which provides multilingual speech output. The system currently supports eight major languages (English, Greek, Spanish, French, German, Italian, Japanese, and Mandarin). The application translates the object labels from English (as provided by the COCO dataset) into the other supported languages, such as Greek, using a custom HashMap that maps each object category to its translation. This ensures that users receive feedback in their native language.
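The label-translation step can be sketched as a map lookup. Only "cup" → "φλιτζάνι" comes from the text; the other entries are illustrative, and falling back to the English COCO label when no translation exists is an assumption about how missing entries might be handled. Names are hypothetical.

```kotlin
// Illustrative excerpt of an English-to-Greek label table; the app's full
// map covers every COCO category and is not reproduced here.
val greekLabels: Map<String, String> = hashMapOf(
    "cup" to "φλιτζάνι",
    "chair" to "καρέκλα",
    "bottle" to "μπουκάλι",
    "book" to "βιβλίο"
)

/** Returns the translated label, or the original English label if no entry exists. */
fun translateLabel(englishLabel: String, table: Map<String, String>): String =
    table[englishLabel.lowercase()] ?: englishLabel
```

One such table per supported language would let the same lookup serve all eight languages.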

When the user touches the screen within the bounding box of a detected object, the speakText() method is triggered. This method retrieves the object's label (e.g., "cup" or "chair") from the detection results, translates it to Greek using a predefined HashMap (e.g., "cup" → "φλιτζάνι"), and passes the translated label to Android's TextToSpeech engine, which vocalizes it. Haptic and audio feedback are synchronized: vibration and speech output both begin immediately upon touch detection.
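The touch handling reduces to a point-in-rectangle test over the current detections. The sketch below assumes bounding boxes have already been mapped into screen coordinates and, when boxes overlap, picks the most confident detection; both choices, and all type and function names, are hypothetical. Vibration and speech are passed in as callbacks so the logic can run off-device; in an Android build these callbacks could wrap the vibration call and the TextToSpeech output.

```kotlin
// Hypothetical types; boxes are assumed to be in screen coordinates already.
data class BoundingBox(val left: Float, val top: Float, val right: Float, val bottom: Float) {
    fun contains(x: Float, y: Float): Boolean = x in left..right && y in top..bottom
}

data class DetectedObject(val label: String, val score: Float, val box: BoundingBox)

/** Detected object under the touch point (most confident if boxes overlap), or null. */
fun objectUnderTouch(x: Float, y: Float, objects: List<DetectedObject>): DetectedObject? =
    objects.filter { it.box.contains(x, y) }.maxByOrNull { it.score }

/**
 * Hypothetical touch handler: if the touch lands on a detected object, start
 * vibration and speech together and return true; otherwise return false.
 */
fun onTouch(
    x: Float,
    y: Float,
    objects: List<DetectedObject>,
    vibrate: () -> Unit,
    speak: (String) -> Unit
): Boolean {
    val hit = objectUnderTouch(x, y, objects) ?: return false
    vibrate()          // haptic cue starts immediately
    speak(hit.label)   // label (translated upstream, if needed) is spoken
    return true
}
```

Because the handler fires both callbacks in the same call, haptic and audio feedback start together, matching the synchronization described above.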

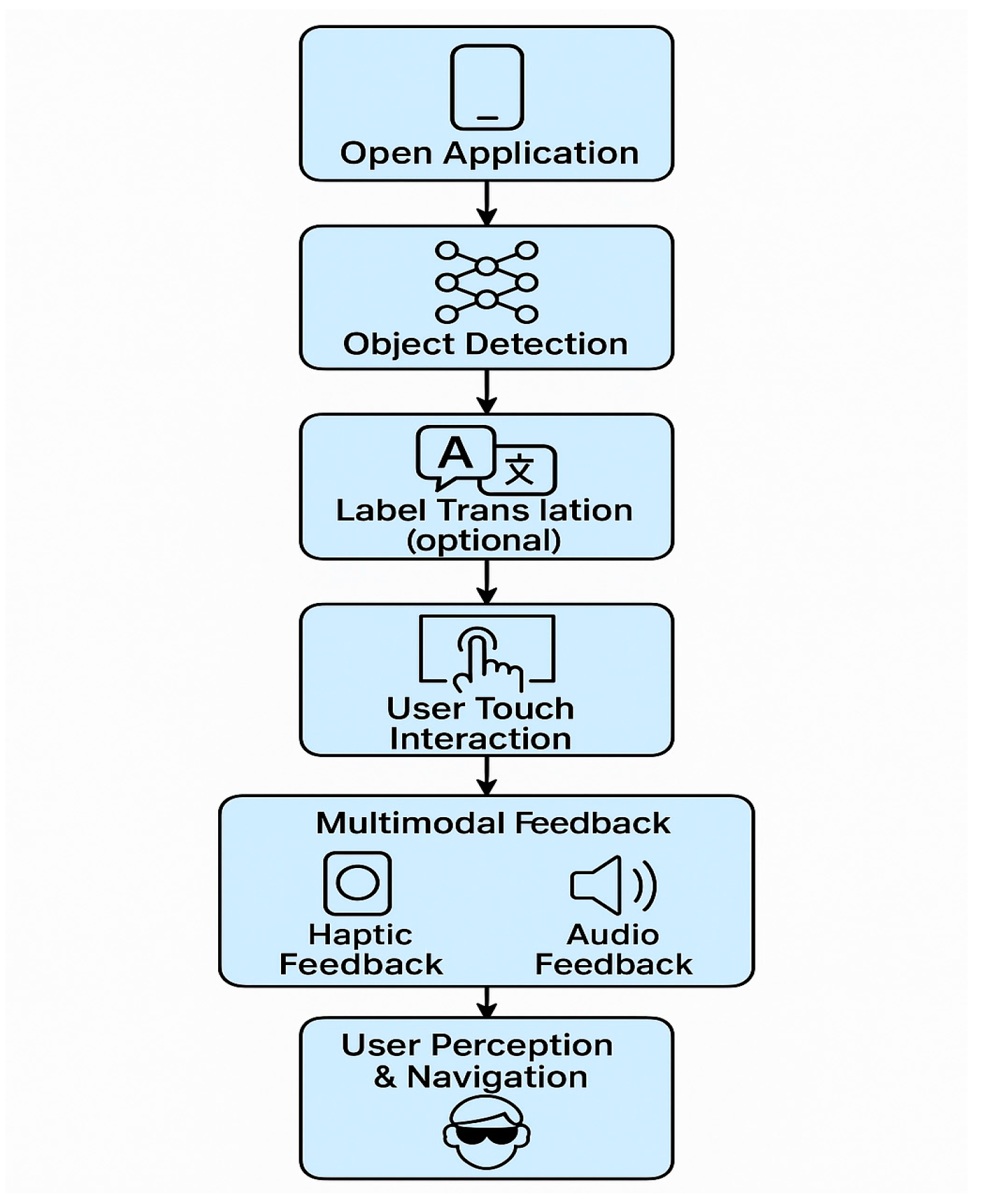

The flowchart of the application is shown in Figure 2.

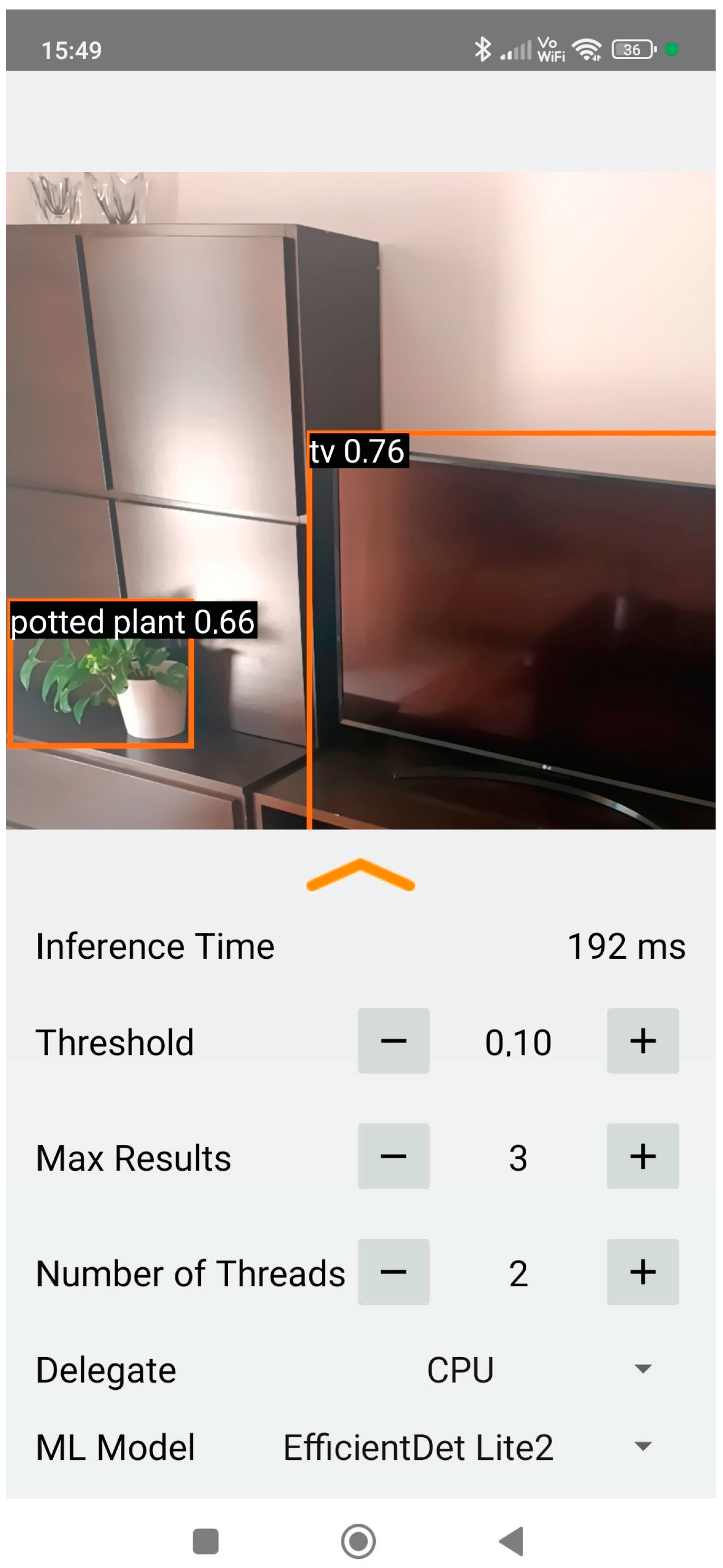

The application also includes a settings menu that allows users to adjust various parameters, such as the detection confidence threshold (a lower threshold detects more objects but may include more false positives), the maximum number of objects detected simultaneously, and the type of feedback (haptic, voice, or both). The settings menu is opened via a sliding panel, so it does not obstruct the main camera view and cannot easily be activated by mistake. The settings menu is shown in Figure 3.
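These settings can be modelled as a small immutable configuration object. The class, field names, and default values below are hypothetical; the sketch only shows how a feedback-type option could gate the haptic and voice channels, with a voice-only configuration corresponding to the no-haptic condition used in the evaluation.

```kotlin
enum class FeedbackMode { HAPTIC, VOICE, BOTH }

// Hypothetical settings model; defaults are illustrative, not the app's.
data class DetectorSettings(
    val scoreThreshold: Float = 0.5f,
    val maxResults: Int = 3,
    val feedbackMode: FeedbackMode = FeedbackMode.BOTH
) {
    init {
        require(scoreThreshold in 0f..1f) { "threshold must be in [0, 1]" }
        require(maxResults >= 1) { "at least one object must be detectable" }
    }

    /** Vibration fires unless the user chose voice-only feedback. */
    val hapticEnabled: Boolean get() = feedbackMode != FeedbackMode.VOICE

    /** Speech fires unless the user chose haptic-only feedback. */
    val voiceEnabled: Boolean get() = feedbackMode != FeedbackMode.HAPTIC
}
```

Validating values in the constructor keeps an out-of-range threshold from reaching the detector.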

3.1. Experimental Design

An evaluation was conducted to compare user performance and satisfaction between two versions of the application, with and without haptic feedback, and to assess the usefulness and user experience of haptic feedback in aiding navigation and object recognition for visually impaired users.

The evaluation focused on several key metrics, including the following:

Usability: Measured through a questionnaire that assessed how easy the application was to use and how well it met the users’ needs.

Effectiveness: Evaluated based on the accuracy of object detection and the responsiveness of the feedback system.

User Satisfaction: Assessed through questions about the overall user experience, including the quality of haptic and voice feedback.

Ease of Learning: Measured by how quickly users were able to learn and use the application effectively.

All participants were informed about the experiment’s purpose and procedures and provided informed consent before participating. The study was conducted in accordance with ethical guidelines, while data confidentiality and anonymity were maintained. Participants were also given the option to withdraw from the study at any time without any consequences.

We divided the research on the application's usability into two distinct studies. The first study evaluated the app's usability for people with vision problems. We included both blindfolded and blind participants primarily to understand how sighted and blind people differ in their perception of usability.

We kept the physical environment of the experimental tests as constant as possible. For this reason, we used specific rooms whose interiors remained unchanged across participants. In all cases, the tests were conducted in a designated room on the premises of the participants' training institution. We also ensured that no external factors influenced the participants. In each room, we placed three objects that the application had a high probability of recognizing.

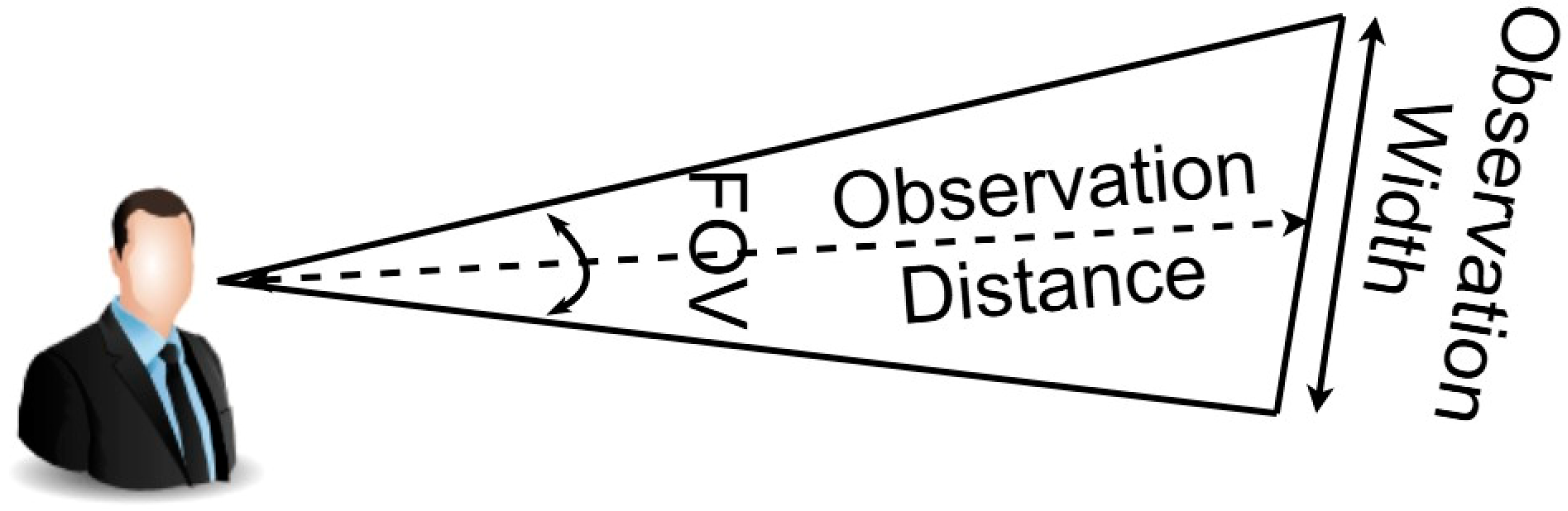

To determine the optimal distance between the observer and the objects for detection, we considered the human field of view (FOV) and the observation width, defined as the horizontal span of the scene detectable by the observer. The relationship between them is expressed by Equation (1) and depicted in Figure 4.
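Assuming the observer faces the centre of the scene, half of the field of view θ subtends half of the observation width W at distance d, which gives the relation below. This is a sketch of the standard geometry rather than a reproduction of Equation (1); it is, however, consistent with the 3.73 m value reported for W = 2.00 m and θ = 30°.

```latex
\tan\!\left(\frac{\theta}{2}\right) = \frac{W/2}{d}
\quad\Longrightarrow\quad
d = \frac{W}{2\tan(\theta/2)},
\qquad
d\Big|_{W = 2.00\,\mathrm{m},\ \theta = 30^\circ}
  = \frac{2.00}{2\tan 15^\circ}
  \approx \frac{1.00}{0.268}
  \approx 3.73\ \mathrm{m}
```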

The human FOV for object recognition varies with the level of visual attention required and the type of visual processing involved. For central vision, where concentrated object recognition is required, the FOV is 5–10°. This is where visual acuity is highest, allowing for detailed object recognition, reading, and fine motor coordination [28]. For object detection in parafoveal vision, the FOV is 10–30°. This region helps in recognizing objects without looking at them directly, although it lacks the fine detail of central vision [29].

For object detection with the phone's camera, intended to compensate for users' vision problems, we set the proposed distance equal to the distance given by Equation (1) for parafoveal object recognition with a maximum FOV of 30°. For a maximum observation width of 2.00 m, Equation (1) gives a maximum observer distance of approximately 3.73 m.
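The same calculation can be written in Kotlin, assuming the geometric relation d = W / (2 tan(θ/2)) between observation width W, field of view θ, and observer distance d; this relation reproduces the 3.73 m figure for W = 2.00 m and θ = 30°. The function name is hypothetical.

```kotlin
import kotlin.math.PI
import kotlin.math.tan

/**
 * Observer distance (m) at which a horizontal field of view of [fovDeg]
 * degrees spans an observation width of [widthM] metres, assuming the
 * observer is centred on the scene: d = W / (2 tan(θ/2)).
 */
fun observationDistance(widthM: Double, fovDeg: Double): Double {
    require(widthM > 0 && fovDeg > 0 && fovDeg < 180) { "invalid width or FOV" }
    val halfAngleRad = fovDeg / 2 * PI / 180
    return widthM / (2 * tan(halfAngleRad))
}
```

For a 2.00 m width, observationDistance(2.0, 30.0) gives about 3.73 m, so the 3.5 m distance used in the trials lies just inside this bound; a central-vision FOV of 10° would instead require roughly 11.4 m for the same width.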

Participants were seated in a comfortable, predetermined position. Each smartphone provided had both versions of the application pre-installed and readily accessible for comparison.

The application settings were simplified for initial usability testing, but changing them currently requires assistance from a support person. Future updates will prioritize voice-guided navigation and WCAG compliance to enable fully independent use by blind users.

Before the evaluation, the research team conducted a training session to familiarize participants with the app. They were given time to practice with both versions until they felt confident proceeding to the assessment phase.

Some blind participants were assisted by a support person during initial device use, while others received guidance from the research team. Not all participants had prior experience with similar applications. The research team or support person helped position the phone correctly and guided participants' fingers to the appropriate areas of the screen.

In each trial, the participant had to locate the three objects placed in front of them at a distance of 3.5 m with the help of the device. To obtain more objective results, we alternated the order in which the two versions were evaluated from one participant to the next. For the same reason, we changed the location of the objects after the evaluation of one version ended and before the evaluation of the other version began. We placed particular emphasis on user satisfaction and ease of use; specifically, we wanted to see whether the version with vibration was more satisfying and easier to use than the simple version, rather than focusing on how quickly objects were located.
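The counterbalancing described above can be sketched as follows. The names are hypothetical, and the sketch assumes that the order simply alternates with the participant index and that a new object layout is any permutation different from the previous one; this matches the description but is not the authors' documented procedure.

```kotlin
import kotlin.random.Random

enum class AppVersion { WITH_HAPTICS, WITHOUT_HAPTICS }

/** Alternates which version is evaluated first from one participant to the next. */
fun versionOrder(participantIndex: Int): List<AppVersion> =
    if (participantIndex % 2 == 0) listOf(AppVersion.WITH_HAPTICS, AppVersion.WITHOUT_HAPTICS)
    else listOf(AppVersion.WITHOUT_HAPTICS, AppVersion.WITH_HAPTICS)

/**
 * Returns a new arrangement of objects (listed in position order) that
 * differs from [current], so object locations change between versions.
 */
fun newLayout(current: List<String>, rng: Random): List<String> {
    require(current.toSet().size > 1) { "need at least two distinct objects" }
    var next: List<String>
    do {
        next = current.shuffled(rng)
    } while (next == current)
    return next
}
```

Alternating the order spreads any learning effect evenly across both versions, and re-placing the objects prevents participants from relying on positions memorized during the first version.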

In the experimental design, we used a simplified environment with a limited number of objects (3–5 items). By minimizing the environmental variables, we could accurately measure the impact of vibration feedback on object detection performance. Standardized and stable conditions ensured comparable results across all participants (blind and blindfolded).

In the experimental scenario with no haptic feedback, the vibration feature was deactivated. The deactivation of the haptic feedback ensured that the users experienced only audio feedback, allowing for precise comparison of user experience between the two experimental conditions.

The study included 18 participants with normal or corrected-to-normal vision and 8 blind participants. Participants with normal vision performed the assessment blindfolded, although blindfolding cannot fully replicate the experience of visual impairment; no simulation, however carefully designed, can perfectly mirror real-world conditions.

To address this limitation, our testing protocol follows a multi-stage process of method validation, preliminary testing, simulation trials, and final functionality evaluations before progressing to user acceptance testing. These phases are vital for advancing assistive and inclusive technologies, as the insights gained, even from simulations, can enhance our understanding of disability-related needs and spur innovation. The findings and methodologies presented in this study aim to contribute to the scientific community and inform future research and development in the field.

Of the participants, 15 were male and 11 were female. Regarding age, 13 participants were 17–25 years old, 5 were 26–35, 4 were 36–45, and 4 were 46 or older. None of the sighted participants had used this kind of application before the study. The detailed distribution of participants by age, gender, and vision status is given in Table 3.

3.2. Performance Measures

The participants were asked to complete a 30-question questionnaire based on the USE usability questionnaire [30] and the User Experience Questionnaire (UEQ) [31].

We used both the USE (Usefulness, Satisfaction, and Ease of Use) Questionnaire and the User Experience Questionnaire (UEQ) to provide a comprehensive evaluation of the system’s usability and user experience from different perspectives. The USE Questionnaire focuses on usability aspects, measuring usefulness, ease of use, ease of learning, and satisfaction. This questionnaire is particularly valuable for assessing functional usability and efficiency. The UEQ extends beyond usability, evaluating attractiveness, efficiency, dependability, stimulation, and novelty. This allows us to measure users’ subjective experiences and emotional engagement with the system. By combining these two questionnaires, we ensured a holistic assessment, addressing both practical usability concerns and user experience factors to gain a well-rounded understanding of the application’s effectiveness and appeal.

The USE Questionnaire was divided into five sections: demographics, usefulness, ease of use, ease of learning, and satisfaction. In both questionnaires, we also evaluated the effect of haptic feedback, enabling a comparative analysis between blind and blindfolded users, as well as between the scenarios with haptic feedback enabled and disabled.

Participants rated the questionnaire items on Likert scales, as presented in Table A1 in Appendix A: a 5-point scale (1 to 5) for the USE Questionnaire and a 7-point scale (1 to 7) for the UEQ. For each question, we calculated the mean and standard deviation of participants’ responses. The mean values were also normalized to allow a direct comparison between the four categories in the tables below.
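A minimal sketch of the per-item statistics described above follows. It assumes that normalization means expressing the item mean as a percentage of the scale maximum, so that 5-point USE items and 7-point UEQ items share a 0 to 100 axis; under that assumption, a mean of 4.5 on the 5-point scale corresponds to 90%. The text specifies neither the normalization nor whether sample or population standard deviations were used, so both choices here, the `summarize_item` helper, and the ratings are illustrative.

```python
from statistics import mean, stdev


def summarize_item(responses: list[int], scale_max: int) -> dict[str, float]:
    """Mean, sample standard deviation, and normalized mean (%) for one item."""
    if not responses:
        raise ValueError("no responses")
    if any(r < 1 or r > scale_max for r in responses):
        raise ValueError(f"responses must lie in 1..{scale_max}")
    m = float(mean(responses))
    sd = stdev(responses) if len(responses) > 1 else 0.0
    # Assumed normalization: mean as a percentage of the scale maximum, so
    # 5-point (USE) and 7-point (UEQ) items can be compared on one 0-100 axis.
    return {"mean": m, "sd": sd, "normalized_pct": 100.0 * m / scale_max}


# Hypothetical ratings from four participants
use_item = summarize_item([5, 4, 5, 4], scale_max=5)  # one USE item (5-point)
ueq_item = summarize_item([7, 6, 6, 7], scale_max=7)  # one UEQ item (7-point)
print(use_item["normalized_pct"])            # 90.0
print(round(ueq_item["normalized_pct"], 2))  # 92.86
```

A min-max rescaling, (m - 1) / (scale_max - 1), is another common choice; it maps the lowest possible rating to 0% rather than to 20% (5-point) or about 14% (7-point).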

Regarding the comparative analyses, the study deliberately prioritized qualitative indicators of usability and user experience, as these dimensions are critical for evaluating assistive technologies. We acknowledge, however, that quantitative metrics such as task completion time or error rates could provide a more comprehensive assessment of system performance in future studies.

4. Results

4.1. USE Questionnaire Evaluation

The data collected from the USE Questionnaire were analyzed using descriptive statistics. The participants’ responses are presented in Tables 4 and 5. The analysis focused on identifying trends and patterns in the data, such as overall satisfaction with the application and the perceived usefulness of the haptic feedback system.

Table 4 compares the two versions of the application, with and without haptic feedback, for the two user categories, blind and blindfolded users.

Next, we calculated the mean values of the four main factors of the questionnaire for each user category, as shown in Table 5.
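The factor means can be sketched as averages of per-question means within each factor, normalized to the scale maximum. The assignment of usefulness to questions 1 to 3 and satisfaction to questions 10 to 12 follows the text; assigning questions 4 to 6 to ease of use and 7 to 9 to ease of learning is an assumption, as are the `factor_scores` helper and the example means.

```python
from statistics import mean

# Usefulness (Q1-Q3) and satisfaction (Q10-Q12) follow the text; the split of
# Q4-Q9 into ease of use and ease of learning is an assumption.
USE_FACTORS = {
    "usefulness": (1, 2, 3),
    "ease_of_use": (4, 5, 6),
    "ease_of_learning": (7, 8, 9),
    "satisfaction": (10, 11, 12),
}


def factor_scores(item_means: dict[int, float], scale_max: int = 5) -> dict[str, float]:
    """Average the per-question means within each factor, as % of the scale maximum."""
    return {
        factor: 100.0 * mean(item_means[q] for q in questions) / scale_max
        for factor, questions in USE_FACTORS.items()
    }


# Hypothetical per-question means for one user group under one condition
with_vibration = {1: 4.5, 2: 4.25, 3: 4.75, 4: 4.5, 5: 4.5, 6: 4.0,
                  7: 5.0, 8: 5.0, 9: 5.0, 10: 4.5, 11: 4.5, 12: 4.5}
scores = factor_scores(with_vibration)
for factor, pct in scores.items():
    print(f"{factor}: {pct:.2f}%")
```

Running the same function on the means of each group and condition yields one row per user category, which is the shape of comparison the factor table reports.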

The analysis of the USE Questionnaire responses in Tables 4 and 5 gives a clear indication of the impact of haptic (vibration) feedback on the usability of the application. Four key dimensions were assessed (usefulness, ease of use, ease of learning, and satisfaction) across two groups of participants (blind and blindfolded users) and two application conditions (with and without vibration feedback).

Tables 4 and 5 show that blind users consistently rated the application higher when vibration feedback was enabled. Specifically, usefulness responses (questions 1–3) showed an average increase of approximately 18.34% compared with the condition without vibration feedback. A similar trend was observed for ease of use, where participants highlighted the intuitive and user-friendly nature of the interface when it was enhanced with haptic feedback. The most notable difference was in satisfaction (questions 10–12), where users expressed a greater desire to use the application regularly and to recommend it to others when vibration was present (90% vs. 71.67%). This suggests that vibration feedback enhanced their sense of control, engagement, and trust in the system.

Blindfolded users also reported higher scores in most categories under the vibration-enabled condition, albeit with smaller margins. For example, their usefulness score increased from 66.67% without vibration to 69.25% with vibration. In the ease of use and ease of learning dimensions, scores remained high overall, indicating that the application was perceived as accessible and intuitive regardless of the user’s prior visual experience. Satisfaction, however, was only marginally higher when the vibration feature was active (62.59% vs. 62.22%). Notably, all users, regardless of group, achieved very high scores in the ease of learning category (near or at 100%), suggesting a short learning curve and quick familiarity with the interface. Overall, as summarized in Table 5, the results indicate that vibration feedback improves usability across all four evaluation factors.

4.2. UEQ Evaluation

The results of the User Experience Questionnaire (UEQ) are presented in Table 6. They describe how the participants perceived the quality and design of the application, particularly in relation to its efficiency, attractiveness, dependability, stimulation, and novelty. We used the short version of the UEQ so that the session, which also included the USE Questionnaire and an open-response interview, would not be too exhausting for the participants. The user experience (UX) evaluation also involved two user categories, blind and blindfolded, and two interface conditions, with and without vibration feedback.
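In the standard short UEQ (UEQ-S), the items supportive, easy, efficient, and clear form the pragmatic quality scale, while exciting, interesting, inventive, and leading edge form the hedonic quality scale; UEQ analyses usually report scale means on a -3 to +3 range. The sketch below assumes the standard UEQ-S item set and 1 to 7 coding with the positive pole at 7, and computes both scale means from per-item means. The `ueq_s_scales` function and the example values are hypothetical and are not the results in Table 6.

```python
from statistics import mean

# Standard UEQ-S items, named by their positive pole (coded 7 on a 1-7 scale).
PRAGMATIC = ("supportive", "easy", "efficient", "clear")
HEDONIC = ("exciting", "interesting", "inventive", "leading_edge")


def ueq_s_scales(item_means: dict[str, float]) -> dict[str, float]:
    """Pragmatic, hedonic, and overall scale means, on 1-7 and on -3..+3."""
    pragmatic = mean(item_means[i] for i in PRAGMATIC)
    hedonic = mean(item_means[i] for i in HEDONIC)
    overall = mean(item_means[i] for i in PRAGMATIC + HEDONIC)
    result = {"pragmatic": pragmatic, "hedonic": hedonic, "overall": overall}
    # Subtracting the neutral midpoint (4) maps a 1-7 score onto -3..+3.
    result.update({f"{k}_centered": v - 4 for k, v in list(result.items())})
    return result


# Hypothetical item means for one group and condition (not the Table 6 values)
example = {"supportive": 5.5, "easy": 6.5, "efficient": 5.0, "clear": 6.0,
           "exciting": 6.0, "interesting": 6.0, "inventive": 6.5, "leading_edge": 5.5}
print(ueq_s_scales(example))
```

Grouping items this way separates task-related qualities (supportive, efficient) from stimulation-related ones (exciting, inventive), which is the distinction the discussion of blind and blindfolded users draws.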

For blind users, the results show consistently higher ratings with vibration feedback enabled. With vibration feedback, the application was rated as more supportive (5.5 vs. 4.5) and more inventive (6.75 vs. 5.0), suggesting a strong improvement in the user experience. Particularly high scores were recorded for ease of use (6.5 in both conditions), indicating that the application was easy to use regardless of vibration. The items “Exciting” (6.25 vs. 5.5) and “Interesting” (6.25 vs. 5.5) also suggest that haptic interaction makes the experience more engaging and stimulating.

Among blindfolded users, the pattern is similar, though with less contrast between the two conditions. Notably, the “Clear” item remained constant at a high value of 5.89 in both versions, indicating that users could interpret and understand the application effectively in either case. Vibration improved the “Supportive” (5.44 vs. 5.00) and “Efficient” (5.00 vs. 4.56) items, suggesting that haptic feedback provided an additional layer of usability and control. The “Exciting” and “Interesting” items showed only minor improvements or no difference.

In summary, the UEQ results reinforce the findings from the USE Questionnaire: vibration feedback positively influences both usability (e.g., efficiency, supportiveness) and the hedonic aspects of the experience (e.g., excitement, inventiveness). Blind users in particular showed a stronger appreciation of the system’s enhanced feedback, underscoring the importance of multimodal interaction design in assistive technologies.

4.3. Test Reliability

Table 7 reports the Cronbach’s alpha reliability coefficients of the questionnaire used to evaluate the application.

Both conditions show strong internal consistency (α > 0.8), indicating that the questionnaire reliably measures the overall user experience. The version with haptic feedback has a higher internal consistency coefficient than the version without it, meaning that respondents’ answers were more consistent across items; this suggests that haptic interaction makes user perceptions more coherent across all dimensions (usefulness, ease of use, ease of learning, and satisfaction). Taken together with the higher USE and UEQ scores, this indicates that the application with haptic feedback achieved broader user acceptance and satisfied a greater proportion of users.
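For reference, Cronbach’s alpha for k items is α = k / (k - 1) · (1 - Σ s_i² / s_T²), where s_i² is the variance of item i across respondents and s_T² is the variance of respondents’ total scores. A minimal sketch follows; it uses sample variances throughout (the ratio is unchanged if population variances are used consistently), and the `cronbach_alpha` name and the ratings matrix are hypothetical.

```python
from statistics import variance


def cronbach_alpha(scores: list[list[float]]) -> float:
    """Cronbach's alpha for a respondents x items matrix of ratings.

    alpha = k / (k - 1) * (1 - sum of item variances / variance of total scores)
    """
    if len(scores) < 2:
        raise ValueError("need at least two respondents")
    k = len(scores[0])
    if k < 2 or any(len(row) != k for row in scores):
        raise ValueError("need at least two items, answered by every respondent")
    item_vars = [variance([row[j] for row in scores]) for j in range(k)]
    total_var = variance([sum(row) for row in scores])
    if total_var == 0:
        raise ValueError("total scores have zero variance; alpha is undefined")
    return k / (k - 1) * (1 - sum(item_vars) / total_var)


# Hypothetical 5-point ratings: 5 respondents x 3 items
ratings = [
    [5, 5, 4],
    [4, 4, 4],
    [3, 3, 2],
    [5, 4, 5],
    [2, 2, 2],
]
print(round(cronbach_alpha(ratings), 3))  # 0.955
```

Alpha rises when items covary strongly relative to their individual variances, which is why it is read as internal consistency rather than as a measure of how high the ratings are.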

4.4. Analysis of the Results

User feedback was collected through open questions, in which participants identified key areas for improving the usability and functionality of the assistive application. One suggestion was enhanced object recognition, in terms of both the number of identifiable items and the reliability of the recognition system. Users requested more detailed object descriptions, including object color. Spatial information was also considered essential: participants requested functionality that reports not only the distance to detected objects but also their position relative to the user (e.g., left, right, in front). This feedback points to the importance of integrating more advanced computer vision capabilities and contextual awareness features in future development stages.

Users also valued a simple and intuitive interface with support for voice commands and natural-sounding audio output, and they described the haptic feedback as a helpful aid for orientation and navigation. Regarding device compatibility, users recommended broader support for both Android and iOS platforms, including wearables such as smartwatches. An additional proposed feature was a scanning function for reading product labels, prices, and expiration dates in environments such as supermarkets. Overall, the feedback highlights the need to combine functional accuracy with user-centered design to create a more effective, accessible, and inclusive assistive application for visually impaired individuals.

Nearly all participants reported satisfaction with the application, describing it as enjoyable and entertaining. Blindfolded participants, who simulated visual impairment during testing, described the application as a valuable tool for environmental navigation. Several blind participants expressed enthusiasm about their first experience with the application, noting that it gave them a novel way to identify nearby objects, and said they would use it as an additional aid in their daily mobility.

As Table 4 shows, the version of the application with vibration scored higher than the version without vibration on ten of the twelve USE Questionnaire items. This is also reflected in the mean values of the four main factors of the questionnaire shown in Table 5.

Observing participants during the sessions yielded several important insights into how they interact with the application. Most users initially showed interest and eagerness to try it.

Younger participants, on average, became familiar with the application more quickly and operated it faster. Differences in finger length, as well as long fingernails among some female participants, reduced the precision of touch movements. In general, users adopted different strategies to locate the detected items on the touch screen.

Another important finding was that haptic interaction helped users understand the exact position of objects and the area they occupied on the screen. The device vibrated while the user’s finger was within an object’s boundaries and stopped vibrating as soon as the finger left them, so the user could infer both the position of the object on the screen and its size.
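The touch-to-vibrate behavior can be sketched as a hit test of the finger position against the detected objects’ on-screen bounding boxes, emitting a start event when the finger enters a box and a stop event when it leaves. This models the logic only: it assumes axis-aligned bounding boxes in pixel coordinates, the `Detection` type and the `hit_test` and `haptic_events` functions are hypothetical, and the calls to the device’s vibration service are omitted.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Detection:
    """Hypothetical detection: label plus on-screen bounding box in pixels."""
    label: str
    left: float
    top: float
    right: float
    bottom: float

    def contains(self, x: float, y: float) -> bool:
        return self.left <= x <= self.right and self.top <= y <= self.bottom


def hit_test(detections: list[Detection], x: float, y: float) -> Optional[Detection]:
    """Return the first detection whose box contains the touch point, if any."""
    return next((d for d in detections if d.contains(x, y)), None)


def haptic_events(detections: list[Detection],
                  touch_path: list[tuple[float, float]]) -> list[tuple[str, str]]:
    """Convert a finger trajectory into vibration start/stop events.

    Vibration starts when the finger enters a box and stops when it leaves it,
    so the vibrating region conveys the object's position and on-screen size.
    """
    events: list[tuple[str, str]] = []
    current: Optional[Detection] = None
    for x, y in touch_path:
        hit = hit_test(detections, x, y)
        if hit is not current:
            if current is not None:
                events.append(("stop", current.label))
            if hit is not None:
                events.append(("start", hit.label))
            current = hit
    if current is not None:  # finger lifted while still over an object
        events.append(("stop", current.label))
    return events


boxes = [Detection("cup", 100, 200, 300, 400), Detection("chair", 400, 100, 700, 600)]
path = [(50, 250), (150, 250), (250, 300), (350, 300), (500, 300)]
print(haptic_events(boxes, path))
# [('start', 'cup'), ('stop', 'cup'), ('start', 'chair'), ('stop', 'chair')]
```

Because the events depend only on box membership, a larger object produces a longer stretch of vibration along the same finger path, which is how users could judge relative size.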

The most important observation was that, with haptic feedback enabled, users identified the objects detected by the artificial intelligence model more quickly and easily, although the time and effort each user needed to identify all detected objects varied widely.

5. Discussion

This paper qualitatively evaluates the role of haptic feedback in object detection applications for visually impaired people that can run on popular touchscreen devices such as tablets and mobile phones. To evaluate the effectiveness of haptics, we developed an Android mobile application for visually impaired users that supports haptic feedback. The application’s interface and functionality were then evaluated with the USE Questionnaire and the UEQ, administered to participants via Google Forms. The usability evaluation was carried out separately on two subsets of users: sighted participants who were blindfolded for the purpose of the study, and blind participants. The version with haptic feedback scored higher on all four USE Questionnaire factors, suggesting that haptics increase usability in this kind of application.

The first contribution of the present study is an application for smartphones and tablets that gives tactile and vocal feedback to the user. The second contribution is the evaluation and comparison of haptic feedback in this kind of application. The third contribution is the identification of differences in how sighted and blind users perceive usability in general.

For practical reasons, we focused our investigation of haptic feedback on object detection applications. Our results indicate that haptic feedback improves usefulness and user satisfaction, and that haptics enhance both accessibility and engagement, particularly for users with visual impairments. The evaluation also highlights the potential of combining an intuitive, simple UI design with multimodal feedback to support the adoption of inclusive technology.

Unlike commercial applications, which are generally based on visual substitution (e.g., Seeing AI [32] and EnvisionAI [33]) or human assistance (e.g., Be My Eyes [34]), this paper proposes haptic interaction for environmental and spatial exploration by people with visual impairments. While current commercial solutions are useful in specific domains such as OCR or live video support, they lack the tactile feedback mechanisms that are critical for spatial awareness. A qualitative comparison of commercial applications is given in Table 8.

5.1. Open Research Questions and Future Work

Several promising research directions emerge from this work. Future studies should investigate more intuitive haptic interaction patterns that enhance usability, as well as personalization mechanisms that adapt haptic feedback to individual user preferences and accessibility requirements. An updated version of the application should enhance the user experience without becoming overwhelming. More research is also needed on how the app should be designed to improve mobility for people with visual impairments.

Regarding learning and adaptation, research is needed on how users can learn and adapt to the application more easily. What strategies can facilitate rapid learning of the app? How do specific haptic feedback patterns influence users’ psychological and emotional states, and which types of haptic interaction are most effective for conveying emotions such as urgency, excitement, or comfort? Exploring these questions could yield valuable insights for developing more effective, engaging, and user-centric smartphone applications.

In future work, the authors plan to extend the investigation to a larger group of visually impaired participants so that the results are more robust and representative. A quantitative evaluation of the application is also planned, based on the number of errors users make while trying to identify the location of the detected objects. Future development will focus on preparing the application for official app marketplaces, with language customization features. Another goal is to extend detection beyond the object classes of the COCO dataset: while COCO served as a practical baseline for evaluating the usability of haptic feedback, a future version of the application will use an enlarged dataset based on the needs of visually impaired users, with transfer learning and data augmentation employed to improve object detection. As artificial intelligence models become more efficient and lightweight, we also hope to adopt newer models proposed by the scientific community. Additionally, we will validate object size distributions against real usage scenarios to ensure better performance across varying distances.

To further enhance accessibility and user experience, we plan to develop an adaptive vibration system that lets users customize haptic feedback. Users will be able to adjust vibration strength (amplitude) with a slider in the app settings and choose among multiple vibration patterns (e.g., single pulses or rhythmic sequences). They should also be able to change the vibration frequency and pulse duration to suit their preferences.
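One way to represent these planned settings is as parallel lists of off/on durations and amplitudes, the form accepted by Android’s `VibrationEffect.createWaveform(long[] timings, int[] amplitudes, int repeat)`, whose amplitudes range from 0 (off) to 255. The sketch below is hypothetical: the `VibrationSettings` fields, pattern names, default values, and the reading of “vibration frequency” as pulses per second are assumptions, and only the waveform construction is shown, not the Android call itself.

```python
from dataclasses import dataclass

# Hypothetical pattern names mapped to the number of pulses per burst.
PATTERNS = {"single": 1, "double": 2, "triple": 3}


@dataclass
class VibrationSettings:
    amplitude: int = 128            # slider value, 1-255 (assumed range)
    pattern: str = "single"         # key into PATTERNS
    pulse_ms: int = 80              # duration of each pulse
    pulses_per_second: float = 5.0  # assumed meaning of "vibration frequency"

    def validate(self) -> None:
        if not 1 <= self.amplitude <= 255:
            raise ValueError("amplitude must be in 1..255")
        if self.pattern not in PATTERNS:
            raise ValueError(f"unknown pattern {self.pattern!r}")
        if self.pulses_per_second <= 0 or self.pulse_ms <= 0:
            raise ValueError("pulse rate and duration must be positive")
        if self.pulse_ms >= 1000.0 / self.pulses_per_second:
            raise ValueError("pulse must be shorter than the pulse period")


def to_waveform(s: VibrationSettings) -> tuple[list[int], list[int]]:
    """Parallel (timings_ms, amplitudes) lists alternating off and on segments."""
    s.validate()
    gap_ms = round(1000.0 / s.pulses_per_second - s.pulse_ms)
    timings: list[int] = []
    amplitudes: list[int] = []
    for i in range(PATTERNS[s.pattern]):
        timings += [0 if i == 0 else gap_ms, s.pulse_ms]  # off segment, then pulse
        amplitudes += [0, s.amplitude]
    return timings, amplitudes


print(to_waveform(VibrationSettings(amplitude=200, pattern="double")))
# ([0, 80, 120, 80], [0, 200, 0, 200])
```

On Android, passing such lists with `repeat = -1` would play the burst once; keeping the settings separate from the waveform makes it easy to store user preferences and regenerate the pattern when a slider changes.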

The generalizability of our findings is limited by the small number of blind participants, a consequence of practical constraints. While this was acceptable for an initial validation of haptic feedback usability, follow-up studies should address this limitation with a larger sample of blind users.

The absence of quantitative performance metrics (e.g., task completion time and error rates) represents a limitation, as these could complement subjective usability data. Future work will adopt a hybrid evaluation framework to address this gap.
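A hybrid framework could log each localization trial and summarize it per condition. The sketch below assumes a hypothetical `Trial` record with `completion_s`, `taps`, and `wrong_taps` fields, and defines the error rate as the share of taps that land outside every detected object; neither the record format nor that definition comes from this study.

```python
from collections import defaultdict
from dataclasses import dataclass
from statistics import mean


@dataclass
class Trial:
    """Hypothetical record of one object-localization trial."""
    participant: str
    condition: str        # e.g. "haptic" or "audio_only"
    completion_s: float   # time to locate all detected objects
    taps: int             # total taps on the screen
    wrong_taps: int       # taps outside every detected object


def summarize(trials: list[Trial]) -> dict[str, dict[str, float]]:
    """Mean completion time and tap error rate per condition."""
    by_condition: dict[str, list[Trial]] = defaultdict(list)
    for t in trials:
        by_condition[t.condition].append(t)
    summary = {}
    for cond, ts in by_condition.items():
        total_taps = sum(t.taps for t in ts)
        wrong = sum(t.wrong_taps for t in ts)
        summary[cond] = {
            "mean_completion_s": mean(t.completion_s for t in ts),
            "error_rate": wrong / total_taps if total_taps else 0.0,
        }
    return summary


trials = [
    Trial("P1", "haptic", 20.0, 10, 1),
    Trial("P2", "haptic", 30.0, 10, 3),
    Trial("P1", "audio_only", 40.0, 20, 8),
]
print(summarize(trials))
```

Pooling taps across trials weights each trial by its number of taps; averaging per-trial error rates instead would weight every trial equally, and the choice should be fixed before data collection.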

5.2. Innovations and Contributions

This study introduces several innovations in the field of assistive technologies for visually impaired users. First, we present a novel integration of real-time AI object detection with haptic feedback, creating a multimodal interface that combines tactile and auditory feedback to enhance spatial awareness and environmental interaction. Our application enables users to physically “explore” detected objects through touch-triggered vibrations, offering a more intuitive and immersive experience. Second, we conducted a comparative usability and user experience study evaluating the impact of combining haptic feedback with an audio interface on blind users’ exploration of their environment. The evaluation results indicate that multimodal interaction enriched with haptic feedback yields better results than an audio-only interface. These contributions advance assistive technology by integrating haptic feedback into multimodal interaction for visually impaired users, especially with respect to spatial awareness and user autonomy. Finally, our evaluation combined two questionnaires, one assessing usability and one assessing user experience, to analyze not only the application’s performance but, more importantly, how haptic interaction improves human–computer interaction for visually impaired users.