1. Introduction

Efficient multi-robot coordination is essential for autonomous transport systems operating in confined environments. Most existing collision avoidance strategies rely on modifying predefined paths to resolve collisions through waypoint reconfiguration or real-time deviations. Although in many modern systems robots can replan their paths online, there still exist structured environments where trajectories are predefined and cannot be easily modified due to strict safety or process constraints. Typical examples include automated warehouses where navigation follows predefined graphs or designated lanes [

1], and transport systems operating in confined spaces such as tunnels or narrow corridors [

2,

3]. In these contexts, path geometry is fixed before execution, and adjusting the velocity profile along the predefined route becomes the most effective strategy to ensure safe coordination. This motivates the need for velocity profile optimization as an effective strategy for ensuring collision-free coordination without altering the trajectories. These methods can be implemented using either centralized or decentralized approaches, with centralized methods leveraging global coordination for trajectory planning and decentralized methods relying on local decision-making:

Centralized collision avoidance methods involve a central controller that has complete knowledge of the system’s state and plans the trajectories of all robots accordingly. This approach ensures globally optimal solutions by formulating the problem as an optimization task. Centralized methods also require constant communication between each robot and a central controller, which acts as the coordinator of the system. This dependency introduces potential limitations in terms of scalability, robustness to communication failures, and real-time applicability in dynamic environments.

Decentralized methods, in contrast, distribute the decision-making process among individual robots. Each robot makes trajectory adjustments based on local information. These methods enable real-time collision avoidance and scale more efficiently as the number of robots increases. They are especially advantageous in scenarios with limited or unreliable communication, such as search and rescue missions, distributed surveillance, or large-scale agricultural settings. In such contexts, a centralized controller may be unavailable, and decentralized strategies become not just preferable but necessary. However, they often result in suboptimal solutions compared to centralized approaches, as each robot operates without full knowledge of the global system state [

4,

5,

6].

Most of the existing literature focuses on modifying predefined paths to resolve collisions, while approaches that optimize only the velocity profiles remain less explored. In particular, for centralized approaches, to the best of the authors’ knowledge, no significant works exist that achieve collision avoidance only by adjusting velocity profiles while keeping paths unchanged [

7,

8,

9,

10]. On the other hand, for decentralized methods, the majority of research also employs path modifications [

11,

12,

13,

14], with the exception of the Reciprocal Velocity Obstacles approach, which enables real-time local velocity adjustments to avoid collisions [

15,

16].

Centralized collision avoidance, however, can be effectively formulated as an optimization problem through techniques such as Mixed-Integer Linear Programming [

17] and Mixed-Integer Nonlinear Programming [

18,

19]. The flexibility of this framework makes it well-suited for handling both discrete decision variables, such as robot priorities or scheduling constraints, and continuous variables such as velocity profiles. Genetic Algorithms [

20,

21] can also be used as an optimization method in centralized trajectory planning due to their ability to explore complex, high-dimensional search spaces without requiring gradient information. Compared to traditional mathematical optimization methods, they provide great flexibility in handling nonlinear constraints and multi-objective optimization problems. This study employs a centralized optimization framework using a Genetic Algorithm to compute optimal velocity profiles for multiple robots while ensuring collision avoidance. By integrating constraints on travel time, energy consumption, and kinematic feasibility, the proposed approach balances efficiency and safety.

2. Problem Formulation and Proposed Methods

In this section, we define the multi-robot collision avoidance problem addressed in this work. The objective is to optimize the velocity profiles of robots moving along predefined paths to minimize travel time and energy consumption while avoiding collisions. The problem is formulated as a constrained optimization task within a centralized framework, where a global controller has full knowledge of the environment and robot trajectories.

2.1. Problem Definition

Consider a fleet of n autonomous, omnidirectional mobile robots operating in a two-dimensional environment. Each robot follows a predefined path, defined as a set of waypoints connecting an initial point to a final point. The motion of each robot is subject to kinematic constraints, including maximum velocity and acceleration limits. Each robot i follows a predefined path , parameterized by arc length , where is the total length of the path for robot i. Here, and represent the Cartesian coordinates of the path at the arc-length position s, which increases from 0 at the start of the trajectory to at the goal point. The actual position of robot i at time is given by , where represents the distance covered along the path up to time , based on the robot’s velocity profile, parameterized by samples .

The objective is to compute optimized velocity profiles that ensure the following:

No two robots of the system come closer than a defined safety margin at any time.

The total travel time of the robots is minimized.

The total energy consumption of the robots is minimized.

This optimization problem involves adjusting velocity profiles to satisfy constraints while minimizing a cost function. The orientation of the robots is not considered in this study, as the optimization focuses only on the translational motion along predefined paths. Moreover, each robot follows predefined velocity and acceleration limits:

The following constraint ensures that the distance between any two robots at time

is greater than a safety margin

:

where

denotes the Euclidean norm, corresponding to the distance between the Cartesian positions of robots

i and

j at time

, while

N is the minimum of the

samples of the trajectory of robot

i and the

samples of the trajectory of robot

j.

The proposed approach formulates the collision avoidance problem as an optimization task aimed at adjusting the velocity profiles of multiple robots while maintaining their predefined paths. The methodology employs a centralized optimization framework based on a Genetic Algorithm to determine the optimal velocity adjustments that minimize the objective function.

2.2. Genetic Algorithm Framework

Genetic Algorithms are bio-inspired optimization techniques that emulate the process of natural selection. These algorithms iteratively refine a population of candidate solutions through selection, crossover, and mutation, driving the population towards optimal solutions. In the context of multi-robot coordination, Genetic Algorithms are particularly advantageous because they efficiently explore large search spaces without requiring gradient information [

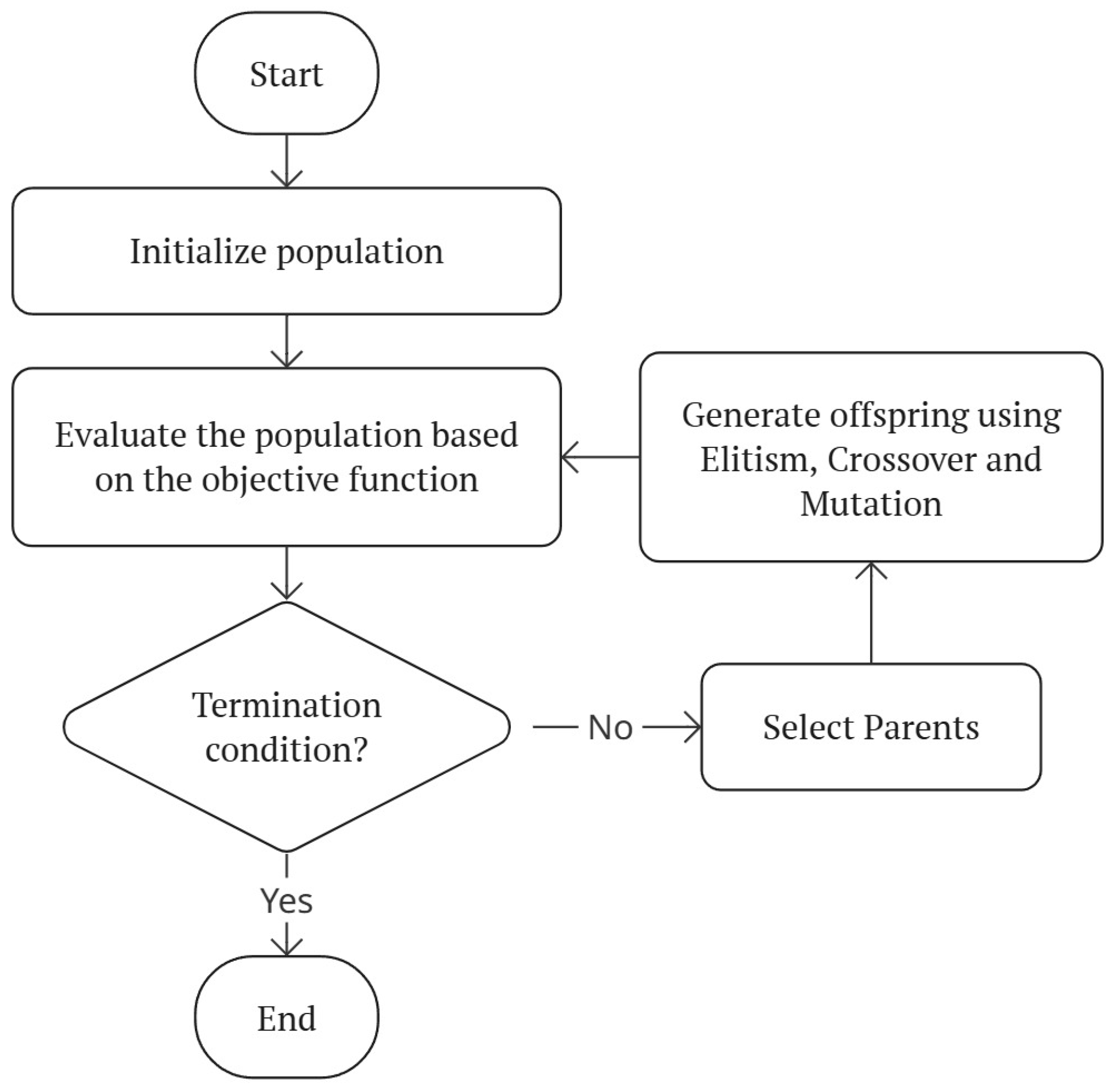

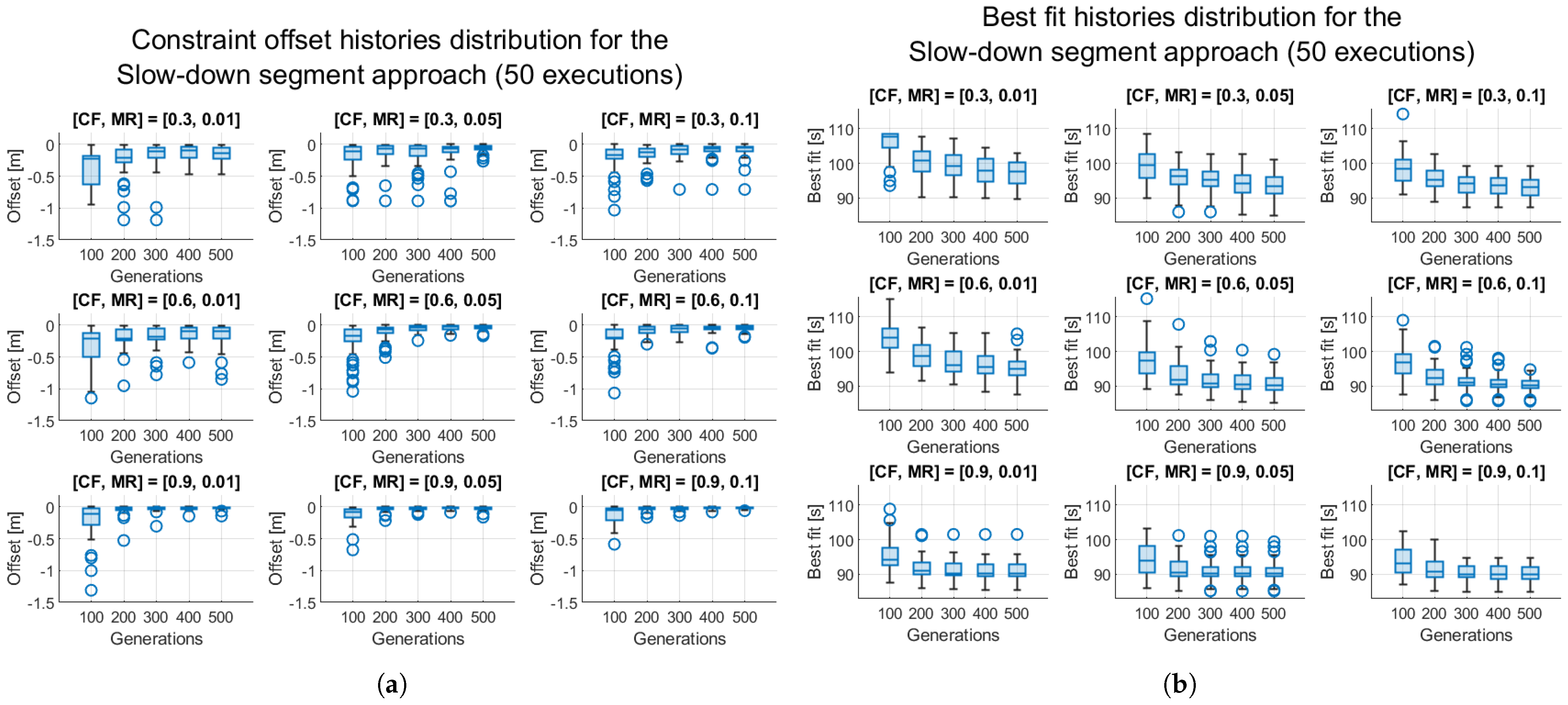

5]. The optimization process, shown in

Figure 1, begins with an initial population of velocity profiles, where each individual represents a candidate solution. At each iteration, individuals are evaluated based on an objective function that considers travel time, energy consumption, and collision constraints. After the evaluation phase, the best-performing individuals are selected to generate the next population. Parent selection is performed using a stochastic uniform selection method, which ensures that individuals with higher fitness have a greater chance of being selected, while maintaining diversity across the population. Other selection strategies can also be employed, each providing a different balance between selection pressure and population diversity. After the selection phase, the next generation of individuals is produced through a combination of elitism, crossover, and mutation operations. Elitism is first applied to ensure that the best-performing individuals of each generation are directly copied to the next one without modification. This mechanism prevents the loss of high-quality solutions during the evolutionary process and accelerates convergence by preserving the most promising candidates. After elitism, the remaining individuals in the new population are generated through crossover and mutation. Crossover operators combine parent solutions to produce offspring by exchanging their genetic material. The Crossover Fraction (CF) determines the portion of the population that undergoes crossover at each generation, controlling how much information is shared between parent solutions. A higher CF emphasizes exploitation, focusing the search around high-fitness regions, whereas a lower CF maintains diversity by allowing more direct inheritance from parent individuals. Mutation, applied to each gene with a probability defined by the Mutation Rate (MR), introduces small random variations to promote exploration and avoid premature convergence. Together, crossover and mutation balance the dual objectives of exploration and exploitation in the search space [

20,

22].

The following sections present different strategies for solving the problem defined in

Section 2.1 using a Genetic Algorithm, where the optimization problem is formally defined at different levels.

2.3. Maximum Velocity Optimization

In this method, the goal is to minimize the total travel time for all robots while ensuring collision avoidance. This is achieved by setting up the optimization problem in Equation (

3) with the robots’ maximum velocities

as decision variables. Rather than minimizing the individual travel times, the objective is to minimize the maximum travel time among all robots, that is, the time required for the last robot to reach its goal. In this formulation, we select the robot with the highest finish time through the inner maximum function. Then, we minimize the finish time of the selected robot through the outer minimization. At the same time, collision avoidance is guaranteed by setting constraints that prevent any two robots from getting closer than the safety margin

. These collision constraints take into account the trajectories of all robots and ensure that the solution complies with the physical limitations of each robot defined in Equation (

1). By solving this optimization problem, the algorithm produces the optimal combination of maximum velocities for all robots that achieves both collision avoidance and minimum task completion time. We define

as the time taken for robot

i to reach the goal under a given maximum velocity

:

2.4. Slow-Down Segment Optimization

The previous approach directly optimizes the velocity profiles of the robots along their entire trajectories. However, a more localized strategy can be adopted to minimize unnecessary velocity reductions while still efficiently preventing collisions. The Slow-Down Segment Optimization method introduces a targeted deceleration phase at the beginning of each robot’s trajectory, ensuring collision avoidance while allowing the robots to operate at their maximum velocity for the remainder of their paths.

This method formulates the problem as a MINLP optimization, where the decision variables include the length of the initial slow-down segment, the scaling factor applied to the maximum velocity of the robot during the segment, and a binary variable determining whether the slow-down segment is applied: if is zero, the velocity profile of robot i is not modified.

The objective function aims to minimize the total travel time while satisfying constraints related to collision avoidance and robot kinematics. By strategically slowing robots at the start of their trajectories, this approach avoids the inefficiencies associated with reducing velocities over the entire path. It ensures that robots reach their maximum allowable speed as soon as possible, thereby maintaining efficient task execution while preventing collisions at critical points. The overall optimization problem is modeled as follows:

where

and

are the bounds for the scaling factor, while

and

are the bounds for the length of the slow-down segment. The lower bound for the segment length is set to

, as the length of the segment cannot be negative, while the upper bound for the scaling factor is fixed at

, since a robot’s velocity cannot exceed its maximum value. This approach offers some key advantages:

By focusing the deceleration on a small part of the trajectory, robots can maximize their velocity for the majority of their path, improving overall task efficiency.

The slow-down segment allows for strategic velocity adjustments that prevent collisions without requiring extensive modifications of the robots’ entire trajectories, which could lead to extreme delays.

By integrating this slow-down segment approach into the optimization framework, the robots can collaborate efficiently while avoiding collisions, resulting in a solution that is both time-optimal and collision-free.

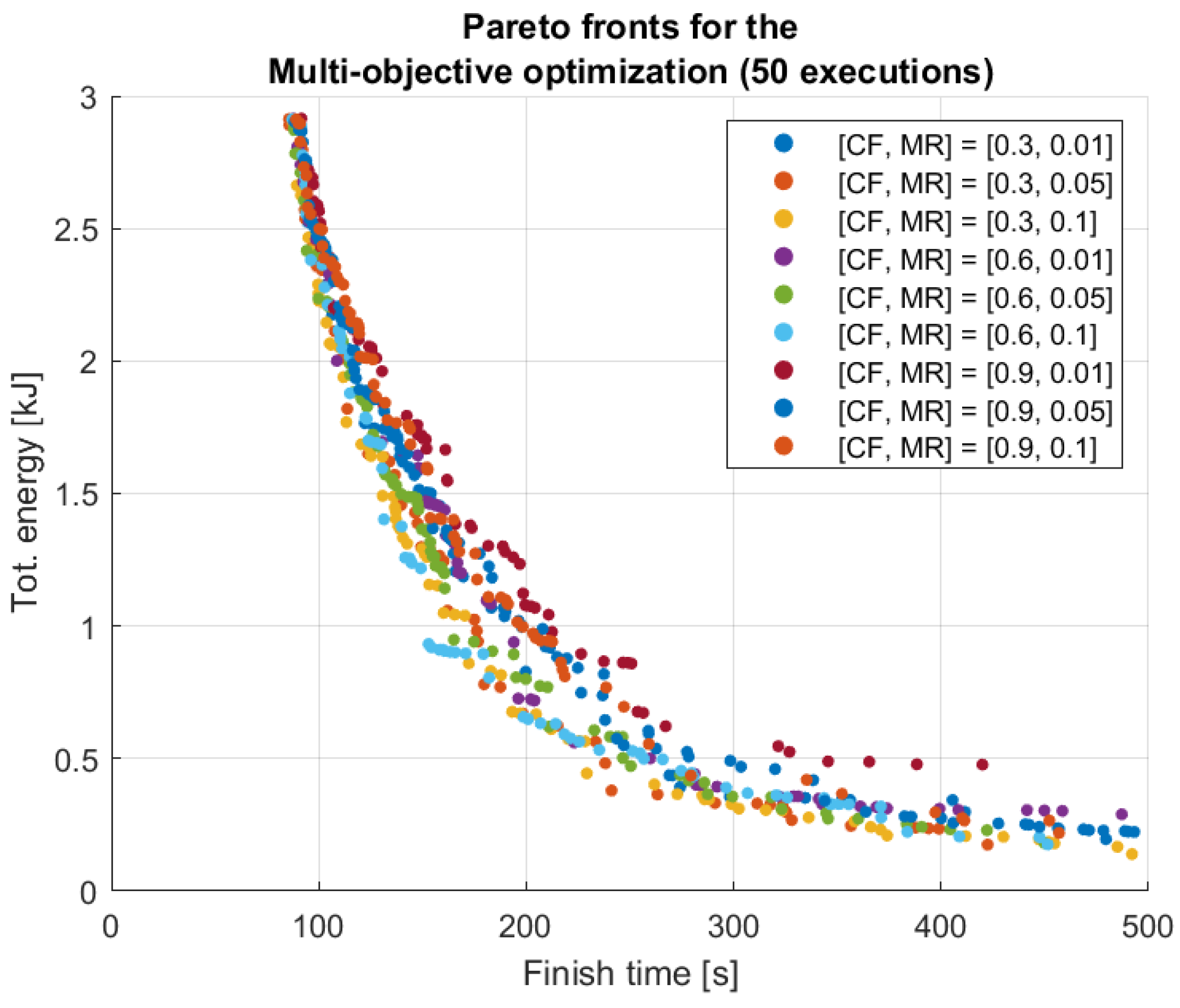

2.5. Multi-Objective Optimization: Minimizing Finish Time and Energy Consumption

In multi-robot systems, besides finish time, energy consumption is also a critical factor. Balancing these two conflicting objectives is crucial, particularly in scenarios where power efficiency is essential for extended operations or when energy availability is a limiting factor. A multi-objective optimization framework is implemented to address this trade-off, simultaneously optimizing total task completion time and energy consumption. On the one hand, minimizing task completion time generally leads to higher velocities, which accelerate operations but result in greater energy consumption, particularly during acceleration and deceleration phases. On the other hand, reducing energy consumption requires lower velocities, which increase task duration but ensure more efficient energy utilization [

23]. To address this trade-off, a Pareto-based multi-objective optimization is employed. The Pareto front represents a set of optimal solutions where no single solution is superior in both objectives simultaneously [

24]. Each point on the Pareto front represents a different trade-off: some solutions can be chosen to prioritize faster task completion at the cost of higher energy consumption, while others prefer lower energy usage at the expense of longer travel times. The optimization problem is formulated as follows:

where

is the mechanical power of robot

i, while

and

are the acceleration and velocity of robot

i at time step

k,

is the mass of robot

i and

is the time interval between two consecutive time steps of robots’ trajectories. The Pareto front visualization allows decision-makers to select the most suitable trade-off, depending on operational constraints. If task completion time is prioritized, a solution with shorter duration but higher energy consumption can be selected. Conversely, if energy efficiency is critical, a solution with lower energy use but longer task duration is preferred. By integrating multi-objective optimization into the slow-down segment approach, the method not only ensures collision-free and efficient task execution but also provides a flexible framework for balancing speed and energy consumption based on application-specific needs.

2.6. Baseline Methods

In this section, we introduce the baseline methods used as references for evaluating the performance of the proposed optimization strategies. These methods do not rely on formal optimization techniques but provide valid, collision-free solutions that are used as benchmarks to assess the proposed GA-based approaches.

The Brute-Force approach explores all possible velocity combinations for the n robots to determine collision-free solutions. Initially, the algorithm generates all potential combinations of maximum velocities within the predefined range , using a discrete step d. Then, it selects the maximum velocities that allow planning collision-free trajectories, generating feasible solutions. Finally, among all the feasible solutions, the one with the highest value and the lowest covariance is selected. To mitigate the high computational cost, a hierarchical evaluation strategy is employed to prevent the algorithm from searching through the combinations in the whole search space . With this strategy, the algorithm first searches for the combinations in the smallest possible interval, which is . If no feasible combination is found, the algorithm expands the interval by multiples of the discrete step d, starts a new iteration and continues the process until a feasible combination is found. Despite this optimization, the approach remains limited by its exponential growth in complexity, making it impractical for large-scale systems.

To improve computational efficiency over the Brute-Force method, a Prioritized Planning approach is employed [

25]. This method sequentially assigns motion plans to robots based on predefined priority levels, effectively transforming the multi-robot trajectory planning problem into a series of single-robot planning tasks in a known dynamic environment. In this approach, each robot is assigned a priority based on a predefined heuristic. Since all robots collaborate toward the same objective, priority assignment is based on path length, that is, robots with longer trajectories are given higher priority since they could run into the highest number of collisions. The highest-priority robot plans its trajectory first, following its maximum velocity constraints. Subsequent robots plan their trajectories sequentially, treating the previously planned robots as dynamic obstacles. Collision avoidance is achieved by iteratively reducing the maximum velocity of each newly planned robot starting from

until no collisions occur. This velocity reduction follows a discrete decrement step

d, ensuring computational efficiency while maintaining feasibility. The process continues until all robots have a collision-free velocity profile, resulting in a vector of maximum velocities

for each robot

i, ensuring collision-free trajectories for all the robots. While this method significantly reduces computational cost compared to brute-force search, its sequential nature may lead to suboptimal solutions, as lower-priority robots may experience excessive slow-downs in order to accommodate higher-priority ones.

4. Conclusions and Future Developments

The final results for the first scenario, summarized in

Table 2, provide a complete comparison between the two baseline methods and the three proposed approaches, respectively: Brute Force (BF), Prioritized Planning (PP), Maximum Velocity Optimization (MVO), Slow-Down Segment Single-Objective Optimization (SDS-SOO), and Slow-Down Segment Multi-Objective Optimization (SDS-MOO). These approaches are evaluated according to the criteria introduced in

Section 3.1.1, including finish time, total energy consumption, and execution time.

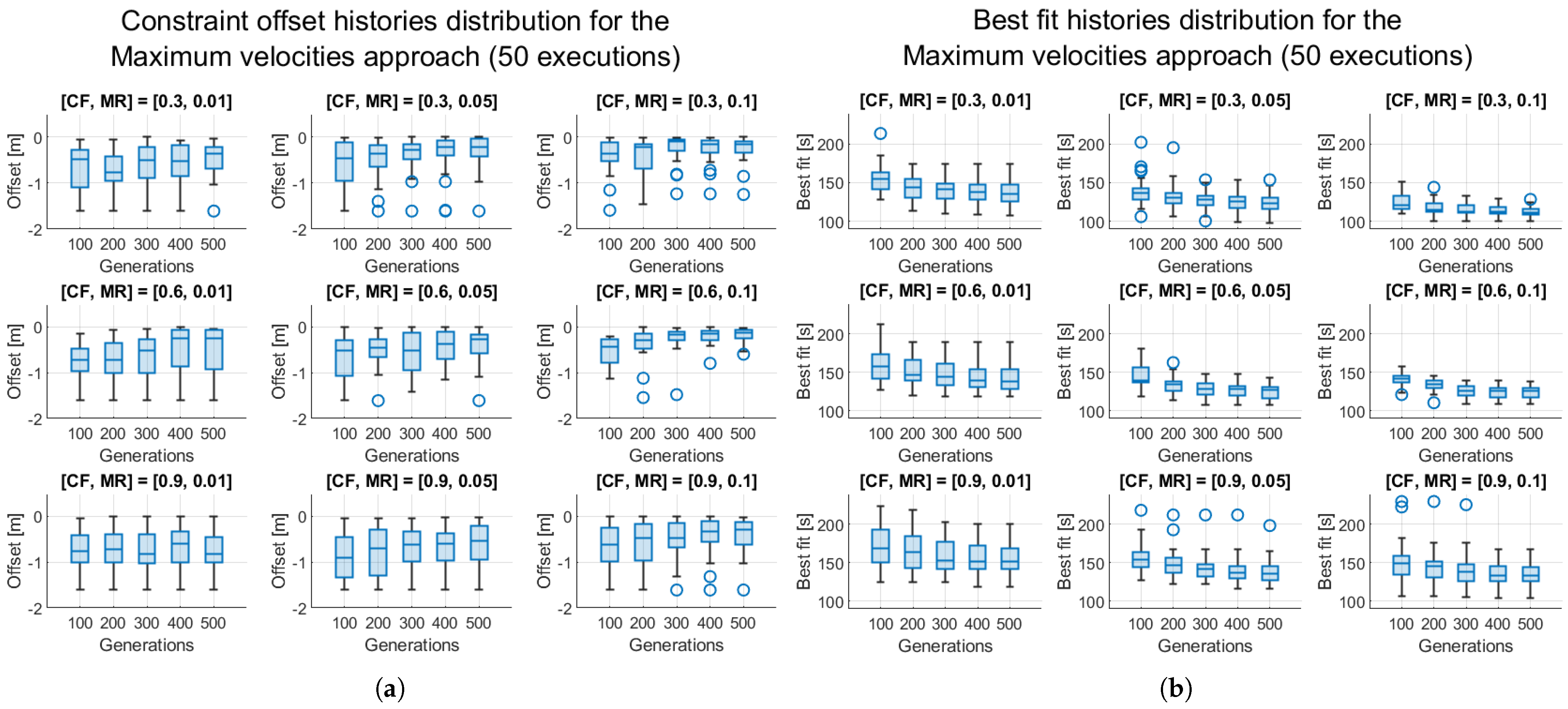

The values in

Table 2 summarize the results obtained in

Section 3.1.3,

Section 3.1.4 and

Section 3.1.5. Only for the SDS-MOO approach, the presented result is the best Pareto-optimal solution across 50 simulations for 9 different combinations of CF and MR, as shown in

Section 3.1.5, based on Finish time and Total energy. Mean distance is the mean distance associated with the best Pareto-optimal solution, while Execution time is the best median across all the combinations and simulations.

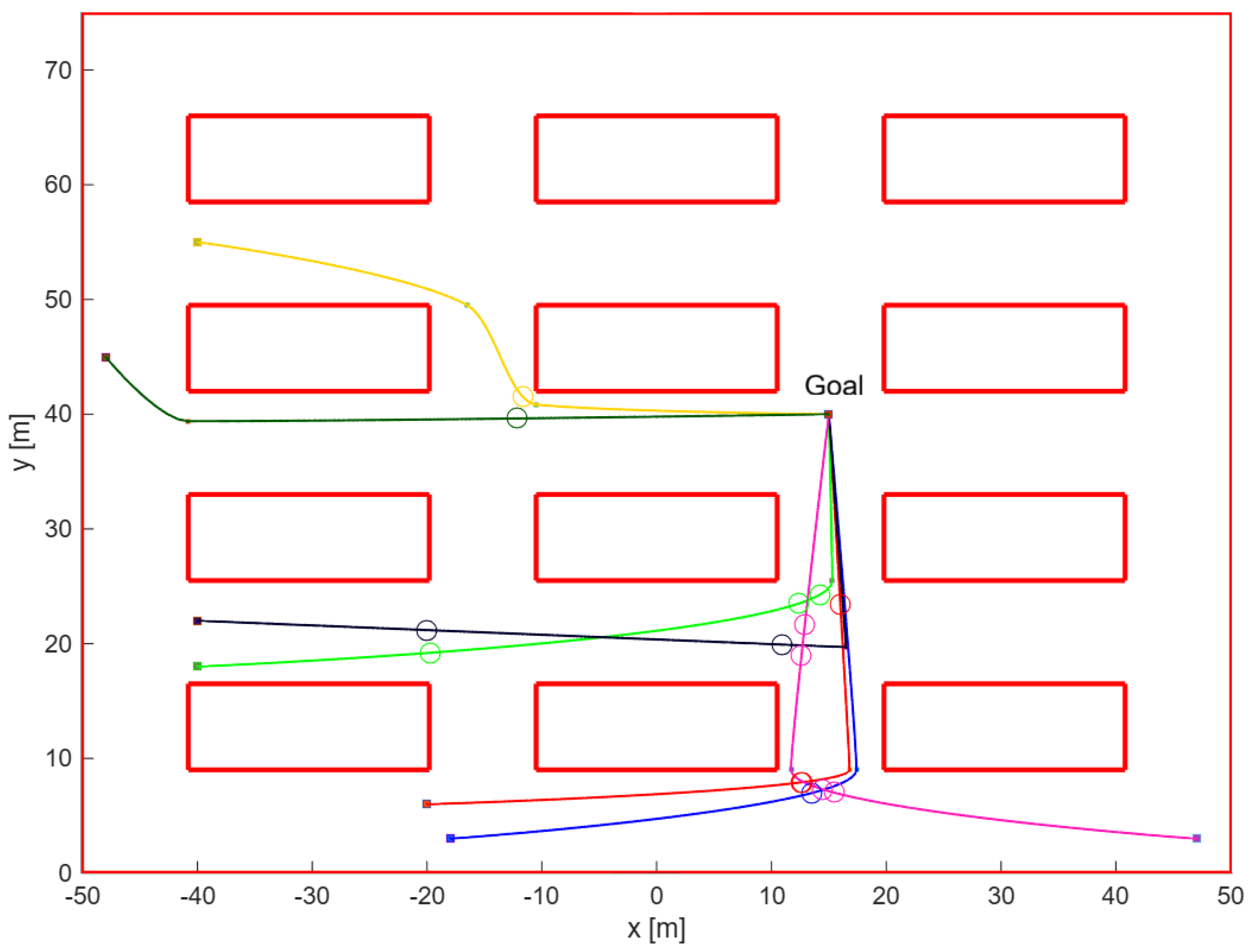

In the starting scenario, where no collision avoidance strategy is applied, the 7 robots move freely at their maximum allowed velocities, resulting in a finish time of 76 s and a total energy consumption of 3.00 kJ. These values represent the best theoretical performance in terms of travel time but also the worst-case scenario for safety management, since there is still no collision avoidance algorithm involved.

The BF approach, while ensuring a valid collision-free solution, achieves a finish time of 110 s. However, this method lacks optimization, both in terms of execution time and solution quality. With an execution time of 84 min, it is computationally demanding and impractical for real-world applications. In contrast, the PP approach offers an incredibly fast execution time of 0.44 s, but at the cost of the worst finish time of 126 s, underlining the trade-off between computational efficiency and solution quality.

The MVO approach represents the first optimization-based method introduced in this study. It gives a reasonable balance between computational effort and solution quality, achieving a median finish time of 111 s across 50 independent executions and an average execution time of 5 min. This result demonstrates the benefits of introducing optimization techniques into the collision avoidance strategy.

The SDS-SOO approach builds on the strengths of MVO, achieving a median finish time of 90 s. This result marks a substantial improvement over the MVO approach and shows the effectiveness of the Slow-Down Segment Optimization strategy in reducing travel time without increasing computational cost.

Finally, the SDS-MOO approach introduces energy consumption as an additional optimization objective alongside finish time. The best Pareto-optimal solution from the 9 tested parameter combinations achieves a total energy consumption of 0.26 kJ, with a finish time of 328 s. While this specific result does not represent the absolute minimum in travel time or energy, the SDS-MOO approach offers a broader set of Pareto-optimal solutions than the other methods, enabling flexibility based on varying priorities. The SDS-MOO approach clearly performs better in solution diversity and flexibility, offering a rich spectrum of Pareto-optimal solutions. This flexibility enables decision-makers to prioritize either travel time or energy consumption based on specific application requirements, enhancing the adaptability and robustness of the optimization strategy.

The experimental results demonstrated that baseline methods, such as Brute-Force and Prioritized Planning, provide valid but suboptimal solutions. The introduction of optimization-based approaches allowed for a more balanced trade-off between computational efficiency, total travel time, and energy consumption. The Slow-Down Segment Multi-Objective Optimization further improved the framework by introducing Pareto-based optimization, offering decision-makers flexibility in selecting solutions that balance speed and energy efficiency.

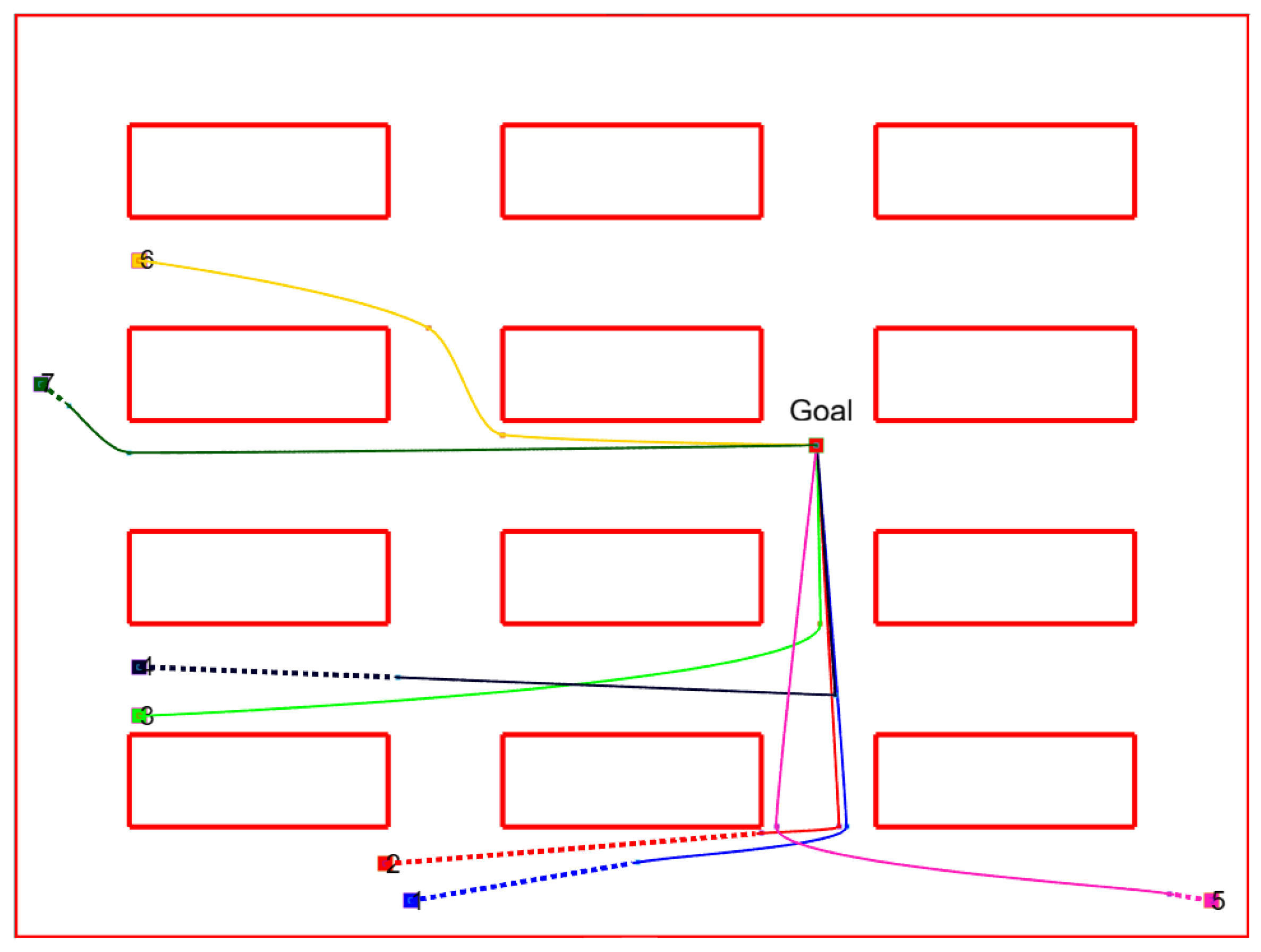

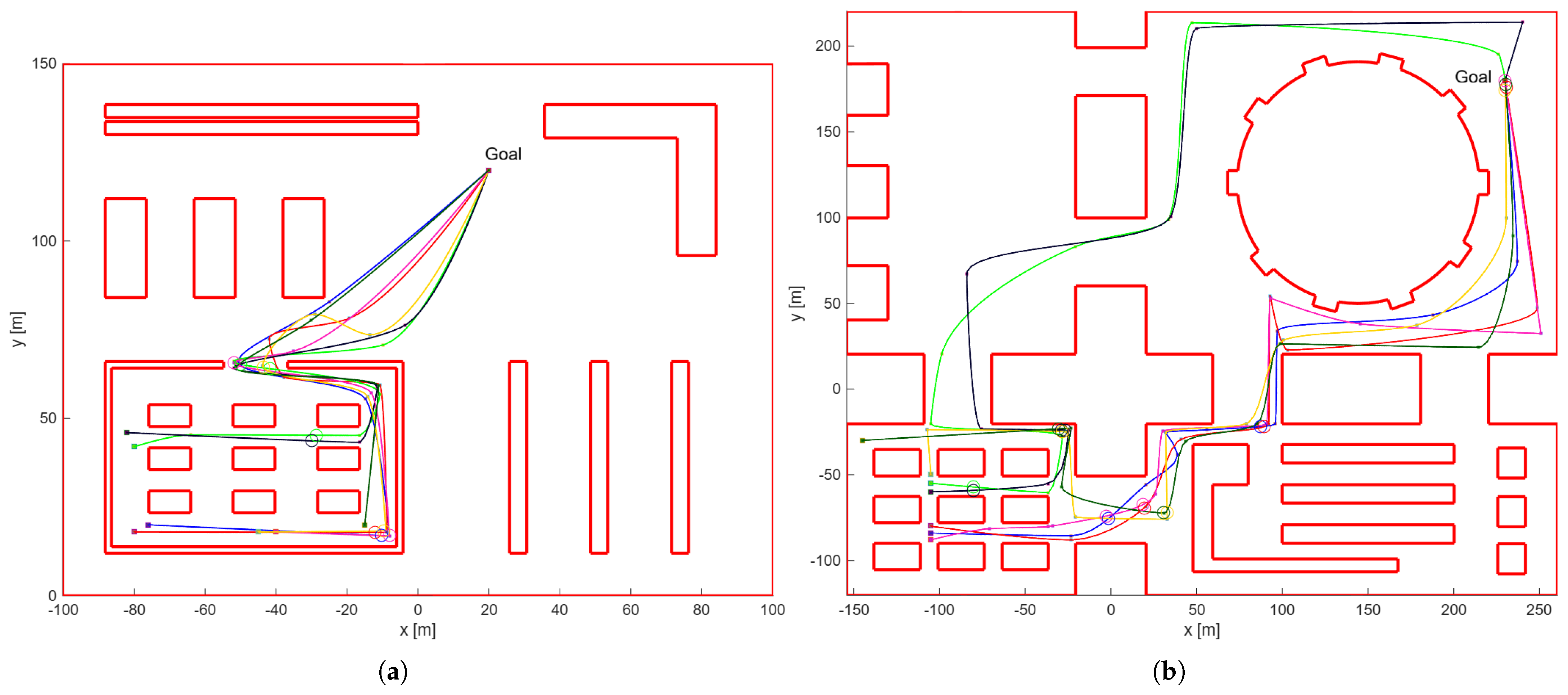

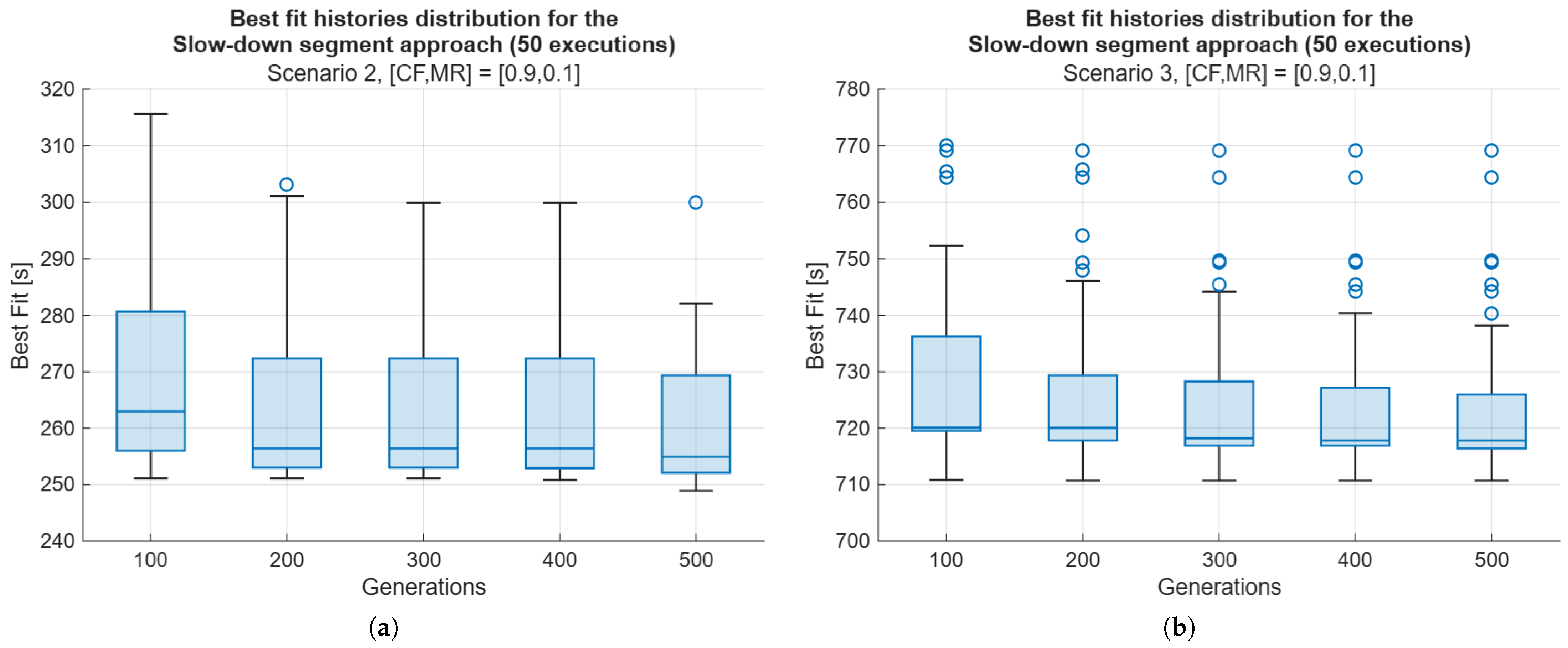

To further validate the scalability and robustness of the proposed framework, two additional simulation scenarios were analyzed. These scenarios were characterized by larger and more complex environments, with irregular obstacles and narrower passages. In the initial conditions, 5 and 10 collisions were detected, with total finish times of 234 s and 686 s for the second and third scenarios, respectively. The experiments focused on the SDS-SOO approach, using the parameter combination

, which yielded the best performance in the first scenario. The same Genetic Algorithm parameters, namely 100 individuals and 500 generations, were maintained. After the optimization, all collisions were successfully resolved, achieving the best median finish times of 255 s and 717 s. These results confirm that the proposed framework maintains effective collision avoidance and consistent performance even when scaling to larger and more complex environments. The increase in map size led to a higher number of trajectory samples for each robot, resulting in greater computational complexity, yet the optimization process remained stable and efficient.

Table 3 summarizes the main quantitative results across the three scenarios. The finish time increase factor, defined as the ratio between the optimized and the initial finish times, progressively decreases from 1.18 in the first scenario to 1.04 in the third, indicating that as the environment grows in size, the relative impact of the collision avoidance task on total travel time becomes smaller. This behavior suggests that the algorithm’s intervention becomes proportionally less invasive in larger layouts, where robots naturally tend to be more spatially distributed. Conversely, the execution time increases approximately linearly with the average path length, which grows from 67 m to 629 m. Although this trend does not represent a rigorous scalability analysis, it provides an initial indication of how computational effort increases with environment size and robot path complexity, setting the basis for future investigations on the framework’s scalability and computational performance. A possible strategy to mitigate execution time growth would be to introduce multi-resolution evaluation techniques, in which the distance computations between robots are initially performed at a lower temporal resolution across the entire population, and progressively refined only for the best-performing individuals. This subsampling approach would allow for a reduction in the number of collision checks in the early stages of the optimization, focusing computational resources on the most promising solutions in later generations, thus preserving accuracy while significantly reducing overall execution time.

Future developments will focus on extending the current framework to address more dynamic and uncertain environments, where moving obstacles or unpredictable changes in robot trajectories may occur. This extension will require integrating time-varying constraints and predictive elements into the optimization process, potentially through the inclusion of stochastic optimization techniques. Moreover, additional investigations will compare the performance of the proposed Genetic Algorithm-based approach with other metaheuristic optimization methods, such as Particle Swarm Optimization or Differential Evolution, to assess differences in convergence speed, robustness, and solution quality. Finally, experimental validation will be pursued using a physical multi-robot platform to evaluate the proposed framework under real-world conditions, considering factors such as sensor noise, actuation delays, and communication uncertainties. These advancements will further strengthen the practical applicability of the method and provide a deeper understanding of its performance beyond simulated environments.

Overall, this study highlights the effectiveness of Genetic Algorithm-based optimization for multi-robot coordination, demonstrating its potential for industrial automation, logistics, and autonomous transportation. By addressing these challenges, future research can further refine the approach, improving its adaptability and scalability for real-world applications.