1. Introduction

In sustainable manufacturing and Industry 4.0, digitization, cloud computing, and intelligent systems drive innovation by enhancing precision and sustainability. Computer numerical control (CNC) machine tools, the foundational “mother machines” of high-value manufacturing, underpin modern industry through their automation, precision, and reliability, profoundly affecting efficiency and quality in sectors such as aerospace, automotive, and precision engineering [1]. Cutting tools, the critical end-effectors of CNC systems, interact directly with the workpiece and are therefore highly vulnerable to failure.

In harsh cutting environments, cutting tools continuously endure intense mechanical impacts, high temperatures and pressures, and severe friction, which make failure modes such as wear, damage, and even chipping inevitable. Progressive tool failure directly degrades workpiece surface quality, causes dimensional inaccuracies, and raises scrap rates. More severely, sudden tool breakage can cause catastrophic secondary damage to the workpiece, the fixtures, or even expensive core components such as the machine spindle, resulting in significant economic losses and production safety accidents [2]. Traditional manufacturing mitigates these risks with experience-based periodic tool changes. This passive approach ignores how tool life varies across operating conditions and materials, so tools are often discarded while they still have substantial remaining useful life (RUL), wasting resources and contradicting green manufacturing principles [3]. Intelligent technologies for real-time tool health assessment and RUL prediction are therefore essential for the transition from “time-based” passive maintenance to “condition-based” predictive maintenance, a key focus in academia and industry [4]. Accurate RUL prediction prevents failures, ensures safety, improves efficiency, maximizes tool utilization, and allows tool changes to be integrated into production scheduling to minimize downtime. It thus bridges sensing and decision-making and supports intelligent, unmanned factories such as “lights-out” operations.

However, despite significant progress in data-driven prediction methods in recent years [

5,

6], their applications to complex and variable real-world industrial scenarios still face a series of deep-seated challenges, which constitute the current technical bottlenecks in research. First, existing methods show deficiencies in multimodal data fusion, often using simple concatenation or weighting that ignores deep nonlinear interactions among time-series signals for cutting dynamics, geometric information for wear states, and static parameters for operating conditions [

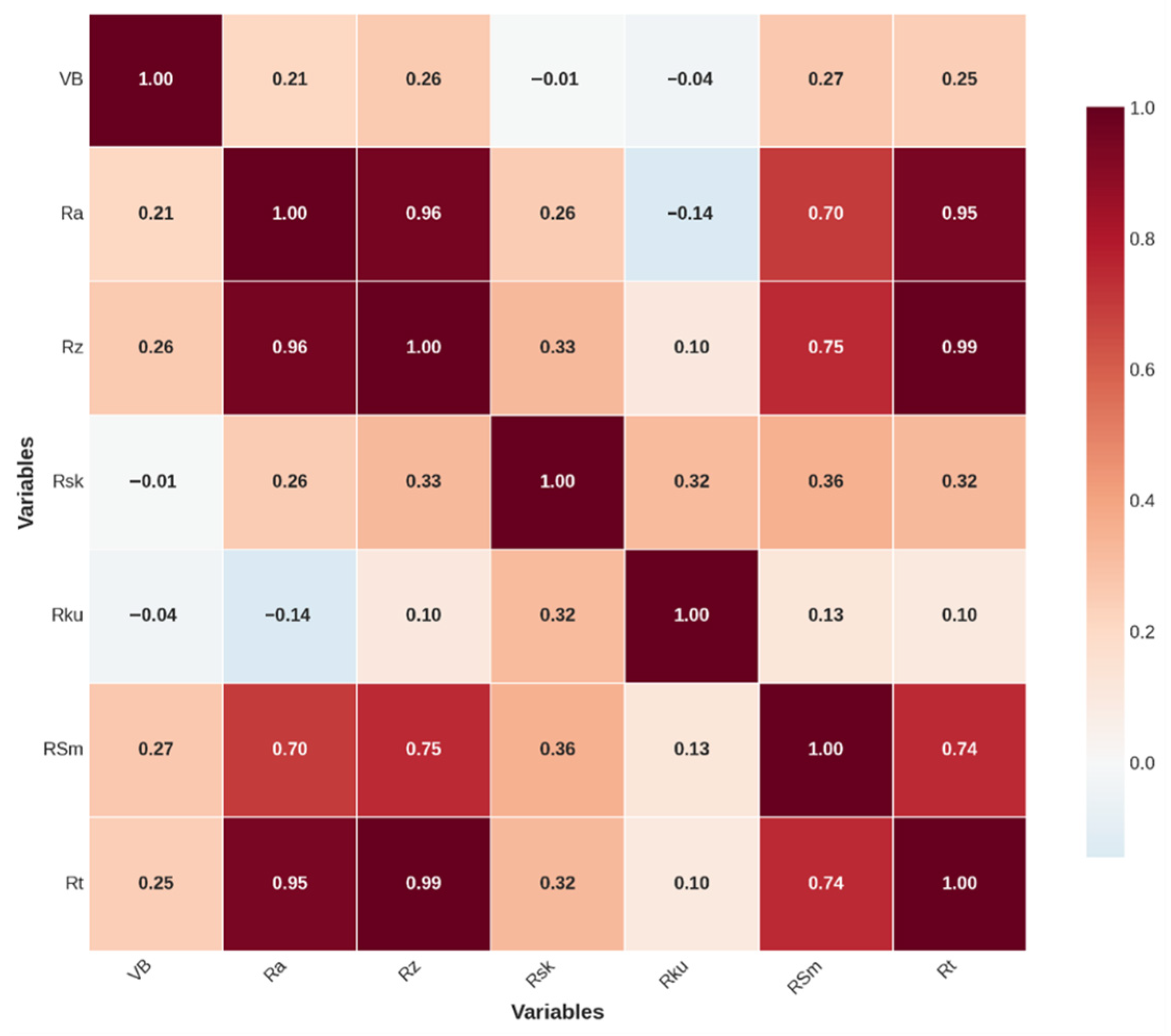

7]. Second, they fail to exploit structural dependencies, as traditional models handle Euclidean data well but overlook non-Euclidean graph structures in machining. For instance, entities include tools as nodes representing wear states, sensors capturing force and roughness signals, workpieces linked to surface quality metrics, and experimental phases grouping sequential operations. Relationships manifest as edges denoting dependencies, such as the physical coupling between tool wear VB in mm and cutting forces Fx/Fy/Fz from sensors, or contextual links across batches that reflect variability in machining conditions and degradation patterns. Ignoring these graphs limits generalization and robustness [

8]. Finally, single-task regression overlooks health state transitions, such as from “normal” to “rapid wear”, failing to provide staged maintenance guidance. This paper addresses these challenges by integrating GNNs with optimized Transformers for deep multimodal fusion, precise graph relational inference, and multi-task learning, enabling simultaneous RUL prediction and health state classification in dynamic machining processes.

2. Literature Review

Cutting tool RUL prediction is a core topic in smart manufacturing. Existing research follows three technical routes: physical and statistical models, traditional machine learning and ensemble learning, and deep learning. These routes have advanced prediction accuracy and generalization through, respectively, theoretical depth, algorithm optimization, and industrial application.

Physical and statistical models use mathematical equations for mechanistic and data-driven prediction. Purely physical modeling is constrained by multivariable complexity, so it instead guides data-driven methods through fusion. For example, the physics-informed hidden Markov model (PI-HMM) [9] and Gaussian process regression [10] improve physical consistency and uncertainty quantification, while early HMMs tracked wear using cutting forces [11]. Statistical approaches such as multi-stage Wiener processes with change-point detection characterize non-stationary wear phases [12,13].

Traditional machine learning and ensemble learning build efficient models from sensor data, emphasizing accuracy and uncertainty. Support Vector Machine (SVM) hybrids incorporate particle filtering or optimization for time-series and limited-data scenarios [14,15]. Gaussian Process Regression (GPR) variants handle noise through sparsity or genetic algorithms [16,17]. Bayesian methods prevent overfitting and quantify uncertainty [18], with early inference networks [19] and recent Bayesian Regularized Artificial Neural Networks (BRANNs) [20] enabling robust generalization. Ensembles, including random forests [21,22] and Extreme Gradient Boosting (XGBoost) hybrids [23,24], excel in classification and optimization, while AdaBoost suits small datasets [25]. Comparative studies guide model selection for reliability prediction [26].

Deep learning enables end-to-end feature learning with robust architectures. Automated wear analysis employs Fully Convolutional Networks (FCNs) [27] or lightweight Convolutional Neural Networks (CNNs) [28] for high-accuracy wear detection. In aerospace, autoencoders with Gated Recurrent Units (GRUs) monitor composites [29]. Recurrent models such as adaptive Bidirectional Long Short-Term Memory networks (BiLSTMs) [30,31] and multi-sensor Long Short-Term Memory networks (LSTMs) [32] capture temporal dependencies. Hybrid models fuse features via wavelets [33] or combine attention with Independently Recurrent Neural Networks (IndRNNs) [34]. Multirate Hidden Markov Models (HMMs) process acoustic signals [35], and the Maximal Overlap Discrete Wavelet Transform (MODWT) supports label-free IoT-based prediction [36]. Time–frequency LSTMs [37] balance efficiency and interpretability. So do Transformers such as the Power Spectral Density–Convolutional Vision Transformer (PSD-CVT) [38] and the Convolutional Physics-Informed Transformer (Conv-PhyFormer) [39]. Physics-informed deep learning (DL) further improves generalization [40].

Although these studies have moved tool RUL prediction from mechanism-driven to data-driven paradigms, three core challenges remain. First, shallow multimodal fusion overlooks physical couplings and context (e.g., batches and sequences), leading to bias and reduced robustness under noise. Second, inadequate relational modeling of the non-Euclidean graphs formed by entities such as tools and sensors causes redundancy and weakens generalization in variable scenarios. Third, single-task regression neglects health state evolution, limiting sensitivity to staged degradation and the value of maintenance guidance.

To overcome these challenges, this study proposes an end-to-end architecture that integrates multimodal encoding, graph relational inference, and multi-task learning for deep fusion and interaction. Modality-specific encoders refine feature extraction while preserving the traits of each modality. Adaptive weighting addresses modality imbalance and overcomes the limitations of simple concatenation. A graph adaptive fusion module uses graph attention networks (GATs) to construct and learn entity dependency graphs. It dynamically attends to nonlinear interactions and contextual links to improve robustness in noisy environments. Finally, a cross-modal Transformer decoder fuses global features via self-attention across time-series, geometric, and operational data. It feeds dual-head multi-task outputs, one for health state classification and one for RUL regression, which promote knowledge transfer, degradation sensitivity, and precise prediction.

3. Architecture Design for Tool RUL Calculation

The architecture design encompasses key stages such as data acquisition, feature engineering, multimodal feature extraction, graph adaptive fusion, and cross-modal interaction and prediction, aiming to achieve high-precision RUL regression prediction and health status classification of the cutting tool.

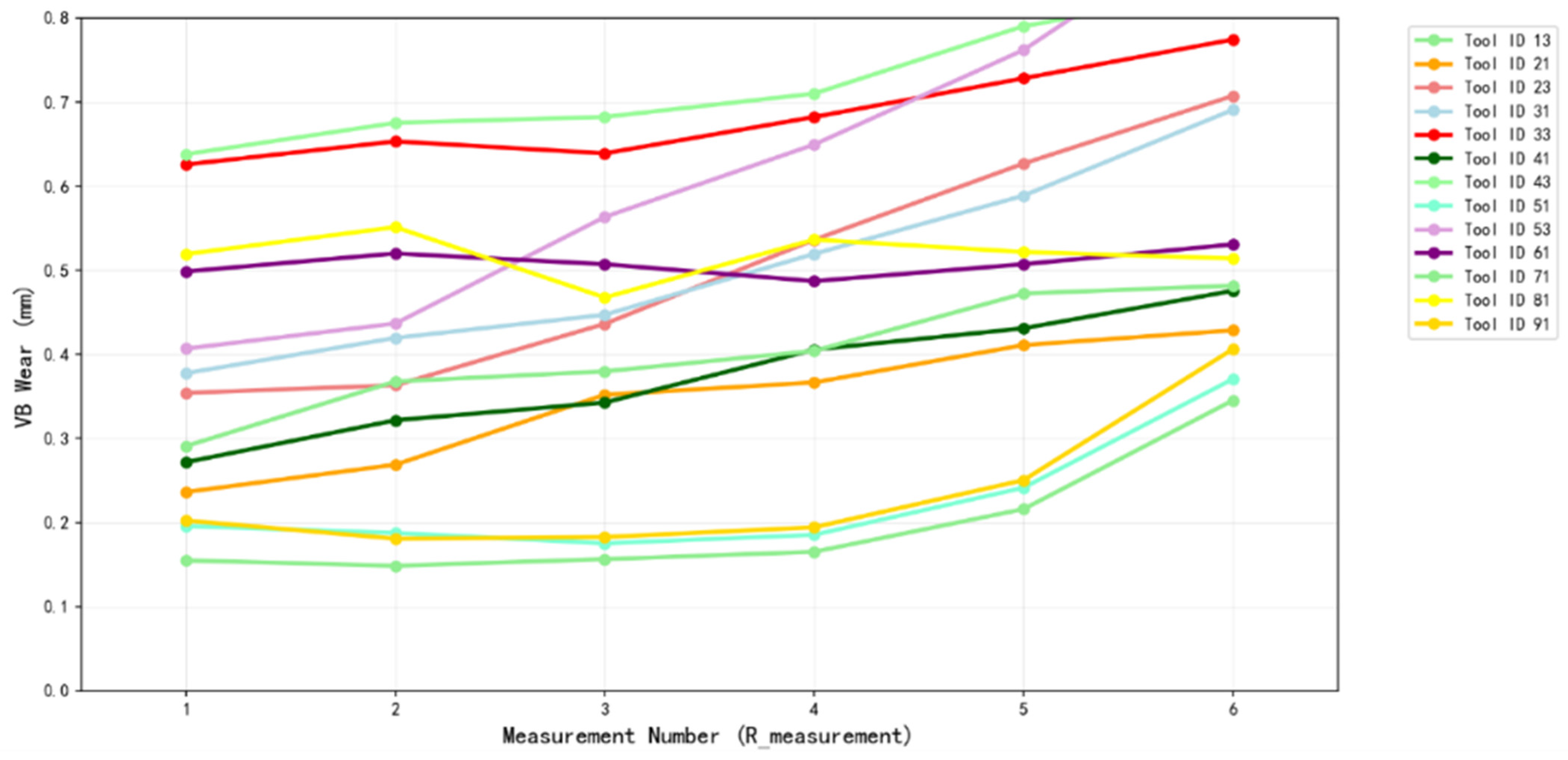

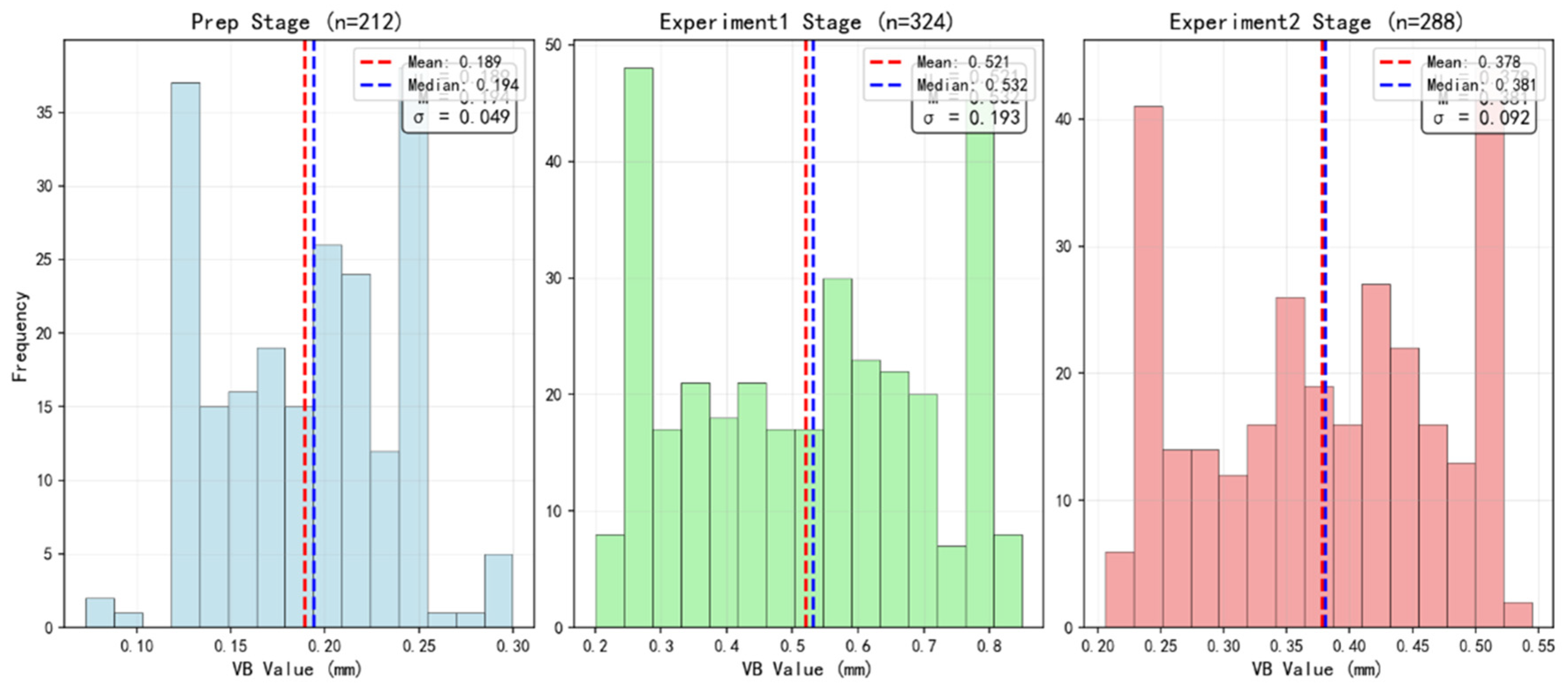

Figure 1 depicts the complete multimodal deep learning model for predicting tool RUL and assessing tool health status. The core idea of the model is to systematically integrate data from different information sources to build a comprehensive understanding of the tool wear process. The process begins with the data acquisition phase, which acquires four key types of data in parallel:

- raw sensor data that directly reflect the physical state of the cutting process, such as the three-directional cutting forces (Fx, Fy, Fz) and a series of surface roughness parameters;
- geometric data that quantify the physical wear of the cutting tool, namely the change in tool diameter before and after use;
- operational parameters that define the machining conditions, including cutting depth, speed, and feed rate;
- phase information that provides context for the data, such as experimental batches and positions.

Together, these four data types form the foundation for the subsequent predictions. They capture cutting force variations, the state of the tool cutting edge, tool wear, and the surface roughness and quality of the machined component [41].
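The four data types can be grouped into one multimodal record. The minimal Python sketch below does this. The class name `MachiningSample` and all field names are hypothetical, chosen for illustration. The paper does not specify its data schema or units.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class MachiningSample:
    """One multimodal record; the schema is hypothetical, for illustration only."""

    # Raw sensor data: three-directional cutting force time series
    fx: List[float]
    fy: List[float]
    fz: List[float]
    # Surface roughness parameters, e.g. {"Ra": 0.8, "Rz": 3.2}
    roughness: Dict[str, float]
    # Geometric data: tool diameter measured before and after use
    initial_diameter: float
    final_diameter: float
    # Operational parameters that define the machining conditions
    cutting_depth: float
    cutting_speed: float
    feed_rate: float
    # Phase information that gives the record its experimental context
    batch: str
    position: str

    def operational_vector(self) -> List[float]:
        """Static operating conditions as a flat vector for a dense embedding."""
        return [self.cutting_depth, self.cutting_speed, self.feed_rate]
```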

After data acquisition, the system enters the feature engineering and preprocessing stage, which transforms raw data into more informative features for the deep learning model. In this stage, high-frequency sensor signals are processed by specialized high-order feature extraction algorithms to reveal their intrinsic patterns. The initial and final tool diameters are used to calculate a key wear indicator, VB, which directly reflects the degree of tool degradation. Operational parameters are integrated, and experimental phase information is embedded (encoded), so that both can be fused mathematically with the other feature types.
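A minimal sketch of this preprocessing in plain Python follows. The paper does not name its high-order feature extraction algorithms. The statistical descriptors used here (mean, standard deviation, RMS, skewness, kurtosis) are therefore an assumption. So are min-max scaling for operational parameters and integer ids for phase labels. Only the VB difference comes from the text.

```python
import math
from typing import Dict, List, Sequence


def signal_features(x: Sequence[float]) -> Dict[str, float]:
    """Statistical descriptors of a force signal (assumed stand-in for the
    paper's unspecified high-order feature extraction)."""
    n = len(x)
    mean = sum(x) / n
    std = math.sqrt(sum((v - mean) ** 2 for v in x) / n)
    rms = math.sqrt(sum(v * v for v in x) / n)
    if std == 0:
        skew, kurt = 0.0, 0.0
    else:
        skew = sum((v - mean) ** 3 for v in x) / (n * std ** 3)
        kurt = sum((v - mean) ** 4 for v in x) / (n * std ** 4)
    return {"mean": mean, "std": std, "rms": rms, "skewness": skew, "kurtosis": kurt}


def wear_vb(initial_diameter: float, current_diameter: float) -> float:
    """VB as the difference between the initial and current measured diameters."""
    return initial_diameter - current_diameter


def min_max_scale(values: List[float]) -> List[float]:
    """Scale operational parameters to [0, 1] (assumed normalization)."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]


def encode_categories(labels: List[str]) -> List[int]:
    """Map phase labels (batch, position) to integer ids for an embedding lookup."""
    vocab = {lab: i for i, lab in enumerate(sorted(set(labels)))}
    return [vocab[lab] for lab in labels]
```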

Next, the architecture employs a parallel multimodal feature processing pipeline to extract deep features from each data source. The cutting force signals best reflect the real-time cutting state. For them, the system uses a one-dimensional convolutional neural network (1D-CNN) for envelope extraction, with three convolutional layers, a kernel size of 5, and filter dimensions of [64, 128, 256]. A bidirectional Transformer encoder follows, with four layers, eight attention heads, a hidden dimension of 256, and a dropout rate of 0.1, to capture complex temporal dependencies. The geometric and roughness data represent progressive wear. They are processed by a channel that combines a Temporal Convolutional Network (TCN) with a standard Transformer encoder. The TCN has four layers, a kernel size of 3, and channel dimensions of [32, 64, 128, 256]. The Transformer encoder has three layers, four attention heads, a hidden dimension of 128, and a dropout rate of 0.1. Meanwhile, the processed operational parameters and phase information are forwarded as independent feature streams. This specialized processing ensures that the unique characteristics of each data modality are fully exploited.
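The kernel sizes above determine how many time steps each convolutional branch sees. The sketch below computes the receptive fields and the parameter count of the 1D-CNN stack. It rests on four assumptions: stride 1 with no pooling, three input channels (Fx, Fy, Fz), TCN dilations of 1, 2, 4, 8, and one convolution per TCN layer. The paper does not report dilations or input channel counts.

```python
from typing import Sequence


def receptive_field(kernel_sizes: Sequence[int], dilations: Sequence[int]) -> int:
    """Receptive field of stacked stride-1 1D convolutions: 1 + sum((k - 1) * d)."""
    return 1 + sum((k - 1) * d for k, d in zip(kernel_sizes, dilations))


def conv1d_params(in_channels: int, filters: Sequence[int], kernel_size: int) -> int:
    """Weights plus biases of a plain Conv1d stack."""
    total, c_in = 0, in_channels
    for c_out in filters:
        total += c_in * c_out * kernel_size + c_out
        c_in = c_out
    return total


# 1D-CNN branch: three layers, kernel 5, no dilation (assumed stride 1, no pooling)
cnn_rf = receptive_field([5, 5, 5], [1, 1, 1])
# TCN branch: four layers, kernel 3, with assumed dilations 1, 2, 4, 8
tcn_rf = receptive_field([3, 3, 3, 3], [1, 2, 4, 8])
# Assumed 3 input channels (Fx, Fy, Fz) and filters [64, 128, 256]
cnn_params = conv1d_params(3, [64, 128, 256], 5)
print(cnn_rf, tcn_rf, cnn_params)  # → 13 31 206208
```

Many TCN implementations use residual blocks with two dilated convolutions each. Under that assumption, the TCN receptive field would instead be 61 steps.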

A key innovation of this architecture is its graph adaptive fusion module, which integrates information from the different data streams. The module organizes the features from the geometric, operational parameter, and phase information streams, together with the sensor features, into a graph. The graph's nodes represent sensor positions and other multimodal entities. Its edges define the associations among internal tool features and between machining batches. Formally, the graph is G = (V, E). The node set V consists of four kinds of multimodal entities:

- sensor nodes, which represent positions for the cutting force signals Fx, Fy, Fz and the roughness parameters Ra, Rz, embedded as feature vectors from their respective encoders;
- geometric nodes, which capture tool wear metrics such as VB and diameter changes;
- operational parameter nodes, which encode static conditions such as cutting depth, speed, and feed rate via dense embeddings;
- phase nodes, which represent experimental batches and positions as categorical embeddings that provide contextual grouping.

This heterogeneous graph relates sensor positions to operational and phase information by treating them as interconnected entities. Sensor nodes are linked to phase nodes for batch-specific context and to operational nodes for condition-dependent dynamics. The edge set E is defined from domain-informed associations. Intra-modal edges connect similar entities within a modality (e.g., sensor nodes linked by spatial proximity or signal correlation). They are weighted by the cosine similarity of their feature vectors. Inter-modal edges capture cross-dependencies of two kinds. Edges between geometric nodes (VB) and sensor nodes (forces) model physical coupling and are initially weighted by correlation coefficients (e.g., a strong VB–Fx edge when the correlation exceeds 0.7). Edges between phase nodes and all other nodes encode batch-wise temporal relations and are weighted by sequence similarity across machining cycles.
Edges are undirected and initially static. The GAT attention mechanism dynamically refines them during training, allowing adaptive weighting based on learned importance. The GATs use three layers, eight attention heads, a hidden dimension of 256, an output dimension of 128, a dropout rate of 0.2, and a LeakyReLU negative slope (alpha) of 0.2. With them, the model adaptively learns the importance of each information source. It generates a condensed feature representation aligned across modalities and batches. This representation provides key context for understanding tool wear behavior under different operating conditions and time scales.
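The sketch below shows one way to compute the initial, static edge weights before GAT refinement. It covers only two edge types. Sensor-to-sensor edges are weighted by cosine similarity, and VB-to-sensor edges by the absolute Pearson correlation. Three simplifying assumptions apply: an inter-modal edge is kept only when |r| > 0.7, the weight uses the absolute value, and phase edges are omitted. The function names are hypothetical.

```python
import math
from itertools import combinations
from typing import Dict, Sequence, Tuple

Edge = Tuple[str, str]


def cosine(u: Sequence[float], v: Sequence[float]) -> float:
    """Cosine similarity of two feature vectors (0.0 if either is all zeros)."""
    nu = math.sqrt(sum(a * a for a in u))
    nv = math.sqrt(sum(b * b for b in v))
    if nu == 0 or nv == 0:
        return 0.0
    return sum(a * b for a, b in zip(u, v)) / (nu * nv)


def pearson(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson correlation coefficient of two equal-length series."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    if sx == 0 or sy == 0:
        return 0.0
    return cov / (sx * sy)


def build_edges(sensor_feats: Dict[str, Sequence[float]],
                sensor_series: Dict[str, Sequence[float]],
                vb_series: Sequence[float],
                threshold: float = 0.7) -> Dict[Edge, float]:
    """Initial undirected edge weights before GAT refinement (hypothetical helper)."""
    edges: Dict[Edge, float] = {}
    # Intra-modal: every pair of sensor nodes, weighted by cosine similarity
    for a, b in combinations(sorted(sensor_feats), 2):
        edges[(a, b)] = cosine(sensor_feats[a], sensor_feats[b])
    # Inter-modal: VB-to-sensor edge kept only for strong correlations (assumed rule)
    for name, series in sensor_series.items():
        r = pearson(vb_series, series)
        if abs(r) > threshold:
            edges[("VB", name)] = abs(r)
    return edges
```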

In the final cross-modal interaction and prediction stage, all refined features are aggregated to generate the prediction results. The decoder takes two inputs: the deep temporal features extracted from the cutting force signals and the aligned contextual features output by the graph fusion module. This cross-modal Transformer decoder has two layers, eight attention heads, a hidden dimension of 256, a feed-forward network dimension of 512, and a dropout rate of 0.1. It parses and fuses the deep interactions between these two categories of heterogeneous features, generating the final fused contextual features. These features give the most comprehensive description of the tool’s current state and are fed into a dual-task output layer. The classification head consists of fully connected layers with dimensions [256, 128, 3], ReLU activations, and a final Softmax. It determines whether the tool is in a “normal,” “warning,” or “critical” health state. The RUL regression head consists of fully connected layers with dimensions [256, 128, 64, 1], ReLU activations, and a Sigmoid output. It predicts the normalized proportion of the tool’s RUL. To improve generalization and training stability, the RUL is normalized to the interval [0, 1]. A value of 1 represents a brand-new tool with its full useful life. A value of 0 represents a tool whose life is completely exhausted, having reached the failure criterion. The regression head therefore predicts a continuous value between 0 and 1: the fraction of the tool’s total life that remains.
This normalized value supports robust regression and also provides a standardized health metric independent of specific physical units. To obtain the physical remaining life, the predicted proportion is multiplied by the total design life of that tool model under the given operating conditions. The result is the remaining minutes or the number of workpieces that can still be machined.
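The normalization and its inverse amount to a division and a multiplication by the total design life. A minimal sketch follows. Clipping the ratio to [0, 1] is an added assumption that guards against measured lives exceeding the design value. The 120 min design life is a hypothetical example.

```python
def normalize_rul(rul: float, total_life: float) -> float:
    """Remaining life as a fraction of total design life, clipped to [0, 1] (clipping assumed)."""
    if total_life <= 0:
        raise ValueError("total_life must be positive")
    return min(max(rul / total_life, 0.0), 1.0)


def to_physical_rul(rul_norm: float, total_life: float) -> float:
    """Convert a predicted fraction back to physical units (e.g. minutes)."""
    return rul_norm * total_life


# Hypothetical tool with a total design life of 120 min under fixed conditions
print(normalize_rul(90.0, 120.0))    # → 0.75
print(to_physical_rul(0.25, 120.0))  # → 30.0
```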

The model is trained with the AdamW optimizer at a learning rate of 1 × 10⁻⁴, with a warmup phase of 500 steps followed by cosine annealing decay. Training uses a batch size of 32 for 100 epochs, with early stopping (patience of 15 epochs) to prevent overfitting. The dual-task joint loss balances the classification and regression objectives with λ = 0.7. The regression task therefore contributes 70% of the total loss and the classification task 30%. The classification task uses cross-entropy loss with label smoothing of 0.1, and the regression task uses mean squared error (MSE) loss. To stabilize training, gradient clipping with a maximum norm of 1.0 is applied. All experiments are conducted with the PyTorch framework (https://pytorch.org/) on an NVIDIA RTX 3090 GPU with 24 GB of memory (NVIDIA, Santa Clara, CA, USA).
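The learning-rate schedule and gradient clipping can be sketched without any framework. The paper gives only the warmup length (500 steps), the peak rate (1 × 10⁻⁴), and the clipping norm (1.0). The linear warmup shape and the zero floor for the cosine decay are therefore assumptions.

```python
import math
from typing import List


def lr_at(step: int, total_steps: int, base_lr: float = 1e-4,
          warmup_steps: int = 500, min_lr: float = 0.0) -> float:
    """Linear warmup to base_lr, then cosine annealing down to min_lr."""
    if step < warmup_steps:
        # Assumed linear ramp; reaches base_lr at the last warmup step
        return base_lr * (step + 1) / warmup_steps
    progress = min((step - warmup_steps) / max(1, total_steps - warmup_steps), 1.0)
    return min_lr + 0.5 * (base_lr - min_lr) * (1.0 + math.cos(math.pi * progress))


def clip_by_global_norm(grads: List[float], max_norm: float = 1.0) -> List[float]:
    """Rescale a flattened gradient so its L2 norm is at most max_norm."""
    norm = math.sqrt(sum(g * g for g in grads))
    if norm <= max_norm:
        return list(grads)
    scale = max_norm / norm
    return [g * scale for g in grads]
```

Here `total_steps` is the number of optimizer steps per epoch multiplied by the 100 epochs. In PyTorch, a comparable setup would typically use `torch.optim.lr_scheduler.LambdaLR` with `lambda s: lr_at(s, total_steps) / 1e-4` as the multiplier. Clipping would use `torch.nn.utils.clip_grad_norm_(model.parameters(), max_norm=1.0)` before each `optimizer.step()`.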

The overview above traces the tool RUL calculation architecture end to end, from data acquisition to prediction output. The following items build on it and present the mathematical modeling of key modules, together with the loss function, in Equations (1)–(5). The aim is to provide a quantitative framework for the model’s internal mechanisms and to explain the theoretical basis for the design decisions.

- ① VB Mathematical Modeling

$$\mathrm{VB} = D_0 - D_t \tag{1}$$

where $D_0$ represents the initial diameter of the tool and $D_t$ represents its current measured diameter. VB is a calculated metric derived from two direct measurements. $D_0$ is measured before the tool is used, and $D_t$ is measured after a period of machining, reflecting the tool’s worn state. VB is the difference between these two measured values. Its role is to transform static geometric data into a dynamic wear indicator that can be fused with temporal sensor signals.

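- ② RUL Normalization

$$\mathrm{RUL}_{\text{norm}} = \frac{\mathrm{RUL}_t}{T_{\text{total}}} \tag{2}$$

where $\mathrm{RUL}_t$ is the current RUL and $T_{\text{total}}$ is the total design life of the tool under specific operating conditions. The [0, 1] interval facilitates sigmoid activation, MSE stability, and cross-unit generalization.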

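- ③ GATs Attention Mechanism

The attention coefficient $\alpha_{ij}$, with which node $i$ aggregates information from neighbor $j$, is computed as follows:

$$\alpha_{ij} = \frac{\exp\left(\mathrm{LeakyReLU}\left(\mathbf{a}^{\top}\left[\mathbf{W}\mathbf{h}_i \,\Vert\, \mathbf{W}\mathbf{h}_j\right]\right)\right)}{\sum_{k \in \mathcal{N}_i} \exp\left(\mathrm{LeakyReLU}\left(\mathbf{a}^{\top}\left[\mathbf{W}\mathbf{h}_i \,\Vert\, \mathbf{W}\mathbf{h}_k\right]\right)\right)} \tag{3}$$

where $\mathbf{h}_i$, $\mathbf{h}_j$, and $\mathbf{h}_k$ are the embedded feature vectors of nodes in the graph $G = (V, E)$. Here $\mathbf{h}_i$ is the central node (e.g., a sensor node encoding cutting force features), and $\mathbf{h}_j$ and $\mathbf{h}_k$ are neighboring nodes (e.g., geometric nodes for VB or operational parameter nodes for cutting conditions). $\mathbf{W}$ is the learnable weight matrix that projects all nodes into a common space for attention computation. $\mathbf{a}$ is the learnable attention vector, $^{\top}$ denotes transposition, and $\Vert$ denotes vector concatenation. $\mathcal{N}_i$ is the set of neighbors of node $i$, including $i$ itself when self-loops are used. LeakyReLU introduces nonlinearity. The softmax normalization dynamically weights the edges, enhancing cross-modal robustness.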
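A single-head version of this attention coefficient can be written in plain Python. The sketch below computes it for one central node with toy dimensions. The three-layer, eight-head configuration described earlier would repeat this computation with a separate W and a for each head. All names are illustrative.

```python
import math
from typing import Dict, List, Sequence


def matvec(W: Sequence[Sequence[float]], h: Sequence[float]) -> List[float]:
    """Project a node vector with the weight matrix W."""
    return [sum(w * x for w, x in zip(row, h)) for row in W]


def leaky_relu(x: float, alpha: float = 0.2) -> float:
    return x if x > 0 else alpha * x


def gat_attention(i: str, neighbors: Sequence[str], h: Dict[str, Sequence[float]],
                  W: Sequence[Sequence[float]], a: Sequence[float],
                  alpha: float = 0.2) -> Dict[str, float]:
    """Single-head GAT coefficients alpha_ij over N_i (illustrative sketch)."""
    wh = {n: matvec(W, h[n]) for n in set(neighbors) | {i}}
    scores = {}
    for j in neighbors:
        concat = wh[i] + wh[j]  # [W h_i || W h_j] via list concatenation
        e_ij = sum(ak * ck for ak, ck in zip(a, concat))  # a^T [W h_i || W h_j]
        scores[j] = leaky_relu(e_ij, alpha)
    m = max(scores.values())  # subtract the max before exp for numerical stability
    exp_scores = {j: math.exp(s - m) for j, s in scores.items()}
    z = sum(exp_scores.values())
    return {j: v / z for j, v in exp_scores.items()}
```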

- ④ 1D-CNN Convolution Operation

$$y_t = \sum_{k=0}^{K-1} w_k \, x_{t+k} + b \tag{4}$$

where $y_t$ is the output feature value at position or time step $t$, representing the convolved result that captures local patterns in the sequence. The sum runs over the kernel range from $k = 0$ to $K - 1$, aggregating weighted inputs for efficient feature computation. $x_{t+k}$ is the input signal value at the shifted position $t + k$, providing the sliding window over sequential data such as sensor readings. $w_k$ is the kernel weight at position $k$, and $b$ is the bias term.
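The summation above is a valid (unpadded), stride-1 operation. As in common deep learning libraries, it does not flip the kernel, so strictly it is a cross-correlation. A direct sketch follows. The force trace and kernel are made-up values for illustration.

```python
from typing import List, Sequence


def conv1d_valid(x: Sequence[float], w: Sequence[float], b: float = 0.0) -> List[float]:
    """y_t = sum_{k=0}^{K-1} w_k * x_{t+k} + b for each t with a full window (stride 1)."""
    K = len(w)
    return [sum(w[k] * x[t + k] for k in range(K)) + b
            for t in range(len(x) - K + 1)]


# Kernel size 5, as in the 1D-CNN branch; signal and weights are illustrative
force = [0.0, 1.0, 2.0, 3.0, 4.0, 5.0, 6.0]
print(conv1d_valid(force, [1.0] * 5, b=0.5))  # → [10.5, 15.5, 20.5]
```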

- ⑤ Dual-Task Joint Loss

$$L_{\text{total}} = \lambda L_{\text{reg}} + (1 - \lambda) L_{\text{cls}} \tag{5}$$

where $L_{\text{total}}$ is the combined loss of the multi-task model, the overall objective minimized during training by balancing the regression and classification tasks. $\lambda$ is the hyperparameter that controls the relative importance of the regression task. Higher values prioritize regression over classification, and $\lambda$ is set to 0.7 in our implementation. $L_{\text{reg}}$ is the loss value for the regression task (mean squared error), and $L_{\text{cls}}$ is the loss value for the classification task (cross-entropy loss).
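The joint objective can be checked numerically with the sketch below. It uses the settings stated in the text: λ = 0.7 and label smoothing of 0.1. It assumes the smoothing convention of PyTorch's `CrossEntropyLoss`, where the target is (1 − ε) on the true class plus ε/C spread over all C classes. The function names are hypothetical.

```python
import math
from typing import Sequence


def mse(pred: Sequence[float], target: Sequence[float]) -> float:
    """Mean squared error for the normalized RUL regression."""
    return sum((p - t) ** 2 for p, t in zip(pred, target)) / len(pred)


def cross_entropy_smoothed(logits: Sequence[Sequence[float]], labels: Sequence[int],
                           smoothing: float = 0.1) -> float:
    """Mean cross-entropy against label-smoothed targets (assumed PyTorch-style convention)."""
    total = 0.0
    for z, y in zip(logits, labels):
        C = len(z)
        m = max(z)
        log_norm = m + math.log(sum(math.exp(v - m) for v in z))  # stable log-sum-exp
        log_probs = [v - log_norm for v in z]
        target = [smoothing / C] * C
        target[y] += 1.0 - smoothing
        total += -sum(q * lp for q, lp in zip(target, log_probs))
    return total / len(labels)


def joint_loss(rul_pred: Sequence[float], rul_true: Sequence[float],
               logits: Sequence[Sequence[float]], labels: Sequence[int],
               lam: float = 0.7) -> float:
    """L_total = lam * L_reg + (1 - lam) * L_cls."""
    return lam * mse(rul_pred, rul_true) + (1.0 - lam) * cross_entropy_smoothed(logits, labels)
```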

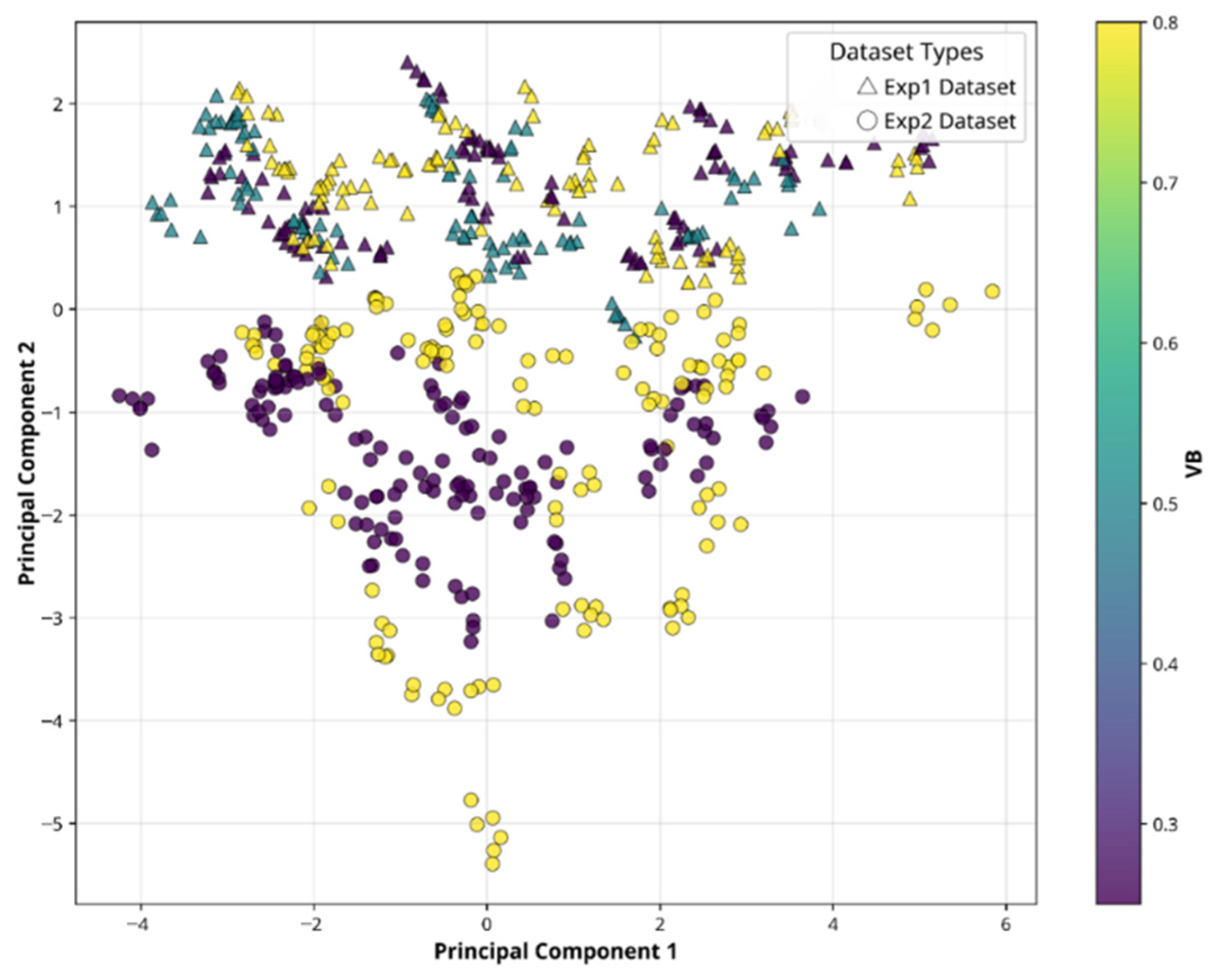

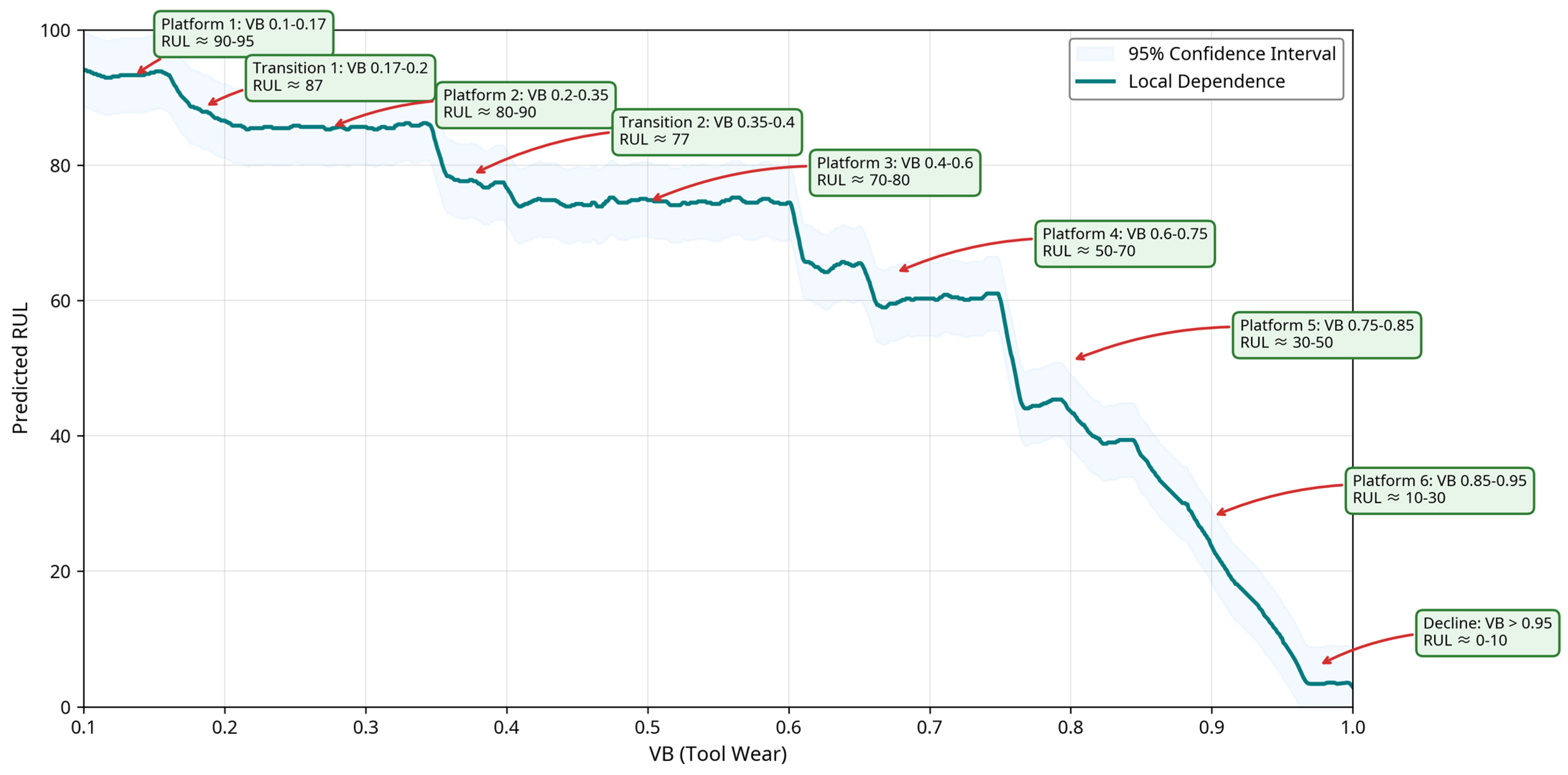

This architecture drives multimodal fusion with VB as the core degradation indicator. In the feature engineering stage, VB serves as a key proxy that supports static correlation analysis and segmented trend modeling. The model captures the weak correlations between VB and the cutting force and roughness signals. The graph adaptive fusion module weights these relations adaptively through the GAT attention mechanism, supporting risk interval division and multimodal dimensionality reduction.

5. Discussion

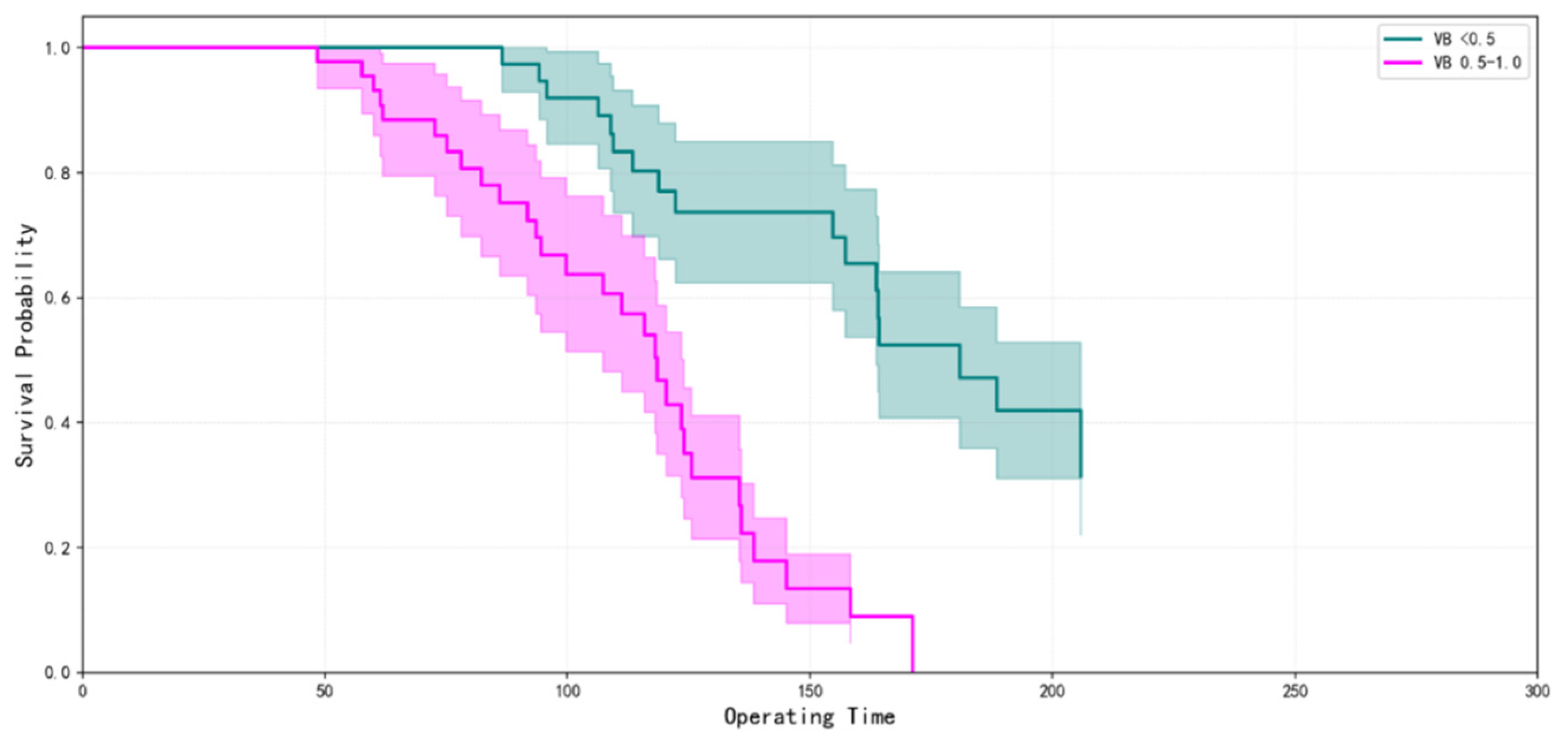

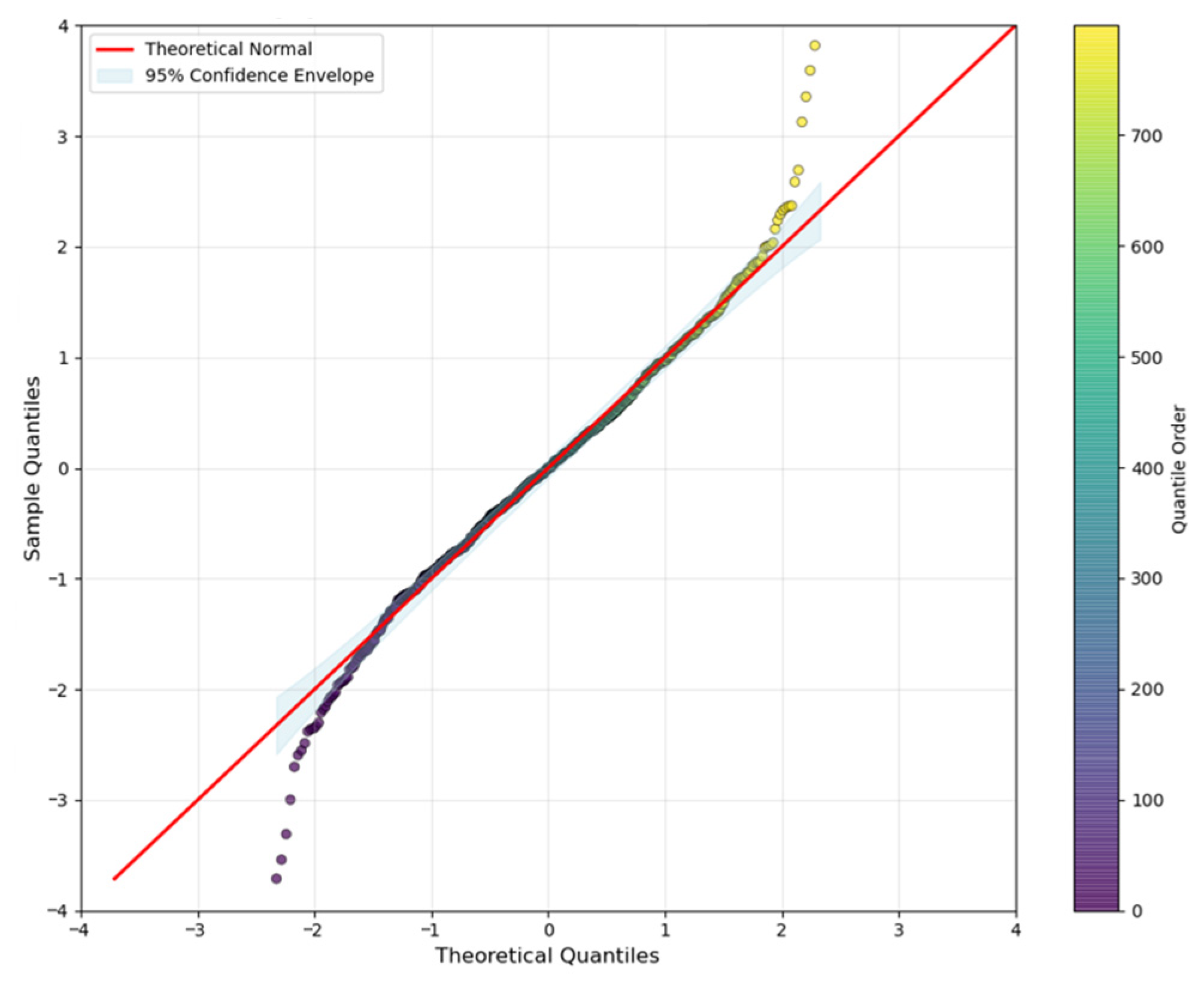

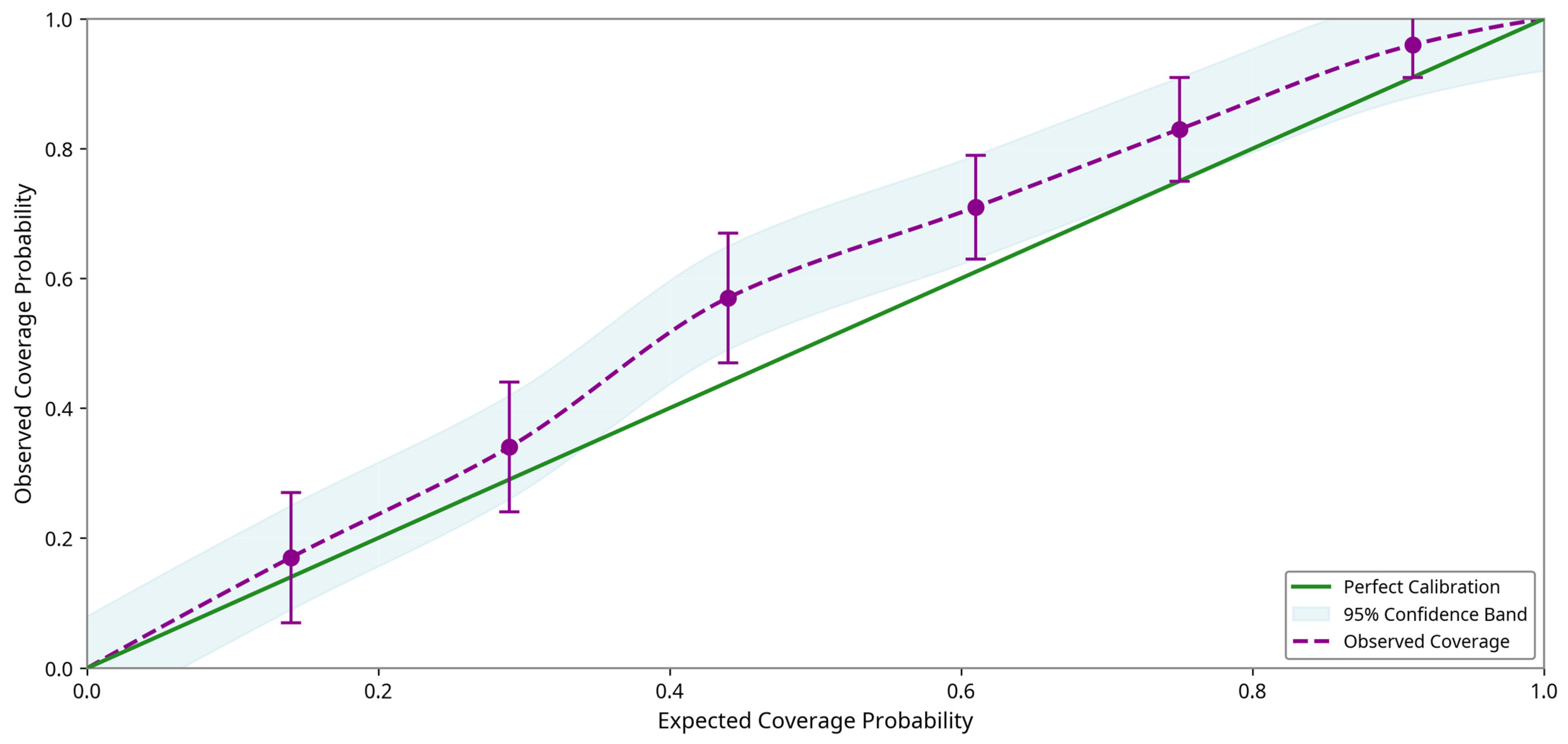

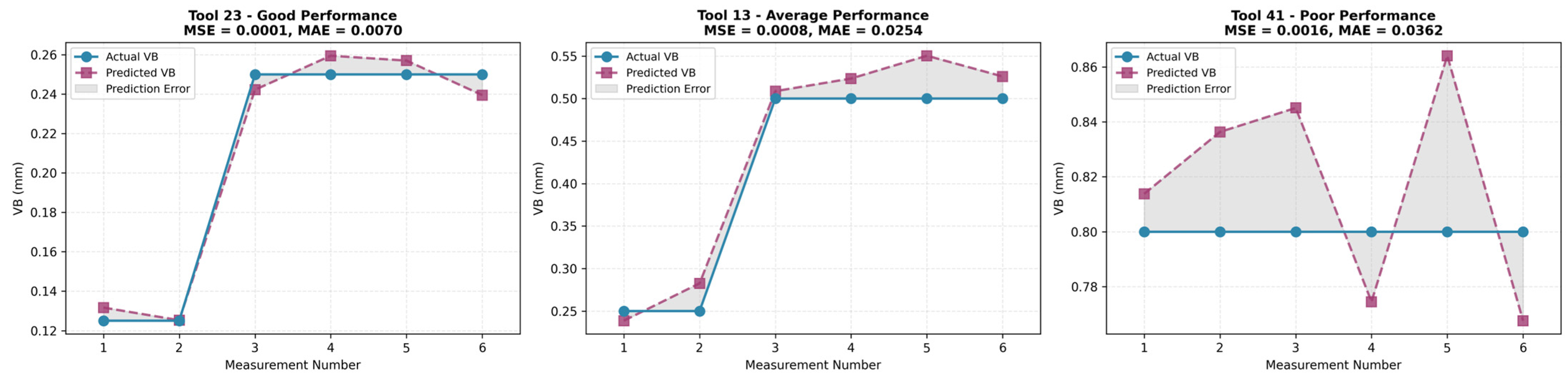

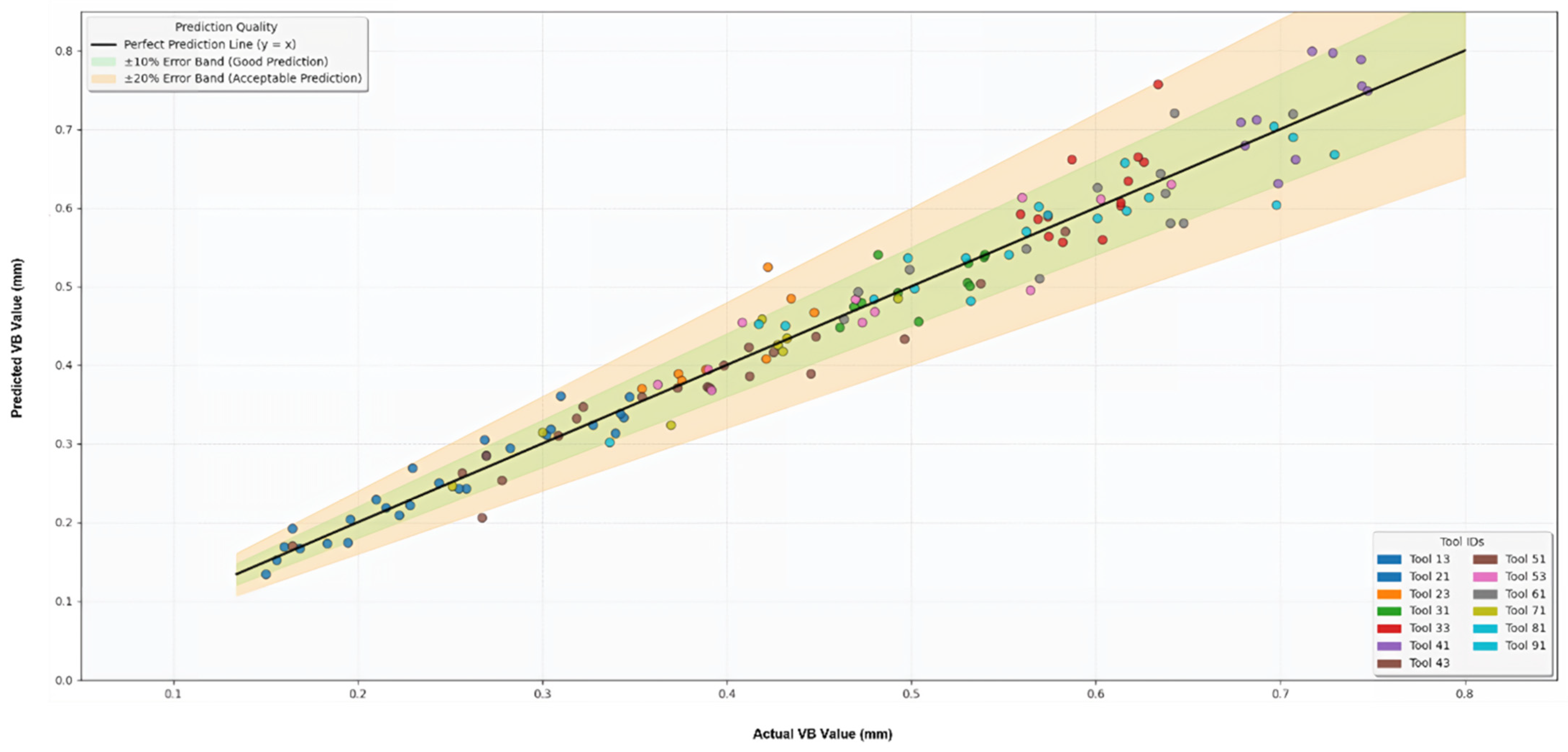

This study proposes a tool RUL prediction model based on GNNs and Transformer optimization. Prior work has explored GNNs for exploiting equipment structure in RUL estimation. Our novelty lies in integrating GNNs with Transformers through a specialized multimodal fusion strategy: dedicated encoders for diverse data types, GATs for relational inference, and a cross-modal Transformer decoder for deep interactions. This strategy is coupled with a dual-head multi-task output for simultaneous RUL regression and health state classification, which enables more refined predictive maintenance than single-task paradigms. Through multimodal data fusion, graph adaptive relational inference, and multi-task learning, the model achieves precise evaluation and forward-looking prediction of tool health status. The experimental results validate its effectiveness in six areas: static correlation analysis, segmented trend modeling, risk interval division, multimodal interpretation, prediction performance evaluation, and failure classification. They also highlight VB’s multi-dimensional value as the core degradation indicator. This value goes beyond basic correlations and informs a closed-loop validation framework that integrates data exploration, model verification, and application optimization, supporting the model’s comprehensiveness and robustness.

This study broadens the application of GNNs and Transformers in data processing by explicitly modeling entity relationships and enabling collaborative RUL regression and state classification. It reveals wear turning points and provides a new framework for physics-guided deep learning. Here, “physics-guided” means that domain-specific physical proxies such as VB, derived from tool geometry and wear mechanics, are incorporated into the fusion architecture. These proxies constrain the model to respect real-world degradation dynamics rather than purely data-driven patterns. Compared with mechanism-based models, it avoids restrictive assumptions through data-driven multimodal fusion, which gives better generalization. Compared with traditional machine learning, it reduces feature engineering needs via GATs and captures multivariable interactions better than ensembles. Benchmark comparisons underscore these gains in error control, goodness of fit, and classification, confirming the value of the proposed fusion and inference design.

While the proposed model excels in accuracy, its computational cost must be considered for industrial feasibility. Training the full GNNs-Transformer architecture on an NVIDIA RTX 3090 GPU (24 GB memory) takes approximately 2.5 h per run. Inference takes about 50–100 ms per sample, depending on batch size. In comparison, benchmark models such as LSTM-SVM and LSTM-XGBoost require 40–60% less training time (1–1.5 h) owing to their simpler architectures, although they sacrifice multimodal depth. The higher cost stems from the attention mechanisms of the GAT layers and the Transformer decoder, which process complex graph and sequence data. For industrialization, the model could be deployed in real-time CNC systems on edge devices after optimization. Its current complexity, however, may limit scalability in resource-constrained environments. Future lightweighting could reduce inference latency by 20–40% without significant accuracy loss, improving practical applicability in smart manufacturing.

The uncertainty assessment using partial dependence plots is an initial step toward model interpretability, visualizing VB’s nonlinear influence on RUL predictions. This aligns with the broader Explainable Artificial Intelligence (XAI) paradigm, which emphasizes transparency and trust in black-box models such as GNNs and Transformers. This matters particularly in high-stakes manufacturing, where opaque decisions can lead to costly failures or safety risks. The model integrates XAI techniques, such as SHapley Additive exPlanations (SHAP) values for feature attribution and ablation for modality contributions. As a result, it not only quantifies uncertainty but also elucidates its decision pathways, fostering user confidence in predictive maintenance systems. This approach is consistent with recent advances in physically interpretable, data-driven models. In manufacturing, SHAP has been used to analyze machine learning models for cavity prediction in electrochemical machining, linking model behavior to process-level understanding and supporting anomaly detection [45]. In the wider XAI context for manufacturing, this work helps bridge the gap between complex AI and domain experts. It enables better integration with physics-guided models, defined here as hybrids that embed physical proxies such as wear metrics into neural architectures for enhanced explainability. Promising future directions include real-time XAI frameworks for edge-deployed systems and counterfactual explanations that simulate “what-if” scenarios in tool wear. Another is federated XAI in multi-factory settings, which preserves data privacy while aggregating insights across distributed CNC environments. Together, these directions would advance trustworthy AI for Industry 4.0 applications.
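Partial dependence, as used above for VB, is the model’s average prediction when one feature is set to a fixed value for every sample. A minimal sketch follows. `toy_model` is a hypothetical stand-in for the trained network, and its coefficients are made up.

```python
from statistics import mean
from typing import Callable, Dict, List, Sequence

Row = Dict[str, float]


def partial_dependence(model: Callable[[Row], float], data: Sequence[Row],
                       feature: str, grid: Sequence[float]) -> List[float]:
    """Average model output with `feature` overwritten by each grid value."""
    curve = []
    for value in grid:
        preds = [model({**row, feature: value}) for row in data]
        curve.append(mean(preds))
    return curve


def toy_model(x: Row) -> float:
    """Hypothetical normalized-RUL model: nonlinear in VB, mildly dependent on Fx."""
    return max(0.0, 1.0 - (x["VB"] / 0.3) ** 2 - 0.001 * x["Fx"])
```

Because the curve averages over all samples, it hides interactions between VB and other features. This is one reason SHAP attributions are reported alongside it.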

Multimodal fusion remains the model’s key strength. Even so, VB plays a central role as the core degradation indicator in both modeling and analysis, since it directly quantifies tool wear and serves as a proxy for integrating the other modalities. The approach must not degenerate into VB-centric regression rather than a truly multimodal system. To guard against this, we conducted a feature importance analysis (Table 2) and ablation experiments (Table 3) to empirically validate the contribution of each modality:

- time-series signals such as the cutting forces Fx/Fy/Fz;
- geometric data including diameters;
- operational parameters such as cutting depth, speed, and feed rate;
- phase contexts such as experimental batches.

In the SHAP-based [46] feature importance analysis, VB accounted for approximately 45% of the overall importance in RUL prediction. The cutting forces and surface roughness parameters contributed significantly by capturing dynamic interactions and variations in machining quality. Operational parameters and phase contexts added 10% and 5%, respectively, improving cross-batch generalization.
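Percentages like these are commonly obtained by averaging absolute SHAP values per feature and summing them per modality. The sketch below shows that aggregation. The paper does not state whether it normalized importance in exactly this way. The feature-to-modality mapping is a caller-supplied assumption, and the function name is hypothetical.

```python
from collections import defaultdict
from typing import Dict, Sequence


def modality_shares(shap_values: Sequence[Sequence[float]],
                    feature_names: Sequence[str],
                    modality_of: Dict[str, str]) -> Dict[str, float]:
    """Share of total mean |SHAP| per modality (assumed normalization; shares sum to 1)."""
    n = len(shap_values)
    importance: Dict[str, float] = defaultdict(float)
    for j, name in enumerate(feature_names):
        # Mean absolute SHAP value of feature j over all samples
        mean_abs = sum(abs(row[j]) for row in shap_values) / n
        importance[modality_of[name]] += mean_abs
    total = sum(importance.values())
    return {m: v / total for m, v in importance.items()}
```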

In the ablation experiments, individual modalities were removed and the model was retrained on the same dataset. Performance degraded in every case:

- removing time-series data increased MSE by 18% and reduced accuracy by 9%;
- excluding geometric data (beyond VB) raised MAE by 12%;
- omitting operational parameters decreased R² by 7%;
- ablating phase contexts lowered the F1-Score by 6%.

Surface roughness features (Ra, Rz, Rt, etc.) are integrated into the geometric feature stream. Their ablation effect is therefore reported under “Geometric Data (excluding VB)” rather than as a separate modality.

These results confirm that while VB is pivotal, the multimodal fusion leverages complementary information from all sources, preventing over-reliance on a single indicator and ensuring robust predictions in diverse conditions.

Despite these advances, limitations remain. The model’s reliance on VB may underestimate the impact of measurement noise in industrial settings, potentially biasing predictions. The study also does not demonstrate generalization across different tools or materials. Evaluations were confined to a specific dataset of 13 tools under controlled conditions, which limits insight into broader applicability. In addition, high computational demands constrain real-time deployment. Future work should diversify datasets to cover broader conditions and integrate additional modalities to reduce dependence on VB. It should also apply lightweighting techniques such as pruning or quantization to optimize for edge computing, balancing accuracy with practicality.

6. Conclusions

This paper presents a cutting tool RUL prediction model based on GNNs and Transformer optimization. The model addresses three core challenges: multimodal data fusion, complex relationship modeling, and single-task learning. It processes multimodal features through dedicated encoders and explicitly captures non-Euclidean structural dependencies using GATs. A cross-modal Transformer decoder generates fused contextual features. Finally, dual-head outputs perform collaborative RUL regression and health status classification of cutting tools.

The key conclusions of this study are summarized in the following points:

1. The proposed GNNs-Transformer architecture innovatively integrates multimodal encoding, graph adaptive fusion via GATs, and cross-modal Transformer decoding, enabling deep interaction across time-series signals, geometric data, operational parameters, and phase contexts, while the dual-head multi-task output simultaneously handles RUL regression and health state classification, overcoming limitations of shallow fusion and single-task models.

2. Experiments on a multimodal dataset of 824 entries, structured across 13 tools with 4–6 measurements each and split into 70% training, 15% validation, and 15% independent testing, validate the model’s efficacy through a systematic framework including correlation analysis, trend modeling, risk assessment, and uncertainty quantification.

3. VB emerges as the core degradation indicator, with analyses confirming its strong negative correlation with RUL, nonlinear temporal evolution, risk threshold potential, and robust performance in failure classification, supported by critical evaluations such as residual diagnostics revealing minor mid-range biases and heteroscedasticity.

4. The model outperforms benchmarks (LSTM-SVM, LSTM-CNN, LSTM-XGBoost) by 26–41% in MSE, 33–43% in MAE, 6–12% in R², 6–12% in accuracy, and 7–14% in F1-Score. Feature importance analysis (VB at 45%, others complementary) and ablation studies verify these gains and demonstrate each modality’s essential role.

5. Overall, this architecture enhances prediction accuracy, robustness, and decision guidance for predictive maintenance in smart manufacturing, with future directions including dataset expansion, further modality integration, lightweighting for edge deployment, and advanced XAI for real-time interpretability in Industry 4.0.