From Sensors to Insights: Interpretable Audio-Based Machine Learning for Real-Time Vehicle Fault and Emergency Sound Classification

Abstract

1. Introduction

- Pioneers audio-based diagnostics in ITSs by introducing an interpretable machine learning framework that complements vision-based systems and enhances accessibility, such as providing auditory-to-visual or haptic alerts for drivers with hearing impairments.

- Provides three novel, curated datasets of vehicle fault sounds, emergency sirens, and urban environmental noises—filling a critical gap in publicly available resources for sound-driven ITS research.

- Advances model interpretability in acoustic ITSs by systematically comparing SHAP, Boruta, and ANOVA feature selection and employing SHAP to provide global and local explanations of model predictions.

- Contributes to urban mobility and smart city integration by enabling real-time auditory-to-visual translation of critical events (e.g., sirens, vehicle faults), supporting accessibility for hearing-impaired users, and providing compatibility with intelligent transportation infrastructures such as roadside monitoring and connected vehicle systems.

2. Background

2.1. Machine Learning for Audio Classification

2.2. Feature Extraction for Acoustic Signals

- Mel Spectrograms represent sound energy over time and frequency on a perceptually relevant scale, making them suitable for capturing the tonal and temporal characteristics of vehicle and emergency sounds [21].

- Mel-Frequency Cepstral Coefficients (MFCCs) describe the spectral envelope of an audio signal. They are widely used for distinguishing between different sound sources.

- Chroma Features extract pitch class information, particularly useful when the timbre and tonal structure are essential for classification.

2.3. Feature Selection for Model Efficiency and Interpretability

- Boruta is an all-relevant feature selection method based on Random Forests or Extra Trees. It iteratively removes irrelevant features by comparing their importance with that of randomized shadow features [22]. While accurate, Boruta is computationally intensive and often selects a large feature subset.

- SHAP (SHapley Additive exPlanations) assigns importance scores to features by quantifying their contribution to the model’s output. Based on cooperative game theory, SHAP provides global and local interpretability, allowing users to understand model behavior even in black-box classifiers like MLPs [25,26].
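To make the game-theoretic basis of SHAP concrete, the following minimal sketch computes exact Shapley values for a single prediction by enumerating every feature coalition. The toy model, the input, and the all-zero baseline are hypothetical and are not taken from the study; practical SHAP explainers approximate this computation, because exact enumeration grows exponentially with the number of features.

```python
from itertools import combinations
from math import factorial


def shapley_values(predict, x, baseline):
    """Exact Shapley values for one input by enumerating all coalitions.

    Features outside a coalition are replaced by their baseline value
    (an assumption of this sketch; other value functions exist).
    """
    n = len(x)

    def value(coalition):
        mixed = [x[i] if i in coalition else baseline[i] for i in range(n)]
        return predict(mixed)

    phi = []
    for i in range(n):
        others = [j for j in range(n) if j != i]
        total = 0.0
        for size in range(n):
            # Shapley weight for coalitions of this size
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                total += weight * (value(s | {i}) - value(s))
        phi.append(total)
    return phi


# Hypothetical toy model with an interaction between features 0 and 1.
def toy_model(f):
    return 2.0 * f[0] + f[0] * f[1] + 0.5 * f[2]


x = [1.0, 2.0, 4.0]
baseline = [0.0, 0.0, 0.0]
phi = shapley_values(toy_model, x, baseline)
print(phi)
```

In this toy case the interaction term is split equally between features 0 and 1, and the attributions sum to the difference between the prediction and the baseline output, which is the additivity property that local SHAP explanations rely on.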

2.4. Model Interpretability and the Need for Explainable AI

3. Related Work

3.1. Sound-Based Fault Detection in Vehicles

3.2. Sound-Based Emergency Detection and Situational Awareness

- Expanded and Structurally Enhanced Datasets: While the earlier study focused on a single car fault dataset, the current work introduces two more curated datasets: one dedicated to emergency and environmental sounds and another combining vehicle and emergency audio classes. This comprehensive dataset design enables broader generalization and reflects more realistic ITS audio scenarios, paving the way for improved multi-context classification performance.

- Integration of Interpretable Feature Selection Techniques: A significant enhancement in this version is the use of multiple feature selection approaches (ANOVA, Boruta, and SHAP) to evaluate feature relevance and model interpretability. SHAP, in particular, is used to provide transparent global and local explanations of each feature's influence on the model's decisions. This step ensures that the system is both high-performing and explainable, aligning with the growing demand for interpretable AI in safety-critical domains like ITSs.

- Deployment of Advanced Ensemble Classifiers: The previous version evaluated standard machine learning models such as neural networks, logistic regression, and random forests. In contrast, the current study adopts advanced ensemble classifiers—XGBoost, LightGBM, and Extra Trees—achieving classification accuracies exceeding 91% and AUC values above 0.99 across all datasets. These models offer improved generalization, robustness, and scalability compared to traditional classifiers.

- Focused Emphasis on Real-Time Applicability and Inclusivity: The updated framework is explicitly designed for real-time ITS deployment, with practical features including auditory-to-visual conversion mechanisms and real-time alerts. This enhances the system’s relevance for users with hearing impairments and those operating in noise-insulated environments, thereby contributing to more inclusive urban transportation systems.

- Stronger Alignment with Smart City Objectives: The current study is more deeply integrated into the broader vision of smart city development. It emphasizes scalability, interpretability, and inclusivity. It offers a data-driven, non-intrusive solution that supports predictive maintenance, emergency detection, and safe mobility—key pillars of intelligent urban infrastructure.

3.3. Identified Research Gaps

- Inconsistent Feature Extraction and Selection Practices: There is substantial variability in the audio features extracted across studies, ranging from MFCCs [33,58] and spectrograms to wavelet and cepstral features [5,33,34]. However, the importance of these features is rarely evaluated systematically, leading to poor reproducibility and hindering meaningful comparisons between models. Moreover, the relationship between the number of selected features and model performance is often overlooked [35], leaving the identification of optimal feature subsets unresolved.

- Lack of Robustness in Feature Representations: Many existing approaches rely heavily on classical audio features, particularly MFCCs and Mel spectrograms, without adequately accounting for their sensitivity to noise or variation in operating conditions. This compromises the robustness of models, especially in real-world environments characterized by high acoustic variability [42,43,48].

- Limited Real-World Generalization: A significant number of studies [5,28,32,35,56] utilize custom-built datasets that lack environmental diversity, limiting the models' ability to generalize beyond controlled settings. Additionally, several models are trained on small-scale or narrowly scoped datasets [28,35,40,57], which reduces their effectiveness in detecting rare or previously unseen fault conditions.

- Underutilization of Temporal Modeling: Despite the sequential nature of acoustic signals, temporal dynamics are often underexploited. Few studies adopt architectures such as LSTM, GRU, or attention-based models, which can capture the evolving structure of acoustic events over time. Notable exceptions include [38,44,45], which suggest that incorporating temporal dependencies can improve recognition accuracy and reliability.

- Scalability and Deployment Constraints: Another recurring limitation is the lack of optimization for real-time, low-power deployment. Many models are not designed with embedded or IoT platforms in mind, which impedes their integration into smart vehicles, smart homes, and broader smart city infrastructures.

| Ref. | Application | Technique | Dataset Used | Size | Accuracy | Key Contribution | Limitations |

|---|---|---|---|---|---|---|---|

| [28] | Vehicle type classification | Zero Crossing Signature (ZCS) | Custom | 417 | F1 = 0.86 | Introduced the time-domain method for classifying engine types (diesel/gasoline) | Limited dataset generalizability |

| [29] | Acoustic fault diagnosis | Transformer + AFE | CWRU | 100 | F1 = 0.95 | Proposed adaptive feature enhancement in transformer architecture | Computationally expensive |

| [5] | Industrial automation | DCSLBP + ML | MIMII (noisy) | 5101 | 95% | Developed a lightweight model for machine malfunction detection | Focused on structured lab settings |

| [30] | Bearing fault diagnosis | VAE + CNN | CWRU | 2048 | 96.62% | Combined generative and discriminative models for noise robustness | Requires large datasets for VAE training |

| [31] | Vehicle fault detection | Hybrid ML | Real-car dataset | 351 | 92% | Built an early fault detection system using multiple sound-domain features | It may not scale easily across different car models |

| [32] | Automotive diagnostics | Rule-based ML | Real vehicle audio | 555 | 98.6% | Cognitive-inspired system using expert rule integration | Rule-based models lack flexibility for unseen faults |

| [33] | Engine fault detection | MFCC + ML | Local dataset | 280 | 92.17% | Applied classical ML to structured audio features for engine classification | The dataset is not publicly available. |

| [34] | Bearing fault diagnosis | Acoustics + Vibration | CWRU + synthetic | 500 | 98.75% | Merged vibration and acoustic features for improved detection | The fusion system increases complexity |

| [35] | Railway turnout faults | Sound + SVM | Custom | 1600 | 98% | Diagnosed turnout switch issues using time–frequency signal features | Needs real-time implementation testing |

| [36] | Engine knock detection | ML + LSTM | Engine recordings | 153 | 90% | Compared traditional and deep models for knock detection | Limited generalization across engine types |

| [37] | NEV fault classification | Wavelet + SVM | Simulated NEV data | N/A | 90% | Separated battery/mechanical noise for new energy vehicles | Needs real-world NEV recordings |

| [38] | Multi-view vehicle diagnostics | CNN + Spectrogram + Scalogram | Real engine recordings | 311 | 95% | Integrated multiple views of audio for higher feature coverage | Requires computationally intense preprocessing |

| [39] | EPS motor anomaly detection | MFCC + LSTM-AE | Motor testbed | 29,759 | 99.2% | Preserved waveform structure during dimensionality reduction | May overfit small datasets |

| [40] | Robotic manipulator faults | CNN + ML | Custom | 181 | 92.34% | Evaluated ML and DL for robotic sound diagnostics | Lacks transfer learning for similar machines |

| [42] | Industrial anomaly detection | Deep Denoising Autoencoder | MIMII | 5101 | 96.31% | Improved generalization in noisy environments using denoising | Struggles with rare event classes |

| [43] | Diesel engine classification | ANN, CNN | DEFault | 3500 | 99.37% | Benchmarked performance across noise levels | The dataset may not reflect real-world complexity |

| [44] | Diesel engine diagnostics | CNN + Attention | Real engine data | N/A | 98.17% | Built a full-cylinder diagnostic model from single-cylinder data | Scalability to multi-class real-time settings has not been tested |

| [45] | Bearing fault detection | LSTM-AE + GCN | CWRU | N/A | 97.3% | Integrated temporal and spatial graph structures | Model complexity increases training cost |

| [46] | Low-bit-depth signals | MLP | MaFaulDa | 1951 | 99.34% | Achieved fault type/severity classification in low-quality signals | Limited to low-res sensor environments |

| [49] | Emergency signal detection | DSP + Visual Alerts | Siren dataset | N/A | 99% | Developed a visual emergency alert system for the hearing-impaired | Experimental, no large-scale deployment |

| [50] | Emergency siren detection | CNN | Real-world | ~3000 | 98.24% | Built a CNN model for distinguishing sirens and horns | The model’s robustness in high-noise traffic has not been proven |

| [53] | Smart home emergencies | Voice/audio-based | A3Novelty, ITAAL | ~4500 | 95% | Integrated voice command and emergency detection in an embedded system | Limited hardware support |

| [54] | SPH safety monitoring | Deep learning + sound recognition | Indoor acoustics | ~2000 | 90.86% | Monitored behavioral patterns in single-person homes | The dataset may not cover all critical events |

| [55] | Indoor emergency detection | CNN + SER | Collected from the web | 692 | F1 = 77.32 | Used sound events to identify threats in indoor environments | Lacks real noise scenarios |

| [56] | Emergency in multi-person spaces | Perception sensor network | Lab recordings | N/A | 85% | Used audio-visual fusion for localized scream detection | Constrained to controlled testbeds |

| [57] | Emergency alert interface | YAMNet | Custom siren dataset | ~800 | 93.68% | Built a driver-aid alert system for emergency signals | Event diversity limited |

| [58] | Railway bearing diagnostics | MFCC + MLP | Real-world airborne data | 25,000 | 97.04% | Demonstrated reliability of airborne audio under real rail conditions | Deployment requires consistent field conditions |

| [59] | Engine ball bearing fault | CNN + ResNet | NASA bearing | 9463 | 91% | Detected bearing flaws using spectral analysis | Limited generalization due to specific component training |

| [60] | Industrial sound fault isolation | Sound separation + 1D-CNN | Mixture dataset | 60 stem files | 99.58% | Proposed a fault-isolation pipeline for overlapping audio events | High processing overhead for source separation |

| [61] | Industrial machinery diagnosis | Integrated binary + multi-class model | MIMII, ToyADMOS | 5101 (MIMII), N/A (ToyADMOS) | 93% (MIMII), 98% (ToyADMOS) | Achieved general-purpose classification across fault types | Requires fine-tuning per machinery setup |

| [12] | Smart city diagnostics | BOWSVFS + Ensemble ML (LR, MLP, AdaBoost) | DB1, DB2, DB3 (custom) | 1164 | DB1: 91.04%, DB2: 88.85%, DB3: 86.85% | Proposed an inclusive ML framework for sound-based fault and emergency detection; introduced three curated datasets; addressed accessibility with visual feedback. | Limited real-world validation; segmentation may miss finer temporal details |

| [65] | Engine fault detection | Multimodal (acoustic + vibration) + Deep Learning | Internal dataset | 2643 | 83% | Proposed fine-grained engine fault detection using multimodal signals | The dataset is limited to specific engine types |

| [66] | Vehicle sound profiling | Neural Networks (CNN, RNN) | Real-world recordings | 6255 | 86.8% | Built an AI-based mechanic system for acoustic vehicle characterization | Limited performance in high background noise conditions |

| [67] | Environmental sound classification | CNN + Data Augmentation | ESC-50 dataset | 8732 | 89.5% | Demonstrated data augmentation benefits for environmental sound classification | Limited to environmental rather than mechanical faults |

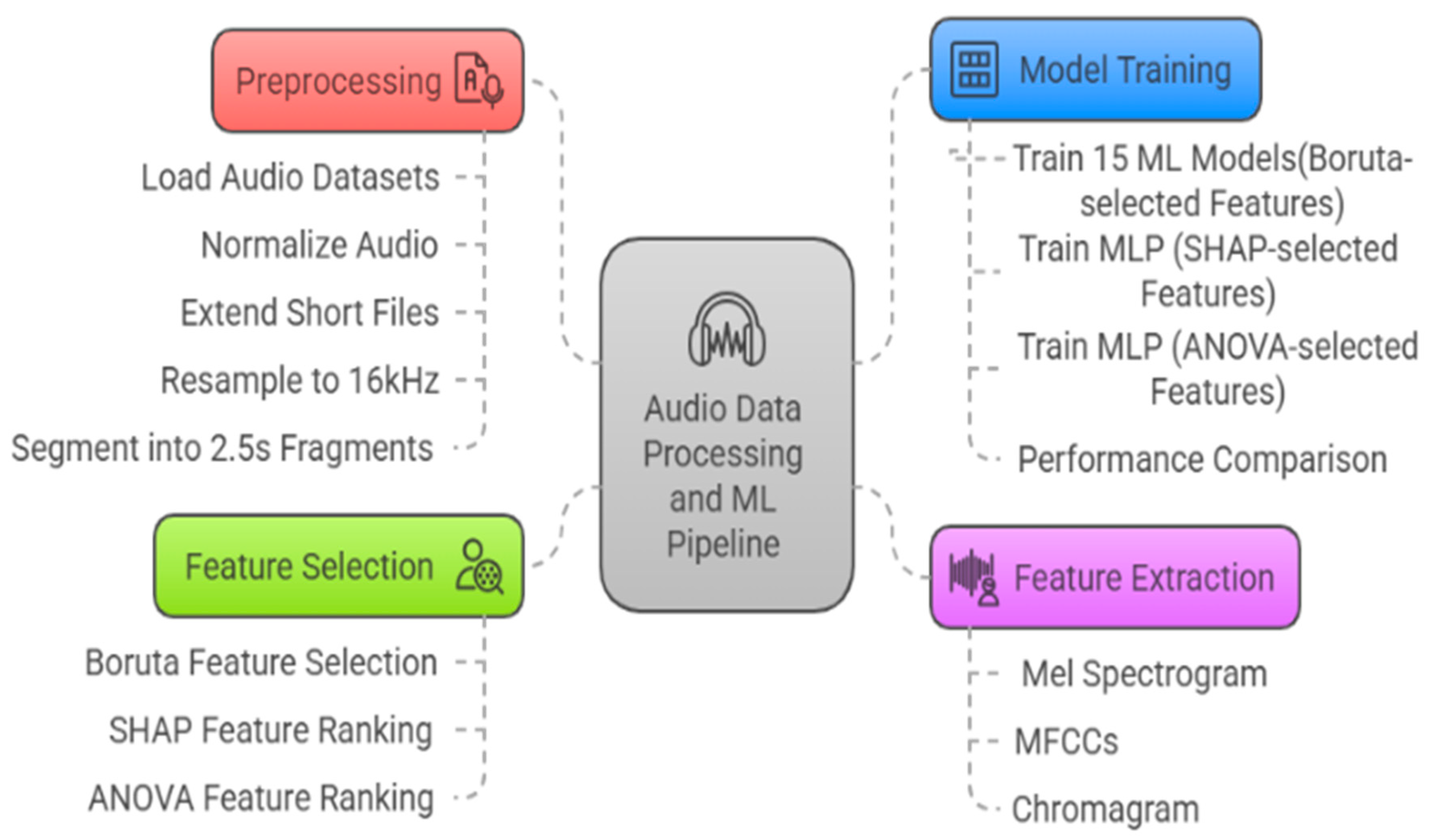

4. Methodology

4.1. Dataset Description

- Field recordings from operational vehicles under real driving conditions.

- Controlled recordings of specific mechanical faults, collected in collaboration with automotive workshops.

- Publicly available audio repositories for environmental and emergency sounds (e.g., sirens, animal calls, weather events).

4.2. Audio Preprocessing

1. Normalization and Standardization

2. Duration Alignment

3. Segmentation into Fixed-Length Clips

4. Sliding Window Consideration
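The four preprocessing steps can be illustrated with a short NumPy sketch. The source names the steps but not their parameters, so peak normalization, zero-padding, the 16 kHz sample rate, the 1 s clip length, and the 50% overlap are all assumptions chosen only for illustration.

```python
import numpy as np


def peak_normalize(signal, eps=1e-12):
    """Scale a waveform so its largest absolute sample is 1.0."""
    peak = np.max(np.abs(signal))
    return signal / max(peak, eps)


def align_duration(signal, target_len):
    """Zero-pad or truncate a waveform to exactly target_len samples."""
    if len(signal) >= target_len:
        return signal[:target_len]
    return np.pad(signal, (0, target_len - len(signal)))


def segment(signal, clip_len, hop_len):
    """Cut a waveform into fixed-length clips.

    hop_len < clip_len yields overlapping (sliding-window) clips.
    """
    if len(signal) < clip_len:
        raise ValueError("signal is shorter than one clip")
    starts = range(0, len(signal) - clip_len + 1, hop_len)
    return np.stack([signal[s:s + clip_len] for s in starts])


sr = 16000                                   # hypothetical sample rate (Hz)
rng = np.random.default_rng(0)
raw = 0.3 * rng.standard_normal(int(2.5 * sr))  # synthetic 2.5 s recording

audio = align_duration(peak_normalize(raw), 3 * sr)    # pad to 3 s
clips = segment(audio, clip_len=sr, hop_len=sr // 2)   # 1 s clips, 50% overlap
print(clips.shape)
```

With a hop equal to the clip length the same function produces non-overlapping segments, so the sliding-window variant is a one-parameter change.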

4.3. Feature Extraction

- Mel Spectrograms—The mean and standard deviation of energy across Mel frequency bands were computed for each clip, yielding 2 descriptors that summarize spectral energy distribution.

- Chroma Features—Audio energy was mapped to the 12 pitch classes of the musical octave, capturing harmonic and pitch-related content. Each class’s mean and standard deviation were computed, producing 24 descriptors [72].

Algorithm 1: Compact Feature Representation (52 features)

Compute each feature’s mean and standard deviation to create a concise representation suitable for machine learning.
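The 52-dimensional representation can be sketched as follows, assuming that frame-level Mel, MFCC, and Chroma matrices have already been computed (for example with an audio library such as librosa, which the source does not name). The random matrices, the 128 Mel bands, and the frame count are placeholders; only the 13 MFCCs and 12 pitch classes come from the text.

```python
import numpy as np


def summarize_features(mel_db, mfcc, chroma):
    """Collapse frame-level matrices (rows = bands or coefficients,
    columns = frames) into one vector of means and standard deviations."""
    mel_stats = np.array([mel_db.mean(), mel_db.std()])        # 2 descriptors
    mfcc_stats = np.concatenate([mfcc.mean(axis=1),
                                 mfcc.std(axis=1)])            # 13 * 2 = 26
    chroma_stats = np.concatenate([chroma.mean(axis=1),
                                   chroma.std(axis=1)])        # 12 * 2 = 24
    return np.concatenate([mel_stats, mfcc_stats, chroma_stats])


rng = np.random.default_rng(0)
n_frames = 94                                  # hypothetical frame count
mel_db = rng.normal(size=(128, n_frames))      # placeholder Mel spectrogram (dB)
mfcc = rng.normal(size=(13, n_frames))         # placeholder MFCC matrix
chroma = rng.random(size=(12, n_frames))       # placeholder chromagram

features = summarize_features(mel_db, mfcc, chroma)
print(features.shape)
```

The count works out as 2 (Mel) + 26 (MFCC) + 24 (Chroma) = 52, independent of clip duration, which is what makes the representation fixed-length.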

4.4. Experimental Design

5. Results and Discussion

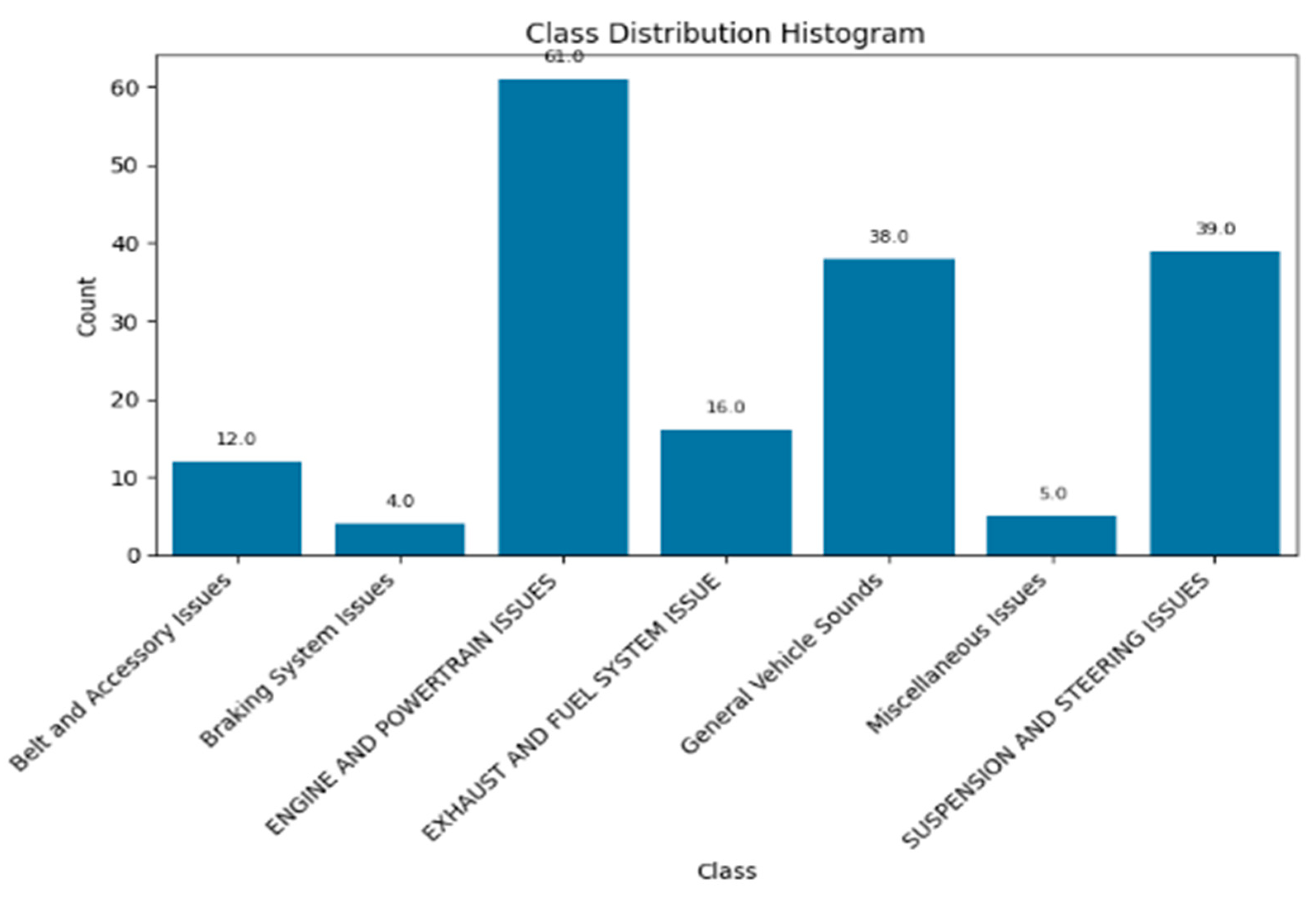

- Dataset 1: Vehicular Acoustic Fault Dataset (VAFD) focuses on sounds associated with vehicular anomalies (e.g., engine, powertrain, suspension, steering).

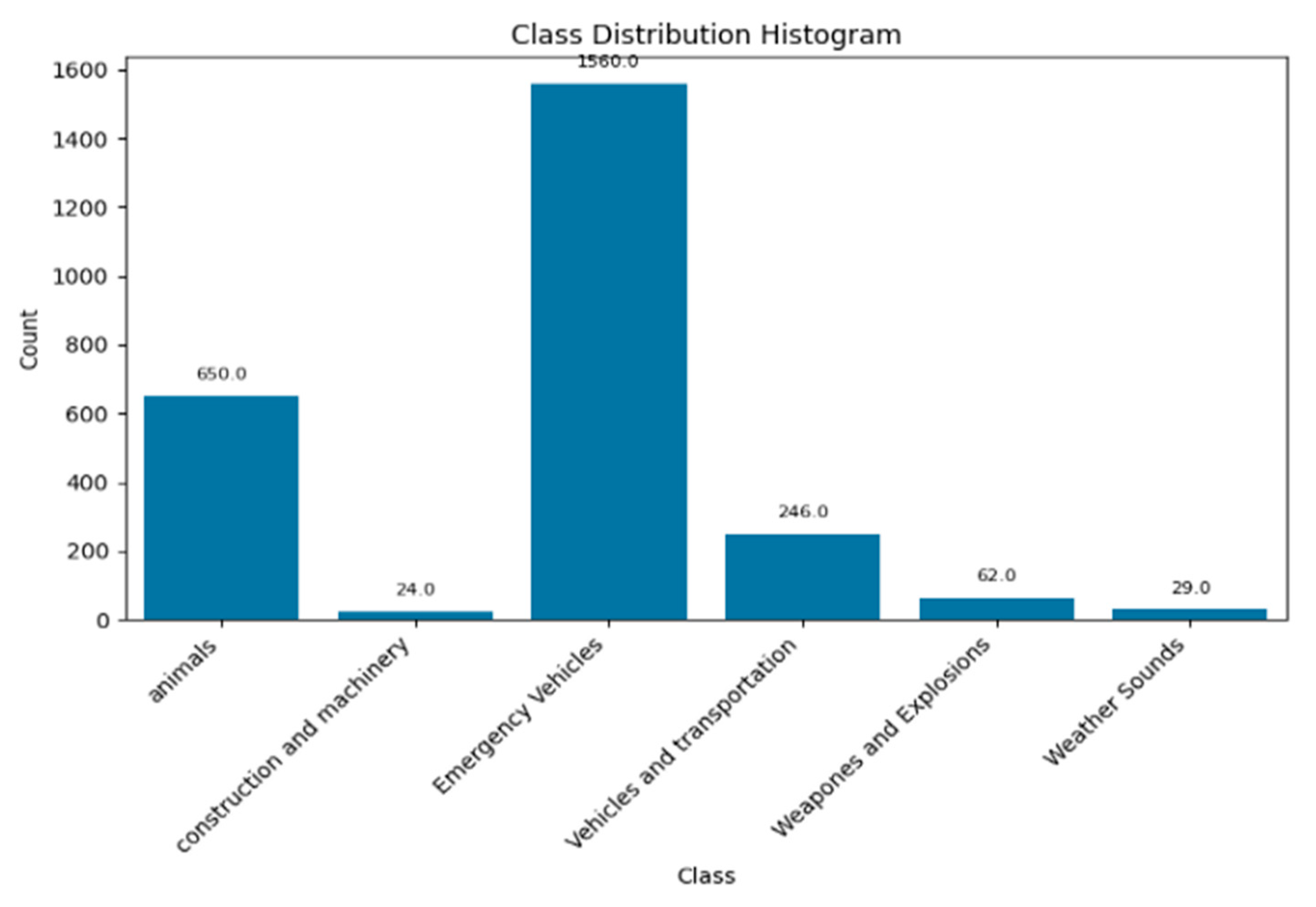

- Dataset 2: Urban Emergency and Ambient Sound Dataset (UEASD) includes emergency sirens, environmental sounds, animal calls, and construction-related noise.

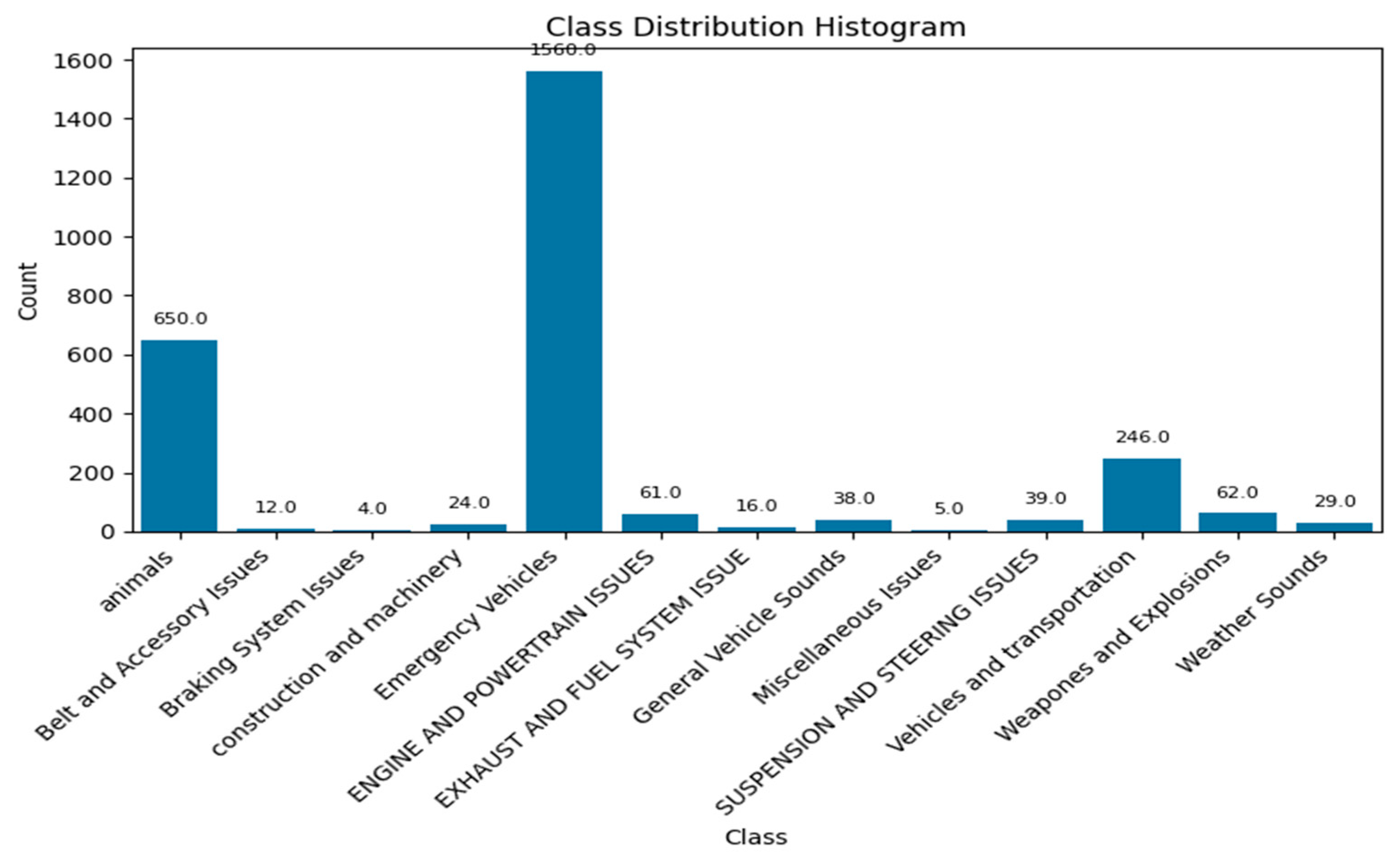

- Dataset 3: Integrated Vehicle and Environmental Sound Dataset (IVESD) merges VAFD and UEASD to increase classification complexity and assess system robustness in multi-domain contexts.

5.1. Performance Metrics

- Accuracy reflects overall correctness, but can be misleading with imbalanced datasets.

- Precision emphasizes the cost of false alarms, while recall (sensitivity) highlights the importance of capturing all critical events.

- F1-score balances precision and recall.

- Specificity measures the ability to avoid false alarms in non-critical cases.

- MCC provides a balanced evaluation even under class imbalance.

- LogLoss captures the confidence of probabilistic predictions.

- Cohen’s Kappa measures agreement beyond chance.

- AUC-ROC evaluates the trade-off between sensitivity and specificity across thresholds.
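As a worked illustration of how several of these metrics relate to one another, the sketch below derives them from a single binary confusion matrix. The counts and probabilities are hypothetical; the study itself is multi-class, where per-class values would be averaged in a way the source does not specify. AUC-ROC is omitted because it requires ranked scores rather than counts.

```python
import math


def binary_metrics(tp, fp, fn, tn):
    """Metrics derived from the four cells of a binary confusion matrix."""
    n = tp + fp + fn + tn
    accuracy = (tp + tn) / n
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)                 # sensitivity
    specificity = tn / (tn + fp)
    f1 = 2 * precision * recall / (precision + recall)
    mcc = (tp * tn - fp * fn) / math.sqrt(
        (tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    # Cohen's kappa: observed agreement corrected for chance agreement
    p_chance = ((tp + fp) * (tp + fn) + (fn + tn) * (fp + tn)) / n ** 2
    kappa = (accuracy - p_chance) / (1 - p_chance)
    return {"accuracy": accuracy, "precision": precision, "recall": recall,
            "specificity": specificity, "f1": f1, "mcc": mcc, "kappa": kappa}


def log_loss(y_true, p_pos, eps=1e-15):
    """Mean negative log-likelihood of the true labels (binary case)."""
    total = 0.0
    for y, p in zip(y_true, p_pos):
        p = min(max(p, eps), 1 - eps)       # avoid log(0)
        total -= y * math.log(p) + (1 - y) * math.log(1 - p)
    return total / len(y_true)


# Hypothetical counts: 40 true alarms, 10 false alarms, 5 misses, 45 true rejections
m = binary_metrics(tp=40, fp=10, fn=5, tn=45)
ll = log_loss([1, 0], [0.9, 0.2])
for name, value in m.items():
    print(f"{name}: {value:.4f}")
print(f"log_loss: {ll:.4f}")
```

In this example accuracy is 0.85 while Cohen's Kappa is 0.70, which shows how chance correction lowers the apparent agreement even on a roughly balanced sample.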

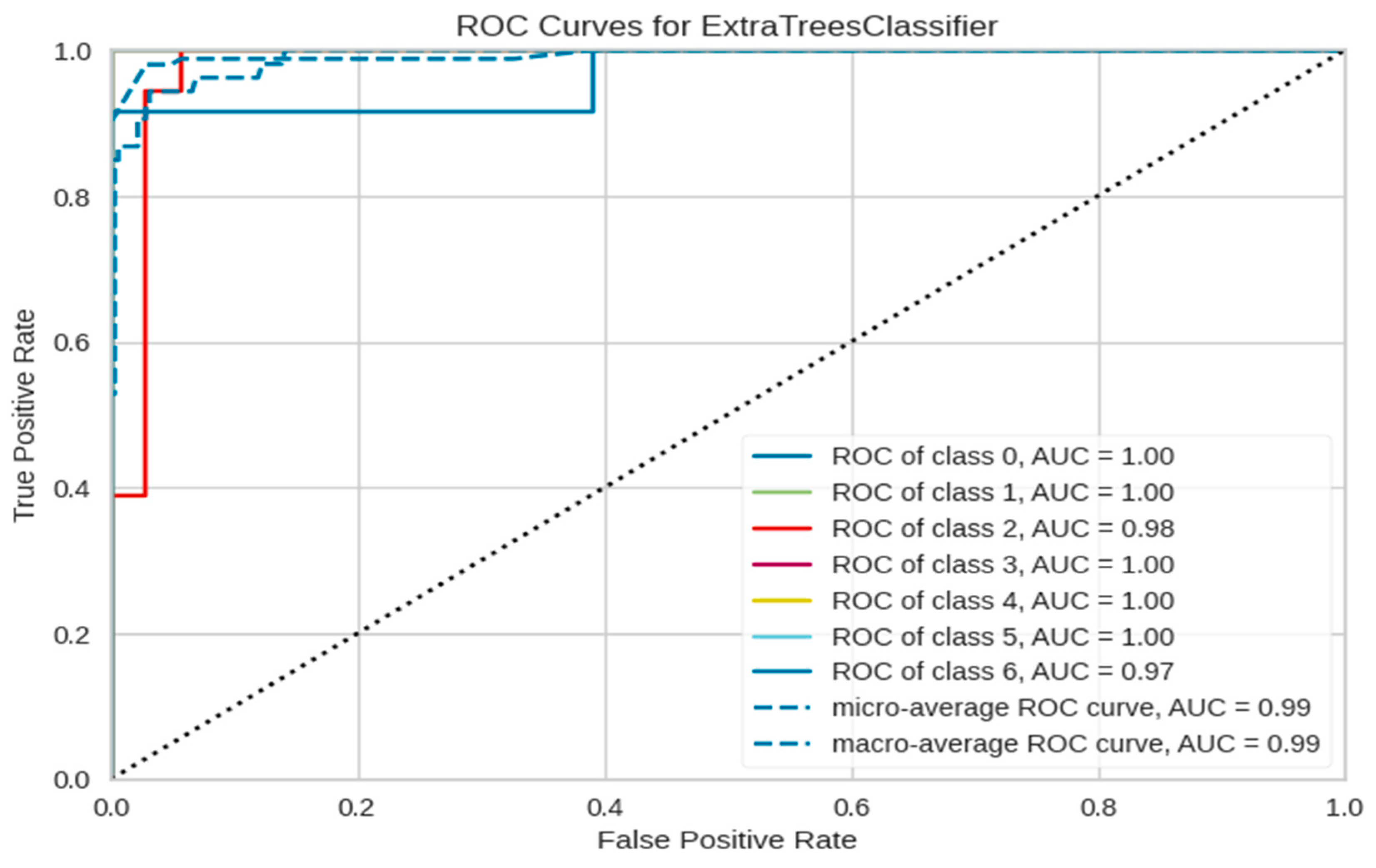

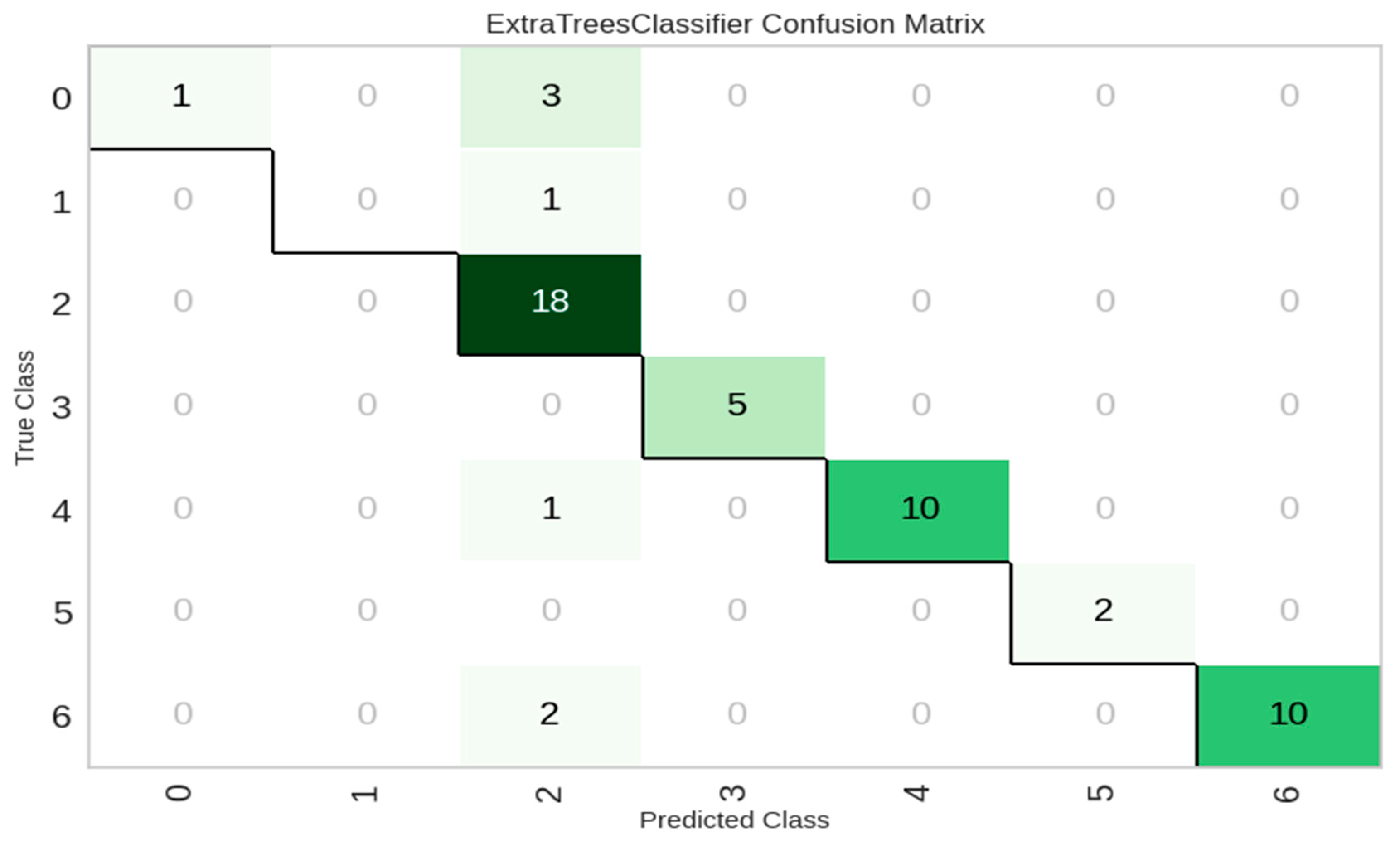

5.1.1. Phase 1—Experiment 1: Performance Analysis on VAFD

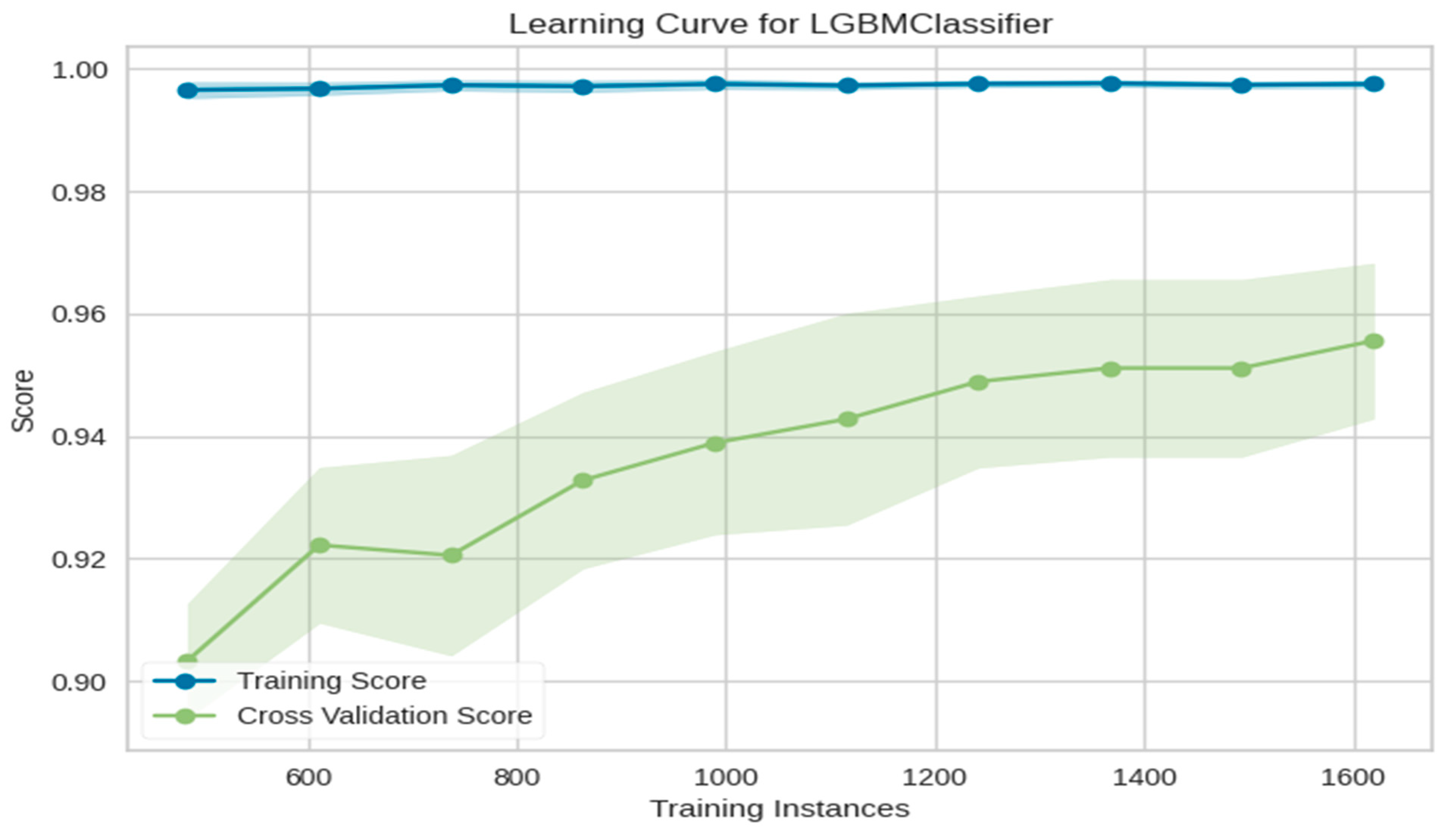

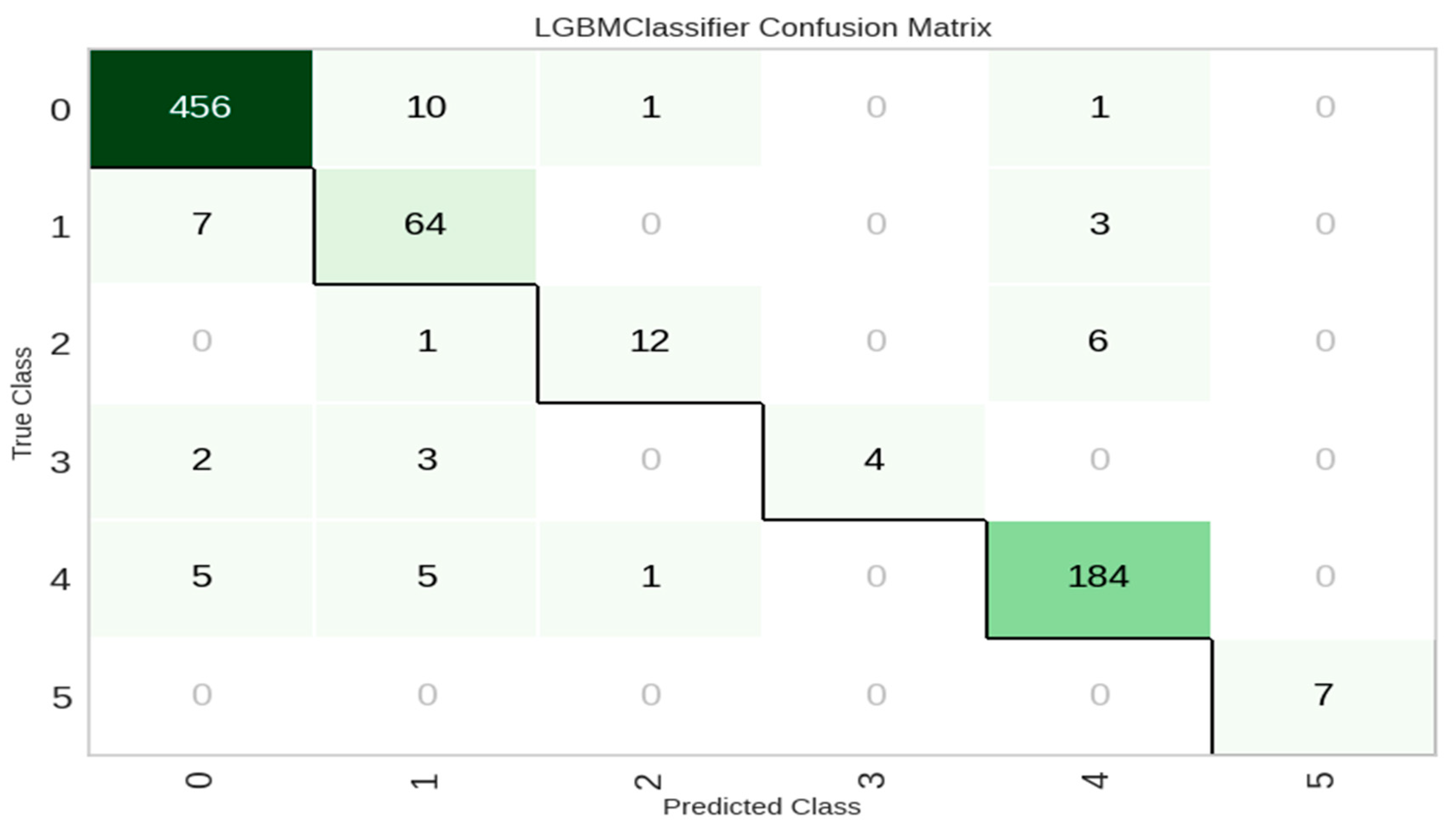

5.1.2. Phase 1—Experiment 1: Performance Analysis of UEASD

5.1.3. Phase 1—Experiment 1: Performance Analysis of IVESD

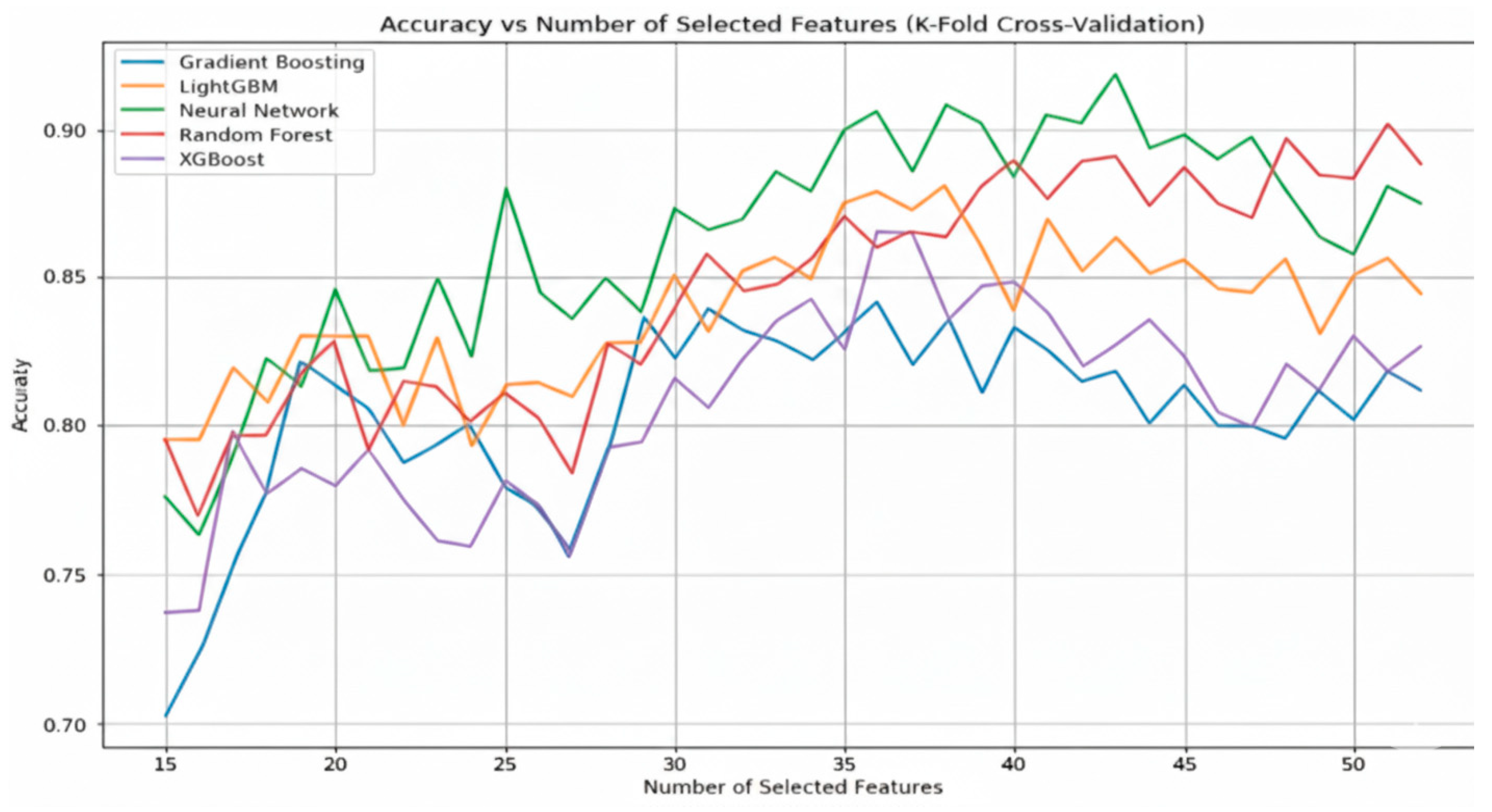

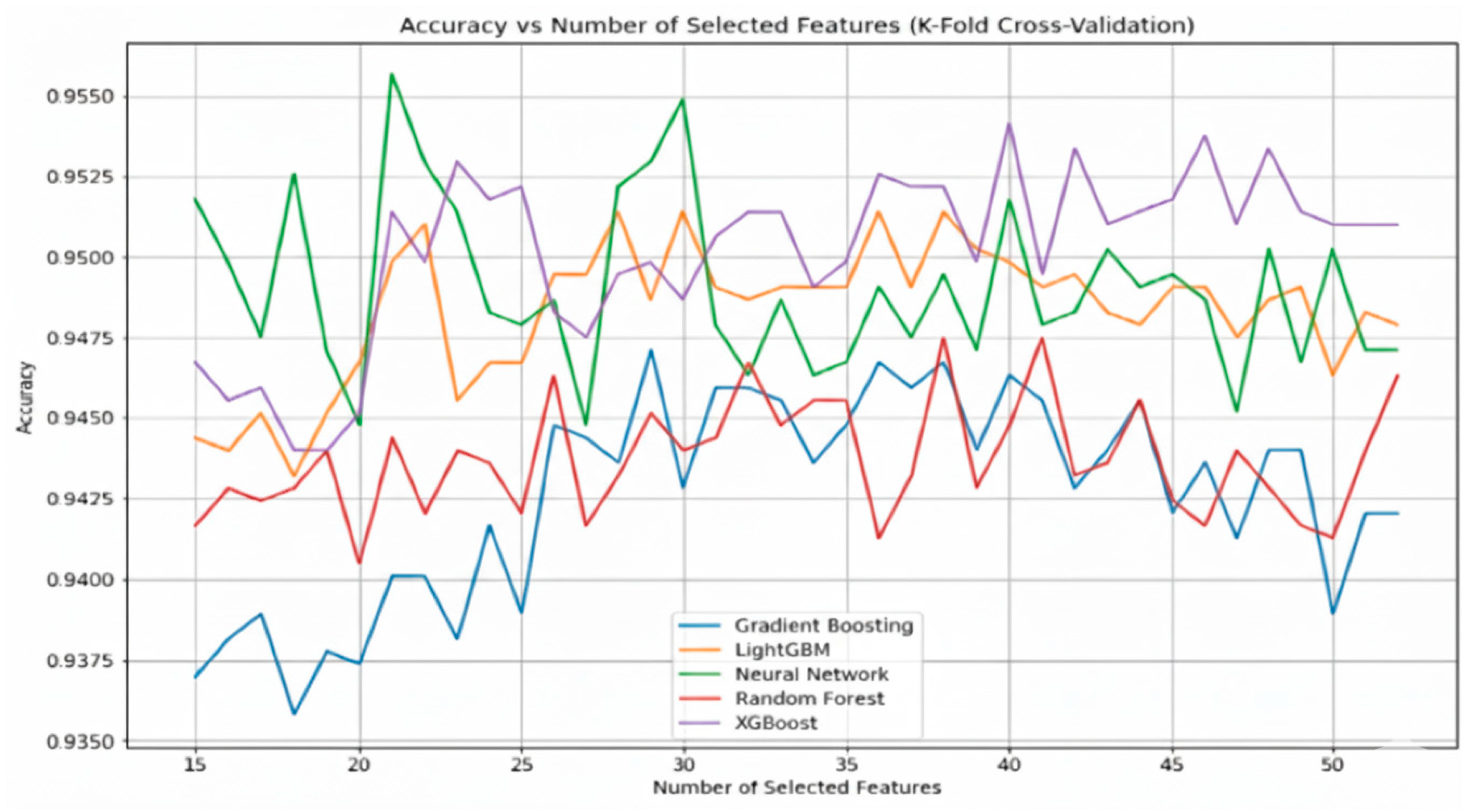

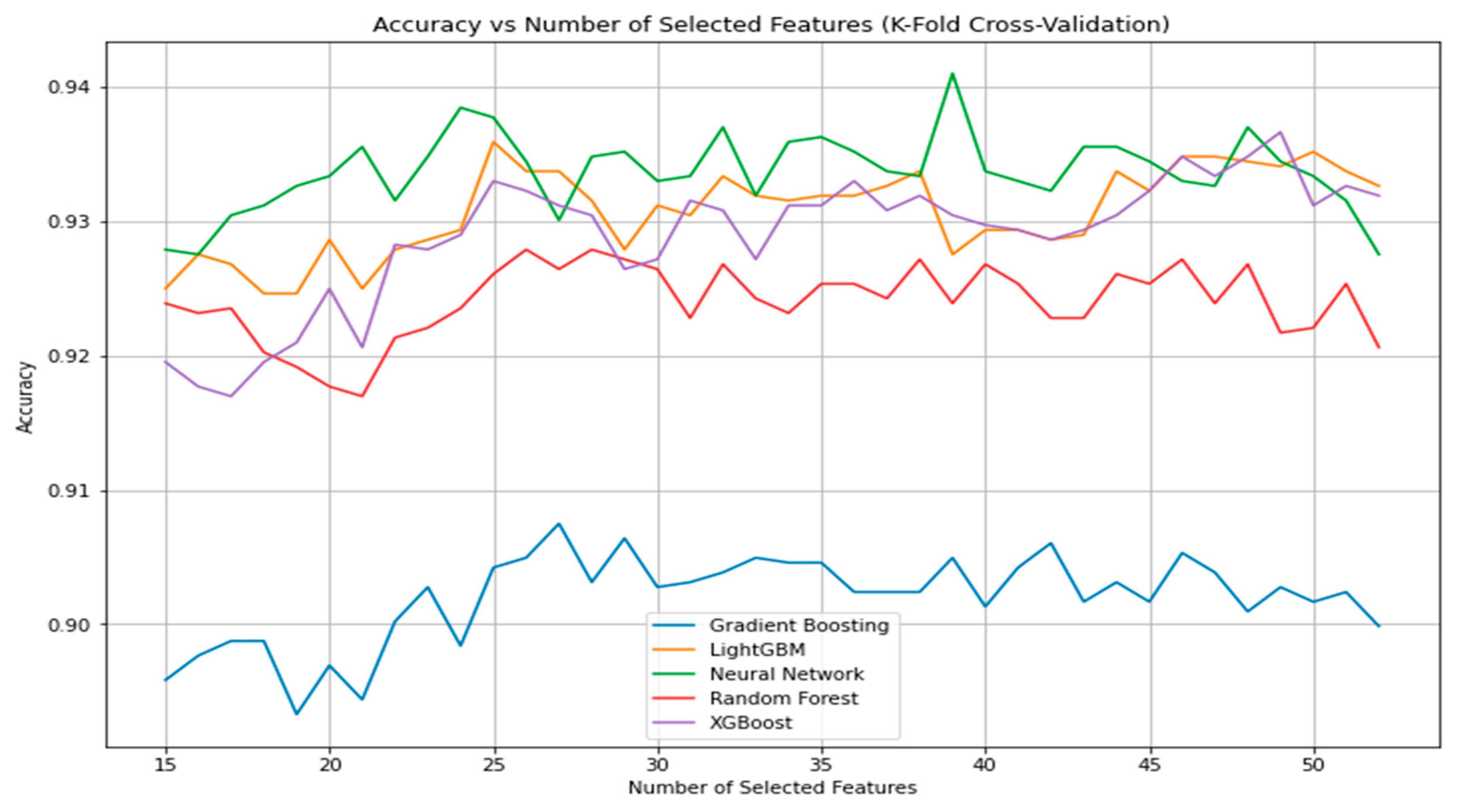

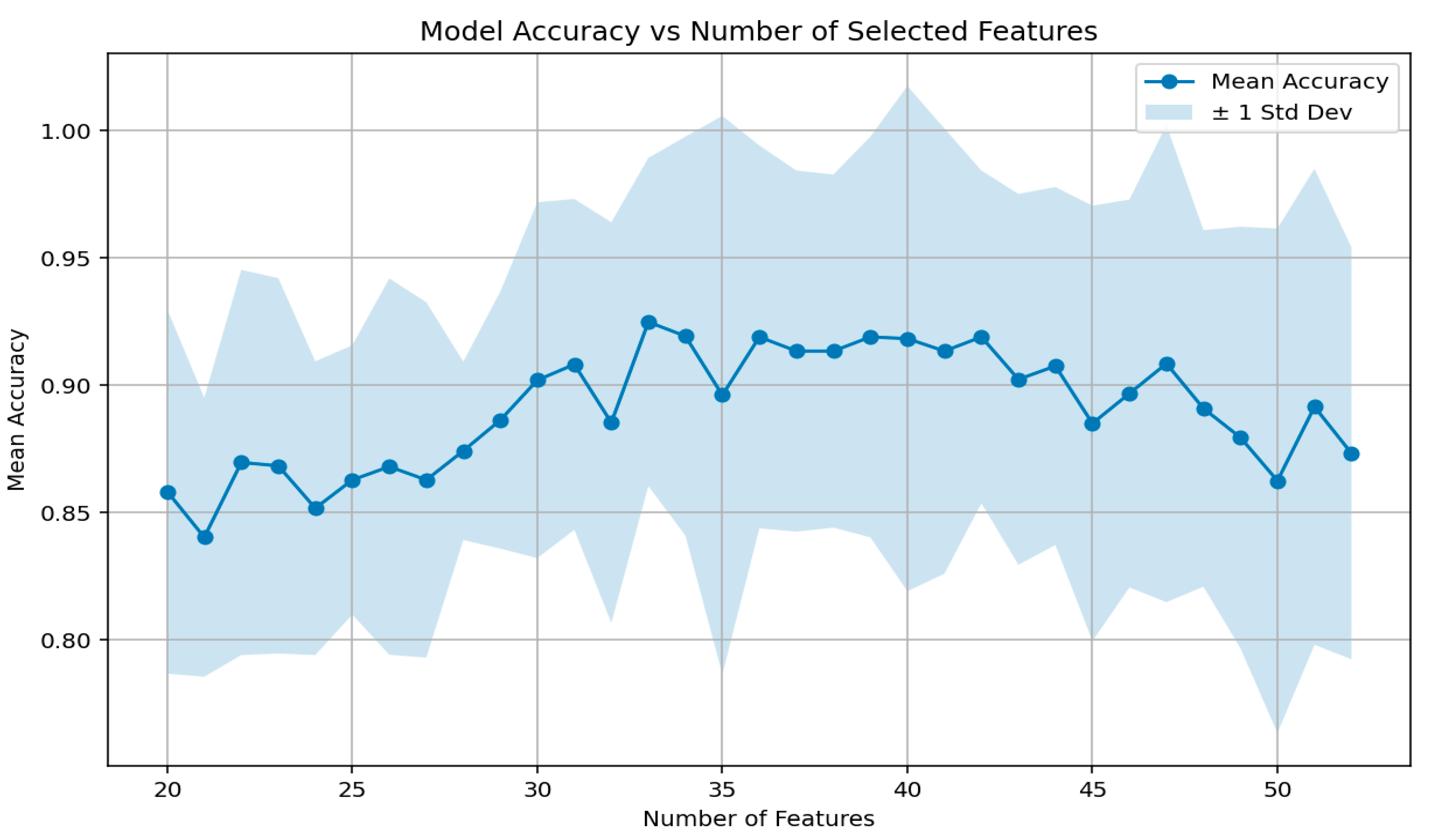

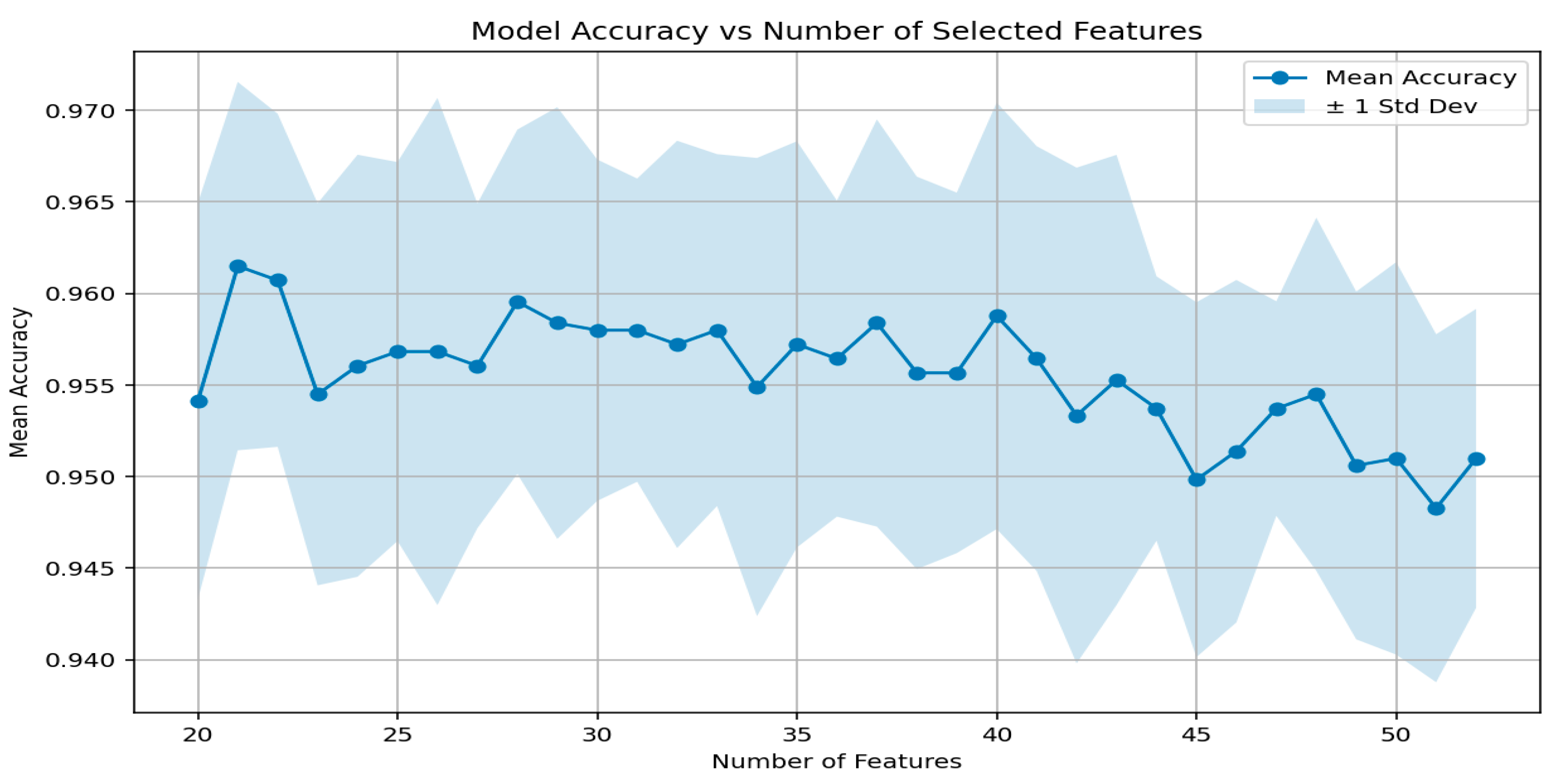

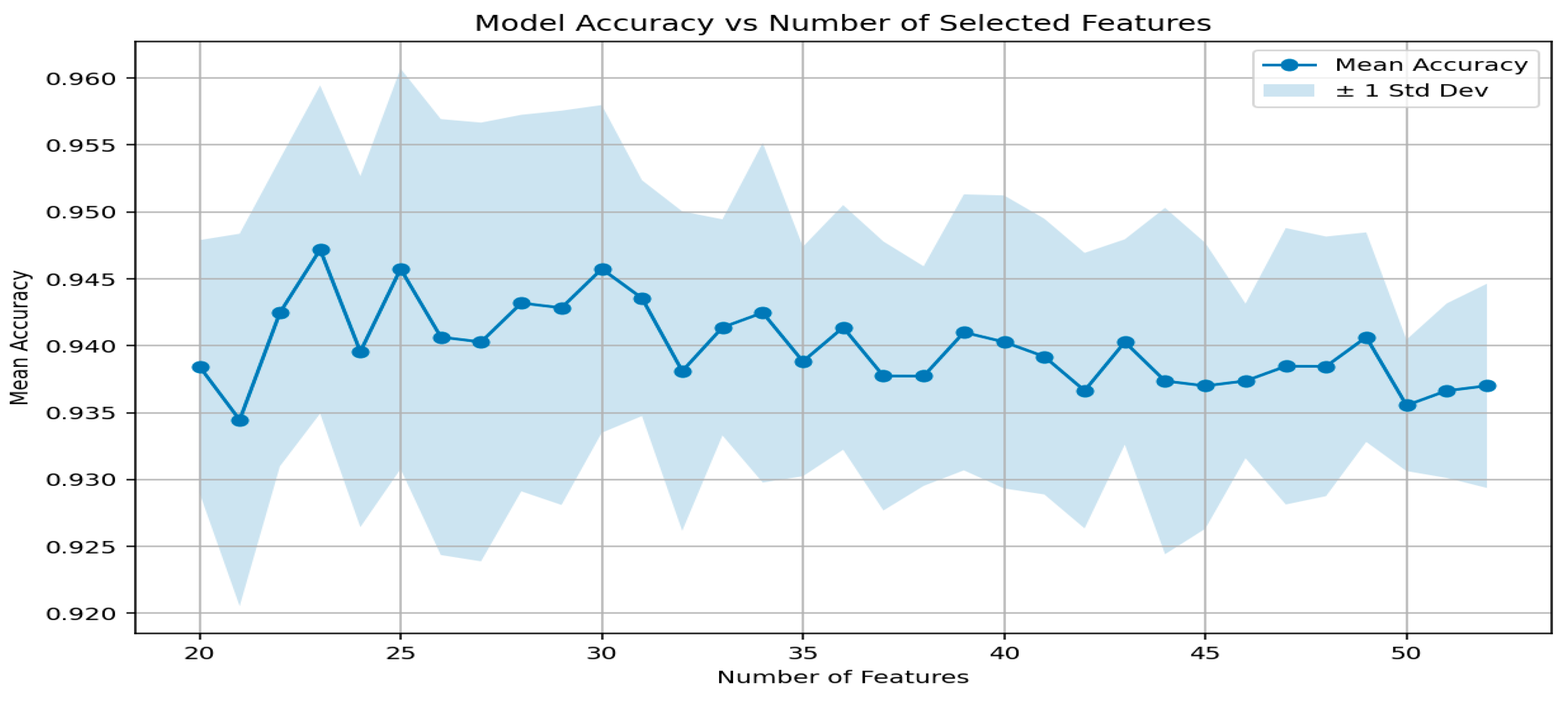

5.2. Phase 1—Experiment 2: Feature Selection Evaluation: Accuracy vs. Number of Selected Features

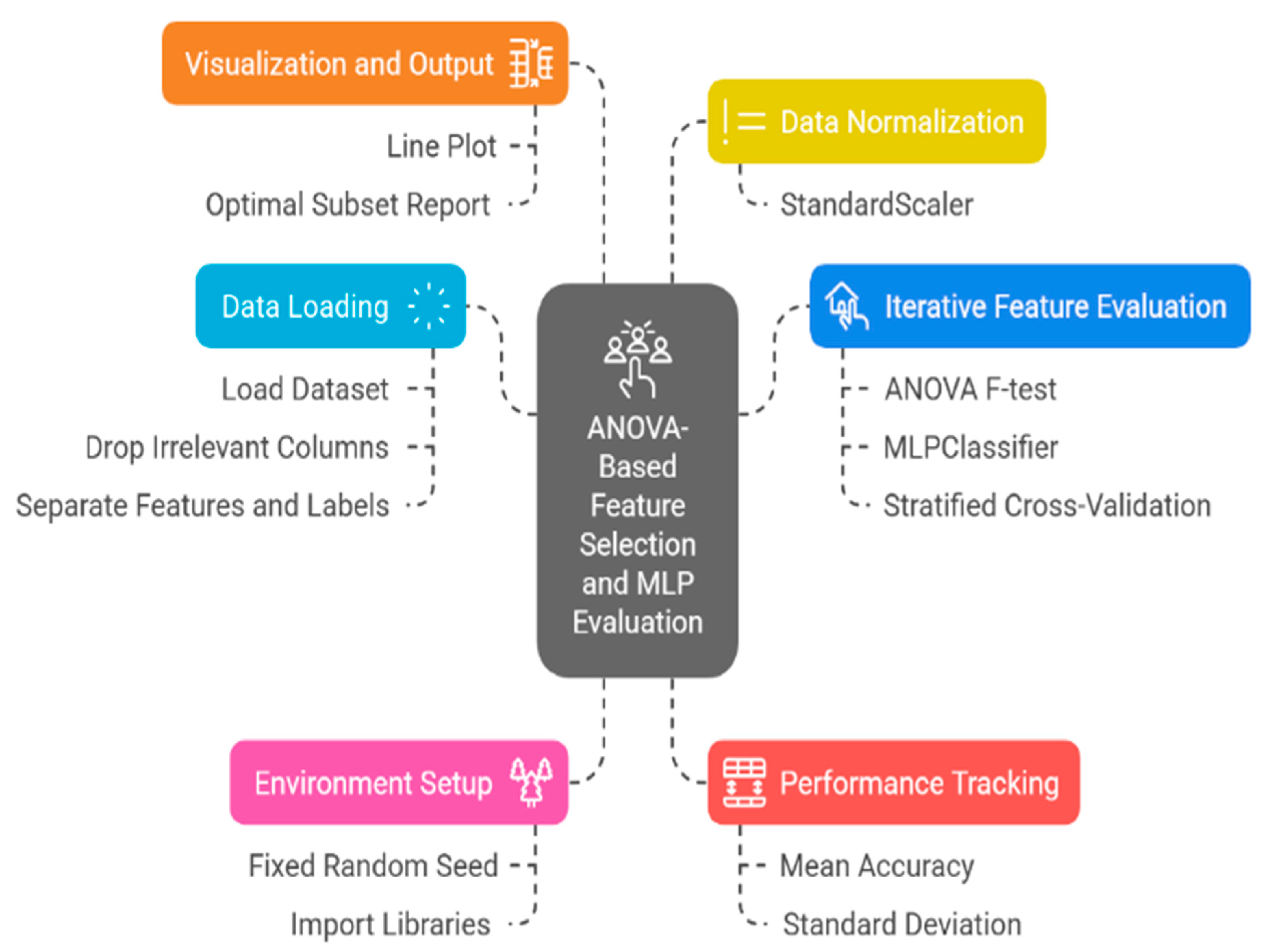

Algorithm 2: Comprehensive Workflow for Feature Selection and Evaluation Using ANOVA and Classical ML Models

1. Environment Setup: Import essential libraries and set a random seed to ensure reproducibility.
2. Data Loading: Load the dataset from a CSV file and separate features from target labels.
3. Target Encoding: Convert categorical target labels into numeric form using label encoding.
4. Label Display: Print the class label mappings for interpretability.
5. Model Initialization: Define a set of ML models (e.g., Gradient Boosting, LightGBM, MLP, Random Forest, XGBoost).
6. Result Storage: Prepare dictionaries to store evaluation metrics.
7. Feature Range Definition: Specify the number of features to test (e.g., from 15 to 52).
8. Feature Ranking via ANOVA: Calculate F-scores and rank features by importance.
9. Cross-Validation: Use K-Fold cross-validation to evaluate performance for each feature subset.
10. Model Training: For each model and fold, train using selected features and compute accuracy.
11. Result Visualization: Plot model accuracy against the number of selected features.
12. Plot Display: Present the results to analyze trends across models and feature counts.
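The ANOVA ranking step of this workflow can be sketched in plain NumPy. The function below computes the one-way ANOVA F statistic per feature (between-class variance over within-class variance) and keeps the top-k features; the synthetic data, in which only the first two columns carry class information, is an assumption made for the example, and the source does not state which implementation it used.

```python
import numpy as np


def anova_f_scores(X, y):
    """One-way ANOVA F statistic for every feature column of X."""
    classes = np.unique(y)
    n_samples, n_features = X.shape
    grand_mean = X.mean(axis=0)
    ss_between = np.zeros(n_features)
    ss_within = np.zeros(n_features)
    for c in classes:
        Xc = X[y == c]
        class_mean = Xc.mean(axis=0)
        ss_between += len(Xc) * (class_mean - grand_mean) ** 2
        ss_within += ((Xc - class_mean) ** 2).sum(axis=0)
    df_between = len(classes) - 1
    df_within = n_samples - len(classes)
    return (ss_between / df_between) / (ss_within / df_within)


def top_k_features(scores, k):
    """Indices of the k highest-scoring features, best first."""
    return np.argsort(scores)[::-1][:k]


# Synthetic data: 3 classes, 6 features, only columns 0 and 1 are informative.
rng = np.random.default_rng(0)
y = np.repeat([0, 1, 2], 40)
X = rng.normal(size=(120, 6))
X[:, 0] += 3.0 * y
X[:, 1] += 1.5 * y

scores = anova_f_scores(X, y)
ranking = top_k_features(scores, k=2)
print(ranking)
```

Sweeping k over the chosen range (for example 15 to 52) and re-evaluating each model on the corresponding columns gives the accuracy-versus-feature-count curves described in the workflow.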

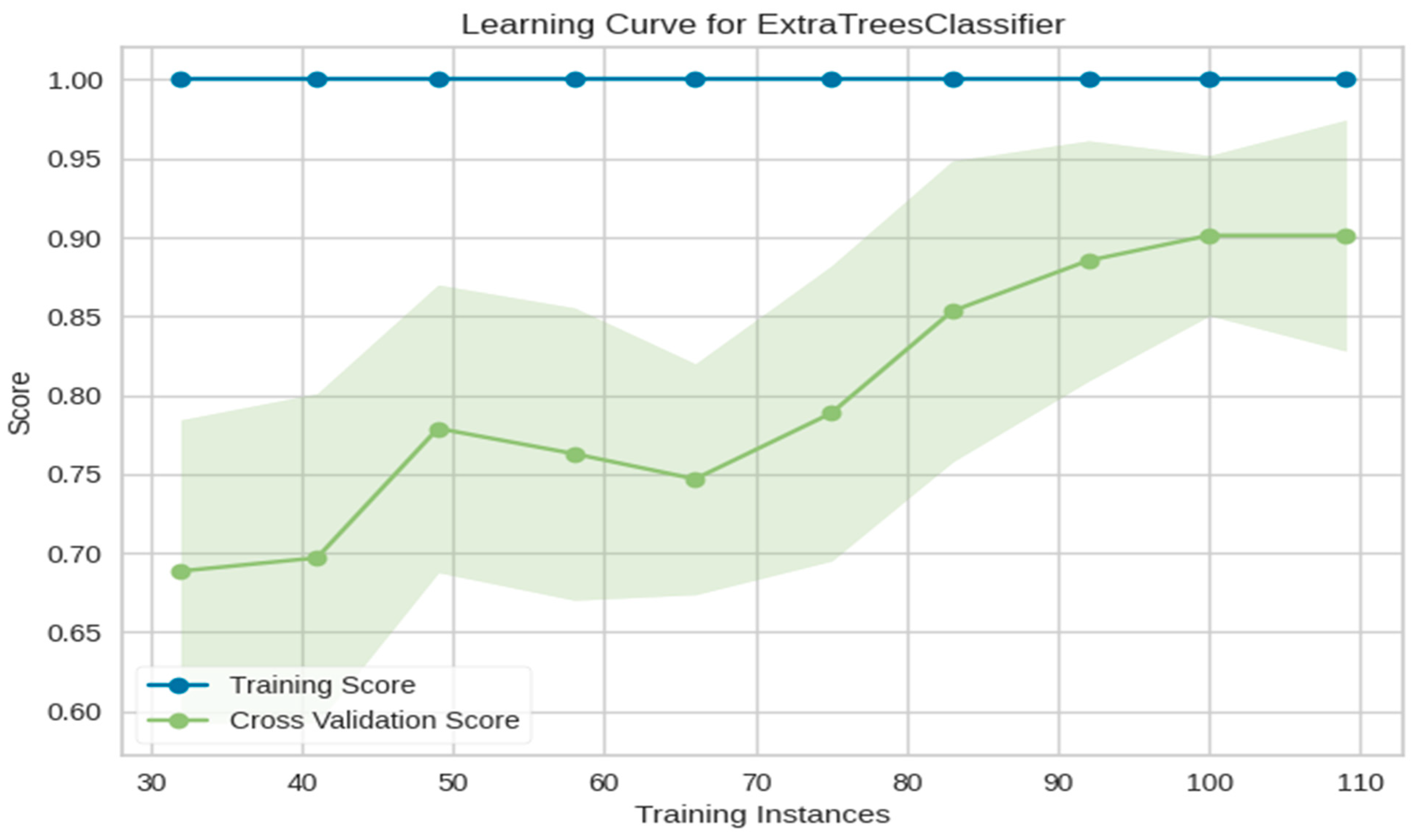

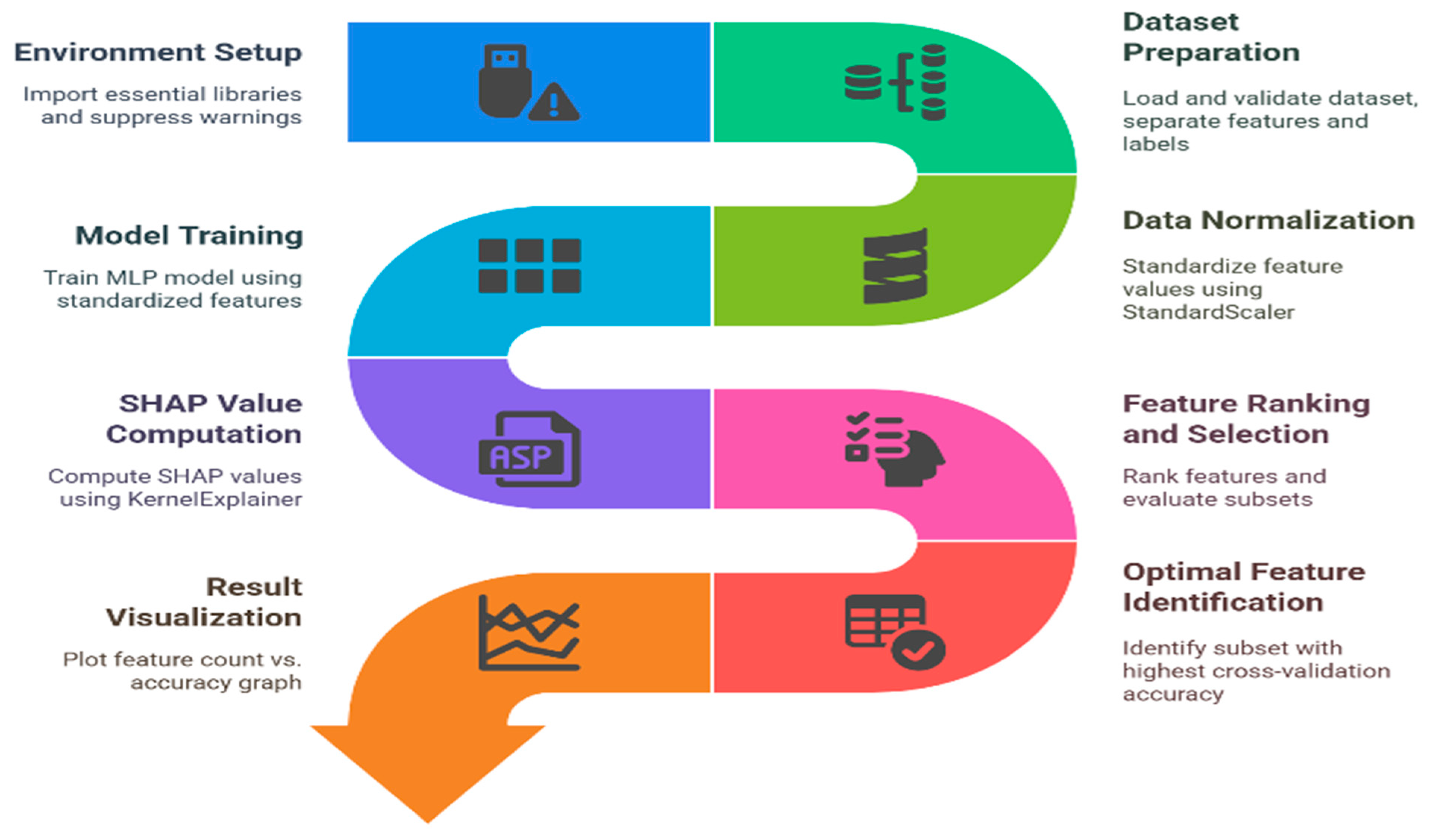

5.3. Phase 2—Experimental Configuration and Pipeline Overview

5.3.1. Phase 2—Experiment 1: Boruta Feature Selection Workflow

Algorithm 3: Boruta Feature Selection Workflow
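The shadow-feature comparison at the core of Boruta can be illustrated with the simplified sketch below. It is not the full algorithm: Boruta uses tree-ensemble importances and a statistical test over many iterations, whereas this sketch swaps in the absolute Pearson correlation with the target as a stand-in importance and simply counts how often each real feature beats the best shadow. The data, the number of rounds, and the function names are hypothetical.

```python
import numpy as np


def importance(X, y):
    """Stand-in importance: |Pearson correlation| of each column with y.
    (Boruta itself uses Random Forest or Extra Trees importances.)"""
    Xc = X - X.mean(axis=0)
    yc = y - y.mean()
    denom = np.sqrt((Xc ** 2).sum(axis=0) * (yc ** 2).sum())
    return np.abs(Xc.T @ yc) / denom


def shadow_hits(X, y, n_rounds=50, seed=0):
    """Count the rounds in which each real feature outscores the best shadow."""
    rng = np.random.default_rng(seed)
    n_features = X.shape[1]
    hits = np.zeros(n_features, dtype=int)
    for _ in range(n_rounds):
        # Shadow features: each column shuffled independently, which keeps
        # its distribution but destroys any relation to the target.
        shadows = np.column_stack(
            [rng.permutation(X[:, j]) for j in range(n_features)])
        scores = importance(np.hstack([X, shadows]), y)
        best_shadow = scores[n_features:].max()
        hits += scores[:n_features] > best_shadow
    return hits


# Synthetic binary problem: columns 0 and 1 are informative, the rest are noise.
rng = np.random.default_rng(1)
y = np.repeat([0, 1], 100)
X = rng.normal(size=(200, 5))
X[:, 0] += 2.0 * y
X[:, 1] += 1.0 * y

hits = shadow_hits(X, y)
print(hits)
```

Features that beat the best shadow in nearly every round are candidates for acceptance, while features that rarely do are candidates for rejection; the repeated shuffling is also what makes the method computationally intensive.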

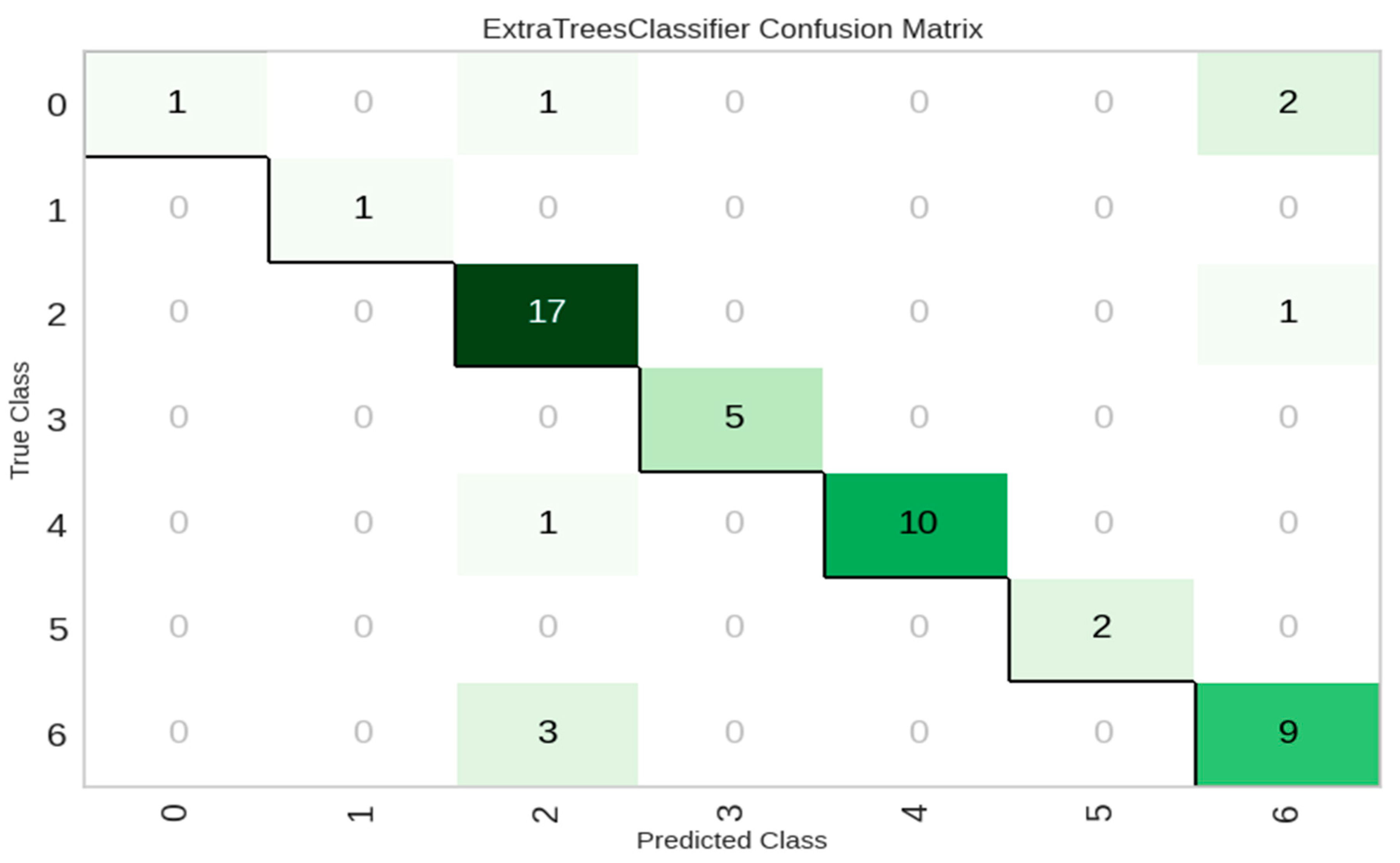

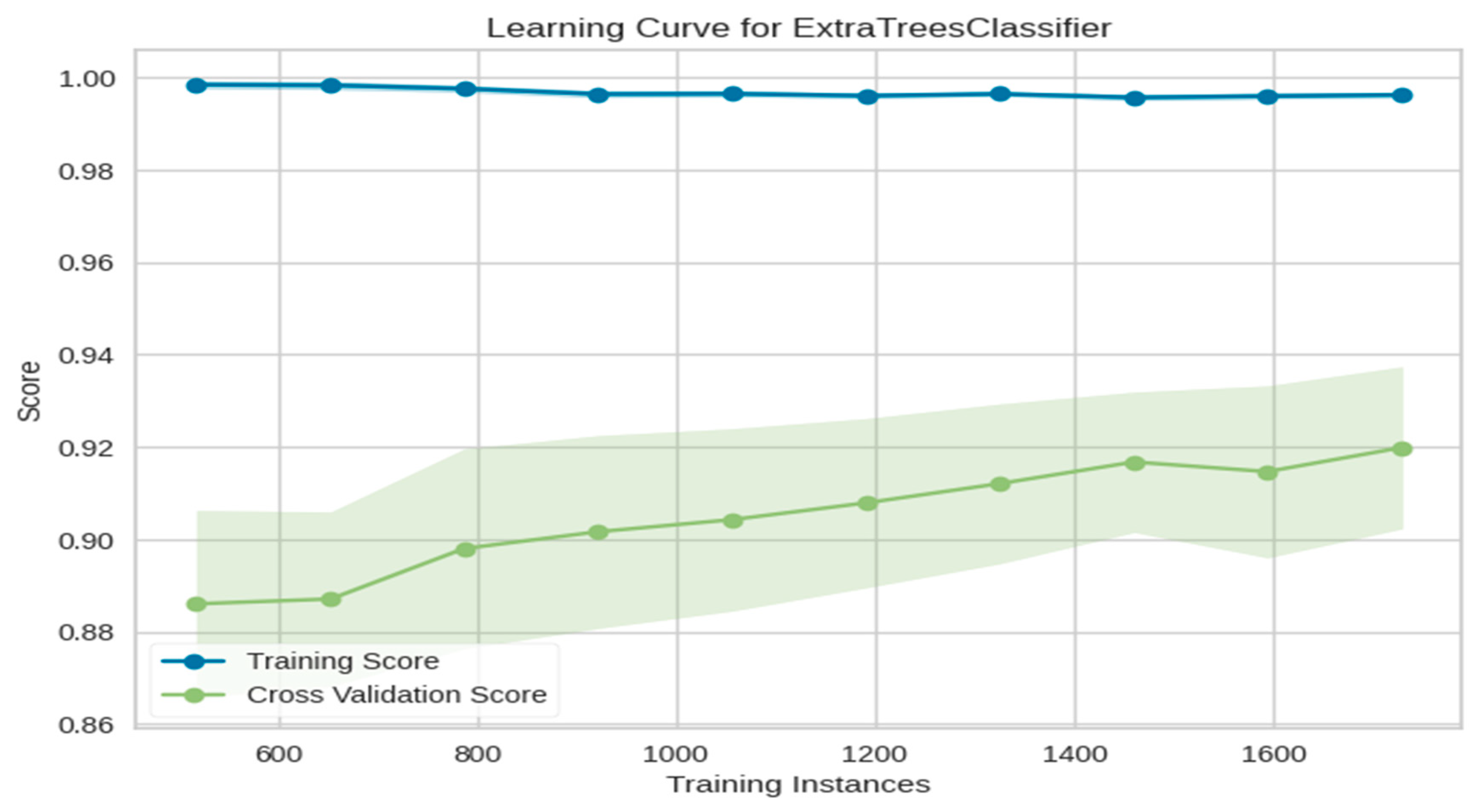

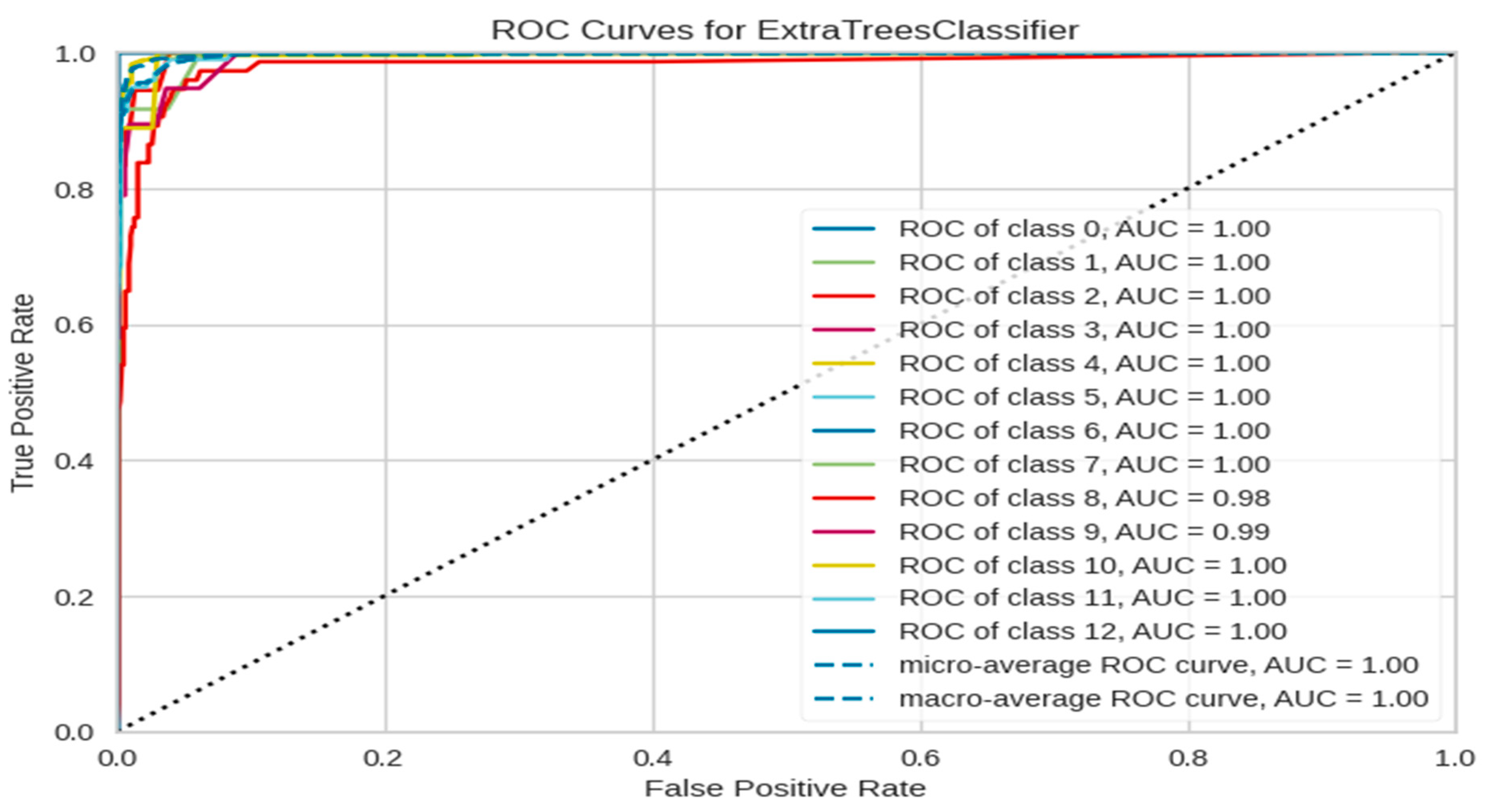

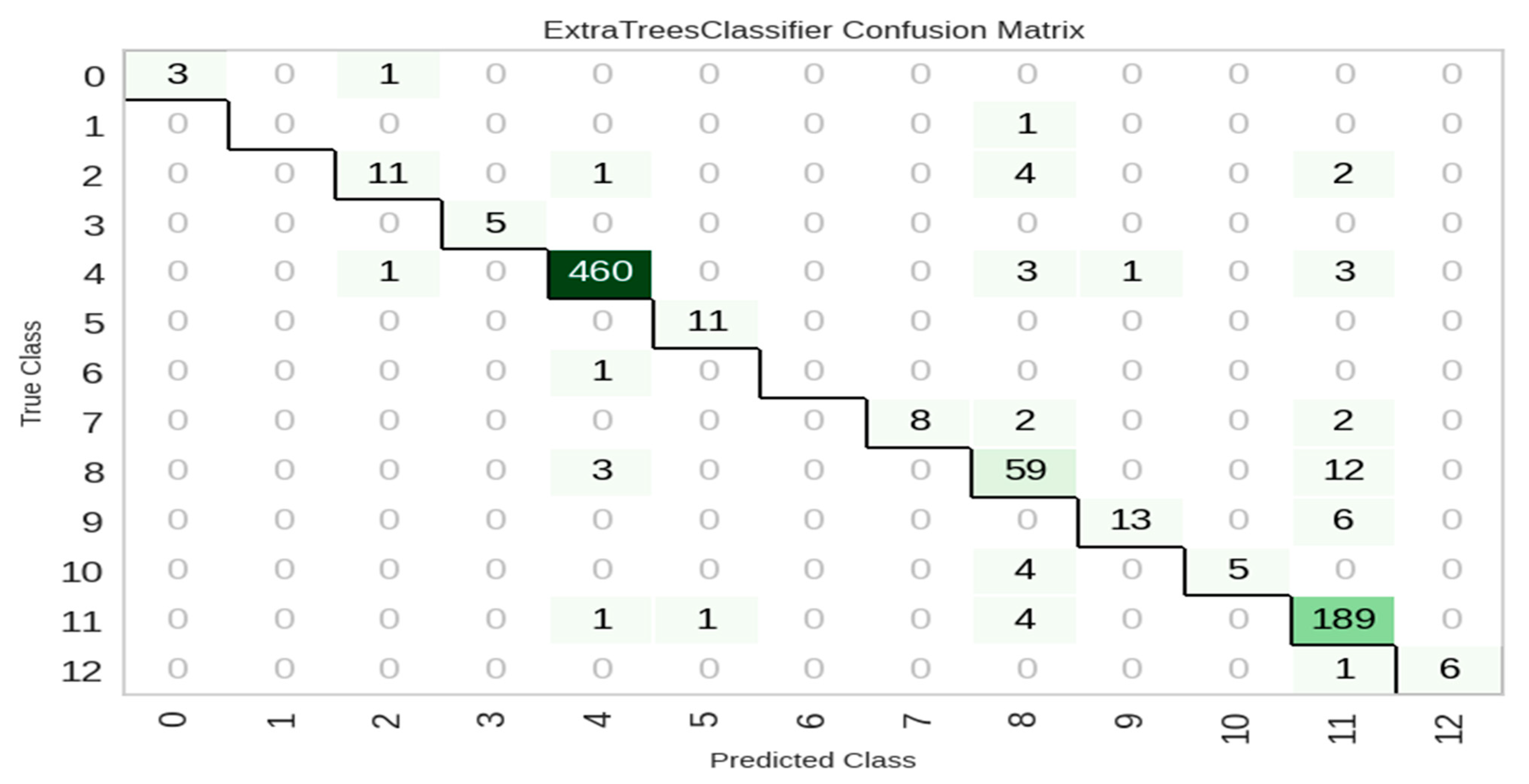

Performance Evaluation on VAFD Using Boruta Feature Selection

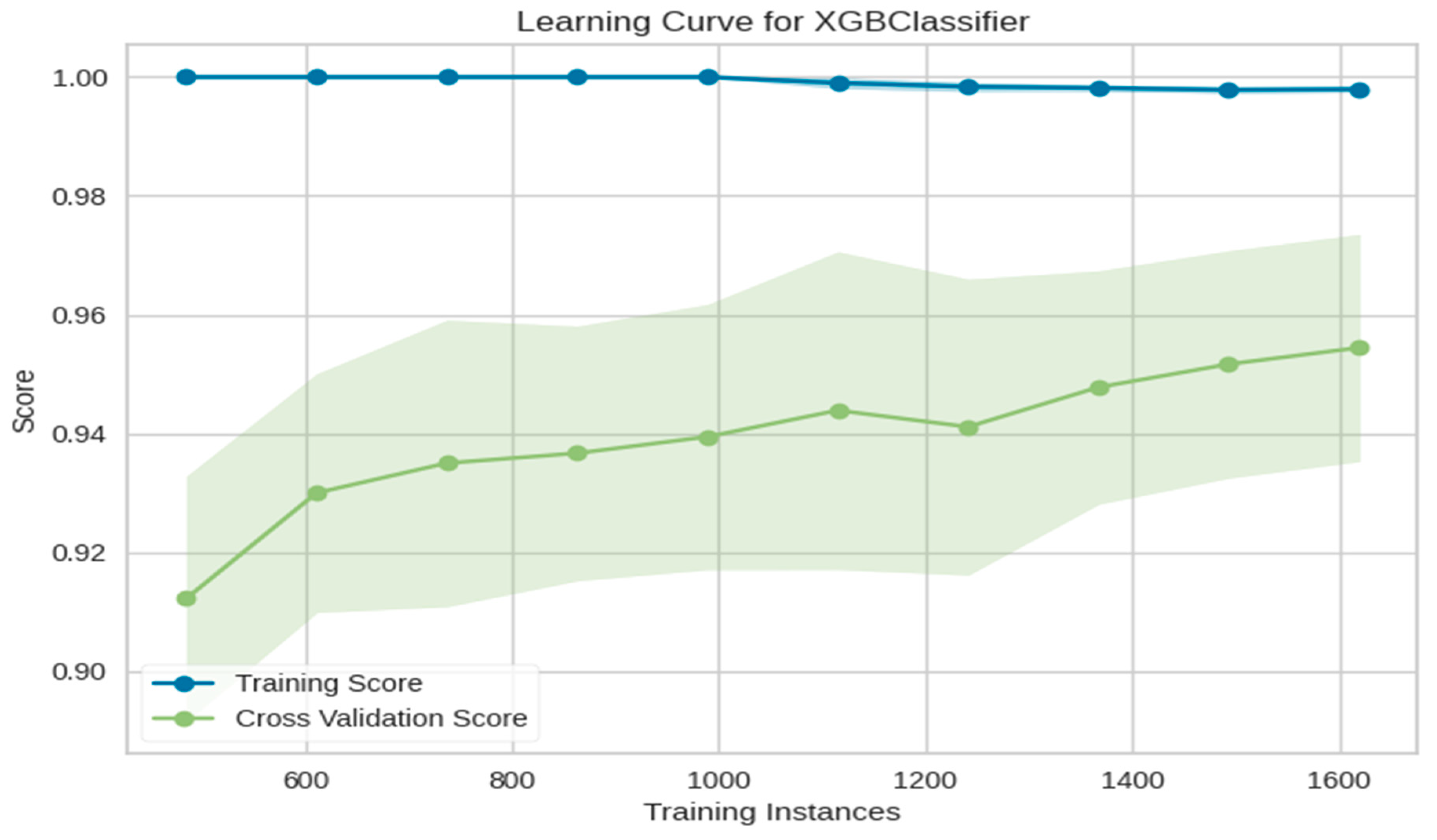

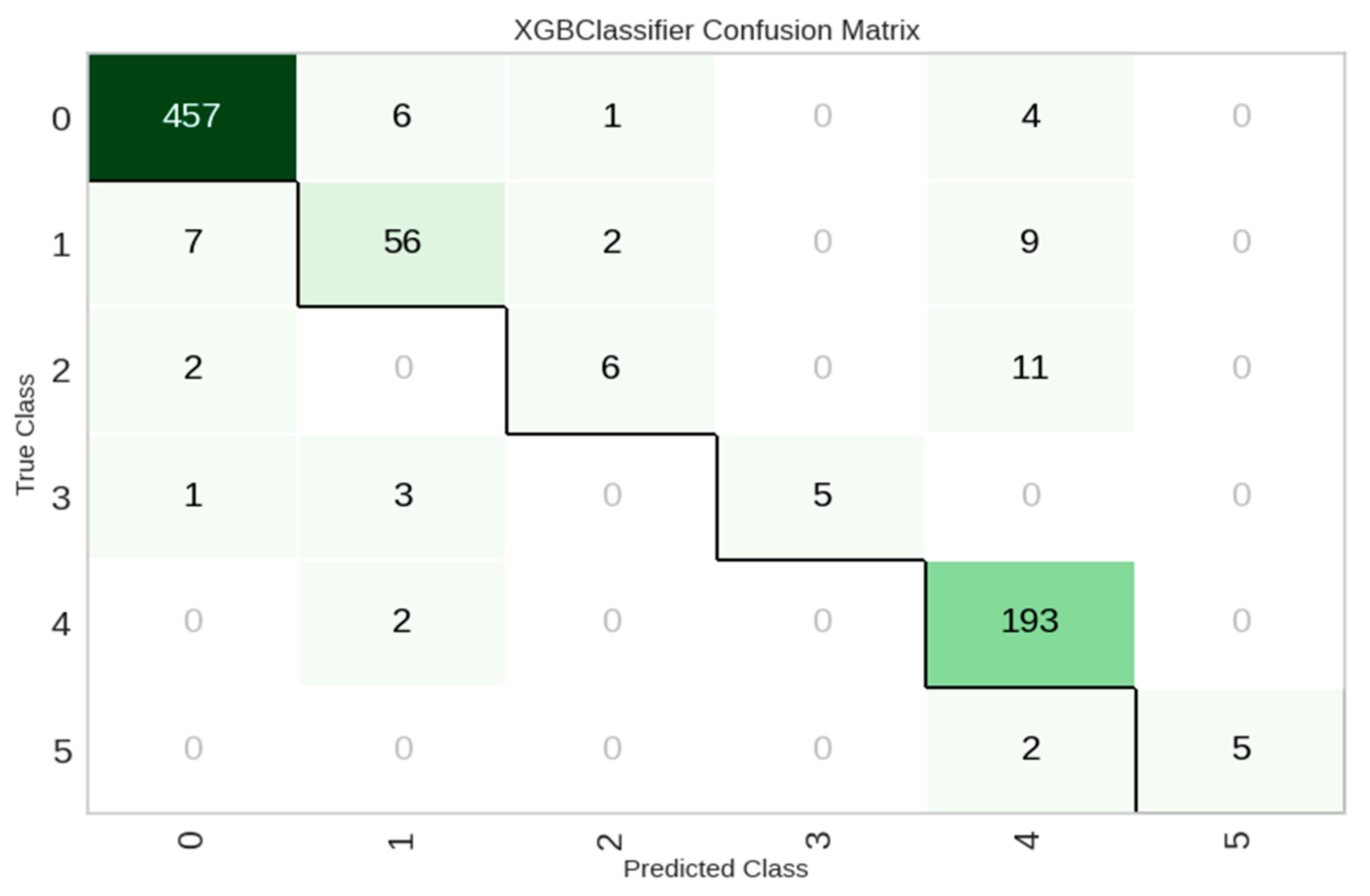

Performance Evaluation on UEASD Using Boruta Feature Selection

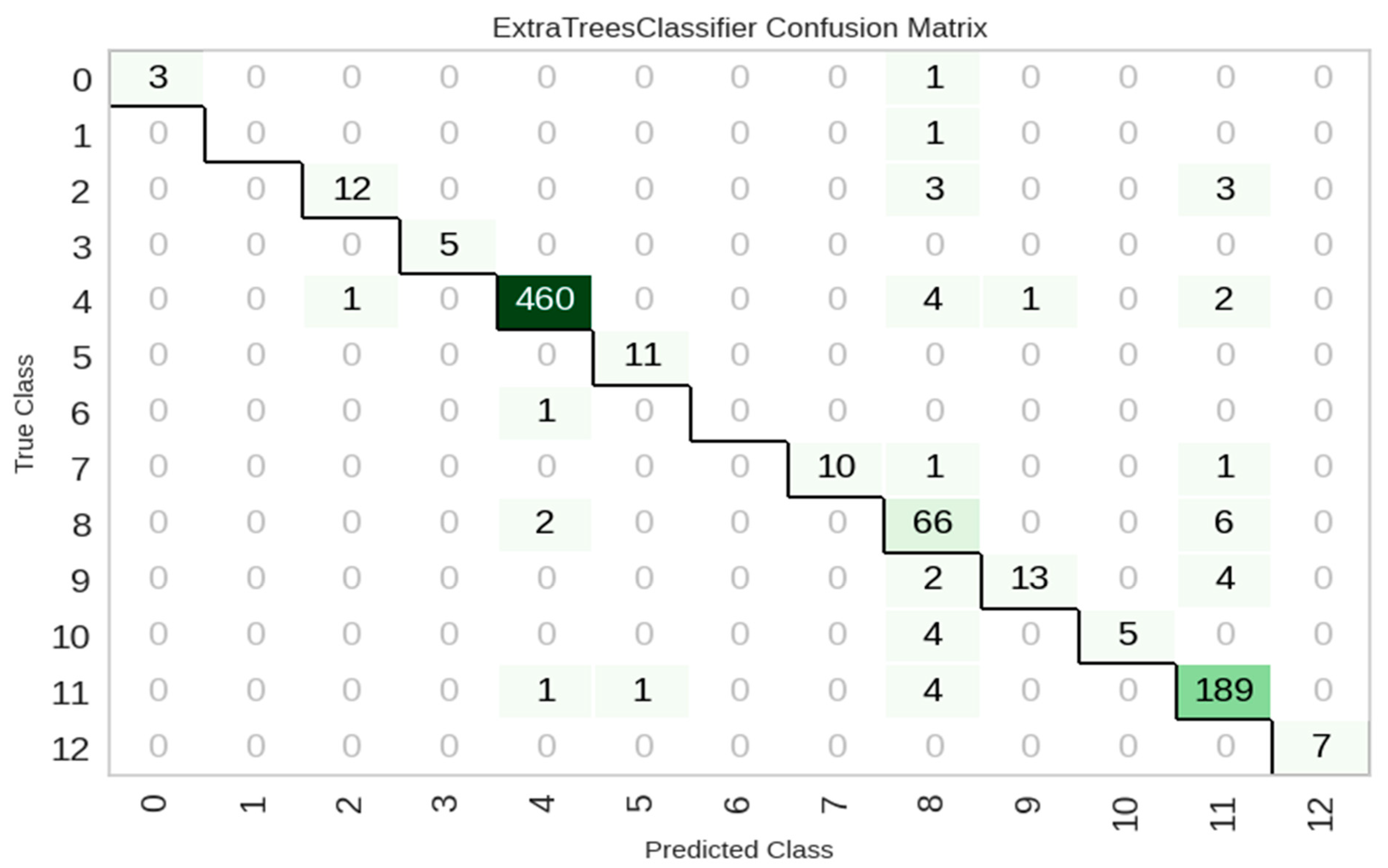

Performance Evaluation on IVESD Using Boruta Feature Selection

5.3.2. Phase 2—Experiment 2: Feature Ranking with ANOVA for MLP Evaluation

Results and Insights for Datasets VAFD, UEASD, and IVESD

ANOVA Feature Ranking with MLP Evaluation

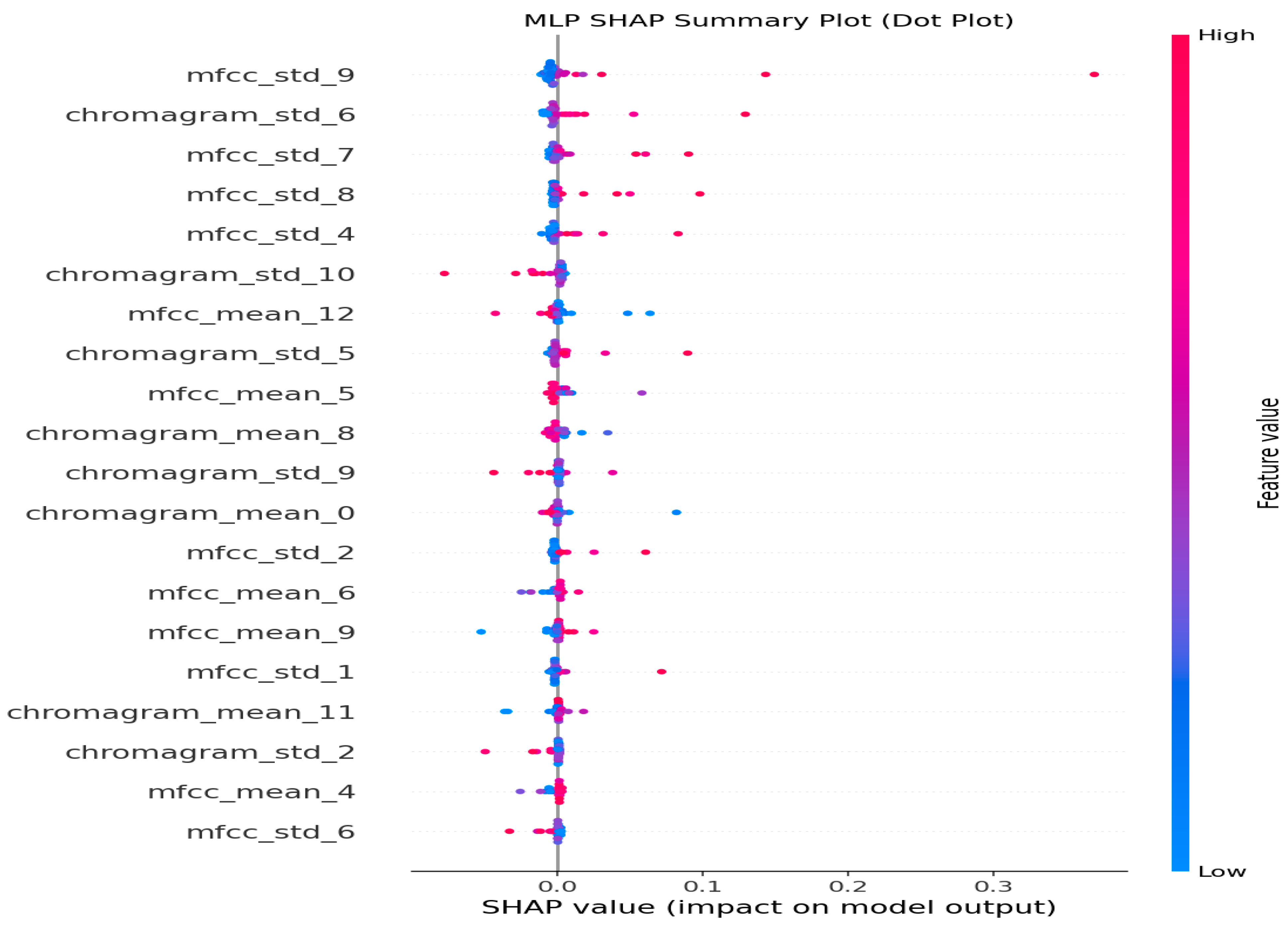

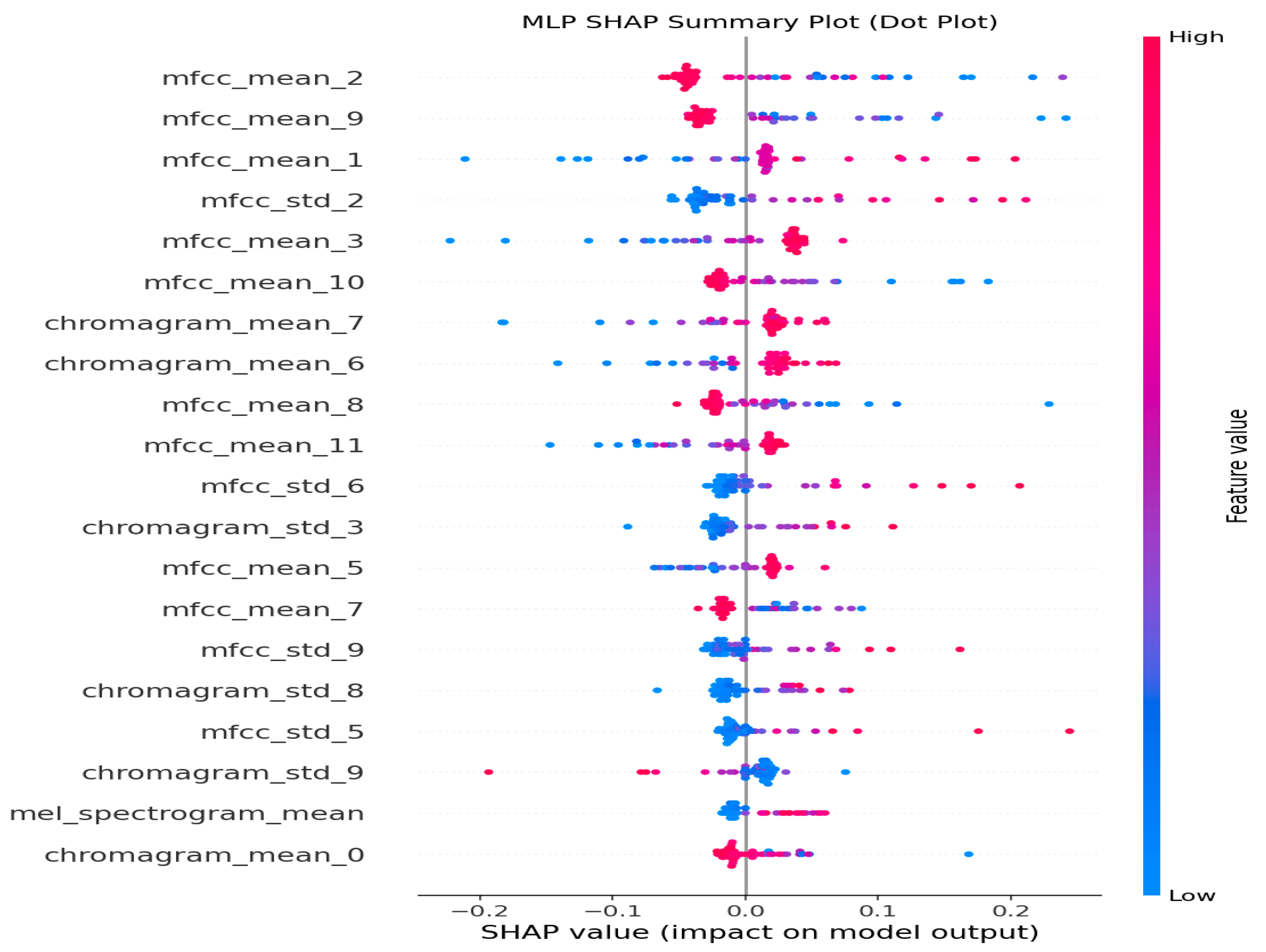

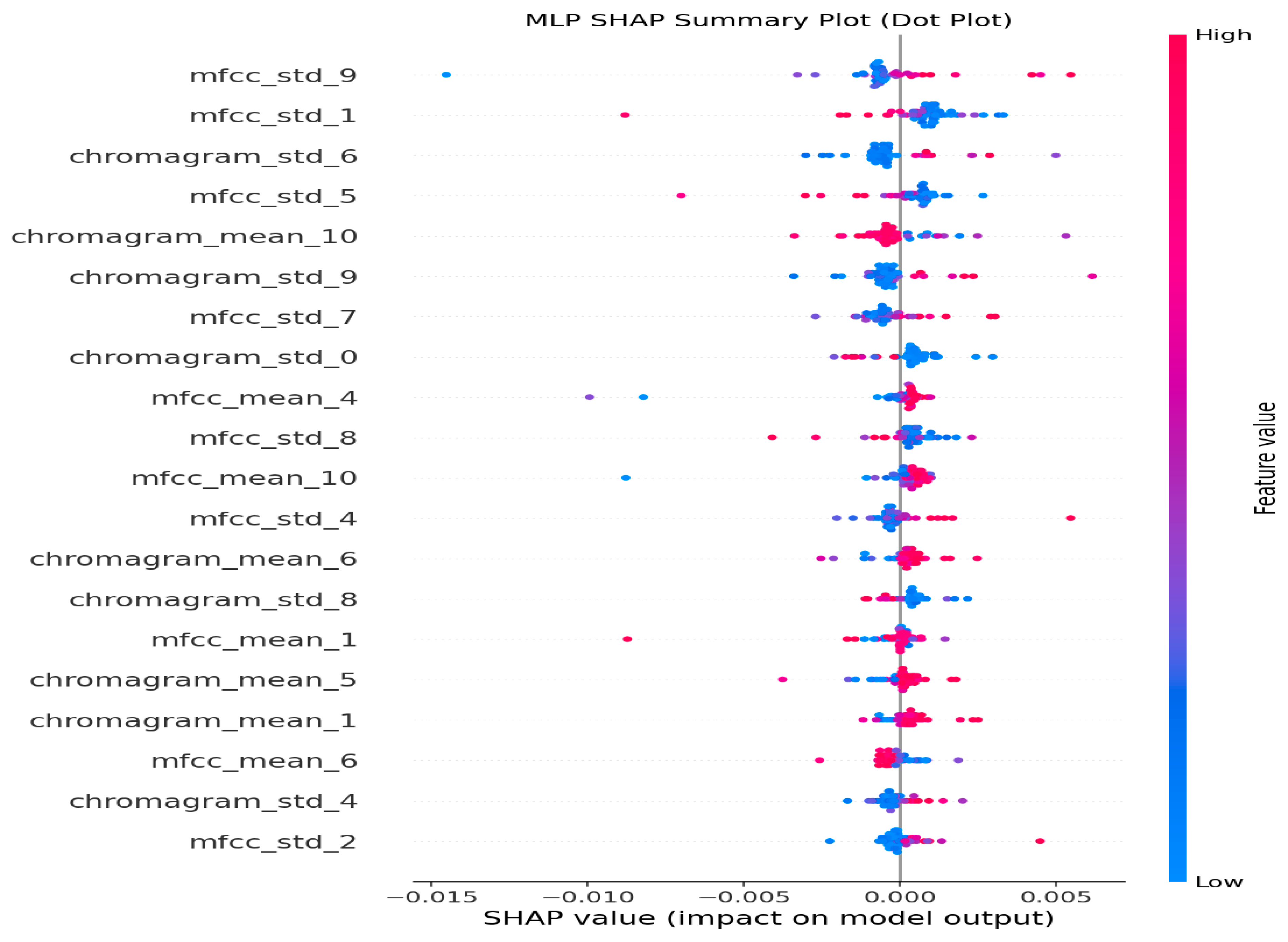

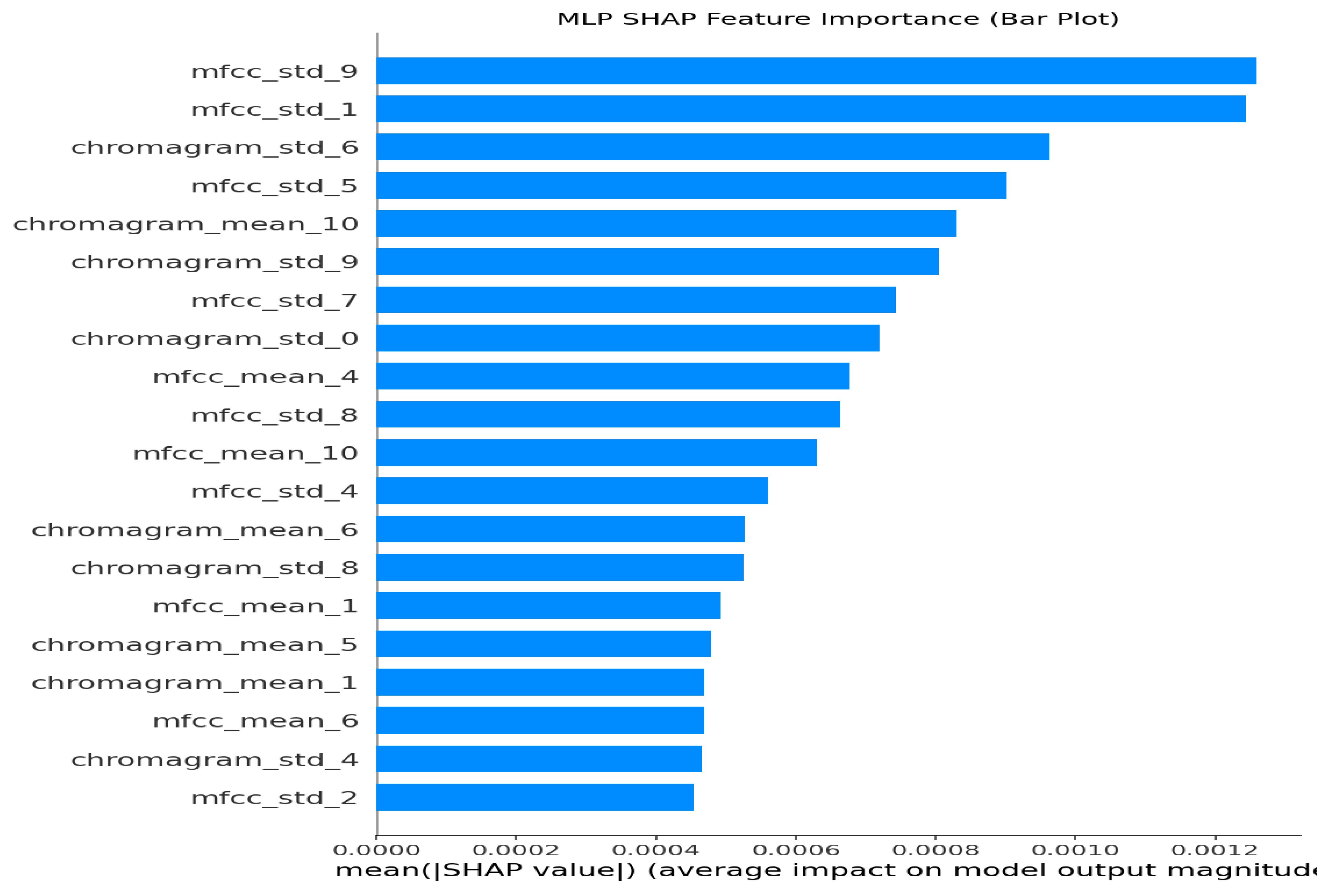

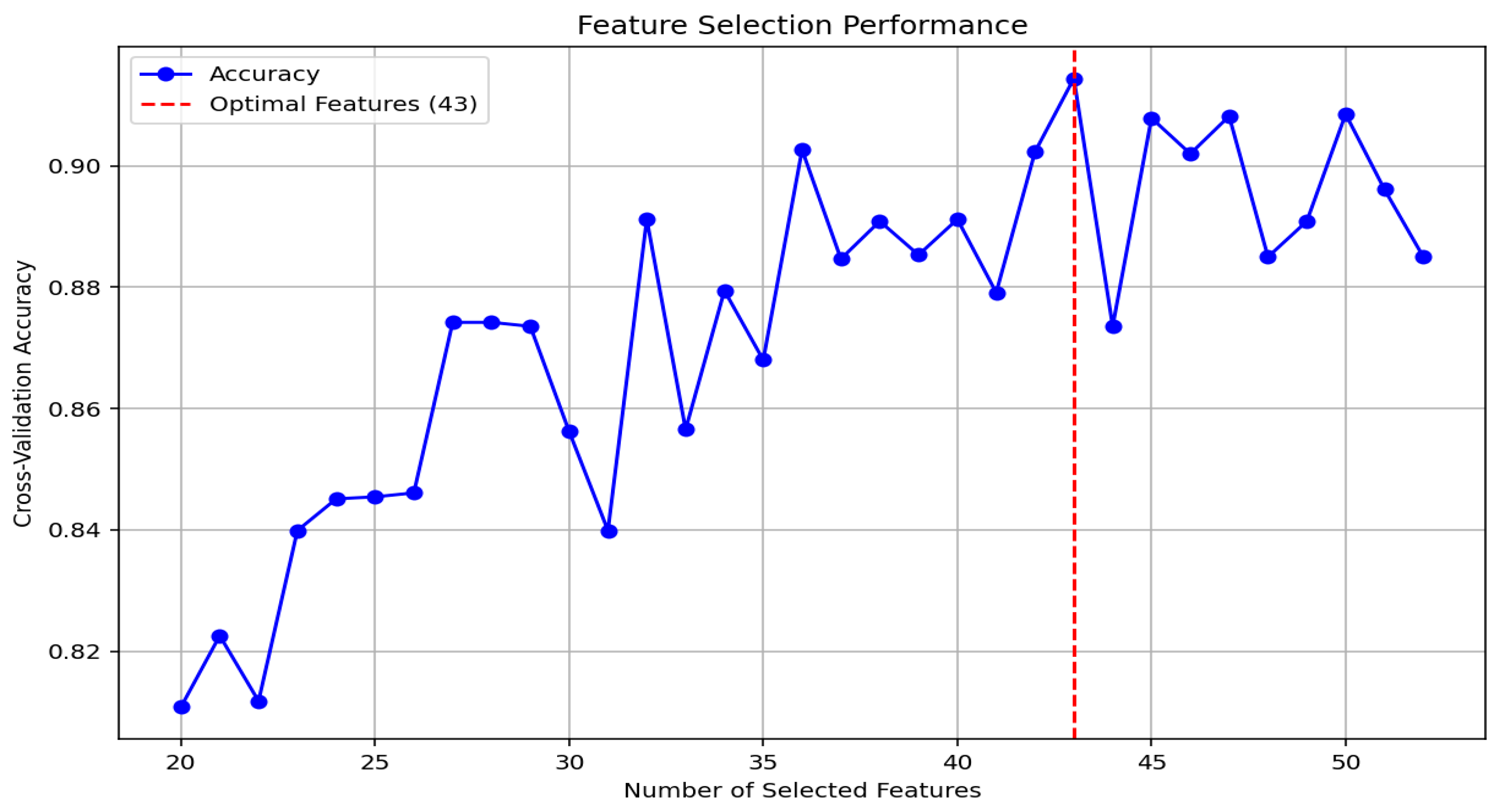

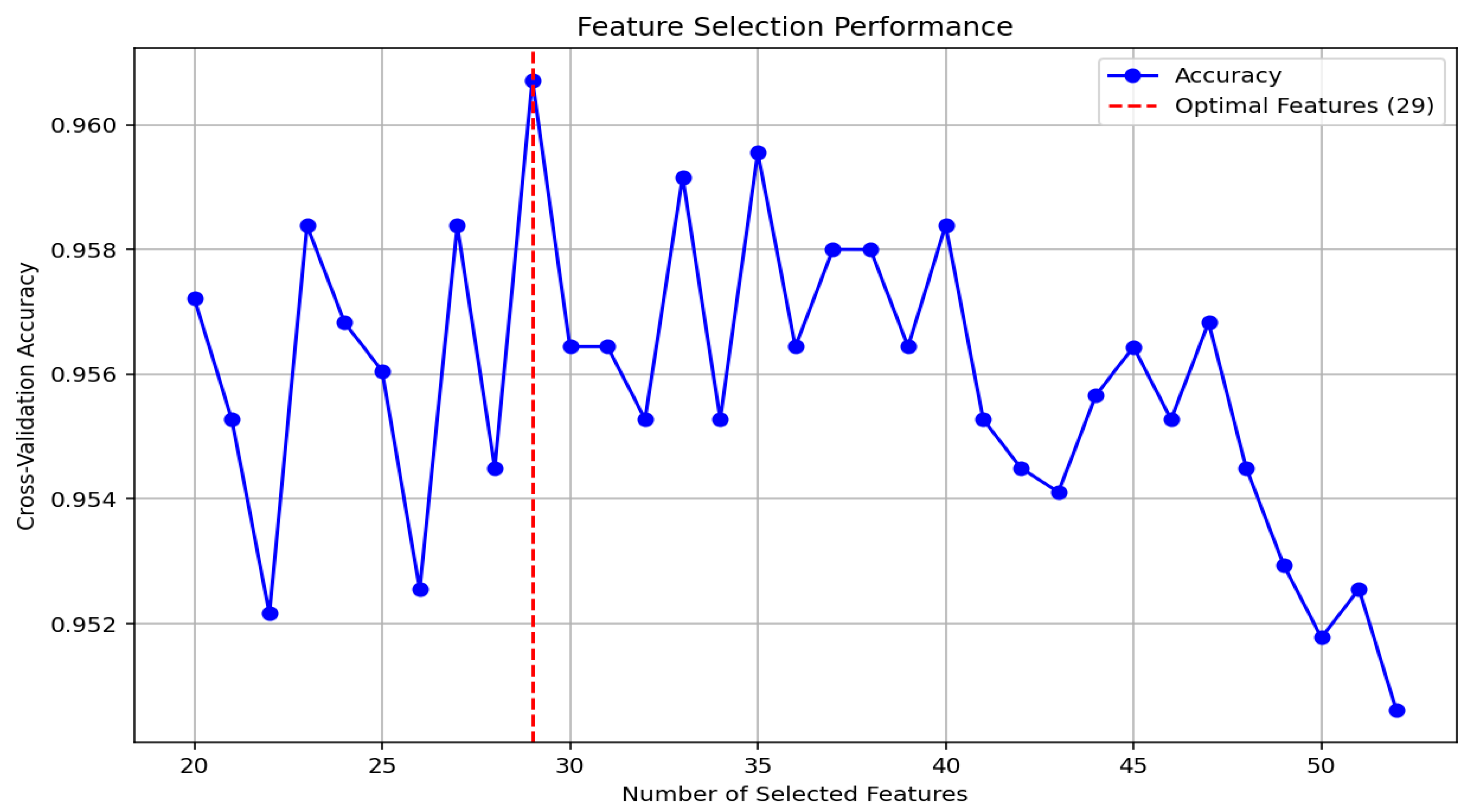

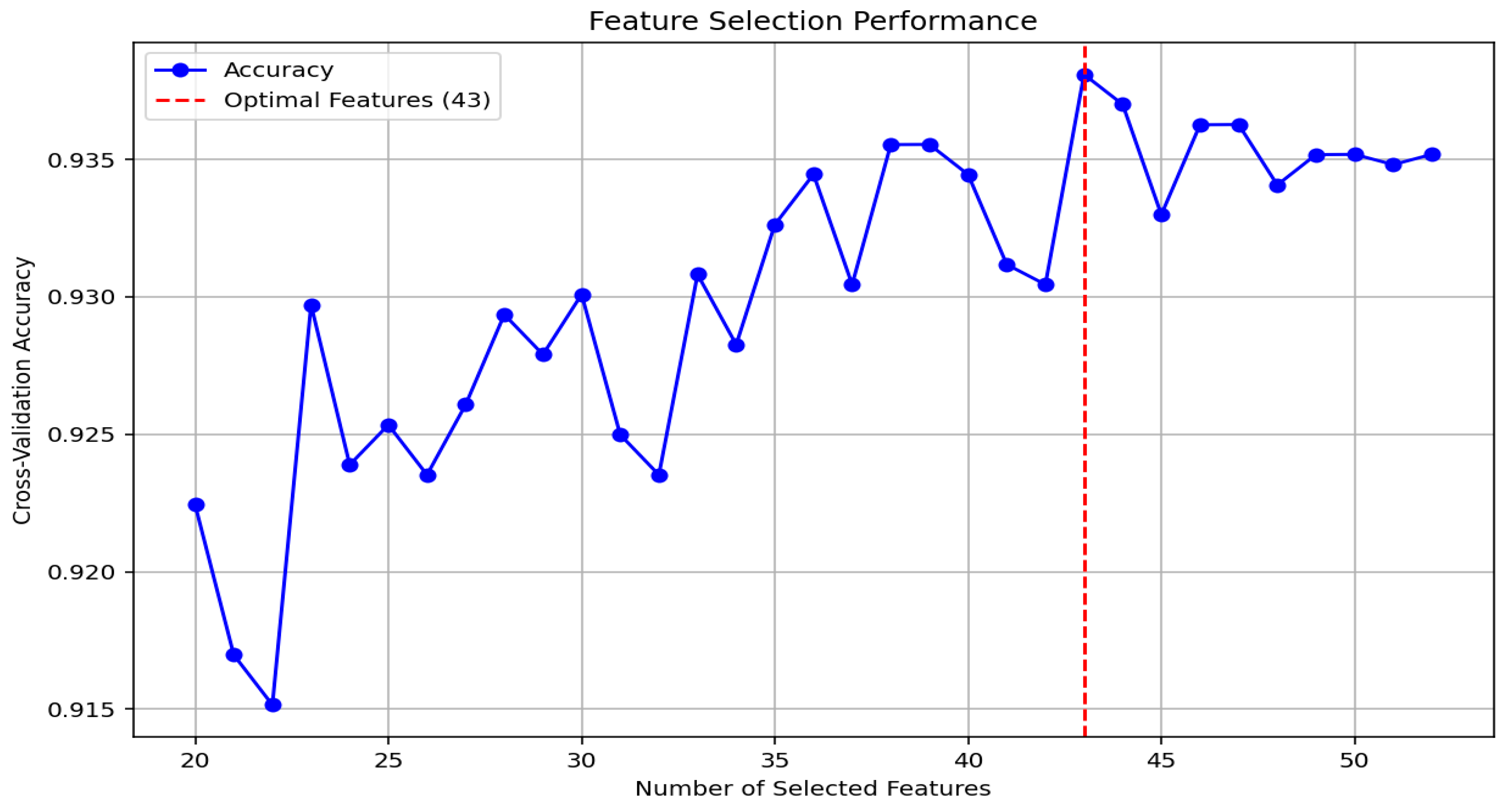

5.3.3. Phase 2—Experiment 3: SHAP-Based Feature Selection and Evaluation

Methodological Overview

- Data Loading and Validation: Ensure essential columns (label, file_name) exist.

- Preprocessing: Standardize features with StandardScaler.

- Model Training: Use a consistent MLP configuration for comparability.

- SHAP Calculation: Use kernel-based SHAP on a sampled subset to reduce computational overhead.

- Ranking: Rank features by their mean absolute SHAP values.

- Evaluation: Perform 10-fold cross-validation to evaluate accuracy across feature counts.
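The ranking step can be sketched as follows, assuming the SHAP values are available as a samples-by-features matrix. The matrix and the feature names here are hypothetical; for multi-class models, explainers typically return one such matrix per class, which would need to be aggregated first.

```python
import numpy as np


def rank_by_mean_abs_shap(shap_values, feature_names):
    """Rank features by mean absolute SHAP value across samples."""
    global_importance = np.abs(shap_values).mean(axis=0)
    order = np.argsort(global_importance)[::-1]
    return [(feature_names[i], float(global_importance[i])) for i in order]


# Hypothetical SHAP matrix: 4 samples x 3 features
shap_values = np.array([
    [0.40, -0.05, 0.10],
    [-0.50, 0.02, 0.20],
    [0.30, -0.01, -0.15],
    [-0.40, 0.04, 0.15],
])
names = ["mfcc_mean_1", "chroma_std_3", "mel_mean"]  # illustrative names

ranking = rank_by_mean_abs_shap(shap_values, names)
for name, score in ranking:
    print(f"{name}: {score:.3f}")
```

Taking the absolute value before averaging matters: the first feature has large contributions of both signs that nearly cancel in a signed mean, yet it is the most influential feature overall.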

SHAP-Based Feature Selection and Evaluation (VAFD)

| Step | Description |
|---|---|
| 1. Load Dataset | Check and prepare audio features and labels. |
| 2. Preprocess Features | Standardize all features using StandardScaler. |
| 3. Train Initial Model | Fit an MLP model with fixed parameters. |
| 4. Compute SHAP Values | Use KernelExplainer with 100 background and 50 sampled data points. |
| 5. Rank Features | Use mean absolute SHAP values to order features. |
| 6. Feature Selection | Evaluate models with top-k features (k from 20 to 52). |
| 7. Cross-Validation | Use stratified 10-fold validation to assess mean accuracy. |
| 8. Optimal Feature Count | Select the number of features that gives the highest validation accuracy. |
| 9. Visualization | Generate plots of accuracy vs. feature count. |
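Steps 6 to 8 can be sketched as a sweep over top-k feature subsets with stratified folds. To keep the example dependency-free, a nearest-centroid classifier stands in for the MLP used in the study, the feature ranking is assumed to be given (as if produced by step 5), and the data are synthetic; none of these choices come from the source.

```python
import numpy as np


def stratified_folds(y, n_folds, seed=0):
    """Assign a fold id to every sample, spreading each class evenly."""
    rng = np.random.default_rng(seed)
    fold_of = np.empty(len(y), dtype=int)
    for c in np.unique(y):
        idx = rng.permutation(np.flatnonzero(y == c))
        fold_of[idx] = np.arange(len(idx)) % n_folds
    return fold_of


def nearest_centroid_accuracy(X_tr, y_tr, X_te, y_te):
    """Stand-in classifier (the study uses an MLP): closest class mean."""
    classes = np.unique(y_tr)
    centroids = np.stack([X_tr[y_tr == c].mean(axis=0) for c in classes])
    dists = ((X_te[:, None, :] - centroids[None, :, :]) ** 2).sum(axis=2)
    return float((classes[dists.argmin(axis=1)] == y_te).mean())


def sweep_top_k(X, y, ranking, k_values, n_folds=10):
    """Mean cross-validated accuracy for each top-k feature subset."""
    fold_of = stratified_folds(y, n_folds)
    results = {}
    for k in k_values:
        cols = ranking[:k]
        accs = []
        for f in range(n_folds):
            tr, te = fold_of != f, fold_of == f
            X_tr, X_te = X[tr][:, cols], X[te][:, cols]
            # Standardize with statistics from the training fold only
            mu, sd = X_tr.mean(axis=0), X_tr.std(axis=0) + 1e-12
            accs.append(nearest_centroid_accuracy(
                (X_tr - mu) / sd, y[tr], (X_te - mu) / sd, y[te]))
        results[k] = float(np.mean(accs))
    return results


# Synthetic data: 3 classes, 8 features, columns 0 and 1 informative.
rng = np.random.default_rng(0)
y = np.repeat([0, 1, 2], 50)
X = rng.normal(size=(150, 8))
X[:, 0] += 2.0 * y
X[:, 1] += 3.0 * (y == 1)
ranking = np.arange(8)              # hypothetical ranking, best feature first

results = sweep_top_k(X, y, ranking, k_values=[1, 2, 4, 8])
best_k = max(results, key=results.get)
print(results, best_k)
```

Fitting the scaler inside each training fold, rather than once on the full dataset, avoids leaking test-fold statistics into the accuracy estimate.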

SHAP-Based Feature Importance and Evaluation—UEASD

SHAP-Based Feature Selection and Evaluation—IVESD

5.4. Feature Selection Performance Analysis

6. Discussion

7. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A. Dataset

Appendix A.1. Contribution to Dataset Refinement

- For VAFD, a new category, General Vehicle Sounds, was introduced to represent normal car operation, enabling more precise differentiation between faulty and non-faulty audio patterns. A new fault class, Timing Chain Noise, was added, increasing the total number of detailed labels from 27 to 29.

- For UEASD, several new categories were introduced to expand the diversity of non-vehicular acoustic events. These include weather sounds (e.g., rain and thunder), fire alarms, forest fire sounds, and snake sounds, reflecting environmental and public safety contexts relevant to ITS.

- Improve model generalization across diverse acoustic conditions;

- Address class imbalance challenges; and

- Facilitate higher-level semantic learning to support scalable and robust ITS applications.

Appendix A.2. Description of the VAFD Dataset

| Group No. | Category | Number of Unique Labels | Number of Instances |
|---|---|---|---|
| 1 | Belt and Accessory Issues | 3 | 12 |
| 2 | Braking System Issues | 1 | 4 |
| 3 | Engine and Powertrain Issues | 11 | 61 |
| 4 | Exhaust and Fuel System Issues | 4 | 16 |
| 5 | General Vehicle Sounds | 1 | 38 |
| 6 | Miscellaneous Issues | 1 | 5 |
| 7 | Suspension and Steering Issues | 8 | 39 |
| Total | | 29 | 175 |

Appendix A.3. Fine-Grained Label Distribution Across VAFD Categories

| Group | Label | Count | Group | Label | Count | Group | Label | Count |

| 1. Belt and Accessory Issues | Engine Chirping/Squealing Belt | 4 | 3. Engine and Powertrain Issues | Thrown Rod | 4 | 7. Suspension and Steering Issues | Universal Joint Failure/Steering Rack Failure | 10 |

| Squeaky Belt | 4 | Lifter Ticking | 4 | Steering Groaning/Whining (Low Power Steering Fluid) | 4 | |||

| — | — | Timing Chain Noise (New) | 4 | Steering Noise | 4 | |||

| — | — | Vacuum Leak | 4 | Turning Front End Clicking/Bad CV Axle | 4 | |||

| 2. Braking System Issues | Braking System Issues | 4 | Bad Wheel Bearing | 21 | — | — | ||

| 4. Exhaust and Fuel System Issues | Exhaust and Fuel System Issues | 4 | Muffler Running Loud/Exhaust Leak | 4 | — | — | ||

| — | — | Radiator Fan Failure | 4 | — | — | |||

| — | — | Fuel Pump Cartridge Fault | 4 | — | — | |||

| 5. General Vehicle Sounds | Car Normal Noise (New) | 38 | 6. Miscellaneous Issues | Wheel Bearing Issue + Transmission Whining + Catalytic Converter Issue | 5 | — | — | |

| 3. Engine and Powertrain Issues | Bad CV Joint | 4 | Knocking | 5 | — | — | ||

| Bad Transmission | 4 | Clunking Over Bumps/Bad Stabilizer Link | 4 | — | — | |||

| Engine Rattle Noise | 4 | Strut Mount Failure | 4 | — | — | |||

| Flooded Engine | 4 | Suspension Arm Fault | 4 | — | — | |||

| Pre-ignition | 4 | — | — | — | — | |||

| Engine Misfire | 4 | — | — | — | — | |||

| Seized Engine | 4 | — | — | — | — |

Appendix A.4. Fine-Grained Label Distribution Across UEASD Categories

| Group | Label | Count | Group | Label | Count | Group | Label | Count |
|---|---|---|---|---|---|---|---|---|
| Animals | Cats | 200 | Vehicles and Transportation | Car Crashes | 103 | Emergency Vehicles | Police Car Siren | 41 |
| | Sheep | 80 | | Car Horn | 24 | | Fire Truck Siren | 37 |
| | Bear | 68 | | Motorcycle | 20 | | Ambulance Siren | 30 |
| | Dog | 68 | | Bus | 20 | | Forest Fire Sound (New) | 1440 |
| | Monkey | 60 | | Bike | 20 | | Fire Alarm (New) | 12 |
| | Lions | 48 | | Train | 20 | Weather Sounds (New) | Rain and Thunderstorm | 29 |
| | Wolf | 47 | | Truck Sound | 20 | | | |
| | Horse | 40 | | Truck Horn | 19 | Construction and Machinery | Drilling | 24 |
| | Mouse | 28 | | | | Weapons and Explosions | Gunshot and Gunfire | 62 |
| | Snake (New) | 11 | | | | | | |

Appendix B. Pseudo Code

Appendix B.1. Pseudo Code for Feature Extraction 52 Features

    # Configuration
    Define ROOT_DIR as the root directory containing the audio dataset.
    Define OUTPUT_FEATURES_CSV as the path to save extracted features.
    Define TARGET_SAMPLE_RATE as the target sample rate for audio processing.

    # Function to extract audio features
    Function extract_features(file_path):
        Try:
            Load audio file from file_path with TARGET_SAMPLE_RATE.
            Calculate Mel Spectrogram and convert it to dB scale.
            Calculate MFCCs (13 coefficients).
            Calculate Chromagram.
            Compute mean and standard deviation for:
                - Mel Spectrogram
                - MFCCs (per coefficient)
                - Chromagram (per feature)
            Return the extracted features as a dictionary.
        Catch exceptions and print an error message.
        Return None if an error occurs.

    # Get all subfolders in ROOT_DIR
    List input_folders containing subdirectories in ROOT_DIR.

    # Initialize an empty list for storing all features
    Initialize all_features as an empty list.

    # Loop through each subfolder in input_folders
    For each folder in input_folders:
        Get the full path of the folder as input_folder.
        # Loop through all files in the folder
        For each file_name in input_folder:
            Get the full file path as file_path.
            If file_path is a valid audio file (extension .wav, .m4a, or .mp3):
                Print that the file is being processed.
                Extract features using extract_features(file_path).
                If features are successfully extracted:
                    Add folder name as the label.
                    Add file_name to the features.
                    Append the features dictionary to all_features.

    # Save features to a CSV file
    If all_features is not empty:
        Initialize flat_features as an empty list.
        # Flatten nested feature dictionaries
        For each feature dictionary f in all_features:
            Create a new dictionary flat with:
                - file_name
                - label
                - Mean and Std of Mel Spectrogram
            Add MFCC means and standard deviations with indexed keys.
            Add Chromagram means and standard deviations with indexed keys.
            Append the flat dictionary to flat_features.
        Convert flat_features to a data frame.
        Save the data frame to OUTPUT_FEATURES_CSV.
        Print that features have been saved.
    Else:
        Print that no features were extracted.
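The flattening and CSV-saving stage of this pseudo code can be sketched with the Python standard library alone. The dictionary keys, the column-naming scheme, and the example file name are hypothetical, since the source only says that indexed keys are used; the label is taken from the VAFD label list for illustration.

```python
import csv
import io


def flatten_features(f):
    """Turn one nested feature dictionary into a flat row with indexed keys."""
    flat = {
        "file_name": f["file_name"],
        "label": f["label"],
        "mel_mean": f["mel_mean"],
        "mel_std": f["mel_std"],
    }
    for i, (m, s) in enumerate(zip(f["mfcc_mean"], f["mfcc_std"]), start=1):
        flat[f"mfcc_mean_{i}"] = m
        flat[f"mfcc_std_{i}"] = s
    for i, (m, s) in enumerate(zip(f["chroma_mean"], f["chroma_std"]), start=1):
        flat[f"chroma_mean_{i}"] = m
        flat[f"chroma_std_{i}"] = s
    return flat


def write_features_csv(all_features, handle):
    """Write all flattened rows to an open text handle; return the row count."""
    if not all_features:
        return 0                      # nothing was extracted
    rows = [flatten_features(f) for f in all_features]
    writer = csv.DictWriter(handle, fieldnames=list(rows[0].keys()))
    writer.writeheader()
    writer.writerows(rows)
    return len(rows)


# Hypothetical extracted features for a single clip
example = {
    "file_name": "clip_001.wav",
    "label": "Engine Misfire",
    "mel_mean": -42.1, "mel_std": 11.3,
    "mfcc_mean": [0.0] * 13, "mfcc_std": [1.0] * 13,
    "chroma_mean": [0.5] * 12, "chroma_std": [0.1] * 12,
}

buffer = io.StringIO()               # stands in for OUTPUT_FEATURES_CSV
n_rows = write_features_csv([example], buffer)
header = buffer.getvalue().splitlines()[0]
print(n_rows, header[:40])
```

Each row carries 54 columns: the 52 features plus `file_name` and `label`, which matches the columns that the later SHAP workflow checks for when loading the data.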

Appendix B.2. Pseudo-Code for Feature Ranking and the Relation Between the Number of Features and Model Evaluation


Appendix B.3. Pseudo-Code: Feature Ranking and Evaluation Based on Number of Selected Features


Appendix B.4. The Pseudo-Code Outlines the Steps Taken to Perform Boruta-Based Feature Selection on the Datasets


Appendix B.5. Pseudo-Code: SHAP Feature Selection Workflow

Pseudocode Steps:


Appendix B.6. Pseudo-Code for Feature Ranking ANOVA and MLP


References

- Suman, A.; Kumar, C.; Suman, P. Early detection of mechanical malfunctions in vehicles using sound signal processing. Appl. Acoust. 2022, 188, 108578. [Google Scholar] [CrossRef]

- Henriquez, P.; Alonso, J.B.; Ferrer, M.A.; Travieso, C.M. Review of Automatic Fault Diagnosis Systems Using Audio and Vibration Signals. IEEE Trans. Syst. Man Cybern. Syst. 2014, 44, 642–652. [Google Scholar] [CrossRef]

- Hossain, M.N.; Rahman, M.M.; Ramasamy, D. Artificial Intelligence-Driven Vehicle Fault Diagnosis to Revolutionize Automotive Maintenance: A Review. Comput. Model. Eng. Sci. 2024, 141, 951–996. [Google Scholar] [CrossRef]

- Burdzik, R. A comprehensive diagnostic system for vehicle suspensions based on a neural classifier and wavelet resonance estimators. Measurement 2022, 200, 111602. [Google Scholar] [CrossRef]

- Boztas, G.; Tuncer, T.; Aydogmus, O.; Yildirim, M. A DCSLBP based intelligent machine malfunction detection model using sound signals for industrial automation systems. Comput. Electr. Eng. 2024, 119, 109541. [Google Scholar] [CrossRef]

- Legala, A.; Kubesh, M.; Chundru, V.R.; Conway, G.; Li, X. Machine learning modeling for fuel cell-battery hybrid power system dynamics in a Toyota Mirai 2 vehicle under various drive cycles. Energy AI 2024, 17, 100415. [Google Scholar] [CrossRef]

- Walter, E. (Ed.) Data Acquisition from Light-Duty Vehicles Using OBD and CAN; SAE International: Warrendale, PA, USA, 2018. [Google Scholar] [CrossRef]

- Zappatore, M.; Longo, A.; Bochicchio, M.A. Crowd-sensing our Smart Cities: A Platform for Noise Monitoring and Acoustic Urban Planning. J. Commun. Softw. Syst. 2017, 13, 53. [Google Scholar] [CrossRef]

- Zaheer, R.; Ahmad, I.; Habibi, D.; Islam, K.Y.; Phung, Q.V. A Survey on Artificial Intelligence-Based Acoustic Source Identification. IEEE Access 2023, 11, 60078–60108. [Google Scholar] [CrossRef]

- Ciaburro, G.; Iannace, G. Improving Smart Cities Safety Using Sound Events Detection Based on Deep Neural Network Algorithms. Informatics 2020, 7, 23. [Google Scholar] [CrossRef]

- Qurthobi, A.; Maskeliūnas, R.; Damaševičius, R. Detection of Mechanical Failures in Industrial Machines Using Overlapping Acoustic Anomalies: A Systematic Literature Review. Sensors 2022, 22, 3888. [Google Scholar] [CrossRef]

- Rashed, A.; Abdulazeem, Y.; Farrag, T.; Bamaqa, A.; Almaliki, M.; Badawy, M.; Elhosseini, M. Toward Inclusive Smart Cities: Sound-Based Vehicle Diagnostics, Emergency Signal Recognition, and Beyond. Machines 2025, 13, 258. [Google Scholar] [CrossRef]

- Fahimifar, S.; Mousavi, K.; Mozaffari, F.; Ausloos, M. Identification of the most important external features of highly cited scholarly papers through 3 (i.e., Ridge, Lasso, and Boruta) feature selection data mining methods. Qual. Quant. 2022, 57, 3685–3712. [Google Scholar] [CrossRef]

- Parisineni, S.R.A.; Pal, M. Enhancing trust and interpretability of complex machine learning models using local interpretable model agnostic shap explanations. Int. J. Data Sci. Anal. 2023, 18, 457–466. [Google Scholar] [CrossRef]

- Manikandan, G.; Pragadeesh, B.; Manojkumar, V.; Karthikeyan, A.L.; Manikandan, R.; Gandomi, A.H. Classification models combined with Boruta feature selection for heart disease prediction. Inform. Med. Unlocked 2024, 44, 101442. [Google Scholar] [CrossRef]

- Gong, S.; Jin, Q.; Wang, C.; Wang, T. In-situ test of pedestrian landscape bridge for benchmark SHM model towards smart city. Nondestruct. Test. Eval. 2025, 1–18. [Google Scholar] [CrossRef]

- Chahine, K. Tree-Based Algorithms and Incremental Feature Optimization for Fault Detection and Diagnosis in Photovoltaic Systems. Eng 2025, 6, 20. [Google Scholar] [CrossRef]

- Somasundaram, M.M.P.; Deepak, D.; Kumar, R. Enhancing Road Safety with AI-Powered System for Effective Emergency Sound Detection and Localization. Sensors 2023, 25, 793. Available online: https://www.mdpi.com/1424-8220/25/3/793 (accessed on 10 July 2025).

- Scikit-Learn Developers. Sklearn.Ensemble.ExtraTreesClassifier. Scikit-Learn. Available online: https://scikit-learn.org/stable/modules/generated/sklearn.ensemble.ExtraTreesClassifier.html (accessed on 5 June 2025).

- Shi, Y.; Ke, G.; Soukhavong, D.; Lamb, J.; Meng, Q.; Finley, T.; Wang, T.; Chen, W.; Ma, W.; Ye, Q.; et al. Lightgbm: Light Gradient Boosting Machine [dataset]. In CRAN: Contributed Packages; The R Foundation: Vienna, Austria, 2020.

- Chen, T.; Guestrin, C. XGBoost. In Proceedings of the 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, San Francisco, CA, USA, 13–17 August 2016; pp. 785–794.

- Kursa, M.B.; Jankowski, A.; Rudnicki, W.R. Boruta—A System for Feature Selection. Fundam. Informaticae 2010, 101, 271–285.

- SixSigma.Us. (n.d.). ANOVA F-Value Meaning. Available online: https://www.6sigma.us/six-sigma-in-focus/anova-f-value-meaning/ (accessed on 5 June 2025).

- Dubey, R.; Bodade, R.M.; Dubey, D. Two-way analysis of variance (ANOVA) ranking of features based on wavelet bi-phase and bi-spectrum for the classification of adventitious lung sounds. Res. Biomed. Eng. 2024, 41, 6.

- Chen, H.; Lundberg, S.; Lee, S.-I. Explaining Models by Propagating Shapley Values of Local Components. In Explainable AI in Healthcare and Medicine; Springer: Cham, Switzerland, 2020; pp. 261–270.

- Huang, R.; Ni, J.; Qiao, P.; Wang, Q.; Shi, X.; Yin, Q. An Explainable Prediction Model for Aerodynamic Noise of an Engine Turbocharger Compressor Using an Ensemble Learning and Shapley Additive Explanations Approach. Sustainability 2023, 15, 13405.

- Chazette, L.; Brunotte, W.; Speith, T. Exploring Explainability: A Definition, a Model, and a Knowledge Catalogue. In Proceedings of the 2021 IEEE 29th International Requirements Engineering Conference (RE), Notre Dame, IN, USA, 20–24 September 2021; pp. 197–208.

- Murovec, J.; Prezelj, J.; Ćirić, D.G.; Milivojčević, M.M. Zero-Crossing Signature: A Time-Domain Method Applied to Diesel and Gasoline Vehicle Classification. IEEE Sens. J. 2025, 25, 5128–5138.

- Wang, S.; Xu, Q.; Zhu, S.; Wang, B. Making transformer hear better: Adaptive feature enhancement based multi-level supervised acoustic signal fault diagnosis. Expert Syst. Appl. 2025, 264, 125736.

- Wang, Y.; Li, D.; Li, L.; Sun, R.; Wang, S. A novel deep learning framework for rolling bearing fault diagnosis enhancement using VAE-augmented CNN model. Heliyon 2024, 10, e35407.

- Nasim, F.; Masood, S.; Jaffar, A.; Ahmad, U.; Rashid, M. Intelligent Sound-Based Early Fault Detection System for Vehicles. Comput. Syst. Sci. Eng. 2023, 46, 3175–3190.

- Hamad, A.A.; Nasim, M.F.; Jaffar, A.; Khalaf, O.I.; Ouahada, K.; Hamam, H.; Akram, S.; Siddique, A. Cognitive Inspired Sound-Based Automobile Problem Detection: A Step Toward Xai. SSRN 2024.

- Akbalık, F.; Yıldız, A.; Ertuğrul, Ö.F.; Zan, H. Engine Fault Detection by Sound Analysis and Machine Learning. Appl. Sci. 2024, 14, 6532.

- Guo, X. Fault Diagnosis of Rolling Bearings Based on Acoustics and Vibration Engineering. IEEE Access 2024, 12, 139632–139648.

- Li, Y.; Tao, X.; Sun, Y. A Fault Diagnosis Method for Turnout Switch Machines Based on Sound Signals. Electronics 2024, 13, 4839.

- Hameed, U.; Masood, S.; Nasim, F.; Jaffar, A. Exploring the Accuracy of Machine Learning and Deep Learning in Engine Knock Detection. Bull. Bus. Econ. (BBE) 2024, 13, 203–210.

- Yuan, G.; Yang, Y. Fault detection method of new energy vehicle engine based on wavelet transform and support vector machine. Int. J. Knowl.-Based Intell. Eng. Syst. 2024, 28, 718–731.

- Akbalik, F.; Yildiz, A.; Ertuğrul, Ö.F.; Zan, H. Enhancing vehicle fault diagnosis through multi-view sound analysis: Integrating scalograms and spectrograms in a deep learning framework. Signal Image Video Process. 2025, 19, 182.

- Yun, E.; Jeong, M. Acoustic Feature Extraction and Classification Techniques for Anomaly Sound Detection in the Electronic Motor of Automotive EPS. IEEE Access 2024, 12, 149288–149307.

- Khan, F.A.; Jamil, A.; Khan, S.A.; Hameed, A.A. Enhancing robotic manipulator fault detection with advanced machine learning techniques. Eng. Res. Express 2024, 6, 025204.

- Zhao, D.; Shao, D.; Wang, T.; Cui, L. Time-frequency self-similarity enhancement network and its application in wind turbines fault analysis. Adv. Eng. Inform. 2025, 65, 103322.

- Kim, S.-M.; Soo Kim, Y. Enhancing Sound-Based Anomaly Detection Using Deep Denoising Autoencoder. IEEE Access 2024, 12, 84323–84332.

- Naryanto, R.F.; Delimayanti, M.K.; Naryaningsih, A.; Warsuta, B.; Adi, R.; Setiawan, B.A. Diesel Engine Fault Detection using Deep Learning Based on LSTM. In Proceedings of the 2023 7th International Conference on Electrical, Telecommunication and Computer Engineering (ELTICOM), Medan, Indonesia, 13–14 December 2023; pp. 37–42.

- Chu, S.; Zhang, J.; Liu, F.; Kong, X.; Jiang, Z.; Mao, Z. Fault identification model of diesel engine based on mixed attention: Single-cylinder fault data driven whole-cylinder diagnosis. Expert Syst. Appl. 2024, 255, 124769.

- Lee, D.; Choo, H.; Jeong, J. GCN-Based LSTM Autoencoder with Self-Attention for Bearing Fault Diagnosis. Sensors 2024, 24, 4855.

- Spadini, T.; Nose-Filho, K.; Suyama, R. Intelligent Fault Diagnosis of Type and Severity in Low-Frequency, Low Bit-Depth Signals. arXiv 2024, arXiv:2411.06299.

- Qiao, Z.; Yao, D.; Yang, J.; Zhou, T.; Ge, T. MSTD: A framework for rolling bearing fault diagnosis based on multi-scale and soft-threshold denoising. Nondestruct. Test. Eval. 2024, 1–21.

- Hao, J.; Shen, G.; Zhang, X.; Shao, H. An adversarial gradual domain adaptation approach for fault diagnosis via intermediate domain generation. Nondestruct. Test. Eval. 2025, 1–21.

- Beritelli, F.; Casale, S.; Russo, A.; Serrano, S. An Automatic Emergency Signal Recognition System for the Hearing Impaired. In Proceedings of the 2006 IEEE 12th Digital Signal Processing Workshop & 4th IEEE Signal Processing Education Workshop, Teton National Park, WY, USA, 24–27 September 2006.

- Tran, V.-T.; Tsai, W.-H. Acoustic-Based Emergency Vehicle Detection Using Convolutional Neural Networks. IEEE Access 2020, 8, 75702–75713.

- Banchero, L.; Vacalebri-Lloret, F.; Mossi, J.M.; Lopez, J.J. Enhancing Road Safety with AI-Powered System for Effective Detection and Localization of Emergency Vehicles by Sound. Sensors 2025, 25, 793.

- Asif, M.; Usaid, M.; Rashid, M.; Rajab, T.; Hussain, S.; Wasi, S. Large-scale audio dataset for emergency vehicle sirens and road noises. Sci. Data 2022, 9, 599.

- Principi, E.; Squartini, S.; Bonfigli, R.; Ferroni, G.; Piazza, F. An integrated system for voice command recognition and emergency detection based on audio signals. Expert Syst. Appl. 2015, 42, 5668–5683.

- Kim, J.; Min, K.; Jung, M.; Chi, S. Occupant behavior monitoring and emergency event detection in single-person households using deep learning-based sound recognition. Build. Environ. 2020, 181, 107092.

- Min, K.; Jung, M.; Kim, J.; Chi, S. Sound Event Recognition-Based Classification Model for Automated Emergency Detection in Indoor Environment. In Advances in Informatics and Computing in Civil and Construction Engineering; Springer: Cham, Switzerland, 2018; pp. 529–535.

- Nguyen, Q.; Yun, S.-S.; Choi, J. Detection of audio-based emergency situations using perception sensor network. In Proceedings of the 2016 13th International Conference on Ubiquitous Robots and Ambient Intelligence (URAI), Xi’an, China, 19–22 August 2016; pp. 763–766.

- Kamelia, R.; Kusuma, H. Emergency Sound Classification and Visual Alert System for Enhanced Situational Awareness. In Proceedings of the 2024 International Conference on TVET Excellence & Development (ICTeD), Melaka, Malaysia, 16–17 December 2024; pp. 213–218.

- Kreuzer, M.; Schmidt, D.; Wokusch, S.; Kellermann, W. Real-World Airborne Sound Analysis for Health Monitoring of Bearings in Railway Vehicles. SSRN 2024.

- Shajie, D.; Juliet, S.; Ezra, K.; Annie Flora, J.B. Diagnostic Sonance: Sound-Based Approach to Assess Engine Ball Bearing Health in Automobiles. Przegląd Elektrotechniczny 2024, 1, 74–78.

- Senanayaka, A.; Lee, P.; Lee, N.; Dickerson, C.; Netchaev, A.; Mun, S. Enhancing the accuracy of machinery fault diagnosis through fault source isolation of complex mixture of industrial sound signals. Int. J. Adv. Manuf. Technol. 2024, 133, 5627–5642.

- Gantert, L.; Zeffiro, T.; Sammarco, M.; Campista, M.E.M. Multiclass classification of faulty industrial machinery using sound samples. Eng. Appl. Artif. Intell. 2024, 136, 108943.

- Dobre, R.A.; Nita, V.A.; Ciobanu, A.; Negrescu, C.; Stanomir, D. Low computational method for siren detection. In Proceedings of the 2015 IEEE 21st International Symposium for Design and Technology in Electronic Packaging (SIITME), Brasov, Romania, 22–25 October 2015; pp. 291–295.

- Dobre, R.-A.; Dumitrascu, E.-V. High-performance, low complexity yelp siren detection system. Alex. Eng. J. 2024, 109, 669–684.

- Colelough, B.; Zheng, A. Effects of Dataset Sampling Rate for Noise Cancellation through Deep Learning. arXiv 2024, arXiv:2405.20884.

- Fedorishin, D.; Forte, L.; Schneider, P.; Setlur, S.; Govindaraju, V. Fine-Grained Engine Fault Sound Event Detection Using Multimodal Signals. In Proceedings of the ICASSP 2024—2024 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Seoul, Republic of Korea, 14–19 April 2024; pp. 1186–1190.

- Terwilliger, A.M.; Siegel, J.E. Improving Misfire Fault Diagnosis with Cascading Architectures via Acoustic Vehicle Characterization. Sensors 2022, 22, 7736.

- Salamon, J.; Bello, J.P. Deep Convolutional Neural Networks and Data Augmentation for Environmental Sound Classification. IEEE Signal Process. Lett. 2017, 24, 279–283.

- Kalantarian, H.; Mortazavi, B.; Pourhomayoun, M.; Alshurafa, N.; Sarrafzadeh, M. Probabilistic segmentation of time-series audio signals using Support Vector Machines. Microprocess. Microsyst. 2016, 46, 96–104.

- Nath, K.; Sarma, K.K. Separation of overlapping audio signals: A review on current trends and evolving approaches. Signal Process. 2024, 221, 109487.

- Tran, T.; Lundgren, J. Drill Fault Diagnosis Based on the Scalogram and Mel Spectrogram of Sound Signals Using Artificial Intelligence. IEEE Access 2020, 8, 203655–203666.

- Abdul, Z.K.; Al-Talabani, A.K. Mel Frequency Cepstral Coefficient and its Applications: A Review. IEEE Access 2022, 10, 122136–122158.

- Zalkow, F.; Muller, M. CTC-Based Learning of Chroma Features for Score–Audio Music Retrieval. IEEE/ACM Trans. Audio Speech Lang. Process. 2021, 29, 2957–2971.

- Khan, S. Ethem Alpaydin. Introduction to Machine Learning (Adaptive Computation and Machine Learning Series). The MIT Press, 2004. ISBN: 0 262 01211 1 Price £32.95/$50.00 (hardcover). xxx+415 pages. Nat. Lang. Eng. 2008, 14, 133–137.

- Bishop, C.M.; Nasrabadi, N.M. Pattern Recognition and Machine Learning; Springer: Berlin/Heidelberg, Germany, 2006; Volume 4.

- Ko, K.; Kim, S.; Kwon, H. Selective Audio Perturbations for Targeting Specific Phrases in Speech Recognition Systems. Int. J. Comput. Intell. Syst. 2025, 18, 103.

- Kwon, H.; Park, D.; Jo, O. Silent-Hidden-Voice Attack on Speech Recognition System. IEEE Access 2024, 12, 173010–173019.

- Kwon, H.; Lee, K.; Ryu, J.; Lee, J. Audio Adversarial Example Detection Using the Audio Style Transfer Learning Method. IEEE Access 2025, 13, 122464–122472.

- Kwon, H.; Nam, S.-H. Audio adversarial detection through classification score on speech recognition systems. Comput. Secur. 2023, 126, 103061.

| Dataset | Description |

|---|---|

| Vehicular Acoustic Fault Dataset (VAFD) | Curated to represent vehicle-related fault sounds across subsystems and include general operational audio. Designed to support diagnostic model development through acoustic analysis. |

| Urban Emergency and Ambient Sound Dataset (UEASD) | Includes emergency signals (e.g., sirens), environmental noises, animal sounds, and construction sounds. Structured to support acoustic scene analysis, anomaly detection, and public safety research. |

| Integrated Vehicle and Environmental Sound Dataset (IVESD) | Merges VAFD and UEASD into a comprehensive multi-domain collection, facilitating the creation of robust models capable of distinguishing between mechanical faults and environmental sounds. |

| Dataset | Class | Count |

|---|---|---|

| VAFD | Engine/Powertrain Faults | 61 |

| VAFD | Suspension/Steering Faults | 39 |

| VAFD | General Vehicle Sounds (Normal) | 38 |

| VAFD | Exhaust/Fuel System Faults | 16 |

| VAFD | Belt/Accessory Issues | 12 |

| VAFD | Braking Faults | 4 |

| VAFD | Miscellaneous Mechanical Faults | 5 |

| UEASD | Emergency Vehicle Sirens | 1560 |

| UEASD | Animal Sounds | 650 |

| UEASD | Vehicles/Transport Noise | 246 |

| UEASD | Weapons/Explosions | 62 |

| UEASD | Weather Sounds | 29 |

| UEASD | Construction/Machinery | 24 |

| IVESD | Integrated Vehicle + Env. Classes | Balanced integration from VAFD + UEASD |
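
The class counts above are strongly imbalanced, so it helps to know what accuracy a classifier would reach by always predicting the largest class. The following is a minimal sketch of that arithmetic, using only the counts from the table; the helper name `majority_baseline` is hypothetical and not part of the original study.

```python
# Class counts copied from the dataset table above.
VAFD = {
    "Engine/Powertrain Faults": 61,
    "Suspension/Steering Faults": 39,
    "General Vehicle Sounds (Normal)": 38,
    "Exhaust/Fuel System Faults": 16,
    "Belt/Accessory Issues": 12,
    "Braking Faults": 4,
    "Miscellaneous Mechanical Faults": 5,
}
UEASD = {
    "Emergency Vehicle Sirens": 1560,
    "Animal Sounds": 650,
    "Vehicles/Transport Noise": 246,
    "Weapons/Explosions": 62,
    "Weather Sounds": 29,
    "Construction/Machinery": 24,
}


def majority_baseline(counts):
    """Return (total samples, majority class, accuracy of always predicting it)."""
    total = sum(counts.values())
    label = max(counts, key=counts.get)
    return total, label, counts[label] / total


for name, counts in (("VAFD", VAFD), ("UEASD", UEASD)):
    total, label, acc = majority_baseline(counts)
    print(f"{name}: n={total}, majority={label!r}, baseline accuracy={acc:.4f}")
```

With these counts the baseline is about 0.349 for VAFD (175 samples) and about 0.607 for UEASD (2571 samples). These values are close to the Dummy Classifier accuracies reported in the results tables, which is what one would expect if the dummy model predicts the most frequent class; small differences could come from fold-wise averaging or segmentation, which the table does not detail.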

| Phase | Experiment | Objective | Key Methods | Main Findings |

|---|---|---|---|---|

| Phase 1: Baseline Evaluation and Feature Selection | 1. Baseline Performance of ML Models | Establish baseline using 15 ML models across 3 datasets | 52 handcrafted features (Mel spectrogram stats, MFCCs, Chroma); ANOVA F-test for feature ranking; feature count varied (20–52). | Ensemble models (e.g., LightGBM, XGBoost) performed best; reduced feature sets outperformed full sets due to redundancy. |

| Phase 1: Baseline Evaluation and Feature Selection | 2. Ensemble vs. MLP | Compare top ensembles with MLP under refined feature selection | ANOVA-ranked feature subsets (15–52); 10-fold cross-validation. | MLP outperformed ensembles when redundant features were removed, highlighting its strength in modeling non-linear patterns. |

| Phase 2: Advanced Feature Selection and Optimization | 1. Boruta-Based Selection with 15 Models | Evaluate Boruta’s ability to identify relevant features and improve model generalization. | Boruta with Extra Trees; retraining 15 models on selected subsets | Boruta effectively reduced dimensionality while maintaining or improving performance across all datasets. |

| Phase 2: Advanced Feature Selection and Optimization | 2. ANOVA-Based Optimization with MLP | Optimize MLP performance by selecting the best feature count. | ANOVA selection (25–52 features); 10-fold cross-validation | Identified optimal feature range balancing accuracy and computational efficiency. |

| Phase 2: Advanced Feature Selection and Optimization | 3. SHAP-Based Selection and Interpretability | Combine performance with model transparency using SHAP | SHAP ranking with MLP; tested varying top-n feature subsets | Achieved high accuracy with interpretable models; suitable for safety-critical ITS applications. |
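
Several experiments above rank features with the ANOVA F-test. As a rough illustration of what that score measures, the sketch below computes the one-way ANOVA F statistic for a single feature from its values grouped by class, then ranks two toy features. The data and the names `anova_f`, `separating`, and `uninformative` are made up for illustration; this is not the authors' pipeline, which presumably relied on a library implementation.

```python
def anova_f(groups):
    """One-way ANOVA F statistic for one feature.

    `groups` holds the feature's values per class, e.g. [[...], [...], ...].
    Assumes at least two classes and non-zero within-class variance.
    """
    k = len(groups)
    n_total = sum(len(g) for g in groups)
    grand_mean = sum(sum(g) for g in groups) / n_total
    means = [sum(g) / len(g) for g in groups]
    # Variation of the class means around the grand mean.
    ss_between = sum(len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, means))
    # Variation of the samples around their own class mean.
    ss_within = sum((x - m) ** 2 for g, m in zip(groups, means) for x in g)
    return (ss_between / (k - 1)) / (ss_within / (n_total - k))


# Toy data: three classes, three samples each (illustrative values only).
features = {
    "separating": [[1, 2, 3], [2, 3, 4], [5, 6, 7]],     # class means differ
    "uninformative": [[1, 5, 3], [2, 4, 3], [3, 1, 5]],  # identical class means
}

scores = {name: anova_f(groups) for name, groups in features.items()}
ranking = sorted(scores, key=scores.get, reverse=True)
print(scores)
print("ranked:", ranking)
```

A feature whose class means differ strongly relative to its within-class spread gets a large F value and ranks first; keeping the top-n features by this score is the kind of subset selection the table describes.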

| Model | Accuracy | AUC | Recall | Prec. | F1 | Kappa | MCC | TT (Sec) |

|---|---|---|---|---|---|---|---|---|

| Extra Trees Classifier | 0.9013 | 0.0000 | 0.9013 | 0.8862 | 0.8811 | 0.8655 | 0.8783 | 0.2340 |

| Random Forest Classifier | 0.8436 | 0.0000 | 0.8436 | 0.8356 | 0.8206 | 0.7866 | 0.8019 | 0.2830 |

| Light Gradient Boosting Machine | 0.8276 | 0.0000 | 0.8276 | 0.8450 | 0.8091 | 0.7683 | 0.7887 | 0.1930 |

| K Neighbors Classifier | 0.7712 | 0.0000 | 0.7712 | 0.8123 | 0.7681 | 0.7040 | 0.7201 | 0.0510 |

| Gradient Boosting Classifier | 0.7615 | 0.0000 | 0.7615 | 0.7704 | 0.7460 | 0.6840 | 0.6969 | 1.8780 |

| Linear Discriminant Analysis | 0.7455 | 0.0000 | 0.7455 | 0.7744 | 0.7334 | 0.6687 | 0.6881 | 0.0530 |

| Logistic Regression | 0.7385 | 0.0000 | 0.7385 | 0.7422 | 0.7240 | 0.6550 | 0.6671 | 0.2580 |

| Ridge Classifier | 0.7378 | 0.0000 | 0.7378 | 0.7379 | 0.7226 | 0.6520 | 0.6641 | 0.0360 |

| Extreme Gradient Boosting | 0.7359 | 0.0000 | 0.7359 | 0.7207 | 0.7083 | 0.6422 | 0.6636 | 0.2310 |

| Naive Bayes | 0.7308 | 0.0000 | 0.7308 | 0.7317 | 0.7128 | 0.6442 | 0.6605 | 0.0500 |

| Decision Tree Classifier | 0.6282 | 0.0000 | 0.6282 | 0.6437 | 0.6034 | 0.5085 | 0.5317 | 0.0580 |

| SVM—Linear Kernel | 0.6135 | 0.0000 | 0.6135 | 0.6214 | 0.5690 | 0.5028 | 0.5472 | 0.0660 |

| Ada Boost Classifier | 0.5321 | 0.0000 | 0.5321 | 0.3824 | 0.4174 | 0.3085 | 0.4181 | 0.1580 |

| Dummy Classifier | 0.3532 | 0.0000 | 0.3532 | 0.1266 | 0.1858 | 0.0000 | 0.0000 | 0.0300 |

| Quadratic Discriminant Analysis | 0.3115 | 0.0000 | 0.3115 | 0.1953 | 0.1992 | 0.0484 | 0.0536 | 0.0390 |
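
Two patterns in the results table are easier to read with the metric definitions at hand: the Recall column equals Accuracy in every row, and the Dummy Classifier has a Kappa of 0. The sketch below, on toy confusion matrices, shows that support-weighted recall is algebraically identical to accuracy and that a constant predictor yields Cohen's kappa of 0. That the reported Recall is support-weighted is an inference from the identical columns, not something the table states; all function names and matrices here are illustrative.

```python
def accuracy(cm):
    """Fraction of correct predictions; cm[i][j] = count of true class i predicted as j."""
    total = sum(sum(row) for row in cm)
    return sum(cm[i][i] for i in range(len(cm))) / total


def weighted_recall(cm):
    """Per-class recall averaged with class support as weights."""
    total = sum(sum(row) for row in cm)
    result = 0.0
    for i, row in enumerate(cm):
        support = sum(row)
        if support:
            result += (support / total) * (cm[i][i] / support)
    return result


def cohen_kappa(cm):
    """Cohen's kappa: agreement beyond chance (assumes chance agreement < 1)."""
    n = len(cm)
    total = sum(sum(row) for row in cm)
    p_observed = accuracy(cm)
    row_totals = [sum(row) for row in cm]
    col_totals = [sum(cm[i][j] for i in range(n)) for j in range(n)]
    p_chance = sum(r * c for r, c in zip(row_totals, col_totals)) / total ** 2
    return (p_observed - p_chance) / (1 - p_chance)


# Toy 3-class confusion matrix (rows: true class, columns: predicted class).
cm = [[8, 1, 1],
      [2, 6, 2],
      [0, 1, 9]]
# A constant predictor that always outputs class 0.
dummy = [[6, 0, 0],
         [3, 0, 0],
         [1, 0, 0]]

print(accuracy(cm), weighted_recall(cm), cohen_kappa(cm))
print(accuracy(dummy), cohen_kappa(dummy))
```

For the constant predictor, observed agreement equals chance agreement, so kappa is 0 even though accuracy equals the majority-class share. This is why Kappa and MCC are more informative than Accuracy on the imbalanced datasets used here.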

| Model | Accuracy | AUC | Recall | Prec. | F1 | Kappa | MCC | TT (Sec) |

|---|---|---|---|---|---|---|---|---|

| Extreme Gradient Boosting | 0.9550 | 0.9948 | 0.9550 | 0.9534 | 0.9525 | 0.9188 | 0.9192 | 0.6550 |

| Light Gradient Boosting Machine | 0.9528 | 0.9956 | 0.9528 | 0.9522 | 0.9501 | 0.9149 | 0.9154 | 1.3880 |

| Extra Trees Classifier | 0.9505 | 0.9927 | 0.9505 | 0.9509 | 0.9479 | 0.9110 | 0.9115 | 0.3800 |

| Random Forest Classifier | 0.9472 | 0.9952 | 0.9472 | 0.9476 | 0.9446 | 0.9050 | 0.9056 | 0.5150 |

| Gradient Boosting Classifier | 0.9422 | 0.0000 | 0.9422 | 0.9413 | 0.9394 | 0.8958 | 0.8964 | 12.8640 |

| K Neighbors Classifier | 0.9322 | 0.9844 | 0.9322 | 0.9324 | 0.9296 | 0.8781 | 0.8785 | 0.0620 |

| Logistic Regression | 0.9261 | 0.0000 | 0.9261 | 0.9235 | 0.9229 | 0.8661 | 0.8668 | 0.7020 |

| Decision Tree Classifier | 0.9255 | 0.9487 | 0.9255 | 0.9269 | 0.9244 | 0.8667 | 0.8672 | 0.0750 |

| Quadratic Discriminant Analysis | 0.9066 | 0.0000 | 0.9066 | 0.8986 | 0.8967 | 0.8355 | 0.8390 | 0.0480 |

| Linear Discriminant Analysis | 0.9027 | 0.0000 | 0.9027 | 0.9049 | 0.9014 | 0.8260 | 0.8268 | 0.0550 |

| Ridge Classifier | 0.8983 | 0.0000 | 0.8983 | 0.8844 | 0.8879 | 0.8147 | 0.8158 | 0.0340 |

| SVM—Linear Kernel | 0.8194 | 0.0000 | 0.8194 | 0.8596 | 0.8060 | 0.7034 | 0.7205 | 0.0620 |

| Naive Bayes | 0.8038 | 0.9445 | 0.8038 | 0.8763 | 0.8288 | 0.6706 | 0.6776 | 0.0370 |

| Ada Boost Classifier | 0.6849 | 0.0000 | 0.6849 | 0.6553 | 0.6216 | 0.3660 | 0.4177 | 0.6190 |

| Dummy Classifier | 0.6070 | 0.5000 | 0.6070 | 0.3685 | 0.4586 | 0.0000 | 0.0000 | 0.0350 |

| Model | Accuracy | AUC | Recall | Prec. | F1 | Kappa | MCC | TT (Sec) |

|---|---|---|---|---|---|---|---|---|

| Extra Trees Classifier | 0.9266 | 0.0990 | 0.9266 | 0.9265 | 0.9204 | 0.8791 | 0.8800 | 0.3840 |

| Light Gradient Boosting Machine | 0.9245 | 0.0991 | 0.9245 | 0.9189 | 0.9160 | 0.8751 | 0.8762 | 3.9820 |

| Random Forest Classifier | 0.9183 | 0.0992 | 0.9183 | 0.9160 | 0.9107 | 0.8651 | 0.8662 | 0.7940 |

| Extreme Gradient Boosting | 0.9173 | 0.0988 | 0.9173 | 0.9129 | 0.9099 | 0.8633 | 0.8642 | 1.4490 |

| Gradient Boosting Classifier | 0.9037 | 0.0000 | 0.9037 | 0.8989 | 0.8967 | 0.8414 | 0.8424 | 30.1280 |

| K Neighbors Classifier | 0.8907 | 0.0981 | 0.8907 | 0.8903 | 0.8866 | 0.8214 | 0.8221 | 0.0570 |

| Logistic Regression | 0.8715 | 0.0000 | 0.8715 | 0.8654 | 0.8608 | 0.7853 | 0.7866 | 1.9600 |

| Decision Tree Classifier | 0.8715 | 0.0921 | 0.8715 | 0.8731 | 0.8691 | 0.7897 | 0.7904 | 0.0970 |

| Quadratic Discriminant Analysis | 0.8434 | 0.0000 | 0.8434 | 0.8171 | 0.8205 | 0.7447 | 0.7503 | 0.0660 |

| Linear Discriminant Analysis | 0.8377 | 0.0000 | 0.8377 | 0.8632 | 0.8441 | 0.7400 | 0.7415 | 0.1230 |

| Ridge Classifier | 0.8351 | 0.0000 | 0.8351 | 0.7741 | 0.7985 | 0.7153 | 0.7197 | 0.1240 |

| SVM—Linear Kernel | 0.8205 | 0.0000 | 0.8205 | 0.7780 | 0.7912 | 0.6950 | 0.7020 | 0.1570 |

| Naive Bayes | 0.7555 | 0.0952 | 0.7555 | 0.8221 | 0.7754 | 0.6187 | 0.6242 | 0.0440 |

| Dummy Classifier | 0.5682 | 0.0500 | 0.5682 | 0.3228 | 0.4117 | 0.0000 | 0.0000 | 0.0710 |

| Ada Boost Classifier | 0.5563 | 0.0000 | 0.5563 | 0.6004 | 0.5134 | 0.4211 | 0.4657 | 0.7190 |

| Component | Description |

|---|---|

| Preprocessing Steps | Normalize Audio—Extend Short Files—Resample to 16 kHz—Segment into 2.5 s |

| Target Type | Multiclass Classification |

| Target Encoding | Label Encoding |

| Total Number of Features | 52 |

| Feature Types | Mel Spectrogram (mean, std), MFCCs (13 × mean & std), Chromagram (mean, std) |

| Feature Selection | Boruta Algorithm |

| Model Training | 15 Machine Learning Models (e.g., SVM, RF, KNN, XGBoost, etc.) |

| Fold Generation Method | Stratified K-Fold Cross-Validation |

| Number of Folds | 10 |
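
The configuration above implies a fixed segment length and a 52-dimensional feature vector. The sketch below works through both under stated assumptions: short inputs are extended by repeating the signal and a trailing partial segment is dropped (the table only says "Extend Short Files"), the Mel spectrogram contributes one global mean and one global standard deviation, and the chromagram has 12 pitch-class bins. The names `mel_mean` and `mel_std` are hypothetical; the `mfcc_*` and `chromagram_*` names follow the pattern used in the feature tables of this article.

```python
SAMPLE_RATE = 16_000   # Hz, from the table
SEGMENT_SECONDS = 2.5  # from the table
SEGMENT_LEN = int(SAMPLE_RATE * SEGMENT_SECONDS)  # samples per segment


def segment(samples, seg_len=SEGMENT_LEN):
    """Split a sequence of samples into fixed-length segments.

    Assumptions (not specified in the source): inputs shorter than one
    segment are extended by repetition; a trailing partial segment is dropped.
    """
    samples = list(samples)
    if not samples:
        return []
    if len(samples) < seg_len:
        repeats = -(-seg_len // len(samples))  # ceiling division
        samples = (samples * repeats)[:seg_len]
    return [samples[i:i + seg_len]
            for i in range(0, len(samples) - seg_len + 1, seg_len)]


def feature_names(n_mfcc=13, n_chroma=12):
    """Feature layout: 2 Mel stats + 13x2 MFCC stats + 12x2 chroma stats."""
    names = ["mel_mean", "mel_std"]  # hypothetical names
    names += [f"mfcc_mean_{i}" for i in range(n_mfcc)]
    names += [f"mfcc_std_{i}" for i in range(n_mfcc)]
    names += [f"chromagram_mean_{i}" for i in range(n_chroma)]
    names += [f"chromagram_std_{i}" for i in range(n_chroma)]
    return names


print(SEGMENT_LEN)            # samples in one 2.5 s segment at 16 kHz
print(len(feature_names()))   # total feature count under these assumptions
print(len(segment([0.0] * 100_000)))  # full segments in a 6.25 s clip
```

Under these assumptions the layout adds up to 2 + 26 + 24 = 52 features, matching the total in the table, and each segment holds 40,000 samples.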

| Model | Accuracy | AUC | Recall | Prec. | F1 | Kappa | MCC | TT (Sec) |

|---|---|---|---|---|---|---|---|---|

| Extra Trees Classifier | 0.9103 | 0.0000 | 0.9103 | 0.9056 | 0.8987 | 0.8796 | 0.8887 | 0.2630 |

| Light Gradient Boosting Machine | 0.8615 | 0.0000 | 0.8615 | 0.8422 | 0.8347 | 0.8156 | 0.8323 | 0.2150 |

| Random Forest Classifier | 0.8346 | 0.0000 | 0.8346 | 0.7842 | 0.7999 | 0.7751 | 0.7893 | 0.3800 |

| Gradient Boosting Classifier | 0.8199 | 0.0000 | 0.8199 | 0.8153 | 0.7968 | 0.7616 | 0.7804 | 2.0860 |

| Extreme Gradient Boosting | 0.8109 | 0.0000 | 0.8109 | 0.7788 | 0.7828 | 0.7443 | 0.7601 | 0.2550 |

| Logistic Regression | 0.7859 | 0.0000 | 0.7859 | 0.7995 | 0.7754 | 0.7144 | 0.7268 | 0.2700 |

| Naive Bayes | 0.7462 | 0.0000 | 0.7462 | 0.7175 | 0.7149 | 0.6555 | 0.6739 | 0.0410 |

| Ridge Classifier | 0.7128 | 0.0000 | 0.7128 | 0.6866 | 0.6828 | 0.6124 | 0.6295 | 0.0390 |

| K Neighbors Classifier | 0.7058 | 0.0000 | 0.7058 | 0.7511 | 0.6946 | 0.6247 | 0.6434 | 0.0380 |

| Decision Tree Classifier | 0.7051 | 0.0000 | 0.7051 | 0.7050 | 0.6831 | 0.6188 | 0.6347 | 0.0400 |

| Linear Discriminant Analysis | 0.6872 | 0.0000 | 0.6872 | 0.7390 | 0.6868 | 0.5938 | 0.6088 | 0.1140 |

| Ada Boost Classifier | 0.5481 | 0.0000 | 0.5481 | 0.4082 | 0.4338 | 0.3401 | 0.4453 | 0.1720 |

| SVM—Linear Kernel | 0.5019 | 0.0000 | 0.5019 | 0.4395 | 0.4246 | 0.3096 | 0.3927 | 0.0520 |

| Quadratic Discriminant Analysis | 0.4436 | 0.0000 | 0.4436 | 0.5316 | 0.4461 | 0.3305 | 0.3586 | 0.0400 |

| Dummy Classifier | 0.3519 | 0.0000 | 0.3519 | 0.1247 | 0.1839 | 0.0000 | 0.0000 | 0.0370 |

| Model | Accuracy | AUC | Recall | Prec. | F1 | Kappa | MCC | TT (Sec) |

|---|---|---|---|---|---|---|---|---|

| Light Gradient Boosting Machine | 0.9555 | 0.9949 | 0.9555 | 0.9554 | 0.9518 | 0.9198 | 0.9206 | 2.6790 |

| Extreme Gradient Boosting | 0.9522 | 0.9943 | 0.9522 | 0.9516 | 0.9489 | 0.9140 | 0.9146 | 1.6070 |

| Random Forest Classifier | 0.9477 | 0.9943 | 0.9477 | 0.9441 | 0.9433 | 0.9059 | 0.9067 | 0.9090 |

| Extra Trees Classifier | 0.9461 | 0.9908 | 0.9461 | 0.9432 | 0.9419 | 0.9028 | 0.9036 | 0.4280 |

| Gradient Boosting Classifier | 0.9450 | 0.0000 | 0.9450 | 0.9440 | 0.9417 | 0.9013 | 0.9021 | 25.1000 |

| K Neighbors Classifier | 0.9350 | 0.9866 | 0.9350 | 0.9334 | 0.9320 | 0.8830 | 0.8836 | 0.1000 |

| Decision Tree Classifier | 0.9222 | 0.9467 | 0.9222 | 0.9266 | 0.9224 | 0.8612 | 0.8621 | 0.1410 |

| Logistic Regression | 0.9161 | 0.0000 | 0.9161 | 0.9151 | 0.9123 | 0.8478 | 0.8492 | 1.5590 |

| Quadratic Discriminant Analysis | 0.9022 | 0.0000 | 0.9022 | 0.8779 | 0.8860 | 0.8260 | 0.8306 | 0.1160 |

| Ridge Classifier | 0.8994 | 0.0000 | 0.8994 | 0.8812 | 0.8871 | 0.8161 | 0.8180 | 0.0670 |

| Linear Discriminant Analysis | 0.8988 | 0.0000 | 0.8988 | 0.9039 | 0.8989 | 0.8194 | 0.8202 | 0.1240 |

| SVM—Linear Kernel | 0.8549 | 0.0000 | 0.8549 | 0.8356 | 0.8347 | 0.7335 | 0.7428 | 0.0940 |

| Ada Boost Classifier | 0.8205 | 0.0000 | 0.8205 | 0.8151 | 0.8035 | 0.6752 | 0.6853 | 0.9120 |

| Naive Bayes | 0.7610 | 0.9371 | 0.7610 | 0.8759 | 0.7949 | 0.6069 | 0.6216 | 0.0810 |

| Dummy Classifier | 0.6070 | 0.5000 | 0.6070 | 0.3685 | 0.4586 | 0.0000 | 0.0000 | 0.0450 |

| Model | Accuracy | AUC | Recall | Prec. | F1 | Kappa | MCC | TT (Sec) |

|---|---|---|---|---|---|---|---|---|

| Extra Trees Classifier | 0.9199 | 0.0991 | 0.9199 | 0.9170 | 0.9121 | 0.8676 | 0.8690 | 0.3880 |

| Light Gradient Boosting Machine | 0.9183 | 0.0988 | 0.9183 | 0.9130 | 0.9100 | 0.8648 | 0.8657 | 4.5030 |

| Extreme Gradient Boosting | 0.9178 | 0.0989 | 0.9178 | 0.9146 | 0.9103 | 0.8642 | 0.8651 | 2.5270 |

| Random Forest Classifier | 0.9105 | 0.0991 | 0.9105 | 0.9050 | 0.9002 | 0.8517 | 0.8532 | 1.0420 |

| Gradient Boosting Classifier | 0.9063 | 0.0000 | 0.9063 | 0.9049 | 0.8992 | 0.8454 | 0.8463 | 52.9980 |

| K Neighbors Classifier | 0.8954 | 0.0980 | 0.8954 | 0.8952 | 0.8909 | 0.8289 | 0.8297 | 0.0710 |

| Logistic Regression | 0.8840 | 0.0000 | 0.8840 | 0.8769 | 0.8749 | 0.8070 | 0.8080 | 2.6920 |

| Decision Tree Classifier | 0.8699 | 0.0917 | 0.8699 | 0.8680 | 0.8657 | 0.7865 | 0.7872 | 0.1430 |

| Linear Discriminant Analysis | 0.8497 | 0.0000 | 0.8497 | 0.8687 | 0.8545 | 0.7574 | 0.7584 | 0.0700 |

| Ridge Classifier | 0.8450 | 0.0000 | 0.8450 | 0.7930 | 0.8109 | 0.7326 | 0.7370 | 0.0670 |

| Quadratic Discriminant Analysis | 0.8372 | 0.0000 | 0.8372 | 0.7821 | 0.8009 | 0.7307 | 0.7382 | 0.0800 |

| SVM—Linear Kernel | 0.8023 | 0.0000 | 0.8023 | 0.7703 | 0.7697 | 0.6489 | 0.6651 | 0.1340 |

| Ada Boost Classifier | 0.7716 | 0.0000 | 0.7716 | 0.6885 | 0.7135 | 0.6111 | 0.6408 | 1.0660 |

| Naive Bayes | 0.7097 | 0.0942 | 0.7097 | 0.8260 | 0.7416 | 0.5585 | 0.5709 | 0.0530 |

| Dummy Classifier | 0.5682 | 0.0500 | 0.5682 | 0.3228 | 0.4117 | 0.0000 | 0.0000 | 0.0440 |

| Dataset | Optimal No. of Features | Mean Accuracy | Standard Deviation | Key Selected Features |

|---|---|---|---|---|

| Dataset 1 (VAFD) | 33 | 0.9248 | 0.0645 | mfcc_mean_6–12, mfcc_std_8–9, chromagram_mean_1–11, chromagram_std_0–11 |

| Dataset 2 (UEASD) | 21 | 0.9615 | 0.0101 | mfcc_mean_1–12, chromagram_mean_2–9 |

| Dataset 3 (IVESD) | 23 | 0.9472 | 0.0123 | mfcc_mean_1–12, mfcc_std_2, chromagram_mean_1–9 |

| Dataset | Optimal Number of Features | Best Accuracy |

|---|---|---|

| VAFD | 43 | 0.9144 |

| UEASD | 29 | 0.9607 |

| IVESD | 43 | 0.9381 |
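
The feature counts above come from ranking features by SHAP importance and keeping the top n. To make the underlying idea concrete, the sketch below computes exact Shapley values by brute force for a tiny hand-written model and ranks features by mean absolute attribution. It does not use the SHAP library or the authors' MLP; the model, the baseline of zeros, and the feature names `f0` to `f2` are illustrative assumptions. The cost grows factorially with the number of features, which is why practical tools approximate these values for a 52-feature model.

```python
from itertools import permutations


def shapley_values(predict, x, baseline):
    """Exact Shapley values of `predict` at `x` relative to `baseline`.

    Averages each feature's marginal contribution over all feature orderings.
    """
    n = len(x)
    phi = [0.0] * n
    orders = list(permutations(range(n)))
    for order in orders:
        current = list(baseline)
        previous = predict(current)
        for i in order:
            current[i] = x[i]          # switch feature i from baseline to x
            value = predict(current)
            phi[i] += value - previous  # marginal contribution in this order
            previous = value
    return [p / len(orders) for p in phi]


def rank_features(predict, samples, baseline, names):
    """Global ranking by mean |Shapley value| across samples."""
    totals = [0.0] * len(names)
    for x in samples:
        for i, v in enumerate(shapley_values(predict, x, baseline)):
            totals[i] += abs(v)
    mean_abs = [t / len(samples) for t in totals]
    return sorted(zip(names, mean_abs), key=lambda pair: pair[1], reverse=True)


def toy_model(v):
    """Illustrative model: two active features with an interaction; v[2] unused."""
    return 2.0 * v[0] - 1.0 * v[1] + 0.5 * v[0] * v[1]


baseline = [0.0, 0.0, 0.0]
samples = [[1.0, 2.0, 3.0], [2.0, 0.0, 1.0]]
names = ["f0", "f1", "f2"]  # hypothetical feature names

local = shapley_values(toy_model, samples[0], baseline)   # local explanation
ranking = rank_features(toy_model, samples, baseline, names)  # global ranking
top_n = [name for name, _ in ranking[:2]]
print(local, ranking, top_n)
```

The local values sum to the difference between the model output at the sample and at the baseline, and the unused feature receives zero attribution, so it would be the first to drop out of a top-n subset.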

| Dataset | Feature Selection Method | Best Classifier | Accuracy (%) | No. of Selected Features |

|---|---|---|---|---|

| 1 (VAFD) | ANOVA (Extra Trees) | Extra Trees Classifier | 90.13 | 38 |

| 1 (VAFD) | ANOVA (MLP) | MLP | 92.48 | 33 |

| 1 (VAFD) | Boruta | Extra Trees Classifier | 91.03 | 45 |

| 1 (VAFD) | SHAP | MLP | 91.44 | 43 |

| 2 (UEASD) | ANOVA (Extra Trees) | Extra Trees Classifier | 95.50 | 31 |

| 2 (UEASD) | ANOVA (MLP) | MLP | 96.15 | 21 |

| 2 (UEASD) | Boruta | LightGBM | 95.55 | 52 |

| 2 (UEASD) | SHAP | MLP | 96.07 | 29 |

| 3 (IVESD) | ANOVA (Extra Trees) | Extra Trees Classifier | 92.66 | 31 |

| 3 (IVESD) | ANOVA (MLP) | MLP | 94.72 | 23 |

| 3 (IVESD) | Boruta | Extra Trees Classifier | 91.99 | 47 |

| 3 (IVESD) | SHAP | MLP | 93.81 | 43 |

| Study and Year | Application Domain | Dataset | Feature Selection | Classification Model | Explainability | Reported Accuracy (%) |

|---|---|---|---|---|---|---|

| Proposed Method (2025) | Vehicle faults and emergency sounds | Custom DB3 (vehicle + emergency sounds) | SHAP, Boruta, ANOVA | Extra Trees, MLP, LightGBM | Yes (SHAP) | Up to 96.15 |

| Rashed et al. (2025) [12] | Vehicle fault detection | YouTube-derived audio + FeatureList-126 | ReliefF, ANOVA, FCBF | BOWSVFS (Bayesian Soft Voting) | No | 91.04 |

| Fedorishin et al. (2024) [65] | Fine-grained engine fault detection | Multimodal dataset (audio + vibration) | Not specified | Multimodal SED framework | No | Not specified |

| Terwilliger and Siegel (2022) [66] | Acoustic vehicle characterization | Synthesized dataset (40+ hours) | Not specified | Cascading CNN architecture | No | 93.6 (validation), 86.8 (test) |

| Salamon et al. (2017) [67] | Urban sound classification | UrbanSound8K | Not specified | CNN with data augmentation | No | 89.5 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Badawy, M.; Rashed, A.; Bamaqa, A.; Sayed, H.A.; Elagamy, R.; Almaliki, M.; Farrag, T.A.; Elhosseini, M.A. From Sensors to Insights: Interpretable Audio-Based Machine Learning for Real-Time Vehicle Fault and Emergency Sound Classification. Machines 2025, 13, 888. https://doi.org/10.3390/machines13100888
