Abstract

Thin-walled parts are highly flexible, which makes them susceptible to chatter during milling. Chatter can significantly degrade machining accuracy, surface quality, and productivity, so chatter detection plays a crucial role in thin-wall milling. This study proposes a chatter detection method based on multi-sensor fusion and a dual-stream convolutional neural network (CNN) that identifies the machining state in thin-wall milling. First, acceleration and cutting force signals are collected during milling and transformed into the frequency domain using the fast Fourier transform (FFT). Second, a dual-stream CNN extracts hidden features from the spectra of the multi-sensor signals, learning the features of each sensor in a separate stream so that they are not confused. Third, because the features of each sensor differ in importance for chatter detection, a joint attention mechanism based on residual connection adaptively assigns feature weight coefficients to obtain joint features. Finally, the joint features are fed into a machining state classifier to identify chatter. A series of milling tests is conducted to validate the feasibility and effectiveness of the proposed method. The results demonstrate that the method accurately distinguishes between stable and chatter states under various milling scenarios, achieving a detection accuracy of up to 98.68%.

1. Introduction

Thin-walled parts are key components of aerospace equipment, and their machining quality directly affects whether the equipment can operate safely. Because of their low stiffness, they are prone to chatter during machining, which can lead to poor surface quality, severe tool wear, and even damage to the machine spindle [1,2,3]. To avoid chatter, the usual approach is to establish a dynamic model of the thin-walled part milling process, draw a stability lobe diagram, and select chatter-free machining parameters [4,5]. However, chatter can still occur under parameters predicted to be stable, owing to the time-varying and position-dependent dynamics of thin-walled parts and to unavoidable model simplification errors [6,7,8]. Therefore, detecting and identifying chatter during milling is essential for improving the machined surface quality of thin-walled parts.

Chatter detection typically involves three primary stages: data acquisition, feature extraction and selection, and state recognition [9]. First, various sensors are installed on the machine tool to capture signals such as cutting force, displacement, acceleration, and sound during machining; in addition, sensors integrated into the machine provide current signals. Then, signal processing techniques such as the wavelet transform are employed to extract representative features that reflect the state of the cutting process. However, manually designed features may be insensitive to chatter or carry little useful information, so feature selection is needed to identify the sensitive ones. Once sensitive features are identified, an effective classification method is required to determine the machining condition. Commonly used classification methods fall into two categories: threshold methods and machine-learning algorithms. Threshold methods distinguish between machining states by setting appropriate boundaries on the extracted features. For example, Albertelli et al. [10] presented a chatter indicator based on cyclostationary theory, with thresholds of 54.5 and 57.2 for identifying chatter. Hao et al. [11] proposed the power entropy of fused acceleration and sound signals as chatter indicators and set their thresholds at 3, 2, 1.25, and 1.18 for chatter recognition. However, the thresholds depend on the processing parameters and on human knowledge, which makes such chatter detection less robust. In contrast, machine-learning methods feed the selected features into a model that is trained to classify the processing state, thereby improving the robustness of chatter monitoring. For example, Kuljanic et al. [12] employed wavelet decomposition to extract features from signals collected by various sensors and fed them into artificial neural networks (ANNs) for state recognition.
Their results demonstrated that multi-sensor fusion significantly enhances the accuracy and robustness of chatter recognition, improving accuracy by up to 5.91% compared with a single sensor. Pan et al. [13] extracted multiple features from signals collected by a displacement sensor, an accelerometer, and a sound sensor; used manifold learning methods to reduce the feature dimensionality; and finally applied a support vector machine (SVM) to detect chatter. In addition, Tran et al. [14] not only employed wavelet packet decomposition (WPD) to extract time–frequency features from sound and acceleration signals but also used recursive feature elimination (RFE) to select critical chatter features, which were then input into machine-learning models for state classification.

Although chatter detection methods based on thresholding and machine-learning algorithms have been applied in practice, they have two drawbacks: (i) manual feature extraction is time-consuming and laborious, especially for multi-sensor signals, which require extracting features from each sensor and domain expertise; and (ii) shallow architectures such as SVM perform only a single nonlinear feature transformation and struggle to capture complex nonlinear relationships. This limitation hinders the extraction of chatter-sensitive information and thereby reduces the accuracy of chatter detection.

Deep learning provides an effective way to address the limitations of the chatter detection methods above. Owing to its strong performance in feature learning and classification, deep learning has gradually become a mainstream approach in chatter detection, and its powerful feature mapping capability makes it well suited to analyzing nonlinear physical systems such as thin-wall milling. Recently, researchers have introduced several deep-learning-based chatter detection methods using convolutional neural networks (CNNs) [15,16,17,18] and long short-term memory (LSTM) networks [19]. Fu et al. [16] used wavelet-transformed signals as the input to a CNN for condition detection and achieved higher accuracy than an SVM-based method. Sener et al. [17] and Tran et al. [18] employed the continuous wavelet transform to preprocess vibration signals, which were then used to train and test CNNs; the results showed that milling chatter could be accurately identified. Zhu et al. [15] also proposed a CNN-based chatter detection method but used processed surface images as input. Vashisht et al. [19] introduced an online chatter detection method based on LSTM and the current signals of ball screw drive motors, which was trained on simulated data without requiring additional sensors. Chen et al. [20] developed a chatter detection method using a deep neural network and recurrence plots (RPs), with the RPs generated automatically via an adaptive particle swarm optimization method. Sun et al. [21] proposed a hybrid deep neural network for chatter detection that combines Inception, LSTM, and residual networks. Han et al. [22] fed manually extracted features from multiple sources into a temporal attention-based network for chatter recognition.
However, this approach still relies on manually extracted multi-source, multi-domain features: it treats the deep network merely as a conventional classifier and overlooks its ability to learn useful information directly from the original signals.

As the literature [15,16,17,18,19,20,21,22] shows, deep-learning algorithms have achieved good results in chatter detection. However, most current deep-learning chatter detection methods consider only a single sensor or a single type of sensor. Even when multiple sensors are used, manually extracted features are often fed directly into the deep network without effectively integrating the data from the different sensors. Data collected by multiple sensors contain diverse features, which may include redundant, irrelevant, or even contradictory information, and ignoring these differences can reduce the accuracy of chatter detection.

To solve the above problems, this study proposes a chatter detection method based on multi-sensor fusion and a dual-stream residual attention CNN. The contributions of this study are as follows:

- (1) A dual-stream CNN extracts features from the signals of each sensor separately, eliminating the need for manual feature extraction.

- (2) An improved attention mechanism is proposed to adaptively fuse the features from different sensors.

- (3) Because the signal spectrum changes when chatter occurs, the spectrum of each sensor signal is used as the input to the dual-stream CNN, which performs better than using time-domain signals.

The remainder of this study is organized as follows. Section 2 introduces the theoretical background of the modules in the proposed model. Section 3 presents the workflow and overall architecture of the proposed chatter detection method. Section 4 describes the experimental setup and dataset partition. Section 5 analyzes and discusses the results, and Section 6 summarizes the conclusions.

2. Theory Background

2.1. Convolutional Neural Network

A convolutional neural network (CNN) is a deep feedforward network mainly used to process multi-dimensional data such as time series and images [17]. Compared with traditional methods, a CNN automatically extracts intrinsic data representations, thus avoiding tedious and time-consuming manual feature extraction. A typical CNN consists of an input layer, several convolutional and pooling layers, and a fully connected layer.

In a convolutional layer, the input data are convolved with convolution kernels, and an activation function is applied to obtain the output feature maps. The convolution process can be expressed as

$$x_j^l = f\left(\sum_{i=1}^{M} x_i^{l-1} * w_{ij}^l + b_j^l\right) \tag{1}$$

where $*$ represents the convolution operation, $x_j^l$ is the j-th feature map of the l-th layer, $x_i^{l-1}$ is the i-th output feature map of the (l − 1)-th layer, and M represents the number of input feature maps; $w_{ij}^l$ is the weight (convolution kernel), $b_j^l$ is the bias, and $f(\cdot)$ represents the nonlinear activation function.
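Equation (1) can be made concrete with a small pure-Python sketch of one 1D convolutional layer. It assumes stride 1, no padding, and ReLU as $f(\cdot)$; the layer settings actually used are those in Table 1, and the function name `conv1d_layer` is illustrative only. Like most deep-learning libraries, it computes cross-correlation (the kernel is not flipped).

```python
from typing import Callable, List

Vector = List[float]


def relu(v: float) -> float:
    """Nonlinear activation f(.) assumed in this sketch."""
    return max(0.0, v)


def conv1d_layer(inputs: List[Vector], kernels: List[List[Vector]],
                 biases: Vector, act: Callable[[float], float] = relu) -> List[Vector]:
    """Sketch of one 1D convolutional layer following Equation (1).

    inputs[i]     -> input feature map x_i^{l-1} (all maps have equal length)
    kernels[j][i] -> kernel w_ij^l linking input map i to output map j
    biases[j]     -> bias b_j^l
    Stride 1, no padding; cross-correlation as in deep-learning libraries.
    """
    length = len(inputs[0])
    k = len(kernels[0][0])
    outputs: List[Vector] = []
    for j, bias in enumerate(biases):
        out_map: Vector = []
        for t in range(length - k + 1):
            s = bias
            for i, x in enumerate(inputs):  # sum over the M input maps
                s += sum(x[t + u] * kernels[j][i][u] for u in range(k))
            out_map.append(act(s))
        outputs.append(out_map)
    return outputs


# Two input maps, one output map, kernel size 2
print(conv1d_layer([[1.0, 2.0, 3.0, 4.0], [0.0, 1.0, 0.0, 1.0]],
                   [[[-1.0, 1.0], [1.0, 1.0]]], [0.5]))  # [[2.5, 2.5, 2.5]]
```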

The pooling layer follows the convolutional layer and is mainly used to extract the dominant features and reduce the feature dimension. Maximum pooling and average pooling are two common pooling operations; maximum pooling is defined as

$$p_i^l(j) = \max_{(j-1)W < t \le jW} x_i^l(t) \tag{2}$$

where $p_i^l(j)$ is the j-th output of the l-th pooling layer for the i-th feature map, $x_i^l(t)$ is the t-th element of that feature map, and W is the size of the pooling window.
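A minimal sketch of Equation (2), together with average pooling for comparison. It assumes non-overlapping windows (stride equal to W) and drops trailing samples that do not fill a whole window; the pooling stride used in the paper is given in Table 1, not here.

```python
from typing import List


def max_pool1d(x: List[float], window: int) -> List[float]:
    """Maximum pooling (Equation (2)) with stride equal to the window size W.
    Trailing samples that do not fill a whole window are dropped."""
    return [max(x[j * window:(j + 1) * window]) for j in range(len(x) // window)]


def avg_pool1d(x: List[float], window: int) -> List[float]:
    """Average pooling over the same non-overlapping windows."""
    return [sum(x[j * window:(j + 1) * window]) / window for j in range(len(x) // window)]


print(max_pool1d([1.0, 3.0, 2.0, 5.0, 4.0, 0.0, 7.0], 2))  # [3.0, 5.0, 4.0]
print(avg_pool1d([1.0, 3.0, 2.0, 5.0], 2))                 # [2.0, 3.5]
```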

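The fully connected layer takes the multi-dimensional feature maps obtained from the convolution and pooling operations, flattened into a one-dimensional vector, and maps them to its output neurons:

$$z_j^{l+1} = \sum_{i} w_{ij}^{l} a_i^{l} + b_j^{l} \tag{3}$$

where $a_i^l$ is the i-th element of the flattened vector of the l-th layer, $w_{ij}^l$ and $b_j^l$ are the corresponding weight and bias, and $z_j^{l+1}$ is the output value of the j-th neuron in the (l + 1)-th layer.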

2.2. Batch Normalization Algorithm

Batch normalization (BN) is a standardization operation that reduces internal covariate shift, improves training efficiency, and improves generalization [23]. Specifically, the BN layer standardizes the input data of each mini-batch so that the input distribution of each layer remains more stable, which accelerates the convergence of the network. The BN layer is generally placed after the convolutional layer, and its calculation is given in Equation (4):

$$\mu_B = \frac{1}{m}\sum_{i=1}^{m} x_i, \quad \sigma_B^2 = \frac{1}{m}\sum_{i=1}^{m}\left(x_i - \mu_B\right)^2, \quad \hat{x}_i = \frac{x_i - \mu_B}{\sqrt{\sigma_B^2 + \epsilon}}, \quad y_i = \gamma \hat{x}_i + \beta \tag{4}$$

where $x_i$ is the i-th input in the mini-batch, $\epsilon$ is a small constant for numerical stability, $\gamma$ and $\beta$ are the learnable scale and shift parameters of the BN layer, $y_i$ denotes the output of the BN layer, and m represents the batch size.
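The following sketch applies Equation (4) to a single feature across one mini-batch in training mode. The value `eps = 1e-5` is an assumed default. At inference time, frameworks typically replace the mini-batch statistics with running averages, which this sketch omits.

```python
import math
from typing import List


def batch_norm(batch: List[float], gamma: float, beta: float,
               eps: float = 1e-5) -> List[float]:
    """Training-mode batch normalization of one feature over a mini-batch (Equation (4))."""
    m = len(batch)                               # batch size
    mu = sum(batch) / m                          # mini-batch mean
    var = sum((x - mu) ** 2 for x in batch) / m  # mini-batch (biased) variance
    return [gamma * (x - mu) / math.sqrt(var + eps) + beta for x in batch]


# With gamma = 1 and beta = 0 the output has zero mean and (almost) unit variance.
print(batch_norm([1.0, 2.0, 3.0, 4.0], gamma=1.0, beta=0.0))
```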

3. The Proposed Method

In this study, a multi-sensor fusion method for chatter detection in the thin-wall milling process is proposed. The method is mainly composed of four parts: preprocessing of multi-sensor signals, feature extraction using a dual-stream CNN with processed multi-sensor signals, fusion of sensor features through a joint attention mechanism based on residual connection, and prediction of machining states.

3.1. Multi-Sensor Signal Preprocessing

Most existing chatter detection methods rely on a single sensor, but a single signal contains limited information and may not detect chatter reliably. To enhance the accuracy, reliability, and robustness of chatter detection, multi-sensor information fusion has become increasingly important. Moreover, time-domain signals often contain interference such as noise, which makes it difficult to extract critical chatter-related features directly. In contrast, frequency-domain analysis better depicts the cutting state during machining and reveals feature information hidden in the time-domain signal. To obtain multi-sensor signals, accelerometers and dynamometers are employed to collect acceleration and cutting force signals in the x, y, and z directions during thin-wall milling. The fast Fourier transform (FFT), an efficient algorithm for computing the discrete Fourier transform (DFT), is then applied to obtain frequency-domain information. The DFT is expressed as

$$X(k) = \sum_{n=0}^{N-1} x(n)\, e^{-\mathrm{j} 2\pi k n / N}, \quad k = 0, 1, \ldots, N-1 \tag{5}$$

where $x(n)$ and $X(k)$ are the time-domain samples of the original signal and its Fourier coefficients (whose magnitudes give the Fourier amplitude), respectively, and N is the number of samples.
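To make the relationship between Equation (5) and the FFT concrete, the sketch below evaluates the DFT directly and with a recursive radix-2 Cooley–Tukey FFT, which gives the same coefficients in O(N log N) operations. The radix-2 version assumes N is a power of two, which holds for the 2048-sample windows used later. In practice a library FFT would be used; these functions are illustrative only.

```python
import cmath
from typing import List


def dft(x: List[complex]) -> List[complex]:
    """Direct O(N^2) evaluation of Equation (5)."""
    n_pts = len(x)
    return [sum(x[n] * cmath.exp(-2j * cmath.pi * k * n / n_pts) for n in range(n_pts))
            for k in range(n_pts)]


def fft(x: List[complex]) -> List[complex]:
    """Recursive radix-2 Cooley-Tukey FFT; len(x) must be a power of two."""
    n_pts = len(x)
    if n_pts == 1:
        return [complex(x[0])]
    even = fft(x[0::2])  # DFT of even-indexed samples
    odd = fft(x[1::2])   # DFT of odd-indexed samples
    out = [0j] * n_pts
    for k in range(n_pts // 2):
        tw = cmath.exp(-2j * cmath.pi * k / n_pts) * odd[k]  # twiddle factor
        out[k] = even[k] + tw
        out[k + n_pts // 2] = even[k] - tw
    return out


print(fft([1.0, 2.0, 3.0, 4.0]))  # matches dft([1.0, 2.0, 3.0, 4.0]) up to rounding

# Bin spacing for a 2048-point window sampled at 20 kHz (Section 4.1):
print(20_000 / 2048)  # 9.765625 Hz per frequency bin
```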

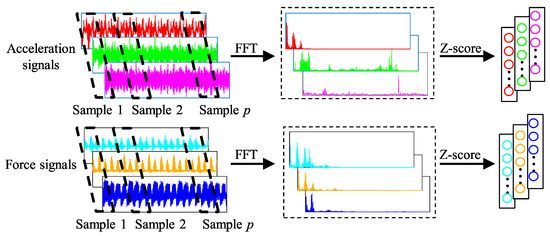

As shown in Figure 1, the acquired one-dimensional time series of length m × 1 from each sensor channel is first divided into p segments by sliding-window segmentation, and each segment is transformed by FFT into a one-dimensional frequency-domain series of length l × 1. Second, to eliminate the influence of differing scales between parameters and to improve the efficiency of model training, the data are standardized using the Z-score method, as shown in Equation (6):

$$z = \frac{x - \mu}{\sigma} \tag{6}$$

where z denotes the normalized data, x represents the input feature vector, and μ and σ are its mean and standard deviation, respectively.

Figure 1.

Multi-sensor signal preprocessing.

Finally, the frequency-domain sequences of the three channels (x, y, z) of the acceleration and cutting force signals are combined into a three-channel acceleration feature input and a three-channel cutting force feature input, each consisting of three sequences of length l, which serve as the inputs to the deep-learning network.
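The preprocessing chain of Figure 1 (sliding window, FFT, Z-score, channel stacking) can be sketched as below. Several details are assumptions because the text does not state them: the window step, the use of the single-sided amplitude spectrum (so l = N/2), and standardizing each spectrum with its own mean and standard deviation. The spectrum is computed with a naive DFT for brevity, and all function names are hypothetical.

```python
import cmath
import math
from typing import List


def sliding_windows(signal: List[float], window: int, step: int) -> List[List[float]]:
    """Split a 1D signal into segments of `window` samples, advancing by `step` (assumed)."""
    return [signal[s:s + window] for s in range(0, len(signal) - window + 1, step)]


def amplitude_spectrum(segment: List[float]) -> List[float]:
    """Single-sided DFT magnitude, bins 0 .. N/2 - 1 (naive O(N^2) for clarity)."""
    n_pts = len(segment)
    return [abs(sum(segment[n] * cmath.exp(-2j * cmath.pi * k * n / n_pts)
                    for n in range(n_pts)))
            for k in range(n_pts // 2)]


def z_score(v: List[float], eps: float = 1e-12) -> List[float]:
    """Equation (6); eps guards against a zero standard deviation."""
    mu = sum(v) / len(v)
    sigma = math.sqrt(sum((x - mu) ** 2 for x in v) / len(v))
    return [(x - mu) / (sigma + eps) for x in v]


def preprocess(channels_xyz: List[List[float]], window: int,
               step: int) -> List[List[List[float]]]:
    """Return one 3-channel standardized spectrum sample per window position."""
    per_channel = [[z_score(amplitude_spectrum(seg))
                    for seg in sliding_windows(ch, window, step)]
                   for ch in channels_xyz]
    # Regroup so that each sample holds the x, y and z spectra of the same time window.
    return [list(sample) for sample in zip(*per_channel)]


# Toy signals (the experiments use 2048-sample windows)
sig_x = [math.sin(2 * math.pi * n / 8) for n in range(16)]
sig_y = [float(n % 3) for n in range(16)]
sig_z = [math.cos(2 * math.pi * 2 * n / 8) for n in range(16)]
samples = preprocess([sig_x, sig_y, sig_z], window=8, step=4)
print(len(samples), len(samples[0]), len(samples[0][0]))  # 3 3 4
```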

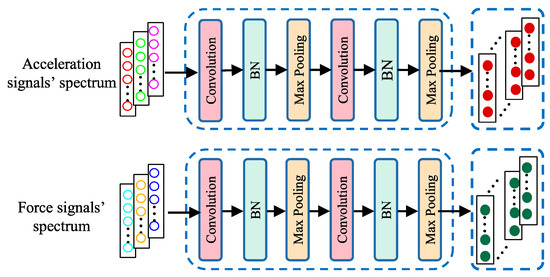

3.2. Multi-Sensor Signal Feature Extraction

The signals from multiple sensors contain more information than those from a single sensor, so fusing features from multiple sensors can improve the performance of the model. However, the features of different sensors may be correlated or even contradictory. To avoid the resulting negative impact on chatter detection accuracy, a dual-stream structure based on a 1D CNN is designed, as shown in Figure 2. This structure extracts features from each sensor's signals separately, so that the features of different sensors are not confused. Table 1 lists the basic parameters of the dual-stream CNN.

Figure 2.

Dual-stream CNN network.

Table 1.

Parameters of each layer in the dual-stream CNN.

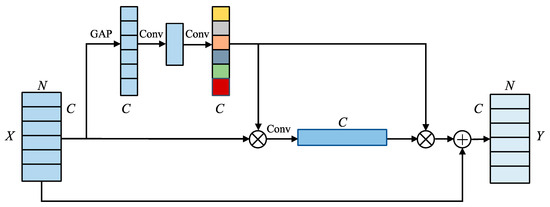

3.3. Adaptive Fusion of Multi-Sensor Signal Characteristics

In machining state detection, the data collected by multiple sensors consist of different channels. To improve prediction accuracy, it is necessary to consider both the weights of the feature maps extracted from different signals and the importance of spatial positions within each feature map. Therefore, this study proposes a joint attention mechanism with residual connection that automatically learns the weights of different features, thereby enhancing useful features and suppressing useless ones. As shown in Figure 3, the input feature map is $X \in \mathbb{R}^{C \times N}$, where C is the number of channels and N is the length of each channel. The channel attention mechanism captures the information between channels. Specifically, global average pooling (GAP) first compresses X into a vector $z \in \mathbb{R}^{C}$, whose i-th element is defined as

$$z_i = \frac{1}{N}\sum_{n=1}^{N} x_i(n) \tag{7}$$

where $x_i(n)$ is the n-th element of the i-th channel of X.

Figure 3.

The joint attention mechanism with residual connection.

Then, two convolutional layers are used to obtain the channel weights:

$$s = \sigma\left(W_2\, \delta\left(W_1 z + b_1\right) + b_2\right) \tag{8}$$

where $W_1$ and $W_2$ denote the weights of the two convolutional layers, $b_1$ and $b_2$ denote the corresponding biases, and $\delta(\cdot)$ and $\sigma(\cdot)$ denote the ReLU and sigmoid functions, respectively. The channel weights $s \in \mathbb{R}^{C}$ indicate the importance of the different directions of the different sensors; they are multiplied element by element with the corresponding channels to obtain the channel features:

$$X_c = s \otimes X \tag{9}$$

where $\otimes$ denotes element-by-element multiplication, with each weight $s_i$ scaling every element of the i-th channel of X.
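The channel attention branch (Equations (7) to (9)) is sketched below in pure Python. A 1 × 1 convolution applied to the pooled length-1 vector z reduces to a matrix-vector product, which is how it is written here. The hidden width of the first layer (a reduction ratio) is not given in the text, so the weight shapes are assumptions, and `channel_attention` is a hypothetical name.

```python
import math
from typing import List

Matrix = List[List[float]]  # C rows (channels) x N columns (positions)


def sigmoid(v: float) -> float:
    """Numerically stable logistic function."""
    if v >= 0:
        return 1.0 / (1.0 + math.exp(-v))
    e = math.exp(v)
    return e / (1.0 + e)


def channel_attention(x: Matrix, w1: Matrix, b1: List[float],
                      w2: Matrix, b2: List[float]) -> Matrix:
    """Equations (7)-(9): GAP -> layer + ReLU -> layer + sigmoid -> rescale channels.

    Assumed shapes: w1 is (H, C) and w2 is (C, H) for some hidden width H.
    """
    z = [sum(row) / len(row) for row in x]                       # Eq. (7): GAP
    hidden = [max(0.0, sum(w * zi for w, zi in zip(wr, z)) + b)  # delta = ReLU
              for wr, b in zip(w1, b1)]
    s = [sigmoid(sum(w * h for w, h in zip(wr, hidden)) + b)     # Eq. (8)
         for wr, b in zip(w2, b2)]
    return [[si * v for v in row] for si, row in zip(s, x)]       # Eq. (9)


x = [[1.0, 1.0], [2.0, 2.0]]
# Large opposite biases drive the weights towards 1 and 0: channel 2 is suppressed.
print(channel_attention(x, [[0.0, 0.0]], [0.0], [[0.0], [0.0]], [50.0, -50.0]))
```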

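In addition, to capture the spatial position information within each feature map, this study employs a spatial attention mechanism. Specifically, a 3 × 1 convolution is applied to the channel features $X_c$ to further extract local features, and the sigmoid function determines the weights of the spatial positions:

$$M_s = \sigma\left(f^{3\times1}(X_c)\right) \tag{10}$$

where $f^{3\times1}(\cdot)$ denotes the 3 × 1 convolution and $M_s \in \mathbb{R}^{1 \times N}$ contains one weight per position.

The joint features are then obtained by weighting the channel features with the spatial weights:

$$X_J = M_s \otimes X_c \tag{11}$$

Finally, the relationship between the joint features and the original features is established through a residual connection:

$$Y = X + X_J \tag{12}$$

where Y is the output of the joint attention module.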

The residual connection fuses the joint features with the original features to obtain a richer feature representation, and it also helps mitigate vanishing and exploding gradients, which facilitates the training and optimization of the model.
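The spatial branch and the residual connection can be sketched as follows. This is an assumed form and not necessarily the authors' exact design: a 3 × 1 convolution with zero padding spans all C channels of the channel features and yields one sigmoid weight per position. These weights rescale the channel features to give the joint features, and the original input is added back. The function name and kernel layout (C rows of 3 taps) are hypothetical.

```python
import math
from typing import List

Matrix = List[List[float]]  # C rows (channels) x N columns (positions)


def sigmoid(v: float) -> float:
    if v >= 0:
        return 1.0 / (1.0 + math.exp(-v))
    e = math.exp(v)
    return e / (1.0 + e)


def spatial_attention_residual(x: Matrix, x_c: Matrix,
                               kernel: Matrix, bias: float) -> Matrix:
    """ASSUMED form of the spatial branch plus residual connection.

    x      : original input X (C x N)
    x_c    : channel features X_c from the channel-attention branch (C x N)
    kernel : C rows of 3 taps (left neighbour, centre, right neighbour)
    """
    c, n = len(x_c), len(x_c[0])
    padded = [[0.0] + row + [0.0] for row in x_c]  # zero padding keeps length N
    weights = [sigmoid(bias + sum(kernel[i][u] * padded[i][t + u]
                                  for i in range(c) for u in range(3)))
               for t in range(n)]                   # one spatial weight per position
    x_j = [[weights[t] * row[t] for t in range(n)] for row in x_c]  # joint features
    return [[a + b for a, b in zip(r0, r1)] for r0, r1 in zip(x, x_j)]  # Y = X + X_J


x = [[1.0, 2.0, 3.0]]
print(spatial_attention_residual(x, x, [[0.0, 0.0, 0.0]], 0.0))  # [[1.5, 3.0, 4.5]]
```

Because the input is added back unchanged, the module falls back to passing X through when all attention weights approach zero, which is one practical motivation for the residual path.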

3.4. Machining Condition Prediction

After the joint attention mechanism with residual connection, the resulting feature map is still multi-dimensional and must be converted into a one-dimensional feature vector for subsequent processing. GAP is adopted for this purpose: it has no learnable parameters, which improves the generalization ability of the model, and it retains one value per channel. This feature vector is then input into a classifier containing only one fully connected layer to predict the machining state, and the Softmax function converts the classifier outputs into the probabilities of the machining states.
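The classification head described above (GAP, one fully connected layer, Softmax) is short enough to sketch directly. The weights here are arbitrary placeholders rather than trained values, and the function names are illustrative. The Softmax subtracts the largest logit before exponentiating, a standard trick that avoids overflow without changing the result.

```python
import math
from typing import List


def gap(feature_maps: List[List[float]]) -> List[float]:
    """Global average pooling: one value per channel, no learnable parameters."""
    return [sum(m) / len(m) for m in feature_maps]


def softmax(logits: List[float]) -> List[float]:
    top = max(logits)  # shift for numerical stability
    exps = [math.exp(v - top) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def classify(feature_maps: List[List[float]], weights: List[List[float]],
             biases: List[float]) -> List[float]:
    """GAP -> single fully connected layer -> Softmax over machining states."""
    v = gap(feature_maps)
    logits = [sum(w * x for w, x in zip(row, v)) + b for row, b in zip(weights, biases)]
    return softmax(logits)


# Two channels, two states (e.g. stable, chatter); placeholder weights
print(classify([[1.0, 3.0], [2.0, 2.0]], [[1.0, 0.0], [0.0, 1.0]], [math.log(3.0), 0.0]))
```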

When the dataset is divided, the numbers of samples in the different cutting states may be imbalanced. If the conventional cross-entropy function is used as the loss function, training is dominated by the majority class and the resulting model parameters may not be optimal. To address this problem, the focal loss function is used to optimize the model parameters. The focal loss function is defined as [24]

$$FL = -\alpha\, y \left(1 - \hat{y}\right)^{\gamma} \log \hat{y} - \left(1 - \alpha\right)\left(1 - y\right) \hat{y}^{\gamma} \log\left(1 - \hat{y}\right) \tag{13}$$

where $y \in \{0, 1\}$ is the true machining-state label of the sample, $\hat{y}$ is the probability of $y = 1$ predicted by the proposed model, $\alpha$ is the imbalance factor, and $\gamma$ is the focusing parameter of [24], which down-weights well-classified samples.
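A minimal sketch of the binary focal loss of Equation (13), averaged over a batch. The defaults `alpha = 0.25` and `gamma = 2.0` are the values recommended in the original focal loss paper and are only assumptions here; the values used in this study, and which machining state is treated as the positive class, are not stated. The function name `focal_loss` is illustrative.

```python
import math
from typing import List


def focal_loss(y_true: List[int], p_pos: List[float], alpha: float = 0.25,
               gamma: float = 2.0, eps: float = 1e-12) -> float:
    """Mean binary focal loss in the form of Equation (13).

    y_true[i] is 1 for the positive class and 0 otherwise; p_pos[i] is the
    predicted probability of the positive class. With gamma = 0 and
    alpha = 0.5 the result is half the ordinary binary cross-entropy.
    """
    total = 0.0
    for y, p in zip(y_true, p_pos):
        p_t = p if y == 1 else 1.0 - p              # probability of the true class
        alpha_t = alpha if y == 1 else 1.0 - alpha  # class-balancing weight
        total += -alpha_t * (1.0 - p_t) ** gamma * math.log(max(p_t, eps))
    return total / len(y_true)


# A confidently correct sample contributes far less when gamma > 0.
print(focal_loss([1], [0.9], gamma=2.0), focal_loss([1], [0.9], gamma=0.0))
```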

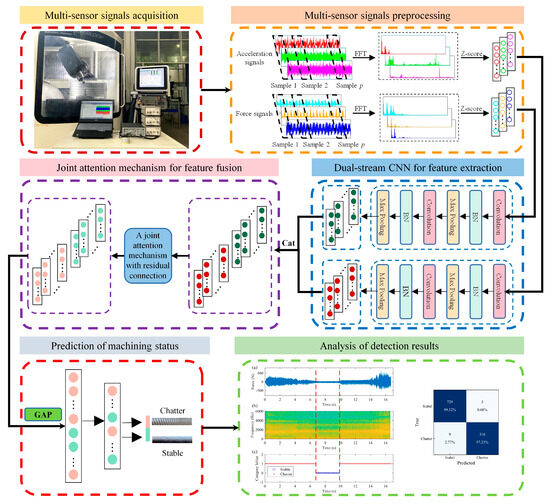

3.5. General Steps of the Proposed Method

In this study, a chatter detection method for the thin-wall milling process based on multi-sensor fusion and a dual-stream residual attention CNN is proposed. The overall framework of the method is shown in Figure 4, and its general steps are summarized as follows:

Figure 4.

The overall framework of the proposed method.

Step 1: Use an accelerometer and a dynamometer to collect acceleration and cutting force signals in three different directions (x, y, z) during the cutting process.

Step 2: Convert the acquired acceleration and cutting force signals into frequency-domain data using FFT and standardize the data using the Z-score method.

Step 3: Fuse the normalized spectrum data separately for acceleration and cutting force data in three directions (x, y, z) to form two sets of three-channel acceleration and cutting force spectrum data, and divide the dataset into training and test samples.

Step 4: Construct a deep neural network model based on the dual-stream CNN and the joint attention mechanism with residual connection, and use the focal loss function during the training process.

Step 5: Determine the machining condition of the test samples and evaluate the effectiveness of the proposed model using various evaluation metrics.

4. Experimental Setup and Dataset Partition

4.1. Experimental Setup

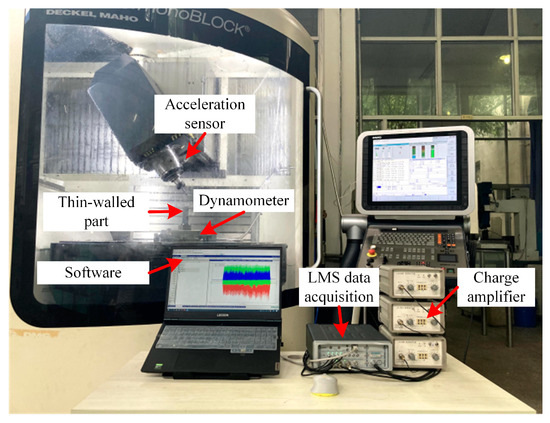

To validate the effectiveness of the proposed chatter detection method, machining experiments are conducted on a DMU 60 Monoblock five-axis CNC milling machine, as depicted in Figure 5. The workpiece is a thin-walled TC4 titanium alloy part measuring 100 mm × 100 mm × 6 mm. The tool is a 10 mm diameter variable-pitch ball-end cutter with an axial cutting edge length of 18 mm, a helix angle of 30°, and alternating pitch angles of 85°-95°-85°-95°. To enrich the information on the cutting state, multiple sensors are used for chatter detection: vibrations generated during milling are measured by a triaxial accelerometer mounted on the spindle head, while cutting forces are measured by a YDCB-III05 dynamometer mounted on the turntable. An LMS SCADAS data acquisition system acquires the acceleration and cutting force signals simultaneously at a sampling frequency of 20 kHz.

Figure 5.

Experimental setup and scene.

4.2. Dataset Partition

The stability of the five-axis milling process is mainly influenced by the depth of cut, spindle speed, and tool orientation vector, so these three factors are considered when designing the cutting parameters. In total, 60 sets of cutting experiments are carried out, covering combinations of different depths of cut, spindle speeds, and lead angles. In each experiment, the feed per tooth and the tilt angle are kept constant at 0.05 mm/z and 60°, respectively; the other cutting parameters are detailed in Table 2. Each group of signals is segmented by a sliding window of 2048 samples, yielding a total of 10,590 samples. All samples are divided into a training set (90%) and a test set (10%), which are used to train and test the proposed chatter detection algorithm.
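The bookkeeping behind the dataset partition can be sketched as follows. The window step is not reported, so `num_windows` is shown with a hypothetical step, and the shuffled random 90/10 split with a fixed seed is likewise an assumption; the text does not say how samples were assigned. Because neighbouring windows from the same experiment can be highly similar, splitting by experiment instead of by window is a stricter alternative when information leakage between training and test sets is a concern.

```python
import random
from typing import List, Sequence, Tuple, TypeVar

T = TypeVar("T")


def num_windows(length: int, window: int, step: int) -> int:
    """Number of complete sliding windows in a signal of `length` samples."""
    return 0 if length < window else (length - window) // step + 1


def split_train_test(samples: Sequence[T], train_fraction: float = 0.9,
                     seed: int = 0) -> Tuple[List[T], List[T]]:
    """Shuffle indices with a fixed seed and split them into training and test sets."""
    idx = list(range(len(samples)))
    random.Random(seed).shuffle(idx)
    n_train = int(round(train_fraction * len(samples)))
    return [samples[i] for i in idx[:n_train]], [samples[i] for i in idx[n_train:]]


train, test = split_train_test(list(range(10_590)))
print(len(train), len(test))  # 9531 1059
print(num_windows(10_000, 2048, 1024))  # hypothetical signal length and step
```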

Table 2.

Cutting parameters for machining experiments.

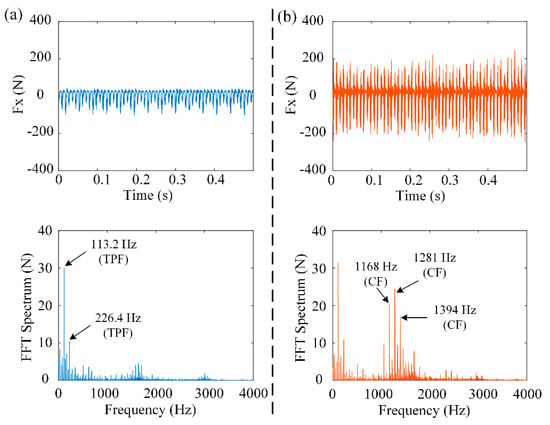

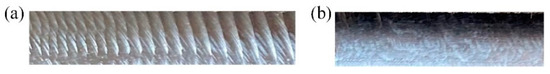

Chatter recognition using deep learning is framed as a classification problem and is typically tackled with supervised learning. The quality of the dataset therefore strongly influences the performance of the chatter detection method. In this study, the machining states are classified as either stable or chatter, and the data are labeled by analyzing the time- and frequency-domain characteristics of the cutting force signal in detail. As illustrated in Figure 6, chatter causes a significant increase in signal amplitude in the time domain and a shift of the dominant frequency band in the frequency domain. Conversely, in a stable state, the spectrum is dominated by the tooth passing frequency (TPF) and its multiples. In addition, the machined surface of the thin-walled workpiece is inspected after each milling pass. Figure 7 shows that the surface is smooth after stable milling, whereas chatter markedly degrades the surface finish and leaves visible chatter marks.
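The TPF criterion used for labeling can be illustrated with a simple check. The TPF equals the spindle speed in rev/s times the number of teeth; four teeth are assumed here because four pitch angles (85°-95°-85°-95°) are listed for the tool, giving 80 Hz at 1200 rpm and 120 Hz at 1800 rpm. The helper below, including its name and tolerance, is a hypothetical heuristic and not the authors' labeling procedure, which also relied on surface inspection.

```python
from typing import List


def tooth_passing_frequency(spindle_rpm: float, n_teeth: int) -> float:
    """TPF in Hz: spindle revolutions per second times the number of teeth."""
    return spindle_rpm / 60.0 * n_teeth


def dominant_peak_is_harmonic(freqs: List[float], amps: List[float],
                              tpf: float, tol_hz: float) -> bool:
    """Hypothetical heuristic: True if the largest non-DC spectral peak lies
    within tol_hz of a nonzero multiple of the TPF (a stable-cut signature)."""
    k = max(range(1, len(amps)), key=lambda i: amps[i])  # skip the DC bin
    f = freqs[k]
    nearest = round(f / tpf) * tpf
    return nearest > 0 and abs(f - nearest) <= tol_hz


print(tooth_passing_frequency(1200, 4), tooth_passing_frequency(1800, 4))  # 80.0 120.0

freqs = [10.0 * i for i in range(50)]
amps = [0.0] * 50
amps[16] = 5.0  # peak at 160 Hz = 2 x TPF at 1200 rpm
print(dominant_peak_is_harmonic(freqs, amps, 80.0, tol_hz=5.0))  # True
```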

Figure 6.

Time and frequency domains of cutting force signals under different machining states: (a) stable and (b) chatter. The symbol ‘CF’ denotes the chatter frequency.

Figure 7.

Surface topography of the workpiece under different machining states: (a) chatter and (b) stable.

5. Results and Discussion

5.1. Evaluation Indicators

Chatter detection is inherently a binary classification problem. Therefore, the performance of the proposed method can be evaluated with indicators commonly used in classification problems, such as accuracy, precision, recall, and F1 score. For a classification problem with n categories and N samples, each category has four possible outcomes depending on the relationship between the predicted and true labels: true positive (TP), false positive (FP), true negative (TN), and false negative (FN). The metrics are defined as follows:

$$Acc = \frac{TP + TN}{TP + TN + FP + FN} \tag{14}$$

$$P = \frac{TP}{TP + FP} \tag{15}$$

$$R = \frac{TP}{TP + FN} \tag{16}$$

$$F1 = \frac{2 \times P \times R}{P + R} \tag{17}$$

where Acc, P, R, and F1 represent accuracy, precision, recall, and F1 score, respectively. Because the F1 score accounts for both precision and recall, this study uses accuracy and F1 score to assess the proposed model, with accuracy as the primary metric.
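Equations (14) to (17) translate directly into code. The counts in the example are made up for illustration and are not taken from the experiments. Returning 0.0 when a denominator is zero is a common convention and an assumption of this sketch.

```python
from typing import Dict


def binary_metrics(tp: int, fp: int, tn: int, fn: int) -> Dict[str, float]:
    """Accuracy, precision, recall and F1 score (Equations (14)-(17))."""
    acc = (tp + tn) / (tp + fp + tn + fn)
    p = tp / (tp + fp) if tp + fp else 0.0
    r = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * p * r / (p + r) if p + r else 0.0
    return {"accuracy": acc, "precision": p, "recall": r, "f1": f1}


# Hypothetical counts: accuracy 0.93, precision 0.8, recall 8/13, F1 16/23
print(binary_metrics(tp=8, fp=2, tn=85, fn=5))
```

The example shows why F1 complements accuracy on imbalanced data: accuracy is 0.93, yet F1 is only about 0.70 because the minority class is recalled poorly.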

5.2. Classification Performance of the Proposed Method

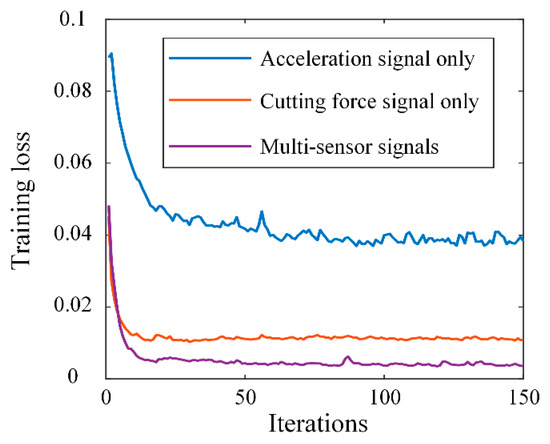

To verify the classification performance of the proposed chatter detection method, it is compared with a chatter detection method based on a single sensor and evaluated from the perspectives of training loss, confusion matrix, and feature visualization. It should be noted that the chatter recognition method based on a single sensor is also based on deep learning but only uses a single-stream CNN and does not consider the attention mechanism with residual connection.

Figure 8 illustrates the training loss per epoch for both the proposed multi-sensor fusion method and the single-sensor-based chatter detection method. Generally, the training loss rapidly decreases and then converges to a lower value as the number of epochs increases. Of the three methods evaluated, the proposed multi-sensor fusion method achieved the best performance, with a minimum training loss of 0.0036, whereas the training loss for the other methods exceeded 0.0110.

Figure 8.

Training loss curves for the chatter detection method based on a single sensor and the proposed method.

Table 3 compares the final test performance of the single-sensor-based chatter detection methods and the proposed multi-sensor fusion method after 150 iterations. The results indicate that the proposed multi-sensor fusion method effectively learns the nonlinear relationship between multi-sensor signals and machining states. As shown in Table 3, the accuracy of the multi-sensor fusion method reaches 98.68%, exceeding that of the methods using only the acceleration signal (93.77%) or only the cutting force signal (96.03%). Although the accuracy gain over the cutting force-based method is a modest 2.65 percentage points, the gain in F1 score is larger: 4.31% and 8.54% compared with the methods using only the cutting force signal and only the acceleration signal, respectively. These results underscore the value of multi-sensor fusion for chatter detection and the advantage of the proposed approach.

Table 3.

Test performance of the chatter detection methods compared in this study.

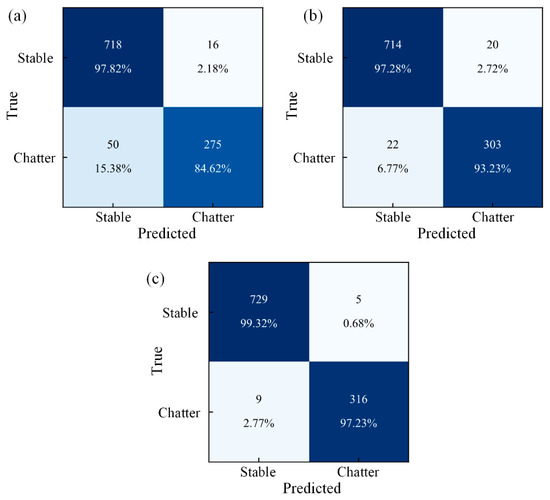

For further analysis, Figure 9 shows the confusion matrices of the test results for the above methods. Each confusion matrix arranges predicted classes along its rows and actual classes along its columns; diagonal cells count correctly predicted samples, while off-diagonal cells count classification errors. When only the acceleration signal is used, the error rate in recognizing the chatter state is the highest, reaching 15.38%. In contrast, with the proposed multi-sensor fusion method, the recognition error rates for the stable and chatter states are only 0.68% and 2.77%, respectively, which shows that the proposed method identifies the machining state well.
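Per-class error rates such as those quoted above can be read off a confusion matrix in the stated layout (rows = predicted, columns = actual). The sketch assumes each rate is normalized by the number of samples whose actual class is that class; the counts in the example are hypothetical.

```python
from typing import List


def per_class_error_rates(cm: List[List[int]]) -> List[float]:
    """cm[p][a] counts samples predicted as class p whose actual class is a
    (rows = predicted, columns = actual, as in Figure 9)."""
    n = len(cm)
    rates = []
    for a in range(n):
        col_total = sum(cm[p][a] for p in range(n))  # all samples of actual class a
        rates.append(1.0 - cm[a][a] / col_total if col_total else 0.0)
    return rates


# Hypothetical counts for classes (stable, chatter)
print(per_class_error_rates([[90, 3], [10, 97]]))  # approximately [0.10, 0.03]
```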

Figure 9.

Confusion matrix: (a) acceleration signal only, (b) cutting force signal only, and (c) proposed multi-sensor fusion method.

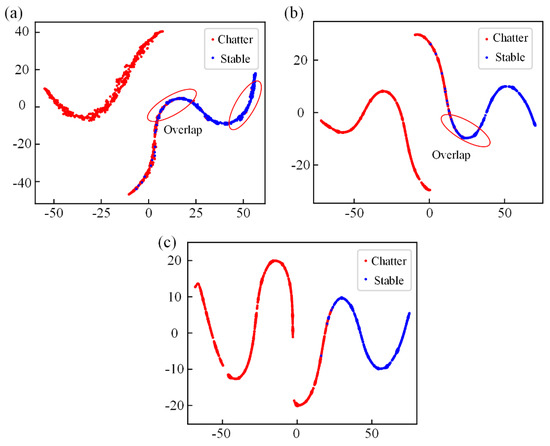

To visualize and analyze the features learned by the network, t-SNE is used to map the distribution of the features in the final fully connected layer of the network. t-SNE is commonly used to assess the effectiveness of fault diagnosis methods by projecting high-dimensional features into a two-dimensional space. The t-SNE results are presented in Figure 10, where each point represents one sample projected into the 2D space. As depicted in Figure 10, when only the acceleration signal or only the cutting force signal is used, the features in the final fully connected layer overlap to varying degrees. In contrast, the clusters produced by the proposed method are clearly separated, with minimal overlap. This suggests that the proposed method learns discriminative features and distinguishes the machining states well.

Figure 10.

t-SNE visualization of the features learned in the final fully connected layer: (a) acceleration signal only, (b) cutting force signal only, and (c) proposed multi-sensor fusion method.

5.3. Comparison with Other Methods

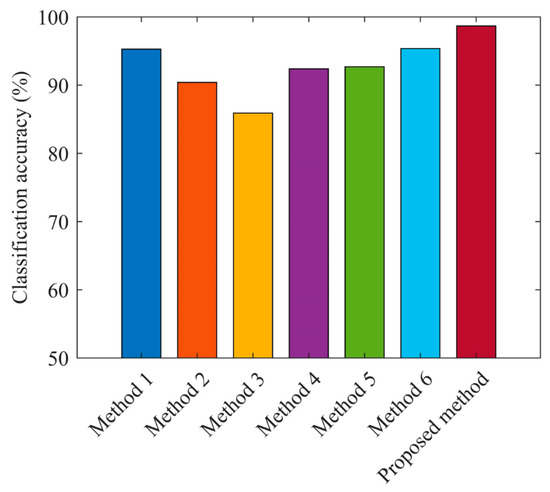

To further verify the performance of the proposed chatter detection method, this study compares it with a multi-sensor data fusion method without residual connection and with shallow intelligent chatter identification methods, namely the tree, naive Bayesian, SVM, and KNN models. The inputs to these shallow methods are 6 × 32 time-domain, frequency-domain, and time–frequency-domain features extracted from the original acceleration and cutting force signals; details of these features can be found in References [25,26]. In addition, the proposed method is compared with a chatter detection method that uses time-domain data as input. For brevity, these methods are named Method 1 (FFT + dual-stream CNN), Method 2 (Multi-domain features + Tree), Method 3 (Multi-domain features + naive Bayesian), Method 4 (Multi-domain features + SVM), Method 5 (Multi-domain features + KNN), and Method 6 (Time-domain data + dual-stream CNN + attention mechanism with residual connection).

As Figure 11 shows, the proposed method achieves higher classification accuracy than Method 1. Together with the results in Table 3, it can also be seen that the chatter identification method based on the cutting force signal alone is more accurate than Method 1. This indicates that the multi-sensor features contain redundancy and confirms the need to fuse features adaptively using the attention mechanism with residual connection. Compared with Method 6, the accuracy of the proposed method is 3.31% higher, which supports using the FFT of the signals as the input to the deep-learning model. Compared with the shallow intelligent chatter identification methods, the accuracy of the proposed method is higher by between 5.98% and 12.78%.

Figure 11.

Comparison of identification accuracy with other methods.

5.4. Generalization Analysis

Based on the analysis in Section 5.2 and Section 5.3, the proposed method significantly improves classification accuracy for machining state detection. Compared with single-sensor-based chatter detection methods, multi-sensor fusion enriches the available machining information. Furthermore, the attention mechanism with residual connection strengthens relevant features while suppressing irrelevant ones, thereby improving classification accuracy. Nevertheless, validation under other milling conditions is needed to assess how well the method generalizes in practical scenarios.

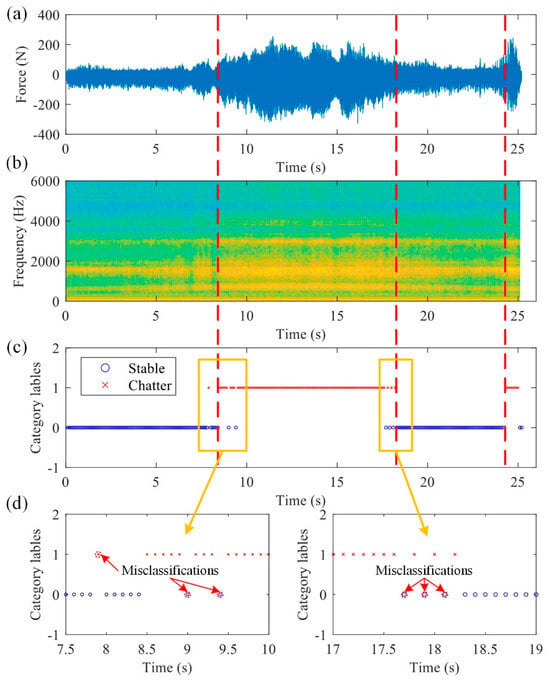

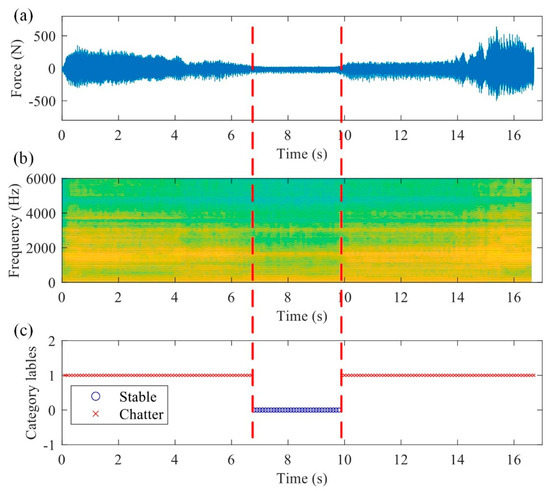

To assess the generalizability of the method, two additional milling experiments are conducted with the experimental setup shown in Figure 5 and the same TC4 thin-walled workpiece. In the first experiment, the spindle speed is 1200 rpm, the depth of cut is 0.3 mm, and the tool orientation angle is (10°, 60°); the chatter identification results are presented in Figure 12. Each sampled data segment has a length of 2048 and is transformed into the frequency domain by FFT before being input into the trained model. In the second experiment, the spindle speed is increased to 1800 rpm, the depth of cut is reduced to 0.2 mm, and the tool orientation angle is set to (5°, 60°); the corresponding results are illustrated in Figure 13. Figures 12 and 13 show that the proposed multi-sensor fusion method with a dual-stream residual attention CNN effectively detects the machining state during thin-wall milling. However, the first experiment exhibits some misclassifications during the chatter identification stage. These likely arise in the transitional phase between stable and chatter states, where the signal characteristics of the two states are not yet sufficiently distinct. Overall, the experimental results confirm that the proposed method accurately identifies chatter and is applicable to chatter detection in thin-wall milling under various milling conditions.

Figure 12.

Chatter detection results for the thin-walled part using the proposed method (spindle speed: 1200 rpm; depth of cut: 0.3 mm; lead angle: 10°). (a) y-direction force signal; (b) time–frequency spectrogram obtained via short-time Fourier transform; (c) machining state classification result; and (d) misclassifications.

Figure 13.

Chatter detection results for the thin-walled part using the proposed method (spindle speed: 1800 rpm; depth of cut: 0.3 mm; lead angle: 5°). (a) y-direction force signal; (b) time–frequency spectrogram obtained via short-time Fourier transform; and (c) machining state classification result.
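Panel (b) of Figures 12 and 13 is a short-time Fourier transform (STFT) spectrogram. For illustration, a minimal NumPy sketch of a magnitude spectrogram is given below. The Hann window, the window length of 256 samples, the hop of 64 samples, the 10,240 Hz sampling rate, and the synthetic signal in which a 1600 Hz component appears halfway through are all assumptions, not the settings used to produce the figures.

```python
import numpy as np


def stft_spectrogram(x: np.ndarray, fs: float, win_len: int = 256, hop: int = 64):
    """Magnitude spectrogram |STFT| with a Hann window.
    win_len and hop are illustrative choices, not the paper's settings."""
    window = np.hanning(win_len)
    n_frames = 1 + (len(x) - win_len) // hop
    frames = np.stack([x[i * hop : i * hop + win_len] * window for i in range(n_frames)])
    mag = np.abs(np.fft.rfft(frames, axis=1))            # (n_frames, win_len // 2 + 1)
    freqs = np.fft.rfftfreq(win_len, d=1.0 / fs)         # bin frequencies in Hz
    times = (np.arange(n_frames) * hop + win_len / 2) / fs  # frame centres in seconds
    return freqs, times, mag.T                           # rows: frequency, columns: time


# Synthetic example: a steady 400 Hz component, plus a 1600 Hz component from t = 1 s
fs = 10_240
t = np.arange(2 * fs) / fs
x = np.sin(2 * np.pi * 400 * t)
x += np.where(t >= 1.0, 0.8 * np.sin(2 * np.pi * 1600 * t), 0.0)
freqs, times, S = stft_spectrogram(x, fs)
chatter_bin = int(np.argmin(np.abs(freqs - 1600)))
```

In this synthetic case the 1600 Hz row of `S` is close to zero before t = 1 s and large afterwards, mimicking the onset of a new vibration component that a spectrogram makes visible over time.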

6. Conclusions

In this study, a multi-sensor fusion method based on a dual-stream residual attention CNN is proposed for chatter detection in thin-wall milling. The chatter classification performance and generalization performance of the method are evaluated and verified, and the following main conclusions are drawn:

(1) The proposed method uses a dual-stream CNN to extract features directly from the spectra of multi-sensor signals, without manual feature extraction. A joint attention mechanism with residual connection adaptively fuses the features from the different sensors, emphasizing informative features and suppressing irrelevant ones. Unlike traditional chatter detection methods, the proposed method does not rely on hand-crafted signal-processing indicators or expert experience, and it eliminates the need for threshold selection.

(2) The effectiveness and superiority of the proposed method are verified using cutting force and acceleration signals collected in machining experiments. The t-SNE visualization shows that the proposed method yields the most clearly separated clusters, with almost no overlap between stable and chatter samples. The proposed method achieves an accuracy of up to 98.68%, which is higher than that of chatter identification methods based on a single sensor and of the existing methods used for comparison.

(3) The proposed method accurately identifies the machining status under different milling conditions, indicating good generalization capability.
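Conclusion (1) refers to a joint attention mechanism with residual connection that fuses the features of the two streams. Its exact formulation is defined in the methods section of the paper; as a stand-in, the sketch below uses a generic squeeze-and-excitation-style channel attention over the concatenated stream features, written in NumPy. The function name `joint_attention_fusion`, the reduction ratio `r`, the feature shapes, and the random (untrained) weights are assumptions for illustration only.

```python
import numpy as np


def sigmoid(z: np.ndarray) -> np.ndarray:
    return 1.0 / (1.0 + np.exp(-z))


def joint_attention_fusion(feat_force, feat_accel, w1, w2):
    """Fuse two CNN streams with channel attention plus a residual connection.
    feat_*: (batch, channels, length) feature maps from each stream.
    w1: (C, C // r) and w2: (C // r, C) are excitation weights, learned in practice."""
    x = np.concatenate([feat_force, feat_accel], axis=1)  # (B, C, L), C = C1 + C2
    s = x.mean(axis=2)                                    # squeeze: global average per channel
    a = sigmoid(np.maximum(s @ w1, 0.0) @ w2)             # excitation: one weight in (0, 1) per channel
    y = x + x * a[:, :, None]                             # residual: attention rescales, never erases
    return y.mean(axis=2), a                              # joint feature vector and attention weights


# Usage with random stand-in features and weights
rng = np.random.default_rng(0)
B, C1, C2, L, r = 4, 16, 16, 32, 4
C = C1 + C2
f_force = rng.standard_normal((B, C1, L))
f_accel = rng.standard_normal((B, C2, L))
w1 = rng.standard_normal((C, C // r)) * 0.1
w2 = rng.standard_normal((C // r, C)) * 0.1
joint, weights = joint_attention_fusion(f_force, f_accel, w1, w2)
print(joint.shape, weights.shape)  # (4, 32) (4, 32)
```

Because of the residual term `x + x * a`, each channel is scaled by a factor between 1 and 2, so the attention can reweight the sensors' features but cannot discard either stream entirely; this is one common motivation for wrapping an attention block in a residual connection.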

Author Contributions

Methodology, investigation, software, and writing—original draft: D.Z. Data curation, validation, and writing—review and editing: D.L. Visualization and writing—review and editing: W.G. Investigation and formal analysis: H.W. Writing—review and editing, funding acquisition, and supervision: Y.S. All authors have read and agreed to the published version of the manuscript.

Funding

This research is supported by the National Natural Science Foundation of China (Grant No. U22A20202).

Institutional Review Board Statement

This work does not contain any studies with human participants or animals performed by any of the authors.

Data Availability Statement

Data and materials used in this research are available.

Conflicts of Interest

Authors Danian Zhan, Dawei Lu, Wenxiang Gao, and Haojie Wei were employed by the company AVIC Chengdu Aircraft Industrial (Group) Co., Ltd. The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

References

1. Munoa, J.; Beudaert, X.; Dombovari, Z.; Altintas, Y.; Budak, E.; Brecher, C.; Stepan, G. Chatter suppression techniques in metal cutting. CIRP Ann. 2016, 65, 785–808.

2. Zhu, L.; Liu, C. Recent progress of chatter prediction, detection and suppression in milling. Mech. Syst. Signal Process. 2020, 143, 106840.

3. Sun, Y.; Jia, J.; Xu, J.; Chen, M.; Niu, J. Path, feedrate and trajectory planning for free-form surface machining: A state-of-the-art review. Chin. J. Aeronaut. 2022, 35, 12–29.

4. Altintas, Y. Manufacturing Automation: Metal Cutting Mechanics, Machine Tool Vibrations, and CNC Design, 2nd ed.; Cambridge University Press: Cambridge, UK, 2012.

5. Olvera, D.; Urbikain, G.; Elías-Zuñiga, A.; López de Lacalle, L.N. Improving Stability Prediction in Peripheral Milling of Al7075T6. Appl. Sci. 2018, 8, 1316.

6. Tuysuz, O.; Altintas, Y. Frequency Domain Updating of Thin-Walled Workpiece Dynamics Using Reduced Order Substructuring Method in Machining. J. Manuf. Sci. Eng. 2017, 139, 071013.

7. Kiss, A.K.; Bachrathy, D.; Stepan, G. Effects of Varying Dynamics of Flexible Workpieces in Milling Operations. J. Manuf. Sci. Eng. 2019, 142, 011005.

8. Sun, Y.; Jiang, S. Predictive modeling of chatter stability considering force-induced deformation effect in milling thin-walled parts. Int. J. Mach. Tools Manuf. 2018, 135, 38–52.

9. Wang, W.-K.; Wan, M.; Zhang, W.-H.; Yang, Y. Chatter detection methods in the machining processes: A review. J. Manuf. Process. 2022, 77, 240–259.

10. Albertelli, P.; Braghieri, L.; Torta, M.; Monno, M. Development of a generalized chatter detection methodology for variable speed machining. Mech. Syst. Signal Process. 2019, 123, 26–42.

11. Hao, Y.; Zhu, L.; Yan, B.; Qin, S.; Cui, D.; Lu, H. Milling chatter detection with WPD and power entropy for Ti-6Al-4V thin-walled parts based on multi-source signals fusion. Mech. Syst. Signal Process. 2022, 177, 109225.

12. Kuljanic, E.; Totis, G.; Sortino, M. Development of an intelligent multisensor chatter detection system in milling. Mech. Syst. Signal Process. 2009, 23, 1704–1718.

13. Pan, J.; Liu, Z.; Wang, X.; Chen, C.; Pan, X. Boring chatter identification by multi-sensor feature fusion and manifold learning. Int. J. Adv. Manuf. Technol. 2020, 109, 1137–1151.

14. Tran, M.-Q.; Liu, M.-K.; Elsisi, M. Effective multi-sensor data fusion for chatter detection in milling process. ISA Trans. 2022, 125, 514–527.

15. Zhu, W.; Zhuang, J.; Guo, B.; Teng, W.; Wu, F. An optimized convolutional neural network for chatter detection in the milling of thin-walled parts. Int. J. Adv. Manuf. Technol. 2020, 106, 3881–3895.

16. Fu, Y.; Zhang, Y.; Gao, Y.; Gao, H.; Mao, T.; Zhou, H.; Li, D. Machining vibration states monitoring based on image representation using convolutional neural networks. Eng. Appl. Artif. Intell. 2017, 65, 240–251.

17. Sener, B.; Gudelek, M.U.; Ozbayoglu, A.M.; Unver, H.O. A novel chatter detection method for milling using deep convolution neural networks. Measurement 2021, 182, 109689.

18. Tran, M.-Q.; Liu, M.-K.; Tran, Q.-V. Milling chatter detection using scalogram and deep convolutional neural network. Int. J. Adv. Manuf. Technol. 2020, 107, 1505–1516.

19. Vashisht, R.K.; Peng, Q. Online Chatter Detection for Milling Operations Using LSTM Neural Networks Assisted by Motor Current Signals of Ball Screw Drives. J. Manuf. Sci. Eng. 2020, 143, 011008.

20. Chen, K.; Zhang, X.; Zhao, W. Automatic feature extraction for online chatter monitoring under variable milling conditions. Measurement 2023, 210, 112558.

21. Sun, Y.; He, J.; Ma, H.; Yang, X.; Xiong, Z.; Zhu, X.; Wang, Y. Online chatter detection considering beat effect based on Inception and LSTM neural networks. Mech. Syst. Signal Process. 2023, 184, 109723.

22. Han, Z.; Zhuo, Y.; Yan, Y.; Jin, H.; Fu, H. Chatter detection in milling of thin-walled parts using multi-channel feature fusion and temporal attention-based network. Mech. Syst. Signal Process. 2022, 179, 109367.

23. Amin, J.; Sharif, M.; Anjum, M.A.; Raza, M.; Bukhari, S.A.C. Convolutional neural network with batch normalization for glioma and stroke lesion detection using MRI. Cogn. Syst. Res. 2020, 59, 304–311.

24. Han, S.; Shao, H.; Huo, Z.; Yang, X.; Cheng, J. End-to-end chiller fault diagnosis using fused attention mechanism and dynamic cross-entropy under imbalanced datasets. Build. Environ. 2022, 212, 108821.

25. Cao, H.; Yue, Y.; Chen, X.; Zhang, X. Chatter detection in milling process based on synchrosqueezing transform of sound signals. Int. J. Adv. Manuf. Technol. 2017, 89, 2747–2755.

26. Guo, L.; Li, N.; Jia, F.; Lei, Y.; Lin, J. A recurrent neural network based health indicator for remaining useful life prediction of bearings. Neurocomputing 2017, 240, 98–109.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).