KCS-YOLO: An Improved Algorithm for Traffic Light Detection under Low Visibility Conditions

Abstract

1. Introduction

2. Related Studies

3. Traffic Light Dataset and Proposed KCS-YOLO Algorithm

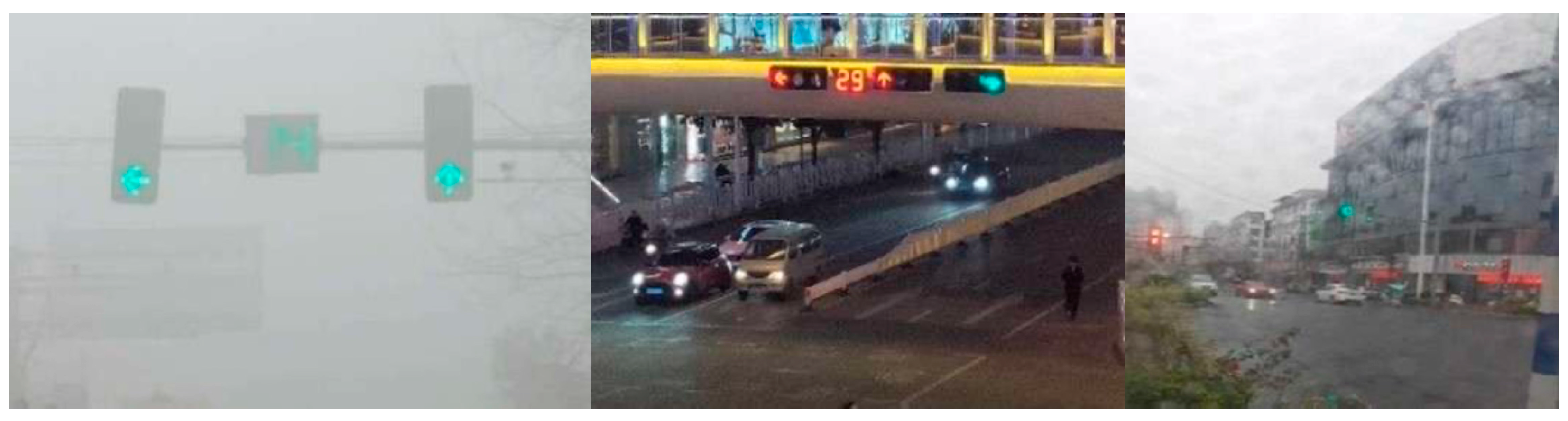

3.1. Generating Dataset

3.2. Preprocessing Dataset

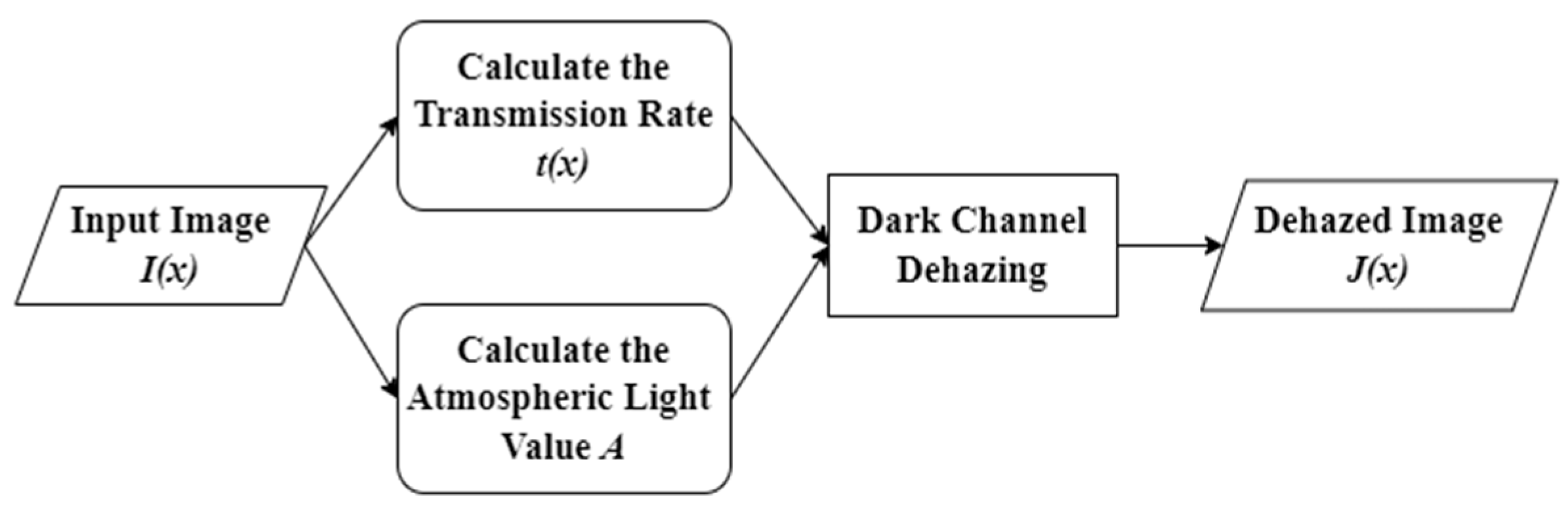

3.2.1. Dark Channel Prior

3.2.2. Estimating Transmission Rate

3.2.3. Image Dehazing

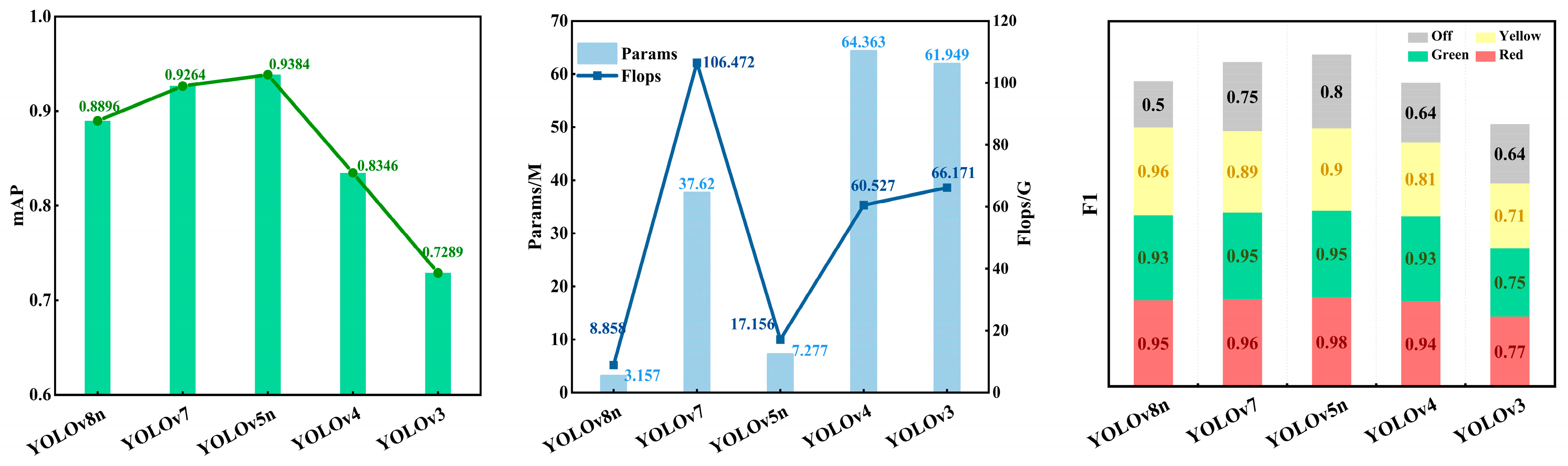

3.3. A Comparison of Different YOLO Algorithms

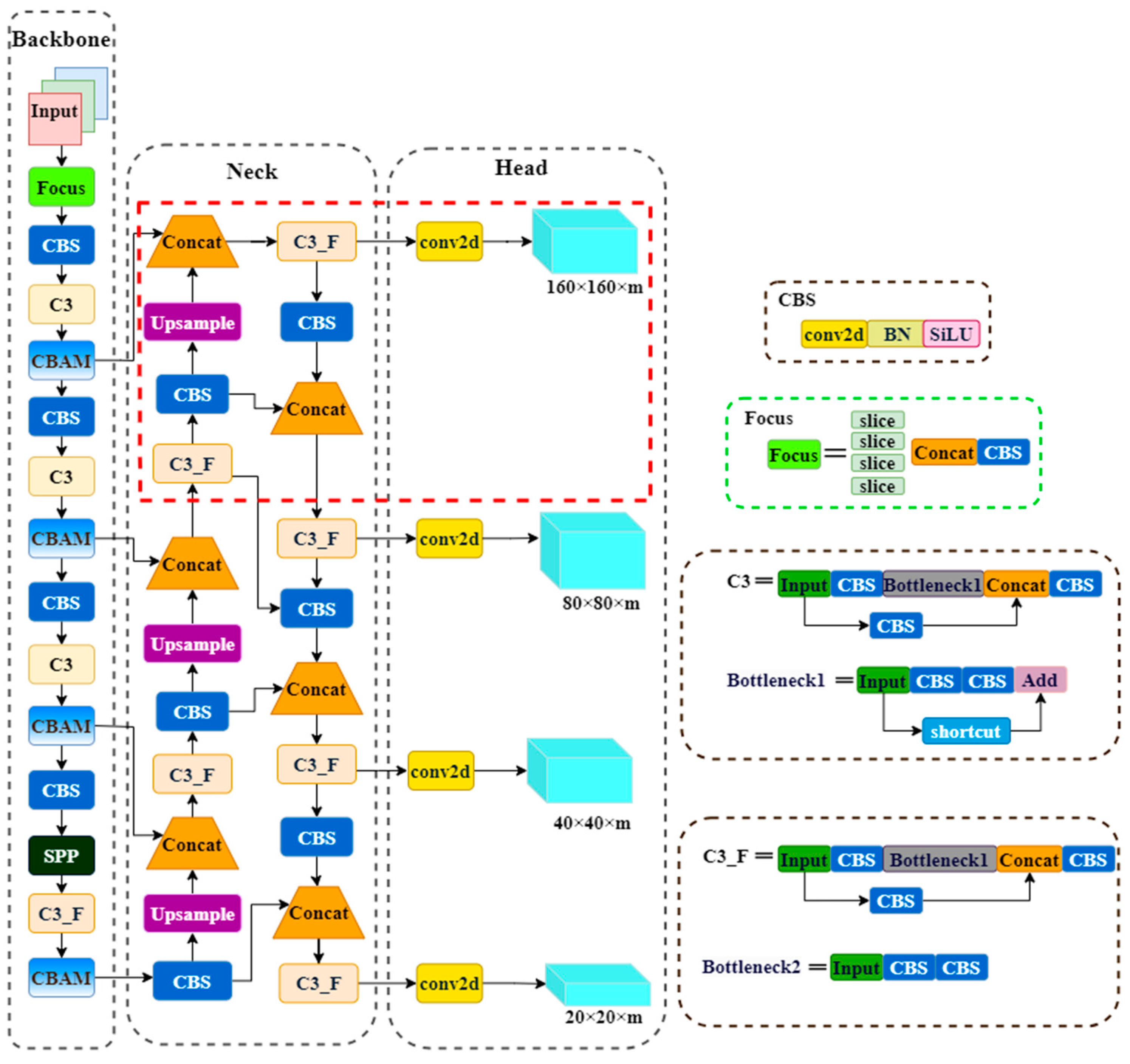

3.4. Proposed KCS-YOLO Algorithm

3.4.1. K-Means++ Re-Clustering Anchors

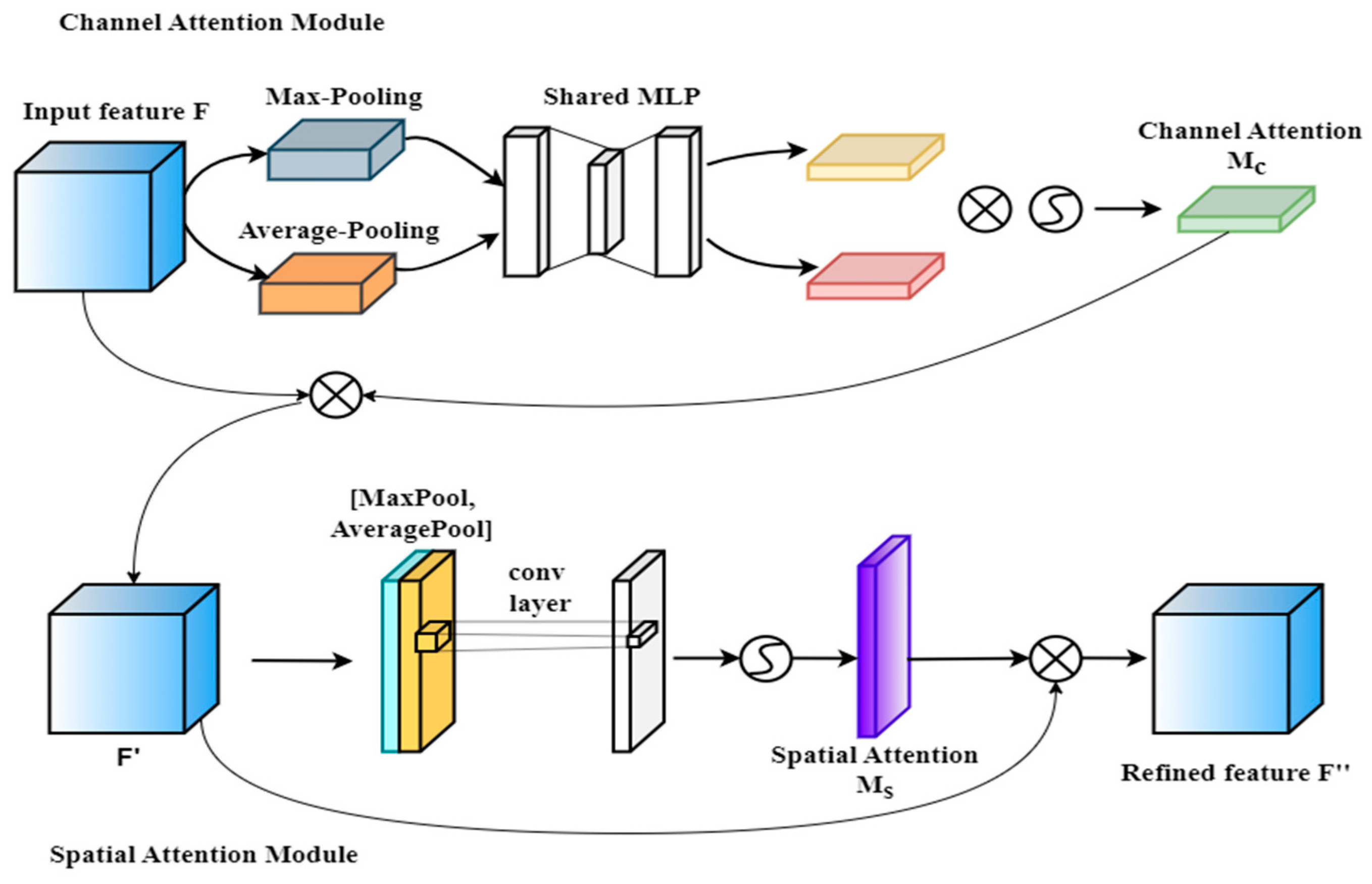

3.4.2. Incorporating an Attention Mechanism

3.4.3. A Small Object Detection Layer

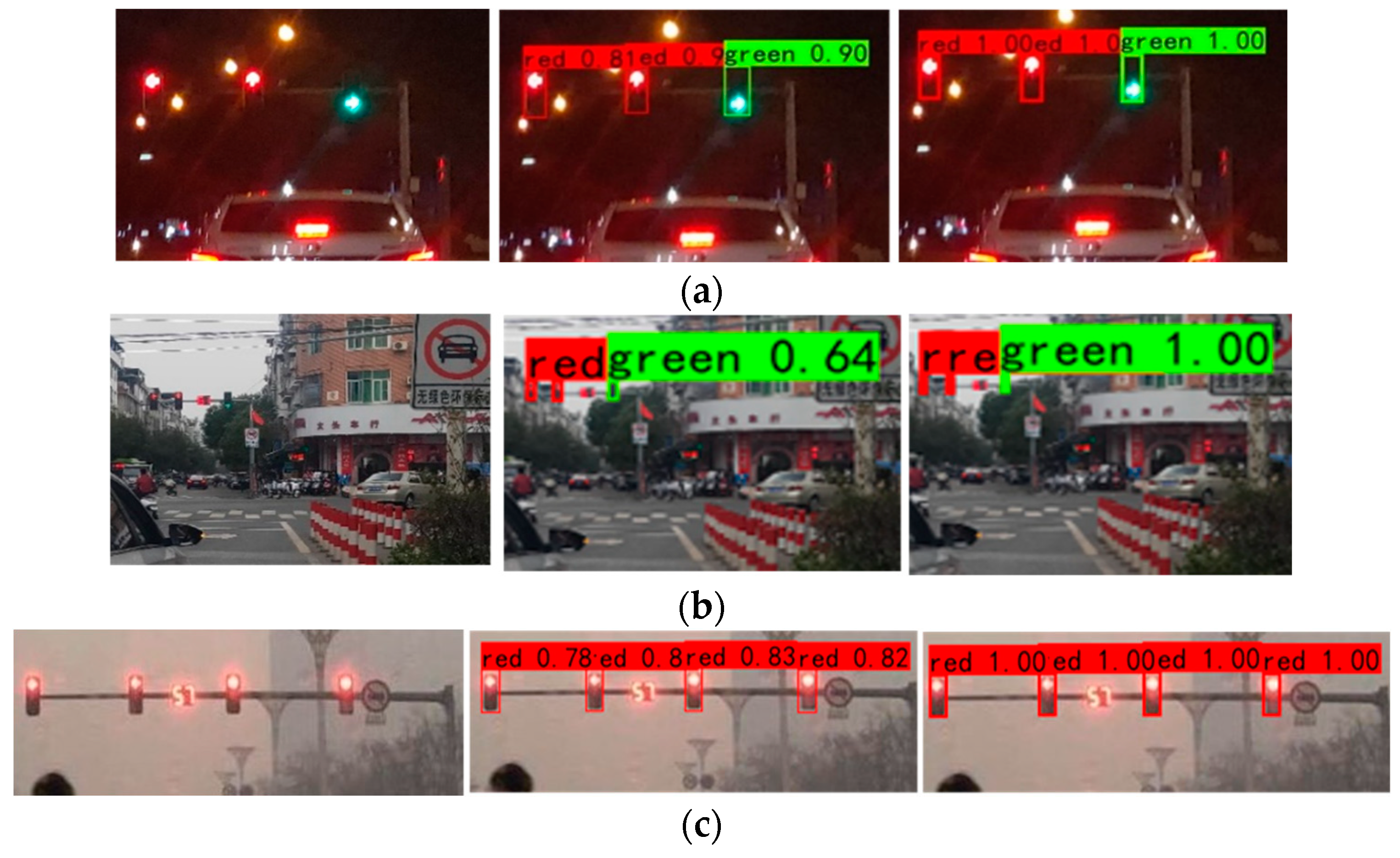

4. Results and Discussion

4.1. Experiment Set-Up

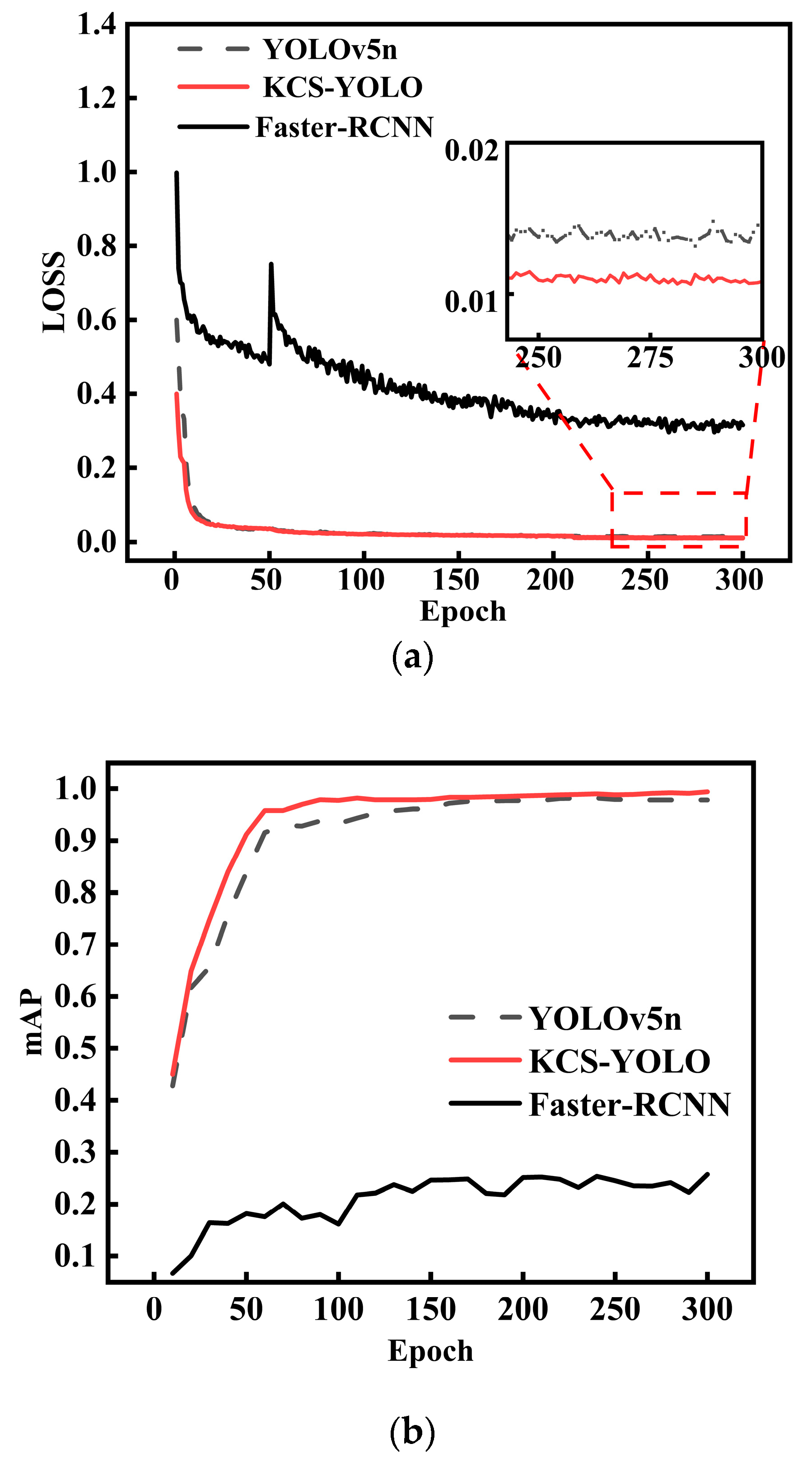

4.2. Ablation Experiments

4.3. Comparison Experiments

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Algorithm | AP (Red) | AP (Green) | AP (Yellow) | AP (Off) | mAP | F1 (Red) | F1 (Green) | F1 (Yellow) | F1 (Off) | Params (M) | FLOPs (G) |
|---|---|---|---|---|---|---|---|---|---|---|---|
| YOLOv8n | 0.9663 | 0.9441 | 0.9731 | 0.6750 | 0.8896 | 0.95 | 0.93 | 0.96 | 0.50 | 3.157 | 8.858 |
| YOLOv7 | 0.9876 | 0.9598 | 0.9336 | 0.8647 | 0.9264 | 0.96 | 0.95 | 0.89 | 0.75 | 37.620 | 106.472 |
| YOLOv5n | 0.9799 | 0.9588 | 0.9227 | 0.8922 | 0.9384 | 0.98 | 0.95 | 0.90 | 0.80 | 7.277 | 17.156 |
| YOLOv4 | 0.9711 | 0.9435 | 0.8701 | 0.5538 | 0.8346 | 0.94 | 0.93 | 0.81 | 0.64 | 64.363 | 60.527 |
| YOLOv3 | 0.7548 | 0.7535 | 0.7377 | 0.6694 | 0.7289 | 0.77 | 0.75 | 0.71 | 0.64 | 61.949 | 66.171 |
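As a reading aid for the table above, the following minimal sketch shows how an mAP value can be recomputed from the per-class AP values. It assumes that the mAP column is the unweighted mean of the four per-class APs (Red, Green, Yellow, Off), which is the usual definition and agrees with the two rows used here. The function name `mean_average_precision` and the dictionary `ap_table` are illustrative names and do not come from the article.

```python
def mean_average_precision(per_class_ap):
    """Return the unweighted mean of per-class AP values (assumed mAP definition)."""
    if not per_class_ap:
        raise ValueError("at least one class is required")
    return sum(per_class_ap.values()) / len(per_class_ap)


# Per-class AP values copied from the YOLOv8n and YOLOv5n rows of the table.
ap_table = {
    "YOLOv8n": {"Red": 0.9663, "Green": 0.9441, "Yellow": 0.9731, "Off": 0.6750},
    "YOLOv5n": {"Red": 0.9799, "Green": 0.9588, "Yellow": 0.9227, "Off": 0.8922},
}

for name, per_class_ap in ap_table.items():
    # Print with four decimals to match the precision used in the table.
    print(f"{name}: mAP = {mean_average_precision(per_class_ap):.4f}")
```

Because the listed AP values are already rounded to four decimals, a recomputed mean may differ from a reported mAP in the last digit.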

| Attention Mechanism | AP (Red) | AP (Green) | AP (Yellow) | AP (Off) | mAP | F1 (Red) | F1 (Green) | F1 (Yellow) | F1 (Off) | Params (M) | FLOPs (G) |
|---|---|---|---|---|---|---|---|---|---|---|---|
| YOLOv5n | 0.9799 | 0.9588 | 0.9227 | 0.8922 | 0.9384 | 0.98 | 0.95 | 0.90 | 0.80 | 7.277 | 17.156 |
| YOLOv5n_CA | 0.9807 | 0.9889 | 1.0000 | 0.9500 | 0.9799 | 0.98 | 0.97 | 1.00 | 0.75 | 7.314 | 17.172 |
| YOLOv5n_CBAM | 0.9903 | 0.9719 | 1.0000 | 0.9712 | 0.9834 | 0.98 | 0.97 | 1.00 | 0.89 | 7.322 | 17.163 |
| YOLOv5n_EMA | 0.9917 | 0.9772 | 1.0000 | 0.9600 | 0.9822 | 0.99 | 0.96 | 1.00 | 0.88 | 7.331 | 18.129 |
| YOLOv5n_MCA | 0.9938 | 0.9765 | 1.0000 | 0.9500 | 0.9801 | 0.99 | 0.96 | 1.00 | 0.89 | 7.277 | 17.168 |
| YOLOv5n_SimAM | 0.9938 | 0.9765 | 1.0000 | 0.9500 | 0.9801 | 0.99 | 0.96 | 1.00 | 0.89 | 7.277 | 17.168 |

| Algorithm | AP (Red) | AP (Green) | AP (Yellow) | AP (Off) | mAP | F1 (Red) | F1 (Green) | F1 (Yellow) | F1 (Off) | Params (M) | FLOPs (G) |
|---|---|---|---|---|---|---|---|---|---|---|---|
| YOLOv5n_F | 0.9708 | 0.9492 | 0.8804 | 0.7838 | 0.8960 | 0.94 | 0.91 | 0.85 | 0.84 | 7.277 | 17.156 |
| YOLOv5n | 0.9799 | 0.9588 | 0.9227 | 0.8922 | 0.9384 | 0.98 | 0.95 | 0.90 | 0.80 | 7.277 | 17.156 |
| YOLOv5n_C | 0.9903 | 0.9719 | 1.0000 | 0.9712 | 0.9834 | 0.98 | 0.97 | 1.00 | 0.89 | 7.322 | 17.163 |
| YOLOv5n_K | 0.9889 | 0.9703 | 0.9677 | 0.9652 | 0.9730 | 0.98 | 0.97 | 0.93 | 0.91 | 7.277 | 17.156 |
| YOLOv5n_S | 0.9913 | 0.9746 | 0.9706 | 0.9899 | 0.9816 | 0.98 | 0.97 | 0.95 | 0.90 | 7.436 | 20.734 |
| YOLOv5n_CK | 0.9863 | 0.9703 | 0.9843 | 0.9922 | 0.9832 | 0.98 | 0.97 | 0.96 | 0.97 | 7.322 | 17.163 |
| YOLOv5n_CS | 0.9905 | 0.9744 | 0.9563 | 0.9437 | 0.9662 | 0.98 | 0.96 | 0.92 | 0.83 | 7.481 | 20.741 |
| YOLOv5n_KS | 0.9863 | 0.9673 | 0.9743 | 0.9927 | 0.9800 | 0.98 | 0.97 | 0.95 | 0.97 | 7.436 | 20.734 |
| KCS-YOLO | 0.9917 | 0.9757 | 1.0000 | 0.9872 | 0.9887 | 0.98 | 0.97 | 1.00 | 0.90 | 7.481 | 20.741 |

| Algorithm | AP (Red) | AP (Green) | AP (Yellow) | AP (Off) | mAP | F1 (Red) | F1 (Green) | F1 (Yellow) | F1 (Off) | Params (M) | FLOPs (G) |
|---|---|---|---|---|---|---|---|---|---|---|---|
| YOLOv5n | 0.9799 | 0.9588 | 0.9227 | 0.8922 | 0.9384 | 0.98 | 0.95 | 0.90 | 0.80 | 7.277 | 17.156 |
| Faster-RCNN | 0.2877 | 0.2628 | 0.2213 | 0.2009 | 0.2431 | 0.38 | 0.37 | 0.22 | 0.24 | 41.327 | 251.437 |
| KCS-YOLO | 0.9917 | 0.9757 | 1.0000 | 0.9872 | 0.9887 | 0.98 | 0.97 | 1.00 | 0.90 | 7.481 | 20.741 |


© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Zhou, Q.; Zhang, D.; Liu, H.; He, Y. KCS-YOLO: An Improved Algorithm for Traffic Light Detection under Low Visibility Conditions. Machines 2024, 12, 557. https://doi.org/10.3390/machines12080557
