Deep Learning-Enhanced Small-Sample Bearing Fault Analysis Using Q-Transform and HOG Image Features in a GRU-XAI Framework

Abstract

1. Introduction

- The Q transform, a time-frequency analysis technique, is applied to the raw signal data to extract features that capture both the temporal and the frequency-domain information relevant to bearing fault detection. To improve interpretability, explainable AI (XAI) techniques are then used to identify the most relevant of these Q-transform features.

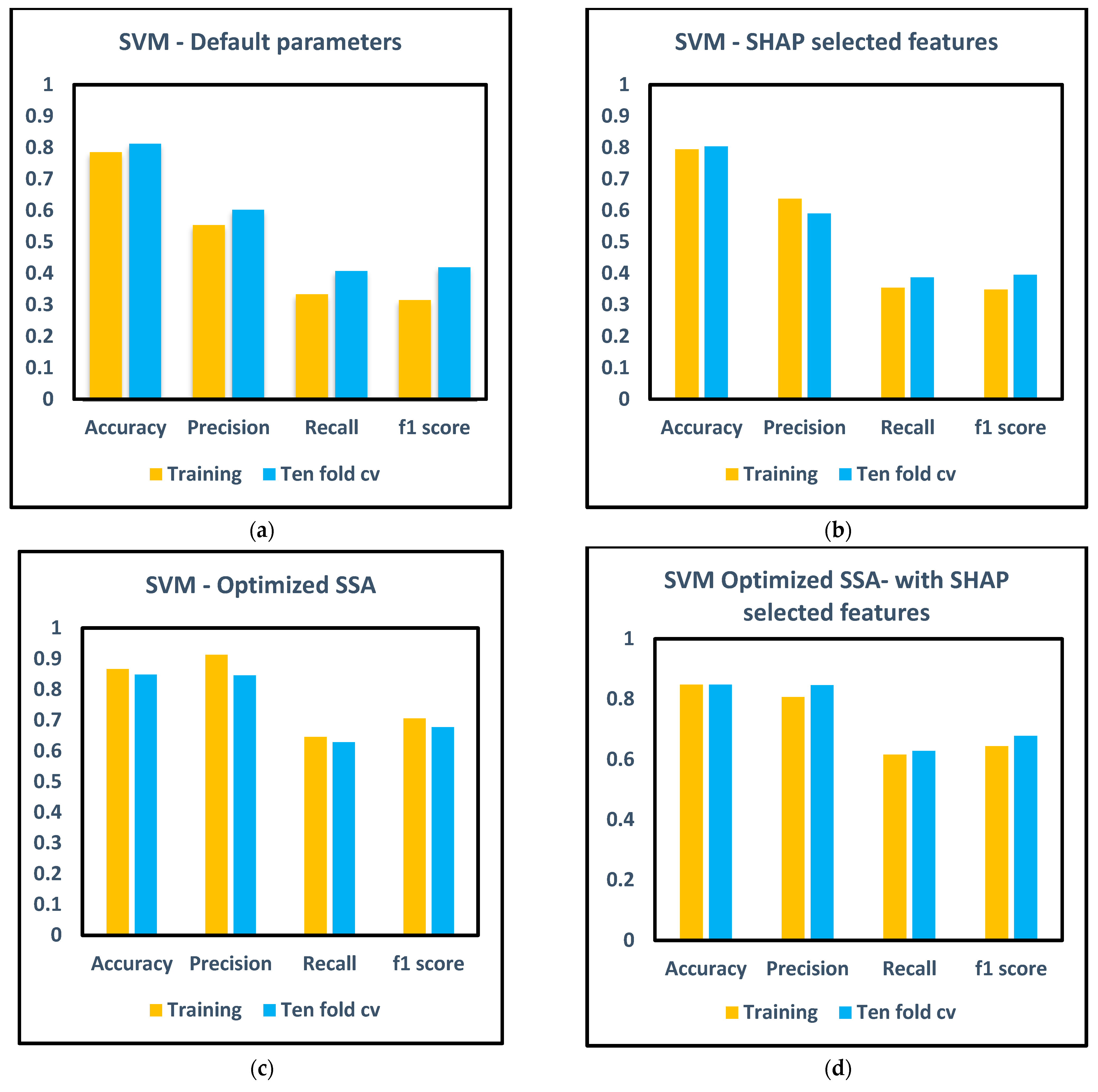

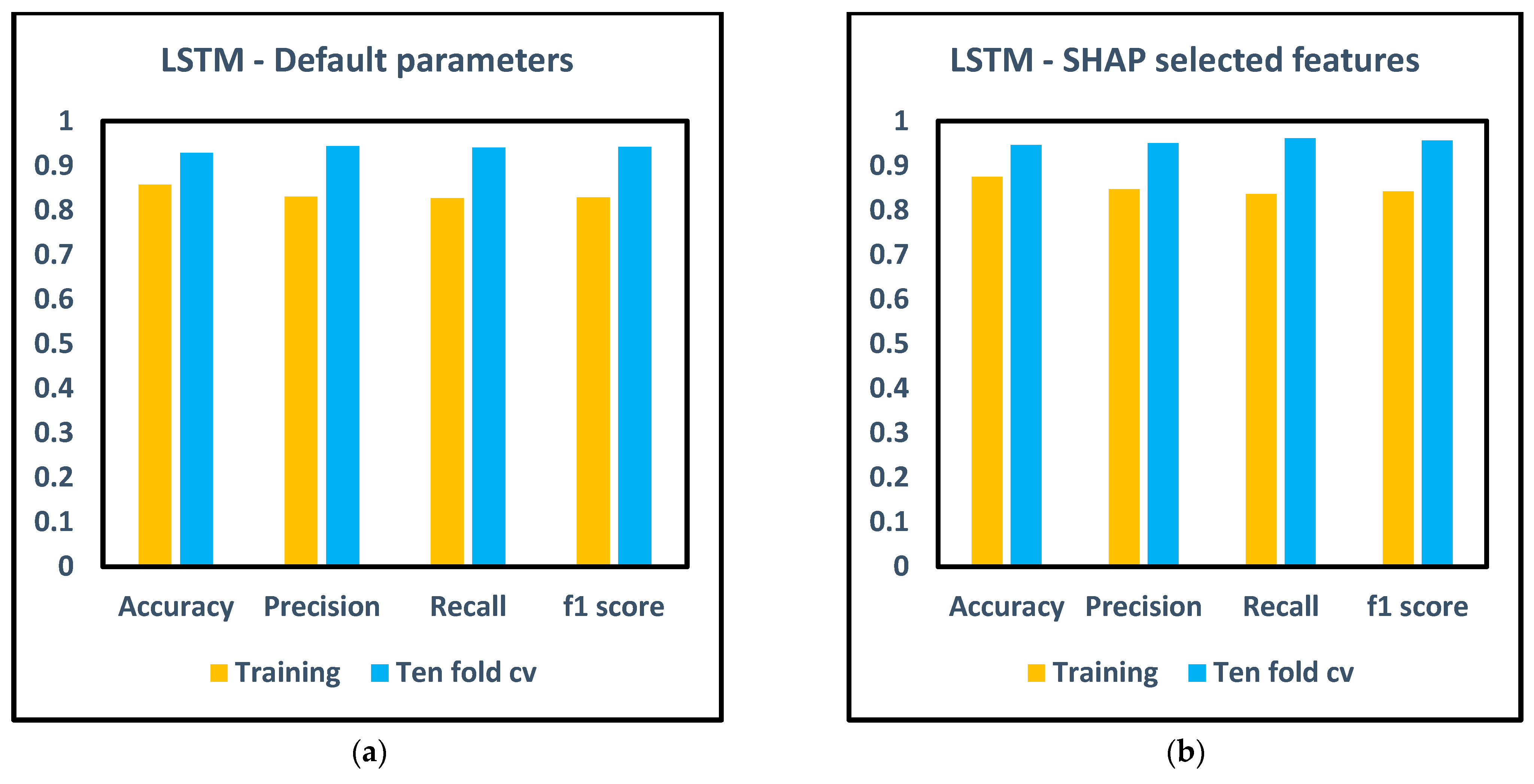

- To compare performance across model architectures, this study uses three machine learning techniques (SVM, LSTM, and GRU) to predict bearing defects.

- The use of LSTM and GRU models within an XAI framework for bearing fault diagnosis is explored, with the aim of improving the transparency and robustness of predictive maintenance technologies.

- The methodology is further refined by combining SSA-based hyperparameter tuning with XAI-based feature selection, an approach intended both to optimize the machine learning models and to improve their interpretability and fault-prediction performance.

- The robustness and generalization of the models are validated using tenfold cross-validation, which allows performance to be compared consistently across the different models.
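The tenfold cross-validation named in the last contribution can be illustrated with a stratified split. This is a minimal sketch, not the authors' procedure: it assumes stratification by class and a fixed random seed, and `stratified_kfold` is a hypothetical helper. Each class's shuffled indices are dealt round-robin across the folds so that every test fold roughly keeps the overall class proportions.

```python
import numpy as np


def stratified_kfold(labels, k=10, seed=0):
    """Split sample indices into k (train, test) pairs with roughly equal class proportions.

    Hypothetical helper for illustration: each class's indices are shuffled and dealt
    round-robin across the folds, continuing where the previous class stopped, so the
    overall fold sizes differ by at most one sample.
    """
    labels = np.asarray(labels)
    rng = np.random.default_rng(seed)
    fold_of = np.empty(labels.size, dtype=int)
    offset = 0
    for cls in np.unique(labels):
        idx = rng.permutation(np.flatnonzero(labels == cls))
        fold_of[idx] = (offset + np.arange(idx.size)) % k  # deal this class across folds
        offset += idx.size
    all_idx = np.arange(labels.size)
    return [(all_idx[fold_of != f], all_idx[fold_of == f]) for f in range(k)]


# Usage with the class counts implied by the confusion-matrix row sums (assumed labels).
labels = ["BD"] * 12 + ["HB"] * 4 + ["IRD"] * 12 + ["ORD"] * 28
for train_idx, test_idx in stratified_kfold(labels, k=10):
    pass  # fit the model on train_idx, predict test_idx, accumulate a confusion matrix
```

With 56 samples, each test fold holds 5 or 6 samples. Because only 4 samples carry the HB label, at most 4 of the 10 folds can contain an HB sample. Per-class cross-validation results for that class therefore rest on very few predictions.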

2. Materials and Methods

2.1. Discrete Cosine Transform (DCT)

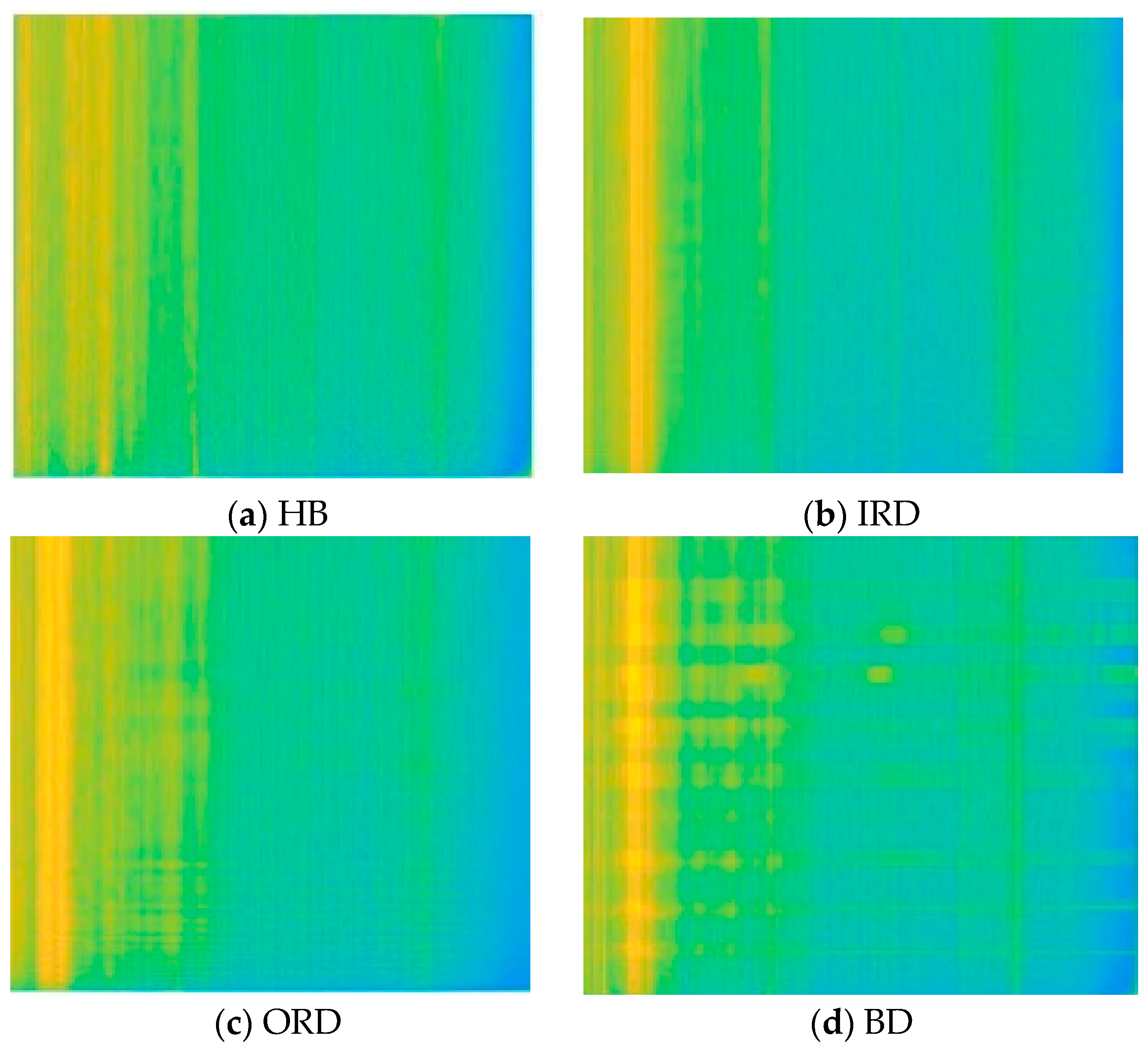
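Section 2.1 names the discrete cosine transform without giving its definition. The NumPy sketch below assumes the common orthonormal DCT-II, X_k = a_k · Σ_n x_n · cos(π (n + 1/2) k / N), with a_0 = √(1/N) and a_k = √(2/N) for k ≥ 1. It builds the cosine basis explicitly for readability. This section does not state how the authors apply the DCT within their pipeline, so treat the code as illustrative only.

```python
import numpy as np


def dct_ii(x):
    """Orthonormal DCT-II of a 1-D signal (assumed variant; illustrative sketch)."""
    x = np.asarray(x, dtype=float)
    n_pts = x.size
    n = np.arange(n_pts)
    k = n.reshape(-1, 1)
    basis = np.cos(np.pi * (n + 0.5) * k / n_pts)  # row k is the k-th cosine basis vector
    scale = np.full(n_pts, np.sqrt(2.0 / n_pts))
    scale[0] = np.sqrt(1.0 / n_pts)                 # DC term gets the smaller weight
    return scale * (basis @ x)
```

For long signals, `scipy.fft.dct(x, norm="ortho")` computes the same orthonormal DCT-II in O(N log N) time. In feature extraction, a common (assumed here) practice is to keep only the first few coefficients, because the DCT concentrates most of a smooth signal's energy in its low-order terms.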

2.2. Q Transform
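This section does not specify which Q-transform variant was used. The sketch below assumes a constant-Q transform. The frequency bins are spaced geometrically (`bins_per_octave` bins per octave). Each bin correlates the signal with a Hann-windowed complex exponential whose length is inversely proportional to the bin's centre frequency, so the ratio of centre frequency to bandwidth (Q) stays the same for every bin. The function name, the hop size, and the 12 kHz sampling rate in the usage lines are assumptions for illustration. The magnitude array it returns is the kind of time-frequency image on which image descriptors can be computed.

```python
import numpy as np


def constant_q_spectrogram(x, fs, f_min, n_bins, bins_per_octave=12, hop=256):
    """Naive constant-Q magnitude spectrogram (illustrative assumption, not the study's code).

    Returns (centre_frequencies, magnitudes) with magnitudes of shape (n_bins, n_frames).
    """
    x = np.asarray(x, dtype=float)
    q = 1.0 / (2.0 ** (1.0 / bins_per_octave) - 1.0)  # constant centre-frequency/bandwidth ratio
    freqs = f_min * 2.0 ** (np.arange(n_bins) / bins_per_octave)
    lengths = np.ceil(q * fs / freqs).astype(int)      # longer windows for lower frequencies
    n_max = lengths.max()
    if n_max > x.size:
        raise ValueError("signal shorter than the lowest-frequency window; raise f_min")
    n_frames = 1 + (x.size - n_max) // hop
    mags = np.zeros((n_bins, n_frames))
    for k, n_k in enumerate(lengths):
        n = np.arange(n_k)
        kernel = np.hanning(n_k) * np.exp(-2j * np.pi * q * n / n_k) / n_k
        for t in range(n_frames):
            start = t * hop + n_max // 2 - n_k // 2     # centre every window on the same instant
            mags[k, t] = np.abs(np.dot(x[start:start + n_k], kernel))
    return freqs, mags


# Usage: a 400 Hz tone sampled at an assumed 12 kHz for one second.
fs = 12_000
t = np.arange(fs) / fs
freqs, image = constant_q_spectrogram(np.sin(2 * np.pi * 400.0 * t), fs, f_min=100.0, n_bins=48)
```

Evaluating every bin and frame directly is slow but easy to follow. Optimized constant-Q implementations, such as `librosa.cqt`, are preferable for real data.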

2.3. HOG Features
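A histogram of oriented gradients (HOG) summarizes an image by the distribution of its local gradient directions. The procedure has three steps. First, gradients are computed at every pixel. Next, magnitude-weighted orientation histograms are accumulated over small cells. Finally, the histograms of neighbouring cells are grouped into overlapping blocks and L2-normalized. The NumPy sketch below follows this recipe in simplified form, with hard orientation binning and no interpolation or Gaussian weighting. The 8-pixel cells, 9 unsigned orientation bins, and 2 × 2 blocks are assumed defaults, not parameters taken from the study.

```python
import numpy as np


def hog_features(img, cell=8, n_bins=9, block=2, eps=1e-6):
    """Simplified HOG descriptor of a 2-D grayscale image (illustrative sketch)."""
    img = np.asarray(img, dtype=float)
    gx = np.zeros_like(img)
    gy = np.zeros_like(img)
    gx[:, 1:-1] = img[:, 2:] - img[:, :-2]            # centred [-1, 0, 1] difference
    gy[1:-1, :] = img[2:, :] - img[:-2, :]
    mag = np.hypot(gx, gy)
    ang = np.rad2deg(np.arctan2(gy, gx)) % 180.0      # unsigned orientation in [0, 180)
    n_cy, n_cx = img.shape[0] // cell, img.shape[1] // cell
    bin_width = 180.0 / n_bins
    hist = np.zeros((n_cy, n_cx, n_bins))
    for i in range(n_cy):
        for j in range(n_cx):
            rows = slice(i * cell, (i + 1) * cell)
            cols = slice(j * cell, (j + 1) * cell)
            bins = (ang[rows, cols] // bin_width).astype(int) % n_bins
            hist[i, j] = np.bincount(bins.ravel(), weights=mag[rows, cols].ravel(),
                                     minlength=n_bins)
    feats = []
    for i in range(n_cy - block + 1):                 # overlapping blocks, stride of one cell
        for j in range(n_cx - block + 1):
            v = hist[i:i + block, j:j + block].ravel()
            feats.append(v / np.sqrt(np.sum(v ** 2) + eps ** 2))  # L2 block normalization
    return np.concatenate(feats)
```

Applied to a time-frequency magnitude image rescaled to a fixed size, the concatenated vector would serve as the image feature set. `skimage.feature.hog` provides a fuller implementation with interpolation and other normalization schemes.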

2.4. Machine Learning Techniques

2.4.1. Support Vector Machine

2.4.2. Long Short-Term Memory

2.4.3. Gated Recurrent Unit

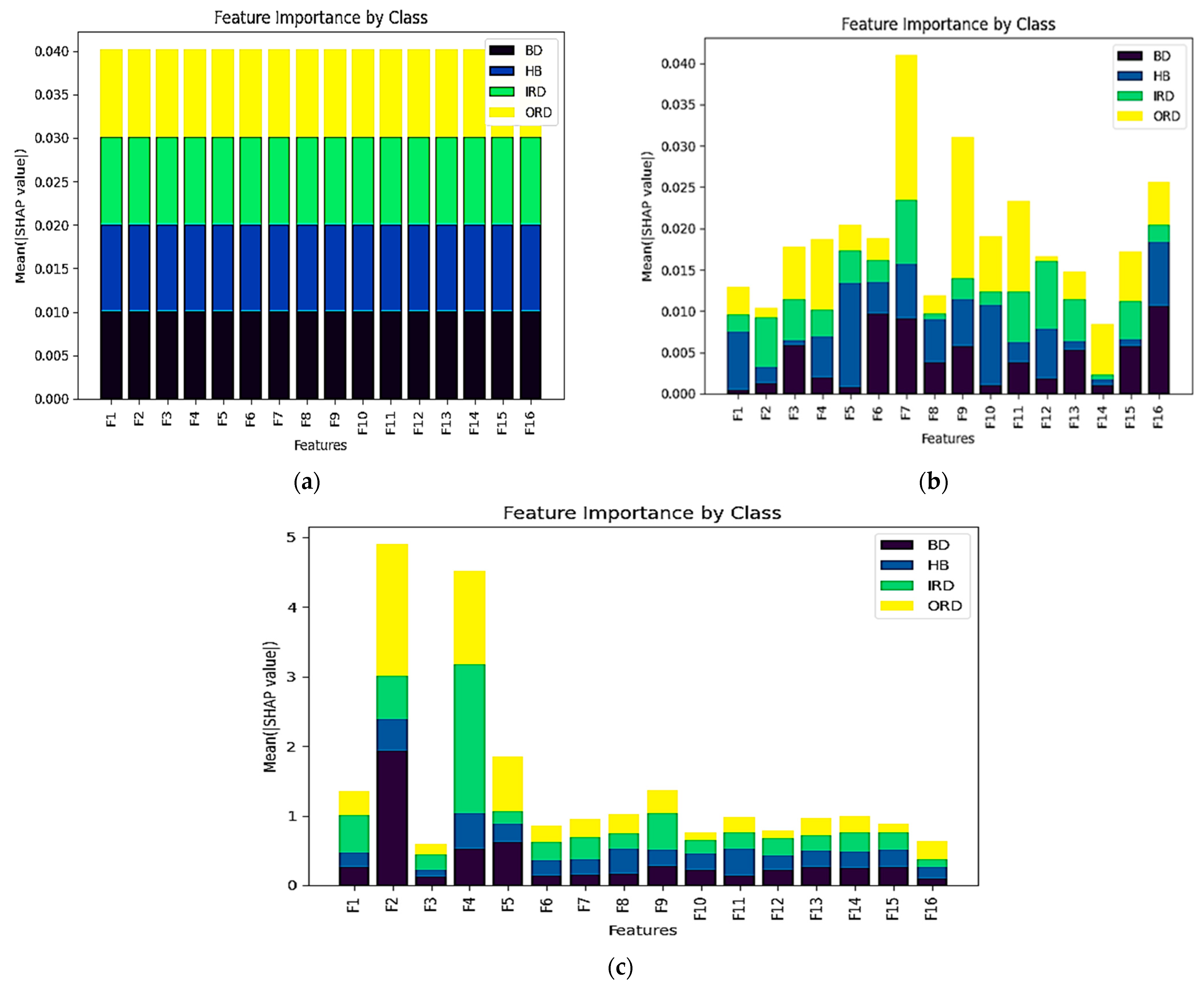
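Section 2.4.3 names the gated recurrent unit without giving its equations. In one common formulation, a GRU updates its hidden state h with an update gate z and a reset gate r:

- z = σ(W_z x + U_z h + b_z)
- r = σ(W_r x + U_r h + b_r)
- h̃ = tanh(W_h x + U_h (r ⊙ h) + b_h)
- h_new = (1 − z) ⊙ h + z ⊙ h̃

The NumPy sketch below implements exactly this step. Frameworks differ in where they apply the reset gate and in which term z weights. The parameter layout and helper names here are assumptions for illustration, not the study's architecture.

```python
import numpy as np


def sigmoid(a):
    return 1.0 / (1.0 + np.exp(-a))


def init_params(input_size, hidden_size, seed=0):
    """Hypothetical initializer: small random weights and zero biases for each gate."""
    rng = np.random.default_rng(seed)

    def gate():
        return (rng.normal(0.0, 0.1, (hidden_size, input_size)),
                rng.normal(0.0, 0.1, (hidden_size, hidden_size)),
                np.zeros(hidden_size))

    return {"z": gate(), "r": gate(), "h": gate()}


def gru_step(x, h, params):
    """One GRU time step; params maps gate name -> (W, U, b)."""
    W_z, U_z, b_z = params["z"]
    W_r, U_r, b_r = params["r"]
    W_h, U_h, b_h = params["h"]
    z = sigmoid(W_z @ x + U_z @ h + b_z)               # update gate
    r = sigmoid(W_r @ x + U_r @ h + b_r)               # reset gate
    h_tilde = np.tanh(W_h @ x + U_h @ (r * h) + b_h)   # candidate state
    return (1.0 - z) * h + z * h_tilde                 # blend old and candidate state


def gru_forward(xs, params, hidden_size):
    """Run the GRU over a sequence of input vectors and return the final hidden state."""
    h = np.zeros(hidden_size)
    for x in xs:
        h = gru_step(x, h, params)
    return h
```

In a classifier, the final hidden state would typically feed a dense softmax layer over the four fault classes. That layer and the training loop are omitted here.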

2.5. Explainable AI

Shapley Additive Explanations (SHAP)
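SHAP attributes a model's prediction to its input features using Shapley values from cooperative game theory. Feature i receives the weighted average of its marginal contribution f(S ∪ {i}) − f(S) over all coalitions S of the other features, with weight |S|! (M − |S| − 1)! / M! for M features. The sketch below computes these values exactly by enumeration, which is only feasible for a handful of features. It assumes that any feature absent from a coalition is replaced by a single baseline value, which is one of several possible conventions. Practical tools such as the `shap` Python package approximate the same quantities far more efficiently. The function here is a hypothetical illustration, not the study's implementation.

```python
import itertools
import math

import numpy as np


def exact_shapley(f, x, baseline):
    """Exact Shapley values of f at x; absent features take their baseline values."""
    x = np.asarray(x, dtype=float)
    baseline = np.asarray(baseline, dtype=float)
    m = x.size
    phi = np.zeros(m)
    for i in range(m):
        others = [j for j in range(m) if j != i]
        for size in range(m):
            weight = math.factorial(size) * math.factorial(m - size - 1) / math.factorial(m)
            for subset in itertools.combinations(others, size):
                idx = np.array(subset, dtype=int)
                z = baseline.copy()
                z[idx] = x[idx]              # coalition members take their real values
                without_i = f(z)
                z[i] = x[i]                  # add feature i to the coalition
                phi[i] += weight * (f(z) - without_i)
    return phi
```

By the efficiency property, the attributions sum to f(x) − f(baseline). Ranking features by their mean absolute Shapley value over a dataset is one way such values can drive feature selection.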

2.6. Experimentation

3. Results and Discussion

4. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Type of Bearing | Outer Race Diameter (mm) | Inner Race Diameter (mm) | Ball Diameter (mm) | Number of Balls |
|---|---|---|---|---|
| 6205 (SKF) | 51.99 | 25.01 | 7.94 | 9 |

| S. No. | Features | Details | S. No. | Features | Details |
|---|---|---|---|---|---|
| 1 | Max | The largest value in the dataset. | 9 | Peak2Peak | The difference between the highest and lowest data points. |
| 2 | Median | The middle value of the sorted dataset. | 10 | Peak2Rms | The ratio of the largest absolute value to the RMS value. |
| 3 | Average | The arithmetic mean of the data. | 11 | Root Sum of Squares | The square root of the sum of the squared data values. |
| 4 | Kurtosis | A measure of how heavy the tails (peakedness) of the data distribution are. | 12 | Crest Factor | The ratio of the peak value to the RMS value. |
| 5 | Skewness | A measure of the asymmetry of the data distribution about its mean. | 13 | Form Factor | |
| 6 | Standard Deviation | The spread of the data about the mean value. | 14 | Shape Factor | The ratio of the RMS value to the mean absolute value. |
| 7 | Variance | The mean squared deviation from the mean (the square of the standard deviation). | 15 | L Factor | |
| 8 | Root Mean Square | The square root of the mean of the squared data values. | 16 | Shannon Entropy | A measure of the uncertainty (information content) in the data. |
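The statistical features in the table above can be computed from a signal segment as sketched below. Where the table gives no details, the definitions follow common usage and are assumptions:

- Kurtosis and skewness are the non-excess moment ratios.
- Standard deviation and variance use the sample (N − 1) normalization.
- Shannon entropy is computed over a 32-bin amplitude histogram.

Features 13 and 15 are omitted because the table gives no definition for them. With these definitions, Peak2Rms and Crest Factor coincide, since both are the peak absolute value divided by the RMS.

```python
import numpy as np


def statistical_features(x, n_hist_bins=32):
    """Time-domain features of a 1-D signal segment (definitions are assumed, see lead-in)."""
    x = np.asarray(x, dtype=float)
    mean = x.mean()
    centred = x - mean
    m2 = np.mean(centred ** 2)                    # population second central moment
    rms = np.sqrt(np.mean(x ** 2))
    peak = np.max(np.abs(x))
    counts, _ = np.histogram(x, bins=n_hist_bins)
    p = counts[counts > 0] / counts.sum()         # empirical amplitude distribution
    return {
        "max": x.max(),
        "median": np.median(x),
        "average": mean,
        "kurtosis": np.mean(centred ** 4) / m2 ** 2,   # non-excess: a Gaussian gives about 3
        "skewness": np.mean(centred ** 3) / m2 ** 1.5,
        "std": x.std(ddof=1),
        "variance": x.var(ddof=1),
        "rms": rms,
        "peak2peak": x.max() - x.min(),
        "peak2rms": peak / rms,
        "rssq": np.sqrt(np.sum(x ** 2)),
        "crest_factor": peak / rms,
        "shape_factor": rms / np.mean(np.abs(x)),
        "shannon_entropy": -np.sum(p * np.log2(p)),    # in bits
    }
```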

| Training Results (SVM) | | | | | Tenfold Cross-Validation Results (SVM) | | | | |
|---|---|---|---|---|---|---|---|---|---|
| DEFAULT | BD | HB | IRD | ORD | DEFAULT | BD | HB | IRD | ORD |
| BD | 2 | 0 | 1 | 9 | BD | 5 | 0 | 0 | 7 |
| HB | 0 | 0 | 0 | 4 | HB | 0 | 0 | 0 | 4 |
| IRD | 0 | 0 | 2 | 10 | IRD | 0 | 0 | 3 | 9 |
| ORD | 0 | 0 | 0 | 28 | ORD | 1 | 0 | 0 | 27 |
| (a) | | | | | (b) | | | | |
| SHAP | BD | HB | IRD | ORD | SHAP | BD | HB | IRD | ORD |
| BD | 2 | 0 | 0 | 10 | BD | 4 | 0 | 0 | 8 |
| HB | 0 | 0 | 0 | 4 | HB | 0 | 0 | 0 | 4 |
| IRD | 0 | 0 | 3 | 9 | IRD | 0 | 0 | 3 | 9 |
| ORD | 0 | 0 | 0 | 28 | ORD | 1 | 0 | 0 | 27 |
| (c) | | | | | (d) | | | | |
| SSA | BD | HB | IRD | ORD | SSA | BD | HB | IRD | ORD |
| BD | 5 | 0 | 0 | 7 | BD | 6 | 0 | 0 | 6 |
| HB | 0 | 3 | 0 | 1 | HB | 0 | 3 | 0 | 1 |
| IRD | 0 | 0 | 5 | 7 | IRD | 0 | 0 | 4 | 8 |
| ORD | 0 | 0 | 0 | 28 | ORD | 2 | 0 | 0 | 26 |
| (e) | | | | | (f) | | | | |
| SHAP+SSA | BD | HB | IRD | ORD | SHAP+SSA | BD | HB | IRD | ORD |
| BD | 5 | 0 | 0 | 7 | BD | 6 | 0 | 0 | 6 |
| HB | 0 | 3 | 0 | 1 | HB | 0 | 3 | 0 | 1 |
| IRD | 0 | 1 | 4 | 7 | IRD | 0 | 0 | 4 | 8 |
| ORD | 1 | 0 | 0 | 27 | ORD | 2 | 0 | 0 | 26 |
| (g) | | | | | (h) | | | | |
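The row sums of every confusion matrix above are identical (12, 4, 12 and 28, or 56 samples in total). This suggests that rows are true classes and columns are predicted classes. That orientation, and the reading of BD, HB, IRD and ORD as ball defect, healthy bearing, inner-race defect and outer-race defect, are inferences rather than statements from the source. Under that reading, overall accuracy is the trace divided by the total, and per-class recall is each diagonal entry divided by its row sum, as this sketch shows.

```python
import numpy as np

CLASSES = ["BD", "HB", "IRD", "ORD"]


def accuracy_and_recall(cm):
    """Assumes rows are true classes and columns are predicted classes."""
    cm = np.asarray(cm, dtype=float)
    accuracy = np.trace(cm) / cm.sum()
    recall = np.diag(cm) / cm.sum(axis=1)
    return accuracy, dict(zip(CLASSES, recall))


svm_default_train = [[2, 0, 1, 9], [0, 0, 0, 4], [0, 0, 2, 10], [0, 0, 0, 28]]  # panel (a)
svm_shap_ssa_cv = [[6, 0, 0, 6], [0, 3, 0, 1], [0, 0, 4, 8], [2, 0, 0, 26]]    # panel (h)

acc_a, rec_a = accuracy_and_recall(svm_default_train)
acc_h, rec_h = accuracy_and_recall(svm_shap_ssa_cv)
print(f"(a) accuracy {acc_a:.4f}")  # prints "(a) accuracy 0.5714"
print(f"(h) accuracy {acc_h:.4f}")  # prints "(h) accuracy 0.6964"
```

Panel (a) reaches 32/56 accuracy, and every HB sample is predicted as ORD. Because ORD alone accounts for 28 of the 56 samples, always predicting ORD would already score 0.5. Panel (h) reaches 39/56.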

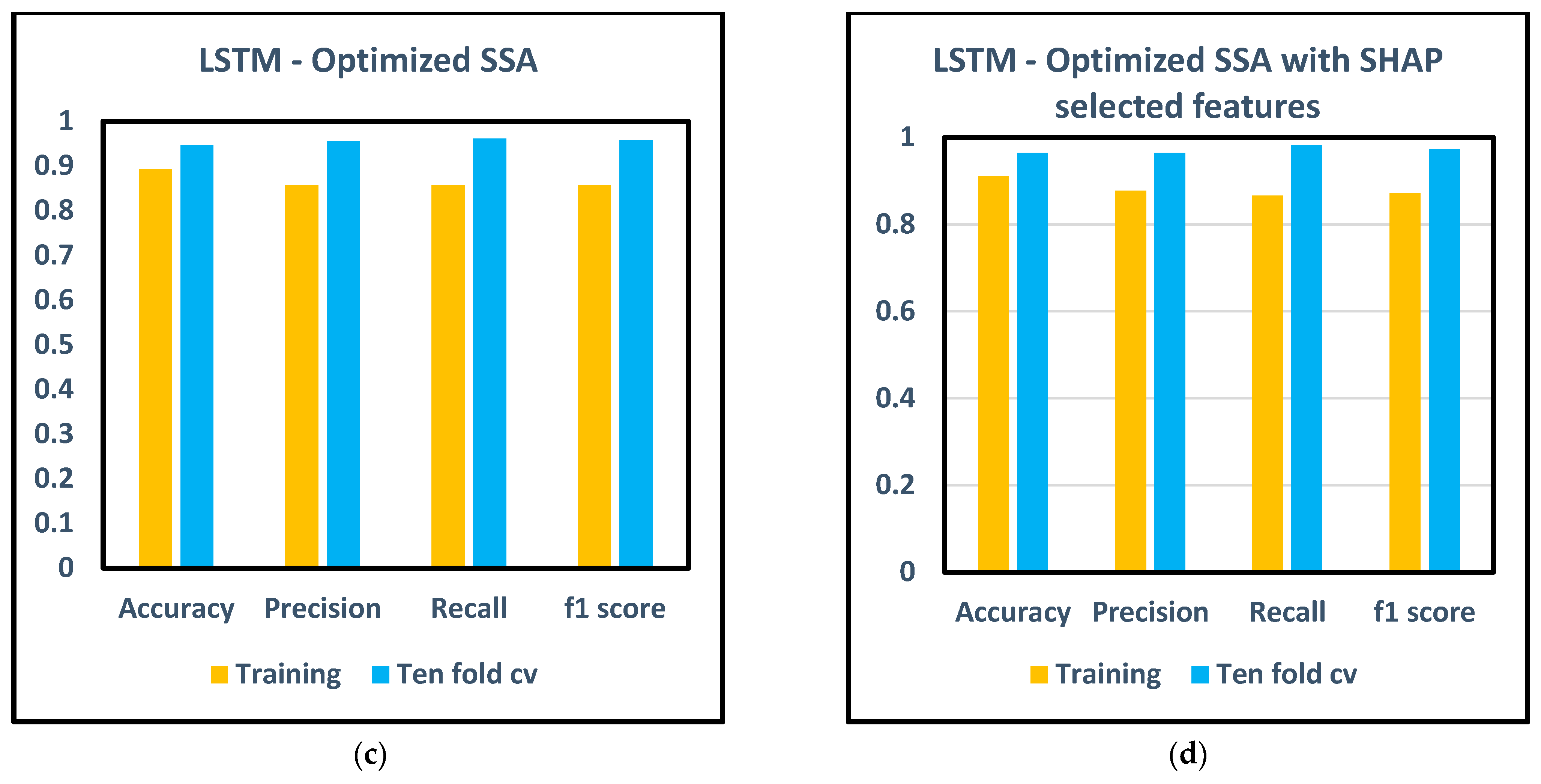

| Training Results (LSTM) | | | | | Tenfold Cross-Validation Results (LSTM) | | | | |
|---|---|---|---|---|---|---|---|---|---|
| DEFAULT | BD | HB | IRD | ORD | DEFAULT | BD | HB | IRD | ORD |
| BD | 10 | 0 | 0 | 2 | BD | 11 | 0 | 0 | 1 |
| HB | 0 | 3 | 1 | 0 | HB | 0 | 4 | 0 | 0 |
| IRD | 0 | 1 | 10 | 1 | IRD | 0 | 0 | 11 | 1 |
| ORD | 1 | 0 | 2 | 25 | ORD | 2 | 0 | 0 | 26 |
| (a) | | | | | (b) | | | | |
| SHAP | BD | HB | IRD | ORD | SHAP | BD | HB | IRD | ORD |
| BD | 10 | 0 | 0 | 2 | BD | 12 | 0 | 0 | 0 |
| HB | 0 | 3 | 1 | 0 | HB | 0 | 4 | 0 | 0 |
| IRD | 0 | 1 | 10 | 1 | IRD | 0 | 0 | 11 | 1 |
| ORD | 1 | 0 | 1 | 26 | ORD | 1 | 0 | 1 | 26 |
| (c) | | | | | (d) | | | | |
| SSA | BD | HB | IRD | ORD | SSA | BD | HB | IRD | ORD |
| BD | 11 | 0 | 0 | 1 | BD | 12 | 0 | 0 | 0 |
| HB | 0 | 3 | 1 | 0 | HB | 0 | 4 | 0 | 0 |
| IRD | 0 | 1 | 10 | 1 | IRD | 0 | 0 | 11 | 1 |
| ORD | 1 | 0 | 1 | 26 | ORD | 2 | 0 | 0 | 26 |
| (e) | | | | | (f) | | | | |
| SHAP+SSA | BD | HB | IRD | ORD | SHAP+SSA | BD | HB | IRD | ORD |
| BD | 11 | 0 | 0 | 1 | BD | 12 | 0 | 0 | 0 |
| HB | 0 | 3 | 1 | 0 | HB | 0 | 4 | 0 | 0 |
| IRD | 0 | 1 | 10 | 1 | IRD | 0 | 0 | 12 | 0 |
| ORD | 1 | 0 | 0 | 27 | ORD | 2 | 0 | 0 | 26 |
| (g) | | | | | (h) | | | | |

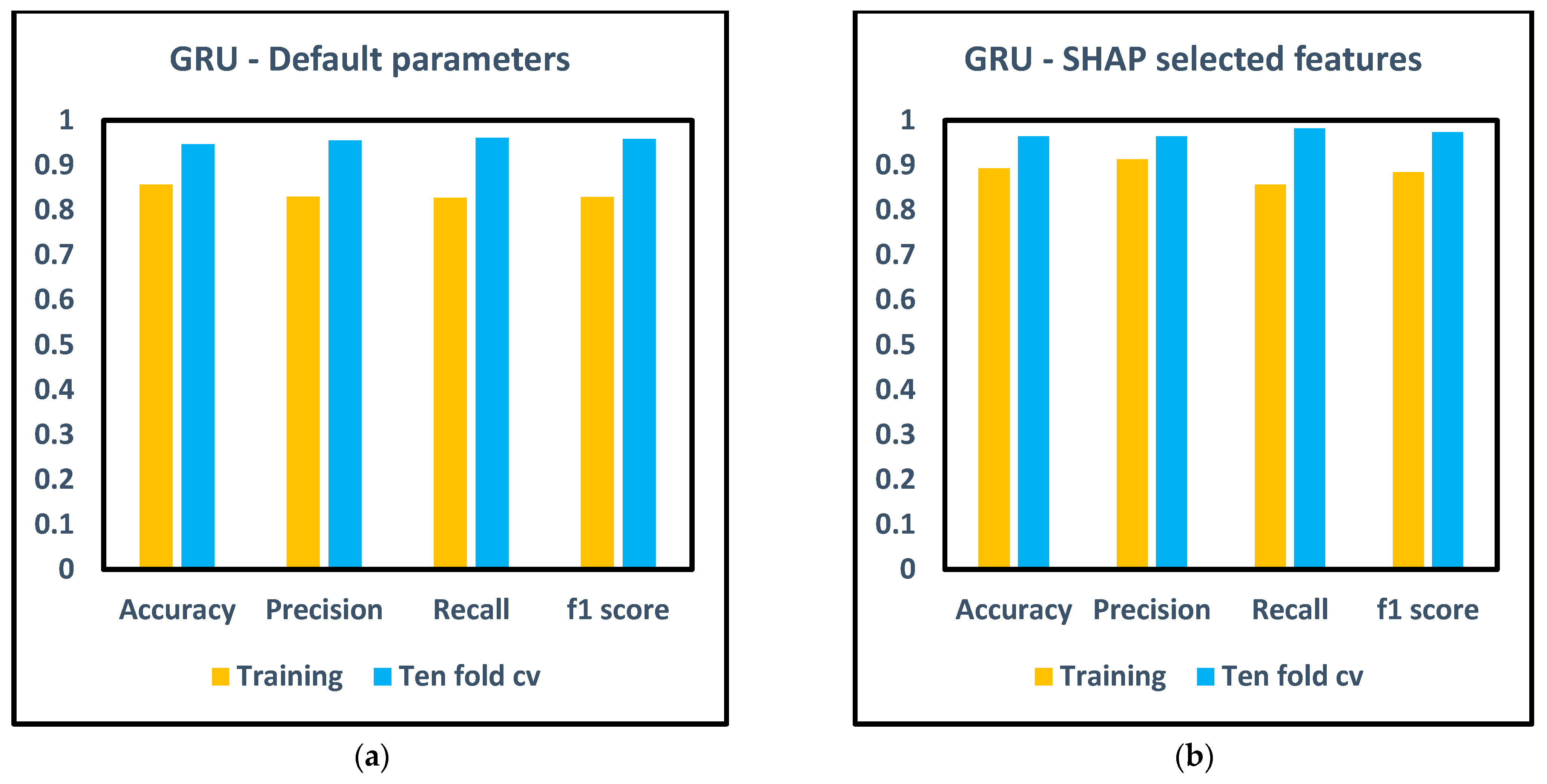

| Training Results (GRU) | Tenfold Cross-Validation Results (GRU) | |||||||||

| DEFAULT | BD | HB | IRD | ORD | DEFAULT | BD | HB | IRD | ORD | |

| BD | 10 | 0 | 0 | 2 | BD | 12 | 0 | 0 | 0 | |

| HB | 0 | 3 | 1 | 0 | HB | 0 | 4 | 0 | 0 | |

| IRD | 0 | 1 | 10 | 1 | IRD | 0 | 0 | 11 | 1 | |

| ORD | 1 | 0 | 2 | 25 | ORD | 2 | 0 | 0 | 26 | |

| (a) | (b) | |||||||||

| SHAP | BD | HB | IRD | ORD | SHAP | BD | HB | IRD | ORD | |

| BD | 10 | 0 | 0 | 2 | BD | 12 | 0 | 0 | 0 | |

| HB | 0 | 3 | 1 | 0 | HB | 0 | 4 | 0 | 0 | |

| IRD | 0 | 1 | 10 | 1 | IRD | 0 | 0 | 12 | 0 | |

| ORD | 1 | 0 | 1 | 26 | ORD | 2 | 0 | 0 | 26 | |

| (c) | (d) | |||||||||

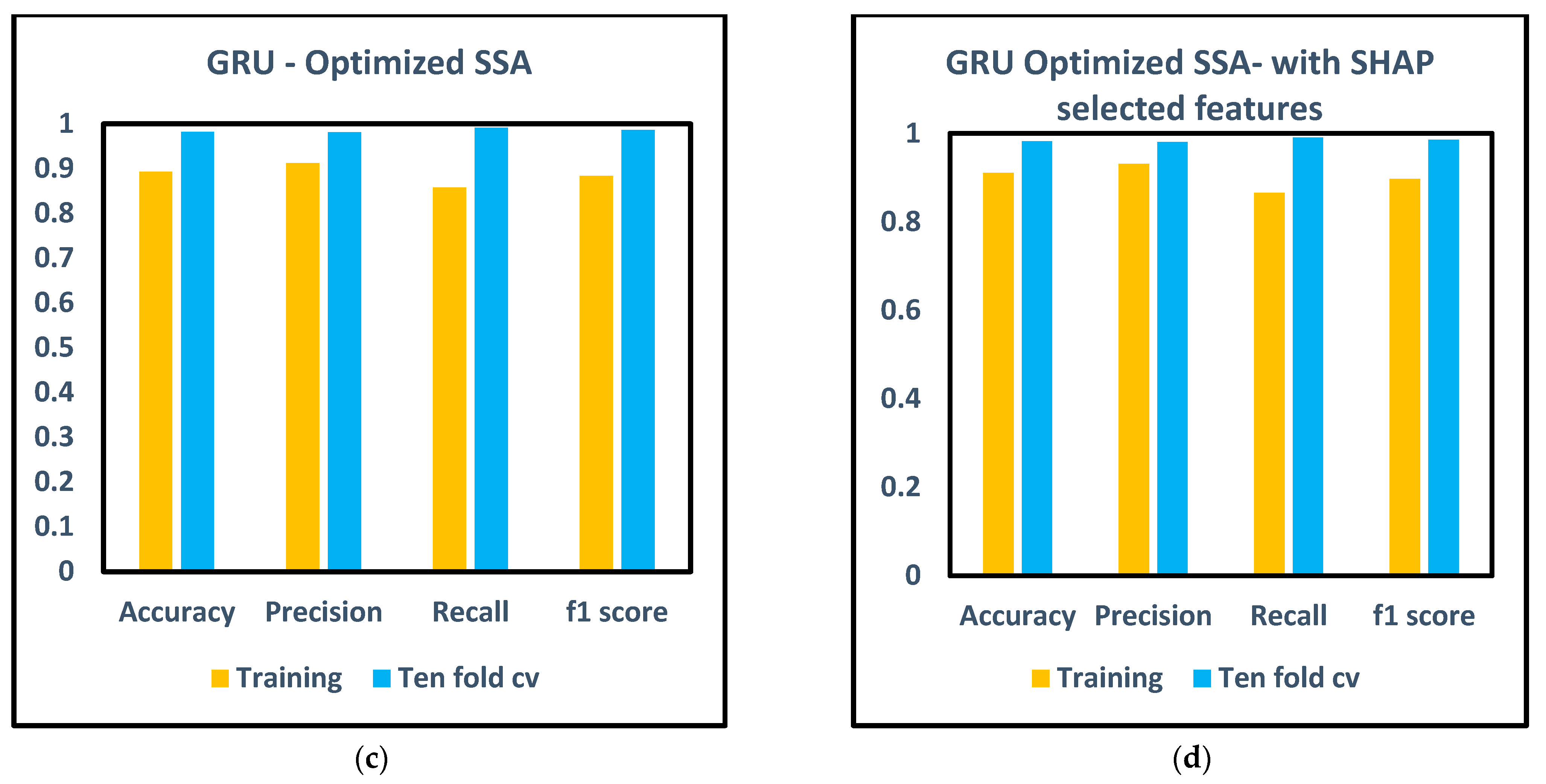

| SSA | BD | HB | IRD | ORD | SSA | BD | HB | IRD | ORD | |

| BD | 10 | 0 | 0 | 2 | BD | 12 | 0 | 0 | 0 | |

| HB | 0 | 3 | 1 | 0 | HB | 0 | 4 | 0 | 0 | |

| IRD | 0 | 0 | 11 | 1 | IRD | 0 | 0 | 12 | 0 | |

| ORD | 2 | 0 | 0 | 26 | ORD | 1 | 0 | 0 | 27 | |

| (e) | (f) | |||||||||

| SHAP+SSA | BD | HB | IRD | ORD | SHAP+SSA | BD | HB | IRD | ORD | |

| BD | 10 | 0 | 0 | 2 | BD | 12 | 0 | 0 | 0 | |

| HB | 0 | 3 | 1 | 0 | HB | 0 | 4 | 0 | 0 | |

| IRD | 0 | 0 | 11 | 1 | IRD | 0 | 0 | 12 | 0 | |

| ORD | 1 | 0 | 0 | 27 | ORD | 1 | 0 | 0 | 27 | |

| (g) | (h) | |||||||||
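Because the classes are imbalanced (28 of the 56 samples are ORD), accuracy alone can flatter a model that favours the majority class. The sketch below computes a macro-averaged F1 score, which weights each class equally. It assumes that rows are true classes and columns are predictions, which is consistent with the constant row sums of 12, 4, 12 and 28. The function is applied to the SHAP+SSA tenfold cross-validation panels (h) of the SVM, LSTM and GRU tables.

```python
import numpy as np


def macro_f1(cm):
    """Unweighted mean of per-class F1 = 2TP / (2TP + FP + FN); rows = true class."""
    cm = np.asarray(cm, dtype=float)
    tp = np.diag(cm)
    fp = cm.sum(axis=0) - tp   # predicted as this class but belonging to another
    fn = cm.sum(axis=1) - tp   # belonging to this class but predicted as another
    denom = 2 * tp + fp + fn
    f1 = np.divide(2 * tp, denom, out=np.zeros_like(tp), where=denom > 0)
    return f1.mean()


shap_ssa_cv = {  # panel (h) of each table, class order BD, HB, IRD, ORD
    "SVM": [[6, 0, 0, 6], [0, 3, 0, 1], [0, 0, 4, 8], [2, 0, 0, 26]],
    "LSTM": [[12, 0, 0, 0], [0, 4, 0, 0], [0, 0, 12, 0], [2, 0, 0, 26]],
    "GRU": [[12, 0, 0, 0], [0, 4, 0, 0], [0, 0, 12, 0], [1, 0, 0, 27]],
}
for model, cm in shap_ssa_cv.items():
    acc = np.trace(cm) / np.sum(cm)
    print(f"{model}: accuracy {acc:.4f}, macro-F1 {macro_f1(cm):.4f}")
```

For SVM, LSTM and GRU respectively, this gives accuracies of 39/56, 54/56 and 55/56 and macro-F1 scores of about 0.678, 0.972 and 0.985. The gap between SVM and the recurrent models is therefore slightly wider on macro-F1 than on accuracy.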

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Dave, V.; Borade, H.; Agrawal, H.; Purohit, A.; Padia, N.; Vakharia, V. Deep Learning-Enhanced Small-Sample Bearing Fault Analysis Using Q-Transform and HOG Image Features in a GRU-XAI Framework. Machines 2024, 12, 373. https://doi.org/10.3390/machines12060373
