A Tomato Recognition and Rapid Sorting System Based on Improved YOLOv10

Abstract

1. Introduction

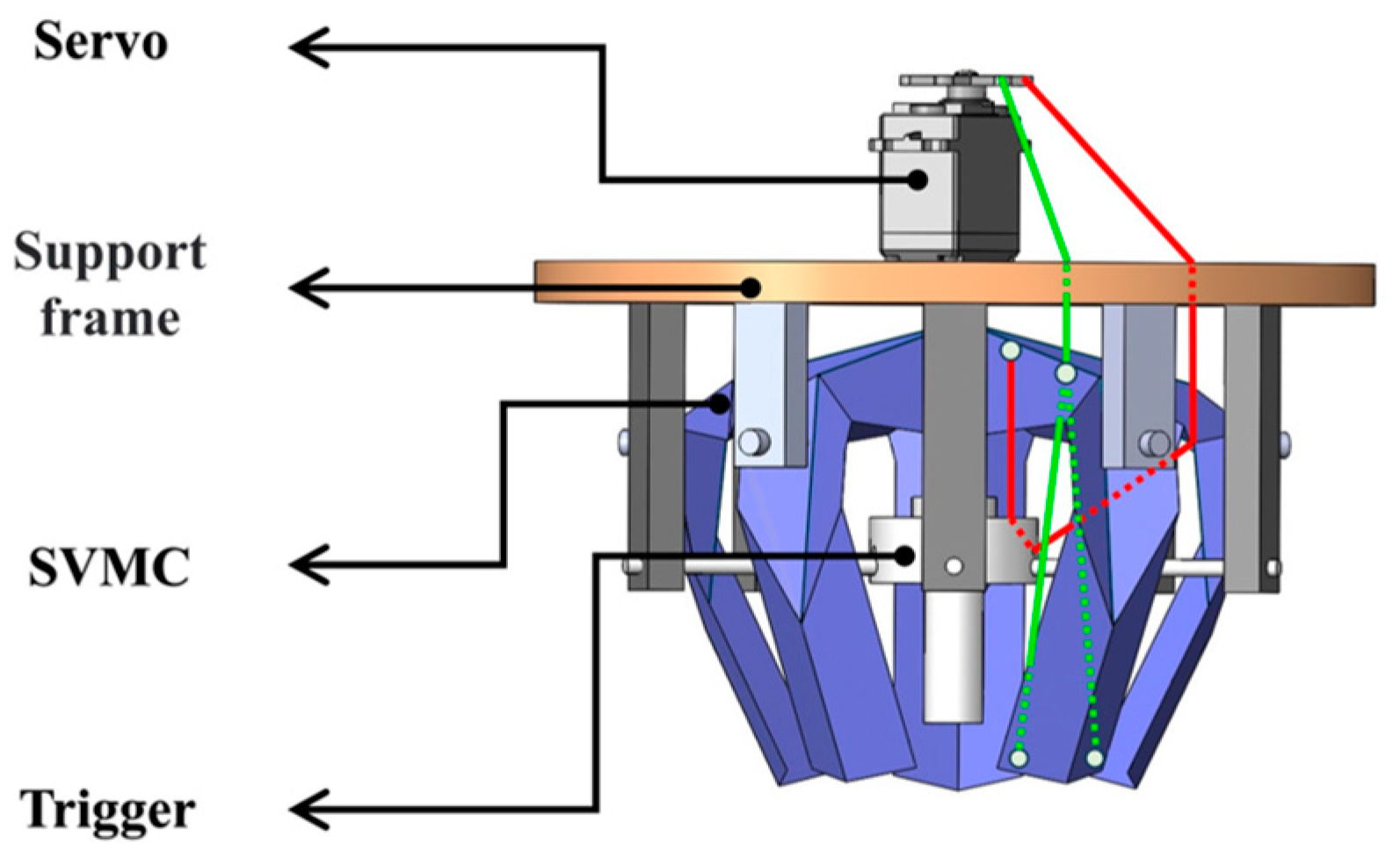

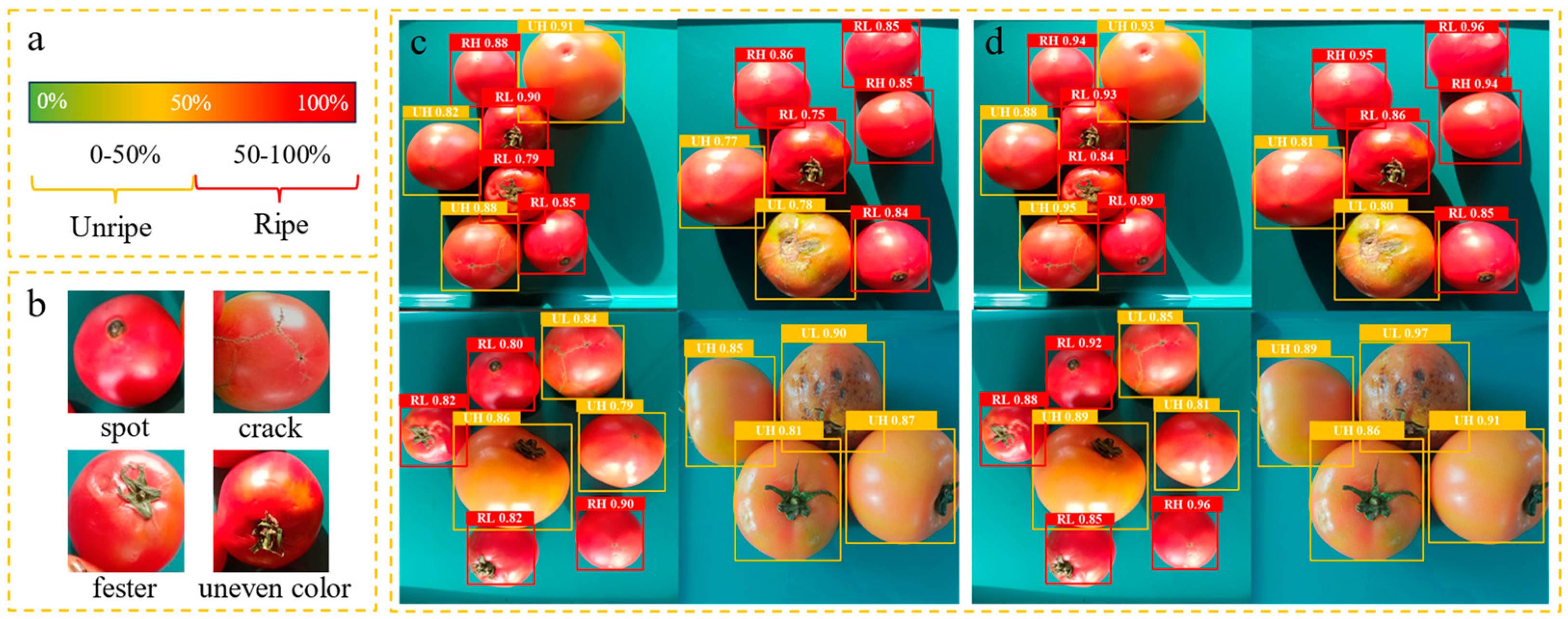

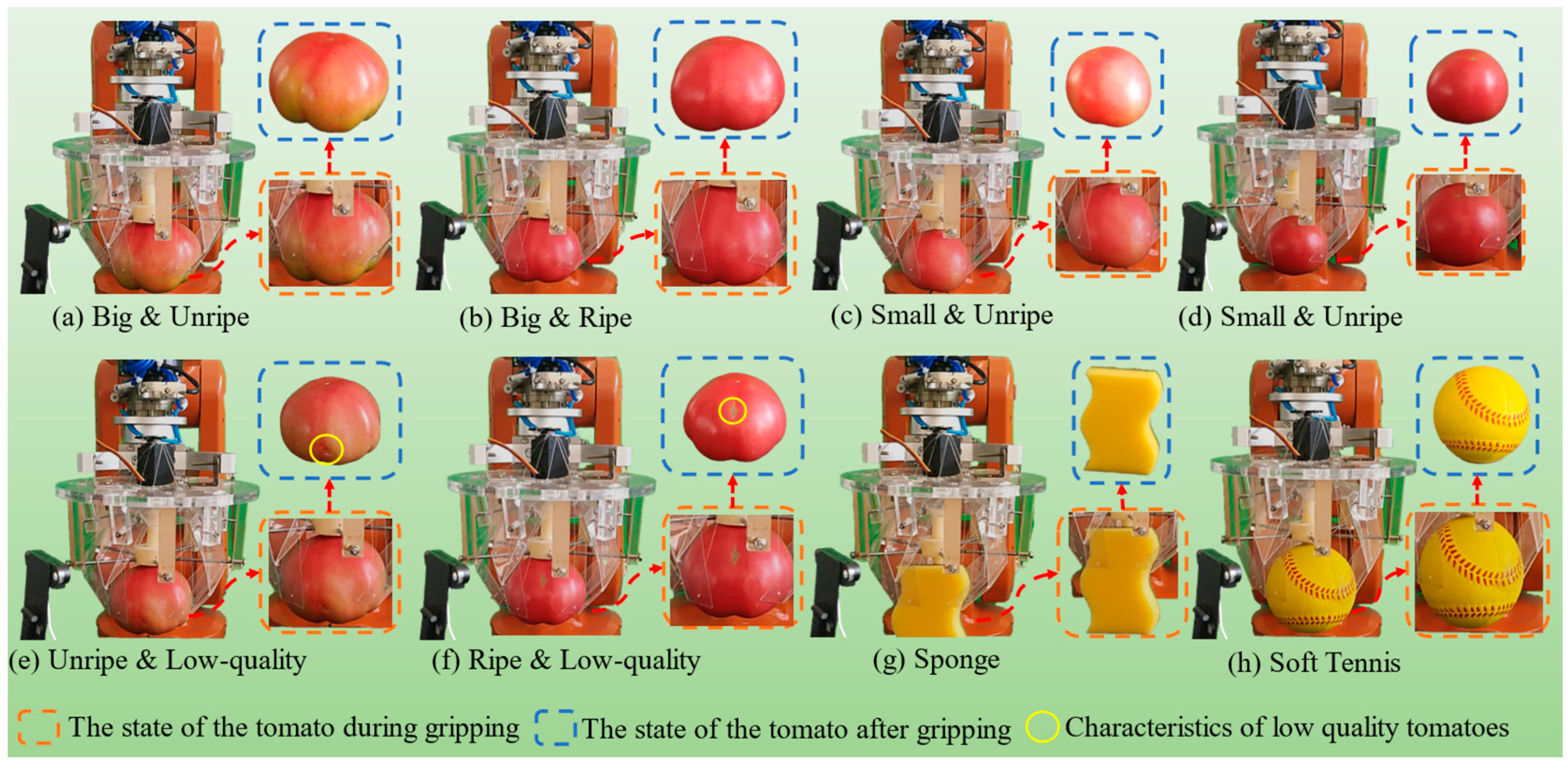

2. Origami Soft Gripper

3. Recognizing Tomato Datasets Using SES-YOLOv10n

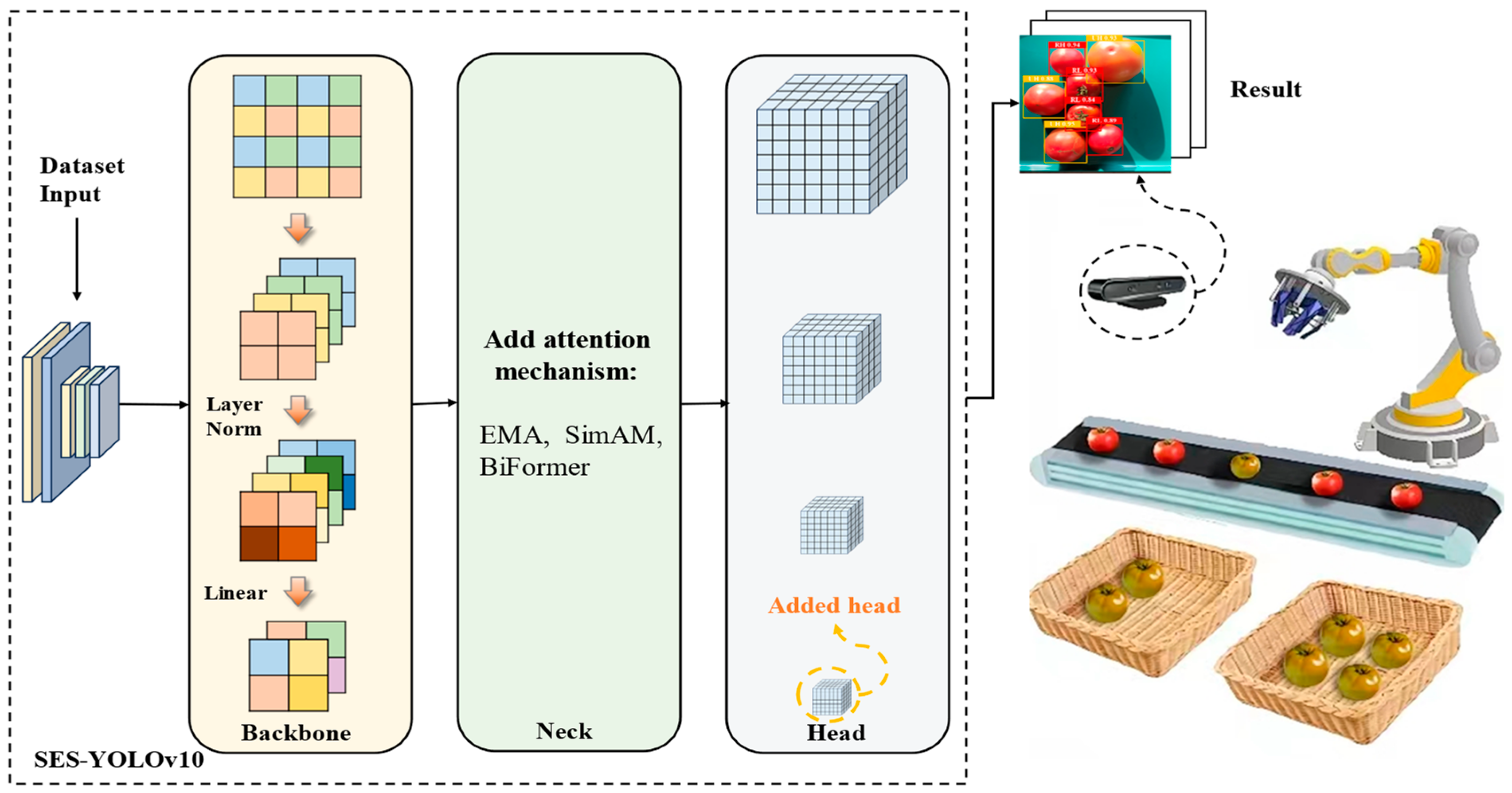

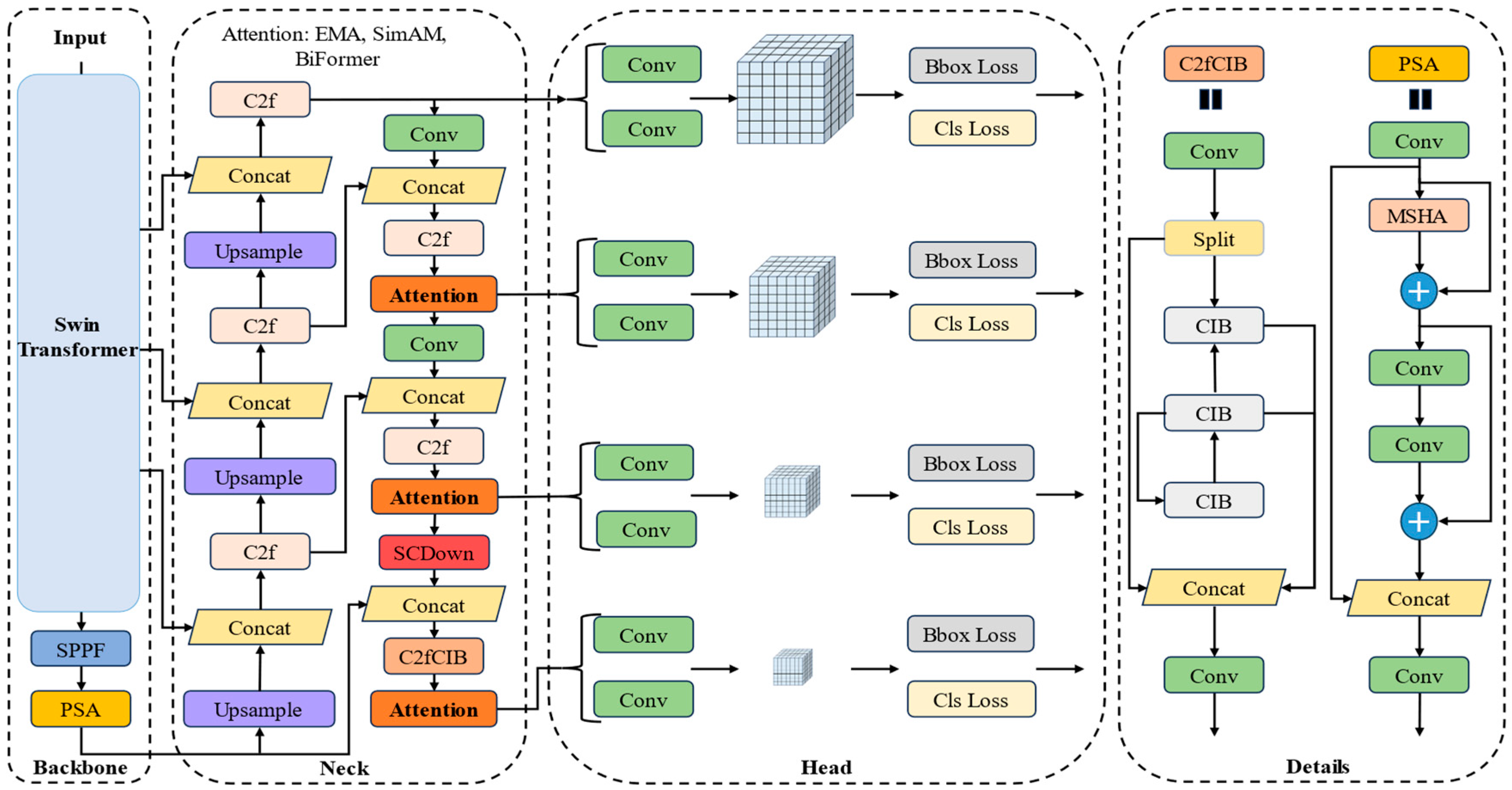

3.1. SES-YOLOv10n Modeling

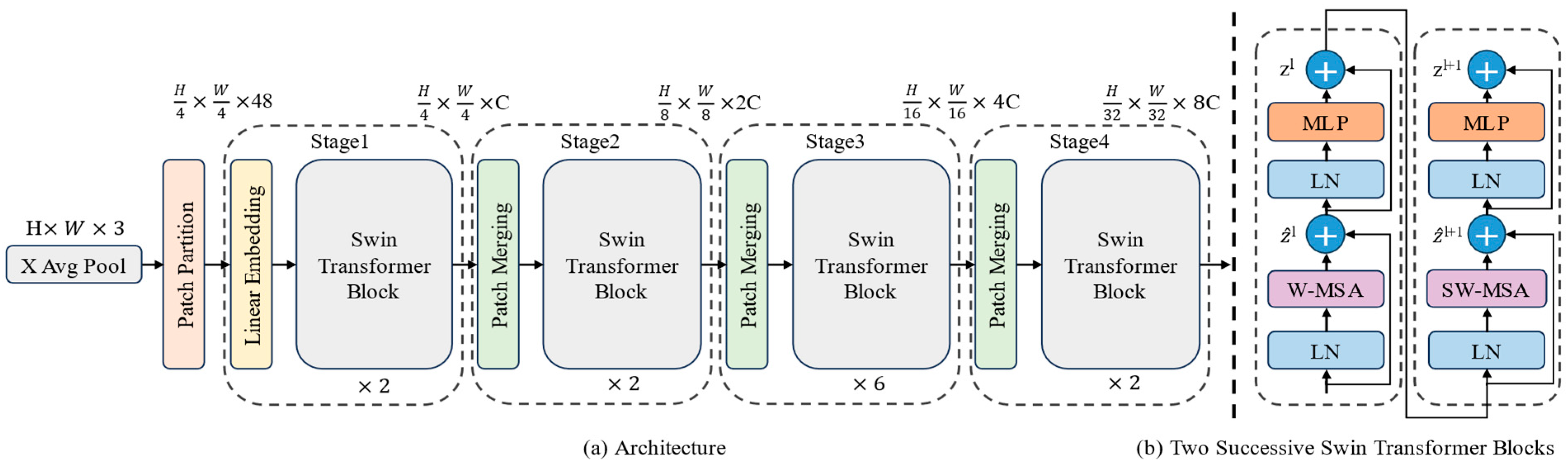

3.1.1. Swin Transformer Module

3.1.2. Attention Mechanism

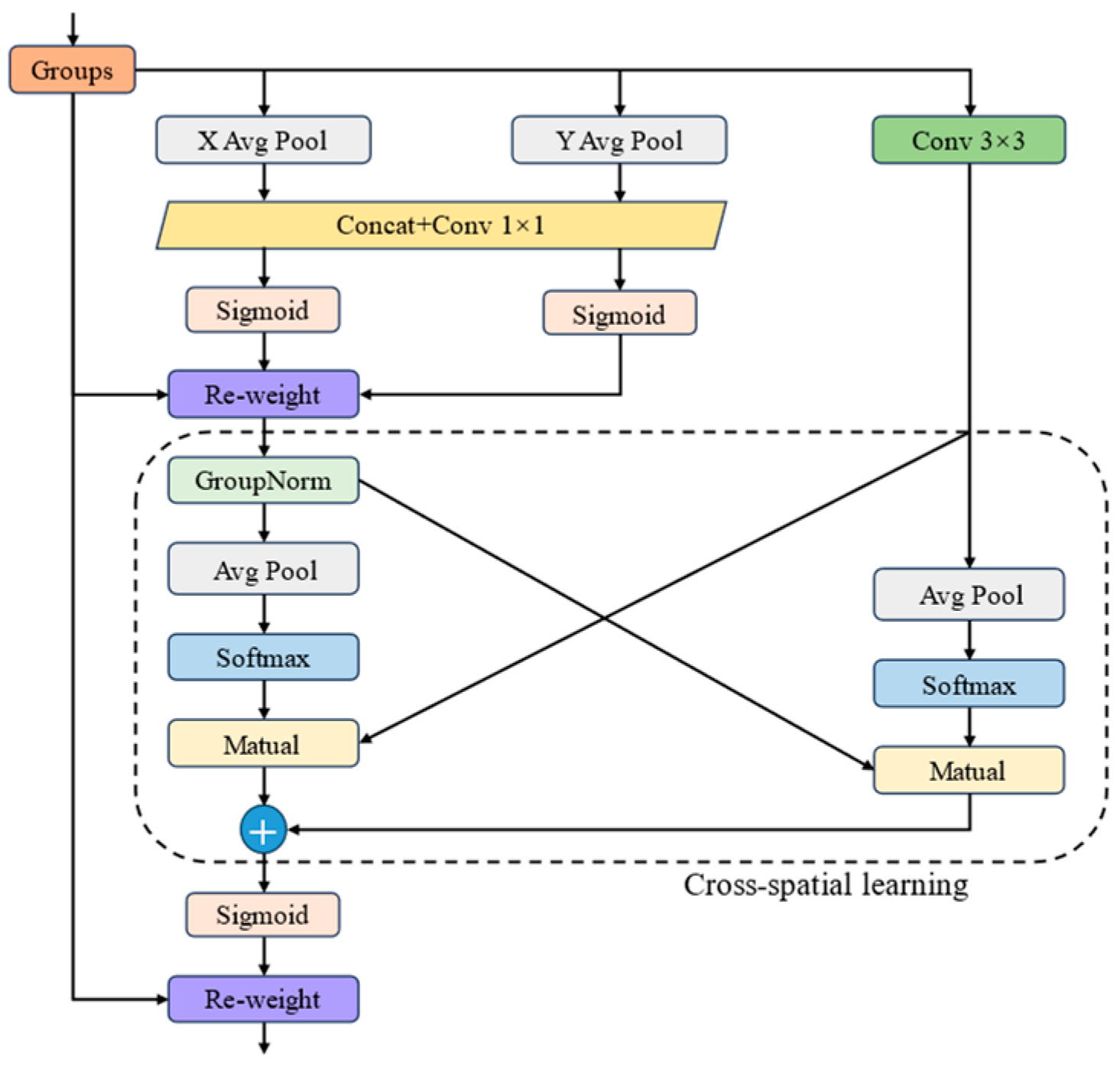

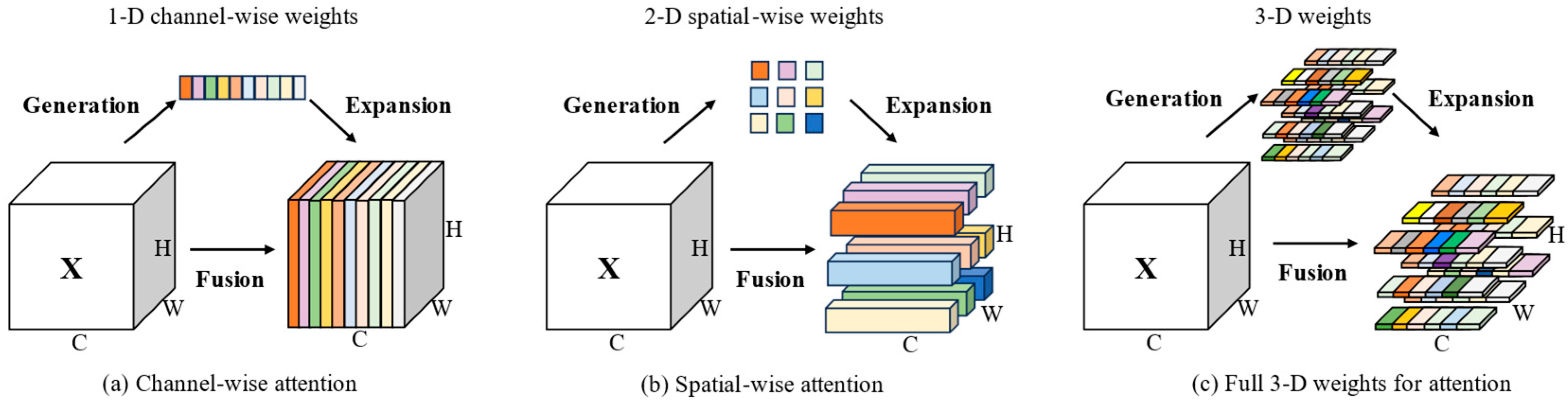

- (1)

- EMA

- (2)

- SimAM

- (3)

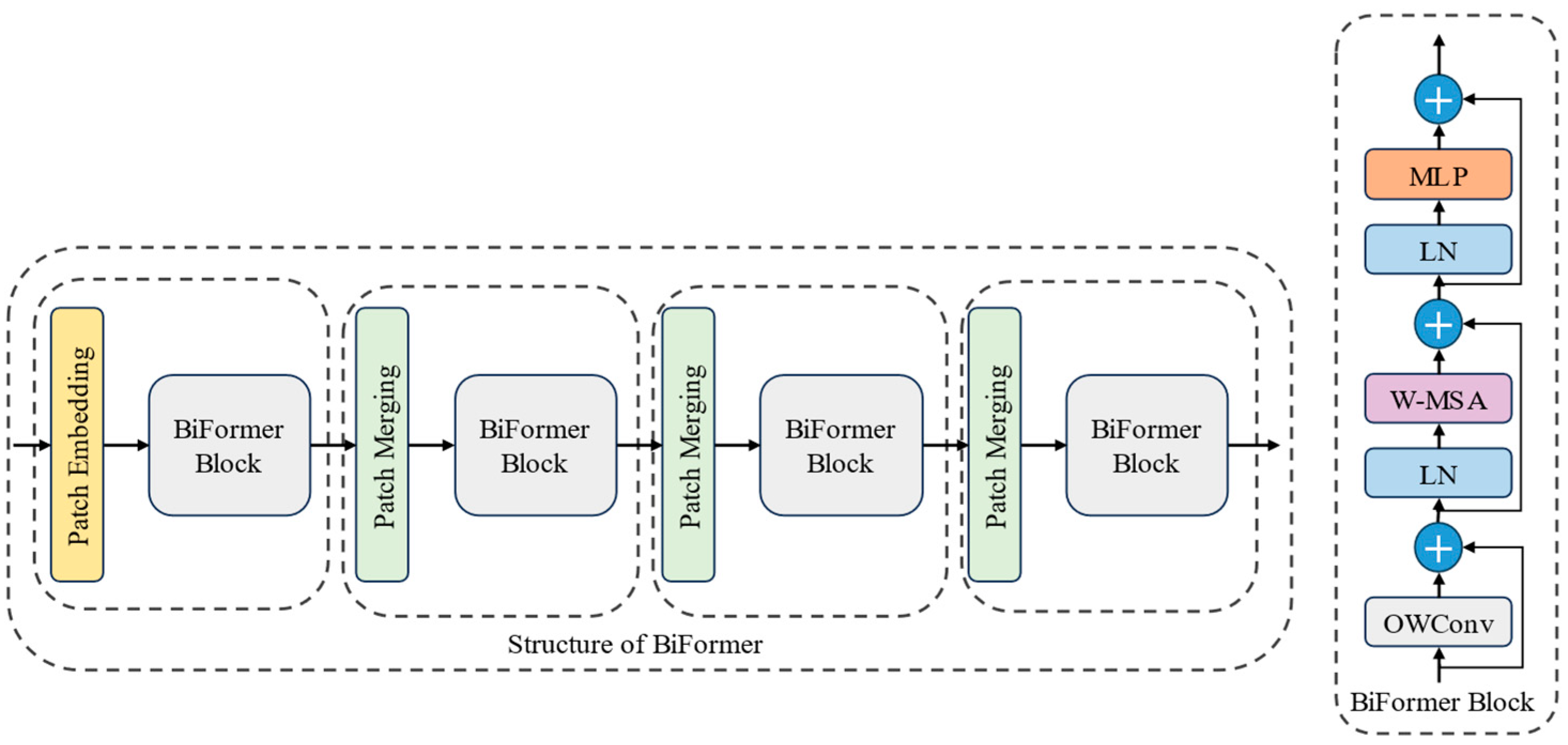

- BiFormer

3.1.3. Head

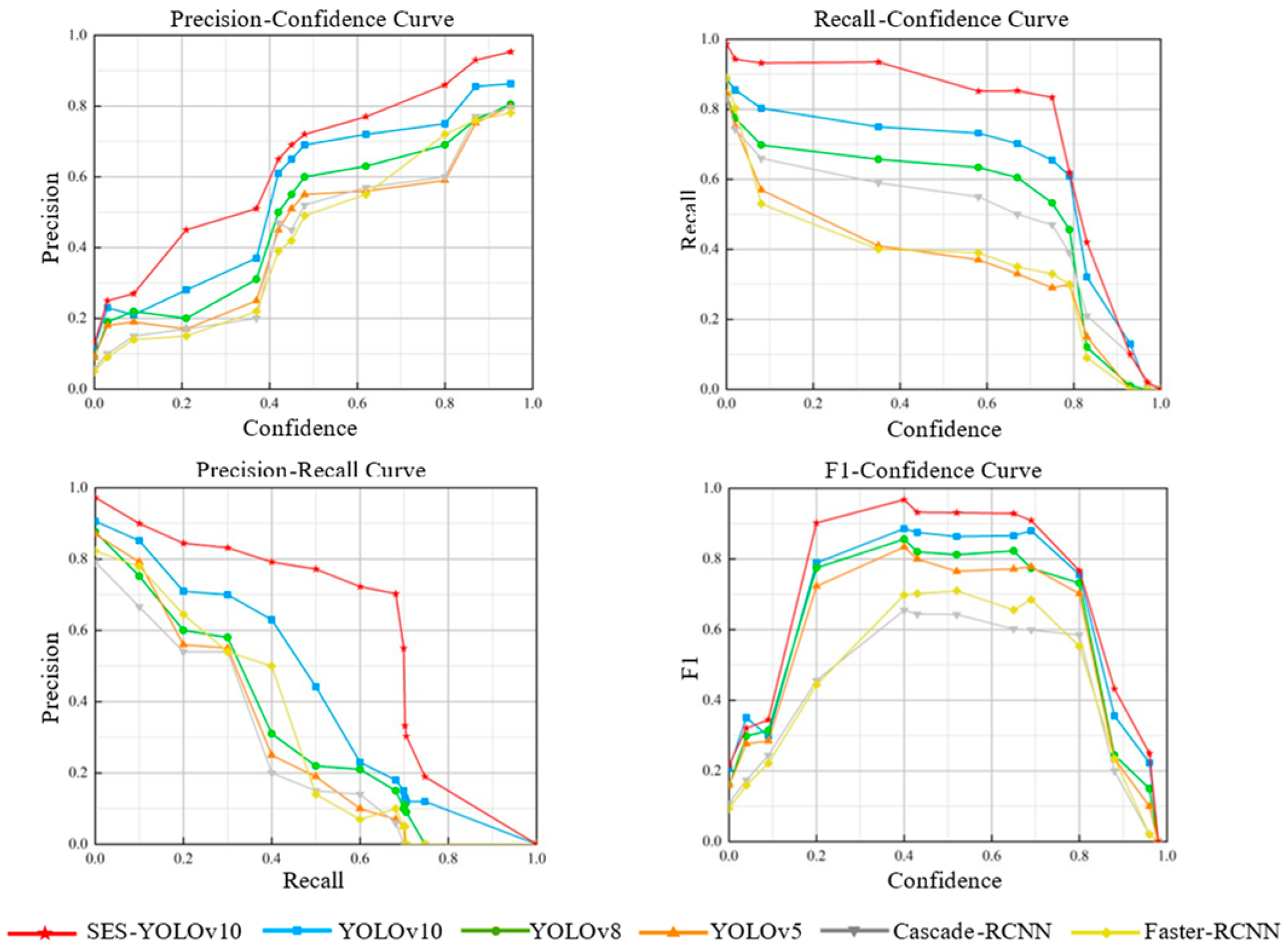

3.2. Performance Test

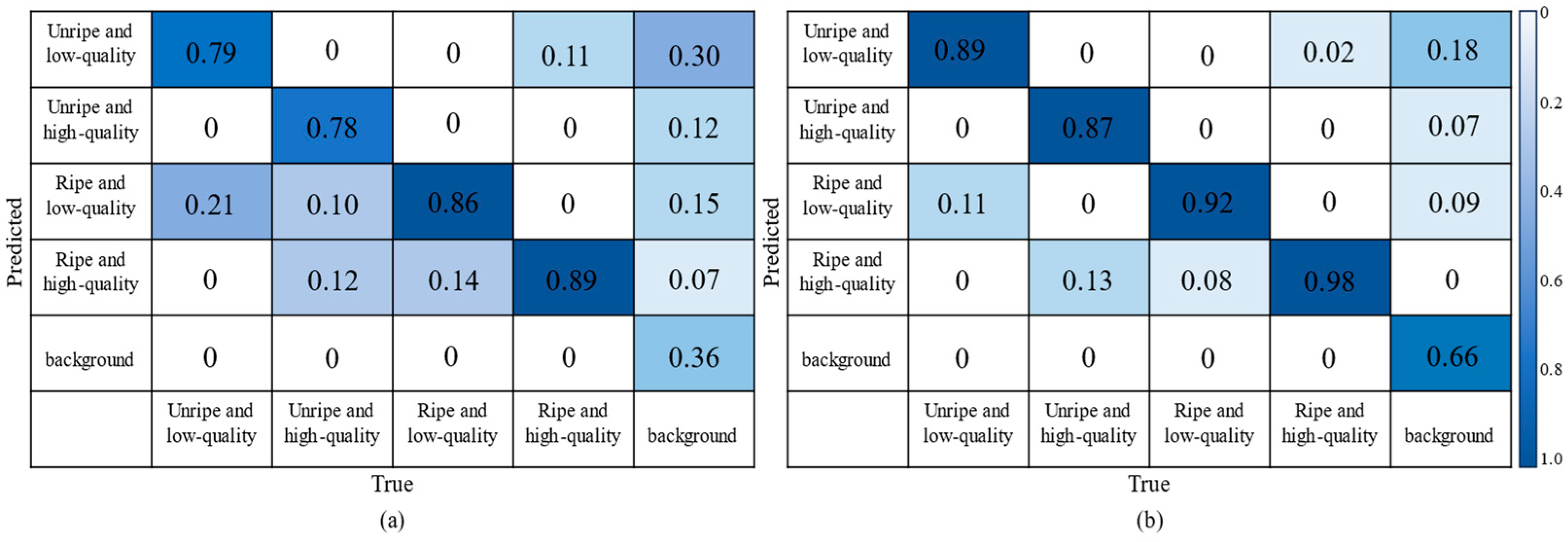

3.2.1. Classification Accuracy Evaluation

3.2.2. Experiments

3.2.3. Extended Evaluation

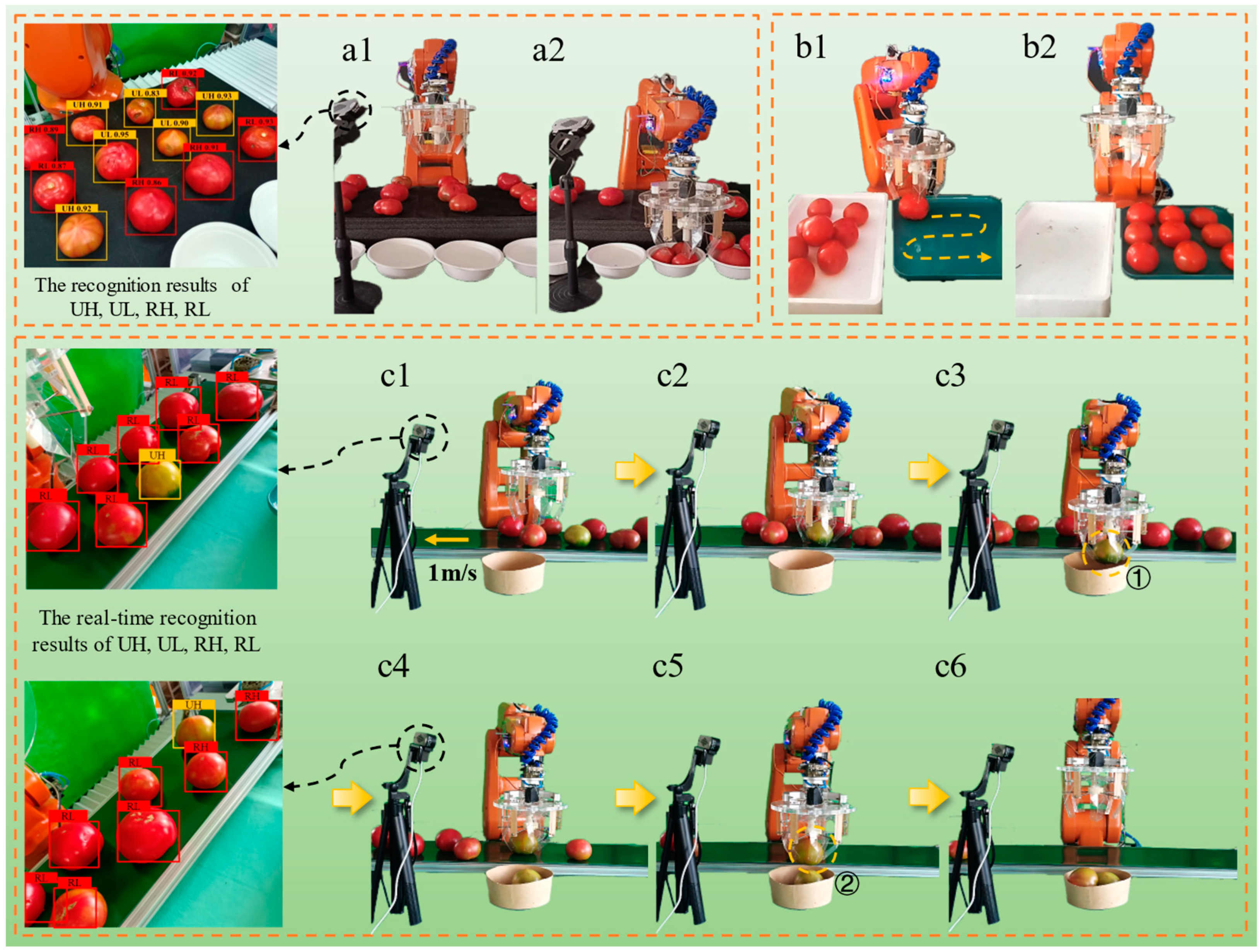

4. Rapid Grasping of Tomatoes

4.1. Experimental Principle and Equipment

4.2. Static Grasping

4.2.1. Classification Grasping Experiment

4.2.2. Pendulum Experiment

4.3. Dynamic Grasping

4.4. Nondestructive Experiment

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Li, T.; Sun, M.; He, Q.; Zhang, G.; Shi, G.; Ding, X.; Lin, S. Tomato recognition and location algorithm based on improved YOLOv5. Comput. Electron. Agric. 2023, 208, 107759.
2. Cardellicchio, A.; Solimani, F.; Dimauro, G.; Petrozza, A.; Summerer, S.; Cellini, F.; Renò, V. Detection of tomato plant phenotyping traits using YOLOv5-based single-stage detectors. Comput. Electron. Agric. 2023, 207, 107757.
3. Zheng, S.; Liu, Y.; Weng, W.; Jia, X.; Yu, S.; Wu, Z. Tomato recognition and localization method based on improved YOLOv5n-seg model and binocular stereo vision. Agronomy 2023, 13, 2339.
4. Zhang, J.; Xie, J.; Zhang, F.; Gao, J.; Yang, C.; Song, C.; Rao, W.; Zhang, Y. Greenhouse tomato detection and pose classification algorithm based on improved YOLOv5. Comput. Electron. Agric. 2024, 216, 108519.
5. Zhou, Z.; Zahid, U.; Majeed, Y.; Nisha Mustafa, S.; Sajjad, M.M.; Butt, H.D.; Fu, L. Advancement in artificial intelligence for on-farm fruit sorting and transportation. Front. Plant Sci. 2023, 14, 1082860.
6. Zhang, P.; Tang, B. A two-finger soft gripper based on a bistable mechanism. IEEE Robot. Autom. Lett. 2022, 7, 11330–11337.
7. Zhang, Z.; Ni, X.; Gao, W.; Shen, H.; Sun, M.; Guo, G.; Wu, H.; Jiang, S. Pneumatically controlled reconfigurable bistable bionic flower for robotic gripper. Soft Robot. 2022, 9, 657–668.
8. Zaidi, S.; Maselli, M.; Laschi, C.; Cianchetti, M. Actuation technologies for soft robot grippers and manipulators: A review. Curr. Robot. Rep. 2021, 2, 355–369.
9. Zaghloul, A.; Bone, G.M. 3D shrinking for rapid fabrication of origami-inspired semi-soft pneumatic actuators. IEEE Access 2020, 8, 191330–191340.
10. Zou, X.; Liang, T.; Yang, M.; LoPresti, C.; Shukla, S.; Akin, M.; Weil, B.T.; Hoque, S.; Gruber, E.; Mazzeo, A.D. Paper-based robotics with stackable pneumatic actuators. Soft Robot. 2022, 9, 542–551.
11. Wang, C.; Guo, H.; Liu, R.; Yang, H.; Deng, Z. A programmable origami-inspired webbed gripper. Smart Mater. Struct. 2021, 30, 055010.
12. Liu, Z.; Lin, Y.; Cao, Y.; Hu, H.; Wei, Y.; Zhang, Z.; Lin, S.; Guo, B. Swin transformer: Hierarchical vision transformer using shifted windows. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 10–17 October 2021; pp. 10012–10022.
13. Lin, A.; Chen, B.; Xu, J.; Zhang, Z.; Lu, G.; Zhang, D. Ds-transunet: Dual swin transformer u-net for medical image segmentation. IEEE Trans. Instrum. Meas. 2022, 71, 1–15.
14. Gong, H.; Mu, T.; Li, Q.; Dai, H.; Li, C.; He, Z.; Wang, W.; Han, F.; Tuniyazi, A.; Li, H.; et al. Swin-transformer-enabled YOLOv5 with attention mechanism for small object detection on satellite images. Remote Sens. 2022, 14, 2861.
15. Hao, W.; Ren, C.; Han, M.; Zhang, L.; Li, F.; Liu, Z. Cattle body detection based on YOLOv5-EMA for precision livestock farming. Animals 2023, 13, 3535.
16. You, H.; Lu, Y.; Tang, H. Plant disease classification and adversarial attack using SimAM-EfficientNet and GP-MI-FGSM. Sustainability 2023, 15, 1233.
17. Zhang, Y.; Wu, Z.; Wang, X.; Fu, W.; Ma, J.; Wang, G. Improved yolov8 insulator fault detection algorithm based on biformer. In Proceedings of the 2023 IEEE 5th International Conference on Power, Intelligent Computing and Systems (ICPICS), Shenyang, China, 14–16 July 2023; pp. 962–965.
18. Terrile, S.; Argüelles, M.; Barrientos, A. Comparison of different technologies for soft robotics grippers. Sensors 2021, 21, 3253.
19. Hu, Q.; Li, J.; Dong, E.; Sun, D. Soft scalable crawling robots enabled by programmable origami and electrostatic adhesion. IEEE Robot. Autom. Lett. 2023, 8, 2365–2372.
20. Chen, B.; Shao, Z.; Xie, Z.; Liu, J.; Pan, F.; He, L.; Zhang, L.; Zhang, Y.; Ling, X.; Peng, F.; et al. Soft origami gripper with variable effective length. Adv. Intell. Syst. 2021, 3, 2000251.
21. Wang, Z.; Or, K.; Hirai, S. A dual-mode soft gripper for food packaging. Robot. Auton. Syst. 2020, 125, 103427.
22. Hu, Q.; Dong, E.; Sun, D. Soft gripper design based on the integration of flat dry adhesive, soft actuator, and microspine. IEEE Trans. Robot. 2021, 37, 1065–1080.
23. Hussain, M. YOLO-v1 to YOLO-v8, the rise of YOLO and its complementary nature toward digital manufacturing and industrial defect detection. Machines 2023, 11, 677.
24. Liu, W.; Bai, X.; Yang, H.; Bao, R.; Liu, J. Tendon driven bistable origami flexible gripper for high-speed adaptive grasping. IEEE Robot. Autom. Lett. 2024, 9, 5417–5424.
25. Xu, X.; Feng, Z.; Cao, C.; Li, M.; Wu, J.; Wu, Z.; Shang, Y.; Ye, S. An improved swin transformer-based model for remote sensing object detection and instance segmentation. Remote Sens. 2021, 13, 4779.
26. Zhang, X.; Cui, B.; Wang, Z.; Zeng, W. Loader Bucket Working Angle Identification Method Based on YOLOv5s and EMA Attention Mechanism. IEEE Access 2024, 12, 105488–105496.
27. Mahaadevan, V.C.; Narayanamoorthi, R.; Gono, R.; Moldrik, P. Automatic identifier of socket for electrical vehicles using SWIN-transformer and SimAM attention mechanism-based EVS YOLO. IEEE Access 2023, 11, 111238–111254.
28. Zheng, X.; Lu, X. BPH-YOLOv5: Improved YOLOv5 based on biformer prediction head for small target cigarette detection. In Proceedings of the Jiangsu Annual Conference on Automation (JACA 2023), Changzhou, China, 10–12 November 2023.
29. Tan, L.; Liu, S.; Gao, J.; Liu, X.; Chu, L.; Jiang, H. Enhanced Self-Checkout System for Retail Based on Improved YOLOv10. arXiv 2024, arXiv:2407.21308.
30. Chen, W.; Liu, M.; Zhao, C.; Li, X.; Wang, Y. MTD-YOLO: Multi-task deep convolutional neural network for cherry tomato fruit bunch maturity detection. Comput. Electron. Agric. 2024, 216, 108533.
31. Fan, S.; Liang, X.; Huang, W.; Zhang, V.J.; Pang, Q.; He, X.; Li, L.; Zhang, C. Real-time defects detection for apple sorting using NIR cameras with pruning-based YOLOV4 network. Comput. Electron. Agric. 2022, 193, 106715.
32. Fu, L.; Yang, Z.; Wu, F.; Zou, X.; Lin, J.; Cao, Y.; Duan, J. YOLO-Banana: A lightweight neural network for rapid detection of banana bunches and stalks in the natural environment. Agronomy 2022, 12, 391.
33. Liu, Z.; Xiong, J.; Cai, M.; Li, X.; Tan, X. V-YOLO: A Lightweight and Efficient Detection Model for Guava in Complex Orchard Environments. Agronomy 2024, 14, 1988.
34. Jing, J.; Zhang, S.; Sun, H.; Ren, R.; Cui, T. YOLO-PEM: A Lightweight Detection Method for Young “Okubo” Peaches in Complex Orchard Environments. Agronomy 2024, 14, 1757.
35. Mi, Z.; Yan, W.Q. Strawberry Ripeness Detection Using Deep Learning Models. Big Data Cogn. Comput. 2024, 8, 92.

| Metric | Formula |
|---|---|
| Precision | |
| Recall | |
| Average Precision | |
| Mean Average Precision | |
| F1 score | |
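
The metrics listed in this table have widely used textbook definitions, and the sketch below illustrates them in Python. It is a minimal example under stated assumptions, not necessarily the exact formulation used by the authors: the function names are hypothetical, average precision is computed with all-point interpolation of the precision-recall curve (one common convention), and the recall values passed in are assumed to be sorted in ascending order.

```python
from typing import Sequence


def precision(tp: int, fp: int) -> float:
    """Fraction of predicted positives that are correct: TP / (TP + FP)."""
    return tp / (tp + fp) if (tp + fp) else 0.0


def recall(tp: int, fn: int) -> float:
    """Fraction of actual positives that are found: TP / (TP + FN)."""
    return tp / (tp + fn) if (tp + fn) else 0.0


def f1_score(p: float, r: float) -> float:
    """Harmonic mean of precision and recall: 2PR / (P + R)."""
    return 2 * p * r / (p + r) if (p + r) else 0.0


def average_precision(recalls: Sequence[float], precisions: Sequence[float]) -> float:
    """Area under the precision-recall curve (all-point interpolation).

    Assumes `recalls` is sorted in ascending order and paired with `precisions`.
    """
    # Sentinel points so the curve spans recall 0 to 1.
    r = [0.0] + list(recalls) + [1.0]
    p = [0.0] + list(precisions) + [0.0]
    # Replace each precision with the maximum precision at any higher recall.
    for i in range(len(p) - 2, -1, -1):
        p[i] = max(p[i], p[i + 1])
    # Sum rectangle areas between consecutive recall points.
    return sum((r[i + 1] - r[i]) * p[i + 1] for i in range(len(r) - 1))


def mean_average_precision(ap_per_class: Sequence[float]) -> float:
    """Mean of the per-class average precision values."""
    return sum(ap_per_class) / len(ap_per_class)


if __name__ == "__main__":
    # Illustrative counts only; these are not values from the paper.
    p = precision(tp=8, fp=2)
    r = recall(tp=8, fn=2)
    print(p, r, f1_score(p, r))
    print(average_precision([0.5, 1.0], [1.0, 0.5]))
```

In this convention, mAP@.5 is the mean of the per-class AP values at an IoU threshold of 0.5, and mAP@.5:.95 averages that quantity over IoU thresholds from 0.5 to 0.95.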

| Model | Precision/% | Recall/% | mAP@.5/% | mAP@.5:.95/% |
|---|---|---|---|---|
| YOLOv5 | 79.9 | 84.3 | 87.0 | 68.8 |
| Faster-RCNN | 78.1 | 88.9 | 82.3 | 64.2 |
| Cascade-RCNN | 79.6 | 83.2 | 79.2 | 61.6 |
| YOLOv8 | 80.5 | 84.1 | 87.7 | 71.9 |
| YOLOv10 | 86.3 | 88.6 | 90.6 | 79.0 |
| SES-YOLOv10 | 95.3 | 98.7 | 97.3 | 87.9 |
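
To make the size of the improvement explicit, the short sketch below computes the gain of SES-YOLOv10 over the YOLOv10 baseline in percentage points, using only the two corresponding rows of the table above. The dictionary layout and the `gains` helper are illustrative assumptions, not part of the authors' code.

```python
# Rows copied from the comparison table (values in percent).
METRICS = ("precision", "recall", "mAP@.5", "mAP@.5:.95")
RESULTS = {
    "YOLOv10": (86.3, 88.6, 90.6, 79.0),
    "SES-YOLOv10": (95.3, 98.7, 97.3, 87.9),
}


def gains(baseline: str, improved: str) -> dict:
    """Return the per-metric difference in percentage points (improved - baseline)."""
    return {
        name: round(new - old, 1)
        for name, old, new in zip(METRICS, RESULTS[baseline], RESULTS[improved])
    }


if __name__ == "__main__":
    for name, delta in gains("YOLOv10", "SES-YOLOv10").items():
        print(f"{name}: +{delta} percentage points")
```

These are absolute differences in percentage points, not relative percentage changes.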

| Ref. | Model | Plant | mAP (%) | Focus |
|---|---|---|---|---|
| Chen et al. (2024) [30] | MTD-YOLOv7 | Tomato | 86.6 | Ripe/unripe |
| Fan et al. (2022) [31] | YOLOv4P | Apple | 93.74 | NIR images |
| Fu et al. (2022) [32] | YOLO-Banana(v5) | Banana | 92.19 | Bunches/stalks |
| Liu et al. (2024) [33] | V-YOLO(v10) | Guava | 93.3 | Detection |
| Jing et al. (2024) [34] | YOLO-PEM(v8) | Peach | 93.15 | Detection |
| Mi et al. (2024) [35] | YOLOv9-ST | Strawberry | 87.3 | Ripe/unripe |
| Proposed method | SES-YOLOv10 | Tomato | 95.3 | Tomato types |


© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Liu, W.; Wang, S.; Gao, X.; Yang, H. A Tomato Recognition and Rapid Sorting System Based on Improved YOLOv10. Machines 2024, 12, 689. https://doi.org/10.3390/machines12100689
