Technology Modules Providing Solutions for Agile Manufacturing

Abstract

1. Introduction

2. Solutions for Agile Manufacturing

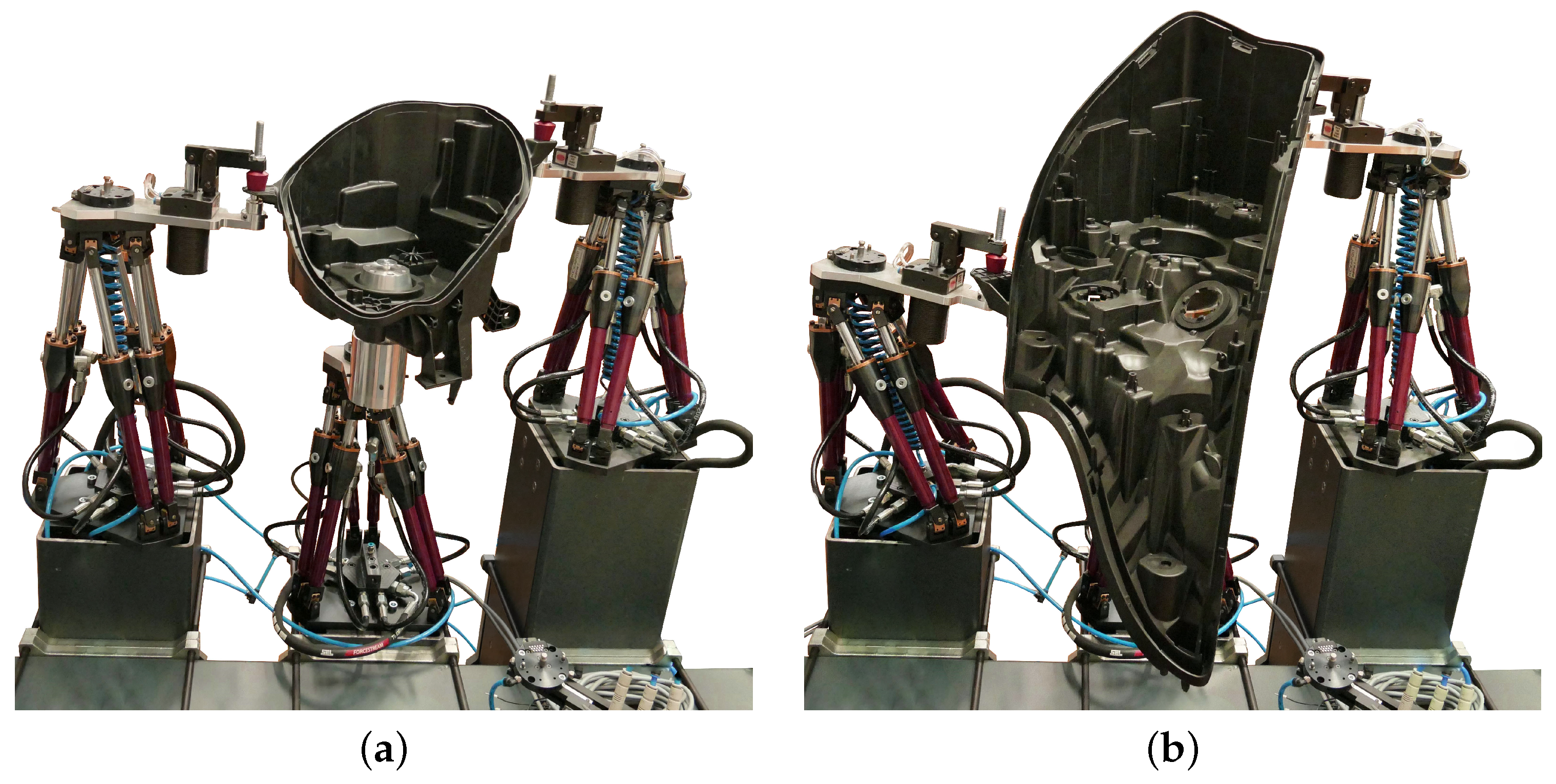

2.1. Optimal Locations and Postures of Reconfigurable Fixtures

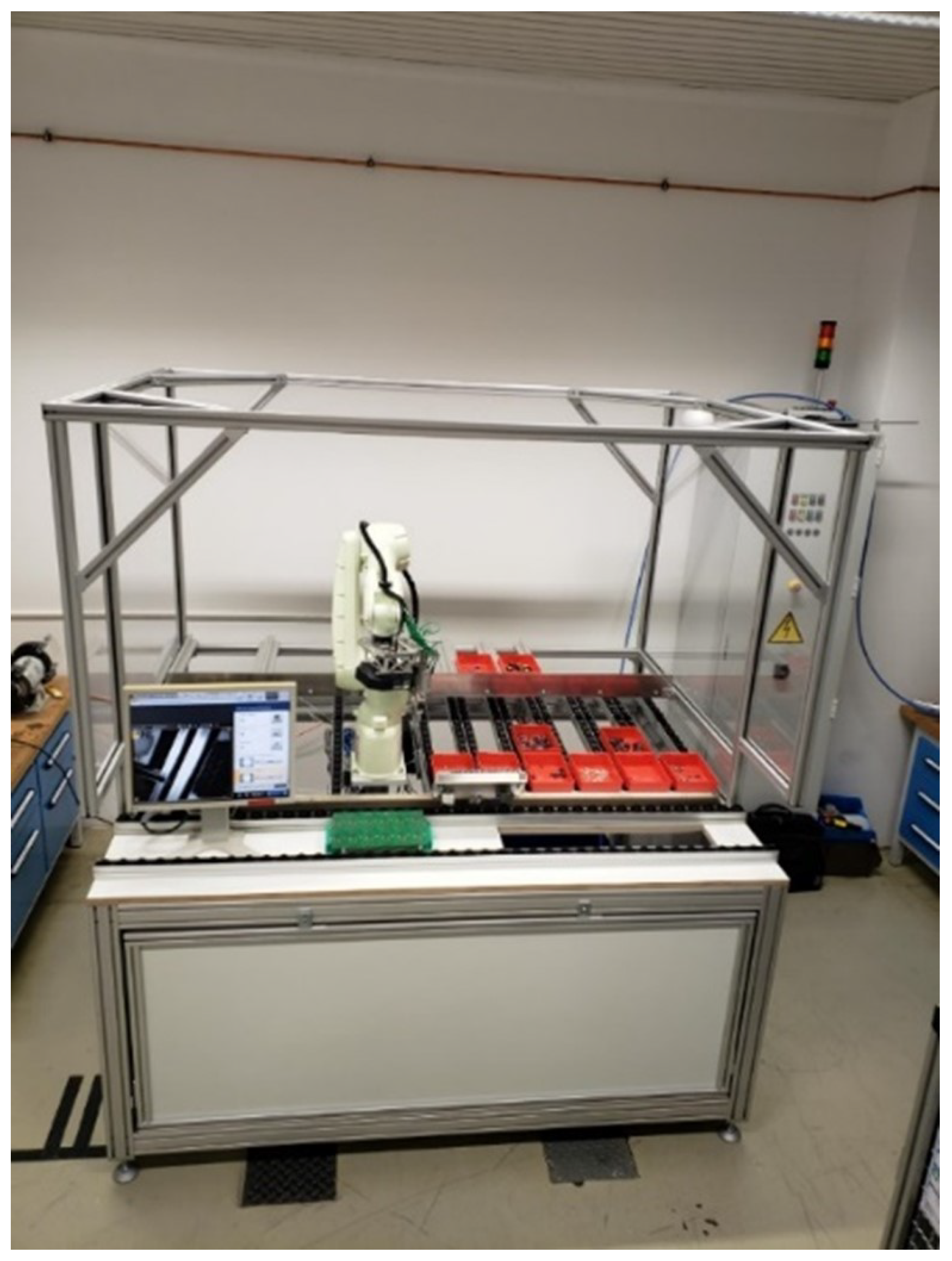

2.2. Assembly of Through-Hole Technology Printed Circuit Boards

2.3. Object Detection

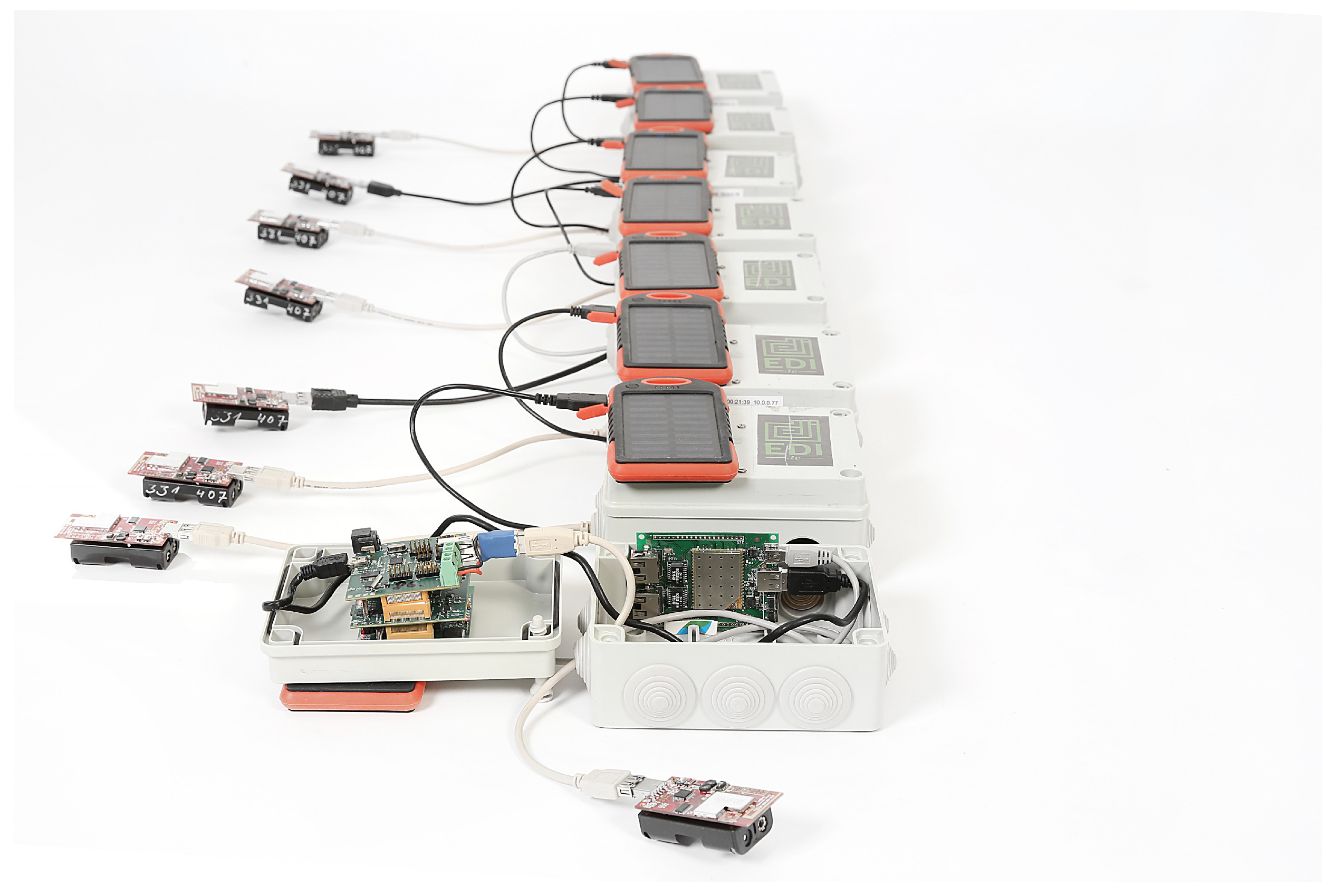

2.4. Industrial IoT Robustness Simulation Modules

2.5. Predictive Maintenance with IoT

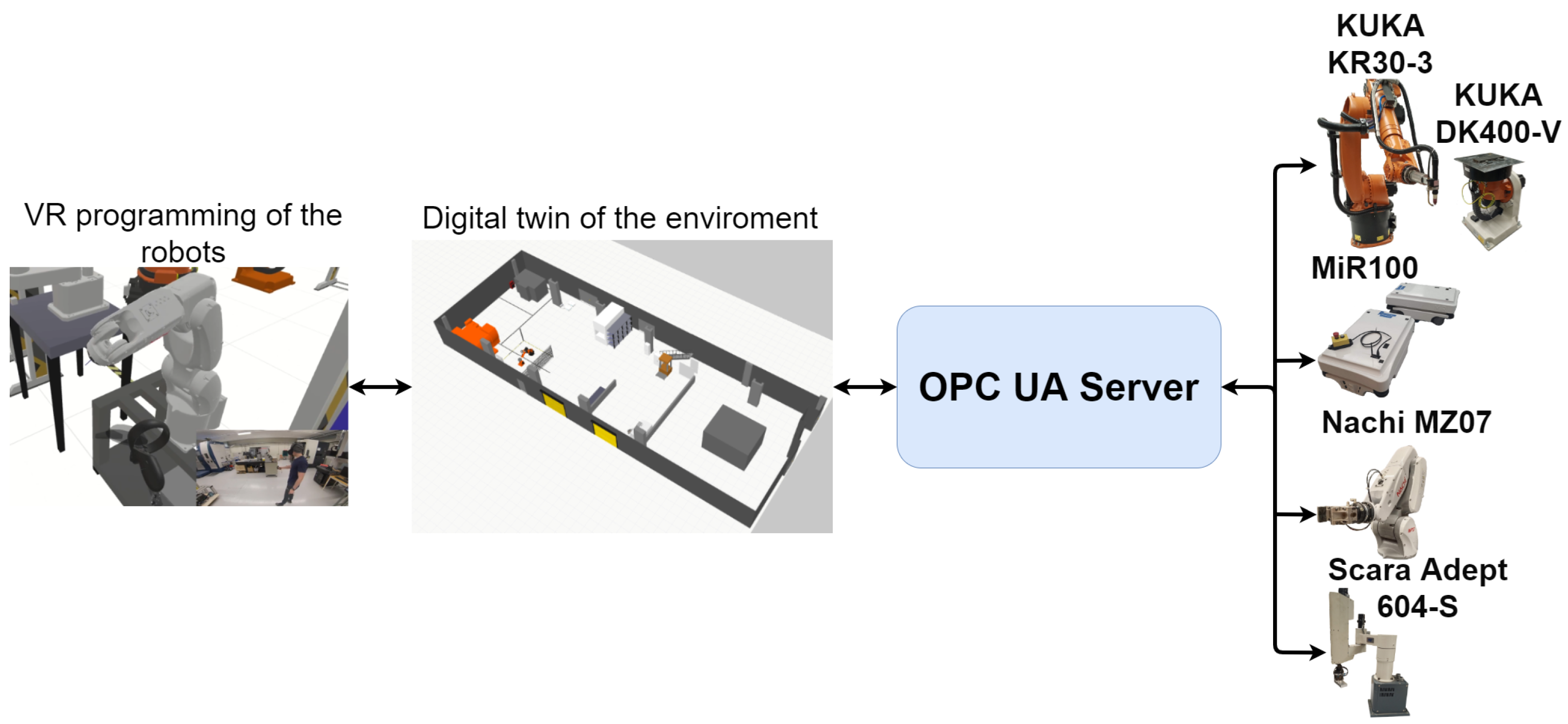

2.6. Virtualization of a Robot Cell for Training and Production Prototyping

2.7. VR Programming of Manufacturing Cells

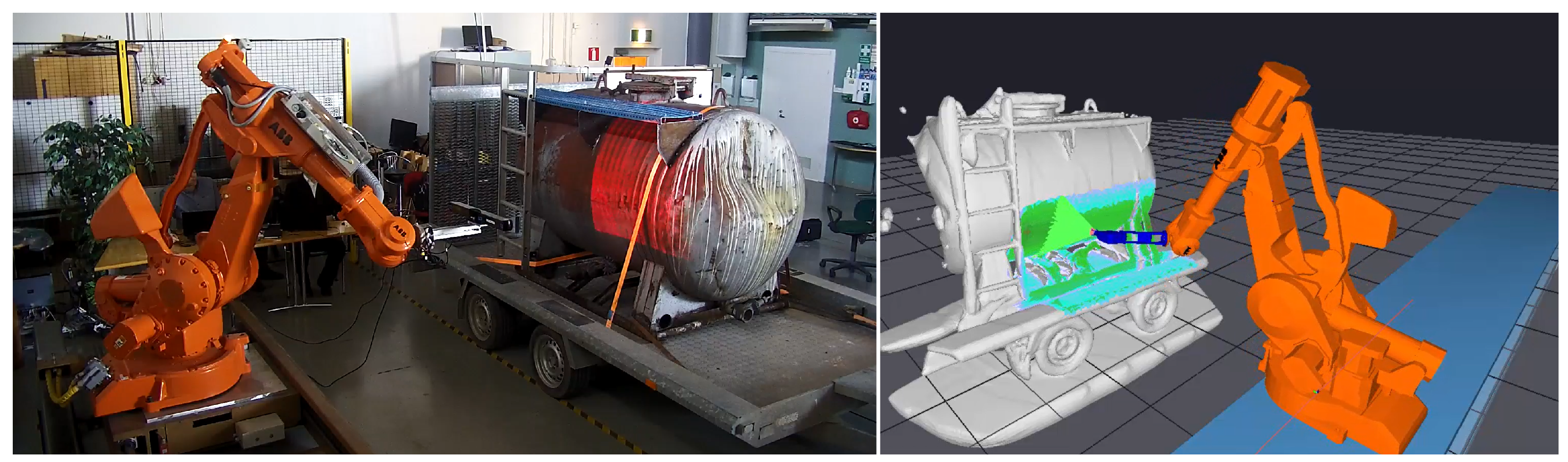

2.8. Online Trajectory Generation with 3D Camera for Industrial Robot

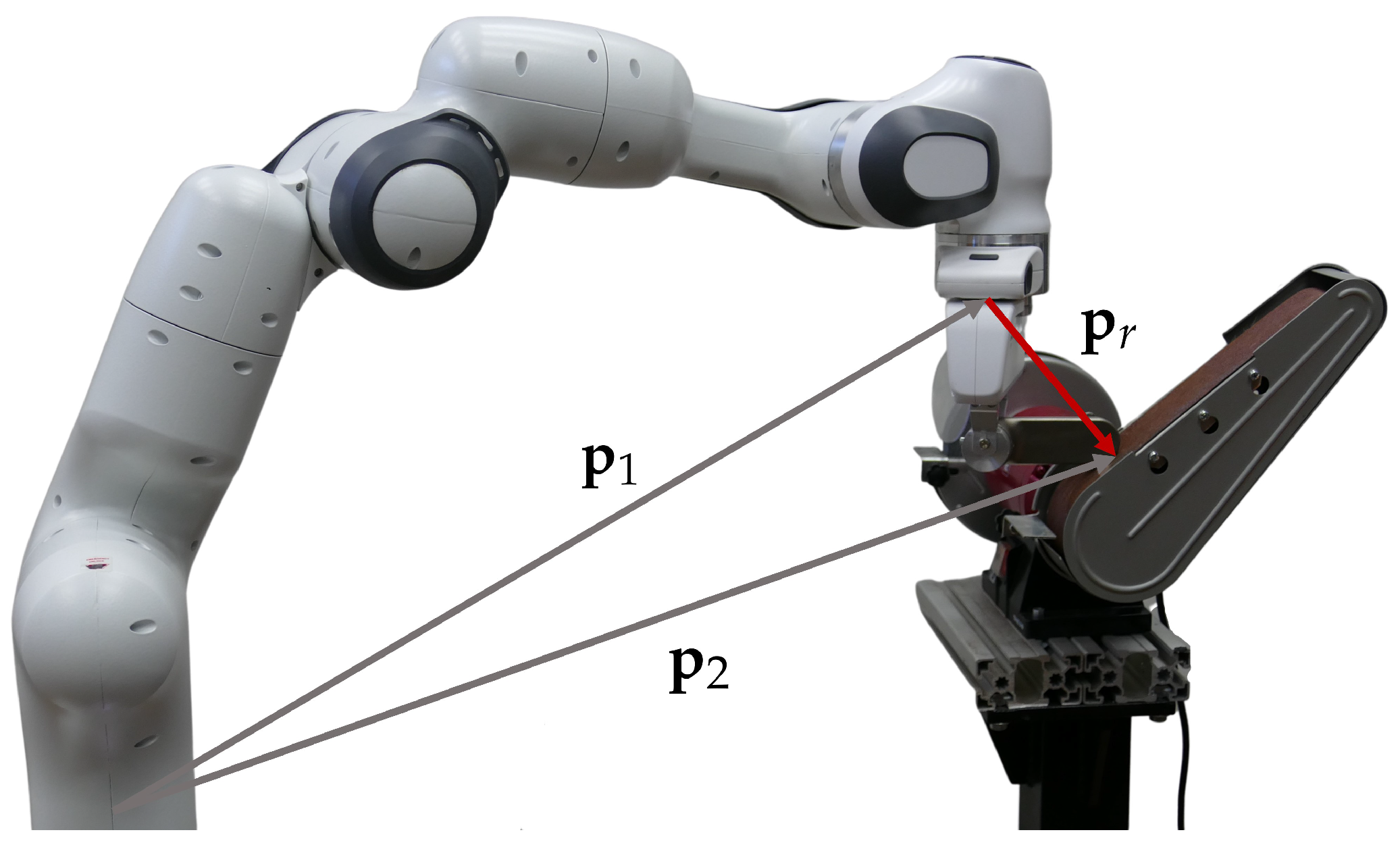

2.9. Robot Programming of Hard to Transfer Tasks by Manual Guidance

2.10. Dynamic Task Planning & Work Re-Organization

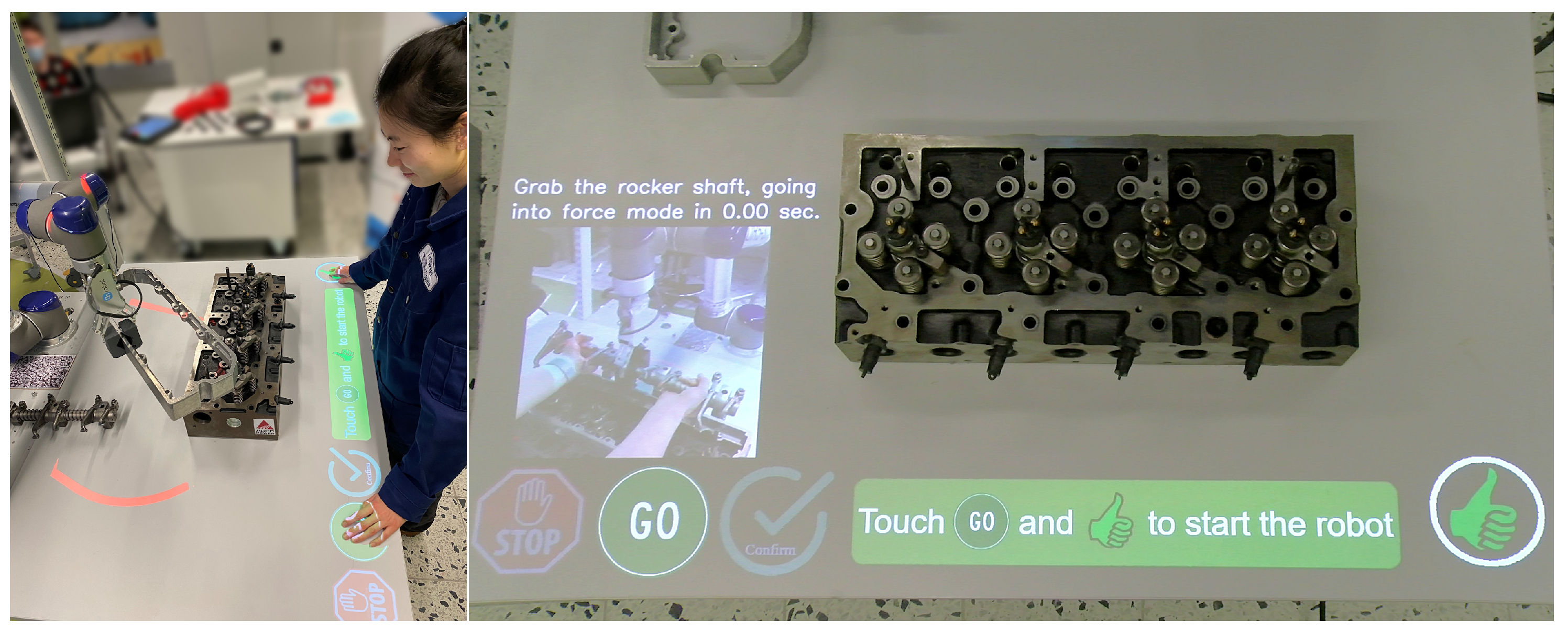

2.11. Projector Based GUI for HRC

2.12. Safe Human Detection in a Collaborative Work Cell

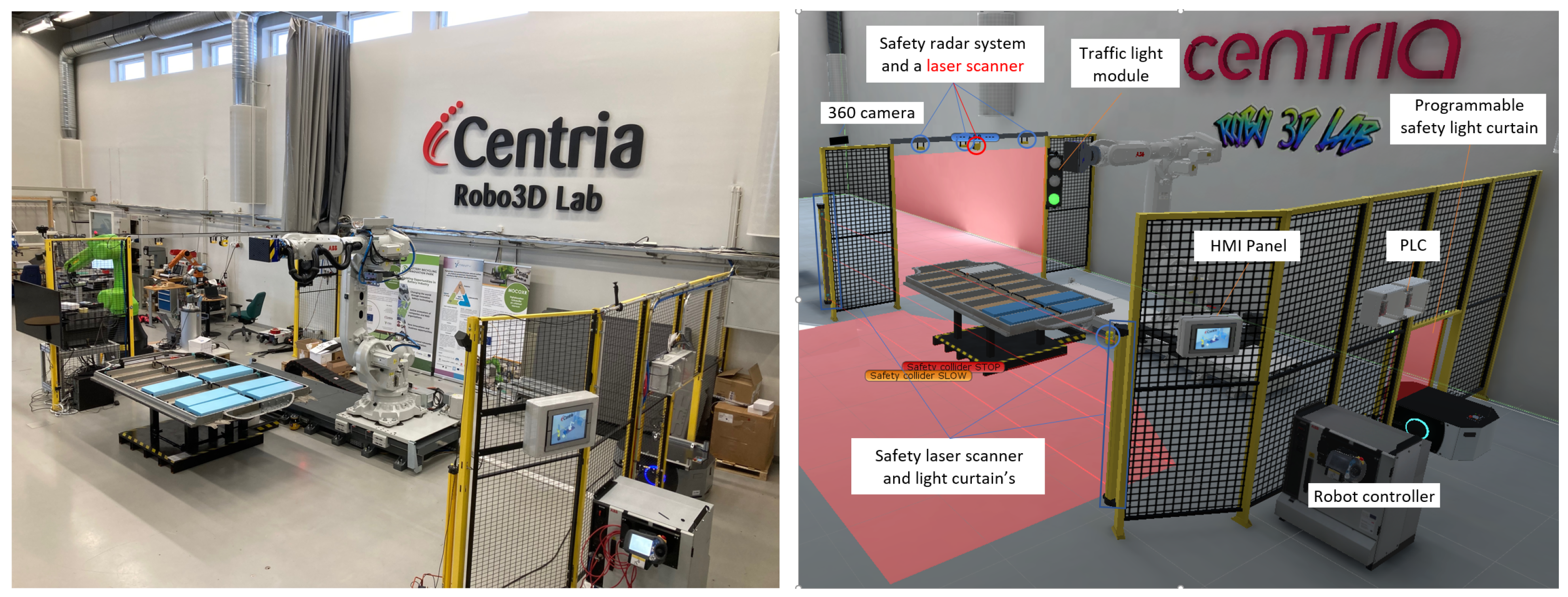

2.13. Adaptive Speed and Separation Monitoring for Safe Human-Robot Collaboration

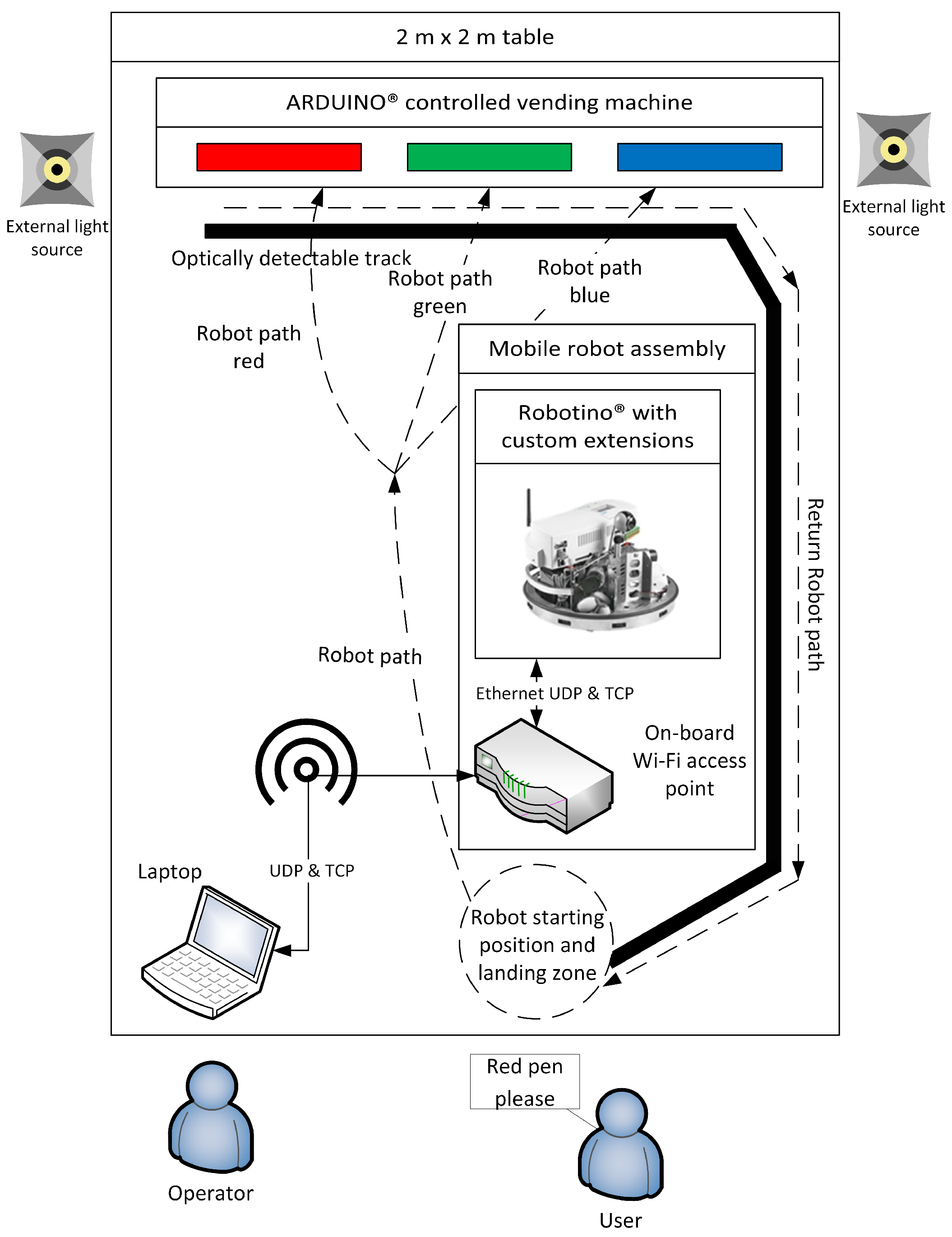

2.14. Mobile Robot Environment Detection

3. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
| --- | --- |
| SME | Small and Medium-sized Enterprises |
| PCB | Printed Circuit Board |
| WSN | Wireless Sensor Networks |
| IoT | Internet of Things |
| AI | Artificial Intelligence |
| HRC | Human-Robot Collaboration |
| GUI | Graphical User Interface |
| TRL | Technology Readiness Level |
| THT | Through-Hole Technology |
| SMD | Surface Mount Device |
| IIoT | Industrial Internet of Things |
| VR | Virtual Reality |
| DLP | Digital Light Processing |
| UI | User Interface |
| HMI | Human Machine Interface |
| AGV | Automated Ground Vehicles |
| CAD | Computer Aided Design |
| TCP | Tool Center Point |
| IDS | Intrusion Detection System |
| VPN | Virtual Private Network |
| MMS | Manufacturing Management Software |
| KPI | Key Performance Indicator |
| OPC UA | Open Platform Communications Unified Architecture |
| iLfD | Incremental Learning from Demonstration |
| DMP | Dynamic Movement Primitives |
| ROS | Robot Operating System |
| RGB | Red, Green and Blue |
| PLC | Programmable Logic Controller |
| BLE | Bluetooth Low Energy |
| OCR | Optical Character Recognition |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Deniša, M.; Ude, A.; Simonič, M.; Kaarlela, T.; Pitkäaho, T.; Pieskä, S.; Arents, J.; Judvaitis, J.; Ozols, K.; Raj, L.; et al. Technology Modules Providing Solutions for Agile Manufacturing. Machines 2023, 11, 877. https://doi.org/10.3390/machines11090877

Deniša M, Ude A, Simonič M, Kaarlela T, Pitkäaho T, Pieskä S, Arents J, Judvaitis J, Ozols K, Raj L, et al. Technology Modules Providing Solutions for Agile Manufacturing. Machines. 2023; 11(9):877. https://doi.org/10.3390/machines11090877

Chicago/Turabian Style: Deniša, Miha, Aleš Ude, Mihael Simonič, Tero Kaarlela, Tomi Pitkäaho, Sakari Pieskä, Janis Arents, Janis Judvaitis, Kaspars Ozols, Levente Raj, et al. 2023. "Technology Modules Providing Solutions for Agile Manufacturing" Machines 11, no. 9: 877. https://doi.org/10.3390/machines11090877

APA Style: Deniša, M., Ude, A., Simonič, M., Kaarlela, T., Pitkäaho, T., Pieskä, S., Arents, J., Judvaitis, J., Ozols, K., Raj, L., Czmerk, A., Dianatfar, M., Latokartano, J., Schmidt, P. A., Mauersberger, A., Singer, A., Arnarson, H., Shu, B., Dimosthenopoulos, D., ... Lanz, M. (2023). Technology Modules Providing Solutions for Agile Manufacturing. Machines, 11(9), 877. https://doi.org/10.3390/machines11090877