UniRoVE: Unified Robot Virtual Environment Framework

Abstract

1. Introduction

2. Related Work

3. Equipment and Methods

3.1. System Development

3.2. Performance Analysis

3.2.1. Qualitative Evaluation

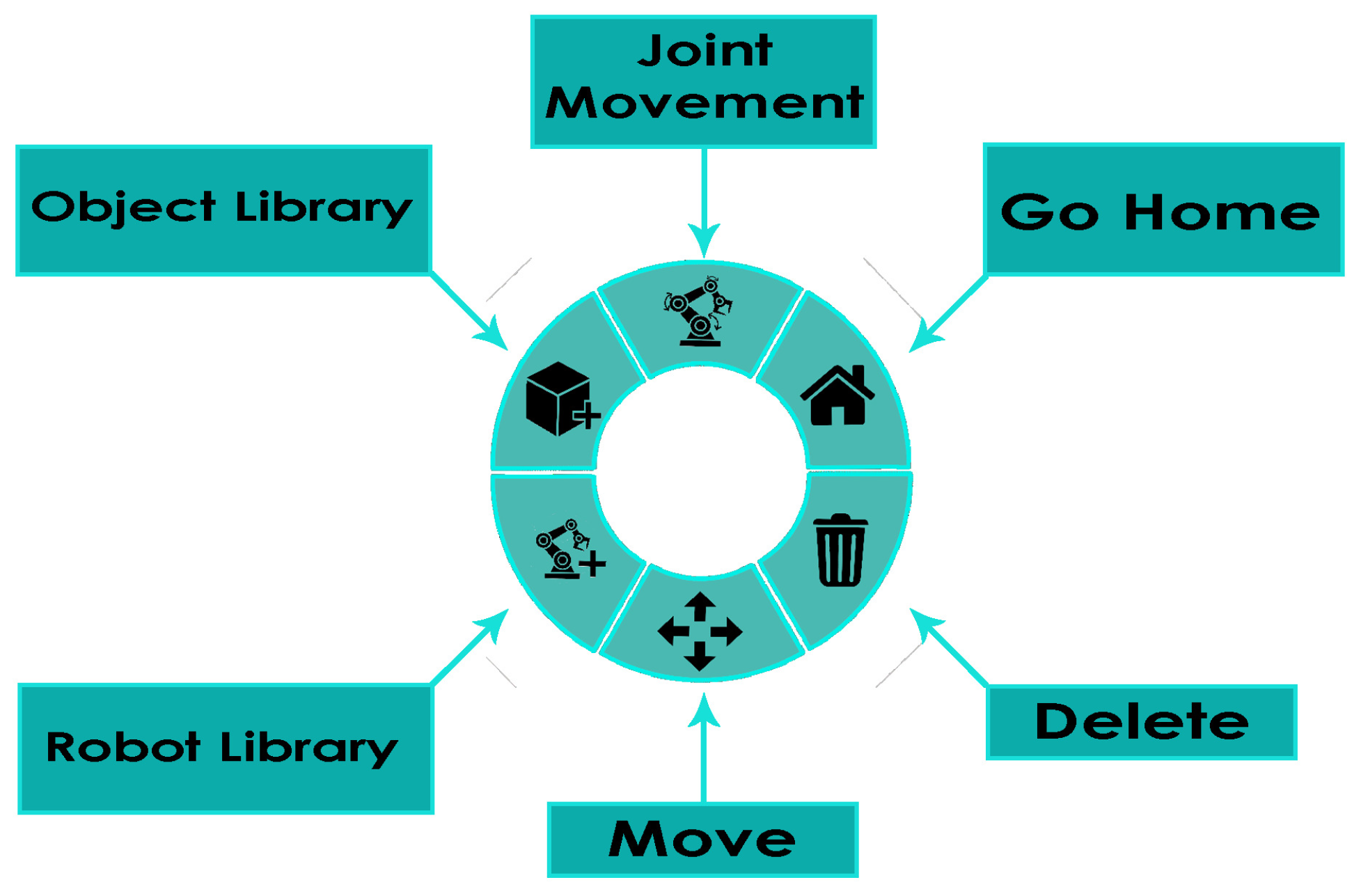

- Interaction Realism: this category covers questions about the realism of the environment and the user's subjective impression after performing simple interactions with the framework. An example question is whether the user had any difficulty interacting with the radial menu.

- Robotics Motor: this category concerns the robotics simulation and analyzes the user's experience of working with the simulated robot. An example question is whether the user gained a better understanding of how robots move in reality.

- Personalisation: this category evaluates the sense of freedom the user had while assessing the system. An example question is whether the user felt that some functionality was missing, or wanted to accomplish something that was not yet implemented.

3.2.2. Participants

- Profile 1: Participants with a background in robotics, practical experience with real robots, and experience with VR applications.

- Profile 2: Participants with a formal education in robotics and previous experience with VR applications, but no practical experience with real robots.

- Profile 3: Participants without any prior background in robotics and no experience with real robots, but with previous experience with VR applications.

- Profile 4: Participants without any prior background in robotics, no experience with real robots, and without previous experience with VR applications.
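The four profiles above are defined by three yes/no attributes, which can be sketched as a lookup. This is a minimal illustration only: the `Participant` class, its field names, and `profile_of` are hypothetical and not part of the framework, and attribute combinations not listed above are treated as unassigned.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Participant:
    # Hypothetical field names, chosen to mirror the three profile criteria.
    robotics_background: bool    # background or formal education in robotics
    real_robot_experience: bool  # practical experience with real robots
    vr_experience: bool          # previous experience with VR applications


# Keys are (robotics_background, real_robot_experience, vr_experience).
_PROFILES = {
    (True, True, True): 1,
    (True, False, True): 2,
    (False, False, True): 3,
    (False, False, False): 4,
}


def profile_of(p: Participant) -> Optional[int]:
    """Return the profile number (1-4), or None for a combination not listed."""
    key = (p.robotics_background, p.real_robot_experience, p.vr_experience)
    return _PROFILES.get(key)
```

Returning `None` for uncovered combinations (for example, robotics experience without any VR experience) keeps the sketch from silently forcing a participant into one of the four groups.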

3.2.3. Procedure

- Teleport within the scenario.

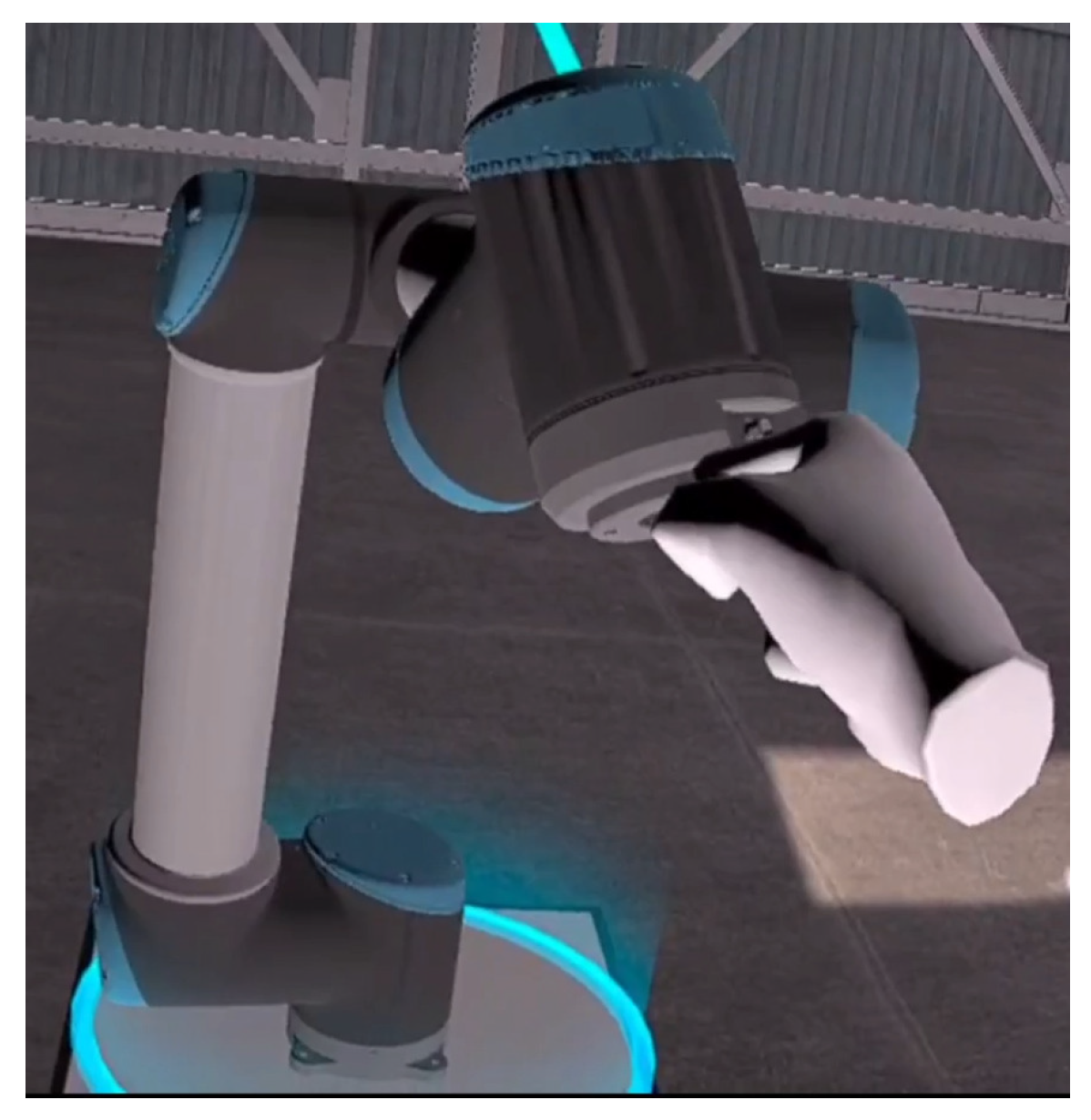

- Perform a Cartesian jog with the pre-imported UR10 robot.

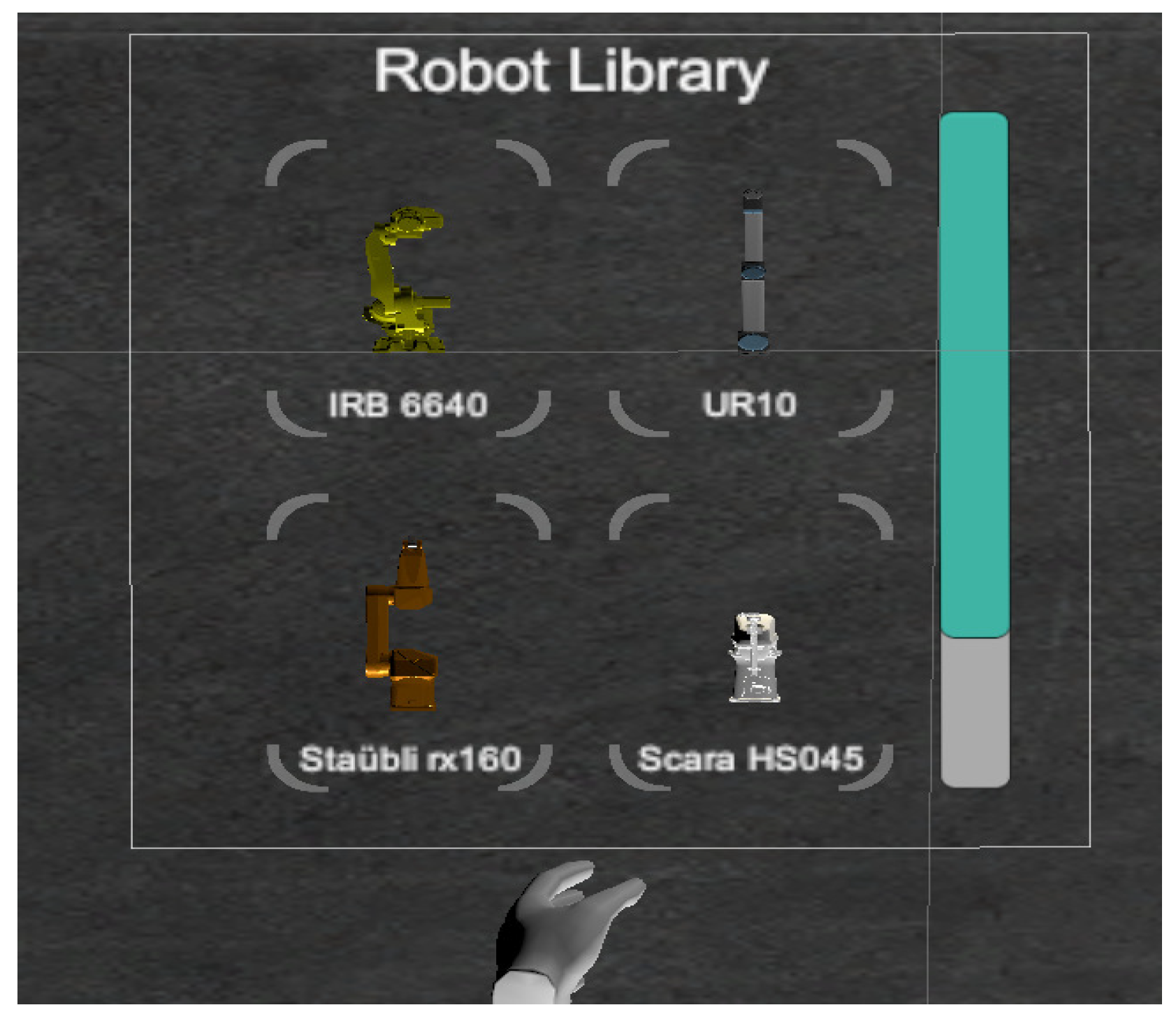

- Introduce a new robot into the scenario using the robot library.

- Perform a joint jog with the newly placed robot.

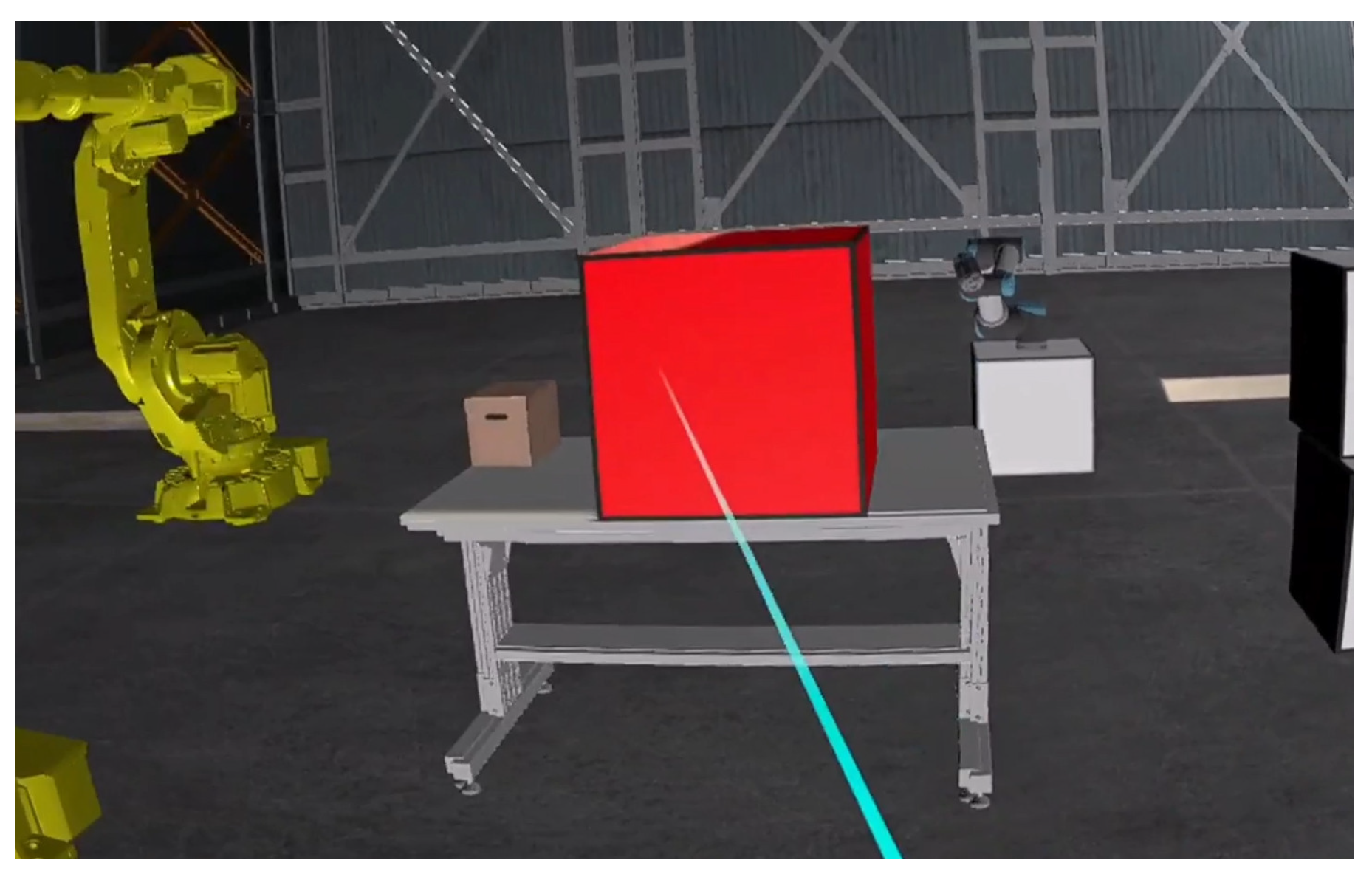

- Place 5 different objects within the scenario, utilizing at least 2 distinct types.

- Delete 1 robot and 1 object from the scenario.
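The completion criteria of the steps above can be expressed as a small checklist. The following is a sketch under stated assumptions: `SessionLog`, its fields, and `pending_tasks` are hypothetical names introduced for illustration, and nothing here reflects how the framework itself records a session.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class SessionLog:
    """Hypothetical record of what one participant did during the session."""
    teleported: bool = False
    cartesian_jog_ur10: bool = False
    robot_added_from_library: bool = False
    joint_jog_new_robot: bool = False
    # One entry per placed object, holding that object's type name.
    placed_object_types: List[str] = field(default_factory=list)
    robots_deleted: int = 0
    objects_deleted: int = 0


def pending_tasks(log: SessionLog) -> List[str]:
    """Return the procedure steps the log does not yet satisfy, in order."""
    placed = log.placed_object_types
    checks = [
        ("teleport", log.teleported),
        ("cartesian jog (UR10)", log.cartesian_jog_ur10),
        ("add robot from library", log.robot_added_from_library),
        ("joint jog (new robot)", log.joint_jog_new_robot),
        # At least 5 objects placed, drawn from at least 2 distinct types.
        ("place 5 objects, 2+ types", len(placed) >= 5 and len(set(placed)) >= 2),
        ("delete 1 robot and 1 object",
         log.robots_deleted >= 1 and log.objects_deleted >= 1),
    ]
    return [name for name, done in checks if not done]
```

Placing five objects of a single type leaves the object-placement step pending, since the step also requires two distinct types.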

4. Results and Discussion

5. Conclusions

Author Contributions

Funding

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

| ID | Question |
|---|---|
| Aspect 1 | Interaction Realism |
| Q1 | I did understand the purpose of each menu without further explanation. |
| Q2 | I felt as if I was in a real industrial environment. |
| Q3 | I did not get confused with the buttons of the controllers. |
| Q4 | It was really easy for me to find the functionality that I was looking for. |
| Aspect 2 | Robotics Motor |
| Q5 | When I was dragging the end-effector of the robot, the robot followed my hand perfectly. |
| Q6 | I feel like I have a greater comprehension of how the robots behave. |
| Q7 | I think that I can expect when a robot is going to pass by a singularity. |
| Q8 | I was able to understand how the movement of an individual joint would affect the end-effector. |
| Aspect 3 | Personalisation |
| Q9 | I felt like I could move with total liberty inside the environment. |
| Q10 | I did not feel like any functionality was missing. |
| Q11 | I feel that I can create my own case of study with ease by using this framework. |
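The grouping of the eleven questions into three aspects can be sketched as a mapping plus a per-aspect average. This is an illustrative sketch, not the authors' scoring procedure: the 7-point scale from -3 to +3 is an assumption (the scale is not stated in this section), and `aspect_scores` and the example answers are hypothetical.

```python
from statistics import fmean
from typing import Dict

# Question-to-aspect grouping taken from the questionnaire table.
ASPECTS = {
    "Interaction Realism": ["Q1", "Q2", "Q3", "Q4"],
    "Robotics Motor": ["Q5", "Q6", "Q7", "Q8"],
    "Personalisation": ["Q9", "Q10", "Q11"],
}

# Assumed answer range: a 7-point Likert scale coded -3 (disagree) to +3 (agree).
SCALE_MIN, SCALE_MAX = -3, 3


def aspect_scores(answers: Dict[str, int]) -> Dict[str, float]:
    """Average one respondent's answers within each aspect."""
    for question, value in answers.items():
        if not SCALE_MIN <= value <= SCALE_MAX:
            raise ValueError(f"{question}: answer {value} is outside the assumed scale")
    return {
        aspect: fmean(answers[q] for q in questions)
        for aspect, questions in ASPECTS.items()
    }


# Hypothetical answers from a single respondent (not data from the study).
example = {"Q1": 2, "Q2": 1, "Q3": 3, "Q4": 2,
           "Q5": 3, "Q6": 3, "Q7": 2, "Q8": 2,
           "Q9": 1, "Q10": 2, "Q11": 3}
scores = aspect_scores(example)
```

Averaging within an aspect, rather than summing, keeps the three scores comparable even though Personalisation has three questions and the other aspects have four.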

| | Profile 1 | Profile 2 | Profile 3 | Profile 4 |
|---|---|---|---|---|
| Knowledge in robotics | X | X | | |
| Interacted with real robots | X | | | |
| Previous experience with VR | X | X | X | |
| Group participants | 30% | 30% | 20% | 20% |

| | Profile 1 | Profile 2 | Profile 3 | Profile 4 |
|---|---|---|---|---|
| Interaction Realism | 1.83 | 1.75 | 1.88 | −0.13 |
| Robotics Motor | 2.33 | 2.42 | 2.00 | 1.88 |
| Personalisation | 1.89 | 1.67 | 2.33 | 1.83 |
| Embodiment Score | 2.02 | 1.94 | 2.07 | 1.19 |
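The Embodiment Score row appears consistent with an unweighted mean of the three aspect scores in each column. That relationship is an inference from the reported numbers, not a formula stated in this section, so the following is only a hedged arithmetic check; `REPORTED` and `max_deviation` are names introduced for illustration.

```python
from statistics import fmean

# Values copied from the results table:
# (Interaction Realism, Robotics Motor, Personalisation), then Embodiment Score.
REPORTED = {
    "Profile 1": ((1.83, 2.33, 1.89), 2.02),
    "Profile 2": ((1.75, 2.42, 1.67), 1.94),
    "Profile 3": ((1.88, 2.00, 2.33), 2.07),
    "Profile 4": ((-0.13, 1.88, 1.83), 1.19),
}


def max_deviation() -> float:
    """Largest gap between the mean of the aspects and the reported score."""
    return max(abs(fmean(aspects) - score) for aspects, score in REPORTED.values())


for profile, (aspects, score) in REPORTED.items():
    print(f"{profile}: mean of aspects = {fmean(aspects):.3f}, reported = {score:.2f}")
```

Under this assumption every column agrees with the reported score to within 0.01. The small residual for Profile 2 may come from the aspect scores having been rounded to two decimals before publication.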

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Zafra Navarro, A.; Rodriguez Juan, J.; Igelmo García, V.; Ruiz Zúñiga, E.; Garcia-Rodriguez, J. UniRoVE: Unified Robot Virtual Environment Framework. Machines 2023, 11, 798. https://doi.org/10.3390/machines11080798