ConvLSTM-Att: An Attention-Based Composite Deep Neural Network for Tool Wear Prediction

Abstract

1. Introduction

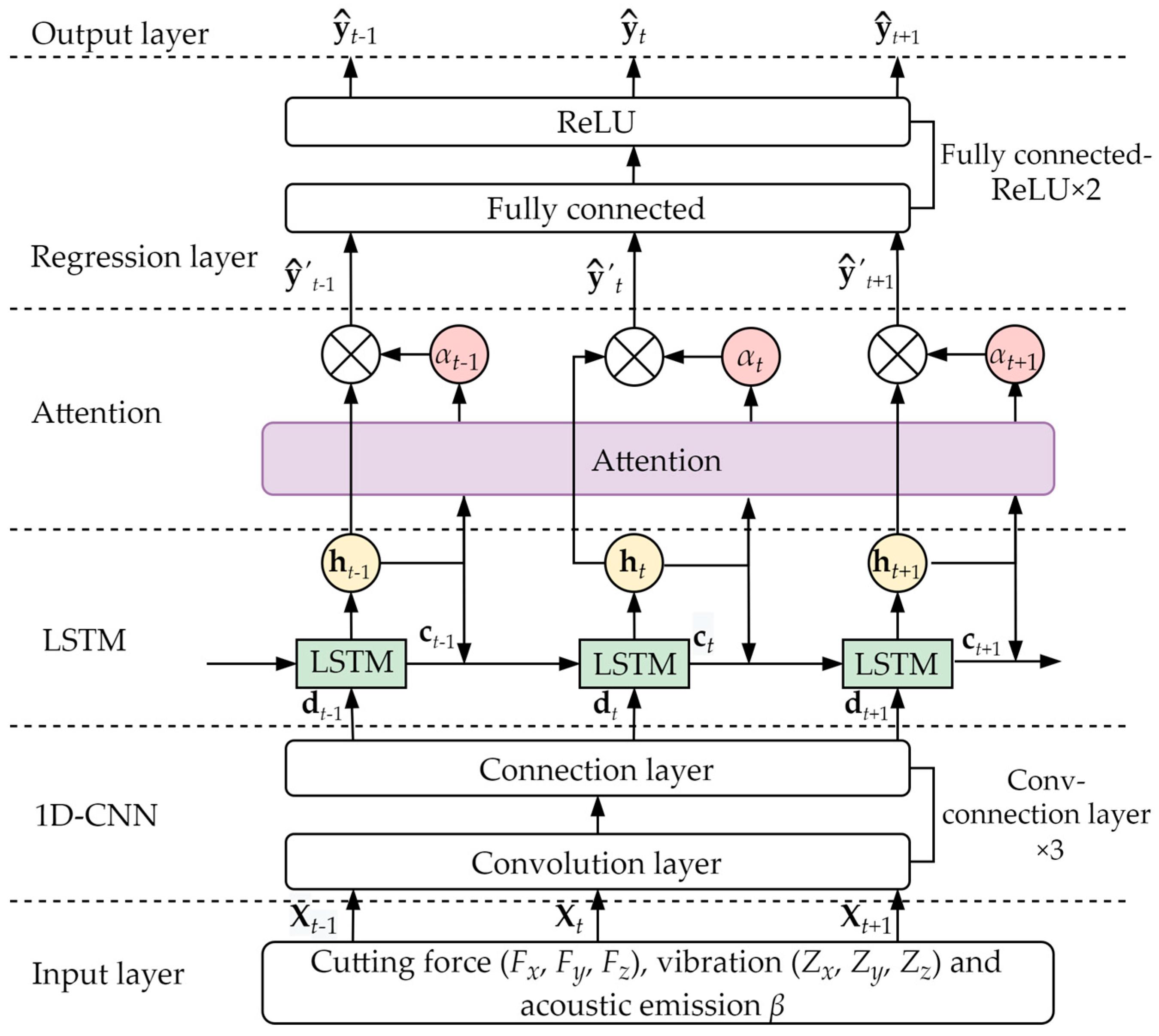

2. Composition of the Prediction Model

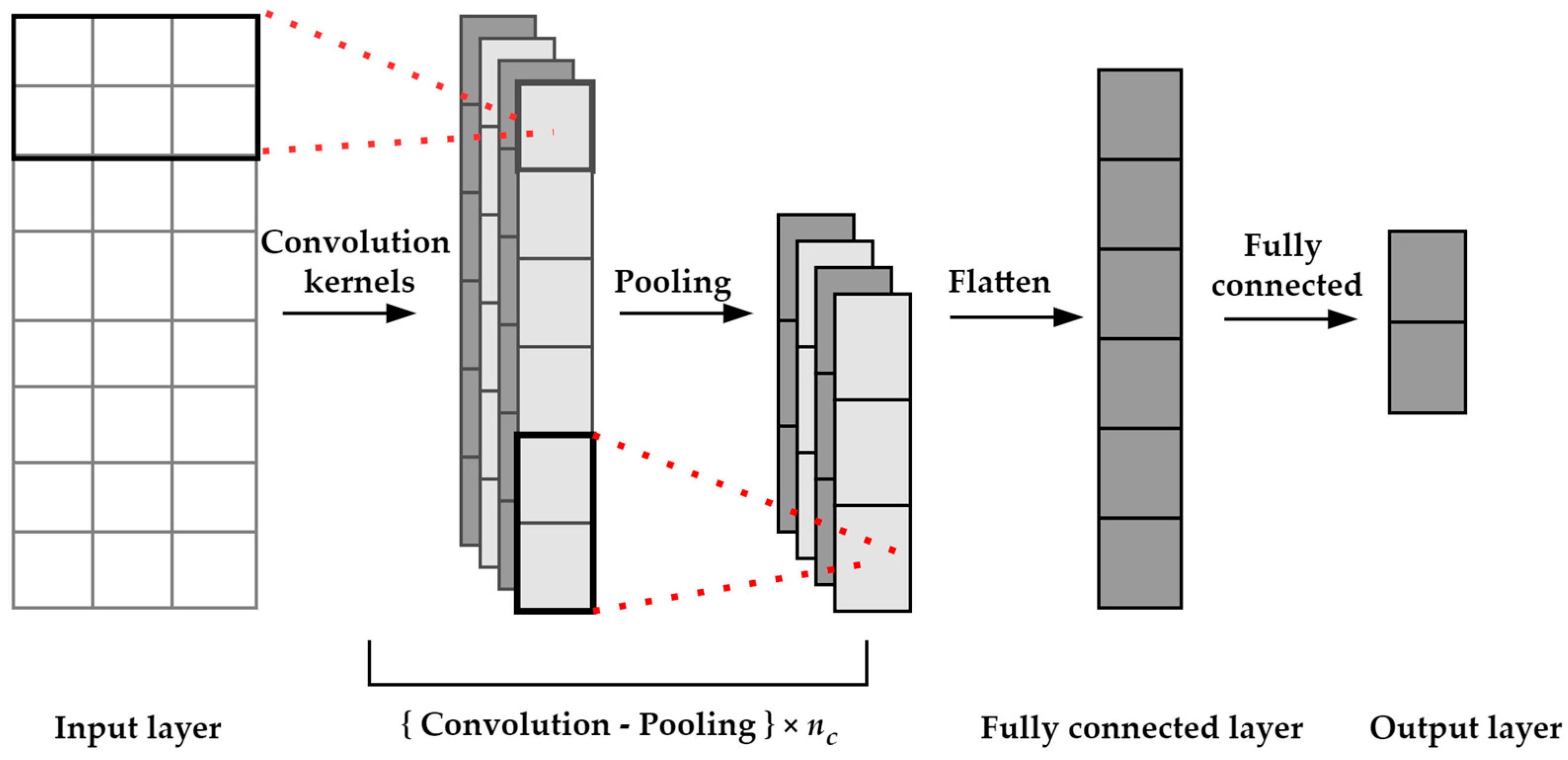

2.1. One-Dimensional Convolutional Neural Network (1D-CNN)

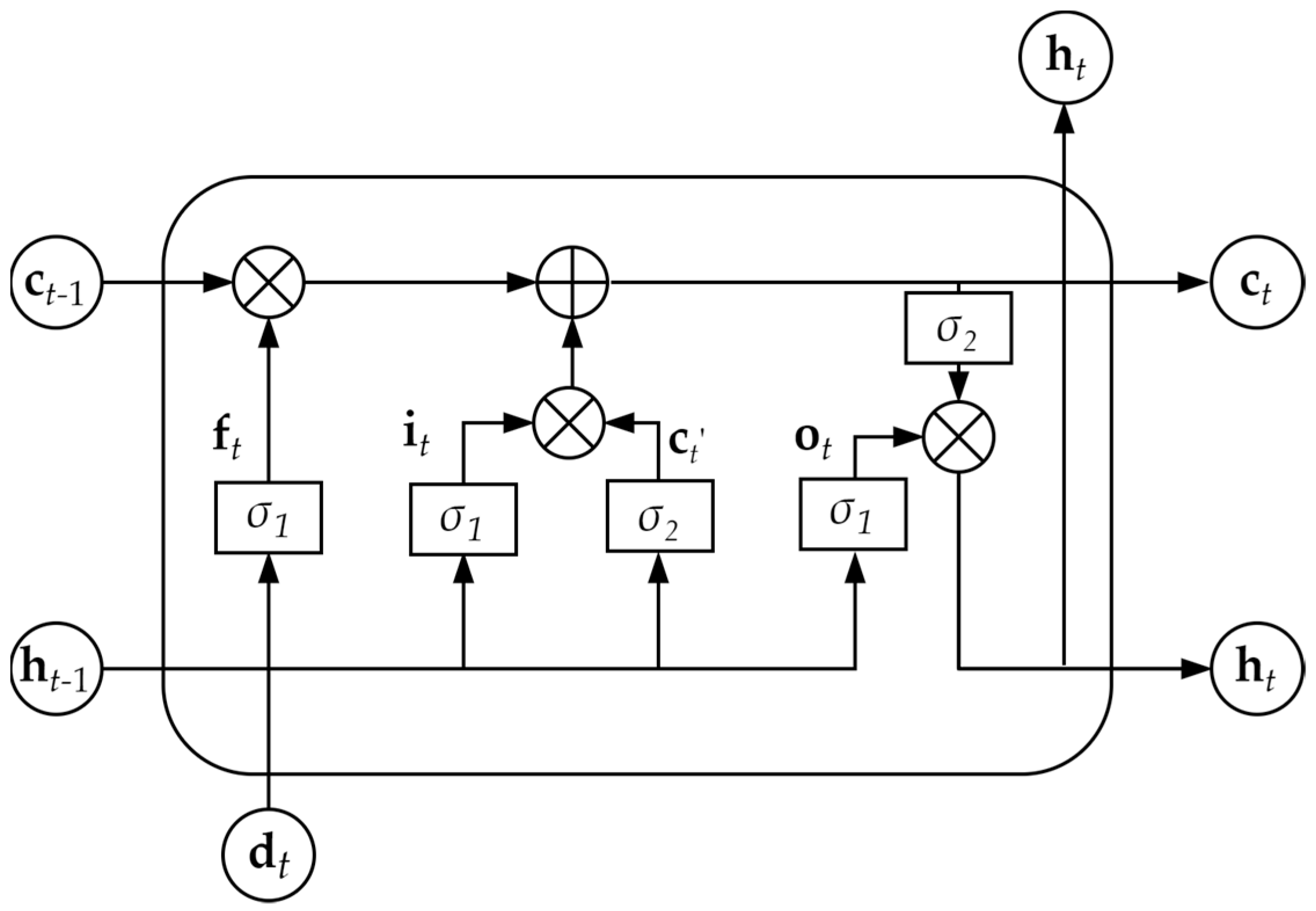
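Here is a minimal pure-Python sketch of one convolutional block, to make the 1D-CNN stage concrete. It applies a valid (unpadded) one-dimensional convolution over multichannel sensor signals, then ReLU, then non-overlapping max pooling. The functions `conv1d`, `relu` and `max_pool1d`, the toy two-channel input and all weights are hypothetical. This section does not state the layer sizes, kernel widths or activation used in ConvLSTM-Att, and a practical model would use a deep-learning framework rather than nested loops.

```python
def conv1d(x, kernels, bias, stride=1):
    """Valid (unpadded) 1D convolution over a multichannel signal.

    x:       C_in channels, each a list of T samples
    kernels: C_out filters, each holding C_in lists of K weights
    bias:    C_out offsets
    Returns C_out feature maps of length (T - K) // stride + 1.
    Like deep-learning libraries, this computes cross-correlation (no kernel flip).
    """
    c_in, t, k = len(x), len(x[0]), len(kernels[0][0])
    out_len = (t - k) // stride + 1
    maps = []
    for filt, b in zip(kernels, bias):
        fmap = []
        for start in range(0, out_len * stride, stride):
            acc = b
            for c in range(c_in):          # sum over every input channel
                for j in range(k):         # and every tap of the kernel
                    acc += filt[c][j] * x[c][start + j]
            fmap.append(acc)
        maps.append(fmap)
    return maps


def relu(maps):
    """Element-wise ReLU activation."""
    return [[max(0.0, v) for v in fmap] for fmap in maps]


def max_pool1d(maps, size=2):
    """Non-overlapping max pooling; a trailing remainder shorter than `size` is dropped."""
    return [[max(fmap[i:i + size]) for i in range(0, len(fmap) - size + 1, size)]
            for fmap in maps]


# Toy input: 2 sensor channels x 5 time steps, 2 filters of width 3 (all values hypothetical).
x = [[1.0, 2.0, 3.0, 4.0, 5.0],
     [0.0, 1.0, 0.0, 1.0, 0.0]]
kernels = [[[1.0, 1.0, 1.0], [1.0, 0.0, 0.0]],
           [[-1.0, 0.0, 0.0], [0.0, 0.0, 0.0]]]
bias = [0.0, 0.5]

features = max_pool1d(relu(conv1d(x, kernels, bias)))
print(features)  # [[10.0], [0.0]]
```

Each filter slides along the time axis and sums weighted samples across all input channels. A short kernel can therefore respond to local patterns that span several sensors at once. Pooling then shortens the sequence passed to later layers.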

2.2. Long Short-Term Memory (LSTM) Network

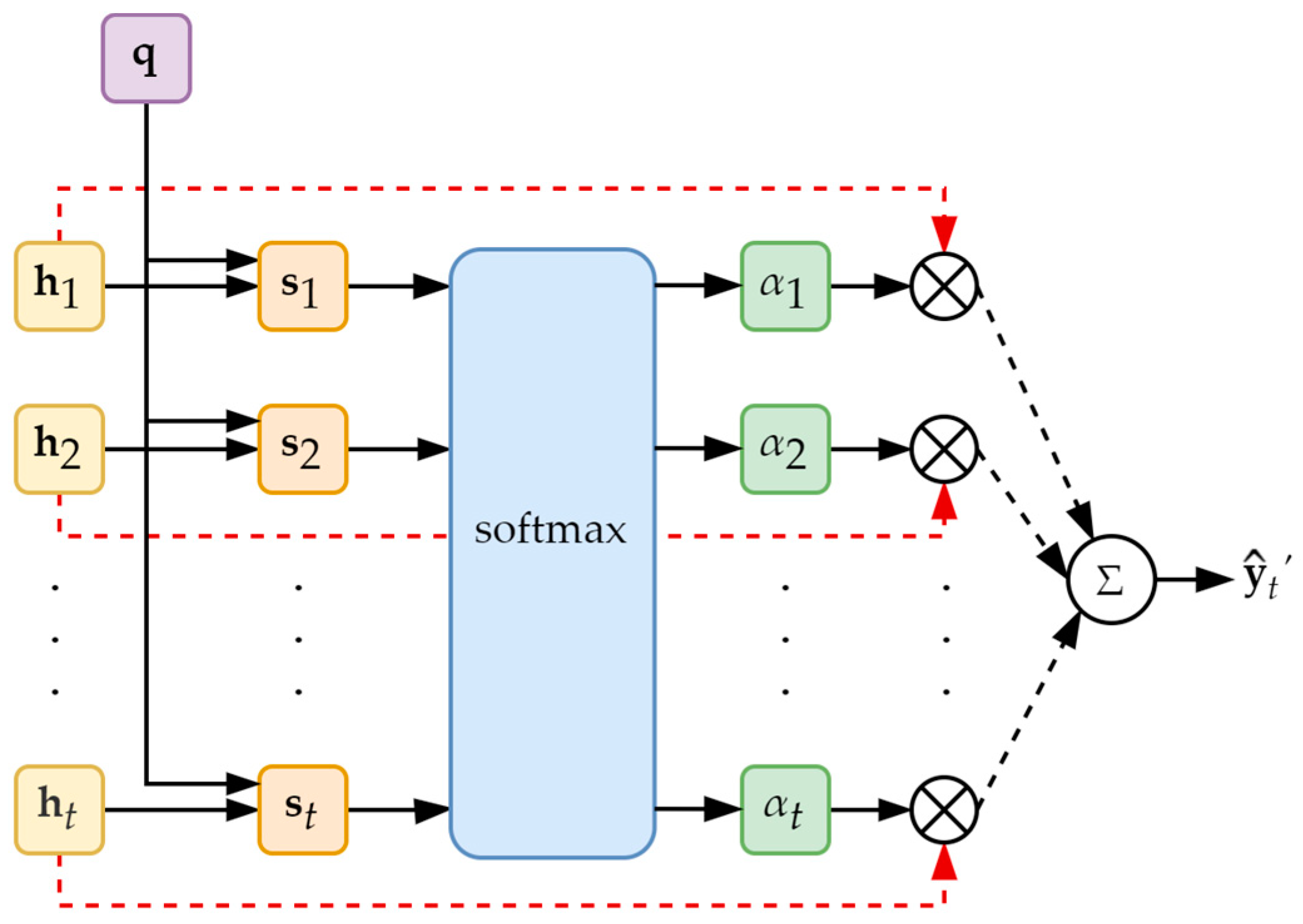
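The gating inside the LSTM layer can be sketched in pure Python with the standard cell equations. A forget gate `f_t`, an input gate `i_t`, a tanh candidate `g_t` and an output gate `o_t` combine as `c_t = f_t * c_{t-1} + i_t * g_t` and `h_t = o_t * tanh(c_t)`. This is the textbook formulation, assumed here rather than taken from the paper. `lstm_step`, `lstm_forward` and the `params` layout are hypothetical names.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def lstm_step(x, h_prev, c_prev, params):
    """One step of a standard LSTM cell.

    params maps each gate ('f' forget, 'i' input, 'g' candidate, 'o' output)
    to (W, b): W has H rows, each acting on the concatenation [h_prev, x].
    """
    z = h_prev + x  # list concatenation = [h_{t-1}, x_t]
    pre = {gate: [sum(w * v for w, v in zip(row, z)) + b_k for row, b_k in zip(W, b)]
           for gate, (W, b) in params.items()}
    f = [sigmoid(v) for v in pre["f"]]    # how much of the old cell state to keep
    i = [sigmoid(v) for v in pre["i"]]    # how much new information to write
    g = [math.tanh(v) for v in pre["g"]]  # candidate values to write
    o = [sigmoid(v) for v in pre["o"]]    # how much of the cell state to expose
    c = [fk * ck + ik * gk for fk, ck, ik, gk in zip(f, c_prev, i, g)]
    h = [ok * math.tanh(ck) for ok, ck in zip(o, c)]
    return h, c


def lstm_forward(xs, params, hidden_size):
    """Run the cell over a sequence from zero initial states; return every h_t."""
    h, c = [0.0] * hidden_size, [0.0] * hidden_size
    states = []
    for x in xs:
        h, c = lstm_step(x, h, c, params)
        states.append(h)
    return states


# Toy cell: hidden size 1, input size 1; only the candidate gate sees the input.
params = {
    "f": ([[0.0, 0.0]], [0.0]),
    "i": ([[0.0, 0.0]], [0.0]),
    "g": ([[0.0, 1.0]], [0.0]),
    "o": ([[0.0, 0.0]], [0.0]),
}
hs = lstm_forward([[1.0], [1.0], [1.0]], params, hidden_size=1)
print(len(hs), hs[-1])
```

The cell state carries information across time steps additively, which is what lets the layer track slowly accumulating quantities such as wear better than a plain recurrent unit.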

2.3. Attention Mechanism
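This section does not specify which attention form ConvLSTM-Att uses. The sketch below therefore assumes a common additive (feed-forward) attention pooling. Each recurrent output `h_t` is scored as `v · tanh(W h_t + b)`, the scores are normalized with softmax, and the weighted sum of hidden states forms a single context vector. `attention_pool`, `W`, `b` and `v` are hypothetical names, and the toy hidden states are made up.

```python
import math


def softmax(scores):
    m = max(scores)  # shift by the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention_pool(hs, W, b, v):
    """Additive attention pooling over T hidden states of size H.

    score_t = v . tanh(W h_t + b),  alpha = softmax(scores),  context = sum_t alpha_t h_t
    W: A x H matrix, b: length-A bias, v: length-A scoring vector.
    Returns (context vector of size H, list of T attention weights).
    """
    scores = []
    for h in hs:
        proj = [math.tanh(sum(w * hj for w, hj in zip(row, h)) + bk) for row, bk in zip(W, b)]
        scores.append(sum(vk * pk for vk, pk in zip(v, proj)))
    alpha = softmax(scores)
    context = [sum(a * h[j] for a, h in zip(alpha, hs)) for j in range(len(hs[0]))]
    return context, alpha


# Three hypothetical recurrent outputs (T = 3, H = 2) and a 1-unit scoring layer.
hs = [[1.0, 0.0], [0.0, 1.0], [2.0, 2.0]]
context, alpha = attention_pool(hs, W=[[1.0, 0.0]], b=[0.0], v=[5.0])
print([round(a, 3) for a in alpha], [round(c, 3) for c in context])
```

The weights let the pooled representation lean toward the time steps with the highest scores instead of relying only on the last hidden state. This is the usual motivation for placing attention on top of a recurrent encoder.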

3. Predicting Model

3.1. ConvLSTM-Att Model Construction

3.2. The ConvLSTM-Att Model for Predicting the Tool Wear Process

| Algorithm 1. Training of the ConvLSTM-Att model |
|---|
| Input: tool wear data set, learning rate, number of epochs, number of LSTM neurons, maximum error. |
| 1. Normalize all data by max-min normalization. |
| 2. Divide the data set into a training set, a validation set and a test set. |
| 3. Train with the training set: do: adjust the hyperparameters; train the ConvLSTM-Att model with the training set; validate the currently trained model on the validation set; while (the model has not converged && the stopping condition is not met); return the current trained model. End |
| 4. Apply the optimal model to the test set. |
| Output: predicted tool wear for the test set. |
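Algorithm 1 can be read as a plain model-selection loop. The pure-Python sketch below follows its steps under several assumptions. The 70/15/15 time-ordered split, the grid of learning rates and neuron counts, and the reading of the maximum error as an early-stopping target on the validation error are all assumptions. The helpers `min_max_normalize`, `split_sequential`, `select_model`, `toy_train` and `toy_validate` are hypothetical, and real training and validation would replace the two toy functions.

```python
import itertools


def min_max_normalize(values):
    """Step 1: scale values to [0, 1] with x' = (x - min) / (max - min)."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]


def split_sequential(data, train_pct=70, val_pct=15):
    """Step 2: split in time order (the percentages are hypothetical defaults)."""
    n = len(data)
    n_train = n * train_pct // 100
    n_val = n * val_pct // 100
    return data[:n_train], data[n_train:n_train + n_val], data[n_train + n_val:]


def select_model(train_fn, validate_fn, configs, max_error):
    """Step 3: try hyperparameter settings, keep the best on the validation set,
    and stop early once the validation error falls to max_error or below."""
    best_model, best_cfg, best_err = None, None, float("inf")
    for cfg in configs:
        model = train_fn(cfg)
        err = validate_fn(model)
        if err < best_err:
            best_model, best_cfg, best_err = model, cfg, err
        if best_err <= max_error:
            break
    return best_model, best_cfg, best_err


# Hypothetical search space over two of the inputs named in Algorithm 1.
configs = [{"lr": lr, "units": u} for lr, u in itertools.product([0.1, 0.01, 0.001], [32, 64])]


# Stand-ins for real training/validation: the "model" is just its config, and the
# validation error is a made-up function that is smallest at lr=0.01, units=64.
def toy_train(cfg):
    return cfg


def toy_validate(model):
    return abs(model["lr"] - 0.01) + abs(model["units"] - 64) / 100


model, cfg, err = select_model(toy_train, toy_validate, configs, max_error=0.005)
print(cfg, err)  # {'lr': 0.01, 'units': 64} 0.0
```

Step 4 would then evaluate the returned model once on the test split. Algorithm 1 normalizes all data before splitting. A common alternative computes the minimum and maximum on the training portion only and reuses them for the validation and test sets, which keeps test statistics out of training.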

4. Experimental Study and Results

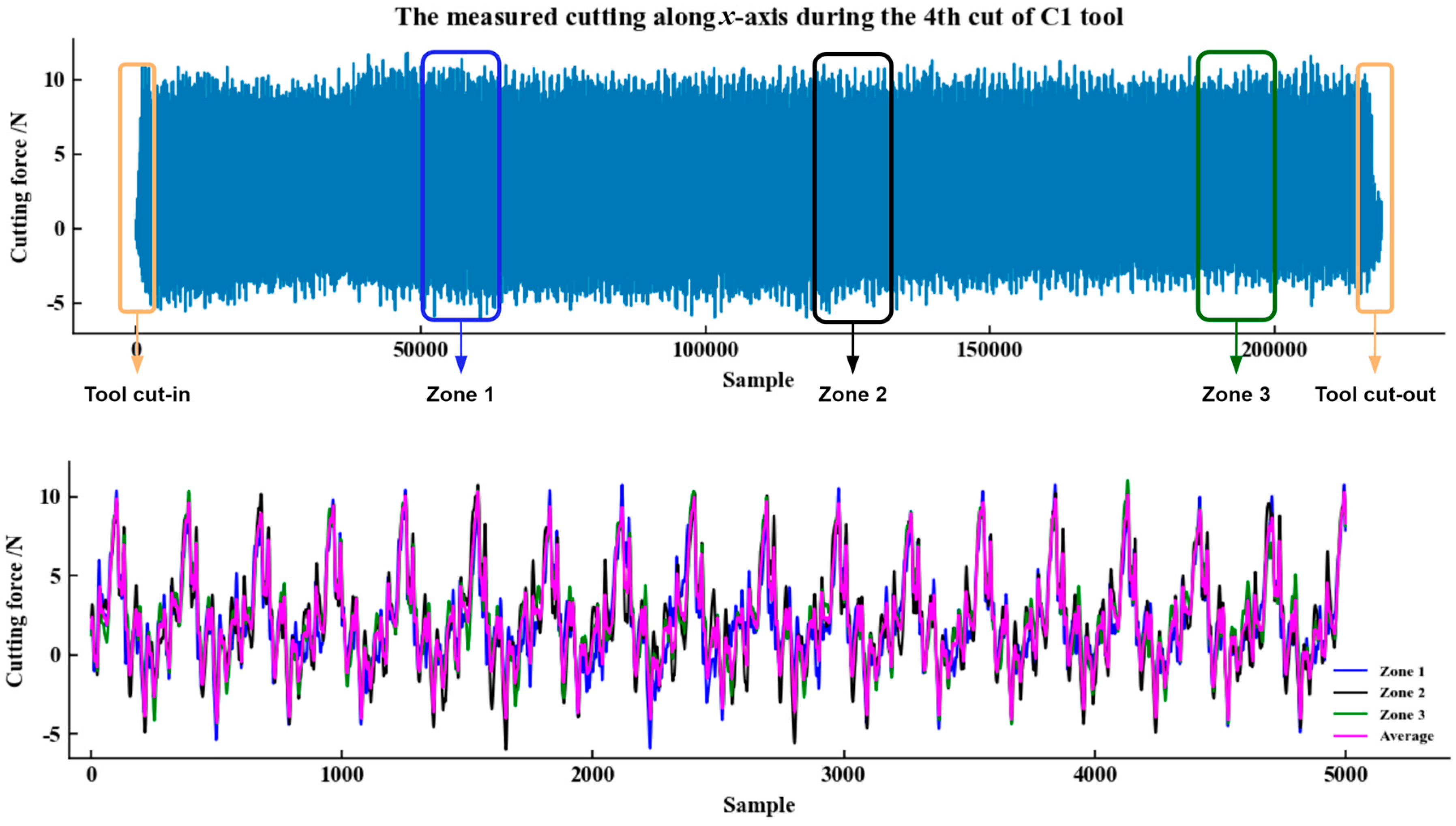

4.1. Experimental Setting

4.2. Experimental Design and Parameter Setting

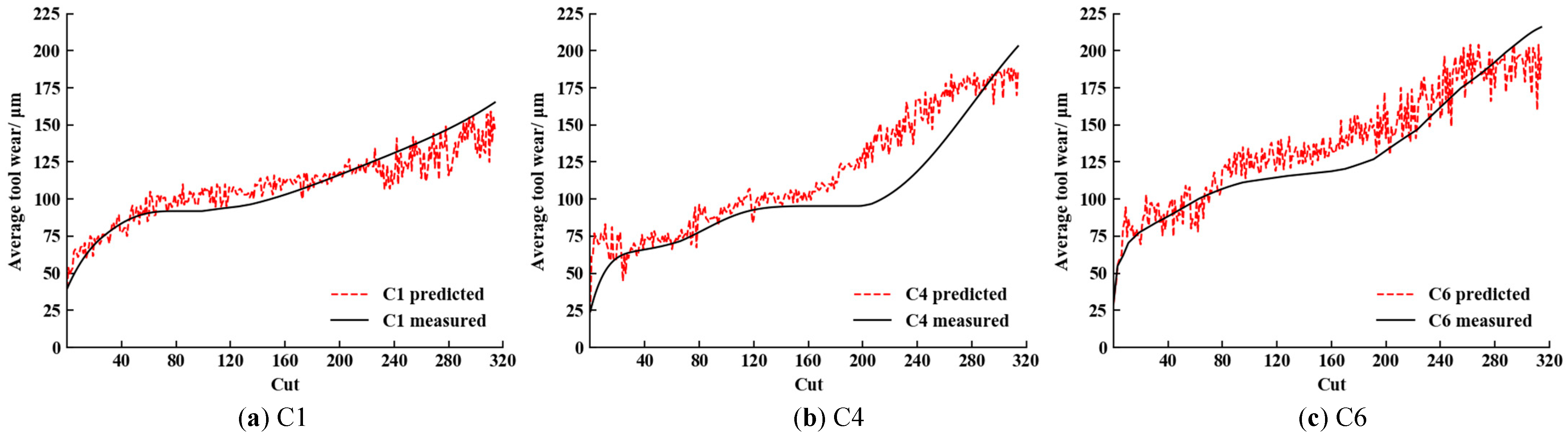

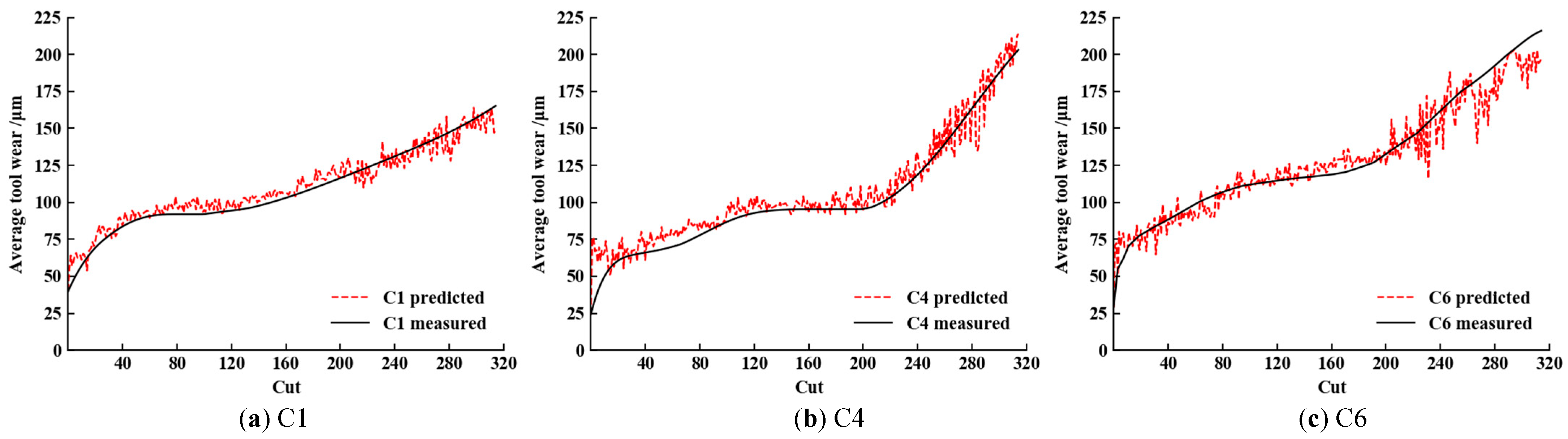

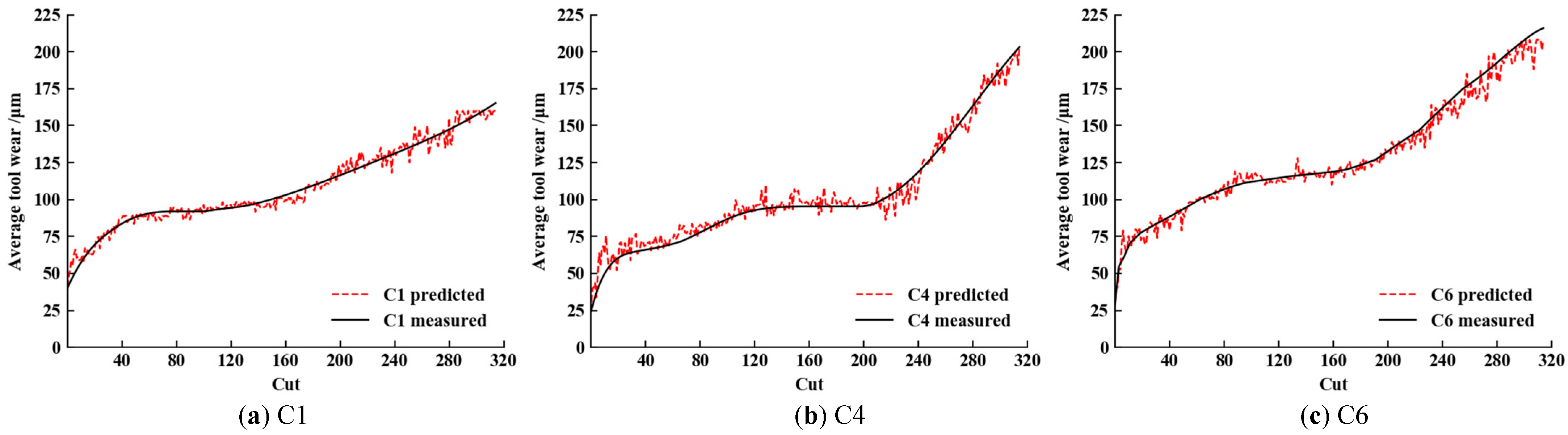

4.3. Comparison and Analysis of Experimental Results

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Salierno, G.; Leonardi, L.; Cabri, G. The Future of Factories: Different Trends. Appl. Sci. 2021, 11, 9980.
2. Lu, J.; Chen, R.; Liang, H.; Yan, Q. The Influence of Concentration of Hydroxyl Radical on the Chemical Mechanical Polishing of SiC Wafer Based on the Fenton Reaction. Precis. Eng. 2018, 52, 221–226.
3. Chen, N.; Hao, B.; Guo, Y.; Li, L.; Khan, M.A.; He, N. Research on Tool Wear Monitoring in Drilling Process Based on APSO-LS-SVM Approach. Int. J. Adv. Manuf. Technol. 2020, 108, 2091–2101.
4. Yang, X.; Yuan, R.; Lv, Y.; Li, L.; Song, H. A Novel Multivariate Cutting Force-Based Tool Wear Monitoring Method Using One-Dimensional Convolutional Neural Network. Sensors 2022, 22, 8343.
5. García-Ordás, M.T.; Alegre-Gutiérrez, E.; Alaiz-Rodríguez, R.; González-Castro, V. Tool Wear Monitoring Using an Online, Automatic and Low Cost System Based on Local Texture. Mech. Syst. Signal Process. 2018, 112, 98–112.
6. Du, K.-L.; Swamy, M.N.S. Neural Networks and Statistical Learning; Springer London: London, UK, 2019; ISBN 978-1-4471-7451-6.
7. Du, K.-L.; Swamy, M.N.S. Neural Networks in a Softcomputing Framework; Springer-Verlag: London, UK, 2006; ISBN 978-1-84628-302-4.
8. Du, K.-L.; Swamy, M.N.S. Search and Optimization by Metaheuristics; Springer International Publishing: Cham, Switzerland, 2016; ISBN 978-3-319-41191-0.
9. Li, J.; Wang, Y.; Du, K.-L. Distribution Path Optimization by an Improved Genetic Algorithm Combined with a Divide-and-Conquer Strategy. Technologies 2022, 10, 81.
10. Kuntoğlu, M.; Aslan, A.; Pimenov, D.Y.; Usca, Ü.A.; Salur, E.; Gupta, M.K.; Mikolajczyk, T.; Giasin, K.; Kapłonek, W.; Sharma, S. A Review of Indirect Tool Condition Monitoring Systems and Decision-Making Methods in Turning: Critical Analysis and Trends. Sensors 2020, 21, 108.
11. Mao, W.; Feng, W.; Liu, Y.; Zhang, D.; Liang, X. A New Deep Auto-Encoder Method with Fusing Discriminant Information for Bearing Fault Diagnosis. Mech. Syst. Signal Process. 2021, 150, 107233.
12. Wang, J.; Xie, J.; Zhao, R.; Zhang, L.; Duan, L. Multisensory Fusion Based Virtual Tool Wear Sensing for Ubiquitous Manufacturing. Robot. Comput.-Integr. Manuf. 2017, 45, 47–58.
13. Qiu, J.; Wang, H.; Lu, J.; Zhang, B.; Du, K.-L. Neural Network Implementations for PCA and Its Extensions. ISRN Artif. Intell. 2012, 2012, 847305.
14. Feng, T.; Guo, L.; Gao, H.; Chen, T.; Yu, Y.; Li, C. A New Time–Space Attention Mechanism Driven Multi-Feature Fusion Method for Tool Wear Monitoring. Int. J. Adv. Manuf. Technol. 2022, 120, 5633–5648.
15. Zhao, R.; Yan, R.; Chen, Z.; Mao, K.; Wang, P.; Gao, R.X. Deep Learning and Its Applications to Machine Health Monitoring. Mech. Syst. Signal Process. 2019, 115, 213–237.
16. Voulodimos, A.; Doulamis, N.; Doulamis, A.; Protopapadakis, E. Deep Learning for Computer Vision: A Brief Review. Comput. Intell. Neurosci. 2018, 2018, 7068349.
17. Song, Z. English Speech Recognition Based on Deep Learning with Multiple Features. Computing 2020, 102, 663–682.
18. Otter, D.W.; Medina, J.R.; Kalita, J.K. A Survey of the Usages of Deep Learning for Natural Language Processing. IEEE Trans. Neural Netw. Learn. Syst. 2021, 32, 604–624.
19. Luo, B.; Wang, H.; Liu, H.; Li, B.; Peng, F. Early Fault Detection of Machine Tools Based on Deep Learning and Dynamic Identification. IEEE Trans. Ind. Electron. 2019, 66, 509–518.
20. Kothuru, A.; Nooka, S.P.; Liu, R. Application of Deep Visualization in CNN-Based Tool Condition Monitoring for End Milling. Procedia Manuf. 2019, 34, 995–1004.
21. Xu, X.; Wang, J.; Ming, W.; Chen, M.; An, Q. In-Process Tap Tool Wear Monitoring and Prediction Using a Novel Model Based on Deep Learning. Int. J. Adv. Manuf. Technol. 2021, 112, 453–466.
22. Chen, Q.; Xie, Q.; Yuan, Q.; Huang, H.; Li, Y. Research on a Real-Time Monitoring Method for the Wear State of a Tool Based on a Convolutional Bidirectional LSTM Model. Symmetry 2019, 11, 1233.
23. Zhao, R.; Wang, J.; Yan, R.; Mao, K. Machine Health Monitoring with LSTM Networks. In Proceedings of the 2016 10th International Conference on Sensing Technology (ICST), Nanjing, China, 11–13 November 2016; pp. 1–6.
24. Cai, W.; Zhang, W.; Hu, X.; Liu, Y. A Hybrid Information Model Based on Long Short-Term Memory Network for Tool Condition Monitoring. J. Intell. Manuf. 2020, 31, 1497–1510.
25. Chan, Y.-W.; Kang, T.-C.; Yang, C.-T.; Chang, C.-H.; Huang, S.-M.; Tsai, Y.-T. Tool Wear Prediction Using Convolutional Bidirectional LSTM Networks. J. Supercomput. 2022, 78, 810–832.
26. Schwendemann, S.; Sikora, A. Transfer-Learning-Based Estimation of the Remaining Useful Life of Heterogeneous Bearing Types Using Low-Frequency Accelerometers. J. Imaging 2023, 9, 34.
27. Qiao, H.; Wang, T.; Wang, P. A Tool Wear Monitoring and Prediction System Based on Multiscale Deep Learning Models and Fog Computing. Int. J. Adv. Manuf. Technol. 2020, 108, 2367–2384.
28. Wang, G.; Zhang, F. A Sequence-to-Sequence Model With Attention and Monotonicity Loss for Tool Wear Monitoring and Prediction. IEEE Trans. Instrum. Meas. 2021, 70, 1–11.
29. Huang, Q.; Wu, D.; Huang, H.; Zhang, Y.; Han, Y. Tool Wear Prediction Based on a Multi-Scale Convolutional Neural Network with Attention Fusion. Information 2022, 13, 504.
30. Li, Z.; Liu, F.; Yang, W.; Peng, S.; Zhou, J. A Survey of Convolutional Neural Networks: Analysis, Applications, and Prospects. IEEE Trans. Neural Netw. Learn. Syst. 2022, 33, 6999–7019.
31. Huang, Z.; Zhu, J.; Lei, J.; Li, X.; Tian, F. Tool Wear Predicting Based on Multi-Domain Feature Fusion by Deep Convolutional Neural Network in Milling Operations. J. Intell. Manuf. 2020, 31, 953–966.
32. Kiranyaz, S.; Avci, O.; Abdeljaber, O.; Ince, T.; Gabbouj, M.; Inman, D.J. 1D Convolutional Neural Networks and Applications: A Survey. Mech. Syst. Signal Process. 2021, 151, 107398.
33. Van Houdt, G.; Mosquera, C.; Nápoles, G. A Review on the Long Short-Term Memory Model. Artif. Intell. Rev. 2020, 53, 5929–5955.
34. Sherstinsky, A. Fundamentals of Recurrent Neural Network (RNN) and Long Short-Term Memory (LSTM) Network. Phys. D Nonlinear Phenom. 2020, 404, 132306.
35. Niu, Z.; Zhong, G.; Yu, H. A Review on the Attention Mechanism of Deep Learning. Neurocomputing 2021, 452, 48–62.
36. Zhou, Y.; Xue, W. Review of Tool Condition Monitoring Methods in Milling Processes. Int. J. Adv. Manuf. Technol. 2018, 96, 2509–2523.
37. PHM Society: 2010 PHM Society Conference Data Challenge. Available online: https://www.phmsociety.org/competition/phm/10 (accessed on 13 January 2023).
38. Qiao, H.; Wang, T.; Wang, P.; Qiao, S.; Zhang, L. A Time-Distributed Spatiotemporal Feature Learning Method for Machine Health Monitoring with Multi-Sensor Time Series. Sensors 2018, 18, 2932.

| Cutting Conditions | Spindle Speed (r/min) | Feed Rate (mm/min) | Axial Depth of Cut (mm) | Radial Depth of Cut (mm) | Feed Amount (mm) | Sampling Frequency (kHz) |
|---|---|---|---|---|---|---|
| Parameters | 10,400 | 1555 | 0.2 | 0.125 | 0.001 | 50 |

| Models / Test set | C1 RMSE | C1 MAE | C1 R² | C4 RMSE | C4 MAE | C4 R² | C6 RMSE | C6 MAE | C6 R² |
|---|---|---|---|---|---|---|---|---|---|
| RNN [23] | 15.6 | 13.1 | / | 19.7 | 16.7 | / | 32.9 | 25.5 | / |
| 1D-CNN | 10.849 | 8.411 | 0.749 | 19.074 | 16.089 | 0.702 | 15.748 | 13.002 | 0.827 |
| Deep LSTMs [23] | 12.1 | 8.3 | / | 10.2 | 8.7 | / | 18.9 | 15.2 | / |
| LSTM [24] | 11.4 | 8.5 | / | 11.7 | 8.5 | / | 21.2 | 14.6 | / |
| HLLSTM [25] | 8.0 | 6.6 | / | 7.5 | 6.0 | / | 8.8 | 7.1 | / |
| CNN-LSTM | 6.480 | 5.336 | 0.929 | 9.897 | 7.389 | 0.925 | 10.646 | 7.749 | 0.914 |
| TDConvLSTM [38] | 8.33 | 6.99 | / | 8.39 | 6.96 | / | 10.22 | 7.50 | / |
| ConvLSTM-Att | 4.251 | 3.218 | 0.976 | 6.224 | 4.610 | 0.970 | 5.716 | 4.056 | 0.971 |
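The RMSE, MAE and R² columns follow standard definitions, sketched below in pure Python. The sketch assumes that R² is the usual coefficient of determination, `1 - SS_res / SS_tot`, because the section does not state the formula. Lower RMSE and MAE and an R² closer to 1 indicate better predictions. The function names and toy wear values are illustrative, not taken from the C1, C4 or C6 test sets.

```python
import math


def rmse(y_true, y_pred):
    """Root-mean-square error: penalizes large deviations more heavily."""
    n = len(y_true)
    return math.sqrt(sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / n)


def mae(y_true, y_pred):
    """Mean absolute error: average magnitude of the deviations."""
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


def r2(y_true, y_pred):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    mean = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean) ** 2 for t in y_true)
    return 1.0 - ss_res / ss_tot


# Toy wear values (not from the PHM data): one prediction is off by 1.
y_true = [1.0, 2.0, 3.0, 4.0]
y_pred = [1.0, 2.0, 3.0, 5.0]
print(rmse(y_true, y_pred), mae(y_true, y_pred), r2(y_true, y_pred))  # 0.5 0.25 0.8
```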

| Models | C1 Time (s) | C4 Time (s) | C6 Time (s) |
|---|---|---|---|
| 1D-CNN | 356.6 | 362.3 | 349.7 |
| CNN-LSTM | 589.75 | 598.25 | 593.25 |
| ConvLSTM-Att | 679.8 | 684.9 | 681.4 |
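Expressing the timing table as a relative overhead, `(t_model / t_baseline - 1) × 100`, makes it easier to weigh against the accuracy gains. The sketch below applies that formula to the table's own figures. The `times` dictionary and the helper `overhead_pct` are illustrative names.

```python
# Times (s) copied from the table above.
times = {
    "1D-CNN": {"C1": 356.6, "C4": 362.3, "C6": 349.7},
    "CNN-LSTM": {"C1": 589.75, "C4": 598.25, "C6": 593.25},
    "ConvLSTM-Att": {"C1": 679.8, "C4": 684.9, "C6": 681.4},
}


def overhead_pct(model, baseline, case):
    """Extra time of `model` relative to `baseline` on one data set, in percent."""
    return 100.0 * (times[model][case] / times[baseline][case] - 1.0)


for case in ("C1", "C4", "C6"):
    print(case, round(overhead_pct("ConvLSTM-Att", "CNN-LSTM", case), 1))
# C1 15.3
# C4 14.5
# C6 14.9
```

On these figures, ConvLSTM-Att takes roughly 14.5% to 15.3% longer than CNN-LSTM on each of C1, C4 and C6.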

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Li, R.; Ye, X.; Yang, F.; Du, K.-L. ConvLSTM-Att: An Attention-Based Composite Deep Neural Network for Tool Wear Prediction. Machines 2023, 11, 297. https://doi.org/10.3390/machines11020297

Li R, Ye X, Yang F, Du K-L. ConvLSTM-Att: An Attention-Based Composite Deep Neural Network for Tool Wear Prediction. Machines. 2023; 11(2):297. https://doi.org/10.3390/machines11020297

Chicago/Turabian Style: Li, Renwang, Xiaolei Ye, Fangqing Yang, and Ke-Lin Du. 2023. "ConvLSTM-Att: An Attention-Based Composite Deep Neural Network for Tool Wear Prediction." Machines 11, no. 2: 297. https://doi.org/10.3390/machines11020297

APA Style: Li, R., Ye, X., Yang, F., & Du, K.-L. (2023). ConvLSTM-Att: An Attention-Based Composite Deep Neural Network for Tool Wear Prediction. Machines, 11(2), 297. https://doi.org/10.3390/machines11020297