1. Introduction

The equations of motion of multibody systems are highly nonlinear in general, but there are cases where one is interested in a linearization of such equations as a way to study the effects of perturbations around a given configuration. To this end, being able to compute the eigenvalues and eigenvectors of the linearized model is of fundamental importance, and its usefulness is not limited to conventional modal analysis.

For instance, eigenvectors can be used to perform a component mode synthesis, also known as modal reduction, an effective approach that turns a complex system into a surrogate model with a smaller set of coordinates, hence obtaining faster simulations [1,2,3]. Another application that requires the computation of eigenpairs is the stability analysis of dynamic systems, for instance, the aeroelastic stability of helicopter blades, wind turbines and other slender structures. In this case, one needs to solve a complex-valued eigenvalue problem, where the real and imaginary parts of the eigenvalues give an indication of the damping factor and, consequently, of any impending instability [4,5]. Finally, in the field of control theory, a state-space representation of the linearized system is often required, and this is another problem that motivates the search for efficient methods to recover the eigenvalues of the multibody system [6,7].

Motivated by the above-mentioned applications, in this paper we discuss the numerical difficulties related to the computation of eigenvalues and eigenvectors in multi-flexible-body systems under the most general assumptions: the system can present singular modes (also called rigid body or free–free modes); damping is optionally present, hence leading to complex-valued eigenpairs; the number of parts and constraints is arbitrary; and the size of the system can be arbitrarily large. In particular, this last requirement restricts the choice of solver: it must preserve the sparsity of the matrices for the sake of acceptable computational performance, and it should be able to output just a small subset of eigenvalues, either the lowest ones or those clustered around a frequency of interest.

The problem of eigenvalue computation in multibody systems is discussed by various authors in the literature, although the topic is more common in the field of finite element analysis (FEA). A difficulty of multibody systems with respect to conventional FEA is that constraints are ubiquitous and often described by algebraic equations and Lagrange multipliers. A classical approach is to remove the constraints by means of an orthogonal complement that reduces the generalized coordinates to the smallest possible number, as discussed, for instance, in [8,9]. This idea has the benefit that the linearized equations are those of an unconstrained system; thus, a conventional eigenvalue solver can be applied. However, there are also drawbacks, which will be discussed later.

Alternatively, one can solve an eigenvalue problem paired with constraints, thus leading to matrices that are larger but sparser. This approach is shown, for example, in [10,11]. Despite the increase in the number of unknown eigenvalues and in the dimension of the eigenvectors, we found that this approach leads to a simpler formulation. Most importantly, this method preserves the sparsity of the matrices, so we could design a solver that leverages this useful property.

An eigenvalue solver that has become very popular in past years is the Implicitly Restarted Arnoldi Method (IRAM) [12]. This is the method implemented in Arpack, a widespread Fortran77 library that can solve generalized eigenvalue problems with either sparse or structured matrices [13]. As such, the IRAM would be sufficient to satisfy our requirements; however, we found that it fails to converge well in some difficult cases, so we turned our attention to the more recent Krylov–Schur method.

The Krylov–Schur method was presented in [14] as an improvement over previous Krylov subspace methods, such as the IRAM and Lanczos. Because of an efficient and robust restarting strategy, it is often able to converge even in cases where the IRAM stalls, and in general, it exhibits superior robustness and faster convergence [15]. For these reasons, the Krylov–Schur method has become the default for the MATLAB eigs command, and it is also available in the SLEPc library [16], an extension of the PETSc linear algebra library, as well as in the Trilinos library [17]. However, both are large libraries that target supercomputing and require complex build toolchains. On the other hand, lightweight efforts such as the Spectra C++ library [18] might not offer all the desired functionalities: at the time of writing, Spectra contains the Krylov–Schur method in a partially implemented form that is usable only for symmetric matrices, so the complex eigenvalue problem is out of reach and the library cannot be used for damped eigenmode computation. The lack of reliable, complete and lightweight open-source libraries for computing eigenvalues with the Krylov–Schur method motivated us to develop our own C++ version of it, which is described in the following pages.

In the next section, we will discuss how to obtain the needed matrices from a linearization of the multibody system; then we will review different formulations for expressing the eigenvalue problem, with or without constraints, with or without damping; then we will discuss some computational aspects related to the implementation of the sparsity-preserving Krylov–Schur solver; and finally, we will show some applications and benchmarks.

2. Linearization of Multibody Structures

We introduce the semi-explicit Differential Algebraic Equations (DAE) of a generic, nonlinear multi-flexible-body system, written in terms of its generalized coordinates (Equation (1)). The system is subject to a vector of m holonomic-rheonomic constraints with a sparse Jacobian. The equations also involve the vector of external and internal forces and a term that represents the gyroscopic and centrifugal components of the inertial forces, which is only one part of the full inertial forces.

By rewriting Equation (1) as a multivariate function F that vanishes at equilibrium, it is easier to see that any feasible infinitesimal variation of the unknowns must still satisfy equilibrium, thus leading again to a zero-valued function. No variation takes place on the time variable. It follows that a step along the total derivative of the F function does not produce any variation of F, that is, the total differential of F vanishes.

Expanding the partial derivatives of F over all the terms it contains, and collecting all the derivatives of the forces into the more readable stiffness matrix K and damping matrix R, gives the linearized Equations (5) and (6).
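For orientation, a linearized constrained system of this kind is commonly written as in the block below. This is a sketch in assumed notation, not a transcription of Equations (5) and (6): the symbols $\delta q$ (variation of the generalized coordinates), $C_q$ (constraint Jacobian) and $\delta\lambda$ (variation of the Lagrange multipliers) are assumptions, and the sign convention of the multiplier term may differ from the one used in the paper.

```latex
\begin{aligned}
M\,\delta\ddot{q} + R\,\delta\dot{q} + K\,\delta q + C_q^{T}\,\delta\lambda &= 0,\\
C_q\,\delta q &= 0.
\end{aligned}
```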

In the formula above, the damping matrix R comes from the linearization of the internal and external forces with respect to the velocities, plus the linearization of the quadratic part of the inertial forces (Equation (7)). Note that the latter part also includes the so-called gyroscopic damping, and it is null for zero velocities.

The tangent stiffness K contains three contributions: the linearization of the internal and external forces (i.e., the conventional stiffness matrix); the linearization of the inertial forces, which is null if, as often happens, the system is studied in a static configuration, but might be relevant otherwise, for example, when studying the eigenmodes of a rotating wind turbine; and the linearization of the constraint reaction forces. The latter can contribute to the tangent stiffness through a geometric effect, namely the change of the constraint directions about the linearization point. One example is the gravity-induced stiffness of a pendulum, where the rotation of the pendulum changes the direction of the reaction force at the pendulum hinge. If the other sources of stiffness are more relevant (e.g., springs, elastic internal forces in beams, etc.) or if the reaction forces are small at the linearization point, then this term might be neglected.

For these reasons, a static or dynamic analysis should be performed right before computing eigenvectors, since the state of the system at the linearization point, including the constraint reactions, must be known when computing the tangent stiffness (9).

Oftentimes, especially in the FEA literature, the stiffness matrix is split into two components, the material stiffness and the geometric stiffness; the latter is caused, for example, by the change in orientation of internal forces in beams, and its effect is null in configurations that have no initial stress at the linearization point.

A further splitting can be performed by distinguishing internal forces, caused by finite elements, from external forces, caused by applied loads; the stiffness and damping matrices are then each the sum of an internal and an external contribution. In many cases, the matrices related to external forces are small compared with those related to internal forces and can be neglected, but in other cases, for example, when considering aerodynamic loads, they might be relevant.

We remark that (5) and (6) require the introduction of constraints via Jacobian matrices and Lagrange multipliers; in the following, we will handle this complication by solving constrained eigenvalue problems. However, one might wonder whether there is an alternative approach that avoids Jacobians and multipliers altogether, so that a conventional (unconstrained) eigenvalue solver could be used. This would be possible, for example, by running a QR decomposition on the constraint Jacobian in order to find a matrix that spans its null space. In this way, one could introduce a smaller set of independent variables, hence rewriting the DAE (1) as a simple ODE.

This can be linearized to give a single expression, in terms of reduced damping and stiffness matrices, which is an alternative to (5) and (6).

However, we note that the expressions of these reduced matrices are substantially more intricate than the expressions of R and K in (7) and (9), especially considering that (15) would require the knowledge of additional terms.

While these latter terms might be neglected in some cases, thus reducing the matrices to approximated forms, we found that such a simplification is possible only when constraints do not change direction in a significant way: even a simple example of an oscillating pendulum would erroneously give a zero natural frequency with this simplification.

Moreover, the multiplications by the null-space matrix and its transpose destroy the sparsity of the original matrices M, R, K: this is not an issue in problems of small size, but for large problems it would lead to unacceptable memory and performance requirements.

For these reasons, we prefer to proceed with the linearization expressed in (5) and (6), at the cost of dealing with constraints during the iterative eigenvalue solution process. The following section will explain how to use the M, R, K and constraint Jacobian matrices to this end.

3. Modal Analysis

We can distinguish two types of modal analysis: in the first case, we search for real-valued eigenvalues of the undamped system; in the second case, we search for complex-valued eigenvalues of the damped system. The former can be considered a subcase of the latter for R = 0, and hence a single solver could attack both problems; however, it is better to adopt two different solution schemes in order to exploit some optimizations that lead to a higher computational performance if the damping is of no interest.

3.1. Undamped Case—Real Valued

We recall some basic concepts in eigenvalue analysis of dynamic systems.

For the simple case of an unconstrained, undamped system with harmonic solutions, the eigenmodes can be computed from a characteristic expression that leads to a standard eigenvalue problem (SEP) (18), whose matrix involves the inverse of M.

For symmetric K and symmetric positive definite M, the eigenpairs are real.
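As a small illustration of the unconstrained, undamped eigenproblem, the sketch below computes the two natural frequencies of a grounded two-mass spring chain by solving det(K − λM) = 0 in closed form. The example system, its parameter names and the function `natural_frequencies_2dof` are hypothetical and not taken from the paper; the eigenvalue is taken here as the squared natural frequency, which is one common convention and may differ in sign from the one adopted in (18).

```python
import math


def natural_frequencies_2dof(m1, m2, k1, k2):
    """Natural frequencies (rad/s) of a grounded two-mass spring chain.

    Assumed matrices: M = diag(m1, m2), K = [[k1 + k2, -k2], [-k2, k2]].
    The eigenvalues lam = omega^2 of inv(M) K are the roots of
    det(K - lam * M) = a * lam^2 + b * lam + c = 0.
    """
    a = m1 * m2
    b = -(m2 * (k1 + k2) + m1 * k2)
    c = k1 * k2
    disc = math.sqrt(b * b - 4.0 * a * c)  # non-negative for positive masses
    lam_lo = (-b - disc) / (2.0 * a)
    lam_hi = (-b + disc) / (2.0 * a)
    return math.sqrt(lam_lo), math.sqrt(lam_hi)


# Unit masses and unit springs: both eigenvalues are real and positive.
w1, w2 = natural_frequencies_2dof(1.0, 1.0, 1.0, 1.0)
print(w1, w2)
```

For a system this small the inverse of M is harmless; the difficulties listed next only appear with constraints and with large sparse matrices.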

However, there are some difficulties that prevent the direct use of (18) in engineering problems of practical interest:

It works only if there are no constraints (no Jacobian matrix);

It requires the inversion of the M matrix: even if M is often diagonal-dominant and easy to invert, this is not true in general, and it could destroy the sparsity of the matrices in the case of large systems;

We may be interested in just a small subset of eigenvalues, usually the lower modes, so we need an iterative scheme that is able to do this.

We compute the modes of the constrained undamped multibody system with the generalized eigenvalue problem (GEP) (20), where we introduce an augmented eigenvector that also contains the Lagrange multipliers, and we recover the natural frequencies from the eigenvalues as in (21).

We remark that one could change the sign in the left-hand side of (20); this would yield positive eigenvalues, and the natural frequencies would then be computed as their square roots.

The solution of problem (20) generates n + m eigenvalues, of which only n are of interest; the other m are spurious modes with infinite eigenvalues that can be discarded. The same filtering must be performed for the corresponding eigenvectors. Moreover, the last m components of the eigenvectors can either be discarded or be used to gain insight into the reaction forces, because they represent the amplitude of the constraint reactions during the periodic motion of the system.

Alternatively, one can solve the GEP (22), but this would produce m spurious modes with zero eigenvalues that can easily be confused with the eigenvalues resulting from rigid body modes. The latter, also known as free–free modes, result from bodies that retain some unconstrained degrees of freedom, and they have zero eigenvalues too.

In formulation (22), the last m components of the eigenvectors represent the second integration of the reaction forces/moments of the constraints; they have no physical meaning and can be discarded.

The matrices that appear in the two forms of the GEP have different properties, and this is relevant when choosing the optimal solution scheme. In the GEP (20), the A matrix is nonsingular only if there are no rigid body modes, as it is z-times rank deficient in the presence of z rigid body modes. Moreover, the B matrix is always singular. Hence, neither matrix is invertible in the most general case. On the other hand, in the GEP (22), the A matrix is singular, but the B matrix is always nonsingular, regardless of the presence of rigid body modes, because M is positive definite and the constraint Jacobian is assumed to be full rank. This would make GEP (22) a better choice than GEP (20) because one could always transform it to an SEP through the inverse of B. However, as we will see later, solving the SEP in this form is not what we need in the case of large systems, where we want a limited number of eigenpairs starting from the smallest ones. In that case, a shift-and-invert approach is needed, where the nonsingularity of B is irrelevant and the inversion of A is required instead, so neither GEP (20) nor GEP (22) would fit this requirement as it stands. The shift-and-invert approach, however, works on a regularized form of the matrix to be inverted, obtained by means of a shift parameter, so both formulations can work in this setting, except when the shift is zero.
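To make the comparison concrete, the block below writes one pair of constrained GEPs that has exactly the properties discussed above (a singular B in the first form, and a singular A with a nonsingular B in the second). It is a sketch in assumed notation rather than a transcription of (20) and (22): the Jacobian symbol $C_q$, the multiplier part $\xi$ of the augmented eigenvector and the signs of the off-diagonal blocks are assumptions.

```latex
\begin{aligned}
\text{first form:}\quad
\begin{bmatrix} -K & -C_q^{T} \\ -C_q & 0 \end{bmatrix}
\begin{bmatrix} \Phi \\ \xi \end{bmatrix}
&= \lambda
\begin{bmatrix} M & 0 \\ 0 & 0 \end{bmatrix}
\begin{bmatrix} \Phi \\ \xi \end{bmatrix},
\qquad \omega = \sqrt{-\lambda},\\[4pt]
\text{second form:}\quad
\begin{bmatrix} -K & 0 \\ 0 & 0 \end{bmatrix}
\begin{bmatrix} \Phi \\ \xi \end{bmatrix}
&= \lambda
\begin{bmatrix} M & C_q^{T} \\ C_q & 0 \end{bmatrix}
\begin{bmatrix} \Phi \\ \xi \end{bmatrix}.
\end{aligned}
```

In the second form, the second block row reads $\lambda\, C_q \Phi = 0$, so any vector with $\lambda = 0$ satisfies it, which is where the spurious zero eigenvalues come from.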

Finally, we note that, when the K matrix is symmetric, both A and B are symmetric; therefore, optimized linear solvers can be used in the inner loop of the Krylov–Schur solver for the sake of higher speed (that is, the problem can be approached via LDLt decompositions rather than LU decompositions in the case of direct solvers, or via MINRES rather than GMRES in the case of Krylov solvers).

3.2. Damped Case—Complex Valued

The conventional modal analysis of the damped system, with exponential solutions, is formulated as a quadratic eigenvalue problem (QEP) (24), with either left or right eigenvectors.

We recall some useful properties. Because the coefficients of (24) are real, any complex roots must appear as complex conjugate pairs. The QEP generates 2n eigenvalues, which are finite if M is nonsingular; if M, R, K are real or Hermitian, the eigenvalues are either real or come in complex conjugate pairs; if M is Hermitian positive definite and R, K are Hermitian positive semidefinite, then all eigenvalues have a non-positive real part.

Complex conjugate pairs correspond to underdamped modes, oscillatory and decaying when the real part is negative;

Purely imaginary conjugate pairs correspond to undamped modes, purely harmonic and not decaying;

Real eigenvalues with a negative real part and no imaginary part correspond to overdamped modes, not oscillatory and exponentially decaying;

In all cases, a positive real part indicates an unstable system;

For the class of damped systems, the eigenvectors are also complex, and each of their elements has an amplitude and a phase. Both the amplitude and the phase of the entire eigenvector can be arbitrary (but the relative amplitude of each component is unaltered by any normalization, and the relative phase between components is constant);

The two eigenvectors of a complex conjugate pair are also conjugate.

Oscillatory modes, corresponding to a complex conjugate pair of eigenvalues, can be written in a more engineering-oriented way, as done for 1-dof systems, in terms of natural (undamped) frequencies, damped frequencies and damping factors.
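The textbook 1-dof relations can be sketched as follows, assuming an eigenvalue of the form λ = −ζ ω_n ± i ω_n √(1 − ζ²): the natural frequency is the modulus of λ, the damped frequency is its imaginary part, and the damping factor is minus the real part divided by the modulus. The function names and the sample values of m, r and k are hypothetical and only serve the illustration.

```python
import cmath


def sdof_eigenvalues(m, r, k):
    """Roots of the scalar quadratic eigenproblem m*lam^2 + r*lam + k = 0."""
    disc = cmath.sqrt(r * r - 4.0 * m * k)
    return (-r + disc) / (2.0 * m), (-r - disc) / (2.0 * m)


def modal_parameters(lam):
    """Return (omega_n, omega_d, zeta) for one eigenvalue of an oscillatory pair."""
    omega_n = abs(lam)            # natural (undamped) frequency [rad/s]
    omega_d = abs(lam.imag)       # damped frequency [rad/s]
    zeta = -lam.real / omega_n    # damping factor, negative if the mode is unstable
    return omega_n, omega_d, zeta


# Lightly damped oscillator with assumed values m = 1, r = 0.4, k = 4.
lam_plus, lam_minus = sdof_eigenvalues(m=1.0, r=0.4, k=4.0)
omega_n, omega_d, zeta = modal_parameters(lam_plus)
print(lam_plus, omega_n, omega_d, zeta)
```

A negative `zeta` returned by this sketch corresponds to an eigenvalue with positive real part, that is, to an unstable mode.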

Although there exist algorithms that can solve (24) directly, the QEP is often transformed to an SEP or GEP so that a conventional solver like Arnoldi or Krylov–Schur can be used. This can be performed by expressing the problem in state space: we introduce an augmented eigenvector that stacks the displacement eigenvector and its velocity counterpart, that is, the same eigenvector multiplied by the eigenvalue.

This can be used to transform the QEP (24) into a GEP (29) with double the original size.
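One common state-space linearization of this kind is shown below as a sketch; the block ordering and signs are assumptions and may differ from those of the GEP (29). The first block row is the identity $\lambda\Phi = \lambda\Phi$, while the second block row gives $-K\Phi - \lambda R\Phi = \lambda^{2} M\Phi$, that is, the original quadratic problem $(\lambda^{2} M + \lambda R + K)\,\Phi = 0$.

```latex
\begin{bmatrix} 0 & I \\ -K & -R \end{bmatrix}
\begin{bmatrix} \Phi \\ \lambda\Phi \end{bmatrix}
= \lambda
\begin{bmatrix} I & 0 \\ 0 & M \end{bmatrix}
\begin{bmatrix} \Phi \\ \lambda\Phi \end{bmatrix}
```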

Additionally, one can consider the constraints by introducing Lagrange multipliers that correspond to the m constraints, enforced through the constraint Jacobian, thus obtaining a constrained QEP. Finally, by introducing an augmented eigenvector that also contains the multipliers, and by making use of simple linear algebra, we can write the constrained QEP as a constrained GEP (33).

An alternative formulation is based on the solution of a different GEP, where the spurious modes related to the constraint equations are zero instead of infinite.

We found that, among the different formulations (Table 1), the most efficient way to compute eigenpairs for the constrained damped system is the GEP approach (33).

4. Computing Eigenpairs with Sparse Matrices

When the number of unknowns n grows, it is not feasible to compute all the n eigenvalues and eigenvectors, both for reasons of computational time and because of the large amount of memory needed to store all the eigenvectors. In fact, many analyses that require the computation of eigenmodes need only a small set of them.

There are iterative methods that preserve the sparsity of matrices and that can compute a limited set of k eigenvectors: most notably, these are the IRAM (Implicitly Restarted Arnoldi Method), the Locally Optimal Block Preconditioned Conjugate Gradient (LOBPCG) and, lastly, the Krylov–Schur method.

The problem is that they compute the k largest eigenvalues, not the smallest ones, which is exactly the opposite of our interest. This issue can be solved by adopting a Moebius transform of the eigenvalue problem. We proceed as follows.

For the undamped constrained case, we first formulate the generalized eigenvalue problem (GEP); then we adopt a Moebius transform of the eigenvalue problem, namely the shift-and-invert strategy, which computes the eigenvalues of the transformed problem (40).

After the eigenvalue problem (40) is solved for k eigenpairs, one recovers the original eigenvalues, and hence the original natural frequencies, using (42).

For the damped constrained case, we formulate a GEP of the same type; then, similarly to the undamped case, we apply the shift–invert Moebius transformation, solve the transformed problem for k eigenpairs, and finally recover the original eigenvalues.

Right eigenvectors are not affected by the Moebius transform. If one is also interested in the left eigenvectors, those must be recovered from the left eigenvectors of the transformed problem through an additional transform.

User-defined values of the shift can be used to extract eigenvalues in specific frequency ranges. In fact, the iterative solver will return the k eigenvalues that are closest, in absolute value, to the shift.

If the shift parameter is zero or close to it, as often happens, the k largest eigenvalues of the transformed problem computed by the Krylov–Schur solver correspond to the k smallest eigenvalues of the original problem, that is, to the modes closest to zero frequency.

As a special case, for a zero shift, the transformed eigenvalues are the reciprocals of the original ones, which for an unconstrained problem (no Jacobian) corresponds to solving the inverse eigenvalue problem.
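The effect of the transform on the spectrum can be checked with a few numbers. The sketch below assumes the usual shift-and-invert map θ = 1/(λ − σ), with inverse λ = σ + 1/θ; the symbols σ and θ, the function names and the sample eigenvalues are illustrative assumptions and are not taken from (40) to (42).

```python
def shift_invert_map(lams, sigma):
    """Transformed eigenvalues theta = 1 / (lam - sigma)."""
    return [1.0 / (lam - sigma) for lam in lams]


def recover(theta, sigma):
    """Inverse map lam = sigma + 1 / theta."""
    return sigma + 1.0 / theta


def closest_to_shift(lams, sigma):
    """Index of the eigenvalue whose transformed value has the largest modulus."""
    thetas = shift_invert_map(lams, sigma)
    return max(range(len(lams)), key=lambda i: abs(thetas[i]))


lams = [0.5, 4.0, 9.5, 25.0, 100.0]   # sample spectrum (assumed values)

# A shift near 10 makes the eigenvalue 9.5 dominant in the transformed problem.
i_mid = closest_to_shift(lams, sigma=10.0)

# A shift close to zero makes the smallest eigenvalue dominant instead.
i_low = closest_to_shift(lams, sigma=1e-3)

theta_mid = shift_invert_map(lams, 10.0)[i_mid]
print(lams[i_mid], lams[i_low], recover(theta_mid, 10.0))
```

An iterative solver that converges to the largest |θ| therefore returns the original eigenvalues nearest to the chosen shift.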

In general, one can adjust the shift value so that it provides the best numerical performance: the shift regularizes A and helps solve the linear problem in (41), even when A or B are singular or close to singular. This happens in many cases when conducting a modal analysis of engineering structures, especially if the structure has rigid body modes. In fact, our default method is to extract all the lower modes, including rigid body modes, and to this end, we found that a small nonzero shift works well, both to retrieve the six rigid body modes and to cure ill-posed problems.

The Krylov–Schur and Arnoldi methods draw on a single computational primitive, that is, the product of a sparse matrix C by a vector, for the solution of a standard eigenvalue problem. In our case, however, C is the shift-and-invert operator, which contains a matrix inverse; pre-computing such a C matrix is out of the question because the exact inversion would require too much CPU time and would destroy the sparsity. Because the iterative solver requires only the product of C by a vector, an acceptable trade-off is to return the result of this product in two steps, (46a) and (46b).

Here, we note that Equation (46b) in the second step requires a linear system solution. This can be a computational bottleneck, but a substantial speedup can be achieved by observing that the coefficient matrix is constant; therefore, one can factorize it once at the beginning of the Krylov–Schur iterations and perform only the back substitutions in (46b).

An alternative that preserves the sparsity of the matrices and can fit better in scenarios with millions of unknowns is to solve (46b) iteratively via truncated MINRES or GMRES methods. If the number of unknowns is in the range of tens of thousands, however, we found that the factorization via a direct method performs faster.
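The factorize-once idea can be sketched with a small dense example. The code below assumes that the operator is C = (A − σB)⁻¹B, factorizes A − σB a single time, and then applies the operator through one matrix-vector product and one pair of triangular solves per call. A plain power iteration stands in for the Krylov–Schur solver, dense lists stand in for sparse matrices, and all names (`lu_factor`, `make_op_cv`, `dominant_theta`) and the test matrices are hypothetical.

```python
import math


def lu_factor(a):
    """Dense LU factorization with partial pivoting; returns (lu, perm)."""
    n = len(a)
    lu = [row[:] for row in a]
    perm = list(range(n))
    for k in range(n):
        p = max(range(k, n), key=lambda i: abs(lu[i][k]))
        if lu[p][k] == 0.0:
            raise ValueError("matrix is singular")
        lu[k], lu[p] = lu[p], lu[k]
        perm[k], perm[p] = perm[p], perm[k]
        for i in range(k + 1, n):
            lu[i][k] /= lu[k][k]
            for j in range(k + 1, n):
                lu[i][j] -= lu[i][k] * lu[k][j]
    return lu, perm


def lu_solve(lu, perm, b):
    """Solve using an existing factorization (forward, then back substitution)."""
    n = len(b)
    y = [b[perm[i]] for i in range(n)]
    for i in range(n):                      # unit lower triangular solve
        for j in range(i):
            y[i] -= lu[i][j] * y[j]
    for i in reversed(range(n)):            # upper triangular solve
        for j in range(i + 1, n):
            y[i] -= lu[i][j] * y[j]
        y[i] /= lu[i][i]
    return y


def matvec(m, v):
    return [sum(mij * vj for mij, vj in zip(row, v)) for row in m]


def make_op_cv(a, b, sigma):
    """Return the map v -> (A - sigma*B)^-1 B v, factorizing only once."""
    n = len(a)
    shifted = [[a[i][j] - sigma * b[i][j] for j in range(n)] for i in range(n)]
    lu, perm = lu_factor(shifted)           # done once, reused at every call
    def op_cv(v):
        y = matvec(b, v)                    # first step: product with B
        return lu_solve(lu, perm, y)        # second step: linear solve
    return op_cv


def dominant_theta(op, n, iters=200):
    """Power iteration on the operator; returns its dominant eigenvalue."""
    v = [1.0] * n
    theta = 0.0
    for _ in range(iters):
        w = op(v)
        theta = sum(vi * wi for vi, wi in zip(v, w)) / sum(vi * vi for vi in v)
        norm = math.sqrt(sum(wi * wi for wi in w))
        v = [wi / norm for wi in w]
    return theta


K = [[2.0, -1.0, 0.0], [-1.0, 2.0, -1.0], [0.0, -1.0, 1.0]]
M = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]

lam_low = 0.0 + 1.0 / dominant_theta(make_op_cv(K, M, 0.0), 3)   # smallest eigenvalue
lam_mid = 1.5 + 1.0 / dominant_theta(make_op_cv(K, M, 1.5), 3)   # closest to 1.5
print(lam_low, lam_mid)
```

With a sparse direct solver the structure is the same: the factorization is the expensive step and is paid once, while each iteration costs only a sparse product and the triangular solves.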

5. Implementation of the Krylov–Schur Solver

The Krylov–Schur method was introduced in 2001 [14], leading to improved performance with respect to other Krylov subspace methods, such as Arnoldi and Lanczos, which had been used for decades in the field of eigenvalue computation. In our experience, the well-known Implicitly Restarted Arnoldi Method, implemented, for example, in the arpack library, and the Locally Optimal Block Preconditioned Conjugate Gradient (LOBPCG), implemented, for example, in the blopex library, both fail to converge for problems whose matrices are of type (20), (22), (33) or (34) and for which wide mass ratios or strongly ill-conditioned blocks are present.

On the contrary, the Krylov–Schur method yields satisfactory results even in the most critical conditions, which made it our choice for the following tests. Our implementation follows the guidelines in [19], as reported in Algorithm 1. It was extended to the case of complex and sparse matrices and is included in the open-source multibody library chrono [20].

At a higher level, above the Krylov–Schur solver, specific routines construct the eigenvalue problem in accordance with (33), or with (20) for the undamped case. These formulations push the spurious constraint modes to infinity, where they do not disturb the low-frequency range that is usually of interest in engineering applications.

The code offers the possibility to specify different problem formulations, either direct or in shift–invert, by providing different Op_Cv operators in Algorithm 2. For instance, in Algorithm 3, we show the implementation for the shift–invert case, implementing (46a) and (46b).

The solutions of the linear systems required by the method can in theory be provided by any linear solver that supports complex values. In practice, given the relatively high accuracy required of the solution and the ill-conditioning of some matrices, direct solvers are almost mandatory for this application, leaving iterative solvers only for systems with a very large number of degrees of freedom. For smaller and simpler problems, the choice of the solver is not critical (allowing the use of, e.g., the SparseLU and SparseQR functions from the popular C++ linear algebra library eigen [21]); for most real cases, however, more advanced solvers are required, such as Pardiso MKL or MUMPS [22]. Given the importance of this choice, our Krylov–Schur implementation was made solver agnostic: users can provide a solver of their own choice.

For the undamped case, as in (20), the shift in the shift–invert procedure is assigned a small positive real value by default, in order to return the lowest eigenmodes, including those with zero eigenvalues in case there are rigid body modes. A small negative real value would work as well, but the numerical conditioning of the problem would be worse. If one needs eigenmodes clustered about some specific frequency, the shift is set accordingly. For the damped case, we use a complex shift, with a small real value and no imaginary part if we are interested in the lowest eigenvalues, or with a finite imaginary part if we need eigenmodes clustered about a given frequency.

The Krylov–Schur decomposition is then solved by using the eigensolvers of the eigen linear algebra library [21].

An important contribution to the stability of the method is given by a trivial and inexpensive preconditioning of the Jacobian matrix, namely a rescaling. The terms of the stiffness and damping matrices usually differ by orders of magnitude from those of the Jacobian matrix. The rescaling affects only the Lagrange multipliers and the corresponding part of the eigenvectors, which should be scaled back by the same factor (if they are of any interest to the user). In some corner cases, this simple change in the matrices allows a significant reduction in the residuals, even just after the first iteration of the method.
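Why the rescaling is harmless can be seen on a small saddle-point system. The sketch below solves [[K, sCᵀ], [sC, 0]] [x; l] = [f; 0] with and without a scale factor s on the Jacobian: the primal unknowns x are unchanged, while the multipliers come out divided by s and are recovered by multiplying back. The matrices, the load, the scale factor and the function names are hypothetical; the actual factor used in the paper is not shown in the text.

```python
def solve(a, b):
    """Gaussian elimination with partial pivoting on a small dense system."""
    n = len(b)
    m = [row[:] + [bi] for row, bi in zip(a, b)]
    for k in range(n):
        p = max(range(k, n), key=lambda i: abs(m[i][k]))
        m[k], m[p] = m[p], m[k]
        for i in range(k + 1, n):
            f = m[i][k] / m[k][k]
            for j in range(k, n + 1):
                m[i][j] -= f * m[k][j]
    x = [0.0] * n
    for i in reversed(range(n)):
        s = m[i][n] - sum(m[i][j] * x[j] for j in range(i + 1, n))
        x[i] = s / m[i][i]
    return x


def saddle_point(k, c, f, scale=1.0):
    """Assemble and solve [[K, s*C^T], [s*C, 0]] [x; l] = [f; 0]."""
    n, m = len(k), len(c)
    a = [[0.0] * (n + m) for _ in range(n + m)]
    for i in range(n):
        for j in range(n):
            a[i][j] = k[i][j]
    for r in range(m):
        for j in range(n):
            a[n + r][j] = scale * c[r][j]   # scaled Jacobian block
            a[j][n + r] = scale * c[r][j]   # and its transpose
    sol = solve(a, f + [0.0] * m)
    return sol[:n], sol[n:]


K = [[2.0e6, -1.0e6], [-1.0e6, 1.0e6]]   # stiffness with large entries
C = [[1.0, -1.0]]                        # constraint x1 - x2 = 0, entries of order one
f = [0.0, 1000.0]
s = 1.0e6                                # assumed scale factor

x_ref, l_ref = saddle_point(K, C, f)
x_scl, l_scl = saddle_point(K, C, f, scale=s)
l_back = [s * li for li in l_scl]        # multipliers scaled back by the same factor
print(x_ref, l_ref, x_scl, l_back)
```

The same reasoning applies to the eigenvalue problem: scaling the Jacobian blocks changes only the multiplier part of the eigenvectors, not the eigenvalues or the displacement part.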

Algorithm 1 Krylov–Schur

procedure Krylov–Schur(Op_Cv(), …)
  …
  KrylovExpansion(Op_Cv(), …)
  while … do
    …
    KrylovExpansion(Op_Cv(), …)
    sortSchur(…)
    …
    CheckConvergence(H, …, p, …)
  end while
  eig(…)
  …
  return …
end procedure

Algorithm 2 Krylov Expansion

procedure KrylovExpansion(Op_Cv(), Q, H, …, …)
  for … do
    Op_Cv(…)
    …
  end for
end procedure

Algorithm 3 Op_Cv operator

procedure Op_Cv(v)
  …
  return …
end procedure
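A Krylov expansion step of this kind is, at its core, an Arnoldi process: apply the operator to the last basis vector, orthogonalize the result against the existing basis, and store the coefficients in an upper Hessenberg matrix. The sketch below is a minimal real-valued version with modified Gram–Schmidt, without restarts, Schur reordering or convergence checks, so it is not the paper's Algorithm 2; the function names and the test matrix are hypothetical.

```python
import math


def dot(u, v):
    return sum(ui * vi for ui, vi in zip(u, v))


def matvec(m, v):
    return [sum(mij * vj for mij, vj in zip(row, v)) for row in m]


def krylov_expansion(op, q0, steps):
    """Arnoldi expansion with modified Gram-Schmidt.

    Returns the orthonormal basis Q (a list of vectors) and the
    (steps + 1) x steps upper Hessenberg matrix H such that
    op(Q[j]) = sum_i H[i][j] * Q[i] for every completed step j.
    """
    norm0 = math.sqrt(dot(q0, q0))
    q = [[x / norm0 for x in q0]]
    h = [[0.0] * steps for _ in range(steps + 1)]
    for j in range(steps):
        w = op(q[j])                         # the only use of the operator
        for i in range(j + 1):               # orthogonalize against the basis
            h[i][j] = dot(q[i], w)
            w = [wk - h[i][j] * qk for wk, qk in zip(w, q[i])]
        h[j + 1][j] = math.sqrt(dot(w, w))
        if h[j + 1][j] < 1e-14:              # invariant subspace found: stop
            break
        q.append([wk / h[j + 1][j] for wk in w])
    return q, h


A = [[4.0, 1.0, 0.0, 0.0],
     [1.0, 3.0, 1.0, 0.0],
     [0.0, 1.0, 2.0, 1.0],
     [0.0, 0.0, 1.0, 1.0]]
op = lambda v: matvec(A, v)

Q, H = krylov_expansion(op, [1.0, 0.0, 0.0, 0.0], steps=3)
print(len(Q), H[1][0])
```

In a shift-and-invert setting, `op` would be the operator that multiplies by B and then solves with the factorized shifted matrix, so the expansion never needs the inverse explicitly.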

6. Results

The Krylov–Schur method was tested on various scenarios, including real-case problems, in order to assess the accuracy and scalability of the method. The relevant test conditions include flexible elements, rigid bodies, generic constraints and free–free modes in various combinations.

The tests use the newly implemented quadratic Krylov–Schur eigensolver with the Pardiso MKL direct linear solver, and the results are compared with the eigs solver of MATLAB (which is itself an implementation of the Krylov–Schur method) as well as with the state-of-the-art Arpack [23] routines, making sure that the same Pardiso MKL linear solver was used for the latter library too. The hardware is an Intel i7 6700HQ with 16 GB RAM.

For the purpose of this article, only Rayleigh damping is considered. Other damping formulations can be used without expecting any drastic impact on the solver performance, provided that the sparsity of the matrices is kept within reasonable limits. One example that might negatively affect the solver is modal damping: in this case, dense damping matrices arise, thus easily leading to increased computational costs. However, because the solver mainly targets sparse problems, the authors did not investigate other damping models.

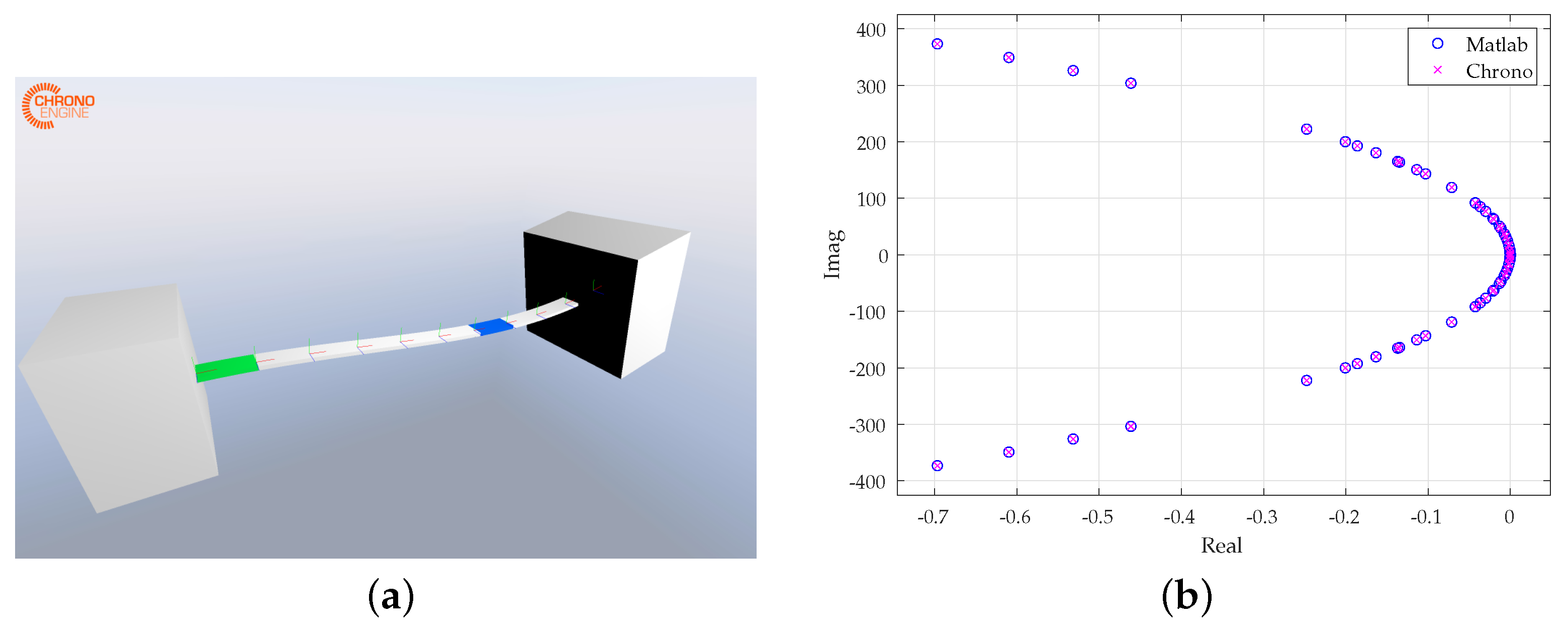

6.1. Hybrid Flexible and Rigid Bodies with Constraints

Constraining the system results in an additional zero-valued block in the system matrices, thus potentially compromising the stability of the inner linear solver. In the following test case, an Euler beam with properties set according to Table 2 is fixed at the base while its tip is constrained to a rigid body of a heavier mass (4000) (Figure 1). The method was tested with end masses up to 10e8 in order to prove its robustness.

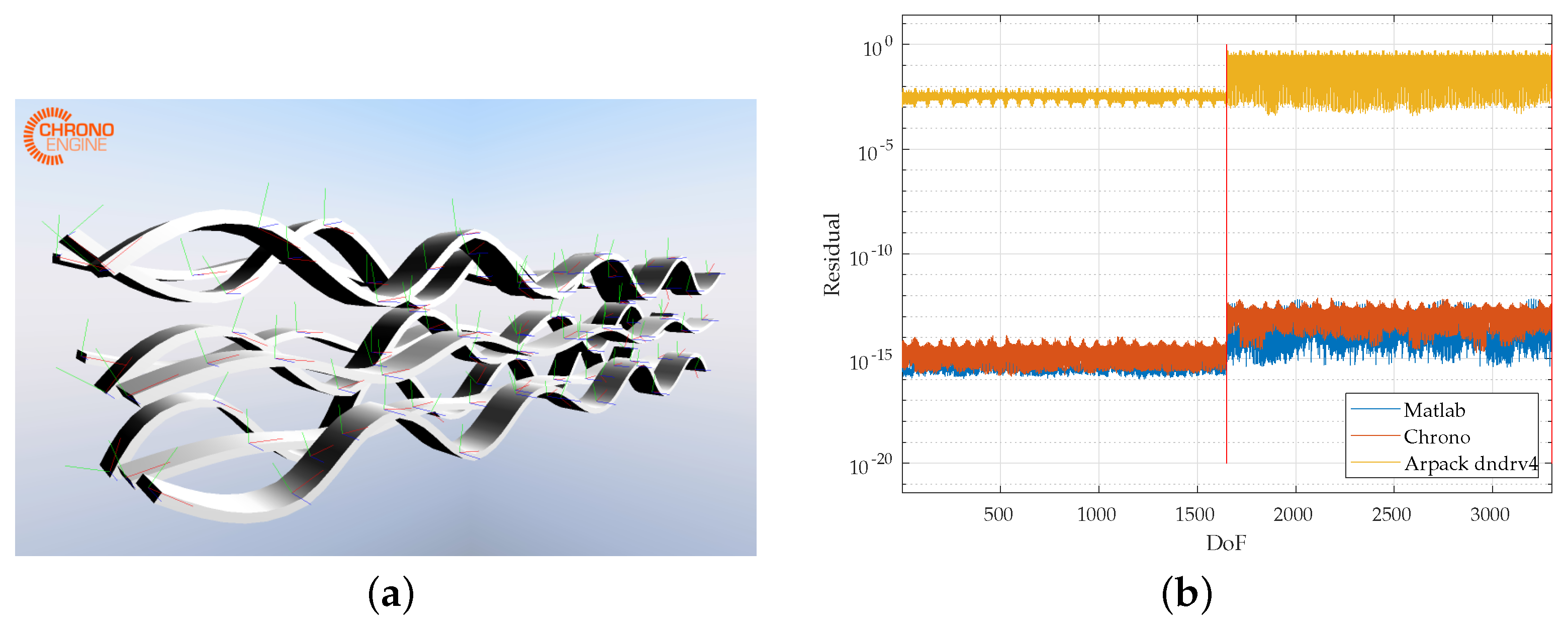

6.2. Finite Elements

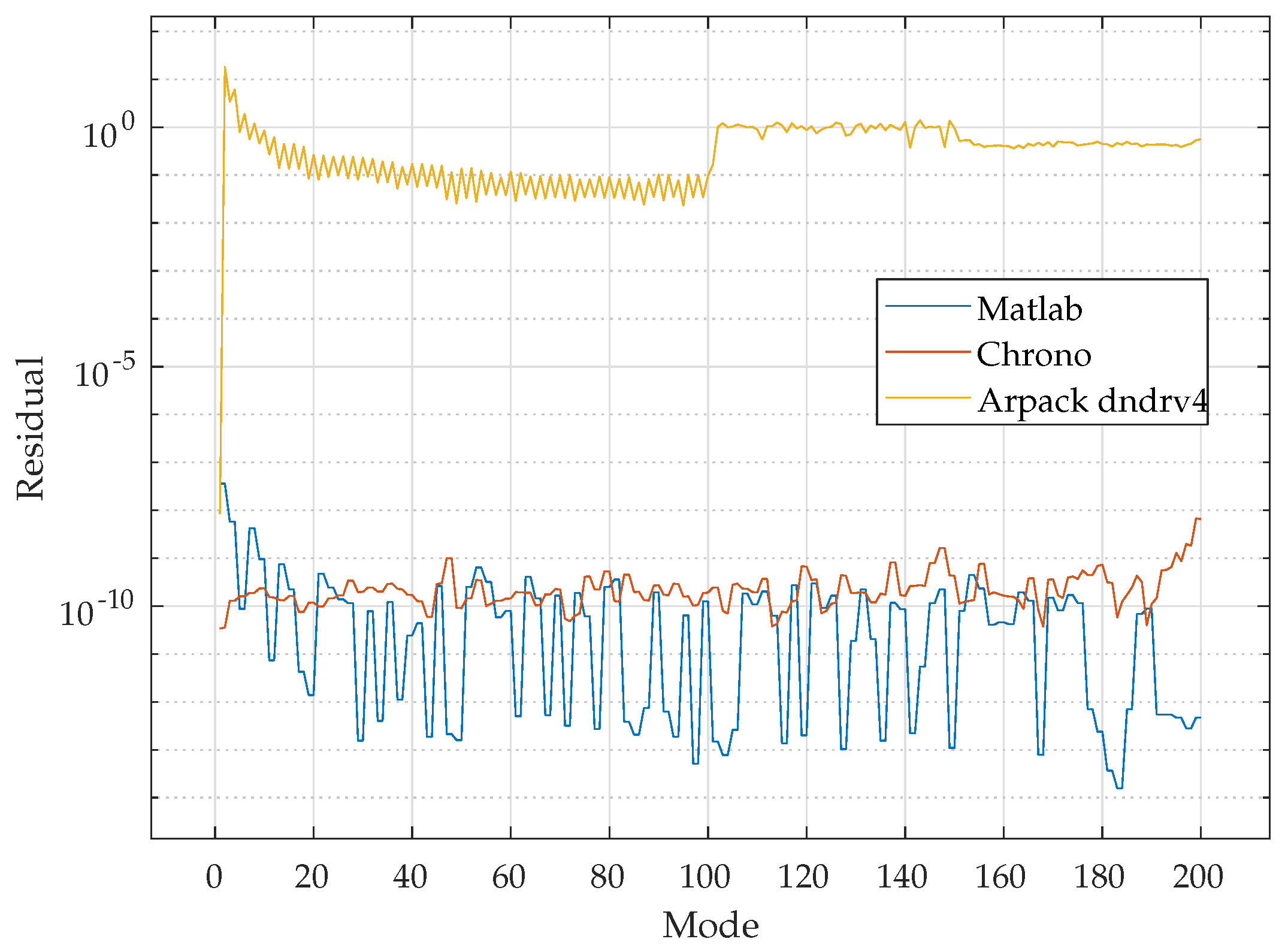

An additional test case with a crank–rod–piston assembly shows the use of a tetrahedral mesh (Figure 2). Without any specific preconditioner, the Arpack dndrv4 routine failed to return consistent results in a reasonable time. The Krylov–Schur solver showed superior performance, especially in denser and more computationally expensive problems.

6.3. Free–Free Modes

The presence of unconstrained bodies results in degenerate modes whose eigenvalues are pushed toward infinity. The method also guarantees proper stability for this degenerate case (Figure 3). It can be noticed how each degree of freedom contributes to the overall residual: the first half represents the positional degrees of freedom, while the second half represents the velocities (usually of less interest). The beam properties are the same as in Table 2. In this case, a comparison with Arpack revealed that, even when asking for better accuracy, the Arpack dndrv4 routine was not able to converge to more accurate results. The Intel MKL Pardiso solver was used for both the Krylov–Schur and Arpack solvers, thus restricting the potential cause of the reduced accuracy to the eigensolver itself.

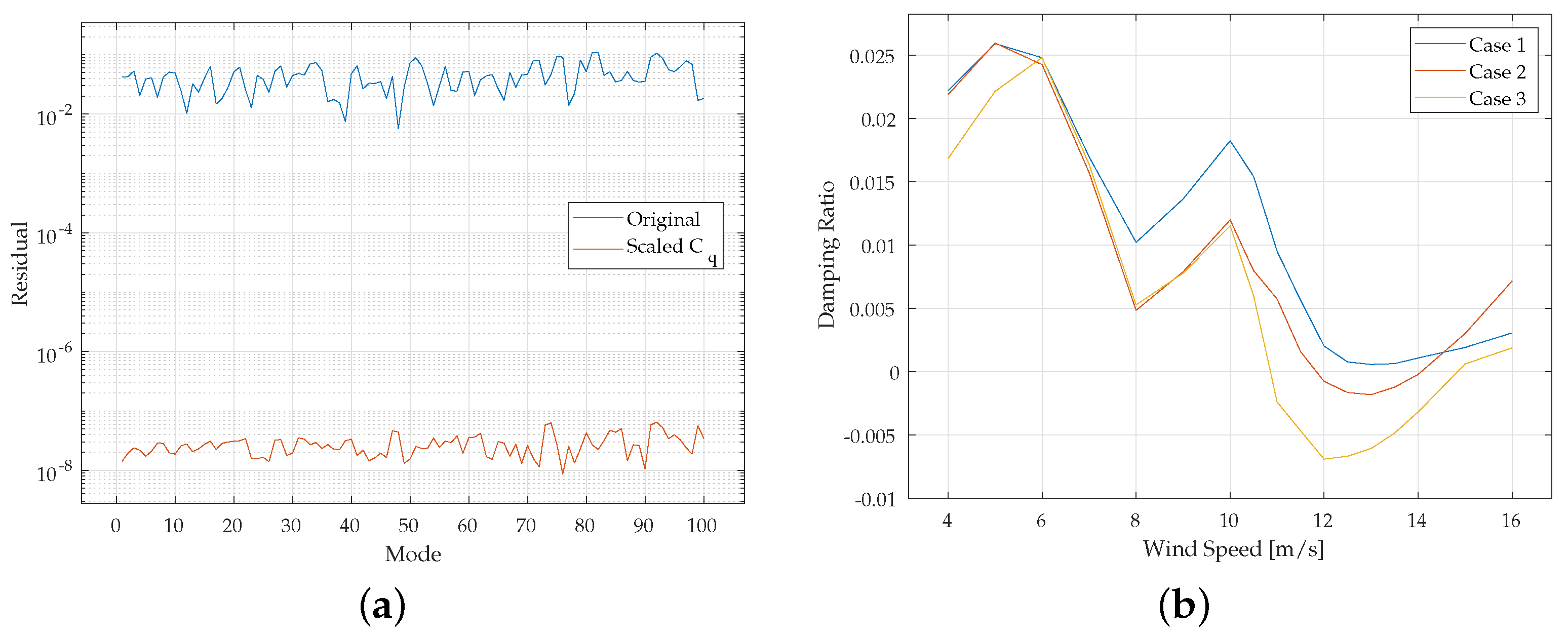

6.4. Wind Turbine

This medium-scale real test case involves a modern large-size wind turbine, courtesy of a commercial original equipment manufacturer in the wind industry. The test includes constrained flexible bodies as well as free rigid bodies. Given the wide ratio between the smallest and largest eigenvalues, reflected in the reciprocal condition number of the A matrix, the preconditioning of the Jacobian matrix of the constraints proved to be essential for the robustness and accuracy of the results. The problem is non-symmetric, especially due to the linearization of the inertia and constraint forces, as shown in Equation (9) and more broadly in Section 2; this does not pose any additional issue to the eigensolver or to the inner linear solver because they both already operate on an asymmetric problem, as shown in Equation (33). Given the sensitivity of the results, the eigenvalues are not shown directly; only the residuals, together with the stability assessment over different working conditions, are reported (Figure 4). The problem size is in the order of thousands.

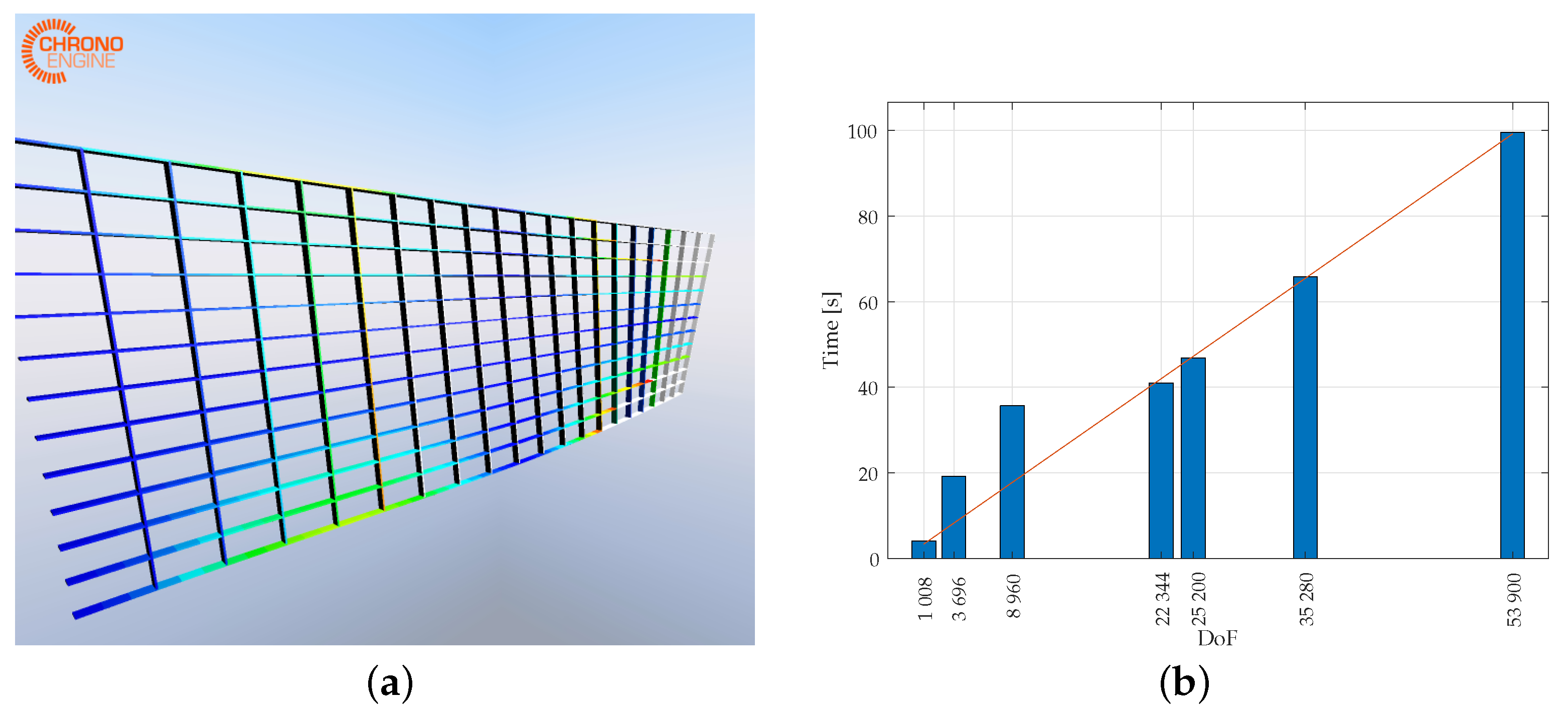

6.5. Scalability

The scalability of the method was tested on a grid of Euler beams, whose size and number of cells are parametrized in order to provide different scales of the same problem. Each beam is fixed at every intersection with the grid. For each test, the lowest 100 modes were computed. Each cell has three elements in the longitudinal direction and two in the vertical direction. The ratio between the longitudinal and vertical number of cells is kept constant across the different tests. The results show an essentially linear relation between the number of degrees of freedom of the original problem and the time cost of the Krylov–Schur solver (Figure 5), with a little additional overhead for smaller-scale tests. The results do not include the time spent assembling the matrices. Again, the beam properties are set according to Table 2.

Moreover, in this case, the Arpack solver returned high residuals, especially with close-to-zero shifts. An example for a grid structure of 20 × 14 beams is shown in Figure 6.

7. Conclusions

The proposed implementation of the Krylov–Schur solver effectively handles a wide variety of test cases, including free–free modes, constraints, and rigid and flexible systems, which result in either real or complex, symmetric or asymmetric matrices of the associated eigenvalue problem. The open-source availability of the software favors a wide dissemination of the method and offers a good platform for further improvements.