1. Introduction

Wind power has become an important source of electricity for production and domestic use due to the global energy crisis and the increasing demand for clean energy [1,2,3,4]. It is one of the fastest-growing renewable energy segments worldwide [5,6,7], with advantages such as being clean, renewable and technologically mature. Nevertheless, wind energy still suffers from poor reliability and high operation and maintenance costs, resulting in poor availability and affordability compared to traditional energy sources [8,9,10]. Wind turbine blades (WTBs) are an important component of a wind turbine (WT), accounting for about 20% of the total cost, and are also the component with the highest failure rate [11,12]. Since commercial WTs are typically exposed to harsh working environments and variable weather conditions, the operating time of WTBs is greatly reduced by various defects. Structural health monitoring (SHM) technologies are therefore a major concern for the wind industry and academia. Such monitoring is reliable and cost-effective: it reduces long downtime and high maintenance costs and avoids catastrophic scenarios caused by undetected failures [13]. In recent years, WTBs have gradually increased in size, which improves efficiency and energy production but also raises the probability of failure [14]. Therefore, studying the structural health monitoring of WTBs is of practical significance [15,16].

Early studies of WTB structural health monitoring can be categorized into two classes: noncontact measurement studies and contact measurement studies [17]. Contact measurement mainly consists of stress measurements, vibration measurements, etc. For instance, Wang et al. [18] utilized a multi-channel convolutional neural network (MCNN) to automatically and effectively capture defect characteristics from raw vibration signals. Wang et al. [19] proposed a novel wavelet package energy transmissibility function (WPETF) method for wind turbine blade fault detection, which increases the high-frequency resolution of vibration signals while maintaining low sensitivity to noise. However, these methods may modify the physical, chemical, mechanical or dimensional properties of WTBs. Hence, contact measurement is difficult to apply in practice in wind farms [20]. Noncontact measurement mainly includes acoustic testing and visual testing. Acoustic testing is a technique employed for early defect detection, mainly in the frequency domain. Tsai and Wang [21] developed a defect detection method for wind turbine blade surfaces based on a convolutional neural network, which analyzed the physical correlation between surface conditions and acoustic signals of operating wind turbines under realistic environmental conditions. For visual testing, Reddy et al. [22] proposed a WTB structural health monitoring method based on images captured by unmanned aerial vehicles and discussed deploying the trained neural network model using a micro-web framework.

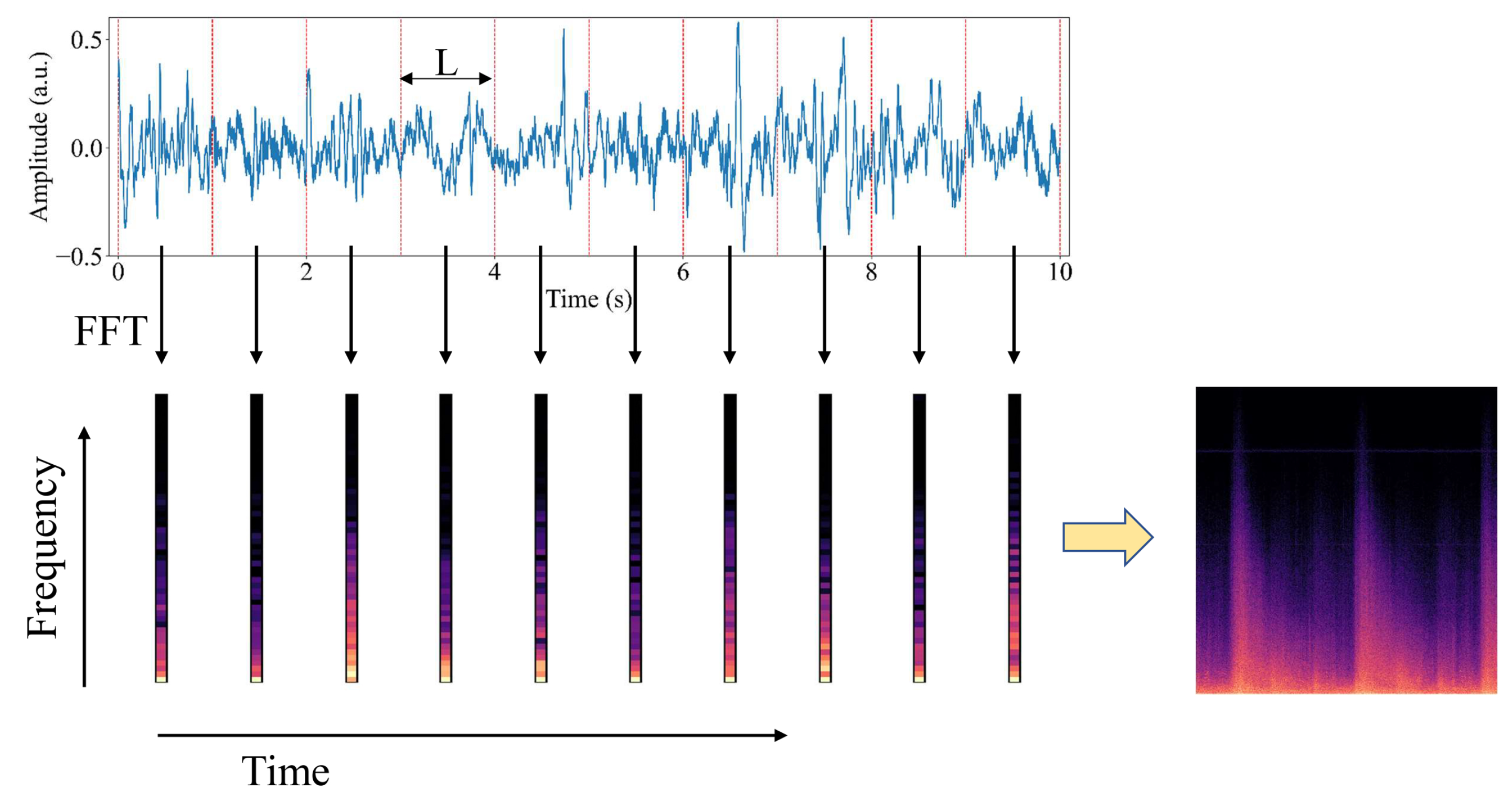

The structural health monitoring of wind turbine blades includes signal processing and fault diagnosis. As raw signals show no significant characteristics, features need to be extracted from them before fault diagnosis [23]. Through signal processing, features that reveal the hidden information of defects can be obtained from the raw signals. Commonly used signal processing methods include the fast Fourier transform (FFT) and the short-time Fourier transform (STFT). The FFT converts time-domain signals into the frequency domain but loses the time information. To solve this problem, the STFT preserves both time-domain and frequency-domain information by moving a fixed-length window along the acoustic signal and applying the FFT to each segment. To distinguish defects based on these features, a fault diagnosis algorithm must be designed. With the development of deep learning, the convolutional neural network (CNN) has been widely used in fault diagnosis because it extracts information automatically without human supervision [24]. Thus, some researchers have employed CNNs for defect detection. An edge-side lightweight YOLOv4 [25] was proposed to achieve real-time safety management of on-site power system work. The model takes advantage of depth-wise separable convolution and mobile inverted bottleneck convolution to reduce model size and computation while maintaining high accuracy. Zhang et al. [26] used a deep convolutional generative adversarial network to generate fault samples and a residual-connected convolutional neural network for feature extraction and classification. Despite the successful applications of deep learning in other fields, in-depth research on its application to wind turbine blade defect detection is still lacking. Several challenges remain for the use of deep learning [24].

- (1) Data aspect: Defects of wind turbine blades are often repaired at an early stage, making defect data difficult to obtain. In addition, the diversity of defect categories and degrees of wind turbine blades makes it hard to construct a complete dataset.

- (2)

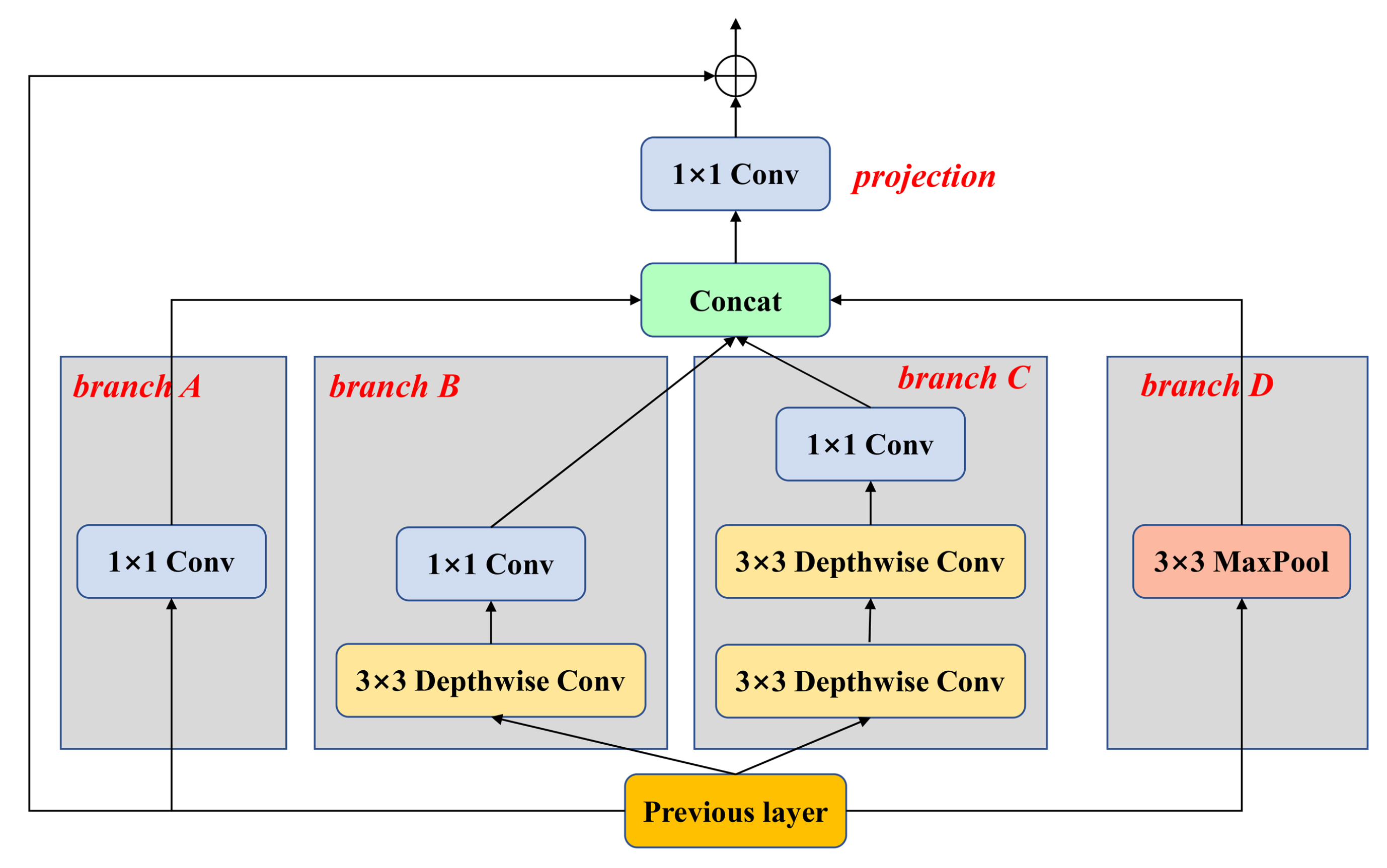

Model aspect: Common convolutional neural networks mainly have good performance on ImageNet, COCO and other datasets. The general trend has been to make deeper and more complicated networks in order to achieve higher accuracy [

27], resulting in huge size and computation. Hence, these models cannot be carried out in embedded devices for edge computing. The model for WTB defect detection should be explored for actual scenarios.

- (3) Explanation aspect: Deep learning is often treated as a black box due to its complexity. Although these models achieve superior performance, they cannot be decomposed into individual intuitive components, making them difficult to interpret [28]. Therefore, building trust in deep learning models is important.
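To make the sliding-window STFT described earlier concrete, the following is a minimal NumPy sketch, not the processing pipeline used in this work. The function name `stft_magnitude`, the Hann window, the frame length, the hop size and the synthetic two-tone signal are all illustrative assumptions.

```python
import numpy as np

def stft_magnitude(signal, frame_len=256, hop=128):
    """Magnitude spectrogram: slide a fixed-length window and FFT each segment.

    frame_len and hop are illustrative choices, not values from the paper.
    """
    window = np.hanning(frame_len)  # assumed window type
    n_frames = 1 + (len(signal) - frame_len) // hop
    frames = np.stack([
        signal[i * hop: i * hop + frame_len] * window
        for i in range(n_frames)
    ])
    # rfft keeps only the non-negative frequencies of a real-valued signal
    return np.abs(np.fft.rfft(frames, axis=1)).T  # shape: (freq_bins, n_frames)

# Synthetic signal (not measured data): 100 Hz in the first half, 400 Hz afterwards
fs = 2000  # assumed sampling rate in Hz
t = np.arange(fs) / fs
signal = np.where(t < 0.5,
                  np.sin(2 * np.pi * 100 * t),
                  np.sin(2 * np.pi * 400 * t))

spec = stft_magnitude(signal)
freqs = np.fft.rfftfreq(256, d=1 / fs)
first_peak = freqs[np.argmax(spec[:, 0])]   # dominant frequency in the first frame
last_peak = freqs[np.argmax(spec[:, -1])]   # dominant frequency in the last frame
print(spec.shape, first_peak, last_peak)
```

A plain FFT of the whole signal would show both tones but not when each occurs. The per-frame peaks show the change over time, at the cost of a frequency resolution limited to `fs / frame_len`.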

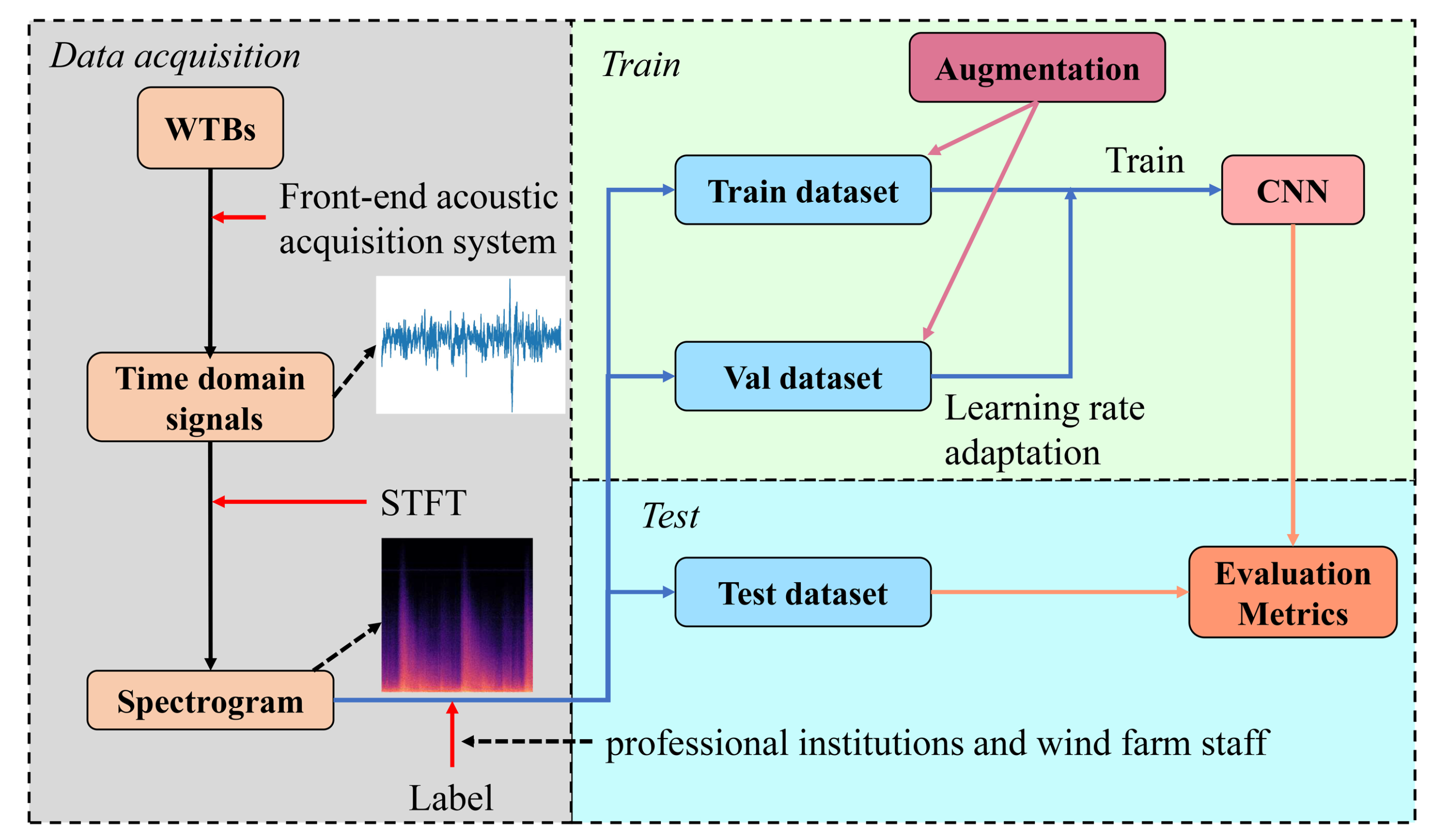

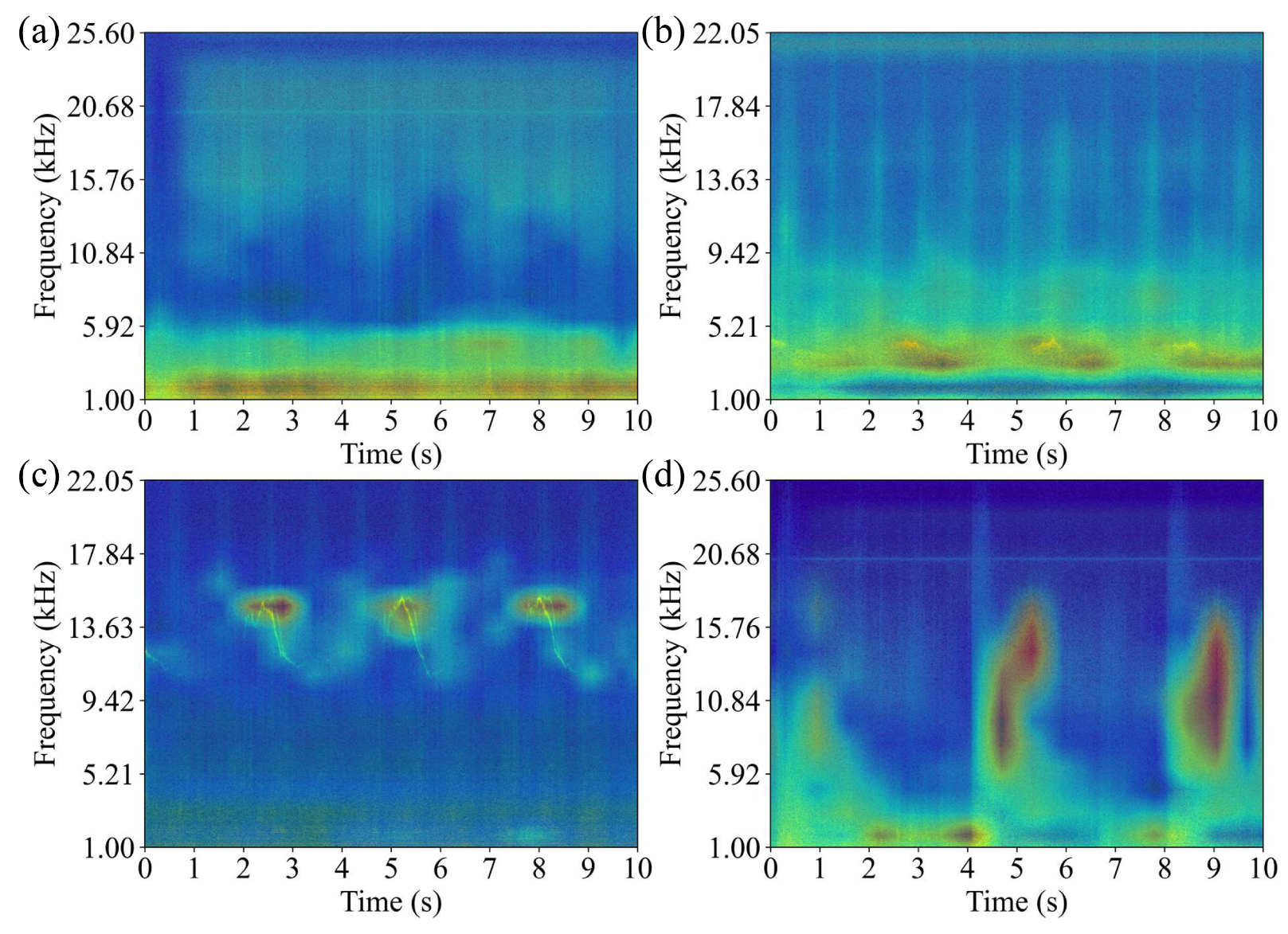

To address the above challenges, a lightweight convolutional neural network called WTBMobileNet is proposed for wind turbine blade defect detection. Specifically, for the data aspect, acoustic signals of different defect categories and degrees are collected from three wind farms (Dawu, Diaoyutai and Xiaolishan) and analyzed in detail through spectrograms. To alleviate the problem of data imbalance, a class-balanced loss function is used in this study. For the model aspect, WTBMobileNet is designed to implement multi-scale and efficient feature extraction, mainly combining the advantages of GoogLeNet [29], MobileNet [27] and ResNet [30]. The proposed model is compared with baseline networks in multiple aspects, which demonstrates its effectiveness and performance. In addition, the impact of different data augmentations on WTBMobileNet is analyzed, and the best-performing model is trained with the four data augmentations that yield positive gains. Finally, the application potential of WTBMobileNet is explored, demonstrating that the proposed model performs well in both drone image classification and spectrogram object detection.
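As an illustration of how a class-balanced loss can counteract data imbalance, the sketch below assumes the widely used "effective number of samples" weighting, in which the weight of class $c$ is proportional to $(1-\beta)/(1-\beta^{n_c})$. The exact formulation used in this work is not stated in this section, so the weighting scheme, the value of `beta`, the function names and the class counts are assumptions for illustration only.

```python
import numpy as np

def class_balanced_weights(samples_per_class, beta=0.999):
    """Per-class weights inversely proportional to the effective number of samples.

    beta is a hypothetical hyperparameter value, not taken from the paper.
    """
    n = np.asarray(samples_per_class, dtype=float)
    effective_num = (1.0 - np.power(beta, n)) / (1.0 - beta)
    weights = 1.0 / effective_num
    # Normalize so that the weights sum to the number of classes
    return weights * len(n) / weights.sum()

def class_balanced_cross_entropy(logits, labels, weights):
    """Softmax cross-entropy in which each sample is scaled by its class weight."""
    shifted = logits - logits.max(axis=1, keepdims=True)  # numerical stability
    log_probs = shifted - np.log(np.exp(shifted).sum(axis=1, keepdims=True))
    per_sample = -log_probs[np.arange(len(labels)), labels]
    return float(np.mean(weights[labels] * per_sample))

# Hypothetical class counts: one majority class and two rarer defect classes
counts = [5000, 500, 50]
w = class_balanced_weights(counts)

# Uniform logits with one sample per class
logits = np.zeros((3, 3))
labels = np.array([0, 1, 2])
loss = class_balanced_cross_entropy(logits, labels, w)
print(w, loss)
```

Rare classes receive larger weights, so their misclassification contributes more to the loss. As `beta` approaches 0 the weights become uniform, and as it approaches 1 they approach inverse class frequency.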

5. Conclusions

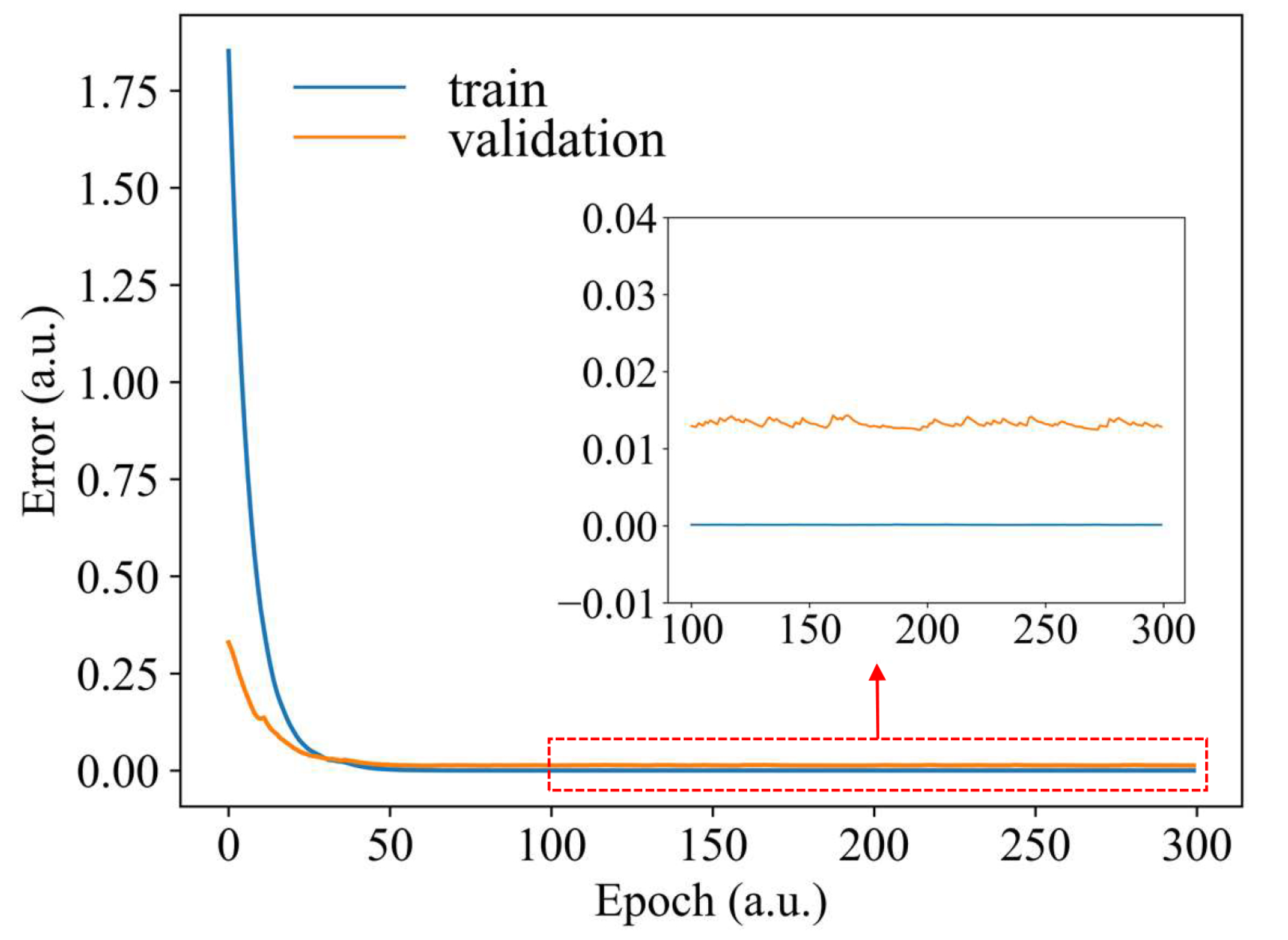

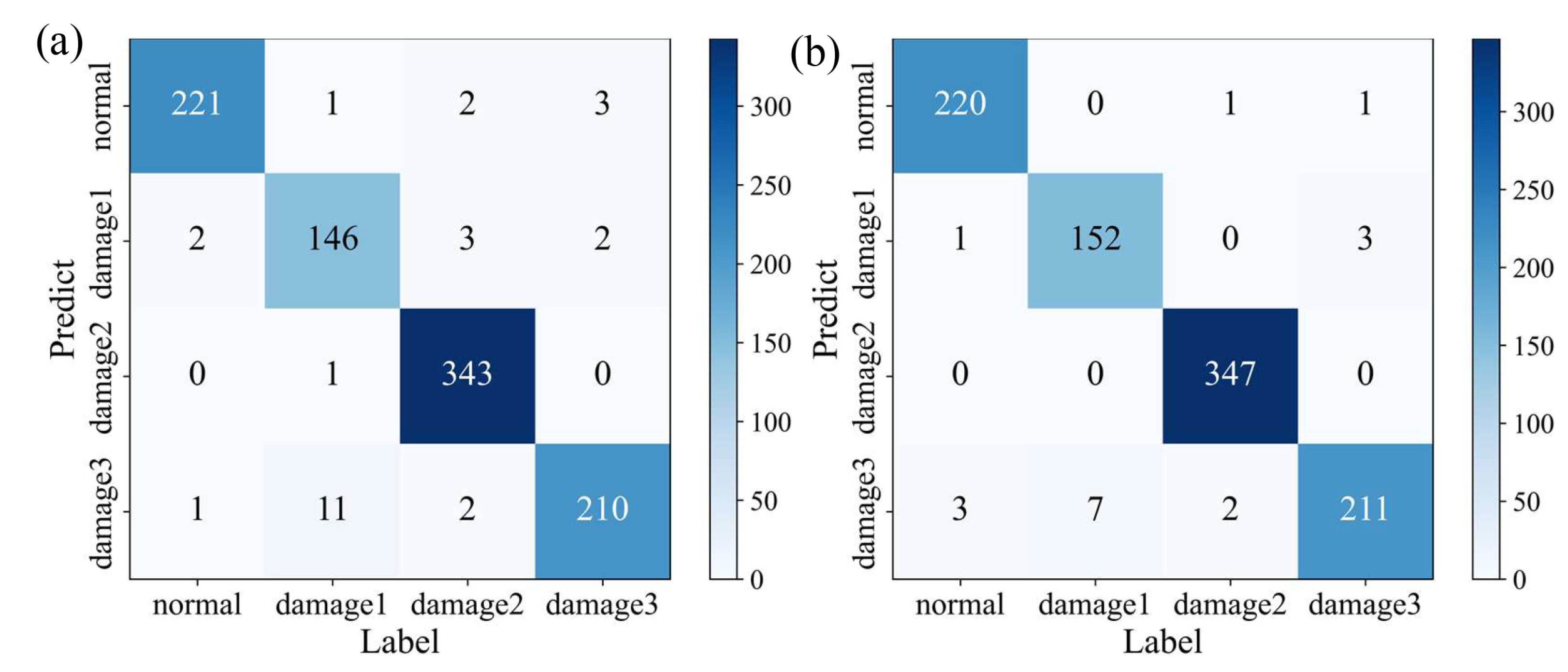

In this paper, to address the challenges of deep learning, a lightweight convolutional neural network, WTBMobileNet, is proposed for wind turbine blade defect detection on embedded devices; it reduces model size and FLOPs with only a small decrease in accuracy. Compared with the baseline models, the proposed model has an accuracy of 95.6%, with 0.315 million parameters and 0.423 GFLOPs of computation. To further improve the performance of WTBMobileNet, five data augmentations are analyzed, of which GaussianNoiseSNR, PinkNoiseSNR, PitchShift and TimeShift have positive gains. WTBMobileNet trained with these four data augmentations has an accuracy of 98.1% and improves the accuracy of Defect 1 from 91.82% to 95.6%. In addition, the interpretability and transparency of WTBMobileNet are demonstrated through class activation mapping (CAM). Finally, WTBMobileNet is tested in drone image classification and spectrogram object detection. The classification accuracy is 89.55%, and the detection mAP@0.5, mAP@0.75 and mAP@[0.5, 0.95] are 96.4%, 73.6% and 70.7%, respectively, indicating that WTBMobileNet has great potential in these two applications.
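For readers unfamiliar with the augmentation names above, the following sketch shows one plausible reading of GaussianNoiseSNR and TimeShift on a raw waveform. The implementations, function names, SNR value and circular shifting are assumptions and may differ from the augmentations actually used in this work.

```python
import numpy as np

def add_gaussian_noise_snr(signal, snr_db, rng):
    """Add white Gaussian noise scaled to a target signal-to-noise ratio in dB."""
    signal_power = np.mean(signal ** 2)
    noise_power = signal_power / (10 ** (snr_db / 10))
    noise = rng.normal(0.0, np.sqrt(noise_power), size=signal.shape)
    return signal + noise

def time_shift(signal, shift):
    """Circularly shift the waveform by `shift` samples (wrap-around is assumed)."""
    return np.roll(signal, shift)

rng = np.random.default_rng(0)
fs = 16000  # assumed sampling rate in Hz
t = np.arange(fs) / fs
clean = np.sin(2 * np.pi * 440 * t)  # synthetic tone, not recorded blade audio

noisy = add_gaussian_noise_snr(clean, snr_db=10, rng=rng)
measured_snr = 10 * np.log10(np.mean(clean ** 2) / np.mean((noisy - clean) ** 2))
shifted = time_shift(np.arange(5), 2)
print(round(measured_snr, 2), shifted)
```

Specifying the noise level as an SNR rather than a fixed amplitude keeps the augmentation strength comparable across recordings of different loudness.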

In the future, we would like to further study deep-learning-based defect detection for wind turbine blades in two directions. First, we would like to optimize Faster R-CNN to achieve multi-scale and high-accuracy object detection in drone inspection. Second, we would like to combine acoustics and vision to achieve multimodal defect detection for wind turbine blades.