A Novel and Simplified Extrinsic Calibration of 2D Laser Rangefinder and Depth Camera

Abstract

1. Introduction

- Provide a novel calibration board that is simple to manufacture for 2D LRF and camera calibration; each observation of the board yields three point-line feature constraints between the two sensors.

- With the proposed method, the joint calibration of a 2D LRF and a depth camera requires only two observations and a simple operating procedure.

- By setting a calibration threshold, the joint calibration of a 2D LRF and a depth camera mounted on a mobile device adjusts the number of observations autonomously.
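The third contribution does not spell out the stopping rule at this point. A minimal sketch, assuming the threshold is compared against a residual that the solver reports after each new observation, could look like the following; `calibrate_adaptively`, `observe` and `solve` are hypothetical names used only for illustration, not part of the paper.

```python
from typing import Any, Callable, List, Optional, Sequence, Tuple

import numpy as np


def calibrate_adaptively(
    observe: Callable[[], Any],
    solve: Callable[[Sequence[Any]], Tuple[np.ndarray, float]],
    threshold: float,
    min_obs: int = 2,
    max_obs: int = 10,
) -> Tuple[Optional[np.ndarray], float, int]:
    """Keep adding observations until the solver's residual drops below `threshold`.

    observe: hypothetical callback that moves the platform and returns extracted features.
    solve: hypothetical callback returning (parameter estimate, residual) for all
           observations collected so far.
    """
    observations: List[Any] = []
    estimate: Optional[np.ndarray] = None
    residual = float("inf")
    while len(observations) < max_obs:
        observations.append(observe())
        if len(observations) < min_obs:
            continue  # assumed: no solve is attempted before min_obs observations
        estimate, residual = solve(observations)
        if residual < threshold:
            break  # accuracy target reached, stop collecting observations
    return estimate, residual, len(observations)
```

With `min_obs=2` the loop can finish after the two observations the method needs at minimum, and only asks for more when the assumed residual criterion is not yet met.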

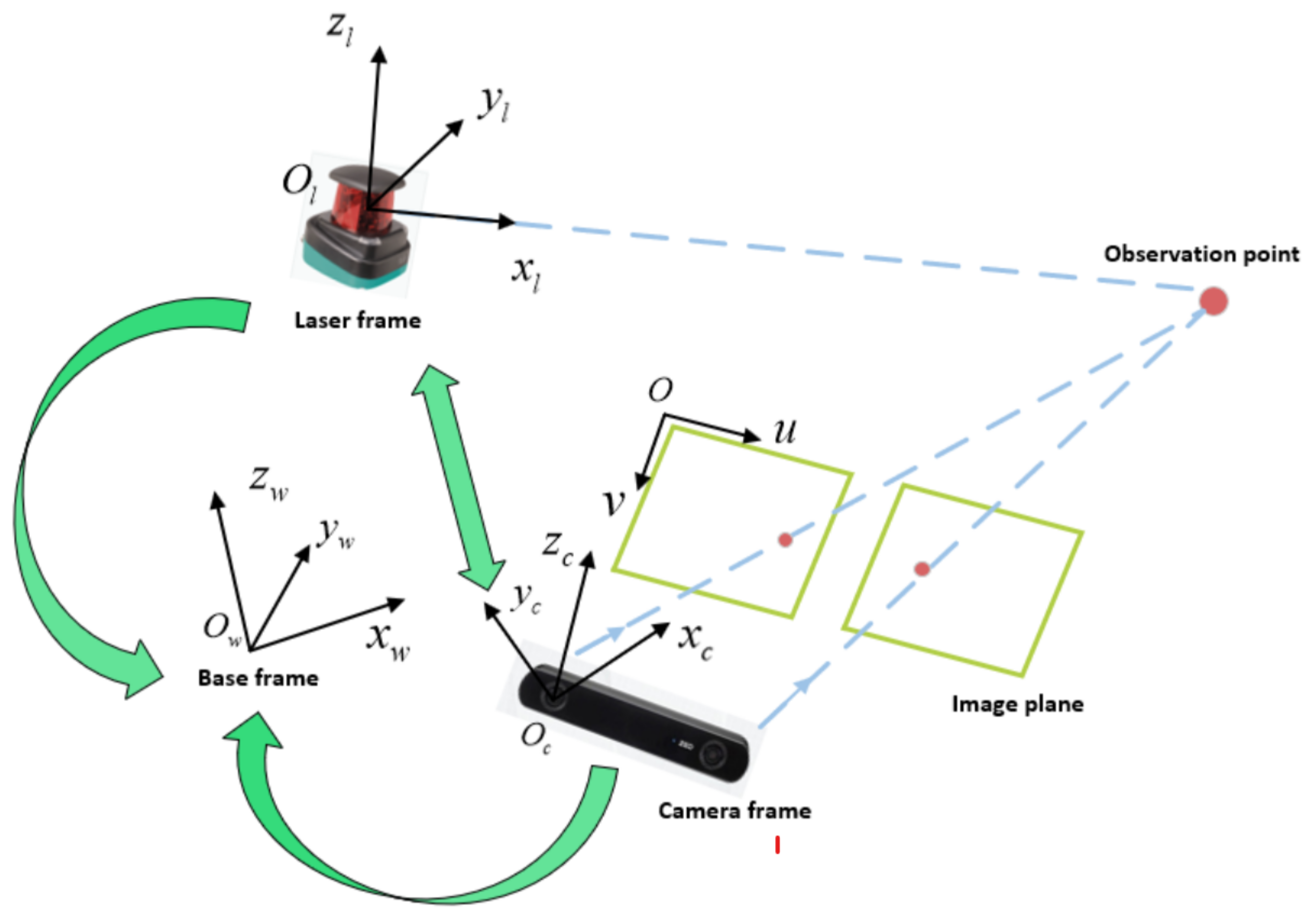

2. The Calibration Basis of 2D LRF and Depth Camera

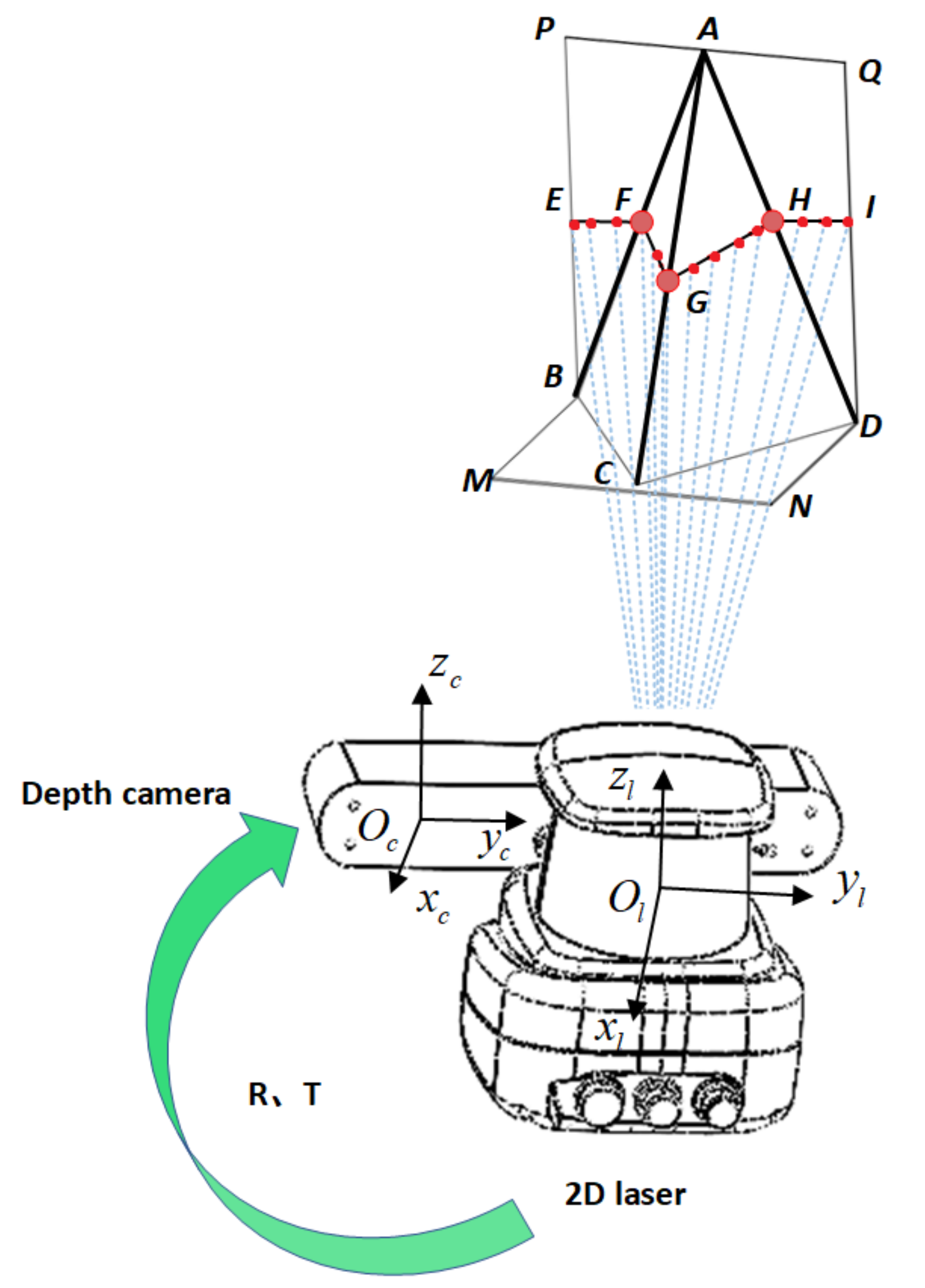

3. Calibration Methods

3.1. Feature Extraction

3.2. Parameter Fitting

3.3. Calibration Algorithm

Algorithm 1: LRF and Depth Camera Extrinsic Parameter Calibration.

4. Calibration Experiments and Analysis of Results

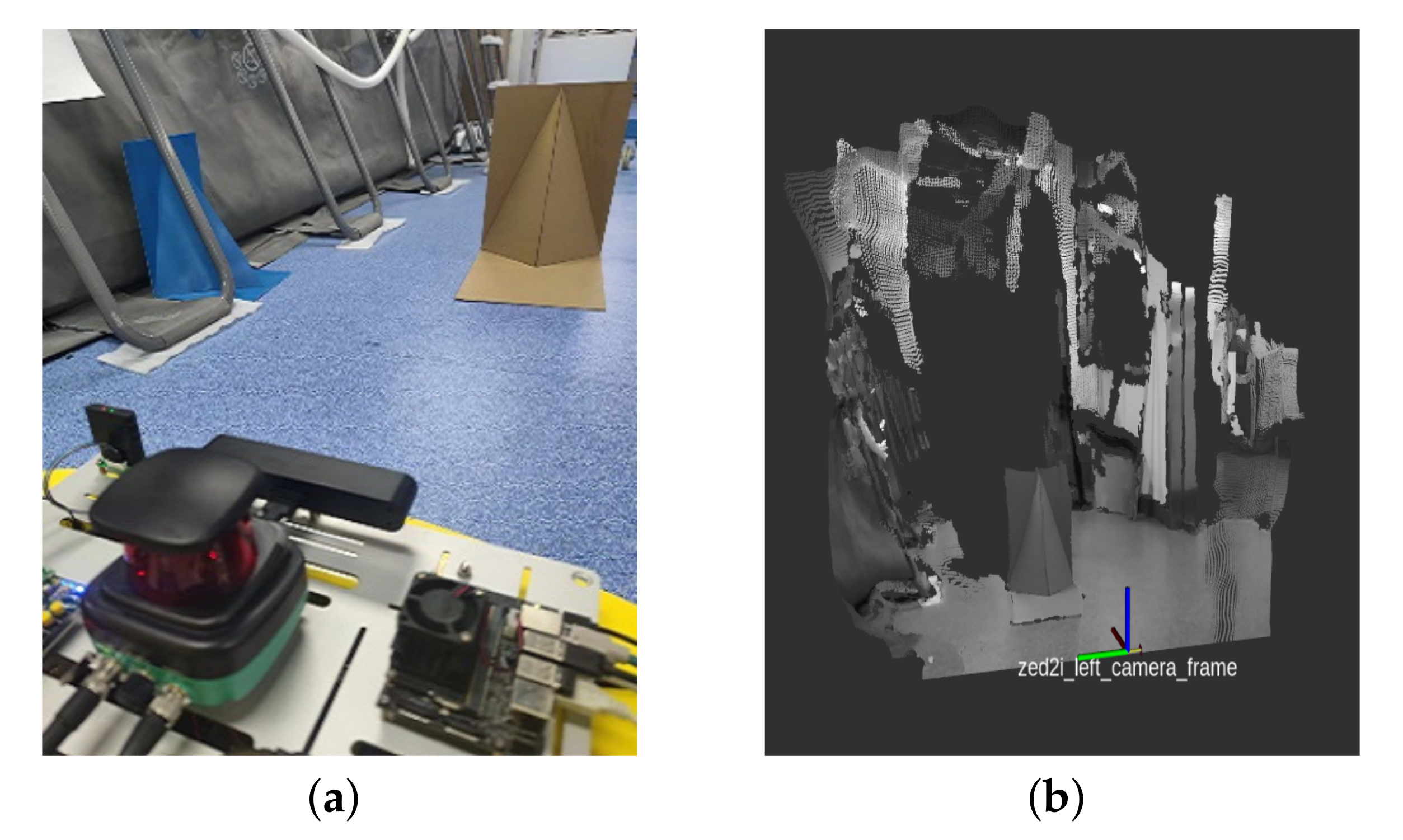

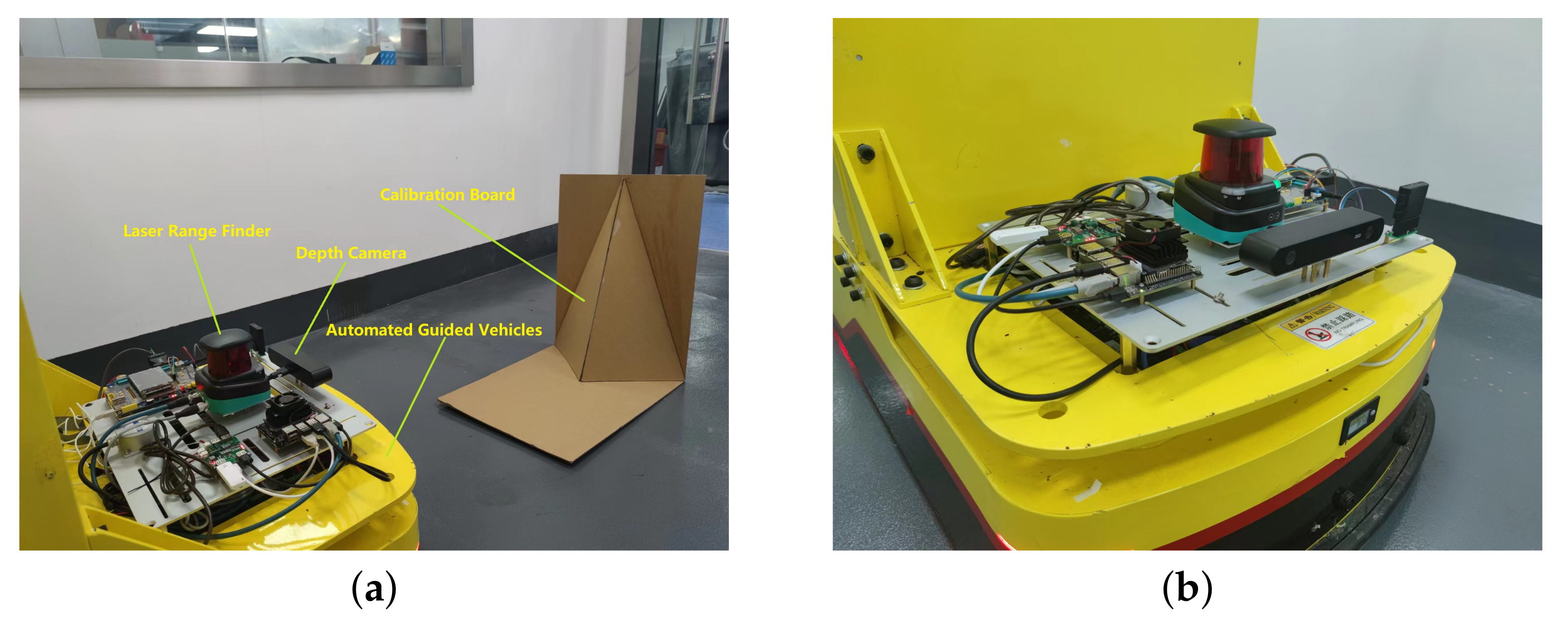

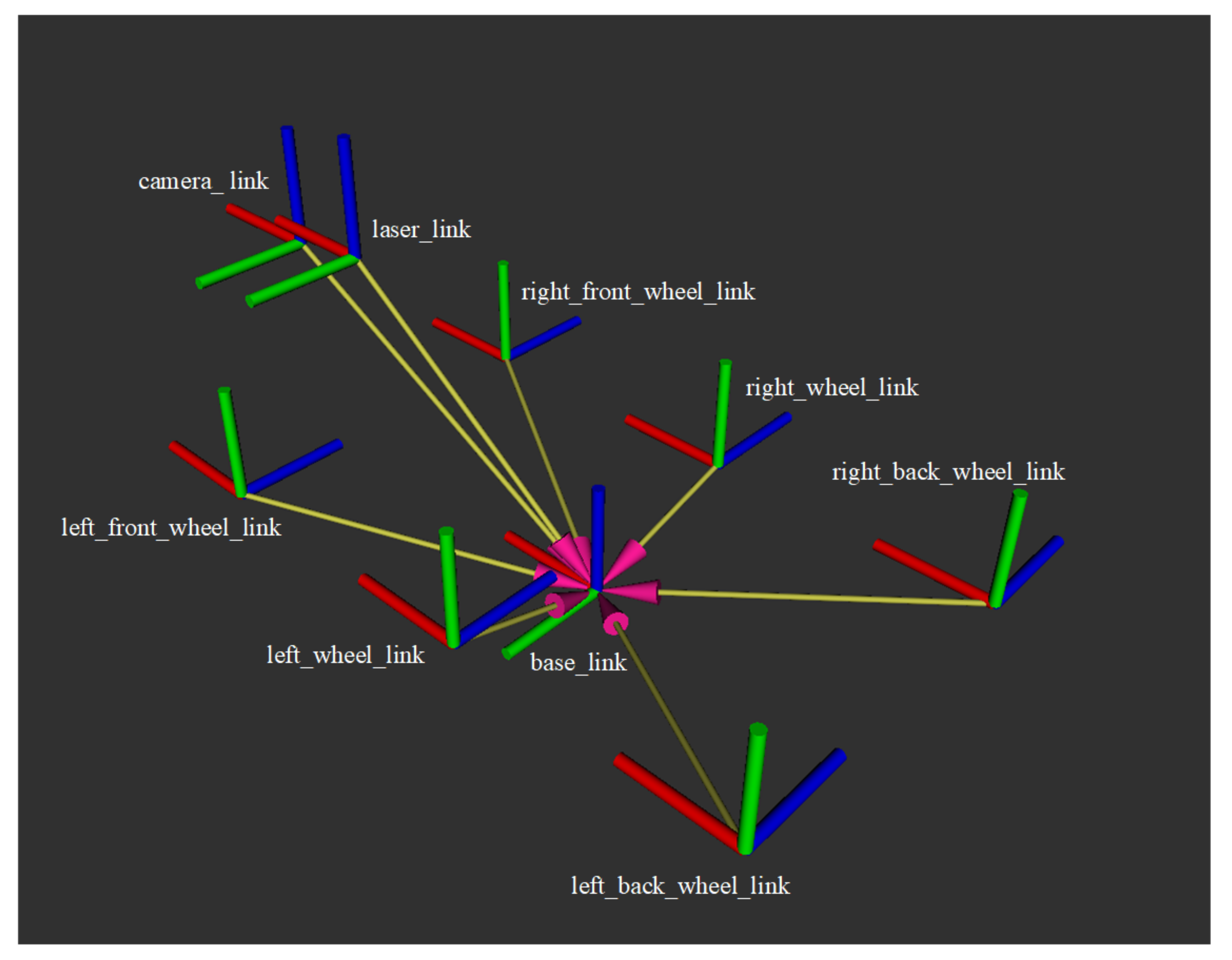

4.1. Experimental Equipment and Environment

4.2. Experimental Steps

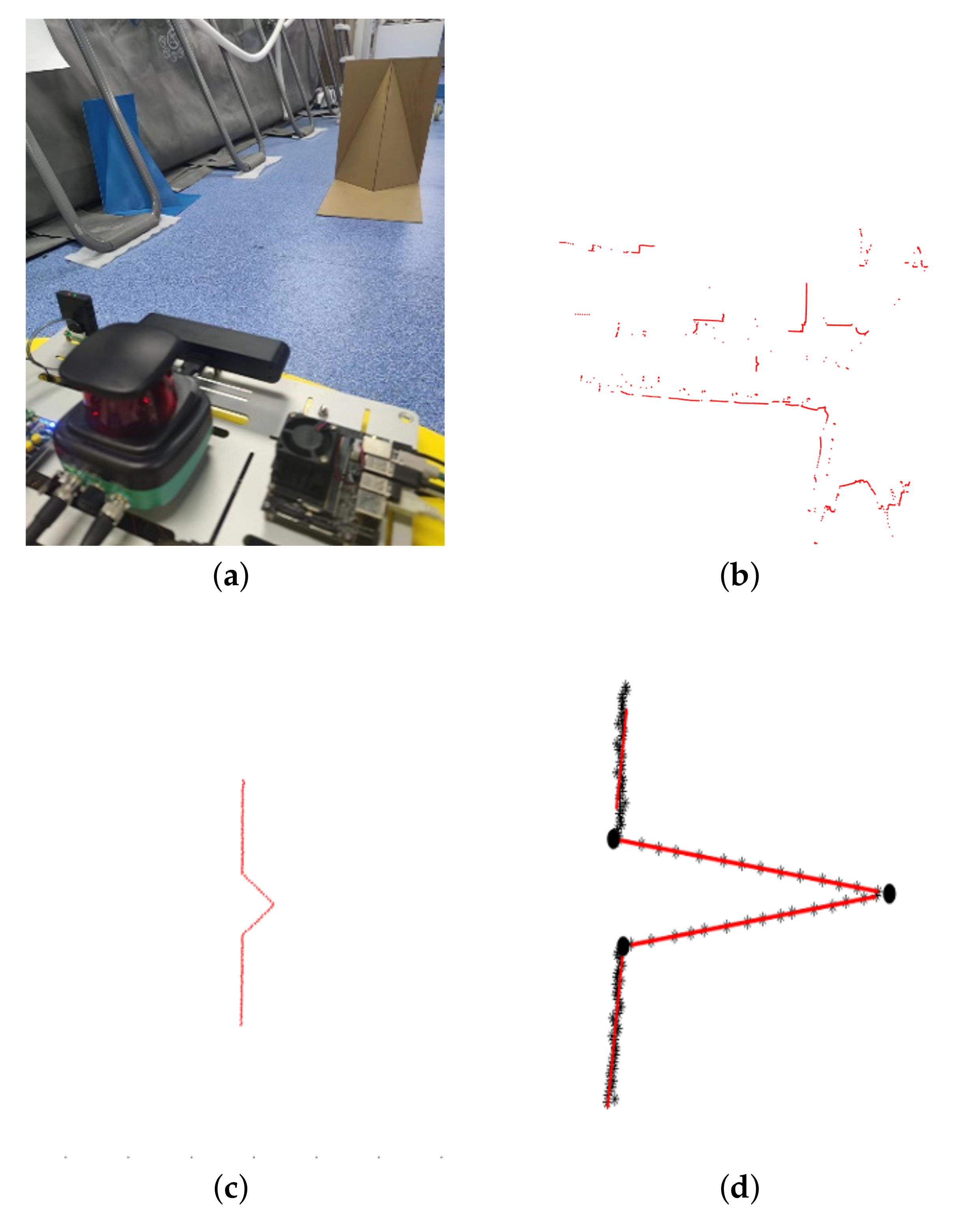

- Identify and extract the points that the 2D LRF collects on the calibration plate using line and corner feature detection algorithms; split these points into three segments; fit a straight line to each segment; and compute the intersection point of each pair of lines. The feature extraction process is shown in Figure 6.

- Transform the intersection points found in the previous step into the depth camera coordinate system using Equation (8).

- Identify and extract the point cloud that the depth camera collects on the calibration plate using edge and corner detection algorithms. Segment the three planes of the calibration plate; obtain each plane's equation by fitting the points on that plane; and compute the equation of the intersection line for each pair of the three planes. The feature extraction and fitting processes are shown in Figure 7.

- Using the point-on-line constraint, substitute the coordinates of each transformed point into the equation of the corresponding intersection line to obtain six equations.

- Move the AGV to a new observation position and repeat the data collection and feature extraction.

- Solve for the rotation matrix and translation vector of the coordinate transformation between the depth camera and the 2D LRF according to Equation (11).

- Repeat the experiment several times and average the calibration results.
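The LRF-side steps (fitting a line to each of the three scan segments, intersecting the lines pairwise, and mapping the corners into the camera frame) can be sketched with NumPy. This is an illustrative sketch under stated assumptions: the scan is already split into three segments, lines are fitted by total least squares (the paper's own fitting procedure may differ), and Equation (8) is assumed to be the standard rigid transform p_c = R p_l + t applied to the scan-plane point (x, y, 0). All function names are hypothetical.

```python
import numpy as np


def fit_line_2d(points):
    """Total-least-squares line fit: returns (centroid, unit direction)."""
    pts = np.asarray(points, dtype=float)
    centroid = pts.mean(axis=0)
    # The first right singular vector of the centred points is the line direction.
    _, _, vt = np.linalg.svd(pts - centroid)
    return centroid, vt[0]


def intersect_lines_2d(line_a, line_b):
    """Intersection of two 2D lines, each given as (point, direction)."""
    (p, u), (q, v) = line_a, line_b
    # Solve p + s*u = q + r*v for the scalars (s, r).
    A = np.column_stack([u, -v])
    s, _ = np.linalg.solve(A, q - p)
    return p + s * u


def lrf_corner_points(segments):
    """Fit one line per scan segment and intersect every pair of lines."""
    lines = [fit_line_2d(seg) for seg in segments]
    corners = []
    for i in range(len(lines)):
        for j in range(i + 1, len(lines)):
            corners.append(intersect_lines_2d(lines[i], lines[j]))
    return np.array(corners)


def lrf_to_camera(points_2d, R, t):
    """Lift scan-plane points (x, y) to (x, y, 0) and apply p_c = R p_l + t."""
    pts = np.column_stack([points_2d, np.zeros(len(points_2d))])
    return pts @ R.T + t
```

Exactly parallel lines make the 2x2 system singular, and `np.linalg.solve` then raises `numpy.linalg.LinAlgError`; nearly parallel lines give an ill-conditioned intersection, so a practical version would guard against that case.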

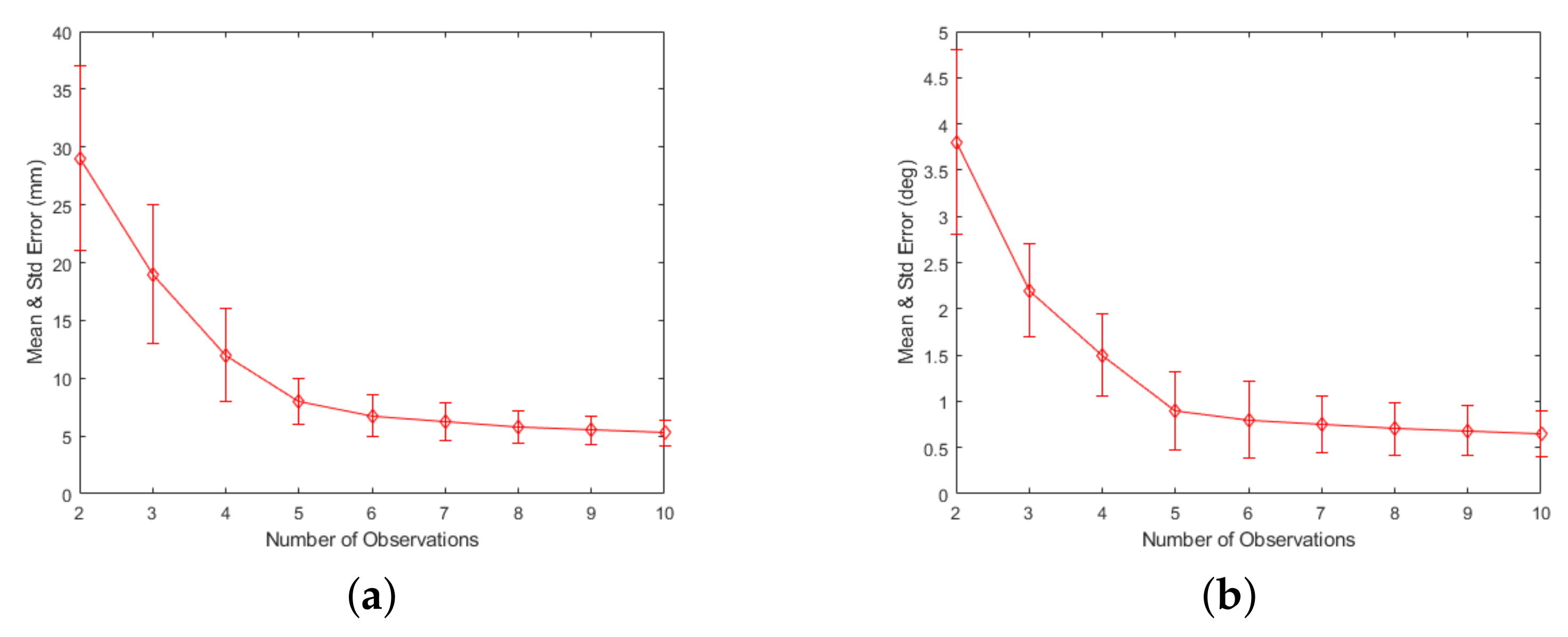
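For the depth-camera side, a comparable sketch fits each segmented plane with an SVD and intersects pairs of planes to obtain the lines used as constraints. It assumes the three planes have already been segmented; `fit_plane` and `plane_intersection_line` are illustrative names, and the SVD plane fit is a common choice rather than necessarily the one used in the paper.

```python
import numpy as np


def fit_plane(points):
    """Least-squares plane n . x = d through 3D points (n is a unit normal)."""
    pts = np.asarray(points, dtype=float)
    centroid = pts.mean(axis=0)
    # The right singular vector with the smallest singular value is the normal.
    _, _, vt = np.linalg.svd(pts - centroid)
    n = vt[-1]
    return n, float(n @ centroid)


def plane_intersection_line(plane_a, plane_b):
    """Line shared by two planes, returned as (point on line, unit direction)."""
    (n1, d1), (n2, d2) = plane_a, plane_b
    direction = np.cross(n1, n2)
    norm = np.linalg.norm(direction)
    if norm < 1e-12:
        raise ValueError("planes are parallel")
    direction = direction / norm
    # The third row selects the point on the line closest to the origin.
    A = np.vstack([n1, n2, direction])
    point = np.linalg.solve(A, np.array([d1, d2, 0.0]))
    return point, direction
```

Each pair of the three fitted planes gives one intersection line, so one board observation provides up to three lines to match against the three LRF corner points.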

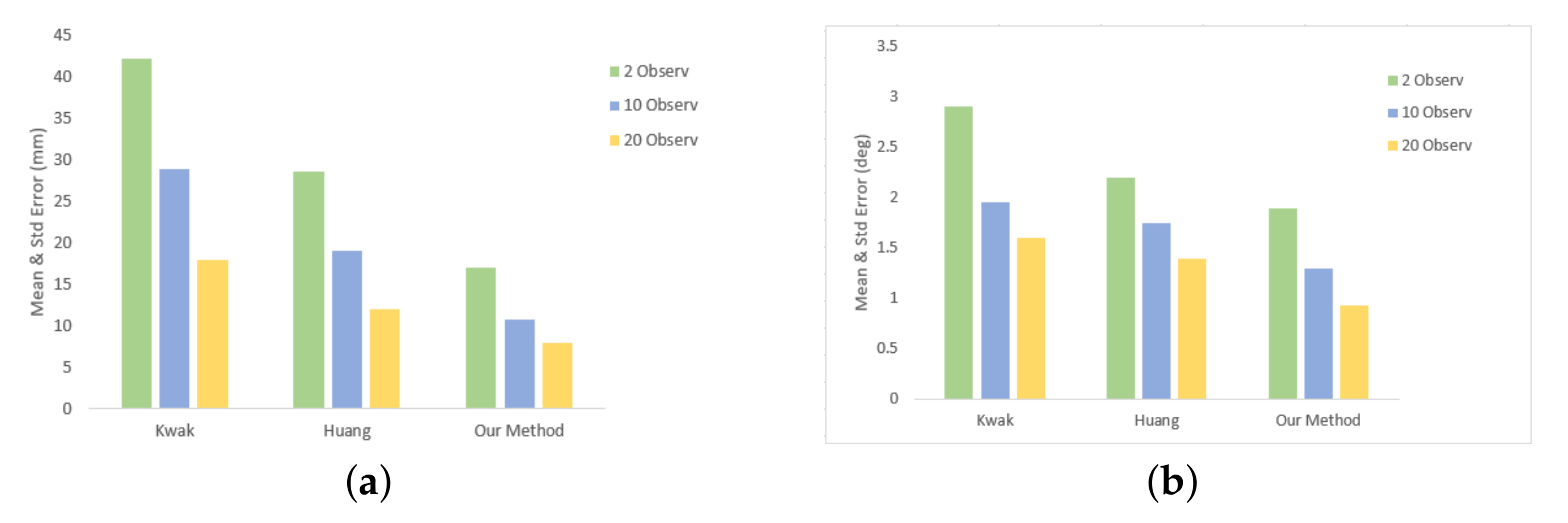
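Equation (11) is not reproduced in this text. As a hedged illustration of the point-on-line idea, the sketch below estimates R and t by minimising the distance from each transformed LRF corner to its matching camera-frame intersection line, using Gauss-Newton with a forward-difference Jacobian and an axis-angle rotation vector. This generic nonlinear least-squares formulation is swapped in for illustration and is not the paper's solution. Each corner constrains two directions perpendicular to its line, which is consistent with three corners per observation giving six equations, and two observations giving twelve equations for the six unknowns. Function names are hypothetical.

```python
import numpy as np


def rotvec_to_matrix(w):
    """Rodrigues' formula: axis-angle vector -> 3x3 rotation matrix."""
    theta = np.linalg.norm(w)
    if theta < 1e-12:
        return np.eye(3)
    k = w / theta
    K = np.array([[0.0, -k[2], k[1]],
                  [k[2], 0.0, -k[0]],
                  [-k[1], k[0], 0.0]])
    return np.eye(3) + np.sin(theta) * K + (1.0 - np.cos(theta)) * (K @ K)


def point_line_residuals(x, points, line_points, line_dirs):
    """Offsets of R p + t from each line, measured perpendicular to that line."""
    R, t = rotvec_to_matrix(x[:3]), x[3:]
    res = []
    for p, c, d in zip(points, line_points, line_dirs):
        v = R @ p + t - c
        res.append(v - (v @ d) * d)  # remove the component along the unit direction d
    return np.concatenate(res)


def solve_extrinsics(points, line_points, line_dirs, x0=None, iters=50, tol=1e-12):
    """Gauss-Newton estimate of (R, t) from point-on-line constraints.

    points: LRF corners lifted to 3D, shape (N, 3).
    line_points, line_dirs: a point and a unit direction of each matching
    camera-frame intersection line, shape (N, 3) each.
    """
    x = np.zeros(6) if x0 is None else np.asarray(x0, dtype=float).copy()
    eps = 1e-7
    for _ in range(iters):
        r = point_line_residuals(x, points, line_points, line_dirs)
        # Forward-difference Jacobian w.r.t. (rotation vector, translation).
        J = np.empty((r.size, 6))
        for k in range(6):
            dx = np.zeros(6)
            dx[k] = eps
            J[:, k] = (point_line_residuals(x + dx, points, line_points, line_dirs) - r) / eps
        step, *_ = np.linalg.lstsq(J, -r, rcond=None)
        x = x + step
        if np.linalg.norm(step) < tol:
            break
    return rotvec_to_matrix(x[:3]), x[3:]
```

The residual keeps the full 3-vector perpendicular to each line; it has only two independent components, so this does not change the minimiser. Starting from the identity assumes the sensors are roughly aligned; a larger misalignment would call for a better initial guess.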

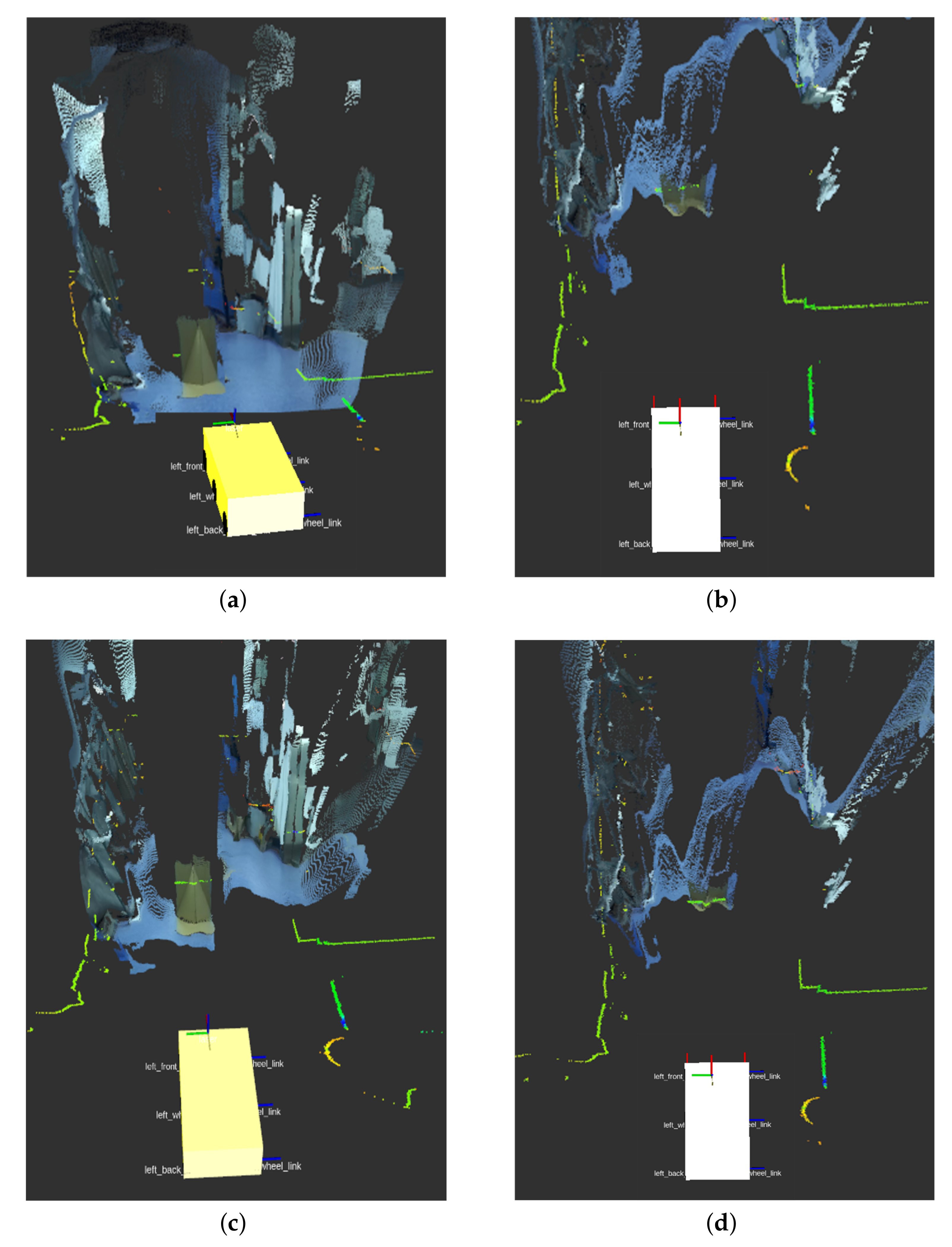
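The final experimental step averages the results of repeated runs but does not state how rotations are averaged. One common option, assumed here, is to average translations arithmetically and take the chordal mean of the rotation matrices (the element-wise mean projected back onto SO(3) with an SVD). For the small spread expected between repeated calibrations, this is close to averaging the yaw, pitch and roll angles directly. `average_extrinsics` is a hypothetical name.

```python
import numpy as np


def average_extrinsics(rotations, translations):
    """Average repeated (R, t) estimates.

    Translations: arithmetic mean. Rotations: chordal L2 mean, i.e. the
    element-wise mean matrix projected back onto SO(3) with an SVD.
    """
    t_mean = np.mean(np.asarray(translations, dtype=float), axis=0)
    M = np.mean(np.asarray(rotations, dtype=float), axis=0)
    U, _, Vt = np.linalg.svd(M)
    # Flip the last axis if needed so the result is a proper rotation (det = +1).
    D = np.diag([1.0, 1.0, np.sign(np.linalg.det(U @ Vt))])
    return U @ D @ Vt, t_mean
```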

4.3. Experimental Results and Analysis

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| LRF | Laser Range Finder |
| 2D | Two-Dimensional |
| LSQ | Least Squares |
| P3P | Perspective Three-Point |
| AGV | Automated Guided Vehicle |

| Range/m | Scan Rate/Hz | Angular Resolution/° | Accuracy/mm | Scan Angle/° |
|---|---|---|---|---|
| 0.1–30 | 10–50 | 0.042 | ±25 | 360 |

| Depth Range/m | Depth FPS/Hz | Resolution | Aperture | Field of View/° |
|---|---|---|---|---|
| 0.2–20 | 15–100 | 3840 × 1080 | f/1.8 | 110H × 70V × 120D |

| Pose | X/mm | Y/mm | Z/mm | Yaw/° | Pitch/° | Roll/° |
|---|---|---|---|---|---|---|
|  | 0 | 120 | 0 | 0 | 0 | 0 |
|  | 1.27 | 125.75 | 3.13 | 0 | 0 | 0.11 |
|  | 128 | 60 | 10 | 0 | 0 | 0 |
|  | 162.35 | 72.51 | 32.58 | 0 | 0 | 0.57 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Zhou, W.; Chen, H.; Jin, Z.; Zuo, Q.; Xu, Y.; He, K. A Novel and Simplified Extrinsic Calibration of 2D Laser Rangefinder and Depth Camera. Machines 2022, 10, 646. https://doi.org/10.3390/machines10080646