Human–Robot Interaction: A Review and Analysis on Variable Admittance Control, Safety, and Perspectives

Abstract

1. Introduction

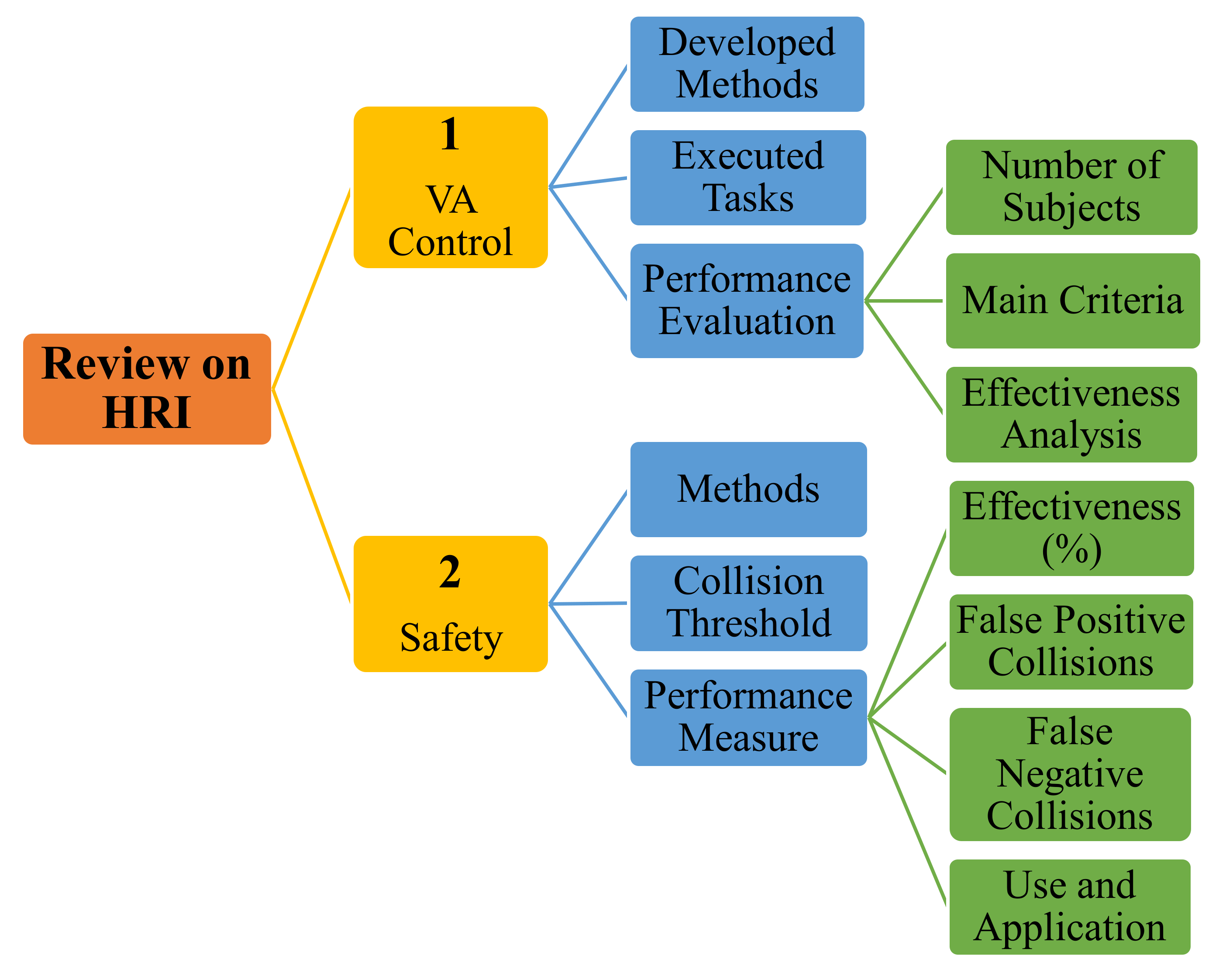

- In the first part, the VA controller is presented, in which the virtual damping, the virtual inertia, or both are adjusted. The developed methods, the executed co-manipulation tasks and applications, the evaluation criteria, and the achieved performance are compared and investigated. The main aim of this part is to give insight into the role of VA control in improving the performance of HRI. In addition, it gives researchers guidelines for designing and evaluating their own VA control systems.

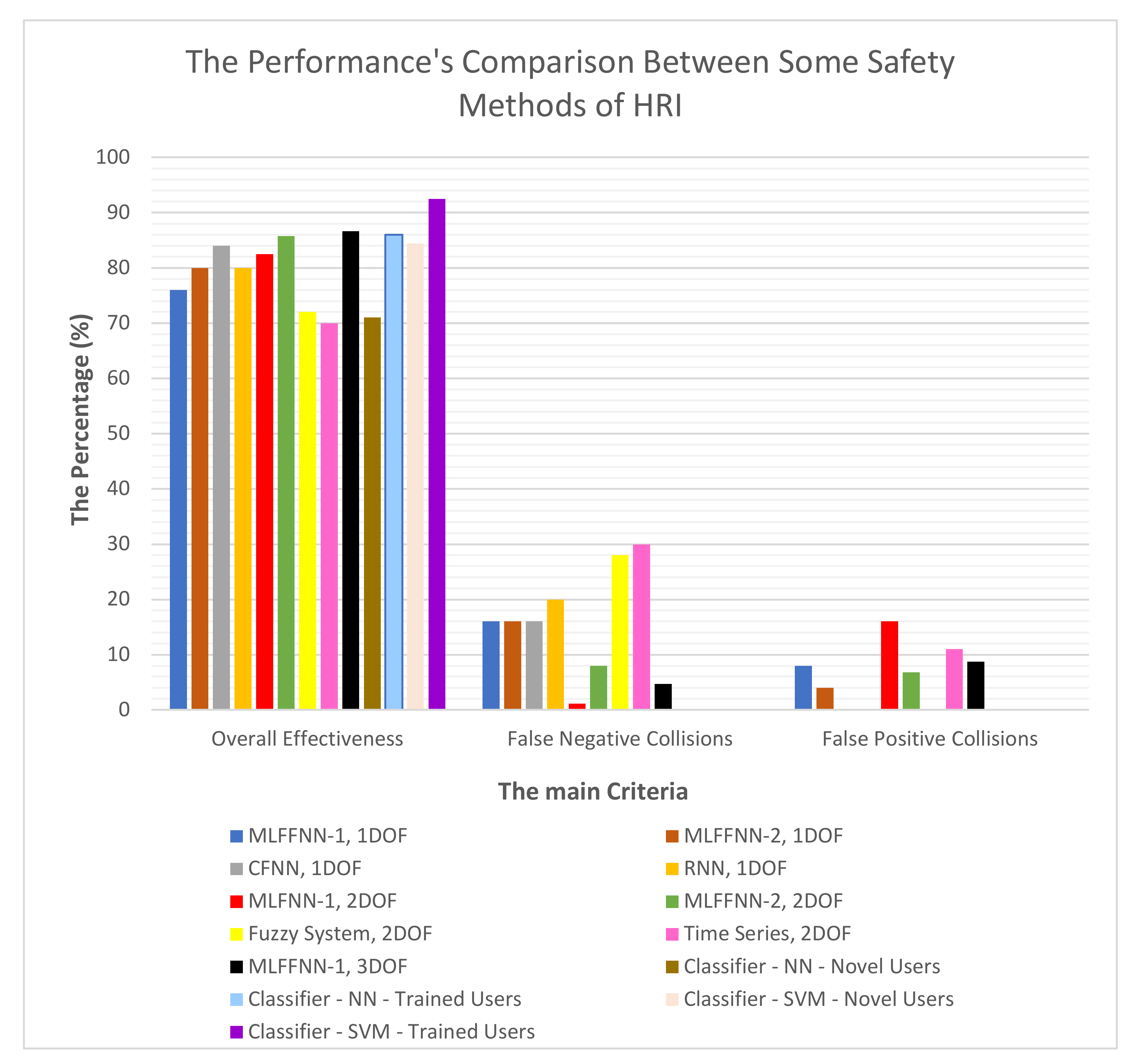

- In the second part, the safety of HRI is reviewed. The model-based and data-based methods for collision detection, the determination of the collision threshold, and the effectiveness (%) of the methods are analyzed and compared. The main purpose of this part is to reveal the effectiveness, the performance measure (%), and the application of each method. This could support future improvements in the performance measure of the developed safety methods.
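
To make the terms "collision threshold" and "effectiveness (%)" concrete, the following is a minimal sketch and not the procedure of any reviewed method. It assumes a scalar residual signal (for example, measured minus model-predicted joint torque), a threshold taken as the largest collision-free residual scaled by a hypothetical safety margin, and effectiveness defined as the percentage of correctly classified samples. All function names and numbers are illustrative.

```python
from typing import List, Sequence


def threshold_from_free_motion(residuals: Sequence[float], margin: float = 1.1) -> float:
    """Assumed rule: largest collision-free |residual| times a safety margin."""
    return margin * max(abs(r) for r in residuals)


def detect_collisions(residuals: Sequence[float], threshold: float) -> List[bool]:
    """Flag a sample as a collision when |residual| exceeds the threshold."""
    return [abs(r) > threshold for r in residuals]


def effectiveness_percent(predicted: Sequence[bool], actual: Sequence[bool]) -> float:
    """Assumed measure: share of samples classified correctly, in percent."""
    correct = sum(p == a for p, a in zip(predicted, actual))
    return 100.0 * correct / len(actual)


# Illustrative data (not taken from any experiment).
free_motion = [0.10, -0.20, 0.15, -0.05]          # residuals without contact
threshold = threshold_from_free_motion(free_motion)

test_residuals = [0.05, 0.30, -0.50, 0.10, 0.21]  # residuals to classify
true_labels = [False, True, True, False, True]    # hand-assigned ground truth

flags = detect_collisions(test_residuals, threshold)
score = effectiveness_percent(flags, true_labels)
```

In this toy data the last sample is a light contact that stays below the threshold, so it is missed. This illustrates the trade-off between false alarms (threshold too low) and missed collisions (threshold too high).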

2. Control Methods for HRI

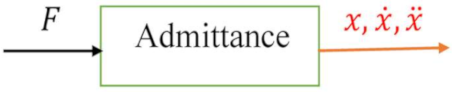

2.1. Compliance Control (Impedance/Admittance)

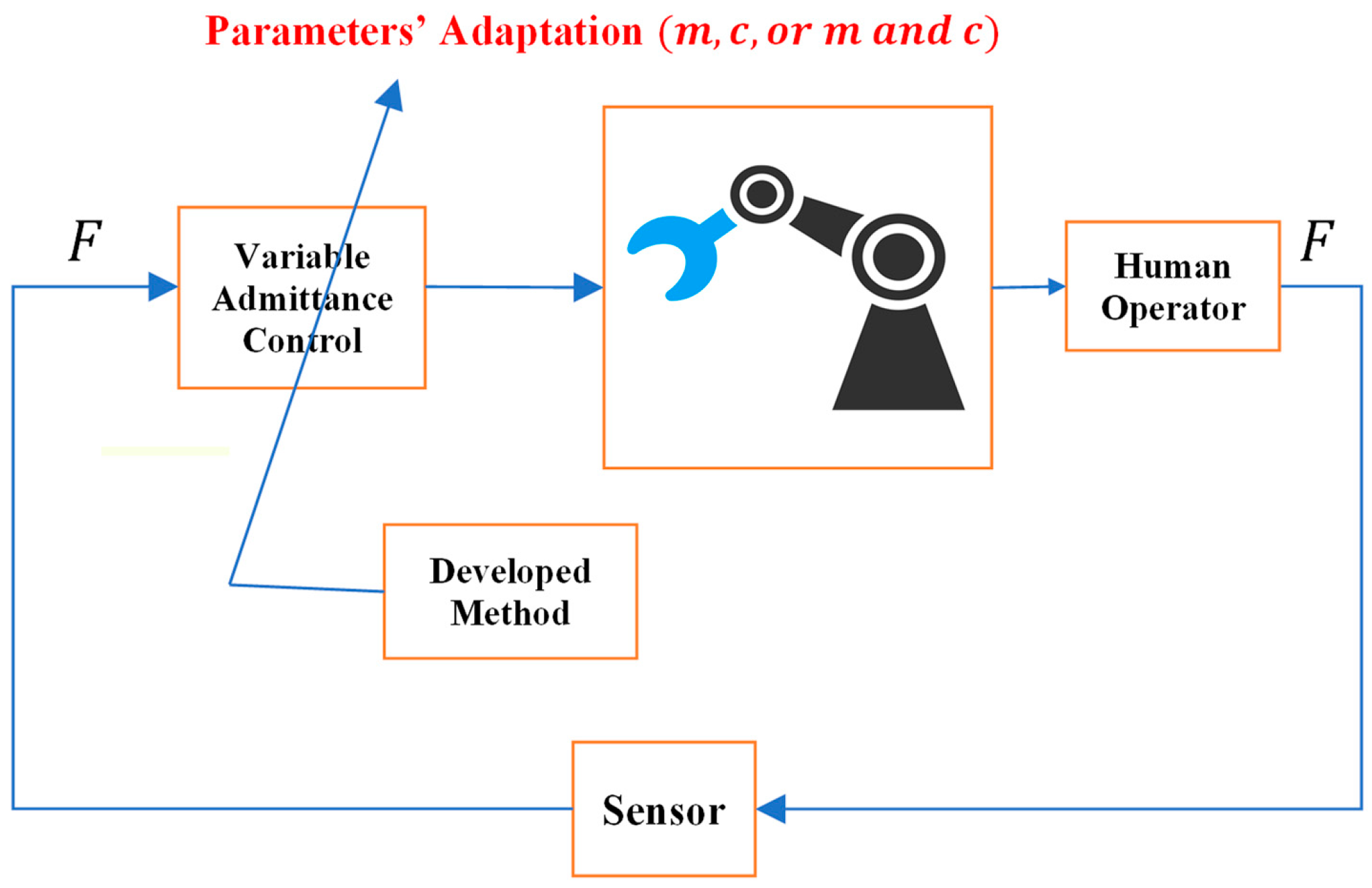

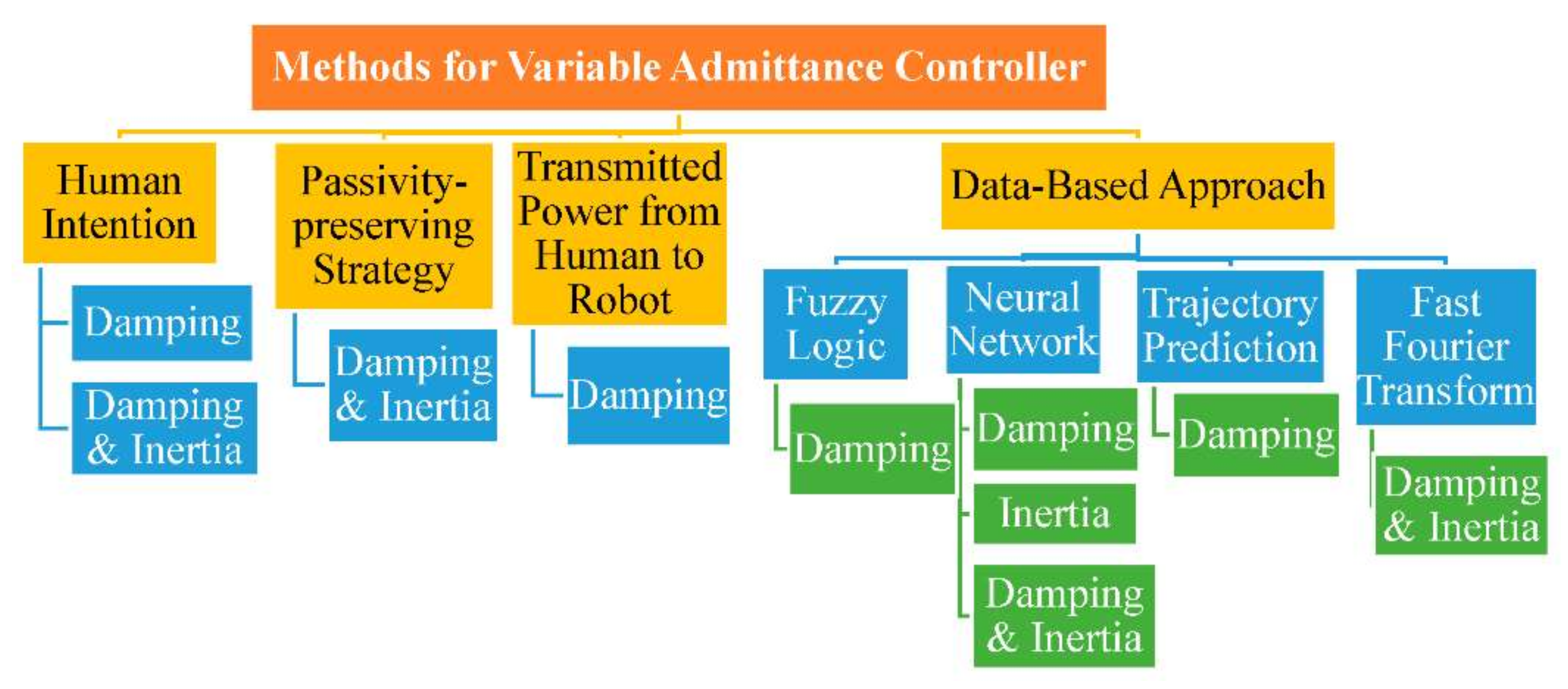

2.2. Methods for VA Control System in Co-Manipulation Tasks

2.3. Accomplished Co-Manipulation Tasks with VA Control

- (1) The collaborative co-manipulation tasks, in which the human effort and the oscillations should be reduced. These tasks are the main interest of this paper and are discussed in this subsection.

- (2) The rehabilitation tasks, in which the robot should either apply a high force to assist the human or leave the patient to act alone. These tasks are outside the scope of this paper.

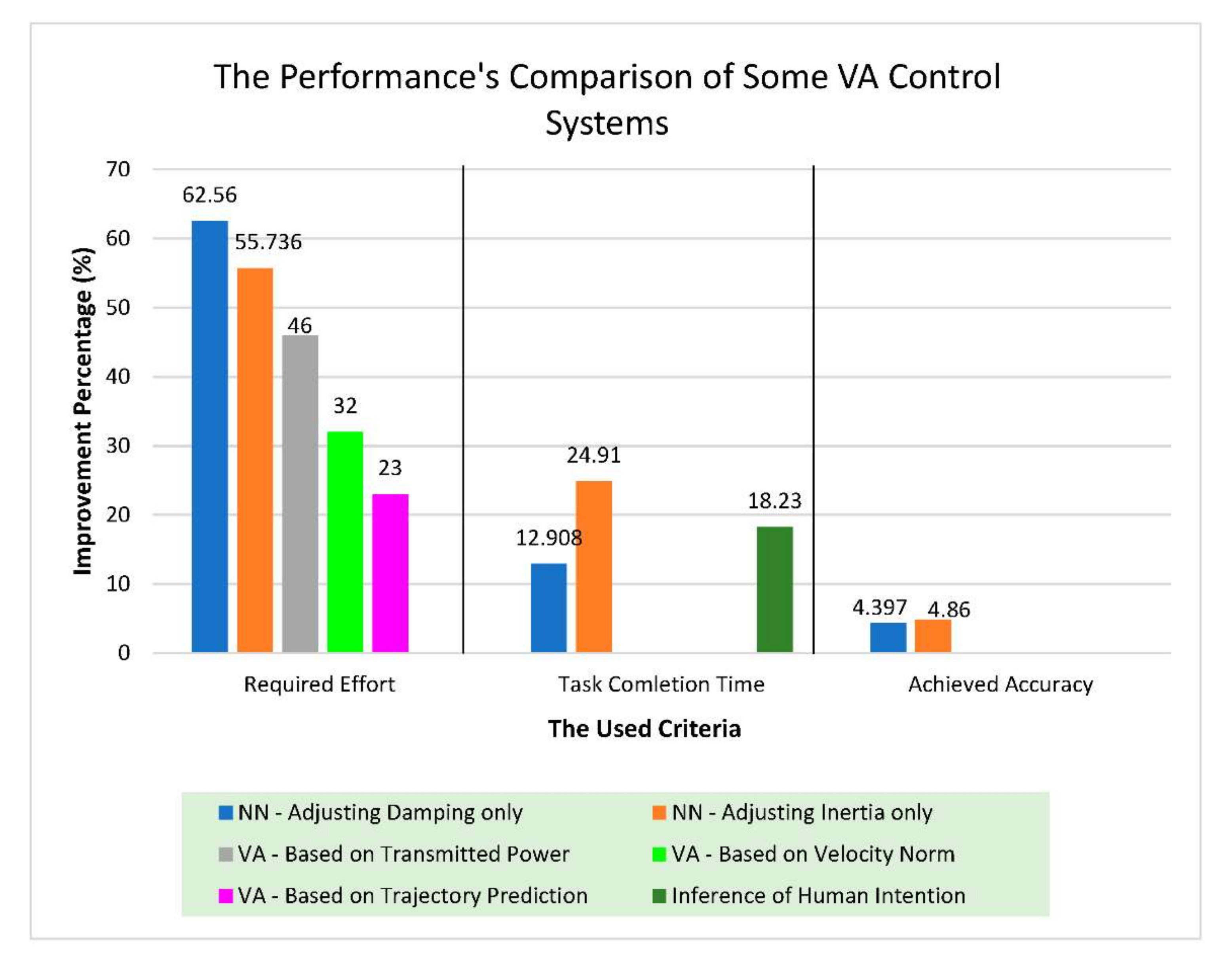

2.4. Performance Comparison of VA Controllers in Co-Manipulation Tasks

- (1) The required effort for performing the task.

- (2) The needed time for executing the task.

- (3) The oscillations and the number of overshoots.

- (4) The achieved accuracy.

- (5) The accumulated jerk.

- (6) The opposition of the robot to human forces.
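
Several of these criteria can be computed from logged data. The sketch below is one possible set of definitions under stated assumptions, not the definitions used by the compared works: uniformly sampled time `t`, end-effector position `x` along one axis, human force `f`, and a known target position. Effort is taken as the time integral of the absolute force, overshoots are approximated by the number of times the position crosses the target, accuracy by the final position error, and accumulated jerk by the integral of the absolute third finite difference of the position. Criterion (6) is not covered.

```python
from typing import Dict, Sequence


def task_metrics(t: Sequence[float], x: Sequence[float],
                 f: Sequence[float], target: float) -> Dict[str, float]:
    """Compute illustrative performance metrics from uniformly sampled logs."""
    dt = t[1] - t[0]  # assumes a constant sampling period

    # (1) Effort: rectangle-rule integral of |force| over time.
    effort = sum(abs(fi) for fi in f) * dt

    # (2) Task time: duration of the recording.
    duration = t[-1] - t[0]

    # (3) Overshoot proxy: sign changes of the position error (x - target),
    # ignoring samples that sit exactly on the target.
    errors = [xi - target for xi in x if xi != target]
    crossings = sum(1 for a, b in zip(errors, errors[1:]) if a * b < 0)

    # (4) Accuracy: absolute position error at the end of the task.
    final_error = abs(x[-1] - target)

    # (5) Accumulated jerk: integral of |third finite difference| of position.
    jerk = [(x[i + 3] - 3 * x[i + 2] + 3 * x[i + 1] - x[i]) / dt ** 3
            for i in range(len(x) - 3)]
    accumulated_jerk = sum(abs(j) for j in jerk) * dt

    return {
        "effort": effort,
        "duration": duration,
        "target_crossings": crossings,
        "final_error": final_error,
        "accumulated_jerk": accumulated_jerk,
    }


# Illustrative samples (not experimental data).
example = task_metrics(
    t=[0.0, 1.0, 2.0, 3.0, 4.0],
    x=[0.0, 1.0, 3.0, 1.5, 2.0],
    f=[1.0, 2.0, -1.0, 0.5, 0.0],
    target=2.0,
)
```

Using the same definitions for every controller under comparison matters more than the particular choice of definitions, since the criteria are only meaningful relative to each other.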

- (1) The VA control system based on inference of human intention [37],

- (2) The VA control system depending on the power transmitted by the human to the robot [40],

- (3) The VA control system based on the velocity norm [52],

- (4) The neural network-based system to adjust the damping only [49],

- (5) The neural network-based system to adjust the inertia only [50], and

- (6) The VA control system depending on the trajectory prediction of the motion of a human hand [42].
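
The common idea behind these systems, adjusting the virtual damping or inertia online, can be illustrated with a one-axis admittance law m·dv/dt + c·v = F. The sketch below is an assumption-laden toy and does not reproduce the adaptation rule of any cited work: the damping rule `variable_damping` (damping lowered linearly with speed, bounded between hypothetical limits `c_min` and `c_max`), the explicit Euler integration, and all parameter values are chosen only for illustration.

```python
from typing import Callable, Tuple


def variable_damping(v: float, c_min: float = 10.0,
                     c_max: float = 50.0, gain: float = 100.0) -> float:
    """Hypothetical rule: lower the virtual damping as the speed grows."""
    return max(c_min, c_max - gain * abs(v))


def simulate(force: float, steps: int, dt: float = 0.001, m: float = 2.0,
             damping: Callable[[float], float] = variable_damping
             ) -> Tuple[float, float]:
    """Integrate m*dv/dt + c(v)*v = force with explicit Euler.

    Returns the final position and velocity of the admittance reference.
    """
    x, v = 0.0, 0.0
    for _ in range(steps):
        c = damping(v)               # virtual damping for the current speed
        a = (force - c * v) / m      # admittance law solved for acceleration
        v += a * dt
        x += v * dt
    return x, v


# Constant 5 N push for 2 s: fixed high damping versus the variable rule.
x_fixed, v_fixed = simulate(5.0, 2000, damping=lambda v: 50.0)
x_var, v_var = simulate(5.0, 2000)
```

With the same applied force, the variable rule settles at a higher velocity and covers more distance than the fixed high damping, which is the sense in which lowering the damping during motion reduces the required human effort. High damping is still available at low speed, where it helps precise positioning.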

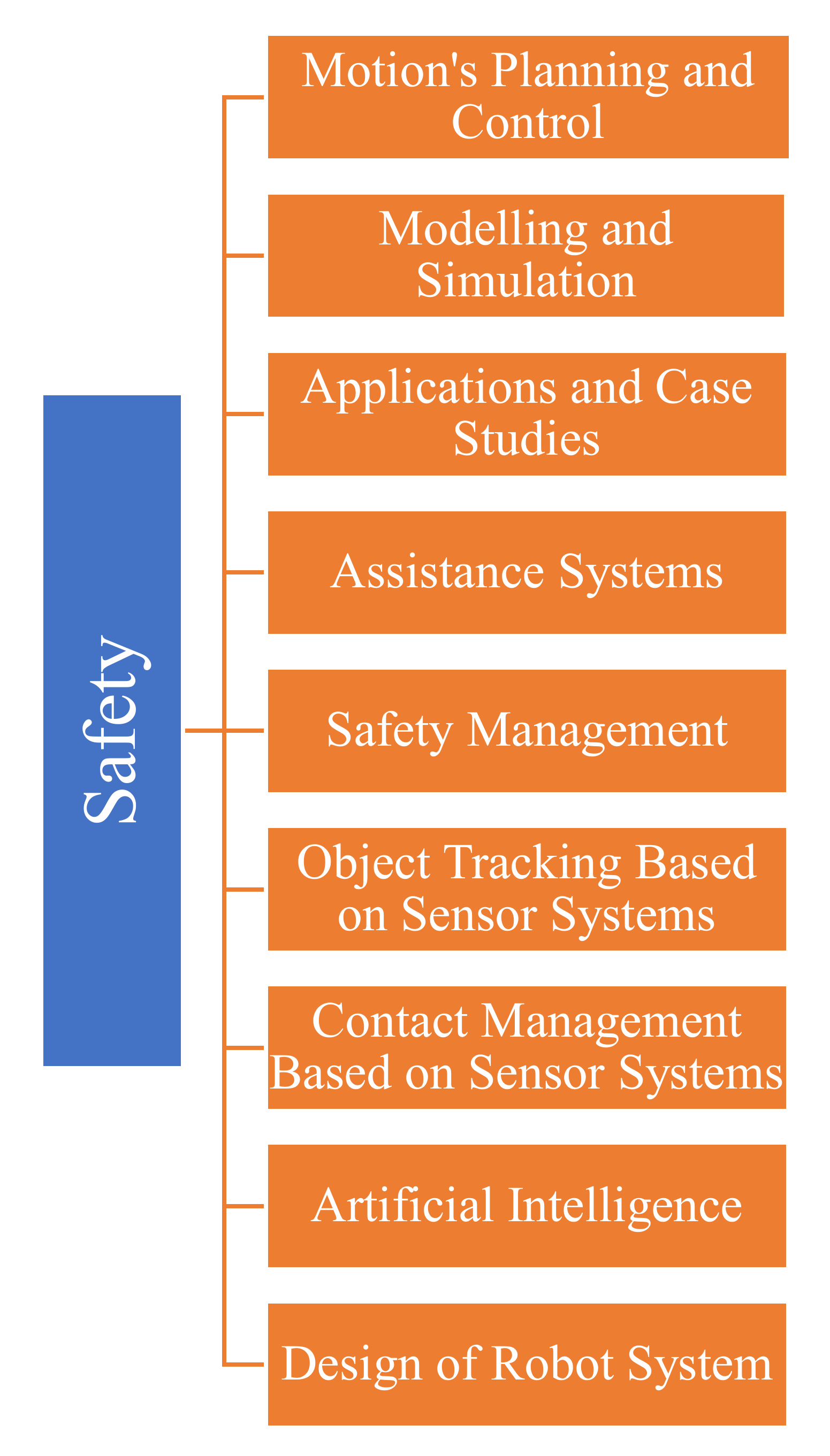

3. Safety of HRI

3.1. Collision Detection Techniques

3.1.1. Model-Based Methods

3.1.2. Data-Based Methods

3.2. Collision Threshold

3.3. Performance Measure and Effectiveness Comparison of the Safety Methods

4. Perspectives

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Sharkawy, A.-N. Human-Robot Interaction: Applications. In Proceedings of the 1st IFSA Winter Conference on Automation, Robotics & Communications for Industry 4.0 (ARCI’ 2021); Yurish, S.Y., Ed.; International Frequency Sensor Association (IFSA) Publishing, S. L.: Chamonix-Mont-Blanc, France, 2021; pp. 98–103.

2. Sharkawy, A.-N. A Survey on Applications of Human-Robot Interaction. Sens. Transducers 2021, 251, 19–27.

3. Kruger, J.; Lien, T.K.; Verl, A. Cooperation of human and machines in assembly lines. CIRP Ann.-Manuf. Technol. 2009, 58, 628–646.

4. Liu, C.; Tomizuka, M. Algorithmic Safety Measures for Intelligent Industrial Co-Robots. In Proceedings of the IEEE International Conference on Robotics and Automation 2016, Stockholm, Sweden, 16–21 May 2016; pp. 3095–3102.

5. Sharkawy, A.-N.; Papakonstantinou, C.; Papakostopoulos, V.; Moulianitis, V.C.; Aspragathos, N. Task Location for High Performance Human-Robot Collaboration. J. Intell. Robot. Syst. Theory Appl. 2020, 100, 183–202.

6. Sharkawy, A.-N. Intelligent Control and Impedance Adjustment for Efficient Human-Robot Cooperation. Ph.D. Thesis, University of Patras, Patras, Greece, 2020.

7. Thomas, C.; Matthias, B.; Kuhlenkötter, B. Human-Robot Collaboration – New Applications in Industrial Robotics. In Proceedings of the International Conference in Competitive Manufacturing 2016 (COMA’16), Stellenbosch University, Stellenbosch, South Africa, 27–29 January 2016; pp. 1–7.

8. Billard, A.; Robins, B.; Nadel, J.; Dautenhahn, K. Building Robota, a Mini-Humanoid Robot for the Rehabilitation of Children With Autism. Assist. Technol. 2007, 19, 37–49.

9. Robins, B.; Dickerson, P.; Stribling, P.; Dautenhahn, K. Robot-mediated joint attention in children with autism: A case study in robot-human interaction. Interact. Stud. 2004, 5, 161–198.

10. Werry, I.; Dautenhahn, K.; Ogden, B.; Harwin, W. Can Social Interaction Skills Be Taught by a Social Agent? The Role of a Robotic Mediator in Autism Therapy. In Cognitive Technology: Instruments of Mind. CT 2001. Lecture Notes in Computer Science; Beynon, M., Nehaniv, C.L., Dautenhahn, K., Eds.; Springer: Berlin/Heidelberg, Germany, 2001; ISBN 978-3-540-44617-0.

11. Lum, P.S.; Burgar, C.G.; Shor, P.C.; Majmundar, M.; Van der Loos, M. Robot-Assisted Movement Training Compared With Conventional Therapy Techniques for the Rehabilitation of Upper-Limb Motor Function After Stroke. Arch. Phys. Med. Rehabil. 2002, 83, 952–959.

12. COVID-19 Test Robot as a Tireless Colleague in the Fight against the Virus. Available online: https://www.kuka.com/en-de/press/news/2020/06/robot-helps-with-coronavirus-tests (accessed on 24 June 2020).

13. Vasconez, J.P.; Kantor, G.A.; Auat Cheein, F.A. Human-robot interaction in agriculture: A survey and current challenges. Biosyst. Eng. 2019, 179, 35–48.

14. Baxter, P.; Cielniak, G.; Hanheide, M.; From, P. Safe Human-Robot Interaction in Agriculture. In Proceedings of the HRI’18 Companion, Session: Late-Breaking Reports, Chicago, IL, USA, 5–8 March 2018.

15. Smart Robot Installed Inside Greenhouse Care. Available online: https://www.shutterstock.com/image-photo/smart-robot-installed-inside-greenhouse-care-765510412 (accessed on 17 June 2022).

16. Lyon, N. Robot Turns Its Eye to Weed Recognition at Narrabri. Available online: https://www.graincentral.com/ag-tech/drones-and-automated-vehicles/robot-turns-its-eye-to-weed-recognition-at-narrabri/ (accessed on 19 October 2018).

17. Bergerman, M.; Maeta, S.M.; Zhang, J.; Freitas, G.M.; Hamner, B.; Singh, S.; Kantor, G. Robot farmers: Autonomous orchard vehicles help tree fruit production. IEEE Robot. Autom. Mag. 2015, 22, 54–63.

18. Freitas, G.; Zhang, J.; Hamner, B.; Bergerman, M.; Kantor, G. A low-cost, practical localization system for agricultural vehicles. In Lecture Notes in Computer Science (Including Subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics); Springer: Berlin/Heidelberg, Germany, 2012; Volume 7508, pp. 365–375. ISBN 9783642335020.

19. Cooper, M.; Keating, D.; Harwin, W.; Dautenhahn, K. Robots in the classroom – Tools for accessible education. In Assistive Technology on the Threshold of the New Millennium, Assistive Technology Research Series; Buhler, C., Knops, H., Eds.; IOS Press: Düsseldorf, Germany, 1999; pp. 448–452.

20. Han, J.; Jo, M.; Park, S.; Kim, S. The Educational Use of Home Robots for Children. In Proceedings of the ROMAN 2005. IEEE International Workshop on Robot and Human Interactive Communication, Nashville, TN, USA, 13–15 August 2005; pp. 378–383.

21. How Robotics Is Changing the Mining Industry. Available online: https://eos.org/features/underground-robots-how-robotics-is-changing-the-mining-industry (accessed on 13 May 2019).

22. Bandoim, L. Grocery Retail Lessons from the Coronavirus Outbreak for the Robotic Future. Available online: https://www.forbes.com/sites/lanabandoim/2020/04/14/grocery-retail-lessons-from-the-coronavirus-outbreak-for-the-robotic-future/?sh=5c0dfe1b15d1 (accessed on 14 April 2020).

23. Dautenhahn, K. Methodology & Themes of Human-Robot Interaction: A Growing Research Field. Int. J. Adv. Robot. Syst. 2007, 4, 103–108.

24. Moniz, A.B.; Krings, B. Robots Working with Humans or Humans Working with Robots? Searching for Social Dimensions in New Human-Robot Interaction in Industry. Societies 2016, 6, 23.

25. De Santis, A.; Siciliano, B.; De Luca, A.; Bicchi, A. An atlas of physical human-robot interaction. Mech. Mach. Theory 2008, 43, 253–270.

26. Khatib, O.; Yokoi, K.; Brock, O.; Chang, K.; Casal, A. Robots in Human Environments: Basic Autonomous Capabilities. Int. J. Rob. Res. 1999, 18, 684–696.

27. Song, P.; Yu, Y.; Zhang, X. A Tutorial Survey and Comparison of Impedance Control on Robotic Manipulation. Robotica 2019, 37, 801–836.

28. Hogan, N. Impedance control: An approach to manipulation: Part I theory; Part II implementation; Part III applications. J. Dyn. Syst. Meas. Control 1985, 107, 1–24.

29. Sam Ge, S.; Li, Y.; Wang, C. Impedance adaptation for optimal robot-environment interaction. Int. J. Control 2014, 87, 249–263.

30. Ott, C.; Mukherjee, R.; Nakamura, Y. Unified impedance and admittance control. In Proceedings of the 2010 IEEE International Conference on Robotics and Automation, Anchorage, AK, USA, 3–7 May 2010; pp. 554–561.

31. Song, P.; Yu, Y.; Zhang, X. Impedance control of robots: An overview. In Proceedings of the 2017 2nd International Conference on Cybernetics, Robotics and Control (CRC), Chengdu, China, 21–23 July 2017; pp. 51–55.

32. Dimeas, F. Development of Control Systems for Human-Robot Collaboration in Object Co-Manipulation. Ph.D. Thesis, University of Patras, Patras, Greece, 2017.

33. Newman, W.S.; Zhang, Y. Stable interaction control and coulomb friction compensation using natural admittance control. J. Robot. Syst. 1994, 1, 3–11.

34. Surdilovic, D. Contact Stability Issues in Position Based Impedance Control: Theory and Experiments. In Proceedings of the 1996 IEEE International Conference on Robotics and Automation, Minneapolis, MN, USA, 22–28 April 1996; pp. 1675–1680.

35. Adams, R.J.; Hannaford, B. Stable Haptic Interaction with Virtual Environments. IEEE Trans. Robot. Autom. 1999, 15, 465–474.

36. Ott, C. Cartesian Impedance Control of Redundant and Flexible-Joint Robots; Siciliano, B., Khatib, O., Groen, F., Eds.; Springer Tracts in Advanced Robotics; Springer: Berlin/Heidelberg, Germany, 2008; Volume 49, pp. 1–192. ISBN 9783540692539.

37. Duchaine, V.; Gosselin, M. General Model of Human-Robot Cooperation Using a Novel Velocity Based Variable Impedance Control. In Proceedings of the Second Joint EuroHaptics Conference and Symposium on Haptic Interfaces for Virtual Environment and Teleoperator Systems (WHC’07), Tsukuba, Japan, 22–24 March 2007; pp. 446–451.

38. Sharkawy, A.-N.; Koustoumpardis, P.N.; Aspragathos, N. A recurrent neural network for variable admittance control in human-robot cooperation: Simultaneously and online adjustment of the virtual damping and inertia parameters. Int. J. Intell. Robot. Appl. 2020, 4, 441–464.

39. Yang, C.; Peng, G.; Li, Y.; Cui, R.; Cheng, L.; Li, Z. Neural networks enhanced adaptive admittance control of optimized robot-environment interaction. IEEE Trans. Cybern. 2019, 49, 2568–2579.

40. Sidiropoulos, A.; Kastritsi, T.; Papageorgiou, D.; Doulgeri, Z. A variable admittance controller for human-robot manipulation of large inertia objects. In Proceedings of the 2021 30th IEEE International Conference on Robot and Human Interactive Communication, RO-MAN 2021, Vancouver, BC, Canada, 8–12 August 2021; pp. 509–514.

41. Topini, A.; Sansom, W.; Secciani, N.; Bartalucci, L.; Ridolfi, A.; Allotta, B. Variable Admittance Control of a Hand Exoskeleton for Virtual Reality-Based Rehabilitation Tasks. Front. Neurorobot. 2022, 15, 1–18.

42. Wang, Y.; Yang, Y.; Zhao, B.; Qi, X.; Hu, Y.; Li, B.; Sun, L.; Zhang, L.; Meng, M.Q.H. Variable admittance control based on trajectory prediction of human hand motion for physical human-robot interaction. Appl. Sci. 2021, 11, 5651.

43. Du, Z.; Wang, W.; Yan, Z.; Dong, W.; Wang, W. Variable Admittance Control Based on Fuzzy Reinforcement Learning for Minimally Invasive Surgery Manipulator. Sensors 2017, 17, 844.

44. Dimeas, F.; Aspragathos, N. Fuzzy Learning Variable Admittance Control for Human-Robot Cooperation. In Proceedings of the 2014 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS 2014), Chicago, IL, USA, 14–18 September 2014; pp. 4770–4775.

45. Tsumugiwa, T.; Yokogawa, R.; Hara, K. Variable Impedance Control with Regard to Working Process for Man-Machine Cooperation-Work System. In Proceedings of the 2001 IEEE/RSJ International Conference on Intelligent Robots and Systems, Maui, HI, USA, 29 October–3 November 2001; pp. 1564–1569.

46. Lecours, A.; Mayer-St-Onge, B.; Gosselin, C. Variable admittance control of a four-degree-of-freedom intelligent assist device. In Proceedings of the 2012 IEEE International Conference on Robotics and Automation, Saint Paul, MN, USA, 14–18 May 2012; pp. 3903–3908.

47. Okunev, V.; Nierhoff, T.; Hirche, S. Human-preference-based Control Design: Adaptive Robot Admittance Control for Physical Human-Robot Interaction. In Proceedings of the 2012 IEEE RO-MAN: The 21st IEEE International Symposium on Robot and Human Interactive Communication, Paris, France, 9–13 September 2012; pp. 443–448.

48. Landi, C.T.; Ferraguti, F.; Sabattini, L.; Secchi, C.; Bonfè, M.; Fantuzzi, C. Variable Admittance Control Preventing Undesired Oscillating Behaviors in Physical Human-Robot Interaction. In Proceedings of the 2017 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Vancouver, BC, Canada, 24–28 September 2017; pp. 3611–3616.

49. Sharkawy, A.-N.; Koustoumpardis, P.N.; Aspragathos, N. Variable Admittance Control for Human-Robot Collaboration based on Online Neural Network Training. In Proceedings of the 2018 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS 2018), Madrid, Spain, 1–5 October 2018.

50. Sharkawy, A.-N.; Koustoumpardis, P.N.; Aspragathos, N. A Neural Network based Approach for Variable Admittance Control in Human-Robot Cooperation: Online Adjustment of the Virtual Inertia. Intell. Serv. Robot. 2020, 13, 495–519.

51. Sauro, J. A Practical Guide to the System Usability Scale: Background, Benchmarks and Best Practices; CreateSpace Independent Publishing Platform: Scotts Valley, CA, USA, 2011; pp. 1–162.

52. Ficuciello, F.; Villani, L.; Siciliano, B. Variable Impedance Control of Redundant Manipulators for Intuitive Human-Robot Physical Interaction. IEEE Trans. Robot. 2015, 31, 850–863.

53. Gualtieri, L.; Rauch, E.; Vidoni, R. Emerging research fields in safety and ergonomics in industrial collaborative robotics: A systematic literature review. Robot. Comput. Integr. Manuf. 2021, 67, 101998.

54. ISO 10218-1; Robots and Robotic Devices – Safety Requirements for Industrial Robots – Part 1: Robots. ISO Copyright Office: Zurich, Switzerland, 2011.

55. ISO 10218-2; Robots and Robotic Devices – Safety Requirements for Industrial Robots – Part 2: Robot Systems and Integration. ISO Copyright Office: Zurich, Switzerland, 2011.

56. ISO/TS 15066; Robots and Robotic Devices – Collaborative Robots. ISO Copyright Office: Geneva, Switzerland, 2016.

57. Yamada, Y.; Hirasawa, Y.; Huang, S.; Umetani, Y.; Suita, K. Human-Robot Contact in the Safeguarding Space. IEEE/ASME Trans. Mechatron. 1997, 2, 230–236.

58. Flacco, F.; Kroger, T.; De Luca, A.; Khatib, O. A Depth Space Approach to Human-Robot Collision Avoidance. In Proceedings of the 2012 IEEE International Conference on Robotics and Automation, Saint Paul, MN, USA, 14–18 May 2012; pp. 338–345.

59. Schmidt, B.; Wang, L. Contact-less and Programming-less Human-Robot Collaboration. In Proceedings of the Forty Sixth CIRP Conference on Manufacturing Systems 2013; Elsevier: Amsterdam, The Netherlands, 2013; Volume 7, pp. 545–550.

60. Anton, F.D.; Anton, S.; Borangiu, T. Human-Robot Natural Interaction with Collision Avoidance in Manufacturing Operations. In Service Orientation in Holonic and Multi Agent Manufacturing and Robotics; Springer: Berlin/Heidelberg, Germany, 2013; pp. 375–388. ISBN 9783642358524.

61. Kitaoka, M.; Yamashita, A.; Kaneko, T. Obstacle Avoidance and Path Planning Using Color Information for a Biped Robot Equipped with a Stereo Camera System. In Proceedings of the 4th Asia International Symposium on Mechatronics, Singapore, 15–18 December 2010; pp. 38–43.

62. Lenser, S.; Veloso, M. Visual Sonar: Fast Obstacle Avoidance Using Monocular Vision. In Proceedings of the 2003 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS 2003), Las Vegas, NV, USA, 27–31 October 2003.

63. Peasley, B.; Birchfield, S. Real-Time Obstacle Detection and Avoidance in the Presence of Specular Surfaces Using an Active 3D Sensor. In Proceedings of the 2013 IEEE Workshop on Robot Vision (WORV), Clearwater Beach, FL, USA, 15–17 January 2013; pp. 197–202.

64. Flacco, F.; Kroeger, T.; De Luca, A.; Khatib, O. A Depth Space Approach for Evaluating Distance to Objects. J. Intell. Robot. Syst. 2014, 80, 7–22.

65. Gandhi, D.; Cervera, E. Sensor Covering of a Robot Arm for Collision Avoidance. In Proceedings of the SMC’03 Conference Proceedings 2003 IEEE International Conference on Systems, Man and Cybernetics. Conference Theme – System Security and Assurance (Cat. No.03CH37483), Washington, DC, USA, 8 October 2003; pp. 4951–4955.

66. Lam, T.L.; Yip, H.W.; Qian, H.; Xu, Y. Collision Avoidance of Industrial Robot Arms using an Invisible Sensitive Skin. In Proceedings of the 2012 IEEE/RSJ International Conference on Intelligent Robots and Systems, Vilamoura-Algarve, Portugal, 7–12 October 2012; pp. 4542–4543.

67. Shi, L.; Copot, C.; Vanlanduit, S. A Bayesian Deep Neural Network for Safe Visual Servoing in Human–Robot Interaction. Front. Robot. AI 2021, 8, 1–13.

68. Haddadin, S.; Albu-Schäffer, A.; De Luca, A.; Hirzinger, G. Collision Detection and Reaction: A Contribution to Safe Physical Human-Robot Interaction. In Proceedings of the 2008 IEEE/RSJ International Conference on Intelligent Robots and Systems, Nice, France, 22–26 September 2008; pp. 3356–3363.

69. Cho, C.; Kim, J.; Lee, S.; Song, J. Collision detection and reaction on 7 DOF service robot arm using residual observer. J. Mech. Sci. Technol. 2012, 26, 1197–1203.

70. Jung, B.; Choi, H.R.; Koo, J.C.; Moon, H. Collision Detection Using Band Designed Disturbance Observer. In Proceedings of the 8th IEEE International Conference on Automation Science and Engineering, Seoul, Korea, 20–24 August 2012; pp. 1080–1085.

71. Cao, P.; Gan, Y.; Dai, X. Model-based sensorless robot collision detection under model uncertainties with a fast dynamics identification. Int. J. Adv. Robot. Syst. 2019, 16, 1729881419853713.

72. Morinaga, S.; Kosuge, K. Collision Detection System for Manipulator Based on Adaptive Impedance Control Law. In Proceedings of the 2003 IEEE International Conference on Robotics & Automation, Taipei, Taiwan, 14–19 September 2003; pp. 1080–1085.

73. Kim, J. Collision detection and reaction for a collaborative robot with sensorless admittance control. Mechatronics 2022, 84, 102811.

74. Lu, S.; Chung, J.H.; Velinsky, S.A. Human-Robot Collision Detection and Identification Based on Wrist and Base Force/Torque Sensors. In Proceedings of the 2005 IEEE International Conference on Robotics and Automation, Barcelona, Spain, 18–22 April 2005; pp. 796–801.

75. Dimeas, F.; Avendano-Valencia, L.D.; Nasiopoulou, E.; Aspragathos, N. Robot Collision Detection based on Fuzzy Identification and Time Series Modelling. In Proceedings of the RAAD 2013, 22nd International Workshop on Robotics in Alpe-Adria-Danube Region, Portoroz, Slovenia, 11–13 September 2013.

76. Dimeas, F.; Avendano-Valencia, L.D.; Aspragathos, N. Human-robot collision detection and identification based on fuzzy and time series modelling. Robotica 2014, 33, 1886–1898.

77. Franzel, F.; Eiband, T.; Lee, D. Detection of Collaboration and Collision Events during Contact Task Execution. In Proceedings of the IEEE-RAS International Conference on Humanoid Robots, Munich, Germany, 19–21 July 2021; pp. 376–383.

78. Cioffi, G.; Klose, S.; Wahrburg, A. Data-Efficient Online Classification of Human-Robot Contact Situations. In Proceedings of the 2020 European Control Conference (ECC), St. Petersburg, Russia, 12–15 May 2020; pp. 608–614.

79. Briquet-Kerestedjian, N.; Wahrburg, A.; Grossard, M.; Makarov, M.; Rodriguez-Ayerbe, P. Using neural networks for classifying human-robot contact situations. In Proceedings of the 2019 18th European Control Conference, ECC 2019, Naples, Italy, 25–28 June 2019; pp. 3279–3285.

80. Sharkawy, A.-N.; Aspragathos, N. Human-Robot Collision Detection Based on Neural Networks. Int. J. Mech. Eng. Robot. Res. 2018, 7, 150–157.

81. Sharkawy, A.-N.; Koustoumpardis, P.N.; Aspragathos, N. Manipulator Collision Detection and Collided Link Identification based on Neural Networks. In Advances in Service and Industrial Robotics. RAAD 2018. Mechanisms and Machine Science; Nikos, A., Panagiotis, K., Vassilis, M., Eds.; Springer: Cham, Switzerland, 2018; pp. 3–12.

82. Sharkawy, A.-N.; Koustoumpardis, P.N.; Aspragathos, N. Neural Network Design for Manipulator Collision Detection Based only on the Joint Position Sensors. Robotica 2020, 38, 1737–1755.

83. Sharkawy, A.-N.; Koustoumpardis, P.N.; Aspragathos, N. Human–robot collisions detection for safe human–robot interaction using one multi-input–output neural network. Soft Comput. 2020, 24, 6687–6719.

84. Sharkawy, A.-N.; Mostfa, A.A. Neural Networks’ Design and Training for Safe Human-Robot Cooperation. J. King Saud Univ. Eng. Sci. 2021, 1–15.

85. Sotoudehnejad, V.; Takhmar, A.; Kermani, M.R.; Polushin, I.G. Counteracting modeling errors for sensitive observer-based manipulator collision detection. In Proceedings of the IEEE International Conference on Intelligent Robots and Systems, Vilamoura-Algarve, Portugal, 7–12 October 2012; pp. 4315–4320.

86. De Luca, A.; Albu-Schäffer, A.; Haddadin, S.; Hirzinger, G. Collision detection and safe reaction with the DLR-III lightweight manipulator arm. In Proceedings of the IEEE International Conference on Intelligent Robots and Systems, Beijing, China, 9–15 October 2006; pp. 1623–1630.

87. Sharkawy, A.-N. Principle of Neural Network and Its Main Types: Review. J. Adv. Appl. Comput. Math. 2020, 7, 8–19.

| Parameter | Compliance Control: Admittance Controller | Compliance Control: Impedance Controller |
|---|---|---|
| Use | It is used in HRI in which there is no interaction between the robot and a stiff environment. | The main aim of impedance control is to modulate the mechanical impedance of the manipulator [28]. |
| Inputs and Outputs | It maps the applied forces into robot motion, as shown in Figure 2a. | The motion is the input and the force is the output, as shown in Figure 2b [30,32]. |
| Rendering | (1) It can render only virtual stiff surfaces; it cannot render low inertia. (2) It is negatively affected during dynamic interaction with real stiff surfaces (constrained motion) [33,34,35]. | (1) It can render low inertia; it cannot render virtual stiff surfaces. (2) It is negatively affected during dynamic interaction with low inertia (free motion) [35]. |
| Control | (1) It is position-based impedance control [36]. (2) A position or velocity controller is used to control the robot, and the desired compliant behavior is realized by the outer control loop. | (1) Force-based impedance control is used. (2) Not only the controlled manipulator but also the controller itself should have impedance causality. |
| Representation |  |  |
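
As a compact reading of the "Inputs and Outputs" row, the two causalities can be written for a single Cartesian axis. This is a standard textbook form given as a sketch. The symbols are assumptions introduced here for illustration: $m$ and $c$ are the virtual inertia and damping, $F_h$ is the force applied by the human, $v$ is the commanded velocity, $M_d$, $D_d$, and $K_d$ are the desired inertia, damping, and stiffness, and $e = x - x_d$ is the deviation from the desired position.

$$
m\,\dot{v} + c\,v = F_h \qquad \text{(admittance: force in, motion out)}
$$

$$
F = M_d\,\ddot{e} + D_d\,\dot{e} + K_d\,e \qquad \text{(impedance: motion in, force out)}
$$

In a VA controller, $c$, $m$, or both in the first equation are adjusted online instead of being kept constant.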

| Schemes of Control | Work Space | Measured Variables | Appropriate Applied Situations | Control Aims |
|---|---|---|---|---|
| Position Control | Task space | Position | Free motion | Desired position |
| Force Control | Task space | Contact force | Constrained motion | Desired contact force |
| Hybrid Control | Position subspace | Position | All motion kinds | Desired position |
| Hybrid Control | Force subspace | Contact force | All motion kinds | Desired contact force |
| Impedance/Admittance Control | Task space | Position, contact force | All motion kinds | Impedance/Admittance |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Sharkawy, A.-N.; Koustoumpardis, P.N. Human–Robot Interaction: A Review and Analysis on Variable Admittance Control, Safety, and Perspectives. Machines 2022, 10, 591. https://doi.org/10.3390/machines10070591
