Image Based Visual Servoing for Floating Base Mobile Manipulator Systems with Prescribed Performance under Operational Constraints

Abstract

1. Introduction

1.1. Related Literature

1.2. Contributions

- A model-free IBVS scheme for FBMMS that computes low-level commands (i.e., forces and torques) without explicit knowledge of the system model, which in most cases is complicated and difficult to identify with standard techniques.

- Predefined overshoot behavior, maximum steady-state error and rate of convergence.

- Compliance with the system’s geometrical and operational limitations, such as joint limits and/or the system’s manipulability.

- Robust steady-state behavior against external disturbances.

- Regulation of the system’s performance by pre-designed performance functions, which are decoupled from the selection of control gains.

- A low-complexity IBVS scheme that can be easily implemented on the embedded on-board computing system of an FBMMS.
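To illustrate how a pre-designed performance function can encode overshoot, convergence rate and steady-state error independently of any control gain, the following is a minimal sketch. It assumes the exponentially decaying bound commonly used in the prescribed performance control literature, rho(t) = (rho_0 - rho_inf) exp(-decay t) + rho_inf, together with a logarithmic error transformation. The function names and parameter values are hypothetical and are not taken from the paper.

```python
import math


def performance_bound(t, rho_0, rho_inf, decay):
    """Assumed bound: rho(t) = (rho_0 - rho_inf) * exp(-decay * t) + rho_inf.

    rho_0   : initial width of the envelope (limits overshoot)
    rho_inf : final width of the envelope (maximum steady-state error)
    decay   : exponential rate (minimum rate of convergence)
    """
    return (rho_0 - rho_inf) * math.exp(-decay * t) + rho_inf


def transformed_error(e, rho):
    """Map an error inside (-rho, rho) to an unbounded value.

    The result grows without limit as |e| approaches rho, which is what
    lets a simple feedback on it keep the error inside the envelope.
    """
    xi = e / rho  # normalized error, must stay in (-1, 1)
    if not -1.0 < xi < 1.0:
        raise ValueError("error is outside the prescribed envelope")
    return math.log((1.0 + xi) / (1.0 - xi))


# Hypothetical values, chosen only for illustration.
rho_now = performance_bound(t=2.0, rho_0=1.0, rho_inf=0.05, decay=1.5)
eps = transformed_error(e=0.02, rho=rho_now)
```

In this sketch the three envelope parameters carry the performance specification, so a gain acting on `eps` affects only the control effort, not the bounds on the error.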

2. Problem Formulation

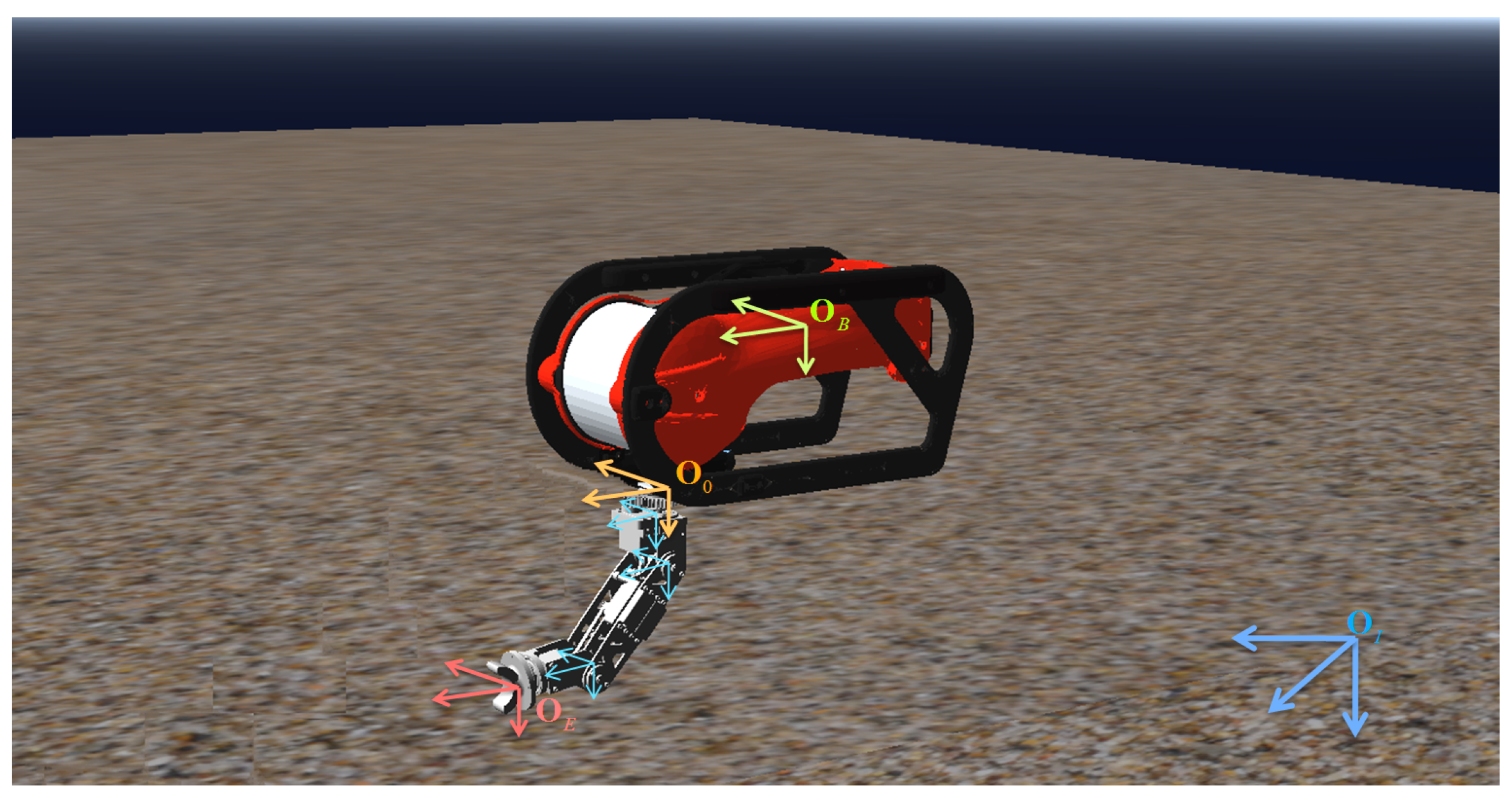

2.1. Floating Base Mobile Manipulator Modeling

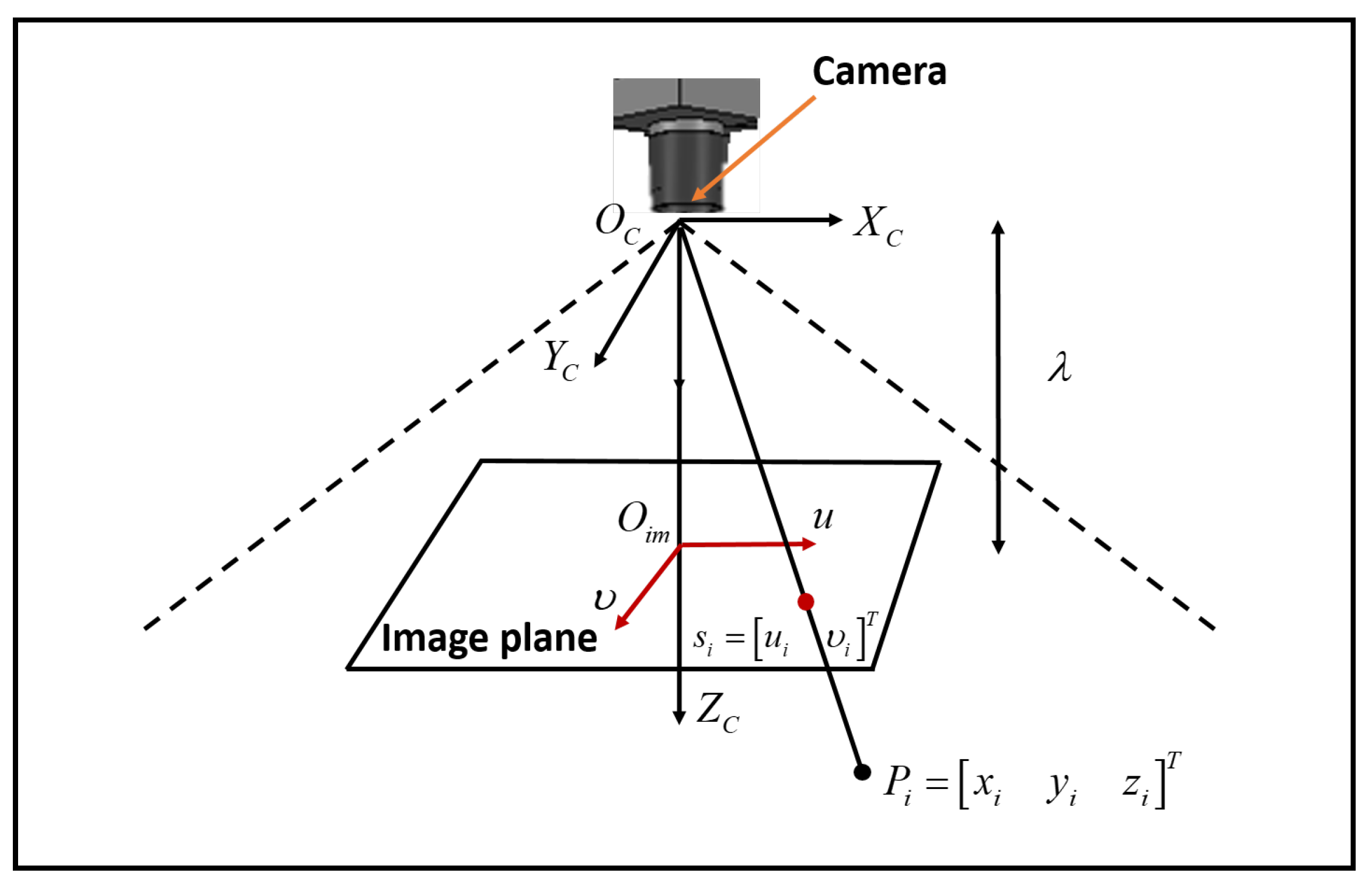

2.2. Mathematical Modeling of IBVS

2.3. Problem Statement

- The image features do not escape the image plane during the control operation (field of view constraints);

- Predetermined overshoot, rate of convergence and steady-state error for the image features and the system velocities;

- Respect for the system’s operational limitations, e.g., manipulator joint limits and the system’s manipulability;

- Robustness against exogenous disturbances, system model uncertainties, camera calibration and depth estimation errors.
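The field of view requirement and the transient specifications listed above can be combined in a single time-varying envelope per feature coordinate. The sketch below shows one possible construction, under the assumption that the envelope starts at the image borders and shrinks exponentially towards a small band around the desired coordinate. The pixel units, the function names and all numeric values are hypothetical and are not taken from the paper.

```python
import math


def fov_envelope(t, u_des, u_min, u_max, rho_inf, decay):
    """Return (lower, upper) limits for one feature coordinate at time t.

    Assumes u_min + rho_inf < u_des < u_max - rho_inf, so that both
    margins shrink monotonically from the image border to rho_inf.
    """
    shrink = math.exp(-decay * t)
    lower_margin = (u_des - u_min - rho_inf) * shrink + rho_inf
    upper_margin = (u_max - u_des - rho_inf) * shrink + rho_inf
    return u_des - lower_margin, u_des + upper_margin


def inside_envelope(u, t, u_des, u_min, u_max, rho_inf, decay):
    """True if the feature coordinate u lies strictly inside the envelope."""
    lower, upper = fov_envelope(t, u_des, u_min, u_max, rho_inf, decay)
    return lower < u < upper


# Hypothetical 640-pixel-wide image with the target at the image center.
start = fov_envelope(0.0, u_des=320.0, u_min=0.0, u_max=640.0,
                     rho_inf=5.0, decay=1.0)
later = fov_envelope(3.0, u_des=320.0, u_min=0.0, u_max=640.0,
                     rho_inf=5.0, decay=1.0)
```

Because the envelope never extends beyond `u_min` and `u_max`, any feature trajectory that stays inside it also stays inside the image, and the asymmetric margins allow a desired coordinate that is not centered.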

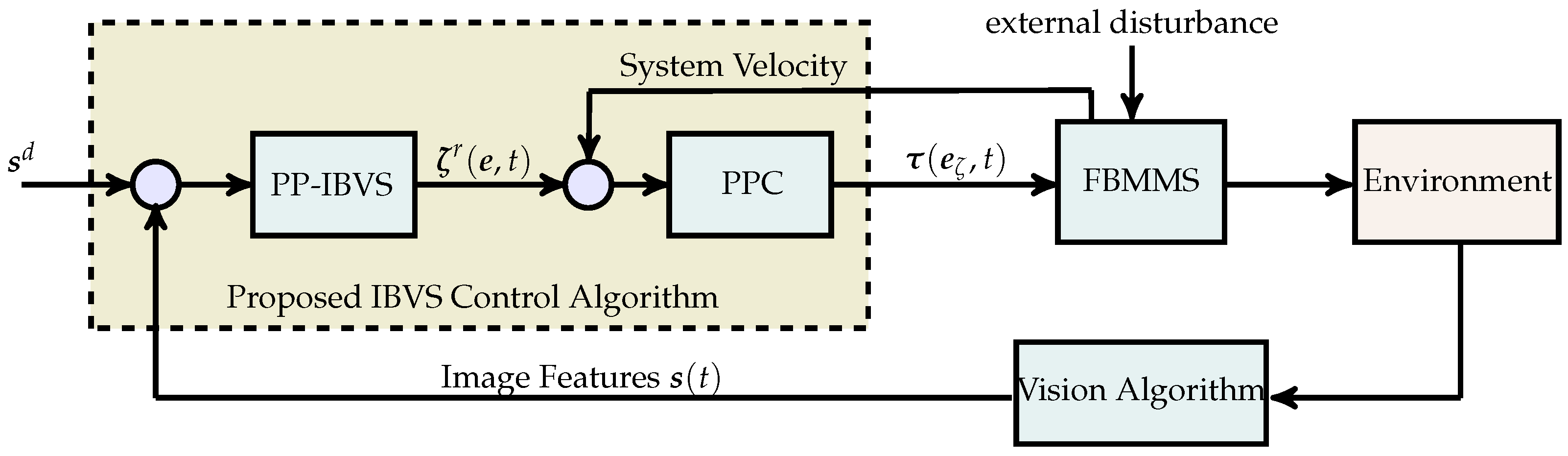

3. Control Methodology

3.1. PP-IBVS Control Design

3.2. Handling of Operational Specifications and Limits

3.3. Prescribed Performance Velocity Control

4. Stability Analysis

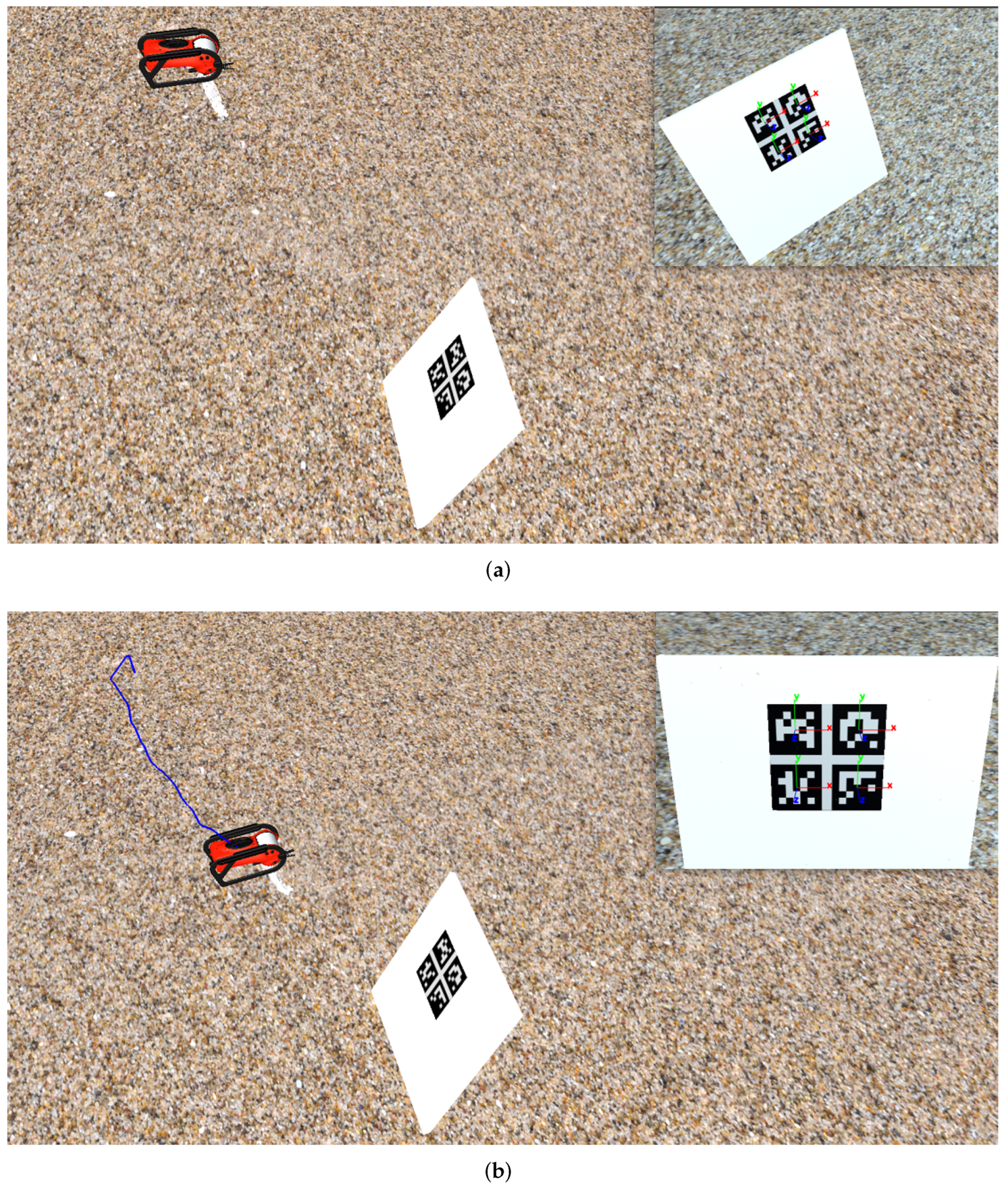

5. Simulation Results

5.1. System Components and Parameters

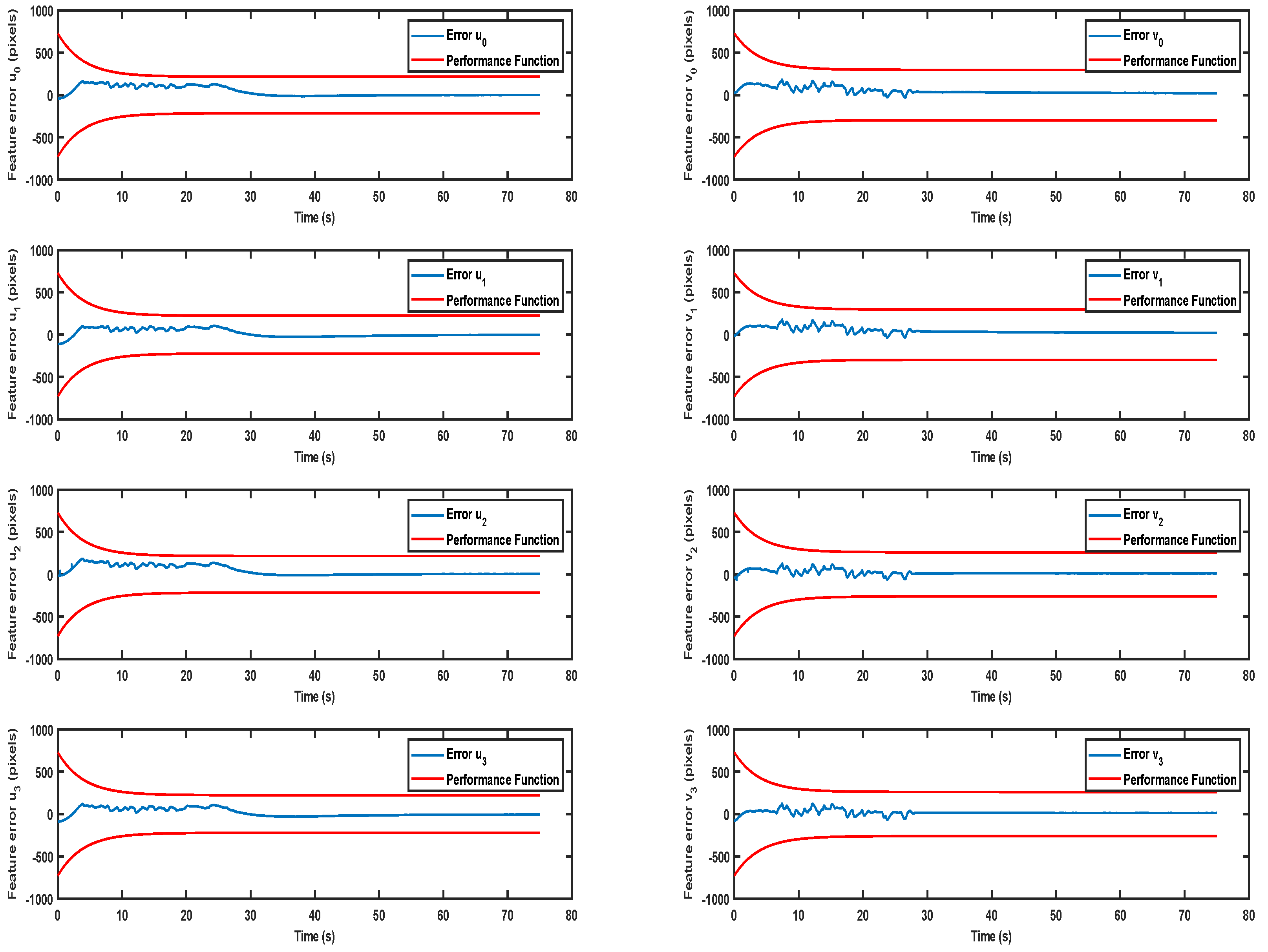

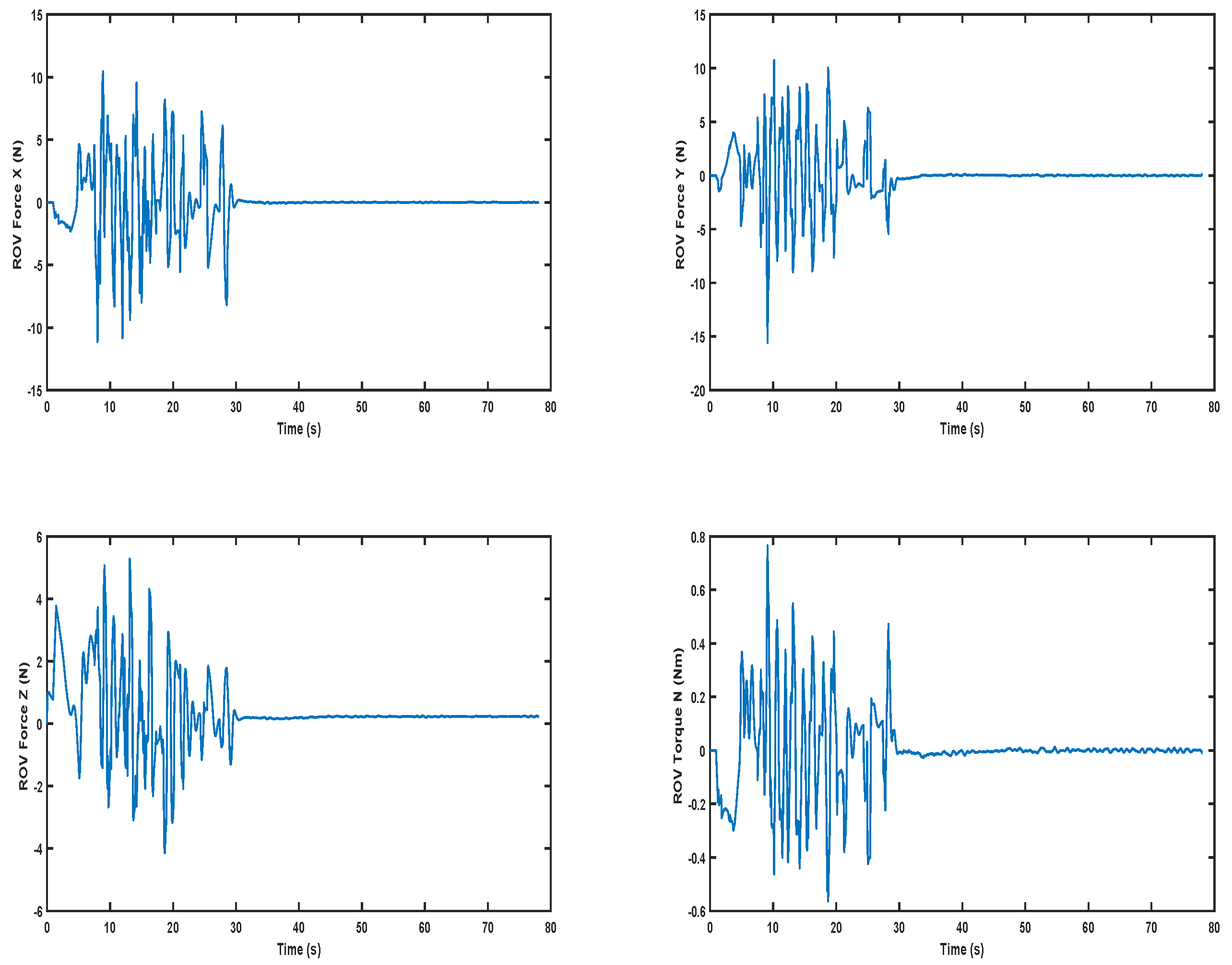

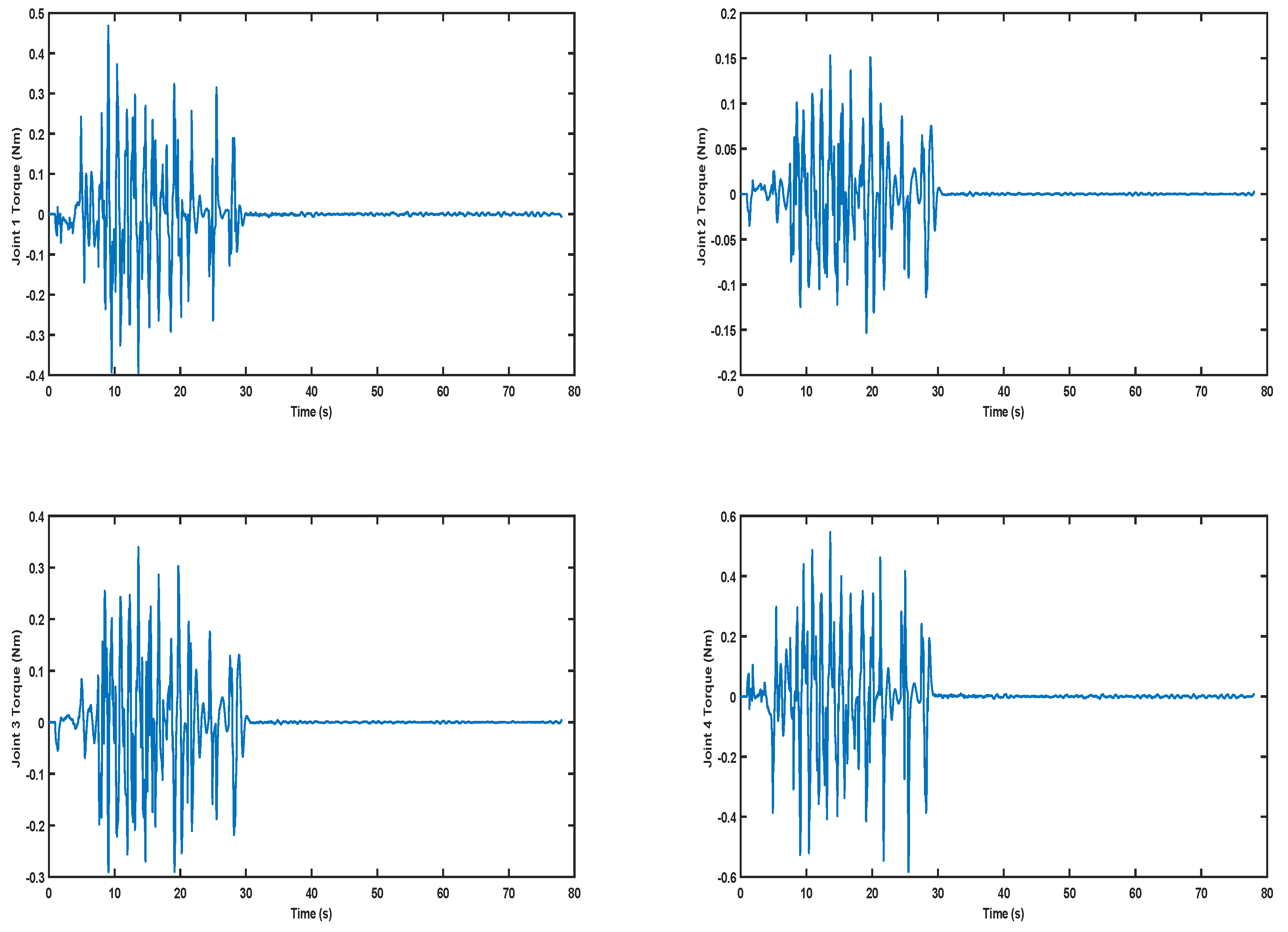

5.2. Results

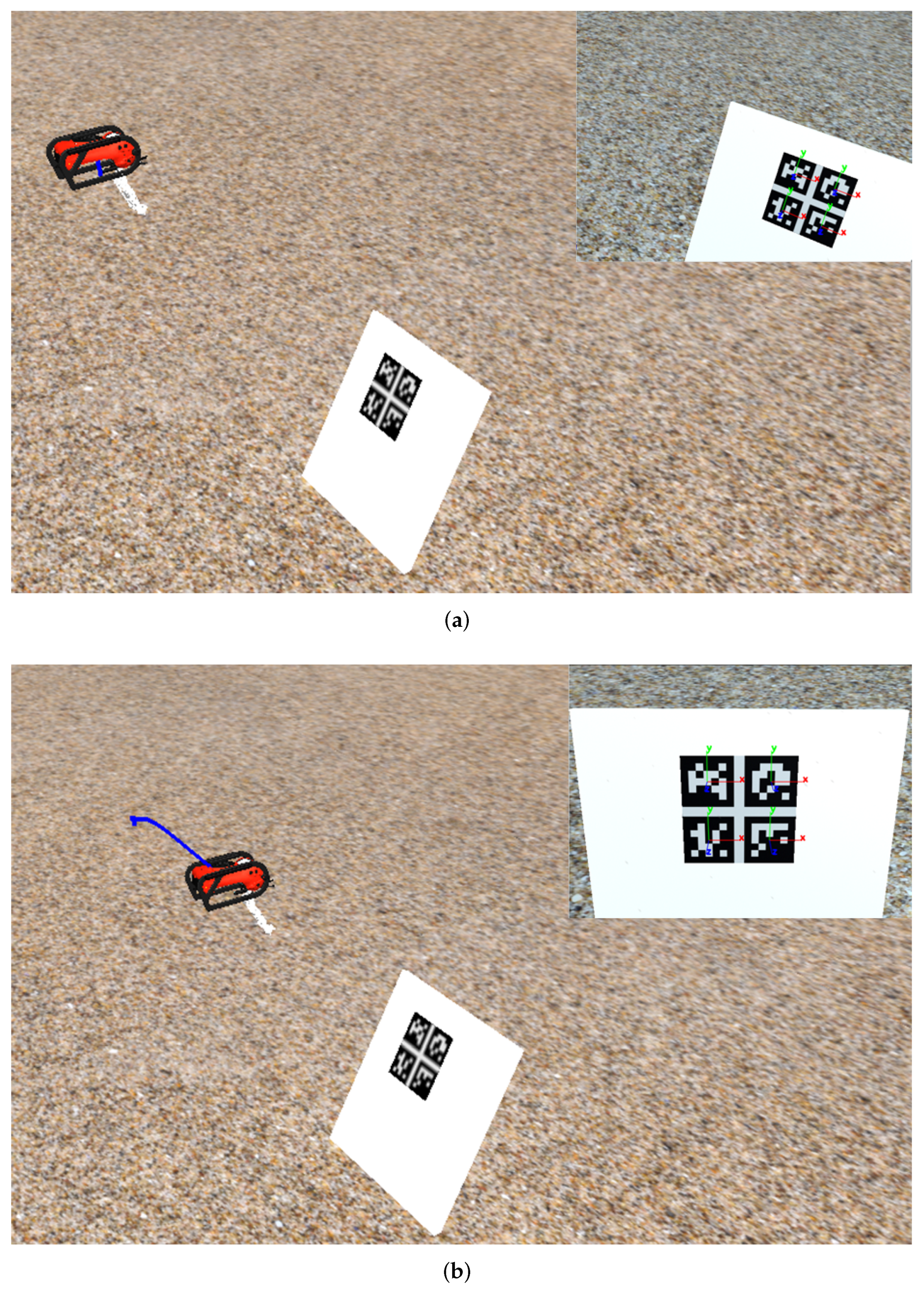

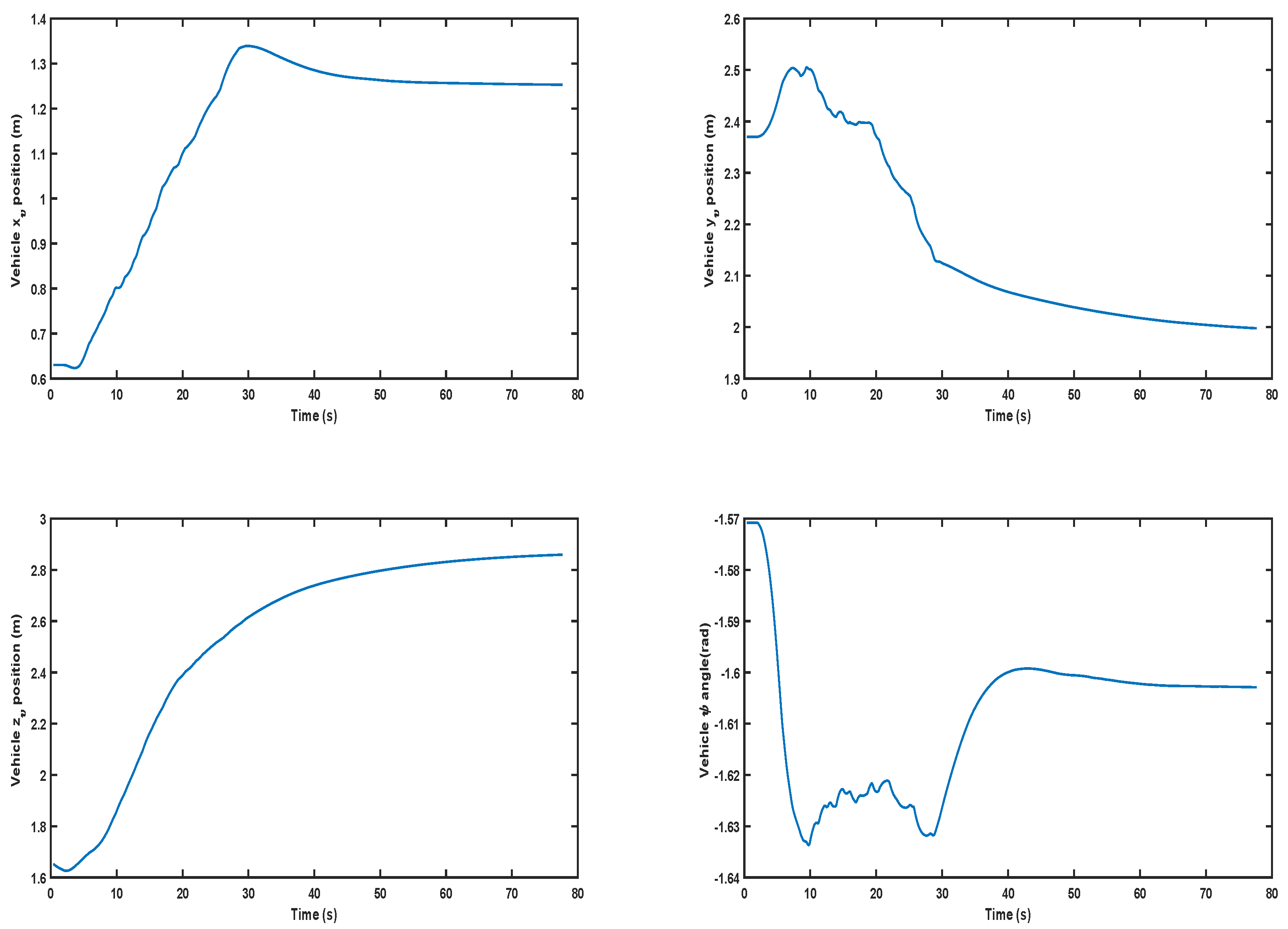

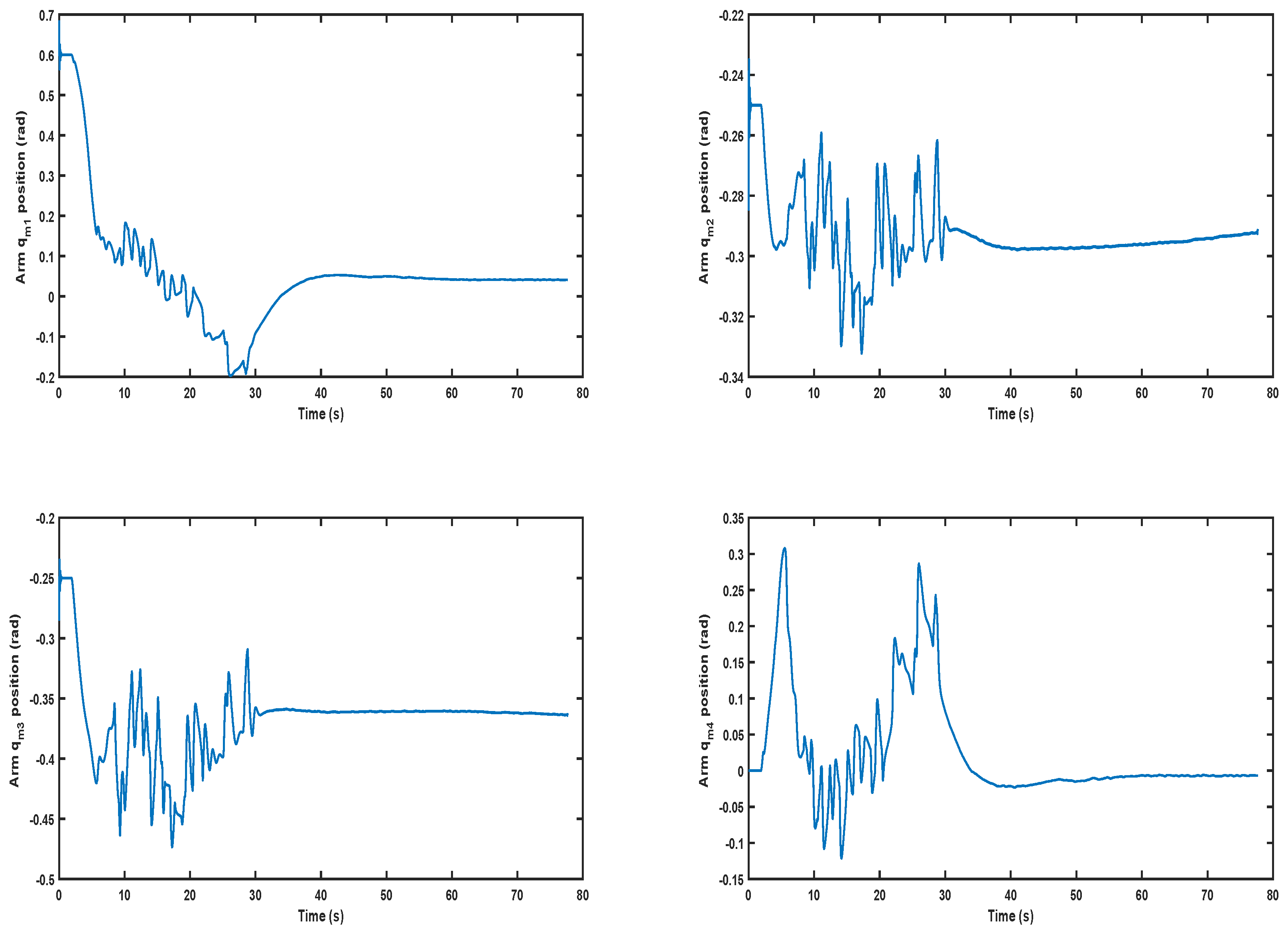

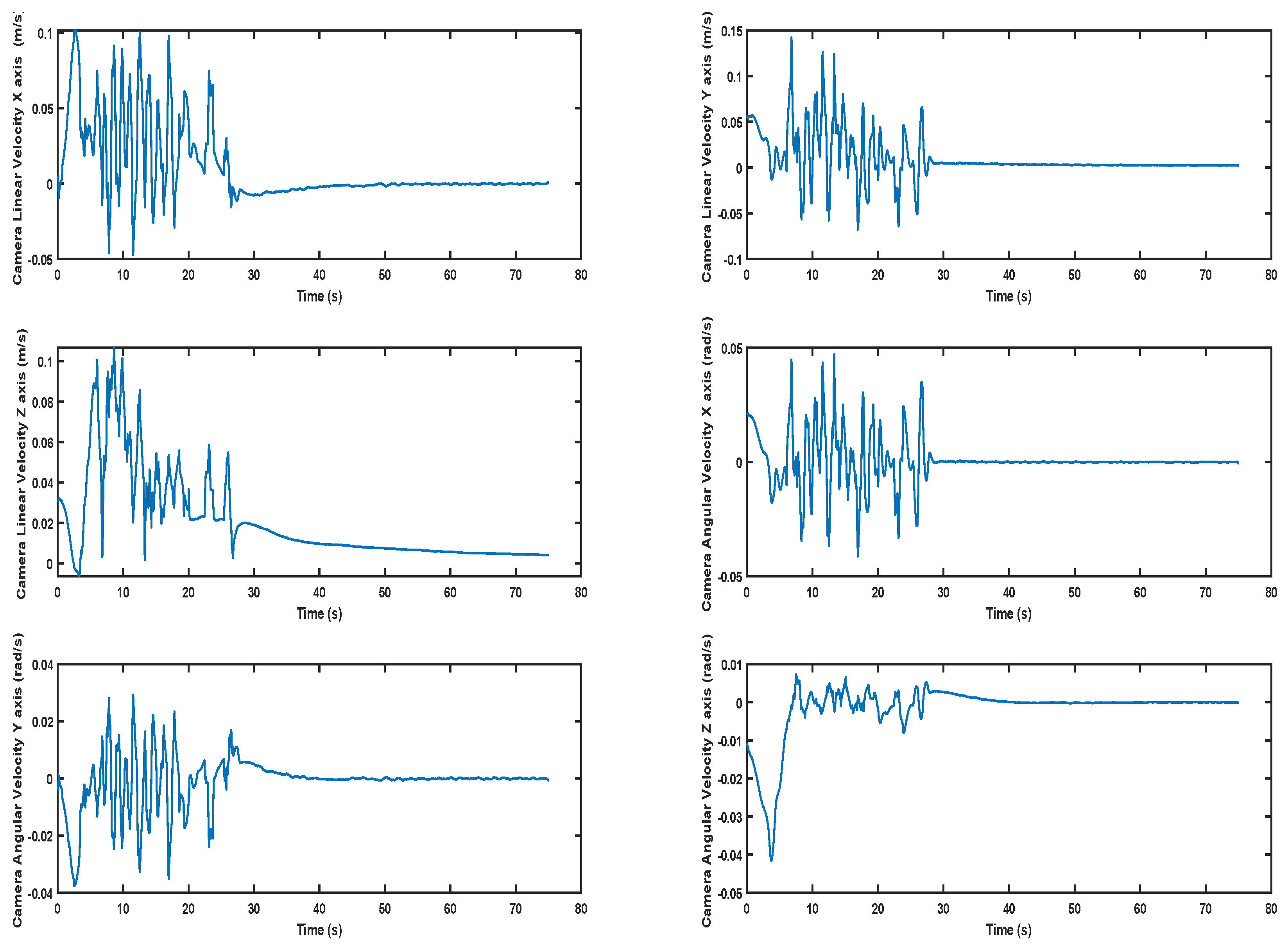

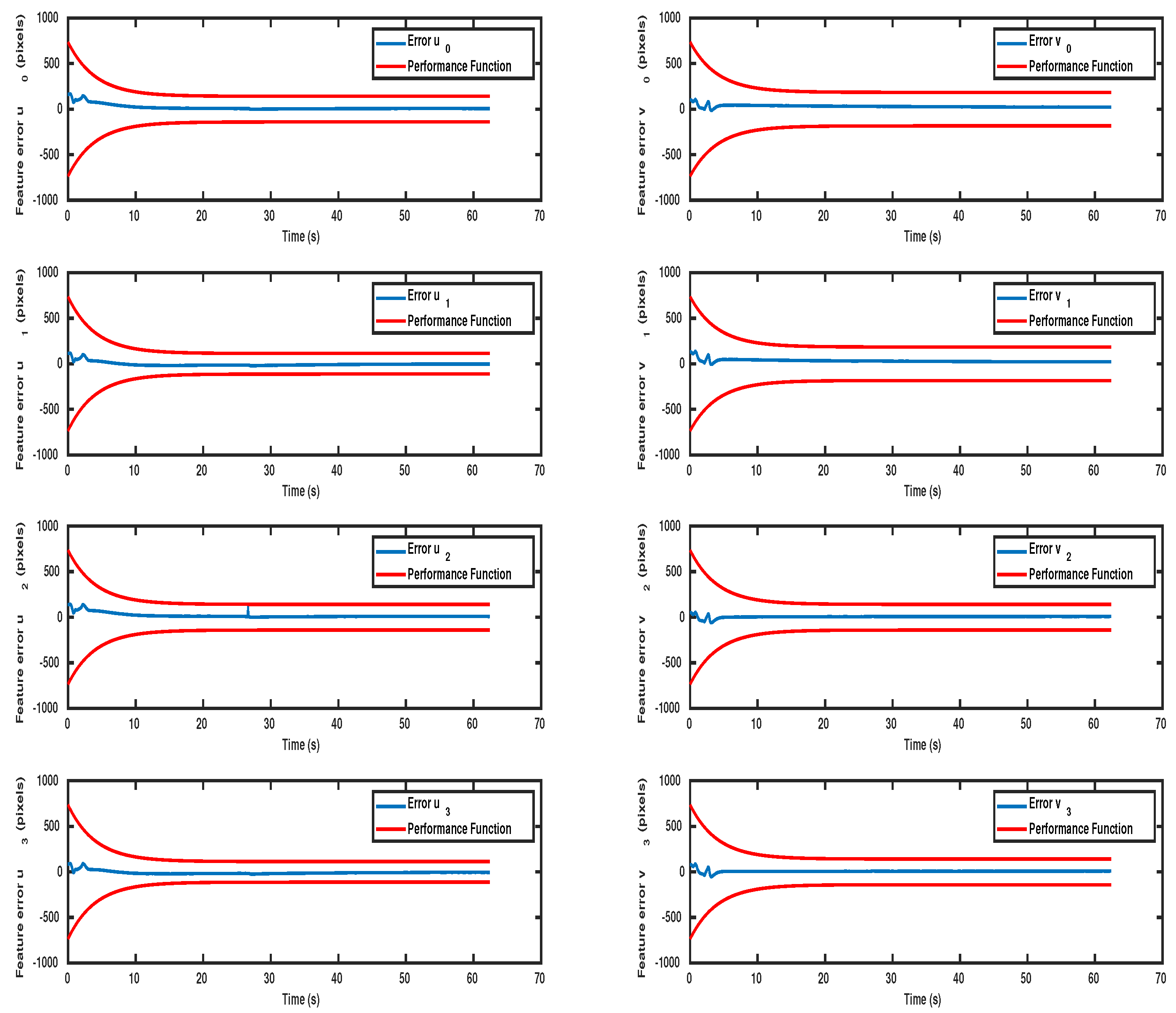

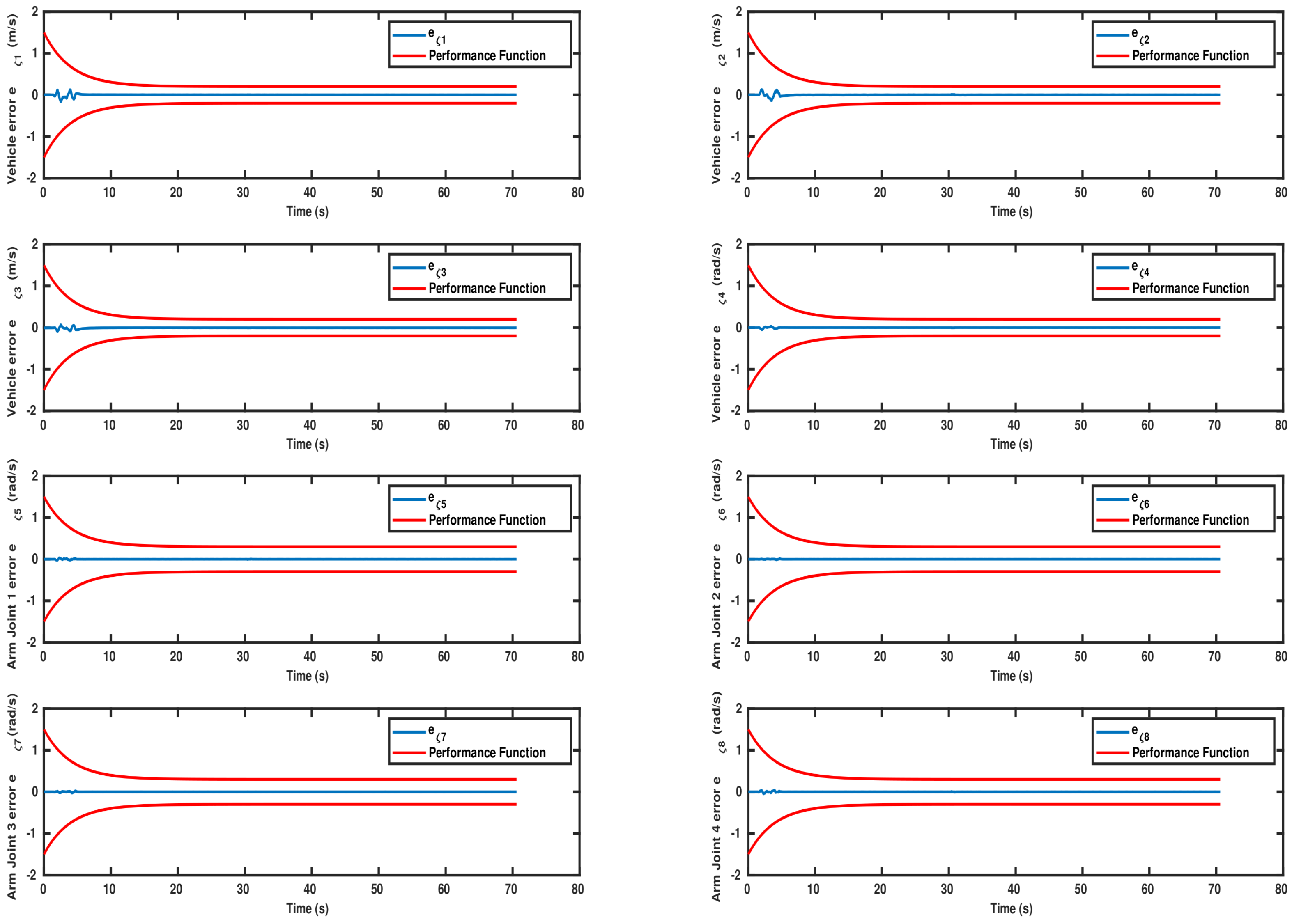

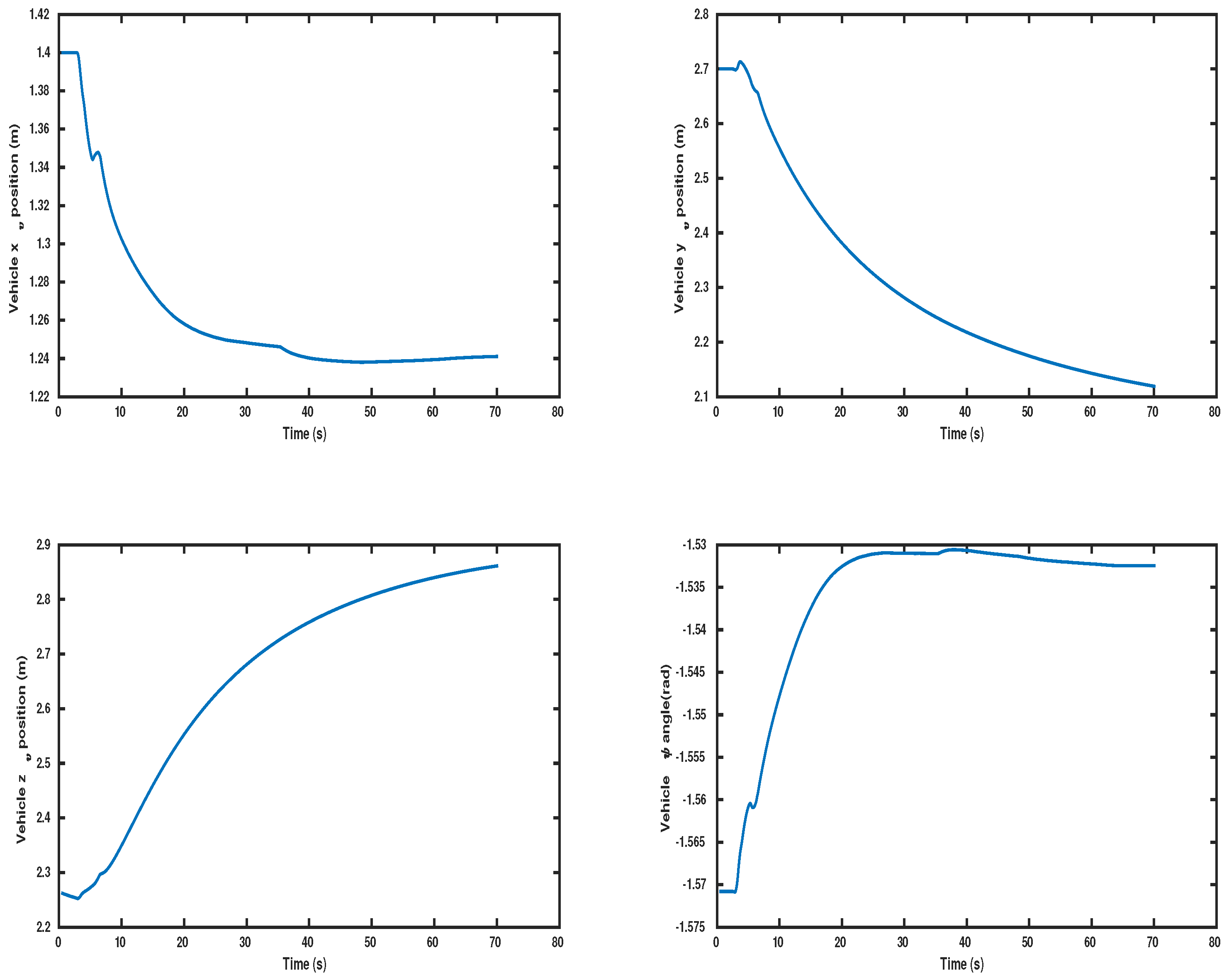

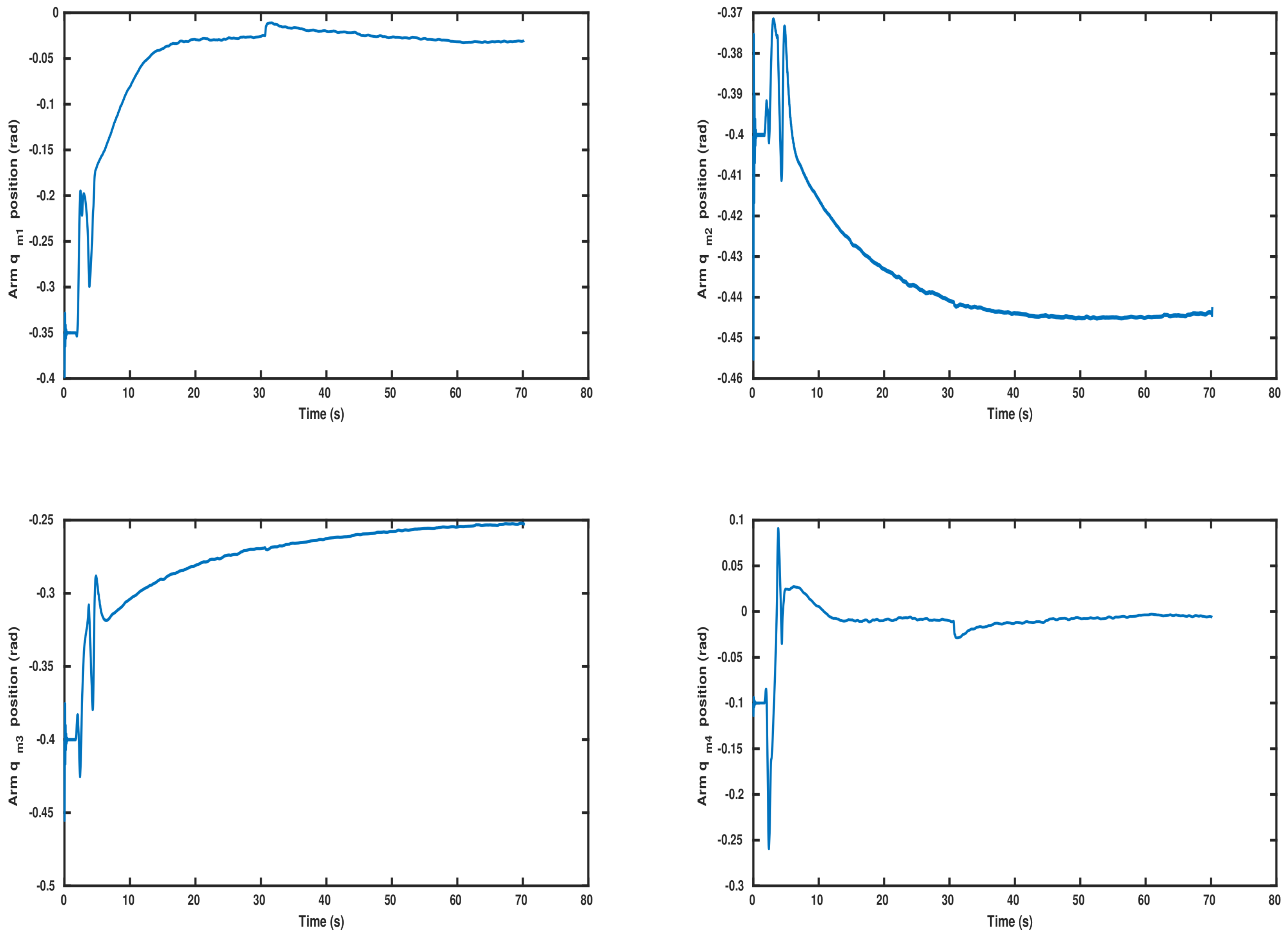

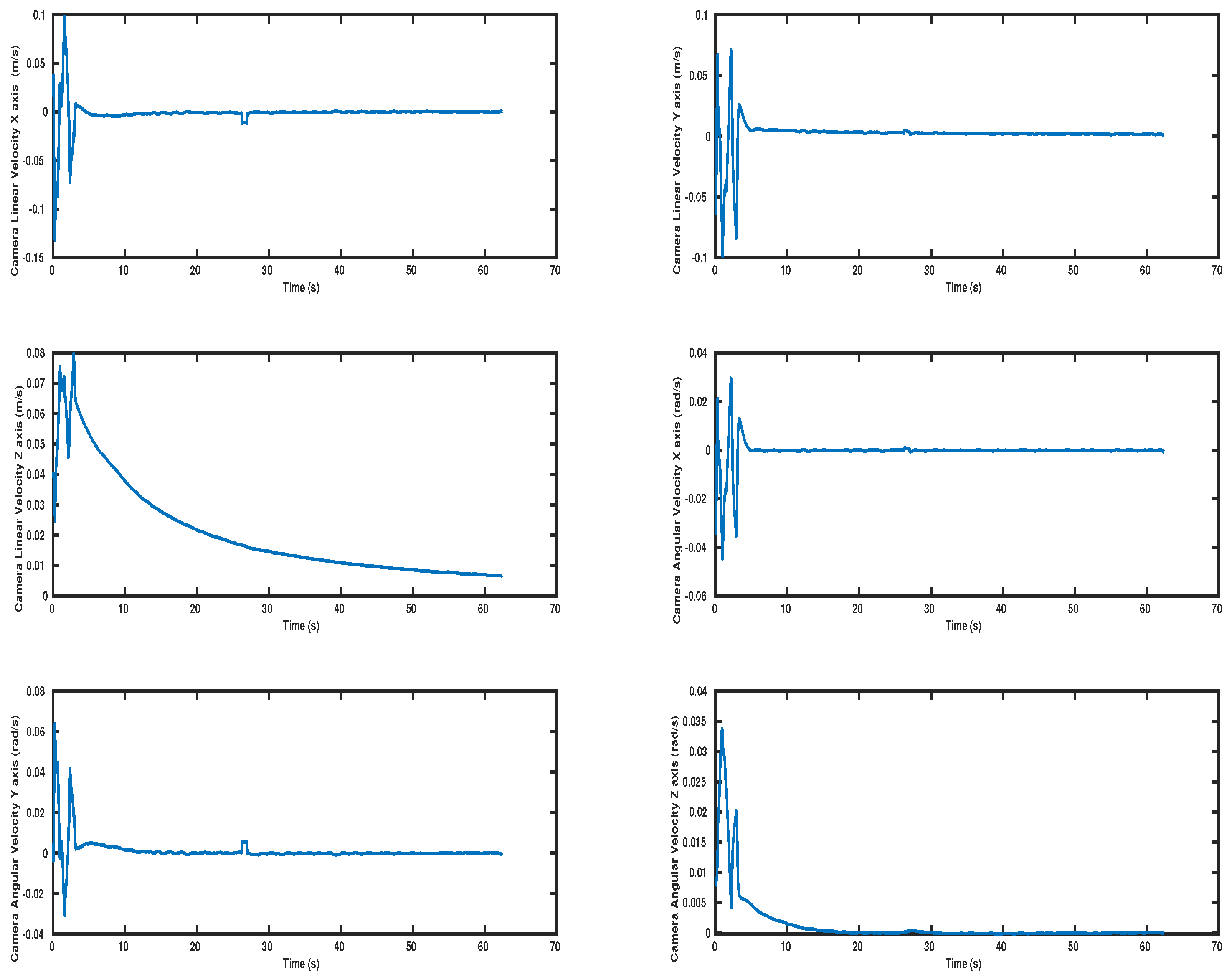

5.2.1. Scenario 1

5.2.2. Scenario 2

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Karras, G.C.; Fourlas, G.K.; Nikou, A.; Bechlioulis, C.P.; Heshmati-Alamdari, S. Image Based Visual Servoing for Floating Base Mobile Manipulator Systems with Prescribed Performance under Operational Constraints. Machines 2022, 10, 547. https://doi.org/10.3390/machines10070547
