Collaborative Measurement System of Dual Mobile Robots That Integrates Visual Tracking and 3D Measurement

Abstract

1. Introduction

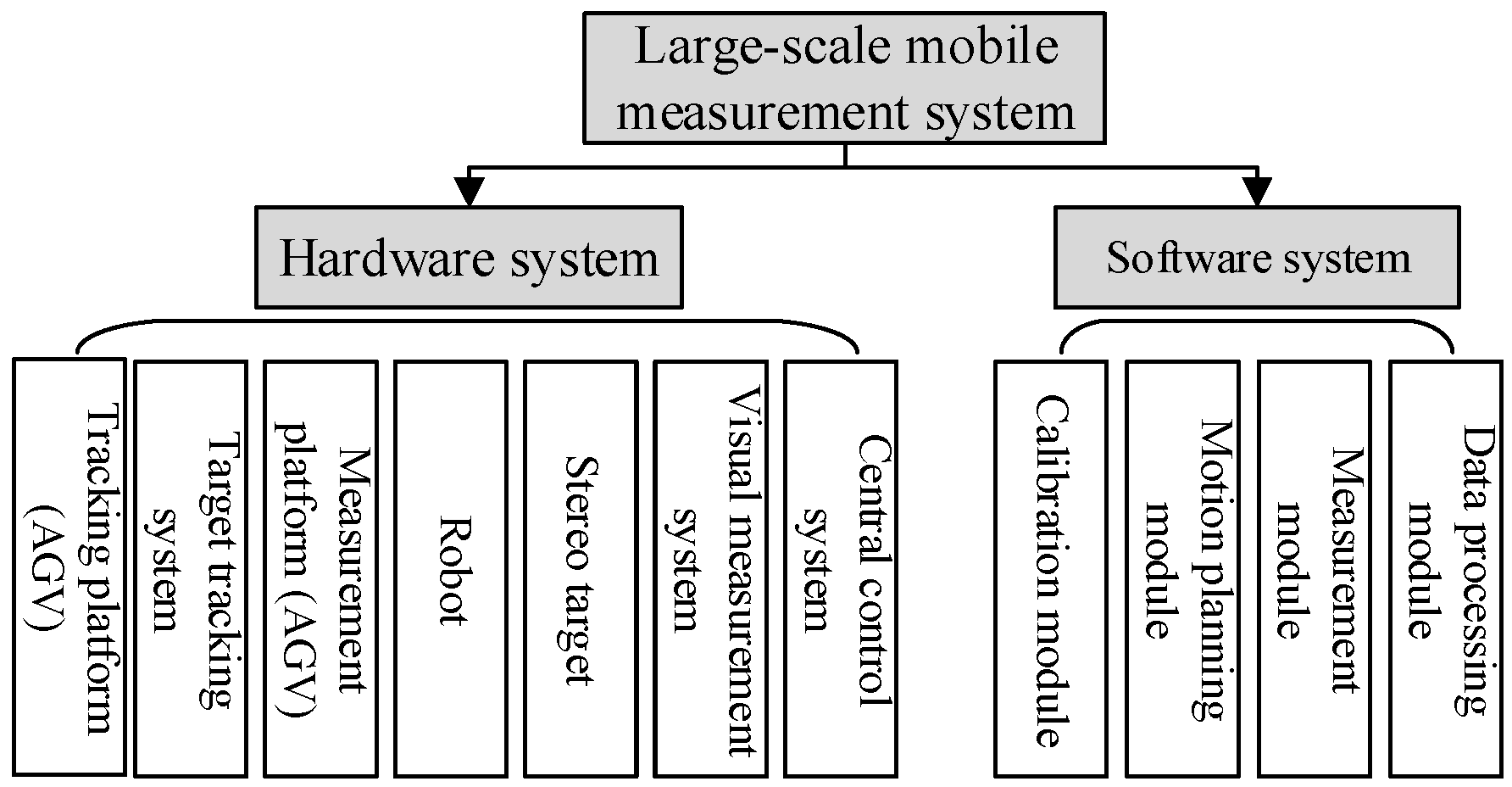

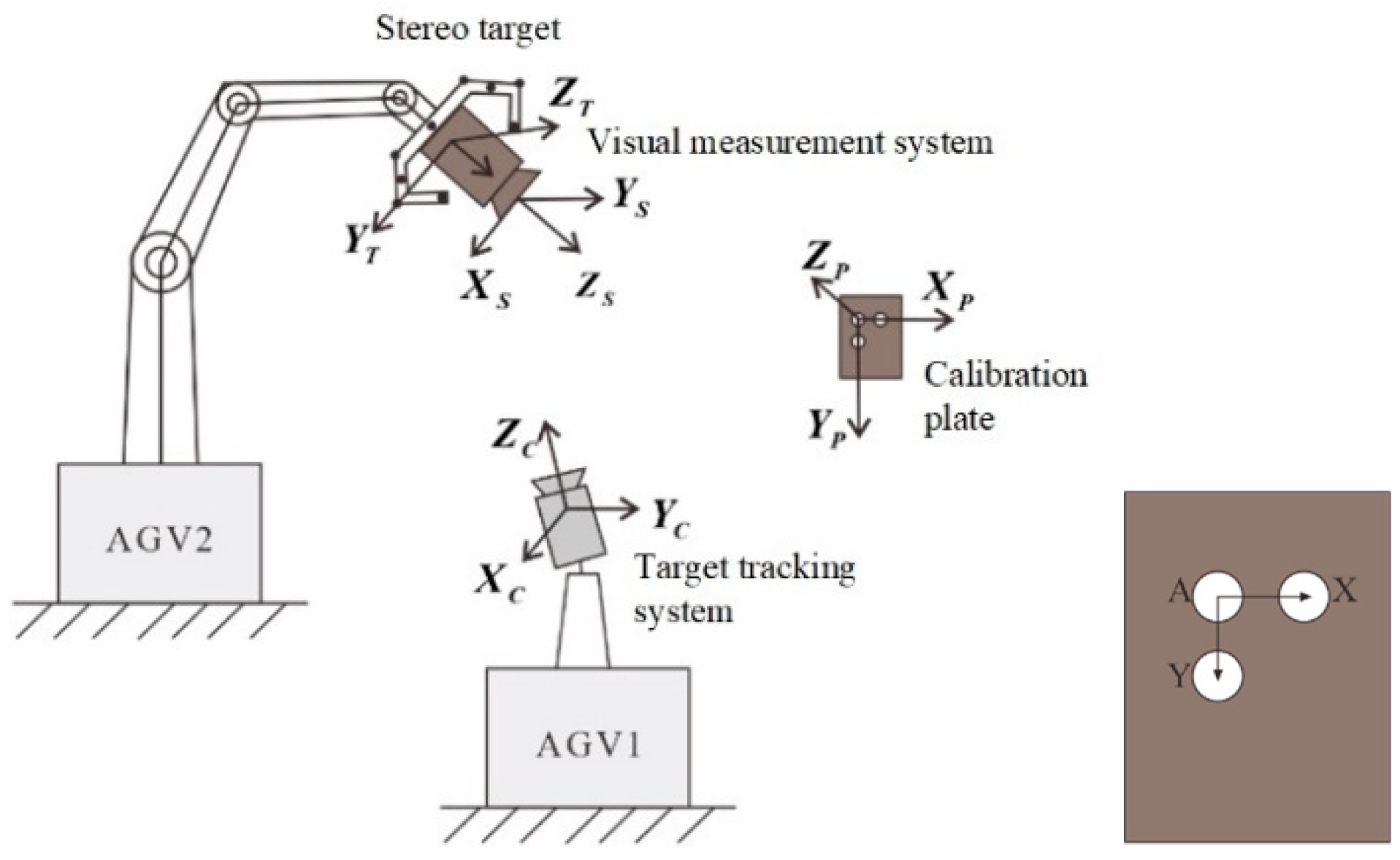

2. System Composition

2.1. Overall Structure

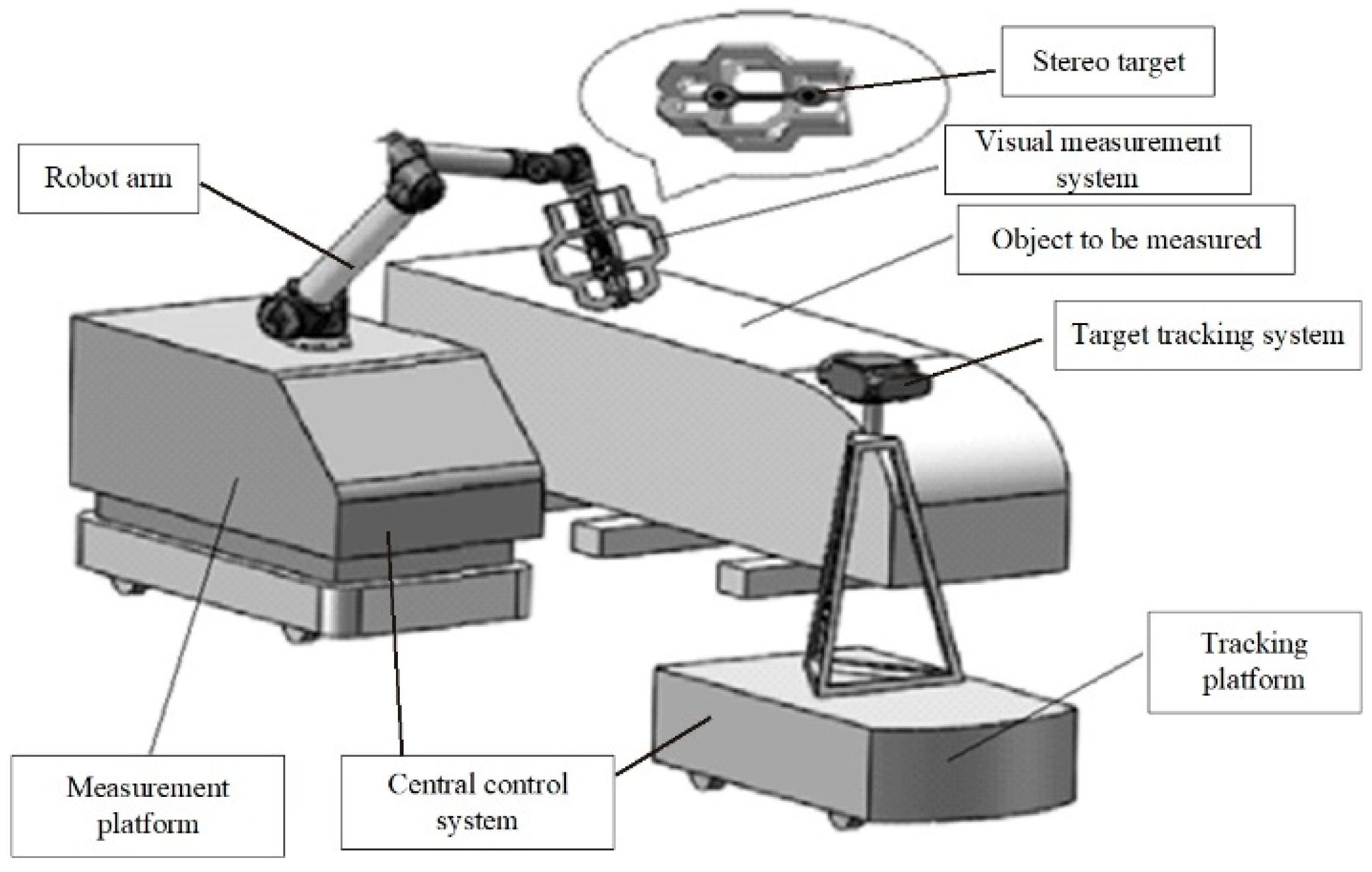

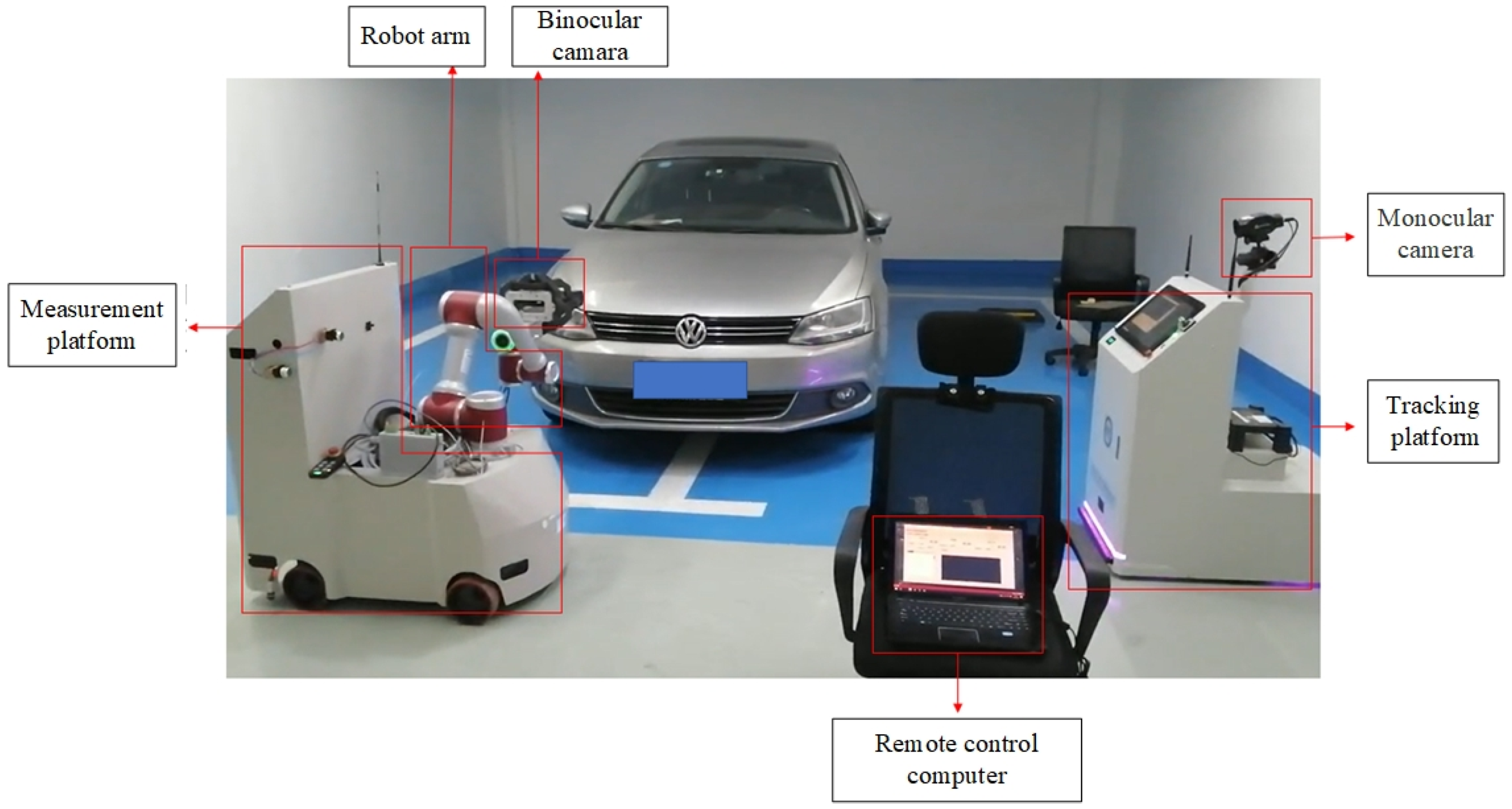

2.2. Hardware System

- (1) The mobile robot with the optical measurement function consists of a measurement chassis (AGV), a visual measurement system, a high-precision stereo target, an industrial robot, and a central control system. Depending on the measurement task, various multi-degree-of-freedom serial industrial robots can be used, with the stereo target mounted at the end of the robot. The surface of the stereo target carries many tracking targets facing different directions. The visual measurement system, either a binocular vision system or a laser measurement system, is mounted in the stereo target. The central control system coordinates all devices and exchanges data with upper-level manufacturing execution systems and other systems through standardized interfaces.

- (2) The mobile robot with the visual tracking function carries a target tracking system whose monocular camera is fixed on a bracket of the tracking chassis (AGV). The camera obtains the position of the high-precision stereo target on the measurement chassis in real time, so the target is tracked and calibrated continuously and every local point cloud can be converted into the unified world coordinate system without attaching coded targets to the workpiece.

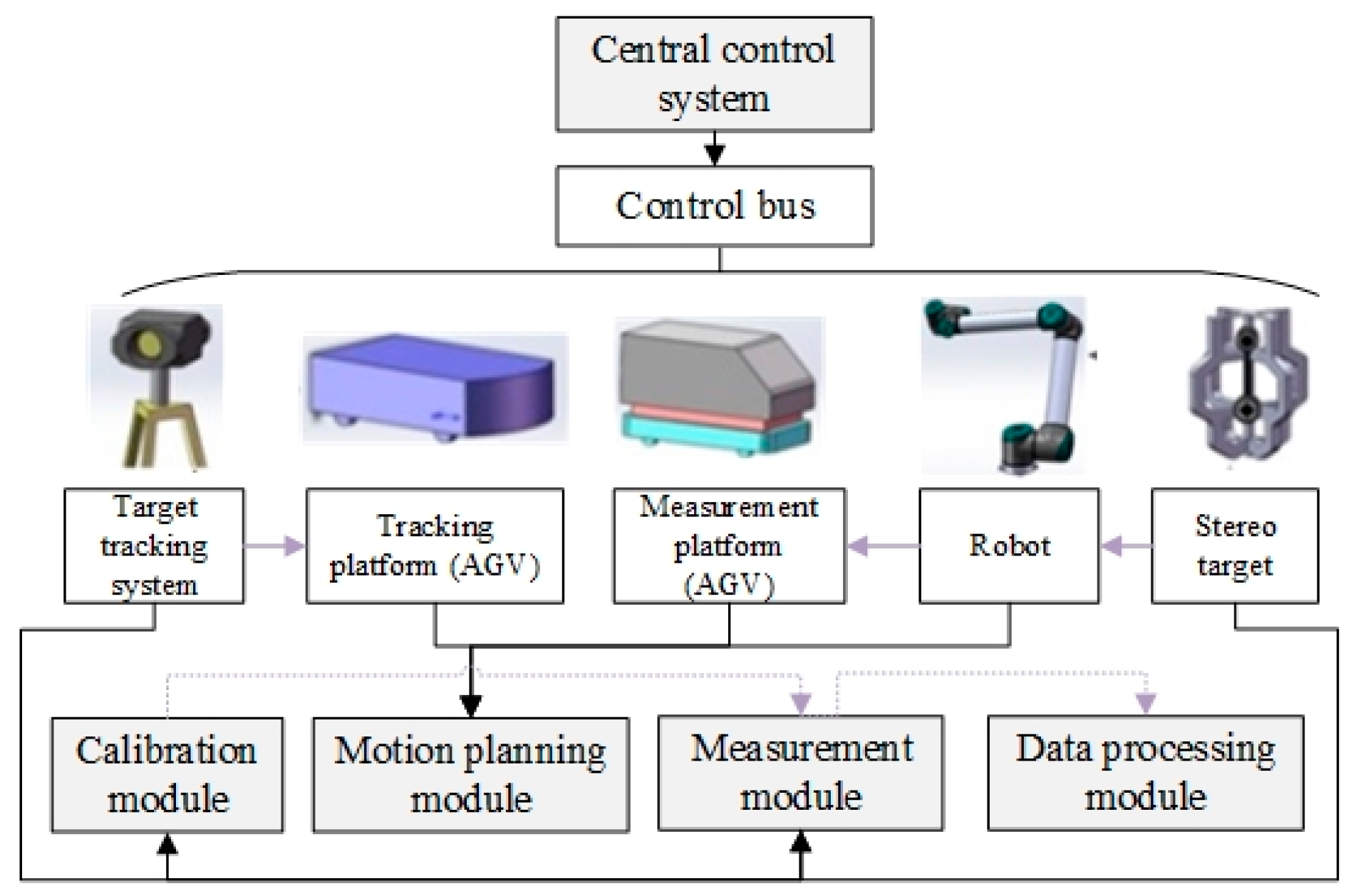
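Converting a local point cloud into the tracker's coordinate system amounts to chaining two rigid transforms: the stereo-target pose reported by the tracking camera and the fixed target-to-scanner transform obtained by calibration. The minimal NumPy sketch below assumes 4×4 homogeneous matrices; the names `T_tracker_target`, `T_target_scanner` and `scan_to_tracker`, as well as the numeric poses, are hypothetical and not taken from the authors' software.

```python
import numpy as np

def make_pose(rotation: np.ndarray, translation) -> np.ndarray:
    """Build a 4x4 homogeneous transform from a 3x3 rotation and a 3-vector."""
    T = np.eye(4)
    T[:3, :3] = rotation
    T[:3, 3] = translation
    return T

def rot_z(angle_rad: float) -> np.ndarray:
    """Rotation matrix about the z-axis."""
    c, s = np.cos(angle_rad), np.sin(angle_rad)
    return np.array([[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]])

def scan_to_tracker(points_scanner: np.ndarray,
                    T_tracker_target: np.ndarray,
                    T_target_scanner: np.ndarray) -> np.ndarray:
    """Map an (N, 3) point cloud from the scanner frame into the tracker frame.

    p_tracker = T_tracker_target @ T_target_scanner @ p_scanner
    """
    T_tracker_scanner = T_tracker_target @ T_target_scanner
    homogeneous = np.hstack([points_scanner, np.ones((len(points_scanner), 1))])
    # Row-vector form: (T @ p)^T == p^T @ T^T
    return (homogeneous @ T_tracker_scanner.T)[:, :3]

# Hypothetical values (mm): scanner 100 mm along the target's x-axis; target
# 2000 mm in front of the tracking camera, rotated 90 degrees about z.
T_target_scanner = make_pose(np.eye(3), [100.0, 0.0, 0.0])
T_tracker_target = make_pose(rot_z(np.pi / 2), [0.0, 0.0, 2000.0])
cloud = np.array([[0.0, 0.0, 500.0], [10.0, 0.0, 500.0]])
print(scan_to_tracker(cloud, T_tracker_target, T_target_scanner))
```

Only `T_tracker_target` changes from scan to scan; `T_target_scanner` is computed once by calibration, which is why no coded targets are needed on the workpiece.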

2.3. Software Platform

- (1) Calibration module. This module calibrates the pose relationship between the stereo target and the visual measurement system (local calibration) and performs global calibration across multiple sites, i.e., it determines the transformation between the coordinate systems of the target tracking system at adjacent sites after each coordinate transformation. The transformation matrices obtained by calibration allow the high-density point cloud acquired by the visual measurement system to be transformed into the world coordinate system of the target tracking system.

- (2) Motion planning module. This module performs path and trajectory planning for the mobile chassis (AGV) and the industrial robot. Path planning for the mobile chassis determines the sequence of operating points of the two AGVs; path planning for the industrial robot determines the sequence of operating points of the robot's end-effector after the AGV carrying the robot reaches each point. Together, they cover all points that must be measured on the surface of the object. On the basis of the planned paths, trajectory planning ensures that the overall motion is stable and continuous.

- (3)

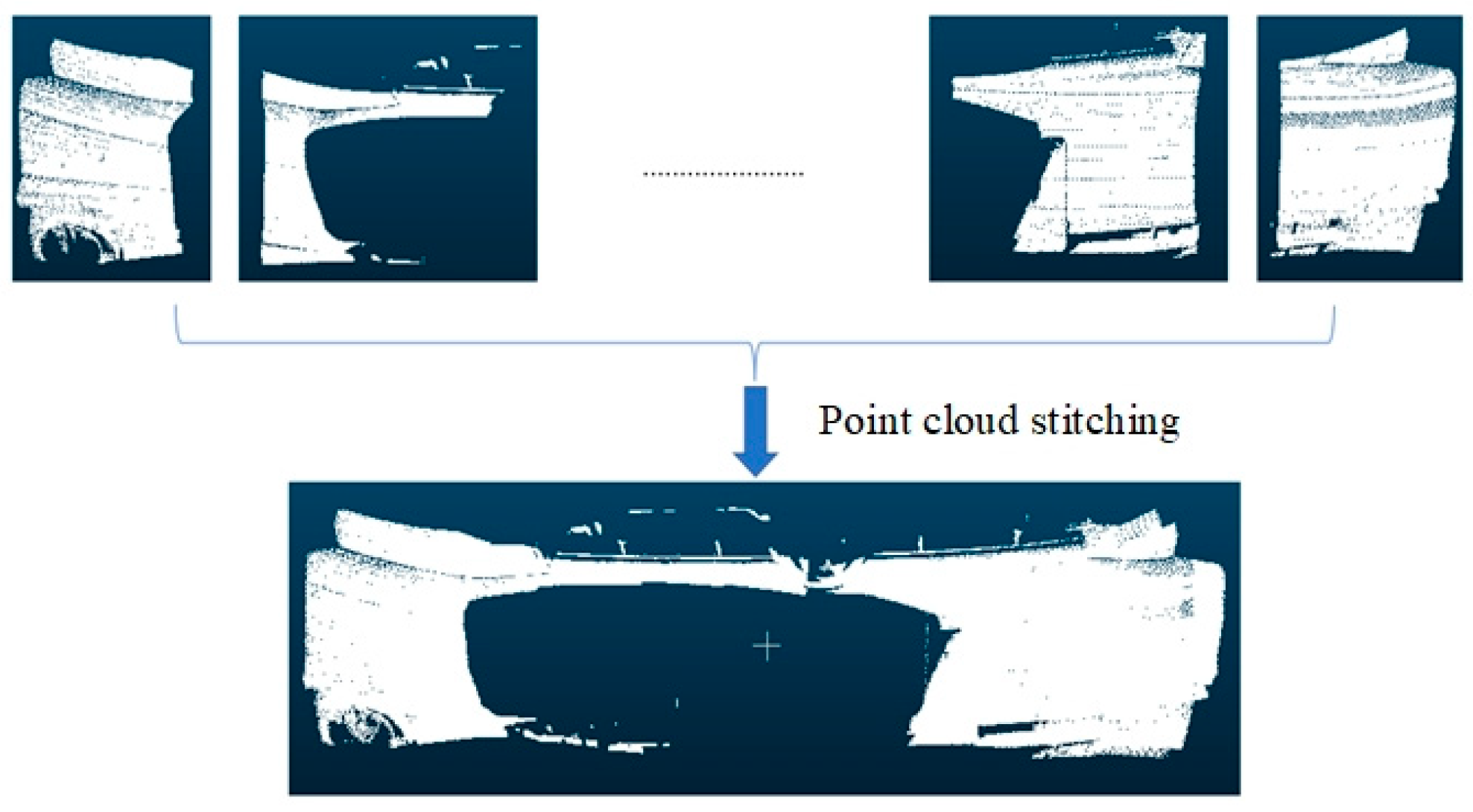

- Measurement module. The function of this module is to obtain the overall point cloud data of the complex workpiece to be measured and complete the unified stitching of the local point cloud data collected by the visual measurement system through the transformation relationship between coordinate systems of local calibration and merge them into the coordinate system of the tracking target system. After the coordinate transformation, the transformation relationship of the coordinate system obtained by global calibration is used to align and merge different point cloud segments between adjacent sites, thus obtaining the complete point cloud data to be measured.

- (4) Data processing module. This module optimizes the point cloud data. Because complex workpieces are irregular and the measurement methods have inherent limitations, the initial point cloud obtained by the target tracking and visual measurement systems may contain cumulative errors, which must be corrected during optimization.

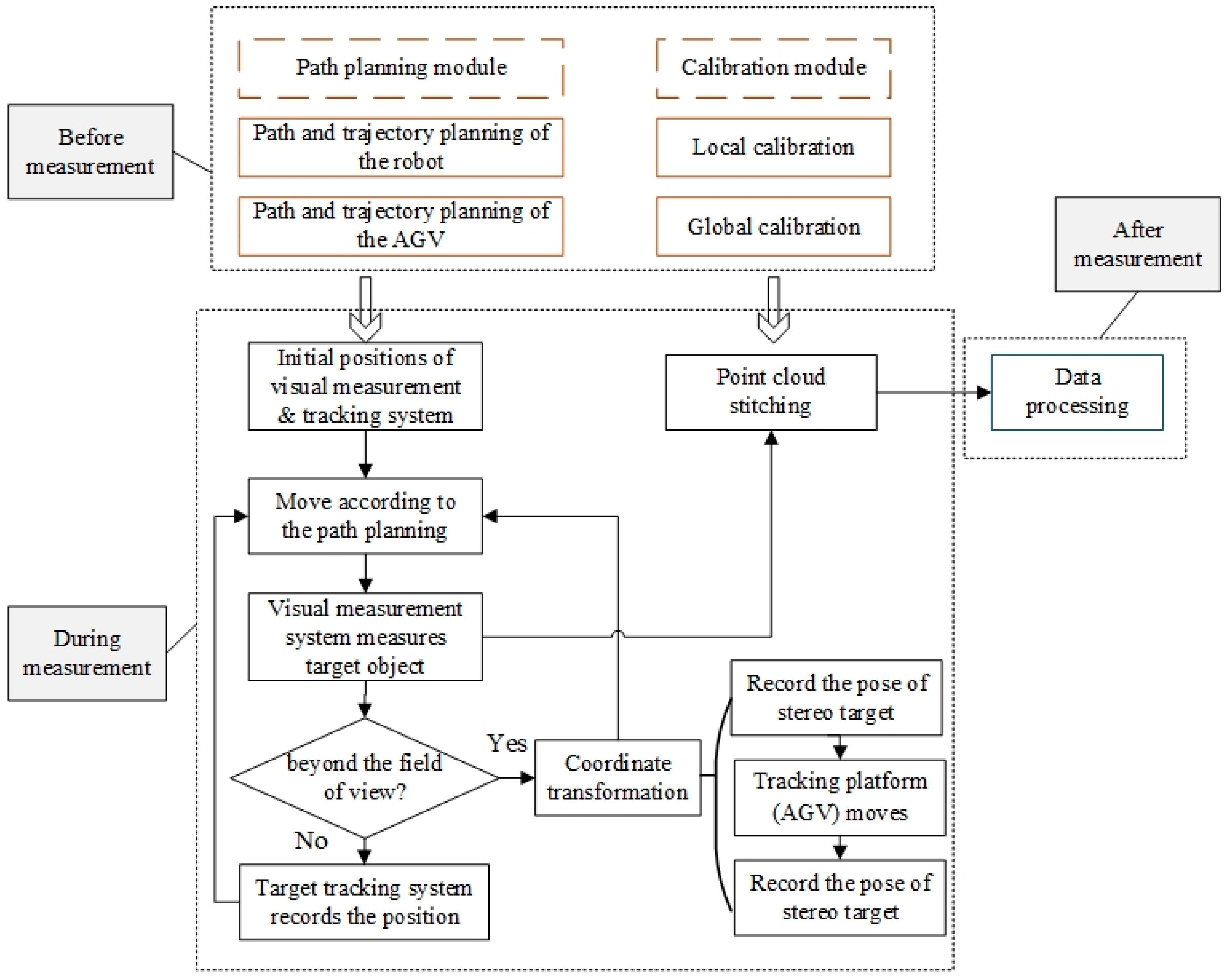
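The two-level stitching performed by the measurement module can be sketched as follows, assuming each segment has already been expressed in the tracker frame of its own site by local calibration, and that global calibration yields one 4×4 transform per coordinate transformation mapping the new site's tracker frame into the previous one. The function names and the 1500 mm station spacing are hypothetical; this is an illustration of chaining rigid transforms, not the authors' implementation.

```python
import numpy as np

def apply_transform(T: np.ndarray, points: np.ndarray) -> np.ndarray:
    """Apply a 4x4 rigid transform to an (N, 3) array of points."""
    return points @ T[:3, :3].T + T[:3, 3]

def chain_site_transforms(T_prev_curr):
    """Return [T_00, T_01, ..., T_0K] from the per-station transforms T_{k-1,k}.

    T_prev_curr[i] maps points from the tracker frame at site i + 1 into the
    tracker frame at site i (the result of global calibration at that step).
    """
    chain = [np.eye(4)]
    for T in T_prev_curr:
        chain.append(chain[-1] @ T)  # T_0k = T_0,k-1 @ T_k-1,k
    return chain

def merge_segments(segments, T_prev_curr):
    """Stack all segments in the site-0 tracker frame.

    segments: list of (site_index, points); points is an (N, 3) array already
    expressed in the tracker frame of its own site.
    """
    T_0k = chain_site_transforms(T_prev_curr)
    return np.vstack([apply_transform(T_0k[site], pts) for site, pts in segments])

# Hypothetical example: two coordinate transformations, each moving the
# tracker 1500 mm forward along x.
step = np.eye(4)
step[0, 3] = 1500.0
segments = [(0, np.array([[5.0, 5.0, 5.0]])), (2, np.array([[0.0, 0.0, 0.0]]))]
merged = merge_segments(segments, [step, step])
print(merged)
```

Expressing every site relative to site 0 keeps the merged cloud in a single frame, at the cost of every later site inheriting the errors of all earlier station transforms.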

3. System Principle

3.1. Working Principle of Collaborative Measurement

3.2. System Workflow

- (1) Before measurement, path and trajectory planning is performed for the AGVs and the robot, and the subsequent measurement and acquisition program is executed along the planned trajectory. The stereo target and the visual measurement system are also calibrated at this stage.

- (2) During measurement, the visual measurement system, the stereo target, and the target tracking system are used together to obtain the complete point cloud of the component to be measured. The steps are as follows:

- a. First, position the target tracking system and the visual measurement system at their initial points according to the path plan. Once measurement starts, the visual measurement system measures the workpiece point by point along the path planned by the motion planning module, while the target tracking system records the pose of the visual measurement system at each measuring point.

- b. When the workpiece is large, the robot must move forward by one station. The measurement chassis (AGV) travels a certain distance along the planned smooth curve at a predetermined speed and stops; the visual measurement system then moves smoothly through the measuring points along the planned path to perform the measurement, while the target tracking system records the pose of the stereo target. When the stereo target on the measurement chassis (AGV) leaves the measurement range of the target tracking system, the tracking chassis (AGV) must perform a coordinate transformation before measurement can continue.

- c. During the coordinate transformation, the target tracking system first records the pose of the stereo target; the tracking chassis (AGV) then moves forward by a distance suited to the working range of the target tracking system, stops, and records the pose of the stereo target again. Because the stereo target stays stationary between the two recordings, the two poses relate the tracker's coordinate systems before and after the move, and the coordinate transformation of the target tracking system is computed from them. Afterwards, the target tracking system and the visual measurement system continue measuring by taking images as in Steps a and b until the task is completed.

- d. Using the transformation obtained by local calibration, stitch and merge the local point clouds acquired by the visual measurement system into the coordinate system of the target tracking system. After each coordinate transformation, align and merge the point cloud segments acquired at adjacent sites using the transformation obtained by global calibration. Finally, the point cloud of the entire component to be measured is obtained.

- (3)

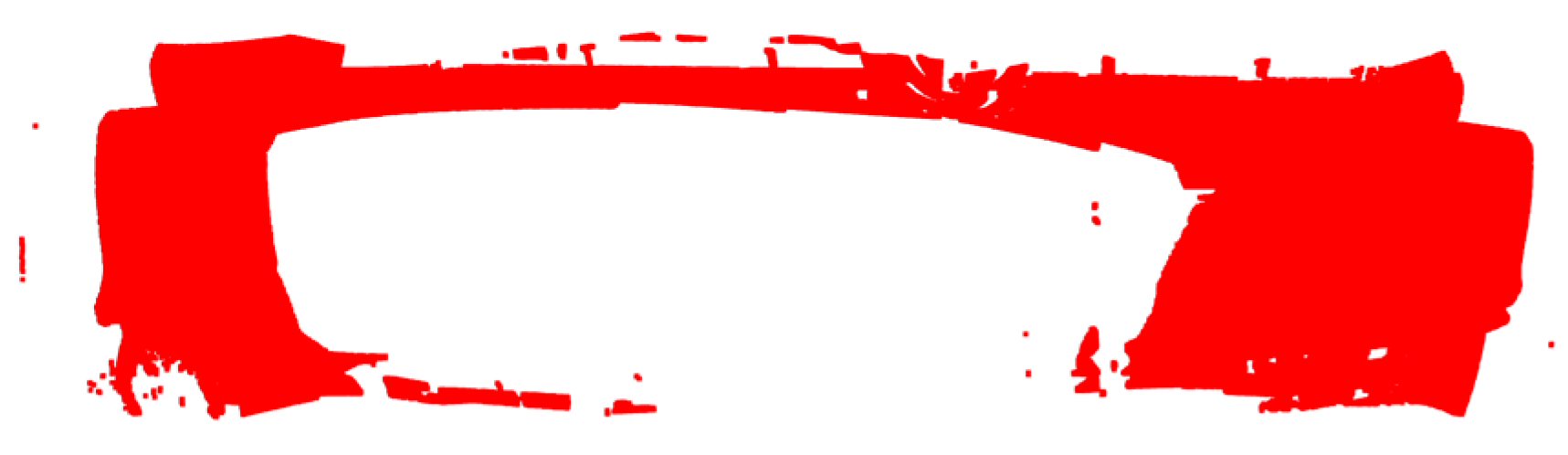

- After measurement, optimize the measured data. The proposed intelligent algorithm (DeepMerge) is used to effectively correct the accumulative errors of the point cloud of visual tracking and stitching.
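The coordinate transformation in Step c can be written as a single equation. If the stereo target does not move while the tracking AGV drives forward, its pose in the old tracker frame equals the station transform times its pose in the new frame, so the station transform is the old pose times the inverse of the new one. The NumPy sketch below shows this and then chains several station transforms that each carry a small yaw error, to illustrate how such errors compound into the cumulative error mentioned in (3). The 1500 mm step, the 0.01° error, and all function names are made-up assumptions; this is not DeepMerge.

```python
import numpy as np

def rigid_inverse(T: np.ndarray) -> np.ndarray:
    """Invert a 4x4 rigid transform via R^T rather than a general matrix inverse."""
    R, t = T[:3, :3], T[:3, 3]
    T_inv = np.eye(4)
    T_inv[:3, :3] = R.T
    T_inv[:3, 3] = -R.T @ t
    return T_inv

def station_transfer(T_old_target: np.ndarray, T_new_target: np.ndarray) -> np.ndarray:
    """Transform mapping the tracker frame after the AGV move into the frame before it.

    Assumes a stationary target: T_old_target = T_old_new @ T_new_target,
    hence T_old_new = T_old_target @ inv(T_new_target).
    """
    return T_old_target @ rigid_inverse(T_new_target)

def transform(x: float = 0.0, yaw_rad: float = 0.0) -> np.ndarray:
    """Planar rigid transform: translation x along the x-axis, rotation yaw about z."""
    c, s = np.cos(yaw_rad), np.sin(yaw_rad)
    T = np.eye(4)
    T[:2, :2] = [[c, -s], [s, c]]
    T[0, 3] = x
    return T

# Step c with made-up numbers: the target is 2000 mm ahead of the tracker,
# the tracking AGV drives 1500 mm forward, and the target is then 500 mm ahead.
T_old_new = station_transfer(transform(x=2000.0), transform(x=500.0))
print(T_old_new[:3, 3])  # position of the new tracker frame in the old one

# Cumulative error: chain five station transfers, each with a 0.01 degree yaw
# error, and track where a point 1000 mm ahead of the last tracker frame lands.
eps = np.deg2rad(0.01)
point = np.array([1000.0, 0.0, 0.0, 1.0])
drift = []
T_true, T_noisy = np.eye(4), np.eye(4)
for _ in range(5):
    T_true = T_true @ transform(x=1500.0)
    T_noisy = T_noisy @ transform(x=1500.0, yaw_rad=eps)
    drift.append(np.linalg.norm((T_noisy @ point - T_true @ point)[:3]))
print(np.round(drift, 3))  # drift in mm after 1..5 station changes
```

The drift grows faster than linearly with the number of station changes, because each rotation error also rotates every translation that follows it.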

4. System Core Modules

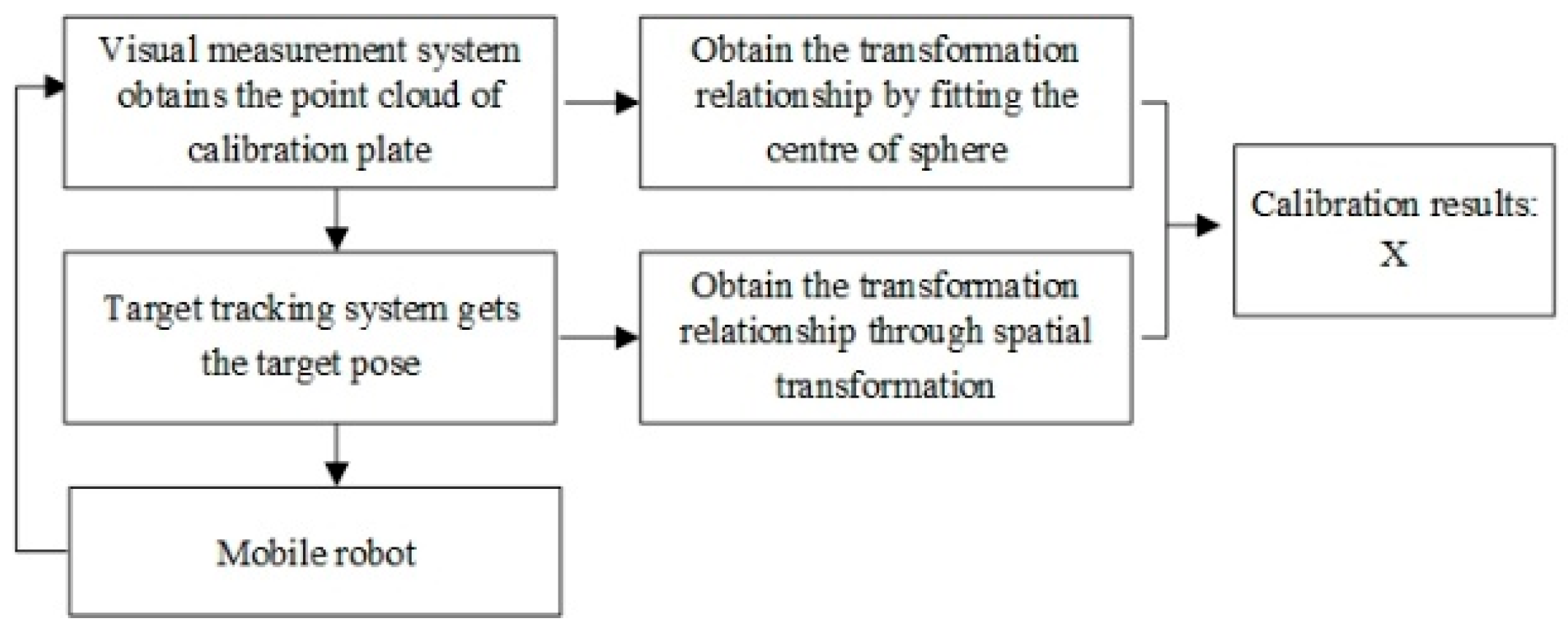

4.1. Calibration Module

- (1) Spray the calibration plate with developer (an anti-glare scanning spray) and place it within the measurable range of the measurement camera to obtain the point cloud of the calibration plate in the measurement area;

- (2) Import the point cloud into Geomagic and fit the three spheres on the plate to obtain their center coordinates;

- (3) Move the robot arm to change the pose of the target and the measurement camera, and at each pose obtain the transformation relationship () between the measurement coordinate system and the calibration coordinate system. At the same moment, the tracking camera tracks the target to obtain the transformation relationship () between the target and the tracking camera.

- (4) Keep the distance between the measurement camera and the calibration plate within the effective field of view, collect as many data sets as possible, and compute the calibration result. The transformation relationship between the measurement camera and the target is

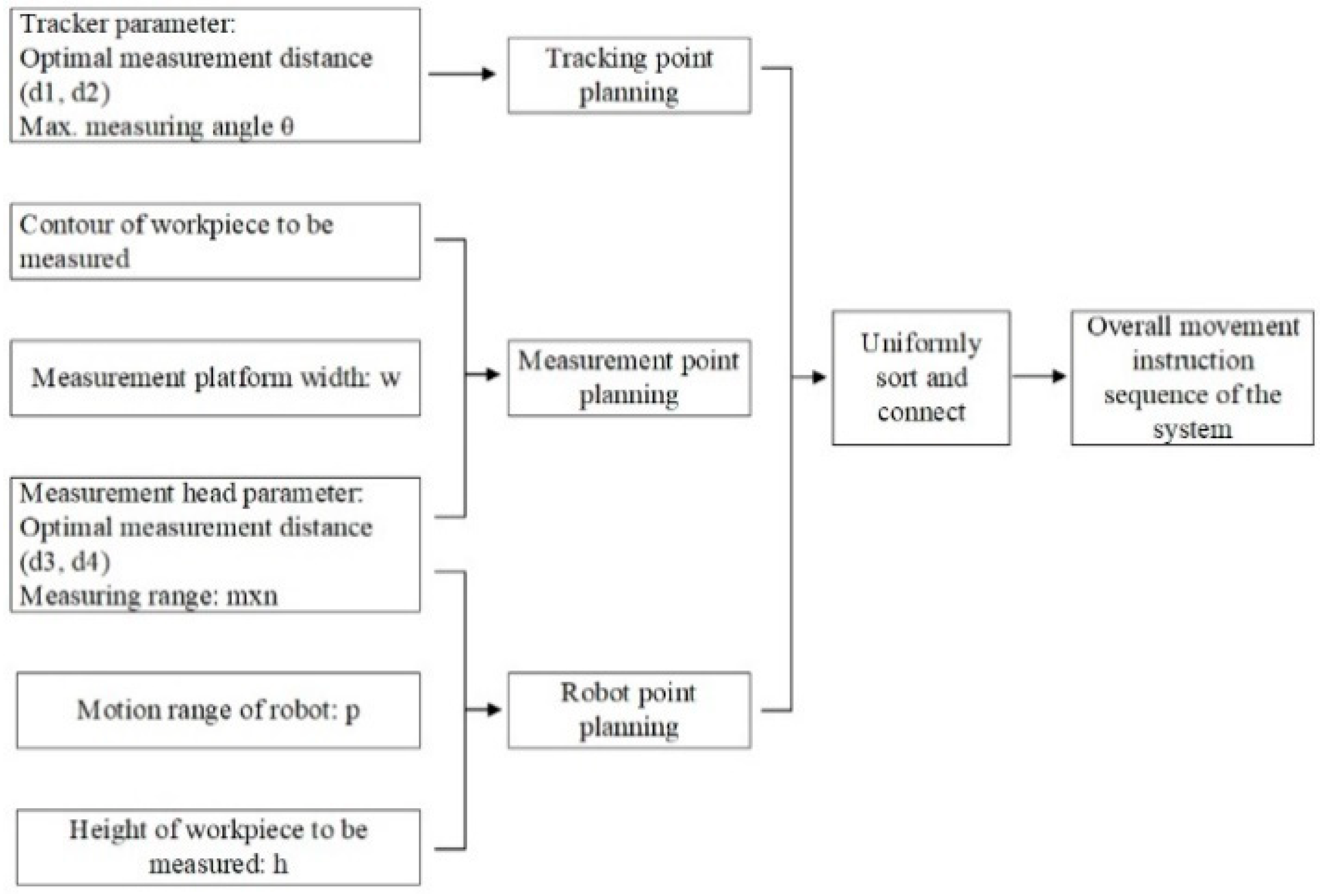
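Because the tracking camera and the calibration plate both stay fixed while the arm moves, steps (3) and (4) lead to a hand-eye problem. Writing A_i for the target pose seen by the tracking camera, B_i for the plate pose seen by the measurement camera, and X for the unknown target-to-camera transform, every pose satisfies A_i X B_i = C for one constant C; any two poses i, j then give inv(A_j) A_i X = X B_j inv(B_i), i.e., AX = XB. The NumPy sketch below solves this with a common two-step method (rotation axes aligned by an SVD/Kabsch fit, then linear least squares for the translation). It is an assumed solver, not necessarily the one behind the authors' equation, and the symbols, function names, and synthetic poses are illustrative.

```python
import numpy as np

def skew(v):
    """3x3 cross-product matrix of a 3-vector."""
    return np.array([[0.0, -v[2], v[1]], [v[2], 0.0, -v[0]], [-v[1], v[0], 0.0]])

def exp_so3(rotvec):
    """Rodrigues formula: rotation vector (axis * angle) -> rotation matrix."""
    theta = np.linalg.norm(rotvec)
    if theta < 1e-12:
        return np.eye(3)
    K = skew(rotvec / theta)
    return np.eye(3) + np.sin(theta) * K + (1.0 - np.cos(theta)) * (K @ K)

def log_so3(R):
    """Rotation matrix -> rotation vector; assumes the angle is not near 0 or pi."""
    theta = np.arccos(np.clip((np.trace(R) - 1.0) / 2.0, -1.0, 1.0))
    w = np.array([R[2, 1] - R[1, 2], R[0, 2] - R[2, 0], R[1, 0] - R[0, 1]])
    return theta * w / (2.0 * np.sin(theta))

def pose(rotvec, t):
    """4x4 rigid transform from a rotation vector and a translation."""
    T = np.eye(4)
    T[:3, :3] = exp_so3(np.asarray(rotvec, dtype=float))
    T[:3, 3] = t
    return T

def solve_ax_xb(A_list, B_list):
    """Least-squares rigid X with A_k X = X B_k for every relative motion pair k."""
    alphas = np.array([log_so3(A[:3, :3]) for A in A_list])
    betas = np.array([log_so3(B[:3, :3]) for B in B_list])
    # Rotation: R_A = R_X R_B R_X^T implies alpha_k = R_X beta_k (Kabsch / SVD fit).
    U, _, Vt = np.linalg.svd(betas.T @ alphas)
    d = np.sign(np.linalg.det(Vt.T @ U.T))
    R_X = Vt.T @ np.diag([1.0, 1.0, d]) @ U.T
    # Translation: (R_A - I) t_X = R_X t_B - t_A, stacked over all motions.
    M = np.vstack([A[:3, :3] - np.eye(3) for A in A_list])
    rhs = np.concatenate([R_X @ B[:3, 3] - A[:3, 3] for A, B in zip(A_list, B_list)])
    t_X = np.linalg.lstsq(M, rhs, rcond=None)[0]
    X = np.eye(4)
    X[:3, :3], X[:3, 3] = R_X, t_X
    return X

# Synthetic check with made-up poses (mm, rad).
inv = np.linalg.inv
X_true = pose([0.1, -0.2, 0.3], [50.0, -20.0, 120.0])   # target -> measurement camera
C = pose([0.0, 0.0, 0.2], [0.0, 300.0, 2500.0])         # tracker -> plate, constant
A_abs = [pose([0.3, 0.0, 0.0], [0.0, 0.0, 2000.0]),
         pose([0.0, 0.4, 0.0], [100.0, 50.0, 2100.0]),
         pose([0.0, 0.0, 0.5], [-80.0, 20.0, 1900.0]),
         pose([0.2, 0.3, -0.1], [30.0, -60.0, 2050.0])]
B_abs = [inv(X_true) @ inv(A) @ C for A in A_abs]        # from A_i X B_i = C
A_rel = [inv(A_abs[k + 1]) @ A_abs[k] for k in range(3)]
B_rel = [B_abs[k + 1] @ inv(B_abs[k]) for k in range(3)]
X_est = solve_ax_xb(A_rel, B_rel)
print(np.allclose(X_est, X_true))
```

At least two relative motions with non-parallel rotation axes are needed for a unique X; collecting many poses, as step (4) recommends, averages out measurement noise in the least-squares fit.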

4.2. Trajectory Planning Module

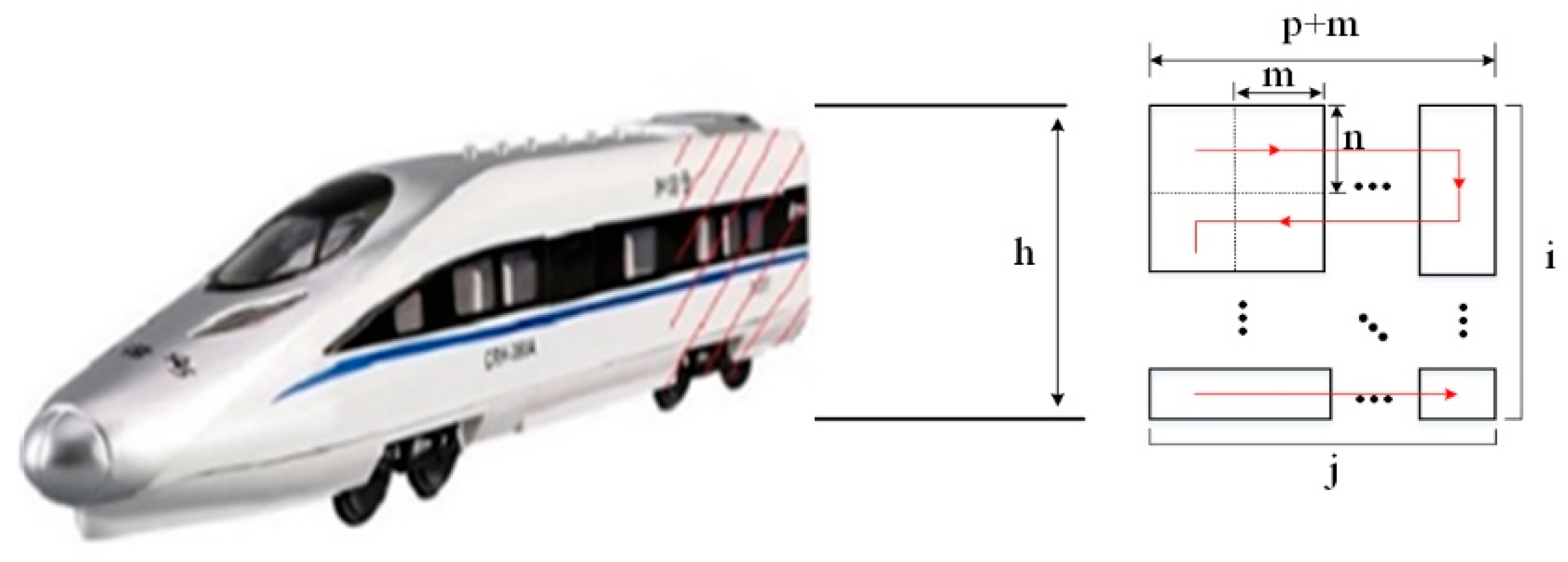

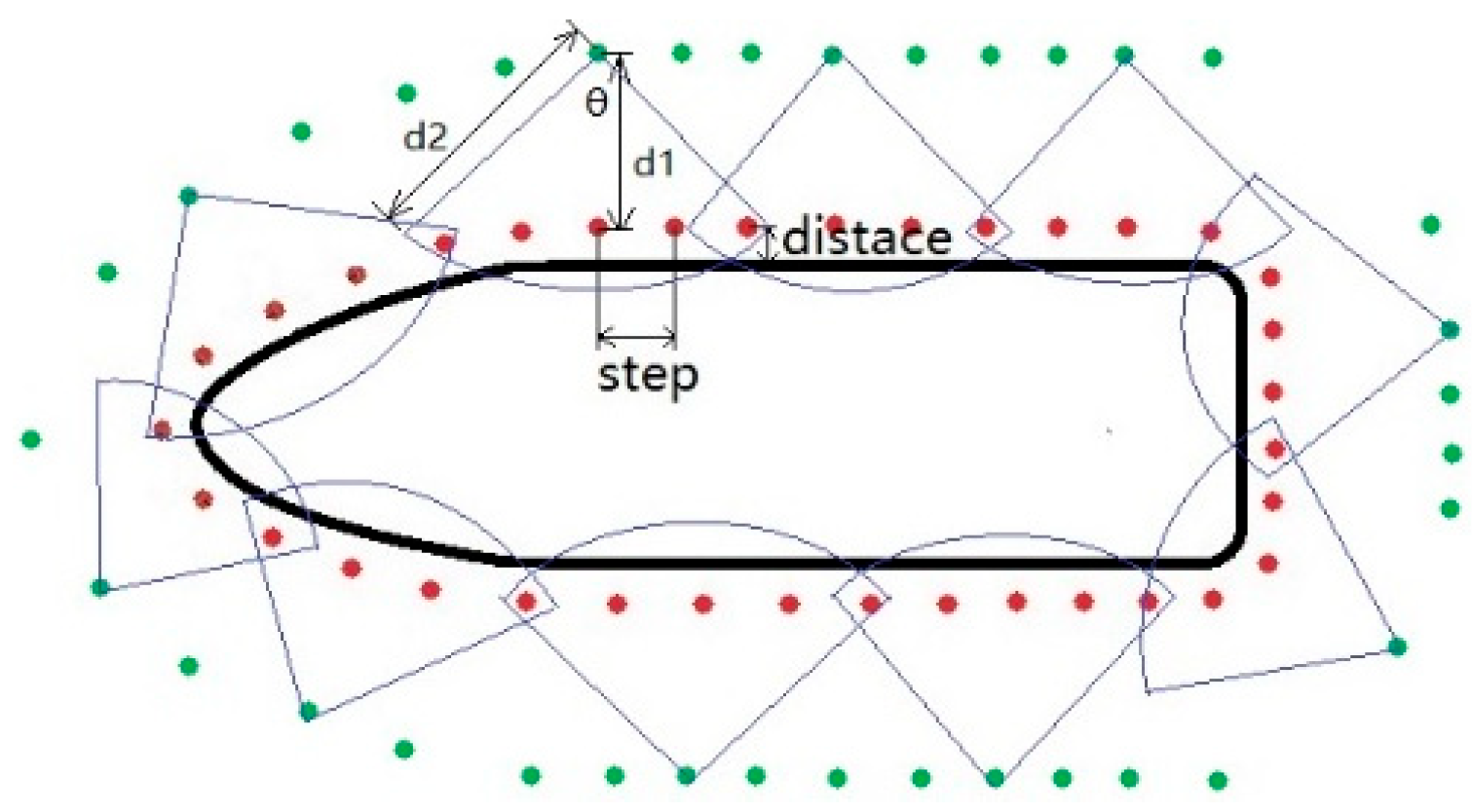

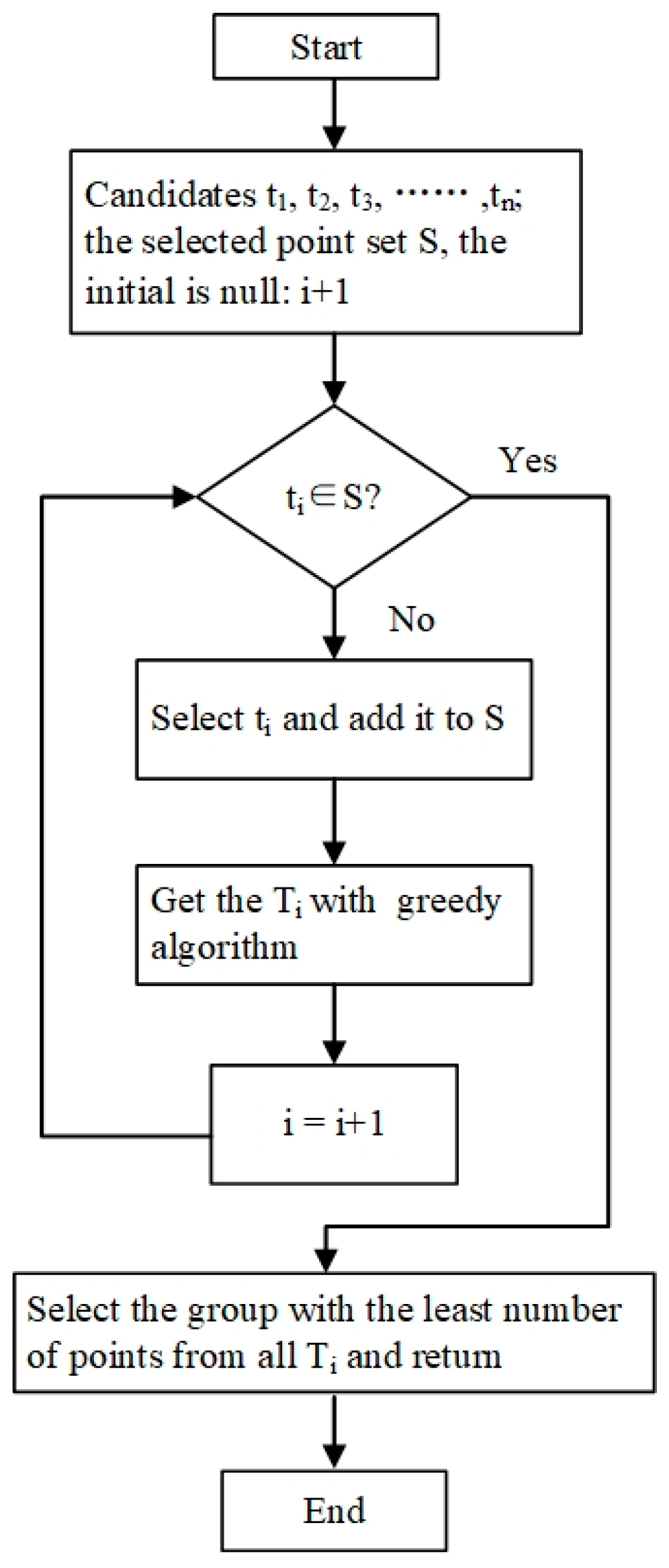

4.2.1. Trajectory Planning of the Optical Measurement Robot

- (1) Mobile Measuring Chassis (AGV)

- (2) Trajectory planning for the optical measurement robot arm
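The motion planning module requires stable, continuous motion between planned points. One common way to obtain this, assumed here and not necessarily the authors' choice, is rest-to-rest quintic time scaling per joint (or per chassis coordinate): position, velocity, and acceleration are all continuous, and velocity and acceleration are zero at both ends of the segment. The function names and the 1.2 rad / 2 s example are hypothetical.

```python
import numpy as np
from numpy.polynomial import polynomial as P

def quintic_coeffs(q0: float, q1: float, duration: float) -> np.ndarray:
    """Coefficients (lowest order first) of a rest-to-rest quintic from q0 to q1.

    q(t) = q0 + d * (10 s^3 - 15 s^4 + 6 s^5), with s = t / duration and d = q1 - q0,
    so velocity and acceleration vanish at t = 0 and t = duration.
    """
    d = q1 - q0
    T = duration
    return np.array([q0, 0.0, 0.0, 10 * d / T**3, -15 * d / T**4, 6 * d / T**5])

def sample(coeffs: np.ndarray, t: np.ndarray):
    """Position, velocity, and acceleration of the polynomial at times t."""
    pos = P.polyval(t, coeffs)
    vel = P.polyval(t, P.polyder(coeffs))
    acc = P.polyval(t, P.polyder(coeffs, 2))
    return pos, vel, acc

# Hypothetical joint move: 0 -> 1.2 rad in 2 s, sampled at 5 instants.
coeffs = quintic_coeffs(0.0, 1.2, 2.0)
pos, vel, acc = sample(coeffs, np.linspace(0.0, 2.0, 5))
print(pos, vel, acc, sep="\n")
```

Peak velocity occurs at mid-segment and equals 1.875 times the average velocity, which gives a simple check against joint or chassis speed limits when choosing the segment duration.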

4.2.2. Trajectory Planning of the Visual Tracking Robot

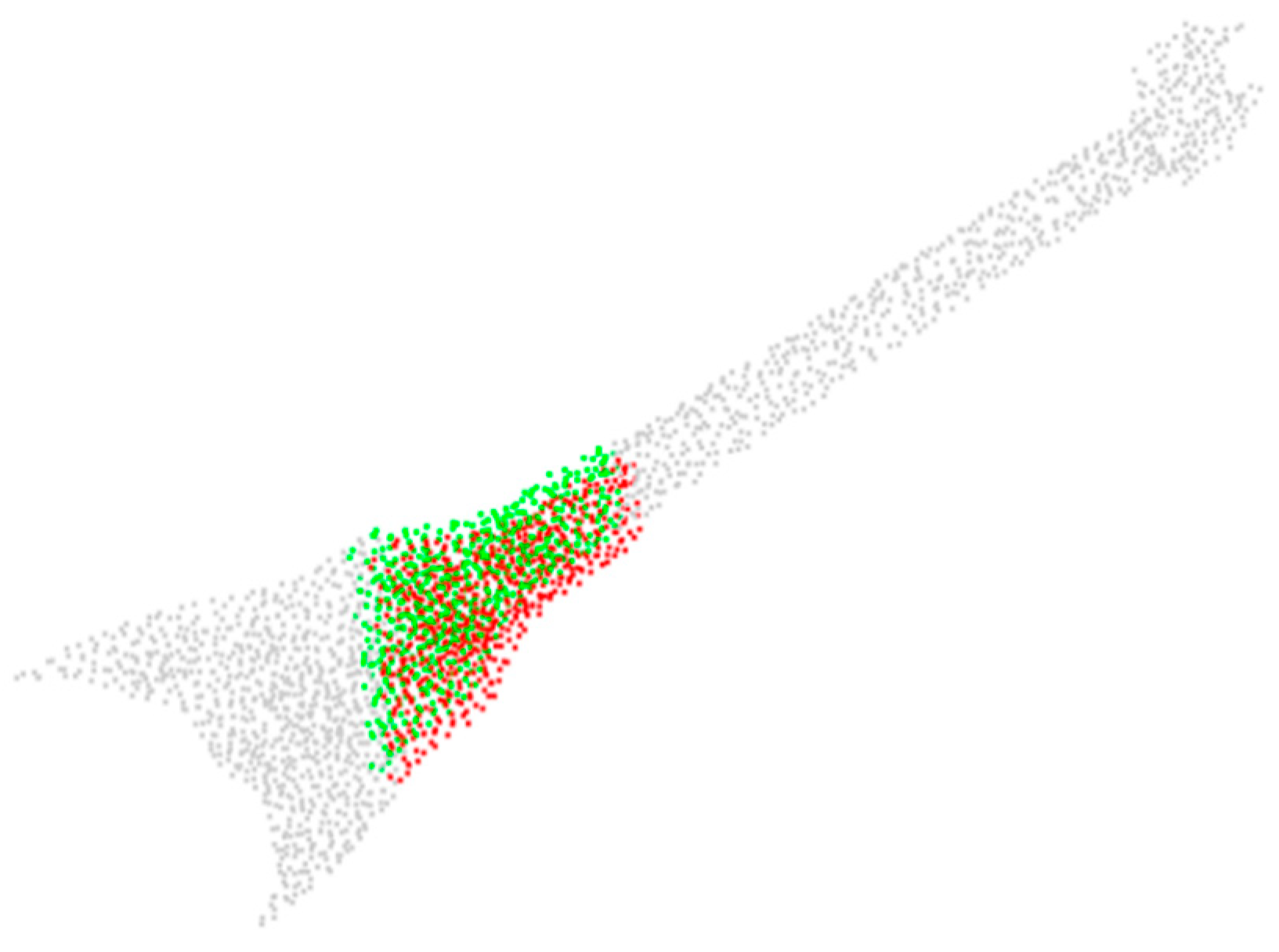

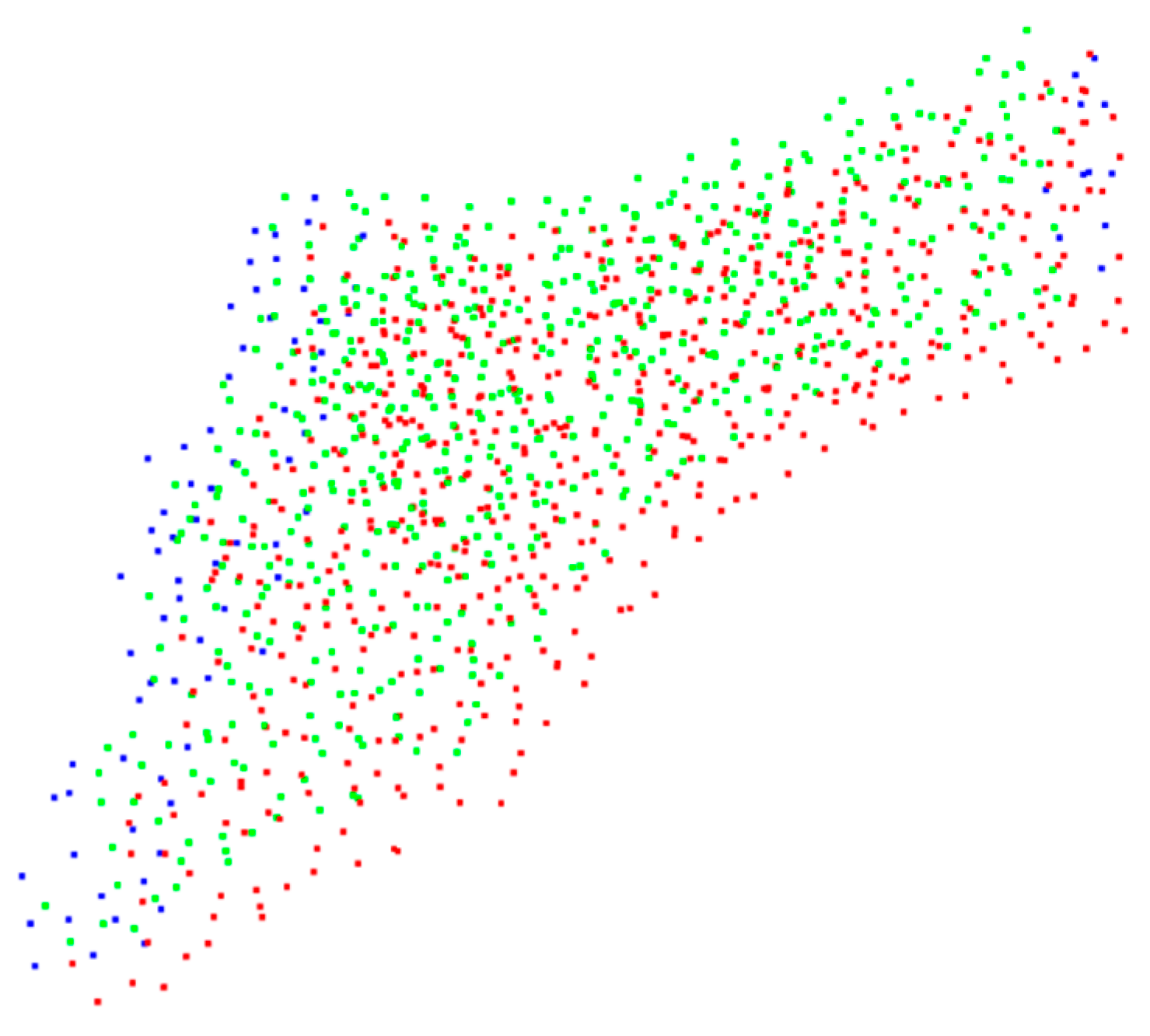

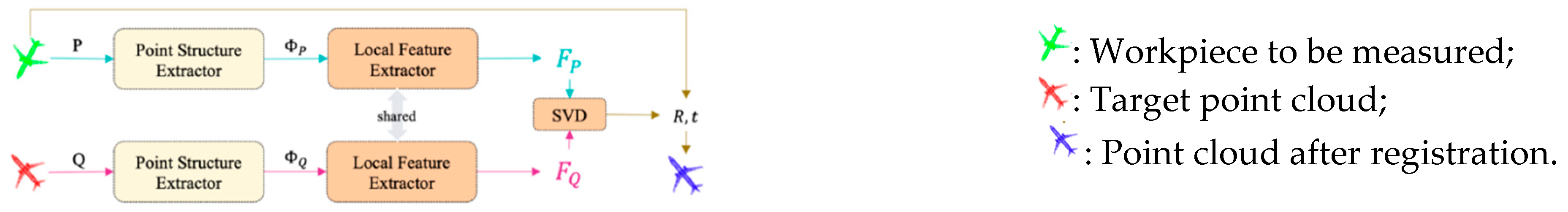

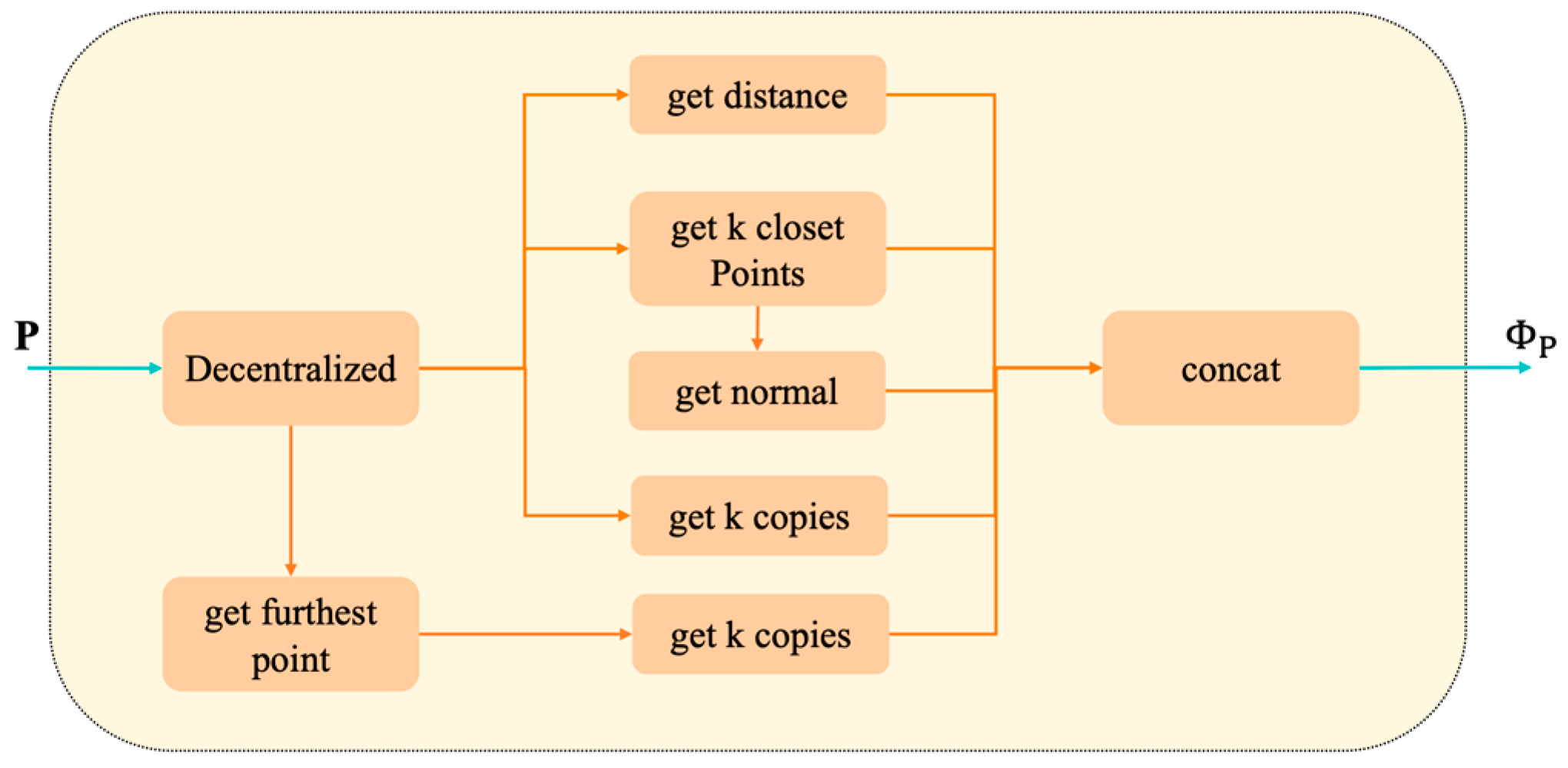

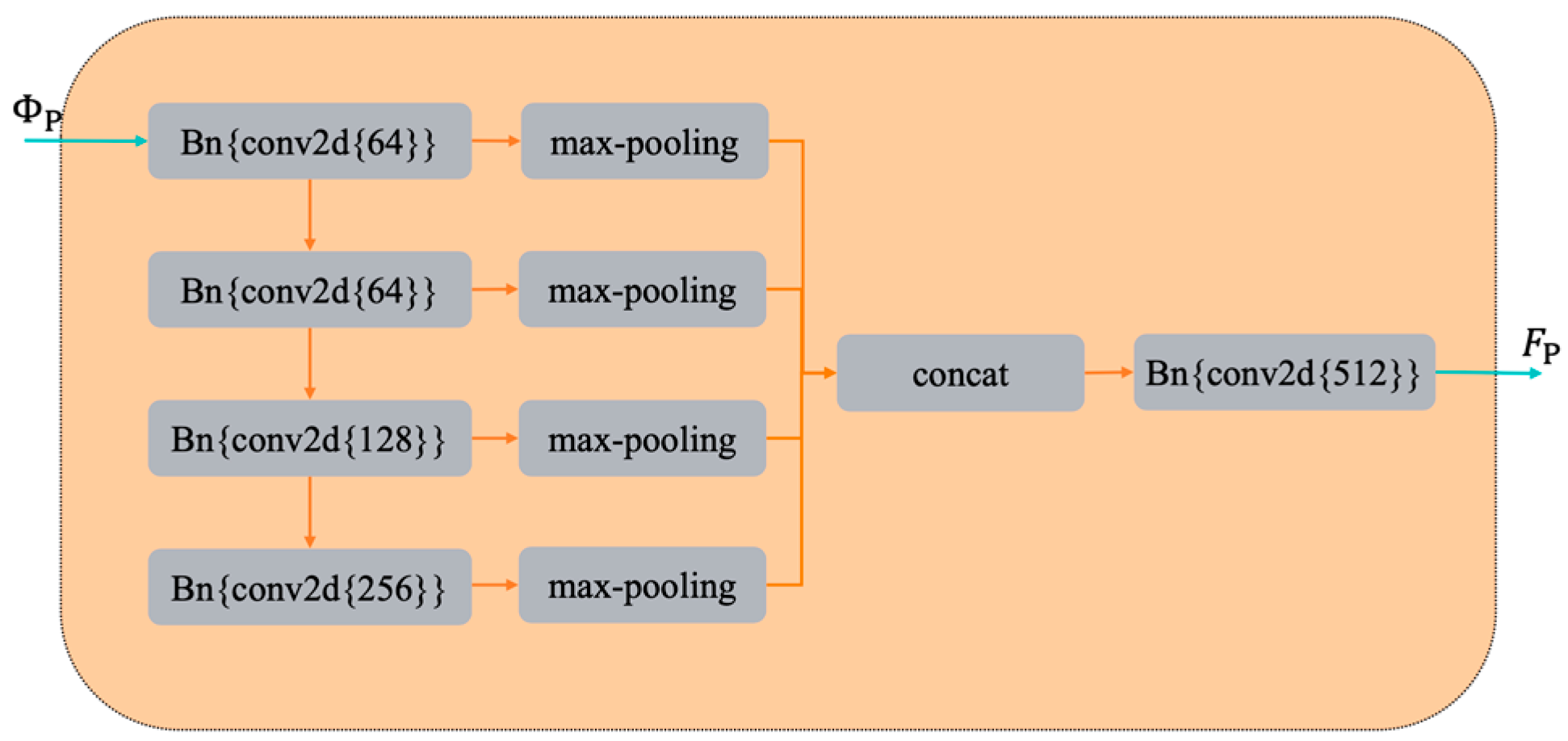

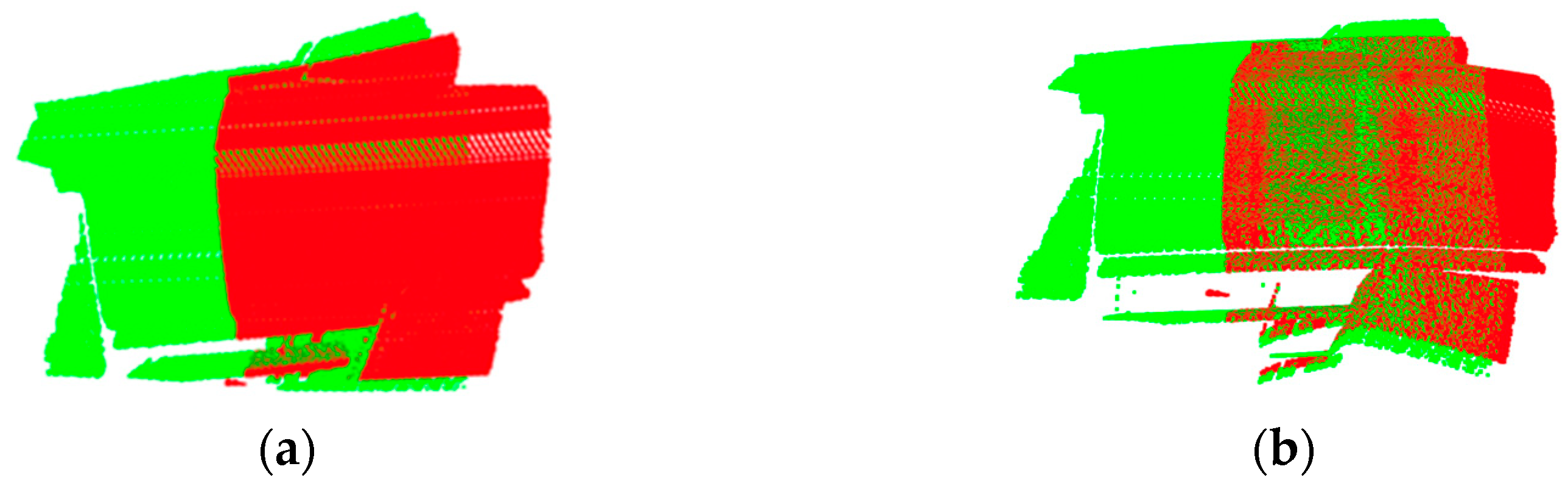

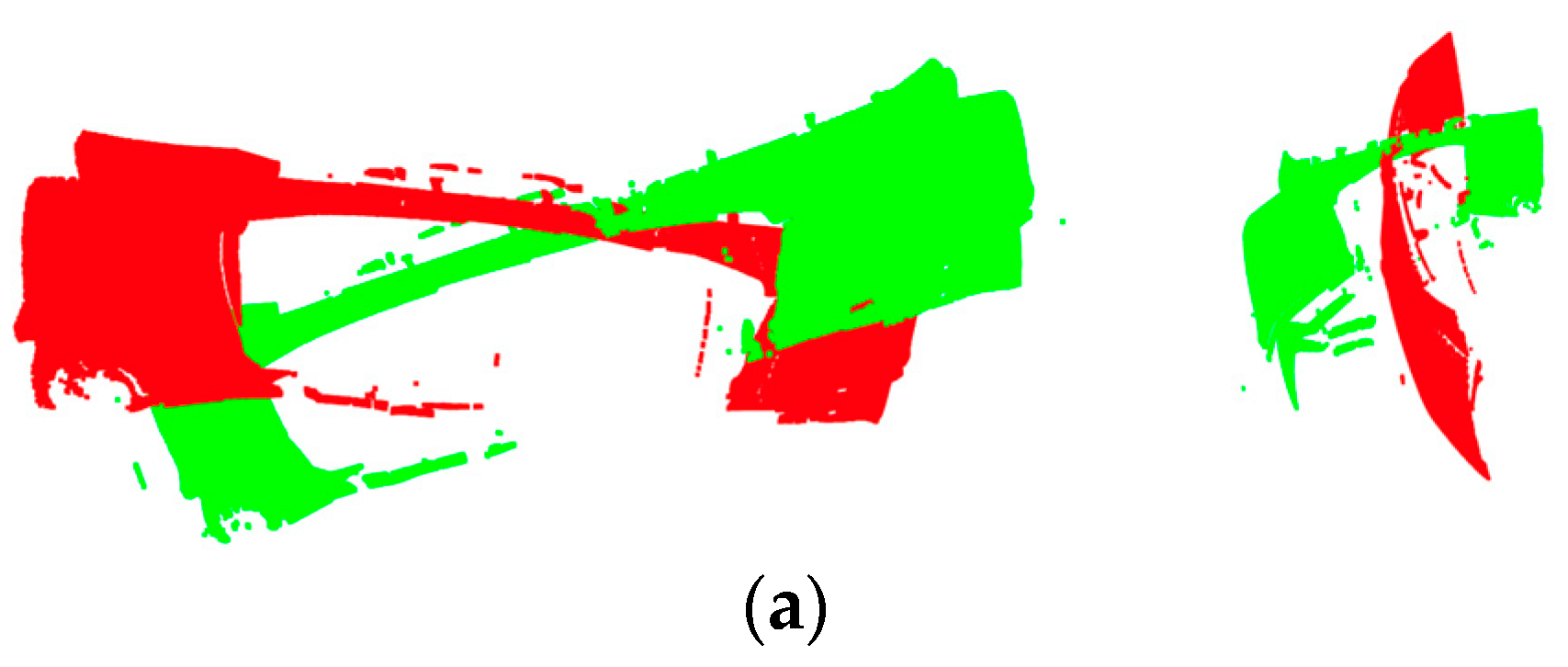

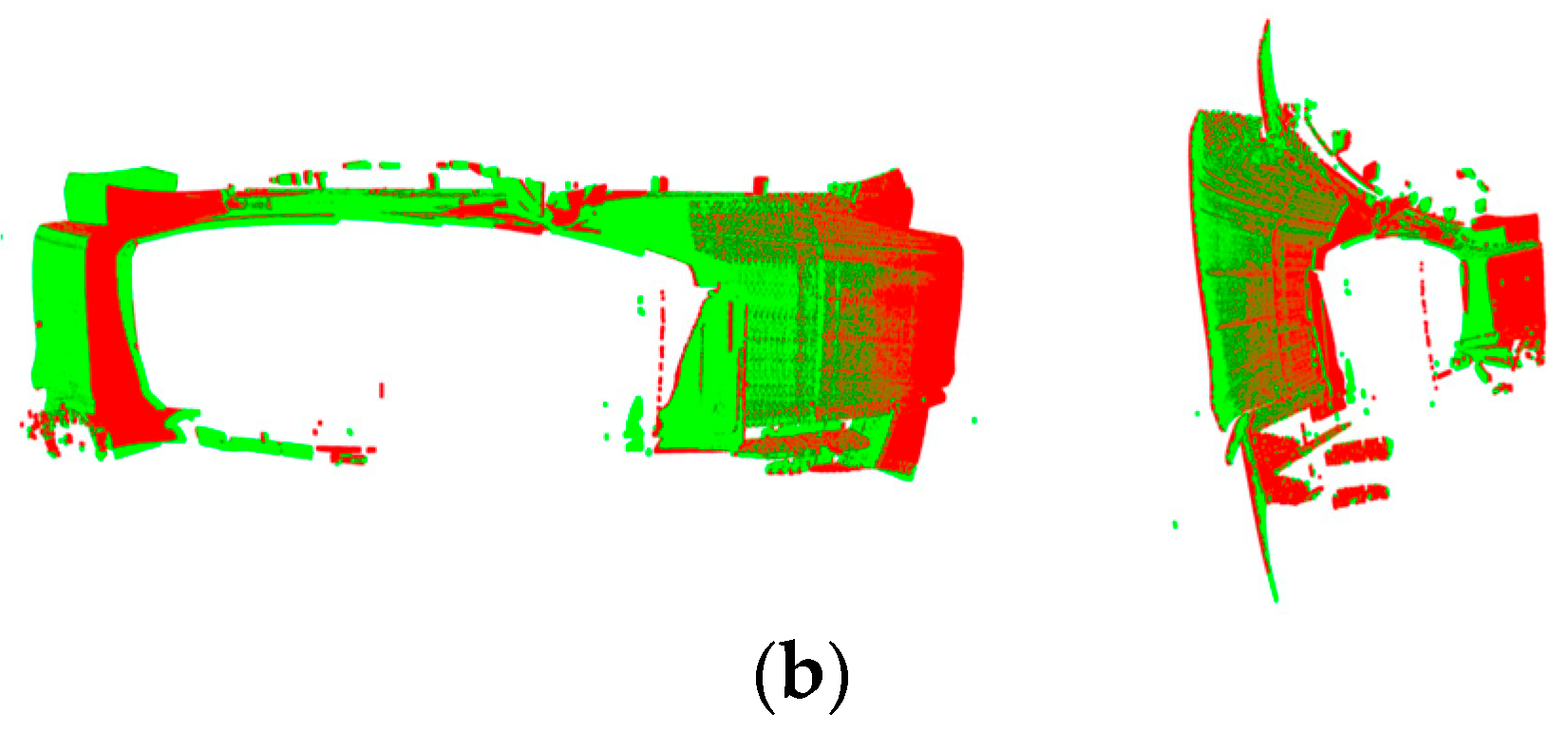

4.3. DeepMerge for Point Cloud Stitching Based on Deep Learning

- (1) Principle of DeepMerge for point cloud stitching

- (2) Model structure of DeepMerge

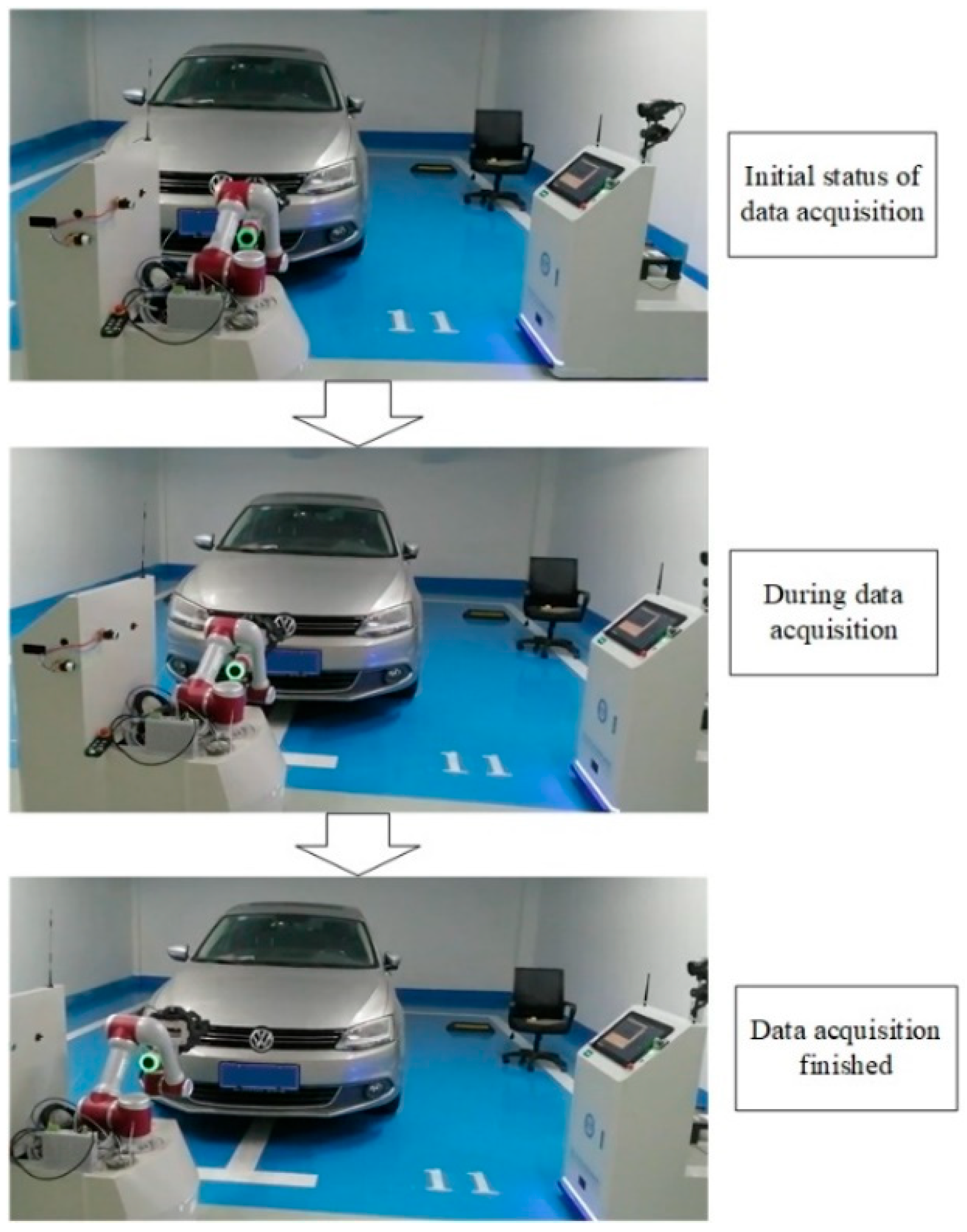

5. Point Cloud Data Collection and Stitching Experiment

5.1. Introduction to the Experimental Platform

5.2. Data Collection Experiment

5.3. Data Stitching Experiment

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Qi, L.; Gan, Z.; Ren, J.; Wu, F.; Su, H.; Mei, Z.; Sun, Y. Collaborative Measurement System of Dual Mobile Robots That Integrates Visual Tracking and 3D Measurement. Machines 2022, 10, 540. https://doi.org/10.3390/machines10070540
