Improved DCNN Based on Multi-Source Signals for Motor Compound Fault Diagnosis

Abstract

1. Introduction

- (1)

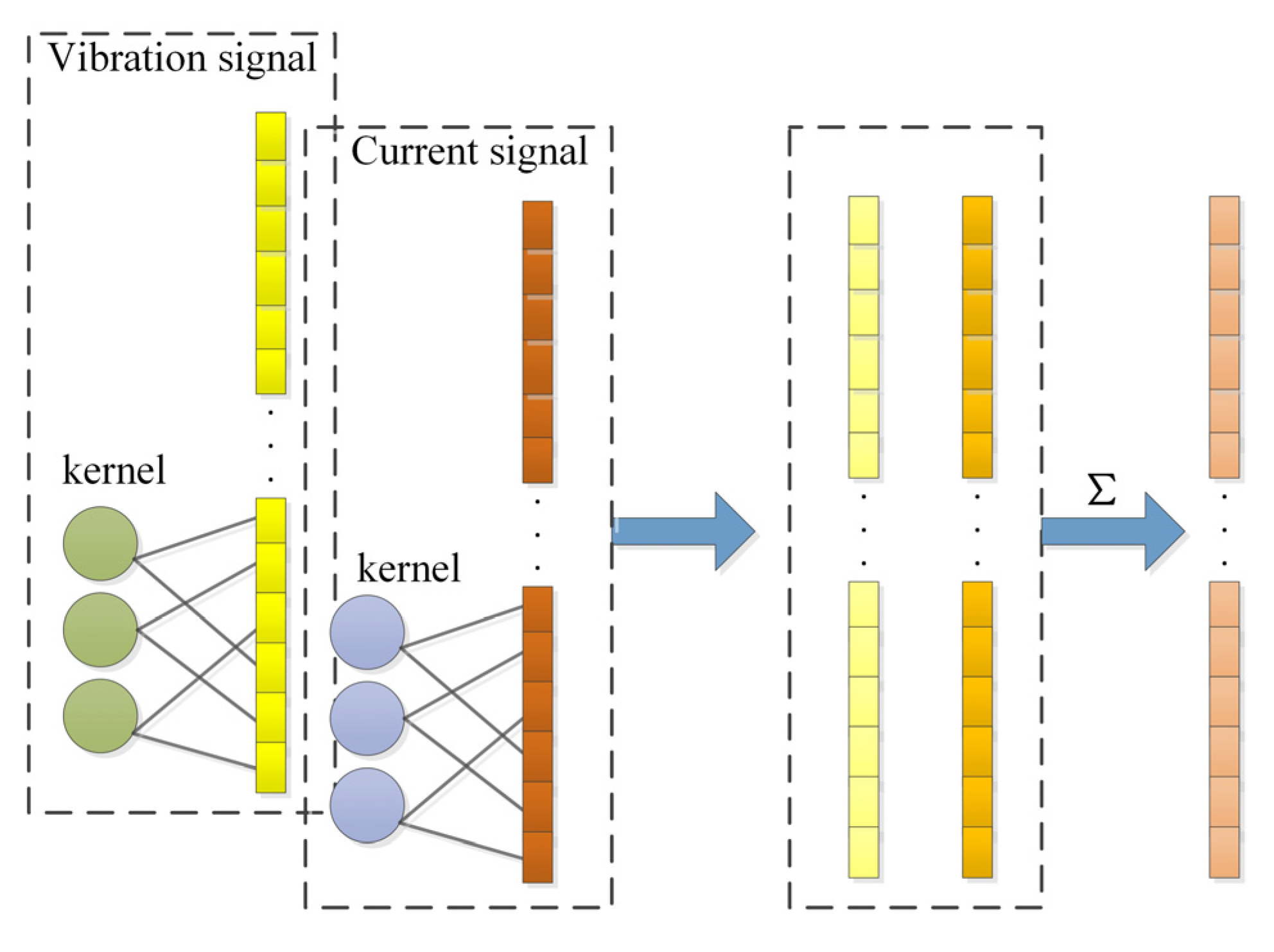

- An improved multi-channel DCNN model was constructed to achieve the purpose of effective multi-source signal fusion.

- (2)

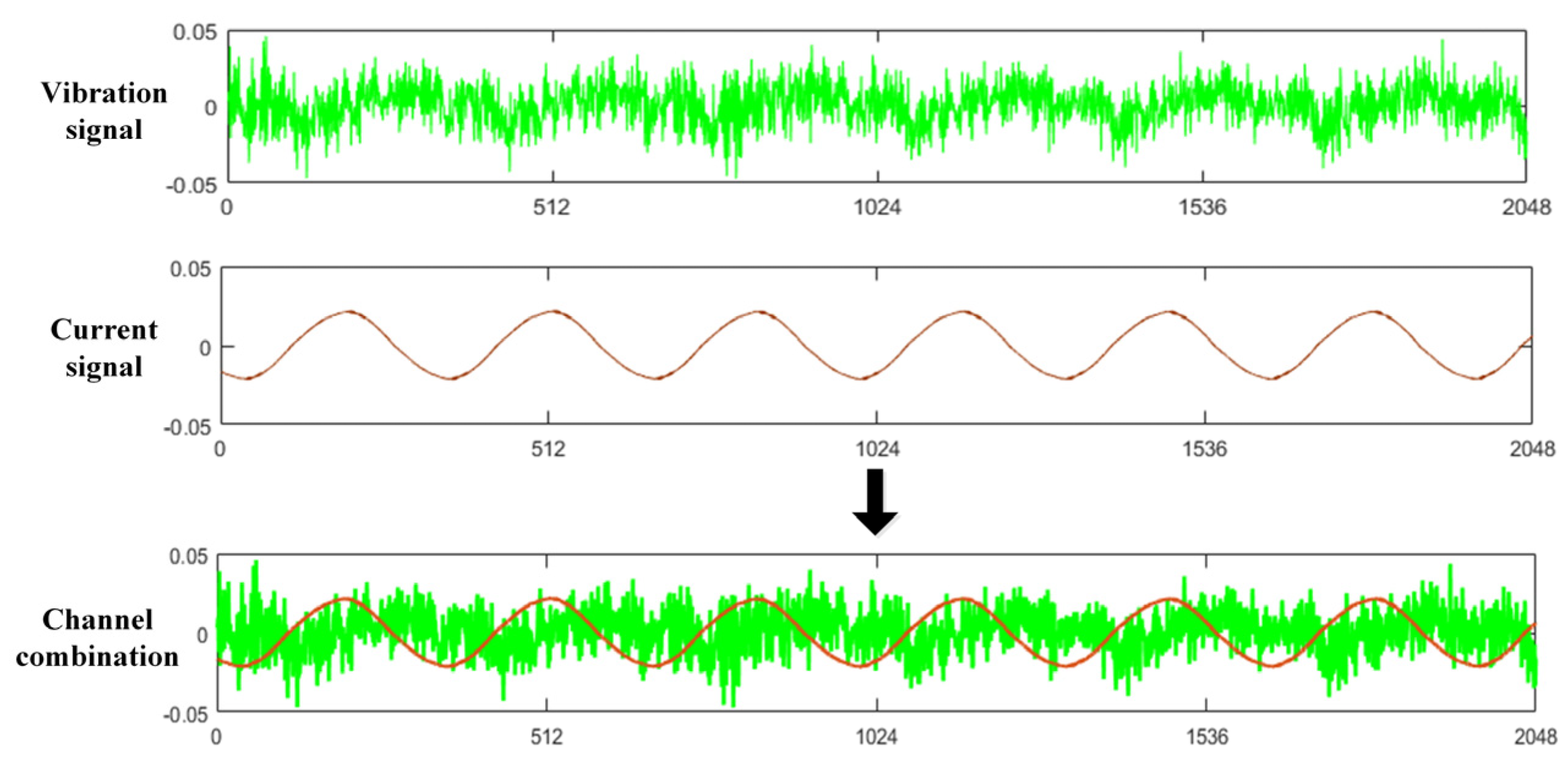

- The model analysis was based on original one-dimensional vibration signal and current signals collected by multiple sensors, and the multi-source signal comprehensively covered the fault information of the motor compound fault.

- (3)

- The SELU activation function is used to effectively avoid gradient disappearance and gradient explosion during training.

- (4)

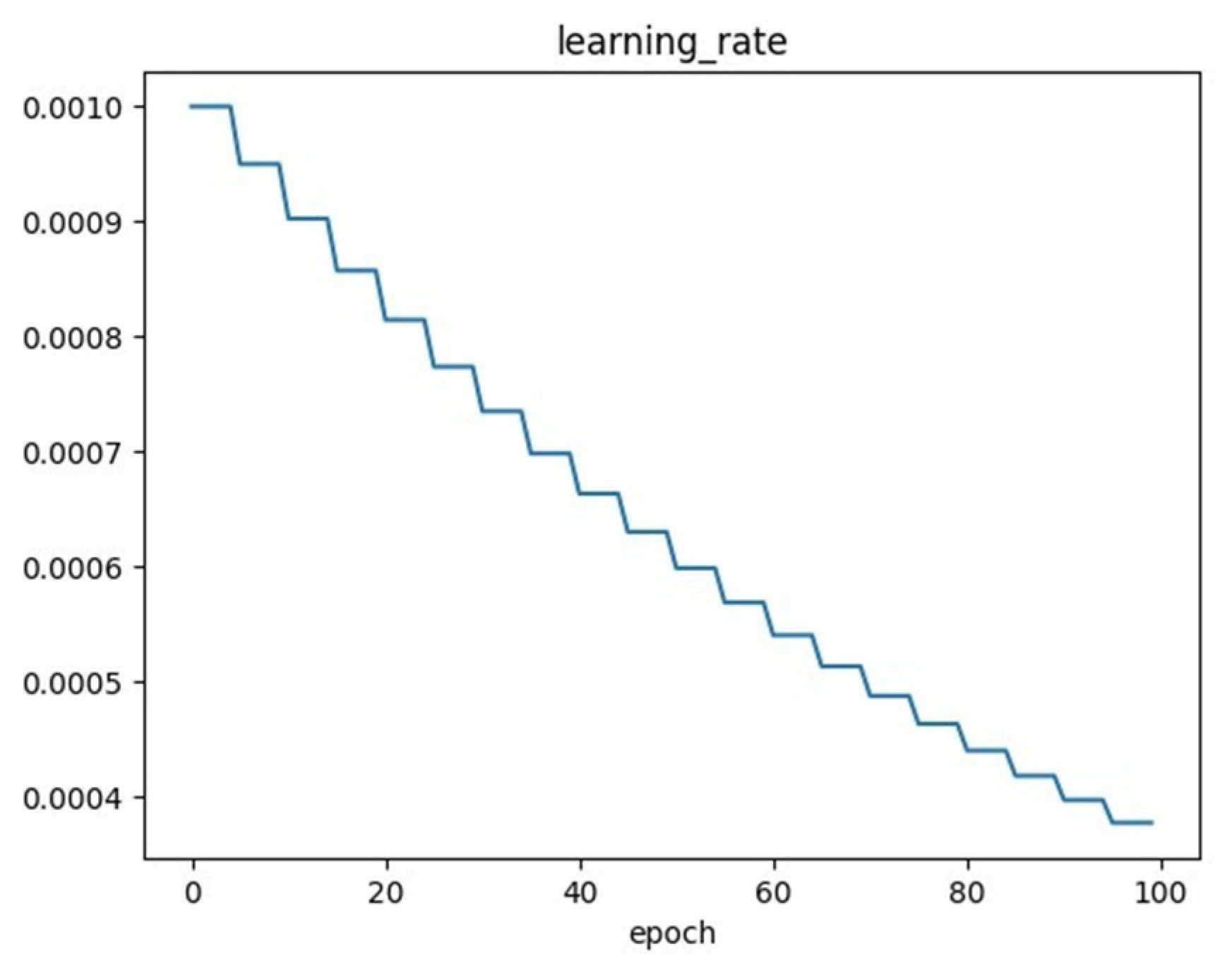

- The combination of dynamic attenuated learning rate and Adam Optimizer method improves the model’s training stability and ensures convergence accuracy.
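As a rough illustration of the activation named in the list above, the following sketch evaluates SeLU (commonly written SELU) next to ReLU for a few scalar inputs. The constants are the standard published SELU values and are an assumption here, since the text does not list them; the function names are illustrative only.

```python
import math

# Standard SELU constants; assumed here, not taken from the article.
SELU_ALPHA = 1.6732632423543772
SELU_SCALE = 1.0507009873554805


def selu(x: float) -> float:
    """Scaled exponential linear unit for a single value."""
    if x > 0:
        return SELU_SCALE * x
    # Negative inputs saturate smoothly towards -SELU_SCALE * SELU_ALPHA.
    return SELU_SCALE * SELU_ALPHA * (math.exp(x) - 1.0)


def relu(x: float) -> float:
    """Rectified linear unit, shown only for comparison."""
    return max(0.0, x)


for value in (-2.0, 0.0, 1.0):
    print(value, selu(value), relu(value))
```

Unlike ReLU, SeLU keeps a non-zero output and gradient for negative inputs, which is the property usually credited with keeping activations well scaled in deep networks.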

2. Model and Methodology

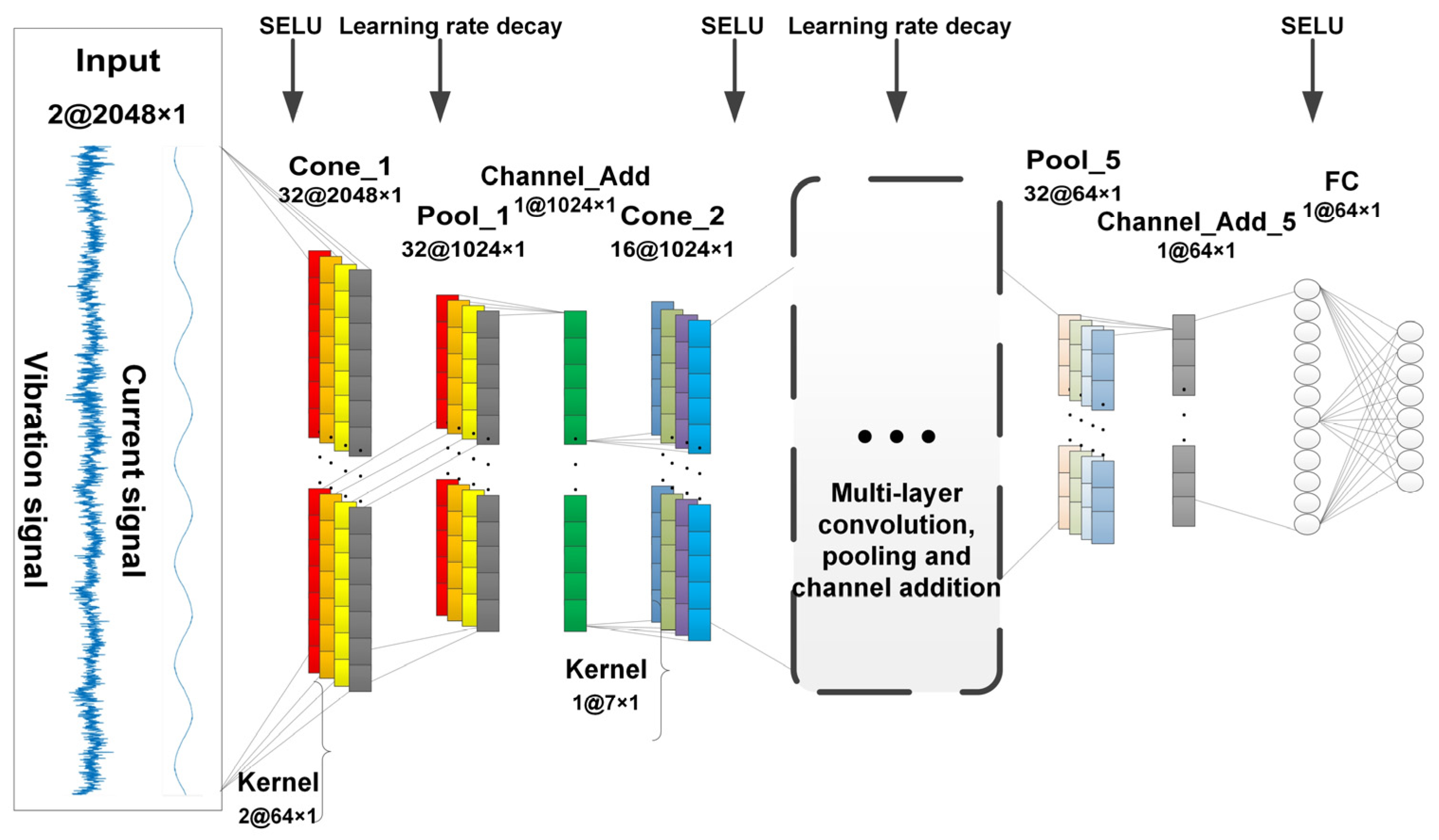

2.1. Architecture of MC-DCNN

2.2. SeLU Activation Function

2.3. Adam Optimizer with Dynamic Attenuation Learning Rate

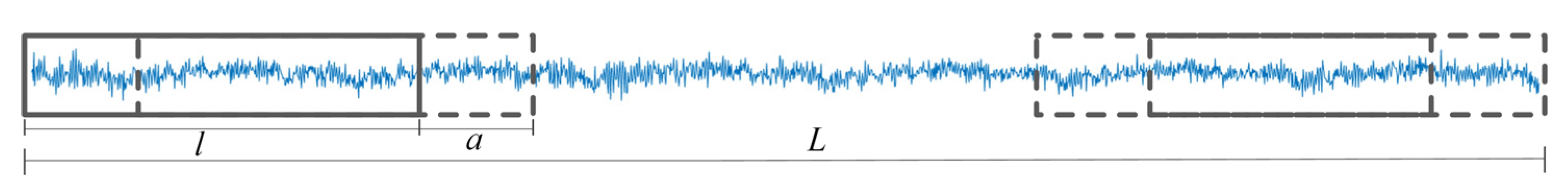

2.4. Data Augmentation Method

3. MC-DCNN Fault Diagnosis Framework

4. Experiments and Results

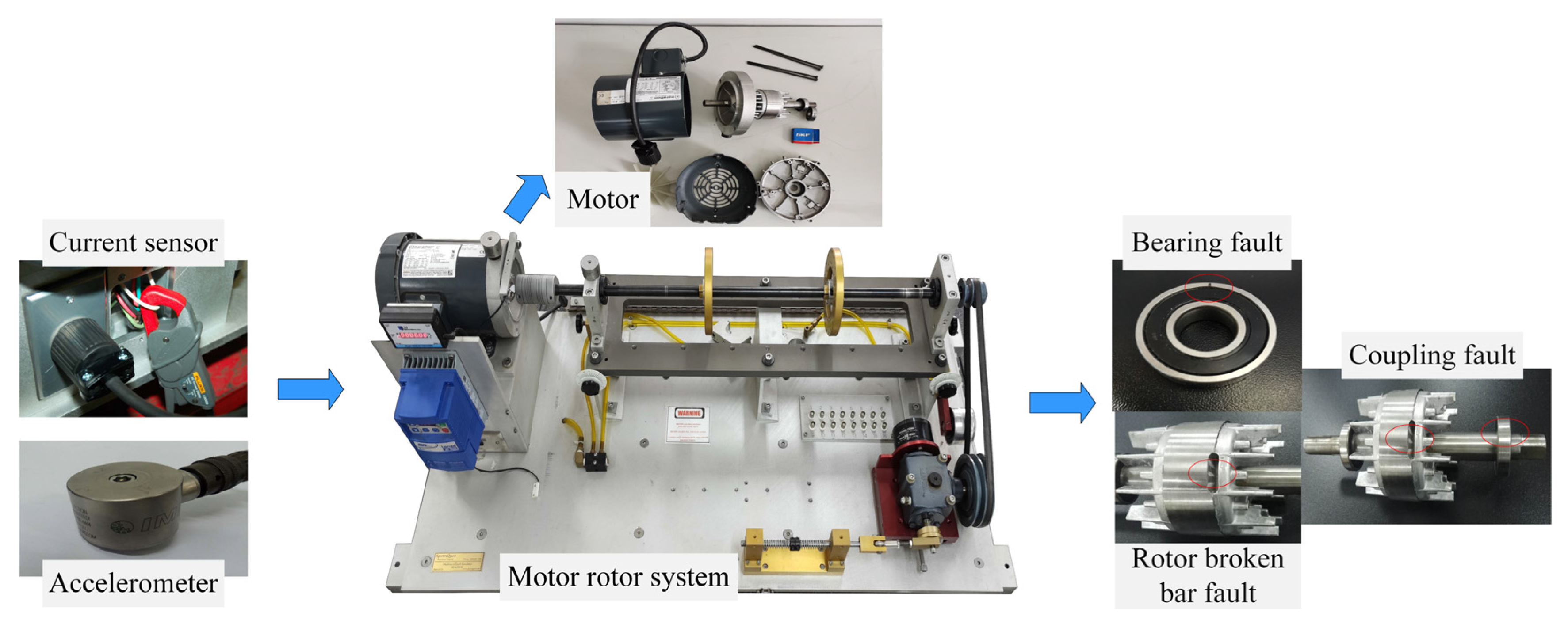

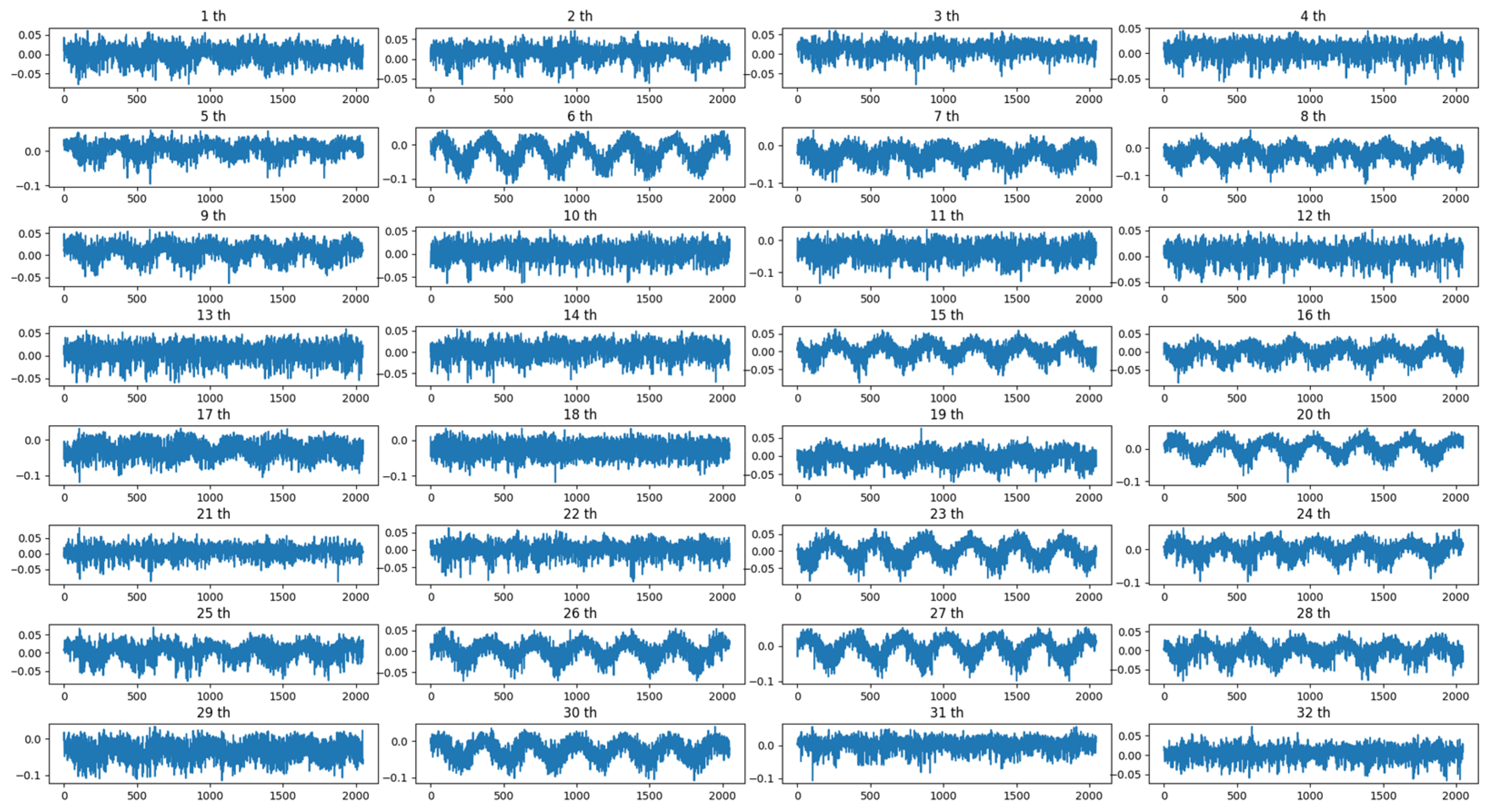

4.1. Experimental Setting

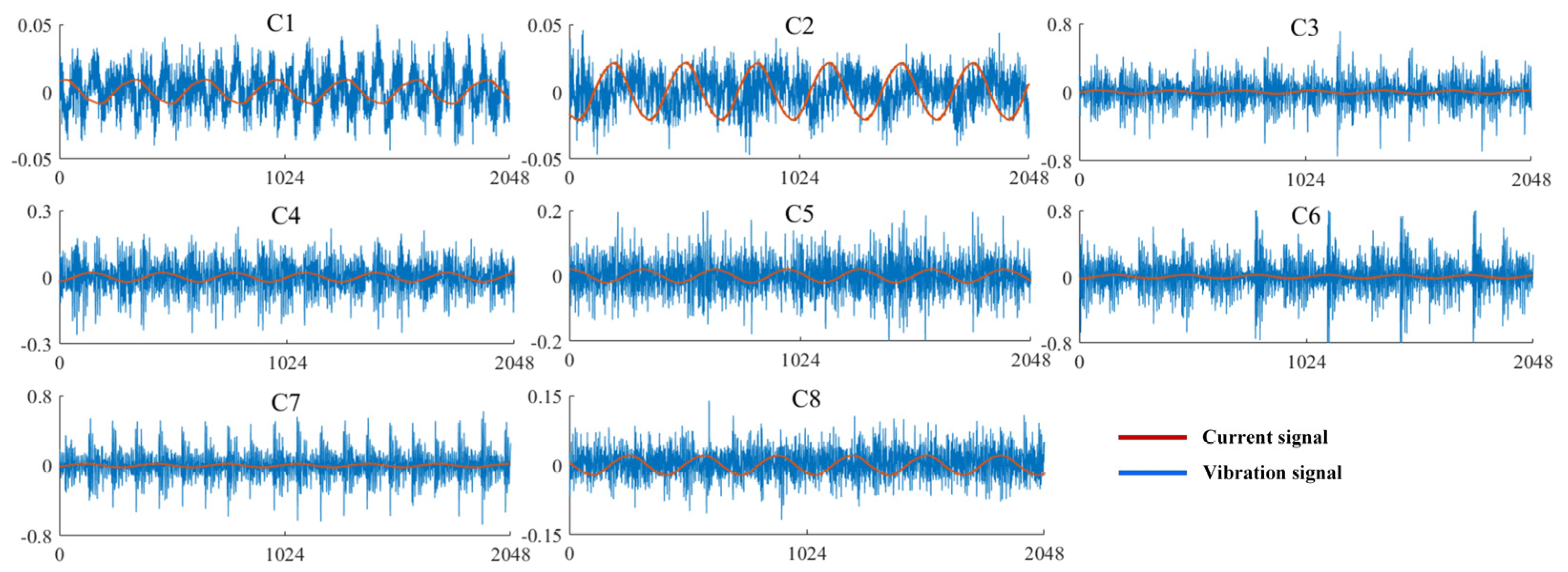

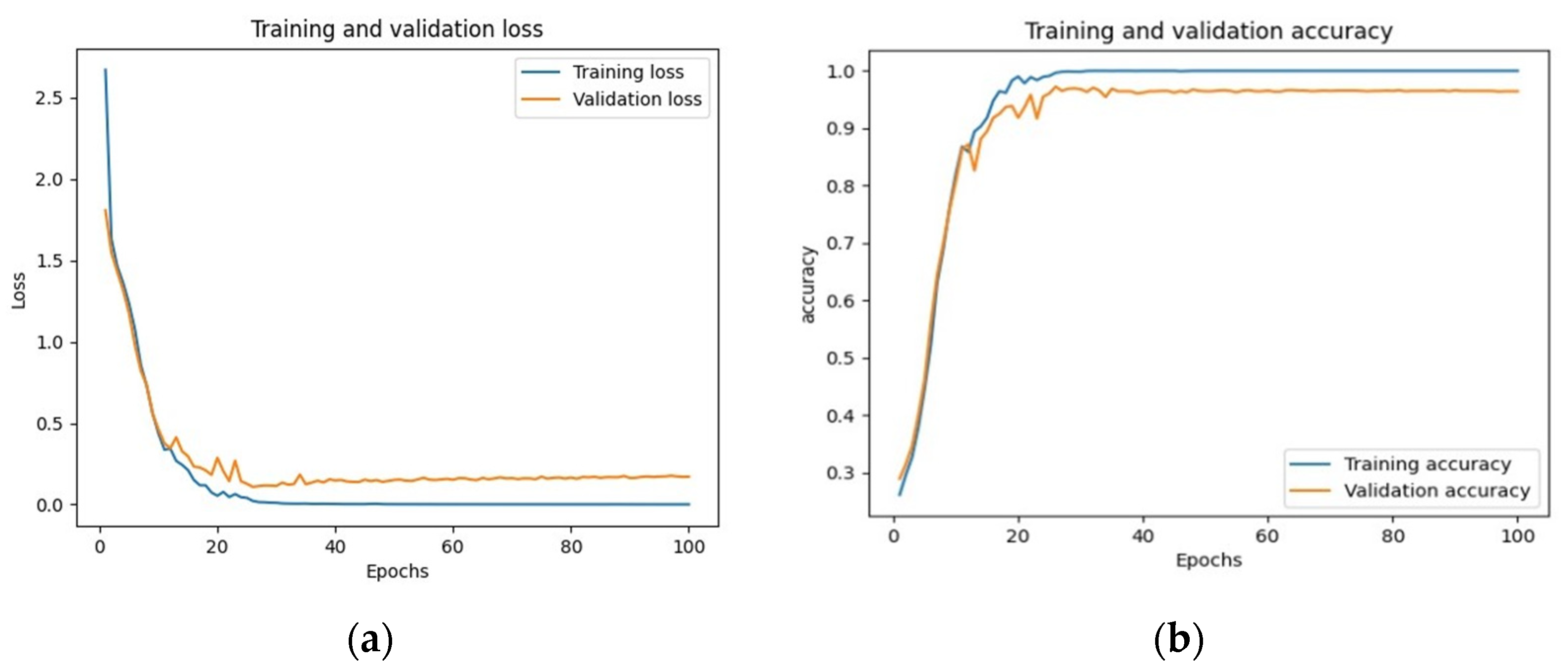

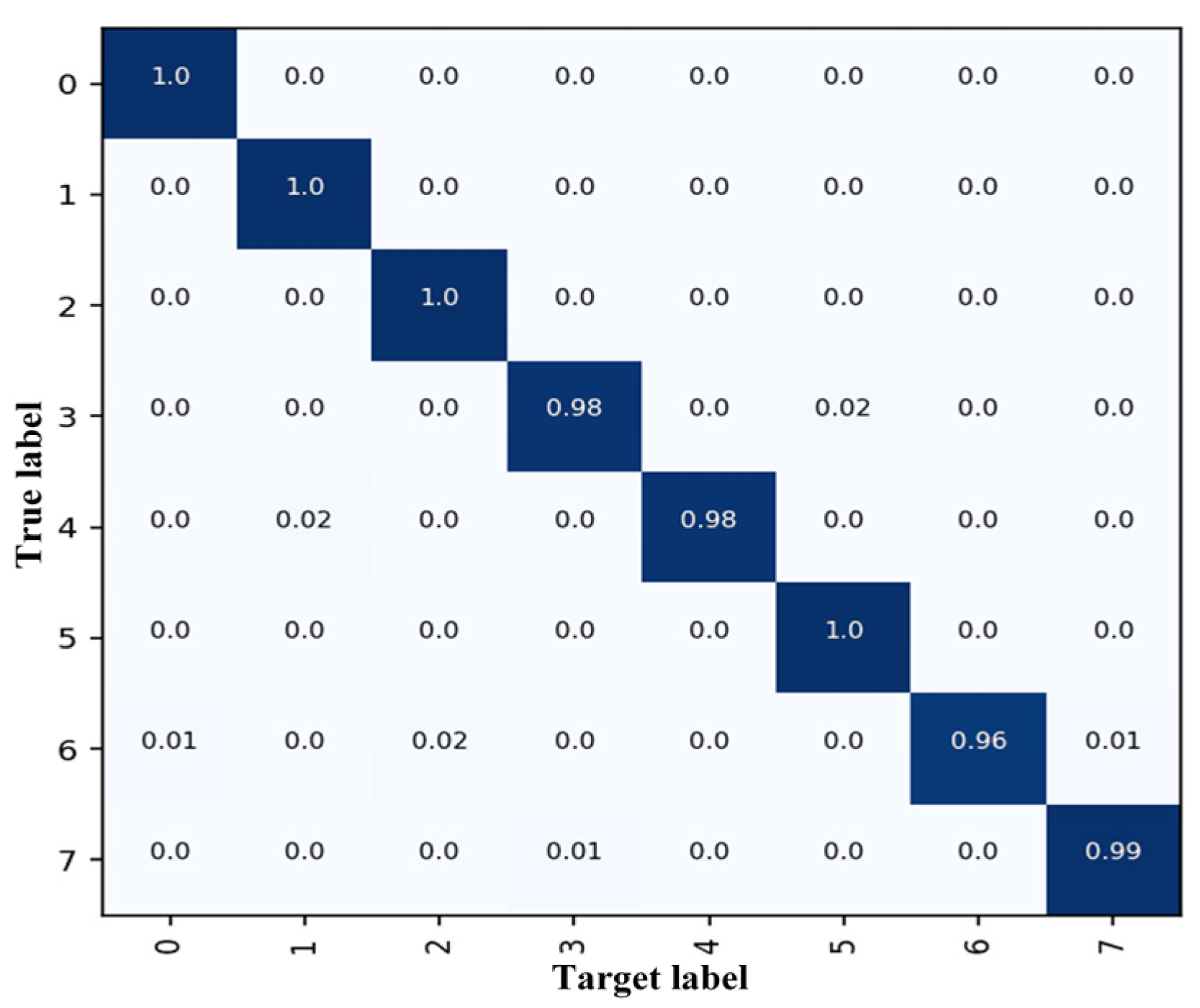

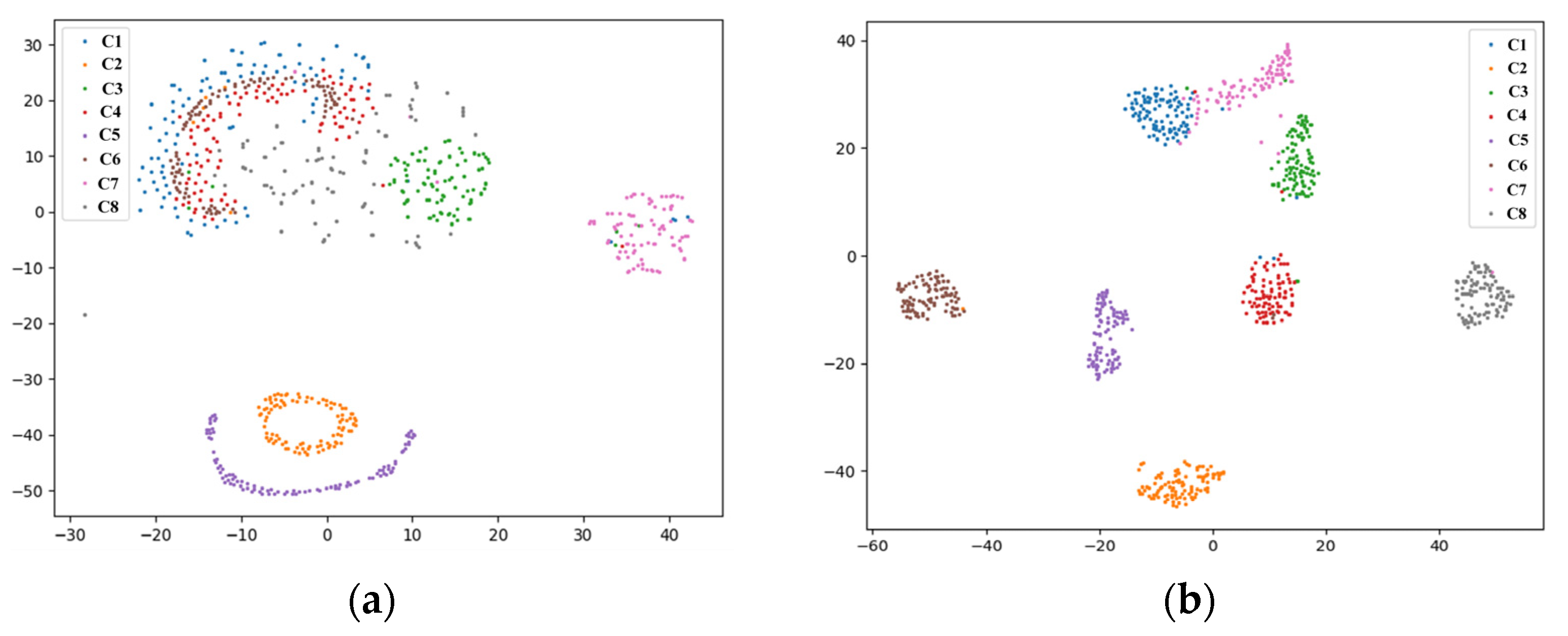

4.2. Motor Compound Fault Diagnosis

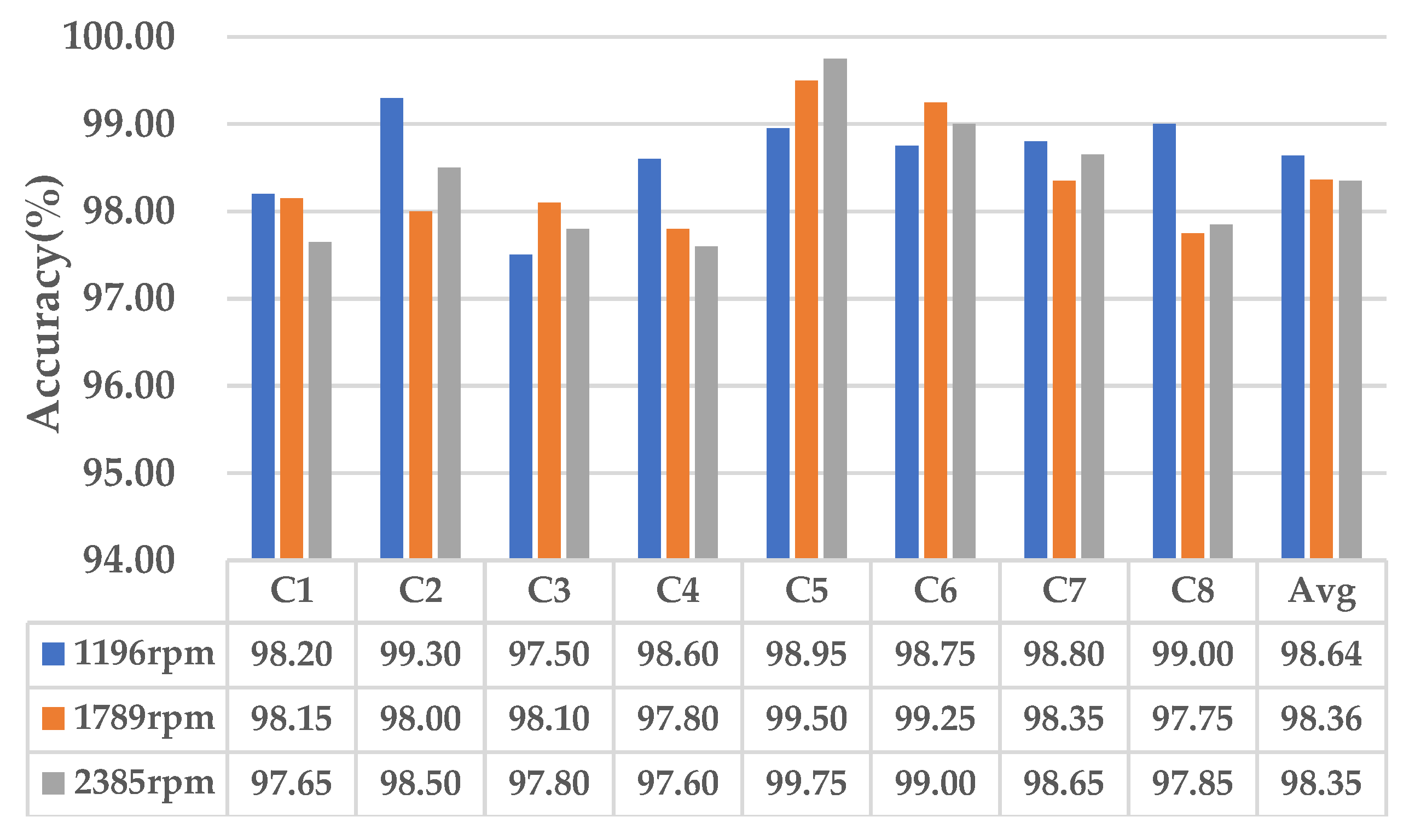

4.3. Comparison and Discussion

- (1)

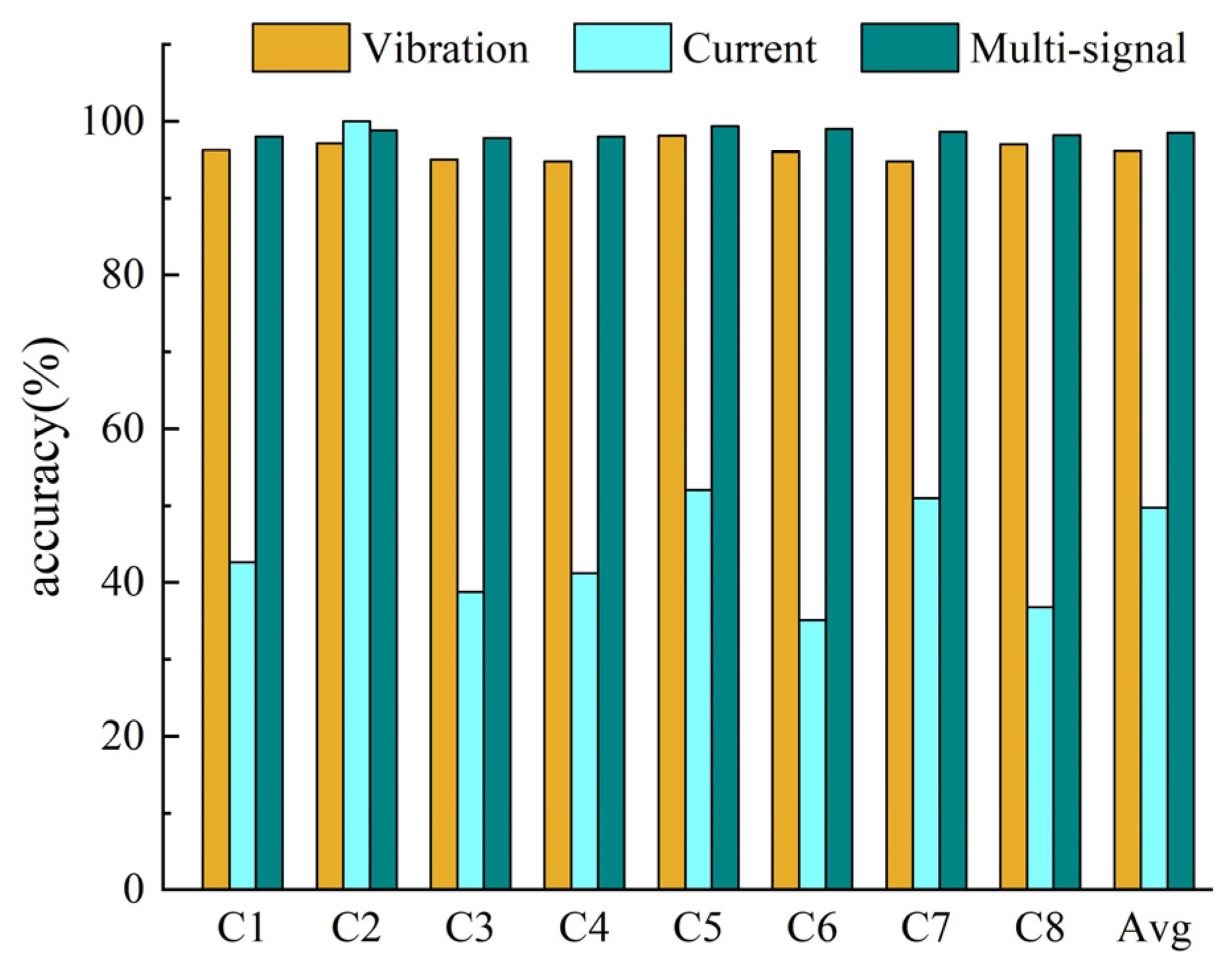

- Comparison of single-signal and multi-signal fault diagnosis

- (2)

- Comparative experiment of SeLU and ReLU in MC-DCNN

- (3)

- Comparative experiment of learning rate with decay and without decay in MC-DCNN

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Class Label | Motor Condition | Speed (rpm) | Training Set | Validation Set |
|---|---|---|---|---|
| C1 | Normal | 1196/1789/2385 | 700 | 300 |
| C2 | Broken bar of rotor | 1196/1789/2385 | 700 | 300 |
| C3 | Inner race defect of bearing | 1196/1789/2385 | 700 | 300 |
| C4 | Outer race defect of bearing | 1196/1789/2385 | 700 | 300 |
| C5 | Ball defect of bearing | 1196/1789/2385 | 700 | 300 |
| C6 | Broken bar of rotor and inner race defect of bearing | 1196/1789/2385 | 700 | 300 |
| C7 | Broken bar of rotor and outer race defect of bearing | 1196/1789/2385 | 700 | 300 |
| C8 | Broken bar of rotor and ball defect of bearing | 1196/1789/2385 | 700 | 300 |
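
The dataset composition in the table above can be summarized with a few lines of arithmetic. This is only a sketch: the variable names and the integer label encoding are illustrative assumptions, and the per-class counts are copied from the table.

```python
# Class labels and per-class counts as listed in the table above.
CLASS_LABELS = ["C1", "C2", "C3", "C4", "C5", "C6", "C7", "C8"]
TRAIN_PER_CLASS = 700
VAL_PER_CLASS = 300

# Hypothetical integer encoding of the labels (C1 -> 0, ..., C8 -> 7).
label_to_index = {label: i for i, label in enumerate(CLASS_LABELS)}

train_total = len(CLASS_LABELS) * TRAIN_PER_CLASS
val_total = len(CLASS_LABELS) * VAL_PER_CLASS
train_fraction = train_total / (train_total + val_total)

print(train_total, val_total, train_fraction)
```

Under these counts the classes are balanced, so plain accuracy is a reasonable headline metric for the comparisons that follow.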

| Structure Parameter | Details |
|---|---|
| Input | Data = 2@2048 × 1 |
| Convolution 1 | Kernel_size = 64 × 1, Stride = 1 |
| Convolution 2–5 | Kernel_size = 7 × 1, Stride = 1 |
| Max-pooling 1–5 | Pool_size = 2 × 1, Stride = 2 |
| Activation function | SeLU |
| Learning rate | Decay learning rate starting at 0.001 |
| Batch size | 128 |
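
To see how the kernel and pooling sizes in the table above shrink a 2048-point input, the sketch below traces the feature-map length through five convolution and pooling stages. Two things are assumed rather than taken from the table: that each convolution is followed by one max-pooling layer, and the padding mode, which is not stated, so both "same" and "valid" are shown. The helper names are hypothetical.

```python
def conv_out_len(length: int, kernel: int, stride: int = 1, padding: str = "same") -> int:
    """Output length of a 1D convolution for 'same' or 'valid' padding."""
    if padding == "same":
        return -(-length // stride)  # ceil(length / stride)
    return (length - kernel) // stride + 1  # 'valid': no padding


def pool_out_len(length: int, pool: int = 2, stride: int = 2) -> int:
    """Output length of unpadded 1D max-pooling."""
    return (length - pool) // stride + 1


def trace_lengths(input_len: int = 2048, padding: str = "same") -> list:
    """Feature-map length after each assumed conv + pool stage."""
    kernels = [64, 7, 7, 7, 7]  # Convolution 1, then Convolution 2-5
    lengths = []
    length = input_len
    for kernel in kernels:
        length = conv_out_len(length, kernel, stride=1, padding=padding)
        length = pool_out_len(length, pool=2, stride=2)
        lengths.append(length)
    return lengths


print(trace_lengths(padding="same"))   # [1024, 512, 256, 128, 64]
print(trace_lengths(padding="valid"))  # [992, 493, 243, 118, 56]
```

The wide first kernel (64 points) covers a much longer stretch of the raw signal than the later 7-point kernels, and the five stride-2 pooling layers reduce the temporal length by roughly a factor of 32 in either padding mode.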

Classification Accuracy (%)

| Input signal | C1 | C2 | C3 | C4 | C5 | C6 | C7 | C8 | Avg |
|---|---|---|---|---|---|---|---|---|---|
| Vibration | 96.25 | 97.13 | 95.00 | 94.78 | 98.13 | 96.00 | 94.75 | 97.00 | 96.13 |
| Current | 42.65 | 100.00 | 38.78 | 41.20 | 52.00 | 35.13 | 51.00 | 36.80 | 49.70 |
| Multi-signal | 98.00 | 98.80 | 97.80 | 98.00 | 99.40 | 99.00 | 98.60 | 98.20 | 98.48 |
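
The "Avg" column in the table above can be recomputed from the per-class values. The sketch below does this and also reports how far the multi-signal average exceeds the vibration-only average; the dictionary layout and function name are illustrative assumptions.

```python
# Per-class accuracies (%) for C1..C8, copied from the table above.
accuracy = {
    "Vibration": [96.25, 97.13, 95.00, 94.78, 98.13, 96.00, 94.75, 97.00],
    "Current": [42.65, 100.00, 38.78, 41.20, 52.00, 35.13, 51.00, 36.80],
    "Multi-signal": [98.00, 98.80, 97.80, 98.00, 99.40, 99.00, 98.60, 98.20],
}


def mean_accuracy(values: list) -> float:
    """Unweighted mean over classes (classes have equal sample counts)."""
    return sum(values) / len(values)


averages = {name: mean_accuracy(values) for name, values in accuracy.items()}
gain_over_vibration = averages["Multi-signal"] - averages["Vibration"]

for name, avg in averages.items():
    print(f"{name}: {avg:.3f}")
print(f"Multi-signal gain over vibration: {gain_over_vibration:.3f} points")
```

The recomputed means agree with the reported averages to within rounding, and the current-only row stands out: it reaches 100% on C2 (broken rotor bar) but stays near or below about 50% on every other class.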

| Defect Category | Precision (ReLU) | Precision (SeLU) | Recall (ReLU) | Recall (SeLU) | F1-Score (ReLU) | F1-Score (SeLU) | Avg-Accuracy (ReLU) | Avg-Accuracy (SeLU) |
|---|---|---|---|---|---|---|---|---|
| C6 | 91.00% | 99.00% | 95.00% | 97.00% | 93.00% | 98.00% | 91.33% | 98.67% |
| C7 | 93.00% | 99.00% | 98.00% | 99.00% | 96.00% | 99.00% | | |
| C8 | 90.00% | 98.00% | 99.00% | 99.00% | 94.00% | 99.00% | | |
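
The F1-scores in the table above follow from precision and recall through the harmonic mean, F1 = 2PR / (P + R). The sketch below recomputes them from the tabulated values; because the published precision and recall are already rounded to whole percentages, the recomputed scores are only expected to agree with the table to within about one point. The data layout and names are illustrative assumptions.

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (same units as the inputs)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# (precision, recall, reported F1) in percent, copied from the table above.
reported = {
    ("C6", "ReLU"): (91.0, 95.0, 93.0),
    ("C6", "SeLU"): (99.0, 97.0, 98.0),
    ("C7", "ReLU"): (93.0, 98.0, 96.0),
    ("C7", "SeLU"): (99.0, 99.0, 99.0),
    ("C8", "ReLU"): (90.0, 99.0, 94.0),
    ("C8", "SeLU"): (98.0, 99.0, 99.0),
}

differences = {}
for key, (precision, recall, table_f1) in reported.items():
    recomputed = f1_score(precision, recall)
    differences[key] = abs(recomputed - table_f1)
    print(key, f"recomputed={recomputed:.2f}", f"table={table_f1:.2f}")
```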

| Learning Rate | Training Loss | Training Accuracy | Validation Loss | Validation Accuracy |
|---|---|---|---|---|
| with decay | 0.0007 | 100.00% | 0.0616 | 98.37% |
| without decay | 0.0010 | 100.00% | 0.1521 | 96.19% |
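
The idea behind the "with decay" row can be sketched as an Adam update whose step size shrinks over training. The article only states that the learning rate decays from 0.001; the exponential form, `decay_rate`, and `decay_steps` below are assumptions chosen for illustration, as is the toy objective f(x) = x². The Adam hyperparameters are the commonly used defaults.

```python
import math


def decayed_lr(step: int, initial_lr: float = 0.001,
               decay_rate: float = 0.96, decay_steps: int = 100) -> float:
    """Exponential decay (assumed form): lr = lr0 * rate ** (step / decay_steps)."""
    return initial_lr * decay_rate ** (step / decay_steps)


def adam_minimize(grad, x0: float, steps: int, lr_schedule,
                  beta1: float = 0.9, beta2: float = 0.999, eps: float = 1e-8) -> float:
    """Minimize a scalar function with Adam, given its gradient."""
    x, m, v = x0, 0.0, 0.0
    for t in range(1, steps + 1):
        g = grad(x)
        m = beta1 * m + (1 - beta1) * g          # first-moment estimate
        v = beta2 * v + (1 - beta2) * g * g      # second-moment estimate
        m_hat = m / (1 - beta1 ** t)             # bias correction
        v_hat = v / (1 - beta2 ** t)
        x -= lr_schedule(t - 1) * m_hat / (math.sqrt(v_hat) + eps)
    return x


# Toy problem: f(x) = x**2 has gradient 2x and its minimum at x = 0.
x_final = adam_minimize(lambda x: 2 * x, x0=1.0, steps=2000, lr_schedule=decayed_lr)
print(decayed_lr(0), decayed_lr(2000), x_final)
```

Large early steps move the parameters quickly, while the smaller late steps reduce oscillation around the minimum, which is consistent with the lower validation loss reported for the decayed schedule.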

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Gong, X.; Zhi, Z.; Feng, K.; Du, W.; Wang, T. Improved DCNN Based on Multi-Source Signals for Motor Compound Fault Diagnosis. Machines 2022, 10, 277. https://doi.org/10.3390/machines10040277
