Intelligent Fault Diagnosis for Inertial Measurement Unit through Deep Residual Convolutional Neural Network and Short-Time Fourier Transform

Abstract

1. Introduction

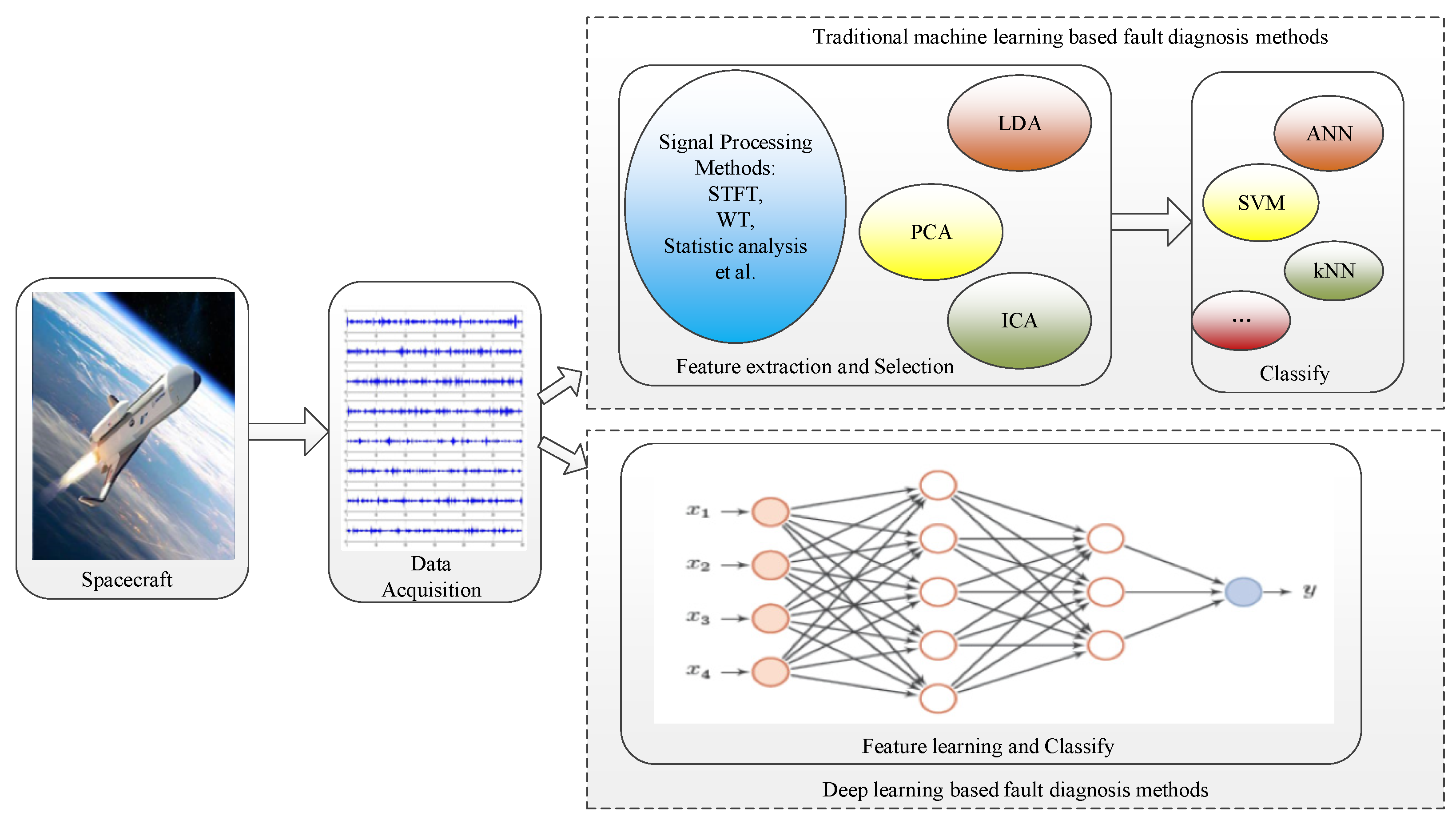

2. Related Works

2.1. Fault Diagnosis Using Traditional Machine Learning

2.2. Fault Diagnosis Using Deep Learning

3. Basics and Background

3.1. CNN and Deep Residual Networks

3.1.1. Convolutional Layer

3.1.2. Pooling Layer

3.1.3. Batch Normalization (BN)

3.1.4. Residual Network

3.2. Short-Time Fourier Transform

4. Proposed Method

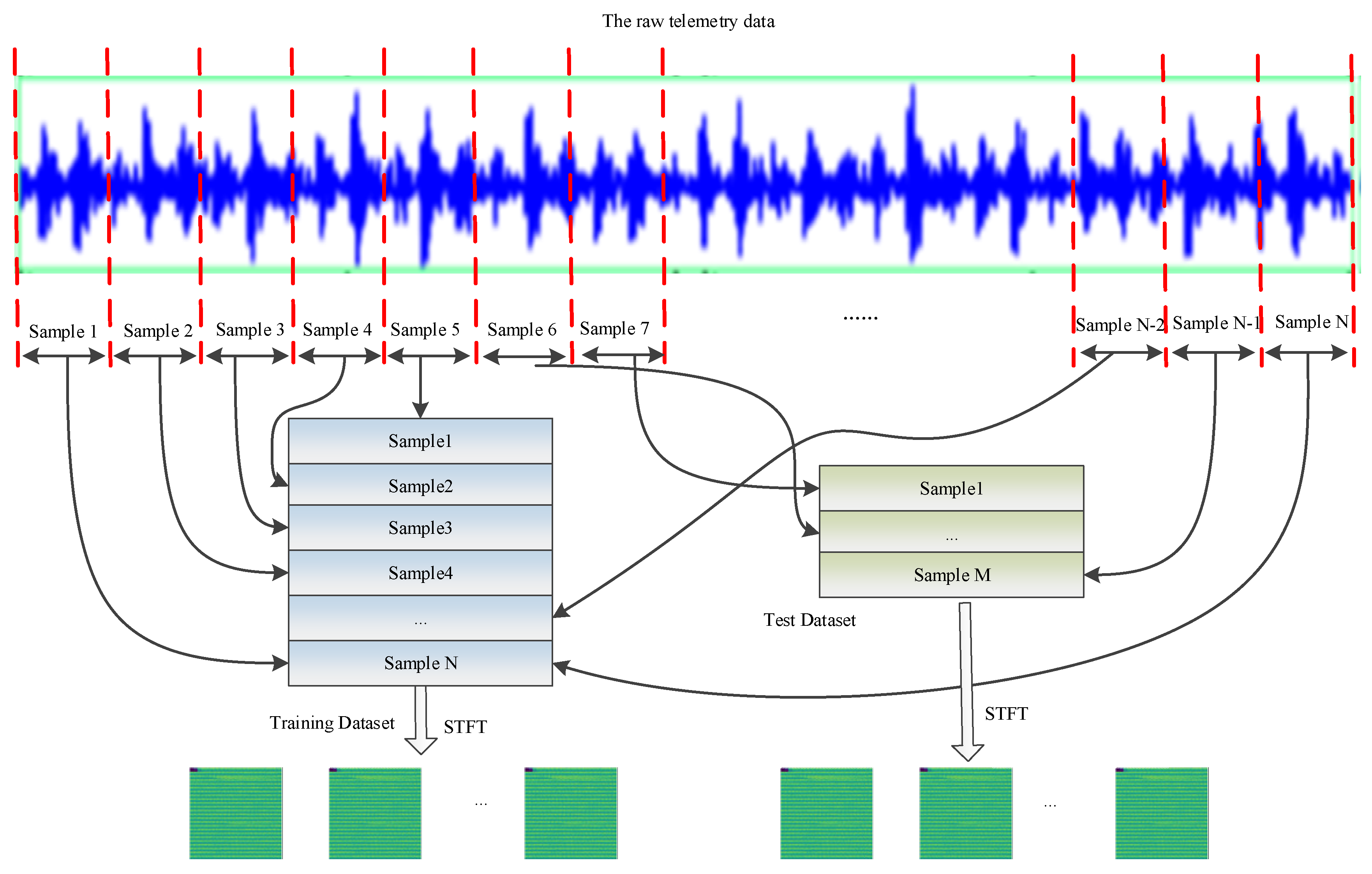

4.1. The Novel Data Acquisition and Preprocessing

4.1.1. Time–Frequency Transformation through STFT

4.1.2. Normalization

4.1.3. Data Augmentation

4.2. Model Training

4.2.1. Improved Version of the Activation Function

4.2.2. The Structure of Our Proposed Residual Network

4.3. The Flow Chart of the Proposed Method

5. Experiments and Analysis

5.1. Case 1

5.1.1. Data Description and Preprocessing

5.1.2. Model Parameter Setting

5.1.3. Comparison Methods

5.1.4. Analysis of Results

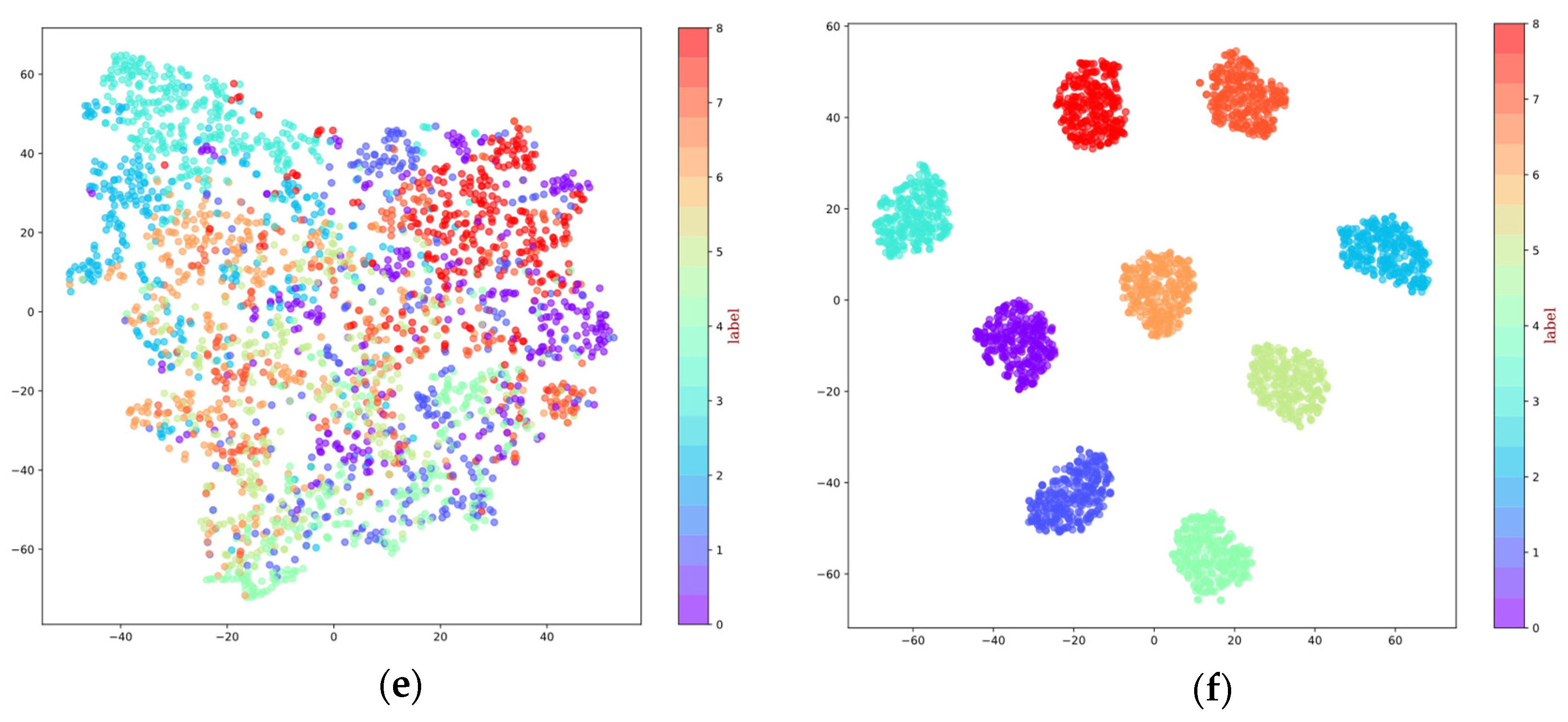

5.1.5. Visualization Analysis

5.2. Case 2

5.2.1. Data Description

5.2.2. Analysis of Results

5.2.3. Visualization Analysis

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References


| Label | Dataset | Fault Type | Working Condition | Label | Dataset | Fault Type | Working Condition |
|---|---|---|---|---|---|---|---|
| 0 | Bearing | Ball | 20 Hz–0 V | 10 | Gear | Chipped | 20 Hz–0 V |
| 1 | Bearing | Combination | 20 Hz–0 V | 11 | Gear | Health | 20 Hz–0 V |
| 2 | Bearing | Health | 20 Hz–0 V | 12 | Gear | Miss | 20 Hz–0 V |
| 3 | Bearing | Inner | 20 Hz–0 V | 13 | Gear | Root | 20 Hz–0 V |
| 4 | Bearing | Outer | 20 Hz–0 V | 14 | Gear | Surface | 20 Hz–0 V |
| 5 | Bearing | Ball | 30 Hz–2 V | 15 | Gear | Chipped | 30 Hz–2 V |
| 6 | Bearing | Combination | 30 Hz–2 V | 16 | Gear | Health | 30 Hz–2 V |
| 7 | Bearing | Health | 30 Hz–2 V | 17 | Gear | Miss | 30 Hz–2 V |
| 8 | Bearing | Inner | 30 Hz–2 V | 18 | Gear | Root | 30 Hz–2 V |
| 9 | Bearing | Outer | 30 Hz–2 V | 19 | Gear | Surface | 30 Hz–2 V |
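
The 20 labels above follow a regular layout: ten bearing classes (labels 0 to 9) and then ten gear classes (labels 10 to 19). Each block is split by working condition (20 Hz–0 V first, then 30 Hz–2 V), and within each condition the five fault types appear in the same order. The sketch below rebuilds the table from the rule label = 10 × dataset index + 5 × condition index + fault index. The helper names `label_of` and `build_label_table` are hypothetical and not part of the original work; the orderings are read off the table.

```python
"""Rebuild the 20-class label table (bearing and gear data) from its layout.

The orderings below are read off the table; the helper names are
hypothetical and not part of the original work.
"""

DATASETS = ["Bearing", "Gear"]
CONDITIONS = ["20 Hz–0 V", "30 Hz–2 V"]
FAULTS = {
    "Bearing": ["Ball", "Combination", "Health", "Inner", "Outer"],
    "Gear": ["Chipped", "Health", "Miss", "Root", "Surface"],
}


def label_of(dataset: str, fault: str, condition: str) -> int:
    """Return the class label: 10 * dataset + 5 * condition + fault index."""
    d = DATASETS.index(dataset)
    c = CONDITIONS.index(condition)
    f = FAULTS[dataset].index(fault)
    return 10 * d + 5 * c + f


def build_label_table() -> dict[int, tuple[str, str, str]]:
    """Map every label back to its (dataset, fault type, working condition)."""
    table = {}
    for dataset in DATASETS:
        for condition in CONDITIONS:
            for fault in FAULTS[dataset]:
                table[label_of(dataset, fault, condition)] = (dataset, fault, condition)
    return table


if __name__ == "__main__":
    for label, (dataset, fault, condition) in sorted(build_label_table().items()):
        print(f"{label:2d}  {dataset:<7}  {fault:<11}  {condition}")
```

Encoding the labels this way keeps the dataset, the working condition, and the fault type recoverable from a single integer, which is convenient when a 20-way softmax output has to be mapped back to a readable diagnosis.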

| No. | Layer | Output Channels | Kernel Size | Stride | Padding | Activation Function |
|---|---|---|---|---|---|---|
| 1 | Conv2d 1 | 16 | 13 | 1 | Yes | PReLU |
| 2 | Maxpool 1 | / | 2 | 2 | No | / |
| 3 | Basic residual block 1 | 64 | 3 | 1 | Yes | PReLU |
| 4 | Basic residual block 2 | 128 | 3 | 1 | Yes | PReLU |
| 5 | Basic residual block 3 | 256 | 3 | 1 | Yes | PReLU |
| 10 | AdaptiveMaxpool | / | / | / | No | / |
| 11 | FC 1 | / | / | / | / | PReLU |
| 12 | Output layer | / | / | / | / | Softmax |
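
The layer table specifies kernel sizes, strides, and channel widths, but it does not give the input resolution or the exact padding amount. The stdlib-only sketch below traces feature-map shapes through the listed layers using the standard output-size formula floor((H + 2P - K) / S) + 1. It relies on assumptions that do not come from the paper: the spectrogram input is a hypothetical 1 × 64 × 64 image, "Padding: Yes" means same-size padding P = (K - 1) / 2, each basic residual block is treated as one unit of stride-1 3 × 3 convolutions, and AdaptiveMaxpool reduces to 1 × 1.

```python
"""Trace feature-map shapes through the layer table (stdlib-only sketch).

Assumptions, not taken from the paper: a 1 x 64 x 64 input spectrogram,
"Padding: Yes" meaning P = (K - 1) // 2, and AdaptiveMaxpool reducing to 1 x 1.
"""


def conv_out(size: int, kernel: int, stride: int, padding: int) -> int:
    """Output length along one axis for a convolution or pooling window."""
    return (size + 2 * padding - kernel) // stride + 1


def trace_shapes(channels: int = 1, height: int = 64, width: int = 64):
    """Return a list of (layer name, (C, H, W)) pairs, one per table row."""
    shapes = [("Input", (channels, height, width))]

    # Conv2d 1: 16 output channels, kernel 13, stride 1, assumed padding 6.
    k = 13
    p = (k - 1) // 2
    height, width = conv_out(height, k, 1, p), conv_out(width, k, 1, p)
    channels = 16
    shapes.append(("Conv2d 1", (channels, height, width)))

    # Maxpool 1: kernel 2, stride 2, no padding, so H and W are halved.
    height, width = conv_out(height, 2, 2, 0), conv_out(width, 2, 2, 0)
    shapes.append(("Maxpool 1", (channels, height, width)))

    # Basic residual blocks 1-3: 3 x 3 kernels, stride 1, assumed padding 1,
    # so spatial size is kept and only the channel count grows. Because the
    # channel count changes, a shortcut would need a projection (for example a
    # 1 x 1 convolution) before the addition; the table does not say how.
    for i, out_channels in enumerate([64, 128, 256], start=1):
        height, width = conv_out(height, 3, 1, 1), conv_out(width, 3, 1, 1)
        channels = out_channels
        shapes.append((f"Basic residual block {i}", (channels, height, width)))

    # AdaptiveMaxpool: assumed output size 1 x 1, i.e. a 256-element vector
    # that feeds FC 1 and the softmax output layer.
    shapes.append(("AdaptiveMaxpool", (channels, 1, 1)))
    return shapes


if __name__ == "__main__":
    for name, shape in trace_shapes():
        print(f"{name:<24} {shape}")
```

Under these assumptions the trace ends with a 256 × 1 × 1 map in front of FC 1. Choosing a different input size changes only the spatial dimensions; the channel widths are fixed by the table.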

| Method | Layer Structure |
|---|---|
| AE | Input → 5 Conv → 2 FC → 2 FC → 5 ConvT → Conv |
| DAE | Input → 5 Conv → 2 FC → 2 FC → 5 ConvT → Conv |
| CNN | Input → 2 Conv → 1 Maxpool → 3 Conv → 1 Maxpool → 3 FC |
| AlexNet | Input → 1 Conv → 1 Maxpool → 1 Conv → 1 Maxpool → 3 Conv → 1 Maxpool → 3 FC |
| LSTM | Input → 3 LSTM → 3 FC |
| Proposed Method | Input → 1 Conv → 1 Maxpool → 3 basic residual blocks → 1 Maxpool → 3 FC |

| Dataset | AE | DAE | CNN | AlexNet | LSTM | Proposed Method |
|---|---|---|---|---|---|---|
| SEU_A | 95.34 | 96.81 | 83.33 | 94.36 | 90.69 | 98.77 |
| SEU_B | 84.56 | 93.63 | 61.02 | 87.99 | 79.17 | 96.81 |
| SEU_C | 97.30 | 95.83 | 84.80 | 88.48 | 89.95 | 99.02 |
| SEU_D | 93.38 | 94.12 | 89.95 | 85.05 | 81.86 | 98.04 |
| SEU_E | 89.46 | 94.85 | 69.36 | 89.71 | 83.58 | 99.51 |
| SEU_F | 73.53 | 81.13 | 63.24 | 84.80 | 79.17 | 94.12 |
| SEU_G | 68.38 | 89.71 | 61.03 | 82.11 | 74.75 | 93.87 |
| Average | 85.99 | 92.29 | 73.24 | 87.50 | 82.74 | 97.16 |
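
The Average row can be checked against the seven per-dataset rows. The sketch below recomputes each column mean from the values copied out of the table. The recomputed means agree with the reported averages to within 0.01. The DAE and CNN means (about 92.297 and 73.247) appear to be truncated rather than rounded in the table. The names `ROWS`, `REPORTED_AVERAGE`, and `column_means` are illustrative only.

```python
"""Recompute the Average row of the SEU comparison table from its seven rows."""

METHODS = ["AE", "DAE", "CNN", "AlexNet", "LSTM", "Proposed Method"]

# Values copied from the table, one list per dataset (same column order as METHODS).
ROWS = {
    "SEU_A": [95.34, 96.81, 83.33, 94.36, 90.69, 98.77],
    "SEU_B": [84.56, 93.63, 61.02, 87.99, 79.17, 96.81],
    "SEU_C": [97.30, 95.83, 84.80, 88.48, 89.95, 99.02],
    "SEU_D": [93.38, 94.12, 89.95, 85.05, 81.86, 98.04],
    "SEU_E": [89.46, 94.85, 69.36, 89.71, 83.58, 99.51],
    "SEU_F": [73.53, 81.13, 63.24, 84.80, 79.17, 94.12],
    "SEU_G": [68.38, 89.71, 61.03, 82.11, 74.75, 93.87],
}

REPORTED_AVERAGE = [85.99, 92.29, 73.24, 87.50, 82.74, 97.16]


def column_means(rows: dict[str, list[float]]) -> list[float]:
    """Mean of each method's column across all datasets."""
    n = len(rows)
    return [sum(column) / n for column in zip(*rows.values())]


if __name__ == "__main__":
    for method, mean, reported in zip(METHODS, column_means(ROWS), REPORTED_AVERAGE):
        print(f"{method:<16} recomputed {mean:7.3f}   reported {reported:6.2f}")
```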

| Dataset | AE | DAE | CNN | AlexNet | LSTM | Proposed Method (ReLU) | Proposed Method (PReLU) |
|---|---|---|---|---|---|---|---|
| UoC | 47.34 | 49.77 | 32.72 | 37.14 | 34.55 | 75.17 | 77.02 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Xiang, G.; Miao, J.; Cui, L.; Hu, X. Intelligent Fault Diagnosis for Inertial Measurement Unit through Deep Residual Convolutional Neural Network and Short-Time Fourier Transform. Machines 2022, 10, 851. https://doi.org/10.3390/machines10100851

Xiang G, Miao J, Cui L, Hu X. Intelligent Fault Diagnosis for Inertial Measurement Unit through Deep Residual Convolutional Neural Network and Short-Time Fourier Transform. Machines. 2022; 10(10):851. https://doi.org/10.3390/machines10100851

Chicago/Turabian Style: Xiang, Gang, Jing Miao, Langfu Cui, and Xiaoguang Hu. 2022. "Intelligent Fault Diagnosis for Inertial Measurement Unit through Deep Residual Convolutional Neural Network and Short-Time Fourier Transform." Machines 10, no. 10: 851. https://doi.org/10.3390/machines10100851

APA Style: Xiang, G., Miao, J., Cui, L., & Hu, X. (2022). Intelligent Fault Diagnosis for Inertial Measurement Unit through Deep Residual Convolutional Neural Network and Short-Time Fourier Transform. Machines, 10(10), 851. https://doi.org/10.3390/machines10100851