Improving HRI with Force Sensing

Abstract

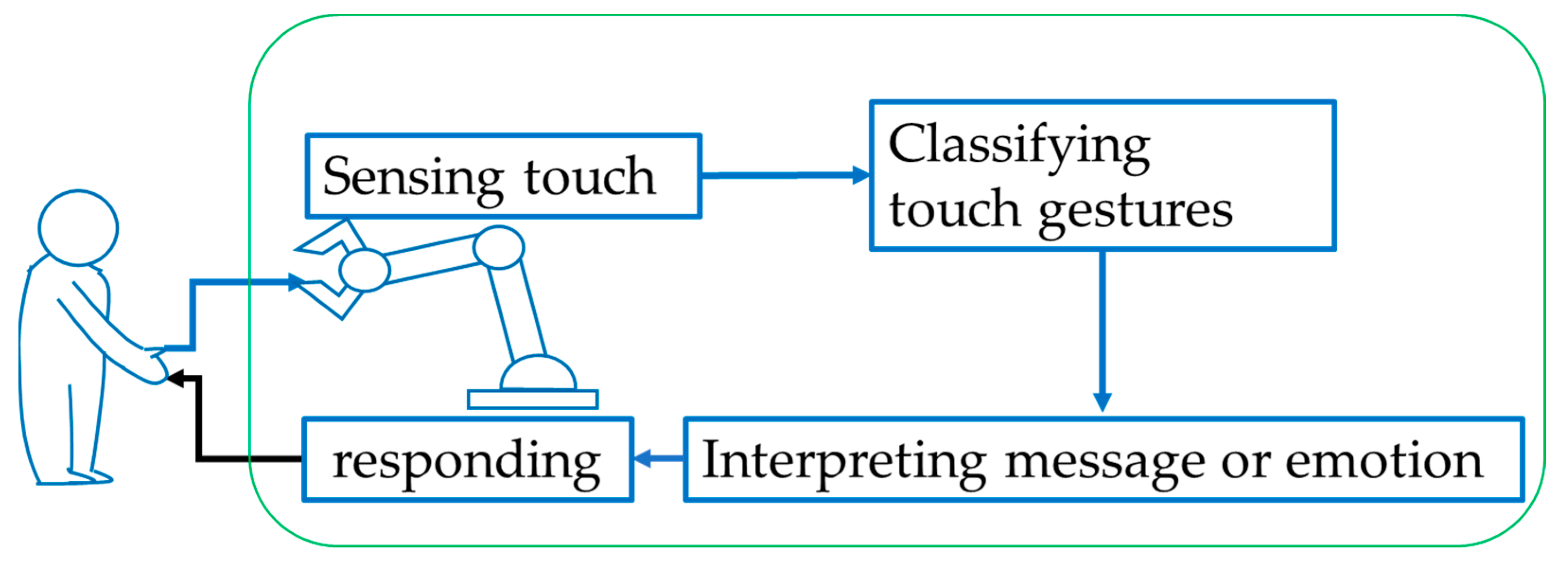

1. Introduction

1.1. Background

1.2. Related Works

2. Materials

2.1. Collecting Expressive Touch Gestures

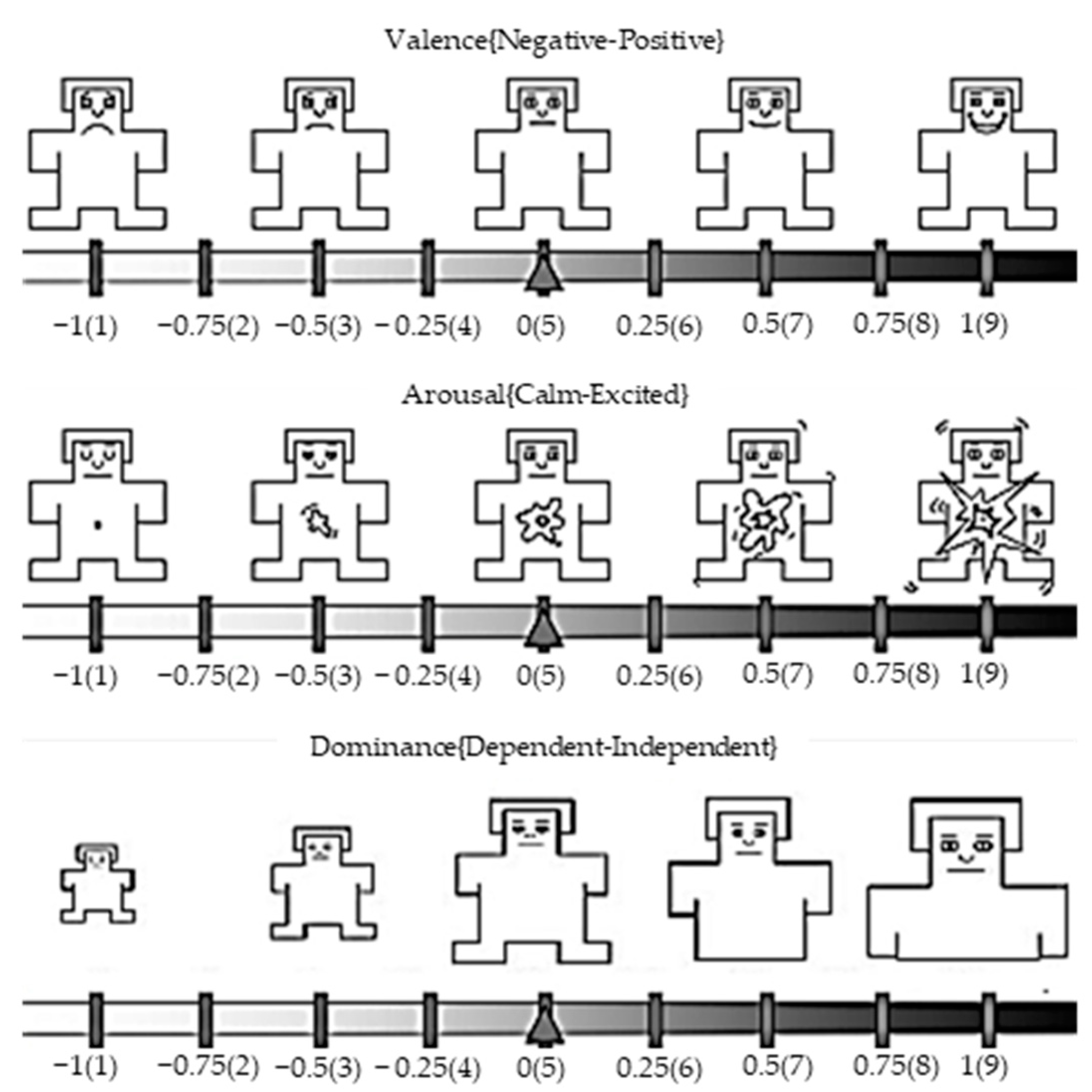

2.2. PAD Emotional State Model

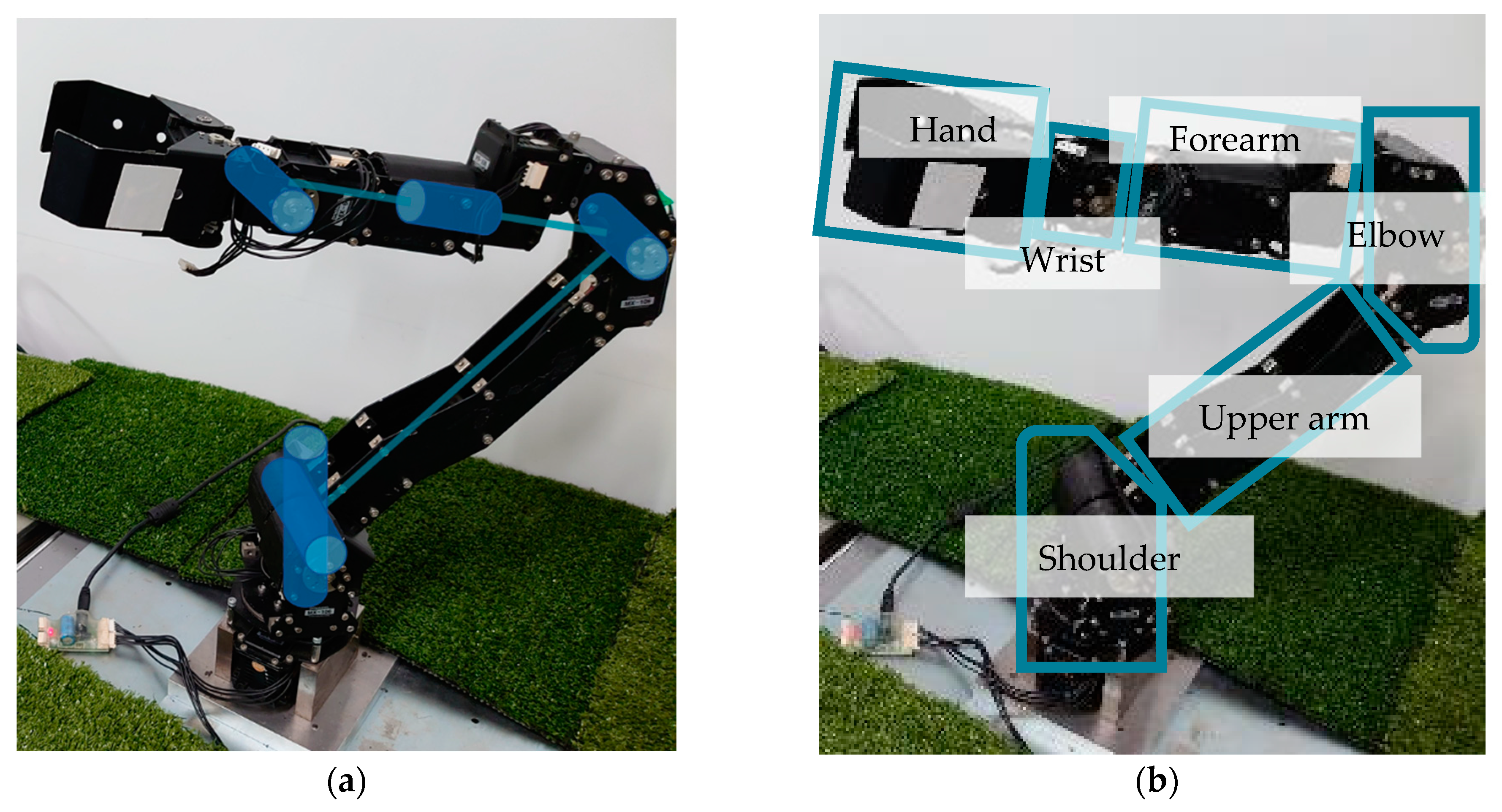

2.3. Equipment

2.4. Touch Gestures

3. Experiment on How Humans Convey Emotion to Robot Arm

3.1. Method

3.2. Results and Discussion

3.2.1. Location

3.2.2. Classification of Touch Gestures

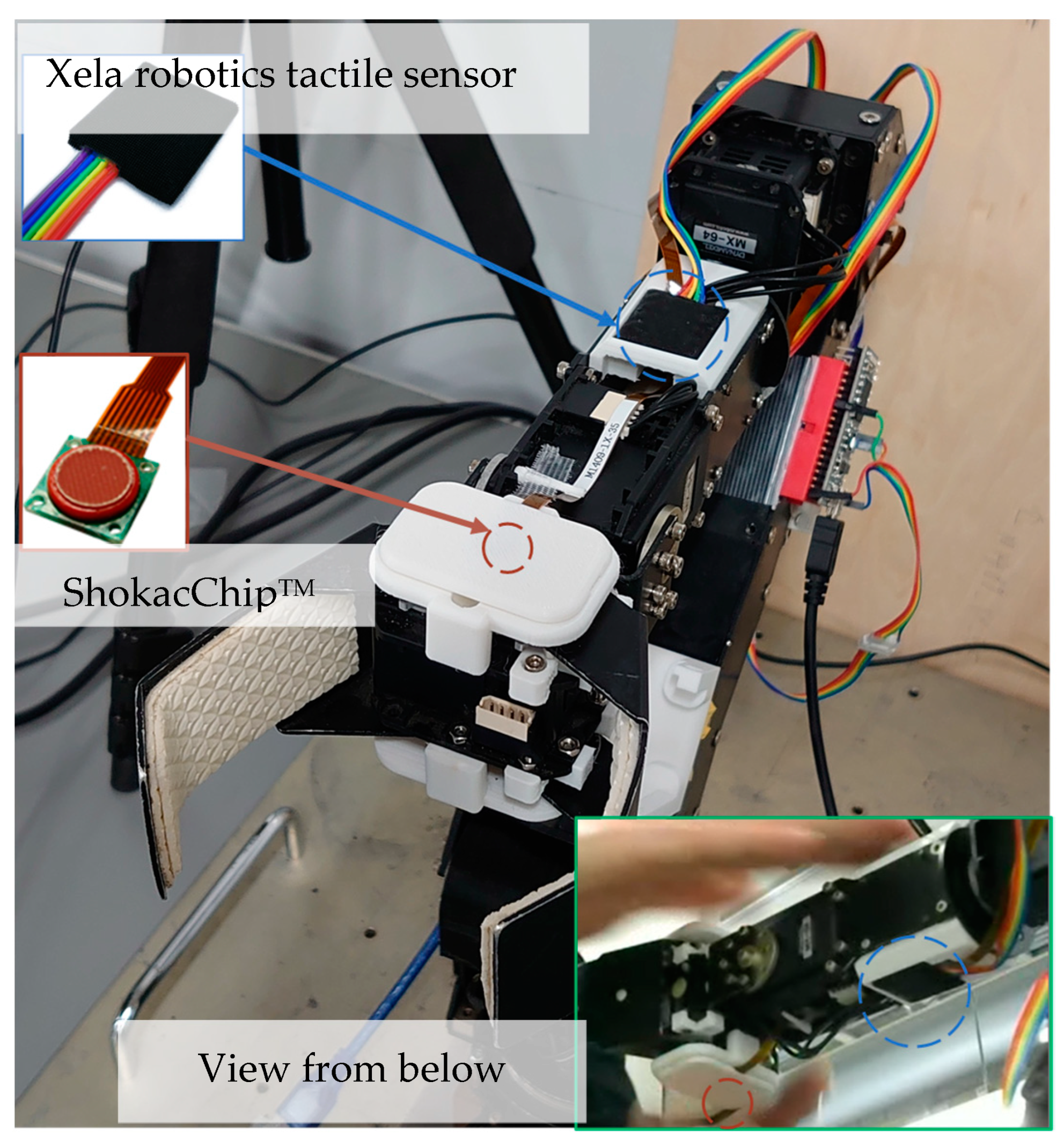

4. Experiment Using Tactile Sensors

4.1. Method

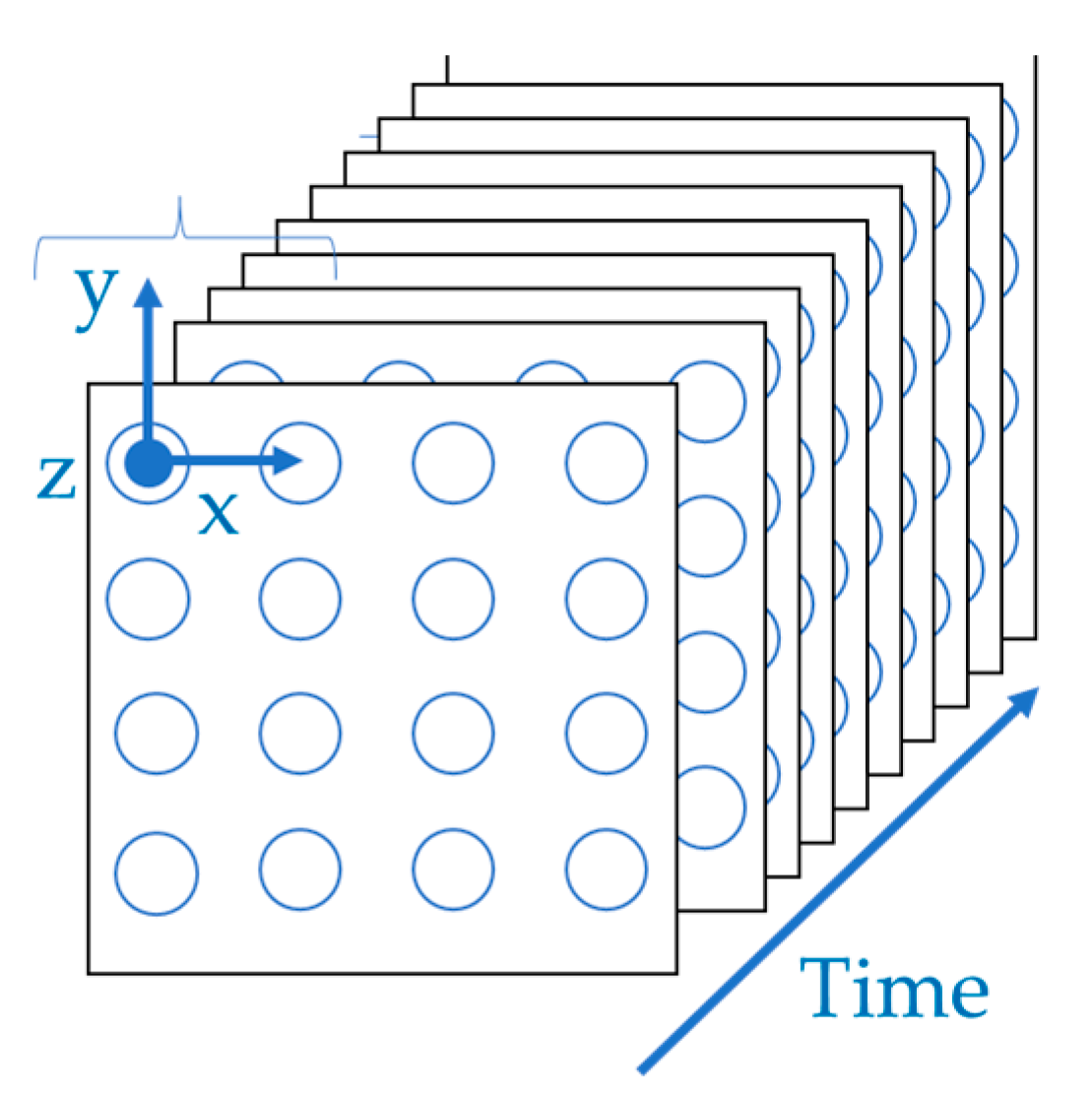

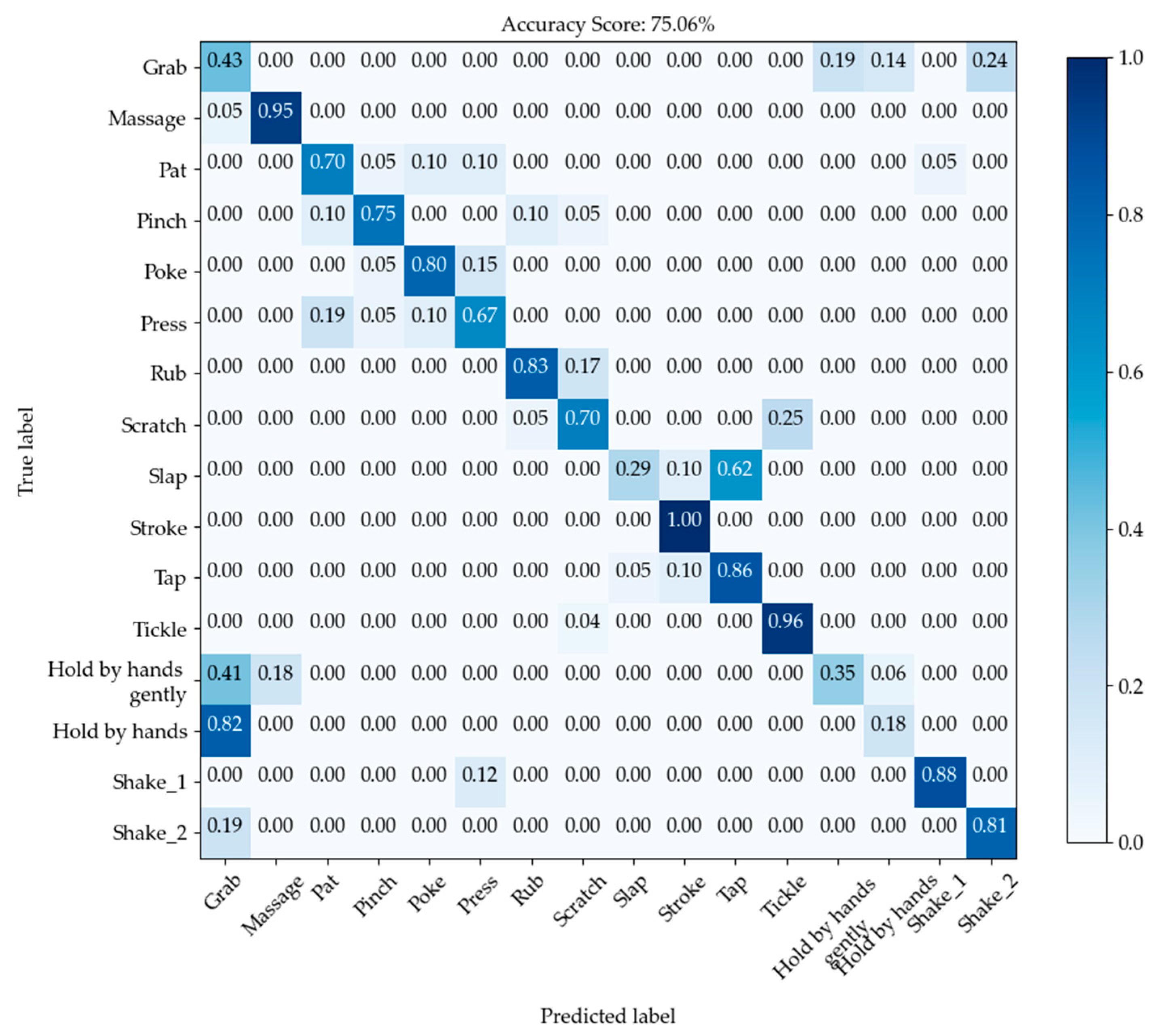

4.2. Classifying Touch Gestures

4.2.1. Classifying Touch Gestures with ShokacChip™ Sensors

4.2.2. Classifying Touch Gestures with Xela Robotics Tactile Sensors

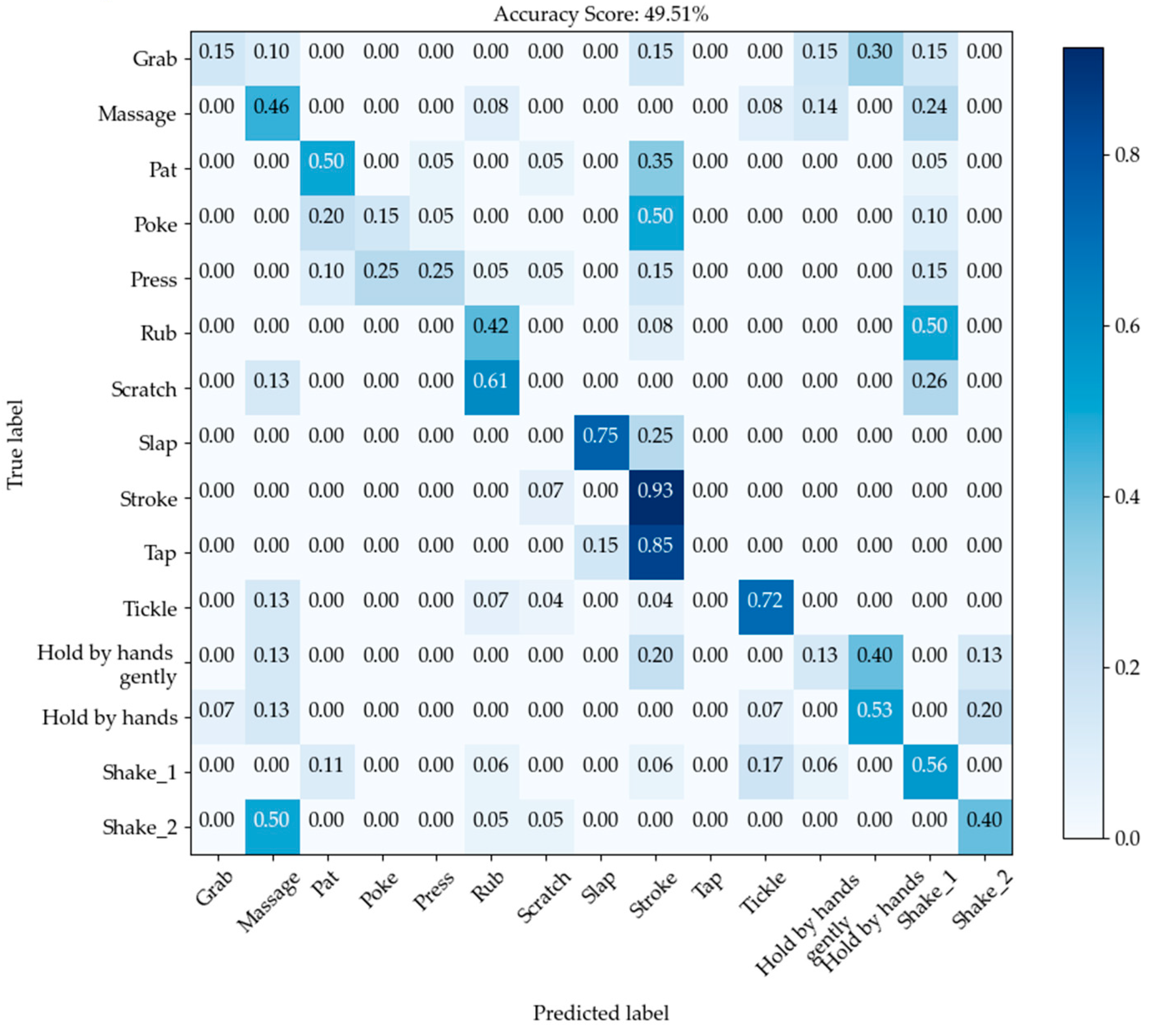

4.3. Results of Touch Gestures’ Classification

4.4. Changing Impressions of Robots through Conveying Emotions by Touch

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Touch | Definition |
|---|---|
| Grab | Grasp or seize the robot arm suddenly and roughly. |
| Hold by hands | Put the robot arm firmly between both of your flat hands. |
| Hold by hands gently | Put the robot arm gently between both of your flat hands. |
| Massage | Rub or knead the robot arm with your hands. |
| Nuzzle | Gently rub or push against the robot arm with your nose. |
| Pat | Gently and quickly touch the robot arm with the flat of your hand. |
| Pinch | Tightly and sharply grip the robot arm between your fingers and thumb. |
| Poke | Jab or prod the robot arm with your finger. |
| Press | Exert a steady force on the robot arm with your flattened fingers or hand. |
| Rub | Move your hand repeatedly back and forth on the fur of the robot arm with firm pressure. |
| Tap | Strike the robot arm with a quick light blow or blows using one or more fingers. |
| Tickle | Touch the robot arm with light finger movements. |
| Tremble | Shake against the robot arm with a slight rapid motion. |

| Touch | Definition |
|---|---|
| Grab | Grasp or seize the robot arm suddenly and roughly. |
| Hold by hands | Put the robot arm firmly between both of your flat hands. |
| Hold by hands gently | Put the robot arm gently between both of your flat hands. |
| Massage | Rub or knead the robot arm with your hands. |
| Pat | Gently and quickly touch the robot arm with the flat of your hand. |
| Pinch | Tightly and sharply grip the robot arm between your fingers and thumb. |
| Poke | Jab or prod the robot arm with your finger. |
| Press | Exert a steady force on the robot arm with your flattened fingers or hand. |
| Rub | Move your hand repeatedly back and forth on the fur of the robot arm with firm pressure. |
| Scratch | Rub the robot arm with your fingernails. |
| Shake | Press the robot arm intermittently with your fingers (Shake_1), or hold the robot arm with your hand and move it up and down (Shake_2). |
| Slap | Quickly and sharply strike the robot arm with your open hand. |
| Squeeze | Firmly press the robot arm between your fingers or both hands. |
| Stroke | Move your hand with gentle pressure over the robot arm, often repeatedly. |

| Body Part | Total | Love | Sympathy | Gratitude | Happiness | Sadness | Disgust | Anger | Fear |
|---|---|---|---|---|---|---|---|---|---|
| Hand | 438.1 | 71.4 | 42.9 | 100.0 | 14.3 | 66.7 | 57.1 | 71.4 | 14.3 |
| Wrist | 176.3 | 42.9 | 14.3 | 28.6 | 28.6 | 33.3 | 0.0 | 14.3 | 14.3 |
| Forearm | 162.0 | 42.9 | 28.6 | 28.6 | 28.6 | 33.3 | 0.0 | 0.0 | 0.0 |
| Elbow | 14.3 | 14.3 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 |
| Upper arm | 28.6 | 14.3 | 14.3 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 |
| Shoulder | 71.5 | 0.0 | 14.3 | 0.0 | 14.3 | 0.0 | 28.6 | 0.0 | 14.3 |
| Total | 890.8 | 185.8 | 114.4 | 157.2 | 85.8 | 133.3 | 85.7 | 85.7 | 42.9 |
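In the body-part table, the Total column and the Total row are sums of the per-emotion entries. The following Python sketch recomputes both from the transcribed values as a consistency check. It uses only the standard library. The dictionary layout and the function names `row_totals` and `column_totals` are illustrative and do not come from the study.

```python
# Per-emotion values transcribed from the body-part table.
EMOTIONS = ["Love", "Sympathy", "Gratitude", "Happiness",
            "Sadness", "Disgust", "Anger", "Fear"]
ROWS = {
    "Hand":      [71.4, 42.9, 100.0, 14.3, 66.7, 57.1, 71.4, 14.3],
    "Wrist":     [42.9, 14.3, 28.6, 28.6, 33.3, 0.0, 14.3, 14.3],
    "Forearm":   [42.9, 28.6, 28.6, 28.6, 33.3, 0.0, 0.0, 0.0],
    "Elbow":     [14.3, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0],
    "Upper arm": [14.3, 14.3, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0],
    "Shoulder":  [0.0, 14.3, 0.0, 14.3, 0.0, 28.6, 0.0, 14.3],
}


def row_totals(rows):
    """Sum each body part across all emotions (the table's Total column)."""
    return {part: round(sum(vals), 1) for part, vals in rows.items()}


def column_totals(rows):
    """Sum each emotion across all body parts (the table's Total row)."""
    return [round(sum(col), 1) for col in zip(*rows.values())]


if __name__ == "__main__":
    print(row_totals(ROWS))
    print(dict(zip(EMOTIONS, column_totals(ROWS))))
    print(round(sum(row_totals(ROWS).values()), 1))  # grand total
```

Rounding to one decimal place mirrors the precision of the published values and absorbs floating-point noise.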

| Gesture | Love | Sympathy | Gratitude | Sadness | Happiness | Disgust | Anger | Fear |
|---|---|---|---|---|---|---|---|---|
| Hold | 0 | 0 | 14.3 | 14.3 | 0 | 0 | 0 | 0 |
| Massage | 0 | 14.3 | 0 | 14.3 | 14.3 | 0 | 0 | 0 |
| Nuzzle | 0 | 0 | 14.3 | 0 | 0 | 0 | 0 | 0 |
| Pat | 28.6 | 0 | 14.3 | 28.6 | 0 | 0 | 0 | 0 |
| Pinch | 0 | 0 | 0 | 0 | 0 | 28.6 | 14.3 | 14.3 |
| Poke | 0 | 0 | 0 | 0 | 0 | 28.6 | 14.3 | 28.6 |
| Press | 0 | 0 | 0 | 0 | 0 | 14.3 | 42.9 | 0 |
| Rub | 0 | 0 | 0 | 0 | 28.6 | 0 | 0 | 0 |
| Scratch | 14.3 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| Shake | 14.3 | 0 | 57.1 | 14.3 | 28.6 | 0 | 0 | 14.3 |
| Slap | 0 | 0 | 0 | 0 | 0 | 42.9 | 28.6 | 0 |
| Squeeze | 0 | 0 | 0 | 0 | 0 | 0 | 14.3 | 0 |
| Stroke | 57.1 | 28.6 | 14.3 | 42.9 | 28.6 | 0 | 0 | 0 |
| Tap | 14.3 | 28.6 | 14.3 | 0 | 28.6 | 0 | 0 | 0 |
| Tickle | 28.6 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| No touch | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 28.6 |
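Reading the gesture table one column at a time shows which gesture was used most often for each emotion. The Python sketch below transcribes the table, keeping the column order it uses (Sadness before Happiness). Because several columns contain ties, the sketch returns every gesture that shares the top value. The helper name `most_frequent_gestures` is hypothetical.

```python
# Values transcribed from the gesture-by-emotion table (same column order).
EMOTIONS = ["Love", "Sympathy", "Gratitude", "Sadness",
            "Happiness", "Disgust", "Anger", "Fear"]
GESTURES = {
    "Hold":     [0, 0, 14.3, 14.3, 0, 0, 0, 0],
    "Massage":  [0, 14.3, 0, 14.3, 14.3, 0, 0, 0],
    "Nuzzle":   [0, 0, 14.3, 0, 0, 0, 0, 0],
    "Pat":      [28.6, 0, 14.3, 28.6, 0, 0, 0, 0],
    "Pinch":    [0, 0, 0, 0, 0, 28.6, 14.3, 14.3],
    "Poke":     [0, 0, 0, 0, 0, 28.6, 14.3, 28.6],
    "Press":    [0, 0, 0, 0, 0, 14.3, 42.9, 0],
    "Rub":      [0, 0, 0, 0, 28.6, 0, 0, 0],
    "Scratch":  [14.3, 0, 0, 0, 0, 0, 0, 0],
    "Shake":    [14.3, 0, 57.1, 14.3, 28.6, 0, 0, 14.3],
    "Slap":     [0, 0, 0, 0, 0, 42.9, 28.6, 0],
    "Squeeze":  [0, 0, 0, 0, 0, 0, 14.3, 0],
    "Stroke":   [57.1, 28.6, 14.3, 42.9, 28.6, 0, 0, 0],
    "Tap":      [14.3, 28.6, 14.3, 0, 28.6, 0, 0, 0],
    "Tickle":   [28.6, 0, 0, 0, 0, 0, 0, 0],
    "No touch": [0, 0, 0, 0, 0, 0, 0, 28.6],
}


def most_frequent_gestures(emotion):
    """Return every gesture tied for the highest value in one emotion column."""
    col = EMOTIONS.index(emotion)
    best = max(vals[col] for vals in GESTURES.values())
    return [g for g, vals in GESTURES.items() if vals[col] == best]


if __name__ == "__main__":
    for emotion in EMOTIONS:
        print(emotion, most_frequent_gestures(emotion))
```

For example, Stroke tops the Love column and Shake tops the Gratitude column. Fear is split between Poke and No touch.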

| Emotion | Dimension | Median | Average | Standard Deviation | Variance |
|---|---|---|---|---|---|
| Love | P | 0.75 | 0.71 | 0.09 | 0.01 |
| | A | 0.25 | 0.14 | 0.52 | 0.27 |
| | D | 0.00 | 0.07 | 0.24 | 0.06 |
| Sympathy | P | 0.00 | −0.07 | 0.35 | 0.12 |
| | A | −0.25 | −0.25 | 0.41 | 0.17 |
| | D | 0.25 | 0.04 | 0.47 | 0.22 |
| Gratitude | P | 0.75 | 0.71 | 0.17 | 0.03 |
| | A | 0.25 | 0.25 | 0.32 | 0.10 |
| | D | 0.25 | 0.07 | 0.43 | 0.18 |
| Sadness | P | −0.75 | −0.68 | 0.24 | 0.06 |
| | A | −0.50 | −0.50 | 0.29 | 0.08 |
| | D | −0.50 | −0.50 | 0.35 | 0.13 |
| Happiness | P | 0.75 | 0.79 | 0.17 | 0.03 |
| | A | 0.50 | 0.43 | 0.37 | 0.14 |
| | D | 0.25 | 0.25 | 0.32 | 0.10 |
| Disgust | P | −0.75 | −0.71 | 0.30 | 0.09 |
| | A | 0.25 | 0.25 | 0.29 | 0.08 |
| | D | 0.50 | 0.43 | 0.35 | 0.12 |
| Anger | P | −0.75 | −0.68 | 0.12 | 0.01 |
| | A | 0.75 | 0.68 | 0.19 | 0.04 |
| | D | 0.50 | 0.61 | 0.24 | 0.06 |
| Fear | P | −0.50 | −0.61 | 0.13 | 0.02 |
| | A | 0.25 | 0.14 | 0.40 | 0.16 |
| | D | −0.75 | −0.64 | 0.13 | 0.02 |
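The PAD table gives each emotion an average point in pleasure-arousal-dominance space. One simple way to see how well separated those points are is a nearest-centroid lookup: given a new (P, A, D) rating, return the emotion whose average is closest in Euclidean distance. This sketch is an illustration only. The study does not describe such a classifier, the sketch uses only the Average column and ignores the spread, and the function name `nearest_emotion` is hypothetical.

```python
import math

# Average (P, A, D) coordinates transcribed from the PAD table.
PAD_MEANS = {
    "Love":      (0.71, 0.14, 0.07),
    "Sympathy":  (-0.07, -0.25, 0.04),
    "Gratitude": (0.71, 0.25, 0.07),
    "Sadness":   (-0.68, -0.50, -0.50),
    "Happiness": (0.79, 0.43, 0.25),
    "Disgust":   (-0.71, 0.25, 0.43),
    "Anger":     (-0.68, 0.68, 0.61),
    "Fear":      (-0.61, 0.14, -0.64),
}


def nearest_emotion(p, a, d):
    """Return the emotion whose average PAD point is closest (Euclidean) to (p, a, d)."""
    return min(PAD_MEANS, key=lambda e: math.dist((p, a, d), PAD_MEANS[e]))


if __name__ == "__main__":
    print(nearest_emotion(-0.7, 0.7, 0.6))   # strongly unpleasant, aroused, dominant
    print(nearest_emotion(0.8, 0.4, 0.2))    # pleasant and moderately aroused
```

`math.dist` requires Python 3.8 or later. A fuller treatment could weight each axis by the reported standard deviations, but that is beyond this sketch.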

| Adjective Pairs Used in the Semantic Differential (SD) Questionnaire | | |
|---|---|---|
| Gentle | - | Scary |
| Pleasant | - | Unpleasant |
| Friendly | - | Unfriendly |
| Safe | - | Dangerous |
| Warm | - | Cold |
| Cute | - | Hateful |
| Casual | - | Formal |
| Easy to understand | - | Difficult to understand |
| Approachable | - | Unapproachable |
| Cheerful | - | Gloomy |
| Considerate | - | Selfish |
| Funny | - | Unfunny |
| Amusing | - | Obnoxious |
| Likeable | - | Dislikeable |
| Interesting | - | Boring |
| Good | - | Bad |

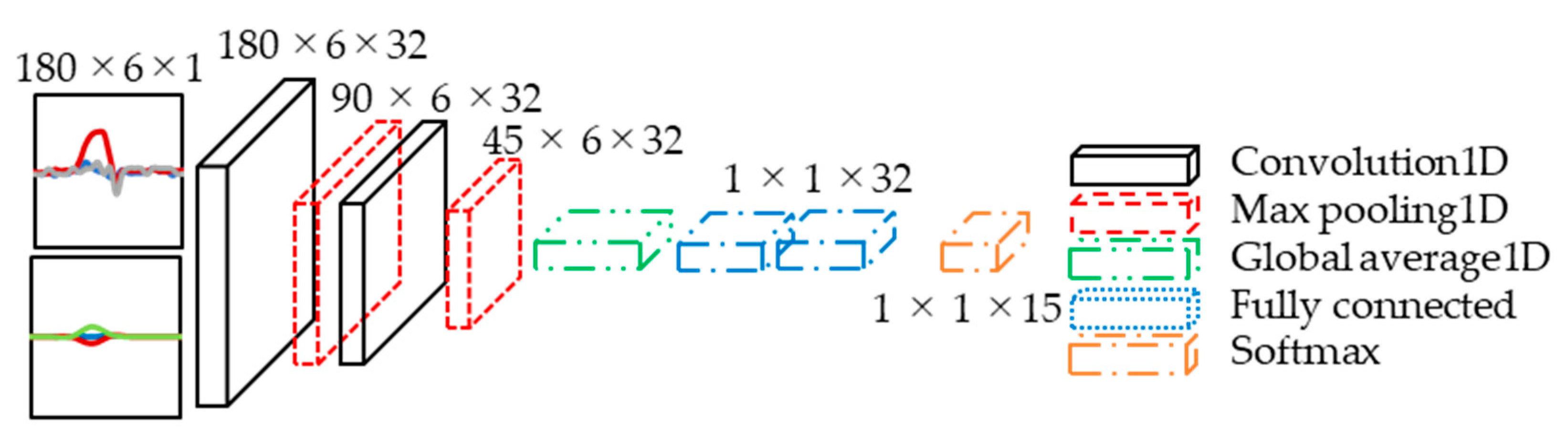

| Layer Type | Activation | Kernel Size | Padding | Output Shape | Param |
|---|---|---|---|---|---|
| Convolution 1D | ReLU | 5 | same | (None, 180, 32) | 992 |
| Max pooling 1D | - | - | - | (None, 90, 32) | 0 |
| Convolution 1D | ReLU | 3 | same | (None, 90, 32) | 3104 |
| Max pooling 1D | - | - | - | (None, 45, 32) | 0 |
| Convolution 1D | ReLU | 3 | same | (None, 45, 32) | 3104 |
| Max pooling 1D | - | - | - | (None, 22, 32) | 0 |
| Global average pooling 1D | - | - | - | (None, 32) | 0 |
| Dense | ReLU | - | - | (None, 32) | 1056 |
| Dense | Softmax | - | - | (None, 16) | 495 |
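The output shapes and parameter counts of the 1D network follow from standard layer arithmetic:

- A convolution with `same` padding keeps the sequence length and has kernel × input channels × filters weights, plus one bias per filter.
- Max pooling with pool size 2, stride 2 and `valid` padding halves the length using floor division.
- A dense layer has inputs × outputs weights, plus one bias per output.

The pure-Python sketch below recomputes the table row by row. It rests on several assumptions. The 180 time steps are read off the first output shape. The 6 input channels are inferred from 992 = 5 × 6 × 32 + 32. The pooling settings are assumed from the shapes, not stated in the table. The helper names are hypothetical.

```python
def conv1d(length, c_in, filters, kernel):
    """Conv1D with padding='same': length unchanged, weights + one bias per filter."""
    params = kernel * c_in * filters + filters
    return length, filters, params


def maxpool1d(length, pool=2):
    """Max pooling with pool size == stride and 'valid' padding (floor division)."""
    return (length - pool) // pool + 1


def dense(n_in, n_out):
    """Fully connected layer: weight matrix plus one bias per output unit."""
    return n_out, n_in * n_out + n_out


def summarize_1d(length=180, channels=6, classes=16):
    """Return (layer, output shape without batch axis, params) for each layer."""
    layers = []
    for kernel in (5, 3, 3):  # three conv + pool stages, 32 filters each
        length, channels, params = conv1d(length, channels, 32, kernel)
        layers.append(("Conv1D", (length, channels), params))
        length = maxpool1d(length)
        layers.append(("MaxPooling1D", (length, channels), 0))
    layers.append(("GlobalAveragePooling1D", (channels,), 0))
    units, params = dense(channels, 32)
    layers.append(("Dense", (units,), params))
    units, params = dense(units, classes)
    layers.append(("Dense (softmax)", (units,), params))
    return layers


if __name__ == "__main__":
    for name, shape, params in summarize_1d():
        print(f"{name:24s} {str(shape):12s} {params}")
```

The third convolution keeps a length of 45, and only the pooling layer after it reduces the length to 22. With `classes=16` the final softmax layer has 32 × 16 + 16 = 528 parameters. The 495 listed in the table is what `classes=15` gives (32 × 15 + 15).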

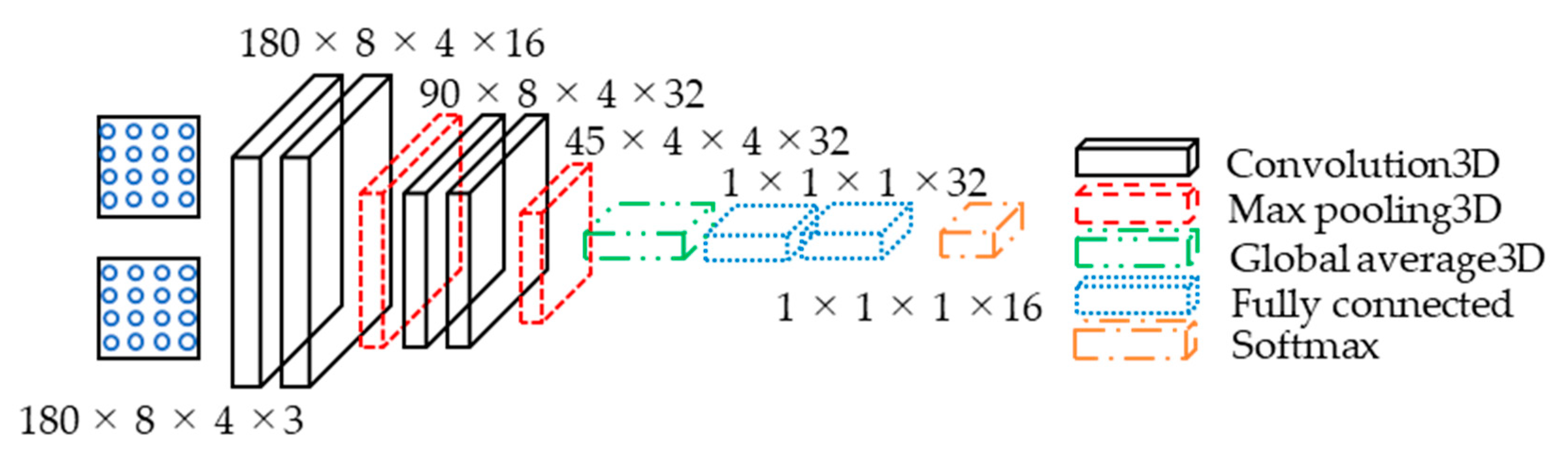

| Layer Type | Activation | Kernel Size | Padding | Output Shape | Param |
|---|---|---|---|---|---|
| Convolution 3D | ReLU | (5, 2, 2) | same | (None, 180, 8, 4, 16) | 976 |
| Convolution 3D | ReLU | (5, 2, 2) | same | (None, 180, 8, 4, 16) | 5136 |
| Max pooling 3D | - | - | - | (None, 90, 8, 4, 16) | 0 |
| Convolution 3D | ReLU | (3, 2, 2) | same | (None, 90, 8, 4, 32) | 6176 |
| Convolution 3D | ReLU | (3, 2, 2) | same | (None, 90, 8, 4, 32) | 12,320 |
| Max pooling 3D | - | - | - | (None, 45, 8, 4, 32) | 0 |
| Convolution 3D | ReLU | (3, 2, 2) | same | (None, 45, 8, 4, 32) | 12,320 |
| Global average pooling 3D | - | - | - | (None, 32) | 0 |
| Dense | ReLU | - | - | (None, 32) | 1056 |
| Dense | ReLU | - | - | (None, 32) | 1056 |
| Dense | Softmax | - | - | (None, 16) | 528 |
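The same arithmetic applies to the 3D network. A 3D convolution has (product of kernel dimensions) × input channels × filters weights, plus one bias per filter. The pooling layers shrink only the time axis, since the 8 × 4 spatial grid is unchanged in the table.

The Python sketch below reproduces every row of the table. It rests on several assumptions. The input is an 8 × 4 grid of taxels over 180 time steps, which is read off the output shapes. There are 3 input channels, inferred from 976 = 5 × 2 × 2 × 3 × 16 + 16. Pooling uses a size of (2, 1, 1), inferred from the shapes. These are inferences, not statements from the study, and the helper names are hypothetical.

```python
from math import prod


def conv3d(shape, c_in, filters, kernel):
    """3D convolution with padding='same': (time, rows, cols) unchanged."""
    params = prod(kernel) * c_in * filters + filters
    return shape, filters, params


def maxpool_time(shape, pool=2):
    """Max pooling over the time axis only, i.e. pool size (2, 1, 1) (assumed)."""
    t, h, w = shape
    return ((t - pool) // pool + 1, h, w)


# (filters, kernel) for each convolution; None marks a time-only pooling layer.
PLAN = [(16, (5, 2, 2)), (16, (5, 2, 2)), None,
        (32, (3, 2, 2)), (32, (3, 2, 2)), None,
        (32, (3, 2, 2))]


def summarize_3d(shape=(180, 8, 4), channels=3, classes=16):
    """Return (layer, output shape without batch axis, params) for each layer."""
    layers = []
    for step in PLAN:
        if step is None:
            shape = maxpool_time(shape)
            layers.append(("MaxPooling3D", shape + (channels,), 0))
        else:
            filters, kernel = step
            shape, channels, params = conv3d(shape, channels, filters, kernel)
            layers.append(("Conv3D", shape + (channels,), params))
    layers.append(("GlobalAveragePooling3D", (channels,), 0))
    units = channels
    for _ in range(2):  # two hidden dense layers with 32 ReLU units each
        layers.append(("Dense", (32,), units * 32 + 32))
        units = 32
    layers.append(("Dense (softmax)", (classes,), units * classes + classes))
    return layers


if __name__ == "__main__":
    for name, shape, params in summarize_3d():
        print(f"{name:24s} {str(shape):20s} {params}")
```

Every recomputed count, including the 528-parameter softmax layer, matches the 3D table.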

| Adjective Pair (+1 to −1) | Average SD Value Before Experiment | Average SD Value After Experiment | Difference | p-Value |
|---|---|---|---|---|
| Gentle–Scary | 0.0384 | 0.154 | 0.12 | 0.196 |
| Pleasant–Unpleasant | 0.212 | 0.327 | 0.12 | 0.236 |
| Friendly–Unfriendly * | 0.0961 | 0.404 | 0.31 | 0.01 |
| Safe–Dangerous ** | 0.0385 | 0.442 | 0.4 | 0.004 |
| Warm–Cold * | −0.0385 | 0.269 | 0.31 | 0.03 |
| Cute–Hateful | 0.327 | 0.442 | 0.12 | 0.221 |
| Casual–Formal ** | 0.135 | 0.365 | 0.5 | 0 |
| Easy–Difficult (to understand) | 0.0192 | 0.288 | 0.27 | 0.055 |
| Approachable–Unapproachable ** | 0.0192 | 0.442 | 0.42 | 0.003 |
| Cheerful–Gloomy | 0.154 | 0.212 | 0.06 | 0.583 |
| Considerate–Selfish | 0.115 | 0.135 | 0.02 | 0.853 |
| Funny–Unfunny | 0.365 | 0.5 | 0.13 | 0.157 |
| Amusing–Obnoxious * | 0.25 | 0.481 | 0.23 | 0.006 |
| Likeable–Dislikeable | 0.442 | 0.615 | 0.17 | 0.112 |
| Interesting–Boring | 0.538 | 0.519 | −0.02 | 0.868 |
| Good–Bad | 0.423 | 0.481 | 0.06 | 0.575 |


© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Hayashi, A.; Rincon-Ardila, L.K.; Venture, G. Improving HRI with Force Sensing. Machines 2022, 10, 15. https://doi.org/10.3390/machines10010015
