Approximation Results for Equilibrium Problems Involving Strongly Pseudomonotone Bifunction in Real Hilbert Spaces

Abstract

1. Background

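Let $C$ be a nonempty, closed, convex subset of a real Hilbert space $H$ and let $f : H \times H \to \mathbb{R}$ be a bifunction. We recall the following properties of $f$:

- (i) $f$ is strongly monotone on $C$ if there exists $\gamma > 0$ such that $f(x, y) + f(y, x) \le -\gamma \|x - y\|^2$ for all $x, y \in C$;

- (ii) $f$ is monotone on $C$ if $f(x, y) + f(y, x) \le 0$ for all $x, y \in C$;

- (iii) $f$ is $\gamma$-strongly pseudo-monotone on $C$ if $f(x, y) \ge 0 \implies f(y, x) \le -\gamma \|x - y\|^2$ for all $x, y \in C$;

- (iv) $f$ is pseudo-monotone on $C$ if $f(x, y) \ge 0 \implies f(y, x) \le 0$ for all $x, y \in C$; and

- (v) $f$ satisfies the Lipschitz-type condition on $C$ if there exist constants $c_1, c_2 > 0$ such that $f(x, z) \le f(x, y) + f(y, z) + c_1 \|x - y\|^2 + c_2 \|y - z\|^2$ for all $x, y, z \in C$.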
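Since these definitions are pure inequalities, a quick numerical sanity check can make them concrete. The sketch below is an illustration only, not taken from the paper: it uses the hypothetical bifunction $f(x, y) = \langle Ax, y - x \rangle$ on $\mathbb{R}^2$, whose constants are known in closed form ($\gamma = 3$, the smallest eigenvalue of the symmetric part $\mathrm{diag}(4, 3)$ of $A$, and $c_1 = c_2 = \|A\|/2$), and tests properties (i), (iii) and (v) in their standard forms on random samples. The helper names `check_strongly_monotone`, `check_strongly_pseudomonotone` and `check_lipschitz_type` are hypothetical. Sampling can refute an inequality but never prove it.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical example: f(x, y) = <A x, y - x>; the symmetric part of A is diag(4, 3).
A = np.array([[4.0, 1.0], [-1.0, 3.0]])
L = np.linalg.norm(A, 2)  # spectral norm = Lipschitz constant of x -> A x


def f(x, y):
    return float(np.dot(A @ x, y - x))


def sq(d):
    return float(np.dot(d, d))


def check_strongly_monotone(f, gamma, pairs, eps=1e-12):
    # (i): f(x, y) + f(y, x) <= -gamma * ||x - y||^2 on every sampled pair
    return all(f(x, y) + f(y, x) <= -gamma * sq(x - y) + eps for x, y in pairs)


def check_strongly_pseudomonotone(f, gamma, pairs, eps=1e-12):
    # (iii): f(x, y) >= 0 implies f(y, x) <= -gamma * ||x - y||^2
    return all(f(y, x) <= -gamma * sq(x - y) + eps for x, y in pairs if f(x, y) >= 0)


def check_lipschitz_type(f, c1, c2, triples, eps=1e-12):
    # (v): f(x, z) <= f(x, y) + f(y, z) + c1 ||x - y||^2 + c2 ||y - z||^2
    return all(
        f(x, z) <= f(x, y) + f(y, z) + c1 * sq(x - y) + c2 * sq(y - z) + eps
        for x, y, z in triples
    )


pairs = [(rng.normal(size=2), rng.normal(size=2)) for _ in range(1000)]
triples = [tuple(rng.normal(size=2) for _ in range(3)) for _ in range(1000)]

print(check_strongly_monotone(f, 3.0, pairs))
print(check_strongly_pseudomonotone(f, 3.0, pairs))
print(check_lipschitz_type(f, L / 2, L / 2, triples))
```

For this $f$, the identity $f(x, y) + f(y, x) = -\langle A(x - y), x - y \rangle$ explains why $\gamma = 3$ passes while any $\gamma > 4$ must fail.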

2. Preliminaries

- (i)

- with

- (ii)

- (C1) $f(y, y) = 0$ for all $y \in C$, and $f$ is strongly pseudomonotone on $C$;

- (C2) $f$ meets the Lipschitz-type condition with two constants $c_1 > 0$ and $c_2 > 0$; and

- (C3) $f(x, \cdot)$ is convex and sub-differentiable on $H$ for each fixed $x \in H$.

3. Main Results

Algorithm 1. Modified subgradient extragradient method for equilibrium problems.

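- (G1) $G$ is $\gamma$-strongly pseudo-monotone over $C$ for some $\gamma > 0$ if $\langle G(x), y - x \rangle \ge 0 \implies \langle G(y), x - y \rangle \le -\gamma \|x - y\|^2$ for all $x, y \in C$; and

- (G2) $G$ is $L$-Lipschitz continuous on $C$ if $\|G(x) - G(y)\| \le L \|x - y\|$ for all $x, y \in C$.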
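Conditions (G1) and (G2) are the usual hypotheses under which a variational inequality fits the equilibrium framework of (C1) to (C3). Assuming, as is standard, that the reduction uses $f(x, y) = \langle G(x), y - x \rangle$, that (G1) reads $\langle G(x), y - x \rangle \ge 0 \implies \langle G(y), x - y \rangle \le -\gamma \|x - y\|^2$, and that (G2) reads $\|G(x) - G(y)\| \le L\|x - y\|$, the short derivation below shows which constants result. It is a worked check under those assumptions, not a restatement of the paper's own lemma.

```latex
% Assumed reduction: f(x, y) := \langle G(x), y - x \rangle.
\begin{aligned}
&\text{(C1):}\quad f(y,y) = \langle G(y), 0 \rangle = 0, \qquad
  f(x,y) \ge 0 \;\Longrightarrow\; f(y,x) = \langle G(y), x - y \rangle \le -\gamma \|x - y\|^2; \\
&\text{(C2):}\quad f(x,z) - f(x,y) - f(y,z) = \langle G(x) - G(y),\, z - y \rangle
  \le L \|x - y\|\,\|y - z\| \le \tfrac{L}{2}\|x - y\|^2 + \tfrac{L}{2}\|y - z\|^2; \\
&\text{(C3):}\quad y \mapsto f(x,y) \text{ is affine, hence convex, with } \partial_2 f(x,y) = \{G(x)\}.
\end{aligned}
```

So, under these assumptions, (C2) holds with $c_1 = c_2 = L/2$; the last inequality is $ab \le \tfrac{1}{2}(a^2 + b^2)$.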

- (S1) Let arbitrarily.

- (S2)

- (S3) Compute where If , then STOP.

- (S4) First determine a half-space, then evaluate

- (S5) Compute where and satisfies the following conditions:

- (i) non-decreasing sequence through for each ; and

- (ii) there exists such that and
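The surviving wording of steps (S3) and (S4) follows the subgradient extragradient pattern of Censor, Gibali and Reich (cited in the references): a projection-type step onto $C$, then a step onto a half-space that contains $C$. The exact formulas and the step-size rule of (S5) are lost, so the sketch below specialises, as an assumption, to the variational-inequality case $f(x, y) = \langle G(x), y - x \rangle$, where both minimisation sub-problems reduce to projections. It uses a fixed step `lam` $< 1/L$ and an optional inertial weight `theta` in place of the paper's parameters; `subgradient_extragradient`, `project_halfspace`, `lam` and `theta` are hypothetical names, and this is not the authors' Algorithm 1.

```python
import numpy as np


def project_halfspace(x, a, y):
    """Project x onto T = {z : <a, z - y> <= 0}; T is the whole space when a = 0."""
    violation = float(np.dot(a, x - y))
    if violation <= 0.0:  # x already lies in T (always the case when a = 0)
        return x
    return x - (violation / float(np.dot(a, a))) * a


def subgradient_extragradient(G, project_C, x0, lam, theta=0.0, tol=1e-10, max_iter=1000):
    """Hypothetical sketch for f(x, y) = <G(x), y - x> with a fixed step lam < 1/L."""
    x_prev = np.asarray(x0, dtype=float)
    x = x_prev.copy()
    for _ in range(max_iter):
        v = x + theta * (x - x_prev)  # optional inertial extrapolation (theta = 0: none)
        w = v - lam * G(v)
        y = project_C(w)  # argmin over C of lam * f(v, .) + 0.5 * ||v - .||^2
        if np.linalg.norm(v - y) < tol:  # v = y means v solves the problem
            return y
        a = w - y  # normal of T = {z : <w - y, z - y> <= 0}, which contains C
        x_prev, x = x, project_halfspace(v - lam * G(y), a, y)
    return x


# Example: G(x) = A x + b is strongly monotone (symmetric part diag(4, 3)); C = [-1, 1]^2.
A = np.array([[4.0, 1.0], [-1.0, 3.0]])
b = np.array([1.0, -2.0])
L = np.linalg.norm(A, 2)  # Lipschitz constant of G
box = lambda z: np.clip(z, -1.0, 1.0)
sol = subgradient_extragradient(lambda z: A @ z + b, box, [1.0, 1.0], lam=0.5 / L, theta=0.1)
print(sol)
```

Here the zero of $G$, namely $x^* = (-5/13,\, 7/13)$, lies inside the box, so it is also the solution of the variational inequality over $C$. Projecting onto the half-space instead of onto $C$ a second time is the design choice that makes the method attractive: the half-space projection has a closed form even when $C$ is expensive to project onto.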

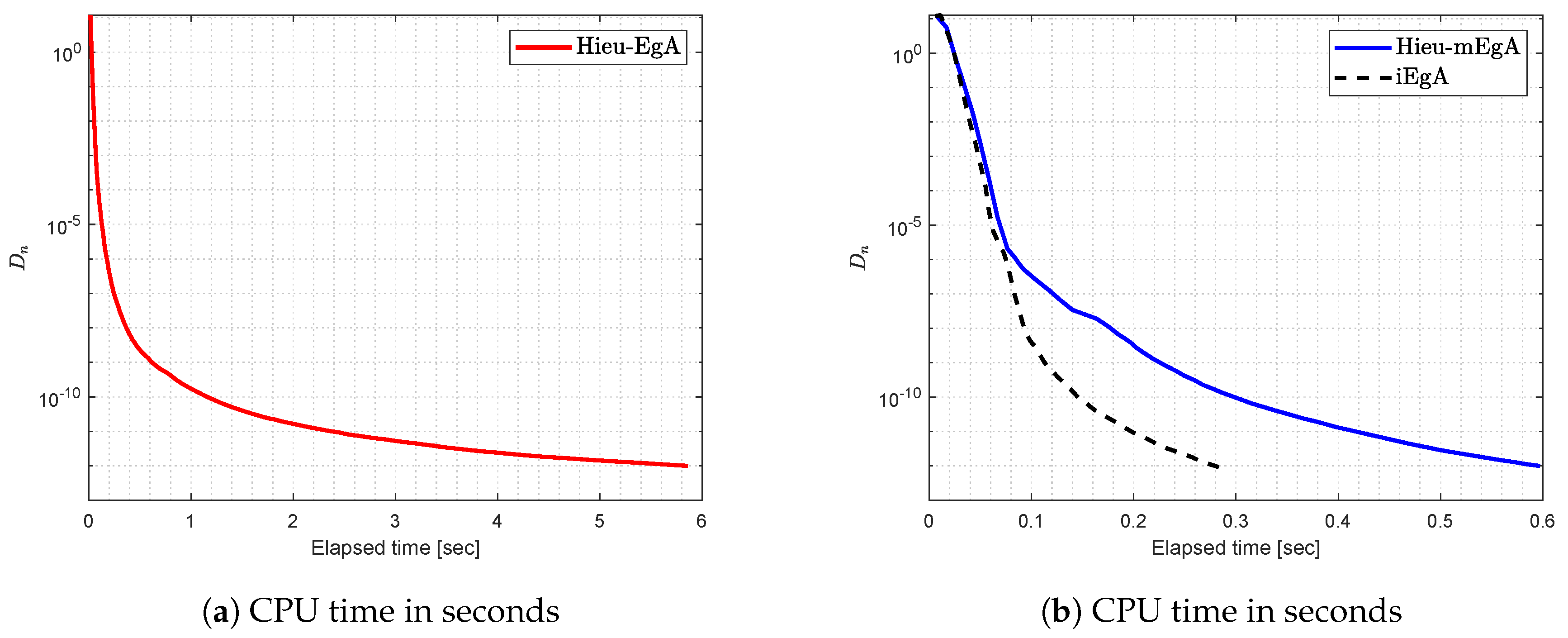

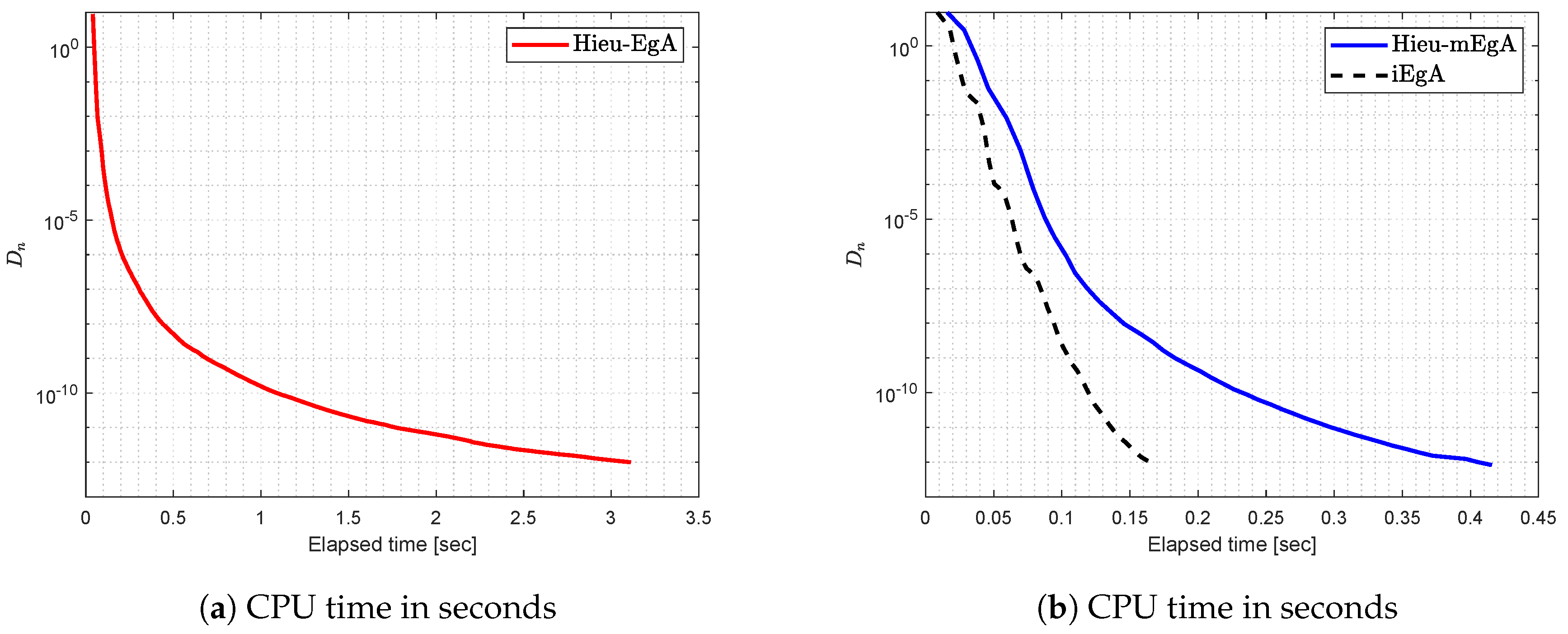

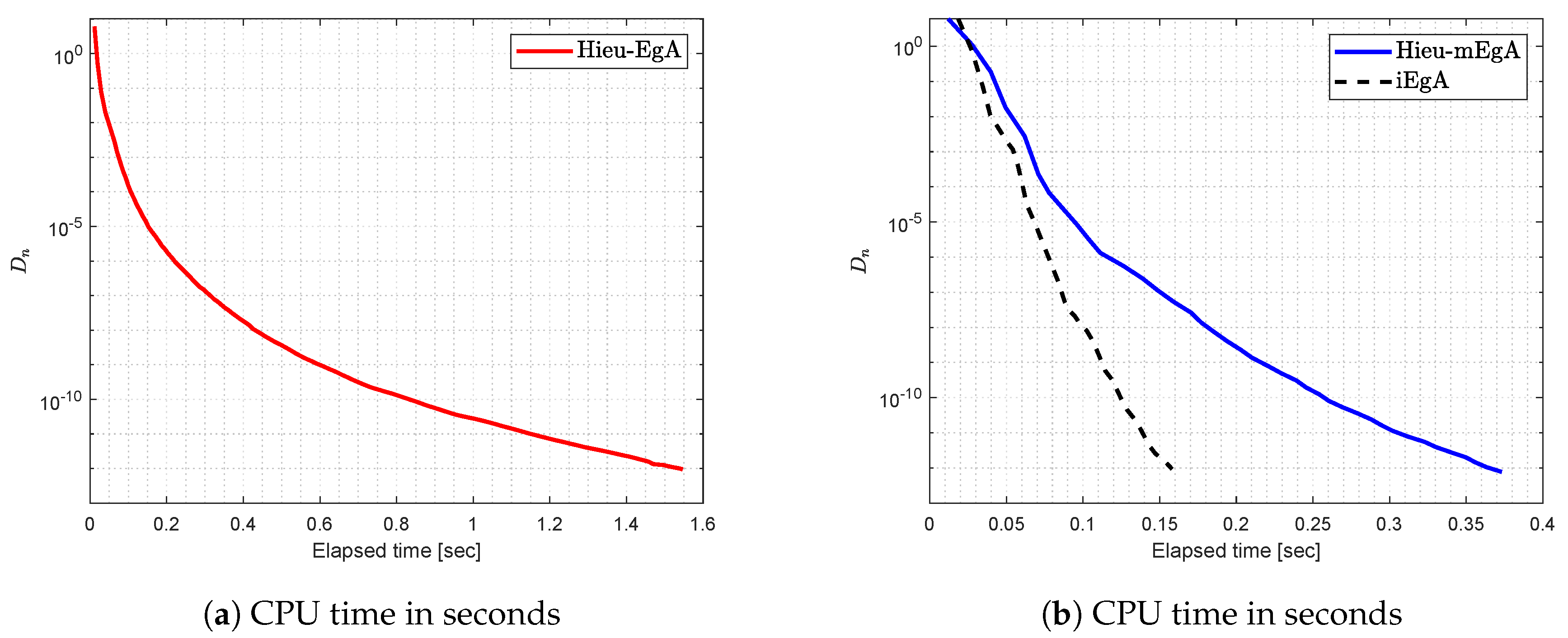

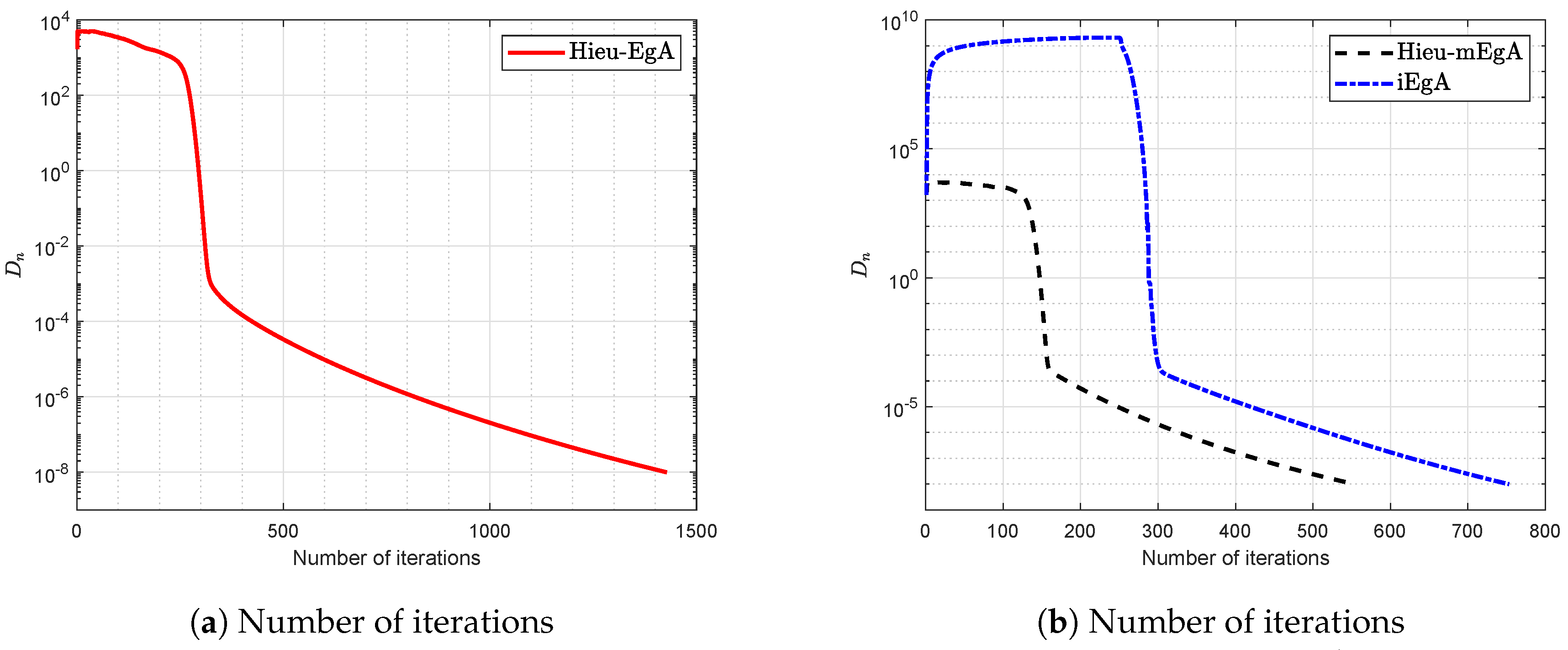

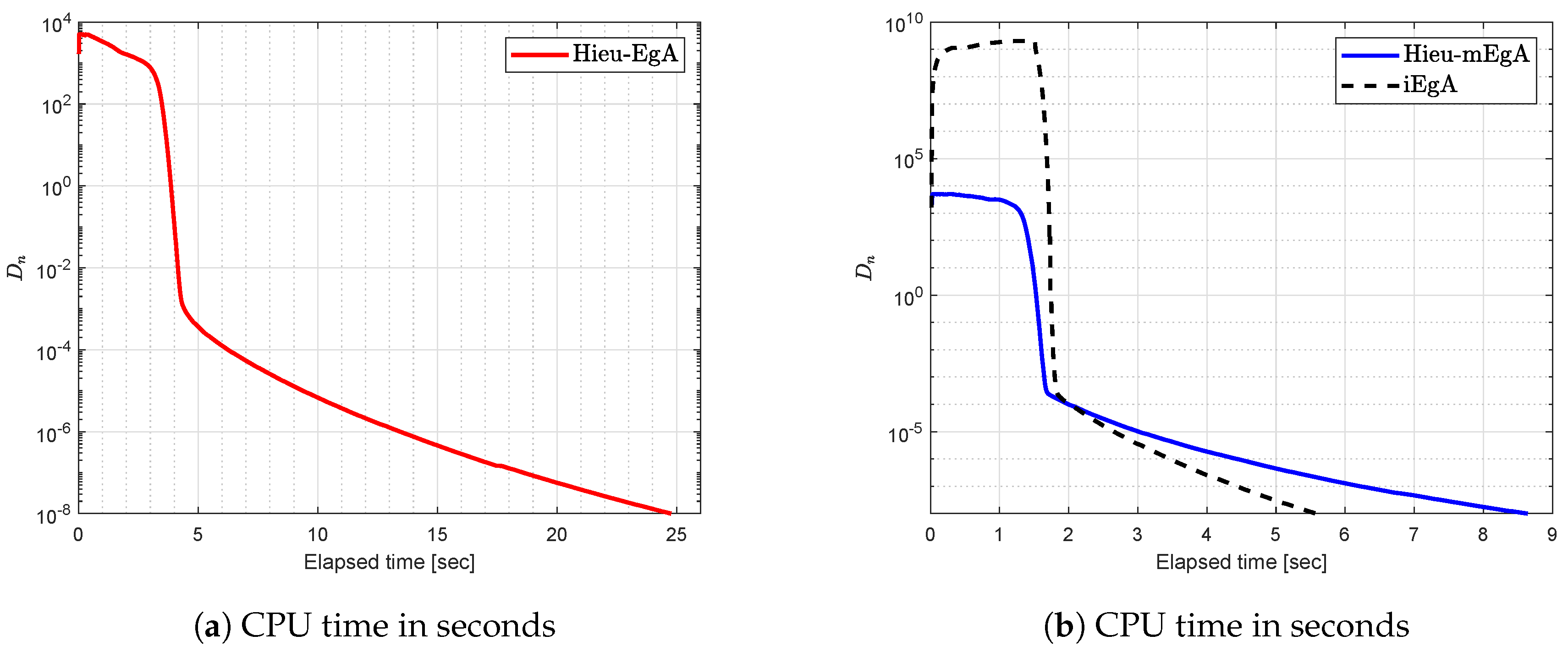

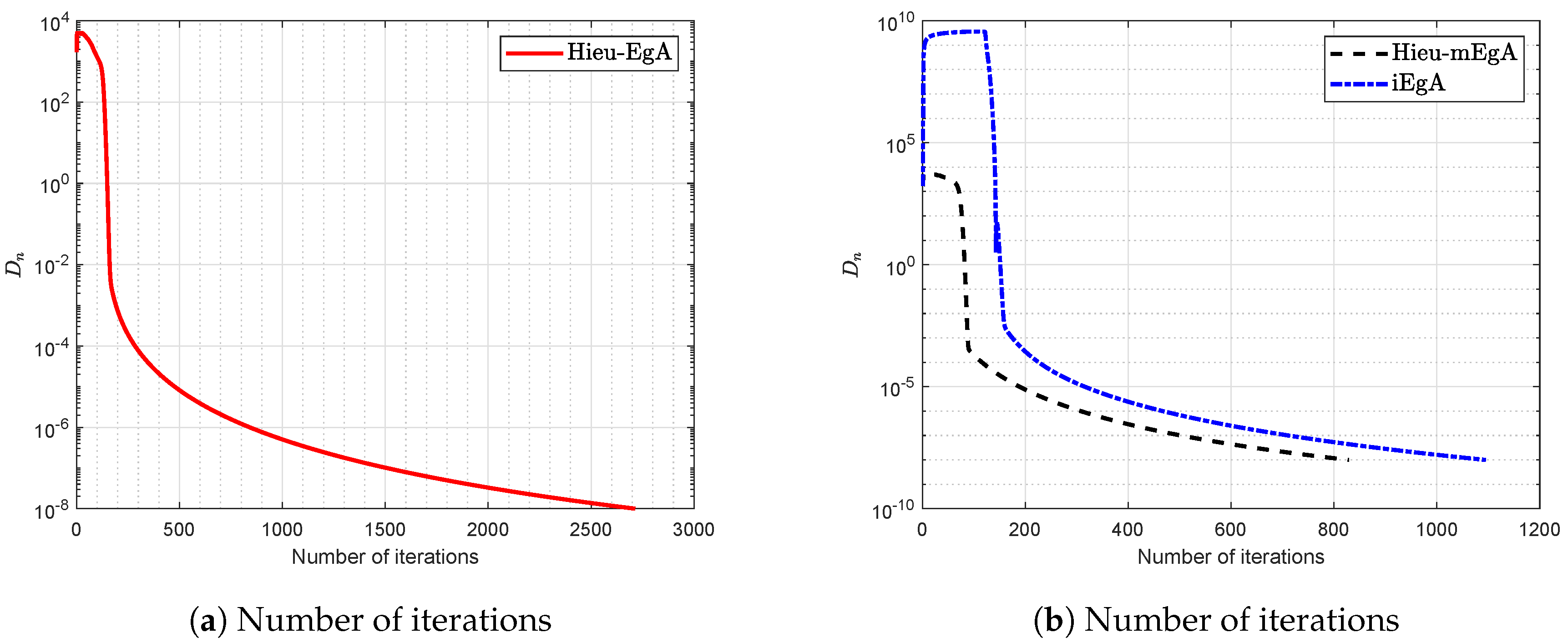

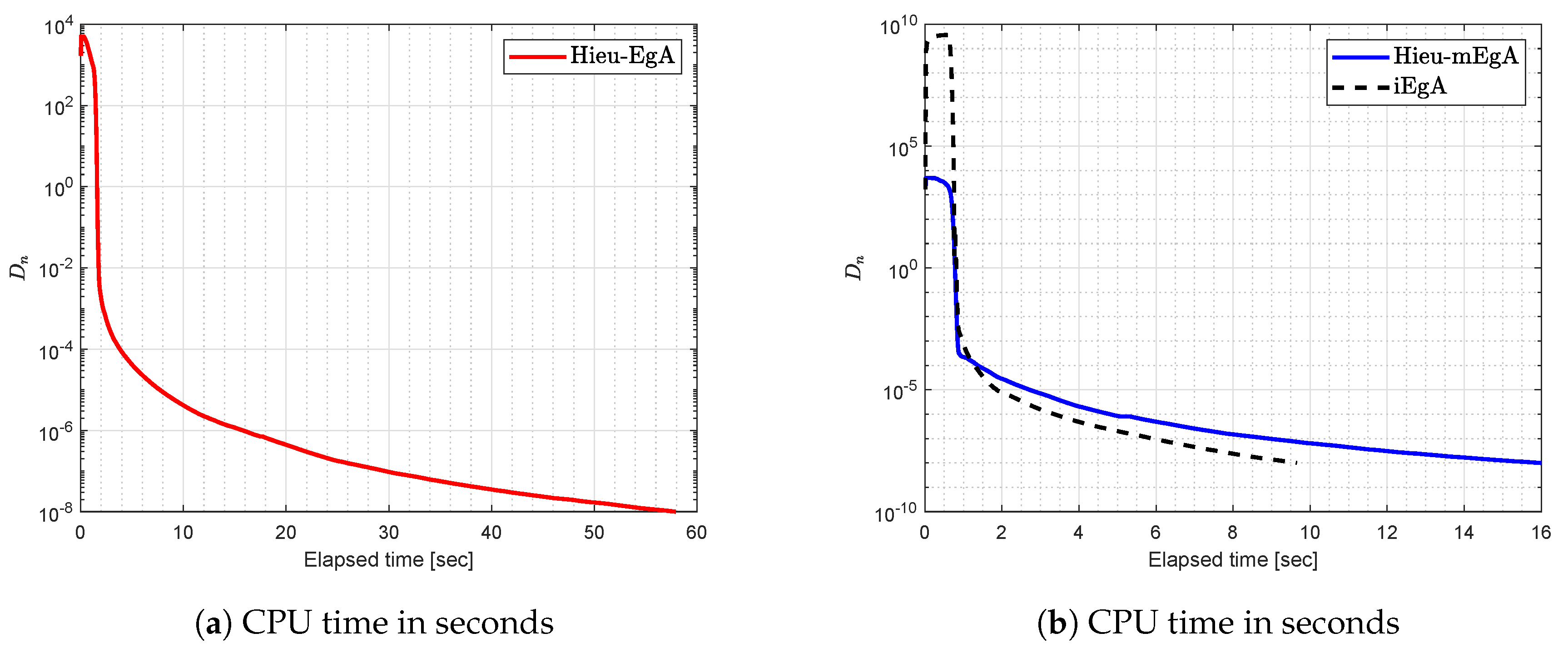

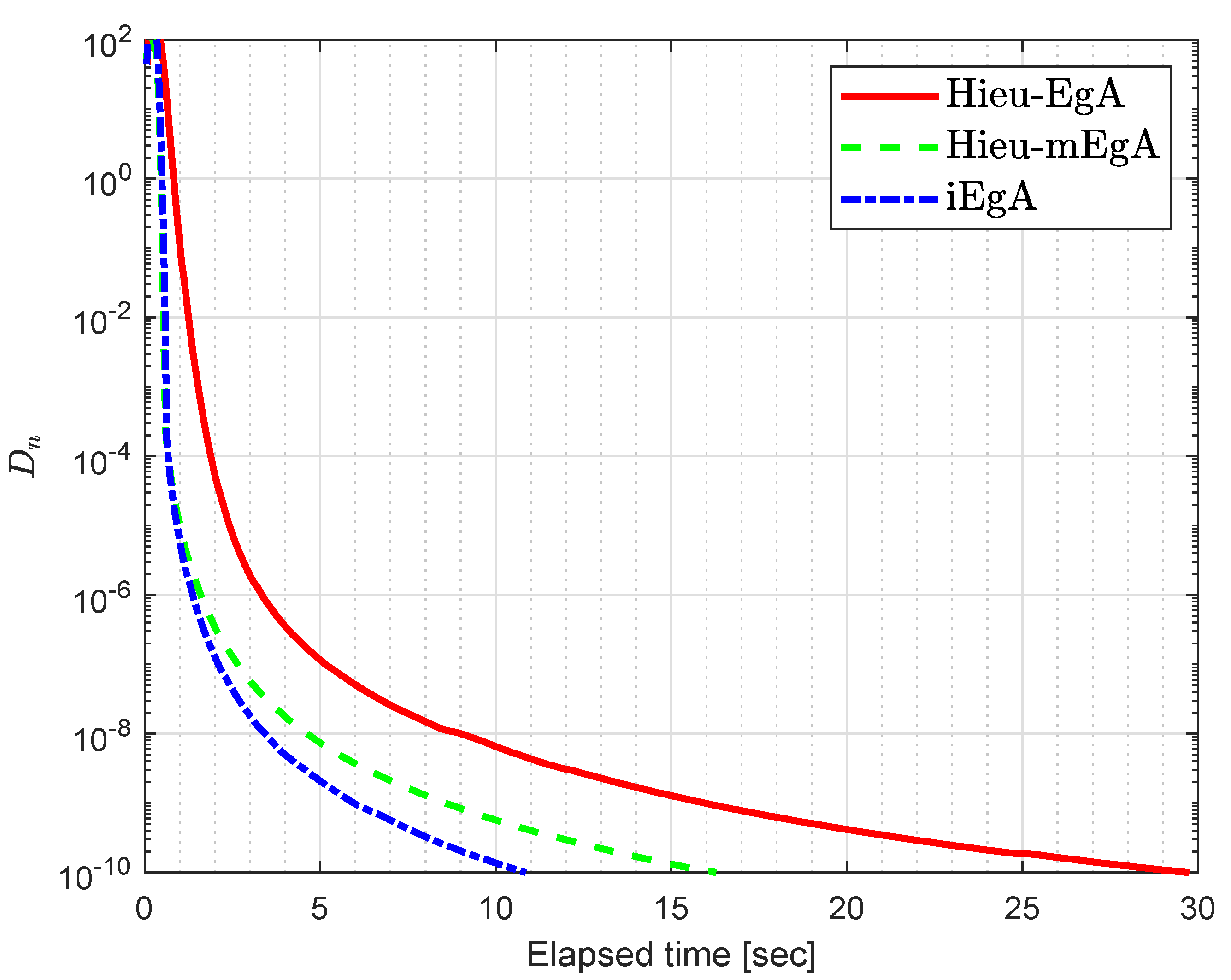

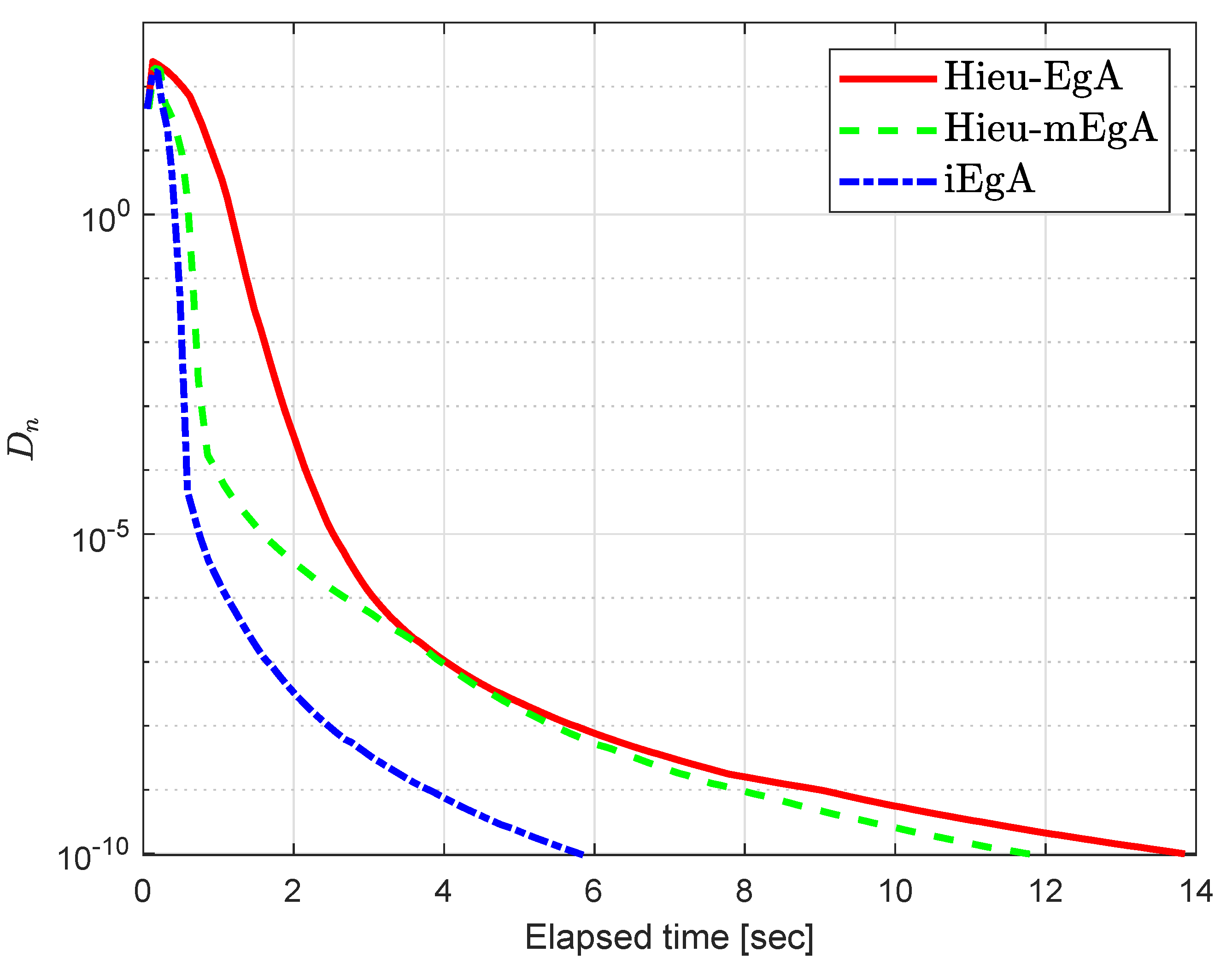

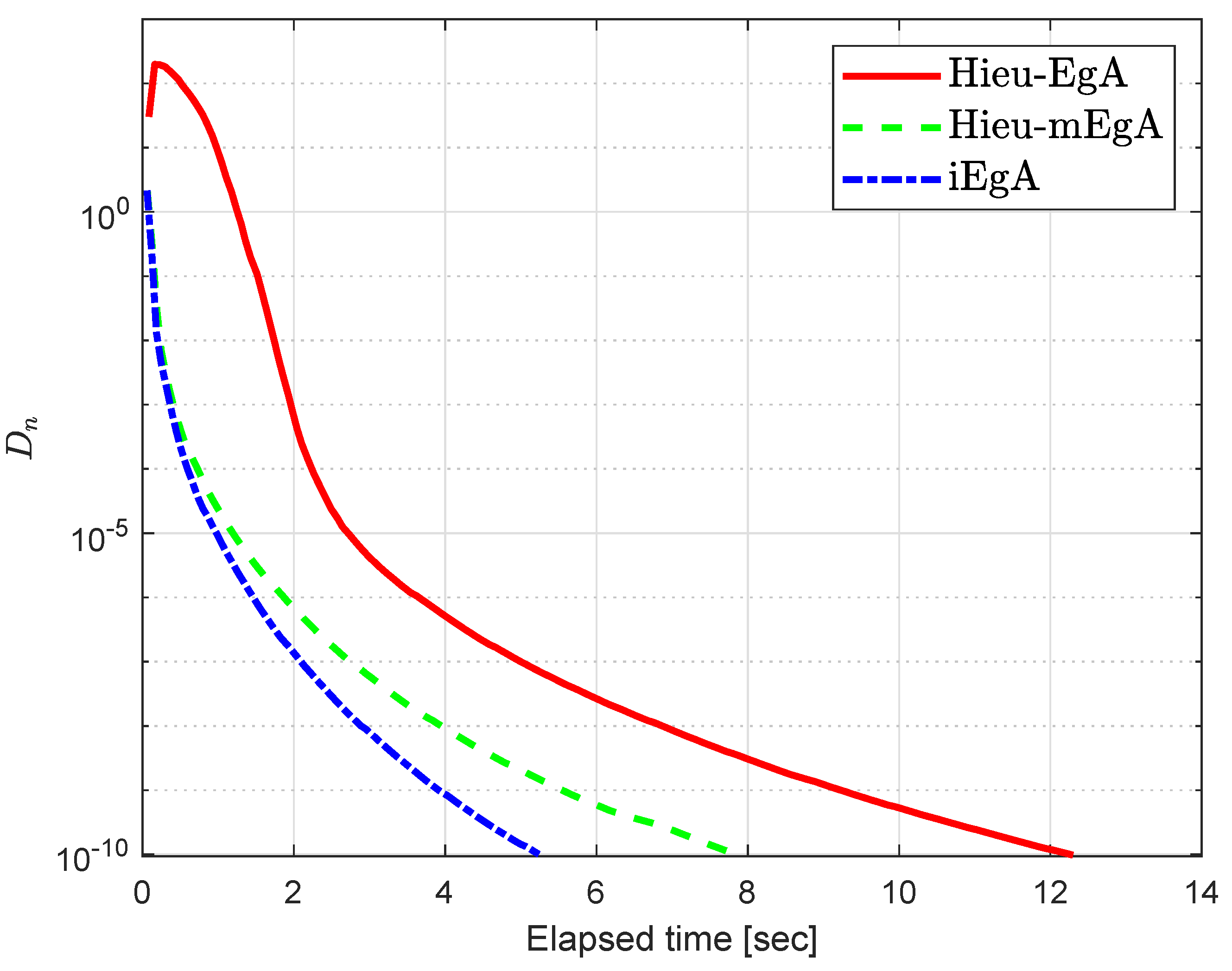

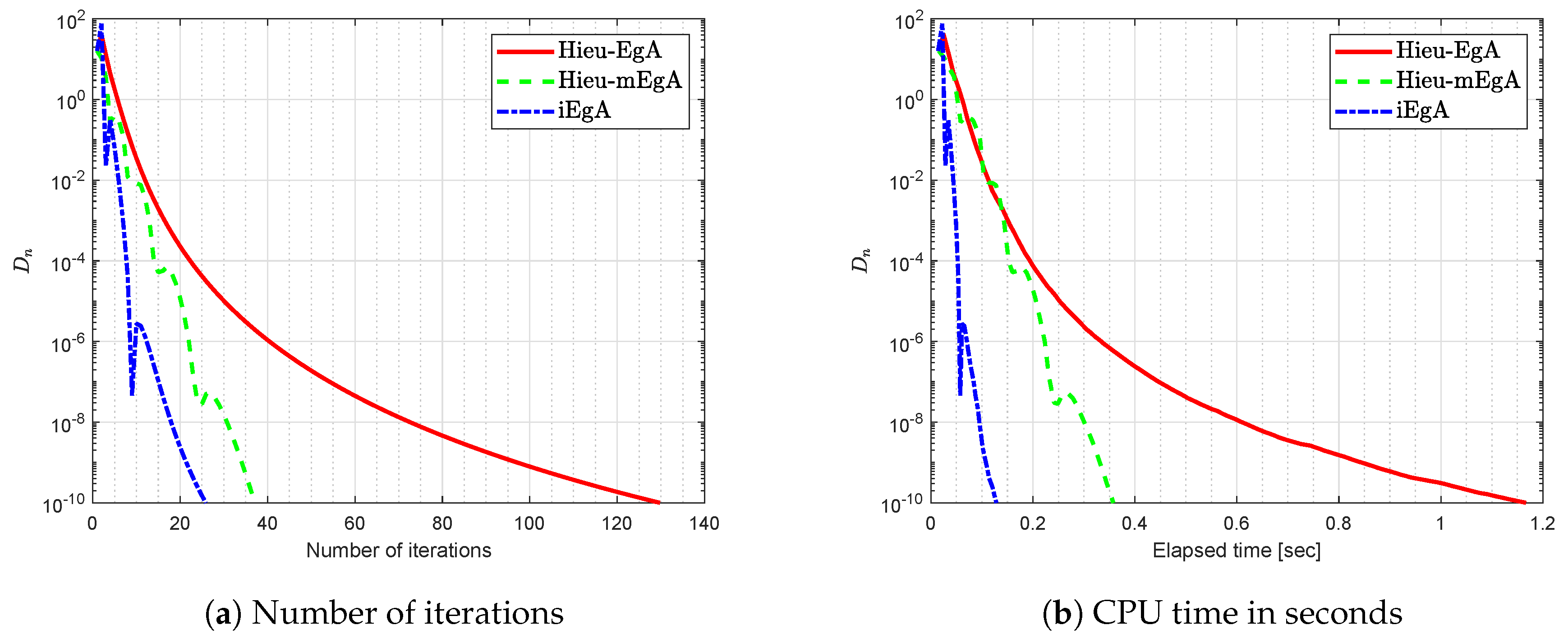

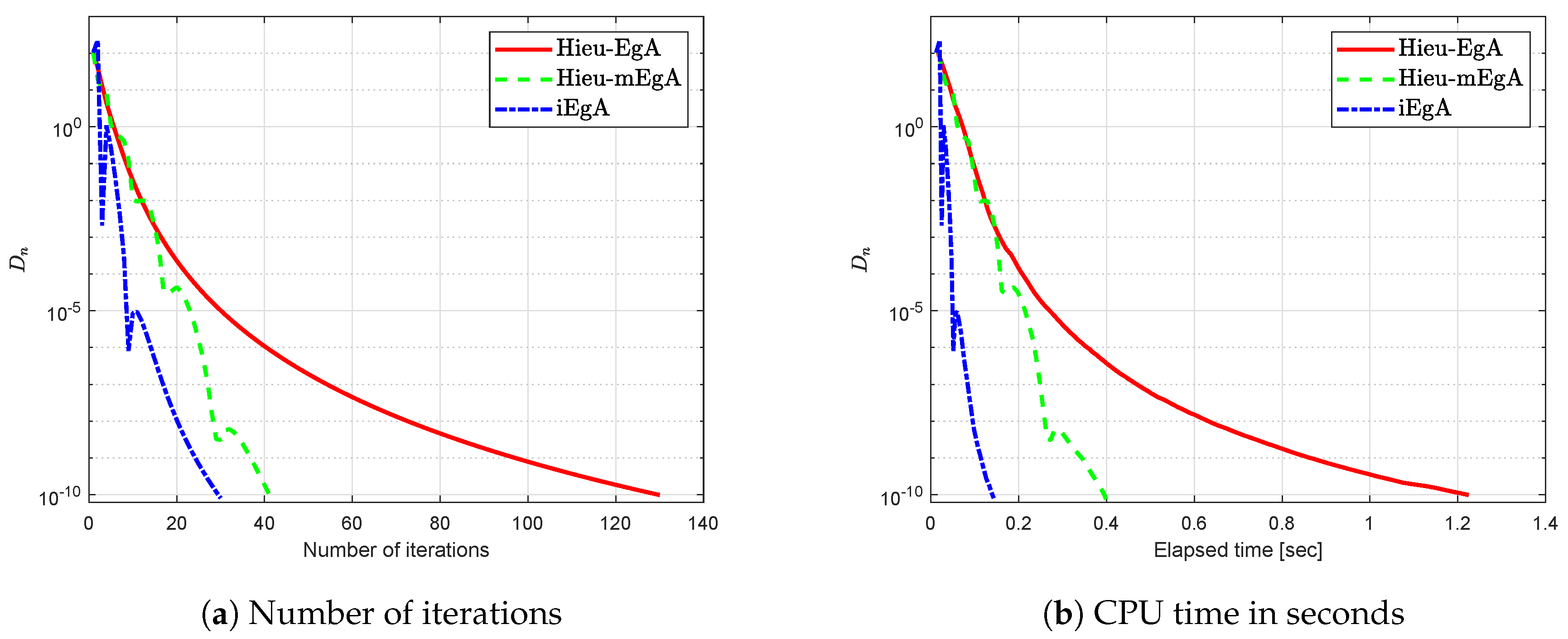

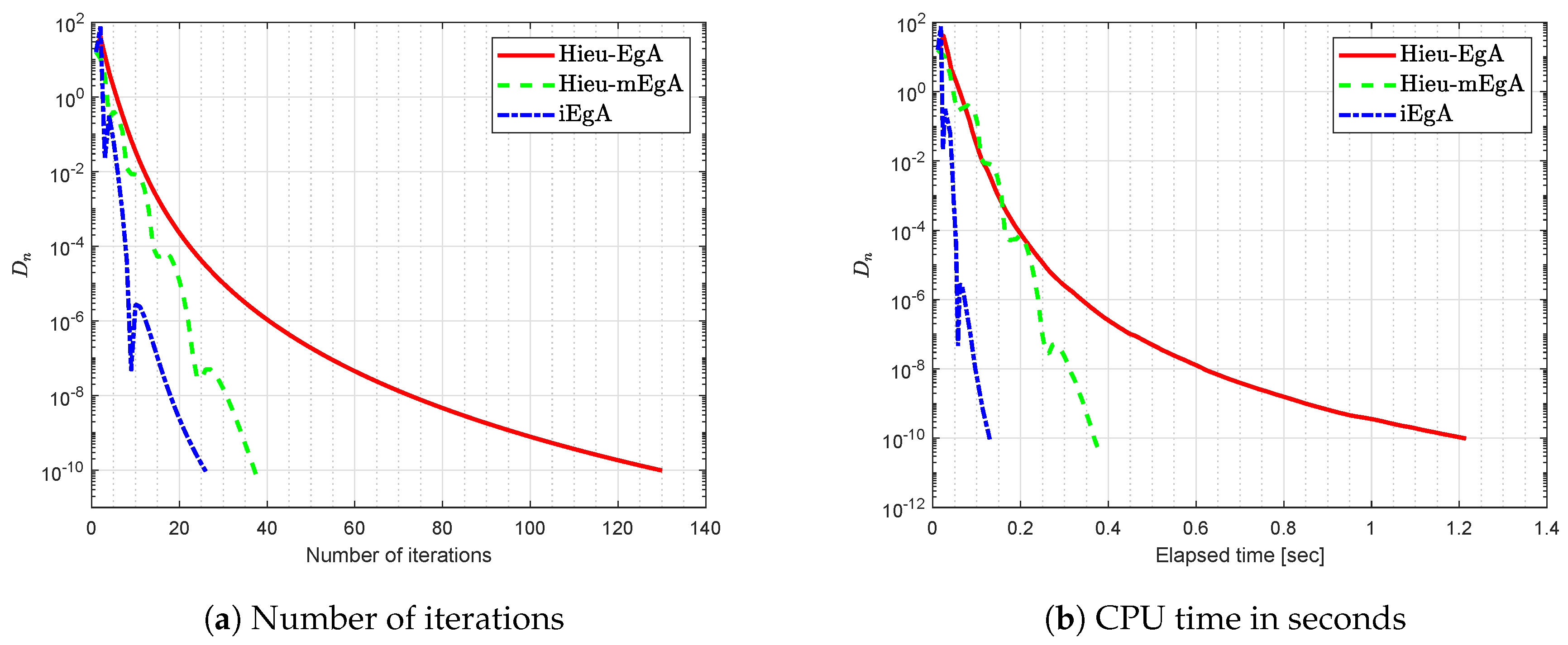

4. Numerical Illustration

- (1) For Hieu et al. [26] (Hieu-EgA), we use

- (2) For Hieu et al. [29] (Hieu-mEgA), we use and

- (3) For Algorithm 1 (iEgA), we use , and

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

- Blum, E. From optimization and variational inequalities to equilibrium problems. Math. Stud. 1994, 63, 123–145.

- Fan, K. A Minimax Inequality and Applications, Inequalities III; Shisha, O., Ed.; Academic Press: New York, NY, USA, 1972.

- Bianchi, M.; Schaible, S. Generalized monotone bifunctions and equilibrium problems. J. Optim. Theory Appl. 1996, 90, 31–43.

- Bigi, G.; Castellani, M.; Pappalardo, M.; Passacantando, M. Existence and solution methods for equilibria. Eur. J. Oper. Res. 2013, 227, 1–11.

- Muu, L.; Oettli, W. Convergence of an adaptive penalty scheme for finding constrained equilibria. Nonlinear Anal. Theory Methods Appl. 1992, 18, 1159–1166.

- Combettes, P.L.; Hirstoaga, S.A. Equilibrium programming in Hilbert spaces. J. Nonlinear Convex Anal. 2005, 6, 117–136.

- Antipin, A. Equilibrium programming: Proximal methods. Comput. Math. Math. Phys. 1997, 37, 1285–1296.

- Giannessi, F.; Maugeri, A.; Pardalos, P.M. Equilibrium Problems: Nonsmooth Optimization and Variational Inequality Models; Springer Science & Business Media: Berlin/Heidelberg, Germany, 2006; Volume 58.

- Dafermos, S. Traffic Equilibrium and Variational Inequalities. Transp. Sci. 1980, 14, 42–54.

- Todorčević, V. Harmonic Quasiconformal Mappings and Hyperbolic Type Metrics; Springer International Publishing: Berlin/Heidelberg, Germany, 2019.

- ur Rehman, H.; Kumam, P.; Cho, Y.J.; Yordsorn, P. Weak convergence of explicit extragradient algorithms for solving equilibirum problems. J. Inequalities Appl. 2019, 2019.

- ur Rehman, H.; Kumam, P.; Je Cho, Y.; Suleiman, Y.I.; Kumam, W. Modified Popov’s explicit iterative algorithms for solving pseudomonotone equilibrium problems. Optim. Methods Softw. 2020, 1–32.

- Todorčević, V. Subharmonic behavior and quasiconformal mappings. Anal. Math. Phys. 2019, 9, 1211–1225.

- Koskela, P.; Manojlović, V. Quasi-Nearly Subharmonic Functions and Quasiconformal Mappings. Potential Anal. 2011, 37, 187–196.

- ur Rehman, H.; Kumam, P.; Abubakar, A.B.; Cho, Y.J. The extragradient algorithm with inertial effects extended to equilibrium problems. Comput. Appl. Math. 2020, 39.

- Hammad, H.A.; ur Rehman, H.; la Sen, M.D. Advanced Algorithms and Common Solutions to Variational Inequalities. Symmetry 2020, 12, 1198.

- Hieu, D.V.; Quy, P.K.; Vy, L.V. Explicit iterative algorithms for solving equilibrium problems. Calcolo 2019, 56.

- Hieu, D.V. New inertial algorithm for a class of equilibrium problems. Numer. Algorithms 2018, 80, 1413–1436.

- Anh, P.K.; Hai, T.N. Novel self-adaptive algorithms for non-Lipschitz equilibrium problems with applications. J. Glob. Optim. 2018, 73, 637–657.

- Anh, P.N.; Anh, T.T.H.; Hien, N.D. Modified basic projection methods for a class of equilibrium problems. Numer. Algorithms 2017, 79, 139–152.

- ur Rehman, H.; Kumam, P.; Kumam, W.; Shutaywi, M.; Jirakitpuwapat, W. The Inertial Sub-Gradient Extra-Gradient Method for a Class of Pseudo-Monotone Equilibrium Problems. Symmetry 2020, 12, 463.

- ur Rehman, H.; Kumam, P.; Argyros, I.K.; Deebani, W.; Kumam, W. Inertial Extra-Gradient Method for Solving a Family of Strongly Pseudomonotone Equilibrium Problems in Real Hilbert Spaces with Application in Variational Inequality Problem. Symmetry 2020, 12, 503.

- Flåm, S.D.; Antipin, A.S. Equilibrium programming using proximal-like algorithms. Math. Program. 1996, 78, 29–41.

- Tran, D.Q.; Dung, M.L.; Nguyen, V.H. Extragradient algorithms extended to equilibrium problems. Optimization 2008, 57, 749–776.

- Korpelevich, G. The extragradient method for finding saddle points and other problems. Matecon 1976, 12, 747–756.

- Hieu, D.V. New extragradient method for a class of equilibrium problems in Hilbert spaces. Appl. Anal. 2017, 97, 811–824.

- Polyak, B. Some methods of speeding up the convergence of iteration methods. USSR Comput. Math. Math. Phys. 1964, 4, 1–17.

- Beck, A.; Teboulle, M. A Fast Iterative Shrinkage-Thresholding Algorithm for Linear Inverse Problems. SIAM J. Imaging Sci. 2009, 2, 183–202.

- Hieu, D.V.; Cho, Y.J.; bin Xiao, Y. Modified extragradient algorithms for solving equilibrium problems. Optimization 2018, 67, 2003–2029.

- Rehman, H.U.; Kumam, P.; Dong, Q.L.; Peng, Y.; Deebani, W. A new Popov’s subgradient extragradient method for two classes of equilibrium programming in a real Hilbert space. Optimization 2020, 1–36.

- Yordsorn, P.; Kumam, P.; ur Rehman, H.; Ibrahim, A.H. A Weak Convergence Self-Adaptive Method for Solving Pseudomonotone Equilibrium Problems in a Real Hilbert Space. Mathematics 2020, 8, 1165.

- Yordsorn, P.; Kumam, P.; Rehman, H.U. Modified two-step extragradient method for solving the pseudomonotone equilibrium programming in a real Hilbert space. Carpathian J. Math. 2020, 36, 313–330.

- Fan, J.; Liu, L.; Qin, X. A subgradient extragradient algorithm with inertial effects for solving strongly pseudomonotone variational inequalities. Optimization 2019, 1–17.

- Thong, D.V.; Hieu, D.V. Inertial extragradient algorithms for strongly pseudomonotone variational inequalities. J. Comput. Appl. Math. 2018, 341, 80–98.

- Censor, Y.; Gibali, A.; Reich, S. The Subgradient Extragradient Method for Solving Variational Inequalities in Hilbert Space. J. Optim. Theory Appl. 2010, 148, 318–335.

- ur Rehman, H.; Kumam, P.; Argyros, I.K.; Alreshidi, N.A.; Kumam, W.; Jirakitpuwapat, W. A Self-Adaptive Extra-Gradient Methods for a Family of Pseudomonotone Equilibrium Programming with Application in Different Classes of Variational Inequality Problems. Symmetry 2020, 12, 523.

- ur Rehman, H.; Kumam, P.; Argyros, I.K.; Shutaywi, M.; Shah, Z. Optimization Based Methods for Solving the Equilibrium Problems with Applications in Variational Inequality Problems and Solution of Nash Equilibrium Models. Mathematics 2020, 8, 822.

- ur Rehman, H.; Kumam, P.; Shutaywi, M.; Alreshidi, N.A.; Kumam, W. Inertial Optimization Based Two-Step Methods for Solving Equilibrium Problems with Applications in Variational Inequality Problems and Growth Control Equilibrium Models. Energies 2020, 13, 3292.

- Alvarez, F.; Attouch, H. An Inertial Proximal Method for Maximal Monotone Operators via Discretization of a Nonlinear Oscillator with Damping. Set-Valued Anal. 2001, 9, 3–11.

- Bauschke, H.H.; Combettes, P.L. Convex Analysis and Monotone Operator Theory in Hilbert Spaces, 2nd ed.; CMS Books in Mathematics; Springer International Publishing: Berlin/Heidelberg, Germany, 2017.

- Ofoedu, E. Strong convergence theorem for uniformly L-Lipschitzian asymptotically pseudocontractive mapping in real Banach space. J. Math. Anal. Appl. 2006, 321, 722–728.

- Tiel, J.V. Convex Analysis: An Introductory Text, 1st ed.; Wiley: New York, NY, USA, 1984.

- Kreyszig, E. Introductory Functional Analysis with Applications, 1st ed.; Wiley: New York, NY, USA, 1978.


© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Kumam, W.; Muangchoo, K. Approximation Results for Equilibrium Problems Involving Strongly Pseudomonotone Bifunction in Real Hilbert Spaces. Axioms 2020, 9, 137. https://doi.org/10.3390/axioms9040137
