1. Introduction

We consider derivative-free methods for finding a multiple root (say, $\alpha$) of multiplicity $m$, i.e., $f^{(j)}(\alpha) = 0$ for $j = 0, 1, \ldots, m-1$ and $f^{(m)}(\alpha) \neq 0$, of a nonlinear equation $f(x) = 0$.

Many higher-order methods, either independent or based on the quadratically convergent modified Newton’s method [1]

$$x_{n+1} = x_n - m\,\frac{f(x_n)}{f'(x_n)}, \tag{1}$$

have been proposed and verified in the literature; see, for example, [2,3,4,5,6,7,8,9,10,11,12,13,14,15] and references therein. Such methods require the evaluation of derivatives of either first order or both first and second order. However, higher-order derivative-free methods for multiple roots are yet to be explored. The main reason for the lack of such methods is the difficulty in establishing their order of convergence. Derivative-free methods are important in situations where the derivative of the function f is difficult to compute or expensive to obtain. One such derivative-free method is the classical Traub–Steffensen method [16], which replaces the derivative $f'$ in the classical Newton method with a suitable approximation based on a difference quotient,

$$f'(x_n) \approx \frac{f(x_n + \beta f(x_n)) - f(x_n)}{\beta f(x_n)}, \quad \beta \in \mathbb{R} \setminus \{0\},$$

or

$$f'(x_n) \approx f[w_n, x_n],$$

where $w_n = x_n + \beta f(x_n)$ and $f[w_n, x_n] = \frac{f(w_n) - f(x_n)}{w_n - x_n}$ is a first-order divided difference.

In this way the modified Newton method (1) becomes the modified Traub–Steffensen method

$$x_{n+1} = x_n - m\,\frac{f(x_n)}{f[w_n, x_n]}. \tag{2}$$

The modified Traub–Steffensen method (2) is a noteworthy improvement of Newton’s method, because it keeps quadratic convergence without using any derivative.
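As an illustration, the modified Traub–Steffensen iteration (2) can be sketched in a few lines of Python. This is a minimal sketch rather than the authors' implementation. It assumes the standard form $x_{n+1} = x_n - m f(x_n)/f[w_n, x_n]$ with $w_n = x_n + \beta f(x_n)$. The function name `modified_traub_steffensen`, the default values of `beta`, `tol`, and `max_iter`, and the test function are hypothetical choices made only for this example.

```python
def modified_traub_steffensen(f, x0, m, beta=0.01, tol=1e-10, max_iter=50):
    """Approximate a root of multiplicity m of f without using derivatives.

    Returns (approximation, number of iterations performed).
    """
    x = x0
    for n in range(max_iter):
        fx = f(x)
        w = x + beta * fx                  # auxiliary point w_n = x_n + beta*f(x_n)
        if w == x:
            # beta*f(x) is below floating-point resolution (or f(x) == 0),
            # so the divided difference cannot be formed; stop here.
            return x, n
        dd = (f(w) - fx) / (w - x)         # divided difference f[w_n, x_n]
        if dd == 0:
            raise ZeroDivisionError("divided difference vanished")
        x_new = x - m * fx / dd            # multiplicity-corrected step
        if abs(x_new - x) < tol:
            return x_new, n + 1
        x = x_new
    return x, max_iter


def f(x):
    # Hypothetical test function: double root at x = 1, simple root at x = -2.
    return (x - 1) ** 2 * (x + 2)


root, iterations = modified_traub_steffensen(f, x0=1.5, m=2)
print(root, iterations)
```

In double precision the attainable accuracy for a multiple root falls well short of machine precision, because $\beta f(x_n)$ eventually drops below the spacing of floating-point numbers near the root. This is one practical reason why tests of such methods are usually carried out in multiple-precision arithmetic.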

In this work, we introduce a two-step family of third-order derivative-free methods for computing multiple zeros that require three evaluations of the function f per iteration. The iterative scheme uses the Traub–Steffensen iteration (2) in the first step and a Traub–Steffensen-like iteration in the second step. The procedure is based on a simple approach of using weight factors in the iterative scheme. Many special methods of the family can be generated depending on the forms of the weight factors. The efficacy of these methods is tested on various numerical problems of different natures. In comparison with existing techniques that use derivative evaluations, the new derivative-free methods are computationally more efficient.

The rest of the paper is organized as follows. In Section 2, the scheme of third-order methods is developed and its convergence is studied. Section 3 is divided into two parts: Section 3.1 and Section 3.2. In Section 3.1, an initial approach to the study of the dynamics of the methods with the help of basins of attraction is presented. To demonstrate the applicability and efficacy of the new methods, some numerical experiments are performed in Section 3.2. A comparison of the methods with existing ones of the same order is also shown in this subsection. Concluding remarks are given in Section 4.

3. Numerical Tests

In this section, first we plot the basins of attraction of the zeros of some polynomials when the proposed iterative methods are applied on the polynomials. Next, we verify the theoretical results by the applications of the methods on some nonlinear functions, including those which arise in practical problems.

3.1. Basins of Attraction

Analysis of the complex dynamical behavior gives important information about convergence and stability of an iterative scheme; see e.g., [

2,

3,

18,

19,

20,

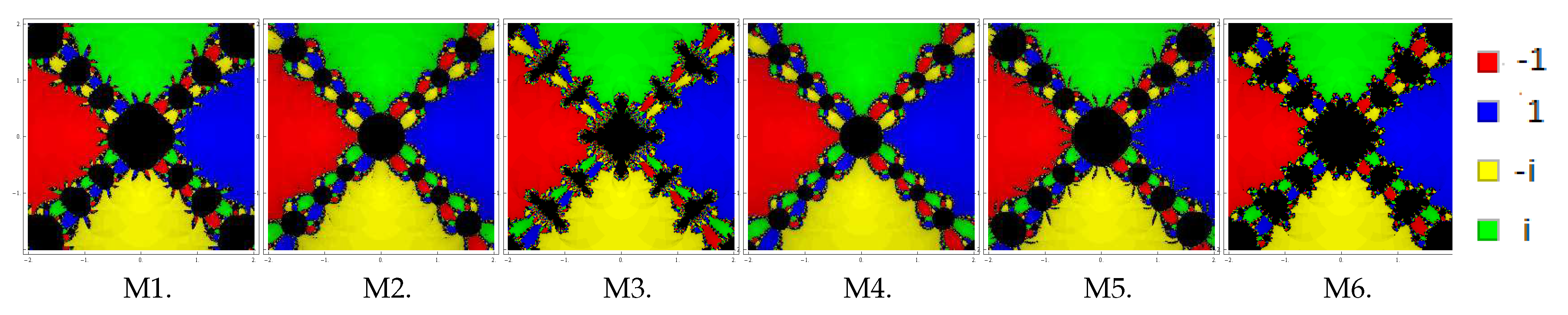

21]. Here, we directly describe the dynamics of iterative methods M1–M6 by means of visual display of the basins of attraction of the zeros of a polynomial

. The deep dynamical study of the proposed six methods, with a detailed stability analysis of the fixed points and their behavior, requires a separate article, and so such complete analysis is not a subject of discussion in the present work.

To start with, let us take the initial point in a rectangular region that contains all the zeros of a polynomial The iterative method starts from point in a rectangle either converges to the zero or eventually diverges. The stopping criterion for convergence is considered to be up to a maximum of 25 iterations. If the required tolerance is not achieved in 25 iterations, we conclude that the method starting at point does not converge to any root. The strategy adopted is as follows: A color is allocated to each initial point in the basin of attraction of a zero. If the iteration initiating at converges, then it represents the attraction basin with that particular assigned color to it, otherwise if it fails to converge in 25 iterations, then it shows the black color.

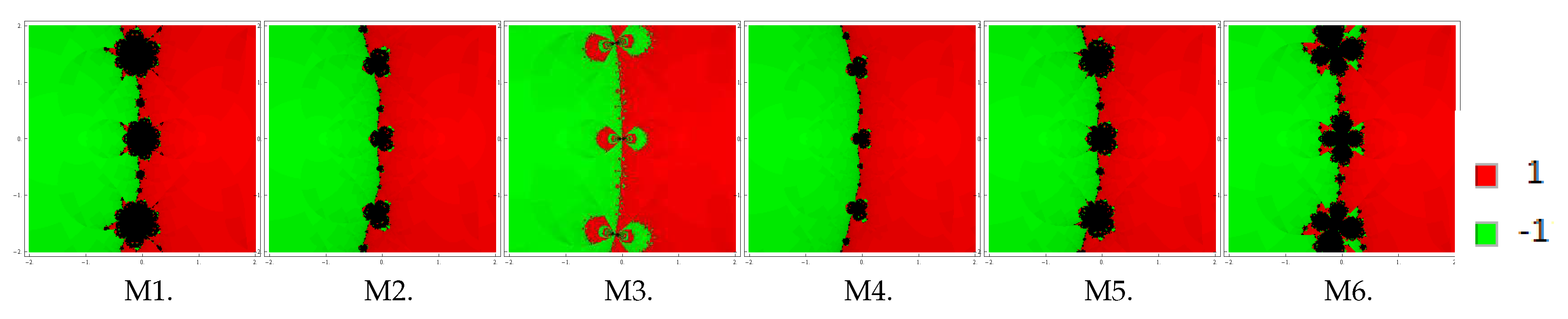

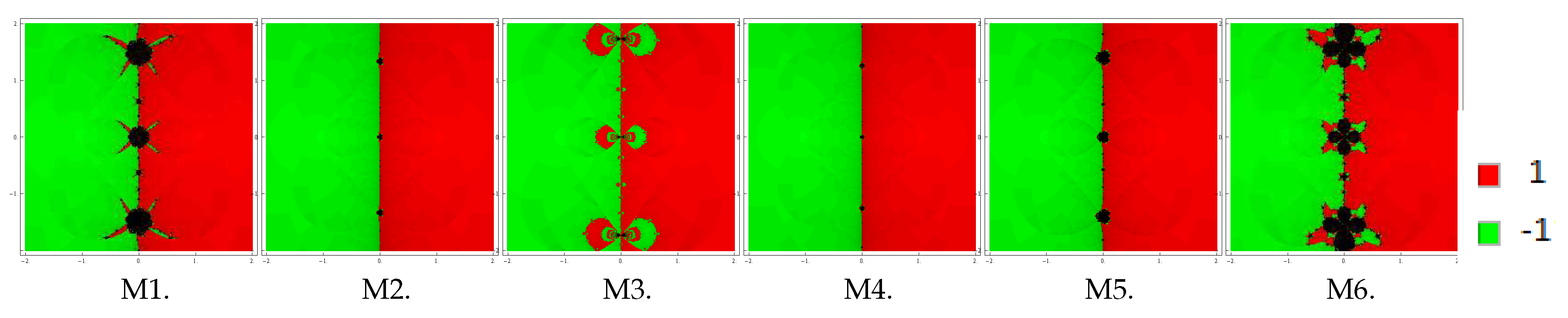

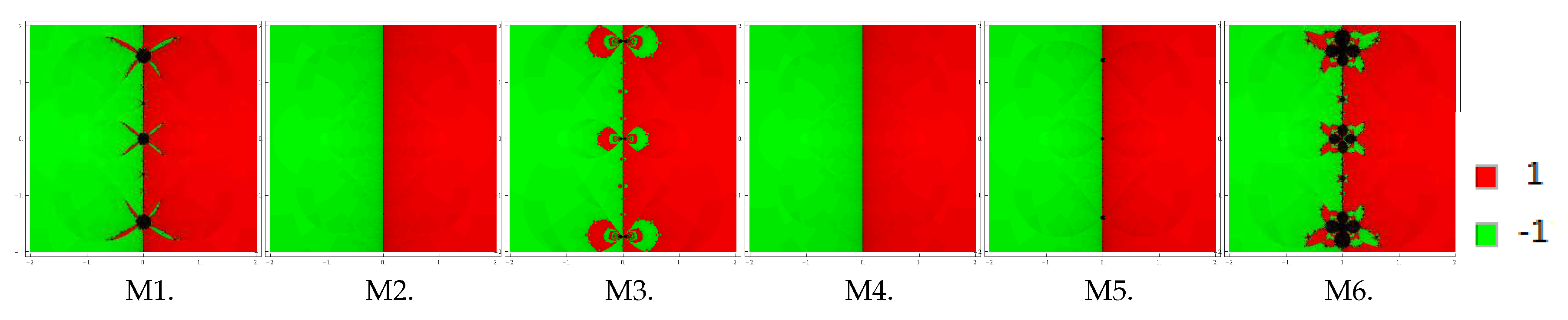

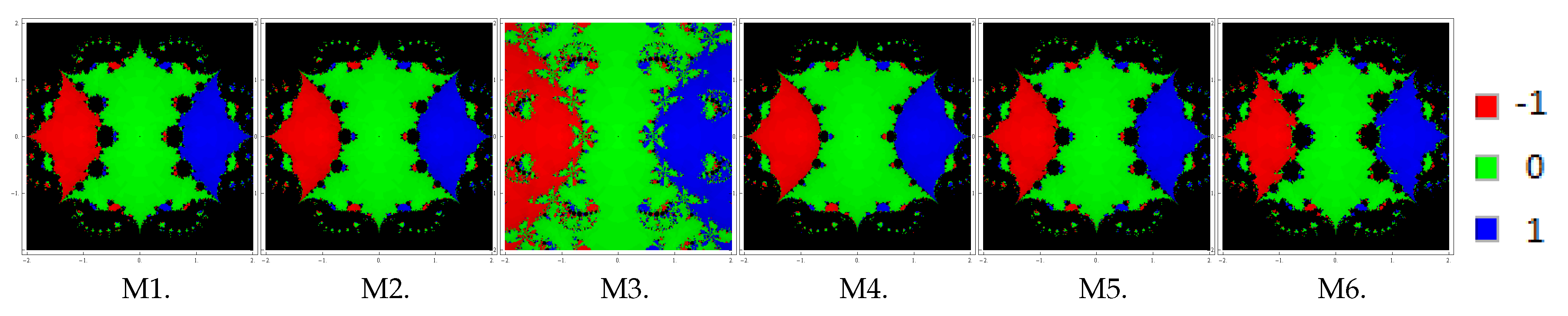

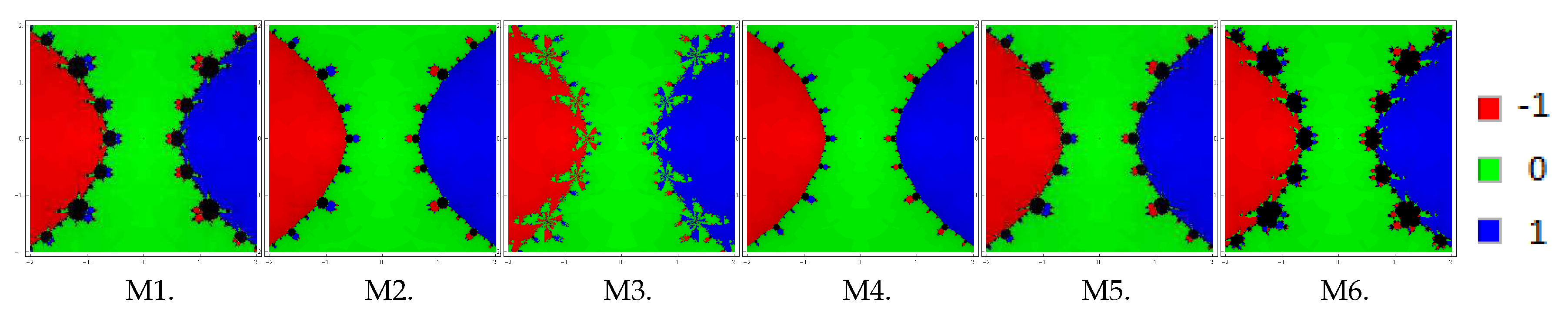

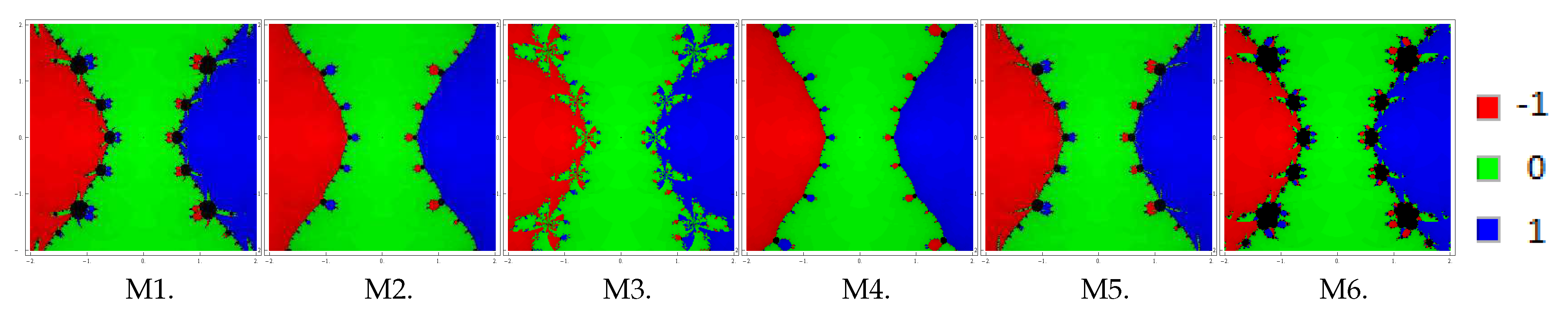

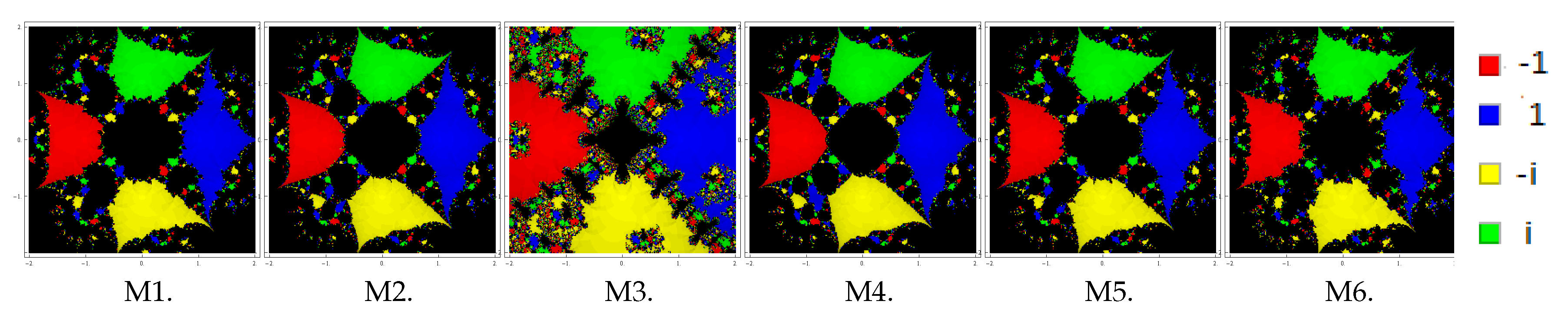

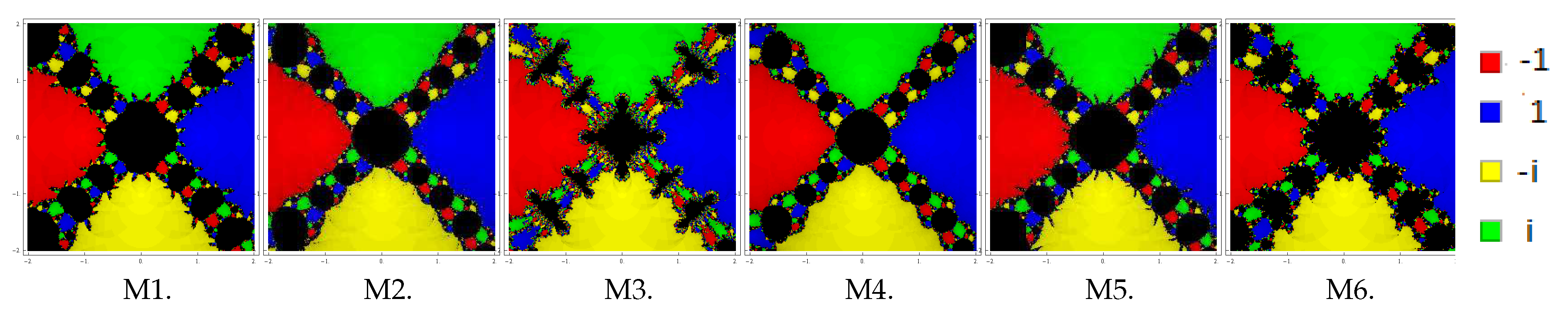
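The coloring strategy described above can be sketched as follows. This is only an illustrative sketch under stated assumptions, not the code used to produce the figures. It applies the modified Traub–Steffensen iteration (2) rather than the methods M1–M6. The polynomial $(z^2 - 1)^2$, the tolerance $10^{-3}$, the value β = 0.01, the grid size, and the function names `basin_index` and `basin_grid` are all hypothetical choices. Only the limit of 25 iterations is taken from the text.

```python
def _which_zero(z, zeros, tol):
    """Index of the zero lying within tol of z, or None if there is none."""
    for k, root in enumerate(zeros):
        if abs(z - root) < tol:
            return k
    return None


def basin_index(f, z0, zeros, m, beta=0.01, tol=1e-3, max_iter=25):
    """Return the index of the zero reached from z0, or -1 (painted black)."""
    z = z0
    try:
        for _ in range(max_iter):
            k = _which_zero(z, zeros, tol)
            if k is not None:
                return k
            fz = f(z)
            w = z + beta * fz
            denom = f(w) - fz
            if w == z or denom == 0:
                return -1                      # step cannot be formed
            z = z - m * fz * (w - z) / denom   # modified Traub-Steffensen step
        k = _which_zero(z, zeros, tol)
        return -1 if k is None else k
    except (OverflowError, ZeroDivisionError):
        return -1                              # iterates blew up: divergent


def basin_grid(f, zeros, m, re_range, im_range, n, **kwargs):
    """Classify an n-by-n grid of starting points in the given rectangle."""
    (re_min, re_max), (im_min, im_max) = re_range, im_range
    rows = []
    for j in range(n):
        y = im_max - j * (im_max - im_min) / (n - 1)
        row = []
        for i in range(n):
            x = re_min + i * (re_max - re_min) / (n - 1)
            row.append(basin_index(f, complex(x, y), zeros, m, **kwargs))
        rows.append(row)
    return rows


def p(z):
    # Hypothetical polynomial with zeros -1 and 1, each of multiplicity 2.
    return (z * z - 1) ** 2


grid = basin_grid(p, zeros=[-1, 1], m=2, re_range=(-2, 2), im_range=(-2, 2), n=21)
symbols = {0: "g", 1: "r", -1: "#"}            # green, red, black
for row in grid:
    print("".join(symbols[k] for k in row))
```

Each character of the printed picture plays the role of one colored pixel. A plotting library could map the same indices to actual colors.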

We plot the basins of attraction of the methods M1–M6 (for different choices of the parameter β) on the following three polynomials:

Problem 1. In the first example, we consider a polynomial whose zeros have multiplicity 2. To generate the basins, we use a grid of points in a rectangle and assign the color green to each initial point in the basin of attraction of one zero and the color red to each point in the basin of attraction of the zero ‘1’. The basins obtained for the methods M1–M6 are shown in Figure 1, Figure 2 and Figure 3, corresponding to the different choices of β. Looking at the behavior of the methods, we see that methods M2 and M4 possess the fewest divergent points, followed by M3 and M5. On the contrary, method M6 has the most divergent points, followed by M1. Notice that the basins improve as the parameter β assumes smaller values.

Problem 2. Let us consider a polynomial whose zeros have multiplicity 3. To examine the dynamical view, we consider a rectangle with a grid of points and allot the colors red, green, and blue to each point in the basins of attraction of the three zeros (the second and third being 0 and 1), respectively. The basins for this problem are shown in Figure 4, Figure 5 and Figure 6, corresponding to the parameter choices in the proposed methods. Observing the behavior of the methods, we see that methods M3, M2, and M4 have better convergence behavior since they have the fewest divergent points. On the other hand, M6 contains large black regions, followed by M1 and M5, indicating that the methods do not converge in 25 iterations starting at those points. Also, observe that the basins improve with smaller values of β.

Problem 3. Lastly, we consider a polynomial whose zeros have multiplicity 2. To see the dynamical view, we consider a rectangle with a grid of points and allocate the colors red, blue, yellow, and green to each point in the basins of attraction of the four zeros (the second and fourth being 1 and i), respectively. The basins for this problem are shown in Figure 7, Figure 8 and Figure 9, corresponding to the parameter choices in the proposed methods. Observing the behavior of the methods, we see that methods M3, M2, and M4 have better convergence behavior since they have the fewest divergent points. On the other hand, M6 contains large black regions, followed by M1 and M5, indicating that the methods do not converge in 25 iterations starting at those points. Also, observe that the basins become larger with smaller values of β.

From these graphics, one can easily evaluate the behavior and stability of any method. If we choose an initial point $z_0$ in a zone where distinct basins of attraction touch each other, it is impractical to predict which root is going to be attained by the iterative method that starts at $z_0$. Hence, the choice of $z_0$ in such a zone is not a good one. Both the black zones and the regions with different colors are unsuitable for the initial guess when we want to acquire a unique root. The most attractive pictures appear when the frontiers between basins of attraction are very intricate; they correspond to the cases where the method is more demanding with respect to the initial point and its dynamic behavior is more unpredictable. We conclude this section with the remark that the convergence behavior of the proposed methods depends on the value of the parameter β: the smaller the value of β, the better the convergence of the method.

3.2. Applications

The above six methods M1–M6 of family (3) are applied to solve a few nonlinear equations, which not only illustrate the methods in practice but also serve to verify the theoretical results we have derived. To check the theoretical order of convergence, we obtain the computational order of convergence (COC) using the formula given in [22].
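For illustration, a computational order of convergence can be estimated from three successive iterates as in the sketch below. It assumes the commonly used definition based on errors with respect to a known root α, namely $\ln|(x_{n+2}-\alpha)/(x_{n+1}-\alpha)| \,/\, \ln|(x_{n+1}-\alpha)/(x_n-\alpha)|$. The formula of [22] may differ in detail, and the function name `computational_order` is hypothetical.

```python
import math


def computational_order(x_prev, x_curr, x_next, root):
    """Estimate the order of convergence from three successive iterates.

    Assumes the root is known and that the three errors are nonzero
    and strictly decreasing.
    """
    e0 = abs(x_prev - root)
    e1 = abs(x_curr - root)
    e2 = abs(x_next - root)
    if min(e0, e1, e2) == 0 or e1 == e0:
        raise ValueError("errors must be nonzero and distinct")
    return math.log(e2 / e1) / math.log(e1 / e0)


# Synthetic sequences with root 0: squaring the error at each step should
# give an estimate close to 2, cubing it an estimate close to 3.
print(computational_order(1e-2, 1e-4, 1e-8, 0.0))
print(computational_order(1e-1, 1e-3, 1e-9, 0.0))
```

Because the errors shrink very quickly for higher-order methods, such estimates are only meaningful when the iterates are computed with enough working precision.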

Performance is compared with some well-known third-order methods requiring derivative evaluations, such as those of Dong [5], Halley [7], Chebyshev [7], Osada [12], and Victory and Neta [14]. These methods are expressed as:

Victory-Neta method (VNM):

where

All computations are performed in the programming package Mathematica using multiple-precision arithmetic. Performance of the new methods is tested for a chosen value of the parameter β. Numerical results displayed in Table 1, Table 2, Table 3 and Table 4 include: (i) the values of the last three consecutive errors; (ii) the number of iterations required to converge to the solution within the prescribed stopping criterion; (iii) the COC; and (iv) the elapsed CPU time (CPU-time) in seconds.
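The role of multiple-precision arithmetic in such experiments can be illustrated with Python's standard `decimal` module. The sketch below is not the Mathematica code used for the tables. It applies the modified Traub–Steffensen iteration (2), not the methods M1–M6. The working precision of 1000 digits, the value β = 0.01, the stopping rule $|x_{n+1} - x_n| + |f(x_n)| < 10^{-100}$, the test function, and the name `solve` are all assumptions made for this example.

```python
from decimal import Decimal, getcontext

getcontext().prec = 1000  # working precision in significant digits (assumed)


def f(x):
    # Hypothetical test function with a zero of multiplicity 3 at x = 1.
    return (x - 1) ** 3 * (x + 2)


def solve(f, x0, m, beta, tol, max_iter=50):
    """Iterate until |x_{n+1} - x_n| + |f(x_n)| < tol (assumed criterion).

    Returns (approximation, iterations, list of step sizes |x_{n+1} - x_n|).
    """
    x = x0
    steps = []
    for n in range(1, max_iter + 1):
        fx = f(x)
        w = x + beta * fx
        denom = f(w) - fx
        if w == x or denom == 0:
            return x, n - 1, steps          # precision exhausted: stop
        x_new = x - m * fx * (w - x) / denom
        steps.append(abs(x_new - x))
        if steps[-1] + abs(fx) < tol:
            return x_new, n, steps
        x = x_new
    return x, max_iter, steps


root, iterations, steps = solve(
    f, Decimal("1.5"), m=3, beta=Decimal("0.01"), tol=Decimal("1e-100")
)
print(iterations)
for s in steps[-3:]:
    print(f"{s:.3E}")   # last three consecutive step sizes
```

The working precision has to be far larger than the 100 digits demanded by the stopping rule. Near a zero of multiplicity 3 the quantity $\beta f(x_n)$ is of the order of the cube of the error, and it must still be resolved when it is added to $x_n$.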

The following examples are chosen for numerical tests:

Example 1 (Eigenvalue problem). Finding the eigenvalues of a large sparse matrix is one of the most challenging tasks in applied mathematics and engineering. Furthermore, calculating the zeros of the characteristic equation of a square matrix of order greater than 4 is another big challenge. Therefore, we consider a 9 × 9 matrix M whose characteristic polynomial has one multiple zero, of multiplicity 4. We find this zero starting from an initial approximation. Numerical results are shown in Table 1.

Example 2 (Manning equation). Consider isentropic supersonic flow along a sharp expansion corner. The relationship between the Mach number before the corner and the Mach number after the corner is given in [23]; it involves γ, the specific heat ratio of the gas. For a special case study, we solve the equation for given values of the remaining quantities. We consider this case seven times and obtain the required nonlinear function, which has a zero of multiplicity 7. The required zero is determined from an initial approximation. Numerical results are shown in Table 2.

Example 3. Next, we consider a standard nonlinear test function that has a multiple zero of multiplicity 3. We choose an initial approximation for obtaining the zero of the function. Numerical results are displayed in Table 3.

Example 4. Lastly, we consider a standard nonlinear test function that has a complex zero of multiplicity 4. We choose an initial approximation for obtaining the zero of the function. Numerical results are displayed in Table 4.

From the numerical results we observe that the accuracy of the successive approximations increases, which shows the stable nature of the methods. Also, like the existing methods, the present methods show consistent convergence behavior. We display the value ‘0’ for the error in the iteration at which the required accuracy has been reached. From the calculation of the computational order of convergence, it is also confirmed that the order of convergence of the methods is preserved. The efficient nature of the proposed methods can be observed from the fact that the CPU time consumed by them is less than that taken by the existing methods. In addition, the new methods are more accurate because the error becomes much smaller with increasing n as compared to the error of the existing techniques.

The main purpose of developing the new derivative-free methods for different types of nonlinear equations is to illustrate the accuracy of the approximate solution and the stability of the convergence to the required solution. The numerical experiments, carried out for several problems of different types, confirm the above conclusions to a large extent.