1. Introduction

Stock index prices significantly influence financial market stability and serve as crucial indicators of macroeconomic conditions. However, constructing robust and generalizable prediction models for stock indices is challenging because of their inherent volatility, which is driven by complex interactions among economic, political, cultural and demographic factors. Among the various forecasting approaches, time series models, particularly autoregressive (AR) models, have been widely used for stock price prediction.

Traditional estimation methods for AR models, such as the ordinary least squares (OLS) estimator and the maximum likelihood estimator, perform adequately under standard regularity conditions but become unreliable when financial data contain outliers. Such outliers frequently arise from unexpected events such as policy changes, major accidents, and social phenomena. As demonstrated by Box et al. [1], outliers can severely distort time series model estimates. Two primary approaches have been developed to address this issue: detecting and removing outliers, or using robust estimation methods that mitigate their effects.

In outlier detection research, Huber [2] pioneered methods for identifying outliers in AR models. Chang et al. [3] subsequently extended these methods to ARIMA models, using iterative procedures for innovational outliers (IOs) and additive outliers (AOs). Tsay et al. [4] generalized these approaches to the multivariate case, while McQuarrie and Tsai [5] proposed a detection method that does not require prior knowledge of the model order or of the location or type of the outliers. Further developments include techniques by Karioti and Caroni [6] for shorter time series and AR models of unequal length, and continuous detection methods introduced by Louni [7] that show superior performance for IOs. However, most detection methods rely on specific distributional assumptions that are often unrealistic in practice. To address this limitation, Čampulová et al. [8] proposed a nonparametric approach that combines data smoothing with change point analysis of the residuals.
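To make the IO/AO distinction concrete, the sketch below uses the standard formulation for an AR(1) process: an additive outlier shifts a single observation and leaves the dynamics untouched, while an innovational outlier enters through the innovation and therefore propagates through the AR recursion with geometrically decaying effect φ^k. The AR(1) setting, the function names and the values of φ and the outlier size ω are hypothetical choices for illustration only.

```python
import numpy as np


def simulate_ar1(phi, innovations, x0=0.0):
    """Recursively build x_t = phi * x_{t-1} + a_t from given innovations a_t."""
    x = np.empty(len(innovations))
    prev = x0
    for t, a in enumerate(innovations):
        prev = phi * prev + a
        x[t] = prev
    return x


def add_additive_outlier(x, t_out, omega):
    """AO: only the observation at t_out is shifted; the dynamics are untouched."""
    y = x.copy()
    y[t_out] += omega
    return y


def add_innovational_outlier(phi, innovations, t_out, omega, x0=0.0):
    """IO: the shock enters the innovation at t_out and propagates via the AR recursion."""
    a = np.asarray(innovations, dtype=float).copy()
    a[t_out] += omega
    return simulate_ar1(phi, a, x0)


# With zero innovations the clean series is identically zero,
# so the output isolates the effect of the outlier.
phi, n, t_out, omega = 0.5, 6, 2, 8.0
clean = simulate_ar1(phi, np.zeros(n))
ao = add_additive_outlier(clean, t_out, omega)
io = add_innovational_outlier(phi, np.zeros(n), t_out, omega)
print(ao)  # [0. 0. 8. 0. 0. 0.]
print(io)  # [0. 0. 8. 4. 2. 1.]
```

The AO contaminates one point, whereas the IO contaminates every later observation, which is why detection procedures treat the two types separately.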

Compared with outlier detection, robust estimation methods for time series have received less attention. Notable contributions include Pan et al.'s [9] application of local least absolute deviation to PARMA models and Jiang's [10] introduction of exponential square estimators for AR models with heavy-tailed errors. Recent advances include the weighted M-estimators with data-driven parameter selection of Callens et al. [11] and the reweighted multivariate least trimmed squares and MM-estimators for VAR models of Chang and Shi [12].
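As a minimal illustration of why least absolute deviation (LAD) type estimators resist outliers where OLS does not, the sketch below compares both estimates of φ in a zero-mean AR(1) model without intercept. For a single coefficient, the LAD criterion Σ|y_t − φ y_{t−1}| equals Σ|y_{t−1}|·|y_t/y_{t−1} − φ|, so it is minimized by a weighted median of the ratios y_t/y_{t−1} with weights |y_{t−1}|. This is a plain LAD fit, not the local LAD procedure of Pan et al. [9]; the sample size, seed and outlier size are hypothetical.

```python
import numpy as np


def ols_ar1(y):
    """OLS estimate of phi in y_t = phi * y_{t-1} + e_t (no intercept)."""
    x, z = y[:-1], y[1:]
    return float(np.dot(x, z) / np.dot(x, x))


def lad_ar1(y):
    """LAD estimate of phi: minimizes sum |y_t - phi * y_{t-1}| via a weighted median."""
    x, z = y[:-1], y[1:]
    keep = x != 0  # terms with y_{t-1} = 0 do not depend on phi
    ratios, weights = z[keep] / x[keep], np.abs(x[keep])
    order = np.argsort(ratios)
    cum = np.cumsum(weights[order])
    # First sorted ratio whose cumulative weight reaches half of the total weight.
    k = np.searchsorted(cum, 0.5 * cum[-1])
    return float(ratios[order][k])


rng = np.random.default_rng(0)
phi, n = 0.5, 5000
y = np.zeros(n)
for t in range(1, n):
    y[t] = phi * y[t - 1] + rng.standard_normal()

y_ao = y.copy()
y_ao[n // 2] += 100.0  # one hypothetical additive outlier

print(f"OLS: {ols_ar1(y_ao):.3f}, LAD: {lad_ar1(y_ao):.3f}")
```

A single large additive outlier inflates the OLS denominator Σy²_{t−1} quadratically, pulling the estimate toward zero, while it only moves the LAD weighted median by a small fraction of the total weight.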

Traditional time series estimation methods also suffer from poor generalization because their estimates lack sparsity. The least absolute shrinkage and selection operator (lasso) [13] addresses this issue by producing sparse and interpretable models. Subsequent developments, including the smoothly clipped absolute deviation (SCAD) penalty [14] and the adaptive lasso [15], were proposed to reduce the bias of the lasso. For AR models, Audrino and Camponovo [16] investigated the theoretical properties of the adaptive lasso, Songsiri [17] formulated

-regularized least squares problems, and Emmert-Streib and Dehmer [18] proposed a two-step lasso approach for vector autoregression with data-driven feature selection. However, traditional AR estimation methods are sensitive to outliers and cannot handle the case in which the influence of the explanatory variables on the dependent variable gradually weakens as the lag order increases. To overcome this issue, we propose a novel robust adaptive lasso approach that uses partial autocorrelation coefficients to construct the adaptive penalty weights and employs robust autocorrelation coefficients constructed using the

statistic. Both extensive numerical simulations and a real data example demonstrate the validity of our proposed method.
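The proposed penalty weights rest on partial autocorrelation coefficients (PACF). One standard way to obtain the PACF from any autocorrelation sequence, classical or robust, is the Durbin-Levinson recursion sketched below. The recursion is textbook; how the proposed method maps the PACF to weights is not stated in this section, so the rule w_j = 1/(|π_j| + ε)^γ in `hypothetical_pacf_weights` is an assumed stand-in modeled on the usual adaptive lasso form, not the authors' formula.

```python
import numpy as np


def pacf_durbin_levinson(rho):
    """PACF at lags 1..m from autocorrelations rho = (rho_1, ..., rho_m)."""
    rho = np.asarray(rho, dtype=float)
    m = len(rho)
    pacf = np.zeros(m)
    phi_prev = np.zeros(0)  # coefficients phi_{k-1,1}, ..., phi_{k-1,k-1}
    for k in range(1, m + 1):
        if k == 1:
            phi_kk = rho[0]
        else:
            # numerator: rho_k - sum_j phi_{k-1,j} * rho_{k-j}
            num = rho[k - 1] - np.dot(phi_prev, rho[k - 2::-1])
            # denominator: 1 - sum_j phi_{k-1,j} * rho_j
            den = 1.0 - np.dot(phi_prev, rho[: k - 1])
            phi_kk = num / den
        # phi_{k,j} = phi_{k-1,j} - phi_kk * phi_{k-1,k-j}, then append phi_{k,k}
        phi_prev = np.append(phi_prev - phi_kk * phi_prev[::-1], phi_kk)
        pacf[k - 1] = phi_kk
    return pacf


def hypothetical_pacf_weights(pacf, gamma=1.0, eps=1e-8):
    """HYPOTHETICAL weight rule (not from the paper): w_j = 1 / (|pacf_j| + eps)^gamma."""
    return 1.0 / (np.abs(pacf) + eps) ** gamma


# Theoretical ACF of an AR(2) with phi = (0.5, 0.3); its PACF cuts off after lag 2.
phi1, phi2 = 0.5, 0.3
rho = [phi1 / (1 - phi2)]
rho.append(phi1 * rho[0] + phi2)
for _ in range(3):
    rho.append(phi1 * rho[-1] + phi2 * rho[-2])
pacf = pacf_durbin_levinson(rho)
print(np.round(pacf, 4))
print(hypothetical_pacf_weights(pacf[:2]))
```

Under such a rule, lags with small partial autocorrelation receive large weights and are shrunk to zero first, which matches the stated motivation that the influence of distant lags weakens.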

The rest of the paper is organized as follows: In

Section 2, we first review traditional estimation methods for AR models, and present our proposed robust adaptive lasso method. In

Section 3, we evaluate the finite-sample performance of the proposed method through Monte Carlo simulations and compare it with other methods. In

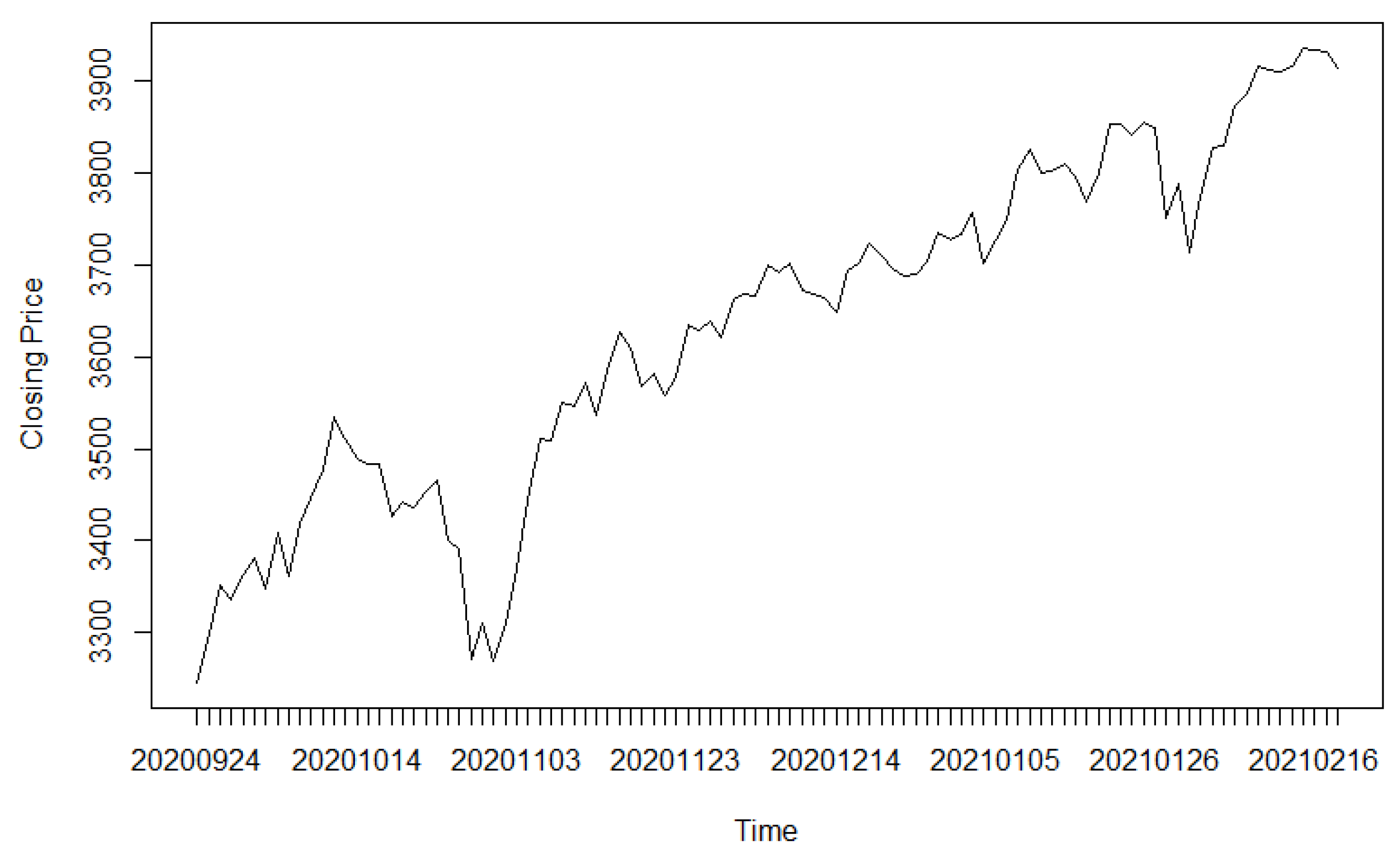

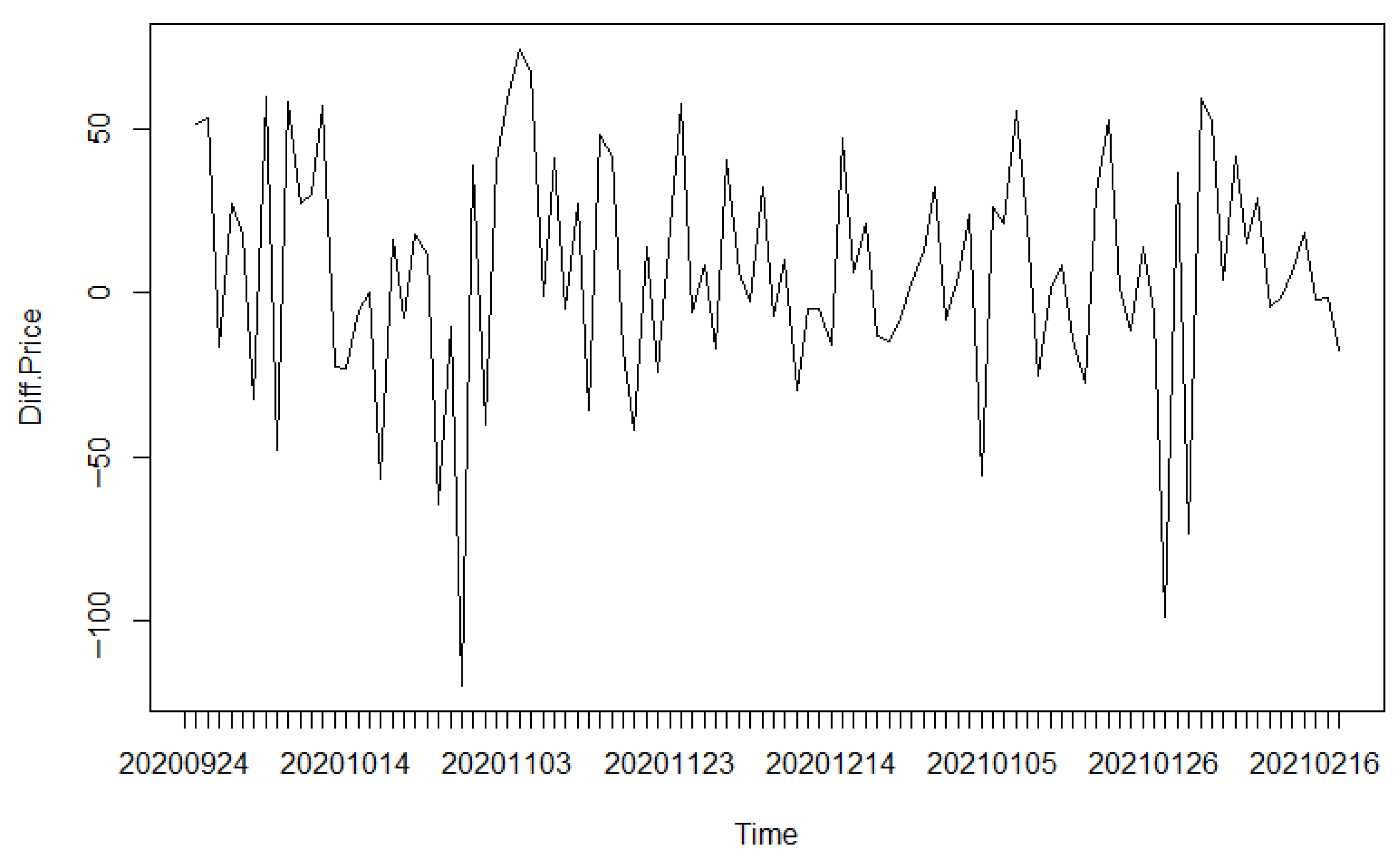

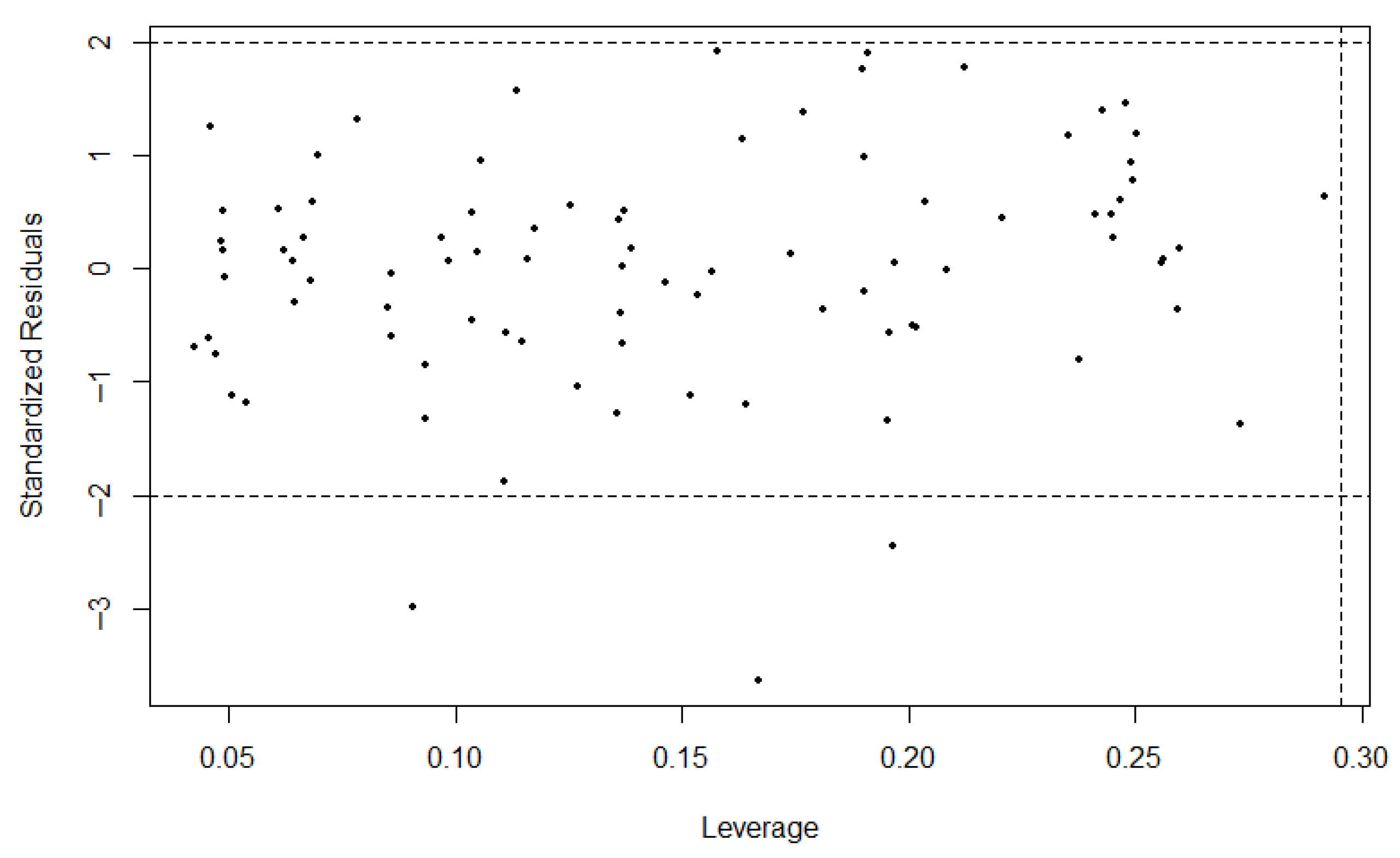

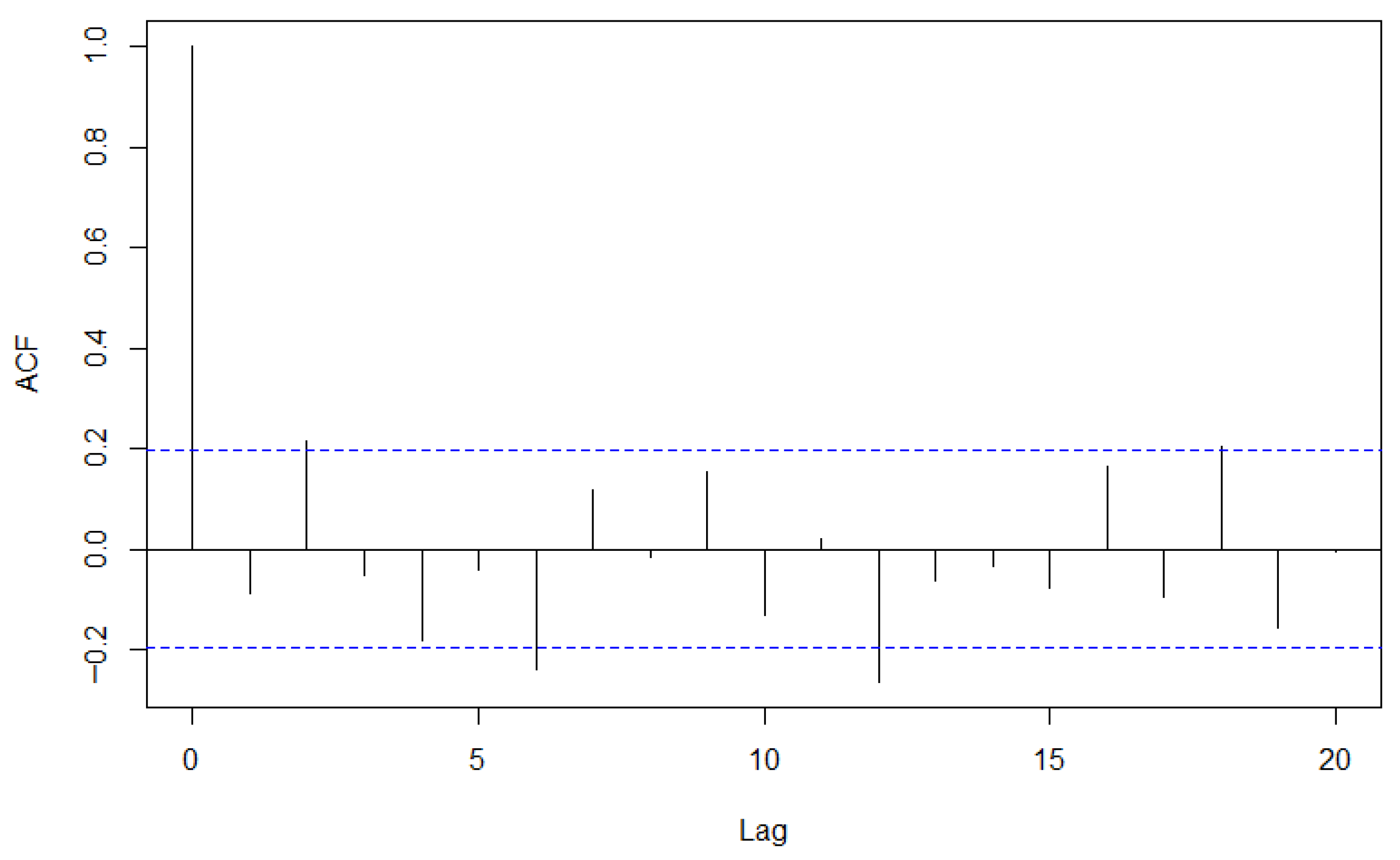

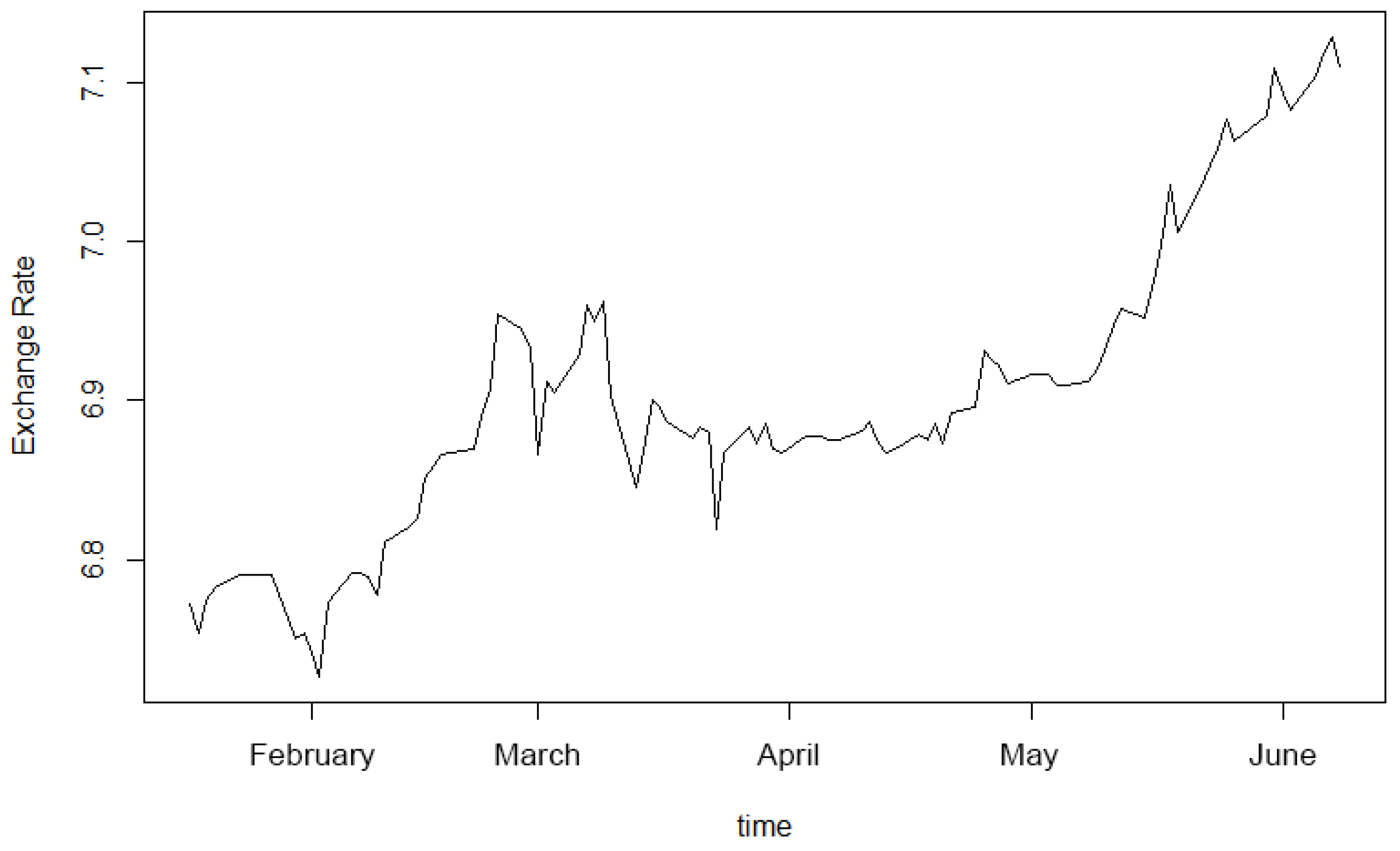

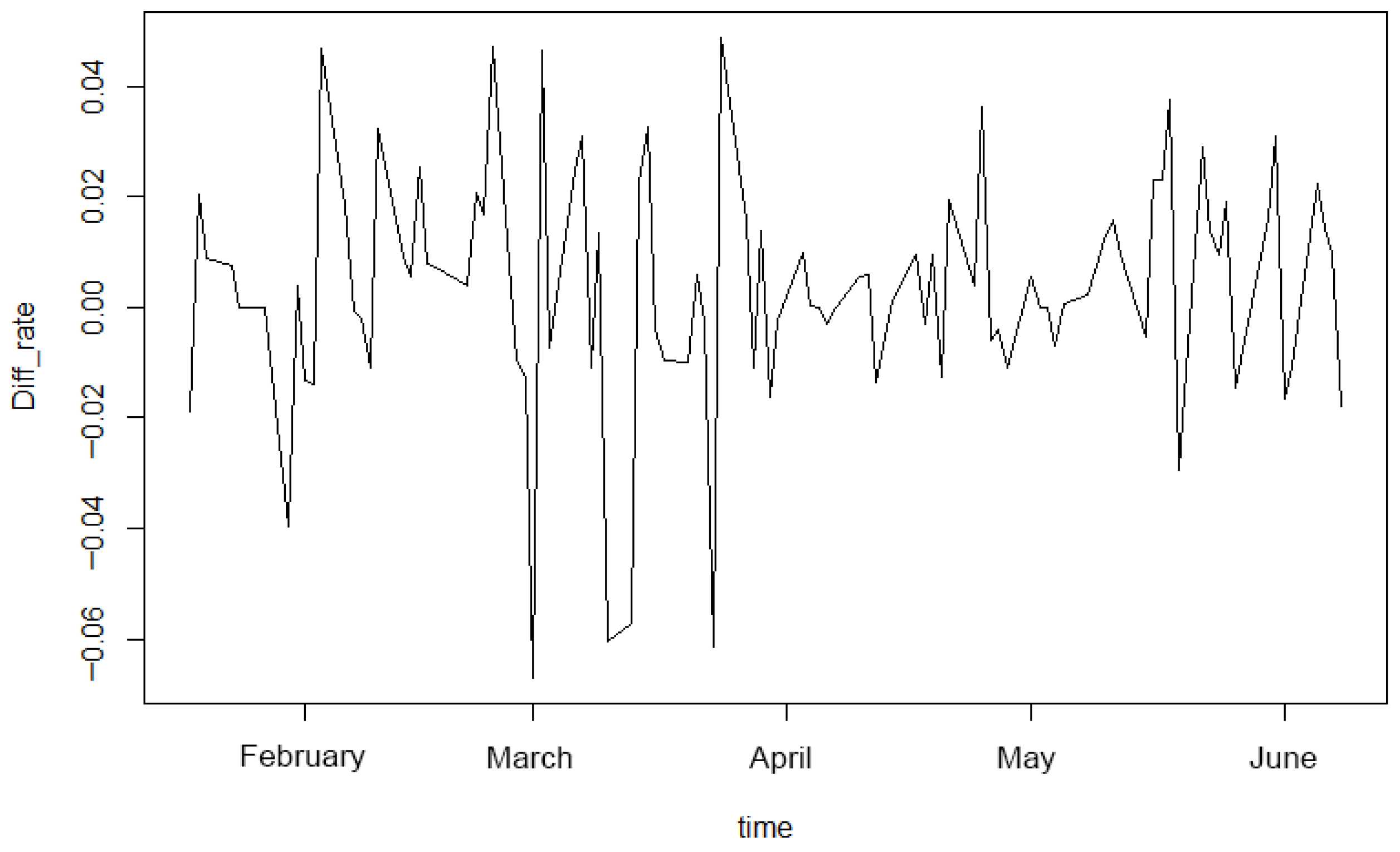

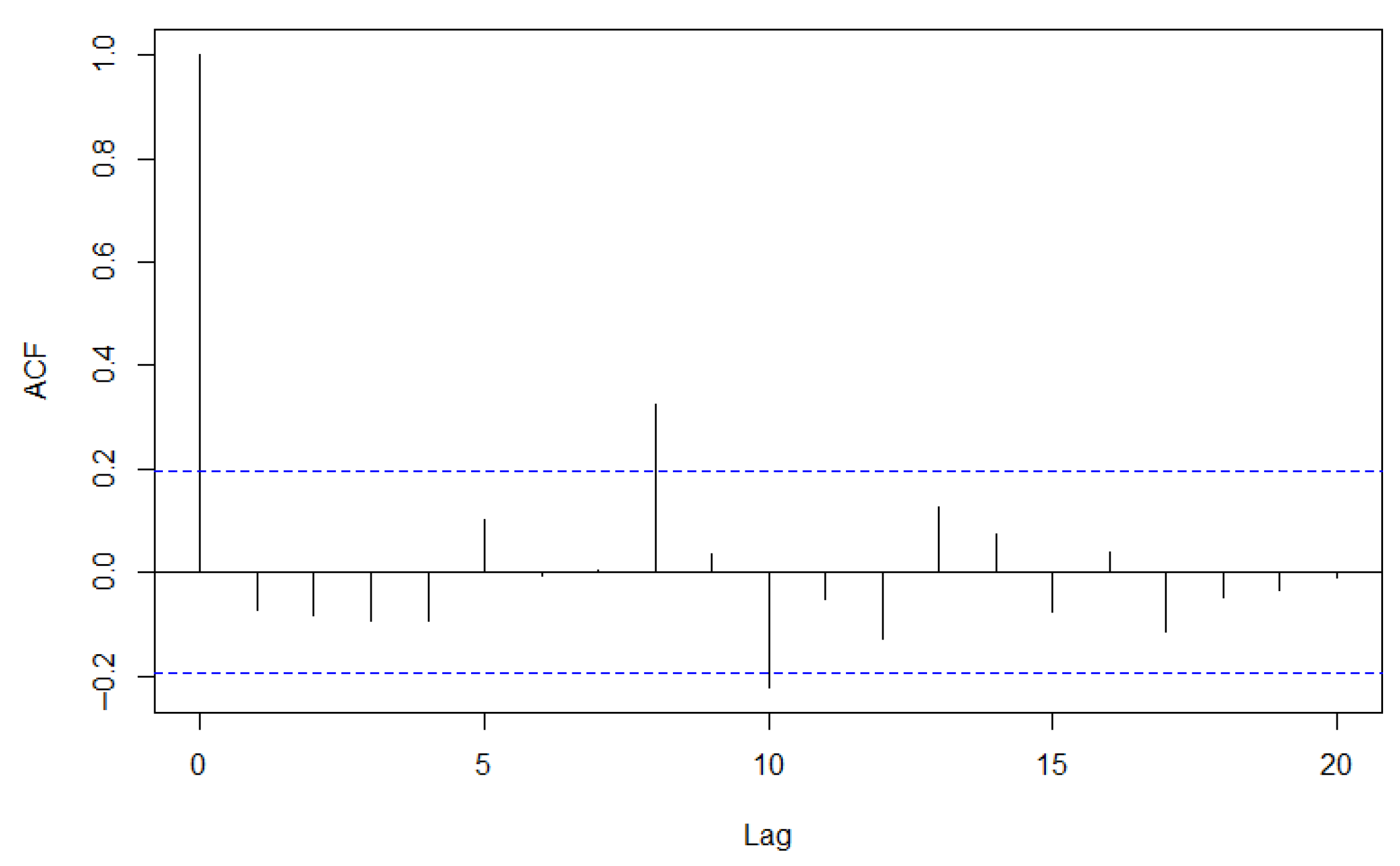

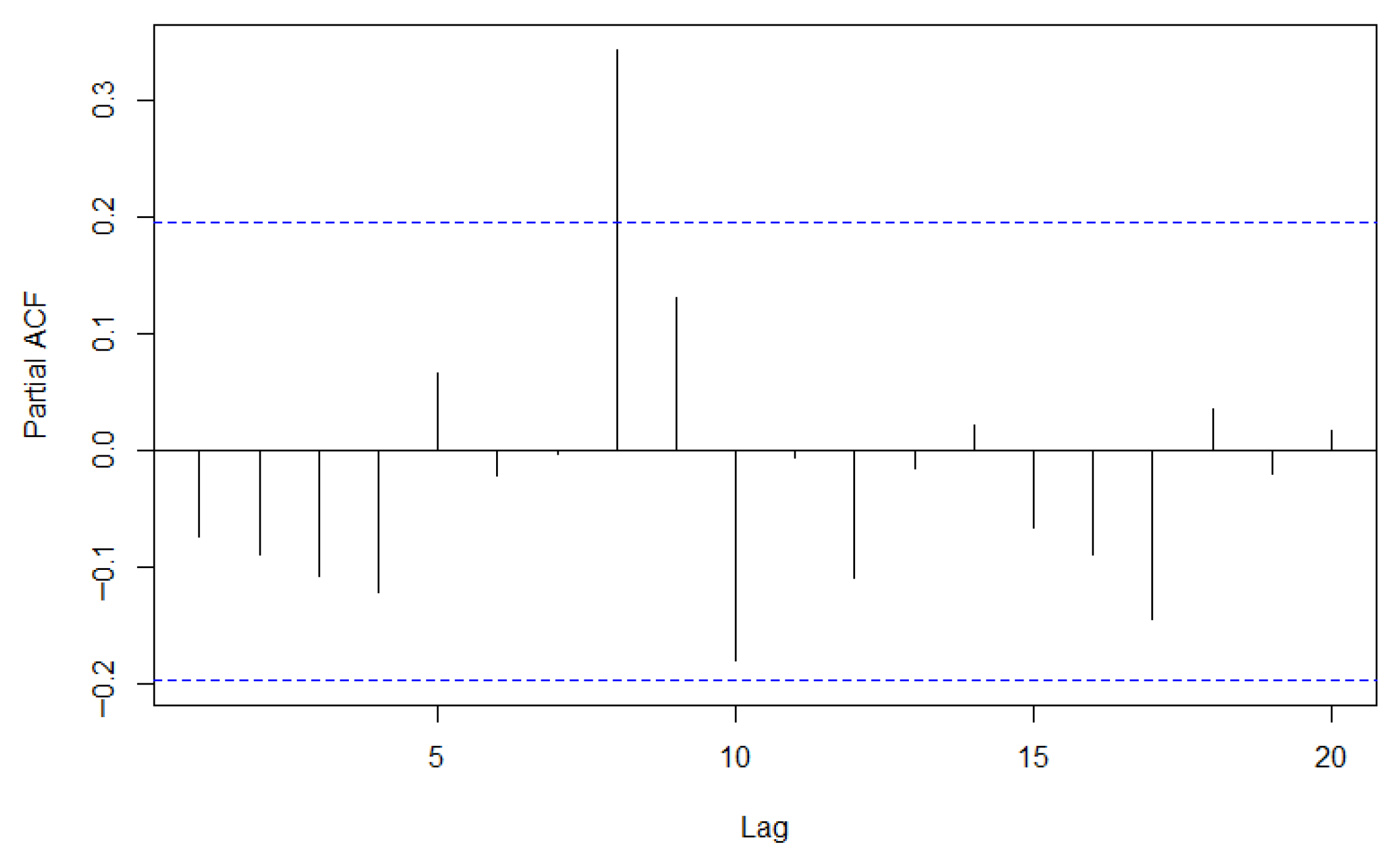

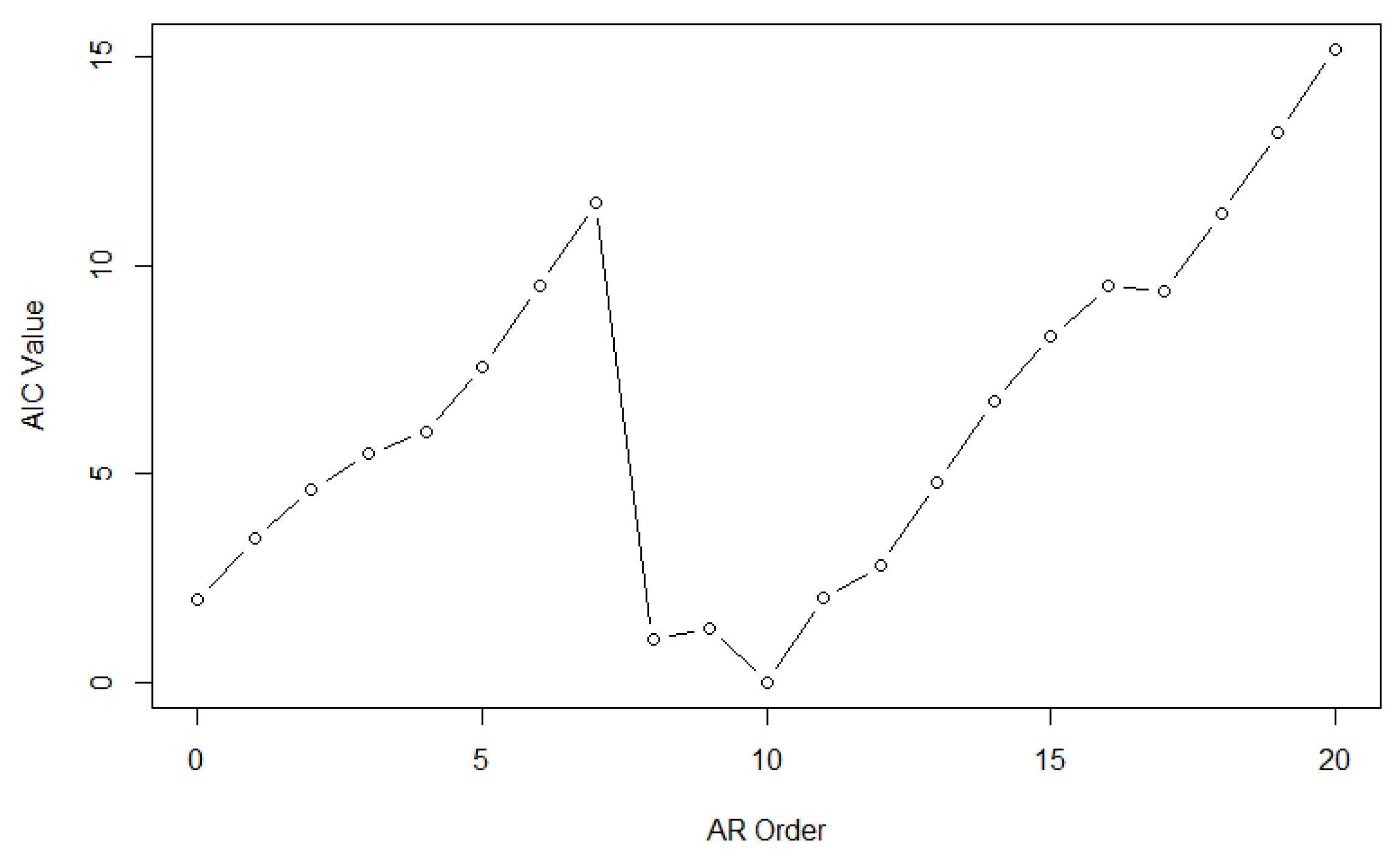

Section 4, we employ the proposed method to analyze two distinct time series: the S&P 500 Index and the USD/CNY exchange rate. We conclude with some remarks in

Section 5.

3. Simulation Studies

In this section, we investigate the numerical performance of the proposed method using Monte Carlo simulations. We simulated 100 data sets from the autoregressive model (14) with sample sizes

.

The data are generated under the following three scenarios:

Scenario 1: The coefficients with for . The error term follows a Gaussian mixture distribution: , where the contamination proportion takes values .

Scenario 2: The coefficients are the same as in Scenario 1, but the error distribution is replaced by with . The Cauchy component introduces extreme outliers because of its heavy tails.

Scenario 3: The coefficients with for . All roots of the characteristic polynomial lie outside the unit circle, which confirms stationarity; the root closest to the unit circle has modulus 1.031, inducing the strong persistence and slow mean reversion typical of economic time series. The error term follows the same distribution as in Scenario 1.
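A minimal data-generating sketch for these scenarios follows. It checks stationarity through the roots of the characteristic polynomial 1 − φ_1 z − ⋯ − φ_p z^p (all must lie outside the unit circle) and draws innovations from a two-component mixture (1 − ε)N(0, 1) + εG. The exact coefficients and mixture components did not survive extraction, so the contaminating distributions G (N(0, 10²) for the Gaussian case, a standard Cauchy for Scenario 2), the burn-in length and all function names are hypothetical placeholders.

```python
import numpy as np


def min_root_modulus(phi):
    """Smallest |z| among the roots of 1 - phi_1 z - ... - phi_p z^p."""
    # np.roots expects coefficients ordered from the highest power down to the constant.
    coeffs = np.concatenate((-np.asarray(phi, dtype=float)[::-1], [1.0]))
    roots = np.roots(coeffs)  # leading zeros (phi_p = 0) are stripped by np.roots
    return float(np.min(np.abs(roots))) if roots.size else float("inf")


def is_stationary(phi):
    return min_root_modulus(phi) > 1.0


def contaminated_errors(n, eps, kind, rng):
    """Draws from (1 - eps) N(0, 1) + eps * G; G is HYPOTHETICAL (N(0, 10^2) or Cauchy)."""
    base = rng.standard_normal(n)
    if kind == "gaussian":
        outlier = 10.0 * rng.standard_normal(n)
    elif kind == "cauchy":
        outlier = rng.standard_cauchy(n)
    else:
        raise ValueError(f"unknown kind: {kind}")
    use_outlier = rng.random(n) < eps  # each draw comes from G with probability eps
    return np.where(use_outlier, outlier, base)


def simulate_ar(phi, n, eps, kind="gaussian", burn_in=200, seed=None):
    """Simulate y_t = sum_j phi_j y_{t-j} + e_t and drop the first burn_in values."""
    phi = np.asarray(phi, dtype=float)
    if not is_stationary(phi):
        raise ValueError("coefficients do not define a stationary AR process")
    rng = np.random.default_rng(seed)
    p, total = len(phi), n + burn_in
    e = contaminated_errors(total, eps, kind, rng)
    y = np.zeros(total)
    for t in range(p, total):
        # y[t-p:t][::-1] is (y_{t-1}, ..., y_{t-p}), aligned with (phi_1, ..., phi_p)
        y[t] = phi @ y[t - p:t][::-1] + e[t]
    return y[burn_in:]


print(round(min_root_modulus([0.5, 0.3]), 4))  # 1.1736
y = simulate_ar([0.5, 0.3], n=300, eps=0.1, kind="cauchy", seed=1)
```

A minimum root modulus only slightly above 1, such as the 1.031 reported for Scenario 3, signals a process that is stationary but close to a unit root.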

To demonstrate its advantages, we compare the proposed method (RA-LASSO) with the traditional adaptive lasso method (LS-LASSO) and ordinary least squares estimation (OLS). The following four criteria are computed to evaluate finite-sample performance:

TP: The average accuracy rate of parameter estimates over 100 repetitions.

Size: The average number of non-zero coefficients in the estimation results over 100 repetitions.

AE: Mean absolute estimation error: .

SE: Root mean squared estimation error: .
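The four criteria can be computed from the stacked estimates of all replications as sketched below. Because the displayed formulas were lost in extraction, the normalizations are assumptions: TP is taken as the share of coefficients whose zero/nonzero status matches the truth, while AE and SE are averaged over coefficients within each replication and then over replications. The function name `evaluate` and the tolerance argument are hypothetical.

```python
import numpy as np


def evaluate(estimates, beta_true, tol=0.0):
    """Summary criteria over R replications.

    estimates: array of shape (R, p), one row of estimated coefficients per replication.
    beta_true: array of shape (p,).
    ASSUMED definitions (the source formulas are not available):
      TP   - mean share of coefficients whose zero/nonzero status matches the truth
      Size - mean number of nonzero estimated coefficients
      AE   - mean over replications of mean_j |beta_hat_j - beta_j|
      SE   - mean over replications of sqrt(mean_j (beta_hat_j - beta_j)^2)
    """
    est = np.atleast_2d(np.asarray(estimates, dtype=float))
    beta = np.asarray(beta_true, dtype=float)
    nonzero_hat = np.abs(est) > tol
    nonzero_true = np.abs(beta) > tol
    err = est - beta
    return {
        "TP": float(np.mean(nonzero_hat == nonzero_true)),
        "Size": float(np.mean(nonzero_hat.sum(axis=1))),
        "AE": float(np.mean(np.mean(np.abs(err), axis=1))),
        "SE": float(np.mean(np.sqrt(np.mean(err ** 2, axis=1)))),
    }


# Two toy replications: the first is exact, the second adds a false positive at lag 2.
res = evaluate([[0.5, 0.0, 0.3, 0.0], [0.4, 0.1, 0.3, 0.0]], [0.5, 0.0, 0.3, 0.0])
print(res)
```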

For RA-LASSO and LS-LASSO, we take

. Both methods also require initial values

and the tuning parameters

. In this simulation, we use the ordinary least squares estimates as initial values

, and select the tuning parameters

by minimizing the Bayesian information criterion (BIC) [26], defined as follows:

where

,

denotes the predicted value of

, and

k denotes the number of non-zero coefficients in

.
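The tuning procedure can be sketched for the LS-LASSO baseline: build the AR(p) design from lagged values, compute OLS initial values, form the usual adaptive lasso weights w_j = 1/|β̃_j|^γ of [15], solve the weighted lasso by coordinate descent with soft-thresholding, and pick λ on a grid by minimizing a BIC. The BIC form used here, log(σ̂²) + k log(n)/n with σ̂² the mean squared residual, is one common variant and is an assumption, as are γ = 1, the λ grid, the simulated AR(3) coefficients and every function name. This is not the RA-LASSO procedure, whose robust weights are not reproduced.

```python
import numpy as np


def lag_matrix(y, p):
    """Response y_t (t = p..n-1) and design with columns y_{t-1}, ..., y_{t-p}."""
    y = np.asarray(y, dtype=float)
    X = np.column_stack([y[p - j:len(y) - j] for j in range(1, p + 1)])
    return y[p:], X


def weighted_lasso_cd(X, y, lam, w, max_iter=10000, tol=1e-10):
    """Minimize (1/2n)||y - X b||^2 + lam * sum_j w_j |b_j| by coordinate descent."""
    n, p = X.shape
    b = np.zeros(p)
    col_sq = (X ** 2).sum(axis=0) / n
    r = y.copy()  # residual y - X b (b starts at zero)
    for _ in range(max_iter):
        max_change = 0.0
        for j in range(p):
            rho = X[:, j] @ r / n + col_sq[j] * b[j]
            # soft-thresholding update for coordinate j
            new = np.sign(rho) * max(abs(rho) - lam * w[j], 0.0) / col_sq[j]
            if new != b[j]:
                r -= X[:, j] * (new - b[j])
                max_change = max(max_change, abs(new - b[j]))
                b[j] = new
        if max_change < tol:
            break
    return b


def select_by_bic(y, p, lambdas, gamma=1.0):
    """LS-LASSO sketch: OLS initial values, adaptive weights, BIC over a lambda grid."""
    target, X = lag_matrix(y, p)
    n = len(target)
    beta_init = np.linalg.lstsq(X, target, rcond=None)[0]
    w = 1.0 / np.abs(beta_init) ** gamma
    best = None
    for lam in lambdas:
        b = weighted_lasso_cd(X, target, lam, w)
        sigma2 = np.mean((target - X @ b) ** 2)
        k = int(np.count_nonzero(b))
        bic = np.log(sigma2) + k * np.log(n) / n  # ASSUMED BIC form
        if best is None or bic < best[0]:
            best = (bic, lam, b)
    return best[1], best[2]


rng = np.random.default_rng(42)
phi_true = np.array([0.5, 0.0, 0.3])  # hypothetical AR(3) with a zero middle lag
n, burn = 2000, 200
y = np.zeros(n + burn)
for t in range(3, n + burn):
    y[t] = phi_true @ y[t - 3:t][::-1] + rng.standard_normal()
y = y[burn:]

grid = np.geomspace(1.0, 1e-4, 30)
lam, beta_hat = select_by_bic(y, p=5, lambdas=grid)
print(lam, np.round(beta_hat, 3))
```

Coordinate descent is used here only because it keeps the sketch dependency-free beyond NumPy; any solver for the weighted lasso would serve.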

The corresponding simulation results are shown in Table 1, Table 2 and Table 3. These results reveal the following:

For Scenario 1, in the absence of contamination, all methods perform well. As the contamination level increases to 0.1 and 0.2, the advantage of the robust method becomes pronounced: RA-LASSO consistently achieves a higher TP and lower AE and SE than the other methods under contamination.

For Scenario 2, without contamination, all methods perform similarly. However, even mild contamination drastically degrades the performance of OLS and LS-LASSO, while RA-LASSO remains relatively robust and outperforms the other two methods. Furthermore, the Size metric reveals that LS-LASSO tends to overfit severely under contamination, whereas RA-LASSO effectively controls model complexity, selecting a model size much closer to the true value.

For Scenario 3, RA-LASSO consistently delivers the highest TP and the lowest estimation errors (AE and SE) under contamination. This confirms that the proposed method remains effective in the presence of both persistent serial correlation and outliers in the innovations.