1. Introduction

Multicollinearity is a prevalent challenge in predictive modeling, occurring when input features exhibit high correlation, which results in unstable model estimates and reduced prediction accuracy.

The multiple linear regression model is a widely used statistical tool across disciplines, including business, environmental studies, industry, medicine, and social sciences. A crucial assumption of this model is the independence of the explanatory variables. However, in practice, explanatory variables often exhibit moderate to strong linear relationships, leading to multicollinearity, which makes the estimated coefficients unstable and less reliable. The basic regression model is

$$y = X\beta + \varepsilon \quad (1)$$

In Equation (1), $y$ denotes the vector of observed responses, $X$ represents the design matrix comprising the predictor variables, $\beta$ is the vector of unknown regression coefficients, and $\varepsilon$ is the vector of random errors.

The typical method for estimating these coefficients is the ordinary least squares (OLS) method, which is represented in Equation (2) as follows:

$$\hat{\beta}_{OLS} = (X'X)^{-1}X'y \quad (2)$$

A key measure for detecting multicollinearity is the Condition Number (CN), which compares the largest ($\lambda_{\max}$) and smallest ($\lambda_{\min}$) eigenvalues of the matrix $X'X$, as defined in Equation (3).

A high CN (e.g., greater than 30) suggests severe multicollinearity, which leads to unstable estimates of the regression coefficients. Another common diagnostic tool is the Variance Inflation Factor (VIF), which measures how much the variance of a regression coefficient is inflated due to multicollinearity. It is calculated for each predictor as in Equation (4):

$$VIF_j = \frac{1}{1 - R_j^2} \quad (4)$$

where $R_j^2$ is the coefficient of determination when the $j$th predictor is regressed on all the others. A VIF greater than 10 indicates severe multicollinearity in the data. When multicollinearity exists, the matrix $X'X$ becomes close to singular, which makes OLS estimates less reliable. One effective solution is ridge regression, a regularization technique that introduces a penalty term to mitigate overfitting [1]. In ref. [2], the authors proposed a modified ridge estimator for severely multicollinear datasets. The shrinkage parameter, which controls the strength of regularization, was tuned to optimize the regression coefficients. This adjustment takes place during both the tuning and validation phases to achieve a balance between fitting the data well and avoiding overfitting.
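The two diagnostics described above can be sketched in a few lines. This is a minimal illustration in Python with NumPy (the study itself reports using R), not the authors' code. It assumes the common definition CN = sqrt(lambda_max / lambda_min), which may differ from the paper's Equation (3), and the function names and toy data are hypothetical.

```python
import numpy as np

def condition_number(X):
    """CN of X'X, ASSUMING the definition sqrt(lambda_max / lambda_min)."""
    eigvals = np.linalg.eigvalsh(X.T @ X)  # eigenvalues in ascending order
    return float(np.sqrt(eigvals.max() / eigvals.min()))

def vif(X):
    """VIF_j = 1 / (1 - R_j^2), regressing column j on all other columns."""
    n, p = X.shape
    out = np.empty(p)
    for j in range(p):
        target = X[:, j]
        others = np.column_stack([np.ones(n), np.delete(X, j, axis=1)])
        coef, *_ = np.linalg.lstsq(others, target, rcond=None)
        resid = target - others @ coef
        r2 = 1.0 - resid @ resid / np.sum((target - target.mean()) ** 2)
        out[j] = 1.0 / (1.0 - r2)
    return out

# Toy data (hypothetical): columns 0 and 1 are nearly collinear, column 2 is independent.
rng = np.random.default_rng(0)
z = rng.standard_normal((200, 3))
X_demo = np.column_stack([z[:, 0], z[:, 0] + 0.05 * z[:, 1], z[:, 2]])
X_demo = (X_demo - X_demo.mean(axis=0)) / X_demo.std(axis=0)

print("CN :", condition_number(X_demo))
print("VIF:", vif(X_demo))
```

In this toy setup the two collinear columns receive very large VIFs while the independent column stays close to 1, which is the pattern the thresholds above are meant to flag.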

The ridge estimator is given in Equation (5):

$$\hat{\beta}(k) = (X'X + kI)^{-1}X'y \quad (5)$$

The $k$ term, a small positive value known as the shrinkage or ridge parameter, is used to improve the numerical stability of the regression model. Additionally, $I$ is the identity matrix, which is scaled by the ridge parameter $k$. This technique shrinks the coefficients, which, in turn, helps reduce the variance and the mean squared error (MSE). A key strength of the single-parameter ridge estimator is its simplicity and computational efficiency, especially when the model includes fewer independent variables. However, the performance of ridge estimators is highly influenced by the choice of $k$, which is typically determined through cross-validation. There are scenarios where a single regularization parameter may not be sufficient, especially in complex models. This is where two-parameter ridge estimators come into play, offering advantages over the traditional ridge estimator, which is widely used to handle multicollinearity. For example, ref. [3] examined coefficient testing under ridge models, while ref. [4] proposed a new ridge-type estimator with improved performance.
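To make the shrinkage effect of $k$ concrete, the following is a minimal Python/NumPy sketch of the standard closed-form ridge solution $(X'X + kI)^{-1}X'y$. The data, seed, and the grid of $k$ values are hypothetical and chosen only for illustration.

```python
import numpy as np

def ridge(X, y, k):
    """Closed-form ridge estimate (X'X + kI)^{-1} X'y; k = 0 reduces to OLS."""
    p = X.shape[1]
    return np.linalg.solve(X.T @ X + k * np.eye(p), X.T @ y)

# Hypothetical toy data with a nearly collinear pair of predictors.
rng = np.random.default_rng(1)
z = rng.standard_normal((50, 2))
X = np.column_stack([z[:, 0], z[:, 0] + 0.01 * z[:, 1]])
y = X @ np.array([1.0, 1.0]) + rng.standard_normal(50)

beta_ols = ridge(X, y, 0.0)
for k in (0.0, 0.1, 1.0, 10.0):
    # The coefficient norm shrinks as k grows.
    print(f"k = {k:5.1f}  ||beta|| = {np.linalg.norm(ridge(X, y, k)):.4f}")
```

Even a small positive $k$ typically pulls the inflated OLS coefficients of the collinear pair back toward moderate values, which is the variance reduction described above.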

Ref. [5] introduced the two-parameter ridge or shrinkage estimator, which adds another scale parameter, $q$, to adjust the penalty term, as in Equation (6):

$$\hat{\beta}(q, k) = q(X'X + kI)^{-1}X'y \quad (6)$$

where $q$ is the additional scale parameter. This approach allows for more flexibility, making it better suited for handling multicollinearity in complex cases. If $q = 1$ and $k = 0$, Equation (6) reverts to the standard OLS estimator. The authors in [6] improved the two-parameter ridge regression estimator for multicollinear datasets and compared their estimators with others based on the MSE.

While ref. [7] introduced a generalized ridge estimator as a comprehensive solution for handling severe multicollinearity, ref. [8] introduced three shrinkage estimators based on averages for severely multicollinear data. Ref. [9] refined the bias–variance trade-off by incorporating data-specific tuning parameters, offering a more tailored approach to ridge regression.

Theoretical improvements have focused on utilizing higher-order eigenvalue terms to improve ridge regression techniques. Ref. [10] developed new ridge estimators to effectively reduce the effect of multicollinearity in the data. Ref. [11] further highlighted and improved the shrinkage parameters in practical applications of ridge regression to address multicollinearity, making it an essential tool in regression analysis. While refs. [12,13] expanded the scope of ridge estimators to handle severely multicollinear datasets in fields such as genetics, environmental studies, and econometrics, ref. [14] proposed ridge estimators based on rank, a method particularly useful for analyzing complex, multicollinear genetic data.

Extensive research has been conducted on estimating the ridge or shrinkage parameters in linear regression models. Ref. [15] proposed two-parameter estimators for complex multicollinear data to improve the accuracy of the Almon distributed-lag model. Ref. [16] developed ridge estimators to enhance the accuracy of linear regression models and compared these estimators with OLS and other established estimators based on the MSE. More recently, ref. [17] used bootstrap–quantile and improved ridge estimators for linear regression, while ref. [18] introduced six new two-parameter ridge estimators to address multicollinearity challenges and compared these modified estimators with other established estimators based on the MSE. The authors in [19,20] further introduced two-parameter ridge estimators that handle data with high multicollinearity more effectively than other existing estimators.

The literature clearly shows that no single ridge estimator performs well across all multicollinearity scenarios. To deal with this issue, many researchers have proposed modifications to improve the estimator performance under severe multicollinearity. In this study, we propose four new ridge-type estimators, denoted as RIRE1, RIRE2, RIRE3, and RIRE4, that demonstrate better performance in simulation studies across different conditions such as sample sizes, number of predictors, error variances, and correlation structures. These estimators outperform the OLS and other existing shrinkage methods, maintaining robust efficiency under both normal and non-normal distributions, particularly when datasets have severe multicollinearity. The remainder of the paper is organized as follows:

Section 2 presents the statistical methodology for ridge estimators, including a review of existing estimators and the introduction of our four newly modified estimators. Section 3 describes the Monte Carlo simulations conducted to assess the performance of these estimators under various conditions. In Section 4, the proposed estimators are applied to the analysis of three real-world datasets to demonstrate their practical utility. Finally, Section 5 offers concluding remarks and summarizes the key findings of the study.

2. Methodology

Ridge regression is a supervised learning method that adds a penalty term to the regression equation in order to reduce the effects of multicollinearity. This section provides the mathematical foundation for existing ridge estimators and the newly proposed modified estimators for ridge regression models.

To simplify the regression model in Equation (1), we can reformulate it into its canonical form as

$$y = Z\alpha + \varepsilon$$

where $Z = XD$ is the transformed design matrix, $\alpha = D'\beta$ is the parameter vector in the canonical space, and $\varepsilon$ represents the noise, as before. The matrix $D$ is orthogonal, derived from the eigenvectors of $X'X$, and satisfies $D'D = DD' = I$. This transformation aligns the design matrix $X$ with the principal components of $X'X$, simplifying the regression problem.

Additionally, we define

$$\Lambda = Z'Z = D'X'XD = \mathrm{diag}(\lambda_1, \dots, \lambda_p)$$

where $\Lambda$ is a diagonal matrix containing the eigenvalues $\lambda_1 \le \dots \le \lambda_p$ arranged in ascending order. The relationship between the original parameters and the canonical parameters is expressed as

$$\beta = D\alpha$$

which enables the model to operate in the canonical space.

In this form, the OLS estimator becomes

$$\hat{\alpha} = \Lambda^{-1}Z'y$$

where $\Lambda^{-1}$ scales $Z'y$ by the inverse eigenvalues. However, small eigenvalues can cause instability in the OLS solution. Ridge regression mitigates this by introducing a regularization parameter $k$, modifying the estimator to be

$$\hat{\alpha}(k) = (\Lambda + kI)^{-1}Z'y$$

which adds $k$ to the diagonal elements of $\Lambda$, stabilizing the solution by reducing the influence of small eigenvalues.

A generalized two-parameter ridge regression estimator extends this idea, taking the form

$$\hat{\alpha}(q, k) = q(\Lambda + kI)^{-1}Z'y$$

where $q$ adjusts the intensity of shrinkage. This added flexibility allows for better control over the trade-off between bias and variance, catering to various modeling requirements.
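The canonical transformation can be checked numerically. The sketch below, in Python with NumPy, builds the canonical form from an eigendecomposition of $X'X$ and confirms that mapping the canonical OLS estimate back recovers the usual OLS coefficients. The symbols `D`, `Z`, and `lam`, the helper `alpha_ridge`, and the toy data are illustrative choices, not taken from the paper.

```python
import numpy as np

# Hypothetical toy data.
rng = np.random.default_rng(2)
X = rng.standard_normal((40, 3))
y = X @ np.array([0.5, -1.0, 2.0]) + rng.standard_normal(40)

# Eigendecomposition of X'X: eigenvalues ascending, eigenvectors in the columns of D.
lam, D = np.linalg.eigh(X.T @ X)
Z = X @ D  # transformed design matrix, so that Z'Z = diag(lam)

# Canonical OLS: Lambda^{-1} Z'y is an element-wise division because Lambda is diagonal.
alpha_ols = (Z.T @ y) / lam

def alpha_ridge(k, q=1.0):
    """Two-parameter canonical form q (Lambda + kI)^{-1} Z'y; q = 1 is ordinary ridge."""
    return q * (Z.T @ y) / (lam + k)

beta_ols = np.linalg.solve(X.T @ X, X.T @ y)
print("Z'Z diagonal:      ", np.allclose(Z.T @ Z, np.diag(lam)))
print("D @ alpha == beta: ", np.allclose(D @ alpha_ols, beta_ols))
print("shrunk alpha (k=5):", alpha_ridge(5.0))
```

Because $\Lambda$ is diagonal, each canonical coefficient is shrunk by its own factor $\lambda_j / (\lambda_j + k)$, so directions with small eigenvalues are damped the most.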

2.1. Existing Ridge-Type Estimators

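In this part, some existing estimators are reviewed. Hoerl and Kennard [1] developed the first ridge estimator, commonly known as the HK estimator, and its mathematical formulation is given as

$$\hat{k}_{HK} = \frac{\hat{\sigma}^2}{\hat{\alpha}_{\max}^2}$$

where $\hat{\sigma}^2$ is the estimated error variance and $\hat{\alpha}_{\max}$ is the largest OLS coefficient in canonical form.

The authors in [8] explored three ridge estimators designed to address multicollinearity in data using averaging techniques. These are the Arithmetic Mean (KAM), the Geometric Mean (KGM), and the Median (KMed). They are mathematically expressed as

$$\hat{k}_{AM} = \frac{1}{p}\sum_{j=1}^{p}\frac{\hat{\sigma}^2}{\hat{\alpha}_j^2}, \qquad \hat{k}_{GM} = \frac{\hat{\sigma}^2}{\left(\prod_{j=1}^{p}\hat{\alpha}_j^2\right)^{1/p}}, \qquad \hat{k}_{Med} = \mathrm{median}\left\{\frac{\hat{\sigma}^2}{\hat{\alpha}_j^2}\right\}$$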
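As a rough illustration of how such ridge parameters are computed in practice, the sketch below evaluates the HK, KAM, KGM, and KMed values in Python with NumPy. It assumes the commonly cited forms (HK as $\hat{\sigma}^2/\hat{\alpha}_{\max}^2$, and the mean, geometric mean, and median of the ratios $\hat{\sigma}^2/\hat{\alpha}_j^2$), which may differ in detail from the expressions in the cited references. The function name, the residual degrees of freedom $n - p$ (no intercept), and the toy data are assumptions.

```python
import numpy as np

def ridge_k_candidates(X, y):
    """HK, KAM, KGM and KMed ridge parameters under the ASSUMED textbook forms."""
    n, p = X.shape
    lam, D = np.linalg.eigh(X.T @ X)
    Z = X @ D
    alpha = (Z.T @ y) / lam              # canonical OLS coefficients
    resid = y - Z @ alpha
    sigma2 = resid @ resid / (n - p)     # assumed variance estimate (no intercept)
    ratios = sigma2 / alpha**2
    return {
        "HK": sigma2 / np.max(alpha**2),
        "KAM": np.mean(ratios),
        "KGM": sigma2 / np.prod(alpha**2) ** (1.0 / p),
        "KMed": np.median(ratios),
    }

# Hypothetical toy data.
rng = np.random.default_rng(4)
X_toy = rng.standard_normal((60, 4))
y_toy = X_toy @ np.array([1.0, -2.0, 0.5, 3.0]) + rng.standard_normal(60)
for name, value in ridge_k_candidates(X_toy, y_toy).items():
    print(f"{name:5s} k = {value:.4f}")
```

Under these forms HK is the smallest of the ratios, so it never exceeds the geometric mean, which in turn never exceeds the arithmetic mean.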

Ref. [21] introduced an eigenvalue-based estimator, known as the KMS estimator, to effectively handle multicollinearity. Its mathematical expression is given as

Similarly, a two-parameter ridge estimator, referred to as the TK estimator, was established by [6], with the optimal values for $q$ and $k$ derived as follows:

Ref. [22] developed three estimators for multicollinear data, denoted as MPR1, MPR2, and MPR3. Their mathematical expressions are given below:

In these formulations, the adjusted ridge parameter for the $j$th predictor is computed from a weight $w_j$, defined by the ratio of the eigenvalue $\lambda_j$ to the absolute value of the corresponding coefficient estimate $\hat{\alpha}_j$:

$$w_j = \frac{\lambda_j}{|\hat{\alpha}_j|}$$

From the well-established ridge-type estimators available, we selected the estimators HK, KAM, KGM, KMed, KMS, TK, MPR1, MPR2, MPR3, and OLS to be compared with our four modified estimators using Monte Carlo simulations based on the MSE.

2.2. New Ridge-Type Estimators

The newly proposed estimators, referred to as RIRE1, RIRE2, RIRE3, and RIRE4, effectively address various multicollinearity conditions. For these estimators, the $k$ values are presented below:

In RIRE1, the logarithmic function imposes a nonlinear growth constraint on the penalization term. By summing over all predictors, this estimator accumulates the contribution of each variable to multicollinearity.

Squaring the eigenvalues in this estimator increases the weight of highly collinear directions. Normalizing by the maximum coefficient ensures that the penalty strength does not disproportionately increase due to one dominant variable. Thus, RIRE2 enforces balanced shrinkage that is tailored to both the multicollinearity severity and the variable scale.

The RIRE3 estimator captures the squared contribution of eigenvalue-weighted coefficients, scaled by residual variance, effectively linking penalization strength to the overall signal-to-noise ratio in the presence of multicollinearity.

RIRE4 introduces higher-order penalization sensitivity by cubing the eigenvalues and squaring the coefficients, allowing it to react more forcefully to severe collinearity. The denominator acts as a normalization factor, stabilizing the shrinkage magnitude. This estimator is particularly effective when a subset of predictors exhibits extremely high multicollinearity.

Equations (19)–(22) are used to optimize the $k$-values, while Equation (7) is utilized to compute $\hat{q}$.

2.3. The Performance of Estimators Based on the MSE Criterion

We assessed and compared the performance of our proposed modified estimators with OLS and other existing estimators based on the MSE criterion. The MSE has been applied in various studies, such as [23,24,25,26,27,28], to evaluate the accuracy of estimators. The MSE can be calculated as

Since it is challenging to compare Equations (23) and (24) theoretically, we will, instead, analyze their performance through Monte Carlo simulations in the next section.

3. Computational Analysis Using Monte Carlo Simulation

Equation (25) is used for generating predictors, as in previous research studies [26,27,28]:

$$x_{ij} = \sqrt{1 - \rho^2}\, z_{ij} + \rho\, z_{i,p+1}, \quad i = 1, \dots, n, \quad j = 1, \dots, p \quad (25)$$

The correlation ($\rho$) between predictors was varied across several values, including 0.50 and 0.70, to examine different multicollinearity scenarios. Independent samples ($z_{ij}$) were drawn from a standard normal distribution, with several sample sizes ($n$) and predictor counts ($p$) used to evaluate model robustness. The response variable ($y$) was generated using the following model:

$$y_i = \beta_0 + \beta_1 x_{i1} + \dots + \beta_p x_{ip} + \varepsilon_i, \quad i = 1, \dots, n \quad (26)$$

where $\beta_0$ is the intercept (set to zero), $\beta_j$ is the $j$th regression coefficient, and $\varepsilon_i$ is the error term with variance $\sigma^2$, analyzed at levels 0.4, 1, 4, and 8. Furthermore, to examine the impact of non-normal errors, we generated error terms from a t-distribution with 2 degrees of freedom ($t_2$) and an F-distribution with 6 and 12 degrees of freedom (F(6,12)).
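The data-generating step can be sketched as follows in Python with NumPy. The predictor scheme is the widely used construction $x_{ij} = \sqrt{1-\rho^2}\,z_{ij} + \rho\,z_{i,p+1}$, assumed here to match the paper's Equation (25). The function name `simulate` and the equal-weight coefficient vector with $\beta'\beta = 1$ are hypothetical choices for illustration only.

```python
import numpy as np

def simulate(n, p, rho, sigma, rng):
    """Generate correlated predictors and a response with zero intercept."""
    z = rng.standard_normal((n, p + 1))
    # Each predictor shares the common component z[:, p], which induces correlation.
    X = np.sqrt(1.0 - rho**2) * z[:, :p] + rho * z[:, [p]]
    beta = np.ones(p) / np.sqrt(p)       # hypothetical coefficients with beta'beta = 1
    y = X @ beta + sigma * rng.standard_normal(n)
    return X, y, beta

rng = np.random.default_rng(0)
X_sim, y_sim, beta_sim = simulate(n=20000, p=3, rho=0.9, sigma=1.0, rng=rng)
print(np.corrcoef(X_sim, rowvar=False).round(3))
```

Under this construction the theoretical correlation between any two predictors is $\rho^2$, so $\rho = 0.9$ yields pairwise correlations near 0.81. Note that `sigma` is a standard deviation, so the variance levels listed above correspond to its square.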

To calculate the MSE of the estimators, Algorithm 1 was used, as detailed below.

Algorithm 1. Step-by-step procedure for the MSE

Step 1. Standardize the matrix of independent variables generated using Equation (25), then compute the eigenvalues and eigenvectors of X′X.

Step 2. Determine the regression coefficients in canonical form, with the coefficient vector chosen to correspond to the maximum eigenvalue.

Step 3. Generate random error terms from the normal, $t_2$, and F(6,12) distributions.

Step 4. Compute the dependent variable values using Equation (12).

Step 5. Calculate the OLS and ridge regression estimates using their expressions.

Step 6. Repeat for $N$ Monte Carlo iterations and calculate the MSE for all estimators using Equation (27).
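The steps of Algorithm 1 can be sketched as a small Monte Carlo loop. The Python/NumPy example below compares only OLS with an HK-type ridge estimator under normal errors. It assumes the MSE is the average over replications of the squared distance between the estimated and true canonical coefficients, and that the true coefficient vector is the eigenvector of the largest eigenvalue. The function name `mc_mse`, the default settings, and the reduced number of replications are illustrative and are not the paper's configuration.

```python
import numpy as np

def mc_mse(n=30, p=4, rho=0.99, sigma=1.0, reps=500, seed=0):
    """Monte Carlo MSE of canonical OLS and HK-type ridge estimates (sketch)."""
    rng = np.random.default_rng(seed)
    z = rng.standard_normal((n, p + 1))
    X = np.sqrt(1.0 - rho**2) * z[:, :p] + rho * z[:, [p]]
    X = (X - X.mean(axis=0)) / X.std(axis=0)      # fixed, standardized design
    lam, D = np.linalg.eigh(X.T @ X)
    Z = X @ D
    beta = D[:, -1]                # eigenvector of the largest eigenvalue, beta'beta = 1
    alpha = D.T @ beta             # true coefficients in canonical form
    sse = {"OLS": 0.0, "HK": 0.0}
    for _ in range(reps):
        y = X @ beta + sigma * rng.standard_normal(n)
        a_ols = (Z.T @ y) / lam
        resid = y - Z @ a_ols
        k = (resid @ resid / (n - p)) / np.max(a_ols**2)   # assumed HK-type k
        a_hk = (Z.T @ y) / (lam + k)
        sse["OLS"] += np.sum((a_ols - alpha) ** 2)
        sse["HK"] += np.sum((a_hk - alpha) ** 2)
    return {name: total / reps for name, total in sse.items()}

print(mc_mse())
```

Other estimators can be added by computing their own $k$ (and $q$) inside the loop and accumulating the squared error in the same dictionary.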

Simulations with N = 10,000 replications were performed in R (version 4.1.0) to evaluate the MSE across varying simulation settings. Table A1, Table A2, Table A3, and Table A4 in Appendix A present the MSEs for the proposed and existing estimators under these conditions. Detailed analysis follows in the next section.

Discussion and Analysis

The comparison of estimators in Table A1 and Table A2 illustrates how their performance, as measured by the MSE criterion, varies under different conditions, including variations in the sample size ($n$), number of predictors ($p$), predictor correlations ($\rho$), and error variance ($\sigma^2$), with errors generated from a normal distribution. Table A3 and Table A4 present the MSEs of different estimators when the error term is generated from a standardized t-distribution with 2 degrees of freedom ($t_2$) and an F-distribution with 6 and 12 degrees of freedom. These heavy-tailed error distributions introduce significant deviations from normality, challenging the robustness of classical estimators.

Here are some resulting remarks from the analysis:

- i. Effect of Sample Size ($n$): A small sample size exacerbated the limitations of the OLS and some classical ridge estimators, particularly under high correlations and large predictor counts. For instance, OLS demonstrated very high MSEs in these cases, reflecting its instability under multicollinearity. Conversely, as the sample size increased, the MSE of all the estimators decreased, with the HK estimator showing improved performance. The KAM, KGM, and KMed estimators also improved in large samples. For the smallest sample size, OLS showed significant sensitivity to the error variance, especially as the correlation increased. As the sample size increased, the estimates stabilized, with OLS providing more consistent results. Higher error variances ($\sigma^2$) exacerbated this sensitivity, particularly in smaller samples. Estimators such as MPRs and RIREs showed less variability and became more reliable as the sample size increased. Notably, RIRE2, RIRE4, and MTPR estimators maintained low MSEs even in small-sample scenarios, suggesting their robustness to sample size variations.

- ii. Effect of Predictors ($p$): The number of predictors significantly affected the estimators’ performance. When $p$ is small, classical ridge estimators such as HK, KAM, KGM, and KMed performed relatively well under moderate multicollinearity. However, as $p$ increased, their MSE increased substantially, especially in high multicollinearity settings. This trend was more pronounced in OLS, which struggled to accommodate a higher predictor count. By contrast, RIRE2, RIRE4, and MPR variants exhibited remarkable scalability, maintaining low MSEs regardless of the predictor count.

- iii. Effect of Correlations ($\rho$): High correlations among the predictors dramatically increased the MSE for OLS and classical ridge estimators. For example, the MSE of HK escalated in these conditions, particularly in small sample sizes and larger predictor settings. RIRE estimators and MTPR variants, however, showed resilience to extreme correlations, consistently achieving the lowest MSE across all scenarios.

- iv. Effect of Error Variance ($\sigma^2$): The ridge estimators (e.g., HK and KMS) were particularly sensitive to a high error variance, with their performance deteriorating in the most adverse settings. Our RIRE estimators demonstrated relative stability, maintaining lower MSEs under increasing error variances. MPR estimators also performed well in managing the effects of a higher error variance, making them suitable for noisy data.

- v. To assess the effect of non-normal error terms, errors were simulated from a heavy-tailed t-distribution with 2 degrees of freedom, which introduces significant departures from normality by allowing extreme values or outliers. Under these challenging conditions, classical estimators such as the OLS and conventional ridge-based methods (HK, KAM, KGM, KMed, KMS, TK, MPR1–MPR3) exhibited notably high mean squared errors (MSEs), especially at high correlation levels ($\rho$ close to 1). In contrast, the proposed modified ridge estimators (RIRE1 to RIRE4) showed marked resilience to the heavy-tailed noise structure. Their MSEs remained consistently low across different sample sizes and predictor dimensions, indicating enhanced robustness against outliers and extreme error values inherent to $t_2$-distributed noise. This robustness is particularly important in practical scenarios where normality assumptions are violated and error distributions have heavy tails. Among the RIRE estimators, RIRE2 and RIRE4 performed better, suggesting that their specific modifications effectively mitigate the influence of large error fluctuations. These results highlight the advantage of the new estimators in maintaining accuracy and stability in regression models affected by non-normal, heavy-tailed error distributions.

- vi. The findings from Table A3 indicate that the new RIRE estimators provide improved accuracy and stability compared with classical and existing methods in regression models with $t_2$-distributed errors.

- vii. Table A4 shows the MSEs when error terms follow a standardized F-distribution with (6, 12) degrees of freedom, representing heavy-tailed, non-normal errors. The modified ridge estimators (RIRE1–RIRE4) consistently outperformed OLS and other existing methods (HK, KAM, KGM, KMed, KMS, TK, MPR1–MPR3), especially at high correlations. Among them, RIRE3 and RIRE4 achieved the lowest MSEs, demonstrating superior robustness and accuracy under this complex error structure. This highlights the advantage of RIRE estimators in handling heavy-tailed, asymmetric noise effectively.

These results highlight that no single estimator performs optimally under all conditions. However, our modified estimators RIRE2 and RIRE4 consistently outperformed OLS and other existing estimators in scenarios involving small samples, many predictors, high correlations, and high error variances. The other ridge estimators, such as HK, were effective under moderate conditions; however, they failed to handle extreme multicollinearity or challenging settings involving many predictors and small samples. OLS remained unsuitable under multicollinearity.

The summary table (Table 1) was created based on the simulation results from Table A1, Table A2, Table A3, and Table A4. The proposed RIRE estimators demonstrated strong performance across a wide range of conditions, consistently outperforming other methods. In particular, RIRE3 and RIRE4 performed the best in 88 out of 120 cases, excelling in scenarios with varying sample sizes, error variances, and dimensions. RIRE2 also showed strength in nine scenarios, particularly at high error variances. Overall, our RIREs were the top choice in 97 out of 120 situations, proving their reliability and adaptability compared with alternatives such as MPR1 and MPR3, which performed well only in specific contexts.

4. Real-Life Applications

In this section, we apply the newly proposed and competing estimators to three real-life datasets. The first dataset is the Updated Longley (1959–2005), sourced from the Department of Labor, the Bureau of Statistics, and the Defense Manpower Data Center, Gujarati’s Basic Econometrics [29], and Mental Health and Digital Behavior (2020–2024). The second dataset is the Hospital Manpower dataset used in [17]. The third dataset is the Body Fat Dataset [30], which contains body composition measurements and is publicly available online. These datasets exhibit high multicollinearity and are recognized benchmarks for ridge regression analysis.

4.1. Practical Application of the Longley Dataset

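The dataset consists of 47 observations spanning from 1959 to 2005, with a total of six variables: $y, X_1, X_2, X_3, X_4, X_5$. Thus, the regression model can be written as

$$y = \beta_0 + \beta_1 X_1 + \beta_2 X_2 + \beta_3 X_3 + \beta_4 X_4 + \beta_5 X_5 + \varepsilon$$

where $y$ is the dependent variable, $X_1, \dots, X_5$ are the independent variables, $\beta_0$ is the intercept, $\beta_1, \dots, \beta_5$ are the coefficients for each independent variable, and $\varepsilon$ is the error term.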

To check for multicollinearity in the dataset, we looked at key indicators: eigenvalues, the CN, the VIF, and the heatmap display. These help in understanding how strongly the independent variables are related to each other and whether that could cause issues in our analysis.

Using Equation (3) with the eigenvalues of the dataset, the CN is about 514.94, which points to severe multicollinearity. Such a high CN suggests that the independent variables are strongly correlated with each other.

Equation (4) was utilized to calculate the VIF for each predictor, where $R_j^2$ is the R-squared value from regressing $X_j$ on all the other predictors in the model. Equivalently, the VIFs can be obtained from the inverse of the correlation matrix of the predictors: its diagonal elements are the VIFs. The resulting VIFs for the five predictors are 52.90, 79.94, 35.94, 4.18, and 4.81. High VIF values indicate multicollinearity, with a VIF greater than 10 suggesting significant correlation among the predictors. In this analysis, three predictors (VIFs of 52.90, 79.94, and 35.94) showed high multicollinearity, while the other two (4.18 and 4.81) had lower VIFs, indicating that they are less correlated with the other predictors.
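The shortcut through the inverse correlation matrix can be illustrated with a short Python/NumPy sketch. The function name and the two-predictor toy data are hypothetical. With only two predictors the result can be checked by hand, since both VIFs equal $1/(1 - r^2)$ for sample correlation $r$.

```python
import numpy as np

def vif_from_correlation(X):
    """VIFs as the diagonal of the inverse correlation matrix of the predictors."""
    R = np.corrcoef(X, rowvar=False)
    return np.diag(np.linalg.inv(R))

# Hypothetical toy data: two correlated predictors.
rng = np.random.default_rng(3)
a = rng.standard_normal(500)
X2 = np.column_stack([a, a + 0.5 * rng.standard_normal(500)])

r = np.corrcoef(X2, rowvar=False)[0, 1]
print("VIFs from inverse correlation:", vif_from_correlation(X2))
print("1 / (1 - r^2):                ", 1.0 / (1.0 - r**2))
```

This avoids fitting one auxiliary regression per predictor, although inverting a nearly singular correlation matrix can itself be numerically delicate when multicollinearity is extreme.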

Furthermore, the heatmap in Figure 1 shows that several predictors are strongly related, meaning that they share a lot of the same information. In particular, one predictor is highly correlated with two others (0.97 and 0.99), which suggests that these variables move together. On the other hand, another predictor has a strong negative relationship with two predictors (−0.87 in both cases), indicating that as one increases, the other tends to decrease. This level of correlation could cause issues in regression analysis by making it harder to determine the unique effect of each variable. To reduce the effect of severe multicollinearity, we used ridge regression, both with our proposed newly modified estimators and existing estimators.

The analysis of this real dataset validates the simulation results, confirming that our modified estimators (RIREs) performed better than other existing estimators, as shown in Table 2.

Figure 2 shows that MPR1, RIRE2, and RIRE3 had the lowest MSEs, indicating the best overall estimator performance.

Comparison of the Estimators Based on Confidence Interval

The 99% confidence interval (C.I.) for each coefficient $\hat{\beta}_j$ is calculated as $\hat{\beta}_j \pm Z \cdot SE(\hat{\beta}_j)$, where $Z$ is the critical value for a 99% C.I. (2.576), and $SE(\hat{\beta}_j)$ is the standard error of the coefficient. For each estimator, we used the reported MSE and the number of observations $n$ to calculate the standard error for each coefficient. We denote L($\hat{\beta}_j$) and U($\hat{\beta}_j$) as the lower and upper bounds of the C.I., respectively.
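The interval construction can be sketched as follows in Python with NumPy. The exact standard error formula is not recoverable from the text, so this sketch assumes $SE = \sqrt{MSE/n}$, which is only one way to combine the MSE with the number of observations. The function name and the numeric inputs are hypothetical.

```python
import numpy as np

def conf_interval(beta_hat, mse, n, z=2.576):
    """99% interval beta_hat +/- z * SE, ASSUMING SE = sqrt(MSE / n)."""
    beta_hat = np.asarray(beta_hat, dtype=float)
    se = np.sqrt(mse / n)
    return beta_hat - z * se, beta_hat + z * se

# Hypothetical coefficients and MSE, not taken from the paper's tables.
lower, upper = conf_interval([1.0, -0.5], mse=4.0, n=100)
print("L:", lower)
print("U:", upper)
print("width:", upper - lower)
```

Under this assumption every coefficient of a given estimator receives the same interval width, so comparing widths across estimators amounts to comparing their MSEs.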

From Table 3 and based on the provided confidence intervals, we see that RIRE4 had the narrowest intervals across most of the coefficients, suggesting it as the most precise estimator. RIRE1 and RIRE2 also showed relatively narrow intervals but not as consistently as RIRE4. Thus, RIRE4 appears to be the best estimator, as it had the smallest range between the lower and upper bounds for most of the coefficients.

4.2. Hospital Manpower Data

This dataset contains 17 observations with five predictors: $X_1$ (average daily patient load; Load), $X_2$ (monthly X-ray exposures; Xray), $X_3$ (occupied bed days; BedDays), $X_4$ (population in thousands; AreaPop), and $X_5$ (average patient stay; Stay). The dependent variable $y$ represents the monthly man-hours (Hours). The linear model is given as

$$y = \beta_0 + \beta_1 X_1 + \beta_2 X_2 + \beta_3 X_3 + \beta_4 X_4 + \beta_5 X_5 + \varepsilon$$

To assess multicollinearity, the CN, VIF, and heatmap were used. The CN of about 278.87 far exceeds the common threshold of 30, indicating severe multicollinearity. The VIF values were 8.189, 7929.5, 4.083, 8504.7, and 19.75. Since values above 5 (or sometimes 10) indicate problematic multicollinearity, several variables here exceed that threshold. This, along with the very high CN, suggests strong inter-variable dependencies that could significantly affect the analysis.

Figure 3 also shows strong positive correlations among most hospital manpower variables, apart from a few moderate correlations, indicating potential multicollinearity issues in the dataset.

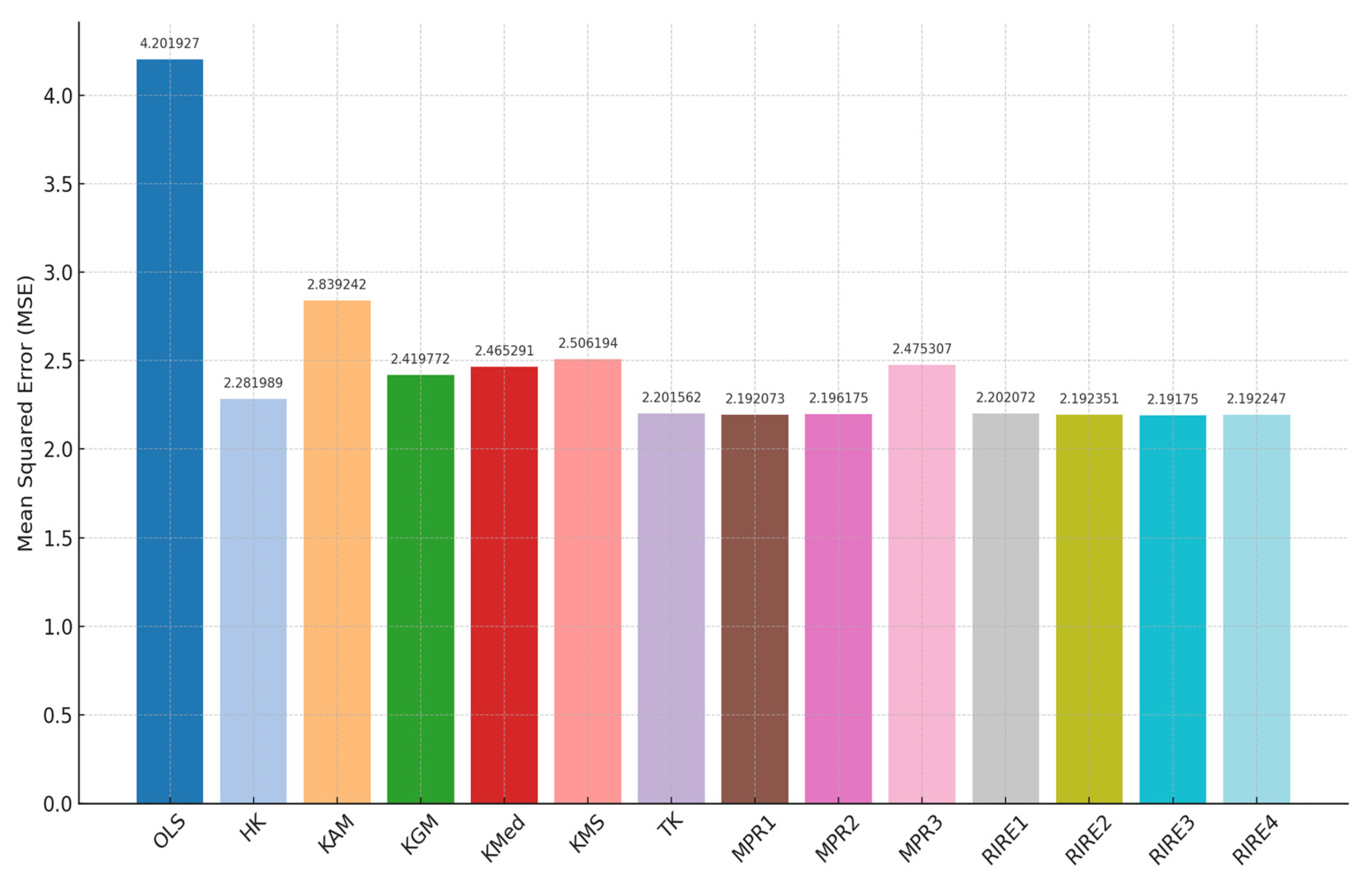

Table 4 shows that the newly proposed RIRE1–RIRE4 estimators consistently achieved the minimum MSE (2.19175–2.202072), outperforming OLS (4.201927) and the other existing methods. This indicates that the newly proposed RIRE estimators provide better accuracy on the Hospital Manpower data based on the MSE criterion.

Figure 4 confirms that RIRE3, MPR1, and RIRE2 offer the most accurate estimates with minimal MSEs, while OLS demonstrates the least efficiency.

Comparisons of Estimator Coefficients Based on the 99% C.I for the Hospital Manpower Data

To calculate the 99% C.I. for the Hospital Manpower data regression model, we followed the same steps as above, applying the same formulas for the SE and the confidence intervals for the coefficients.

Table 5 presents the confidence intervals (C.I.) for the coefficients of several estimators applied to the Hospital Manpower dataset. The RIRE estimators outperformed the existing methods in terms of providing more consistent and narrower confidence intervals. RIRE1 offered substantial improvements over OLS, with tighter intervals for most coefficients. RIRE2 showed better precision for some coefficients, with narrower intervals than OLS, HK, and MPR1, although it still had wider intervals compared with KMS for certain coefficients. RIRE3 delivered significant improvements over OLS and TK, offering narrower and more stable intervals for several coefficients. RIRE4 provided the most balanced results, with narrower intervals for three of the coefficients, outperforming existing estimators (MPR2 and MPR3) in terms of precision. Overall, the RIREs produced more reliable and precise estimates compared with existing methods, especially when dealing with multicollinearity issues in the dataset.

4.3. Body Fat Dataset

This dataset contains body composition and anthropometric data for 252 individuals, including variables like BODYFAT ($y$), DENSITY ($X_1$), AGE ($X_2$), WEIGHT ($X_3$), HEIGHT ($X_4$), ADIPOSITY ($X_5$), and various circumferences (e.g., NECK ($X_6$), CHEST ($X_7$), ABDOMEN ($X_8$), etc.). It is useful for analyzing the relationship between body fat and physical attributes. A regression model is given below:

Multicollinearity was assessed using CN, eigenvalues, VIF, and heatmap display. The results indicated severe multicollinearity, with a CN of 1234.89 (well above the threshold of 30) and VIF values between 2.31 and 62.63.

Figure 5 suggests potential multicollinearity, particularly between variables such as weight, adiposity, and abdominal circumference, which exhibit very high correlations and could lead to issues in predictive modeling or regression analysis.

To address the issue of multicollinearity, we used our proposed and existing estimators to enhance model stability and to reduce the effects of multicollinearity.

Table 6 shows that the estimation in this third dataset aligns with the simulation results, where the proposed estimator RIRE3 achieved the minimum MSE compared with OLS and other existing ridge estimators.

5. Conclusions

This study presented four new modified ridge regression estimators, referred to as RIRE1, RIRE2, RIRE3, and RIRE4, which were designed to enhance the precision of estimation when modeling multicollinear data. The adaptive characteristics of these estimators provide a versatile method for regularization, rendering them appropriate for contemporary predictive modeling challenges. The analysis highlighted the strong performance of our newly modified RIRE estimators, especially RIRE2, RIRE3, and RIRE4, as they handled challenging scenarios such as small sample sizes, severe multicollinearity, and large error variances better than OLS and other existing estimators under both normal and non-normal error distributions. Our new estimators achieved the lowest MSE in most simulation settings (97 out of 120) and in the real-world dataset analyses, supporting their reliability and practical usefulness relative to other ridge regression estimators.

Future research could focus on adapting RIRE estimators for high-dimensional data and testing their effectiveness on a wider range of real-world datasets. This exploration would offer valuable insights into their potential for handling complex data structures.