1. Introduction

Queuing theory originated from the pioneering work of Danish engineer Agner Krarup Erlang. In the early 20th century, he developed the first mathematical models to answer questions related to the number of telephone lines and operators needed to satisfy a random demand; see refs. [1,2]. Since then, this theory has become an important subject in many fields, notably in operations research, telecommunications, and computer system design.

Seminal works on queuing theory include the papers by Khinchine [3], Kendall [4], and Jackson [5]. Khinchine obtained a formula that characterizes the waiting-time and queue-length distributions in the M/G/1 queue, which is one of the most fundamental results in queuing theory. In his paper, Kendall proposed the notation (such as M/M/1, M/G/1) that is widely used to classify queuing systems. Jackson showed that under the assumptions of Poisson arrivals and exponential service times, the stationary distribution of open queuing networks factorizes into a product of independent node distributions.

Finally, classic books on this subject include the monographs by Cox and Smith [6] and Kleinrock [7].

In classical queuing models, the arrivals of customers constitute a discrete-state stochastic process. For instance, in the case of the M/M/s model, where s is the number of servers, customers arrive according to a Poisson process. However, in some applications, arrivals occur approximately according to a diffusion process. This is true, in particular, in the case of heavy traffic (for instance, on the Internet). See, for example, Lee et al. [8] and Lee and Weerasinghe [9].

In this paper, which is a vastly expanded version of the conference paper [10], we assume that the number

of customers in the system at time

t is such that

where

is a one-dimensional standard Brownian motion. The functions

and

are such that

is a diffusion process. Moreover, the non-negative constant

k is the rate at which the customers are served. The function

should be such that if

, then

will increase with time

t.
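A minimal simulation sketch may help to fix ideas. It assumes that the two-dimensional process consists of a noiseless component (written `x` below, the number of customers) driven by a one-dimensional diffusion (written `y`). The names `rho`, `service`, `f` and `v`, as well as the choice to pass the service term as a function of `x`, are illustrative assumptions and not the paper's notation. The scheme is a plain Euler-Maruyama discretization.

```python
import math
import random


def simulate_queue(rho, service, f, v, x0, y0, t_end, dt=1e-3, seed=0):
    """Euler-Maruyama path of the (assumed) degenerate system
        dX = [rho(X, Y) - service(X)] dt,
        dY = f(Y) dt + sqrt(v(Y)) dB.
    Returns the lists of times, X values and Y values."""
    rng = random.Random(seed)
    n_steps = int(round(t_end / dt))
    ts, xs, ys = [0.0], [x0], [y0]
    x, y = x0, y0
    for i in range(1, n_steps + 1):
        dB = rng.gauss(0.0, math.sqrt(dt))  # Brownian increment over dt
        # X has no noise term of its own: only Y is driven by dB.
        x_new = x + (rho(x, y) - service(x)) * dt
        y_new = y + f(y) * dt + math.sqrt(max(v(y), 0.0)) * dB
        x, y = x_new, y_new
        ts.append(i * dt)
        xs.append(x)
        ys.append(y)
    return ts, xs, ys


if __name__ == "__main__":
    k = 0.5  # hypothetical service-rate constant
    ts, xs, ys = simulate_queue(
        rho=lambda x, y: 1.0 + y * y,   # hypothetical non-negative arrival term
        service=lambda x: k * x,        # hypothetical form of the service term
        f=lambda y: 0.5,
        v=lambda y: 1.0,
        x0=10.0, y0=1.0, t_end=5.0,
    )
    print(xs[-1], ys[-1])
```

With the service term set to zero and a strictly positive `rho`, every simulated increment of `x` is positive, which is the monotonicity property required above.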

This type of degenerate two-dimensional diffusion process, which was proposed by Rishel [11], has been used in reliability theory to model the wear of devices. Indeed, wear should be strictly increasing with time. If we want to model the remaining lifetime instead, then it should be decreasing with time. In ref. [11], the model introduced is in fact multidimensional. Furthermore, the infinitesimal parameters of the process

may depend on

. Thus, Equation (2) can be replaced by

for

.

Remark 1. (i) With this type of model, in which the variations in the number of customers in the system are approximated by a diffusion process, there is no individual service time distribution as such. We see that if in Equation (1), then . Hence the statement that the constant k is the service rate of customers.

(ii) Several authors have used a diffusion process as an approximation of the variations in . A commonly used model is reflected Brownian motion (see, for instance, refs. [12,13]), so that the number of customers cannot become negative. With the model that we propose, this result can be guaranteed without having to introduce a reflective barrier.

(iii) To implement the proposed model, we must first identify the process on which the variations in depend. In the case of the application to reliability theory, can be an environmental variable, such as temperature or the speed at which the machine is used. Here, could be the market share of a company at time t. This share varies due, in particular, to competition between companies. The variable could also be the pool of all potential customers for the company. This pool may increase or decrease, depending on the activities of the company we are interested in, for example by opening branches in new countries or closing others. Next, we would need to estimate the infinitesimal parameters (that is, and ) of . Finally, we would also need to estimate the function and the constant k using real data.

In the next section, a particular case for the various functions in Equations (1) and (2) will be considered. Then, in Section 3, an optimal control problem for the two-dimensional process will be studied. In Section 4, explicit and exact solutions to particular cases of this control problem will be presented. Finally, some concluding remarks will end this paper in Section 5.

2. A Particular Case

Suppose that and in Equation (2). Then, is a Wiener process with positive drift and dispersion parameter . A Wiener process, being a Gaussian process, can take both positive and negative values. Therefore, the function should be such that is a non-negative function. For example, we could take . However, if we assume that and are both large enough, and that is small, then the probability that becomes negative is negligible.

We choose , with c being a positive constant. With this choice, we can appeal to the following proposition to compute the joint probability density function of the random vector .

Proposition 1 ([14]). Let be an n-dimensional stochastic process defined by where is an n-dimensional standard Brownian motion, is a square matrix of order n, is an n-dimensional vector, and is a positive definite square matrix of order n. Then, given that , we may write that where and where the symbol prime denotes the transpose of the matrix, and the function is given by

Remark 2. In words, the above proposition tells us that any affine transformation of an n-dimensional standard Brownian motion (which is a Gaussian process) remains a Gaussian process. Moreover, it provides the formulas needed to compute the mean and the covariance function of the new process.

In our case, we have

The coefficient matrix

contains the service rate

k and the constant

c. We chose

; that is, the value of

is assumed to increase at a rate which is proportional to

(if

), and

c is the constant of proportionality. We also assumed that

is a Wiener process with infinitesimal parameters

and

. The vector

contains the drift

, and

appears in the degenerate constant matrix

, which is the

noise matrix.

Now, for

,

Hence, if

, the function

is given by

Next, we find that

It follows that

Finally, with

we calculate

and

Notice that for

t large,

has a Gaussian distribution with mean and variance that are both (approximately) affine functions of

t.
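The large-t behaviour noted above can be checked numerically. The sketch below relies on a standard fact for linear stochastic differential equations of the form dZ = (A Z + b) dt + N^{1/2} dB with a deterministic starting point: the mean m(t) and the covariance matrix K(t) solve m' = A m + b and K' = A K + K A' + N, with K(0) = 0. The two ODEs are integrated with a classical fourth-order Runge-Kutta scheme. The particular matrices used in the example (a first row of A containing -k and c, drift μ in b, and σ² in the degenerate matrix N) are an assumption about the form of the system and are not taken from the displayed equations.

```python
def matmul(a, b):
    """Product of two matrices stored as lists of rows."""
    return [[sum(a[i][m] * b[m][j] for m in range(len(b)))
             for j in range(len(b[0]))] for i in range(len(a))]


def transpose(a):
    return [list(row) for row in zip(*a)]


def moment_rhs(A, b, N, m, K):
    """Right-hand sides of m' = A m + b and K' = A K + K A' + N."""
    n = len(m)
    dm = [sum(A[i][j] * m[j] for j in range(n)) + b[i] for i in range(n)]
    AK = matmul(A, K)
    KAt = matmul(K, transpose(A))
    dK = [[AK[i][j] + KAt[i][j] + N[i][j] for j in range(n)] for i in range(n)]
    return dm, dK


def gaussian_moments(A, b, N, z0, t_end, n_steps=2000):
    """Mean vector and covariance matrix at time t_end, starting from z0."""
    n = len(z0)
    h = t_end / n_steps
    m = [float(z) for z in z0]
    K = [[0.0] * n for _ in range(n)]

    def shift(m, K, dm, dK, s):
        # State moved by s times the given slope.
        return ([m[i] + s * dm[i] for i in range(n)],
                [[K[i][j] + s * dK[i][j] for j in range(n)] for i in range(n)])

    for _ in range(n_steps):
        k1 = moment_rhs(A, b, N, m, K)
        k2 = moment_rhs(A, b, N, *shift(m, K, *k1, h / 2))
        k3 = moment_rhs(A, b, N, *shift(m, K, *k2, h / 2))
        k4 = moment_rhs(A, b, N, *shift(m, K, *k3, h))
        m = [m[i] + h / 6 * (k1[0][i] + 2 * k2[0][i] + 2 * k3[0][i] + k4[0][i])
             for i in range(n)]
        K = [[K[i][j] + h / 6 * (k1[1][i][j] + 2 * k2[1][i][j]
                                 + 2 * k3[1][i][j] + k4[1][i][j])
              for j in range(n)] for i in range(n)]
    return m, K


if __name__ == "__main__":
    k, c, mu, sigma = 1.0, 1.0, 0.5, 1.0      # hypothetical constants
    A = [[-k, c], [0.0, 0.0]]                 # assumed coefficient matrix
    b = [0.0, mu]
    N = [[0.0, 0.0], [0.0, sigma ** 2]]       # degenerate noise matrix
    for t in (20.0, 21.0):
        m, K = gaussian_moments(A, b, N, [0.0, 0.0], t)
        print(t, m[0], K[0][0])
```

Under these assumed matrices, the mean and the variance of the first component each grow by an almost constant amount per unit of time once t is large, in line with the affine behaviour described above.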

Making use of the above results, we can compute the probability that will become equal to zero, so that the queue is empty, as a function of time. Similarly, if the system capacity is finite, we can easily compute the probability that the system will become saturated.

The actual (approximate) number of customers in the system is given by

where

r is the system capacity.

Corollary 1. Suppose that the system (1), (2) is of the form where , and . Then has a Gaussian distribution with mean and variance given by and

Proof. The diffusion process is a geometric Brownian motion with infinitesimal mean and infinitesimal variance . A geometric Brownian motion is non-negative (if it starts at ), since it can be expressed as the exponential of a Wiener process. If we define , then is a Wiener process with infinitesimal mean and infinitesimal variance . The results are then deduced at once from Proposition 1. □
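The argument used in the proof, namely that a geometric Brownian motion is the exponential of a Wiener process, can be illustrated by a short sampling sketch. It assumes the common parametrization dY = μ Y dt + σ Y dB, under which ln Y(t) is Gaussian with mean ln y + (μ − σ²/2) t and variance σ² t. This parametrization and the function name `sample_gbm` are assumptions made for illustration.

```python
import math
import random


def sample_gbm(y0, mu, sigma, t, n_paths=20000, seed=1):
    """Exact samples of Y(t) for a geometric Brownian motion started at y0 > 0.

    Each sample is y0 * exp(W), where W is the value at time t of a Wiener
    process with drift (mu - sigma^2 / 2) and dispersion sigma."""
    rng = random.Random(seed)
    drift = (mu - 0.5 * sigma ** 2) * t
    scale = sigma * math.sqrt(t)
    return [y0 * math.exp(drift + scale * rng.gauss(0.0, 1.0))
            for _ in range(n_paths)]
```

Every sample is strictly positive by construction, and the sample mean of the logarithms can be compared with ln y + (μ − σ²/2) t as a sanity check.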

Remark 3. We assume that both and the parameter μ are large enough (and σ small enough) for the probability to be negligible.

Next, assume that the system (1), (2) is

where

and

. Then,

will not have a Gaussian distribution. We can at least compute its expected value.

Proposition 2. The expected value of the random variable in the system (22), (23) is where and .

Proof. The solution of the ordinary differential equation (ODE)

that satisfies the initial condition

is

Moreover, we have

The expected value of

is then obtained by computing

□

Remark 4. In the special case when , so that , we have

In the next section, an optimal control problem for the two-dimensional stochastic process will be examined.

3. A Homing Problem

In this section, we suppose that the term

in Equation (1) is replaced by the function

, where

is a non-negative function and

is a control variable that is assumed to be a continuous function.

Let

The random variable

is called a

first-passage time in probability theory.

Our aim is to find the control that minimizes the expected value of the cost functional

where

,

, and

is the final cost function. This type of stochastic control problem, in which a stochastic process is controlled until a given event occurs, is known as a homing problem; see Whittle [15]. The author of the current paper has written several articles on homing problems; see, for example, ref. [16]. Other papers on this subject are refs. [11,17].
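To make the structure of a homing problem concrete, the following sketch estimates by Monte Carlo simulation the expected cost of a given feedback control applied until a stopping event occurs. It assumes a cost of the usual homing form, that is, a running cost (q/2) u² + λ integrated up to the first-passage time, plus a final cost. The names `step`, `stopped`, `control` and `final_cost` are hypothetical callables supplied by the user, and the truncation time `t_max` is a purely numerical safeguard.

```python
import math
import random


def expected_homing_cost(step, stopped, control, final_cost, state0,
                         q, lam, dt=1e-3, n_paths=200, t_max=100.0, seed=0):
    """Monte Carlo estimate of the expected cost of a feedback control.

    step(state, u, dt, dB) -> next state (one discretization step)
    stopped(state)         -> True once the stopping event has occurred
    control(state)         -> value u of the control in the current state
    final_cost(state)      -> terminal cost paid when the path stops"""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(n_paths):
        state, t, cost = state0, 0.0, 0.0
        while not stopped(state) and t < t_max:
            u = control(state)
            cost += (0.5 * q * u * u + lam) * dt   # running cost over [t, t + dt]
            state = step(state, u, dt, rng.gauss(0.0, math.sqrt(dt)))
            t += dt
        total += cost + final_cost(state)
    return total / n_paths
```

Comparing the estimates obtained with two different `control` functions gives a rough numerical way to compare policies when no closed-form value function is available.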

Many papers have been published on the optimal control of queuing systems. Sometimes the authors assume that it is possible to control the service rate and/or the arrival rate of customers into the system.

In ref. [18], Laxmi and Jyothsna considered a discrete-time queue. Their objective was to minimize costs by optimizing the service rates. To do so, they used swarm optimization. In Tian et al. [19], the authors also treated a problem for a queuing system with varying service rates. Other papers in which the authors assumed that the service rate could be controlled are refs. [20,21,22,23,24]. In Wu et al. [25], the aim was rather to control customer arrivals. The main difference between these papers and the work presented here is that in our case the final time is a random variable. See Lefebvre and Yaghoubi [26] for other references on the optimal control of queuing systems.

To find the optimal control

, we can try using dynamic programming, which enables us to express

in terms of the value function

The function

gives the smallest expected cost (or largest expected reward, if the cost is negative), starting from

and

.

Using the results in ref. [15], we can state the following proposition.

Proposition 3. The value function satisfies the dynamic programming equation

where , etc. The equation is valid for . We have the boundary condition if . Moreover, the optimal control is given by

If we substitute the expression for

into the dynamic programming equation, we find that to obtain the value function, we must solve the second-order non-linear partial differential equation (PDE)

Assume now that instead of the service rate, the optimizer can control the arrival rate of the process, so that the two-dimensional process

is defined by the system of stochastic differential equations

Proceeding as above, we obtain the following corollary.

Corollary 2. In the case of the controlled process defined in Equations (36) and (37), the optimal control is given by Furthermore, the value function satisfies the PDE

Finally, in some cases, Equation (39) (as well as Equation (35)) can be linearized.

Proposition 4. Suppose that there exists a positive constant α such that Then the function satisfies the linear second-order PDE The boundary condition is if .

Remark 5. The linear PDE in Equation (42) is in fact the Kolmogorov backward equation satisfied by the moment-generating function of the random variable : with , for the uncontrolled process obtained by setting in Equation (37). Moreover, the boundary condition is the appropriate one, and we assume that for the uncontrolled process.

In the next section, explicit solutions to particular homing problems will be presented.

4. Explicit Solutions

Problem I. The first particular homing problem that we consider is the one for which the function

in Equation (1) is equal to

, where

c is a positive constant, and the term

is replaced by

, with

. Moreover, we take

where

, and we define the first-passage time

in which

is a geometric Brownian motion that satisfies the stochastic differential Equation (23).

We assume that

in Equation (31) and that the final cost is

To obtain the value function, we must solve the PDE

subject to the boundary conditions

if

, for

.

Now, based on the boundary conditions, we look for a solution of the form

This is an application of the method of similarity solutions. We find that Equation (47) is transformed into the non-linear second-order ODE

Next,

is a positive constant. We set

We find that, if

, then the function

satisfies the simple linear ODE

whose general solution is

The constants

and

are such that the boundary conditions

if

, for

, are satisfied. We find that

for

.

From the function

, we obtain the value function

, and hence the optimal control (see Equation (34))

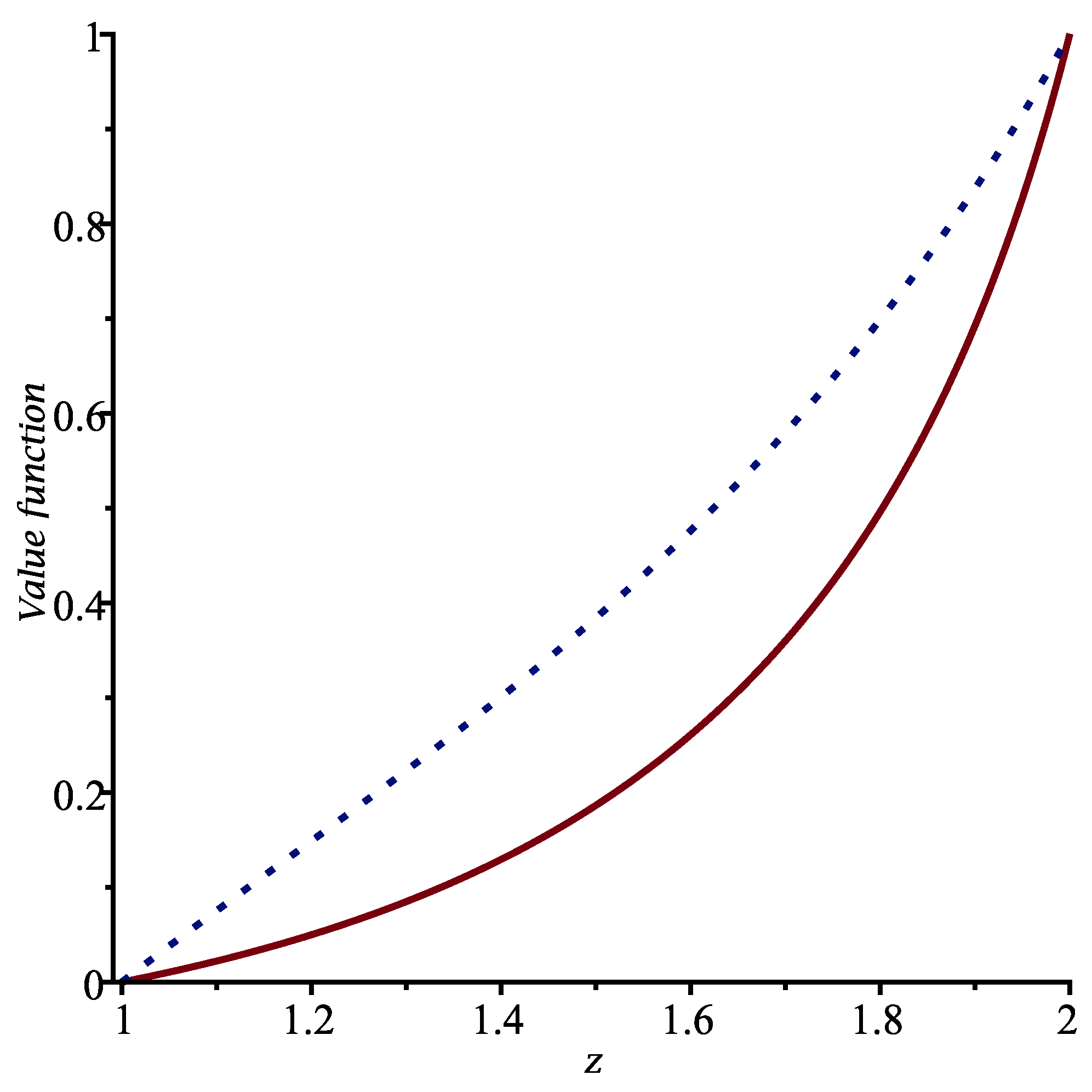

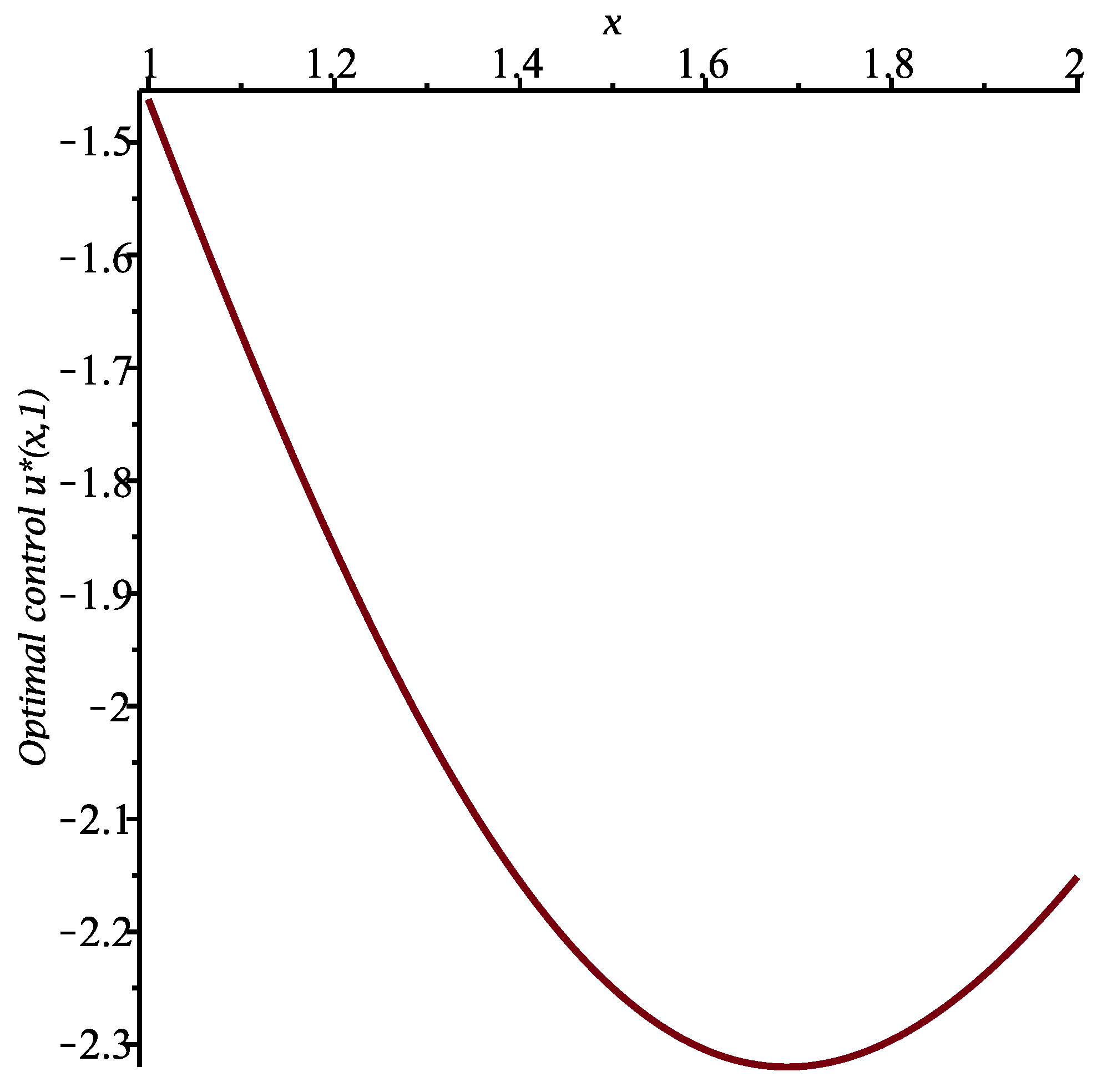

To illustrate the results, let us set

,

,

,

and

. The constant

is equal to 1, and we calculate

for

. The function

is presented in

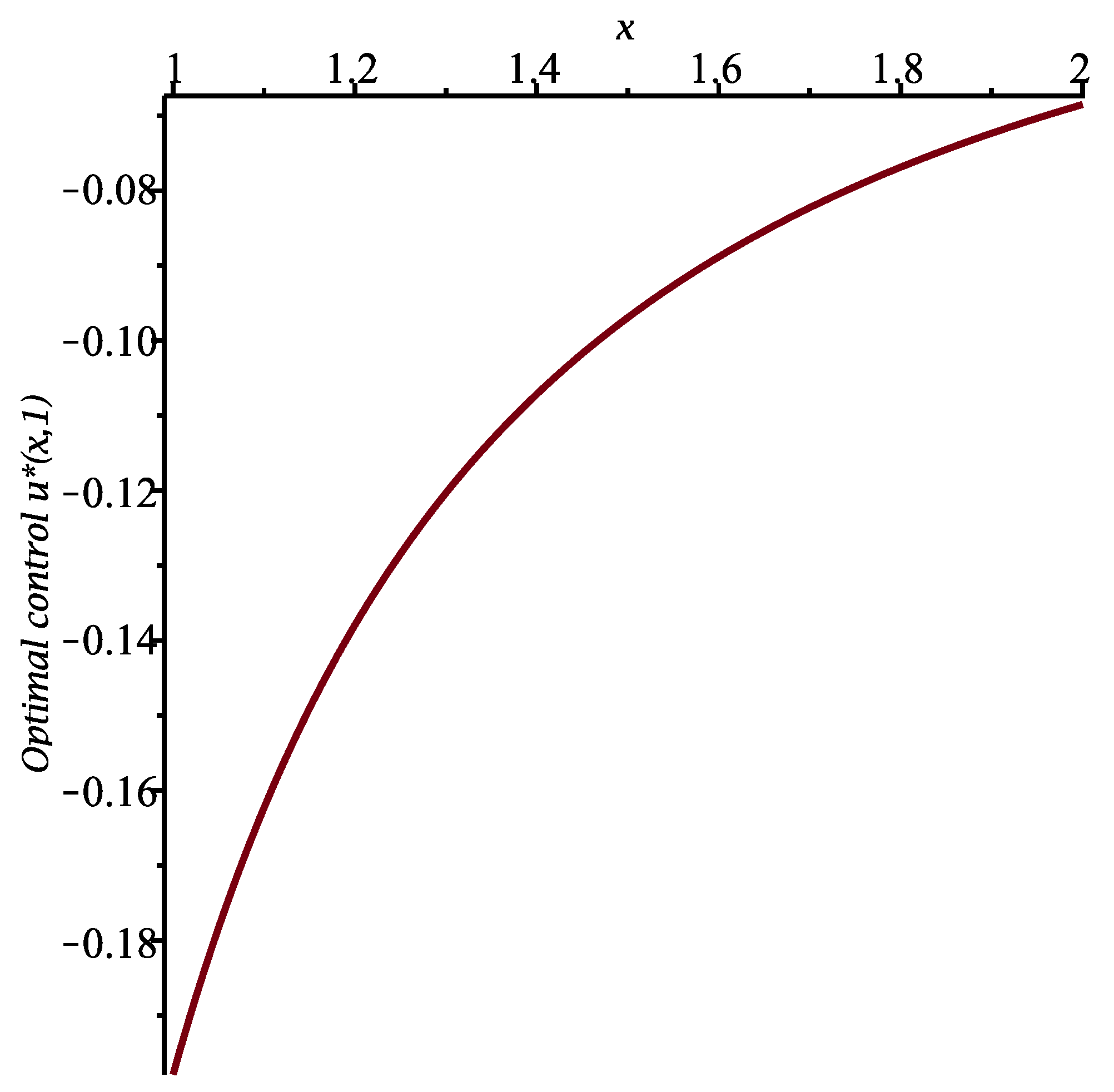

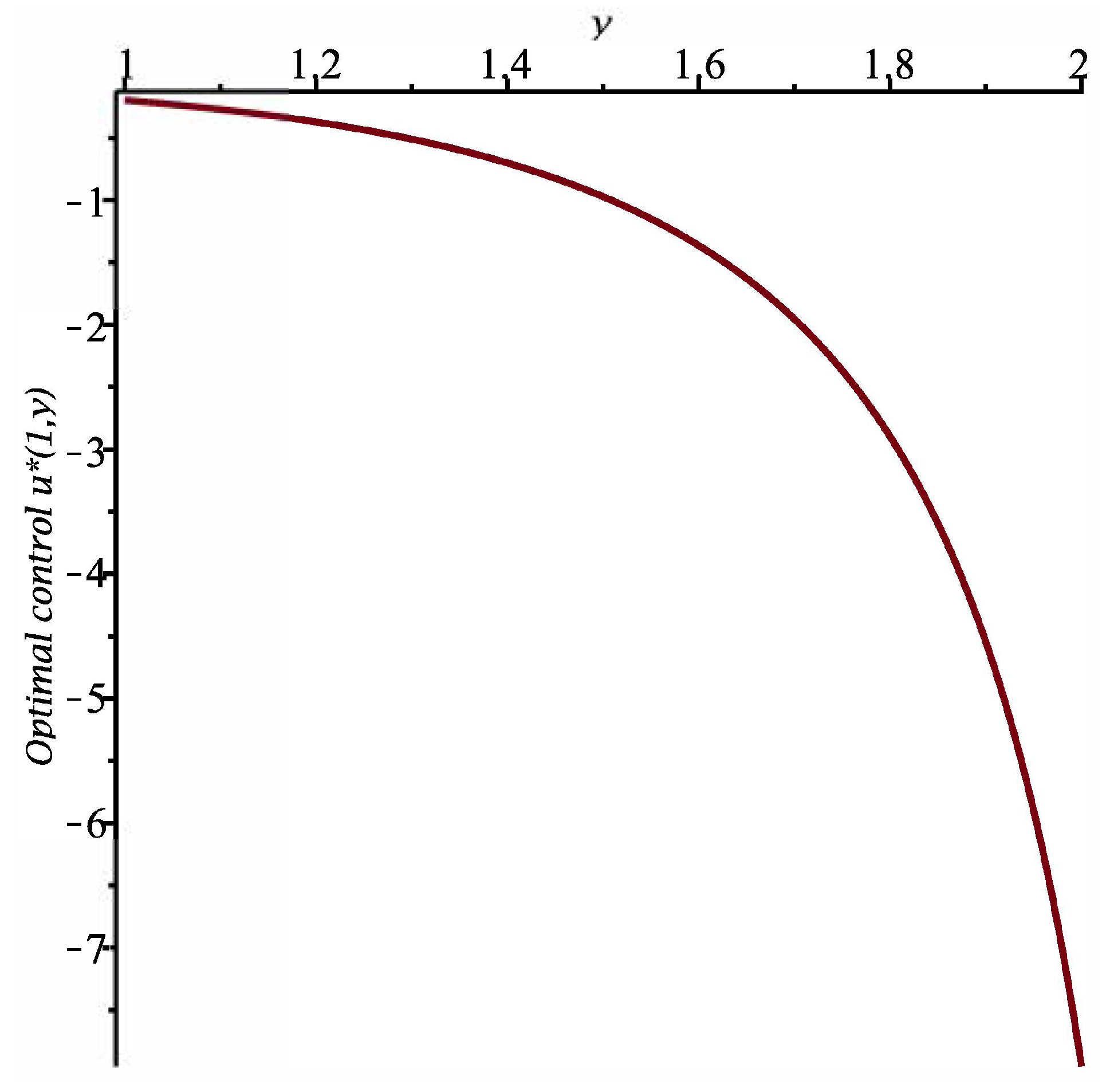

Figure 1. Moreover, the optimal control

for

and

for

are shown in Figure 2 and Figure 3, respectively.

Remark 6. Let denote the expected cost if one uses no control. We have This function satisfies the partial differential equation The boundary conditions are if , for . Let us look for a solution of the form . We find that we must solve the ODE With (as above), the general solution of Equation (59) is where is an exponential integral function. With the same choices for the various constants as above, the particular solution that satisfies the boundary conditions and can be expressed as follows: with . This function is displayed in Figure 1. We clearly see the improvement in the expected cost when one uses the optimal control rather than no control at all.

Problem II. We now consider the first-passage time

and we suppose that the system (36), (37) is

where

,

and

. Thus,

is a controlled geometric Brownian motion. Moreover, the cost functional is

where

and the final cost is given by

The value function satisfies the PDE

subject to

if

, for

. We find that the function

is a solution of the ODE

The boundary conditions are

if

, for

.

For the method of similarity solutions to work, the term must be expressed in terms of the similarity variable z. We will consider two cases.

Case 1. Let

, where

. Then, since

, Equation (68) becomes

Next, let

where

The function

satisfies the linear ODE

The general solution of the above equation involves the special function known as the Whittaker function. Let us consider a special case: we set

,

,

,

and

. Equation (72) then reduces to

whose general solution is

The solution that satisfies the boundary conditions

and

(since

) is

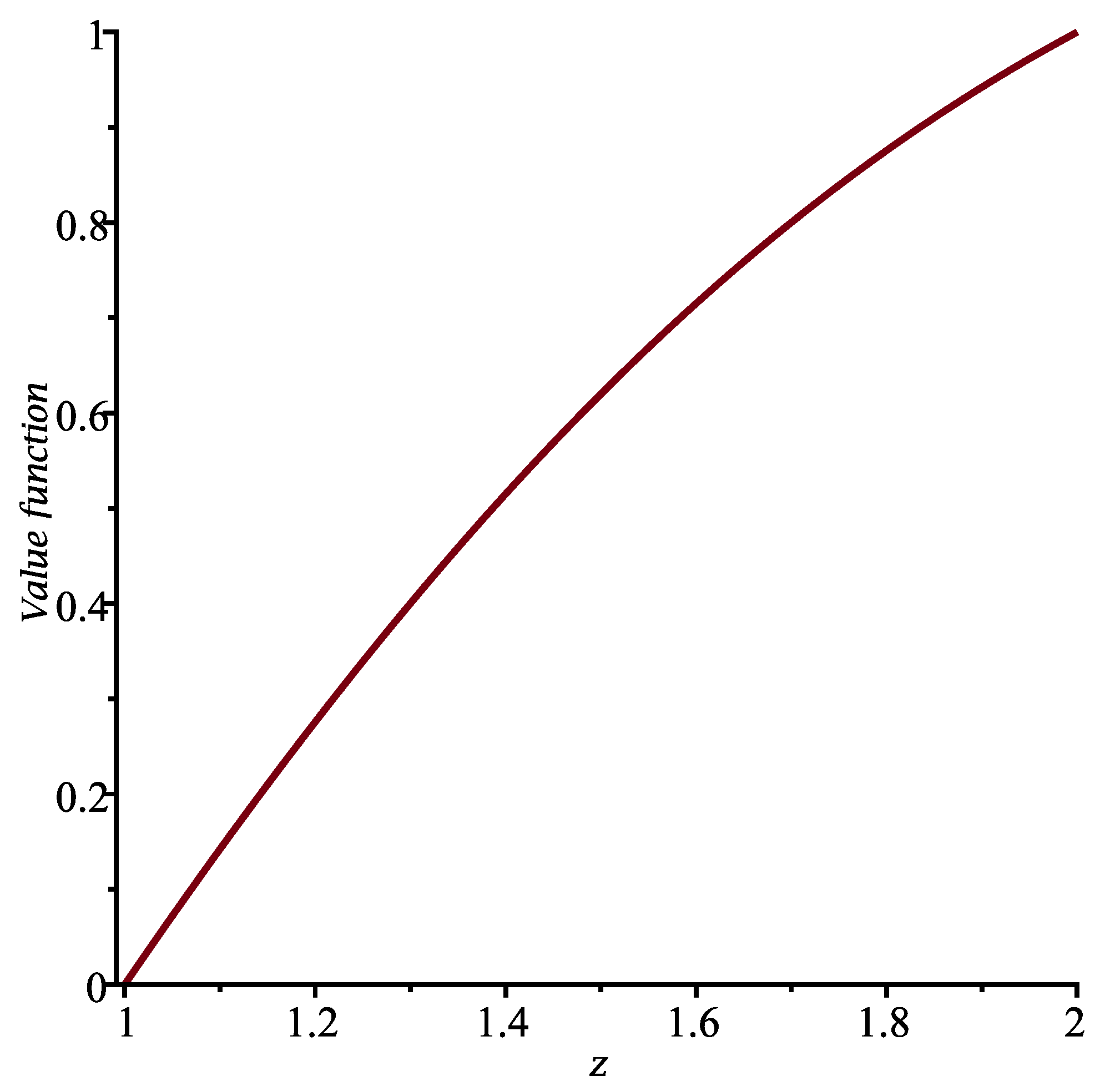

We present the function

in

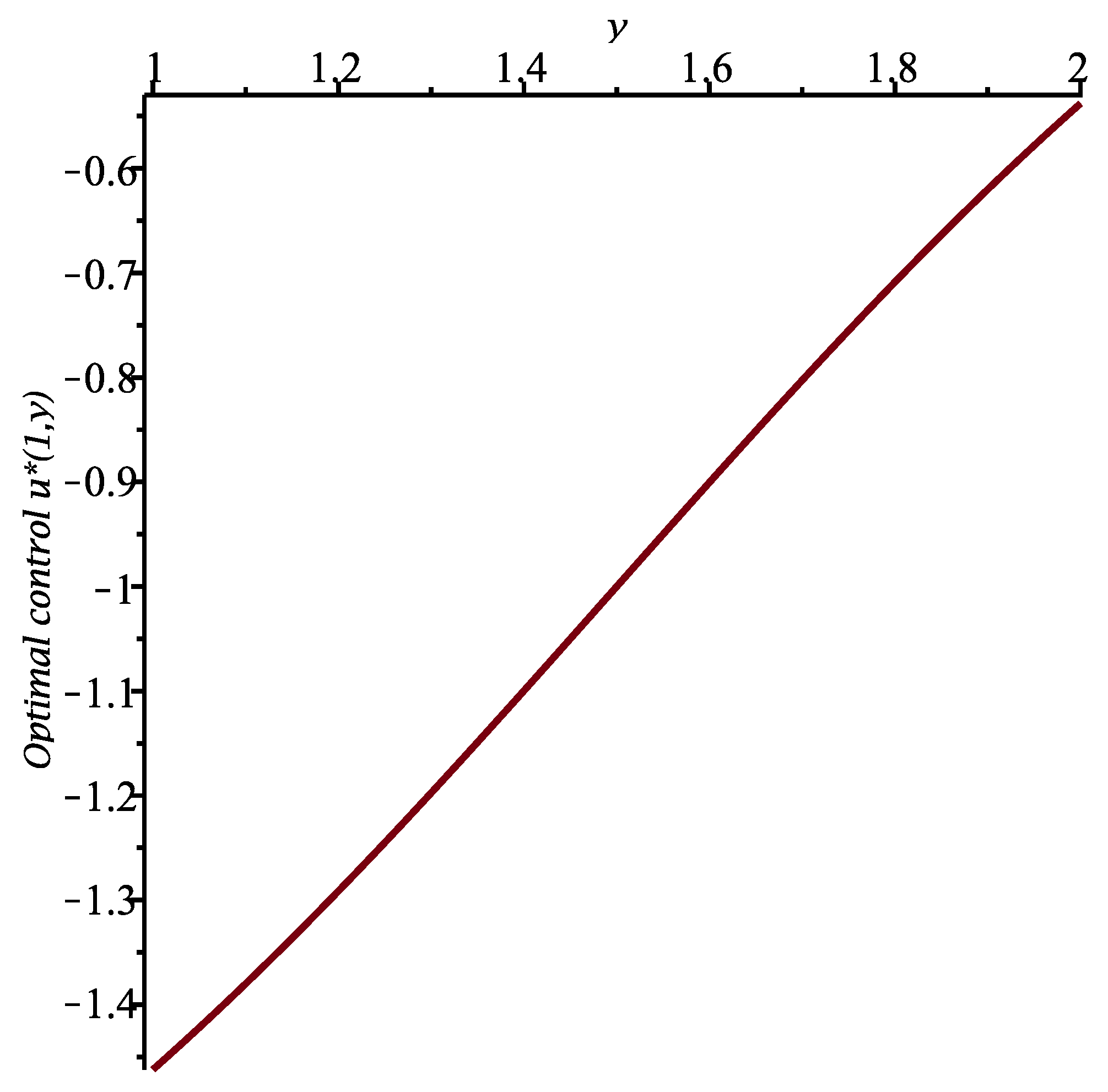

Figure 4. Furthermore, the optimal control is

See Figure 5 and Figure 6.

Case 2. Suppose that

, where

. Under the same assumptions as in Case 1, the ODE satisfied by the function

is now

The particular solution that satisfies the boundary conditions

, for

, is

The function

is displayed in Figure 7 when the constants are the same as in Case 1.

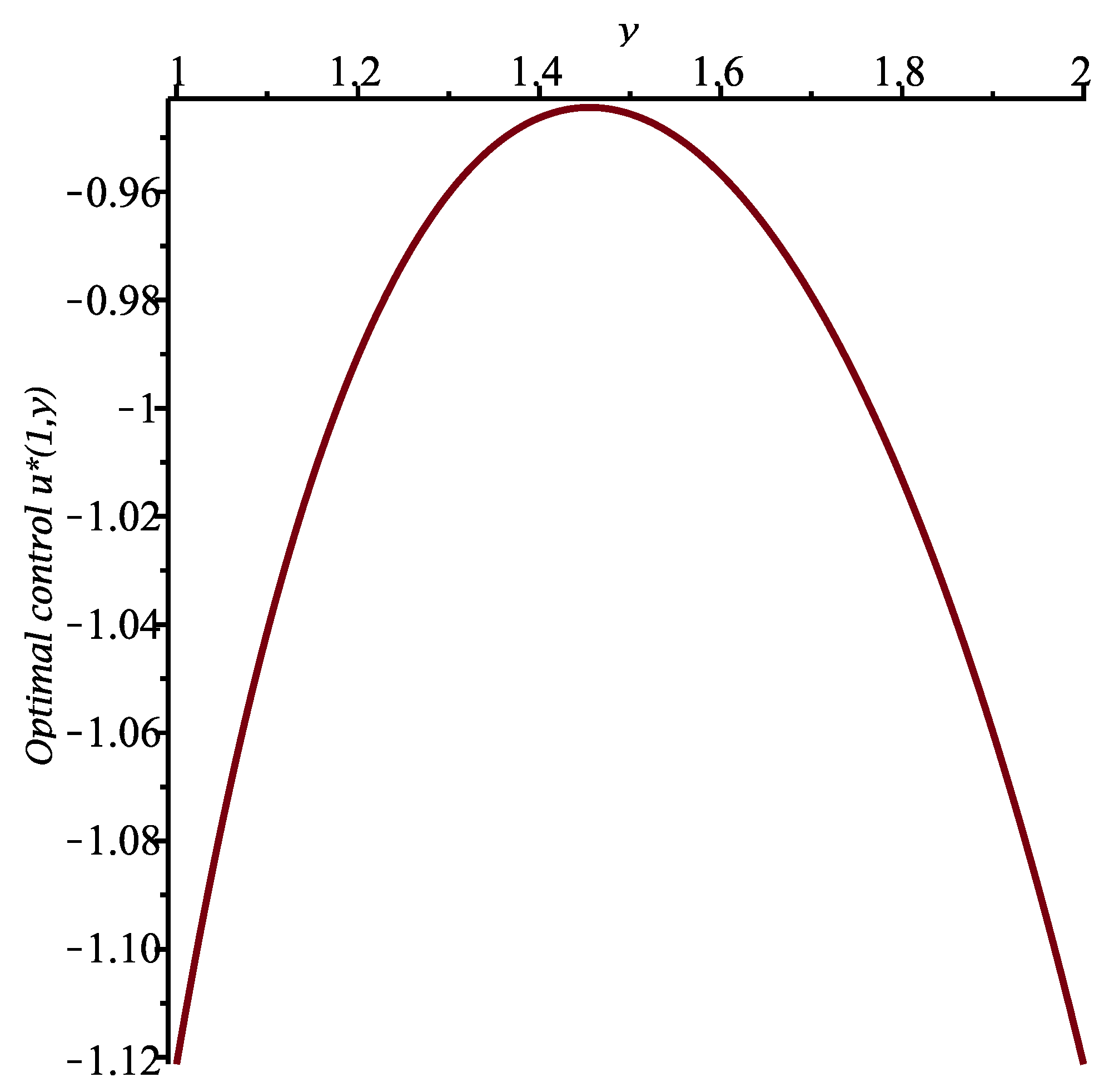

The optimal control is (see Equation (38))

As in Case 1, the functions

and

are shown in Figure 8 and Figure 9, respectively.

5. Conclusions

In this paper, a queuing model in which customers arrive (approximately) according to a degenerate two-dimensional diffusion process was studied. The model is such that, in the absence of service, the number of customers in the system is strictly increasing. Other authors have used diffusion processes as an approximation for the arrival of customers, but only in one dimension, for example a reflected Brownian motion.

Like any model, the one that we propose is based on simplifying assumptions. We believe it could be used successfully in certain applications, while traditional models might yield better results in others. The aim was to propose an alternative model that could prove useful in a variety of situations.

We wrote that the arrival process is approximated by a diffusion process. It should be emphasized that assuming that arrivals constitute a Poisson process, as in the case of the classic model, is also only an approximation of reality. Using an exponential distribution for the time between successive customer arrivals in the system is a simplifying assumption. In some applications, approximating arrivals using a Poisson process may be more realistic than using a diffusion process, while in other applications, the opposite is true.

In a special case, we were able to derive the distribution of the number of customers in the system at time t. It would be interesting to obtain this distribution in other cases, or at least calculate the expected value of , as we did when is a geometric Brownian motion.

In Section 3, a stochastic control problem of the homing type was formulated for the queuing model. This is the first time that a homing problem has been studied for a queuing model such as the one proposed in the paper. We gave the non-linear partial differential equation satisfied by the value function, and we saw that it is sometimes possible to linearize this equation. Finally, several problems were solved explicitly and exactly by making use of the method of similarity solutions. When this method does not apply, we could use numerical techniques to find the value function and hence the optimal control for any particular problem.