1. Introduction

The concept of entropy originates in information theory and statistical mechanics as a quantitative measure of uncertainty or information content in a system. Given a discrete probability distribution $P = \{p_1, p_2, \dots, p_n\}$, the Shannon entropy [1] is defined as:

$$H(P) = -\sum_{i=1}^{n} p_i \log p_i, \tag{1}$$

where $p_i \geq 0$ and $\sum_{i=1}^{n} p_i = 1$. Entropy reaches its maximum, $\log n$, when all outcomes are equally likely ($p_i = 1/n$ for all $i$), and its minimum, zero, when one outcome has probability equal to 1 (i.e., full determinism). In the context of time series analysis, entropy-based methods quantify the degree of regularity, complexity, or predictability of a temporal signal $\mathbf{x} = \{x_0, x_1, \dots, x_{N-1}\}$. A low entropy value indicates highly regular or deterministic dynamics, while high entropy suggests complex, stochastic, or chaotic behavior. Most entropy methods compute their value with the Shannon expression above, but there are many variations where the estimator uses the Rényi entropy [2] (Equation (2)) or the Tsallis entropy [3] (Equation (3)):

$$H_\alpha(P) = \frac{1}{1-\alpha} \log \sum_{i=1}^{n} p_i^{\alpha}, \quad \alpha > 0,\ \alpha \neq 1, \tag{2}$$

$$S_q(P) = \frac{1}{q-1} \left( 1 - \sum_{i=1}^{n} p_i^{q} \right), \quad q \neq 1. \tag{3}$$

Both expressions recover the Shannon entropy in the limit $\alpha \to 1$ or $q \to 1$ (for Tsallis, with the natural logarithm). These frameworks underpin the mathematical treatment of entropy as a measure of uncertainty, diversity, or disorder, concepts that Slope Entropy adapts to the geometric and temporal features of real-world time series.
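A quick numerical comparison of the three expressions may help fix ideas. The Python sketch below is only illustrative: it assumes natural logarithms and probability vectors given as plain lists, and the helpers `shannon`, `renyi` and `tsallis` are hypothetical names, not part of any library. It checks that the uniform distribution attains the maximum $\log n$, that full determinism gives zero, and that Equations (2) and (3) approach the Shannon value as their order tends to 1.

```python
import math


def shannon(p):
    """Shannon entropy (natural log), with the convention 0 log 0 = 0."""
    return -sum(pi * math.log(pi) for pi in p if pi > 0)


def renyi(p, alpha):
    """Rényi entropy of order alpha; alpha = 1 falls back to Shannon."""
    if alpha == 1:
        return shannon(p)
    return math.log(sum(pi ** alpha for pi in p if pi > 0)) / (1 - alpha)


def tsallis(p, q):
    """Tsallis entropy of index q; q = 1 falls back to Shannon."""
    if q == 1:
        return shannon(p)
    return (1 - sum(pi ** q for pi in p if pi > 0)) / (q - 1)


uniform = [0.25, 0.25, 0.25, 0.25]        # all outcomes equally likely
skewed = [0.7, 0.1, 0.1, 0.1]             # one outcome dominates
deterministic = [1.0, 0.0, 0.0, 0.0]      # full determinism

for name, p in [("uniform", uniform), ("skewed", skewed)]:
    print(name, round(shannon(p), 4), round(renyi(p, 2), 4), round(tsallis(p, 2), 4))
```

For the uniform case all three measures reach their maximum for the chosen order; the skewed distribution gives lower values.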

The patterns that entropy-based methods identify correspond to recurring motifs or dynamical signatures within the time series. Mathematically, entropy quantifies the dispersion or uniformity of the pattern distribution in the reconstructed state space. Let $\Pi$ denote the set of all admissible patterns, and let $p(\pi)$ be the empirical probability of observing pattern $\pi \in \Pi$. The entropy measures the heterogeneity of the distribution $\{p(\pi)\}_{\pi \in \Pi}$:

$$H = -\sum_{\pi \in \Pi} p(\pi) \log p(\pi). \tag{4}$$

Thus, entropy-based analysis detects whether the system's evolution is dominated by a few deterministic configurations or explores the available pattern space uniformly, the latter being a hallmark of stochastic or chaotic dynamics.
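The empirical pattern probabilities described above are simply a normalized histogram of the observed patterns. As a minimal sketch (natural logarithm assumed; the function `pattern_entropy` and the pattern labels are hypothetical placeholders for whatever symbols a given method extracts), a sequence dominated by a few configurations yields a lower entropy than one that visits the pattern space uniformly.

```python
import math
from collections import Counter


def pattern_entropy(patterns):
    """Shannon entropy of the empirical distribution of the observed patterns."""
    counts = Counter(patterns)                 # histogram of patterns
    total = sum(counts.values())               # number of observed patterns
    return -sum((c / total) * math.log(c / total) for c in counts.values())


# Hypothetical pattern sequences; the labels are arbitrary placeholders.
dominated = ["up", "up", "up", "down"] * 25    # one configuration dominates
uniform = ["up", "down", "flat", "peak"] * 25  # pattern space explored uniformly

print(pattern_entropy(dominated), pattern_entropy(uniform))  # approx. 0.562 and 1.386
```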

Entropy analysis of time series has played a crucial role in many scientific domains, such as economics and finance [4,5,6,7], biology and medicine [8,9,10,11] and engineering [12,13,14,15], among others. One of its main applications is as a feature for signal classification [16,17,18,19,20], since entropy provides valuable insights into the predictability, dynamics and complexity of the studied signals. Many different entropy estimation methods exist nowadays, but the recently proposed Slope Entropy (SlpEn) shows promise in signal classification, since it captures the intricacies of the patterns found in a signal by taking advantage of both the amplitude of the analysed time series and the symbolic representation of its intrinsic patterns.

SlpEn was introduced in 2019 by Cuesta-Frau [21]. It uses the slope between two consecutive data samples to estimate entropy in a Shannon entropy fashion. Using two thresholds, $\gamma$ and $\delta$, as well as the subsequence length m, it transforms the original time series into a symbolic representation of data subsequences (patterns) according to the differences between consecutive time series samples. The specifics are described in Section 2.2. These three parameters, together with the use of slopes, make SlpEn more customizable and refined when analyzing time series, although it requires more input parameters than other commonly used entropy estimators, such as Permutation Entropy (PE) [22] (see Section 2.3 for further details).

The original SlpEn paper gives some guidelines for selecting the values of both $\gamma$ and $\delta$, showing that the method is well behaved under many different conditions. SlpEn has been successfully applied in other works, such as [23,24,25], achieving in most cases better results than other commonly used entropy estimators such as Permutation, Approximate or Sample Entropy [26]. In addition, there has been research on improving SlpEn's performance by removing $\delta$ from its parameters [27] or by taking an asymmetric approach with respect to the values of $\gamma$ [28]. SlpEn has also been successfully applied in combination with other entropy calculation methods [29], signal decomposition methods such as VMD or CEEMDAN [30,31], optimization algorithms for choosing the best SlpEn parameters, such as the snake optimizer [32], and different time scales [33].

In this paper, we aim to further improve the classification capabilities of SlpEn by combining it with downsampling techniques: Trace Segmentation (TS), a non-uniform downsampling scheme [34,35,36], and uniform downsampling (UDS). TS (detailed in Section 2.4) reduces the length of the input time series by choosing the samples of the original series where the greatest variation occurs, thus enhancing the peaks of the signal at the cost of discarding less significant values. TS has already been successfully applied in combination with Sample Entropy [37], with advantages that include shorter computation times and better accuracy in most cases. We hypothesize that these benefits extend to any entropy calculation method, not only Sample Entropy. Moreover, in the case of SlpEn, we expect the improvement to be larger than for other entropy estimators: SlpEn bases its calculation on the differences between samples, and TS retains the samples where the largest differences occur, thus increasing the differences among the retained samples.

Additionally, we implemented UDS (Section 2.5), which downsamples the series uniformly, as its name suggests, to the desired number of samples. Since non-uniform downsampling has already been applied successfully to entropy-based classification, we try to reproduce similar results with the uniform method, which is closely related to using temporal scales in entropy calculation [38]: computing entropy with a time scale of 2 is equivalent to downsampling to 50%, with a time scale of 3, to downsampling to 33%, and so on.
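The correspondence between time scales and uniform downsampling can be checked directly. The sketch below assumes, as our own interpretation, that the time scale is the embedding delay used when building the subsequences; the helper `embed` is hypothetical. It shows that the subsequences built with a delay of 2 are exactly those built from consecutive samples of the two 50% decimated phases of the series, `x[0::2]` and `x[1::2]`; each phase on its own holds roughly half of them.

```python
from collections import Counter


def embed(x, m, tau):
    """Overlapping subsequences of length m whose samples are tau positions apart."""
    return [tuple(x[j + k * tau] for k in range(m)) for j in range(len(x) - (m - 1) * tau)]


x = [4.0, 1.0, 3.0, 8.0, 6.0, 2.0, 7.0, 5.0, 9.0, 0.0, 3.5]
m, tau = 3, 2

delayed = Counter(embed(x, m, tau))
# Union of the subsequences of every decimated phase x[r::tau], embedded with unit delay.
decimated = sum((Counter(embed(x[r::tau], m, 1)) for r in range(tau)), Counter())
print(delayed == decimated)  # True
```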

Our main hypothesis for applying downsampling is that most of the features that are key in classification tasks lie in frequency bands well below the sampling frequency, so that oversampled records add noise and confounding data that hinder classification accuracy. To test it, we have chosen a comprehensive benchmark comprising seven datasets from different domains. For further details on each of the datasets in the benchmark, refer to Section 2.1. In EEG recordings, such as those in the Bonn EEG and Bern-Barcelona datasets used in the present work, the signal is commonly divided into five main frequency bands: Delta, Theta, Alpha, Beta and Gamma, from which components can be extracted to perform classification tasks. Four of these bands (Delta, Theta, Alpha and Beta) lie below 30 Hz, whereas the sampling frequencies of Bonn EEG and Bern-Barcelona are 173.61 Hz and 512 Hz, respectively, well above 30 Hz and even above 60 Hz, which would be the minimal sampling frequency needed to capture all the information in these bands without loss, according to the Nyquist-Shannon sampling theorem [39,40]. Using only these low-frequency bands, classification tasks for different applications, such as epileptic seizure detection, can be performed with relative ease, as demonstrated in [41,42,43,44]. Something similar happens with the Fantasia RR database, which is based on ECG recordings. The database is sampled at 250 Hz, but the main HRV frequency bands are the Low-Frequency (LF) band (0.04–0.15 Hz) and the High-Frequency (HF) band (0.15–0.4 Hz) [45,46], with some references extending the range up to approximately 0.9 Hz when respiration signals are considered [47]. The same applies to the Paroxysmal Atrial Fibrillation (PAF) prediction dataset, also based on ECG recordings: its sampling rate is 128 Hz, more than four times the minimum needed, which would be around 30 Hz if the ECG signal is band-pass filtered between 5 Hz and 15 Hz [48,49].
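The oversampling argument reduces to simple arithmetic: by the Nyquist-Shannon theorem, a band limited to $f_{\max}$ needs a sampling rate of at least $2f_{\max}$, so at least $100 \cdot 2f_{\max}/f_s$ percent of the samples must be kept. The sketch below applies this to the figures quoted above (the helper `min_keep_percentage` is hypothetical). It is only a rough bound under idealized assumptions: it ignores the transition band of real anti-aliasing filters and, since neither UDS nor TS low-pass filters the signal, it does not guarantee the absence of aliasing. For Fantasia, the 0.4 Hz upper edge of the HF band is assumed; using 0.9 Hz would more than double the required percentage.

```python
def min_keep_percentage(fs_hz, f_max_hz):
    """Smallest share of samples (in %) whose rate still satisfies fs' >= 2 * f_max."""
    return 100.0 * (2.0 * f_max_hz) / fs_hz


# (sampling frequency, highest frequency of interest), as quoted in the text above.
bands = {
    "Bonn EEG (Delta-Beta, below 30 Hz)": (173.61, 30.0),
    "Bern-Barcelona (Delta-Beta, below 30 Hz)": (512.0, 30.0),
    "Fantasia RR (HF band, up to 0.4 Hz)": (250.0, 0.4),
    "PAF (5-15 Hz band-pass)": (128.0, 15.0),
}

for name, (fs, f_max) in bands.items():
    pct = min_keep_percentage(fs, f_max)
    print(f"{name}: oversampling x{100.0 / pct:.2f}, keep at least {pct:.2f}% of samples")
```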

Outside the medical field, the benchmark includes three more datasets. First, the House Twenty database contains electricity consumption records sampled at 8-s intervals, that is, at a frequency of 0.125 Hz. Next, the Worms Two-Class dataset contains recordings of the movements of mutant and non-mutant worms, sampled at 30 Hz. Finally, the Ford A dataset contains measurements of engine noise. To the best of our knowledge, there is no documentation of how the noise data were captured or of their sampling rate; the data are only described as “engine noise”, and the dataset was used in a competition at the 2008 IEEE World Congress on Computational Intelligence. Note that, for these datasets, we do not know which frequency components are relevant for classification, so we do not know the ideal sampling rate either. Regardless, we are interested in whether what we expect to hold for medical EEG and ECG datasets also holds for non-medical ones.

We applied TS or UDS as a preprocessing step before computing entropy. Since these techniques can shorten a sequence to any desired length, we obtained sequences downsampled from … to … in … steps. Then, we computed SlpEn on the downsampled sequences and compared the resulting classification accuracy with that achieved without downsampling, that is, on the original time series without applying TS or UDS. This comprehensive experiment makes it possible to determine whether a specific downsampling percentage is more appropriate for improving classification accuracy. In addition, the same experiment was performed with one of the most commonly used entropy calculation methods: Permutation Entropy. Comparing the results achieved by the two entropies shows how each of them, as well as TS and UDS, behaves under different conditions. The experiments are explained in more detail in Section 3.1. They are carried out on the benchmark mentioned previously, whose varied datasets make it possible to evaluate the effectiveness of each method.

The main contribution of this paper is to show how downsampling techniques can be applied effectively in combination not only with SlpEn but with entropy calculation methods in general, in order to improve classification accuracy. In addition, in most cases, applying downsampling before calculating the entropy of a time series leads to faster computation: computing the downsampled series and then its entropy is much cheaper, in terms of computational cost, than computing the entropy of the original non-downsampled sequence. To sum up, downsampling not only improves the results in classification tasks but also makes it possible to obtain them faster.

The paper is organized as follows. Section 2, Materials and Methods, presents a comprehensive review of both the benchmark used in the experiments, examining each individual dataset, and the specifics of the four methods used in the experiments: SlpEn, PE, TS and UDS. Next, Section 3 explains how the experiments have been executed and presents the results, paying attention to how TS and UDS affect the accuracy achieved by both SlpEn and PE. In addition, execution time is also taken into account to show the time savings gained by applying downsampling. Section 4 discusses the results, as well as the implications of using downsampling to enhance the discriminating power of entropy when used as a feature for classification. Finally, the paper concludes in Section 5, where we summarize the main contributions of this paper and highlight potential future research directions that could enhance the capabilities of SlpEn, or other entropy techniques, in different contexts.

2. Materials and Methods

This section presents the benchmark used in the experiments, as well as the methods and techniques applied. The benchmark comprises seven datasets, each from a different background and captured for different purposes, although here all of them are used in classification tasks. All of them are publicly available and have been used in many studies, which makes them well-established representative time series for analysis and allows easy comparison between our results and those of previous studies. In addition, the datasets have been selected to be diverse in their intrinsic characteristics, such as length, number of samples and background, which increases the potential for generalization of the results and mitigates possible bias, both in interpretation and regarding the values of the time series themselves.

Apart from the benchmark, this section also describes the techniques used in the experiments: the entropy calculation methods, Slope Entropy and Permutation Entropy, and the downsampling techniques, Trace Segmentation (TS) and Uniform Downsampling (UDS). We review how both entropies work and how TS and UDS perform their downsampling. Combining TS or UDS with both types of entropy, and then comparing the results for different levels of downsampling, is the core of the experiments, which are presented, along with their results, in Section 3.

2.1. Benchmark

As previously stated, in order to assess both the resilience and the efficacy of the proposed combination of downsampling with SlpEn, it is essential to conduct experiments on diverse datasets that vary in many time series characteristics, such as the proportion of ties, length or regularity. The datasets contained in the benchmark used in the present work are:

Bonn EEG dataset [50,51]. This dataset comprises electroencephalogram (EEG) records of 4097 samples each, with a duration of 23.6 s. The records are categorized into five classes (A, B, C, D, and E), representing different neural activity scenarios. Classes A and B correspond to healthy subjects with eyes open and closed, respectively. Classes C, D, and E correspond to different categories of recordings from epileptic subjects (see further details in [50]). For the experiments in the present paper, we focused only on classes D and E, with 50 records from each class. Class D corresponds to seizure-free periods recorded at the epileptogenic zone, whilst class E contains seizure activity from the hippocampal focus. This dataset has been extensively used in numerous scientific works, such as [10,52,53].

Bern–Barcelona EEG database [54]. This dataset includes both non-focal and focal time series extracted from seizure-free recordings of patients with pharmacoresistant focal-onset epilepsy. The classes have 427 and 433 records, respectively, each record being sampled at 512 Hz and comprising 272 data points. It has been used in other classification studies, including [55,56,57], which reviewed the results achieved using time series from this database.

Fantasia RR database [58]. It contains a carefully selected collection of 40 time series, divided into two groups of 20 records each: one group corresponds to young subjects, whereas the other comprises data from elderly subjects. All subjects were healthy, thus eliminating potential health-related confounding variables. Each monitoring session lasted 120 min, and the sampling frequency was 250 Hz. This database has been used in various studies, including [59,60].

Ford A dataset [61]. It is a collection of data extracted from a specific automotive subsystem. It was created primarily to empirically evaluate the effectiveness of classification schemes on the acoustic characteristics of engine noise. From this dataset, 40 records were selected from each class for analysis. Examples of research where this dataset has been used are [62,63].

House Twenty dataset [64,65]. This dataset consists of time series from 40 different households, recorded as part of the Personalised Retrofit Decision Support Tools for UK Homes using Smart Home Technology (REFIT) project. It includes two classes with 20 recordings each. One class represents overall electricity consumption, while the other represents the specific electricity consumption of washing machines and dryers. This dataset belongs to the UCR archive [66,67].

PAF (Paroxysmal Atrial Fibrillation) prediction dataset [68]. This dataset comprises 5-min recordings from patients diagnosed with PAF. The recordings are classified into two categories: recordings preceding the onset of a PAF episode and recordings distant from any PAF episode. Each category includes 25 files. This dataset is widely known and has been used in many scientific studies [69,70,71].

Worms two-class dataset [72,73]. It comprises time series data from a species of worm. More specifically, the data describe locomotion patterns, which are used in behavioral genetics research. The records belong to two classes: non-mutant and mutant worms. The first class consists of 76 records, while the second has 105 records. All time series have the same length, 1800 samples. Like the other datasets, this one has been used in various scientific works [74,75].

2.2. Slope Entropy

SlpEn [21] is a method for calculating entropy by extracting symbolic subsequences through the application of thresholds to amplitude differences between consecutive samples of a time series. A Shannon entropy-like expression is then applied to the resulting histogram of relative frequencies to obtain the final SlpEn value. The method operates on an input time series $\mathbf{x}$ with input parameters $N$, $m$, $\gamma$ and $\delta$, and computes $\mathrm{SlpEn}(\mathbf{x}, m, \gamma, \delta)$.

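The input time series is considered as an N-length vector containing the samples $x_i$, defined as $\mathbf{x} = \{x_0, x_1, \dots, x_{N-1}\}$, $x_i \in \mathbb{R}$, $0 \leq i < N$. The time series is divided iteratively into overlapping data epochs of length $m$, denoted as $\mathbf{x}_j = \{x_j, x_{j+1}, \dots, x_{j+m-1}\}$, $0 \leq j \leq N-m$, with $j$ incremented after each iteration as $j \leftarrow j + 1$.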

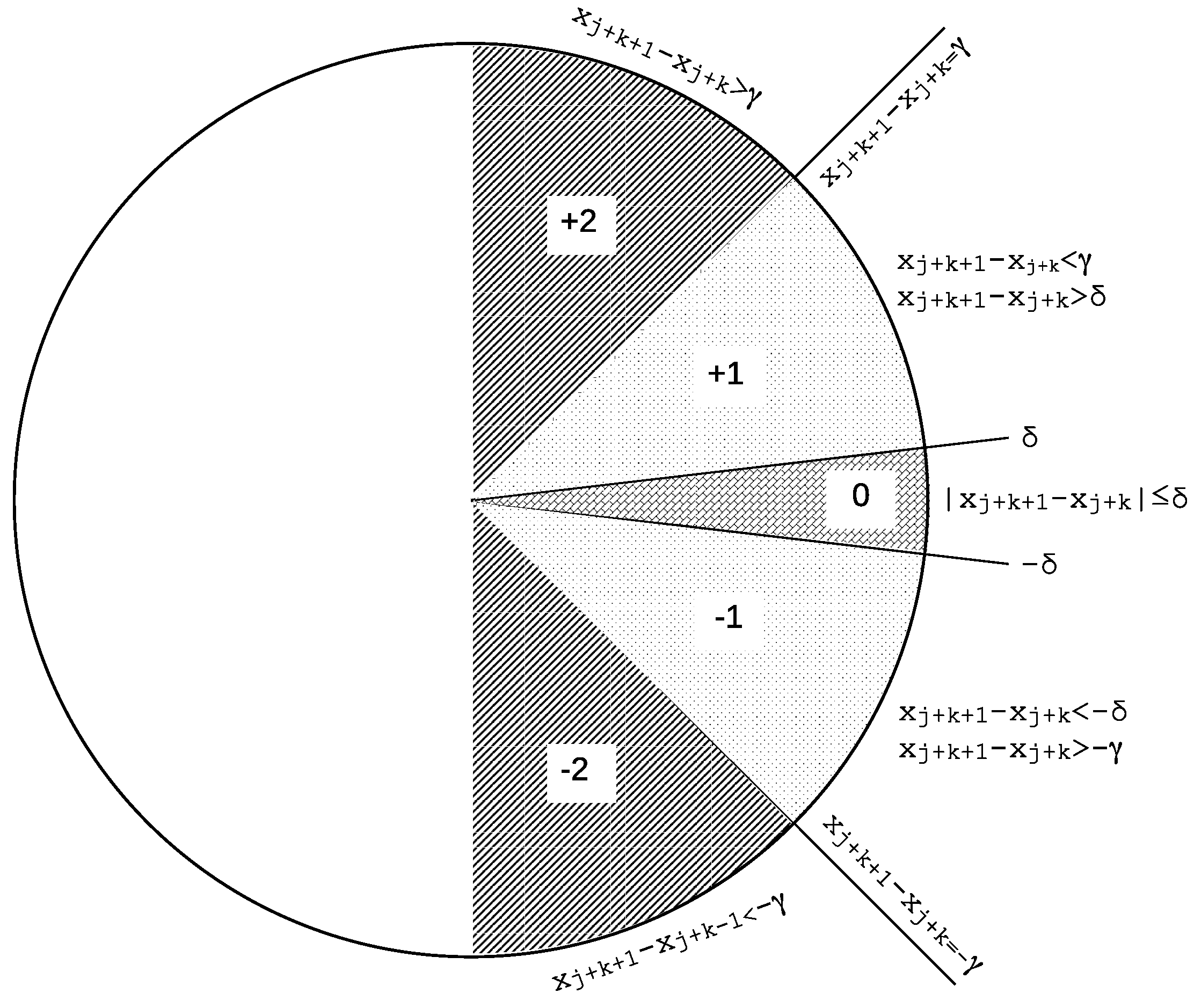

For each subsequence, a symbolic pattern $\mathbf{y}_j = \{y_0, y_1, \dots, y_{m-2}\}$ is generated, where $y_k = f(d_k)$ and $d_k = x_{j+k+1} - x_{j+k}$, $0 \leq k \leq m-2$. The symbols used in the pattern are selected from the set $\{+2, +1, 0, -1, -2\}$, based on a thresholding function $f$. The function relies on two thresholds, $\gamma$ and $\delta$, which can take any positive real value with the restriction $\gamma > \delta$, and on the following rules (which apply for each $k$, $0 \leq k \leq m-2$):

If $d_k > \gamma$, +2 (or just 2) is the symbol to be assigned to the current active symbolic string position, $y_k$.

If $d_k \leq \gamma$ and $d_k > \delta$, +1 (or just 1) is the symbol to be assigned to the current active symbolic string position, $y_k$.

If $|d_k| \leq \delta$, 0 is the symbol to be assigned to the current active symbolic string position, $y_k$. This is the case when, depending on threshold $\delta$, two consecutive values are very similar, which could be the case for ties [76].

If $d_k < -\delta$ and $d_k \geq -\gamma$, −1 is the symbol to be assigned to the current active symbolic string position, $y_k$.

If $d_k < -\gamma$, −2 is the symbol to be assigned to the current active symbolic string position, $y_k$.

Possible ties are handled by the threshold $\delta$ [21], which also helps to avoid incorrect conclusions [77]. On the other hand, $\gamma$ is used to differentiate between low and high gradients between consecutive samples. In the standard configuration described in the seminal SlpEn paper [21], $\delta$ is recommended to take a small value, 0.001, while $\gamma$ is typically a constant value close to 1.0, depending on the normalization of the input time series. These thresholds are applied to both negative and positive gradients just by changing the sign. This symmetric baseline scheme has achieved very good results in classification tasks in many works, such as [24,29,78,79,80].
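The five rules above can be written as a single thresholding function. The following Python sketch is our own illustration (the names `slope_symbol` and `slope_patterns` are hypothetical): it applies the function to every difference between consecutive samples and groups the m − 1 symbols of each subsequence into a pattern, using the standard threshold values quoted above (1.0 for `gamma`, 0.001 for `delta`) in the example.

```python
def slope_symbol(d, gamma, delta):
    """Map a difference d = x[i+1] - x[i] to one of the SlpEn symbols {2, 1, 0, -1, -2}."""
    if d > gamma:
        return 2
    if d > delta:          # delta < d <= gamma
        return 1
    if d >= -delta:        # |d| <= delta (ties or near-ties)
        return 0
    if d >= -gamma:        # -gamma <= d < -delta
        return -1
    return -2              # d < -gamma


def slope_patterns(x, m, gamma, delta):
    """Symbolic pattern (m - 1 symbols) for every overlapping subsequence of length m."""
    symbols = [slope_symbol(b - a, gamma, delta) for a, b in zip(x, x[1:])]
    return [tuple(symbols[j:j + m - 1]) for j in range(len(x) - m + 1)]


x = [0.0, 1.5, 1.5005, 0.7, -1.0]
print(slope_patterns(x, m=3, gamma=1.0, delta=0.001))  # [(2, 0), (0, -1), (-1, -2)]
```

The tiny step from 1.5 to 1.5005 falls within the tie band and is mapped to 0, while the two large drops are split into −1 and −2 by the outer threshold.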

Figure 1 shows the regions defined by the two thresholds described above. This representation follows the standard symmetric SlpEn approach described in its original paper [21] and used in previous scientific studies, as well as in the experiments described in this paper.

After computing all the symbolic patterns, the histogram bin heights are calculated by counting the number of occurrences of each pattern, and they are then normalized by the total number of patterns extracted, $N - m + 1$, so that they sum to 1. These normalized values are referred to as $p_k$. Finally, a Shannon entropy expression is used to obtain the SlpEn value of the time series $\mathbf{x}$ for the input parameters $m$, $\gamma$ and $\delta$:

$$\mathrm{SlpEn}(\mathbf{x}, m, \gamma, \delta) = -\sum_{k} p_k \log p_k. \tag{5}$$

All the steps needed to compute SlpEn are shown in Algorithm 1. Note that, unlike the basic algorithm presented in [21], this version is optimized so that the symbols of the previous subsequence are reused in the current one, instead of computing all of them each time.

| Algorithm 1 Slope Entropy (SlpEn) Algorithm |

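- Input:

Time series $\mathbf{x}$, embedded dimension $m$, length $N$, thresholds $\gamma$, $\delta$
- Initialisation:

$\mathrm{SlpEn} \leftarrow 0$, slope pattern counter vector $\mathbf{c} \leftarrow \emptyset$, slope patterns relative frequency vector $\mathbf{p} \leftarrow \emptyset$, list of slope patterns found $\Psi \leftarrow \emptyset$

for $i \leftarrow 0$ to $m-3$ do
  $d \leftarrow x_{i+1} - x_i$
  if $d > \gamma$ then $y_{i+1} \leftarrow +2$ end if; if $\delta < d \leq \gamma$ then $y_{i+1} \leftarrow +1$ end if; if $|d| \leq \delta$ then $y_{i+1} \leftarrow 0$ end if; if $-\gamma \leq d < -\delta$ then $y_{i+1} \leftarrow -1$ end if; if $d < -\gamma$ then $y_{i+1} \leftarrow -2$ end if
end for
for $j \leftarrow 0$ to $N-m$ do
  for $k \leftarrow 0$ to $m-3$ do $y_k \leftarrow y_{k+1}$ end for
  $d \leftarrow x_{j+m-1} - x_{j+m-2}$
  if $d > \gamma$ then $y_{m-2} \leftarrow +2$ end if; if $\delta < d \leq \gamma$ then $y_{m-2} \leftarrow +1$ end if; if $|d| \leq \delta$ then $y_{m-2} \leftarrow 0$ end if; if $-\gamma \leq d < -\delta$ then $y_{m-2} \leftarrow -1$ end if; if $d < -\gamma$ then $y_{m-2} \leftarrow -2$ end if
  found $\leftarrow$ false
  for $l \leftarrow 0$ to $|\Psi|-1$ do
    if $\mathbf{y} = \Psi_l$ then $c_l \leftarrow c_l + 1$; found $\leftarrow$ true; break end if
  end for
  if not found then append $\mathbf{y}$ to $\Psi$; append 1 to $\mathbf{c}$ end if
end for
for $l \leftarrow 0$ to $|\Psi|-1$ do
  $p_l \leftarrow c_l/(N-m+1)$; $\mathrm{SlpEn} \leftarrow \mathrm{SlpEn} - p_l \log p_l$
end for
return $\mathrm{SlpEn}$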

|
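Algorithm 1 can be condensed into a short Python sketch. It is our own illustration rather than the reference implementation: the function name `slope_entropy` is hypothetical, natural logarithms are assumed, and relative frequencies are obtained by dividing each pattern count by the number of extracted patterns, N − m + 1. Instead of shifting a pattern vector, it symbolizes every consecutive difference once and slices the symbol list, which achieves the same reuse of symbols between overlapping subsequences.

```python
import math
from collections import Counter


def slope_entropy(x, m, gamma, delta):
    """Minimal sketch of Slope Entropy (SlpEn) for a sequence x."""
    n = len(x)
    if m < 2 or n < m:
        raise ValueError("need 2 <= m <= len(x)")
    if not 0 <= delta < gamma:
        raise ValueError("need 0 <= delta < gamma")

    def f(d):
        # Thresholding function: five symbols defined by the two thresholds.
        if d > gamma:
            return 2
        if d > delta:
            return 1
        if d >= -delta:
            return 0
        if d >= -gamma:
            return -1
        return -2

    # Every consecutive difference is symbolized once; overlapping
    # subsequences then share these symbols through slicing.
    symbols = [f(b - a) for a, b in zip(x, x[1:])]
    counts = Counter(tuple(symbols[j:j + m - 1]) for j in range(n - m + 1))

    total = n - m + 1  # number of extracted patterns
    return -sum((c / total) * math.log(c / total) for c in counts.values())


print(slope_entropy([0, 2, 0, 2, 0, 2], m=3, gamma=1.0, delta=0.001))  # ln 2, approx. 0.693
```

For the alternating series in the example, only two slope patterns, (2, −2) and (−2, 2), appear, each with probability 0.5, so the result is ln 2; a linear ramp produces a single pattern and therefore zero entropy.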

2.3. Permutation Entropy

PE [22] is an entropy calculation method based on deriving an ordinal symbolic representation from the amplitudes of the input time series and applying the Shannon entropy expression to the relative frequencies of the resulting patterns. It is one of the most popular entropy estimators, along with its many variants, mainly because of its simplicity, low computation time and excellent performance in classification applications [81,82,83,84,85]. In this study, PE has been included for comparison purposes, as well as to show that TS is also suitable for other kinds of entropy, not only SlpEn.

This procedure divides a time series of length $N$ into overlapping subsequences of length $m$, $\mathbf{x}_j = \{x_j, x_{j+1}, \dots, x_{j+m-1}\}$, $0 \leq j \leq N-m$. The samples of each subsequence are then sorted, generally in ascending order.

Sorting the samples in $\mathbf{x}_j$, whose indices are by default ordered as $\{0, 1, \dots, m-1\}$, yields a new symbolic pattern with the index of each sample at its corresponding sorted position. This symbolic vector is represented as $\boldsymbol{\pi}_j = \{\pi_0, \pi_1, \dots, \pi_{m-1}\}$, where $\pi_0$ is the original index of the smallest sample of $\mathbf{x}_j$, $\pi_1$ the index of the next sample in ascending order, and so on. In other words, the samples in $\mathbf{x}_j$ satisfy $x_{j+\pi_0} \leq x_{j+\pi_1} \leq \dots \leq x_{j+\pi_{m-1}}$.

Once all the patterns have been obtained, a histogram is computed with the patterns found as bins and the number of times each has appeared as its height. Their relative frequencies, $p_k$, are then obtained by dividing the number of occurrences of each pattern by the total number of patterns extracted, $N - m + 1$; at most $m!$ different ordinal patterns can appear. Finally, these relative frequencies are used to obtain their Shannon entropy, which in this case corresponds to PE:

$$\mathrm{PE}(\mathbf{x}, m) = -\sum_{k} p_k \log p_k. \tag{6}$$

The basic steps for computing Permutation Entropy are detailed in Algorithm 2.
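A compact Python sketch of the PE computation follows. It is an illustration under stated assumptions, not the implementation used in the experiments: the name `permutation_entropy` is hypothetical, the embedding delay is 1, natural logarithms are used, the entropy is not normalized by log m!, and ties are ranked by order of appearance (Python's `sorted` is stable), a common convention.

```python
import math
from collections import Counter


def permutation_entropy(x, m):
    """Permutation Entropy of sequence x with embedded dimension m (unit delay)."""
    n = len(x)
    if m < 2 or n < m:
        raise ValueError("need 2 <= m <= len(x)")
    # Ordinal pattern: indices of the subsequence samples sorted by ascending value.
    # sorted() is stable, so tied samples keep their original order.
    counts = Counter(
        tuple(sorted(range(m), key=lambda k: x[j + k]))
        for j in range(n - m + 1)
    )
    total = n - m + 1  # number of extracted patterns
    return -sum((c / total) * math.log(c / total) for c in counts.values())


print(permutation_entropy([1, 3, 2, 4, 3, 5], m=3))  # two equiprobable patterns: ln 2
```

In the example, the subsequences alternate between the ordinal patterns (0, 2, 1) and (1, 0, 2), so PE equals ln 2; a monotonic series yields a single pattern and zero entropy.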

2.4. Trace Segmentation

TS is a non-uniform downsampling scheme that samples the input signal at the points where the greatest variation occurs. This technique has been successfully used in other research, such as [76,86,87].

Mathematically, TS works as follows. Given an input time series $\mathbf{x} = \{x_0, x_1, \dots, x_{N-1}\}$, an accumulative derivative $\mathbf{y}$ is obtained as:

$$y_i = y_{i-1} + |x_i - x_{i-1}|, \tag{7}$$

where $0 < i < N$ and $y_0 = 0$. The last point, $y_{N-1}$, corresponds to the maximum value of the accumulative derivative, and the amplitude of the sampling intervals, $\Delta$, can be obtained as $\Delta = y_{N-1}/N'$, where $N'$ is the number of desired output samples, with $N' < N$. Each sampling point of the output signal $\mathbf{z} = \{z_0, z_1, \dots, z_{N'-1}\}$ is given by the minimum index $i$ of $\mathbf{y}$ for which $y_i$ reaches an integer multiple $q$ of $\Delta$:

$$z_q = x_{i_q}, \quad i_q = \min\{\, i : y_i \geq q\Delta \,\}, \tag{8}$$

with $0 \leq q < N'$. The main objective of using TS is to reinforce the presence of peaks in a non-linear way. This may be very beneficial in the case of SlpEn, since SlpEn relies on the differences between samples and TS transforms the input signal to keep only the most prominent patterns. Algorithm 3 shows how to compute TS.

| Algorithm 2 Permutation Entropy (PE) Algorithm |

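- Input:

Time series $\mathbf{x}$, embedded dimension $m$, length $N$
- Initialisation:

$\mathrm{PE} \leftarrow 0$, ordinal pattern counter vector $\mathbf{c} \leftarrow \emptyset$, ordinal patterns relative frequency vector $\mathbf{p} \leftarrow \emptyset$, list of ordinal patterns found $\Psi \leftarrow \emptyset$

for $j \leftarrow 0$ to $N-m$ do
  for $k \leftarrow 0$ to $m-1$ do $\pi_k \leftarrow k$ end for; swapped $\leftarrow$ true
  while swapped do
    swapped $\leftarrow$ false
    for $k \leftarrow 0$ to $m-2$ do
      if $x_{j+\pi_k} > x_{j+\pi_{k+1}}$ then swap $\pi_k$ and $\pi_{k+1}$; swapped $\leftarrow$ true end if
    end for
  end while
  found $\leftarrow$ false
  for $l \leftarrow 0$ to $|\Psi|-1$ do
    if $\boldsymbol{\pi} = \Psi_l$ then $c_l \leftarrow c_l + 1$; found $\leftarrow$ true; break end if
  end for
  if not found then append $\boldsymbol{\pi}$ to $\Psi$; append 1 to $\mathbf{c}$ end if
end for
for $l \leftarrow 0$ to $|\Psi|-1$ do
  $p_l \leftarrow c_l/(N-m+1)$; $\mathrm{PE} \leftarrow \mathrm{PE} - p_l \log p_l$
end for
return $\mathrm{PE}$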

|

| Algorithm 3 Trace Segmentation (TS) Algorithm |

- Input:

Time series $\mathbf{x}$, length $N$, desired number of samples $N'$
- Initialisation:

|
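A NumPy sketch of the two TS steps described above (accumulative derivative, then selection of the first index that reaches each integer multiple of the sampling interval amplitude) may be useful. It is a hedged illustration, and the function name `trace_segmentation` is hypothetical. It uses `numpy.searchsorted`, which on the non-decreasing accumulative derivative returns the first index whose value reaches each threshold. When a single large jump spans several intervals, the same sample is selected more than once; the text does not say how duplicates are treated, so this sketch keeps them. For a constant signal the accumulative derivative is zero and every output sample is the first input sample.

```python
import numpy as np


def trace_segmentation(x, n_out):
    """Keep n_out samples placed uniformly along the accumulative derivative of x."""
    x = np.asarray(x, dtype=float)
    n = x.size
    if not 0 < n_out < n:
        raise ValueError("need 0 < n_out < len(x)")
    # Accumulative derivative: y[0] = 0, y[i] = y[i-1] + |x[i] - x[i-1]|.
    y = np.concatenate(([0.0], np.cumsum(np.abs(np.diff(x)))))
    step = y[-1] / n_out                      # sampling interval amplitude
    thresholds = step * np.arange(n_out)      # q * step for q = 0, ..., n_out - 1
    # First index i with y[i] >= q * step (y is non-decreasing, so binary search works).
    idx = np.searchsorted(y, thresholds, side="left")
    return x[idx], idx


z, idx = trace_segmentation([0, 0, 0, 5, 5, 5, 0, 0], n_out=4)
print(idx, z)  # [0 3 3 6] [0. 5. 5. 0.]
```

In the example, the flat segments contribute nothing to the accumulative derivative, so all retained samples sit at the two jumps, and the sample right after the first jump is selected twice.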

2.5. Uniform Downsampling

UDS is a basic uniform downsampling technique that reduces the number of samples to the desired number by removing elements uniformly. For instance, to remove half of the samples, we remove 1 out of every 2 consecutive samples across the whole series (a downsampling factor of 2). To remove 1/3 of the samples (downsampling to 67% or, in other words, using a downsampling factor of 1.5, since 2/3 of the samples are kept), we remove 1 out of every 3 consecutive samples, always at the same position within each group of three if no overlapping window is used. As an example, to downsample the sequence (3, 9, −7, 0, −34, 5) to 67%, we would remove the pair 3 and 0, the pair 9 and −34, or the pair −7 and 5. When only every $M$th sample is kept, with $M$ a positive integer (as in the 50% example, where $M = 2$), the procedure is commonly known as integer decimation. Equation (9) shows how the elements are chosen in integer decimation:

$$z_n = x_{nM}, \tag{9}$$

where $n = 0, 1, 2, \dots$, so that the inequality $nM \leq N - 1$ is satisfied.

In the uniform downsampling performed in the experiments of this work, we downsample to a desired number of samples, $N'$, which is always smaller than the original sequence length $N$, and express it as a percentage. This makes it fundamentally different from the integer decimation described above, since most percentages do not correspond to keeping every $M$th sample of a time series: the resulting downsampling factors are fractional. It is worth noting that integer decimation is a special case of the UDS we have used and implemented. Equation (10) shows how the indices are chosen in this case:

$$z_n = x_{\lceil (n+1)D \rceil - 1}, \tag{10}$$

where $D = N/N'$ and $0 \leq n < N'$. When $D$ is an integer $M$, Equation (10) keeps every $M$th sample, starting from $x_{M-1}$ instead of $x_0$.

To choose each sample, we use an accumulator, $c$, to which the fraction of samples we want to keep, $N'$, with respect to the series length $N$, is added: $c \leftarrow c + N'/N$. This addition takes place each time we evaluate whether a sample is to be added to the resulting sequence or not. Whenever the accumulator reaches or exceeds 1, the sample is kept and 1 is subtracted from the accumulator, so that the excess is carried over to the selection of the next sample. Algorithm 4 depicts the algorithm used to perform UDS:

| Algorithm 4 Uniform Downsampling (UDS) Algorithm |

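- Input:

Time series $\mathbf{x}$, length $N$, desired number of samples $N'$
- Initialisation:

$c \leftarrow 0$, $\mathbf{z} \leftarrow \emptyset$

for $i \leftarrow 0$ to $N-1$ do
  $c \leftarrow c + N'/N$
  if $c \geq 1$ then append $x_i$ to $\mathbf{z}$; $c \leftarrow c - 1$ end if
end for
return $\mathbf{z}$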

|

Note that the downsampling factor ($D$) can be inferred from the algorithm by dividing the total number of samples $N$ by the desired number of samples $N'$: $D = N/N'$. In this work, instead of specifying downsampling factors, we say we are “downsampling to $P\%$”, that is, we are keeping $P\%$ of the samples. The downsampling factor is then obtained by dividing 100 by the percentage of kept samples: $D = 100/P$. For example, downsampling to 50% corresponds to $D = 2$, and downsampling to 67% to $D \approx 1.5$.
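A Python sketch of the UDS accumulator follows, where N is the series length and N' the desired number of samples. Two choices are our own assumptions: the accumulator starts at zero, and it is kept as an integer scaled by N (adding N' and comparing with N), which is equivalent to adding N'/N and comparing with 1 but avoids floating-point drift; for instance, repeatedly adding 2/3 in floating point can land just below 1 and silently skip a sample. With these choices, the n-th kept sample (counting from n = 0) is the one at index ⌈(n+1)N/N'⌉ − 1, and exactly N' samples are returned. The name `uniform_downsample` is hypothetical.

```python
def uniform_downsample(x, n_out):
    """Uniform downsampling of x to n_out samples with an exact integer accumulator."""
    n = len(x)
    if not 0 < n_out <= n:
        raise ValueError("need 0 < n_out <= len(x)")
    kept = []
    acc = 0                   # accumulator scaled by n: acc / n plays the role of c
    for sample in x:
        acc += n_out          # c <- c + N'/N
        if acc >= n:          # c >= 1: keep this sample and carry the excess over
            kept.append(sample)
            acc -= n
    return kept


print(uniform_downsample([3, 9, -7, 0, -34, 5], 4))  # [9, -7, -34, 5]: 67%, 3 and 0 removed
print(uniform_downsample(list(range(10)), 5))        # [1, 3, 5, 7, 9]: factor 2
```

The first call reproduces one of the 67% options given in the text (removing 3 and 0), and the second shows that an integer factor reduces to integer decimation with the first retained sample at index M − 1.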

4. Discussion

The original SlpEn method [21] already performs very well in classification tasks. Its main drawback is the optimization of its parameters: $m$, $\gamma$ and $\delta$. Since there are three of them, a grid search can be quite slow, especially compared with methods such as PE, which only has $m$ to optimize. In this work, we have explored combining SlpEn with downsampling techniques, TS and UDS, not only to reduce its computation time by downsampling the time series under study, but also to enhance its performance by emphasizing the most prominent patterns in the sequences, in the case of TS, and by working at different temporal scales and removing noise, in the case of UDS. In addition, downsampling can also be applied to other entropy calculation techniques, as shown by our results with PE, by the work in [37] combining Sample Entropy with TS, and by studies using temporal scales, which are specific cases of UDS [38,89,90,91].

Table 1 and Table 2 show that using TS to downsample the original sequence is quite beneficial, since the accuracy increases in four out of seven cases for SlpEn and in five out of seven for PE. For SlpEn, depending on the dataset, the results improve by between 4% and 13%. In the datasets where the results do not improve, they worsen by at most 4%, which may be an acceptable trade-off given the time saved thanks to downsampling. On the other hand, PE seems to benefit more from TS, with accuracy increases of up to 22%, although in the Bonn EEG dataset the results worsen considerably, by 14%. Comparing the two methods, PE shows its largest performance increases in the datasets where its initial results were worst compared with those of SlpEn (Bern-Barcelona, Fantasia and House Twenty). Conversely, the improvement is less prominent, under 10%, in the datasets where SlpEn and PE achieve similar results without TS.

Regarding the downsampling percentages that yield the best results, for SlpEn most of them lie between 25% and 50% (5 out of 7). For PE, they lie between 10% and 31%, with two datasets reaching the lowest downsampling percentage tested (10%). From these results, we conclude that downsampling to below 50% of the data can be highly beneficial since, considering both PE and SlpEn, accuracy increases in 11 out of 14 cases. Percentages below 25% are also quite promising for PE (4 out of 7), whereas SlpEn tends to benefit more from percentages closer to 50% (3 datasets in the range from 40% to 50%). It is also extremely important to highlight that, when the number of available samples is low, performance might be hindered because the downsampled time series may end up being too short.
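The concern about overly short sequences can be quantified with simple arithmetic. Assuming, for illustration only, m = 4 (not necessarily a value used in our experiments) and the record lengths given in Section 2.1, the sketch below counts the subsequences left after downsampling and compares them with the number of possible patterns: m! for PE and 5^(m−1) for SlpEn (five symbols in each of the m − 1 positions). The helper `patterns_after_downsampling` is hypothetical. For instance, a 272-sample Bern-Barcelona record downsampled to 10% leaves only 24 patterns, as many as there are possible ordinal patterns for m = 4, so the histogram can hardly be estimated reliably.

```python
import math


def patterns_after_downsampling(n, percent, m):
    """Overlapping length-m subsequences left after keeping `percent`% of n samples."""
    n_kept = n * percent // 100          # integer number of retained samples
    return max(n_kept - m + 1, 0)


m = 4  # illustrative embedded dimension, not a value taken from the experiments
for name, n in [("Bonn EEG", 4097), ("Worms", 1800), ("Bern-Barcelona", 272)]:
    for percent in (50, 10):
        print(f"{name} at {percent}%: {patterns_after_downsampling(n, percent, m)} patterns "
              f"(possible: {math.factorial(m)} for PE, {5 ** (m - 1)} for SlpEn)")
```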

Similar conclusions can be drawn for UDS from Table 4 and Table 5. Classification results for both SlpEn and PE benefit greatly from UDS, even more than when combined with TS. With UDS combined with SlpEn, the maximum gain is somewhat more conservative (up to 10% in the Fantasia dataset, compared with the 13% obtained with TS), but performance never worsens: only in the Bonn EEG dataset is there no improvement, the accuracy remaining equal to that obtained without downsampling. When UDS is used with PE, the results are similar once again: where accuracy decreases, it does so to a smaller extent (−9%, compared with the −14% obtained with TS in the Bonn EEG dataset).

The results in these tables, for both TS and UDS combined with SlpEn and PE, seem to confirm our initial hypothesis: data sampled at a much higher rate than needed contain a lot of potentially confounding samples. By downsampling, we enhance the main patterns and remove this unnecessary data, keeping the key features that improve classification performance. Moreover, performance seems to increase more for datasets with higher sampling rates, as is the case with both the ECG and EEG datasets. Among the ECG datasets, the Fantasia RR database (sampled at 250 Hz) shows a much larger increase in classification accuracy with downsampling than the PAF dataset (sampled at 128 Hz), with a maximum of 20% when UDS is used with PE, compared to a maximum of 8% with the same settings. The EEG datasets exhibit very similar behaviour: Bern-Barcelona (sampled at 512 Hz) benefits much more from downsampling than the Bonn EEG dataset (sampled at 173.61 Hz). In this case, the former increases its accuracy by 21% with UDS and PE, whereas the latter sees no improvement in the best case, where UDS and SlpEn are combined, and a decrease in the remaining cases. The results for the three non-medical datasets vary from one dataset to another. Both House Twenty and Worms seem to benefit from both downsampling techniques, especially House Twenty. Finally, in the case of Ford Machinery A, downsampling seems to be a hindrance, although we do not know the exact sampling rate of these time series.
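To illustrate how a non-uniform scheme can emphasise the main patterns, the sketch below implements trace segmentation under the common formulation in which the cumulative absolute first difference (the "trace length") is divided into equal increments and one sample is taken at each increment, so that regions with large changes receive more samples. The function name, the tie handling and the fallback for flat signals are assumptions of this sketch and may not match the TS implementation used in this work.

```python
from bisect import bisect_left
from itertools import accumulate

# Minimal sketch of trace segmentation (TS), assuming the common formulation in
# which the cumulative absolute first difference ("trace length") is split into
# equal increments and one sample is taken at each increment. Tie handling and the
# flat-signal fallback are choices of this sketch, not necessarily the paper's.

def trace_segmentation(x, percentage):
    n = len(x)
    if n == 0:
        return []
    if not 0 < percentage <= 100:
        raise ValueError("percentage must be in (0, 100]")
    n_keep = min(n, max(1, round(n * percentage / 100)))
    # c[i] = sum of |x[j] - x[j-1]| for j = 1..i, with c[0] = 0 (non-decreasing).
    c = [0.0] + list(accumulate(abs(x[j] - x[j - 1]) for j in range(1, n)))
    total = c[-1]
    if total == 0 or n_keep == 1:
        # No variation to follow: fall back to evenly spaced indices.
        return [x[(k * n) // n_keep] for k in range(n_keep)]
    selected = []
    for k in range(n_keep):
        target = k * total / (n_keep - 1)
        # First index whose cumulative trace reaches the target; regions with
        # large changes receive more samples, flat regions fewer (repeats possible).
        selected.append(x[min(bisect_left(c, target), n - 1)])
    return selected


if __name__ == "__main__":
    # All the variation is in one jump, so most retained samples land on it.
    print(trace_segmentation([0] * 5 + [10] * 5, 50))  # [0, 10, 10, 10, 10]
```

The `min(..., n - 1)` guard covers floating-point rounding in the last target, which can exceed the total trace length by a tiny amount.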

From Table 7 we can identify another major advantage of downsampling: computation time is reduced substantially. Downsampling the original sequences to 50% of their samples reduces the time to less than half with TS, both for SlpEn and PE. With UDS, execution times do not quite halve, but come close, since in our implementations UDS is slower than TS; even so, the time savings it provides are by no means negligible. Naturally, the more the number of samples in the sequence is reduced by downsampling, the faster the results are computed. Since these timings refer to the whole grid search explained in the previous section, the gain in seconds is large, especially in the case of SlpEn, which has more parameter combinations to check. In addition, with smaller downsampling percentages, the times for TS and UDS converge, as one would expect.
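The kind of measurement behind these timings can be sketched as follows: a minimal permutation entropy (Bandt-Pompe ordinal patterns, natural logarithm) timed on a full synthetic series and on a 50% version obtained with simple decimation. The synthetic data, the use of `x[::2]` as the 50% version and the function names are assumptions of this sketch; absolute times depend on hardware and implementation, and downsampling is done outside the timed call, as in the individual timings of Table 8.

```python
import math
import random
import time
from collections import Counter

# Minimal sketch: permutation entropy (Bandt-Pompe ordinal patterns, natural log)
# and a timing harness comparing a full series with a 50% version. Absolute times
# depend on hardware and implementation; downsampling is kept outside the timed call.

def permutation_entropy(x, m, tau=1):
    n_vectors = len(x) - (m - 1) * tau
    if m < 2 or n_vectors <= 0:
        raise ValueError("series too short for this embedding dimension")
    counts = Counter(
        # Ordinal pattern: positions of the vector's samples in ascending order
        # (ties keep their original order because sorted() is stable).
        tuple(sorted(range(m), key=lambda j: x[i + j * tau]))
        for i in range(n_vectors)
    )
    return -sum((c / n_vectors) * math.log(c / n_vectors) for c in counts.values())


def timed(f, *args):
    """Return (result, elapsed seconds) of f(*args)."""
    start = time.perf_counter()
    result = f(*args)
    return result, time.perf_counter() - start


if __name__ == "__main__":
    rng = random.Random(0)
    x = [rng.gauss(0.0, 1.0) for _ in range(20000)]
    x_half = x[::2]  # keep 50% of the samples
    _, t_full = timed(permutation_entropy, x, 5)
    _, t_half = timed(permutation_entropy, x_half, 5)
    print(f"full: {t_full:.3f} s, 50%: {t_half:.3f} s, speedup: {t_full / t_half:.2f}")
```

Because the number of embedded vectors roughly halves, a speedup close to 2 is expected here, though the printed value will vary from run to run.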

Table 8 presents similar information, but focuses on individual entropy calculations for different m values. On an individual basis, SlpEn is clearly faster to compute than PE, and the speedups are very similar for the two entropies, being slightly higher for PE. This is more noticeable when m is 8 or 9, and also in the last column, where only 10% of the samples are used. In this case, in terms of absolute seconds, the difference is larger for PE, which becomes much faster with downsampling than without it. Furthermore, there are many instances (at least 3 out of 7), both with SlpEn and PE, where the maximum accuracy has been attained using high m values ( ), as can be observed in Table 3 and Table 6, which implies great potential for reducing execution time. It is also worth noting that the downsampling algorithm used to obtain these execution times is not relevant, since they only account for the time spent on entropy calculations, excluding the time spent downsampling.
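For comparison with the permutation-based timings, a single SlpEn evaluation can be sketched as below, using the usual five-symbol alphabet defined by the thresholds γ (gamma) and δ (delta): each difference between consecutive samples maps to +2, +1, 0, −1 or −2, and the entropy is computed over the relative frequencies of the resulting patterns. The unit time delay, the natural logarithm and the default values gamma = 1.0 and delta = 1e-3 are assumptions of this sketch, not the settings used in the experiments.

```python
import math
from collections import Counter

# Minimal sketch of Slope Entropy (SlpEn) with the usual five-symbol alphabet.
# Assumptions of this sketch: unit time delay, natural logarithm, and the
# illustrative defaults gamma = 1.0, delta = 1e-3 (tune them per dataset).

def slope_symbol(d, gamma, delta):
    """Map a difference between consecutive samples to a symbol in {-2,...,2}."""
    if d > gamma:
        return 2
    if d > delta:        # delta < d <= gamma
        return 1
    if d >= -delta:      # |d| <= delta
        return 0
    if d >= -gamma:      # -gamma <= d < -delta
        return -1
    return -2            # d < -gamma


def slope_entropy(x, m, gamma=1.0, delta=1e-3):
    if not 0 <= delta < gamma:
        raise ValueError("thresholds must satisfy 0 <= delta < gamma")
    n_vectors = len(x) - m + 1
    if m < 2 or n_vectors <= 0:
        raise ValueError("series too short for this embedding dimension")
    # Each symbol depends on one difference, so compute them once and reuse them:
    # a vector of m samples corresponds to m - 1 consecutive symbols.
    symbols = [slope_symbol(x[i + 1] - x[i], gamma, delta) for i in range(len(x) - 1)]
    counts = Counter(tuple(symbols[i:i + m - 1]) for i in range(n_vectors))
    return -sum((c / n_vectors) * math.log(c / n_vectors) for c in counts.values())
```

Unlike the ordinal patterns of PE, no sorting is needed here, only threshold comparisons; in this sketch at least, that is one plausible reason why an individual SlpEn evaluation can be cheaper than a PE evaluation for the same m.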

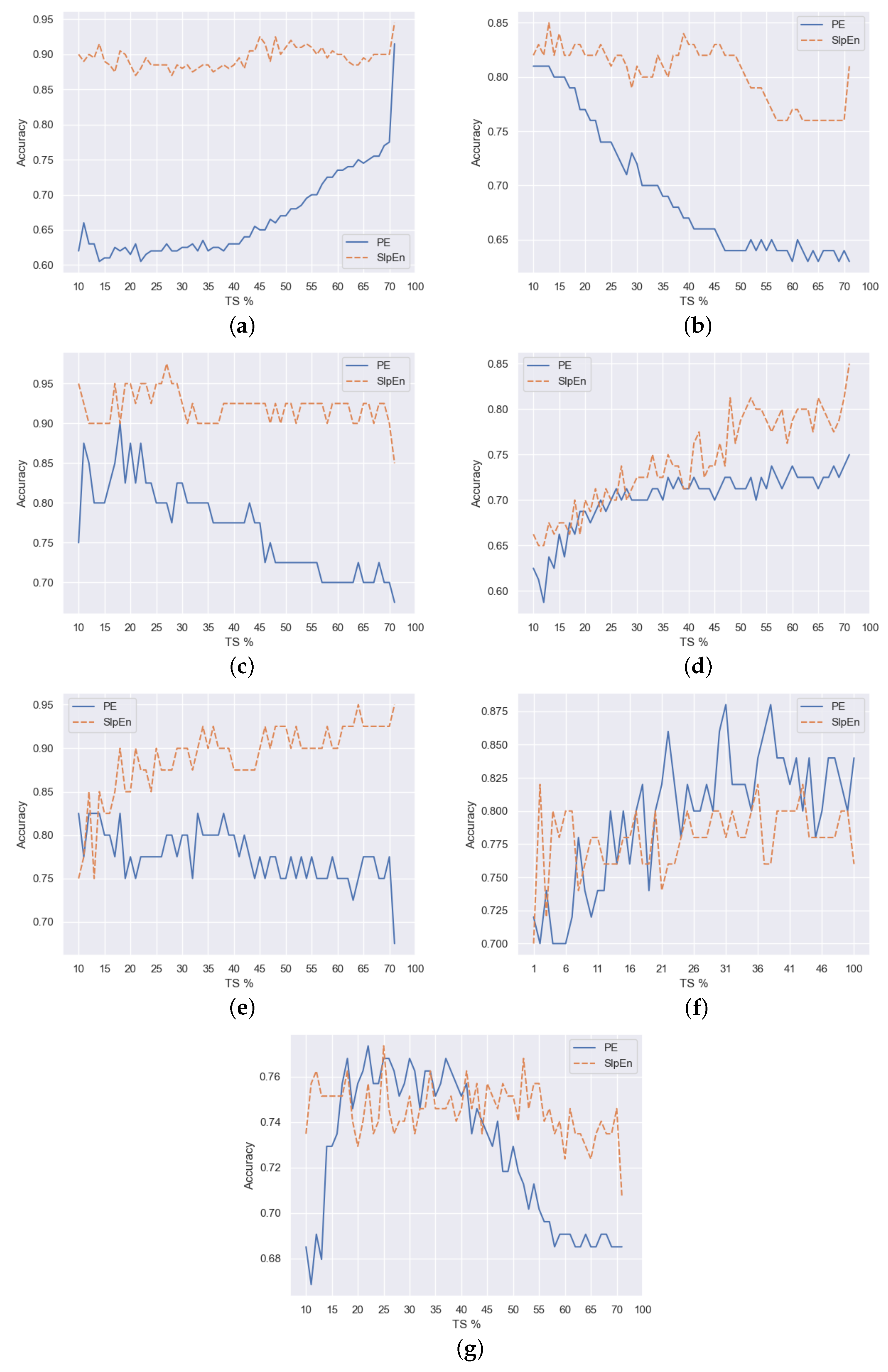

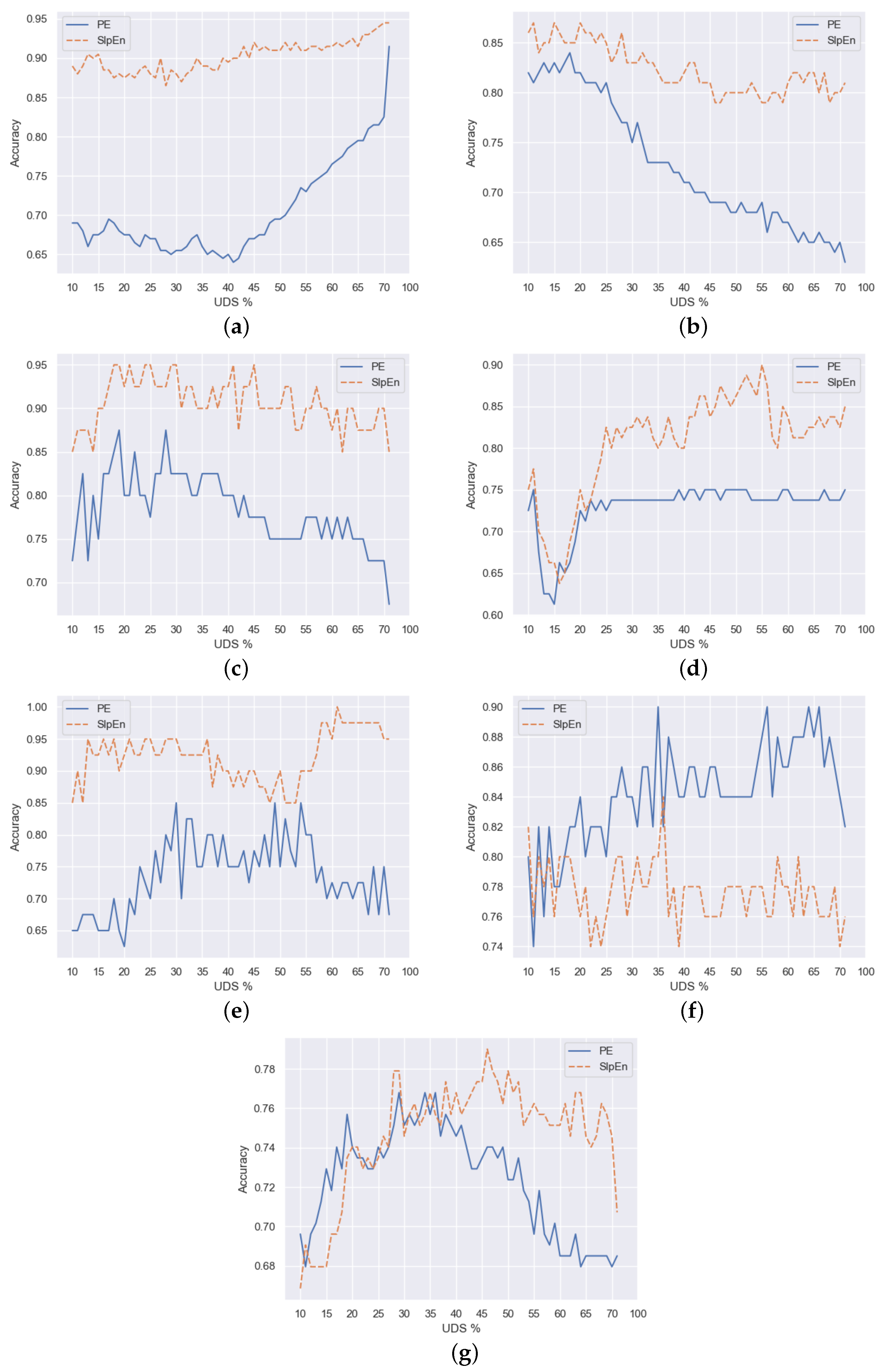

Figure 3 and Figure 4 show the maximum accuracy achieved for each downsampling percentage, including the case without downsampling, which corresponds to the rightmost value of each curve. In general, SlpEn outperforms PE; this can arguably be said of 5 out of 7 datasets (Bonn EEG, Bern-Barcelona, Fantasia, Ford A and House Twenty), regardless of the downsampling technique used. It is also noticeable that in most cases, both for SlpEn and PE, many downsampling percentages outperform the accuracy obtained without downsampling, so only a limited search should be needed to find a percentage that performs better than computing the entropy on the whole series.
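Curves of this kind reduce each grid search to its best value per downsampling percentage. A minimal sketch, assuming the grid-search outcomes are stored as a mapping from (percentage, parameter tuple) to accuracy and that 100 denotes "no downsampling" (both are conventions of this sketch); the toy numbers are placeholders, not results from this work.

```python
from collections import defaultdict

# Minimal sketch: reduce grid-search results to one curve point per downsampling
# percentage (the maximum over all entropy parameters). Convention assumed here:
# percentage 100 denotes "no downsampling".

def max_accuracy_per_percentage(results):
    """results maps (percentage, params) -> accuracy; returns {percentage: best}."""
    best = defaultdict(lambda: float("-inf"))
    for (percentage, _params), accuracy in results.items():
        best[percentage] = max(best[percentage], accuracy)
    return dict(sorted(best.items()))


def percentages_beating_baseline(best, baseline=100):
    """Percentages whose best accuracy is strictly above the no-downsampling one."""
    reference = best[baseline]
    return [p for p, acc in best.items() if p != baseline and acc > reference]


if __name__ == "__main__":
    # Placeholder numbers for illustration only, not results from this work.
    toy = {(25, 3): 0.60, (50, 3): 0.70, (50, 4): 0.80, (100, 3): 0.75, (100, 4): 0.72}
    best = max_accuracy_per_percentage(toy)
    print(best)                                # {25: 0.6, 50: 0.8, 100: 0.75}
    print(percentages_beating_baseline(best))  # [50]
```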

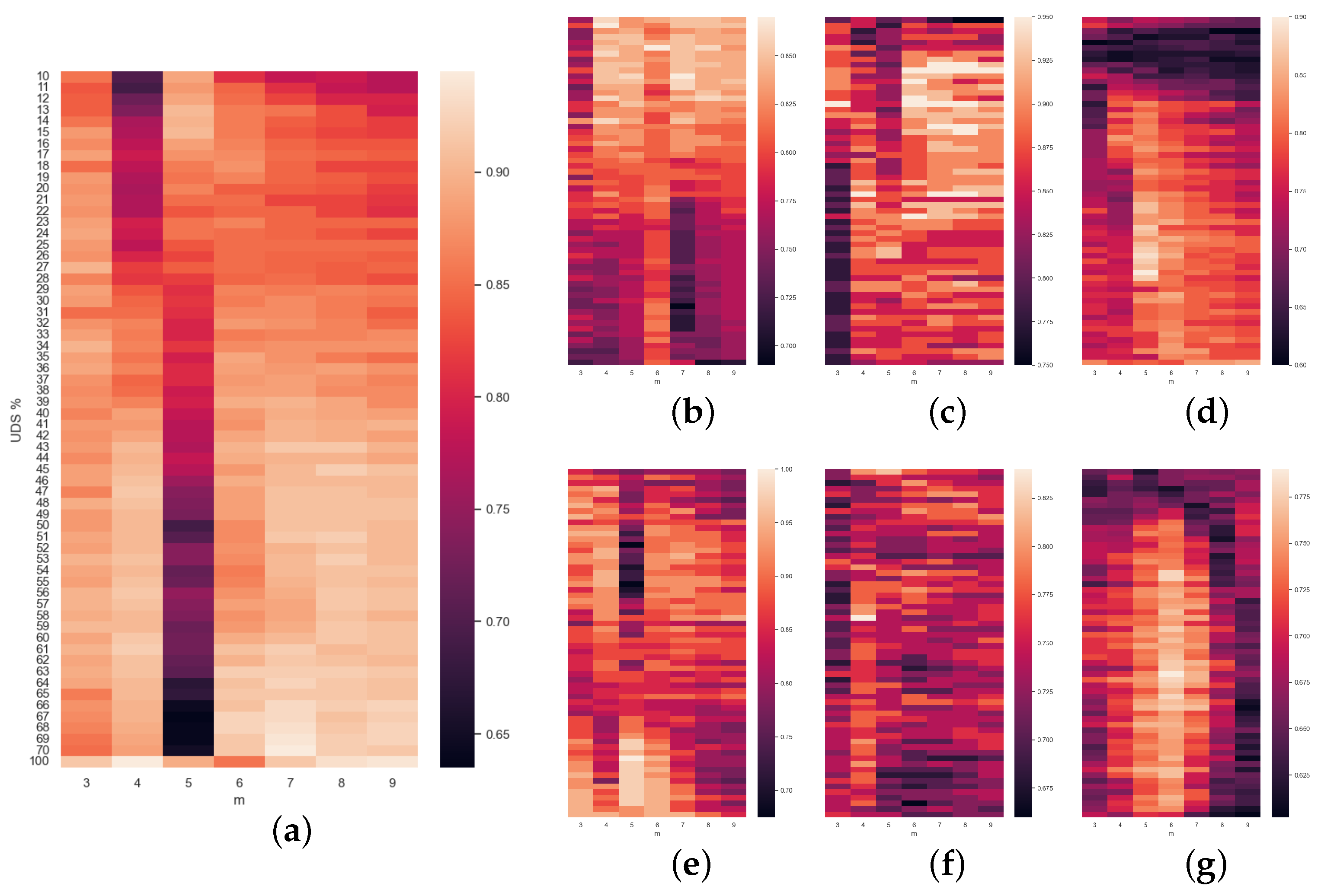

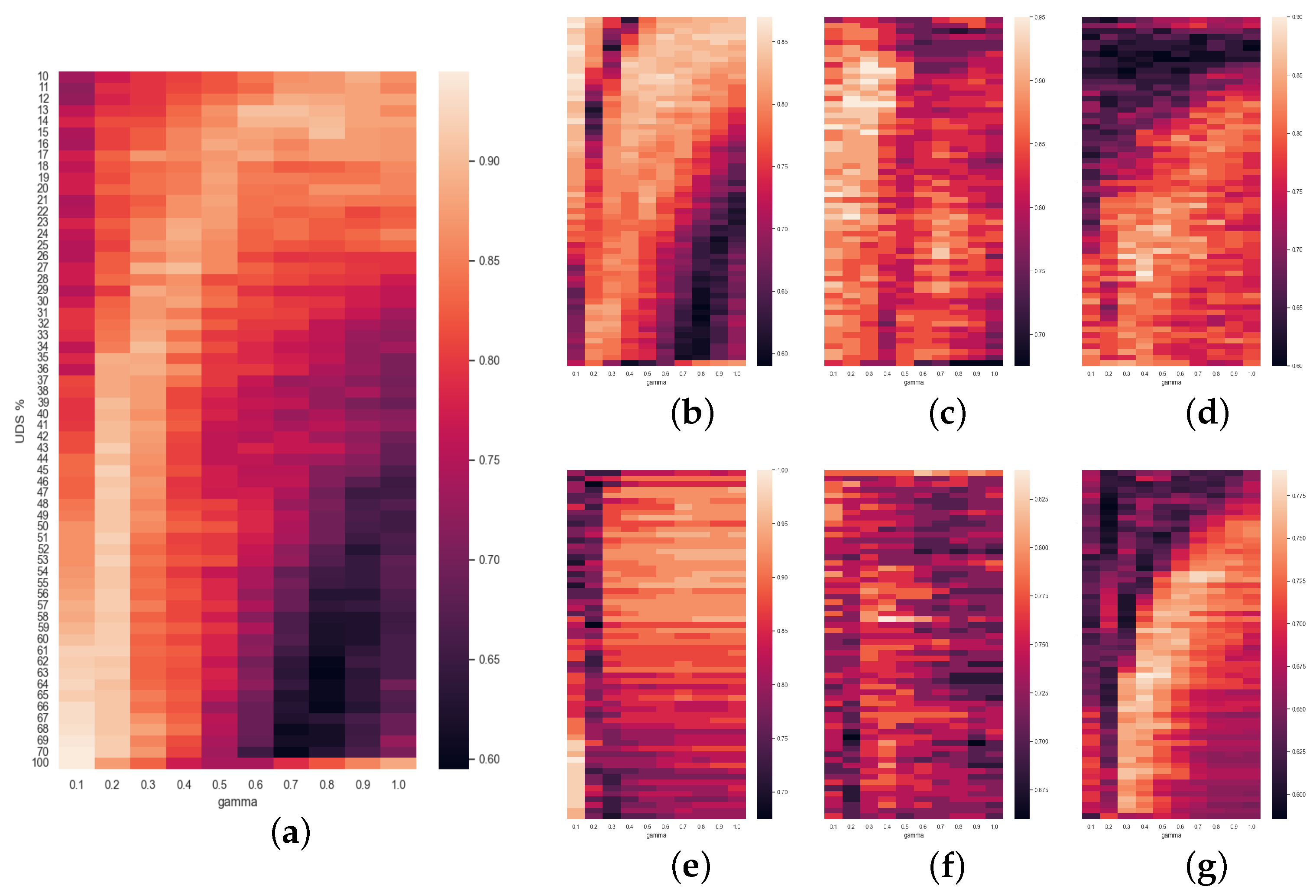

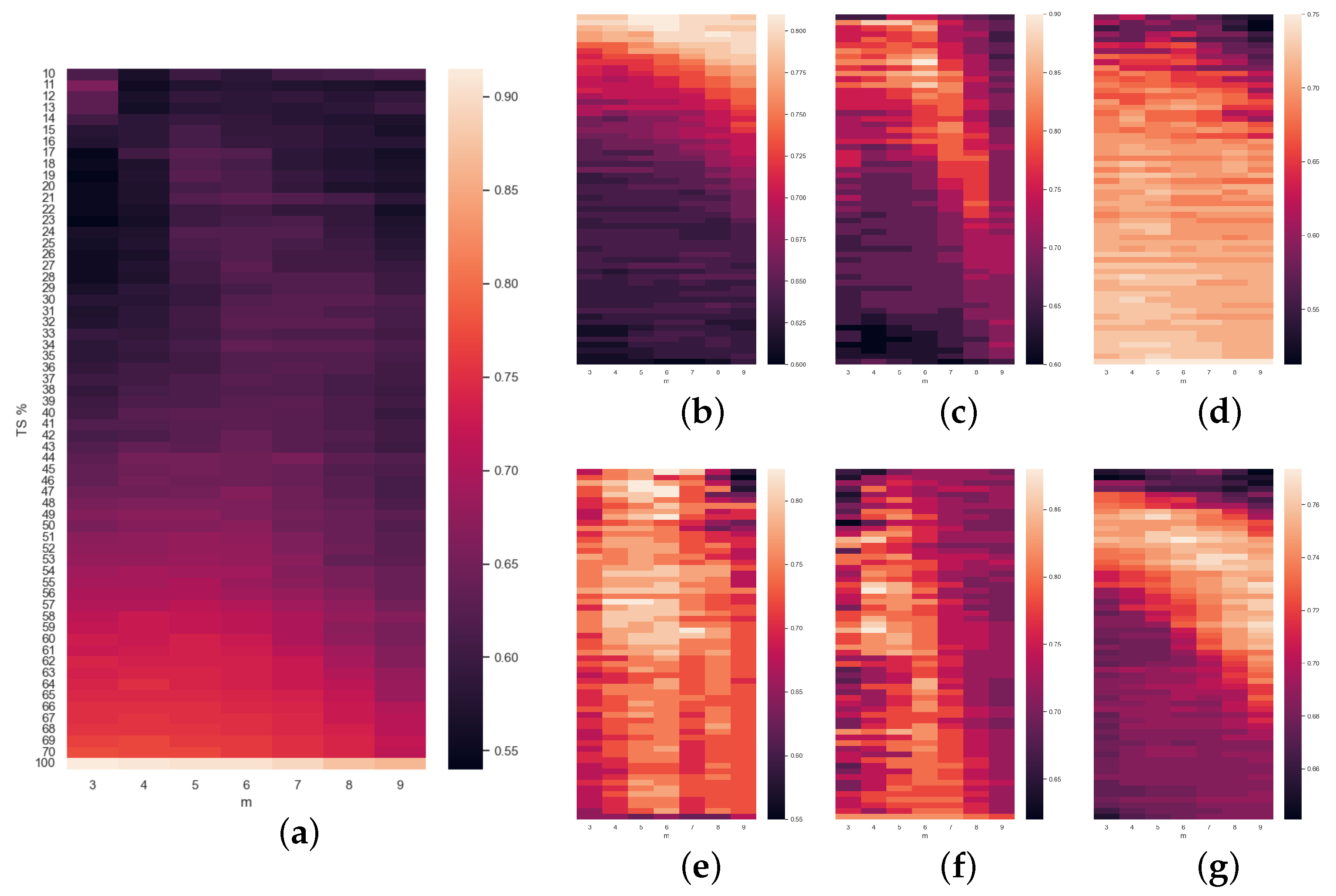

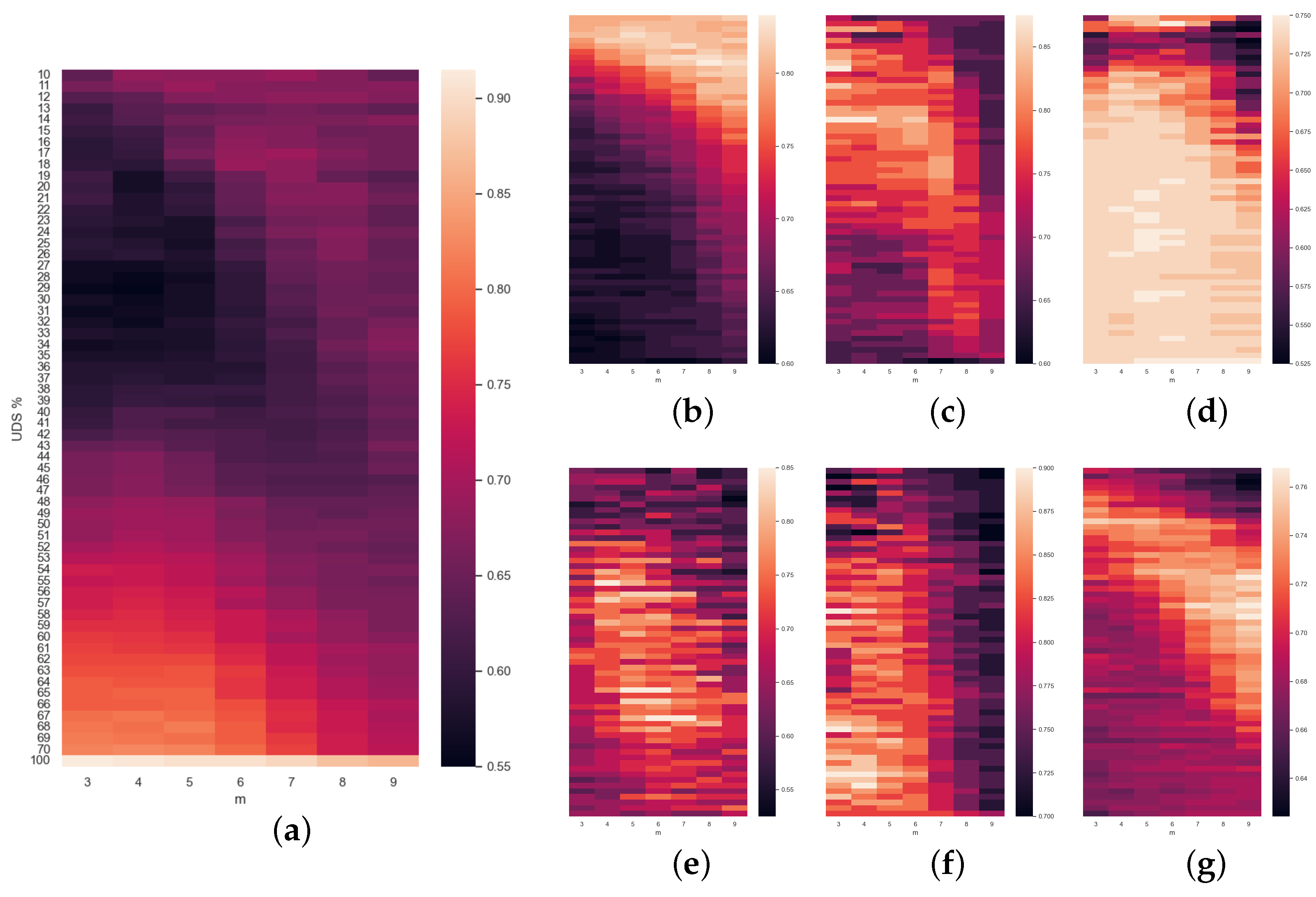

Finally, Figure 5, Figure 6 and Figure 7 are a compendium of heatmaps representing the complete grid-search results in terms of accuracy. They plot the level of downsampling used (TS% or UDS%) against m (and also against ( ) in the case of Figure 6). For SlpEn, comparing the last row (no downsampling) with the rest, we can observe many instances (both in the same column and in other columns) where accuracy is higher than that achieved without TS. There are some cases where, for the same value of m, the best accuracy is obtained using the whole series without downsampling. Even then, the lower accuracy can be compensated by the gain in execution speed, since with just 50% of the samples the speedup is 2 and the accuracy loss is minimal. Moreover, these maps show that high-accuracy regions usually cluster into recognisable shapes (curves, circles, etc.), which could be exploited to optimize the search and avoid grid search, which is in principle much more costly. For example, heuristic methods that look for local or global maxima could be used. The same can be said of Figure 7, where PE is plotted against UDS%. Heatmaps for SlpEn combined with UDS and for PE combined with TS are not reported, since they are very similar to those presented in this work and lead to very similar, if not identical, conclusions.
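One simple heuristic of the kind mentioned above is a greedy hill climb over the (downsampling percentage, m) grid: starting from one cell, move to the best of the eight neighbouring cells until no neighbour improves the score. This is a minimal sketch; the `objective` callable stands in for whatever evaluation is used (for example, cross-validated accuracy) and is hypothetical, and a hill climb only guarantees a local maximum, so restarts from several cells may be needed on real heatmaps.

```python
# Minimal sketch of a greedy hill climb over a 2-D grid of (downsampling %, m).
# `objective(percentage, m)` is a hypothetical callable returning a score such as
# classification accuracy; only a local maximum is guaranteed.

def hill_climb(objective, grid_pcts, grid_ms, start=(0, 0)):
    cache = {}  # evaluated cells, so no configuration is computed twice

    def score(cell):
        if cell not in cache:
            i, j = cell
            cache[cell] = objective(grid_pcts[i], grid_ms[j])
        return cache[cell]

    current = start
    while True:
        i, j = current
        neighbours = [(i + di, j + dj)
                      for di in (-1, 0, 1) for dj in (-1, 0, 1)
                      if (di, dj) != (0, 0)
                      and 0 <= i + di < len(grid_pcts)
                      and 0 <= j + dj < len(grid_ms)]
        best = max(neighbours, key=score, default=current)
        if score(best) <= score(current):
            # Returns the best cell found, its score and the number of evaluations.
            return grid_pcts[i], grid_ms[j], score(current), len(cache)
        current = best


if __name__ == "__main__":
    pcts = list(range(10, 101, 10))
    ms = list(range(3, 10))
    # Synthetic smooth surface peaking at (40%, m = 6), for illustration only.
    toy = lambda p, m: -((p - 40) ** 2) - 100 * (m - 6) ** 2
    print(hill_climb(toy, pcts, ms))
```

The returned evaluation count can be compared with the size of the full grid to gauge how much work such a search saves on a given surface.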

Overall, the outcomes of the experiments highlight the high potential of downsampling: it is easy to implement, cheap in terms of computational resources and, in most cases, able not only to reduce computation time but also to improve classification accuracy. However, searching for the right downsampling percentage might increase the computational cost if one wants to maximise performance. Otherwise, we recommend using TS, downsampling to between 25% and 50% of the samples for SlpEn and to between 10% and 30% for PE, which normally yields the best results. In addition, we believe percentages below 50% might be a good starting point for any kind of entropy, not just SlpEn or PE, especially values between 20% and 45%. If such results are not satisfactory, one could compute the entropy without downsampling for comparison to help decide, bearing in mind that the reduction in execution time is always obtained, regardless of classification performance. Moreover, the best-case scenario would seem to be sampling data at the minimum necessary rate, if possible, thus avoiding extra data that may lower the performance of classification algorithms.

5. Conclusions

This study addressed the combination of SlpEn with downsampling, mainly TS and UDS, aimed at enhancing time series classification accuracy as well as reducing computation time. As a secondary objective, our work aimed to show that downsampling can keep its advantages when combined with other entropy calculation methods, as suggested by [37] with Sample Entropy and TS, by the many works where UDS is applied indirectly in the form of temporal scales [38,89], and by our own experiments with both SlpEn and PE using TS and UDS. Our experimentation highlights the benefits of combining downsampling with entropy in classification tasks, showing large improvements both in accuracy and in computation time.

In general, our results suggest that TS, as a downsampling technique that amplifies the most prominent patterns in a time series, is beneficial and very helpful when computing entropy to be used as a feature in classification tasks. In most cases, the accuracy achieved outperforms that of the instances without downsampling, both for SlpEn and PE. Of course, this does not hold in all scenarios, especially when the resulting sequences have too few samples, so the downsampling technique must be applied carefully to avoid such cases. Notably, the results especially benefit instances where the initial classification accuracy is not very high and leaves room for improvement. Regarding UDS, as a technique to reduce noise and focus on different temporal scales when using specific downsampling percentages, we obtain results similar to those obtained with TS. Both PE and SlpEn benefit from UDS in most of the datasets used, with more room for improvement where classification accuracy is initially low, just as with TS. Moreover, our experiments lead us to believe that sampling rates above the minimum required might have a negative impact on classification tasks, since confounding data are added to the time series and the key features become less apparent, making it harder to classify the series reliably.

Additionally, both downsampling techniques are very good options for reducing computational cost. They reduce computation time consistently and independently of the data being processed, cutting it by approximately 50% (a speedup of about 2) when the original sequence is downsampled to half of its samples. This is especially notable when computation times are longest, since the number of seconds saved by downsampling is much higher than in faster instances, even though the speedup is similar. That can happen when the embedding dimension (m) is high, as in many of our results, which makes computation slower. Moreover, according to [92], high embedding dimension values can yield higher performance in many cases, even if the generally accepted inequality (where N is the length of the sequence under study) suggests otherwise. In such cases, downsampling would be very useful to reduce computation times.
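The relation between the fraction of samples kept and the speedup can be written as a rough model. Assume, as a simplification of this sketch and not a measured property, that the cost of one entropy evaluation grows linearly with the number of embedded vectors, t(N) ≈ c (N − m + 1), and let p ∈ (0, 1] be the fraction of samples kept. Then

$$
S(p) = \frac{t(N)}{t(pN)} \approx \frac{N - m + 1}{pN - m + 1} \approx \frac{1}{p} \quad (N \gg m),
\qquad
\Delta t = t(N) - t(pN) \approx (1 - p)\, t(N).
$$

For p = 0.5 this gives S ≈ 2, that is, roughly half the time. The second expression shows why the absolute saving in seconds grows with t(N): for the same p, slower configurations (for example, high m) save more seconds. Fixed overheads and implementation details make measured speedups deviate from this estimate.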

Other optimization techniques might include more powerful computing hardware, faster programming languages, refined entropy algorithms or parallel processing, but those are not as simple as the downsampling schemes used in this paper. Furthermore, using heuristic techniques to optimize entropy parameters and downsampling levels, instead of our “brute-force” grid-search approach, could significantly reduce the computational cost in terms of time, which would benefit not only the approach suggested in this work but also any other research in the field.

In summary, we encourage the use of downsampling to preprocess time series before calculating their entropy: it can not only enhance classification results but also reduce computational cost. Of course, a good compromise between speedup and classification results must be found, since the entropy of a downsampled series will not always outperform the entropy computed on the entire series. As an initial recommendation, we would choose the interval [25%, 50%] for downsampling with TS, another possibility being [10%, 30%] when using UDS, although this will always depend on the intrinsic characteristics of the dataset under study.