Abstract

In this paper, stochastic Takagi–Sugeno fuzzy recurrent neural networks (STSFRNNS) with distributed delay are established based on the Takagi–Sugeno (TS) model, and their fixed time synchronization problem is investigated. To synchronize the networks, we design two kinds of controllers: a feedback controller and an adaptive controller. We then obtain fixed time synchronization criteria by combining the Lyapunov method with inequality techniques from the theory of stochastic differential equations, and we calculate the stabilization time for the STSFRNNS. In addition, to verify the validity of the theoretical results, we carry out numerical simulations in MATLAB R2023a.

Keywords:

fixed time synchronization; stochastic Takagi–Sugeno fuzzy recurrent neural networks; distributed delay; feedback control; adaptive control

MSC:

93E03; 60H10; 34K24

1. Introduction

Neural network models can be used to analyze and process data. Analyzing the data yields predicted values, which helps in understanding the laws governing how the data change. Therefore, neural network models are widely used in economic forecasting, signal processing, intelligent control systems, optimization, robotics, speech recognition, and other fields [1,2,3,4,5,6]. Recurrent neural networks (RNNs) in particular have attracted considerable attention from scholars. They are used in many fields, such as natural language processing, the power industry, and various kinds of time series forecasting [7,8,9,10,11]. Time delay is a common issue in this research. In recurrent neural networks, time delay mainly includes the internal delay of the network, the computation delay of each component, and the transmission delay of signals during receiving and transmitting [12]. It is important to note that while the propagation of a signal is sometimes instantaneous, it can also be distributed over time, so we consider two kinds of time delays, namely, discrete and distributed time delays [13,14].

The actual operation of a system is often affected by random noise and human interference. Studying a dynamic system with random noise reveals the effect of the noise on system behavior more clearly. Therefore, after the neural network model is established, noise is introduced into the traditional differential equation model, and the deterministic model is transformed into a differential equation model with random disturbance. In 1996, Liao and Mao introduced random noise into neural networks for the first time [15]. Since then, many scholars have devoted themselves to analyzing the dynamic properties of various kinds of stochastic neural networks [16,17,18].

In recent years, the Takagi–Sugeno fuzzy model has been widely used [19]. Proposed by Takagi and Sugeno in 1985, the TS fuzzy model converts ordinary fuzzy rules and their reasoning into a mathematical expression. Its essence is to establish multiple simple linear relationships through a fuzzy partition of the global nonlinear system, and to perform fuzzy reasoning and judgment on the outputs of these multiple models, which makes it possible to represent complex nonlinear relationships. The main idea is to express a nonlinear system with many line segments, that is, to convert a nonlinear problem that is hard to treat directly into a problem on many small line segments. The TS fuzzy model is built for nonlinear systems and is described by IF-THEN rules, each of which represents a subsystem. It has been shown that a TS fuzzy system can approximate any continuous function on a compact set to arbitrary accuracy. This allows designers to analyze and design nonlinear systems using traditional linear system methods. On this basis, this paper extends the TS fuzzy model to describe delayed recurrent neural networks, and a stochastic recurrent neural network model based on the TS fuzzy model is established, which is simply called STSFRNNS.

As is well known, synchronization is one of the most basic and important problems in the study of neural network dynamic models [20,21,22]. In practice, a neural network often cannot achieve synchronization automatically and usually needs a suitably designed controller. Hence, researchers have proposed various control methods and techniques to achieve synchronization, including feedback control [23], adaptive control [24], and so on. These control methods greatly help to solve the problem of system synchronization. In addition, synchronization is of great importance in many fields such as biology, climatology, sociology, and ecology [25,26,27]. Therefore, it is meaningful to study the synchronization characteristics of recurrent neural networks.

This paper makes the following three contributions relative to the existing literature:

- The TS model is extended to recurrent neural networks, and synchronization properties are studied on this basis.

- For each theoretical result and for the generalization of the model, a numerical simulation is given to verify its validity.

- Two different kinds of control are used to study the synchronization property of the model, and the two kinds of control are compared.

Notations. Let $(\Omega, \mathcal{F}, \{\mathcal{F}_t\}_{t \ge 0}, P)$ be a complete probability space with a filtration $\{\mathcal{F}_t\}_{t \ge 0}$ satisfying the usual conditions, where $\Omega$ is the set of all possible elementary outcomes of a random trial, $\mathcal{F}$ is a $\sigma$-algebra of subsets of $\Omega$, and $P$ is a probability measure on the measurable space $(\Omega, \mathcal{F})$. An n-dimensional Brownian motion is defined on this probability space. Write $|\cdot|$ for the trace norm of matrices or the Euclidean norm of vectors. Let $\mathbb{R}^n$ stand for n-dimensional Euclidean space. Let sign(·) be the sign function and $A^T$ represent the transpose of the vector (matrix) A. The notations and are used. And denotes the family of all nonnegative functions that are twice continuously differentiable over with respect to .

1.1. Model Formulations

The distributed delay is first introduced into the recurrent neural network, and the following model is obtained

where vector is used to represent a constant external input, is the neuronal state vector, = [, , … denotes a nonlinear activation function, and we specify that A represents the rate matrix at which the neuronal potential resets to its resting state. Diagonal matrix A = diag[] and > 0, i = 1, 2, … n. > 0 denotes the time delay. D, H, and K are the connection weight matrix between neurons. , H and are the discrete delay connection weight matrices and distributed delay connection weight matrices, respectively.
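To make the roles of A, D, H, K, and the delay concrete, the following minimal sketch integrates a delayed recurrent network with the Euler–Maruyama scheme. It assumes the commonly used form dx = [−A x(t) + D g(x(t)) + H g(x(t − τ)) + K ∫ g(x(s)) ds + I] dt + (noise) dB(t), with the integral taken over [t − τ, t], g = tanh, and a multiplicative noise term proportional to the state. This form, the assignment of H and K to the discrete and distributed delay terms, the function name `simulate_delayed_rnn`, and all numerical values are illustrative assumptions, not the paper's exact model or data.

```python
import numpy as np

def simulate_delayed_rnn(A, D, H, K, I_ext, tau, noise_gain, x0,
                         T=1.0, dt=1e-2, seed=0):
    """Euler-Maruyama integration of an ASSUMED delayed stochastic RNN:

    dx = [-A x(t) + D g(x(t)) + H g(x(t - tau))
          + K * integral_{t-tau}^{t} g(x(s)) ds + I_ext] dt
         + noise_gain * x(t) dB(t),   with g = tanh (assumption).
    """
    rng = np.random.default_rng(seed)
    x0 = np.asarray(x0, dtype=float)
    n = x0.size
    m = int(round(tau / dt))        # number of steps spanned by the delay
    steps = int(round(T / dt))
    x = np.empty((m + steps + 1, n))
    x[: m + 1] = x0                 # constant history on [-tau, 0]
    for k in range(m, m + steps):
        delayed = np.tanh(x[k - m])                          # discrete delay term
        distributed = np.tanh(x[k - m:k]).sum(axis=0) * dt   # Riemann sum of the integral
        drift = (-A @ x[k] + D @ np.tanh(x[k]) + H @ delayed
                 + K @ distributed + I_ext)
        dB = rng.normal(0.0, np.sqrt(dt), size=n)            # Brownian increments
        x[k + 1] = x[k] + drift * dt + noise_gain * x[k] * dB
    t = (np.arange(m + steps + 1) - m) * dt                  # time grid starting at -tau
    return t, x

# Hypothetical two-neuron example; all values are made up for illustration.
A = np.diag([1.0, 1.2])
D = np.array([[0.5, -0.3], [0.2, 0.4]])
H = np.array([[-0.4, 0.1], [0.3, -0.2]])
K = np.array([[0.2, 0.0], [0.0, 0.2]])
t, x = simulate_delayed_rnn(A, D, H, K, I_ext=np.zeros(2), tau=0.1,
                            noise_gain=0.1, x0=[0.5, -0.5])
```

The history buffer is stored in the same array as the trajectory, so the delayed state and the distributed-delay integral are both read by plain index arithmetic.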

The TS fuzzy model transforms a nonlinear system into a simpler continuous fuzzy system. The dynamic character of the system is obtained by describing, through fuzzy rules, the local dependencies in the state space. Under the IF-THEN rule description, every local dynamic has a linear input–output relationship. Combining the recurrent neural network above with the TS fuzzy model, we propose TS fuzzy recurrent neural networks with distributed and discrete delays. The stochastic Takagi–Sugeno fuzzy recurrent neural network with distributed delay is given by the following rules.

Rule:

IF: ,

THEN:

where are known variables, . is the fuzzy set.
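In TS fuzzy models, the IF-THEN rules are usually combined by normalizing the rule firing strengths into weights that sum to one and then blending the local models with these weights. The sketch below illustrates this step under stated assumptions: Gaussian membership functions, two rules, one premise variable, and the hypothetical helper names `normalized_weights` and `blend`. None of these choices is taken from the paper.

```python
import numpy as np

def normalized_weights(z, centers, widths):
    """Normalized firing strength of each rule (Gaussian memberships assumed).

    z:       premise variables, shape (n_premises,)
    centers: membership centers, shape (n_rules, n_premises)
    widths:  membership widths,  shape (n_rules, n_premises)
    """
    z = np.asarray(z, dtype=float)
    grades = np.exp(-((z - centers) ** 2) / (2.0 * widths ** 2))
    strength = grades.prod(axis=1)       # firing strength of each rule
    return strength / strength.sum()     # nonnegative weights that sum to 1

def blend(weights, local_matrices):
    """Weighted sum of the local (per-rule) matrices."""
    return np.tensordot(weights, local_matrices, axes=1)

# Hypothetical example: two rules centered at -1 and +1, evaluated at z = 0.
centers = np.array([[-1.0], [1.0]])
widths = np.ones_like(centers)
A_local = np.array([np.diag([1.0, 1.0]), np.diag([3.0, 3.0])])
h = normalized_weights([0.0], centers, widths)
A_blend = blend(h, A_local)
```

At z = 0 both rules fire equally, so the blended matrix is the plain average of the two local matrices; moving z toward one center shifts the weight to that rule.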

Through the above discussion of IF-THEN rules, the STSFRNNS model is shown below

where . Denote that . Then, the final output expression of STSFRNNS is as follows

For each neuron, it is not difficult to derive the following expression

Considering (3) as the drive system, we can obtain the response system

where represents the neuron. In order to ensure (3) and (4) can achieve synchronization, is set as a controller. Let be the error. Then, according to (3) and (4), the error system is shown below

where

and

1.2. Assumption and Lemma

Assumption 1.

There is a positive number , for all , such that the function g satisfies the bounded and Lipschitz conditions

Assumption 2.

σ satisfies the local Lipschitz and linear growth conditions. Moreover, σ satisfies

Lemma 1

([28]). If , , …, , , , then

Lemma 2

([29]). Consider the following systems,

where indicates the system state, is a Brownian motion. Let and the time when stability is first reached (the settling time) is expressed as

If there is a Lyapunov function V(t, ϕ(t)) , which is a positive definite function and radially unbounded such that

Then the zero solution is stable with probability in stochastic fixed time, and

2. Fixed Time Synchronization Analysis

In this section, the Lyapunov method and inequality relations are combined to obtain a general criterion for fixed time synchronization of STSFRNNS.
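Fixed time arguments of this kind usually reduce to a scalar comparison inequality. In the deterministic case, if dV/dt ≤ −αV^p − βV^q with α, β > 0 and 0 < p < 1 < q, then V reaches zero no later than 1/(α(1 − p)) + 1/(β(q − 1)), whatever the initial value. The sketch below checks this numerically with explicit Euler steps. The symbols α and β and all numerical values are illustrative assumptions, and the stochastic bound obtained from Lemma 2 may carry different constants.

```python
def settling_time(v0, alpha, beta, p, q, dt=1e-4, tol=1e-8, t_max=50.0):
    """Explicit Euler for dV/dt = -alpha*V**p - beta*V**q until V <= tol."""
    v, t = float(v0), 0.0
    while v > tol and t < t_max:
        v = max(v - dt * (alpha * v ** p + beta * v ** q), 0.0)
        t += dt
    return t

alpha, beta, p, q = 1.0, 1.0, 0.5, 2.0
# Upper bound on the settling time, independent of the initial value.
bound = 1.0 / (alpha * (1.0 - p)) + 1.0 / (beta * (q - 1.0))
times = [settling_time(v0, alpha, beta, p, q) for v0 in (0.1, 1.0, 10.0, 1e3)]
```

The measured times grow with the initial value but stay below `bound`, which is the defining feature of fixed time (as opposed to finite time) convergence.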

2.1. Feedback Control

To make the system achieve fixed time synchronization, we first use feedback control. The feedback controller is shown below

where , the constant is the gain coefficient, and are definite constants. The real numbers p and q satisfy and , respectively. In all subsequent proofs, for ease of representation, denote

Theorem 1.

Proof.

To prove Theorem 1, we construct . Then

Note that

And in a similar way, we have

Moreover, it is given by the Cauchy–Schwarz inequality that

and

Therefore,

Combined with the inequality relation in Theorem 1, it can finally be obtained

By Lemma 2, the stability time can be calculated

□

2.2. Adaptive Control

In feedback control, the coefficient is not easy to determine. In order to achieve better synchronization control, we set up adaptive control here

The adaptive control gain is represented by and the designed adaptive law is

where is the undetermined constant. Then the synchronization criterion of STSFRNNS under adaptive control (7) can be obtained.
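Adaptive schemes of this type replace the fixed feedback gain with a state that grows while the error is nonzero. As a rough illustration only, the sketch below uses a scalar, noise-free error with an assumed destabilizing drift a·e, assumed sign-power terms with exponents p and q, and the assumed update law dk/dt = ε e². It is not the paper's controller (7), whose exact form is not reproduced here; the function name `simulate_adaptive` and all values are hypothetical.

```python
import numpy as np

def simulate_adaptive(e0=2.0, a=3.0, eps=2.0, lam1=1.0, lam2=1.0,
                      p=0.5, q=1.5, T=5.0, dt=1e-4):
    """Scalar error under an ASSUMED adaptive controller (explicit Euler).

    de/dt = a*e + u,   u = -k*e - sign(e)*(lam1*|e|**p + lam2*|e|**q)
    dk/dt = eps * e**2  (assumed adaptive law; eps = 0 freezes the gain at 0)
    """
    steps = int(round(T / dt))
    e = np.empty(steps + 1)
    k = np.empty(steps + 1)
    e[0], k[0] = e0, 0.0
    for i in range(steps):
        ei, ki = float(e[i]), float(k[i])
        u = -ki * ei - np.sign(ei) * (lam1 * abs(ei) ** p + lam2 * abs(ei) ** q)
        e[i + 1] = ei + dt * (a * ei + u)
        k[i + 1] = ki + dt * eps * ei ** 2   # gain never decreases
    return e, k

e_adapt, k_adapt = simulate_adaptive()          # gain adapts
e_fixed, k_fixed = simulate_adaptive(eps=0.0)   # gain stays at zero
```

In this toy setting the sign-power terms alone do not overcome the assumed drift for the chosen initial error, so the run with `eps=0.0` settles at a nonzero error, while the adaptive gain grows until the error is driven to zero. This is meant to show why an adaptive gain avoids choosing the coefficient by hand.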

Theorem 2.

Proof.

To prove Theorem 2, we construct . Then

Note that

It follows that

Combined with the inequality relation in Theorem 2, it can finally be obtained

By Lemma 2, the stability time can be calculated

□

3. Model Improvement and Extension

Modification of the Model Based on the Actual Situation

Usually, the past state of a recurrent neural network system inevitably influences its current state. That is, the evolution of the system depends not only on its current state, but also on its state at one or several moments in the past. In this section, we introduce distributed and discrete time-varying delays into the model and consider the following system

where and are time-varying delays and satisfy . In this section, considering system (8) as the driving system, there is the following response system

According to (8) and (9), the error system is

with

Let satisfy the local Lipschitz and linear growth conditions. Moreover, satisfies

To better study the synchronization properties, we consider adaptive control here

The adaptive control gain is represented by and the designed adaptive law is

where is the undetermined constant. Then the synchronization criterion of STSFRNNS under adaptive control (11) can be obtained.

Theorem 3.

Proof.

To prove Theorem 3, we construct . Then

Note that

Hence,

Combined with the inequality relation in Theorem 3, it can finally be obtained

By Lemma 2, the stability time can be calculated

□

4. Numerical Simulation

The theoretical results in Sections 2 and 3 establish the fixed time synchronization property of the STSFRNNS model. To verify these results, numerical simulations of the trajectories of the system under the two kinds of control are carried out, and the simulation results are consistent with the theory. Taking , the expression of drive system (3) is

where , and

Furthermore, let , and . The values of , and are listed in Table 1, Table 2 and Table 3 respectively.

Table 1.

Values of .

Table 2.

Values of .

Table 3.

Values of .

Then the expression of the response system (4) is

It is not hard to obtain

In addition, according to (12) and (13), the error system (5) is

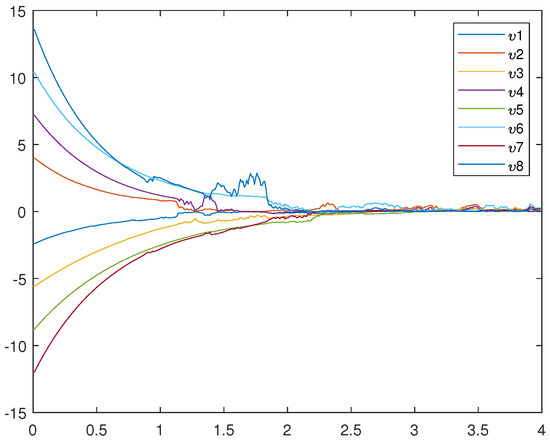

4.1. Numerical Simulation 1

To verify Theorem 1, the following numerical simulations show that the fixed time synchronization of (12) and (13) under the action of feedback control can be achieved. The controller (6) is

where . All other parameters not mentioned are zero. According to the values of the above parameters, the conditions in Theorem 1 can be verified. That is,

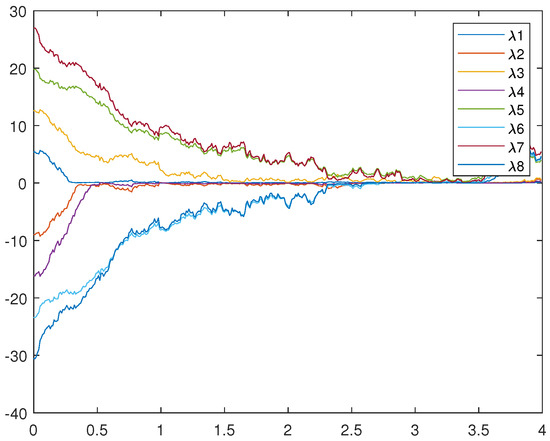

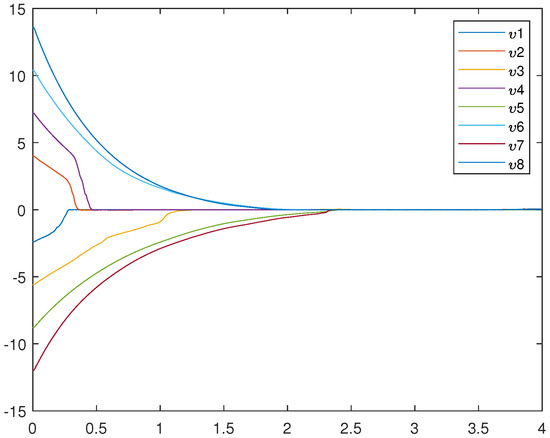

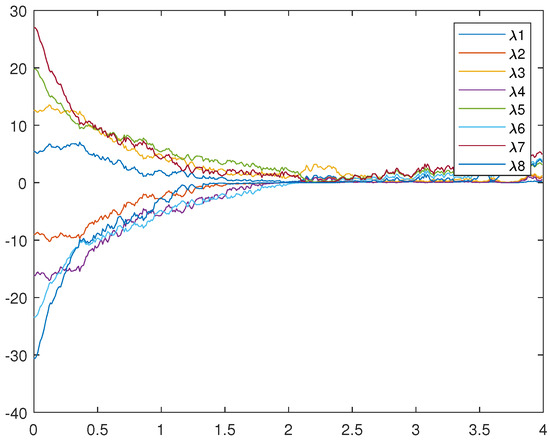

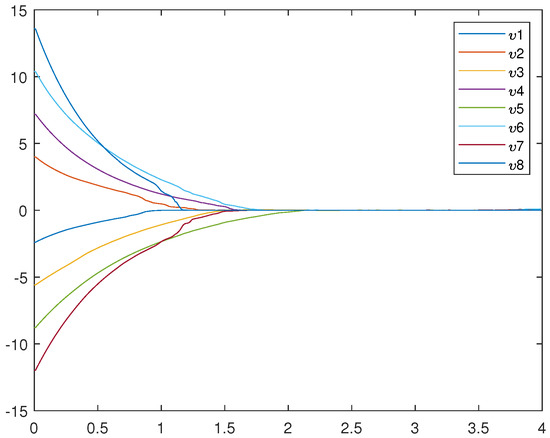

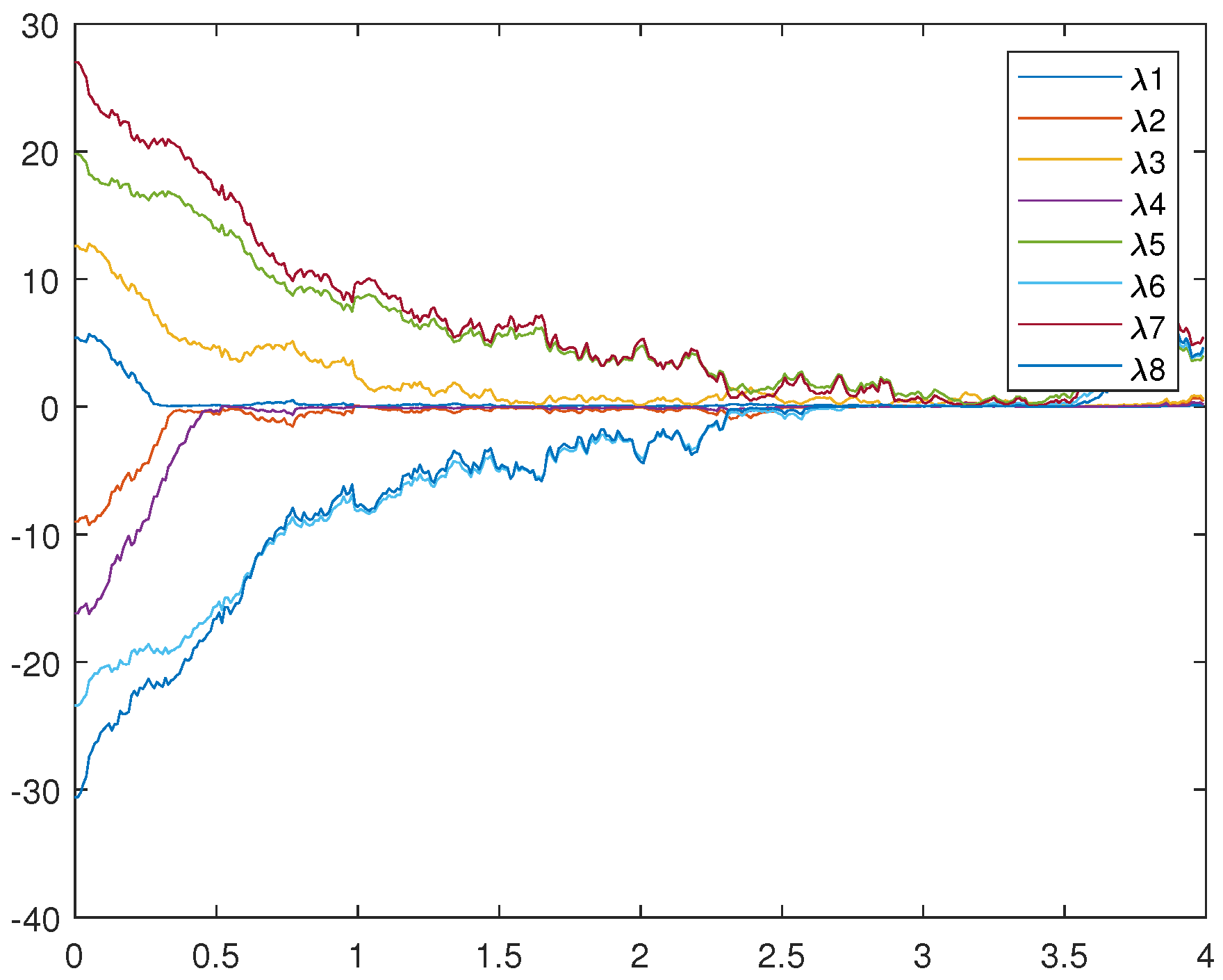

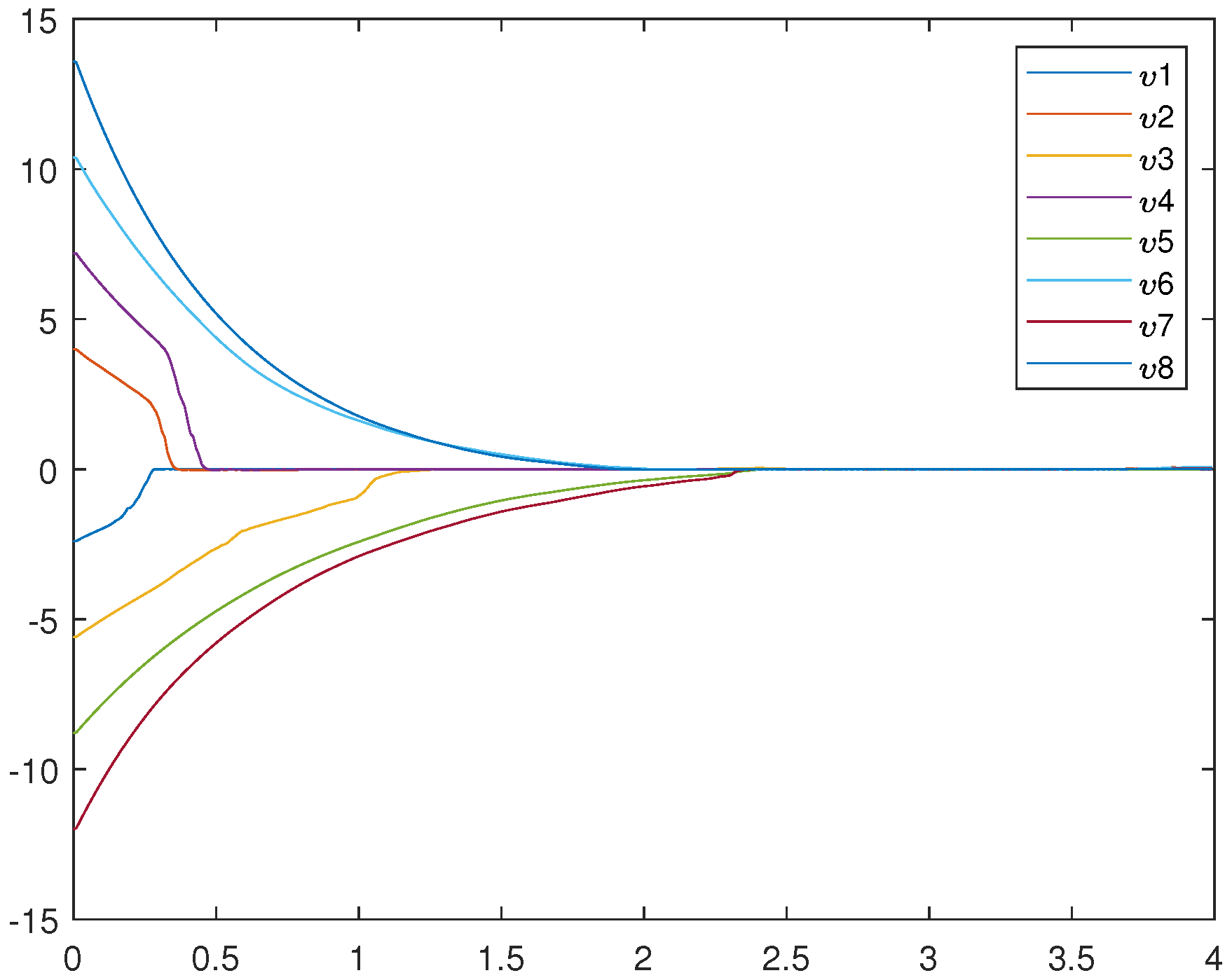

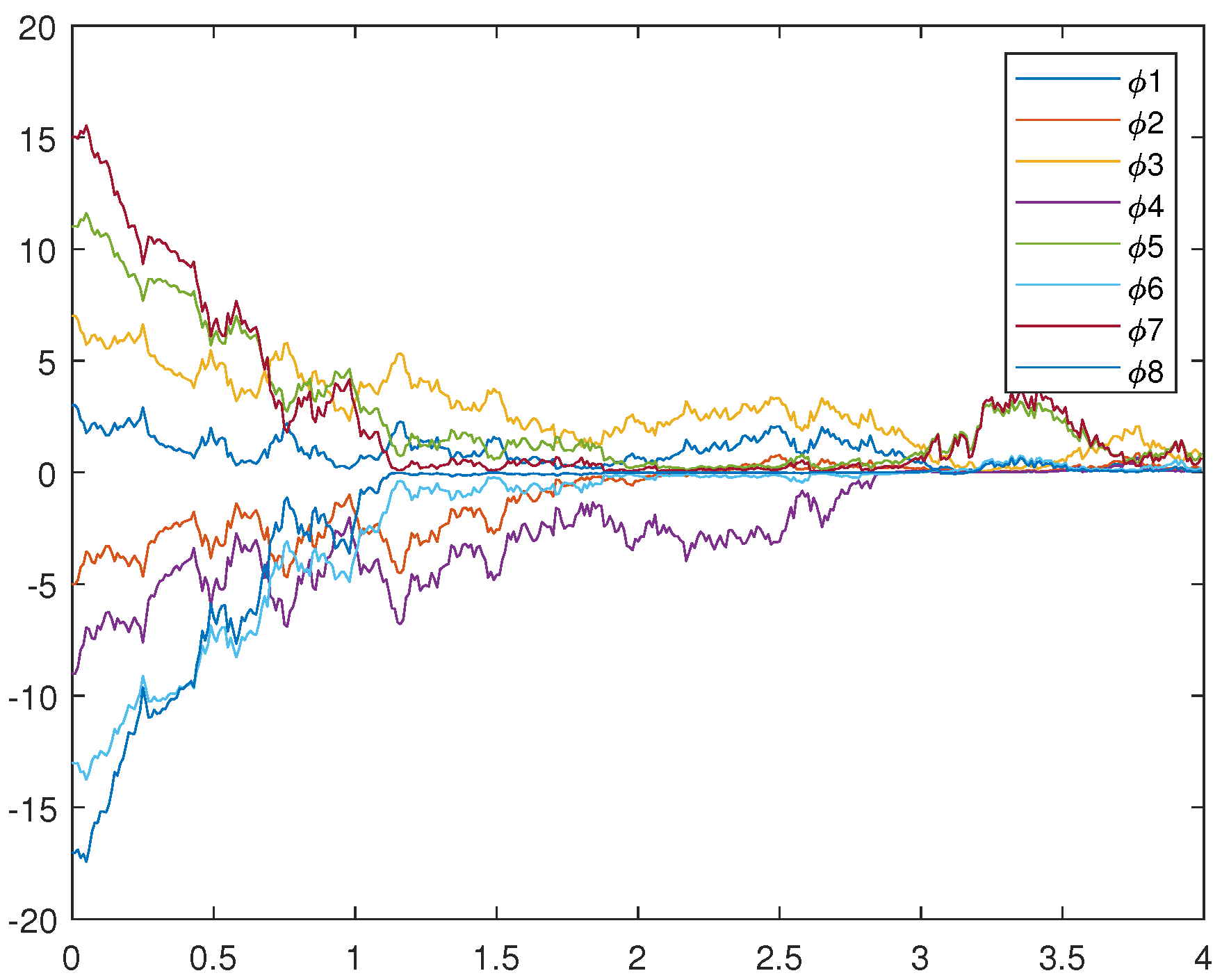

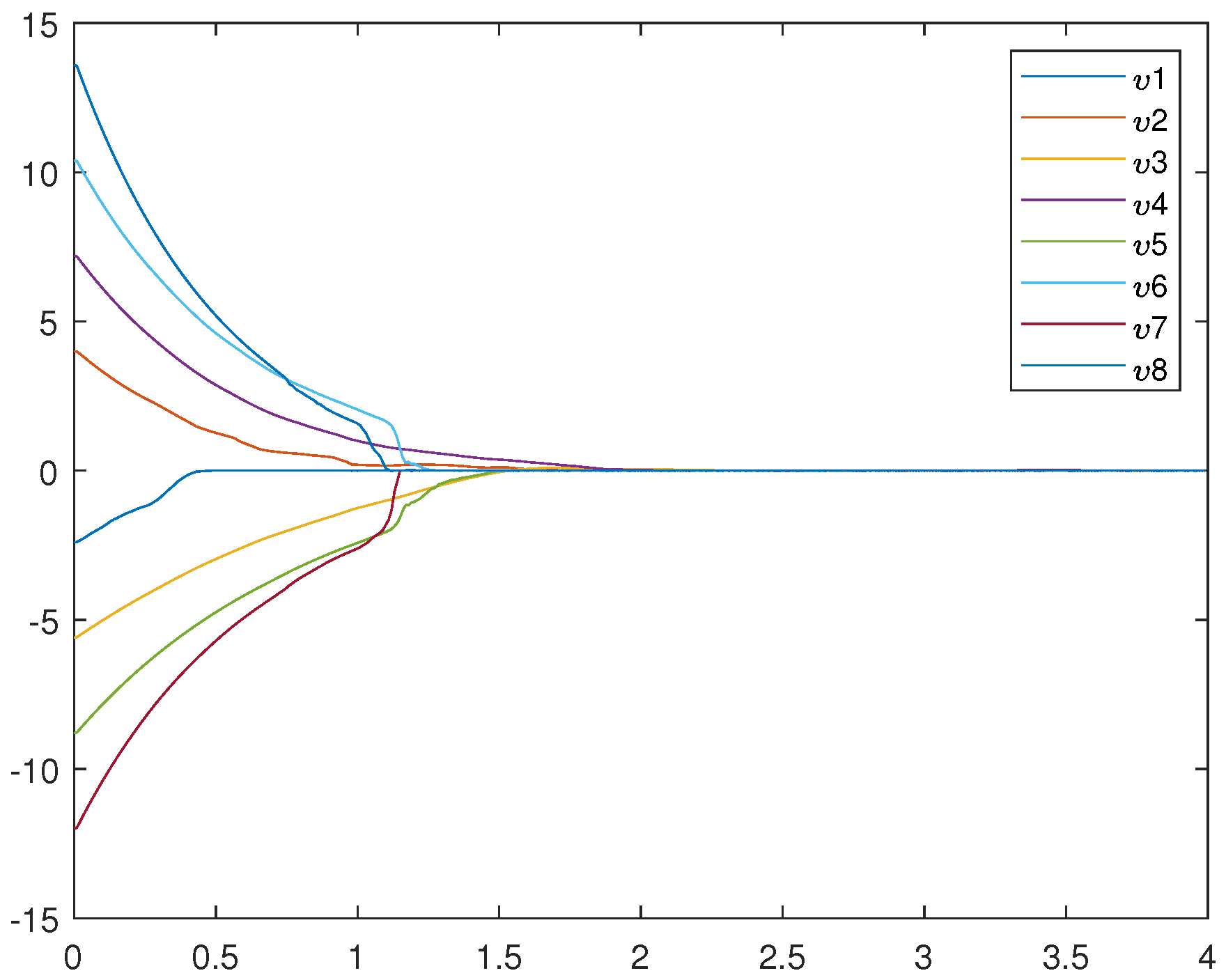

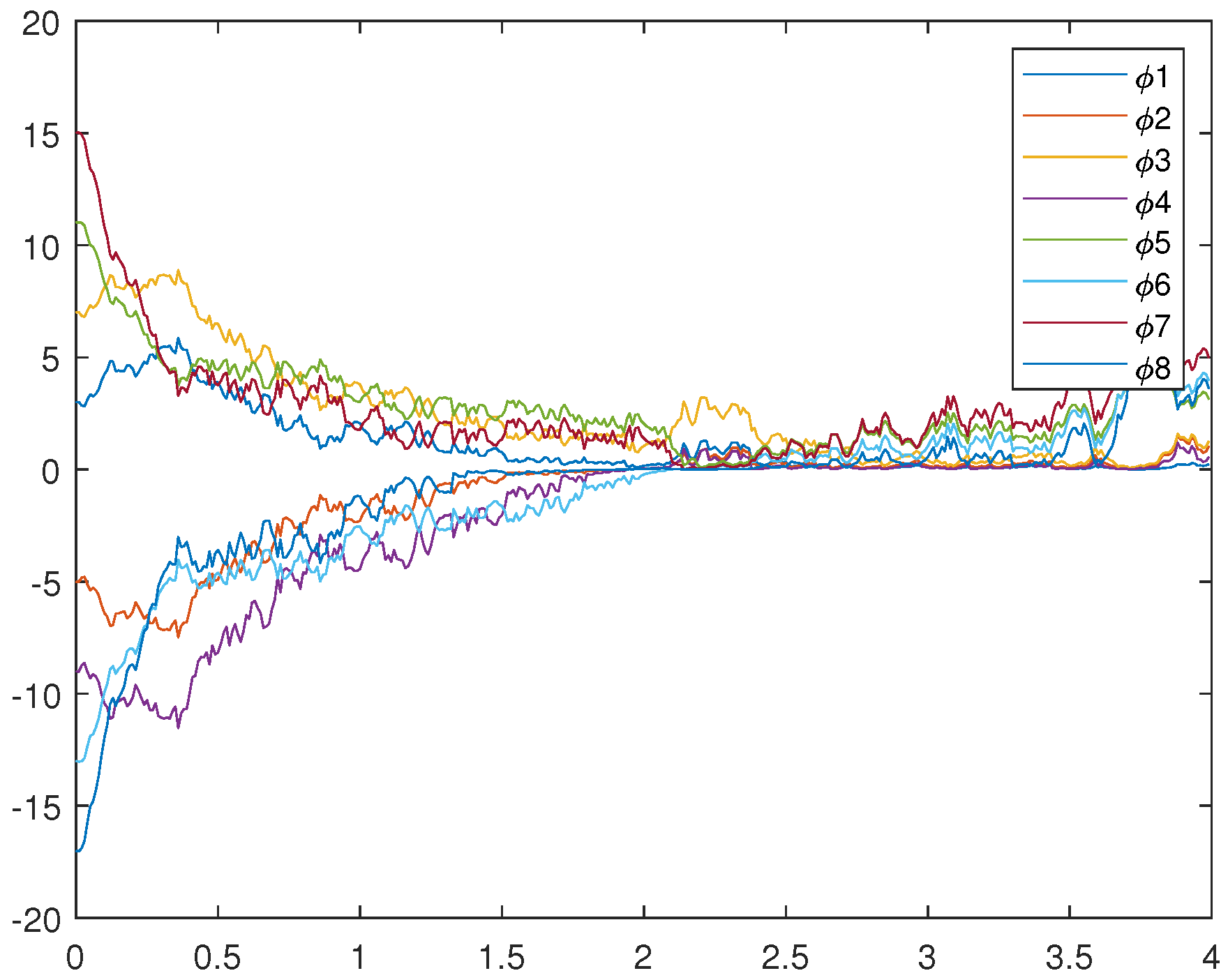

Figure 1 shows the error system without control. Without the controller, the trajectories of the error system do not achieve synchronization. Figure 2, Figure 3, and Figure 4 show the trajectories of (12), (13), and (14), respectively. Figure 4 clearly shows that the trajectory of the error system tends to zero within a certain period of time, so the STSFRNNS model achieves synchronization.
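An experiment of this kind can be set up in a few lines. The following minimal sketch simulates a scalar drive–response pair with a discrete delay and returns the error trajectory under an assumed feedback controller of the form u = −k e − sign(e)(λ1|e|^p + λ2|e|^q). The scalar dynamics, the noise term acting on the response, the function name `simulate_error`, and every numerical value are illustrative assumptions. They do not reproduce systems (12)–(14) or the parameters in Tables 1–3.

```python
import numpy as np

def simulate_error(k=3.0, lam1=1.0, lam2=1.0, p=0.5, q=1.5, controlled=True,
                   tau=0.2, T=4.0, dt=1e-3, noise_gain=0.1, seed=1):
    """Error e = y - x of an ASSUMED scalar delayed drive-response pair."""
    rng = np.random.default_rng(seed)
    m, steps = int(round(tau / dt)), int(round(T / dt))
    x = np.full(m + steps + 1, 0.4)    # drive, constant history on [-tau, 0]
    y = np.full(m + steps + 1, -0.9)   # response, constant history on [-tau, 0]

    def f(s, s_delayed):
        # assumed local dynamics with one discrete delay
        return -s + 2.0 * np.tanh(s) - 1.5 * np.tanh(s_delayed)

    for i in range(m, m + steps):
        e = y[i] - x[i]
        u = 0.0
        if controlled:
            u = -k * e - np.sign(e) * (lam1 * abs(e) ** p + lam2 * abs(e) ** q)
        dB = rng.normal(0.0, np.sqrt(dt))
        x[i + 1] = x[i] + f(x[i], x[i - m]) * dt
        # noise intensity proportional to the error (assumption)
        y[i + 1] = y[i] + (f(y[i], y[i - m]) + u) * dt + noise_gain * e * dB
    return y[m:] - x[m:]

e_ctrl = simulate_error()
```

Calling `simulate_error(controlled=False)` gives the corresponding error trajectory without control, which can be plotted next to `e_ctrl` for comparison.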

Figure 1.

Trajectories of error system (14) without control ().

Figure 2.

Trajectories of drive system (12).

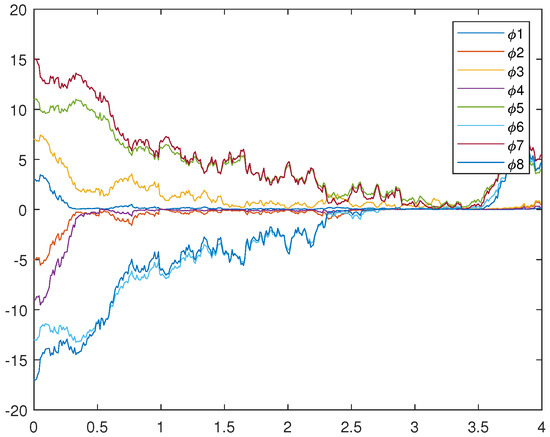

4.2. Numerical Simulation 2

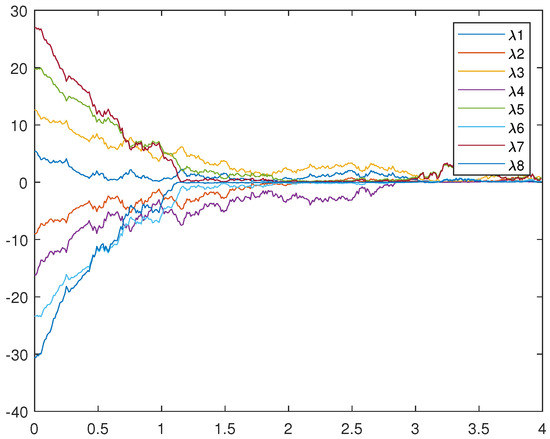

To verify Theorem 2, the following numerical simulations show that the fixed time synchronization of (12) and (13) under the action of adaptive control can be achieved.

The adaptive control gain is represented by and the designed adaptive law is

where , and the values not mentioned are the same as before. All other parameters not mentioned are zero. According to the values of the above parameters, the conditions in Theorem 2 can be verified. That is,

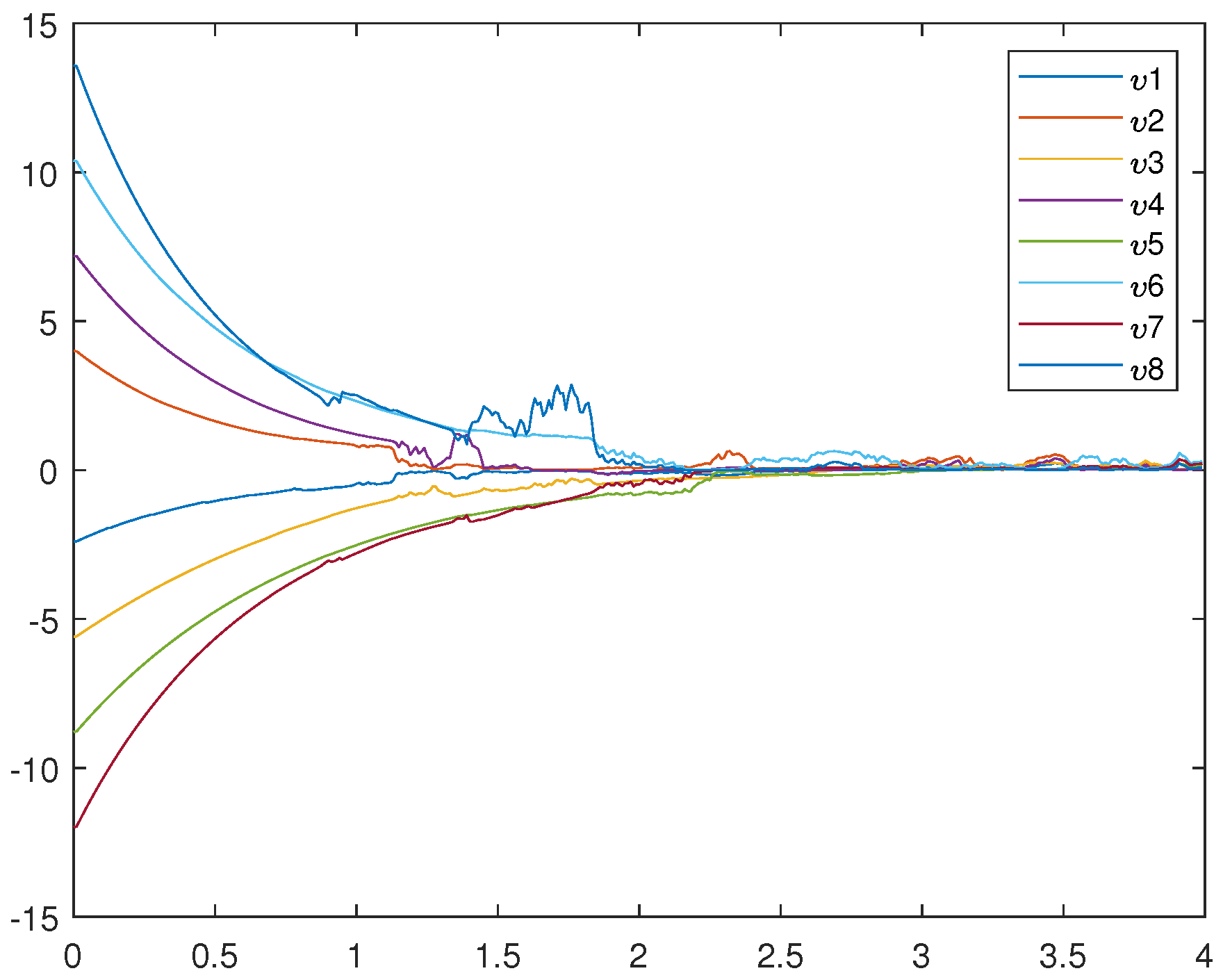

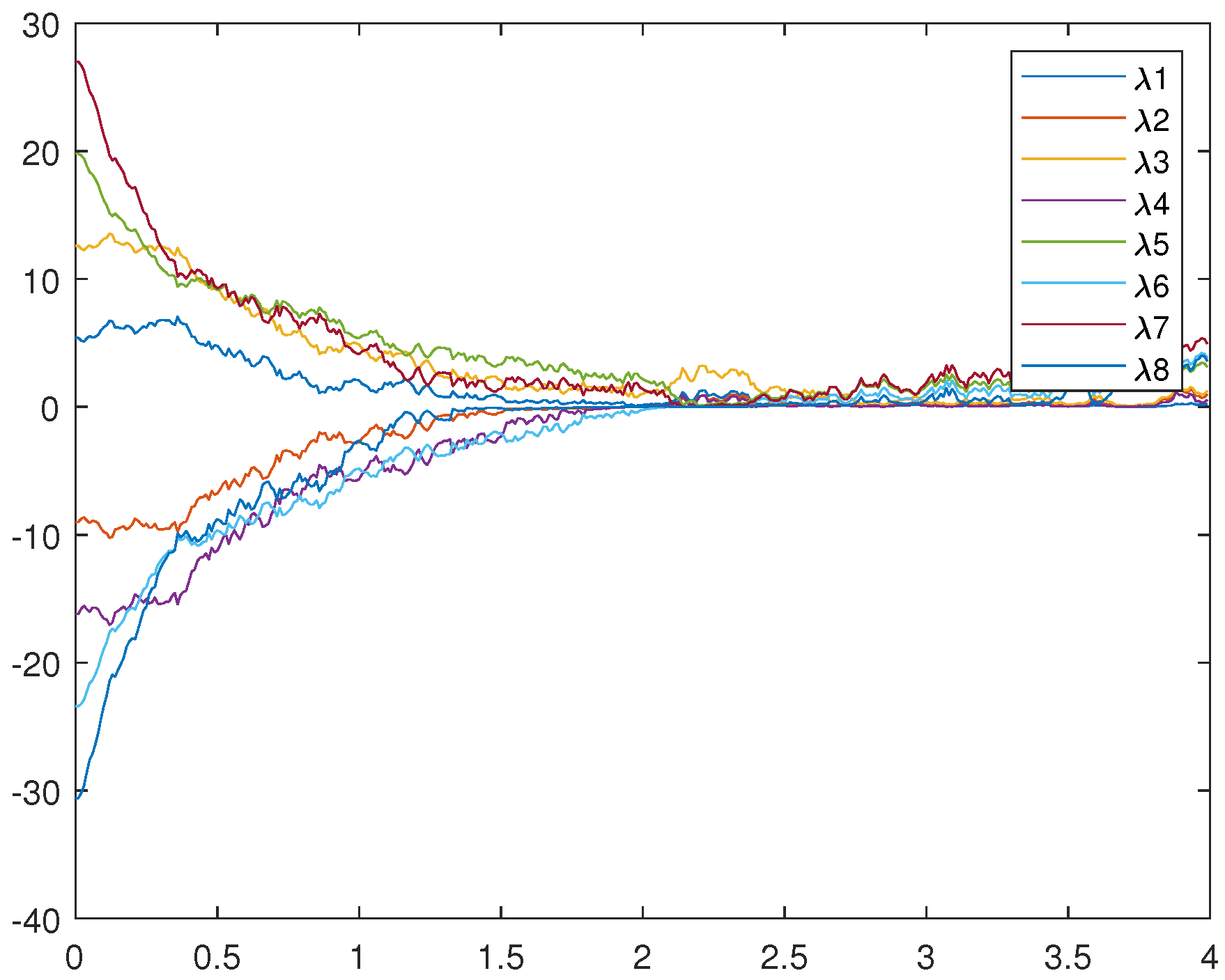

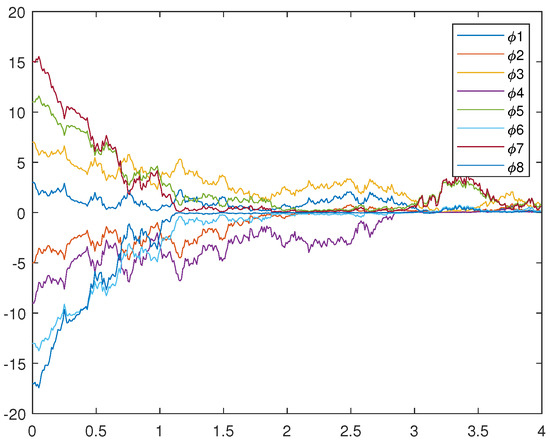

Figure 5, Figure 6, and Figure 7 show the trajectories of (12), (13), and (14), respectively. Figure 7 clearly shows that the trajectory of the error system tends to zero within a certain period of time, so the STSFRNNS model achieves synchronization.

Figure 5.

Trajectories of drive system (12).

4.3. Brief Summary

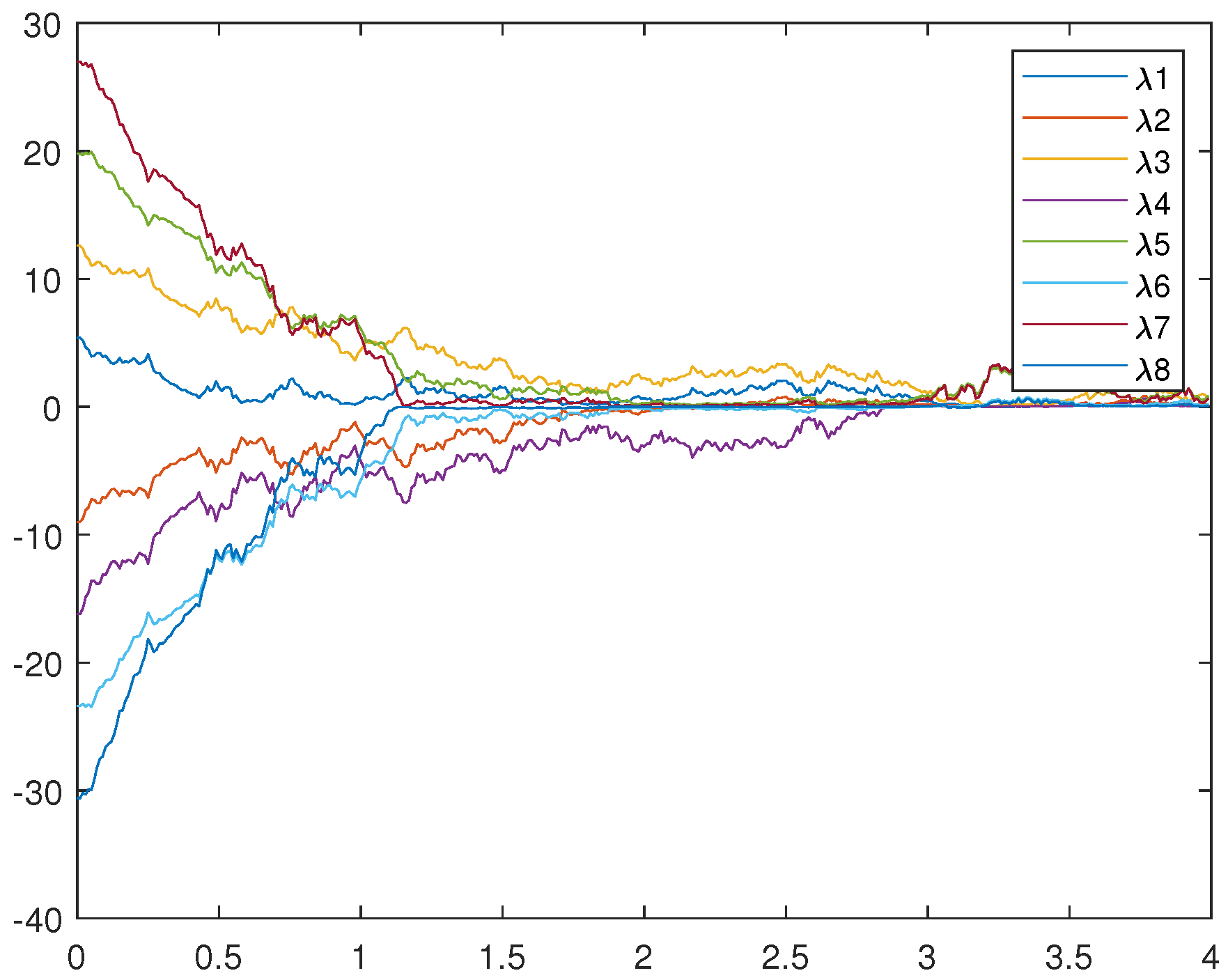

Figure 4 and Figure 7 show that both controllers designed above make the STSFRNNS achieve fixed time synchronization. Compared with Figure 4, the convergence in Figure 7 is faster and the error system approaches 0 earlier. With the other conditions unchanged, adaptive control is therefore the more suitable choice for the STSFRNNS in this example.
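A comparison of convergence speed like this one can be made quantitative by extracting an empirical settling time from each simulated error trajectory. The helper below is a minimal sketch: the name `empirical_settling_time`, the tolerance, and the two synthetic trajectories are assumptions for illustration and are not the paper's simulation data.

```python
import numpy as np

def empirical_settling_time(t, e, tol=1e-3):
    """First time after which |e| stays below tol; None if it never does.

    e may be 1-D (one error component) or 2-D (time x components); in the
    2-D case the largest component at each time is used.
    """
    t = np.asarray(t, dtype=float)
    mag = np.abs(np.asarray(e, dtype=float))
    if mag.ndim > 1:
        mag = mag.max(axis=1)
    above = np.flatnonzero(mag >= tol)
    if above.size == 0:
        return t[0]                 # already within tolerance at the start
    last = above[-1]
    if last == len(t) - 1:
        return None                 # still outside tolerance at the final sample
    return t[last + 1]

# Synthetic trajectories (made up): one reaches zero at t = 0.5, one at t = 1.0.
t = np.linspace(0.0, 5.0, 501)
e_fast = np.maximum(1.0 - 2.0 * t, 0.0)
e_slow = np.maximum(1.0 - t, 0.0)
ts_fast = empirical_settling_time(t, e_fast)
ts_slow = empirical_settling_time(t, e_slow)
```

Because the noise differs from run to run, it is reasonable to average such settling times over several sample paths before comparing controllers.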

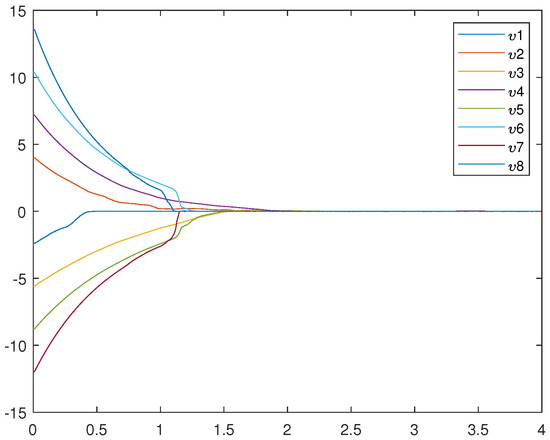

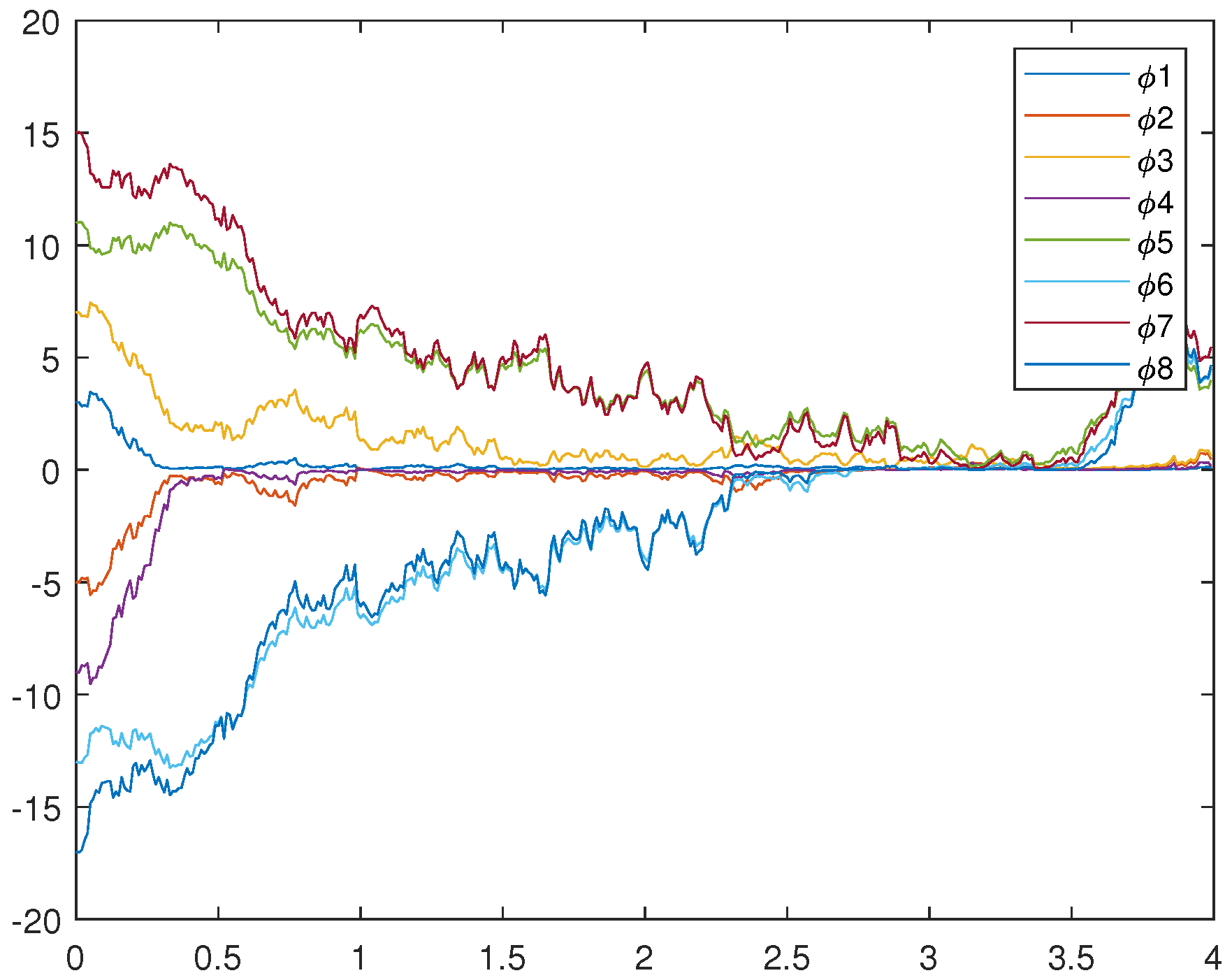

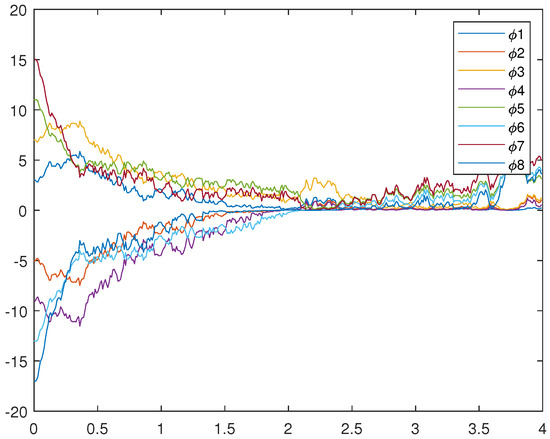

4.4. Numerical Simulation 3

To verify Theorem 3, the following numerical simulations show that the fixed time synchronization of (12) and (13) under the action of adaptive control can be achieved.

The adaptive control gain is represented by and the designed adaptive law is

According to the values of the above parameters, the conditions in Theorem 3 can be verified.

The drive system and response system are

and

In addition, according to (18) and (19), the error system is

Let

and

Then

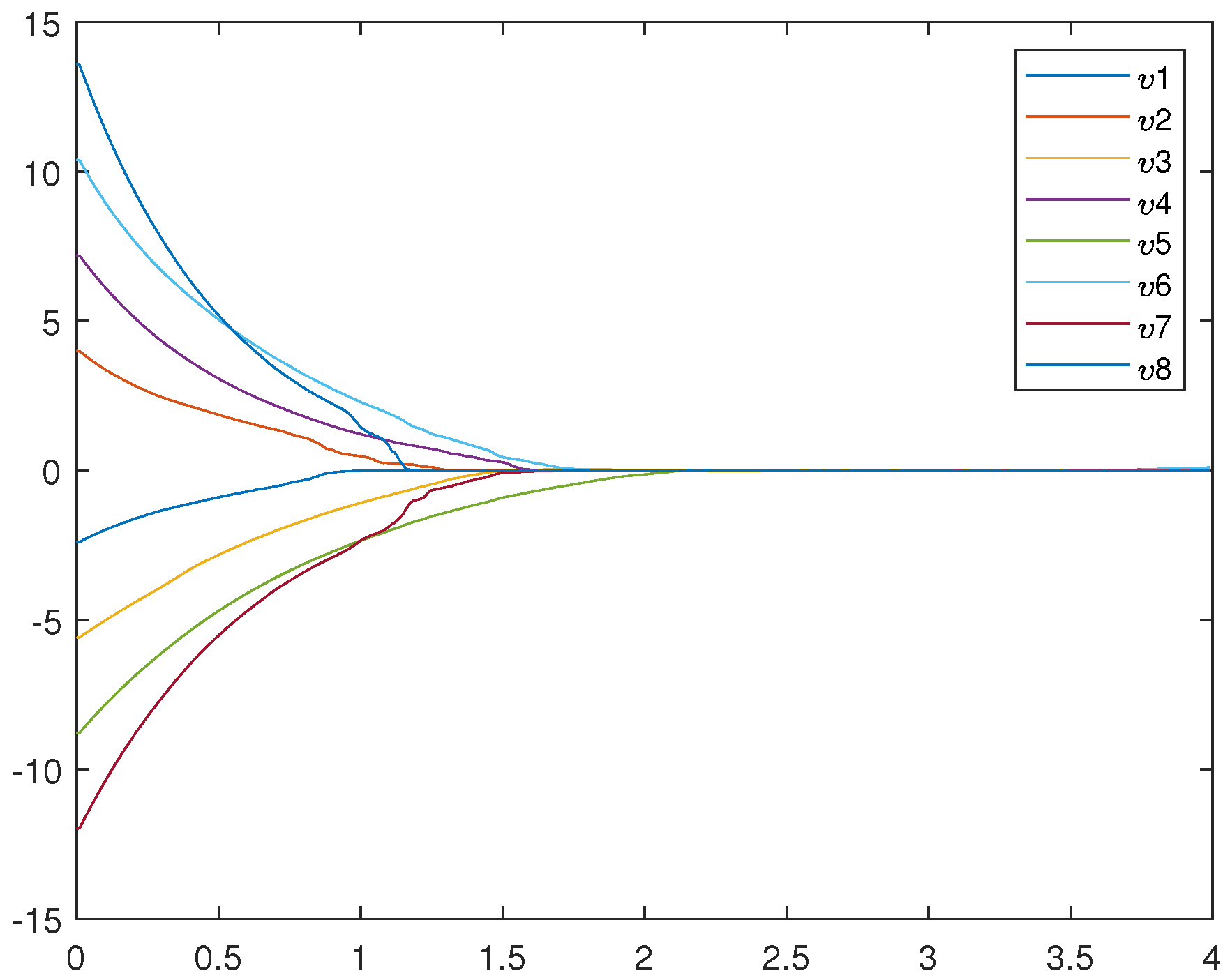

All of the parameter values are the same as in Numerical Simulation 2. Figure 8, Figure 9, and Figure 10 represent the trajectory of (18), (19), and (20), respectively.

Figure 8.

Trajectories of drive system (18).

5. Conclusions

In this paper, we study the synchronization characteristics of STSFRNNS under two control methods and give a synchronization theorem for the system under each controller. We compare the two control methods and extend the model according to the actual situation. Finally, the theory is verified by numerical simulation. Through this work, the fixed time synchronization property of the RNNs is established.

Compared with exponential synchronization and finite time synchronization [30,31,32,33], the fixed time synchronization control used in this paper yields an explicit estimate of the synchronization time that does not depend on the initial conditions. The conclusions obtained in this paper improve and extend the existing research work on neural network synchronization. However, practical applications are not given in this paper.

This paper mainly studies the theoretical method. In future work, we hope to identify real engineering problems and apply the model to solve them.

Author Contributions

Writing—original draft preparation, Y.N.; software, X.X.; writing—review and editing, M.L. All authors participated in the writing and coordination of the manuscript. All authors have read and agreed to the published version of the manuscript.

Funding

This work is supported by the College Students Innovations Special Project funded by Northeast Forestry University (DC-2024130), the Natural Science Foundation of Heilongjiang Province (No. LH2022A002), and the National Natural Science Foundation of China (No. 12071115).

Data Availability Statement

Data are contained within the article.

Conflicts of Interest

The authors declare that they have no competing interests.

References

- Wang, J.; Xi, R.; Cai, T.; Lu, H.; Zhu, R.; Zheng, B.; Chen, H. Deep Neural Network with Data Cropping Algorithm for Absorptive Frequency-Selective Transmission Metasurface. Adv. Opt. Mater. 2022, 10, 2200178.

- Li, Y.; Lam, J.; Fang, R. Mean square stability of linear stochastic neutral-type time-delay systems with multiple delays. Int. J. Robust Nonlinear Control 2019, 29, 451–472.

- Wang, Z.; Liu, Y.; Zhou, P.; Tan, Z.; Fan, H.; Zhang, Y.; Shen, L.; Ru, J.; Wang, Y.; Ye, L.; et al. A 148-nW Reconfigurable Event-Driven Intelligent Wake-Up System for AIoT Nodes Using an Asynchronous Pulse-Based Feature Extractor and a Convolutional Neural Network. IEEE J. Solid-State Circuits 2021, 56, 3274–3288.

- Zhang, H.; Tang, Z.; Xie, Y.; Chen, Q.; Gao, X.; Gui, W. Feature Reconstruction-Regression Network: A Light-Weight Deep Neural Network for Performance Monitoring in the Froth Flotation. IEEE Trans. Ind. Inform. 2021, 17, 8406–8417.

- Saquetti, M.; Canofre, R.; Lorenzon, A.F.; Rossi, F.D.; Azambuja, J.R.; Cordeiro, W.; Luizelli, M.C. Toward In-Network Intelligence: Running Distributed Artificial Neural Networks in the Data Plane. IEEE Commun. Lett. 2021, 25, 3551–3555.

- Forti, M.; Nistri, P. Global convergence of neural networks with discontinuous neuron activations. IEEE Trans. Circuits Syst. Fundam. Theory Appl. 2003, 50, 1421–1435.

- Liu, X.; Cao, J. Nonsmooth Finite-Time Synchronization of Switched Coupled Neural Networks. IEEE Trans. Cybern. 2003, 46, 2360–2371.

- Teixeira, D.; Calili, F.; Almeida, F.M. Recurrent Neural Networks for Estimating the State of Health of Lithium-Ion Batteries. Batteries 2024, 10, 111.

- Pals, M.; Macke, H.J.; Barak, O. Trained recurrent neural networks develop phase-locked limit cycles in a working memory task. PLoS Comput. Biol. 2024, 20, e1011852.

- González, S.; Peñalba, A.; Sumper, A. Distribution network planning method: Integration of a recurrent neural network model for the prediction of scenarios. Electr. Power Syst. Res. 2024, 229, 110125–110129.

- Chen, G. Recurrent neural networks (RNNs) learn the constitutive law of viscoelasticity. Comput. Mech. 2021, 67, 1009–1019.

- Feng, L.; Wu, Z.; Cao, J. Exponential stability for nonlinear hybrid stochastic systems with time varying delays of neutral type. Appl. Math. Lett. 2020, 107, 106468.

- Pichamuthu, M.; An araman, R. The split step theta balanced numerical approximations of stochastic time varying Hopfield neural networks with distributed delays. Results Control Optim. 2023, 13, 100329.

- Li, B.; Cheng, X. Synchronization analysis of coupled fractional-order neural networks with time-varying delays. Math. Biosci. Eng. 2023, 20, 14846–14865.

- Liao, X.; Mao, X. Exponential stability and instability of stochastic neural networks. Stoch. Anal. Appl. 1996, 14, 165–185.

- Li, B.; Cao, Y.; Li, Y. Almost automorphic solutions in distribution for octonion-valued stochastic recurrent neural networks with time-varying delays. Int. J. Syst. Sci. 2024, 55, 102–118.

- Zeng, R.; Song, Q. Mean-square exponential input-to-state stability for stochastic neutral-type quaternion-valued neural networks via Itô’s formula of quaternion version. Chaos Solitons Fractals 2024, 178, 114341.

- Carlos, G. Neural network based generation of a 1-dimensional stochastic field with turbulent velocity statistics. Phys. D Nonlinear Phenom. 2024, 458, 133997.

- Takagi, T.; Sugeno, M. Fuzzy identification of systems and its applications to modeling and control. IEEE Trans. Syst. Man Cybern. 1985, 15, 116–132.

- Wu, H.; Bian, Y.; Zhang, Y.; Guo, Y.; Xu, Q.; Chen, M. Multi-stable states and synchronicity of a cellular neural network with memristive activation function. Chaos Solitons Fractals 2023, 177, 114201.

- Thazhathethil, R.; Abdulraheem, P.S. In-phase and anti-phase bursting dynamics and synchronisation scenario in neural network by varying coupling phase. J. Biol. Phys. 2023, 49, 345–361.

- Thomas, B.; Manuel, C.; Caroline, A.L. Revisiting the involvement of tau in complex neural network remodeling: Analysis of the extracellular neuronal activity in organotypic brain slice co-cultures. J. Neural Eng. 2022, 19, 066026.

- Chu, L. Neural network-based robot nonlinear output feedback control method. J. Comput. Methods Sci. Eng. 2023, 23, 1007–1019.

- Shen, F.; Wang, X.; Pan, X. Event-triggered adaptive neural network control design for stochastic nonlinear systems with output constraint. Int. J. Adapt. Control Signal Process. 2023, 38, 342–358.

- Yao, Z.; Wang, C. Control the collective behaviors in a functional neural network. Chaos Solitons Fractals 2021, 152, 111361.

- Phan, C.; Skrzypek, L.; You, Y. Dynamics and synchronization of complex neural networks with boundary coupling. Anal. Math. Phys. 2022, 12, 33.

- Zuo, Z.; Tie, L. Distributed robust finite-time nonlinear consensus protocols for multiagent systems. Int. J. Syst. Sci. 2016, 47, 1366–1375.

- Liu, X.; Wang, F.; Tang, M. Stability and synchronization analysis of neural networks via Halanay-type inequality. J. Comput. Appl. Math. 2017, 319, 14–23.

- Ren, H.; Peng, Z.; Gu, Y. Fixed-time synchronization of stochastic memristor-based neural networks with adaptive control. Neural Netw. 2020, 130, 165–175.

- Wen, S.; Zeng, Z.; Huang, T. Exponential Adaptive Lag Synchronization of Memristive Neural Networks via Fuzzy Method and Applications in Pseudorandom Number Generators. IEEE Trans. Fuzzy Syst. 2013, 22, 1704–1713.

- Zhang, Z.; Cao, J. Finite-Time Synchronization for Fuzzy Inertial Neural Networks by Maximum Value Approach. IEEE Trans. Fuzzy Syst. 2022, 30, 1436–1446.

- Asghar, B.; Ehsan, R.; Naveed, K. Recurrent neural network for pitch control of variable-speed wind turbine. Sci. Prog. 2024, 107, 3682–3685.

- Du, F.; Lu, J. New criterion for finite-time synchronization of fractional order memristor-based neural networks with time delay. Appl. Math. Comput. 2020, 389, 125616.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).