Abstract

The portmanteau test is an effective tool for testing the goodness of fit of models. Motivated by the fact that high-frequency data can improve the estimation accuracy of models, this paper proposes a modified portmanteau test using high-frequency data for ARCH-type models. Simulation results show that the empirical size and power of the modified test statistic based on high-frequency data are better than those of the test based on the daily model. Three stock indices (CSI 300, SSE 50, CSI 500) are taken as examples to illustrate the practical application of the test.

MSC:

62H15; 62G20

1. Introduction

Securities trading has always been a prominent topic in the financial sector, and volatility serves as a crucial indicator for analyzing fluctuations in trading price data. Volatility reflects the expected level of price instability in a financial asset or market, which greatly influences investment decisions [1]. In the field of volatility modeling, the autoregressive conditional heteroscedasticity (ARCH) model and the generalized autoregressive conditional heteroscedasticity (GARCH) model are widely recognized as two fundamental models [2]. Let $y_t$ be the log-return of day t. The ARCH model proposed by Engle (1982) [3] is structured as follows:

$$y_t = \sigma_t \varepsilon_t, \qquad \sigma_t^2 = \alpha_0 + \sum_{i=1}^{q} \alpha_i y_{t-i}^2,$$

where $\{\varepsilon_t\}$ is an independent and identically distributed (i.i.d.) sequence, and $\sigma_t$ represents the volatility of $y_t$. Additionally, it is assumed that the expectation of $\varepsilon_t$ is zero, i.e., $E(\varepsilon_t) = 0$, and the expectation of $\varepsilon_t^2$ is equal to one, i.e., $E(\varepsilon_t^2) = 1$. The parameters $\alpha_1, \ldots, \alpha_q$ are the coefficients associated with the lagged squared observations $y_{t-1}^2, \ldots, y_{t-q}^2$, which need to be estimated. ARCH models are commonly employed in time-series modeling and analysis. However, when the order q of the ARCH(q) model is large, the number of parameters that require estimation increases. This can lead to challenges in estimation, particularly in finite samples, where estimation efficiency may decrease. Furthermore, the estimated parameter values may turn out negative. To address these limitations, Bollerslev (1986) [4] proposed the generalized autoregressive conditional heteroscedasticity (GARCH) model. For the pure GARCH(1,1) model, the conditional variance equation is expressed as follows:

$$\sigma_t^2 = \omega + \alpha y_{t-1}^2 + \beta \sigma_{t-1}^2. \tag{1}$$

Obviously, Formula (1) is a recursive equation. Replacing $t$ with $t-1$ gives

$$\sigma_{t-1}^2 = \omega + \alpha y_{t-2}^2 + \beta \sigma_{t-2}^2. \tag{2}$$

From (1) and (2), we obtain

$$\sigma_t^2 = \omega(1 + \beta) + \alpha y_{t-1}^2 + \alpha\beta y_{t-2}^2 + \beta^2 \sigma_{t-2}^2.$$

Repeating the process above, we expand $\sigma_t^2$ further. Provided that $0 \le \beta < 1$, this yields an infinite-order ARCH model, $\sigma_t^2 = \omega/(1-\beta) + \alpha \sum_{j=1}^{\infty} \beta^{j-1} y_{t-j}^2$. Since the GARCH model is a generalization of the ARCH model, they are collectively referred to as ARCH-type models. In financial data analysis, apart from heteroscedasticity, there are other prominent characteristics, such as the leverage effect (Black, 1976) [5]. To address this, Geweke (1986) [6] introduced the asymmetric log-GARCH model, while Engle et al. (1993) [7] introduced the asymmetric power GARCH model to account for the leverage effect. Furthermore, Drost and Klaassen (1997) [8] modified the pure GARCH(1,1) model as follows:

For the ARCH(q) case, the conditional variance equation is given by

When , , and , the models (3) and (4) can be transformed into the pure GARCH(1,1) model. Similarly, the models (3) and (5) can be transformed into the pure ARCH(q) model. The advantage of this model is that the standardization of only affects the parameter . By standardizing the residuals, they are made unit variance, simplifying the estimation process and allowing the focus to be on estimating the parameters of interest without being influenced by the scale of the residuals.
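The expansion of the pure GARCH(1,1) recursion into an ARCH model of growing order can be checked numerically. The following is a minimal Python sketch, not the authors' code (the paper's computations were done in R). It assumes the recursion is written as $\sigma_t^2 = \omega + \alpha y_{t-1}^2 + \beta \sigma_{t-1}^2$; the symbols, the Gaussian innovations, and the parameter values 0.1, 0.2, and 0.7 are illustrative assumptions.

```python
import math
import random


def simulate_garch11(n, omega, alpha, beta, seed=0):
    """Simulate y_t = sigma_t * eps_t with Gaussian eps_t (an illustrative choice)."""
    rng = random.Random(seed)
    sigma2 = omega / (1.0 - alpha - beta)  # start at the unconditional variance
    y, sigma2_path = [], []
    for _ in range(n):
        sigma2_path.append(sigma2)
        y_t = math.sqrt(sigma2) * rng.gauss(0.0, 1.0)
        y.append(y_t)
        # GARCH(1,1) recursion for the next conditional variance
        sigma2 = omega + alpha * y_t ** 2 + beta * sigma2
    return y, sigma2_path


def arch_expansion(y, omega, alpha, beta, sigma2_0, t):
    """sigma_t^2 obtained by substituting the recursion into itself t times."""
    constant = omega * sum(beta ** j for j in range(t))
    arch_part = alpha * sum(beta ** (j - 1) * y[t - j] ** 2 for j in range(1, t + 1))
    remainder = beta ** t * sigma2_0  # vanishes as t grows when 0 <= beta < 1
    return constant + arch_part + remainder


y, sigma2_path = simulate_garch11(300, omega=0.1, alpha=0.2, beta=0.7)
gap = abs(arch_expansion(y, 0.1, 0.2, 0.7, sigma2_path[0], 250) - sigma2_path[250])
print(gap < 1e-9)
```

The recursive and expanded forms agree up to floating-point error, and the remainder term $\beta^t \sigma_0^2$ shows why the expansion converges to an infinite-order ARCH form only when $0 \le \beta < 1$.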

With the advancement of information technology, obtaining intraday high-frequency data has become effortless, and such data often contain valuable information. Recognizing this, Visser (2011) [9] introduced high-frequency data into models (3) and (4), leading to improved efficiency in parameter estimation. Subsequently, numerous researchers have explored the enhancement of classical models by utilizing high-frequency data. Huang et al. (2015) [10] incorporated high-frequency data into the GJR model (named after its proponents Glosten, Jagannathan, and Runkle) [11] and examined a range of robust M-estimators [12]. Wang et al. (2017) [13] employed composite quantile regression to examine the GARCH model using high-frequency data. Other notable studies include Fan et al. (2017) [14], Deng et al. (2020) [15], and Liang et al. (2021) [16].

In addition to model parameter estimation, model testing plays a crucial role in time-series modeling and analysis, as researchers seek to assess the adequacy of the fitted models. Portmanteau tests have been widely used for this purpose. The earliest work in this area can be traced back to Box and Pierce (1970) [17], who demonstrated the utility of residual autocorrelations for model testing. Since then, several researchers have applied this test to time-series models, including Granger et al. (1978) [18] and McLeod and Li (1983) [19]. For instance, Engle and Bollerslev (1986) [20] and Pantula (1988) [21] used the test to examine the presence of ARCH in the error term. However, Li and Mak (1994) [22] showed that the asymptotic covariance matrix of the residual autocorrelations is not idempotent when using ARCH-type models. To overcome this issue, they developed a modified statistic that incorporates the variance of the residual autocorrelation function. They also proved that when the parameter estimates are asymptotically normal, the test statistic asymptotically follows a chi-square distribution. After the emergence of the quasi-maximum exponential likelihood estimation (QMELE) method [23], Li and Li (2005) [24] generalized the test statistic proposed by Li and Mak [22] to this new estimation method. They also proposed a similar test statistic based on the autocorrelation function of the absolute residuals. Carbon and Francq (2011) [25] extended the test of Li and Mak [22] to the asymmetric power GARCH model. Furthermore, Chen and Zhu (2015) [26] constructed a rank-based portmanteau test based on Li and Mak's [22] statistic. They replaced the autocorrelation function of the residuals with that of the rank-based residuals, making the new test applicable to heavy-tailed data. Even today, scholars such as Li and Zhang (2022) [27] continue to show great interest in extending these tests.

Motivated by the works of Li and Mak (1994) [22] and Visser (2011) [9], this paper introduces a modified portmanteau test for diagnosing ARCH-type models using high-frequency data. The paper also discusses the general procedure for constructing portmanteau test statistics for ARCH-type models based on high-frequency data. It is demonstrated that under weaker conditions such as having a finite residual fourth-order moment and other regularity conditions, the proposed portmanteau test follows a chi-square distribution.

This paper is structured as follows. Section 2 discusses the estimation of ARCH-type models. Section 3 covers the construction of the portmanteau test statistics and provides the corresponding asymptotic distribution. Section 4 presents the simulation process and the related results. Section 5 includes three real data examples along with the analysis of the corresponding results. Finally, the assumptions and proofs are deferred to Appendix A, and additional results are given in Appendix B.

2. Estimation Using High-Frequency Data

To introduce high-frequency data, the log-return equation is extended. The observed intraday log-return process for day t is indexed by the standardized intraday trading time variable u, which ranges from 0 to 1. According to Visser (2011) [9], the modified model is a scaling GARCH(1,1) model, which is expressed as:

where represents a standardized process. The assumption is made that for any , and are independent of each other and share the same distribution. When u is set to 1, the following relationships hold:

Hence, when u is set to 1, models (6) and (7) are transformed into models (3) and (4), which combine the scaling model with the pure GARCH model.

In order to estimate the parameters, the scaling model utilizes a volatility proxy. This proxy reduces high-dimensional information to a single dimension. Furthermore, when the conditional mean is zero, the volatility proxy serves as an unbiased estimate of the conditional variance. Specifically, the volatility proxy is a statistic derived from intraday data and satisfies the following property of positive homogeneity:

When t is fixed, becomes a constant. By applying the homogeneity property of , it can be observed that

For convenience, let , , , and . Then, the volatility proxy GARCH model has the following structure:

where is also an i.i.d. sequence that satisfies . is the parameter of the models (8) and (9) that needs to be estimated. For simplicity, models (8) and (9) are referred to as the VP-GARCH(1,1) model.
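The positive homogeneity property, on which the volatility proxy model rests, can be illustrated with a short Python sketch. Here a proxy is written as a function H of the intraday path, so homogeneity means H(cR) = cH(R) for every c ≥ 0. The two proxies below (absolute daily return and intraday high-low range) and the toy path are illustrative assumptions, not the proxies or data used in the paper.

```python
import math


def abs_return(path):
    """Absolute value of the end-of-day cumulative log-return."""
    return abs(path[-1])


def high_low_range(path):
    """Difference between the intraday maximum and minimum of the path."""
    return max(path) - min(path)


def scale_path(path, c):
    """Multiply every intraday log-return level by the constant c."""
    return [c * x for x in path]


def is_positively_homogeneous(proxy, path, c):
    """Check H(c * R) == c * H(R) for one path and one scaling constant c >= 0."""
    return math.isclose(proxy(scale_path(path, c)), c * proxy(path), rel_tol=1e-12)


# Hypothetical intraday path of cumulative log-returns, for illustration only.
toy_path = [0.0, 0.4, -0.2, 0.7, 0.3]
for proxy in (abs_return, high_low_range):
    print(proxy.__name__, is_positively_homogeneous(proxy, toy_path, 2.5))
```

Because the daily volatility enters the intraday return process as a multiplicative constant for fixed t, any proxy passing this check factors into the daily volatility times the same proxy applied to the standardized process.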

In the case of ARCH(q), the return equation aligns with Formula (8), while the conditional variance equation is expressed as:

Similarly, the parameter vector represents the parameters of models (8) and (10), which require estimation. The models (8) and (10) are referred to as the VP-ARCH(q) model.

The Gaussian quasi-maximum likelihood estimation (QMLE) [9] is employed to estimate the parameters of models (8) and (9) and models (8) and (10). The QMLE of is defined as:

To differentiate between them, the parameter vector estimate using low-frequency data is denoted as , while the parameter vector estimate using high-frequency data is denoted as . The asymptotic normality of the parameter estimates for models (8) and (9) has been proven by Visser [9]. This conclusion can also be applied to models (8) and (10). Therefore, the following asymptotic normality can be obtained:

In particular, when is known, let be the parameter vector for models (8) and (9), and let denote the parameter vector for models (8) and (10). Then

3. Portmanteau Test

3.1. Traditional Portmanteau Test

The portmanteau test is employed to evaluate the adequacy of the model’s fit. This statistical test is constructed based on the squared residual autocorrelation function. In cases where the volatility model is inadequate, a certain level of correlation between the squared residual terms exists.

In this paper, the null hypothesis is that the squared residuals are uncorrelated, indicating that the hypothesized model is adequate. The sample squared residual autocorrelation function is calculated as follows:

According to the central limit theorem, it can be proven that follows an asymptotic normal distribution under the null hypothesis. To obtain the test statistic, a finite vector of autocorrelation functions is considered, where m is the maximum lag order of . Let denote the asymptotic variance of . The portmanteau test statistic can be formulated as:

Under Assumptions 3 and 4, converges to constant in probability. Here, can be estimated by , where

Therefore, only the asymptotic distribution of needs to be considered, where

So, Formula (12) can be changed into

where , and V is the asymptotic variance of .

The statistic asymptotically follows a chi-square distribution with m degrees of freedom. At the 0.05 significance level, the null hypothesis is rejected if the calculated statistic exceeds the 0.95 quantile of the $\chi^2_m$ distribution. Otherwise, the null hypothesis is not rejected, indicating that the model can be considered adequate.
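The mechanics of the test can be sketched in Python as follows. The sketch computes the sample autocorrelations of the squared residuals up to lag m and a simplified statistic of the McLeod-Li type, in which the asymptotic variance matrix V is replaced by the identity. This simplification is an assumption made only for illustration and is not the corrected variance derived in this paper. The function names are hypothetical, and the 0.95 quantiles are standard chi-square table values.

```python
# 0.95 quantiles of the chi-square distribution for m = 1, ..., 6 degrees of freedom
CHI2_95 = {1: 3.841, 2: 5.991, 3: 7.815, 4: 9.488, 5: 11.070, 6: 12.592}


def squared_residual_acf(resid, m):
    """Sample autocorrelations of the squared residuals at lags 1, ..., m."""
    n = len(resid)
    squared = [e * e for e in resid]
    mean_sq = sum(squared) / n
    dev = [s - mean_sq for s in squared]
    denom = sum(d * d for d in dev)
    return [sum(dev[t] * dev[t - k] for t in range(k, n)) / denom
            for k in range(1, m + 1)]


def naive_portmanteau(resid, m):
    """n * sum of squared autocorrelations (identity matrix in place of V)."""
    acf = squared_residual_acf(resid, m)
    return len(resid) * sum(r * r for r in acf)


def reject_adequacy(resid, m):
    """True if the statistic exceeds the 0.95 chi-square quantile with m d.f."""
    return naive_portmanteau(resid, m) > CHI2_95[m]


# Residuals with alternating calm and turbulent blocks: the squared residuals
# are strongly autocorrelated, so an adequate volatility model is rejected.
clustered = ([0.5] * 20 + [2.0] * 20) * 10
print(round(squared_residual_acf(clustered, 6)[0], 4))
print(reject_adequacy(clustered, 6))
```

In the toy example the volatility clustering left in the residuals produces a lag-one autocorrelation close to one, so the statistic far exceeds the critical value.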

3.2. Portmanteau Test Using High-Frequency Data

From Equation (13), we can observe that the estimate is dependent on the estimate of . The estimator is a function of . Given that the estimator is obtained using high-frequency data, the volatility estimator can be easily obtained. Additionally, the statistic can be calculated as follows:

Similarly,

Furthermore, the asymptotic distribution of the estimator differs from that of the estimator , which is a difference that further impacts the asymptotic variance of . Let denote the modified variance estimator. The following theorem can then be derived.

Theorem 1.

If Assumptions 1–5 are satisfied, then under the null,

where , ,

The proof of Theorem 1 is presented in Appendix A.2.

Indeed, the presence of the parameter poses challenges in obtaining the QMLE in practical applications. However, these challenges can be overcome if an appropriate volatility proxy is identified. When the volatility proxy satisfies , indicating , then . Assuming , we can establish the following lemma.

Lemma 1.

If Assumptions 1–6 are satisfied, then under the null,

where .

The proof of Lemma 1 is also provided in Appendix A.2.

4. Simulation

In this section, the finite-sample performance of the proposed method is examined through Monte Carlo simulations [28]. All data generation, calculations, and figures in this section were produced in R.

In practical applications, the log-return series can be calculated based on the stock price. However, in the simulation, prior to simulating the generation of , it is necessary to generate the high-frequency residual sequences. Following Visser [9], the high-frequency residual sequences can be generated using the stationary Ornstein–Uhlenbeck process [29]:

where the two driving processes are independent Brownian motions [30]. The initial value of the standardized intraday process is set to 0, and the initial value of the Ornstein–Uhlenbeck process is drawn from its stationary distribution. To simulate the Chinese stock exchange market, the interval [0, 1] is divided into 240 equal subintervals, one for each minute of intraday trading. The three parameters of the process are set to

This ensures that the expected value of is equal to 1 [9]. The calculation of is based on the given parameter vector using Equations (6), (7) and (10). For ARCH(2), set and . For VP-GARCH(1,1), set and . The corresponding equation is as follows:

Finally, the realized volatility (RV) is selected as the volatility proxy. Three sampling frequencies are considered: 5 min, 15 min, and 30 min, which are denoted as RV5, RV15, and RV30, respectively. Taking RV15 as an example, the formula is

Similarly, for the high-frequency residual , the formula is

To evaluate the performance of the models, four sample sizes n are considered: 200, 300, 400, and 500. For each model, 1000 independent replications are generated. Then, the root mean squared error (RMSE) of each parameter estimate is calculated as follows:

$$\mathrm{RMSE} = \sqrt{\frac{1}{1000} \sum_{i=1}^{1000} \left(\hat{\theta}_i - \theta_0\right)^2},$$

where $\hat{\theta}_i$ is the parameter estimate in the i-th replication and $\theta_0$ is the true value of the parameter. The RMSE results are presented in Table 1.
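The RMSE computation over Monte Carlo replications can be written compactly. The Python sketch below uses hypothetical helper names, with tiny hand-made inputs standing in for the 1000 simulated estimates.

```python
import math


def rmse(estimates, true_value):
    """Root mean squared error of one parameter over all replications."""
    squared_errors = [(est - true_value) ** 2 for est in estimates]
    return math.sqrt(sum(squared_errors) / len(estimates))


def rmse_by_parameter(estimate_vectors, true_vector):
    """RMSE of each component when every replication yields a parameter vector."""
    return [rmse([vec[j] for vec in estimate_vectors], true_vector[j])
            for j in range(len(true_vector))]


# Two replications of a two-parameter model (illustrative numbers only).
replications = [[0.1, 0.5], [0.3, 0.7]]
print(rmse_by_parameter(replications, [0.2, 0.6]))
```

A smaller RMSE for a given sample size indicates that the corresponding proxy model recovers the true parameter more accurately.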

Table 1.

RMSE of parameter estimates under four volatility proxy models.

The in Table 1 represents the daily model, where is calculated using daily closing prices. Table 1 clearly shows that the estimation results obtained from the intraday models, using high-frequency data, outperform those of the daily model.
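To make the difference between the intraday and daily proxies concrete, the Python sketch below simulates one standardized intraday path on a 240-step grid and computes RV5, RV15, RV30, and a daily proxy. It rests on several assumptions that the text does not confirm. The process is taken to be dΥ = −δ(Υ − μ)du + σ dB₂ and dΨ = exp(Υ) dB₁, discretized with an Euler scheme. The values δ = 1/2, σ = 1/4, and μ = −1/16 follow my recollection of the simulation design in Visser [9] and may differ from those used here. RV is taken to be the square root of the sum of squared increments, and the daily proxy to be the absolute close-to-close return.

```python
import math
import random

STEPS = 240  # one step per trading minute


def simulate_standardized_path(rng, delta=0.5, sigma_u=0.25, mu_u=-1.0 / 16.0):
    """Euler discretization of the assumed process; returns STEPS + 1 points."""
    du = 1.0 / STEPS
    # Initial log-volatility drawn from the stationary law N(mu, sigma^2 / (2 delta)).
    # With the default values, E[exp(2 * upsilon)] = exp(2 * mu + 2 * var) = 1.
    upsilon = rng.gauss(mu_u, sigma_u / math.sqrt(2.0 * delta))
    psi = 0.0
    path = [psi]
    for _ in range(STEPS):
        psi += math.exp(upsilon) * math.sqrt(du) * rng.gauss(0.0, 1.0)
        upsilon += -delta * (upsilon - mu_u) * du + sigma_u * math.sqrt(du) * rng.gauss(0.0, 1.0)
        path.append(psi)
    return path


def realized_volatility(path, minutes):
    """Square root of the sum of squared increments sampled every `minutes` steps."""
    grid = path[::minutes]
    return math.sqrt(sum((b - a) ** 2 for a, b in zip(grid, grid[1:])))


def daily_proxy(path):
    """Absolute close-to-close return, which uses only the end-of-day value."""
    return abs(path[-1])


path = simulate_standardized_path(random.Random(1))
for minutes in (5, 15, 30):
    print("RV%d" % minutes, realized_volatility(path, minutes))
print("daily", daily_proxy(path))
```

The intraday proxies use 48, 16, and 8 increments per day, respectively, whereas the daily proxy uses a single observation, which is the informational advantage reflected in the RMSE comparison.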

Additionally, it is necessary to examine the distribution of the statistic and compare its performance with the daily model. Therefore, Table 2 presents the empirical size values for this purpose.

Table 2.

Empirical size of four volatility proxies under four models.

We fix the maximum lag $m$ and calculate the empirical size as the proportion of rejections based on the 95th percentile of the $\chi^2_m$ distribution. The results are presented in Table 2. As the sample size increases, the empirical sizes of the intraday models are closer to 0.05 than those of the daily model. This suggests that introducing high-frequency data can enhance the accuracy of the test.
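The empirical size is simply a rejection frequency, as the Python sketch below shows. The statistics are idealized: draws from an exact chi-square distribution with 6 degrees of freedom stand in for test statistics simulated under the null hypothesis. The choice m = 6, the number of draws, and the function names are assumptions for illustration.

```python
import random

CRITICAL_VALUE_M6 = 12.592  # 0.95 quantile of the chi-square distribution, 6 d.f.


def empirical_size(statistics, critical_value):
    """Proportion of simulated statistics that exceed the critical value."""
    rejections = sum(1 for q in statistics if q > critical_value)
    return rejections / len(statistics)


def chi_square_draw(rng, m):
    """One chi-square(m) draw as a sum of m squared standard normal variables."""
    return sum(rng.gauss(0.0, 1.0) ** 2 for _ in range(m))


rng = random.Random(0)
ideal_statistics = [chi_square_draw(rng, 6) for _ in range(2000)]
print(empirical_size(ideal_statistics, CRITICAL_VALUE_M6))
```

When the asymptotic chi-square approximation is accurate, the rejection frequency is close to the nominal level 0.05. A persistent departure from 0.05 signals that the approximation is poor for that sample size or proxy.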

Regarding the power, we define the alternative hypotheses for ARCH(2) and GARCH(1,1) as follows:

where and are obtained from models (15) and models (16), respectively, while and are obtained from models (17) and models (18), respectively, which are all fixed values. Next, we introduce and as variables to examine the impact on the power.

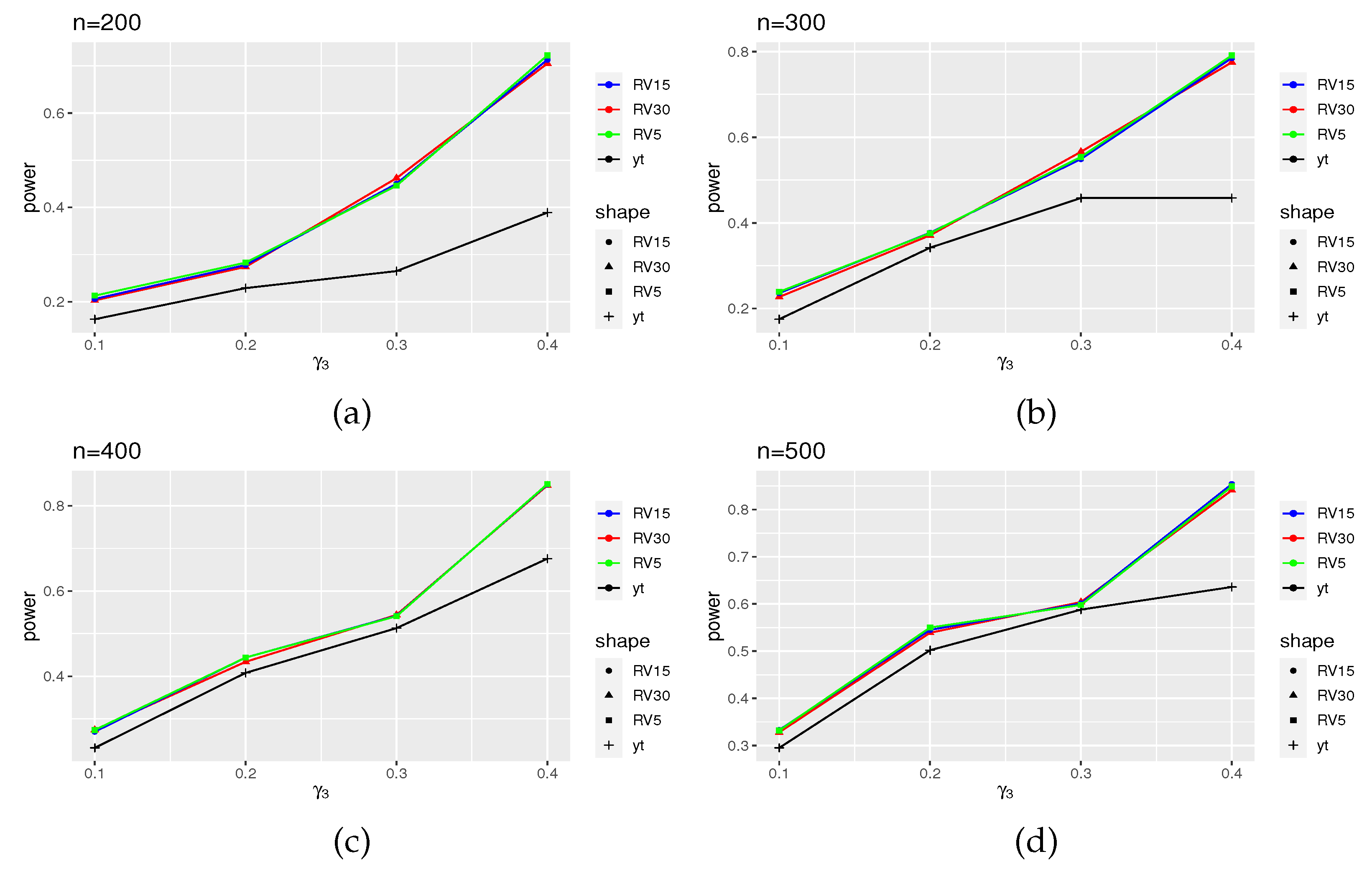

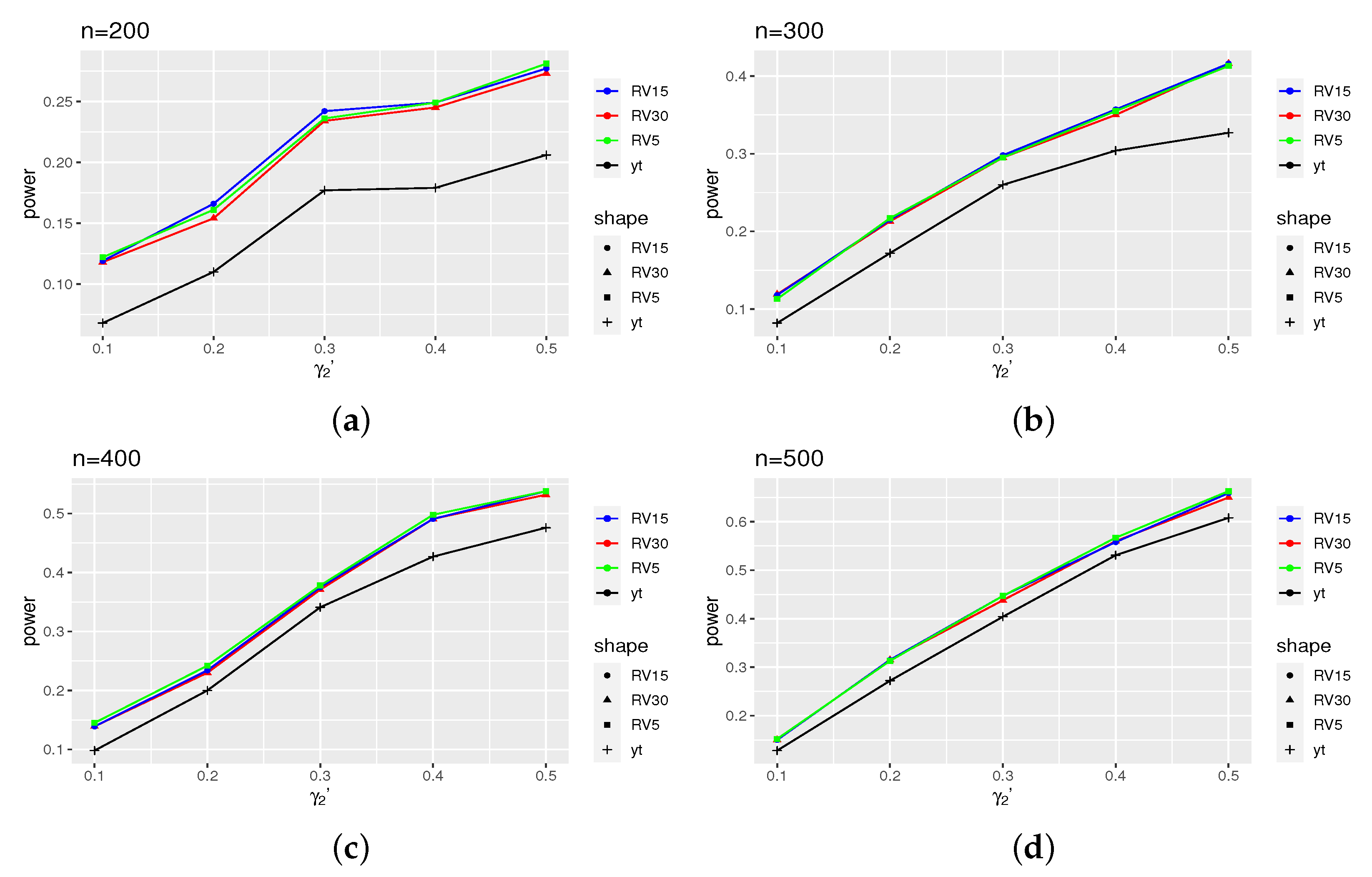

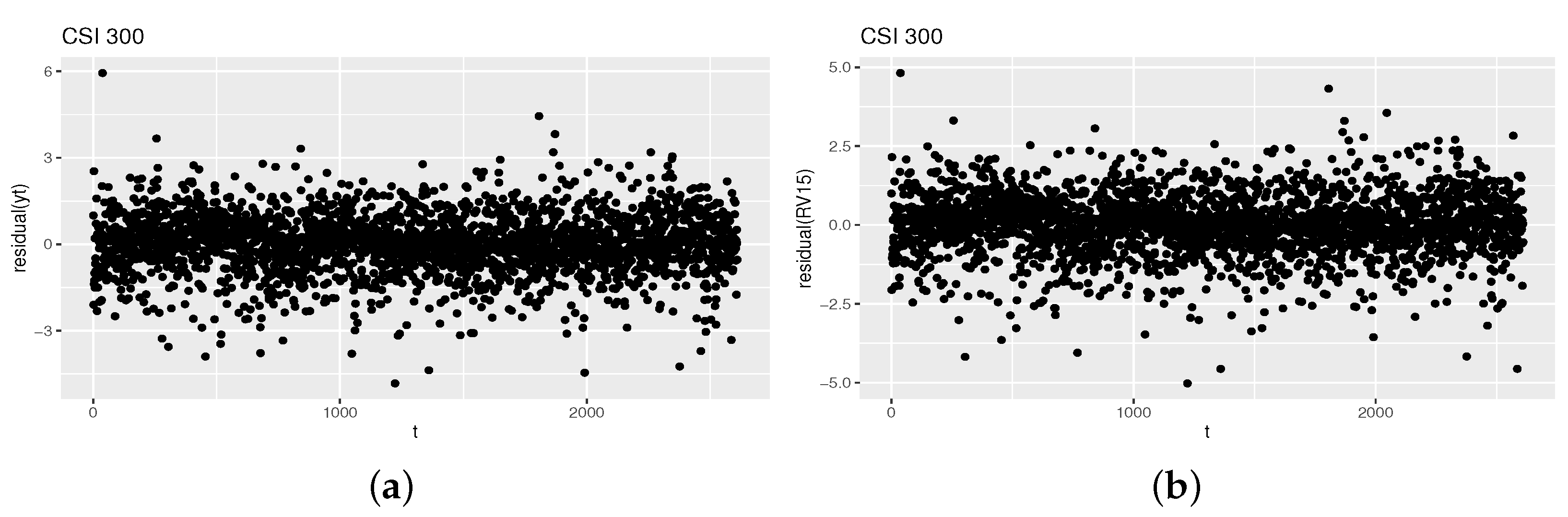

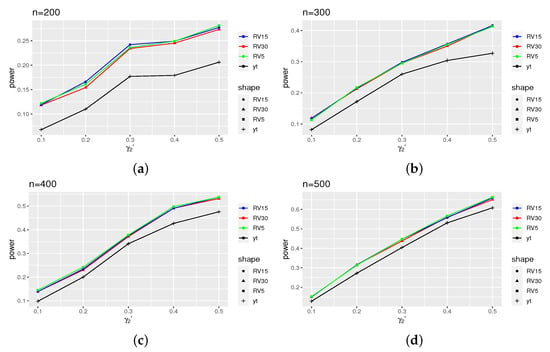

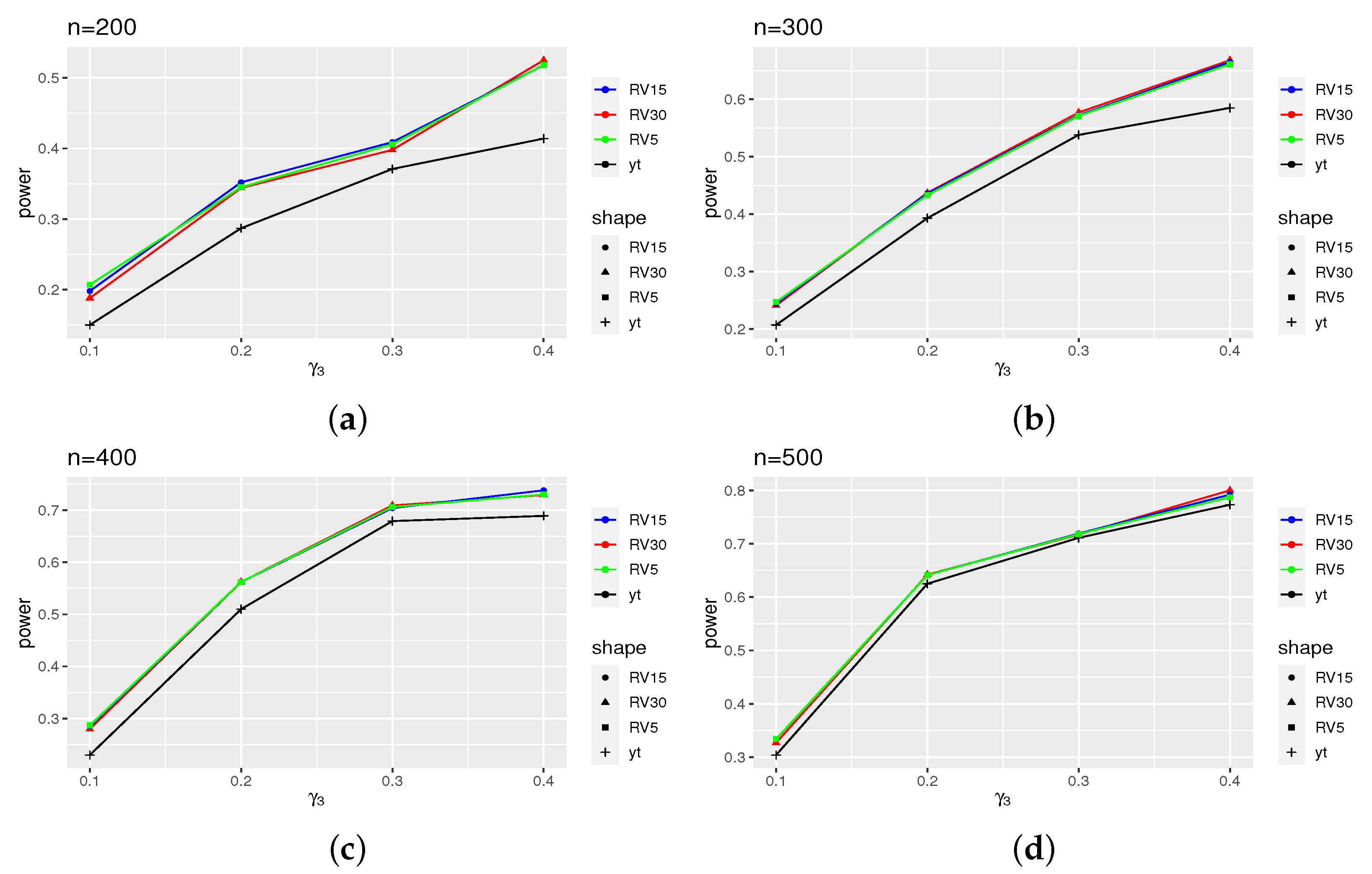

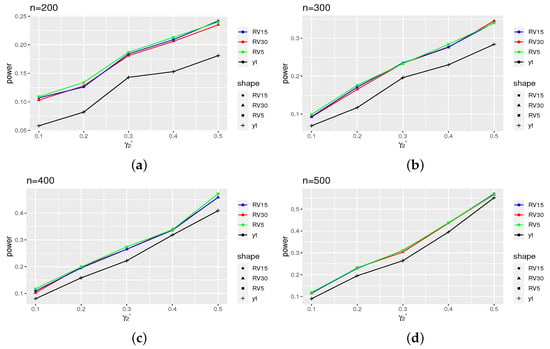

Figure 1 displays the power results for the ARCH(2) model, while Figure 2 presents those for the GARCH(1,1) model. The results for models (16) and (18) can be found in Appendix B (Figure A1 and Figure A2). From these figures, it is evident that the power curves of the intraday models are clearly distinct from that of the daily model, although the difference diminishes as the sample size increases. Additionally, comparing the four models shows that the test has higher power for the ARCH models.

Figure 1.

Power for models (15), where takes 0.1, 0.2, 0.3, and 0.4. (a) The variation of power of different volatility proxies as the parameter changes when the sample size is 200. (b) The variation of power of different volatility proxies as the parameter changes when the sample size is 300. (c) The variation of power of different volatility proxies as the parameter changes when the sample size is 400. (d) The variation of power of different volatility proxies as the parameter changes when the sample size is 500.

Figure 2.

Power for models (17), where takes 0.1, 0.2, …, 0.5. (a) The variation of power of different volatility proxies as the parameter changes when the sample size is 200. (b) The variation of power of different volatility proxies as the parameter changes when the sample size is 300. (c) The variation of power of different volatility proxies as the parameter changes when the sample size is 400. (d) The variation of power of different volatility proxies as the parameter changes when the sample size is 500.

5. Application

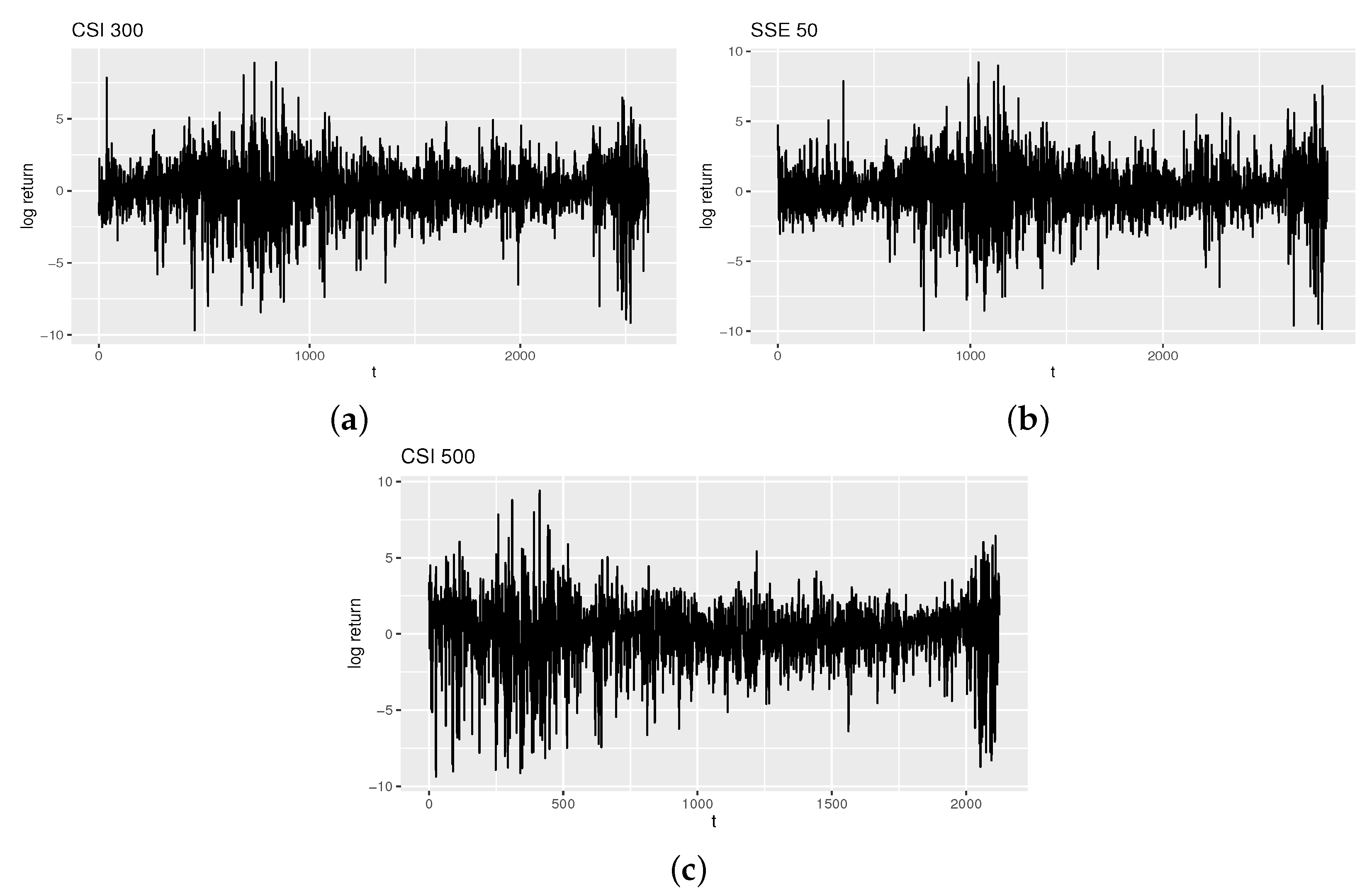

In our analysis, we focus on three stock indices: the CSI 300, SSE 50, and CSI 500. The data cover the period from 2 January 2004 to 6 June 2019. After deleting missing data, we obtained a dataset consisting of 2610 consecutive days (8 April 2005 to 31 December 2015) for the CSI 300 index, 2856 consecutive days (2 January 2004 to 13 October 2015) for the SSE 50 index, and 2124 consecutive days (15 January 2007 to 13 October 2015) for the CSI 500 index. The calculations and figures presented in this section are generated using the R programming language.

To compute the high-frequency log-return [9], the following formula is employed:

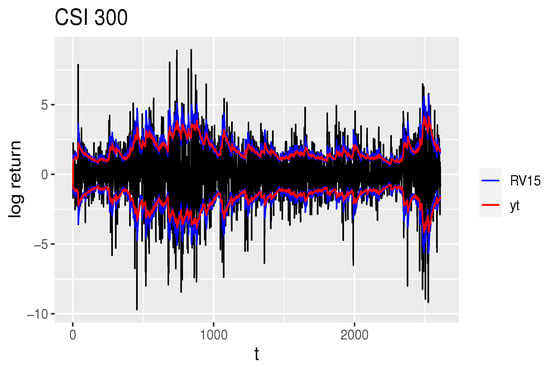

where denotes the trading price within 240 min of day t, and denotes the closing price of day t. The log-return values for the three indices are depicted in Figure 3.

Figure 3.

Log-returns of the three indices: (a) CSI 300, (b) SSE 50, and (c) CSI 500. The vertical axis of each panel is the log-return, and the horizontal axis is time t.

From Figure 3, it is evident that all three samples exhibit significant heteroscedasticity and fluctuate around 0. Therefore, it is worth considering the use of pure ARCH or GARCH models.

However, an estimation challenge arises in the process, specifically in estimating the parameter . To overcome this, we assume , which leads to . Noting that , we can obtain an estimator for in the daily model. It implies that estimating intraday models will depend on daily models.

We first fit the data with the ARCH(2) model. The portmanteau test statistics based on low-frequency data are shown in Table 3.

Table 3.

Values of the portmanteau test statistic for the ARCH(2) model.

Choose . Obviously, the results of all three samples are significantly larger than , leading to the rejection of the null hypothesis. Therefore, the ARCH(2) model is deemed inadequate. To identify a more suitable model, we examine the residuals of these models and observe the log-return figures. Notably, the log-return of the CSI 500 exhibits relatively less fluctuation over a period, indicating the potential need for a higher-order ARCH model. Hence, we consider the GARCH(1,1) model. The parameter estimates are presented in Table 4.

Table 4.

Parameter estimates for the GARCH(1,1) model.

Before calculating the test statistic, it is necessary to consider the hypothesis of . This hypothesis implies that . To validate the hypothesis, an estimate for can be calculated using the following expression:

The calculation results are reported in Table 5.

Table 5.

The estimators of of GARCH(1,1).

The results in Table 5 show that the estimates are all close to 1. This suggests that an appropriate volatility proxy has been identified. With this in mind, it is straightforward to calculate the portmanteau test statistics. The specific results are shown in Table 6.

Table 6.

Values of the portmanteau test statistic for the GARCH(1,1) model.

At a 5% significance level, the critical value for the rejection region is . It is important to note that the null hypothesis of our test is that the model fitting is adequate, while the alternative hypothesis suggests inadequate model fitting. In the portmanteau test, a higher value of the test statistic indicates a greater likelihood of rejecting the null hypothesis, implying inadequate model fitting.

From Table 6, it can be observed that for the daily model, the portmanteau test statistics for all three stock indices fall within the acceptance region. However, for the intraday models, the test statistics fall within the rejection region for every index except the CSI 500.

Furthermore, an interesting phenomenon emerges. When the intraday models reject the null hypothesis, the values of the portmanteau test statistic differ significantly from those of the daily model. On the other hand, when the intraday models do not reject the null hypothesis, the difference between the two is not significant. Specifically, if the daily model fit is adequate, the intraday model fit may still be inadequate. Conversely, if the daily model fit is inadequate, the intraday model fit will also be inadequate. This suggests that the daily model could serve as a boundary model. In practical terms, when the daily model is inadequate, there is no need to consider the intraday model further.

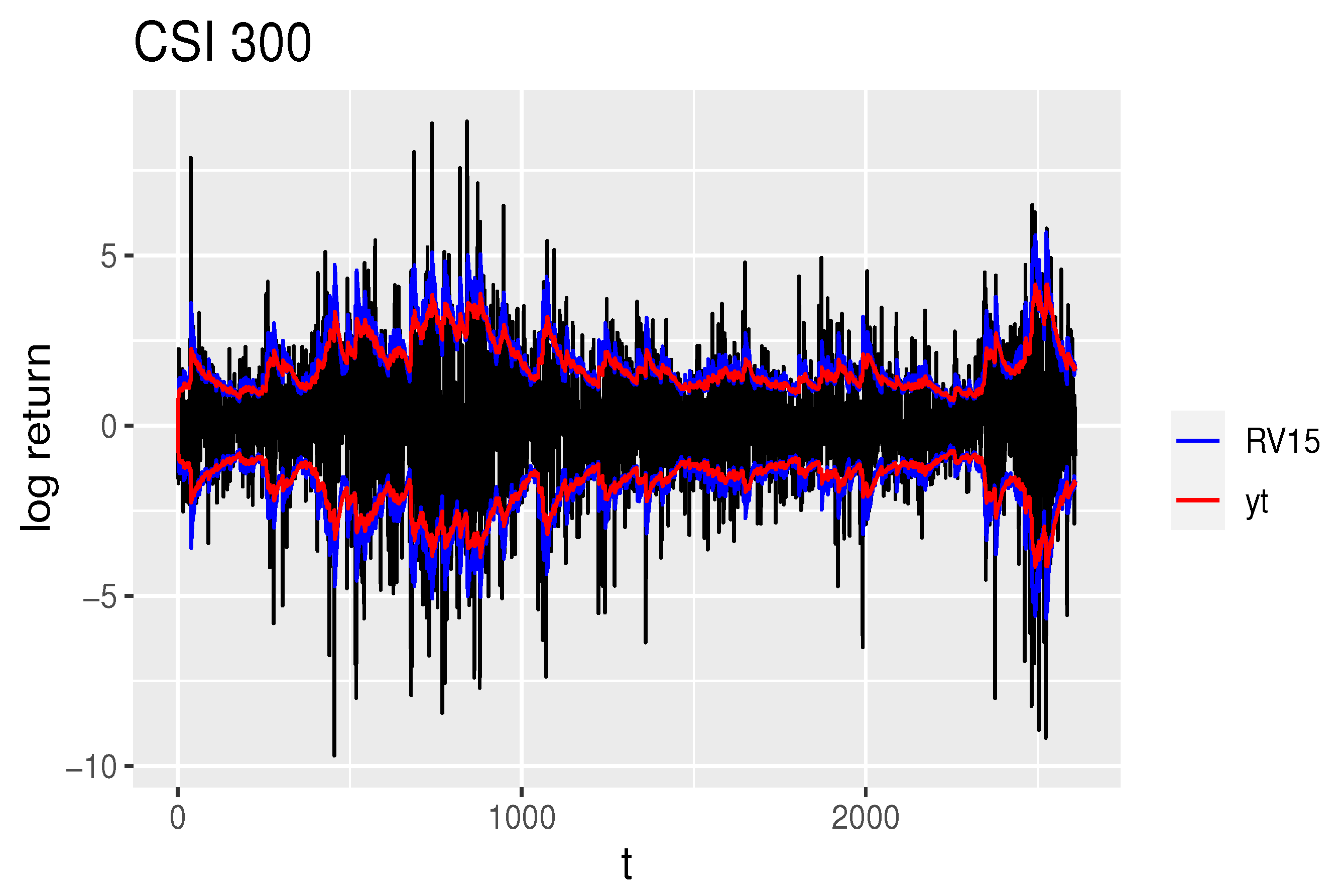

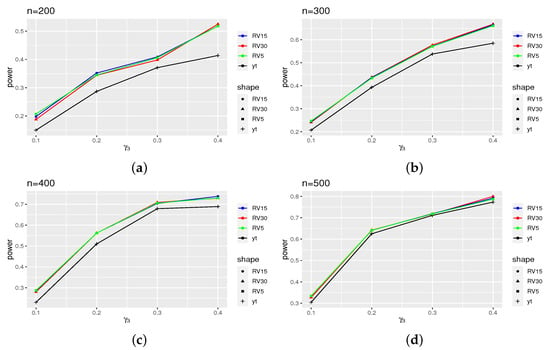

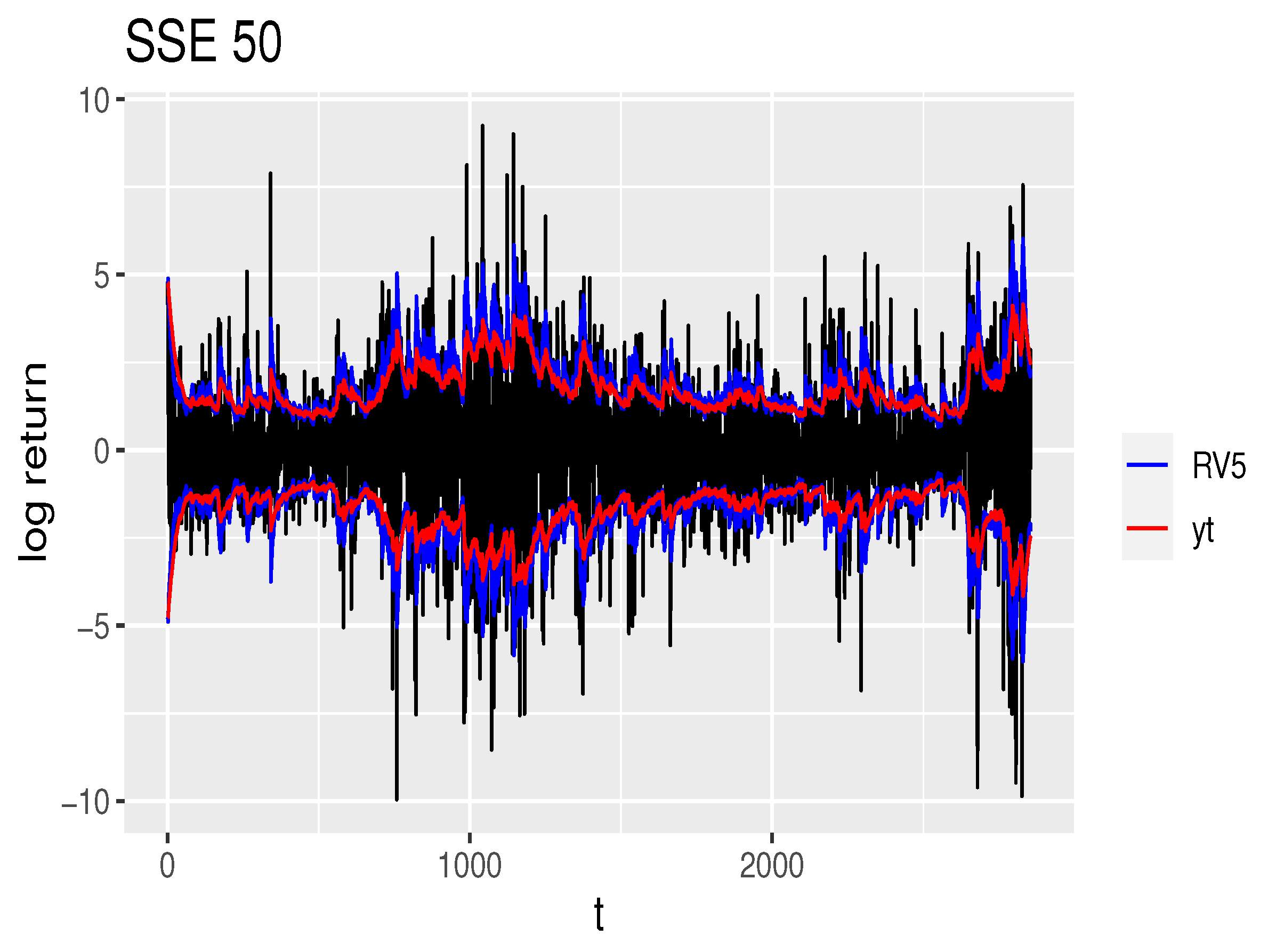

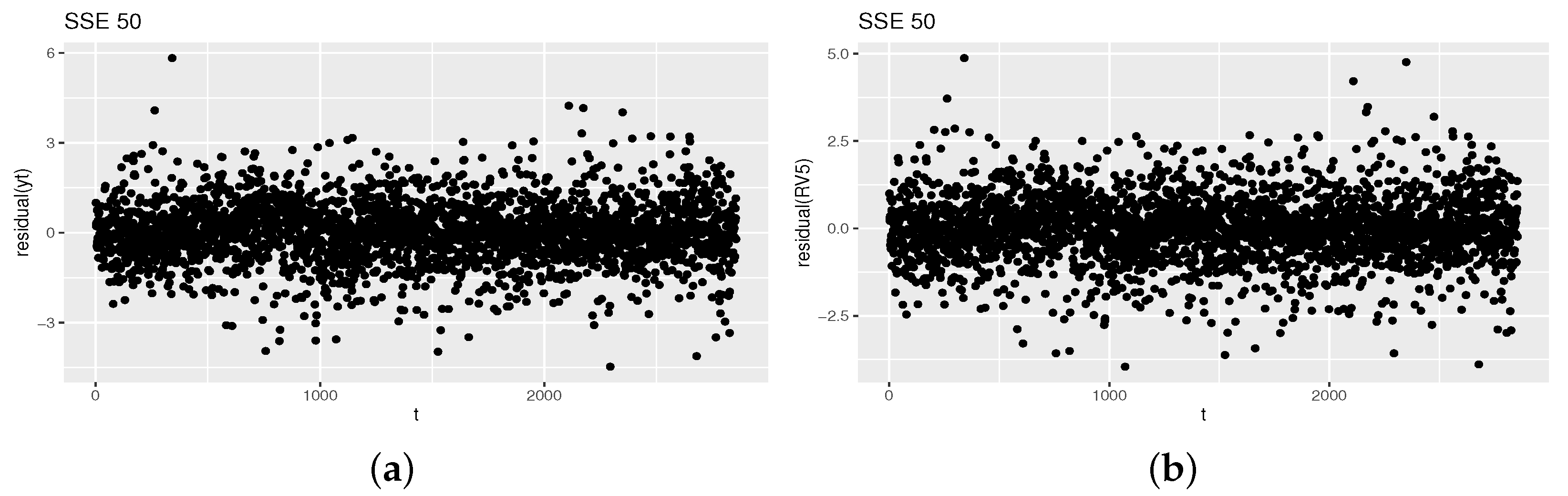

The fact that the intraday models reject the null hypothesis while the daily model accepts it is a noteworthy issue that warrants further study. To facilitate this analysis, the estimated volatility curves and residual scatter plots are shown in Figure 4 and Figure 5.

Figure 4.

The estimated volatility curves of CSI 300, where the black curve is the real data curve, the blue curve is the estimated volatility curve of RV15, and the red curve is the estimated volatility curve of the model using low-frequency data.

Figure 5.

The residual plots of CSI 300, where (a) is of the model using low-frequency data and (b) is of RV15.

Since the results of different high-frequency volatility proxies (RV30, RV15, RV5) are similar and their curves overlap, the model with closer to 1 is selected. Figure 4 illustrates that the estimated volatility curve derived from high-frequency data exhibits greater fluctuations, indicating its ability to capture more information. A similar pattern can be observed for the SSE 50 index, as shown in the Appendix B (Figure A3).

As can be seen from Figure 5, the residuals of the low-frequency model are mainly concentrated within the range of [−3, 3], whereas the residuals of the high-frequency model are primarily concentrated within the range of [−2.5, 2.5]. However, the results also indicate a certain degree of heteroscedasticity. A similar result can be observed for the SSE 50 index, as shown in Appendix B (Figure A4).

6. Discussion

In this study, we aimed to propose a portmanteau test suitable for ARCH models based on high-frequency data. Based on the asymptotic properties of the QMLE for ARCH-type models with high-frequency data, we developed a new portmanteau test.

Firstly, we constructed the modified portmanteau test statistic using the vector of residual autocorrelations and its variance obtained from the QMLE based on high-frequency information. Using the law of large numbers, the central limit theorem, and Taylor expansion, we proved that this statistic asymptotically follows a chi-square distribution. The specific form of the statistic was provided both for the case where the high-frequency redundant parameter is known and for the case where it is unknown, as outlined in Theorem 1 and Lemma 1.

Secondly, the simulation results regarding the size of the test provide evidence that the modified test statistic asymptotically follows a chi-square distribution when the chosen model is adequate. This is evident from the fact that the size of the modified test statistic, based on high-frequency information, approaches 0.05. In other words, the proportion of test statistics exceeding the 0.95 quantile of the derived chi-square distribution is closer to 0.05. Furthermore, the power results from the simulation demonstrate that the modified test statistic is more effective in rejecting the model when it is inadequate and the sample size is small. In conclusion, the modified test statistic improves the identification of the adequacy of ARCH-type models.

Furthermore, empirical studies have provided evidence supporting the applicability of the modified portmanteau test. The test results for the three indices indicate that when the test statistic based on low-frequency data accepts the null hypothesis, the test statistic based on high-frequency information does not always accept the null hypothesis. The discrepancy suggests a difference between the tests based on high-frequency information and those based on low-frequency data. Additionally, by examining the residual plots, it becomes evident that the model test results based on high-frequency data are more reasonable.

However, despite the advantages of the modified portmanteau test, several challenges remain. Firstly, ARCH-type models based on high-frequency data often include a redundant parameter. In existing studies, estimating this redundant parameter relies on the estimation results obtained from low-frequency data. Secondly, the modified test statistic based on high-frequency data is more intricate than the one based on low-frequency data and requires additional computational steps. Specifically, the derivation of the modified test statistic becomes feasible once the asymptotic properties of parameter estimation for more complex ARCH-type models are established, which implies a wider applicability of the modified portmanteau test. However, this paper focuses primarily on simpler ARCH-type models, and the study of more complex models or other types of models involving high-frequency data remains unexplored. These areas will be explored in future studies.

7. Conclusions

In conclusion, the modified portmanteau test statistic provides a new approach to testing the goodness of fit of ARCH-type models. This statistic builds upon the principles of the traditional test statistic and the asymptotic properties of the QMLE based on high-frequency data. The test statistic takes into account a redundant parameter in ARCH-type models. This parameter is what remains after the high-frequency residuals are standardized, and it is not present in the traditional portmanteau test. In spite of this redundant parameter, the modified test statistic has been proven to asymptotically follow a chi-square distribution.

Furthermore, the simulation study confirms that the modified portmanteau test follows a chi-square distribution. The size and power results indicate that the test based on high-frequency data outperforms the test based on low-frequency data in assessing model adequacy. In practical applications, the modified test based on high-frequency data consistently performs well. A comparison of the results from the three indices reveals that the results of tests based on high-frequency data sometimes differ from those based on low-frequency data. Overall, the test based on high-frequency data is more effective in identifying cases of incorrect model selection.

Lastly, it is worth noting that the applicability of the modified portmanteau test extends beyond the simple ARCH-type models examined in this paper. Other ARCH-type models, such as TGARCH and EGARCH models, can also benefit from this portmanteau test. However, the current study focuses solely on simple ARCH-type models. Leverage effects are prevalent in financial assets, and ARCH-type models capable of capturing such effects should be taken into consideration. We leave this extension for future study.

Author Contributions

Conceptualization, X.Z.; Formal analysis, X.Z. and C.D.; Funding acquisition, X.Z. and C.D.; Investigation, Y.C.; Methodology, Y.C., X.Z. and C.D.; Project administration, X.Z.; Resources, Y.C.; Validation, Y.L.; Visualization, Y.L.; Writing—original draft, Y.C.; Writing—review and editing, C.D. All authors have read and agreed to the published version of the manuscript.

Funding

The work is partially supported by Guangdong Basic and Applied Basic Research Foundation 2022A1515010046 (Xingfa Zhang), Funding by Science and Technology Projects in Guangzhou SL2022A03J00654 (Xingfa Zhang), 202201010276 (Zefang Song), and Youth Joint Project of Department of Science and Technology in Guangdong Province of China, 2022A1515110009 (Chunliang Deng).

Data Availability Statement

The data used in this study are not publicly available due to confidentiality requirements imposed by the data collection agency.

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| ARCH | autoregressive conditional heteroscedasticity model |

| GARCH | generalized autoregressive conditional heteroscedasticity model |

| QMLE | Gaussian quasi-maximum likelihood estimation |

| CSI 300 | China Securities Index 300, also called HuShen 300 |

| SSE 50 | Shanghai Stock Exchange 50 Index |

| CSI 500 | China Securities Index 500 |

| GJR | model named after its proponents Glosten, Jagannathan, and Runkle |

| QMELE | quasi-maximum exponential likelihood estimation |

| VP-GARCH(1,1) | volatility proxy GARCH(1,1) model |

| VP-ARCH(q) | volatility proxy ARCH(q) model |

| RV | realized volatility |

| RMSE | root mean square error |

Appendix A

Appendix A.1. Assumption

Assumption A1.

Assumption A2.

, , , , .

Assumption A3.

The sequence is i.i.d. with zero mean and unit variance. The sequence is also i.i.d.

Assumption A4.

, .

Note that under Assumption 5, Pan et al. (2008) [31] showed that the model we used admits a strictly stationary solution.

Assumption A6.

.

Appendix A.2. Proof

Proof of Theorem 1.

Since , then

By the Taylor expansion, it follows that

Since as , hence we have

To obtain the asymptotic distribution of , the key is to calculate the covariance between and . Before that, we first need to calculate .

Applying Taylor’s expansion for the function , it follows that

then

where , . Through simple calculations, we have

According to Formula (11),

then

Denote

Then

Owing to

thus

Since , then

Similarly, we have

where

This completes the proof of Theorem 1. □

Proof of Lemma 1.

The proof of Lemma 1 is similar to Theorem 1, except that there is no need to define . Following Visser (2011) [9], suppose and are known, then

If we assume , which means , we can weaken the condition. Even if and are unknown, thanks to , in this case, we can still have

Thus

Owing to

then

In view of , thus

Then, we complete the proof of Lemma 1. □

Appendix B. Remaining Results

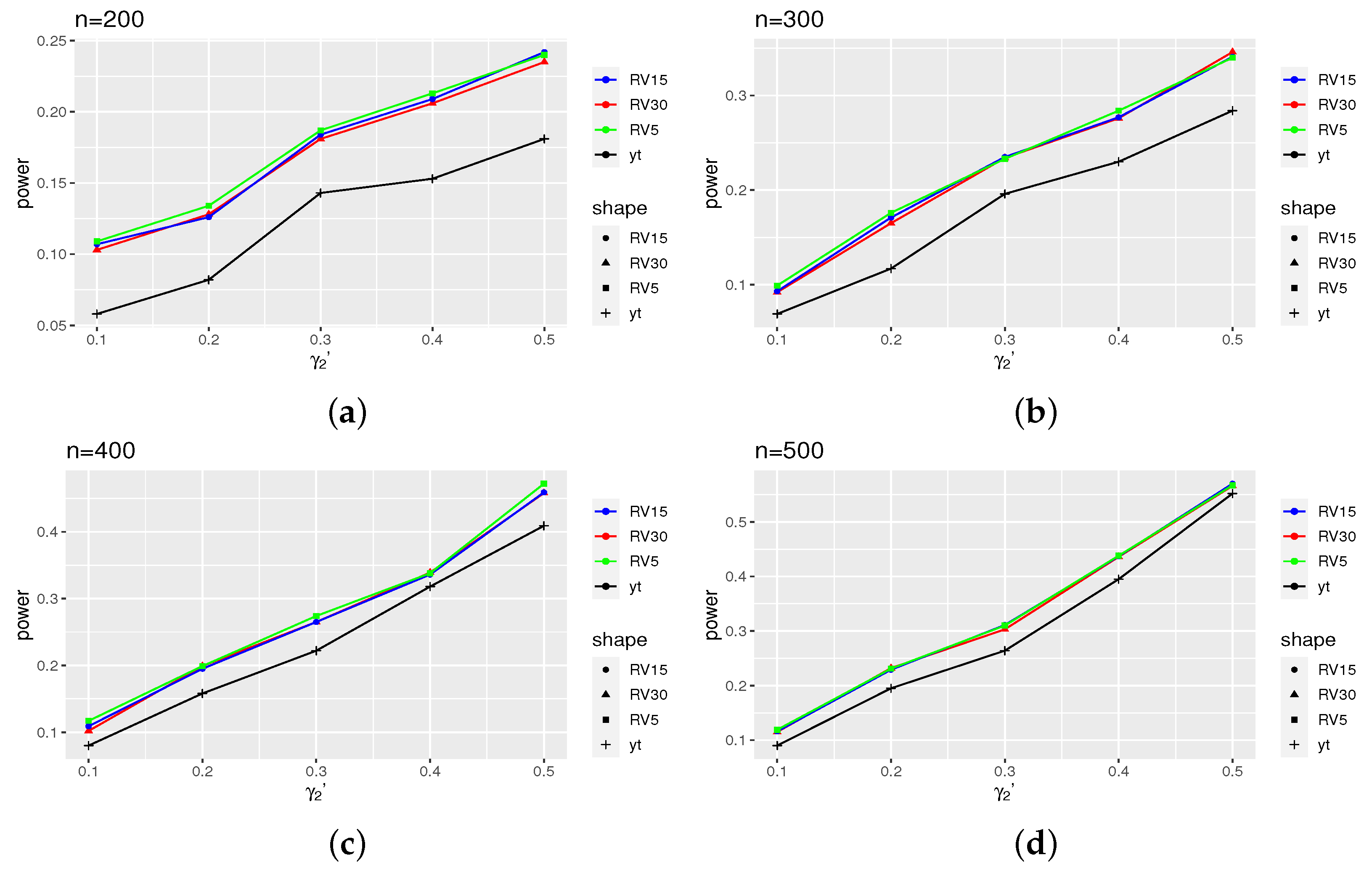

Figure A1.

Power for models (16), where takes 0.1, 0.2, 0.3, and 0.4. (a) The variation of power of different volatility proxies as the parameter changes when the sample size is 200. (b) The variation of power of different volatility proxies as the parameter changes when the sample size is 300. (c) The variation of power of different volatility proxies as the parameter changes when the sample size is 400. (d) The variation of power of different volatility proxies as the parameter changes when the sample size is 500.

Figure A2.

Power for models (18), where takes 0.1, 0.2, …, 0.5: (a) The variation of power of different volatility proxies as the parameter changes when the sample size is 200. (b) The variation of power of different volatility proxies as the parameter changes when the sample size is 300. (c) The variation of power of different volatility proxies as the parameter changes when the sample size is 400. (d) The variation of power of different volatility proxies as the parameter changes when the sample size is 500.

Figure A3.

The estimated volatility curves of SSE 50, where the black curve is the real data curve, the blue curve is the estimated volatility curve of RV5, and the red curve is the estimation curve of the model using low-frequency data.
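For readers who want to reproduce a comparison of this kind, the following minimal sketch shows one common way to compute a realized-volatility proxy and the RMSE between an estimated and a reference volatility series. It assumes that RV is the sum of squared intraday log-returns (some authors take the square root) and that RV5 is built from prices sampled every five minutes; the function names and inputs are hypothetical and are not taken from the paper.

```python
import numpy as np


def realized_volatility(intraday_prices):
    """Sum of squared intraday log-returns for a single trading day.

    `intraday_prices` is assumed to hold prices sampled on a regular grid,
    e.g. every five minutes for an RV5-type proxy.
    """
    prices = np.asarray(intraday_prices, dtype=float)
    log_returns = np.diff(np.log(prices))
    return float(np.sum(log_returns ** 2))


def rmse(estimate, target):
    """Root mean square error between two equally long series."""
    estimate = np.asarray(estimate, dtype=float)
    target = np.asarray(target, dtype=float)
    return float(np.sqrt(np.mean((estimate - target) ** 2)))
```

Applying `realized_volatility` day by day yields a proxy series, and `rmse` can then be used to compare each fitted volatility curve against the chosen reference series.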

Figure A4.

The residual plots of SSE 50, where (a) is of the model using low-frequency data and (b) is of RV5.

References

1. Samuelson, P. The Variation of Certain Speculative Prices. J. Bus. 1966, 39, 34–105.

2. Hamilton, J.D. Time Series Analysis, 1st ed.; Princeton University Press: Princeton, NJ, USA, 1994; pp. 154–196.

3. Engle, R. Autoregressive Conditional Heteroscedasticity with Estimates of the Variance of United Kingdom Inflation. Econometrica 1982, 50, 987–1007.

4. Bollerslev, T. Generalized autoregressive conditional heteroskedasticity. J. Econom. 1986, 31, 307–327.

5. Black, F. Studies of stock market volatility changes. In Proceedings of the 1976 Meeting of the Business and Economic Statistics Section; American Statistical Association: Washington, DC, USA, 1986; pp. 177–181.

6. Geweke, J. Modeling the persistence of Conditional Variances: A Comment. Econom. Rev. 1986, 5, 57–61.

7. Engle, R.F.; Granger, C.W.J.; Ding, Z. A long memory property of stock returns. J. Empir. Financ. 1993, 1, 83–106.

8. Drost, F.; Klaassen, C. Efficient estimation in semiparametric GARCH models. J. Econom. 1997, 81, 193–221.

9. Visser, M. GARCH parameter estimation using high-frequency data. J. Financ. Econom. 2011, 9, 162–197.

10. Huang, J.S.; Wu, W.Q.; Chen, Z.; Zhou, J.J. Robust M-estimate of gjr model with high frequency data. Acta Math. Appl. Sin. 2015, 31, 591–606.

11. Glosten, L.R.; Jagannathan, R.; Runkle, D.E. On the relation between the expected value and the volatility of the nominal excess return on stocks. J. Financ. 1993, 48, 1779–1801.

12. Huber, P.J. Robust Statistics: A Review. Ann. Stat. 1981, 9, 436–466.

13. Wang, M.; Chen, Z.; Wang, C. Composite quantile regression for garch models using high-frequency data. Econ. Stat. 2018, 7, 115–133.

14. Fan, P.; Lan, Y.; Chen, M. The estimating method of var based on pgarch model with high-frequency data. Syst. Eng. 2017, 37, 2052–2059.

15. Deng, C.; Zhang, X.; Li, Y.; Song, Z. On the test of the volatility proxy model. Commun. Stat. Simul. Comput. 2022, 51, 7390–7403.

16. Liang, X.; Zhang, X.; Li, Y.; Deng, C. Daily nonparametric ARCH(1) model estimation using intraday high frequency data. AIMS Math. 2021, 6, 3455–3464.

17. Box, G.; Pierce, D. Distribution of Residual Autocorrelations in Autoregressive Integrated Moving Average Time Series Models. J. Am. Stat. Assoc. 1970, 65, 1509–1526.

18. Granger, C.; Granger, C.W.J.; Andersen, A.P. An introduction to bilinear time series models. Int. Stat. Rev. 1978, 8, 7–94.

19. McLeod, A.; Li, W. Diagnostic Checking ARMA Time Series Models Using Squared-Residual Autocorrelations. J. Time Ser. Anal. 1983, 4, 269–273.

20. Engle, R.; Bollerslev, T. Modelling the persistence of conditional variances. Econom. Rev. 1986, 5, 1–50.

21. Pantula, S.G. Estimation of Autoregressive Models with Arch Errors. Sankhyā Indian J. Stat. 1988, 50, 119–138.

22. Li, W.K.; Mak, T.K. On the squared residual autocorrelations in non-linear time series with conditional heteroskedasticity. J. Time Ser. Anal. 1994, 15, 627–636.

23. Peng, L.; Yao, Q. Least absolute deviations estimation for ARCH and GARCH models. Biometrika 2003, 90, 967–975.

24. Li, G.; Li, W.K. Diagnostic checking for time series models with conditional heteroscedasticity estimated by the least absolute deviation approach. Biometrika 2005, 92, 691–701.

25. Carbon, M.; Francq, C. Portmanteau Goodness-of-Fit Test for Asymmetric Power GARCH Models. Aust. N. Z. J. Stat. 2011, 40, 55–64.

26. Chen, M.; Zhu, K. Sign-based portmanteau test for ARCH-type models with heavy-tailed innovations. J. Econom. 2015, 189, 313–320.

27. Li, M.; Zhang, Y. Bootstrapping multivariate portmanteau tests for vector autoregressive models with weak assumptions on errors. Comput. Stat. Data Anal. 2022, 165, 107321.

28. Ulam, S.; von Neumann, J. Monte Carlo Calculations in Problems of Mathematical Physics. Bull. New Ser. Am. Math. Soc. 1947, 53, 1120–1126.

29. Uhlenbeck, G.E.; Ornstein, L.S. On the Theory of the Brownian Motion. Phys. Rev. 1930, 36, 823–841.

30. Brown, R. A Brief Account of Microscopical Observations Made in the Months of June, July and August, 1827, on the Particles Contained in the Pollen of Plants; and on the General Existence of Active Molecules in Organic and Inorganic Bodies. Philos. Mag. 1828, 4, 161–173.

31. Pan, J.; Wang, H.; Tong, H. Estimation and tests for power-transformed and threshold GARCH models. J. Econom. 2008, 142, 352–378.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).