Abstract

We consider five types of entropies for Gaussian distribution: Shannon, Rényi, generalized Rényi, Tsallis and Sharma–Mittal entropy, establishing their interrelations and their properties as the functions of parameters. Then, we consider fractional Gaussian processes, namely fractional, subfractional, bifractional, multifractional and tempered fractional Brownian motions, and compare the entropies of one-dimensional distributions of these processes.

Keywords:

Shannon entropy; Rényi entropy; Tsallis entropy; Sharma–Mittal entropy; normal distribution; fractional Brownian motion; subfractional Brownian motion; bifractional Brownian motion; multifractional Brownian motion; tempered fractional Brownian motion

MSC:

94A17; 60G15; 60G22

1. Introduction

The concept of entropy as a measure of the chaos of a dynamical system has been known for a long time, and this concept is used in numerous applications, starting with the physics of the universe and continuing with chemical reactions, hacking attacks and medical measurements.

The concept of entropy for a random variable was introduced by Shannon [1] to characterize the irreducible complexity inherent in a specific form of randomness. Nowadays, entropy measures have a wide range of potential applications across various fields [2], including information theory, machine learning, thermodynamics, information security, biology, finance, environmental sciences, social sciences, psychology and the study of complex systems. For instance, entropy is used in data compression, decision tree construction [3], statistical mechanics [4], cryptography [5], genetics [6], market analysis [7], climate analysis [8], social network analysis [9] and psychological studies [10]. Entropy measures help quantify information, predictability, complexity and other characteristics in these fields.

The notion of entropy is closely intertwined with the theory of quantum information, as developed in [11]. Recent advancements in this field can be explored in the work by Rahman et al. [12]. Entropy plays a pivotal role in practical applications, notably in signal processing and network traffic analysis. It is employed in the development of algorithms for detecting DDoS attacks [13]. Furthermore, entropy measurements are applied in medical and biological studies, where they facilitate the differentiation of pathologies and aging by quantifying physiological complexity. For instance, these concepts have been utilized to distinguish different Alzheimer’s disease states [14] and to classify signals from patients with Parkinson’s disease [15].

From a mathematical point of view, the entropy of a probability distribution is expressed in terms of its density, so once the density is specified, the entropy is not difficult to calculate for specific distributions. Note, however, that there are many different approaches to defining the entropy of a probability distribution: starting with Shannon entropy, the concept was successively generalized by adding new parameters (Rényi, generalized Rényi, Tsallis and Sharma–Mittal entropies). The various definitions of entropy share several basic properties postulated by Alfred Rényi [16].

Rényi entropy [16] generalizes Shannon entropy by introducing an additional parameter that allows for a range of entropy measures. Rényi entropy is used in quantum information theory and quantum statistical mechanics. It helps to describe the entanglement of quantum systems, the behavior of quantum phase transitions and the characterization of quantum states.

Generalized Rényi entropy extends the concept of Rényi entropy by allowing for more flexibility in the choice of the exponent. It is employed in various applications, such as describing the statistics of turbulent flows, analyzing the complexity of biological systems and studying the scaling properties of critical phenomena in condensed matter physics.

Tsallis entropy is another generalization of Shannon entropy, introduced by Constantino Tsallis [17,18]. It introduces a nonextensive parameter to describe systems that do not obey standard statistical mechanics. Tsallis entropy is relevant in the study of complex systems, self-organized criticality and in modeling systems with long-range interactions. It has been applied in various branches of physics, including astrophysics, plasma physics and high-energy particle physics.

Sharma–Mittal entropy [19,20] is a more recent entropy measure that generalizes both Shannon and Tsallis entropy. It introduces two parameters (α and β) to control the balance between order and disorder in a system. While it has not been adopted as widely as Shannon or Tsallis entropy, it has potential applications in various areas of physics, including the study of complex systems and information theory.

In summary, these entropy measures provide different tools for quantifying the information content, complexity and uncertainty in a wide range of physical systems. Depending on the characteristics of the system being studied and the specific questions being asked, one of these entropy measures may be more appropriate and insightful than the others.

All of the indicated entropies can be successfully calculated (and they have already been calculated, for example, in [21]) in the case of a Gaussian distribution, which is the subject of this paper. However, in the presence of additional parameters of the entropy itself (not the distribution), the question immediately arises about the behavior of entropy as a function of the parameter. It is a well-known fact that the Rényi entropy as a function of the parameter decreases. However, its convexity is not a universal property and, in general, depends on the distribution ([22]). Therefore, if we concentrate on Gaussian distribution, we need to investigate the properties of the introduced entropies in as much detail as possible.

Section 2 of this paper is devoted to this issue. More precisely, we begin by revisiting the definitions of various entropies and the corresponding formulas for the entropies of a centered Gaussian distribution with variance $\sigma^2$. Apart from the Shannon entropy, these entropies depend on one or two positive parameters. Our primary objective is to analyze the monotonicity and convexity properties exhibited by these entropy measures as functions of the aforementioned parameters. Additionally, we explore limiting cases in which the entropies may not be well-defined. This exploration allows us to extend the definitions of the entropies through continuity. Furthermore, we establish limiting relationships between various entropy concepts. To substantiate and complement our theoretical findings, we provide several graphical illustrations. It is worth noting that certain theoretical properties, particularly the convexity of the Tsallis entropy, are challenging to analyze analytically. In such cases, we employ numerical investigations, which offer insights into theoretical properties.

Since this paper is devoted to the entropies of the Gaussian distribution, the next logical step is to consider Gaussian processes, which is carried out in Section 3. We restrict ourselves to fractional Gaussian processes, as these objects have numerous applications in technology, finance, economics, biology and other fields. As a rule, fractional processes contain an additional parameter, such as the Hurst index for fractional Brownian motion. Shannon entropy for stationary Gaussian processes, including fractional Gaussian noise, was considered in detail in [23]. The value of this entropy for a multidimensional Gaussian vector depends on the determinant of the covariance matrix, and it is quite difficult to analyze this determinant in higher dimensions. For example, the behavior of the entropy of the vector created from fractional Gaussian noise as a function of the Hurst index was investigated in [24], where the hypothesis that the Shannon entropy increases when the Hurst index H increases from 0 to 1/2 and decreases when H increases from 1/2 to 1 was substantiated numerically; however, analytic confirmation of this hypothesis for higher dimensions is still in progress. Taking this into account, in this paper, we decided to limit ourselves to one-dimensional distributions of fractional Gaussian processes and, instead, to expand the class of processes under consideration.

Namely, we compare the entropies of the one-dimensional distributions of the following fractional processes: fractional Brownian motion, subfractional Brownian motion, Riemann–Liouville fractional Brownian motion, bifractional Brownian motion and three types of multifractional Brownian motion (moving-average, Volterra-type and harmonizable), as well as tempered fractional Brownian motions of the first and second kind. We consider normalized versions of these processes to ensure that their variances at t = 1 are equal to 1. After this normalization, we observe that fractional Brownian motion, subfractional Brownian motion and Riemann–Liouville fractional Brownian motion share the same entropies. Similar formulas apply to bifractional Brownian motion; furthermore, its entropies can be compared to those of fractional Brownian motion depending on the values of t.

For multifractional Brownian motion, we have established that the moving-average and harmonizable versions of this process have the same entropies. These entropies can be compared with the corresponding entropies of Volterra-type multifractional Brownian motion, depending on the behavior of the Hurst function. Lastly, for two versions of tempered fractional Brownian motions, we can numerically compare their entropies depending on the ratio between the multiplicative constants involved in their definitions.

The purpose of this comparison is to study fractional processes from the point of view of the quantity of information contained in their one-dimensional distributions. Previously, these processes were mostly compared in terms of the behavior of their trajectories, which is of interest in financial applications, whereas entropy properties are more relevant in physical applications, for example, in the calculation of the fractal dimension of a solid sample. However, there is also an application to financial models. Namely, the Hurst index of a fractional process affects the behavior of its trajectories: a decrease in the Hurst index makes them more irregular, and vice versa. From the point of view of entropies, however, the situation depends on time: on the interval from zero to one, the variance, and therefore the entropy, increases when the Hurst index decreases, but once time passes through unity, the situation is reversed. This means that so-called rough volatility, which corresponds to the instability of the model, plays a crucial role only on short time intervals.

This paper is organized as follows. In Section 2, we investigate the properties of the various entropies for the centered Gaussian distribution with respect to the parameters, mainly paying attention to monotonicity and convexity. Section 3 is devoted to the entropies of fractional Gaussian processes. Fractional, subfractional and bifractional Brownian motions are studied in Section 3.1, three types of multifractional processes are considered in Section 3.2 and tempered fractional Brownian motions of the first and the second kind are compared in Section 3.3. We supplement our paper with three appendices. Appendix A contains derivations of formulas for the entropies of Gaussian distributions. Appendix B includes an auxiliary lemma necessary for studying the convexity of the entropies in Section 2, while Appendix C provides definitions and properties of special functions involved in the covariance functions of tempered fractional Brownian motions.

2. Shannon, Rényi, Generalized Rényi, Tsallis and Sharma–Mittal Entropies for Normal Distribution: Properties of Entropies as Functions of Their Parameters

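Since all types of entropy are considered in detail for the normal distribution, Definition 1 is stated for distributions that have a density. So, let $f(x)$, $x \in \mathbb{R}$, be the density of a probability distribution.

Definition 1.

- 1.

- The Shannon entropy is given by
$$\mathbf{H}_S = -\int_{\mathbb{R}} f(x)\log f(x)\,dx.$$

- 2.

- The Rényi entropy with index $\alpha>0$, $\alpha\ne1$, is given by
$$\mathbf{H}_R(\alpha) = \frac{1}{1-\alpha}\log\int_{\mathbb{R}} f^{\alpha}(x)\,dx.$$

- 3.

- The generalized Rényi entropy in the case $\alpha\ne\beta$, $\alpha,\beta>0$, is given by
$$\mathbf{H}_{GR}(\alpha,\beta) = \frac{1}{\beta-\alpha}\log\frac{\int_{\mathbb{R}} f^{\alpha}(x)\,dx}{\int_{\mathbb{R}} f^{\beta}(x)\,dx}.$$
The generalized Rényi entropy in the case $\alpha=\beta>0$ is given by
$$\mathbf{H}_{GR}(\alpha) = -\frac{\int_{\mathbb{R}} f^{\alpha}(x)\log f(x)\,dx}{\int_{\mathbb{R}} f^{\alpha}(x)\,dx}.$$

- 4.

- The Tsallis entropy with index $\alpha>0$, $\alpha\ne1$, is given by
$$\mathbf{H}_T(\alpha) = \frac{1}{1-\alpha}\left(\int_{\mathbb{R}} f^{\alpha}(x)\,dx - 1\right).$$

- 5.

- The Sharma–Mittal entropy with positive indices $\alpha\ne1$ and $\beta\ne1$ is defined as
$$\mathbf{H}_{SM}(\alpha,\beta) = \frac{1}{1-\beta}\left[\left(\int_{\mathbb{R}} f^{\alpha}(x)\,dx\right)^{\frac{1-\beta}{1-\alpha}} - 1\right].$$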

Now, let us consider the density function of the normal distribution with zero mean and variance $\sigma^2$:

$$f(x) = \frac{1}{\sigma\sqrt{2\pi}}\exp\left(-\frac{x^2}{2\sigma^2}\right), \quad x\in\mathbb{R}.$$

The next proposition summarizes the formulas for various entropies for this probability density. These formulas are well known (see, e.g., [21]) and can be obtained by straightforward calculations. For the reader’s convenience, we present their proofs in Appendix A.

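Proposition 1.

The following facts hold for the centered normal distribution with variance $\sigma^2$.

- (1)

- The Shannon entropy equals
$$\mathbf{H}_S = \log\sigma + \frac12\bigl(1+\log(2\pi)\bigr).$$

- (2)

- The Rényi entropy ($\alpha>0$, $\alpha\ne1$) equals
$$\mathbf{H}_R(\alpha) = \log\sigma + \frac12\log(2\pi) + \frac{\log\alpha}{2(\alpha-1)}.$$

- (3)

- The generalized Rényi entropy in the case $\alpha=\beta$ equals
$$\mathbf{H}_{GR}(\alpha) = \log\sigma + \frac12\log(2\pi) + \frac{1}{2\alpha},$$
where $\alpha>0$.

- (4)

- The generalized Rényi entropy in the case $\alpha\ne\beta$ equals
$$\mathbf{H}_{GR}(\alpha,\beta) = \log\sigma + \frac12\log(2\pi) + \frac{\log\beta-\log\alpha}{2(\beta-\alpha)}.$$

- (5)

- The Tsallis entropy ($\alpha>0$, $\alpha\ne1$) equals
$$\mathbf{H}_T(\alpha) = \frac{e^{(1-\alpha)\theta}\alpha^{-1/2}-1}{1-\alpha},$$
where $\theta = \log\bigl(\sigma\sqrt{2\pi}\bigr)$.

- (6)

- The Sharma–Mittal entropy for $\alpha,\beta\in(0,1)\cup(1,\infty)$ equals
$$\mathbf{H}_{SM}(\alpha,\beta) = \frac{1}{1-\beta}\left[\bigl(\sigma\sqrt{2\pi}\bigr)^{1-\beta}\alpha^{\frac{1-\beta}{2(\alpha-1)}} - 1\right].$$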
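The closed forms for the centered Gaussian density can be checked against the integral definitions. The following is a minimal sketch, assuming natural logarithms and the standard integral definitions of the entropies. The function names and the shorthand `theta = log(sigma * sqrt(2*pi))` are illustrative choices, not notation confirmed by the source.

```python
import math

def theta(sigma):
    # Shorthand log(sigma * sqrt(2*pi)); an assumption of this sketch.
    return math.log(sigma) + 0.5 * math.log(2.0 * math.pi)

def shannon(sigma):
    return theta(sigma) + 0.5

def renyi(alpha, sigma):
    if abs(alpha - 1.0) < 1e-12:          # continuous extension at alpha = 1
        return shannon(sigma)
    return theta(sigma) + math.log(alpha) / (2.0 * (alpha - 1.0))

def gen_renyi(alpha, beta, sigma):
    if abs(alpha - beta) < 1e-12:         # case alpha = beta
        return theta(sigma) + 1.0 / (2.0 * alpha)
    return theta(sigma) + (math.log(beta) - math.log(alpha)) / (2.0 * (beta - alpha))

def tsallis(alpha, sigma):
    if abs(alpha - 1.0) < 1e-12:          # continuous extension at alpha = 1
        return shannon(sigma)
    return (math.exp((1.0 - alpha) * theta(sigma)) / math.sqrt(alpha) - 1.0) / (1.0 - alpha)

def sharma_mittal(alpha, beta, sigma):
    # Only for beta != 1; uses H_SM = (exp((1-beta) * H_R(alpha)) - 1) / (1-beta).
    return math.expm1((1.0 - beta) * renyi(alpha, sigma)) / (1.0 - beta)

def power_integral(alpha, sigma, n=20000):
    """Trapezoidal approximation of the integral of f(x)**alpha over the real line."""
    half_width = 12.0 * sigma / math.sqrt(alpha)   # tails beyond this are negligible
    h = 2.0 * half_width / n
    total = 0.0
    for k in range(n + 1):
        x = -half_width + k * h
        fx = math.exp(-x * x / (2.0 * sigma ** 2)) / (sigma * math.sqrt(2.0 * math.pi))
        weight = 0.5 if k in (0, n) else 1.0
        total += weight * fx ** alpha
    return total * h

sigma, alpha, beta = 1.7, 0.6, 2.5
I_a = power_integral(alpha, sigma)
# Rényi entropy: closed form versus the integral definition.
print(renyi(alpha, sigma), math.log(I_a) / (1.0 - alpha))
```

The two printed numbers should agree up to quadrature error; the same comparison can be repeated for the other entropies by plugging `power_integral` into their definitions.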

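Now, let us compare the values of $\mathbf{H}_S$ and $\mathbf{H}_R(\alpha)$, and along the way, we will prove one simple useful inequality, which we use in other proofs.

Lemma 1.

For any $\sigma>0$, $\mathbf{H}_S<\mathbf{H}_R(\alpha)$ for $\alpha\in(0,1)$, and $\mathbf{H}_S>\mathbf{H}_R(\alpha)$ for $\alpha>1$.

Proof.

By Proposition 1,

$$\mathbf{H}_S-\mathbf{H}_R(\alpha) = \frac12-\frac{\log\alpha}{2(\alpha-1)} = \frac{\alpha-1-\log\alpha}{2(\alpha-1)}.$$

Therefore, it suffices to prove that the numerator is positive for any $\alpha>0$, $\alpha\ne1$. Obviously, $\alpha-1-\log\alpha=0$ if $\alpha=1$. Moreover, $(\alpha-1-\log\alpha)'=1-\frac1\alpha$ is negative for $\alpha\in(0,1)$ and positive for $\alpha>1$. This means that for any $\alpha>0$, $\alpha\ne1$, it holds that $\log\alpha<\alpha-1$. □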

Now, we consider, step by step, the properties of the entropies introduced in Definition 1 as functions of their parameters. All entropies in this paper are considered for the centered normal distribution, but we shall recall this from time to time. Theorems 1–4 are devoted to the properties of the Rényi, generalized Rényi, Tsallis and Sharma–Mittal entropy, respectively, as functions of the entropy parameters α and β, if the latter parameter is present. All derivatives that are considered in the proofs of these theorems are taken in α; therefore, we omit it in the notations of derivatives. Let us start with the properties of the Rényi entropy as a function of α.

Remark 1.

Theorem 1.

The following facts hold for the centered normal distribution with variance $\sigma^2$ and the corresponding Rényi entropy:

- (1)

- As $\alpha\to1$, the Rényi entropy converges to the Shannon entropy, and at the point $\alpha=1$, the Rényi entropy can be extended by the Shannon entropy to be continuous.

- (2)

- The Rényi entropy is a decreasing and convex function of α.

Remark 2.

The continuity of the Rényi entropy at the point $\alpha=1$ and the fact that it decreases in α are common knowledge; we state them here in order to demonstrate how these properties are realized for the normal distribution. The convexity property is not true for all distributions. This fact was established, e.g., in [22].

Proof.

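(1) According to L’Hôpital’s rule, $\frac{\log\alpha}{\alpha-1}\to1$, and hence $\mathbf{H}_R(\alpha)\to\log\sigma+\frac12\log(2\pi)+\frac12=\mathbf{H}_S$ as $\alpha\to1$. Therefore, the Rényi entropy converges to the Shannon entropy as $\alpha\to1$, and at the point $\alpha=1$, the Rényi entropy can be extended by the Shannon entropy to be continuous.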

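(2) Let us calculate the derivative of the Rényi entropy in $\alpha$:

$$\mathbf{H}_R'(\alpha) = \frac{1-\frac1\alpha-\log\alpha}{2(\alpha-1)^2}.$$

It was established in the proof of Lemma 1 that $\log x<x-1$ for all $x>0$, $x\ne1$. Taking $x=1/\alpha$, we get $1-\frac1\alpha-\log\alpha<0$; therefore, $\mathbf{H}_R'(\alpha)<0$ for such $\alpha$, and the Rényi entropy is a strictly decreasing function in $\alpha$. Note that

$$\lim_{\alpha\to0+}\mathbf{H}_R(\alpha)=+\infty$$

and

$$\lim_{\alpha\to\infty}\mathbf{H}_R(\alpha)=\log\sigma+\frac12\log(2\pi).$$

Therefore, the Rényi entropy decreases from ∞ to $\log\sigma+\frac12\log(2\pi)$. Furthermore,

$$\mathbf{H}_R''(\alpha) = \frac{2\alpha^2\log\alpha-3\alpha^2+4\alpha-1}{2\alpha^2(\alpha-1)^3}.$$

Consider the numerator $n(\alpha)=2\alpha^2\log\alpha-3\alpha^2+4\alpha-1$. Its derivative equals

$$n'(\alpha)=4(\alpha\log\alpha-\alpha+1)>0$$

for $\alpha>0$, $\alpha\ne1$, because $\log\frac1\alpha<\frac1\alpha-1$ for such $\alpha$, which was established in the proof of Lemma 1. We obtain that $n$ is a strictly increasing function on $(0,\infty)$ and $n(1)=0$. This means that $n(\alpha)<0$ for $\alpha<1$, and $n(\alpha)>0$ for $\alpha>1$. Therefore,

$$\frac{n(\alpha)}{(\alpha-1)^3}>0$$

for all $\alpha\ne1$. Consequently, $\mathbf{H}_R''(\alpha)>0$ for $\alpha\ne1$, and so the Rényi entropy is a convex function. □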
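The monotonicity and convexity of the Rényi entropy in α can also be checked on a grid. The sketch below is a numerical illustration only, not a substitute for the proof. It assumes the Gaussian closed form log σ + ½ log(2π) + log α / (2(α − 1)), and the helper names are hypothetical.

```python
import math

def renyi_gauss(alpha, sigma=1.0):
    base = math.log(sigma) + 0.5 * math.log(2.0 * math.pi)
    if abs(alpha - 1.0) < 1e-12:
        return base + 0.5                 # Shannon entropy as the continuous extension
    return base + math.log(alpha) / (2.0 * (alpha - 1.0))

def differences(values):
    """First and second finite differences of equally spaced samples."""
    first = [b - a for a, b in zip(values, values[1:])]
    second = [b - a for a, b in zip(first, first[1:])]
    return first, second

alphas = [0.05 * k for k in range(1, 201)]        # 0.05, 0.10, ..., 10.0
values = [renyi_gauss(a) for a in alphas]
first, second = differences(values)

is_decreasing = all(d < 0 for d in first)         # negative first differences
is_convex = all(d > 0 for d in second)            # positive second differences
print(is_decreasing, is_convex)
```

Since σ enters only through an additive constant, the outcome of the check does not depend on the chosen variance.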

Now, we proceed with the properties of the generalized Rényi entropy for the normal distribution as a function of α and β.

Theorem 2.

Consider the centered normal distribution with variance $\sigma^2$ and the corresponding generalized Rényi entropy.

- (1)

- In the case $\alpha=\beta$, the generalized Rényi entropy $\mathbf{H}_{GR}(\alpha)$ is a decreasing and convex function of α.

- (2)

- In the case $\alpha\ne\beta$, the generalized Rényi entropy $\mathbf{H}_{GR}(\alpha,\beta)$ converges to the generalized Rényi entropy $\mathbf{H}_{GR}(\alpha)$ as $\beta\to\alpha$, and so at the point $\beta=\alpha$, $\mathbf{H}_{GR}(\alpha,\beta)$, considered as the function of β for fixed α, can be extended by $\mathbf{H}_{GR}(\alpha)$ to be continuous.

- (3)

- The generalized Rényi entropy $\mathbf{H}_{GR}(\alpha,\beta)$, considered as the function of β for fixed α, is a decreasing and convex function. The behavior in α with β fixed is symmetric.

Proof.

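(1) By (3), $\mathbf{H}_{GR}(\alpha)=\log\sigma+\frac12\log(2\pi)+\frac{1}{2\alpha}$. This function decreases in $\alpha$ from $+\infty$ to $\log\sigma+\frac12\log(2\pi)$ and is convex. Note that at the point $\alpha=1$, it coincides with the Shannon entropy.

(2) Obviously,

$$\lim_{\beta\to\alpha}\frac{\log\beta-\log\alpha}{\beta-\alpha}=\frac1\alpha.$$

So, $\mathbf{H}_{GR}(\alpha,\beta)\to\mathbf{H}_{GR}(\alpha)$ as $\beta\to\alpha$, and at the point $\beta=\alpha$, $\mathbf{H}_{GR}(\alpha,\beta)$ can be extended by $\mathbf{H}_{GR}(\alpha)$ to be continuous.

(3) Since $\log x$ is a concave function, its slope function is decreasing; therefore, the function

$$\frac{\log\beta-\log\alpha}{\beta-\alpha},$$

considered as the function of $\beta$ for fixed $\alpha$, is a decreasing function. To prove its convexity, we apply Lemma A1, taking

$$g(x)=\log x.$$

Since

$$g'''(x)=\frac{2}{x^3}>0,$$

the function

$$\frac{\log\beta-\log\alpha}{\beta-\alpha},$$

considered as the function of $\beta$ for fixed $\alpha$, is a convex function. The situation with fixed $\beta$ is symmetric. □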
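The slope-function argument can be illustrated numerically. This is a sketch under the assumption that the generalized Rényi entropy of the centered Gaussian equals log σ + ½ log(2π) plus half the slope function of the logarithm between α and β. The helper names and the chosen grid are illustrative.

```python
import math

def slope_of_log(alpha, beta):
    """Slope function of log between alpha and beta; equals 1/alpha when beta = alpha."""
    if abs(beta - alpha) < 1e-12:
        return 1.0 / alpha
    return (math.log(beta) - math.log(alpha)) / (beta - alpha)

def gen_renyi_gauss(alpha, beta, sigma=1.0):
    return math.log(sigma) + 0.5 * math.log(2.0 * math.pi) + 0.5 * slope_of_log(alpha, beta)

alpha = 2.0
betas = [0.1 * k for k in range(1, 81)]           # 0.1, 0.2, ..., 8.0 (passes through beta = alpha)
values = [gen_renyi_gauss(alpha, b) for b in betas]

first = [b - a for a, b in zip(values, values[1:])]
second = [b - a for a, b in zip(first, first[1:])]

decreasing_in_beta = all(d < 0 for d in first)
convex_in_beta = all(d > 0 for d in second)
print(decreasing_in_beta, convex_in_beta)
```

Swapping the roles of the two arguments leaves the value unchanged, which reflects the symmetry mentioned in the theorem.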

Now, we proceed with the properties of the Tsallis entropy as a function of α.

Theorem 3.

Consider, as before, the centered normal distribution with variance $\sigma^2$.

- (1)

- As $\alpha\to1$, the Tsallis entropy converges to the Shannon entropy, and at the point $\alpha=1$, the Tsallis entropy can be extended by the Shannon entropy to obtain a continuous function.

- (2)

- The Tsallis entropy decreases from to when α increases from 0 to .

- (3)

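- Let, as in Proposition 1, $\theta=\log\bigl(\sigma\sqrt{2\pi}\bigr)$, and let $x_0<0$ be the unique root of the equation
$$x^3+\frac32x^2+\frac94x+\frac{15}{8}=0.$$

- (a)

- Let $\theta\ge0$. Then, $\mathbf{H}_T(\alpha)$ is a convex function on the whole interval $(0,\infty)$.

- (b)

- Let $x_0\le\theta<0$. Then, $\mathbf{H}_T(\alpha)$ is a convex function on the interval $(0,x_0/\theta]$.

- (c)

- Let $\theta<x_0$. Then, $\mathbf{H}_T(\alpha)$ is a concave function on the interval $[x_0/\theta,\infty)$.

- (d)

- For any $\theta<0$ (consequently, for any $\sigma<(2\pi)^{-1/2}$), there exist numbers $0<\alpha_1\le\alpha_2$ such that $\mathbf{H}_T(\alpha)$ is a convex function on the interval $(0,\alpha_1)$, and it is a concave function on the interval $(\alpha_2,\infty)$.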

Remark 3.

The property of decreasing is common for the Tsallis entropy if the conditions supplying the equality

and the finite values of the last integral for any are satisfied.

Proof.

According to L’Hôpital’s rule,

This means that the Tsallis entropy converges to the Shannon entropy when $\alpha\to1$, and at the point $\alpha=1$, the Tsallis entropy can be extended by the Shannon entropy to be continuous.

(2) Now, we investigate the monotonicity of the value

First, let us calculate two derivatives of the function Obviously,

It is easy to see that the quadratic function where and This means that is convex, whence its slope function increases when increases from 0 to . In turn, this means that decreases from to when increases from 0 to .

(3) In order to establish the convexity of , denote, as before, and recall that Also,

Then,

and

According to the Taylor formula,

Therefore,

It is easy to calculate the 3rd derivative of function g:

where Function

is increasing on with the unique root Consider several cases. In some of them, we can produce analytical inference about the sign of ; in other cases, numerics are necessary.

- (a)

- Let . Then, , and for all ; consequently, , and for all This means that in the case , is a convex function on the whole interval .

- (b)

- Let Then, and Consequently, and . Similarly, let . Then, and Consequently, and . This means that in the case , is a convex function on the interval

- (c)

- Let Then, ; therefore, , and consequently, , whence . Let Then, and whence . Therefore, in the case , is a concave function on the interval

- (d)

- Analyzing the asymptotics of , and at 0 and at , respectively, we obtain that forFurthermore, for and for , it is sufficient to analyze the sign of the valueThis means that is convex on some interval and concave on some interval , where the first statement is true for any , while the second is true only for .

□
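The change between convex and concave behavior of the Tsallis entropy can be explored with second finite differences. The sketch below assumes the Gaussian closed form (exp((1 − α)θ) α^(−1/2) − 1)/(1 − α) with θ = log(σ√(2π)). The helper names, grids and the values σ = 1 and σ = 0.1 are illustrative choices, and a grid check only suggests, rather than proves, the sign of the second derivative.

```python
import math

def tsallis_gauss(alpha, sigma):
    theta = math.log(sigma) + 0.5 * math.log(2.0 * math.pi)   # assumed shorthand
    if abs(alpha - 1.0) < 1e-12:
        return theta + 0.5                # Shannon entropy as the continuous extension
    return (math.exp((1.0 - alpha) * theta) / math.sqrt(alpha) - 1.0) / (1.0 - alpha)

def second_differences(sigma, start, stop, step):
    """Second finite differences of the Tsallis entropy on [start, stop]."""
    n = int(round((stop - start) / step))
    vals = [tsallis_gauss(start + k * step, sigma) for k in range(n + 1)]
    return [vals[k - 1] - 2.0 * vals[k] + vals[k + 1] for k in range(1, n)]

# sigma = 1 gives theta > 0: the entropy looks convex on the whole grid.
convex_everywhere = all(d > 0 for d in second_differences(1.0, 0.05, 6.0, 0.05))

# sigma = 0.1 gives theta < 0: convex near zero, concave for large alpha.
convex_near_zero = all(d > 0 for d in second_differences(0.1, 0.02, 0.3, 0.01))
concave_for_large_alpha = all(d < 0 for d in second_differences(0.1, 3.0, 6.0, 0.05))

print(convex_everywhere, convex_near_zero, concave_for_large_alpha)
```

Scanning the sign of the second differences between the two regimes for σ = 0.1 gives a rough numerical location of the inflection point.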

Remark 4.

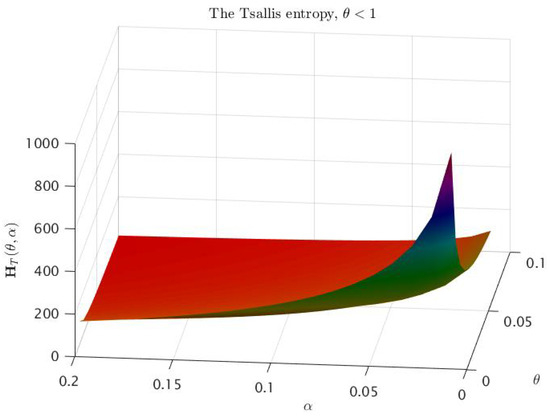

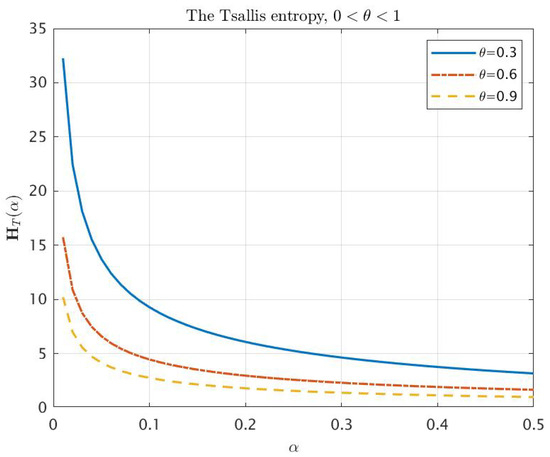

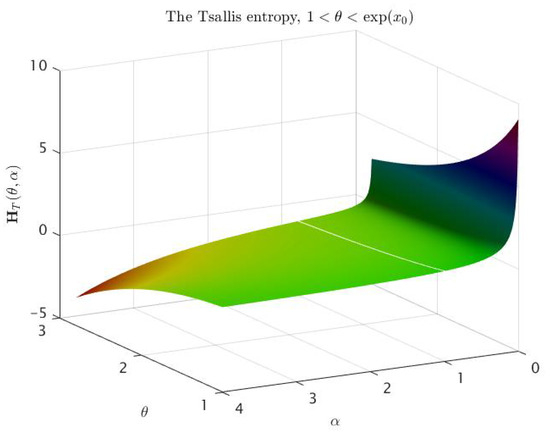

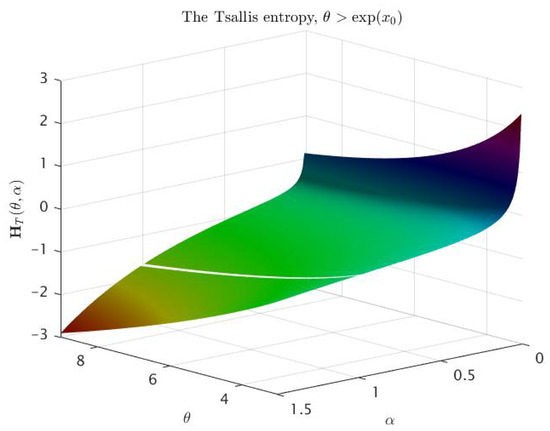

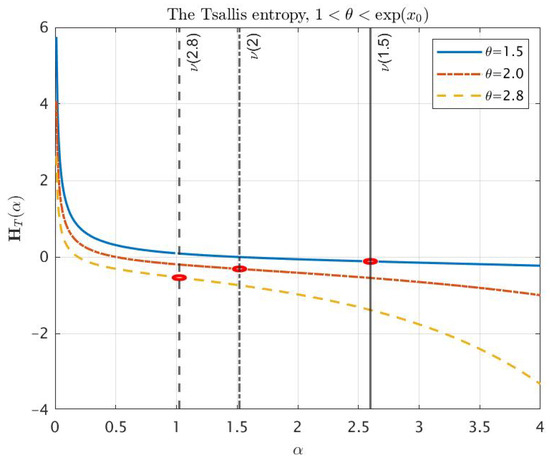

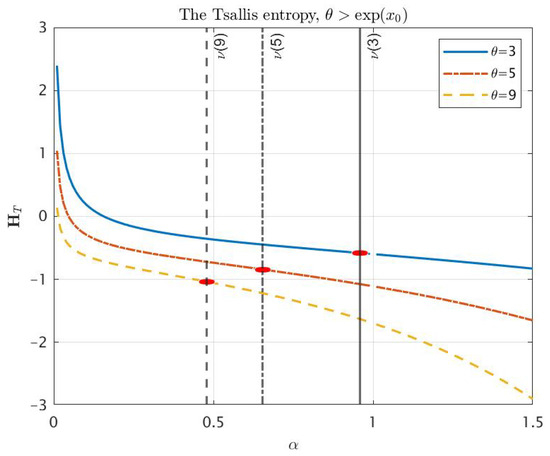

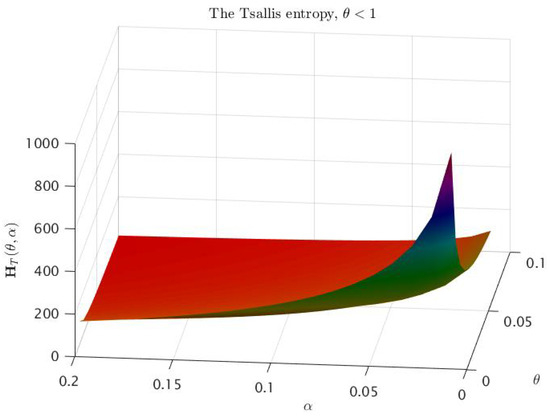

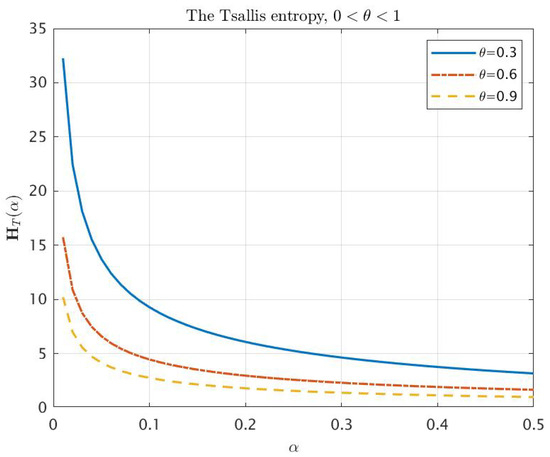

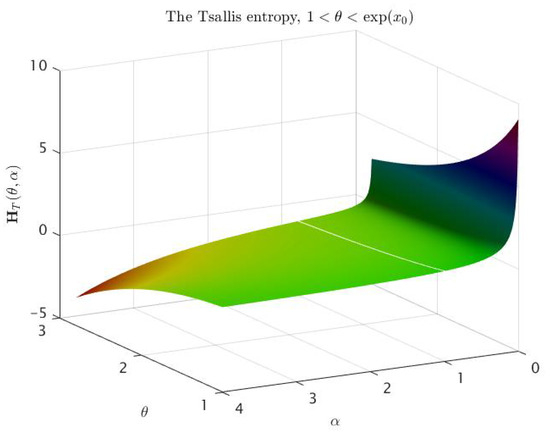

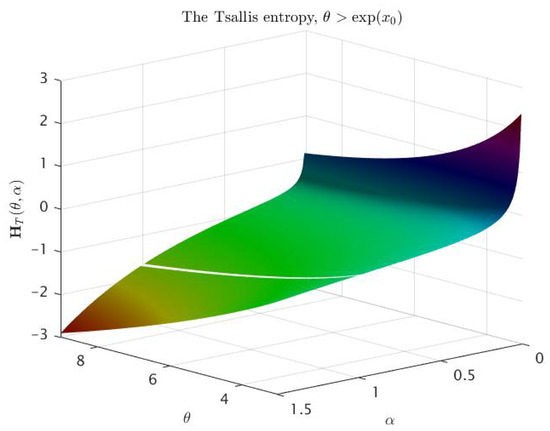

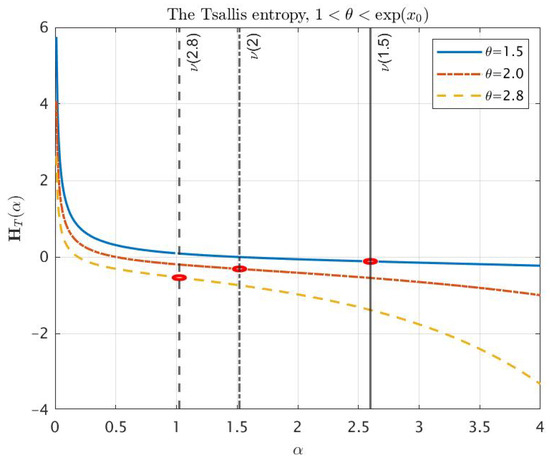

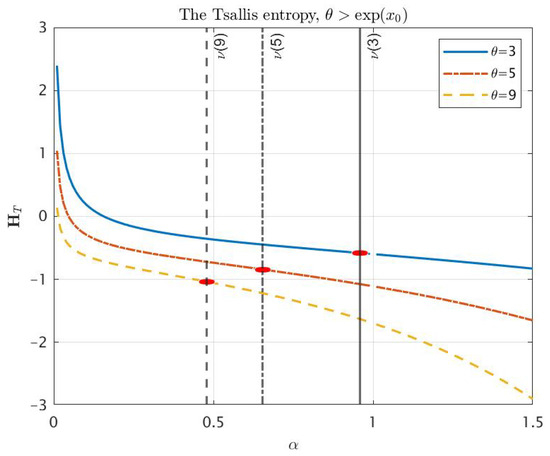

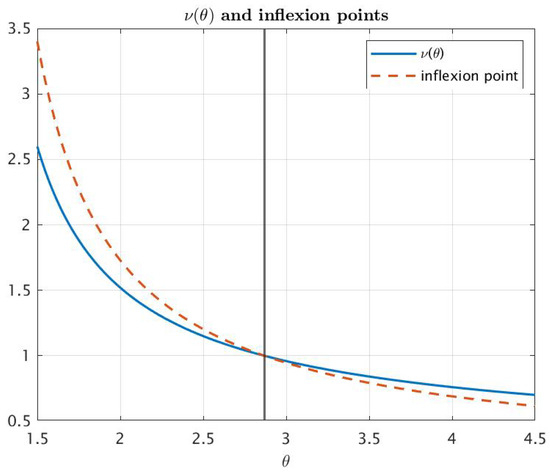

Figure 1 and Figure 2 correspond to the behavior of Tsallis entropy for . Two cases need to be investigated numerically:

and

In both cases, we already know from item of Theorem 3 that is a convex function on the interval and is a concave function on the interval for some . The surfaces plotted on Figure 3 and Figure 4 confirm numerically that for any , there exists the unique inflection point of as the function of α. Furthermore, Figure 5 and Figure 6 give us an idea of the entropy graphs for different θ. The marked points are points of the intersection of the entropy graph with the vertical line . Note that ; therefore, the values of θ in Figure 5 correspond to the interval , while the values in Figure 6 correspond to .

Figure 1.

Tsallis entropy as a function of and , .

Figure 2.

Tsallis entropy as a function of for .

Figure 3.

Tsallis entropy as a function of and , .

Figure 4.

Tsallis entropy as a function of and , .

Figure 5.

Tsallis entropy as a function of for .

Figure 6.

Tsallis entropy as a function of for .

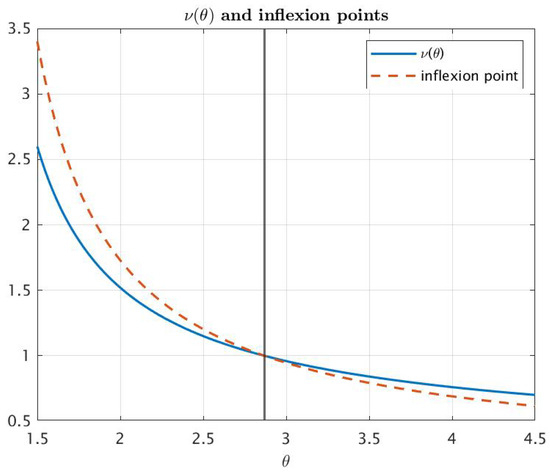

Moreover, we numerically compared the values of the inflection points which are the solutions of the equation (which is equivalent to ; see (7)) with . Figure 7 confirms that the unique inflection point is close to , slightly overcomes for and is less than for . In the case , we have a coincidence of inflection points with

Figure 7.

and inflection points.

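Now, let us study the properties of the Sharma–Mittal entropy as a function of the parameters α and β. It is well known (see [21]) that the Shannon, Rényi and Tsallis entropies are the limiting cases of $\mathbf{H}_{SM}(\alpha,\beta)$, namely

$$\lim_{\alpha,\beta\to1}\mathbf{H}_{SM}(\alpha,\beta)=\mathbf{H}_S,\qquad \lim_{\beta\to1}\mathbf{H}_{SM}(\alpha,\beta)=\mathbf{H}_R(\alpha),\qquad \lim_{\beta\to\alpha}\mathbf{H}_{SM}(\alpha,\beta)=\mathbf{H}_T(\alpha).$$

Therefore, the Sharma–Mittal entropy can be extended to a continuous function of α and β.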
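These limiting relations can be observed numerically for the Gaussian case. The following sketch assumes the representation of the Sharma–Mittal entropy through the Rényi entropy, (exp((1 − β) H_R(α)) − 1)/(1 − β), which follows from the integral definitions. The function names are hypothetical.

```python
import math

def theta(sigma):
    return math.log(sigma) + 0.5 * math.log(2.0 * math.pi)

def shannon_gauss(sigma):
    return theta(sigma) + 0.5

def renyi_gauss(alpha, sigma):
    return theta(sigma) + math.log(alpha) / (2.0 * (alpha - 1.0))

def tsallis_gauss(alpha, sigma):
    return (math.exp((1.0 - alpha) * theta(sigma)) / math.sqrt(alpha) - 1.0) / (1.0 - alpha)

def sharma_mittal_gauss(alpha, beta, sigma):
    # Valid for alpha != 1 and beta != 1; expm1 keeps accuracy when beta is close to 1.
    return math.expm1((1.0 - beta) * renyi_gauss(alpha, sigma)) / (1.0 - beta)

sigma, alpha, eps = 0.8, 2.5, 1e-8
# beta -> 1 recovers the Rényi entropy.
print(sharma_mittal_gauss(alpha, 1.0 + eps, sigma), renyi_gauss(alpha, sigma))
# beta = alpha recovers the Tsallis entropy.
print(sharma_mittal_gauss(alpha, alpha, sigma), tsallis_gauss(alpha, sigma))
# alpha, beta -> 1 recovers the Shannon entropy.
print(sharma_mittal_gauss(1.0 + eps, 1.0 + eps, sigma), shannon_gauss(sigma))
```

Each printed pair should agree to several decimal places, with the residual gap controlled by `eps`.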

Theorem 4.

Consider, as before, the centered normal distribution with variance

- (1)

- Let us denoteFor any fixed , , decreases in , namely as follows:

- (i)

- If , then .

- (ii)

- If , then decreases from to .

- (iii)

- If , then decreases from to 0.

- (2)

- For any fixed , , the function is concave in β if , and it is convex if .

- (3)

- For a fixed , is a decreasing and convex function in α.

Proof.

(1) We have

Denote . Then,

is a slope function for , which is convex. Therefore, is increasing in . Consequently, the statements (i)–(iii) hold.

(2) Let . We can write

where . Since the third derivative is positive for , we see that is convex in by Lemma A1 from Appendix B. Hence, is concave in .

In the case , we represent in the following form:

where . For , we have ; hence, the desired convexity follows from Lemma A1.

(3) It is not hard to see that

where is the Rényi entropy, which is a decreasing function of according to Theorem 1. Hence, decreases. Moreover,

and also decreases in .

Remark 5.

Let us consider the equation , i.e.,

or

According to the proof of Theorem 1 (statement 3), the function increases from to 0 in .

Thus:

- If , then and (iii) holds.

- If , then (iii) holds too.

- If , then let be a number such thatIf , then and (iii) holds. If , then and (ii) holds. If , then and (i) holds.

3. Examples of Gaussian Fractional Processes with Their Variances: Entropies of Fractional Gaussian Processes

Now, we consider several types of fractional Gaussian processes. Our goal is very simple: to compare the entropies of their marginal distributions. In order to compare their entropies and variances correctly, we normalize the variances using normalizing coefficients so that at the point t = 1, the variance of every process equals 1.

As already mentioned in the introduction, the entropy of a vector of fractional Gaussian noise is calculated using the formulas given in the book [23]. However, firstly, these calculations are based on the fact that fractional Gaussian noise is a stationary process, and secondly, using them to compare different processes, even fractional Gaussian noises with different Hurst indices, is too complicated a problem for an analytical solution. The main difficulty is that the formula for the entropy of a Gaussian vector contains the determinant of the covariance matrix. At the moment, there are no simple ways to calculate this determinant apart from cumbersome standard formulas, and these formulas do not make it possible to compare the determinants. Therefore, having at our disposal several classes of fractional processes that model a wide variety of phenomena, from physics to financial mathematics, we set out to compare, in as simple a way as possible, the information they carry, or, more simply, to compare their entropies. The comparisons of entropies presented below are based on calculating the variances of the corresponding processes, and these calculations are quite simple and accessible to a wide range of readers.
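To make the role of the determinant concrete, the sketch below evaluates the Shannon entropy of a vector of fractional Gaussian noise numerically. It relies on two standard facts that are not spelled out in the text above and should be read as assumptions of the sketch: the entropy of an n-dimensional Gaussian vector with covariance matrix Σ equals ½ log((2πe)^n det Σ), and unit-variance fractional Gaussian noise has autocovariance ½(|k+1|^{2H} − 2|k|^{2H} + |k−1|^{2H}). The helper names are hypothetical.

```python
import math

def fgn_autocovariance(k, hurst):
    """Autocovariance of unit-variance fractional Gaussian noise at lag k."""
    k = abs(k)
    p = 2.0 * hurst
    return 0.5 * ((k + 1) ** p - 2.0 * k ** p + abs(k - 1) ** p)

def log_det_spd(matrix):
    """Log-determinant of a symmetric positive definite matrix via Cholesky."""
    n = len(matrix)
    chol = [[0.0] * n for _ in range(n)]
    log_det = 0.0
    for i in range(n):
        for j in range(i + 1):
            s = matrix[i][j] - sum(chol[i][m] * chol[j][m] for m in range(j))
            if i == j:
                chol[i][j] = math.sqrt(s)
                log_det += 2.0 * math.log(chol[i][j])
            else:
                chol[i][j] = s / chol[j][j]
    return log_det

def fgn_shannon_entropy(n, hurst):
    cov = [[fgn_autocovariance(i - j, hurst) for j in range(n)] for i in range(n)]
    return 0.5 * (n * math.log(2.0 * math.pi * math.e) + log_det_spd(cov))

for h in (0.1, 0.3, 0.5, 0.7, 0.9):
    print(h, fgn_shannon_entropy(5, h))
```

For H = 1/2 the covariance matrix is the identity, so the entropy equals (n/2) log(2πe); for other H the diagonal is still 1 and Hadamard's inequality gives a determinant of at most 1, so the entropy cannot exceed that value.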

3.1. Fractional, Subfractional and Bifractional Brownian Motions

Let us start with the definition of fractional Brownian motion. This process was first introduced in [25].

Definition 2.

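A centered Gaussian process $B^H=\{B_t^H, t\ge0\}$ with a covariance function

$$\mathbf{E}B_t^HB_s^H=\frac12\left(t^{2H}+s^{2H}-|t-s|^{2H}\right)$$

is called a fractional Brownian motion (fBm) with the Hurst parameter $H\in(0,1)$.

Obviously, $\mathbf{E}\bigl(B_t^H\bigr)^2=t^{2H}$.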

Definition 3

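([26]). A centered Gaussian process $\xi^H=\{\xi_t^H, t\ge0\}$ with a covariance function

$$\mathbf{E}\xi_t^H\xi_s^H=t^{2H}+s^{2H}-\frac12\left(|t+s|^{2H}+|t-s|^{2H}\right)$$

is called a subfractional Brownian motion with the Hurst parameter $H\in(0,1)$.

Obviously, $\mathbf{E}\bigl(\xi_t^H\bigr)^2=\bigl(2-2^{2H-1}\bigr)t^{2H}$.

Let us put $\widetilde\xi_t^H=\xi_t^H\big/\sqrt{2-2^{2H-1}}$. Then, $\widetilde\xi^H$ has the same variance as fractional Brownian motion.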

Definition 4

([27] (p.71)). The process , defined by

and where is a Wiener process, is called a Riemann–Liouville fractional Brownian motion.

Then

Therefore, the process has the same variance as fractional Brownian motion and subfractional Brownian motion.

Definition 5

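([28]). A centered Gaussian process $B^{H,K}=\{B_t^{H,K}, t\ge0\}$, starting from zero, with a covariance function

$$\mathbf{E}B_t^{H,K}B_s^{H,K}=\frac{1}{2^K}\left(\bigl(t^{2H}+s^{2H}\bigr)^K-|t-s|^{2HK}\right)$$

is called a bifractional Brownian motion with $H\in(0,1)$ and $K\in(0,1]$.

Then

$$\mathbf{E}\bigl(B_t^{H,K}\bigr)^2=t^{2HK}.$$

Obviously, at the point $t=1$, the variance equals 1.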

Proposition 2.

Let X be one of the following processes: , or . Then, one has the following formulas for the entropies of :

- (1)

- The Shannon entropy equals

- (2)

- The Rényi entropy (, ) equalsFor , we extend the Rényi entropy by the Shannon entropy continuously.

- (3)

- The generalized Rényi entropy in the case equalsand for , we extend the generalized Rényi entropy by the Shannon entropy (and Rényi entropy with ) continuously.

- (4)

- The generalized Rényi entropy in the case equalsand for , it can be extended by the generalized Rényi entropy in the case continuously.

- (5)

- The Tsallis entropy (, ) equalsand for , it can be extended by the Shannon entropy continuously.

- (6)

- The Sharma–Mittal entropy for equalsand for , it can be extended by the Rényi entropy continuously.

- (7)

- The same statements hold for with instead of H. This means that any entropy of bifractional Brownian motion with parameters H and K equals the corresponding entropy of fBm with Hurst index HK. In turn, this means that if we fix the same H in fBm and bifractional Brownian motion and take , thenand opposite inequality holds for . For , the situation is more involved: if or , thenand for or , the opposite inequality holds.
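The dependence of these entropies on time and on the Hurst index can be illustrated with a short computation. The sketch assumes that the normalized processes have variance t^{2H} (so σ = t^H in the Gaussian formulas) and that bifractional Brownian motion has variance t^{2HK}. The function names and parameter values are illustrative.

```python
import math

HALF_LOG_2PI = 0.5 * math.log(2.0 * math.pi)

def shannon_fbm(t, hurst):
    # Shannon entropy of N(0, t**(2*hurst)): log(sigma) + 0.5 * (1 + log(2*pi)).
    return hurst * math.log(t) + HALF_LOG_2PI + 0.5

def renyi_fbm(t, hurst, alpha):
    # Rényi entropy of the same marginal, alpha > 0, alpha != 1.
    return hurst * math.log(t) + HALF_LOG_2PI + math.log(alpha) / (2.0 * (alpha - 1.0))

def shannon_bifractional(t, hurst, k):
    # Marginal variance t**(2*hurst*k): same entropy as fBm with Hurst index hurst*k.
    return shannon_fbm(t, hurst * k)

for t in (0.5, 1.0, 2.0):
    rough, smooth = shannon_fbm(t, 0.3), shannon_fbm(t, 0.7)
    print(t, rough, smooth, shannon_bifractional(t, 0.7, 0.5))
```

For t < 1 the smaller Hurst index gives the larger entropy, at t = 1 all values coincide, and for t > 1 the order is reversed, which matches the time dependence described in the introduction.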

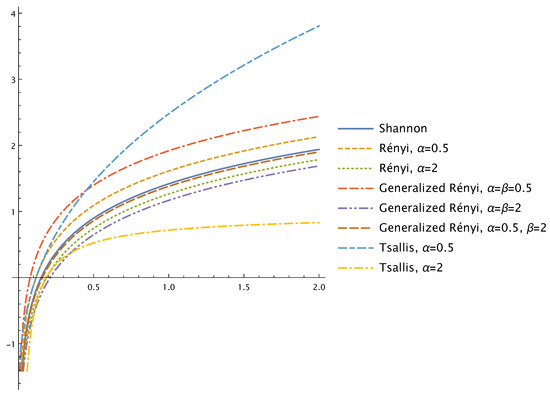

Figure 8 contains graphs of various entropies of fractional Brownian motion with Hurst parameter .

Figure 8.

Various entropies of fractional Brownian motion with Hurst parameter as functions of t.

Remark 6.

It is interesting and natural to compare the variance of fBm with the variance of the corresponding fractional Ornstein–Uhlenbeck process for various H and α and consequently to compare their entropies. Consider the following cases.

Let . Then, according to [29],

If , then , and

Similarly, if , then .

Let . Then, the integral is understood via integration by parts [30]:

Let . Then,

because and similarly .

Let . Denote . Then, our goal is to determine the sign of the value

Now, we change the variables , and take into account that , and similarly, , denote and also take into account the symmetry of the integrand in the 2nd integral and, with all of this at hand, arrive at the value

whose sign is interesting for us. Obviously,

Equivalently, we can consider the sign of function

Obviously, . Furthermore,

This means that for all ; consequently, for all . Together with (16), this finally means that if , and if .

Remark 7.

Note that fractional Ornstein–Uhlenbeck process was generalized in the papers [31,32] to the massive-FBM and the diffusing–diffusivity-FBM. The diffusing–diffusivity-FBM is non-Gaussian, but the massive-FBM can be considered in the framework of fractional Ornstein–Uhlenbeck processes, and the calculations above can help in the comparison of the entropies.

3.2. Multifractional Brownian Motion

Let us consider various definitions of multifractional Brownian motion. The difference is in the form of their representation; the same situation that we have with standard fBm: it admits Mandelbrot–van Ness representation [33] on the whole axis, Molchan–Golosov compact interval representation [29] and spectral representation (Section 7.2.2 of [34]). However, covariance and variance functions of all fBms are the same and can differ only by normalizing multipliers (correct values of the multipliers are provided, for example, in [35]). Considering different representations of multifractional Brownian motion, the authors introduce different normalizing multipliers, basically following the form of this factor for the corresponding representation of fractional Brownian motion, but in this case, they depend on time. Below, we provide these representations and analyze the relations between them and the behavior of the normalizing multipliers as the functions on time, because their value influences the value of variance and consequently the value of the entropy. Obviously, if we renormalize the processes in order to equate their variances, the entropies become equal.

Let be a continuous function.

Definition 6

([36]). For , the following random function is called moving-average multifractional Brownian motion with functional parameter H:

where W denotes the Brownian motion.

It follows from Cor. 3.4 of [33] that

where

The function can be written in the following form (see Appendix A of [35]):

Let us consider the process . Then,

Remark 8.

The coefficient in (17) goes back to the seminal work of Mandelbrot and van Ness [33], who defined fractional Brownian motion as a fractional integral of the Wiener process (this factor ensures that a fractional integral becomes an ordinary repeated integral for integer values of ). However, in the literature, the moving-average multifractional Brownian motion is often defined with a different normalizing constant, namely, it is defined as . In this case, we obviously have that .

Let us consider a different type of multifractional Brownian motion, introduced in [37,38]. It is based on the Molchan–Golosov compact interval representation of fBm [29]. Note that in the next definition, the class of appropriate Hurst functions is restricted to the case .

Definition 7

([37]). Let . For , the following random function is called Volterra-type multifractional Brownian motion with functional parameter H:

where W denotes the Brownian motion.

Then, by Prop. 2 of [37],

where

Hence, the process has the variance

and .

Remark 9.

In [38], the authors defined the Volterra-type multifractional Brownian motion with a normalizing function in front of the integral, i.e., by the relation . Evidently, in this case, one has .

Definition 8

([39]). The harmonizable multifractional Brownian motion with functional parameter H is defined by

where is the “Fourier transform” of the white noise that is a unique complex-valued random measure such that for all

see [39,40].

It is known from Prop. 4 of [41] that

where

Define the normalized version of the harmonizable multifractional Brownian motion by so that

Proposition 3.

One has the following formulas for the entropies of , .

- (1) The Shannon entropy equals

- (2) The Rényi entropy (, ) equals

- (3) The generalized Rényi entropy in the case equals

- (4) The generalized Rényi entropy in the case equals

- (5) The Tsallis entropy (, ) equals

- (6) The Sharma–Mittal entropy for equals

Now, let us compare entropies for various versions of multifractional Brownian motion. Recall that the Volterra-type multifractional Brownian motion is well-defined only for .

Proposition 4.

Let and .

- (1) For all , .

- (2) Let . Then

Proof.

It follows immediately from (18) and (21) that for all . This means that for any Hurst function ,

i.e., the entropies for and coincide.

According to Remark 1, all entropies are increasing functions of the variance. Therefore, it suffices to compare the variances of moving-average and Volterra-type multifractional Brownian motions.

Hence,

Since the function decreases for , we see that if and only if . □
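The reduction used in this proof, namely that every entropy of a centered normal law is an increasing function of its variance, can be checked numerically. The following is a minimal Python sketch, not the authors' code. It assumes the standard definitions of the Rényi, Tsallis and Sharma–Mittal entropies in terms of the integral of the $\alpha$-th power of the density, with natural logarithms; the normalization used in the paper may differ. The function names are hypothetical.

```python
import math


def power_integral(var, alpha):
    """Integral of f**alpha over the real line for the N(0, var) density f.

    Closed form: (2*pi*var)**((1 - alpha)/2) / sqrt(alpha), alpha > 0.
    """
    return (2.0 * math.pi * var) ** ((1.0 - alpha) / 2.0) / math.sqrt(alpha)


def shannon(var):
    """Shannon entropy of N(0, var): 0.5 * log(2*pi*e*var)."""
    return 0.5 * math.log(2.0 * math.pi * math.e * var)


def renyi(var, alpha):
    """Renyi entropy (assumed standard form), alpha > 0, alpha != 1."""
    return math.log(power_integral(var, alpha)) / (1.0 - alpha)


def tsallis(var, alpha):
    """Tsallis entropy (assumed standard form), alpha > 0, alpha != 1."""
    return (1.0 - power_integral(var, alpha)) / (alpha - 1.0)


def sharma_mittal(var, alpha, beta):
    """Sharma-Mittal entropy (assumed standard form), alpha != 1, beta != 1."""
    base = power_integral(var, alpha) ** ((1.0 - beta) / (1.0 - alpha))
    return (base - 1.0) / (1.0 - beta)


def is_increasing(values):
    """True if the sequence is strictly increasing."""
    return all(x < y for x, y in zip(values, values[1:]))


if __name__ == "__main__":
    variances = [0.25, 1.0, 2.0, 9.0]
    print(is_increasing([shannon(v) for v in variances]))
    print(is_increasing([renyi(v, 2.0) for v in variances]))
    print(is_increasing([tsallis(v, 0.5) for v in variances]))
    print(is_increasing([sharma_mittal(v, 2.0, 0.5) for v in variances]))
```

Under these assumptions, comparing the entropies of two Gaussian marginals at a fixed time reduces to comparing two positive numbers, the variances, which is the step the proof relies on.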

3.3. Tempered Fractional Brownian Motion

Two classes of continuous Gaussian stochastic processes, known as tempered fractional Brownian motion (TFBM) and tempered fractional Brownian motion of the second kind (TFBMII), were recently introduced in [42] and [43], respectively. These processes modify the power law kernel used in the moving-average representation of fBm by introducing exponential tempering. Unlike standard fBm, TFBMs can be defined for any Hurst parameter value . These processes have attracted the attention of researchers in various fields. Notably, a stochastic phenomenological bifurcation of the Langevin equation perturbed by TFBM was constructed in [44], revealing diverse and intriguing bifurcation phenomena. Additionally, TFBM and TFBMII are valuable as stochastic models for data that exhibit fractional Brownian motion characteristics at intermediate scales but deviate from them at longer scales, such as wind speed measurements.

Definition 9.

Given an independently scattered Gaussian random measure on with control measure dx, for any and , the stochastic process defined by the Wiener integral

where is called a tempered fractional Brownian motion (TFBM).

According to [45] (p. 7), TFBM has the covariance function

for any , where

where and is the modified Bessel function of the second kind (see Appendix C). Therefore, its variance equals

Definition 10.

Given an independently scattered Gaussian random measure on with control measure dx, for any and , the stochastic process defined by the Wiener integral

where

is called a tempered fractional Brownian motion of the second kind (TFBMII).

According to [45] (p. 7), TFBMII has the covariance function

for any , where

and is the generalized hypergeometric function, defined in Appendix C. Therefore, the corresponding variance equals

Let us define

Then,

and .

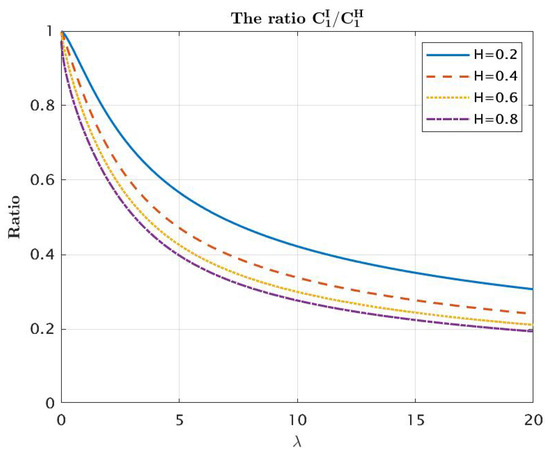

According to Remark 1, in order to compare the entropies of and , it suffices to compare their variances. By (30), this problem can be reduced to the investigation of the behavior of the ratio . Namely, we need to compare its value at an arbitrary point t to its value at . Note also that the dependence of and on is such that , . Therefore, it suffices to study the ratio as a function of . As can be seen from Figure 9, this ratio decreases in for all selected values of H. Thus, our numerical study leads to the following conjecture:

and

Figure 9.

The ratio as a function of .

An analytical proof of this conjecture is challenging due to the complexity of expressions (28) and (29).

Remark 10.

For the reader’s convenience, the MATLAB scripts for the figures are published as Supplementary Material.

4. Conclusions

We examined five distinct entropy measures applied to the Gaussian distribution: Shannon entropy, Rényi entropy, generalized Rényi entropy, Tsallis entropy and Sharma–Mittal entropy. We investigated their interrelationships and analyzed their properties in terms of their dependence on specific parameters. Furthermore, our study extends to fractional Gaussian processes, encompassing fractional Brownian motion, subfractional Brownian motion, bifractional Brownian motion, multifractional Brownian motion and tempered fractional Brownian motion. We conducted a comparative analysis of the entropies associated with the one-dimensional distributions of these processes.

Entropy measures find widespread application in the analysis of fractional processes across various domains, such as signal processing, finance, climate science and image analysis. Fractional processes serve as essential models for capturing long-range dependence and self-similarity in diverse data types. Entropy plays a crucial role in quantifying the complexity and information content of signals generated by fractional processes, which proves invaluable for tasks like prediction, risk assessment and anomaly detection. In the realm of finance, entropy is employed to assess the information content and predictability of asset prices.

Our research opens up possibilities for future extensions in several directions. Potential avenues for further investigation include exploring various entropy measures for non-Gaussian processes, nonstationary processes and processes with nonstationary increments. Additionally, we can delve into the solutions of stochastic differential equations that describe the interactions of particle systems within random environments.

Supplementary Materials

The following supporting information can be downloaded at https://www.mdpi.com/article/10.3390/axioms12111026/s1: the MATLAB scripts for the figures.

Author Contributions

Investigation, A.M., Y.M., K.R. and Y.A.R.; writing—original draft preparation, A.M., Y.M., K.R. and Y.A.R. All authors have read and agreed to the published version of the manuscript.

Funding

The second author is supported by The Swedish Foundation for Strategic Research, grant Nr. UKR22-0017 and by Japan Science and Technology Agency CREST, project reference number JPMJCR2115. The third author acknowledges that the present research is carried out within the frame and support of the ToppForsk project nr. 274410 of the Research Council of Norway with title STORM: Stochastics for Time-Space Risk Models.

Data Availability Statement

No new data were created or analyzed in this study. Data sharing is not applicable to this article.

Conflicts of Interest

The authors declare no conflict of interest.

Appendix A. Computation of Entropies for Centered Normal Distribution

Appendix A.1. Shannon Entropy

The following transformations are obvious:

Appendix A.2. Rényi Entropy

Appendix A.3. Generalized Rényi Entropy

Let us calculate the generalized Rényi entropy in the case . We denote and use Formula (A1):

To calculate the generalized Rényi entropy in the case , we use Formula (A1):

Appendix A.4. Tsallis Entropy

The Tsallis entropy (, ) can be calculated using Formula (A1) as follows:

Appendix A.5. Sharma–Mittal Entropy

The Sharma–Mittal entropy (, , ) is calculated similarly. By (A1), we have
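The computations in this appendix all rest on the integral of a power of the normal density, so a numerical cross-check is easy to set up. The sketch below is illustrative only. It assumes that the closed form $\int f^{\alpha}\,dx = (2\pi\sigma^2)^{(1-\alpha)/2}\alpha^{-1/2}$ holds for the centered normal density $f$ with variance $\sigma^2$ (whether this is exactly Formula (A1) is an assumption), and it compares this value and the Shannon entropy $\frac12\log(2\pi e\sigma^2)$ with a Simpson-rule quadrature. All function names are hypothetical.

```python
import math


def normal_pdf(x, var):
    """Density of the centered normal distribution with variance var."""
    return math.exp(-x * x / (2.0 * var)) / math.sqrt(2.0 * math.pi * var)


def simpson(g, a, b, n=20000):
    """Composite Simpson rule on [a, b] with an even number n of subintervals."""
    h = (b - a) / n
    total = g(a) + g(b)
    for k in range(1, n):
        total += (4 if k % 2 else 2) * g(a + k * h)
    return total * h / 3.0


def power_integral_closed(var, alpha):
    """Assumed closed form of the integral of f**alpha, alpha > 0."""
    return (2.0 * math.pi * var) ** ((1.0 - alpha) / 2.0) / math.sqrt(alpha)


def power_integral_numeric(var, alpha):
    """Quadrature of f**alpha over a window wide enough to make the tails negligible."""
    half_width = 12.0 * math.sqrt(var / min(alpha, 1.0))
    return simpson(lambda x: normal_pdf(x, var) ** alpha, -half_width, half_width)


def shannon_numeric(var):
    """Quadrature of -f*log(f) for the N(0, var) density."""
    half_width = 12.0 * math.sqrt(var)

    def integrand(x):
        f = normal_pdf(x, var)
        return -f * math.log(f) if f > 0.0 else 0.0

    return simpson(integrand, -half_width, half_width)


if __name__ == "__main__":
    for var, alpha in [(1.0, 0.5), (2.0, 2.0), (0.3, 3.0)]:
        print(var, alpha, power_integral_numeric(var, alpha), power_integral_closed(var, alpha))
    print(shannon_numeric(2.0), 0.5 * math.log(2.0 * math.pi * math.e * 2.0))
```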

Appendix B. Auxiliary Lemma

Lemma A1.

Let function and Then, the function

is convex.

Proof.

Let us calculate the derivatives:

According to the Taylor formula,

where is between x and , i.e.,

Therefore,

Finally,

Consequently, the function is convex. □

Appendix C. Special Functions $K_\nu$ and ${}_2F_3$

In this appendix, we present definitions of two special functions, $K_\nu$ and ${}_2F_3$, which we used in Section 3.3.

A modified Bessel function of the second kind $K_\nu$ has the integral representation

where . The function also has the series representation

where is called the Bessel function. We refer the reader to Section 8.43 of [46] for more information about the modified Bessel function of the second kind.
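For readers who wish to evaluate the modified Bessel function of the second kind without a special-function library, the following Python sketch may help. It assumes the classical integral representation $K_\nu(x)=\int_0^\infty e^{-x\cosh t}\cosh(\nu t)\,dt$, $x>0$, which may or may not be the representation displayed above, and approximates it by the Simpson rule on a truncated interval. The function name `bessel_k` and the truncation threshold are hypothetical choices made for illustration.

```python
import math


def bessel_k(nu, x, n=4000):
    """Approximate K_nu(x) for x > 0 and moderate nu.

    Uses the assumed representation K_nu(x) = int_0^inf exp(-x*cosh(t)) * cosh(nu*t) dt.
    """
    if x <= 0.0:
        raise ValueError("x must be positive")

    # The integrand is bounded by exp(-(x*cosh(t) - |nu|*t)); truncate the
    # integral at the first grid point where this bound drops below exp(-60).
    upper = 1.0
    while x * math.cosh(upper) - abs(nu) * upper < 60.0:
        upper += 0.5

    def integrand(t):
        return math.exp(-x * math.cosh(t)) * math.cosh(nu * t)

    # Composite Simpson rule with n (even) subintervals on [0, upper].
    h = upper / n
    total = integrand(0.0) + integrand(upper)
    for k in range(1, n):
        total += (4 if k % 2 else 2) * integrand(k * h)
    return total * h / 3.0


if __name__ == "__main__":
    x = 1.0
    # For nu = 1/2 the function is elementary: sqrt(pi / (2x)) * exp(-x).
    print(bessel_k(0.5, x), math.sqrt(math.pi / (2.0 * x)) * math.exp(-x))
```

The elementary case $\nu = 1/2$ gives a convenient sanity check, and the symmetry $K_{-\nu} = K_{\nu}$ is built into the integrand.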

Next, we define the generalized hypergeometric function ${}_2F_3$ that we used to obtain the variance and covariance of TFBMII. In general, a generalized hypergeometric function ${}_pF_q$ is defined by

where is called the Pochhammer symbol. Therefore,
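The series definition translates directly into code. The sketch below is a plain partial-sum evaluation, not a production routine. It assumes the usual convention ${}_pF_q(a_1,\dots,a_p;b_1,\dots,b_q;z)=\sum_{k\ge 0}\frac{(a_1)_k\cdots(a_p)_k}{(b_1)_k\cdots(b_q)_k}\frac{z^k}{k!}$ with the rising factorial $(a)_k=a(a+1)\cdots(a+k-1)$, $(a)_0=1$. The names `pochhammer` and `hyp_pfq`, the term limit and the tolerance are hypothetical.

```python
import math


def pochhammer(a, k):
    """Rising factorial (a)_k = a*(a+1)*...*(a+k-1), with (a)_0 = 1."""
    result = 1.0
    for j in range(k):
        result *= a + j
    return result


def hyp_pfq(a_params, b_params, z, max_terms=500, tol=1e-17):
    """Partial sum of the generalized hypergeometric series pFq(a; b; z).

    The series converges for every z when p <= q (the case of 2F3) and for
    |z| < 1 when p = q + 1. No b parameter may be zero or a negative integer.
    """
    total = 0.0
    term = 1.0  # the k = 0 term
    for k in range(max_terms):
        total += term
        # Ratio of consecutive terms: prod(a_i + k) / prod(b_j + k) * z / (k + 1).
        ratio = z / (k + 1.0)
        for a in a_params:
            ratio *= a + k
        for b in b_params:
            ratio /= b + k
        term *= ratio
        if term == 0.0 or abs(term) <= tol * abs(total):
            break
    return total


if __name__ == "__main__":
    # 0F1(; 1/2; -z**2/4) equals cos(z); the 2F3 below reduces to the same
    # function because its upper parameters cancel two lower ones.
    z = 2.0
    print(hyp_pfq([], [0.5], -z * z / 4.0), math.cos(z))
    print(hyp_pfq([1.0, 2.0], [1.0, 2.0, 0.5], -z * z / 4.0), math.cos(z))
```

Updating each term from the previous one avoids recomputing the Pochhammer symbols and keeps the intermediate quantities well scaled for moderate arguments.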

References

- Shannon, C.E. A mathematical theory of communication. Bell Syst. Tech. J. 1948, 27, 379–423.

- Cover, T.M.; Thomas, J.A. Elements of Information Theory; Wiley: Hoboken, NJ, USA, 2006.

- Quinlan, J.R. Induction of decision trees. Mach. Learn. 1986, 1, 81–106.

- Pathria, R.K. Statistical Mechanics; Elsevier: Amsterdam, The Netherlands, 2011.

- Schneier, B. Applied Cryptography: Protocols, Algorithms, and Source Code in C; Wiley: Hoboken, NJ, USA, 1996.

- Nei, M.; Tajima, F. DNA polymorphism detectable by restriction endonucleases. Genetics 1981, 97, 145–163.

- Brock, W.; Lakonishok, J.; LeBaron, B. Simple technical trading rules and the stochastic properties of stock returns. J. Financ. 1992, 47, 1731–1764.

- Lorenz, E.N. Deterministic nonperiodic flow. J. Atmos. Sci. 1963, 20, 130–141.

- Wasserman, S.; Faust, K. Social Network Analysis: Methods and Applications; Cambridge University Press: Cambridge, UK, 1994.

- Mullet, E.; Karakus, M. A cross-cultural investigation of the triarchic model of well-being in Turkey and the United States. J. Cross-Cult. Psychol. 2006, 37, 141–149.

- Nielsen, M.A.; Chuang, I.L. Quantum Computation and Quantum Information; Cambridge University Press: Cambridge, UK, 2000.

- Rahman, A.U.; Haddadi, S.; Javed, M.; Kenfack, L.T.; Ullah, A. Entanglement witness and linear entropy in an open system influenced by FG noise. Quantum Inf. Process. 2022, 21, 368.

- Li, K.; Zhou, W.; Yu, S.; Dai, B. Effective DDoS Attacks Detection Using Generalized Entropy Metric. In Proceedings of the Algorithms and Architectures for Parallel Processing; 9th International Conference, ICA3PP 2009, Taipei, Taiwan, 8–11 June 2009; Hua, A., Chang, S.L., Eds.; Springer: Berlin/Heidelberg, Germany, 2009; pp. 266–280.

- Morabito, F.C.; Labate, D.; Foresta, F.L.; Bramanti, A.; Morabito, G.; Palamara, I. Multivariate multi-scale permutation entropy for complexity analysis of Alzheimer’s disease EEG. Entropy 2012, 14, 1186–1202.

- Wu, Y.; Chen, P.; Luo, X.; Wu, M.; Liao, L.; Yang, S.; Rangayyan, R.M. Measuring signal fluctuations in gait rhythm time series of patients with Parkinson’s disease using entropy parameters. Biomed. Signal Process. Control 2017, 31, 265–271.

- Rényi, A. On measures of entropy and information. In Proceedings of the 4th Berkeley Symposium on Mathematical Statistics and Probability, Vol. I, Berkeley, CA, USA, 20 June–30 July 1960; University California Press: Berkeley, CA, USA; Los Angeles, CA, USA, 1960; pp. 547–561.

- Tsallis, C. Possible generalization of Boltzmann-Gibbs statistics. J. Stat. Phys. 1988, 52, 479–487.

- Tsallis, C. The nonadditive entropy Sq and its applications in physics and elsewhere: Some remarks. Entropy 2011, 13, 1765–1804.

- Sharma, B.D.; Taneja, I.J. Entropy of type (α, β) and other generalized measures in information theory. Metrika 1975, 22, 205–215.

- Sharma, B.D.; Mittal, D.P. New non-additive measures of relative information. J. Combin. Inform. Syst. Sci. 1977, 2, 122–132.

- Nielsen, F.; Nock, R. A closed-form expression for the Sharma–Mittal entropy of exponential families. J. Phys. A 2012, 45, 032003.

- Buryak, F.; Mishura, Y. Convexity and robustness of the Rényi entropy. Mod. Stoch. Theory Appl. 2021, 8, 387–412.

- Stratonovich, R.L. Theory of Information and Its Value; Springer: Cham, Switzerland, 2020.

- Malyarenko, A.; Mishura, Y.; Ralchenko, K.; Shklyar, S. Entropy and alternative entropy functionals of fractional Gaussian noise as the functions of Hurst index. Fract. Calc. Appl. Anal. 2023, 26, 1052–1081.

- Kolmogorov, A.N. Wienersche Spiralen und einige andere interessante Kurven im Hilbertschen Raum. Dokl. Acad. Sci. USSR 1940, 26, 115–118.

- Bojdecki, T.; Gorostiza, L.G.; Talarczyk, A. Sub-fractional Brownian motion and its relation to occupation times. Statist. Probab. Lett. 2004, 69, 405–419.

- Mishura, Y.; Zili, M. Stochastic Analysis of Mixed Fractional Gaussian Processes; ISTE Press: London, UK; Elsevier Ltd.: Oxford, UK, 2018.

- Russo, F.; Tudor, C.A. On bifractional Brownian motion. Stoch. Process. Appl. 2006, 116, 830–856.

- Norros, I.; Valkeila, E.; Virtamo, J. An elementary approach to a Girsanov formula and other analytical results on fractional Brownian motions. Bernoulli 1999, 5, 571–587.

- Cheridito, P.; Kawaguchi, H.; Maejima, M. Fractional Ornstein–Uhlenbeck processes. Electron. J. Probab. 2003, 8, 1–14.

- Cherstvy, A.G.; Wang, W.; Metzler, R.; Sokolov, I.M. Inertia triggers nonergodicity of fractional Brownian motion. Phys. Rev. E 2021, 104, 024115.

- Wang, W.; Seno, F.; Sokolov, I.M.; Chechkin, A.V.; Metzler, R. Unexpected crossovers in correlated random-diffusivity processes. New J. Phys. 2020, 22, 083041.

- Mandelbrot, B.B.; Van Ness, J.W. Fractional Brownian motions, fractional noises and applications. SIAM Rev. 1968, 10, 422–437.

- Samorodnitsky, G.; Taqqu, M.S. Stable Non-Gaussian Random Processes; Chapman & Hall: New York, NY, USA, 1994.

- Mishura, Y.S. Stochastic Calculus for Fractional Brownian Motion and Related Processes; Lecture Notes in Mathematics; Springer-Verlag: Berlin, Germany, 2008; Volume 1929.

- Peltier, R.F.; Lévy Véhel, J. Multifractional Brownian Motion: Definition and Preliminary Results; [Research Report] RR-2645; INRIA: Le Chesnay, France, 1995.

- Boufoussi, B.; Dozzi, M.; Marty, R. Local time and Tanaka formula for a Volterra-type multifractional Gaussian process. Bernoulli 2010, 16, 1294–1311.

- Ralchenko, K.; Shevchenko, G. Properties of the paths of a multifractal Brownian motion. Theory Probab. Math. Statist. 2010, 80, 119–130.

- Benassi, A.; Jaffard, S.; Roux, D. Elliptic Gaussian random processes. Rev. Mat. Iberoam. 1997, 13, 19–90.

- Stoev, S.A.; Taqqu, M.S. How rich is the class of multifractional Brownian motions? Stoch. Process. Appl. 2006, 116, 200–221.

- Ayache, A.; Cohen, S.; Lévy Véhel, J. The covariance structure of multifractional Brownian motion, with application to long range dependence. In Proceedings of the 2000 IEEE International Conference on Acoustics, Speech, and Signal Processing. Proceedings (Cat. No. 00CH37100), Istanbul, Turkey, 5–9 June 2000; Volume 6, pp. 3810–3813.

- Meerschaert, M.M.; Sabzikar, F. Tempered fractional Brownian motion. Statist. Probab. Lett. 2013, 83, 2269–2275.

- Sabzikar, F.; Surgailis, D. Tempered fractional Brownian and stable motions of second kind. Statist. Probab. Lett. 2018, 132, 17–27.

- Zeng, C.; Yang, Q.; Chen, Y. Bifurcation dynamics of the tempered fractional Langevin equation. Chaos 2016, 26, 084310.

- Azmoodeh, E.; Mishura, Y.; Sabzikar, F. How does tempering affect the local and global properties of fractional Brownian motion? J. Theoret. Probab. 2022, 35, 484–527.

- Gradshteyn, I.S.; Ryzhik, I.M. Table of Integrals, Series, and Products; Academic Press: New York, NY, USA, 2020.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).