Abstract

The quantum-inspired genetic algorithm (QGA), which combines quantum mechanics concepts with GA to enhance search capability, has become popular and provides an efficient search mechanism. This paper proposes a modified QGA called dynamic QGA (DQGA). The proposed algorithm uses a lengthening chromosome strategy to achieve a balanced and smooth transition between the exploration and exploitation phases and to avoid local optima and premature convergence. In addition, a novel adaptive look-up table for rotation gates is presented to boost the algorithm's optimization abilities. To evaluate the effectiveness of these ideas, DQGA is tested on various mathematical benchmark functions and real-world constrained engineering problems against several well-known and state-of-the-art algorithms. The results indicate the merits of the proposed algorithm and its superiority in solving multimodal benchmark functions and real-world constrained engineering problems.

Keywords:

quantum computing; quantum-inspired algorithms; metaheuristics; numerical optimization; constrained optimization

MSC:

81P68; 68Q12; 65K10; 49M41

1. Introduction

Metaheuristic optimization algorithms have been proposed to tackle complex, high-dimensional problems without comprehensive knowledge of the problem's nature or the derivative information of its search space, which classical optimization methods need in order to locate critical points. Metaheuristics treat optimization problems as black boxes: the inputs, the outputs, and a suitable fitness function are enough to solve the problem. As a result, metaheuristic algorithms are problem-independent, meaning they can be applied to a wide variety of problems with minor modifications. They provide acceptable results in reasonable time for many real-world optimization problems whose computational cost is prohibitively high for conventional algorithms. Examples of applications include image and signal processing [1], engineering and structural design [2], routing problems [3], feature selection [4], stock market portfolio optimization [5], RNA prediction [6], and resource management problems [7].

Han and Kim proposed the prominent genetic quantum algorithm (GQA) [8] and the quantum-inspired evolutionary algorithm [9] for combinatorial optimization problems, using qubit representation and quantum rotation gates instead of the crossover operator of the genetic algorithm (GA). Representing individuals with probabilistic qubits rather than bits increases the diversity of the population, which helps the algorithm avoid premature convergence and entrapment in local optima. Recently, a relatively large number of quantum-inspired metaheuristics have been proposed for a wide range of real-world optimization problems. Some recent examples follow. In [10,11,12], quantum-inspired metaheuristics are applied to image processing problems, and structural design applications are presented in [13,14,15,16]. In [17,18,19,20,21,22], quantum-inspired metaheuristics are used for job-scheduling problems, and examples of network applications are found in [23,24,25,26]. In [27], a quantum-inspired metaheuristic is used for quantum circuit synthesis. Other applications include feature selection [28,29], fuzzy c-means clustering [30], stock market portfolio optimization [31], flight control optimization [32,33], antenna positioning [34], airport gate allocation [35], and multi-objective optimization [36]. Comprehensive reviews of quantum-inspired metaheuristics and their variants are presented in [37,38]. This diverse range of applications shows that quantum-inspired metaheuristics have attracted considerable attention and can solve real-world optimization problems effectively.

Metaheuristic optimization algorithms such as GA [39] have also been widely used to solve important optimization problems in quantum information and computation, for example, in distributed quantum computing [40,41,42,43], the design and optimization of quantum circuits [44,45,46], and finding stabilizers of a given subspace [47].

The main challenge in all metaheuristic algorithms is achieving a properly balanced transition between the exploration and exploitation phases. This paper proposes DQGA to establish a smooth transition between these phases. The principal objective of the proposed algorithm is to boost global search ability without degrading local search.

DQGA enhances the search power of QGA with two contributions:

- Lengthening Chromosome Size: DQGA increases the size of the chromosomes during the run, so the precision of the encoded solutions increases over the generations. Low precision in early generations emphasizes global search over detail, favoring diversification, whereas higher precision in the last generations promotes intensification. This ensures a smooth shift from the exploration phase to the exploitation phase. It should be noted that variable chromosome size was introduced in [48] as a way to find a suitable chromosome size that reduces computational time. Also, in [49], the authors used different chromosome sizes to cover coarse-grained and fine-grained parts of a design in topological order. In this paper, however, we use an increasing chromosome size for a different purpose: avoiding local optima and premature convergence.

- Adaptive Rotation Steps: Unlike the look-up table of the original GQA, which uses fixed values in all generations and ignores the current state of the qubits, DQGA uses an adaptive look-up table whose rotation steps depend on the current qubit states and grow as the iterations progress, which improves the search and the exploration–exploitation transition.

The rest of this paper is structured as follows. Section 2 presents the basics of quantum computing and an overview of GQA. The proposed DQGA algorithm is described in Section 3. Section 4 evaluates the proposed algorithm on benchmark functions and real-world engineering optimization problems and compares its results with those of well-known metaheuristic algorithms. Finally, Section 5 concludes this work and outlines future studies.

2. Fundamentals

2.1. Quantum Computing Basics

Just as bits are the basic units of information in classical computing, quantum bits, or qubits, are the units of information in quantum computing. Mathematically, a qubit is a unit vector in a two-dimensional Hilbert space whose basis vectors are denoted by $|0\rangle$ and $|1\rangle$. Unlike classical bits, qubits can be in a superposition of $|0\rangle$ and $|1\rangle$, such as $|\psi\rangle = \alpha|0\rangle + \beta|1\rangle$, where $\alpha$ and $\beta$ are complex numbers such that $|\alpha|^2 + |\beta|^2 = 1$.

If the qubit is measured in the computational basis, i.e., $\{|0\rangle, |1\rangle\}$, the classical outcome 0 is observed with probability $|\alpha|^2$ and the classical outcome 1 with probability $|\beta|^2$. If 0 is observed, the state of the qubit collapses to $|0\rangle$ after the measurement; otherwise, it collapses to $|1\rangle$ [50].

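A register with m qubits is defined as:

$$\begin{bmatrix} \alpha_1 & \alpha_2 & \cdots & \alpha_m \\ \beta_1 & \beta_2 & \cdots & \beta_m \end{bmatrix}, \qquad |\alpha_i|^2 + |\beta_i|^2 = 1, \quad i = 1, \ldots, m.$$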

An m-qubit register can represent $2^m$ states simultaneously. The probability of collapsing into each of these states after measurement is the product of the corresponding squared amplitudes [40]. For example, consider a system composed of three qubits as follows:

The state of the system can be described as:

which gives, for each basis state, the probability that the system collapses into it upon measurement.
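The product rule above can be checked numerically. The following Python sketch enumerates the basis states of a register of independent qubits with real amplitudes and multiplies the squared amplitudes; the three-qubit amplitudes in the usage lines are hypothetical values chosen for illustration, not necessarily those of the example above.

```python
import itertools
import math


def basis_probabilities(register):
    """Return {bit string: probability} for a register of independent qubits.

    `register` is a list of (alpha, beta) amplitude pairs, one per qubit.
    A basis state's probability is the product of |alpha|^2 for each qubit
    read as 0 and |beta|^2 for each qubit read as 1.
    """
    probs = {}
    for bits in itertools.product((0, 1), repeat=len(register)):
        p = 1.0
        for bit, (alpha, beta) in zip(bits, register):
            p *= abs(beta) ** 2 if bit else abs(alpha) ** 2
        probs["".join(str(b) for b in bits)] = p
    return probs


# Hypothetical amplitudes: an equal superposition, a qubit fixed at |0>,
# and a qubit with |beta|^2 = 3/4.
register = [(1 / math.sqrt(2), 1 / math.sqrt(2)), (1.0, 0.0), (0.5, math.sqrt(3) / 2)]
for state, p in basis_probabilities(register).items():
    if p > 1e-12:
        print(state, round(p, 3))
```

Only four of the eight basis states have non-zero probability here (000, 001, 100, and 101), and the probabilities sum to 1.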

Qubits are manipulated through quantum gates. A quantum gate is a reversible linear transformation defined by a unitary matrix U. A complex square matrix U is unitary if its conjugate transpose equals its inverse, so for any unitary matrix U, Equation (4) holds:

$$U^\dagger U = U U^\dagger = I. \qquad (4)$$
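The unitarity condition of Equation (4) is easy to test for small gates. This pure-Python sketch (function names are our own) checks $U^\dagger U = I$ for a rotation matrix of the kind GQA uses, for the Hadamard gate, and for a non-unitary counterexample.

```python
import math


def conj_transpose(u):
    """Conjugate transpose of a matrix stored as a list of rows."""
    return [[complex(u[r][c]).conjugate() for r in range(len(u))] for c in range(len(u[0]))]


def matmul(a, b):
    """Plain matrix product of two list-of-rows matrices."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def is_unitary(u, tol=1e-12):
    """Check U^dagger U = I; for a finite square matrix this also implies U U^dagger = I."""
    n = len(u)
    prod = matmul(conj_transpose(u), u)
    return all(abs(prod[i][j] - (1.0 if i == j else 0.0)) <= tol
               for i in range(n) for j in range(n))


def rotation_gate(delta_theta):
    """2x2 real rotation matrix, the gate type used by GQA."""
    c, s = math.cos(delta_theta), math.sin(delta_theta)
    return [[c, -s], [s, c]]


h = 1 / math.sqrt(2)
print(is_unitary(rotation_gate(0.3)))   # True
print(is_unitary([[h, h], [h, -h]]))    # True (Hadamard gate)
print(is_unitary([[1, 1], [0, 1]]))     # False (a shear matrix is invertible but not unitary)
```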

2.2. GQA

The canonical QGA, called GQA, was presented by Han and Kim [8]. Like its conventional counterpart GA, GQA is population-based and evolves a population over a number of generations. However, it differs from GA in using quantum rotation gates instead of the classical crossover operator. The probabilistic nature of the qubit representation makes the mutation operator dispensable, so GQA has no mutation operator.

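Like its ancestor, GQA evolves a population of individuals over the generations, but unlike in GA, the individuals in GQA consist of qubits instead of bits. The population at generation t is defined as:

$$Q(t) = \{q_1^t, q_2^t, \ldots, q_n^t\}, \qquad (5)$$

where n is the population size and $q_j^t$ is a qubit chromosome defined by Equation (6),

$$q_j^t = \begin{bmatrix} \alpha_1^t & \alpha_2^t & \cdots & \alpha_m^t \\ \beta_1^t & \beta_2^t & \cdots & \beta_m^t \end{bmatrix}, \qquad (6)$$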

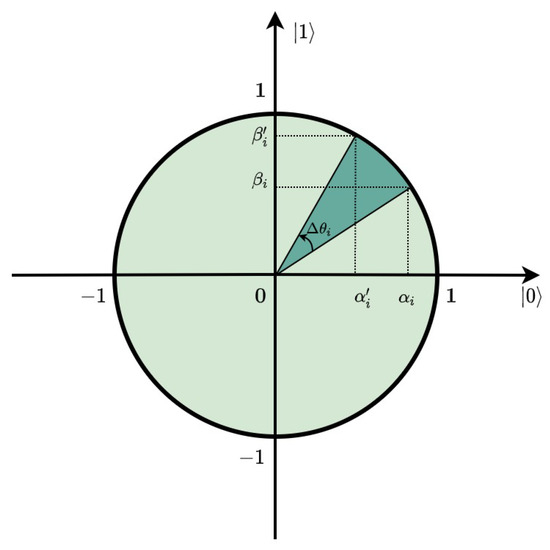

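where m is the chromosome size and $|\alpha_i^t|^2 + |\beta_i^t|^2 = 1$ for $i = 1, \ldots, m$. Updating qubits through the quantum rotation gate is illustrated in Figure 1 and expressed mathematically in Equation (7). To give all qubits an equal chance of being measured as $|0\rangle$ or $|1\rangle$, they are initialized with $\alpha_i = \beta_i = 1/\sqrt{2}$.

$$\begin{bmatrix} \alpha_i' \\ \beta_i' \end{bmatrix} = \begin{bmatrix} \cos\Delta\theta_i & -\sin\Delta\theta_i \\ \sin\Delta\theta_i & \cos\Delta\theta_i \end{bmatrix} \begin{bmatrix} \alpha_i \\ \beta_i \end{bmatrix}, \qquad (7)$$

where $\Delta\theta_i$ is the rotation angle and $\alpha_i'$ and $\beta_i'$ are the probability amplitudes of the qubit after the update. In each generation, the quantum rotation gates push the qubit population to become more likely to collapse into the state of the best individual.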
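The initialization, observation, and rotation steps of GQA described above can be sketched in Python as follows. This is a minimal illustration, assuming real amplitudes and a caller-supplied rotation angle; how GQA chooses $\Delta\theta_i$ (its fixed look-up table) is not modeled, and the function names are our own.

```python
import math
import random


def init_chromosome(m):
    """m qubits in equal superposition, alpha = beta = 1/sqrt(2)."""
    a = 1 / math.sqrt(2)
    return [(a, a) for _ in range(m)]


def observe(chromosome, rng=random):
    """Collapse each qubit to a classical bit: 1 with probability |beta|^2."""
    return [1 if rng.random() < beta ** 2 else 0 for _, beta in chromosome]


def rotate(qubit, delta_theta):
    """Apply the rotation gate of Equation (7) to one (alpha, beta) pair."""
    alpha, beta = qubit
    c, s = math.cos(delta_theta), math.sin(delta_theta)
    return (c * alpha - s * beta, s * alpha + c * beta)


rng = random.Random(0)
q = init_chromosome(8)
print(observe(q, rng))                            # a random 8-bit string
alpha, beta = rotate(q[0], math.pi / 8)
print(round(alpha ** 2, 3), round(beta ** 2, 3))  # 0.146 0.854: |1> is now more likely
```

A positive $\Delta\theta_i$ moves the qubit toward $|1\rangle$ and a negative one toward $|0\rangle$; because the gate is a rotation, $\alpha_i'^2 + \beta_i'^2$ stays equal to 1.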

Figure 1.

Updating a qubit state using quantum rotation gate.

It is worth mentioning that, in a real quantum computer implementation of quantum-inspired metaheuristics, quantum gates are susceptible to noise that can affect the measurements. Because of the stochastic nature of metaheuristics, however, this is not necessarily a drawback. In fact, gate noise can play the role of the mutation operator in conventional GA, as it randomly changes the state of qubits with a small probability, which further diversifies the population.

3. DQGA

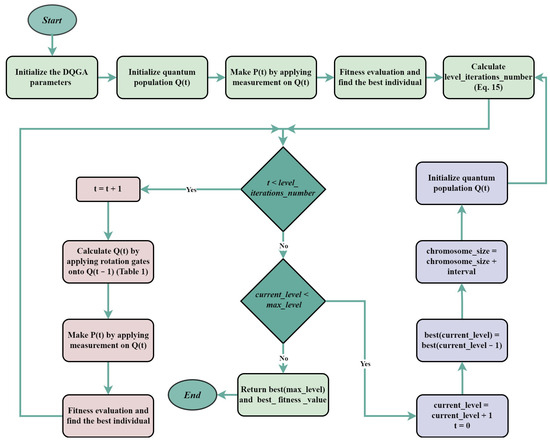

In this section, DQGA is presented. The algorithm uses a lengthening chromosome size strategy and an adaptive look-up table to determine the quantum rotation gates. As the algorithm works on different levels (i.e., different chromosome sizes), the total number of generations must be distributed appropriately among the levels, so we introduce a generation distribution scheme to make the best use of the given number of generations. The pseudo-code of DQGA is given in Algorithm 1, and Figure 2 presents the flowchart of the algorithm. These concepts are explained in detail in the following subsections.

Figure 2.

Flowchart of DQGA.

3.1. Lengthening Chromosome Size Strategy

The lengthening chromosome size strategy lets the chromosomes grow longer as the generations pass. This behavior provides a balanced transition between the exploration and exploitation phases. The algorithm starts with short chromosomes, which yield low-precision answers. At each precision level, the best individual of the previous level becomes the initial best individual of the current level and leads the population toward the promising areas of the search space found so far.

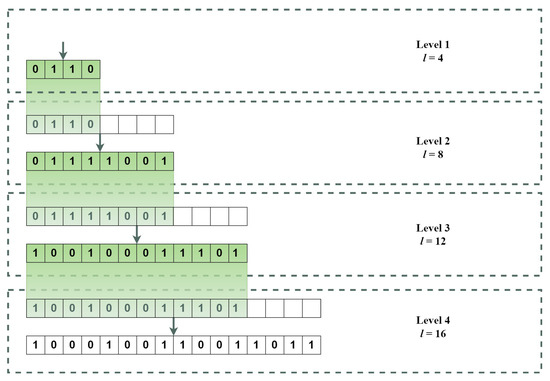

An example of this procedure is depicted in Figure 3, which shows an instance of a four-level run of the algorithm. The chromosome sizes on levels 1 to 4 are 4, 8, 12, and 16, respectively. At the end of the first level, with chromosomes of size four, the best individual is ‘0110’, which is 6 in base 10 and 0.4 after normalization (6/15, since the largest 4-bit value is 15). The first level passes its best individual ‘0110’ to the second level, whose chromosomes have length 8, so the first 4 bits of the second level's best individual are initialized with ‘0110’. After some iterations, level 2 yields ‘01111001’ as its best individual and passes it to level 3. Finally, level 4 inherits its first iteration's best individual from level 3, which is ‘100100011101’ in this example.

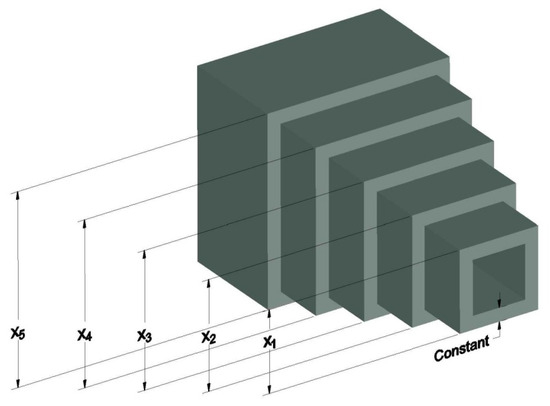

Figure 3.

An example of lengthening chromosomes.
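The decoding used in the Figure 3 example can be reproduced with a short Python sketch. It assumes each dimension is encoded by its own block of bits and mapped linearly onto [lb, ub]. How the bits not covered by the previous level's best individual are filled is not specified in the text, so `seed_next_level` (a hypothetical helper) draws them at random as a labeled assumption.

```python
import random


def decode(bits):
    """Normalize a bit string to [0, 1]: integer value / (2**len - 1)."""
    return int(bits, 2) / (2 ** len(bits) - 1)


def decode_vector(chromosome, dims, lb, ub):
    """Split a chromosome into `dims` equal blocks and map each block to [lb, ub]."""
    size = len(chromosome) // dims
    blocks = [chromosome[i * size:(i + 1) * size] for i in range(dims)]
    return [lb + decode(block) * (ub - lb) for block in blocks]


def seed_next_level(best_bits, new_length, rng=random):
    """Assumption: copy the previous best into the most significant bits and
    fill the remaining positions at random."""
    extra = new_length - len(best_bits)
    return best_bits + "".join(rng.choice("01") for _ in range(extra))


print(decode("0110"))                                # 0.4, as in Figure 3
print(round(decode("01111001"), 4))                  # 0.4745 (121 / 255)
print(seed_next_level("0110", 8, random.Random(3)))  # starts with '0110'
```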

Note that although the most significant bits of each level's best individual are initialized with the preceding level's best individual, these bits do not necessarily remain the same as that level evolves. As a rough analogy, someone looking for a destination on a map first takes an overview of the whole map and then gradually focuses on the areas that seem most likely to contain the target. Figure 4 shows the exponential growth of solution precision as the chromosome size increases.

Algorithm 1.

The pseudo-code of DQGA.

Figure 4.

Exponential growth of precision by increasing the chromosome size. Possible points to represent different chromosome sizes for each dimension are shown.
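The exponential growth in Figure 4 follows from the binary encoding: a block of l bits represents $2^l$ points per dimension, so, assuming a linear mapping of the integer value onto [lb, ub], the spacing between neighboring representable values is $(ub - lb)/(2^l - 1)$. A quick Python check for the chromosome sizes of the Figure 3 example (the unit domain is a hypothetical choice):

```python
def resolution(lb, ub, bits):
    """Gap between adjacent representable values of one dimension."""
    return (ub - lb) / (2 ** bits - 1)


for bits in (4, 8, 12, 16):
    print(bits, 2 ** bits, resolution(0.0, 1.0, bits))
```

Each additional 4 bits multiplies the number of representable points per dimension by 16.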

Table 1.

Look-up table for DQGA.


| $x_i$ | $b_i$ | $x$ fitter than $b$ | $\Delta\theta_i$ |
|---|---|---|---|
| 0 | 0 | false | Equation (15) |
| 0 | 0 | true | Equation (15) |
| 0 | 1 | false | Equation (10) |
| 0 | 1 | true | Equation (12) |
| 1 | 0 | false | Equation (11) |
| 1 | 0 | true | Equation (13) |
| 1 | 1 | false | Equation (14) |
| 1 | 1 | true | Equation (14) |

The main advantage of the lengthening chromosome strategy is local optima avoidance. At the early levels, only a few points of the search space can be represented, because a small number of bits can encode only a few values. Consequently, the chance of landing on a local optimum in the first levels is minuscule. The same is true of the global optimum, but the algorithm does not need to find it at the early stages. It can take its time searching the whole search space, even with low precision, and gradually sharpen its focus in the final levels to exploit the region of the global best solution. In this way, we can be more confident that the algorithm will not become trapped in local optima.

The algorithm starts at a precision level with a minimum chromosome length for each dimension and increases the chromosome length by a fixed number of genes per level until it reaches a maximum length. The number of levels can therefore be calculated by Equation (8).
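Assuming the length grows by a constant increment, one formula consistent with this description and with the four-level example of Figure 3 (lengths 4, 8, 12, and 16) is $N = (l_{\max} - l_{\min})/\Delta l + 1$. The symbols $l_{\min}$, $l_{\max}$, and $\Delta l$ are our own and may differ from those of Equation (8). A Python sketch of this assumed schedule:

```python
def chromosome_lengths(l_min, l_max, delta):
    """Per-dimension chromosome length at each level (hypothetical parameter names)."""
    if delta <= 0 or l_max < l_min or (l_max - l_min) % delta:
        raise ValueError("l_max - l_min must be a non-negative multiple of delta")
    return list(range(l_min, l_max + 1, delta))


def number_of_levels(l_min, l_max, delta):
    """N = (l_max - l_min) / delta + 1 under the constant-increment assumption."""
    return len(chromosome_lengths(l_min, l_max, delta))


print(chromosome_lengths(4, 16, 4))   # [4, 8, 12, 16]
print(number_of_levels(4, 16, 4))     # 4
```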

3.2. Look-Up Table with Adaptive Rotation Steps

In DQGA, we introduce an adaptive look-up table to boost search ability. For simplicity and better control of the quantum search space, all qubit states are limited to angles between 0 and 90 degrees. Qubits with states closer to 90 degrees are more likely to collapse to $|1\rangle$ after measurement, while those closer to 0 degrees are more likely to be measured as $|0\rangle$. During the run, we push the qubits toward states that seem to yield better solutions. In early iterations of each level, the rotation steps toward the fitter state are relatively small, leaving the qubit a chance to be measured even in the opposite state; in this way, the algorithm diversifies the search (exploration phase). As the iterations progress, the rotation steps become larger, making each qubit increasingly likely to be measured in the best solution's state (exploitation phase). The rotation steps are adjusted by a coefficient m, which increases gradually over the iterations of each level and thus enlarges the rotation steps. Coefficient m is calculated using Equation (9).

where a and b are tuning parameters and in this paper we set them to 1.1 and 0.1, respectively.

To determine the rotation step for each qubit, we consider three cases:

- When the i-th bit $b_i$ of the best binary solution b of the previous generation differs from the i-th bit $x_i$ of the current chromosome x, and b is fitter than x, we rotate the corresponding qubit with a huge step in the direction that makes it more likely to collapse into the state of $b_i$. The huge step is given by Equation (10) for $x_i = 0$ and $b_i = 1$ and by Equation (11) for $x_i = 1$ and $b_i = 0$, where m is the adjustment coefficient calculated by Equation (9).

- When $b_i$ and $x_i$ differ and x has a higher fitness value than b, the corresponding qubit is pushed toward the state of $x_i$, but this time with some caution, since the previous iteration's best individual points the other way. This leads to a relatively smaller rotation, called a medium step. Equations (12) and (13) give the medium step for $x_i = 0$, $b_i = 1$ and for $x_i = 1$, $b_i = 0$, respectively.

- The last case is when $x_i$ and $b_i$ are identical. Here it does not matter which individual is fitter, as both share the same state, so the qubit is moved by a tiny step that slightly reinforces the last iteration's best state regardless of the fitness comparison. These minor fluctuations help maintain the diversity of the population. Equation (14) gives the tiny step when $x_i$ and $b_i$ are both ‘1’, and Equation (15) gives it when both are ‘0’.

Because each rotation step depends on the qubit's current state angle, the rotation steps are adaptive: the wider the angle between the current state and the desired ket, the larger the rotation step. Table 1 presents the look-up table of DQGA and summarizes all possible cases and their corresponding rotation steps.
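The case analysis of Table 1 can be expressed as a small dispatch function. The sketch below encodes only the structure of the table (target state and step class). Because Equations (9) to (15) are not reproduced here, the multipliers in `STEP_SCALE` and the linear dependence on the angular distance are hypothetical placeholders; the qubit angle θ is taken with α = cos θ and β = sin θ.

```python
import math

# Hypothetical multipliers for the three step classes; the actual values
# come from Equations (10)-(15) and are not reproduced here.
STEP_SCALE = {"huge": 0.5, "medium": 0.2, "tiny": 0.02}


def lookup(x_i, b_i, x_fitter):
    """Return (target bit, step class) following the cases of Table 1."""
    if x_i == b_i:
        return b_i, "tiny"      # bits agree: slightly reinforce the shared state
    if x_fitter:
        return x_i, "medium"    # x is fitter but b disagrees: move cautiously toward x
    return b_i, "huge"          # b is fitter: move decisively toward b


def update_angle(theta, target_bit, step_class, m):
    """Rotate theta (in [0, pi/2]) toward the target ket by a step proportional
    to the remaining angular distance, scaled by the coefficient m."""
    target = math.pi / 2 if target_bit == 1 else 0.0
    new_theta = theta + m * STEP_SCALE[step_class] * (target - theta)
    return min(max(new_theta, 0.0), math.pi / 2)   # keep states in [0, 90] degrees


theta = math.pi / 4
target, kind = lookup(x_i=0, b_i=1, x_fitter=False)   # (1, "huge")
theta = update_angle(theta, target, kind, m=1.0)
print(kind, round(math.sin(theta) ** 2, 3))            # huge 0.854
```

Making the step proportional to the remaining distance is one simple way to obtain the stated behavior (larger steps for qubits far from the desired ket); increasing m over the iterations then enlarges all steps, as described for coefficient m.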

3.3. Distribution of Generations in Different Precision Levels

As DQGA uses different chromosome sizes during the run, a certain number of iterations must be assigned to each level. Intuitively, levels with small chromosome sizes need fewer iterations to reach a suitable solution than those with more genes, because at low precision the number of potential solutions is far smaller than at high precision. We therefore distribute the total number of iterations so that longer chromosome sizes receive progressively more iterations. Equation (16) gives the number of iterations at each level.

where L is the index of the level and n is the total number of iterations. The numbers of iterations of all levels sum to the total number of generations, n.
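As a concrete illustration of such a scheme, and not the paper's own formula, the Python sketch below assumes that level L receives a share of the n iterations proportional to L, with the rounding leftovers given to the last levels so that the counts add up exactly to n.

```python
def distribute_iterations(n, levels):
    """Hypothetical allocation: level L (1-based) gets about n * L / sum(1..levels)
    iterations; the rounding remainder goes to the last levels."""
    weight_sum = levels * (levels + 1) // 2
    counts = [n * L // weight_sum for L in range(1, levels + 1)]
    for i in range(n - sum(counts)):   # fewer than `levels` leftover iterations
        counts[levels - 1 - i] += 1
    return counts


print(distribute_iterations(500, 4))   # [50, 100, 150, 200]
print(distribute_iterations(100, 3))   # [16, 33, 51]
```

The counts are non-decreasing by construction, so longer chromosomes never receive fewer iterations than shorter ones.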

4. Experimental Results and Comparison Discussion

In this section, the proposed algorithm is applied to various optimization problems to assess its efficiency and performance, and its results are compared with those of other algorithms. DQGA is applied to 10 benchmark functions and three classical constrained engineering problems. Furthermore, the Wilcoxon rank-sum test is applied to assess the significance of the differences between the results of the proposed algorithm and those of the comparison algorithms.

4.1. Testing DQGA on Benchmark Functions

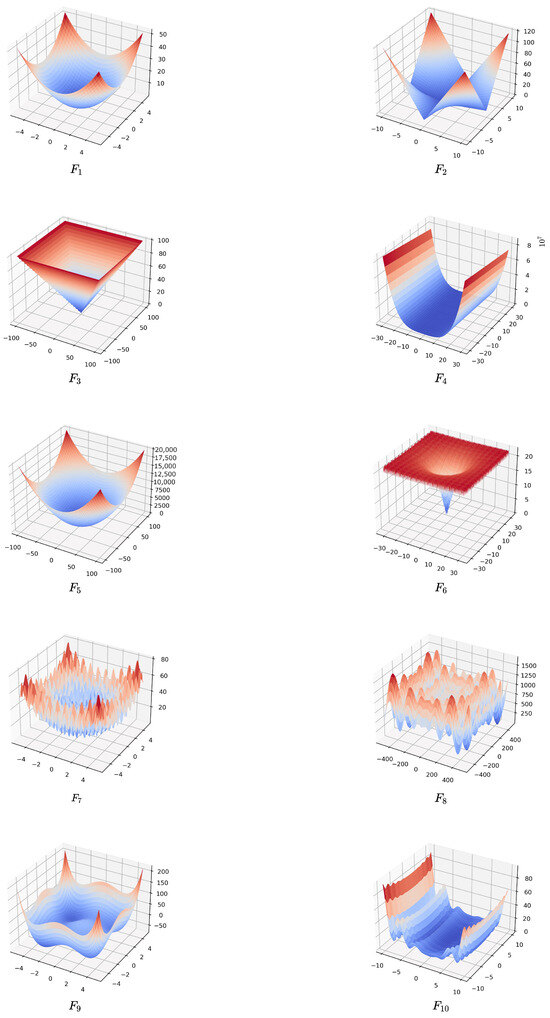

To demonstrate the abilities of metaheuristic algorithms, it is common practice to test them on several benchmark functions with different properties. We chose 10 of the most widely used benchmark functions from the optimization literature; their descriptions, domains, and optima are taken from [51,52,53]. The benchmark functions are listed in Table 2 and visualized in Figure 5. The first five functions are unimodal, meaning they have one global optimum and no local optima; unimodal functions are suitable for testing the exploitation ability of optimization algorithms. The last five benchmark functions, in contrast, are more challenging multimodal functions, each with numerous local optima. The number of dimensions for all benchmark functions is set to 30.

Table 2.

Description of benchmark functions.

Figure 5.

Two-dimensional representation of the benchmark functions’ search spaces.
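For reference, four of the functions named in this section (Sphere, Schwefel 2.21, Rosenbrock, and Levy) can be written as follows, using their standard definitions from the optimization literature. This is a sketch; the exact forms and domains used in the experiments are those of Table 2, which may differ in details.

```python
import math


def sphere(x):
    return sum(v * v for v in x)


def schwefel_2_21(x):
    return max(abs(v) for v in x)


def rosenbrock(x):
    return sum(100 * (x[i + 1] - x[i] ** 2) ** 2 + (x[i] - 1) ** 2
               for i in range(len(x) - 1))


def levy(x):
    w = [1 + (v - 1) / 4 for v in x]
    first = math.sin(math.pi * w[0]) ** 2
    middle = sum((wi - 1) ** 2 * (1 + 10 * math.sin(math.pi * wi + 1) ** 2)
                 for wi in w[:-1])
    last = (w[-1] - 1) ** 2 * (1 + math.sin(2 * math.pi * w[-1]) ** 2)
    return first + middle + last


dim = 30   # dimensionality used in the experiments
print(sphere([0.0] * dim), rosenbrock([1.0] * dim), levy([1.0] * dim) < 1e-12)
```

Sphere and Schwefel 2.21 reach their minimum of 0 at the origin, while Rosenbrock and Levy reach it at $x = (1, \ldots, 1)$.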

We compared the results with five well-known and highly regarded metaheuristic algorithms, namely GA [54], GQA [8], PSO [55], QPSO [56], and MFO [57]. The Python library Mealpy [58] is used to implement the GA, PSO, and MFO algorithms for comparison. The number of iterations and the population size for all algorithms are set to 500 and 30, respectively. Table 3 lists the parameter values of all algorithms in this comparison.

Table 3.

Parameter values for optimization algorithms.

As Table 4 shows, the results of DQGA on the benchmark functions are promising compared with those of the other algorithms. For the unimodal benchmark functions, the proposed algorithm yields the best average results on all functions except the Sphere function, on which it is second best after MFO. The results on the multimodal test functions, which resemble real-world problems more closely and are more challenging, are even better: DQGA outperforms all the other algorithms on every multimodal benchmark function.

Table 4.

Comparison results for the benchmark functions.

To test the significance of the differences in the results, the Wilcoxon rank-sum test is applied pairwise between DQGA and each comparison algorithm at a significance level of $\alpha = 0.05$. The resulting p-values are given in Table 5. None of the p-values is greater than 0.05, so the null hypothesis is rejected in every case, confirming that the differences are significant.

Table 5.

p-values of the Wilcoxon rank-sum test between DQGA and the comparison algorithms.
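The rank-sum statistic behind such p-values can be computed in a few lines of Python. The sketch below uses the large-sample normal approximation with average ranks for ties and no tie correction, which, to our understanding, is also what `scipy.stats.ranksums` computes; the run data in the example are made-up numbers for illustration.

```python
import math


def rank_sum_test(x, y):
    """Two-sided Wilcoxon rank-sum test via the normal approximation.

    Ties receive their average rank; no tie correction is applied to the variance.
    Returns (z statistic, p-value).
    """
    pooled = sorted((value, idx) for idx, value in enumerate(list(x) + list(y)))
    ranks = [0.0] * len(pooled)
    i = 0
    while i < len(pooled):
        j = i
        while j + 1 < len(pooled) and pooled[j + 1][0] == pooled[i][0]:
            j += 1
        average_rank = (i + j) / 2 + 1          # 1-based average rank of the tie block
        for k in range(i, j + 1):
            ranks[pooled[k][1]] = average_rank
        i = j + 1
    n1, n2 = len(x), len(y)
    r1 = sum(ranks[:n1])                         # rank sum of the first sample
    mean = n1 * (n1 + n2 + 1) / 2
    sd = math.sqrt(n1 * n2 * (n1 + n2 + 1) / 12)
    z = (r1 - mean) / sd
    return z, math.erfc(abs(z) / math.sqrt(2))   # two-sided p = 2 * (1 - Phi(|z|))


# Hypothetical final errors of 6 runs for two algorithms (made-up numbers).
runs_a = [0.011, 0.009, 0.013, 0.010, 0.012, 0.008]
runs_b = [0.052, 0.047, 0.061, 0.039, 0.058, 0.044]
z, p = rank_sum_test(runs_a, runs_b)
print(round(z, 3), p < 0.05)
```

A p-value below the chosen $\alpha$ rejects the null hypothesis that both samples come from the same distribution.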

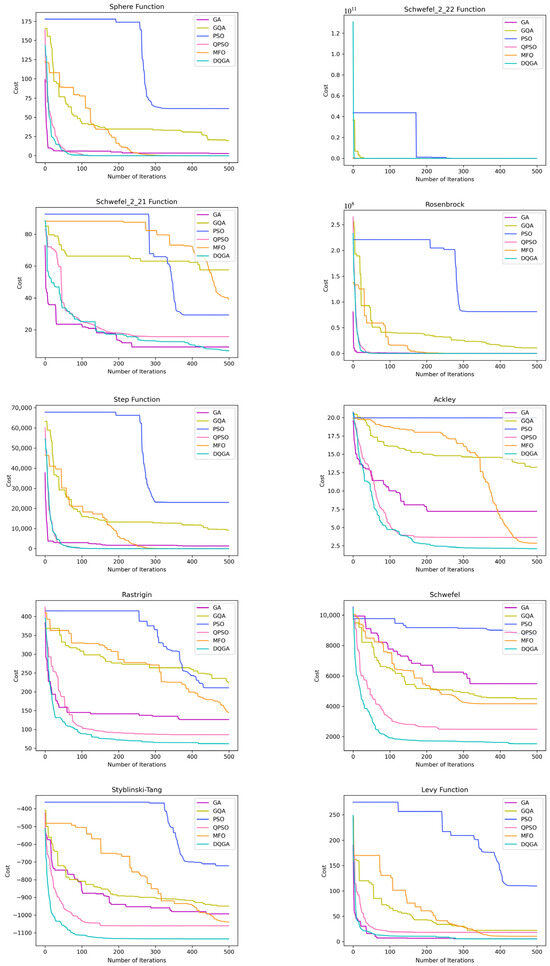

To assess the time efficiency of the proposed algorithm, we provide convergence curves comparing the number of iterations each algorithm needs to reach given fitness values. Comparing the number of iterations is a fairer criterion than total execution time, especially here, because the algorithms are implemented with different approaches that can substantially affect execution time. For instance, multi-processing and multi-threading can reduce the computational time to nearly one-fourth on a four-core CPU. For this reason, we compare the algorithms by the number of iterations rather than by total execution time.

Figure 6 presents the convergence behavior of the different algorithms in this experiment. The convergence curve of the proposed algorithm shows a steady improvement in the solution as the generations pass, and its convergence speed is very competitive. DQGA converges faster than the other algorithms in most cases; on the Sphere, Schwefel 2.21, Rosenbrock, and Levy functions, its speed is roughly equivalent to that of GA and QPSO.

Figure 6.

Comparison of convergence curves of DQGA and other algorithms.

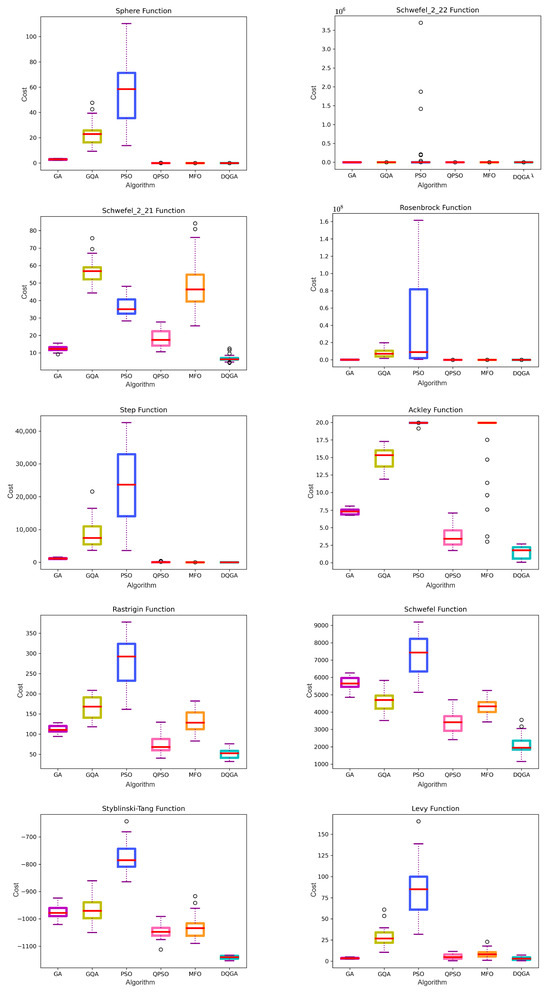

The boxplots in Figure 7 show the spread of solutions across different runs. The narrow spread of DQGA's results compared with those of the other algorithms indicates the reliability and consistency of the algorithm. DQGA's ranges are smaller than, or at least comparable to, the best of the others, except for the Levy function, on which GA yields more consistent results.

Figure 7.

Box plots of the algorithms used in the comparison.

4.2. Constrained Engineering Design Optimization Using DQGA

As the ultimate purpose of metaheuristics is to tackle complex real-world problems rather than merely mathematical benchmark functions, we also applied DQGA to three classical constrained engineering problems: the pressure vessel design problem, the speed reducer design problem, and the cantilever beam design problem. The constraint handling technique used in this paper is the death penalty, meaning that any constraint violation leads to a substantially negative fitness, so the violating solution cannot compete with other solutions. The results obtained by DQGA were satisfactory and are presented in detail in the following subsections.
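The death-penalty rule can be sketched as a wrapper around a minimization objective. Because the engineering problems here minimize cost or weight, the sketch returns a very large cost for any infeasible point, which is equivalent to the substantially negative fitness described above. Constraints are assumed to be written as $g(x) \le 0$, and the penalty value is an arbitrary choice.

```python
def death_penalty(objective, constraints, penalty=1e20):
    """Wrap `objective` so that any violated constraint g(x) > 0 returns `penalty`."""
    def penalized(x):
        if any(g(x) > 0 for g in constraints):
            return penalty
        return objective(x)
    return penalized


# Toy problem: minimize x^2 subject to x >= 1, written as g(x) = 1 - x <= 0.
f = death_penalty(lambda x: x[0] ** 2, [lambda x: 1 - x[0]])
print(f([2.0]), f([0.5]))   # 4.0 1e+20
```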

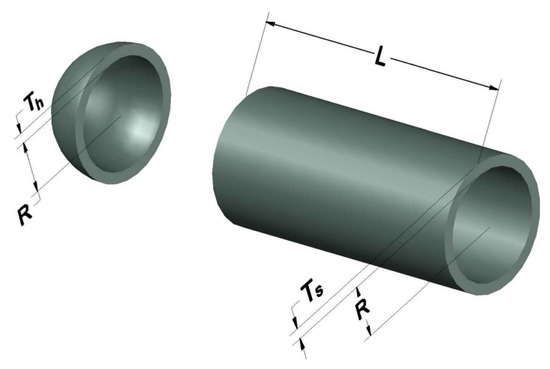

4.2.1. Pressure Vessel Design

The pressure vessel design problem was first introduced in [59]. Its objective is to minimize the material, forming, and welding costs of producing a cylindrical vessel capped at both ends by hemispherical heads (see Figure 8). The problem has four design variables: the shell thickness, the head thickness, the inner radius R, and the length L of the cylindrical section. The problem is formulated as follows:

Figure 8.

Pressure vessel design problem.
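The pressure vessel problem is usually stated in the literature with the cost function and four inequality constraints sketched below in Python. We assume this common version matches the one solved in this section; variable bounds vary slightly between studies, and the test point is an arbitrary feasible design, not an optimum.

```python
import math

# Variable order: shell thickness, head thickness, inner radius R, length L.
BOUNDS = [(0.0, 99.0), (0.0, 99.0), (10.0, 200.0), (10.0, 200.0)]


def pressure_vessel_cost(x):
    ts, th, r, length = x
    return (0.6224 * ts * r * length + 1.7781 * th * r ** 2
            + 3.1661 * ts ** 2 * length + 19.84 * ts ** 2 * r)


def pressure_vessel_constraints(x):
    """All four constraints in the form g(x) <= 0."""
    ts, th, r, length = x
    return [
        -ts + 0.0193 * r,                                                   # minimum shell thickness
        -th + 0.00954 * r,                                                  # minimum head thickness
        -math.pi * r ** 2 * length - (4 / 3) * math.pi * r ** 3 + 1296000,  # minimum volume
        length - 240,                                                       # maximum length
    ]


x = [1.0, 1.0, 50.0, 100.0]   # an arbitrary feasible point, not an optimum
print(pressure_vessel_cost(x), all(g <= 0 for g in pressure_vessel_constraints(x)))
```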

The results of applying DQGA to the pressure vessel design problem are given in Table 6 and compared with the reported results of the branch-and-bound [60], GA [54], GWO [61], WOA [62], HHO [63], WSA [64], and AOA [65] algorithms. The results confirm that DQGA outperformed the other algorithms on this problem.

Table 6.

Results of different algorithms for the pressure vessel design problem.

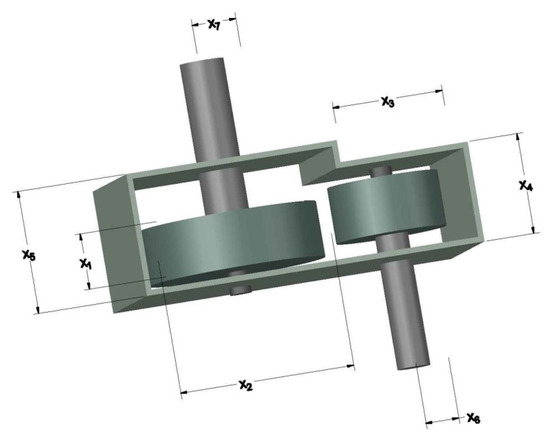

4.2.2. Speed Reducer Design

The speed reducer design problem aims to minimize the total weight of a speed reducer subject to constraints on the bending stress of the gear teeth, the surface stress, the transverse deflections of the shafts, and the stresses in the shafts [67]. The problem has six continuous variables and one discrete variable, the number of teeth on the pinion. The structure of a speed reducer is shown in Figure 9, and the problem is defined as follows:

Figure 9.

Speed reducer design problem.
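A Python sketch of the speed reducer formulation as it commonly appears in the literature is given below, assuming it matches the version solved here. The third variable (number of pinion teeth) should be treated as an integer by the optimizer; the reference point is a design close to the best-known solution reported in many studies.

```python
import math

# Variables: face width x1, tooth module x2, number of pinion teeth x3 (integer),
# shaft lengths x4 and x5 between bearings, and shaft diameters x6 and x7.
BOUNDS = [(2.6, 3.6), (0.7, 0.8), (17, 28), (7.3, 8.3), (7.3, 8.3), (2.9, 3.9), (5.0, 5.5)]


def speed_reducer_weight(x):
    x1, x2, x3, x4, x5, x6, x7 = x
    return (0.7854 * x1 * x2 ** 2 * (3.3333 * x3 ** 2 + 14.9334 * x3 - 43.0934)
            - 1.508 * x1 * (x6 ** 2 + x7 ** 2)
            + 7.4777 * (x6 ** 3 + x7 ** 3)
            + 0.7854 * (x4 * x6 ** 2 + x5 * x7 ** 2))


def speed_reducer_constraints(x):
    """Eleven constraints in the form g(x) <= 0."""
    x1, x2, x3, x4, x5, x6, x7 = x
    return [
        27 / (x1 * x2 ** 2 * x3) - 1,                # bending stress of gear teeth
        397.5 / (x1 * x2 ** 2 * x3 ** 2) - 1,        # surface stress
        1.93 * x4 ** 3 / (x2 * x3 * x6 ** 4) - 1,    # transverse deflection, shaft 1
        1.93 * x5 ** 3 / (x2 * x3 * x7 ** 4) - 1,    # transverse deflection, shaft 2
        math.sqrt((745 * x4 / (x2 * x3)) ** 2 + 16.9e6) / (110 * x6 ** 3) - 1,   # stress, shaft 1
        math.sqrt((745 * x5 / (x2 * x3)) ** 2 + 157.5e6) / (85 * x7 ** 3) - 1,   # stress, shaft 2
        # The remaining constraints limit the geometry and design ratios.
        x2 * x3 / 40 - 1,
        5 * x2 / x1 - 1,
        x1 / (12 * x2) - 1,
        (1.5 * x6 + 1.9) / x4 - 1,
        (1.1 * x7 + 1.9) / x5 - 1,
    ]


x_ref = [3.5, 0.7, 17, 7.3, 7.715319, 3.350214, 5.286654]
print(round(speed_reducer_weight(x_ref), 2))   # about 2994.47
```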

Table 7 presents the comparison results for solving the speed reducer design problem by the proposed algorithm and CS [68], FA [69], WSA [64], hHHO-SCA [70], AAO [71], AO [72], and AOA [65] algorithms. It can be seen that DQGA obtained the best result among the comparison algorithms.

Table 7.

Results for speed reducer design problem using different algorithms.

4.2.3. Cantilever Beam Design

The goal of this problem is to minimize the weight of a cantilever beam consisting of five hollow square-section elements of constant thickness (Figure 10). The beam is rigidly supported at its larger end, and a vertical force acts at the free end.

Figure 10.

Cantilever beam design problem.
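The cantilever beam problem is commonly stated with a linear weight function and a single inequality constraint, sketched below in Python under the assumption that this common version matches the one solved here. The test point is an arbitrary feasible design, not an optimum.

```python
# Five design variables: the cross-section dimension of each hollow square element.
BOUNDS = [(0.01, 100.0)] * 5


def cantilever_weight(x):
    return 0.0624 * sum(x)


def cantilever_constraint(x):
    """Single constraint in the form g(x) <= 0."""
    x1, x2, x3, x4, x5 = x
    return 61 / x1 ** 3 + 37 / x2 ** 3 + 19 / x3 ** 3 + 7 / x4 ** 3 + 1 / x5 ** 3 - 1


x = [6.0] * 5   # arbitrary feasible point, not an optimum
print(round(cantilever_weight(x), 4), cantilever_constraint(x) <= 0)   # 1.872 True
```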

DQGA is applied to this problem, and the results are compared with those of several algorithms, including CS [68], SOS [73], MFO [57], GCA_I [74], GCA_II [74], SMA [75], and AO [72]. The results, given in Table 8, show that DQGA finds the best solution for this problem among the compared algorithms.

Table 8.

Comparison of the optimum results of different algorithms for the cantilever beam design problem.

5. Conclusions and Potential Future Work

In this paper, we proposed a modified QGA called DQGA, which focuses not only on exploration and exploitation abilities but also on smoothing the transition between these phases. These improvements are achieved by an adaptive look-up table and a lengthening chromosome strategy, which refines the search space gradually and postpones convergence to high-precision solutions until the final generations, preventing premature convergence and entrapment in local optima. Experiments were conducted to verify that the proposed concepts are effective in practice. DQGA outperformed the comparison algorithms in most cases, especially on multimodal benchmark functions and the more challenging engineering design problems.

These promising results suggest that the presented algorithm has the potential to tackle other real-world optimization problems. Future studies may apply DQGA to applications such as network problems, fuzzy controller design, image thresholding, flight control, and structural design. In addition, the binary-coded nature of the proposed algorithm makes it suitable for discrete optimization problems such as the travelling salesman problem, the 0/1 knapsack problem, the job-scheduling problem, airport gate allocation, and feature selection. Moreover, systematic and adaptive tuning of DQGA's parameters, such as the minimum and maximum chromosome lengths and the length increments, can be considered in further studies.

Author Contributions

S.H. under the supervision of M.H. and S.A.H. developed and implemented the main idea and wrote the initial draft of the manuscript. S.H., M.H., S.A.H. and X.Z. verified the idea and the results and revised the manuscript. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Data Availability Statement

Data are available on request.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Hemanth, J.; Balas, V. Nature Inspired Optimization Techniques for Image Processing Applications; Springer: Berlin/Heidelberg, Germany, 2019; Volume 150. [Google Scholar]

- Gandomi, A.; Yang, X.; Talatahari, S.; Alavi, A. Metaheuristic Applications in Structures and Infrastructures; Elsevier: Amsterdam, The Netherlands, 2013. [Google Scholar]

- Elshaer, R.; Awad, H. A taxonomic review of metaheuristic algorithms for solving the vehicle routing problem and its variants. Comput. Ind. Eng. 2020, 140, 106242. [Google Scholar] [CrossRef]

- Agrawal, P.; Abutarboush, H.; Ganesh, T.; Mohamed, A. Metaheuristic algorithms on feature selection: A survey of one decade of research (2009–2019). IEEE Access 2021, 9, 26766–26791. [Google Scholar] [CrossRef]

- Doering, J.; Kizys, R.; Juan, A.; Fito, A.; Polat, O. Metaheuristics for rich portfolio optimisation and risk management: Current state and future trends. Oper. Res. Perspect. 2019, 6, 100121. [Google Scholar] [CrossRef]

- Calvet, L.; Benito, S.; Juan, A.; Prados, F. On the role of metaheuristic optimization in bioinformatics. Int. Trans. Oper. Res. 2023, 30, 2909–2944. [Google Scholar] [CrossRef]

- Bhavya, R.; Elango, L. Ant-Inspired Metaheuristic Algorithms for Combinatorial Optimization Problems in Water Resources Management. Water 2023, 15, 1712. [Google Scholar] [CrossRef]

- Han, K.H.; Kim, J.H. Genetic quantum algorithm and its application to combinatorial optimization problem. In Proceedings of the 2000 Congress on Evolutionary Computation, CEC00 (Cat. No. 00TH8512), La Jolla, CA, USA, 16–19 July 2000; Volume 2, pp. 1354–1360. [Google Scholar]

- Han, K.H.; Kim, J.H. Quantum-inspired evolutionary algorithm for a class of combinatorial optimization. IEEE Trans. Evol. Comput. 2002, 6, 580–593. [Google Scholar] [CrossRef]

- Si-Jung, R.; Jun-Seuk, G.; Seung-Hwan, B.; Songcheol, H.; Jong-Hwan, K. Feature-based hand gesture recognition using an FMCW radar and its temporal feature analysis. IEEE Sens. J. 2018, 18, 7593–7602. [Google Scholar]

- Dey, A.; Dey, S.; Bhattacharyya, S.; Platos, J.; Snasel, V. Novel quantum inspired approaches for automatic clustering of gray level images using particle swarm optimization, spider monkey optimization and ageist spider monkey optimization algorithms. Appl. Soft Comput. 2020, 88, 106040. [Google Scholar] [CrossRef]

- Choudhury, A.; Samanta, S.; Pratihar, S.; Bandyopadhyay, O. Multilevel segmentation of Hippocampus images using global steered quantum inspired firefly algorithm. Appl. Intell. 2021, 52, 7339–7372. [Google Scholar] [CrossRef]

- Kaveh, A.; Dadras, A.; Geran malek, N. Robust design optimization of laminated plates under uncertain bounded buckling loads. Struct. Multidiscip. Optim. 2019, 59, 877–891. [Google Scholar] [CrossRef]

- Arzani, H.; Kaveh, A.; Kamalinejad, M. Optimal design of pitched roof rigid frames with non-prismatic members using quantum evolutionary algorithm. Period. Polytech. Civ. Eng. 2019, 63, 593–607.

- Zhang, S.; Zhou, G.; Zhou, Y.; Luo, Q. Quantum-inspired satin bowerbird algorithm with Bloch spherical search for constrained structural optimization. J. Ind. Manag. Optim. 2021, 17, 3509.

- Talatahari, S.; Azizi, M.; Toloo, M.; Baghalzadeh Shishehgarkhaneh, M. Optimization of Large-Scale Frame Structures Using Fuzzy Adaptive Quantum Inspired Charged System Search. Int. J. Steel Struct. 2022, 22, 686–707.

- Konar, D.; Bhattacharyya, S.; Sharma, K.; Sharma, S.; Pradhan, S.R. An improved hybrid quantum-inspired genetic algorithm (HQIGA) for scheduling of real-time task in multiprocessor system. Appl. Soft Comput. 2017, 53, 296–307.

- Alam, T.; Raza, Z. Quantum genetic algorithm based scheduler for batch of precedence constrained jobs on heterogeneous computing systems. J. Syst. Softw. 2018, 135, 126–142.

- Saad, H.M.; Chakrabortty, R.K.; Elsayed, S.; Ryan, M.J. Quantum-inspired genetic algorithm for resource-constrained project-scheduling. IEEE Access 2021, 9, 38488–38502.

- Wu, X.; Wu, S. An elitist quantum-inspired evolutionary algorithm for the flexible job-shop scheduling problem. J. Intell. Manuf. 2017, 28, 1441–1457.

- Singh, K.V.; Raza, Z. A quantum-inspired binary gravitational search algorithm–based job-scheduling model for mobile computational grid. Concurr. Comput. Pract. Exp. 2017, 29, e4103.

- Liu, M.; Yi, S.; Wen, P. Quantum-inspired hybrid algorithm for integrated process planning and scheduling. Proc. Inst. Mech. Eng. Part B J. Eng. Manuf. 2018, 232, 1105–1122.

- Gupta, S.; Mittal, S.; Gupta, T.; Singhal, I.; Khatri, B.; Gupta, A.K.; Kumar, N. Parallel quantum-inspired evolutionary algorithms for community detection in social networks. Appl. Soft Comput. 2017, 61, 331–353.

- Qu, Z.; Li, T.; Tan, X.; Li, P.; Liu, X. A modified quantum-inspired evolutionary algorithm for minimising network coding operations. Int. J. Wirel. Mob. Comput. 2020, 19, 401–410.

- Li, F.; Liu, M.; Xu, G. A quantum ant colony multi-objective routing algorithm in WSN and its application in a manufacturing environment. Sensors 2019, 19, 3334.

- Mirhosseini, M.; Fazlali, M.; Malazi, H.T.; Izadi, S.K.; Nezamabadi-pour, H. Parallel Quadri-valent Quantum-Inspired Gravitational Search Algorithm on a heterogeneous platform for wireless sensor networks. Comput. Electr. Eng. 2021, 92, 107085.

- Chou, Y.H.; Kuo, S.Y.; Jiang, Y.C.; Wu, C.H.; Shen, J.Y.; Hua, C.Y.; Huang, P.S.; Lai, Y.T.; Tong, Y.F.; Chang, M.H. A novel quantum-inspired evolutionary computation-based quantum circuit synthesis for various universal gate libraries. In Proceedings of the Genetic and Evolutionary Computation Conference Companion 2022, Boston, MA, USA, 9–13 July 2022; pp. 2182–2189.

- Ramos, A.C.; Vellasco, M. Chaotic quantum-inspired evolutionary algorithm: Enhancing feature selection in BCI. In Proceedings of the 2020 IEEE Congress on Evolutionary Computation (CEC), Glasgow, UK, 19–24 July 2020; pp. 1–8.

- Barani, F.; Mirhosseini, M.; Nezamabadi-Pour, H. Application of binary quantum-inspired gravitational search algorithm in feature subset selection. Appl. Intell. 2017, 47, 304–318.

- Di Martino, F.; Sessa, S. A novel quantum inspired genetic algorithm to initialize cluster centers in fuzzy C-means. Expert Syst. Appl. 2022, 191, 116340.

- Chou, Y.H.; Lai, Y.T.; Jiang, Y.C.; Kuo, S.Y. Using Trend Ratio and GNQTS to Assess Portfolio Performance in the US Stock Market. IEEE Access 2021, 9, 88348–88363.

- Qi, B.; Nener, B.; Xinmin, W. A quantum inspired genetic algorithm for multimodal optimization of wind disturbance alleviation flight control system. Chin. J. Aeronaut. 2019, 32, 2480–2488.

- Yi, J.H.; Lu, M.; Zhao, X.J. Quantum inspired monarch butterfly optimisation for UCAV path planning navigation problem. Int. J. Bio-Inspired Comput. 2020, 15, 75–89.

- Dahi, Z.A.E.M.; Mezioud, C.; Draa, A. A quantum-inspired genetic algorithm for solving the antenna positioning problem. Swarm Evol. Comput. 2016, 31, 24–63.

- Cai, X.; Zhao, H.; Shang, S.; Zhou, Y.; Deng, W.; Chen, H.; Deng, W. An improved quantum-inspired cooperative co-evolution algorithm with muli-strategy and its application. Expert Syst. Appl. 2021, 171, 114629.

- Sadeghi Hesar, A.; Kamel, S.R.; Houshmand, M. A quantum multi-objective optimization algorithm based on harmony search method. Soft Comput. 2021, 25, 9427–9439.

- Ross, O.H.M. A review of quantum-inspired metaheuristics: Going from classical computers to real quantum computers. IEEE Access 2019, 8, 814–838.

- Hakemi, S.; Houshmand, M.; KheirKhah, E.; Hosseini, S.A. A review of recent advances in quantum-inspired metaheuristics. Evol. Intell. 2022, 1–16.

- Holland, J.H. Genetic algorithms. Sci. Am. 1992, 267, 66–73.

- Houshmand, M.; Mohammadi, Z.; Zomorodi-Moghadam, M.; Houshmand, M. An evolutionary approach to optimizing teleportation cost in distributed quantum computation. Int. J. Theor. Phys. 2020, 59, 1315–1329.

- Daei, O.; Navi, K.; Zomorodi-Moghadam, M. Optimized quantum circuit partitioning. Int. J. Theor. Phys. 2020, 59, 3804–3820.

- Ghodsollahee, I.; Davarzani, Z.; Zomorodi, M.; Pławiak, P.; Houshmand, M.; Houshmand, M. Connectivity matrix model of quantum circuits and its application to distributed quantum circuit optimization. Quantum Inf. Process. 2021, 20, 1–21.

- Dadkhah, D.; Zomorodi, M.; Hosseini, S.E. A new approach for optimization of distributed quantum circuits. Int. J. Theor. Phys. 2021, 60, 3271–3285.

- Lukac, M.; Perkowski, M. Evolving quantum circuits using genetic algorithm. In Proceedings of the 2002 NASA/DoD Conference on Evolvable Hardware, Alexandria, VA, USA, 15–18 July 2002; pp. 177–185.

- Mukherjee, D.; Chakrabarti, A.; Bhattacherjee, D. Synthesis of quantum circuits using genetic algorithm. Int. J. Recent Trends Eng. 2009, 2, 212.

- Sünkel, L.; Martyniuk, D.; Mattern, D.; Jung, J.; Paschke, A. GA4QCO: Genetic algorithm for quantum circuit optimization. arXiv 2023, arXiv:2302.01303.

- Houshmand, M.; Saheb Zamani, M.; Sedighi, M.; Houshmand, M. GA-based approach to find the stabilizers of a given sub-space. Genet. Program. Evolvable Mach. 2015, 16, 57–71.

- Kim, I.Y.; De Weck, O. Variable chromosome length genetic algorithm for progressive refinement in topology optimization. Struct. Multidiscip. Optim. 2005, 29, 445–456.

- Pawar, S.N.; Bichkar, R.S. Genetic algorithm with variable length chromosomes for network intrusion detection. Int. J. Autom. Comput. 2015, 12, 337–342.

- Sadeghi Hesar, A.; Houshmand, M. A memetic quantum-inspired genetic algorithm based on tabu search. Evol. Intell. 2023, 1–17.

- Yao, X.; Liu, Y.; Lin, G. Evolutionary programming made faster. IEEE Trans. Evol. Comput. 1999, 3, 82–102.

- Molga, M.; Smutnicki, C. Test functions for optimization needs. Test Funct. Optim. Needs 2005, 101, 48.

- Jamil, M.; Yang, X.S. A literature survey of benchmark functions for global optimization problems. arXiv 2013, arXiv:1308.4008.

- Holland, J.H. Adaptation in Natural and Artificial Systems: An Introductory Analysis with Applications to Biology, Control, and Artificial Intelligence; MIT Press: Cambridge, MA, USA, 1992.

- Sun, J.; Feng, B.; Xu, W. Particle swarm optimization with particles having quantum behavior. In Proceedings of the 2004 Congress on Evolutionary Computation (IEEE Cat. No. 04TH8753), Portland, OR, USA, 19–23 June 2004; Volume 1, pp. 325–331.

- Yang, S.; Wang, M.; Jiao, L. A quantum particle swarm optimization. In Proceedings of the 2004 Congress on Evolutionary Computation (IEEE Cat. No. 04TH8753), Portland, OR, USA, 19–23 June 2004; Volume 1, pp. 320–324.

- Mirjalili, S. Moth-flame optimization algorithm: A novel nature-inspired heuristic paradigm. Knowl.-Based Syst. 2015, 89, 228–249.

- Van Thieu, N.; Mirjalili, S. MEALPY: An open-source library for latest meta-heuristic algorithms in Python. J. Syst. Archit. 2023, 139, 102871.

- Kannan, B.; Kramer, S.N. An augmented Lagrange multiplier based method for mixed integer discrete continuous optimization and its applications to mechanical design. J. Mech. Des. 1994, 116, 405–411.

- Sandgren, E. Nonlinear Integer and Discrete Programming in Mechanical Design Optimization. J. Mech. Des. 1990, 112, 223–229.

- Mirjalili, S.; Mirjalili, S.M.; Lewis, A. Grey wolf optimizer. Adv. Eng. Softw. 2014, 69, 46–61.

- Mirjalili, S.; Lewis, A. The whale optimization algorithm. Adv. Eng. Softw. 2016, 95, 51–67.

- Heidari, A.A.; Mirjalili, S.; Faris, H.; Aljarah, I.; Mafarja, M.; Chen, H. Harris hawks optimization: Algorithm and applications. Future Gener. Comput. Syst. 2019, 97, 849–872.

- Baykasoğlu, A.; Akpinar, Ş. Weighted Superposition Attraction (WSA): A swarm intelligence algorithm for optimization problems—Part 2: Constrained optimization. Appl. Soft Comput. 2015, 37, 396–415.

- Abualigah, L.; Diabat, A.; Mirjalili, S.; Abd Elaziz, M.; Gandomi, A.H. The arithmetic optimization algorithm. Comput. Methods Appl. Mech. Eng. 2021, 376, 113609.

- Coello, C.A.C. Use of a self-adaptive penalty approach for engineering optimization problems. Comput. Ind. 2000, 41, 113–127.

- Siddall, J.N. Analytical Decision-Making in Engineering Design; Prentice Hall: Hoboken, NJ, USA, 1972.

- Gandomi, A.H.; Yang, X.S.; Alavi, A.H. Cuckoo search algorithm: A metaheuristic approach to solve structural optimization problems. Eng. Comput. 2013, 29, 17–35.

- Baykasoğlu, A.; Ozsoydan, F.B. Adaptive firefly algorithm with chaos for mechanical design optimization problems. Appl. Soft Comput. 2015, 36, 152–164.

- Kamboj, V.K.; Nandi, A.; Bhadoria, A.; Sehgal, S. An intensify Harris Hawks optimizer for numerical and engineering optimization problems. Appl. Soft Comput. 2020, 89, 106018.

- Czerniak, J.M.; Zarzycki, H.; Ewald, D. AAO as a new strategy in modeling and simulation of constructional problems optimization. Simul. Model. Pract. Theory 2017, 76, 22–33.

- Abualigah, L.; Yousri, D.; Abd Elaziz, M.; Ewees, A.A.; Al-Qaness, M.A.; Gandomi, A.H. Aquila optimizer: A novel meta-heuristic optimization algorithm. Comput. Ind. Eng. 2021, 157, 107250.

- Cheng, M.Y.; Prayogo, D. Symbiotic organisms search: A new metaheuristic optimization algorithm. Comput. Struct. 2014, 139, 98–112.

- Chickermane, H.; Gea, H.C. Structural optimization using a new local approximation method. Int. J. Numer. Methods Eng. 1996, 39, 829–846.

- Zhao, J.; Gao, Z.M.; Sun, W. The improved slime mould algorithm with Levy flight. J. Phys. Conf. Ser. 2020, 1617, 012033.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).