Accessible Interface for Museum Geological Exhibitions: PETRA—A Gesture-Controlled Experience of Three-Dimensional Rocks and Minerals

Abstract

1. Introduction

2. Materials and Methods

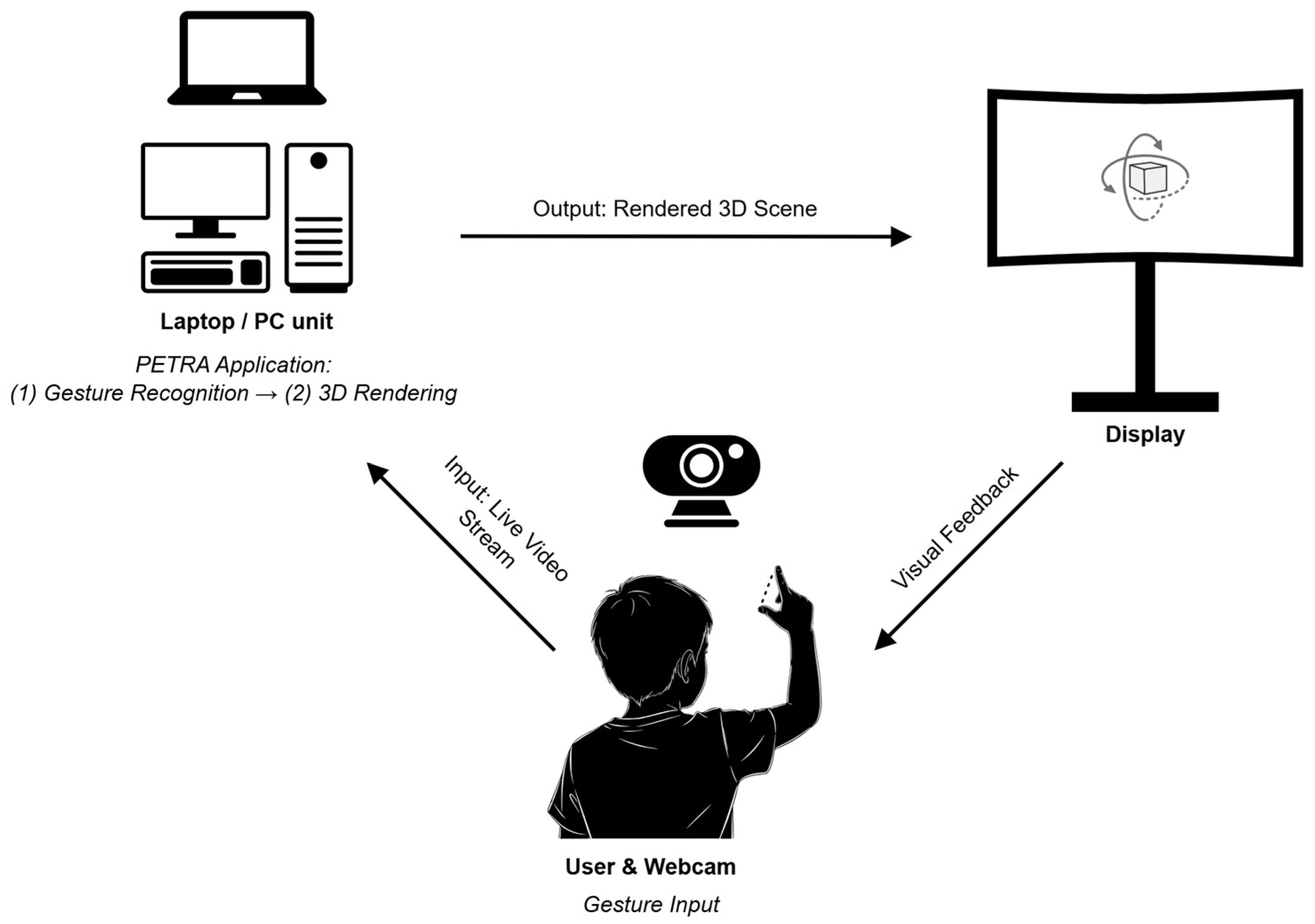

2.1. System Architecture and Hardware

2.2. Digital Assets and Availability

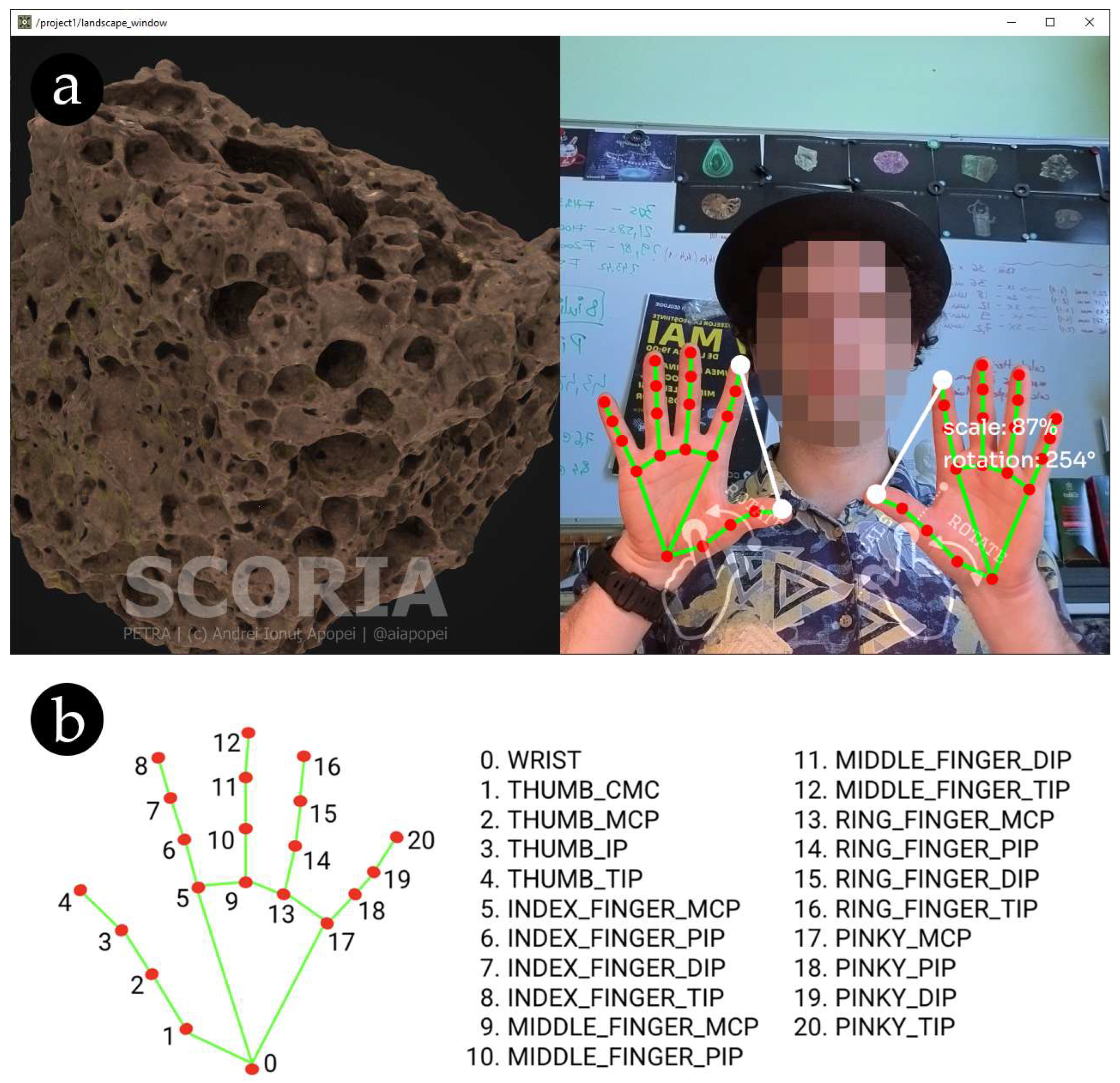

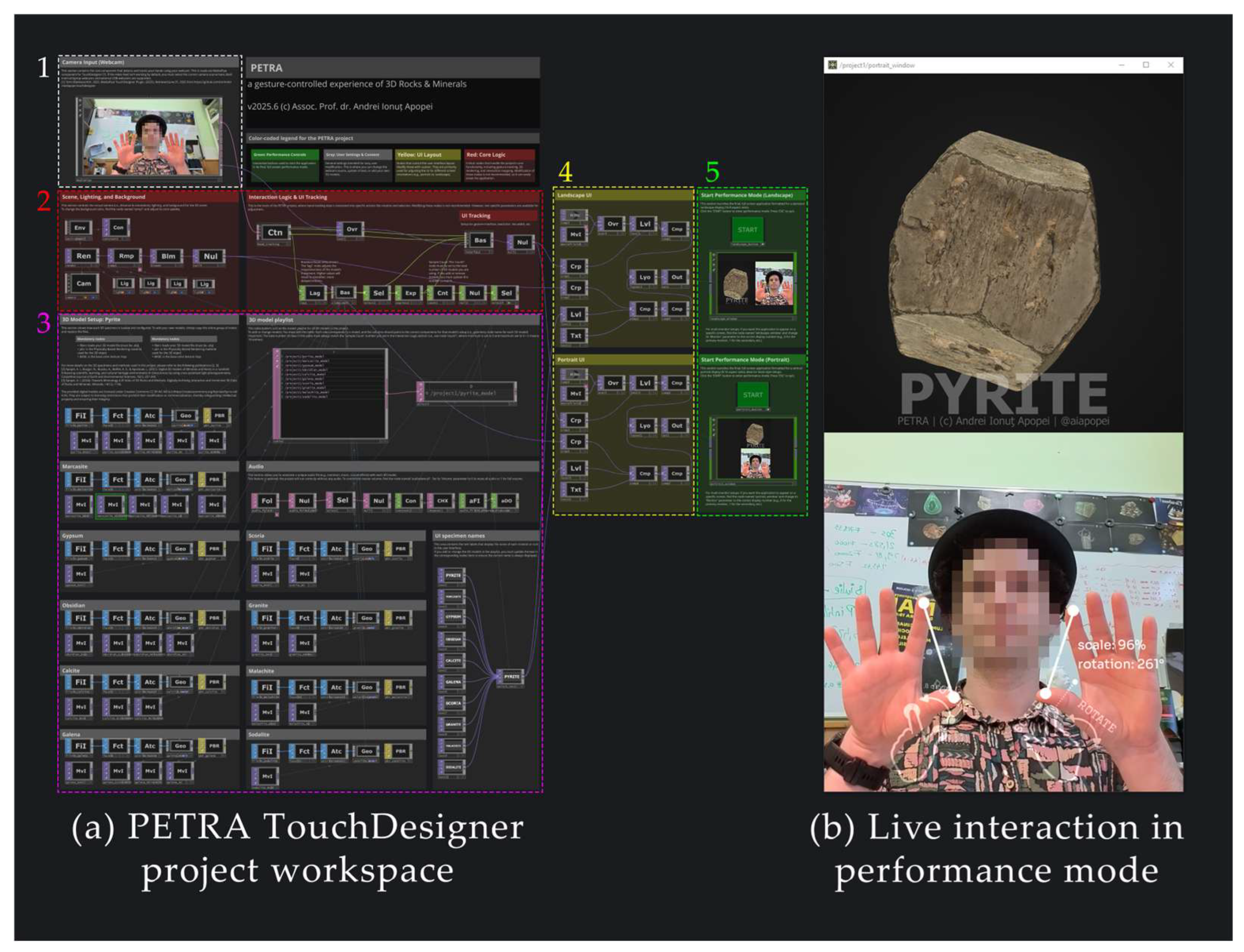

2.3. Software Implementation

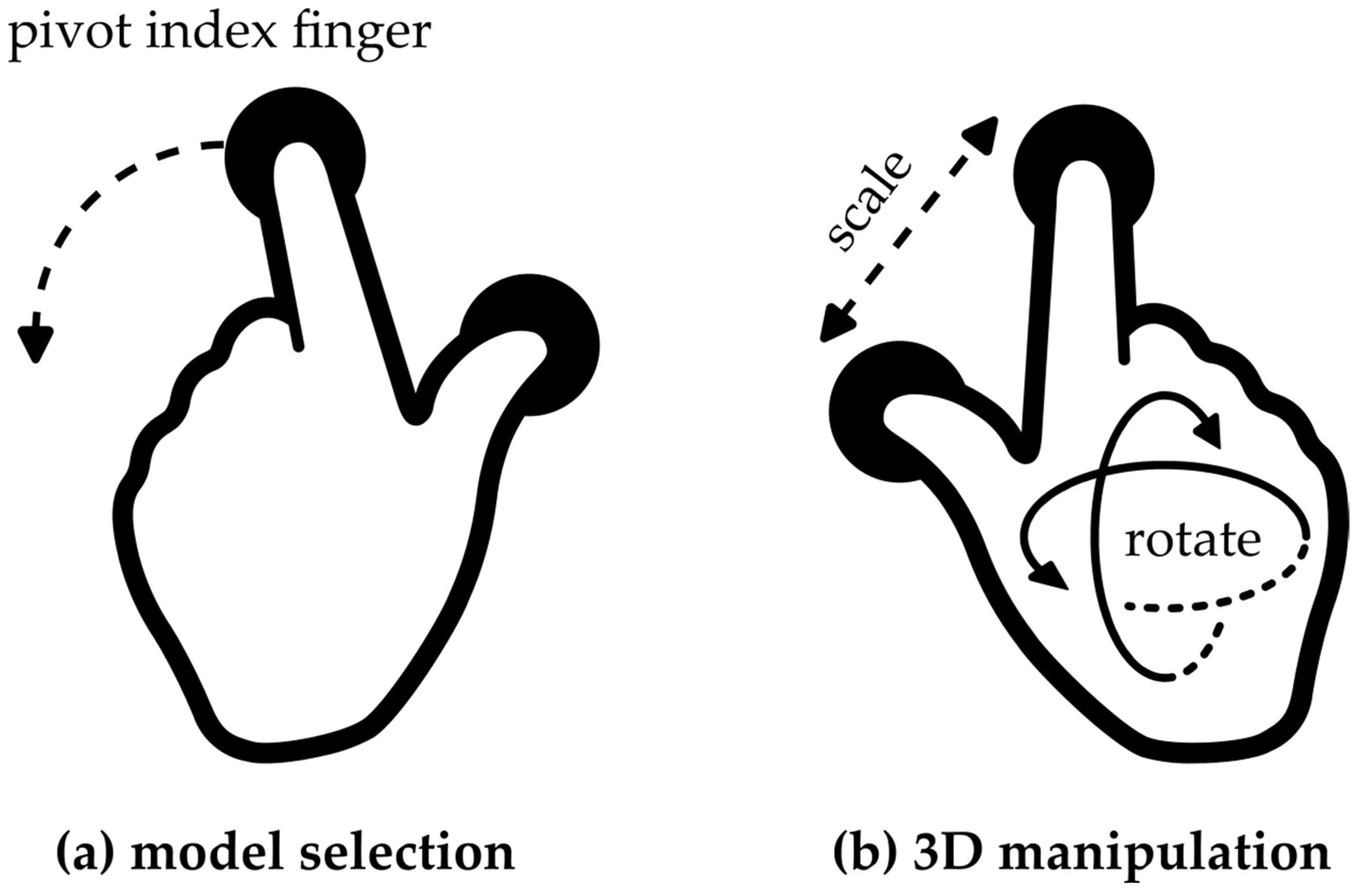

2.4. Interaction Design

3. Results

3.1. PETRA Implementation and Functionality

3.2. Case Study: “Long Night of Museums” Event

3.2.1. User Engagement and Reception

3.2.2. Discoverability and Ease of Use

3.2.3. Technical Performance and System Stability

4. Discussion

4.1. Interpretation of Key Findings

4.2. Contribution and Context

4.3. Benefits and Limitations

- Technical Limitations: Gesture recognition depends on adequate lighting and can degrade in low-light environments. Standard indoor ambient lighting is sufficient for reliable tracking, but the system may struggle under strong backlighting or in very dim conditions where the hand is not clearly visible to the camera. The system is also optimized for a single user: when several hands are in view, tracking errors can occur, reflecting a known challenge in balancing individual and social experiences in museum interactives [50].

- Interaction Limitations: The current gesture vocabulary is intentionally simple. For example, it provides no way to access textual metadata about the specimens, which is a key informational function of traditional museum kiosks [30].

- Evaluation Limitations: The findings from this case study are qualitative, based on direct observation of “in-the-wild” user interactions. While these observations provide valuable initial insights, no formal quantitative evaluation was performed. A crucial direction for future work is to conduct controlled studies that both use validated instruments such as the System Usability Scale (SUS) and benchmark PETRA’s technical performance (e.g., gesture recognition accuracy, system latency) against other interactive systems. Such studies would provide the rigorous data needed to validate the system’s effectiveness and any technical advantages [1,30].
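To make the proposed quantitative evaluation concrete, the following is a minimal Python sketch of how the three measures named above could be computed from study logs. It is not part of PETRA. The SUS rule used is the standard one: ten items rated 1 to 5, odd items contribute (rating − 1), even items contribute (5 − rating), and the sum is multiplied by 2.5 to give a 0–100 score. The function names (`sus_score`, `gesture_accuracy`, `latency_summary`), the gesture labels, and the definition of latency as "response time minus gesture time" are hypothetical assumptions chosen for illustration.

```python
"""Hypothetical evaluation helpers for a PETRA-style gesture kiosk study.

All names, data layouts, and gesture labels are illustrative assumptions,
not part of the PETRA implementation.
"""
from statistics import median, quantiles


def sus_score(responses):
    """Score one System Usability Scale questionnaire (0-100).

    `responses` holds the ten item ratings in questionnaire order, each on
    the 1 (strongly disagree) to 5 (strongly agree) scale. Odd-numbered
    items contribute (rating - 1); even-numbered items contribute
    (5 - rating). The 0-40 sum is multiplied by 2.5.
    """
    if len(responses) != 10:
        raise ValueError("SUS requires exactly 10 item ratings")
    if any(r not in (1, 2, 3, 4, 5) for r in responses):
        raise ValueError("each SUS rating must be an integer from 1 to 5")
    total = 0
    for index, rating in enumerate(responses, start=1):
        total += (rating - 1) if index % 2 == 1 else (5 - rating)
    return total * 2.5


def gesture_accuracy(trials):
    """Fraction of trials in which the recognised gesture matched the intended one.

    `trials` is a list of (intended, recognised) label pairs; labels such as
    "rotate" or "zoom" are hypothetical placeholders.
    """
    if not trials:
        raise ValueError("no trials recorded")
    correct = sum(1 for intended, recognised in trials if intended == recognised)
    return correct / len(trials)


def latency_summary(latencies_ms):
    """Median and 95th-percentile latency in milliseconds.

    Each value is assumed to be (time the on-screen model responded) minus
    (time the gesture was performed) for one trial. The "inclusive" method
    interpolates between observed samples, so p95 never exceeds the maximum.
    """
    if len(latencies_ms) < 2:
        raise ValueError("need at least two latency samples")
    p95 = quantiles(latencies_ms, n=100, method="inclusive")[94]
    return {"median_ms": median(latencies_ms), "p95_ms": p95}


if __name__ == "__main__":
    print(sus_score([3] * 10))  # neutral answers on every item
    print(gesture_accuracy([("rotate", "rotate"), ("zoom", "rotate")]))
    print(latency_summary([100, 120, 140, 160, 180]))
```

For example, a participant who answers 3 on every item scores 50, and five latency samples of 100–180 ms in 20 ms steps give a median of 140 ms and an interpolated p95 of 176 ms. Reporting median and p95 rather than the mean is a design choice that keeps occasional tracking stalls visible without letting them dominate the summary.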

4.4. Broader Implications and Future Work

5. Conclusions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Arici, F.; Yildirim, P.; Caliklar, Ş.; Yilmaz, R.M. Research trends in the use of augmented reality in science education: Content and bibliometric mapping analysis. Comput. Educ. 2019, 142, 103647.
2. Champion, E.; Rahaman, H. 3D Digital Heritage Models as Sustainable Scholarly Resources. Sustainability 2019, 11, 2425.
3. Clini, P.; Nespeca, R.; Ferretti, U.; Galazzi, F.; Bernacchia, M. Inclusive Museum Engagement: Multisensory Storytelling of Cagli Warriors’ Journey and the Via Flamina Landscape Through Interactive Tactile Experiences and Digital Replicas. Heritage 2025, 8, 61.
4. Pescarin, S. Museums and Virtual Museums in Europe: Reaching expectations. SCIRES-IT Sci. Res. Inf. Technol. 2014, 4, 131–140.
5. Wachowiak, M.J.; Karas, B.V. 3D scanning and replication for museum and cultural heritage applications. J. Am. Inst. Conserv. 2009, 48, 141–158.
6. Wali, A.; Collins, R.K. Decolonizing Museums: Toward a Paradigm Shift. Annu. Rev. Anthropol. 2023, 52, 329–345.
7. De Paor, D.G. Virtual Rocks. GSA Today 2016, 26, 4–11.
8. Janiszewski, M.; Uotinen, L.; Merkel, J.; Leveinen, J.; Rinne, M. Virtual Reality learning environments for rock engineering, geology and mining education. In Proceedings of the 54th U.S. Rock Mechanics/Geomechanics Symposium, Golden, CO, USA, 28 June–1 July 2020.
9. Ma, X.; Ralph, J.; Zhang, J.; Que, X.; Prabhu, A.; Morrison, S.M.; Hazen, R.M.; Wyborn, L.; Lehnert, K. OpenMindat: Open and FAIR mineralogy data from the Mindat database. Geosci. Data J. 2024, 11, 94–104.
10. Prabhu, A.; Morrison, S.M.; Eleish, A.; Zhong, H.; Huang, F.; Golden, J.J.; Perry, S.N.; Hummer, D.R.; Ralph, J.; Runyon, S.E.; et al. Global earth mineral inventory: A data legacy. Geosci. Data J. 2021, 8, 74–89.
11. Apopei, A.I. Towards Mineralogy 4.0? Atlas of 3D Rocks and Minerals: Digitally Archiving Interactive and Immersive 3D Data of Rocks and Minerals. Minerals 2024, 14, 1196.
12. Apopei, A.I.; Buzgar, N.; Buzatu, A.; Maftei, A.E.; Apostoae, L. Digital 3d Models of Minerals and Rocks in a Nutshell: Enhancing Scientific, Learning, and Cultural Heritage Environments in Geosciences by Using Cross-Polarized Light Photogrammetry. Carpathian J. Earth Environ. Sci. 2021, 16, 237–249.
13. Cocal-Smith, V.; Hinchliffe, G.; Petterson, M.G. Digital Tools for the Promotion of Geological and Mining Heritage: Case Study from the Thames Goldfield, Aotearoa, New Zealand. Geosciences 2023, 13, 253.
14. Papadopoulos, C.; Gillikin Schoueri, K.; Schreibman, S. And Now What? Three-Dimensional Scholarship and Infrastructures in the Post-Sketchfab Era. Heritage 2025, 8, 99.
15. De Reu, J.; Plets, G.; Verhoeven, G.; De Smedt, P.; Bats, M.; Cherretté, B.; De Maeyer, W.; Deconynck, J.; Herremans, D.; Laloo, P.; et al. Towards a three-dimensional cost-effective registration of the archaeological heritage. J. Archaeol. Sci. 2013, 40, 1108–1121.
16. Fais, S.; Cuccuru, F.; Casula, G.; Bianchi, M.G.; Ligas, P. Characterization of rock samples by a high-resolution multi-technique non-invasive approach. Minerals 2019, 9, 664.
17. Mathys, A.; Semal, P.; Brecko, J.; Van den Spiegel, D. Improving 3D photogrammetry models through spectral imaging: Tooth enamel as a case study. PLoS ONE 2019, 14, e0220949.
18. Matsui, K.; Kimura, Y. Museum Exhibitions of Fossil Specimens Into Commercial Products: Unexpected Outflow of 3D Models due to Unwritten Image Policies. Front. Earth Sci. 2022, 10, 874736.
19. Sapirstein, P. A high-precision photogrammetric recording system for small artifacts. J. Cult. Herit. 2018, 31, 33–45.
20. Sutton, M.D.; Rahman, I.A.; Garwood, R.J. Techniques for Virtual Palaeontology; John Wiley & Sons: Hoboken, NJ, USA, 2014; pp. 1–200.
21. Darda, K.M.; Estrada Gonzalez, V.; Christensen, A.P.; Bobrow, I.; Krimm, A.; Nasim, Z.; Cardillo, E.R.; Perthes, W.; Chatterjee, A. A comparison of art engagement in museums and through digital media. Sci. Rep. 2025, 15, 8972.
22. Andrews, G.D.M.; Labishak, G.D.; Brown, S.R.; Isom, S.L.; Pettus, H.D.; Byers, T. Teaching with Digital 3D Models of Minerals and Rocks. GSA Today 2020, 30, 42–43.
23. Zhang, J.; Gao, Q.; Luo, H.; Long, T. Mineral Identification Based on Deep Learning Using Image Luminance Equalization. Appl. Sci. 2022, 12, 7055.
24. Davies, N.; Deck, J.; Kansa, E.C.; Kansa, S.W.; Kunze, J.; Meyer, C.; Orrell, T.; Ramdeen, S.; Snyder, R.; Vieglais, D.; et al. Internet of Samples (iSamples): Toward an interdisciplinary cyberinfrastructure for material samples. Gigascience 2021, 10, giab028.
25. Davies, T.G.; Rahman, I.A.; Lautenschlager, S.; Cunningham, J.A.; Asher, R.J.; Barrett, P.M.; Bates, K.T.; Bengtson, S.; Benson, R.B.J.; Boyer, D.M.; et al. Open data and digital morphology. Proc. R. Soc. B Biol. Sci. 2017, 284, 20170194.
26. Hardisty, A.; Addink, W.; Glöckler, F.; Güntsch, A.; Islam, S.; Weiland, C. A choice of persistent identifier schemes for the Distributed System of Scientific Collections (DiSSCo). Res. Ideas Outcomes 2021, 7, e67379.
27. Rivero, O.; Dólera, A.; García-Bustos, M.; Eguilleor-Carmona, X.; Mateo-Pellitero, A.M.; Ruiz-López, J.F. Seeing is believing: An Augmented Reality application for Palaeolithic rock art. J. Cult. Herit. 2024, 69, 67–77.
28. Wellmann, F.; Virgo, S.; Escallon, D.; De La Varga, M.; Jüstel, A.; Wagner, F.M.; Kowalski, J.; Zhao, H.; Fehling, R.; Chen, Q. Open AR-Sandbox: A haptic interface for geoscience education and outreach. Geosphere 2022, 18, 732–749.
29. Reed, S.E.; Kreylos, O.; Hsi, S.; Kellogg, L.H.; Schladow, G.; Yikilmaz, M.B.; Segale, H.; Silverman, J.; Yalowitz, S.; Sato, E. Shaping Watersheds Exhibit: An Interactive, Augmented Reality Sandbox for Advancing Earth Science Education. In AGU Fall Meeting Abstracts; Department of Energy: Washington, DC, USA, 2014; Volume 2014.
30. Barbieri, L.; Bruno, F.; Muzzupappa, M. Virtual museum system evaluation through user studies. J. Cult. Herit. 2017, 26, 101–108.
31. García-Gil, G.; del Carmen López-Armas, G.; de Jesús Navarro, J. Human-Machine Interaction: A Vision-Based Approach for Controlling a Robotic Hand Through Human Hand Movements. Technologies 2025, 13, 169.
32. Piumsomboon, T.; Clark, A.; Billinghurst, M.; Cockburn, A. User-Defined Gestures for Augmented Reality. In Proceedings of the 14th International Conference on Human-Computer Interaction (INTERACT), Cape Town, South Africa, 10–12 September 2013; pp. 282–299.
33. Wobbrock, J.O.; Morris, M.R.; Wilson, A.D. User-defined gestures for surface computing. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, Boston, MA, USA, 4–9 April 2009; pp. 1083–1092.
34. Pietroni, E.; Ferdani, D. Virtual Restoration and Virtual Reconstruction in Cultural Heritage: Terminology, Methodologies, Visual Representation Techniques and Cognitive Models. Information 2021, 12, 167.
35. Lugaresi, C.; Tang, J.; Nash, H.; McClanahan, C.; Uboweja, E.; Hays, M.; Zhang, F.; Chang, C.-L.; Yong, M.G.; Lee, J. Mediapipe: A framework for building perception pipelines. arXiv 2019, arXiv:1906.08172.
36. Bradski, G. The OpenCV library. Dr. Dobb’s J. Softw. Tools 2000, 120, 122–125.
37. Abadi, M.; Barham, P.; Chen, J.M.; Chen, Z.F.; Davis, A.; Dean, J.; Devin, M.; Ghemawat, S.; Irving, G.; Isard, M.; et al. TensorFlow: A system for large-scale machine learning. In Proceedings of OSDI’16: 12th USENIX Symposium on Operating Systems Design and Implementation, Savannah, GA, USA, 2–4 November 2016; pp. 265–283.
38. Yoshida, R.; Tamaki, H.; Sakai, T.; Nakadai, T.; Ogitsu, T.; Takemura, H.; Mizoguchi, H.; Kamiyama, S.; Yamaguchi, E.; Inagaki, S.; et al. Novel Application of Kinect Sensor to Support Immersive Learning within Museum for Children. In Proceedings of the 2015 9th International Conference on Sensing Technology (ICST), Auckland, New Zealand, 8–10 December 2015; pp. 834–837.
39. Newcombe, R.A.; Izadi, S.; Hilliges, O.; Molyneaux, D.; Kim, D.; Davison, A.J.; Kohli, P.; Shotton, J.; Hodges, S.; Fitzgibbon, A. KinectFusion: Real-Time Dense Surface Mapping and Tracking. In Proceedings of the 2011 IEEE International Symposium on Mixed and Augmented Reality, Basel, Switzerland, 26–29 October 2011; pp. 127–136.
40. Palma, G.; Perry, S.; Cignoni, P. Augmented Virtuality Using Touch-Sensitive 3D-Printed Objects. Remote Sens. 2021, 13, 2186.
41. Berrezueta-Guzman, S.; Wagner, S. Immersive Multiplayer VR: Unreal Engine’s Strengths, Limitations, and Future Prospects. IEEE Access 2025, 13, 85597–85612.
42. Boutsi, A.M.; Ioannidis, C.; Soile, S. Interactive Online Visualization of Complex 3d Geometries. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2019, XLII-2/W9, 173–180.
43. Jerkku, I. Designing and Developing an Educational VJ Tool. Master’s Thesis, Aalto University, Espoo, Finland, 2024.
44. Kuoppala, P. Real-Time Image Generation for Interactive Art: Developing an Artwork Platform with ComfyUI and TouchDesigner. Bachelor’s Thesis, Tampere University of Applied Sciences, Pirkanmaa, Finland, 2025.
45. Ismiranti, A.S.; Kogia, A. Utilizing Digital Body Tracking Systems to Develop Sustainable and Ideal Workspace. KnE Social Sci. 2025, 10, 1–8.
46. Blankensmith, T. MediaPipe TouchDesigner Plugin. 2025. Available online: https://github.com/torinmb/mediapipe-touchdesigner (accessed on 19 June 2025).
47. Zhang, F.; Bazarevsky, V.; Vakunov, A.; Tkachenka, A.; Sung, G.; Chang, C.-L.; Grundmann, M. MediaPipe Hands: On-device Real-time Hand Tracking. arXiv 2020, arXiv:2006.10214.
48. Ioannakis, G.; Bampis, L.; Koutsoudis, A. Exploiting artificial intelligence for digitally enriched museum visits. J. Cult. Herit. 2020, 42, 171–180.
49. Kyriakou, P.; Hermon, S. Can I touch this? Using Natural Interaction in a Museum Augmented Reality System. Digit. Appl. Archaeol. Cult. Herit. 2019, 12, e00088.
50. Shehade, M.; Stylianou-Lambert, T. Virtual reality in museums: Exploring the experiences of museum professionals. Appl. Sci. 2020, 10, 4031.
51. Falk, J.H.; Dierking, L.D. The Museum Experience Revisited; Routledge: Abingdon, UK, 2016.

| Feature | PETRA (Webcam) | Kinect/Leap Motion | VR Headset |
|---|---|---|---|
| Hardware cost | Very Low | Low to Medium | Medium to High |
| Hardware dependency | Any webcam | Specific sensor | Specific headset |
| Setup complexity | Very Low | Medium | High |
| Hygiene (touchless) | Excellent | Excellent | Poor (shared headset) |
| User isolation | Low (social) | Low (social) | High (individual) |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Apopei, A.I. Accessible Interface for Museum Geological Exhibitions: PETRA—A Gesture-Controlled Experience of Three-Dimensional Rocks and Minerals. Minerals 2025, 15, 775. https://doi.org/10.3390/min15080775
