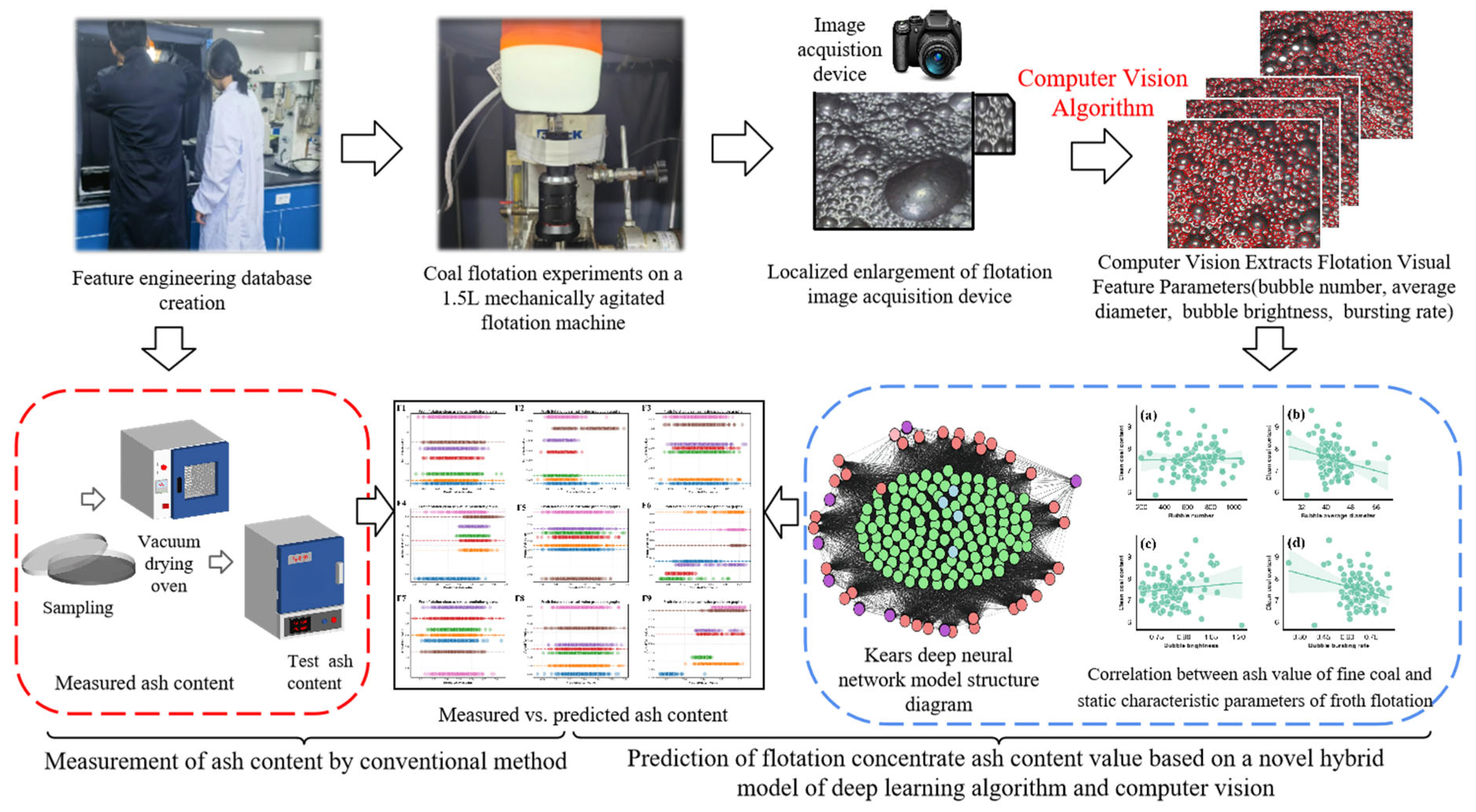

Investigating the Influence of Froth Image Attributes on Clean Coal Ash Content: A Novel Hybrid Model Employing Deep Learning and Computer Vision Techniques for Prediction Exploration

Abstract

1. Introduction

2. Flotation Experiments and Data Collection

2.1. Experimental Environment

2.2. Flotation Experiments

2.3. Data Acquisition

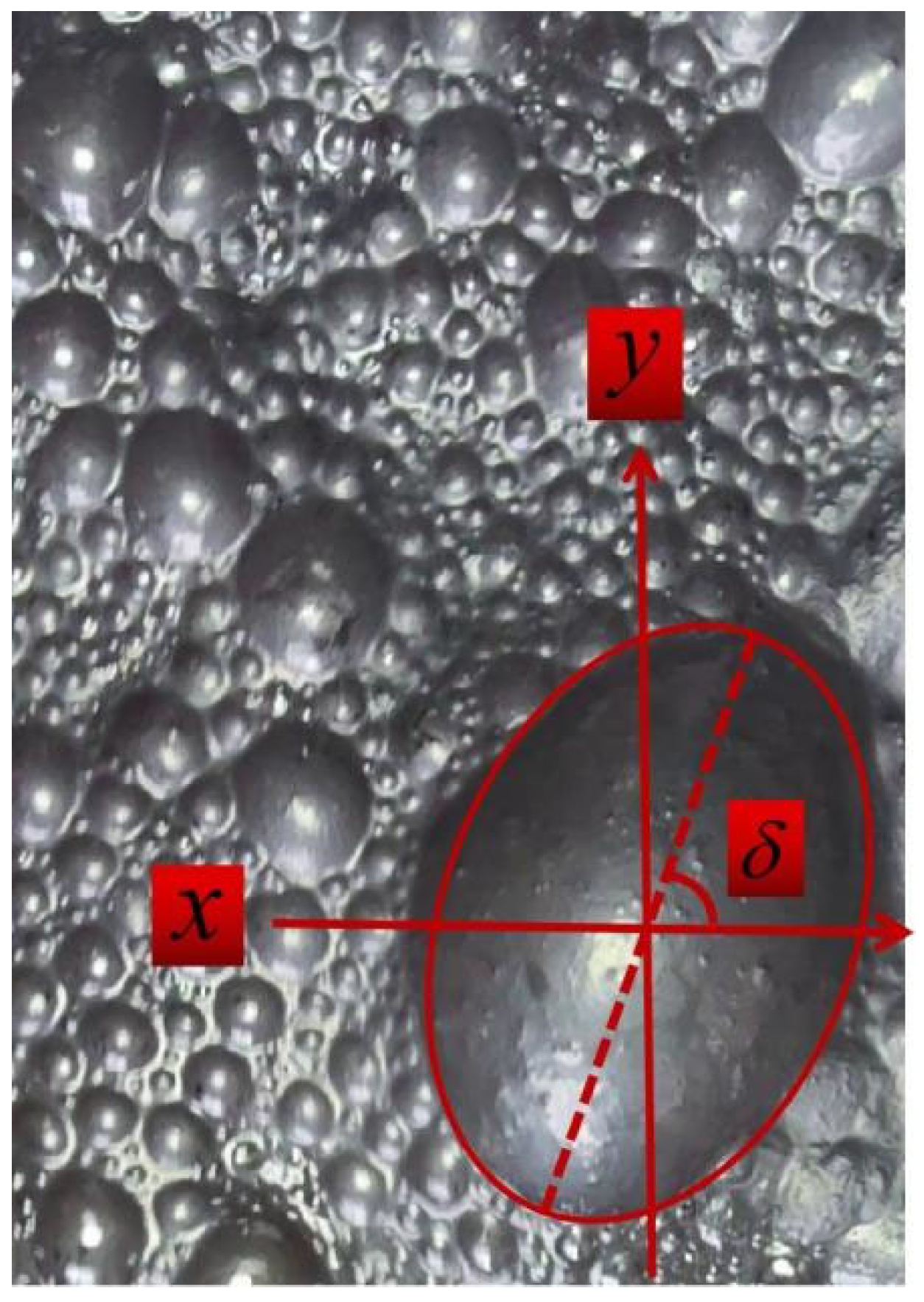

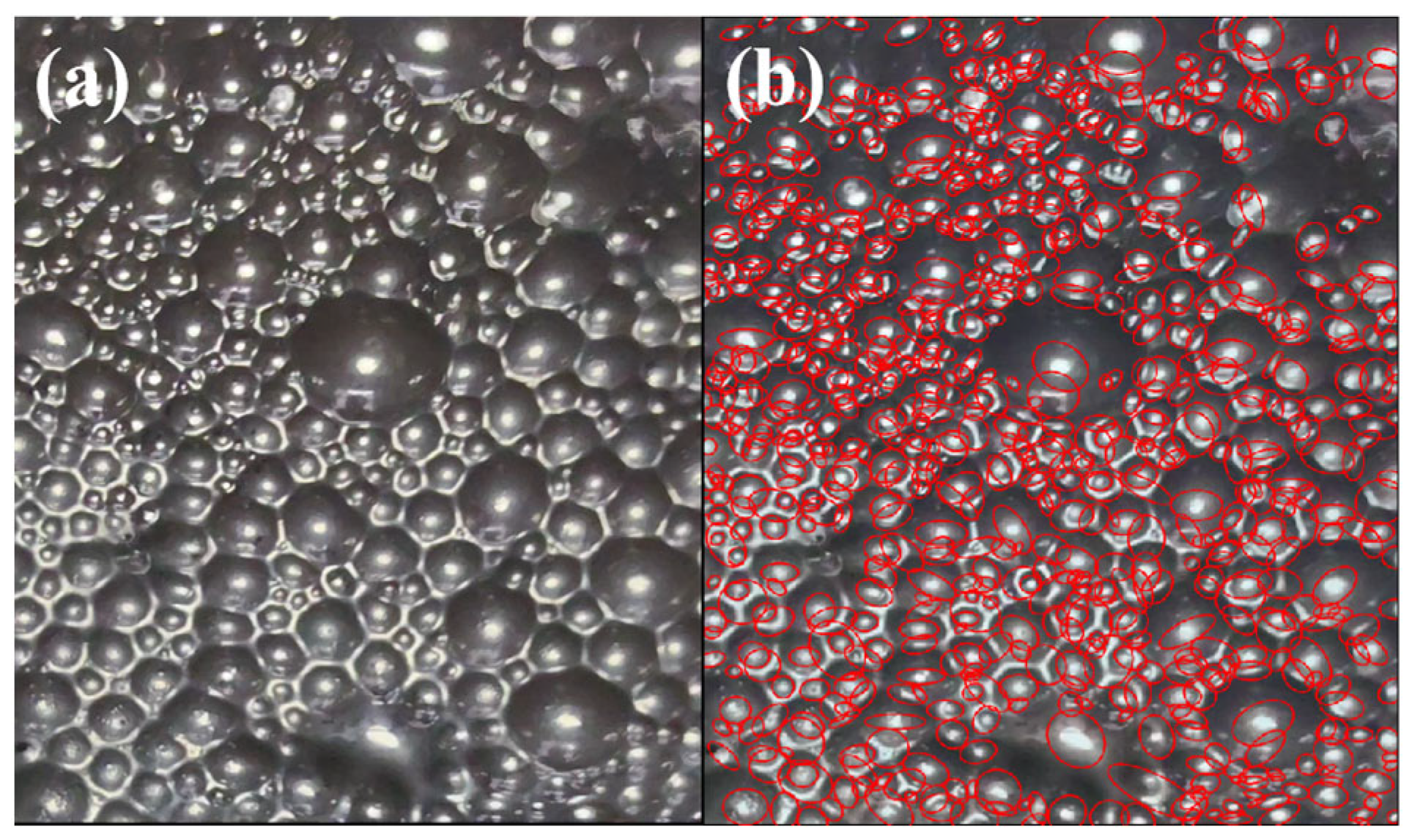

3. Froth Flotation Foam Feature Extraction

3.1. 5 × 5 Convolution Kernel Sobel Operator
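A minimal NumPy sketch of 5 × 5 Sobel gradient filtering follows. It assumes the common separable construction: the outer product of the binomial smoothing taps [1, 4, 6, 4, 1] and the derivative taps [-1, -2, 0, 2, 1], which are the coefficients OpenCV uses for `ksize=5`. Whether this work used exactly these coefficients, and how the gradient map was thresholded afterwards, are assumptions. The helper names `filter2d` and `sobel5_magnitude` are hypothetical.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

# Assumed 5x5 Sobel construction: binomial smoothing x derivative taps
SMOOTH = np.array([1, 4, 6, 4, 1], dtype=float)
DERIV = np.array([-1, -2, 0, 2, 1], dtype=float)

KX = np.outer(SMOOTH, DERIV)  # responds to intensity changes along x (columns)
KY = KX.T                     # responds to intensity changes along y (rows)


def filter2d(image, kernel):
    """Cross-correlate a grayscale image with a square kernel, replicating border pixels."""
    r = kernel.shape[0] // 2
    padded = np.pad(np.asarray(image, dtype=float), r, mode="edge")
    windows = sliding_window_view(padded, kernel.shape)  # shape (H, W, k, k)
    return np.einsum("ijkl,kl->ij", windows, kernel)


def sobel5_magnitude(image):
    """Gradient magnitude sqrt(Gx^2 + Gy^2) from the two 5x5 Sobel responses."""
    gx = filter2d(image, KX)
    gy = filter2d(image, KY)
    return np.hypot(gx, gy)


# Usage: a synthetic vertical step edge (dark left half, bright right half)
step = np.zeros((10, 10))
step[:, 5:] = 255.0
magnitude = sobel5_magnitude(step)
print(magnitude[0])  # zero in the flat regions, peaks at columns 4 and 5
```

Compared with a 3 × 3 Sobel kernel, the wider binomial smoothing suppresses more pixel-level noise before differentiation. That trade-off suits textured froth surfaces, at the cost of slightly blurred edge localisation.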

3.2. The Size and Quantity of Bubbles
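Bubble quantity and size can be illustrated with a minimal sketch. It assumes the froth image has already been segmented into a binary mask (True inside bubbles, for example after edge detection and thresholding). The sketch counts 4-connected regions and reports the mean equivalent circular diameter, d = 2·sqrt(A/π), in pixels. The segmentation step, the `min_area` filter and the function names are assumptions for illustration, not the procedure reported for this work.

```python
import math
from collections import deque

import numpy as np


def label_regions(mask):
    """Label 4-connected True regions of a boolean mask with a breadth-first flood fill."""
    mask = np.asarray(mask, dtype=bool)
    labels = np.zeros(mask.shape, dtype=int)
    h, w = mask.shape
    count = 0
    for r in range(h):
        for c in range(w):
            if mask[r, c] and labels[r, c] == 0:
                count += 1
                labels[r, c] = count
                queue = deque([(r, c)])
                while queue:
                    y, x = queue.popleft()
                    for ny, nx in ((y + 1, x), (y - 1, x), (y, x + 1), (y, x - 1)):
                        if 0 <= ny < h and 0 <= nx < w and mask[ny, nx] and labels[ny, nx] == 0:
                            labels[ny, nx] = count
                            queue.append((ny, nx))
    return labels, count


def bubble_count_and_mean_diameter(mask, min_area=1):
    """Return (number of bubbles, mean equivalent diameter in pixels)."""
    labels, _ = label_regions(mask)
    areas = np.bincount(labels.ravel())[1:]  # pixel area of each labelled region
    areas = areas[areas >= min_area]          # drop specks smaller than min_area
    if areas.size == 0:
        return 0, 0.0
    diameters = 2.0 * np.sqrt(areas / math.pi)  # diameter of a circle with the same area
    return int(areas.size), float(diameters.mean())


# Usage: two separate square "bubbles" of 3x3 and 5x5 pixels
mask = np.zeros((12, 12), dtype=bool)
mask[1:4, 1:4] = True
mask[5:10, 5:10] = True
n, mean_d = bubble_count_and_mean_diameter(mask)
print(n, round(mean_d, 2))  # prints: 2 4.51
```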

3.3. Bubble Brightness

3.4. Bubble Bursting Rate
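The following sketch assumes one plausible definition of the bursting rate, which is a hypothetical choice rather than the formula used in this work. A bubble detected in frame t counts as burst when no bubble centroid in frame t + 1 lies within `max_shift` pixels of it, and the rate is the burst fraction. Centroids are assumed to come from a prior segmentation step, and `bursting_rate` and `max_shift` are illustrative names.

```python
import numpy as np


def bursting_rate(centroids_t, centroids_next, max_shift=5.0):
    """Fraction of bubbles in frame t with no centroid in frame t+1 within max_shift pixels.

    Hypothetical definition for illustration; centroids are (row, col) pairs.
    """
    prev = np.asarray(centroids_t, dtype=float).reshape(-1, 2)
    nxt = np.asarray(centroids_next, dtype=float).reshape(-1, 2)
    if len(prev) == 0:
        return 0.0  # no bubbles that could burst
    if len(nxt) == 0:
        return 1.0  # every bubble disappeared
    # Pairwise Euclidean distances, shape (len(prev), len(nxt))
    dists = np.linalg.norm(prev[:, None, :] - nxt[None, :, :], axis=2)
    burst = dists.min(axis=1) > max_shift  # unmatched bubbles are treated as burst
    return float(burst.mean())


# Usage: two of four bubbles find a nearby successor, two do not
frame_t = [(0, 0), (10, 10), (20, 20), (30, 30)]
frame_next = [(1, 0), (10, 12), (50, 50)]
print(bursting_rate(frame_t, frame_next))  # prints: 0.5
```

Nearest-neighbour matching lets two bubbles claim the same successor. A stricter variant would enforce one-to-one matching, for example with the Hungarian algorithm.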

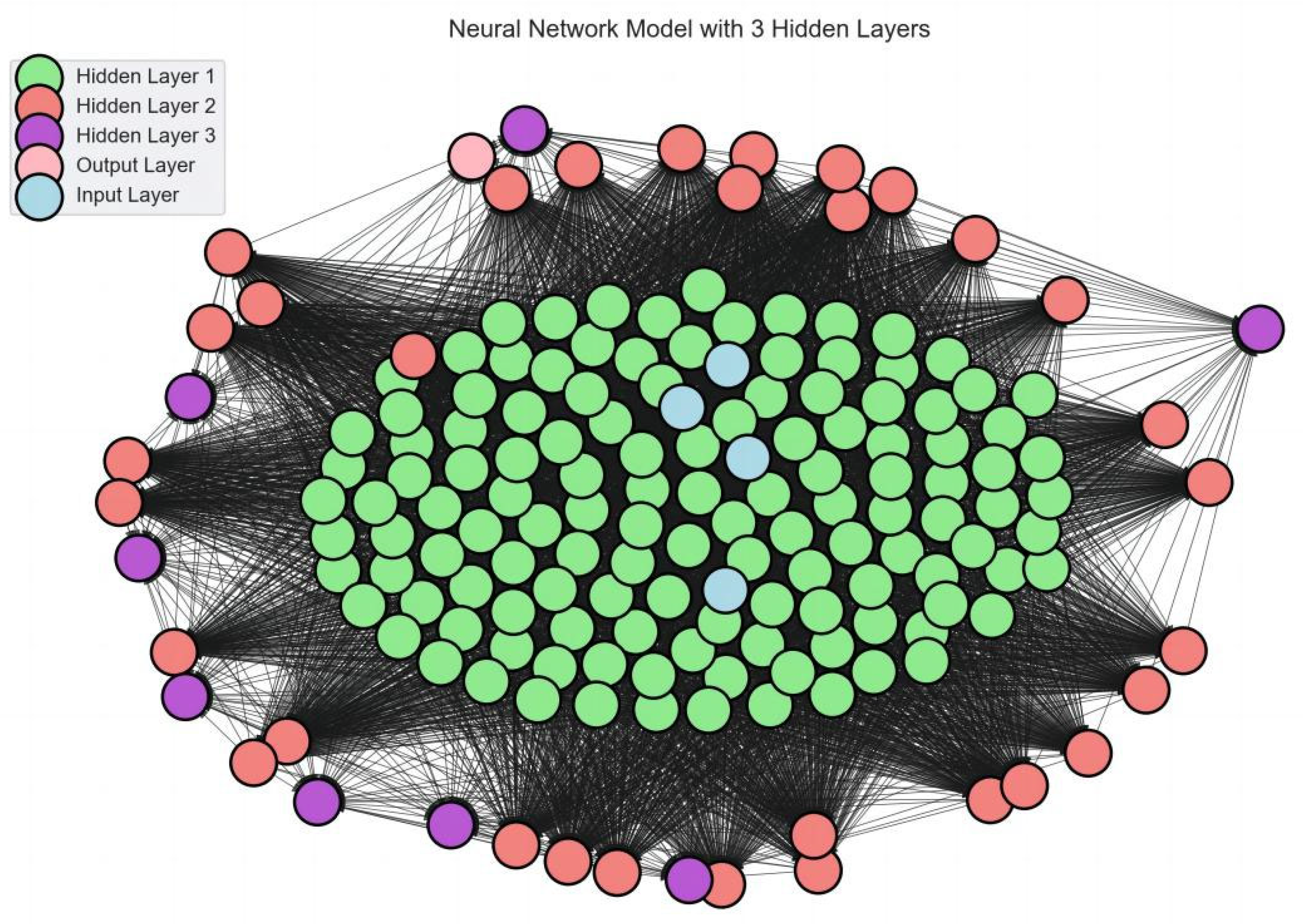

4. Keras Deep Neural Network Modeling with Multiple Feature Inputs and Mixed Data Inputs

4.1. Predicting Ash Values in Froth Flotation Concentrates Using Deep Neural Networks

4.2. 10-Fold Cross-Validation Design
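Below is a minimal sketch of a 10-fold split. It assumes the rows of the feature table are shuffled once and partitioned into ten folds, and it takes 16,200 rows because that is the largest image ID shown in the feature table. The seed and the helper name `k_fold_indices` are hypothetical. scikit-learn's `sklearn.model_selection.KFold(n_splits=10, shuffle=True, random_state=...)` implements the same scheme.

```python
import numpy as np


def k_fold_indices(n_samples, k=10, seed=0):
    """Yield (train_idx, val_idx) pairs; every sample is validated exactly once."""
    rng = np.random.default_rng(seed)
    order = rng.permutation(n_samples)  # shuffle, since rows may be ordered by experiment
    folds = np.array_split(order, k)    # k nearly equal folds
    for i in range(k):
        val_idx = folds[i]
        train_idx = np.concatenate([folds[j] for j in range(k) if j != i])
        yield train_idx, val_idx


# Usage: split 16,200 image rows into 10 folds
splits = list(k_fold_indices(16200, k=10))
print(len(splits), len(splits[0][0]), len(splits[0][1]))  # prints: 10 14580 1620
```

Frames from one flotation test share a single ash value. Randomly shuffled folds may therefore place near-identical frames in both the training and validation sets. Grouping folds by test, for example with scikit-learn's `GroupKFold`, is a stricter alternative.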

4.3. Evaluation of Model Prediction Performance Metrics
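The model is evaluated with three standard regression error measures:

- MSE = (1/n) Σ (y_i - ŷ_i)^2
- RMSE = sqrt(MSE)
- MAE = (1/n) Σ |y_i - ŷ_i|

A minimal NumPy sketch follows. The function name `regression_errors` and the predicted values in the usage line are hypothetical; the measured values are ash contents taken from the feature table. `sklearn.metrics.mean_squared_error` and `sklearn.metrics.mean_absolute_error` compute the same quantities.

```python
import numpy as np


def regression_errors(y_true, y_pred):
    """Return MSE, RMSE and MAE between measured and predicted ash contents."""
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    err = y_pred - y_true
    mse = float(np.mean(err ** 2))
    return {
        "MSE": mse,
        "RMSE": float(np.sqrt(mse)),
        "MAE": float(np.mean(np.abs(err))),
    }


# Usage with measured ash values from the feature table and hypothetical predictions
measured = [6.55, 6.55, 7.23, 8.01]
predicted = [6.60, 6.50, 7.13, 8.11]
print(regression_errors(measured, predicted))  # MSE ~0.00625, RMSE ~0.0791, MAE ~0.075
```

RMSE weights large errors more heavily than MAE, so a gap between the two indicates that a few predictions deviate much more than the rest.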

5. Results and Analysis

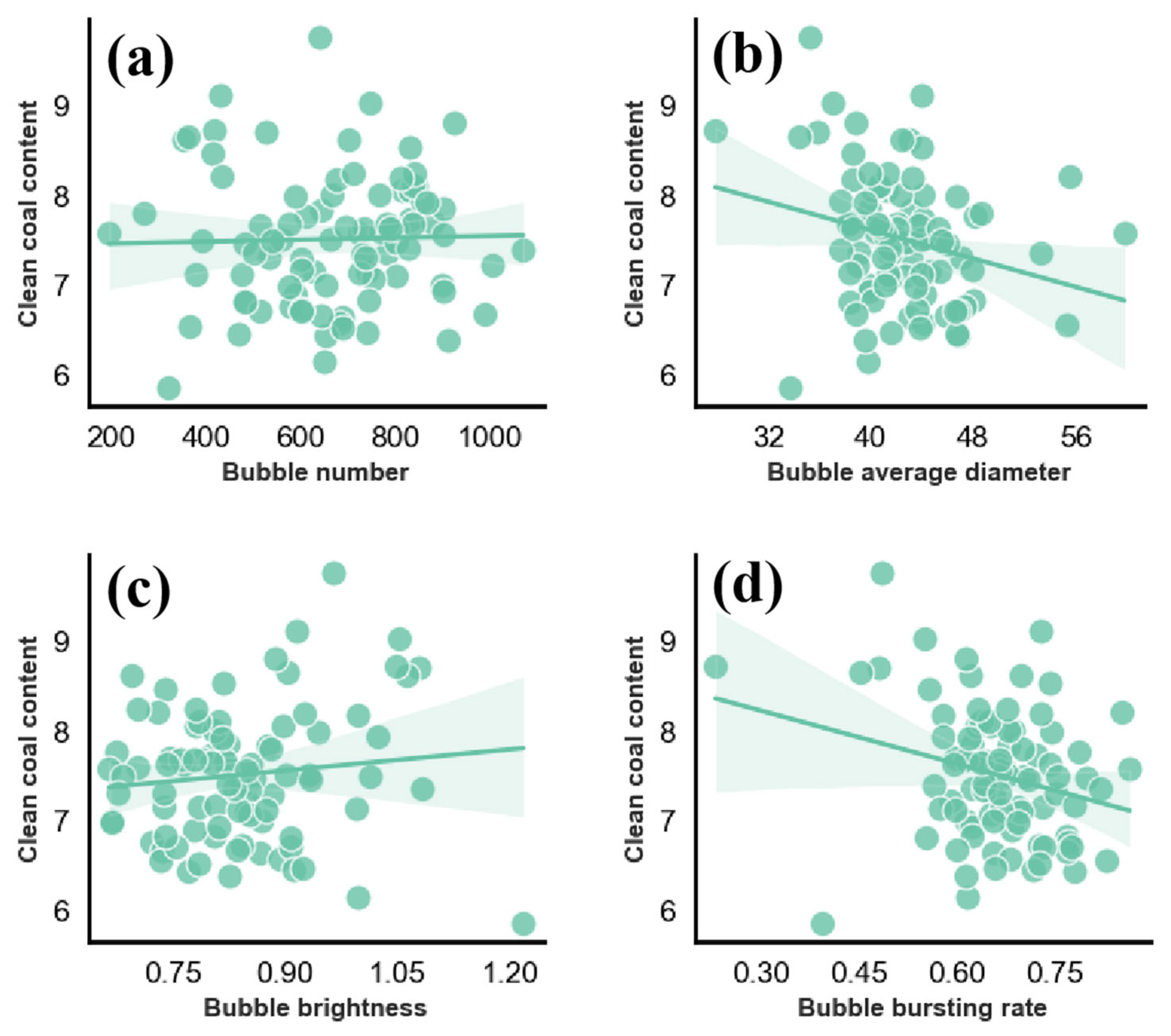

5.1. Relationship between Static Characteristic Parameters of Froth Flotation and Ash Values

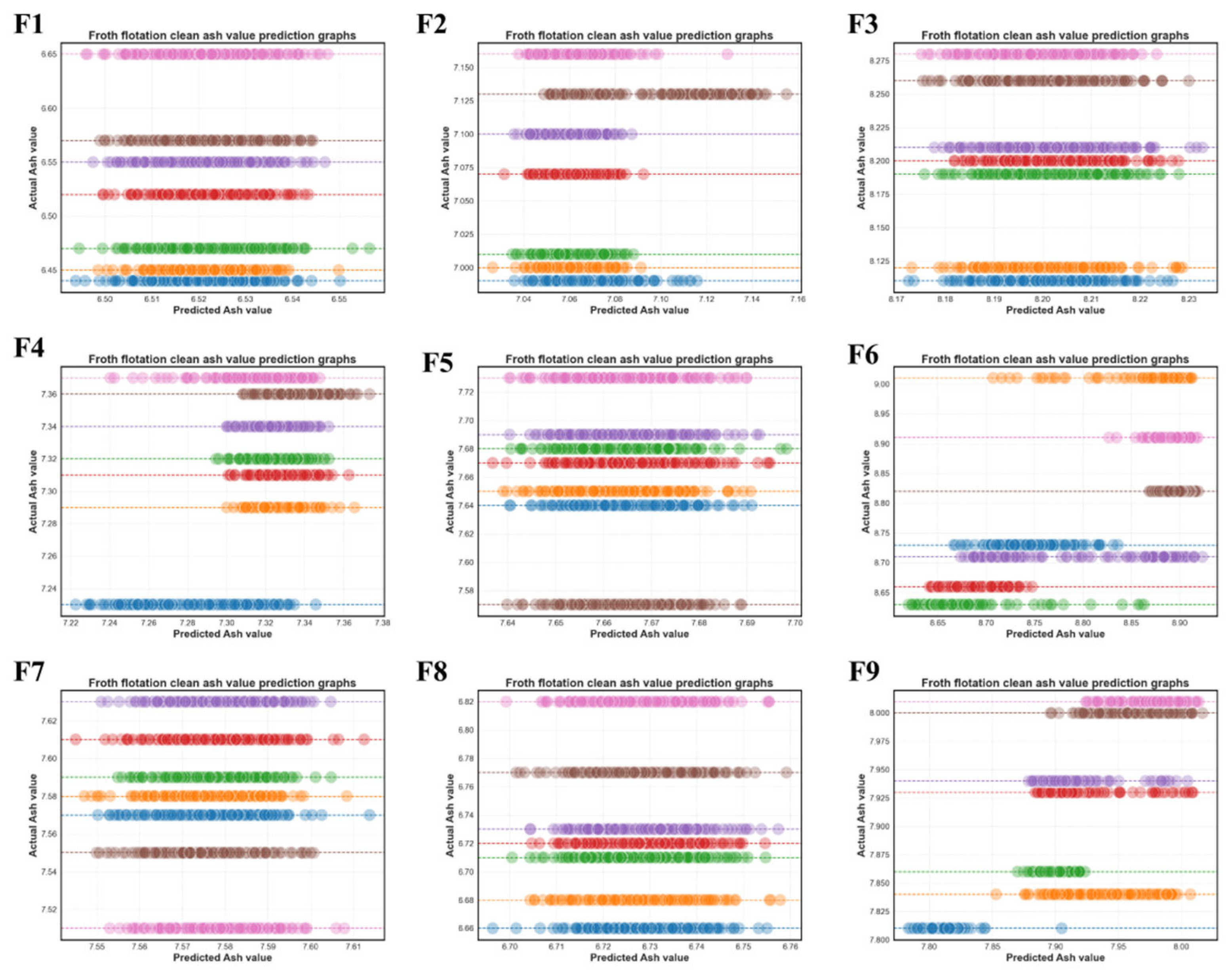

5.2. Froth Flotation Concentrate Ash Value Prediction and Result Analysis

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Software | Version |
|---|---|
| Programming Language | Python 3.9 |
| Deep Learning Framework | PyTorch 1.12.1 |
| Scikit-learn | 0.24.2 |
| TensorFlow | 2.9.1 |
| Keras | 2.9.0 |
| Matplotlib | 3.4.3 |
| Theano | 1.1.2 |

| Id | Bubble Number | Bubble Diameter | Brightness | Bursting Rate | Clean Coal Ash Content (%) |
|---|---|---|---|---|---|
| 1.jpg | 185 | 35 | 1.248 | 0.416 | 6.55 |
| 2.jpg | 187 | 35 | 1.198 | 0.449 | 6.55 |
| 3.jpg | 183 | 36 | 1.176 | 0.415 | 6.55 |
| 4.jpg | 155 | 37 | 1.234 | 0.439 | 6.55 |
| 5.jpg | 175 | 36 | 1.194 | 0.446 | 6.55 |
| 6.jpg | 186 | 36 | 1.236 | 0.43 | 6.55 |
| 7.jpg | 191 | 36 | 1.243 | 0.455 | 6.55 |
| 8.jpg | 166 | 37 | 1.311 | 0.434 | 6.55 |
| 9.jpg | 167 | 36 | 1.327 | 0.449 | 6.55 |
| 10.jpg | 195 | 35 | 1.221 | 0.431 | 6.55 |
| … | … | … | … | … | … |
| 179.jpg | 872 | 34 | 0.749 | 0.469 | 6.55 |
| 180.jpg | 863 | 34 | 0.761 | 0.457 | 6.55 |
| 181.jpg | 1133 | 37 | 0.926 | 0.575 | 7.23 |
| 182.jpg | 1158 | 37 | 0.811 | 0.537 | 7.23 |
| … | … | … | … | … | … |
| 16200.jpg | 666 | 45 | 0.725 | 0.682 | 8.01 |

| Group | Training MSE | Training RMSE | Training MAE | Validation MSE | Validation RMSE | Validation MAE |
|---|---|---|---|---|---|---|
| F1 | 0.004869 | 0.069782 | 0.058772 | 0.202890 | 0.450433 | 0.394718 |
| F2 | 0.003553 | 0.059614 | 0.051532 | 0.004489 | 0.067001 | 0.056004 |
| F3 | 0.003536 | 0.059472 | 0.047269 | 0.127973 | 0.357733 | 0.352445 |
| F4 | 0.001356 | 0.036827 | 0.029944 | 0.015303 | 0.123708 | 0.122085 |
| F5 | 0.001800 | 0.042426 | 0.032838 | 0.006037 | 0.077698 | 0.072213 |
| F6 | 0.005604 | 0.074865 | 0.053637 | 0.326040 | 0.570999 | 0.547659 |
| F7 | 0.001319 | 0.036328 | 0.028983 | 0.006623 | 0.081384 | 0.080377 |
| F8 | 0.002674 | 0.051719 | 0.041912 | 0.022733 | 0.150776 | 0.144273 |
| F9 | 0.002443 | 0.049434 | 0.038880 | 0.017150 | 0.130958 | 0.122604 |
| Average error value | 0.003017 | 0.053385 | 0.042640 | 0.081026 | 0.223410 | 0.210264 |
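The "Average error value" row can be reproduced as the arithmetic mean of each column over the nine listed groups, F1 to F9. The short check below does that and also confirms that each RMSE matches the square root of its MSE to within the rounding of the tabulated values. The averaged RMSE is the mean of the per-group RMSEs rather than the square root of the averaged MSE; for the validation set, sqrt(0.081026) ≈ 0.2847, not 0.223410.

```python
import math

# (MSE, RMSE, MAE) per group, copied from the table above
TRAIN = {
    "F1": (0.004869, 0.069782, 0.058772),
    "F2": (0.003553, 0.059614, 0.051532),
    "F3": (0.003536, 0.059472, 0.047269),
    "F4": (0.001356, 0.036827, 0.029944),
    "F5": (0.001800, 0.042426, 0.032838),
    "F6": (0.005604, 0.074865, 0.053637),
    "F7": (0.001319, 0.036328, 0.028983),
    "F8": (0.002674, 0.051719, 0.041912),
    "F9": (0.002443, 0.049434, 0.038880),
}
VALID = {
    "F1": (0.202890, 0.450433, 0.394718),
    "F2": (0.004489, 0.067001, 0.056004),
    "F3": (0.127973, 0.357733, 0.352445),
    "F4": (0.015303, 0.123708, 0.122085),
    "F5": (0.006037, 0.077698, 0.072213),
    "F6": (0.326040, 0.570999, 0.547659),
    "F7": (0.006623, 0.081384, 0.080377),
    "F8": (0.022733, 0.150776, 0.144273),
    "F9": (0.017150, 0.130958, 0.122604),
}
REPORTED_AVERAGE = {
    "train": (0.003017, 0.053385, 0.042640),
    "valid": (0.081026, 0.223410, 0.210264),
}


def column_means(rows):
    """Arithmetic mean of each metric column over all groups."""
    values = list(rows.values())
    return tuple(sum(col) / len(values) for col in zip(*values))


for name, rows in (("train", TRAIN), ("valid", VALID)):
    means = column_means(rows)
    # Reported averages agree with the recomputed means to within 1e-6
    assert all(math.isclose(m, r, abs_tol=1e-6) for m, r in zip(means, REPORTED_AVERAGE[name]))
    # Each RMSE is consistent with sqrt(MSE) up to rounding of the tabulated values
    assert all(math.isclose(math.sqrt(mse), rmse, abs_tol=1e-4) for mse, rmse, _ in rows.values())
    print(name, [round(m, 6) for m in means])
```

The recomputed training MAE mean is 0.0426408, which the table lists as 0.042640.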

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Lu, F.; Liu, N.; Liu, H. Investigating the Influence of Froth Image Attributes on Clean Coal Ash Content: A Novel Hybrid Model Employing Deep Learning and Computer Vision Techniques for Prediction Exploration. Minerals 2024, 14, 536. https://doi.org/10.3390/min14060536


Chicago/Turabian Style: Lu, Fucheng, Na Liu, and Haizeng Liu. 2024. "Investigating the Influence of Froth Image Attributes on Clean Coal Ash Content: A Novel Hybrid Model Employing Deep Learning and Computer Vision Techniques for Prediction Exploration" Minerals 14, no. 6: 536. https://doi.org/10.3390/min14060536
