2.1. Description of the Data Set

Initially, Lawson Prime data consisted of 472 entries with 37 features, including 1 column for a data label (1 = dynamic failure and 0 = control) and 1 column for a PSOC sample number. Prior to performing machine learning analysis on these data, several pre-processing steps were performed. First, all alphanumeric data features were deleted, along with features that had null data. To avoid ‘multicollinearity’ or the use of interdependent variables, a correlation plot between features was examined. When a high correlation (or anti-correlation) exists, the features may not be independent. Hence, highly correlated features provide the same information, and so one should be dropped. Of the 37 initial features, 17 were then removed. These removals only address the most highly correlated variables, however, and many of the remaining variables retain some degree of interdependency. Based on the number of null data entries, 2 additional features were deleted, leaving 18. The final data set consisted of 468 points. Of these, 95 points were from coal seam samples that reported dynamic failure and the remaining 373 were considered the control group.
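As an illustrative sketch of the correlation screening described above, the following drops one feature from each highly correlated pair. The function name `drop_correlated`, the 0.9 cutoff, and the synthetic columns are assumptions made for illustration; the correlation threshold actually applied to the Lawson Prime data is not stated here.

```python
import numpy as np
import pandas as pd

def drop_correlated(df, threshold=0.9):
    """Drop one feature from each pair whose |Pearson r| exceeds threshold.

    The threshold value is a hypothetical choice, not taken from the study.
    """
    corr = df.corr().abs()
    # Keep only the strict upper triangle so each pair is inspected once.
    upper = corr.where(np.triu(np.ones(corr.shape, dtype=bool), k=1))
    to_drop = [col for col in upper.columns if (upper[col] > threshold).any()]
    return df.drop(columns=to_drop), to_drop

# Synthetic stand-in data: "b" is nearly a rescaled copy of "a".
rng = np.random.default_rng(0)
a = rng.normal(size=200)
demo = pd.DataFrame({
    "a": a,
    "b": 2.0 * a + rng.normal(scale=0.01, size=200),
    "c": rng.normal(size=200),
})
reduced, dropped = drop_correlated(demo, threshold=0.9)
```

Both correlated and anti-correlated pairs are caught because the absolute value of the coefficient is compared against the cutoff.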

Coal rank is determined by differences in the calorific value, fixed carbon content, and volatile matter content, which in turn impact the physical properties of the coal. Lignite, for example, at one end of this spectrum, has a low calorific value and low fixed carbon, is relatively soft with a dull or earthy luster, and may exhibit poorly developed or absent cleating. Anthracite, by contrast, has high fixed carbon and a high calorific value, and is hard with an adamantine luster and very well developed cleating. The data span all commercially mined coal ranks. The majority, however, fall within the bituminous range with 215 or approximately 45% falling within the high-volatility bituminous A range.

The distribution of the final 468 data points, with respect to state location and rank, is given in Table 1 and Table 2.

A quick look at these data shows that the vast majority of cases were taken from the Appalachian Basin. In addition, most data points were from bituminous coals. This is true of both dynamic failure and control cases. Additionally, the discrepancy between the number of dynamic failure and control cases renders the data available for analysis imbalanced, with the majority population falling within the control group.

Logistic regression and random forest analyses were performed on the scrubbed data set, assuming that the data were dichotomous (i.e., had or had not experienced dynamic failure), to see if an effective model indicating the potential of seam dynamic failures based on compositional features could be constructed. It must be emphasized that this analysis in no way seeks to replace the consideration of stresses, mining methods, overburden thickness, etc., as agents contributing to dynamic failures. Instead, by assuming that all other factors are equal, this analysis seeks to examine the possibility that compositional factors may provide an indication of whether some coals may have a higher propensity for dynamic failures.

The data set was then further examined for data structure using cluster analysis and dimensionality reduction. Cluster analysis was performed to see if an algorithmic application could extract the same clusters (or other clusters) as an analyst and to explore the data for relationships and structures not readily apparent in higher-dimensional space. Cluster analysis was performed using hierarchical density-based spatial clustering of applications with noise (HDBscan) [13]. Dimensionality reduction was performed using both principal component analysis (PCA) and t-distributed stochastic neighbor embedding (t-SNE), as described by Van der Maaten and Hinton [14].

2.2. Logistic Regression

After the number of features was reduced, the logistic regression package available in Python Sklearn [15] was applied. Numerous articles in the literature point out that in logistic regression, data normalization is not necessarily needed [16]. However, the different feature values retained for analysis in the Lawson Prime data set possessed values that varied by several orders of magnitude. This variation in data magnitude could potentially reduce or magnify their impact in both regression fitting and cluster analysis, and so normalization was applied. Tests were performed with no scaling or normalization, with data centering, data normalization, and data standardization. Centering provided the best results in terms of preliminary classification accuracy. This method was used to scale data in all subsequent work.
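The three scaling options compared above can be sketched as follows. This is a minimal illustration on made-up values; in particular, reading "normalization" as min-max rescaling to [0, 1] is an assumption, since the exact transform used is not specified in the text.

```python
import numpy as np

# Two hypothetical features whose magnitudes differ by orders of magnitude.
X = np.array([
    [0.5, 12000.0],
    [1.5, 14000.0],
    [2.5, 13000.0],
])

# Centering: subtract the per-feature mean (spread is unchanged).
centered = X - X.mean(axis=0)

# Normalization (assumed here to mean min-max rescaling to [0, 1]).
span = X.max(axis=0) - X.min(axis=0)
normalized = (X - X.min(axis=0)) / span

# Standardization: zero mean and unit standard deviation per feature.
standardized = (X - X.mean(axis=0)) / X.std(axis=0)
```

Note that centering only shifts each feature to zero mean, whereas the other two transforms also change its spread.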

While models can be constructed using the entire feature set, this is not efficient and not all features may contribute to the logistic regression classification accuracy. It is important to try and select only the most significant features for regression based on defined criteria. Recursive feature elimination with cross-validation (RFECV) was applied to the edited Lawson Prime data set [15, 17]. Recursive feature elimination involves a backward selection of predictors. First, a model is built using all the features and an importance score is computed for each. The least important is removed and the process is repeated. Based on this, a set of features that most effectively performs a classification is determined. These features can then be used to train a final model. The RFE algorithm used was stratified k-fold, with 2-fold cross-validation.
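A minimal sketch of one RFECV run of the kind described above is given here, using the Sklearn `RFECV` and `StratifiedKFold` classes on synthetic stand-in data. The synthetic data set, the accuracy scoring choice, the shuffling, and the random seeds are assumptions for illustration and are not taken from the study.

```python
from sklearn.datasets import make_classification
from sklearn.feature_selection import RFECV
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import StratifiedKFold

# Synthetic stand-in for one balanced trial: 172 points, 18 features.
X, y = make_classification(
    n_samples=172, n_features=18, n_informative=4, n_redundant=2, random_state=0
)
X = X - X.mean(axis=0)  # centering, as used for the scaled data

selector = RFECV(
    estimator=LogisticRegression(max_iter=1000),
    step=1,  # remove one feature per elimination round
    cv=StratifiedKFold(n_splits=2, shuffle=True, random_state=0),
    scoring="accuracy",  # assumed scoring metric
)
selector.fit(X, y)

n_selected = selector.n_features_   # number of features judged optimal
selected_mask = selector.support_   # boolean mask of retained features
ranking = selector.ranking_         # rank 1 marks a retained feature
```

Repeating such a run over many resampled subsets and tallying `n_selected` and `selected_mask` would give counts of the kind summarized for the 3000 trials.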

Another item to consider at this point is regularization. During RFECV, the best fits are determined by a maximum likelihood estimator. Regularization applies a penalty function to this expression, so the values of coefficients are driven smaller to prevent overfitting of the data [18, 19].
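For illustration only, a penalized likelihood of the kind described above can be written as follows. The L2 form of the penalty and the symbol $\lambda$ for its strength are assumptions; the text does not state which penalty was applied. (The Sklearn logistic regression default is an L2 penalty, controlled through an inverse-strength parameter `C`.)

$$
\hat{\beta} = \arg\max_{\beta_0,\,\beta} \left[ \sum_{i=1}^{n} \Big( y_i \log p_i + (1 - y_i) \log (1 - p_i) \Big) - \lambda \lVert \beta \rVert_2^2 \right],
\qquad
p_i = \frac{1}{1 + e^{-(\beta_0 + x_i^{\top} \beta)}}
$$

Here $y_i \in \{0, 1\}$ is the class label of sample $i$ and $x_i$ is its feature vector. Setting $\lambda = 0$ recovers the unpenalized maximum likelihood fit, while larger values of $\lambda$ shrink the coefficients toward zero.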

Finally, prior to the application of RFECV and training of the classification model, the distribution of data must be considered. As data in the edited Lawson Prime set are asymmetric or imbalanced, the probability of rare dynamic failure events would most likely be underestimated [20, 21, 22]. To account for this asymmetry, a series of trials were conducted with RFECV where the majority class was down-sampled to create a balanced data set [23]. From the set of 373 control points, a randomly selected set of 86 was used in combination with 86 dynamic failure cases. A total of 3000 such cases were tested, and the highest scoring features were selected from these 3000 trials.
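The down-sampling step for a single trial can be sketched as below. The helper name `balanced_indices`, the label array, and the seed are hypothetical; only the class counts (95 dynamic failure, 373 control) and the 86-per-class draw come from the text.

```python
import numpy as np

def balanced_indices(y, n_per_class, rng):
    """Draw n_per_class indices from each class without replacement."""
    pos = np.flatnonzero(y == 1)  # dynamic failure cases
    neg = np.flatnonzero(y == 0)  # control cases
    keep_pos = rng.choice(pos, size=n_per_class, replace=False)
    keep_neg = rng.choice(neg, size=n_per_class, replace=False)
    return np.concatenate([keep_pos, keep_neg])

# Label vector with the class counts of the final data set.
y = np.array([1] * 95 + [0] * 373)

rng = np.random.default_rng(0)  # arbitrary seed for reproducibility
idx = balanced_indices(y, n_per_class=86, rng=rng)
y_balanced = y[idx]
```

Calling `balanced_indices` again with the same generator yields a fresh random subset, which is how repeated trials would differ from one another.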

The 18 features applied in calculating the optimal number to be used for the Lawson data set are listed in Table 3.

Figure 1 shows how many times a particular number of features was chosen as optimal using RFECV. In the 3000 trials, 4 features were determined to be optimal in the highest percentage of trials (462 of the 3000 trials or 15.4% of the total). This was followed by 5 features in 406 trials, or 13.5% of the total. This plot shows how many features were selected as optimal, but not what those features were.

Examining the optimal features determined from RFECV, four features dominated the most common selection. Pyritic sulfur content was computed to have the highest ranking in 2997 of the 3000 trials. This was followed by organic sulfur content, oxygen content, and volatile matter content. These 4 features were selected to perform logistic regression. The data were split into training and testing sets, the model was trained, and this model was then applied to the test data set. Due to the large asymmetry between dynamic failure and control data entries, as with RFECV, the data were reduced to all dynamic failure entries and a similar number of randomly selected control points. Of the 180 points that were selected, 60% were chosen for training and 40% for testing. Again, a total of 3000 trials with a 60/40 train–test split were conducted.

The classifier was trained on 108 points (60%) and tested on 72 points (40%). A confusion matrix was constructed for each test, giving the number of true positives (TPs), true negatives (TNs), false positives (FPs), and false negatives (FNs). The confusion matrix averages from the 3000 trials are shown in Table 4. For a perfect classifier, the number of true negatives would be 32 and the number of true positives would be 40. The average results show 32.8 true positives, 25.5 true negatives, 7.2 false negatives, and 6.5 false positives.

Table 5 gives the precision, recall, and F-beta scores computed on each trial and averaged over the total number of trials for the Lawson Prime data. Over the 3000 trials, the average precision and recall were approximately 81%.

2.3. Random Forest Classification

There are several different approaches used to perform classifications in machine learning besides logistic regression, but random forest has several advantages over other learning algorithms. These include a smaller chance of overfitting as compared to decision trees, less training time, and high accuracy within large databases. Disadvantages include the possibility of overfitting on some data sets. The algorithm, as implemented in Python Sklearn, constructs multiple decision trees during training. The decision from the majority of these trees is then chosen as the final decision [24].

Several variants on a random forest application were tested, and the results were compared to each other and to the results from logistic regression. First, the random forest algorithm was applied to the Lawson Prime data using default program parameters. Second, since the data were imbalanced, decision threshold tuning [25, 26] was applied to better address the correct classification of minority class data. Finally, to address the minority class and to reduce the chance of overfitting, a random hyperparameter search with cross-validation was applied [27]. While receiver operating characteristic (ROC) curves of true-positive rates versus false-positive rates can be produced, precision–recall curves are favored as a measure of algorithm effectiveness in the case of imbalanced data [28]. Because the data are imbalanced, the algorithm's skill in predicting the majority control cases (or a high number of true negatives) is of lower interest, and the cost of false negatives in dynamic failure classification is much higher than that of false positives. Thus, precision–recall curves, which provide more valuable information on classifier performance in the case of imbalanced data, were used here.

Recall and precision are given by:

$$
\mathrm{Recall} = \frac{TP}{TP + FN}, \qquad \mathrm{Precision} = \frac{TP}{TP + FP}
$$

where

TP = the number of true positives from the classifier;

FN = the number of false negatives from the classifier;

FP = the number of false positives from the classifier.

Thus, precision describes what proportion of positive identifications was actually correct. Recall quantifies what proportion of actual positives was correctly identified. Recall is then a measure which explains how well the model finds all of the minority class members [28].
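As a worked example of these two definitions, the sketch below applies them to the trial-averaged confusion matrix counts reported for the logistic regression tests (Table 4). The function name `precision_recall` is a hypothetical helper introduced for illustration.

```python
def precision_recall(tp, fp, fn):
    """Return (precision, recall) from confusion-matrix counts."""
    precision = tp / (tp + fp)  # share of positive calls that were correct
    recall = tp / (tp + fn)     # share of actual positives that were found
    return precision, recall

# Trial-averaged counts reported for the logistic regression test sets.
tp, tn, fn, fp = 32.8, 25.5, 7.2, 6.5

precision, recall = precision_recall(tp, fp, fn)
print(f"precision = {precision:.3f}, recall = {recall:.3f}")
```

These ratios of averaged counts (roughly 0.83 and 0.82) need not equal the per-trial averages of approximately 81% reported for the same trials, since an average of ratios generally differs from a ratio of averages.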

The default decision threshold was set to 0.5, meaning that data points with normalized predicted class membership probabilities greater than or equal to 0.5 were assigned to Class 1 (dynamic failure positive), and those with a probability less than 0.5 were assigned to Class 0 (control cases). Tuning this decision threshold can help address the imbalance and produce better results in terms of classifications [26]. Results with both a default threshold and a tuned decision threshold were examined. When tuning the decision threshold, several metrics may be used to numerically evaluate the performance of the model: the G-mean, the F-measure, and the Youden J statistic. For use in precision–recall curves, the F-score was used, defined as:

$$
F = \frac{2 \times \mathrm{Precision} \times \mathrm{Recall}}{\mathrm{Precision} + \mathrm{Recall}}
$$

A series of decision thresholds can be used to fit a training data set, and the classifier parameters from the training data applied to the test set. The F-score metric is then computed for each test set. The maximum value of the F-score was assigned as the ‘best’ value and the corresponding decision threshold was assigned as the optimal value. Accuracy can also be computed as the number of correct predictions divided by the total number of predictions, or (TP + TN)/(TP + TN + FP + FN). However, when dealing with class-imbalanced data, there is a significant disparity between the number of positive and negative class members. Hence, for negative members, there is a much higher probability of correct prediction and the true negatives dominate the accuracy parameter, giving a misleading view of model effectiveness [29]. Thus, accuracy was not used as a measure of model capability.
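Threshold tuning against the F-score can be sketched with the Sklearn `precision_recall_curve` function, as below. The synthetic imbalanced data, the stratified split, the 200-tree forest, and the seeds are assumptions for illustration; the F-score is taken here as the harmonic mean of precision and recall, and is evaluated on the held-out points.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.metrics import precision_recall_curve
from sklearn.model_selection import train_test_split

# Synthetic imbalanced stand-in: 468 points, about 20% positives.
X, y = make_classification(
    n_samples=468, n_features=18, weights=[0.8, 0.2], random_state=0
)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.4, stratify=y, random_state=0
)

clf = RandomForestClassifier(n_estimators=200, random_state=0)
clf.fit(X_train, y_train)
proba = clf.predict_proba(X_test)[:, 1]  # predicted probability of Class 1

precision, recall, thresholds = precision_recall_curve(y_test, proba)
# The final (precision, recall) pair has no associated threshold; drop it.
p, r = precision[:-1], recall[:-1]
denom = p + r
f_score = np.divide(2 * p * r, denom, out=np.zeros_like(denom), where=denom > 0)

best = int(np.argmax(f_score))
best_threshold = thresholds[best]

# Points at or above the tuned threshold are assigned to Class 1.
y_pred = (proba >= best_threshold).astype(int)
```

The pair `p[best]`, `r[best]` gives the ‘best’ point on the precision–recall curve under this criterion.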

Along with the random forest analysis, results from a logistic regression were included for comparison purposes. In both cases, 468 points from the Lawson Prime data were used, with a 60/40 split between the training and test data. Results are shown for the test data. Default values in the Sklearn Python library [15] were used in both models, with the exception that 2000 trees were used in the random forest model. Overall, the random forest model performed better than the logistic regression.

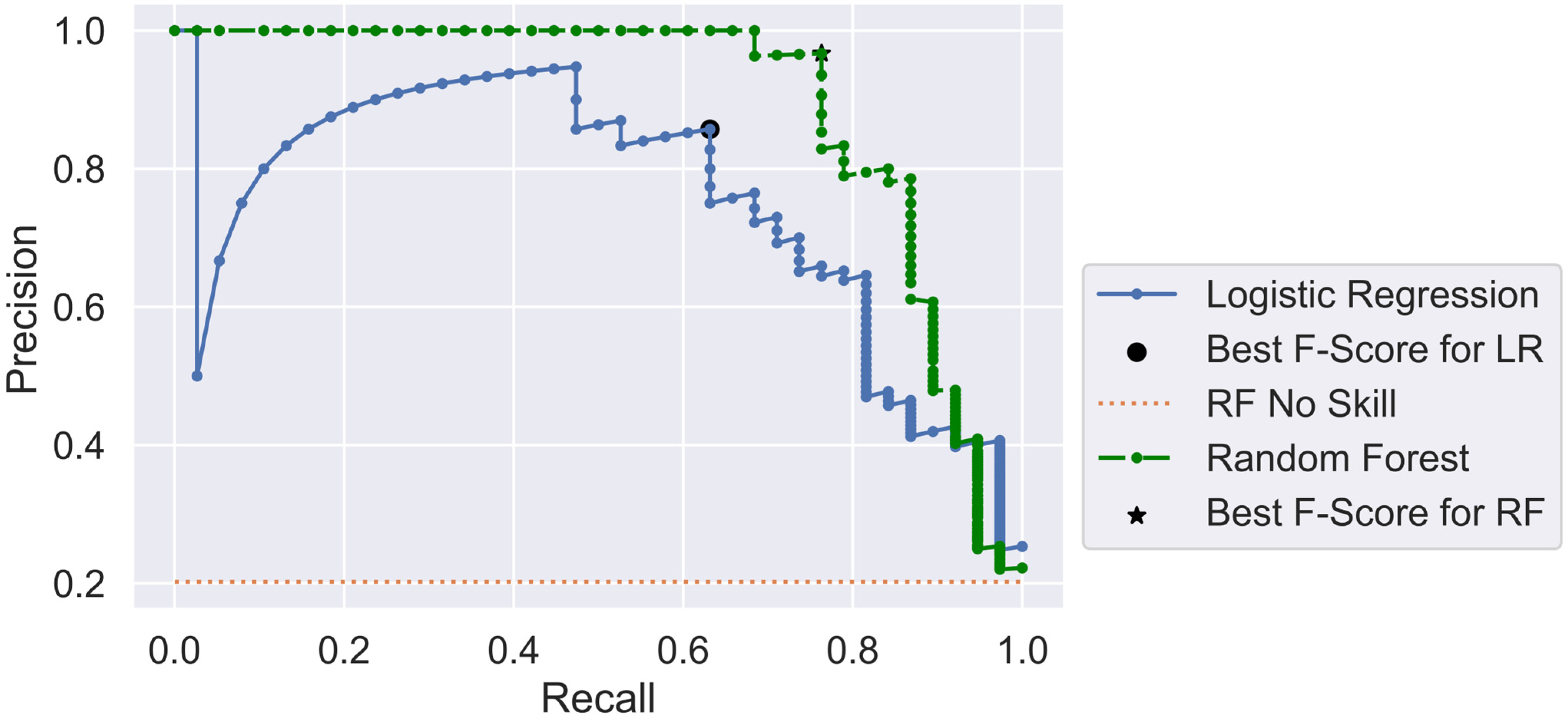

Figure 2 shows a graphic presentation of the precision–recall curves from the two models. A perfect classifier would have a point in the upper right-hand corner of the plot. The random forest classifier (shown in green) consistently scored higher than the logistic regression (shown in blue). The random forest classifier had a precision of 96.7% at its highest F-score, meaning that 96.7% of the points classed as dynamic failure by the model were actual dynamic failure cases. Similarly, a recall score of 76.3% indicates that 76.3% of the total number of dynamic failure cases were correctly classed.

Sklearn also allows a measure of the importance of each feature used in the random forest model to be computed. In Sklearn, the Gini importance [30] is used as this measure. Importance levels are normalized so that the sum over all features equals 1. The features with importance measures greater than 0.05 are oxygen, pyritic sulfur, volatile matter, the Van Krevelen ratio, organic sulfur, moisture, and vitrinite reflectance. For the most part, these features are the same as those found in all the other analyses, indicating the consistency in critical feature selection.
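A short sketch of how such importances can be read from a fitted Sklearn random forest and filtered at the 0.05 level follows. The synthetic data and the placeholder feature names are assumptions and do not correspond to the Lawson Prime features.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier

# Placeholder names; the real feature names are not used here.
names = [f"feature_{i}" for i in range(8)]
X, y = make_classification(
    n_samples=300, n_features=8, n_informative=3, random_state=0
)

forest = RandomForestClassifier(n_estimators=200, random_state=0).fit(X, y)

# feature_importances_ holds the normalized impurity-based (Gini) importances.
importances = dict(zip(names, forest.feature_importances_))

# Features above the 0.05 cutoff, most important first.
important = sorted(
    (name for name, value in importances.items() if value > 0.05),
    key=importances.get,
    reverse=True,
)
```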

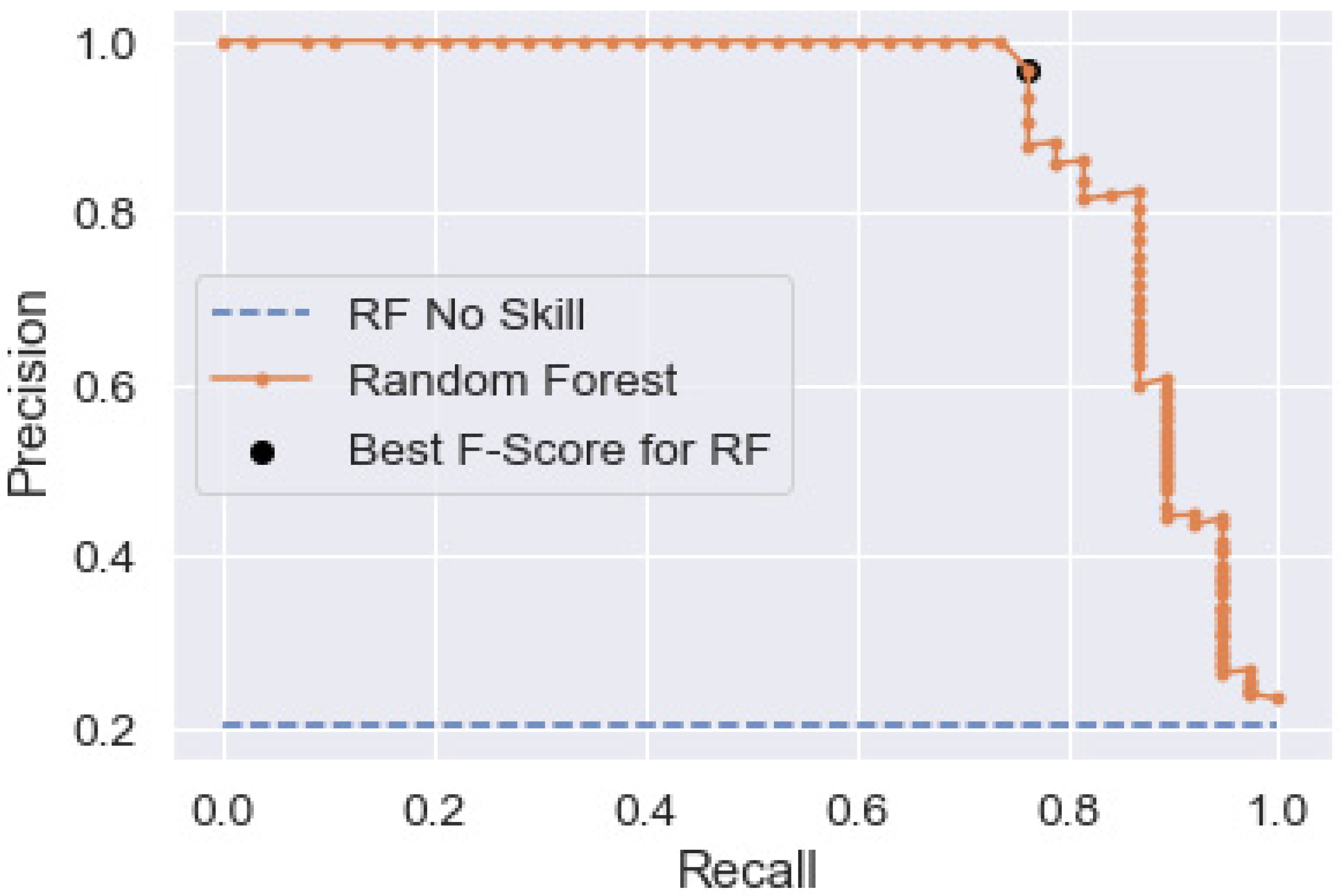

Finally, although the threshold was tuned in the results just shown, the default algorithm values used in the random forest model may not be optimal for model building. To examine this, a hyperparameter search using cross-validation was performed. This was completed through the use of a randomized grid search of parameters using 5-fold cross-validation. A total of 500 different combinations were examined. The best parameters obtained were then used to run another random forest and the results evaluated as before. The parameters found in the search, which were different from the default values used previously, were: the number of trees = 1800, the minimum samples per split = 5, and the minimum samples per leaf = 2. The precision–recall plot for these values, again using a 60/40 training–test split, is shown in Figure 3. The ‘best’ point on the precision–recall curve, as defined by the maximum F-score, is also shown.

Table 6 provides the threshold, precision, and recall values for the model after 5-fold cross-validation and threshold tuning. The optimal threshold is slightly different, but precision and recall show no change. Thus, while cross-validation is probably a desirable processing step to ensure that the data are not overfitted, overall model capability is not different from simple threshold tuning.
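A randomized hyperparameter search with 5-fold cross-validation can be sketched with the Sklearn `RandomizedSearchCV` class, as below. The candidate values, the F1 scoring choice, the synthetic data, and the small number of sampled combinations (5 rather than 500, to keep the example fast) are all assumptions for illustration.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import RandomizedSearchCV, train_test_split

# Synthetic imbalanced stand-in data with seven features.
X, y = make_classification(
    n_samples=468, n_features=7, n_informative=4, weights=[0.8, 0.2],
    random_state=0,
)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.4, stratify=y, random_state=0
)

# Hypothetical candidate values for the three parameters named in the text.
param_distributions = {
    "n_estimators": [50, 100, 200],
    "min_samples_split": [2, 5, 10],
    "min_samples_leaf": [1, 2, 4],
}

search = RandomizedSearchCV(
    RandomForestClassifier(random_state=0),
    param_distributions=param_distributions,
    n_iter=5,        # number of sampled combinations (kept small here)
    cv=5,            # 5-fold cross-validation
    scoring="f1",    # assumed selection metric
    random_state=0,
)
search.fit(X_train, y_train)

best_params = search.best_params_
tuned_forest = search.best_estimator_  # refit on the full training split
```

The refit estimator can then be passed through the same threshold tuning and precision–recall evaluation as the default model.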

Because the majority of dynamic failure cases come from the western U.S. and the majority of control cases from eastern coal fields, it could be argued that simply dividing the data between states of occurrence would produce equal or better results than a machine learning model. This sort of model was constructed by placing all eastern coal points in a control class and all western coals in the dynamic failure class. The result of this very simple model was a precision score of 86.6% and a recall score of 78.8%. Thus, simply classing the occurrences by state yields a good independent classifier. However, these results are not as good as those from a random forest model, nor do they explain any potential risk factors. A summary of precision and recall for the various models is shown in Table 7.

In summary, a random forest classifier appears to provide superior performance over logistic regression, as measured by precision and recall values. Tuning the decision threshold provides the same level of model improvement as performing a cross-validated hyperparameter search, although the cross-validation may provide some protection from overfitting. In operating on the class-imbalanced Lawson Prime data set, the random forest model achieves a precision score of 96.7% and a recall score of 76.3%. In other words, of the events that were classed as dynamic failure seams by the model, 96.7% actually experienced dynamic failures. Of the total number of bump-positive events, 76.3% were correctly classed.

2.4. Cluster Analysis and Dimensionality Reduction

Another objective of the current study was to explore possible relationships between features measurable in coals with respect to the occurrence of dynamic failures. This was carried out using cluster analysis to explore possible groupings in the data based on the feature properties.

Several types of cluster analyses are available in statistical software packages. In this study, the hierarchical density-based spatial clustering of applications with noise (HDBscan) algorithm was used [13, 31, 32]. HDBscan offers several advantages over other clustering algorithms. In line with other true clustering algorithms, HDBscan delineates density-based clusters as opposed to partitions. It does not require spherical or elliptical groupings for good performance; it does not require any specification of the number of clusters; and it has the ability to omit single points, noise, and outliers from the cluster membership.

The number of features used was reduced to the top seven, as determined from random forest analysis. These were: oxygen, pyritic sulfur, the Van Krevelen ratio, volatile matter, moisture, organic sulfur, and vitrinite reflectance. As few as four features were analyzed, but the results were not dramatically different from the results using seven features.

As with logistic regression analysis, feature values were scaled using a centering algorithm. Scaling preserves the shape of the original distribution and does not significantly change the information embedded in the original data [33], nor does it reduce the importance of outliers. With the seven features listed above, using a minimum cluster size of 10 points and a minimum number of 3 samples, HDBscan found three clusters. The minimum cluster size is an intuitive parameter, as it defines the smallest grouping that can be considered a cluster. The minimum samples parameter is less intuitive, but broadly speaking, it determines how conservative the clustering is. The larger the value, the more points will be declared as noise, and clusters will be restricted to progressively denser areas [13].

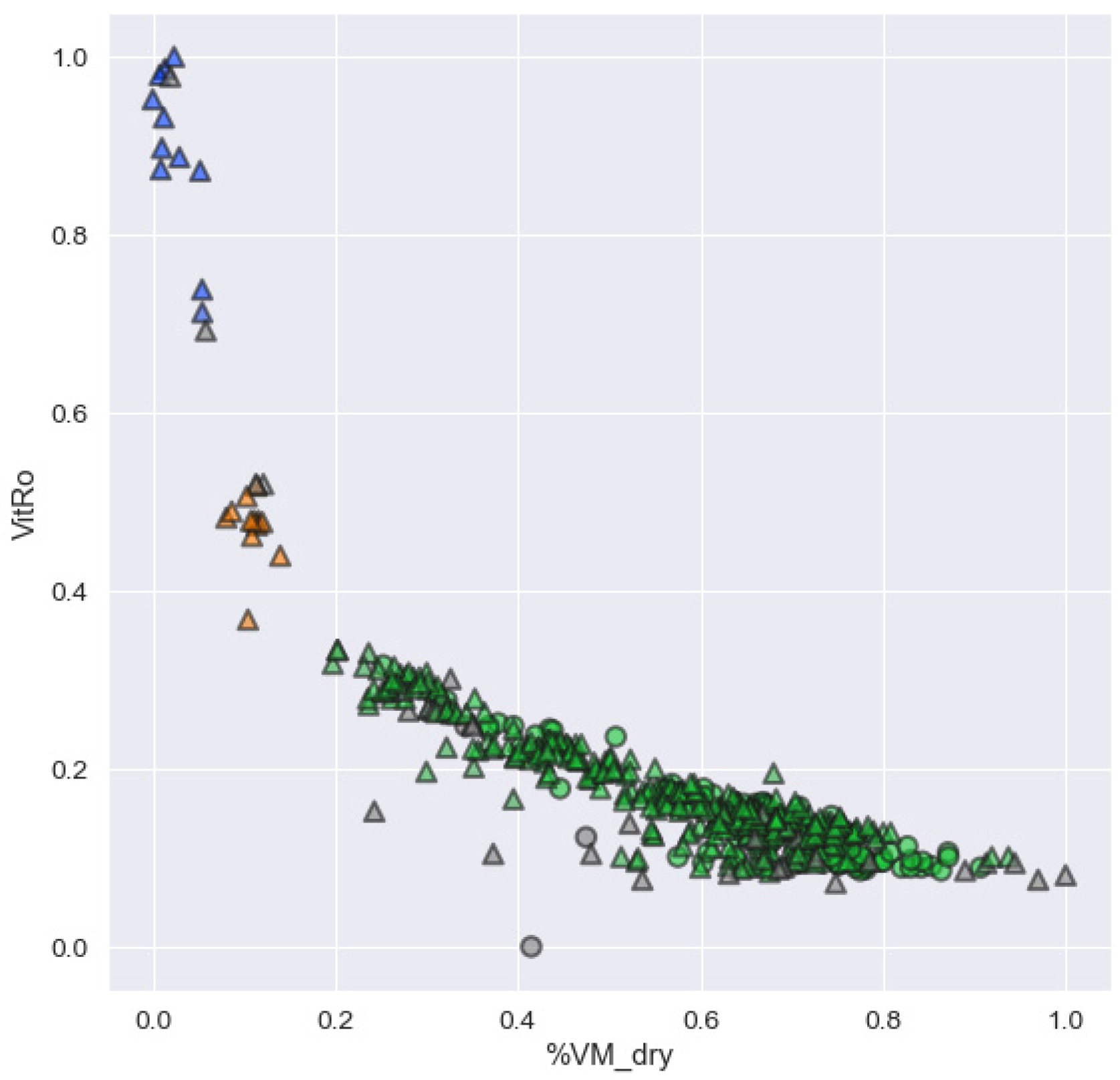

From the seven-feature clustering, a representative cross-plot of the clusters is shown in Figure 4. This plot is a two-dimensional slice through a seven-dimensional space. Here, the three clusters found by HDBscan are shown in blue, orange, and green. The points in grey were considered outliers and were not assigned to a cluster. The triangles denote the points from control seams and the circles denote the dynamic failure seams, as determined in the initial data selection. The three clusters are relatively well separated but do not separate the dynamic failure and control group seams.

The main objective of this clustering exercise was to see if an unsupervised machine learning technique could separate the dynamic failure and control coal members, without any additional processing or operator interpretation. While some interesting relations were seen, this approach did not appear to be successful in discrimination between the two classes. One reason the classes could not be discriminated may be the number of dimensions present in the data and the complexity of the resulting cluster analysis. A means to explore a simpler data structure was pursued through dimensionality reduction.

Although the Lawson Prime data set can be considered to be of limited size, both in terms of the number of members and features, even data of this limited dimension can be difficult to visualize and to extract useful information from. As data dimensions increase, the ‘curse of dimensionality’ [34] arises, and model performance suffers. Sample density decreases exponentially as dimensions increase, and eventually the concept of distance between points, as used in cluster analysis, becomes less meaningful. One way of overcoming the curse of dimensionality that arises with increasing features and limited training points is to employ dimensionality reduction to project data into lower dimensions. In addition, projections of a higher-dimension sample space onto lower dimensions may reveal structures in the data that are not otherwise apparent.

There are several approaches that can be used to perform dimension reduction, with the most common being principal component analysis (PCA). PCA assumes linear relations between features and works to rotate and project data along the directions of greatest variance. PCA can be very successful when applied to data with linear relationships, but fails when the data do not lie on a linear subspace.

At this stage of analysis on the Lawson Prime data set, it was not clear that features can be represented as linear combinations of each other, as is assumed in PCA. Thus, both a linear (PCA) and a nonlinear dimensionality reduction algorithm were employed to determine which provided more interpretable results. The nonlinear dimensionality reduction algorithm applied was t-distributed stochastic neighbor embedding (t-SNE). Briefly, t-SNE computes the probability that pairs of points in a higher-dimensional space are related and computes a lower-dimension embedding that attempts to preserve that distribution [14, 35, 36, 37]. The main advantages of t-SNE are that it can be applied to nonlinear data and can preserve local and global data structures. Unfortunately, it is computationally expensive and is nondeterministic.

PCA was performed with the same seven features, retaining the top four principal components. The variance ratios explained by the first four principal components are given in Table 8. The top two components explained 75.6% of the variance, the top three explained 85.4%, and the top four explained 93.2%.
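The explained-variance figures above are cumulative sums of per-component ratios, which can be obtained as in the sketch below using the Sklearn `PCA` class. The synthetic seven-feature data (built from three latent factors plus noise) and the seed are assumptions for illustration.

```python
import numpy as np
from sklearn.decomposition import PCA

# Synthetic stand-in: seven correlated features driven by three latent factors.
rng = np.random.default_rng(0)
latent = rng.normal(size=(468, 3))
mixing = rng.normal(size=(3, 7))
X = latent @ mixing + 0.3 * rng.normal(size=(468, 7))
X_centered = X - X.mean(axis=0)

pca = PCA(n_components=4).fit(X_centered)

ratios = pca.explained_variance_ratio_  # per-component share of variance
cumulative = np.cumsum(ratios)          # top-1, top-2, top-3, top-4 totals

scores = pca.transform(X_centered)      # points projected onto the components
loadings = pca.components_              # rows: components, columns: features
```

Read the same way, the cumulative values quoted above imply that the third component alone accounts for 85.4% − 75.6% = 9.8% of the variance and the fourth for 93.2% − 85.4% = 7.8%. The rows of `loadings` show how strongly each original feature contributes to each component.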

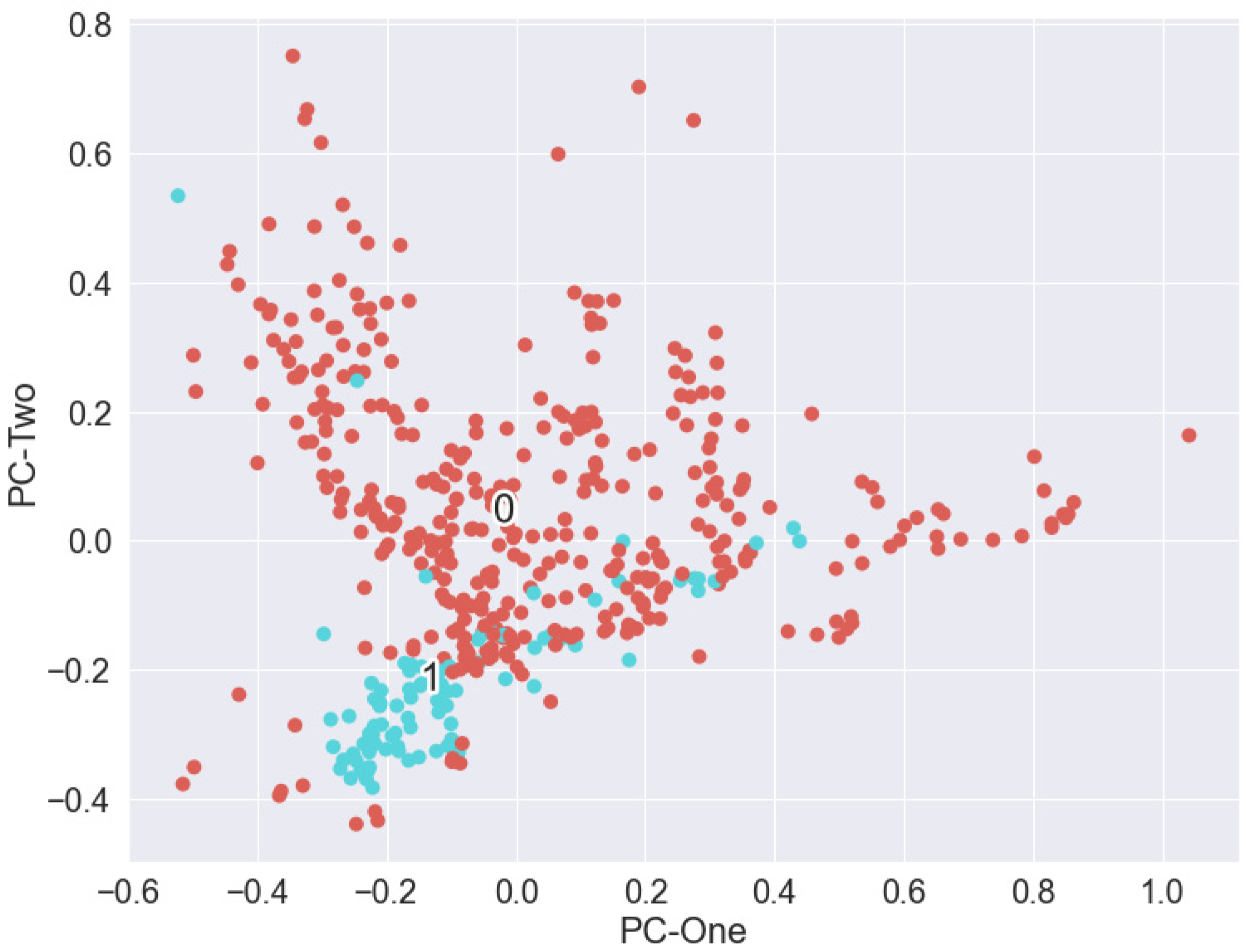

Figure 5 shows the scaled Lawson data points projected onto the top two principal components, PCA1 and PCA2, showing the results of reducing the feature dimensions from 7 to 2. In this figure, the labels ‘0’ (control group, brown) and ‘1’ (dynamic failure group, blue) were plotted at the centroid of the respective point clouds. The bumping and non-bumping points were clustered with like members. However, while there was some separation between the classes, it may not be sufficient to identify them as distinct groups without a priori information of the membership class. Dynamic failure cases were assembled at the smallest values of components 1 and 2.

To examine the impact of each of the seven retained features on the four principal components, both an impact table and a biplot were produced [38, 39]. From this analysis, it was found that percent volatile matter dominated PC1. Pyritic sulfur exerted a strong positive impact on PC2, and organic sulfur had a strong positive impact on PC3.

While performing PCA on the Lawson data did show some separation between dynamic failure and control data, the separation was not sufficient to allow distinct clusters to be identified without a priori information. t-SNE was applied to the same data set to search for identifiable structures in the data. Algorithm parameters used were the number of iterations = 5000, the learning rate = 20, early exaggeration = 10, perplexity = 25, and metric = ‘Manhattan’. When using a larger number of dimensions, several authors recommend against the use of an L2 norm (Euclidean distance) [34], and so a ‘Manhattan’ metric or L1 norm was used. Perplexity provides a general measure of the structure of the data, with larger values emphasizing more global structures, as opposed to local variations. Larger values of perplexity can be thought of as using more neighbors in the calculation. Wattenberg et al. [40] recommend that the perplexity be kept smaller than the number of data points and that the parameters be tailored only to show possible structure in the data set, as opposed to interpreting shapes and distances between clusters.
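A minimal sketch of a t-SNE embedding with the parameter values listed above is given here, using the Sklearn `TSNE` class on synthetic seven-dimensional data. The synthetic data, the random initialization, and the seed are assumptions. The iteration count is left at the library default because the name of that argument differs between Sklearn releases (`n_iter` in older versions, `max_iter` in newer ones).

```python
import numpy as np
from sklearn.datasets import make_blobs
from sklearn.manifold import TSNE

# Synthetic stand-in for the scaled seven-feature data.
X, y = make_blobs(n_samples=150, n_features=7, centers=2, random_state=0)
X = X - X.mean(axis=0)

tsne = TSNE(
    n_components=2,
    perplexity=25,          # must stay below the number of samples
    learning_rate=20,
    early_exaggeration=10,
    metric="manhattan",     # L1 distance in place of the Euclidean default
    init="random",          # assumed initialization
    random_state=0,         # fixes the otherwise nondeterministic result
)
embedding = tsne.fit_transform(X)  # one (t-SNE1, t-SNE2) pair per point
```

Without a fixed `random_state`, repeated runs would generally produce different embeddings, which is the nondeterminism noted earlier.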

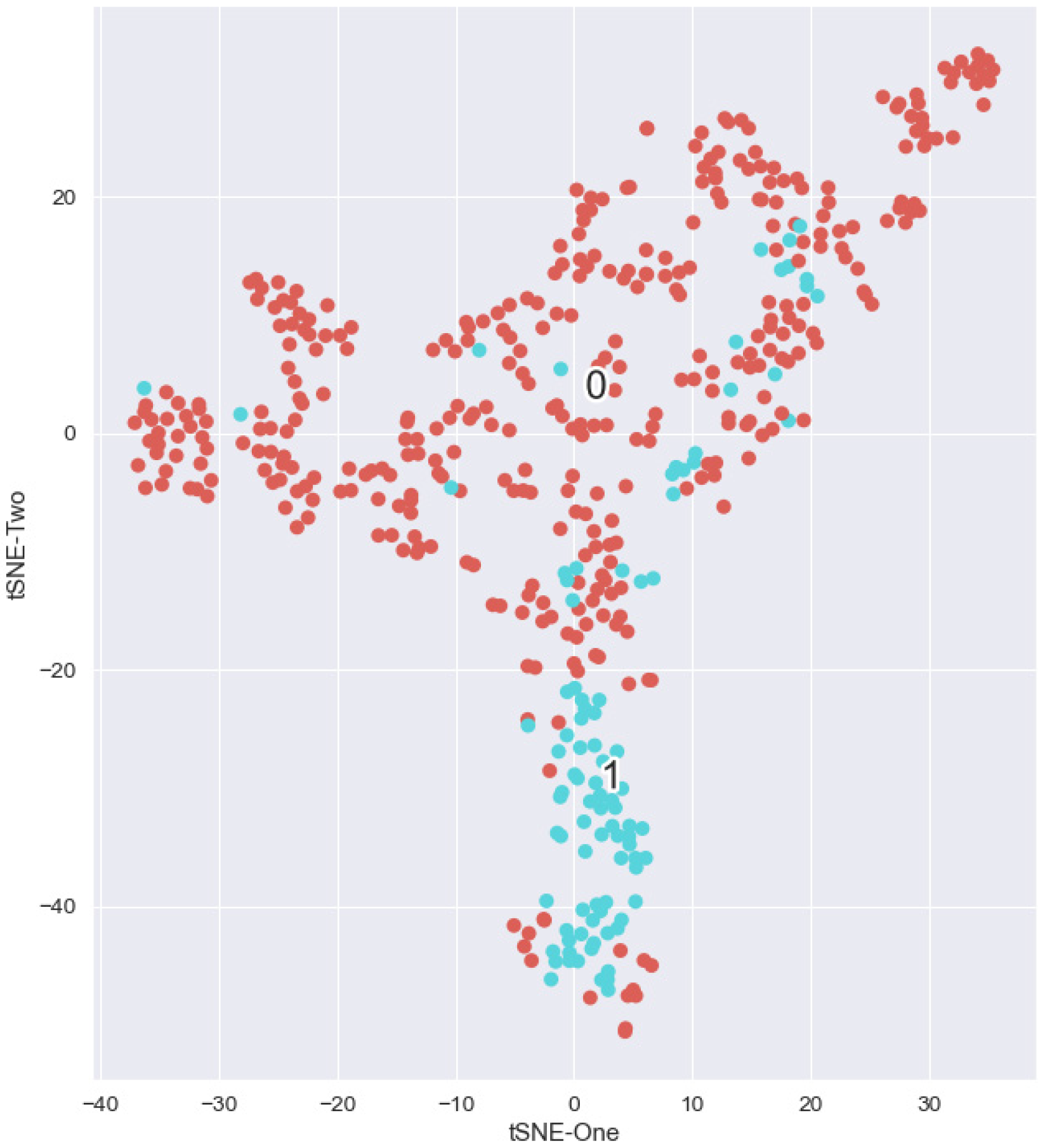

Figure 6 shows t-SNE results when processing the scaled 7-dimensional data. Retaining two components from t-SNE showed improved separation between clusters. It was found that two major clusters exist in the data: one of nearly exclusive control points and one of mostly dynamic failure data points. There is an interesting structure to this plot, as it appears that the control coals are mostly sensitive to t-SNE1 and the bumping coals are mostly sensitive to t-SNE2. These possible dependencies need further investigation. There were several bumping data points scattered throughout the non-bumping cluster, along with non-bumping points in the bumping group, which require further investigation. The majority of bumping points are clustered in a group at the bottom of Figure 6.

Preliminary analysis was completed to examine if HDBscan could independently find control and dynamic failure clusters from the t-SNE-processed data. The same clustering parameter values used previously were applied to the two-dimensional t-SNE values, as plotted in Figure 6.

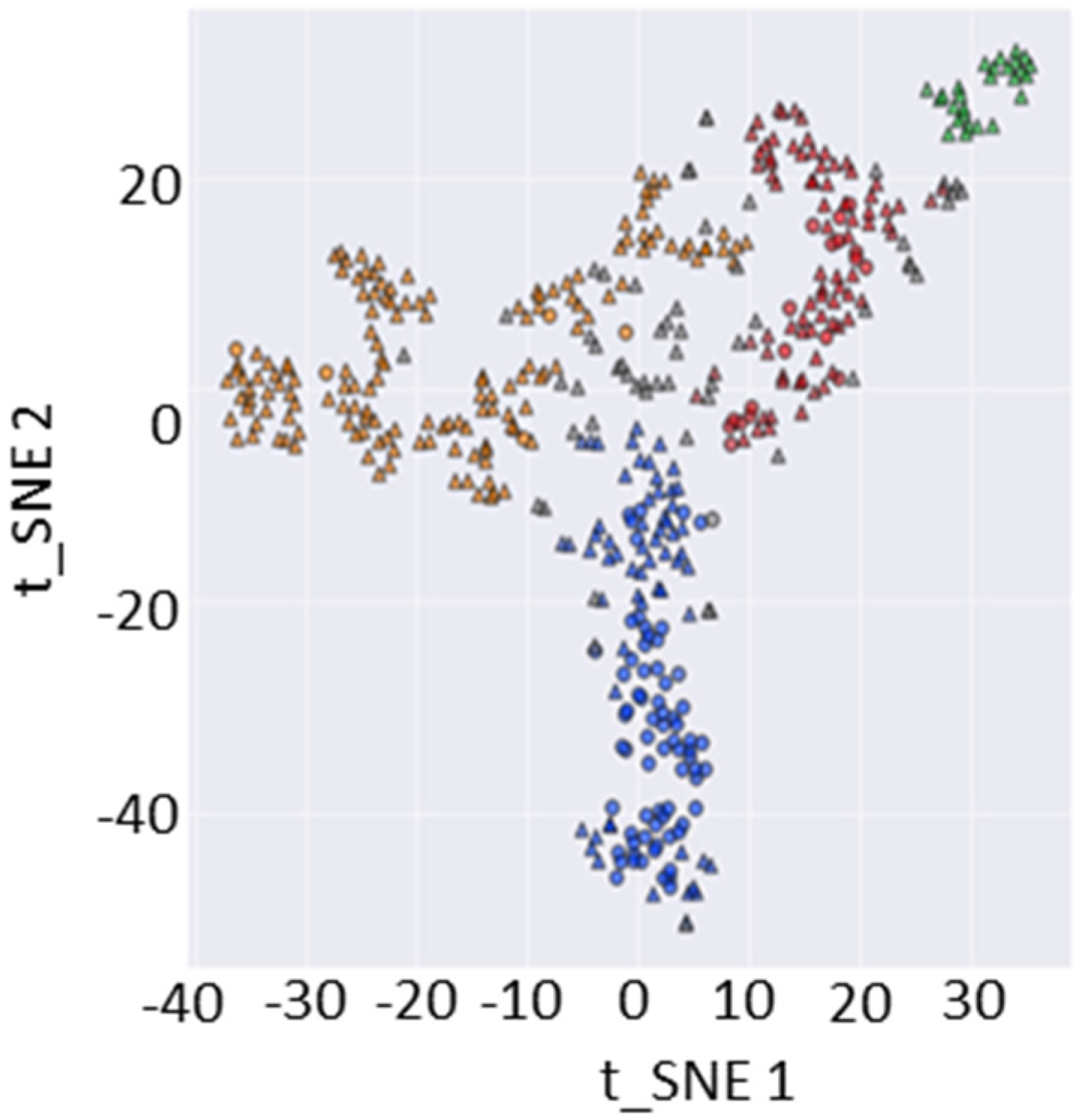

Figure 7 shows the results of this cluster analysis. As in other plots, triangles and circles indicate non-bumping and bumping coals, respectively. HDBscan computed four clusters in the data.

In the two-component t-SNE results of Figure 6, a number of points from control seams were located among dynamic failure points, and vice versa. A total of 17 control data points were located in the area of dynamic failure points. In addition, 29 dynamic failure points were scattered throughout the control population. Within all measurements, noise may be present which could cause these points to be located within groups opposite from their ground truth. Similarly, these data may be outliers that do not follow the general behavior seen on most points of either population. However, it is more likely that this reflects the impact, or lack thereof, of mining-induced stresses and other in situ, real-world variables that impact dynamic failure occurrence.

To further examine the potential reasons for this structure, each of the points was identified in the original 18-dimensional feature space and its properties were examined. First, the data points belonging to surface mines were identified and removed from consideration. This removed 9 points from the control population that were plotted among the dynamic failure coal points and 5 surface mines from the complementary population. Thus, the number of apparently anomalous data points was reduced by 14, leaving 8 control points and 24 dynamic failure points. The remaining points were examined in terms of the mines from which they were taken, geologic setting, and overall geochemical signatures.

The most critical question to be addressed in these data concerns why dynamic failure points are plotted in the control group. This is a false-negative case and bears the highest cost in terms of potential risk analysis. The distribution of the remaining 24 points in this group with a state and basin distribution is shown in Table 9 (as determined from entries in the PSOC database).

In this group, 17 points were taken from the Appalachian Basin and 7 were taken from the Uinta-Piceance Basin. A more detailed look at the source of the 24 data points showed that two were reported from an eastern mine that failed while mining took place over unknown barrier pillars in a lower mined seam. The cause of the bumps was attributed to this anomalous stress distribution. Six of the points came from the deep gassy L.S. Wood #3 mine in Colorado, and may be the result of gas-driven failure. One sample had geochemical values that matched control coals, but was from a mine under unusually deep cover. This loading may have been the critical factor in the failure. Four points were plotted close to the boundary of the dynamic failure group, and so they may be simple outliers to that population. Six had geochemical values that matched the averages for control coals but had pyritic sulfur ranges that were closer to the dynamic failure averages. Finally, five matched the control group values, and no further mine information could be found.

The second group of anomalous points was plotted closer to the dynamic failure group data, even though they were not reported as having experienced a reportable event. Four of these came from the Cannel or King Cannel seams in Utah. No information could be found on the source mines. These four points had geochemical values closer to the dynamic failure group coals, but experienced no reportable events. Further investigation is needed to determine if these were simply too shallow to experience dynamic failure or if they lack other necessary contributing factors to produce dynamic failure occurrence. The last four points were mixed in terms of their composition as regards the average of dynamic failure coals. It is not clear why these coals plot as they do, although two were taken from the Lower Kittanning seam. All points from this seam had property values well in the control range, except for these two which may represent statistical outliers.

The average values for the features used in t-SNE for dynamic failure, control, and the two anomalous classes are shown in Table 9. In Table 9, the feature values for the control points that plot in the dynamic failure cluster match the general values for dynamic failure coals, and vice versa. The exception to this was the sulfur content.

For the seven highest ranked random forest features, there were no significant differences between the ‘anomalous’ data points and the majority of points in that group. For example, the organic and pyritic sulfur did not show consistent differences between the four groups. However, oxygen, percent volatile matter, Van Krevelen ratio, percent moisture, and vitrinite reflectance features showed a consistent correlation. For all these features, the average values of the ‘Bump in Bump group’ and the ‘No-Bump in Bump group’ were similar in value. Likewise, the ‘No-Bump in No-Bump group’ and the ‘Bump in No-Bump group’ were similar (Table 10). Thus, it appears that even though they did not fail dynamically, the control points in the dynamic failure group had geochemical markers more similar to the dynamic failure coals. Other parameters thus need to be examined for these coals. Likewise, the coals that experienced dynamic failure but appeared to be in the control group had feature values that were more similar to the bulk of the control coals. This echoes the findings of Babcock and Bickel [41], which show that nearly any coal can be induced to dynamically fail under the appropriate conditions. However, some may do so with relatively greater ease. These coals all need to be studied in more detail in order to determine why they have geochemical values that match the opposite population. Additionally, more studies are greatly needed to determine if the presence (or dearth) of certain chemical species contributes to dynamic failure on a fundamental level.